A Record–Replay-Based State Recovery Approach for Variants in an MVX System

Abstract

1. Introduction


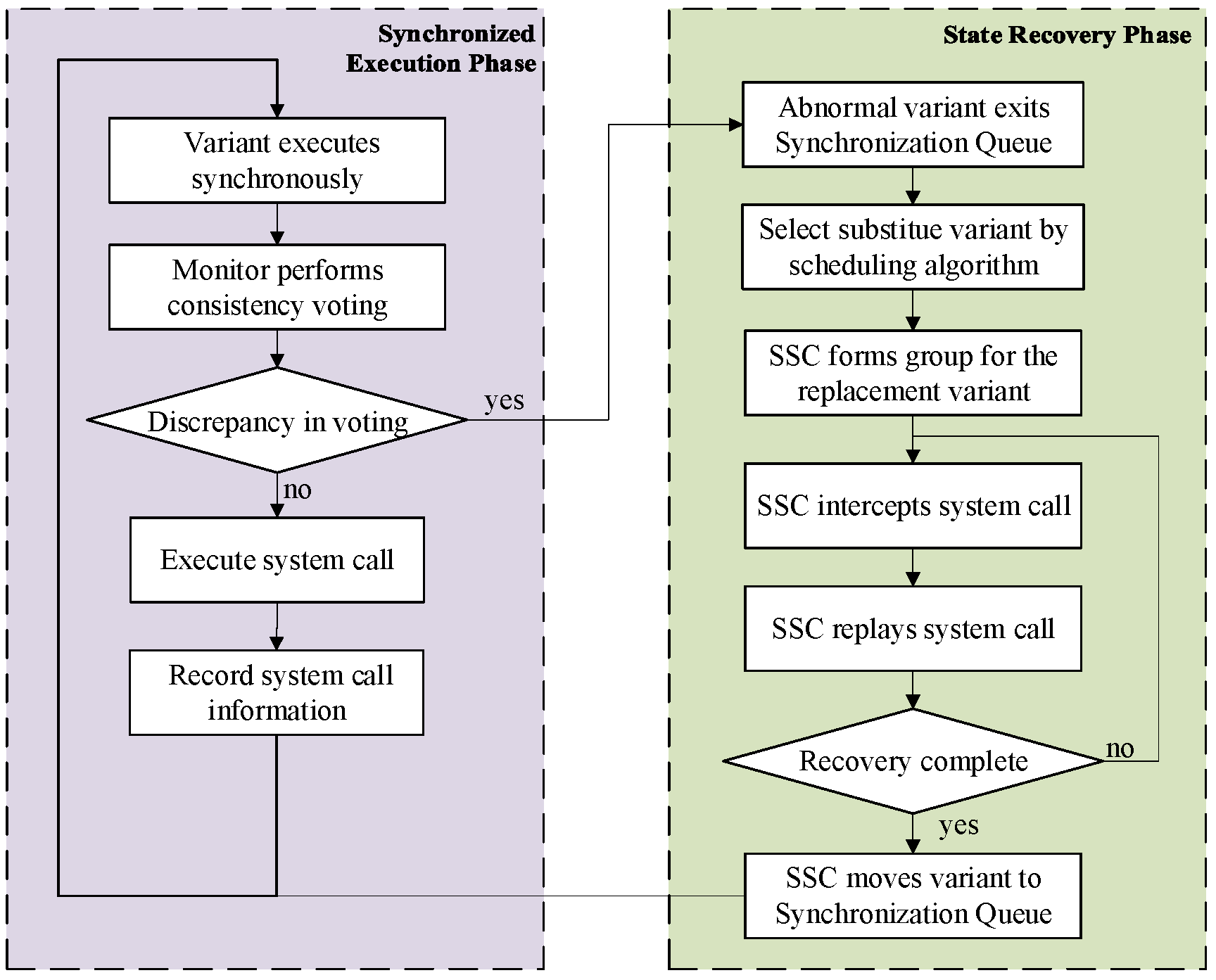

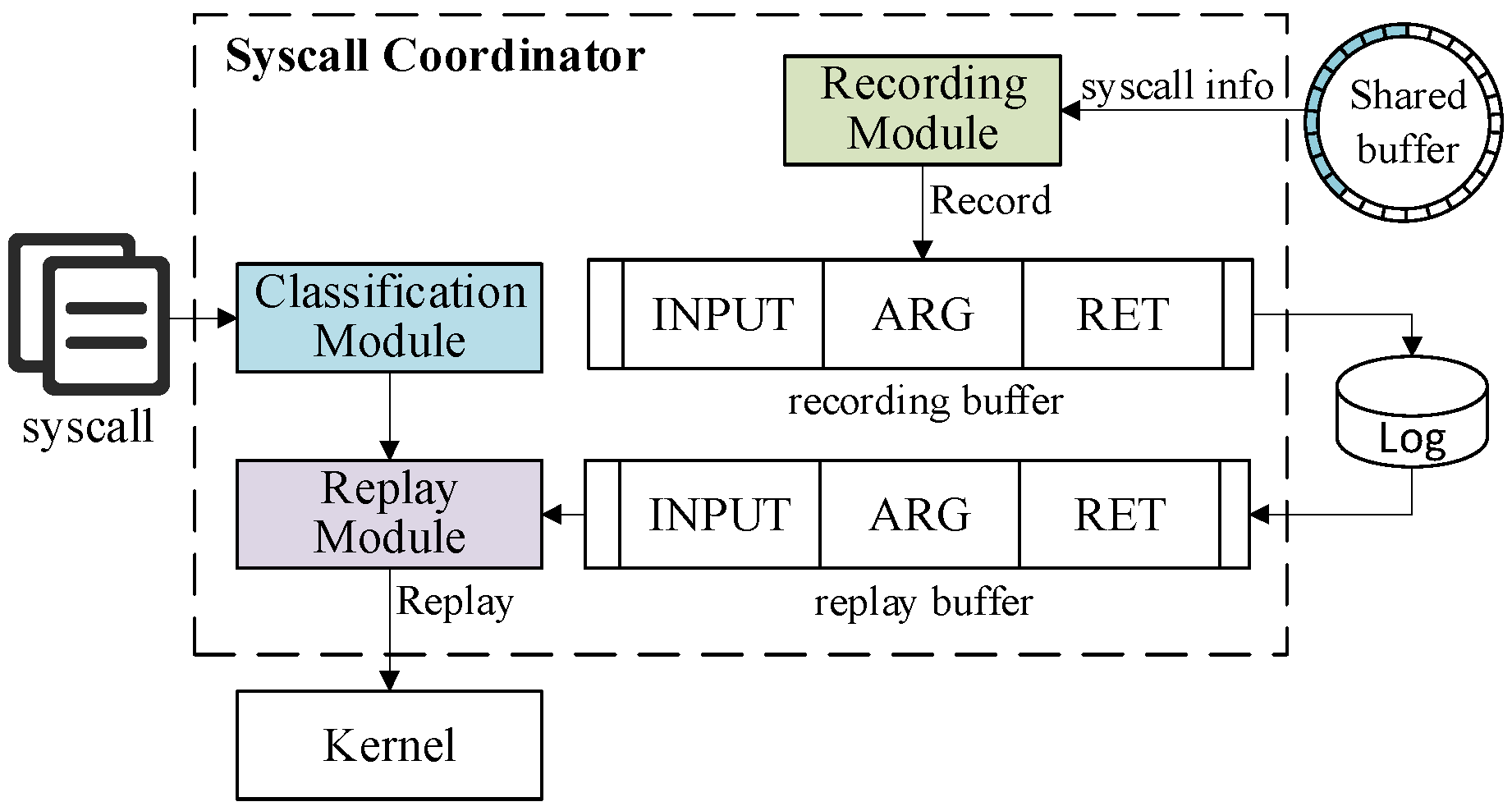

- We design a Syscall Coordinator (SSC) for an MVX system that supports state recovery of variants through a record–replay mechanism. The SSC consists of a recording module, a classification module, and a replay module. Together, these modules record the system calls that pass voting during the synchronized execution phase and replay them deterministically during the state recovery phase.


- We design a synchronization and voting algorithm that dynamically manages the Synchronization Queue during the synchronized execution phase. When the voting results are consistent, the algorithm triggers the SSC’s recording module to record the system call. When they diverge, the abnormal variant is removed from the Synchronization Queue and terminated; a new successor is then instantiated and assigned to the SSC for state recovery.


- We design a parallel grouped recovery mechanism to enable uninterrupted system service. By decoupling the responsibilities of the Monitor and the SSC, this mechanism allows the parallel execution of normal variants and the state recovery for successor variants. As a result, the MVX system is able to continue providing services without waiting for the recovery to complete.

2. Related Work

2.1. Multi-Variant Execution (MVX) Technology

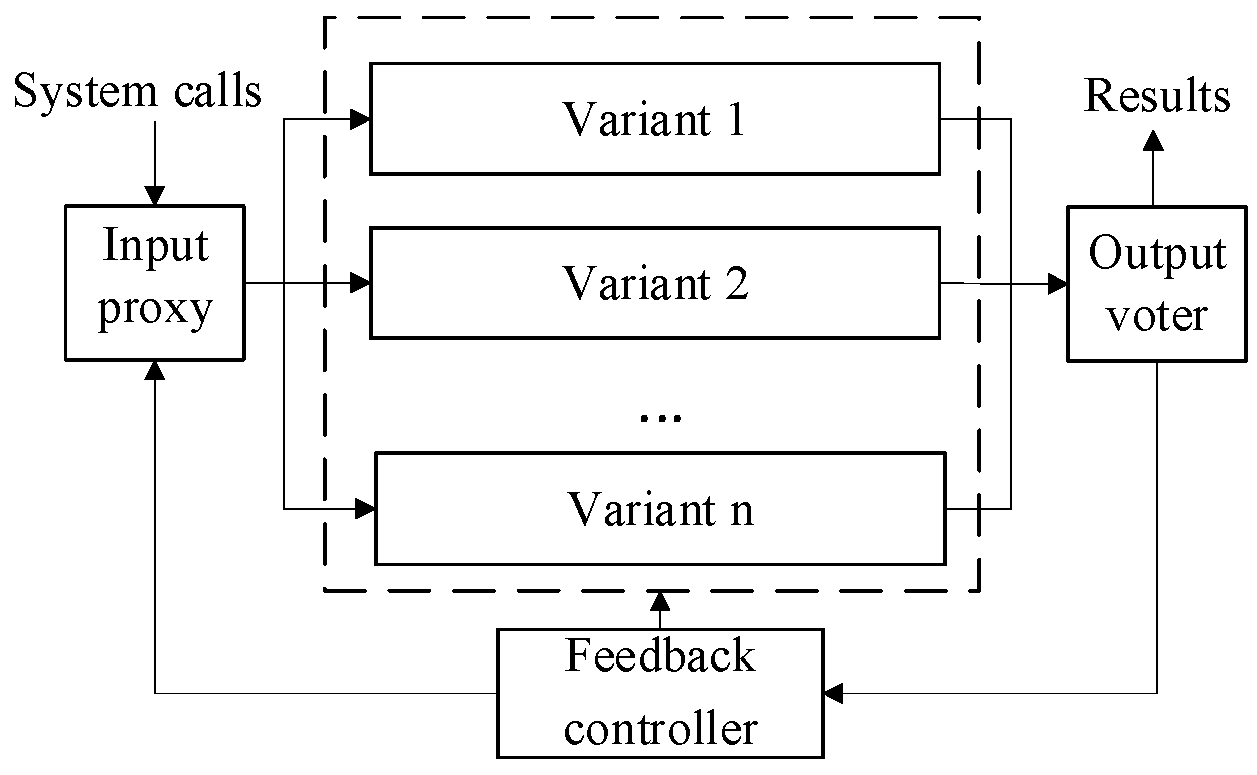

- The variant set consists of multiple variants with the same function but distinct structures. These variants are generated using randomization techniques such as address space layout randomization (ASLR) [10]. Differences in the memory layouts of variants provide the heterogeneity that forms the basis of MVX systems’ security guarantees.

- The input proxy replicates incoming inputs and distributes them to the variant set. Each variant in the set executes independently and produces its own output.

- The output voter collects the outputs from all variants, performs voting on the results, and returns the correct result to the runtime environment.

- The feedback controller handles voting discrepancies. Upon identifying voting discrepancies, the feedback controller reconstructs the variant set using a dynamic scheduling algorithm. This process is called the sanitization and recovery of abnormal variants. After an abnormal variant is removed, a successor variant is instantiated. Before this new variant can take over the execution, its state must be restored to match that of the active variants.
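The output voter's role can be illustrated with a minimal sketch. It assumes a strict-majority rule and treats each variant's output as an opaque, hashable value; the function name `majority_vote` and the handling of ties are illustrative assumptions, not details taken from the paper.

```python
from __future__ import annotations

from collections import Counter
from typing import Hashable, Optional, Sequence


def majority_vote(outputs: Sequence[Hashable]) -> tuple[Optional[Hashable], list[int]]:
    """Return (agreed output, indices of dissenting variants).

    The agreed output is None when no value is shared by a strict majority.
    """
    if not outputs:
        return None, []
    winner, votes = Counter(outputs).most_common(1)[0]
    if votes * 2 <= len(outputs):
        # Assumption: without a strict majority, every variant is suspect.
        return None, list(range(len(outputs)))
    dissenters = [i for i, out in enumerate(outputs) if out != winner]
    return winner, dissenters
```

In this sketch the dissenting indices are what a feedback controller would use to decide which variants to sanitize and replace.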

2.2. Software Recovery Technology

- Scalability Overhead: Directly integrating RR into MVX significantly increases recording overhead due to multiple concurrent variants.

- State Inconsistency: Without state consistency guarantees, the output of the recovered variants may differ from that of the normal variants, potentially resulting in false positives.

- Service Disruption: The MVX system must suspend the operation of normal variants during variant recovery, leading to service interruptions until recovery completion.

3. Methodology

3.1. Overview

3.1.1. Architecture

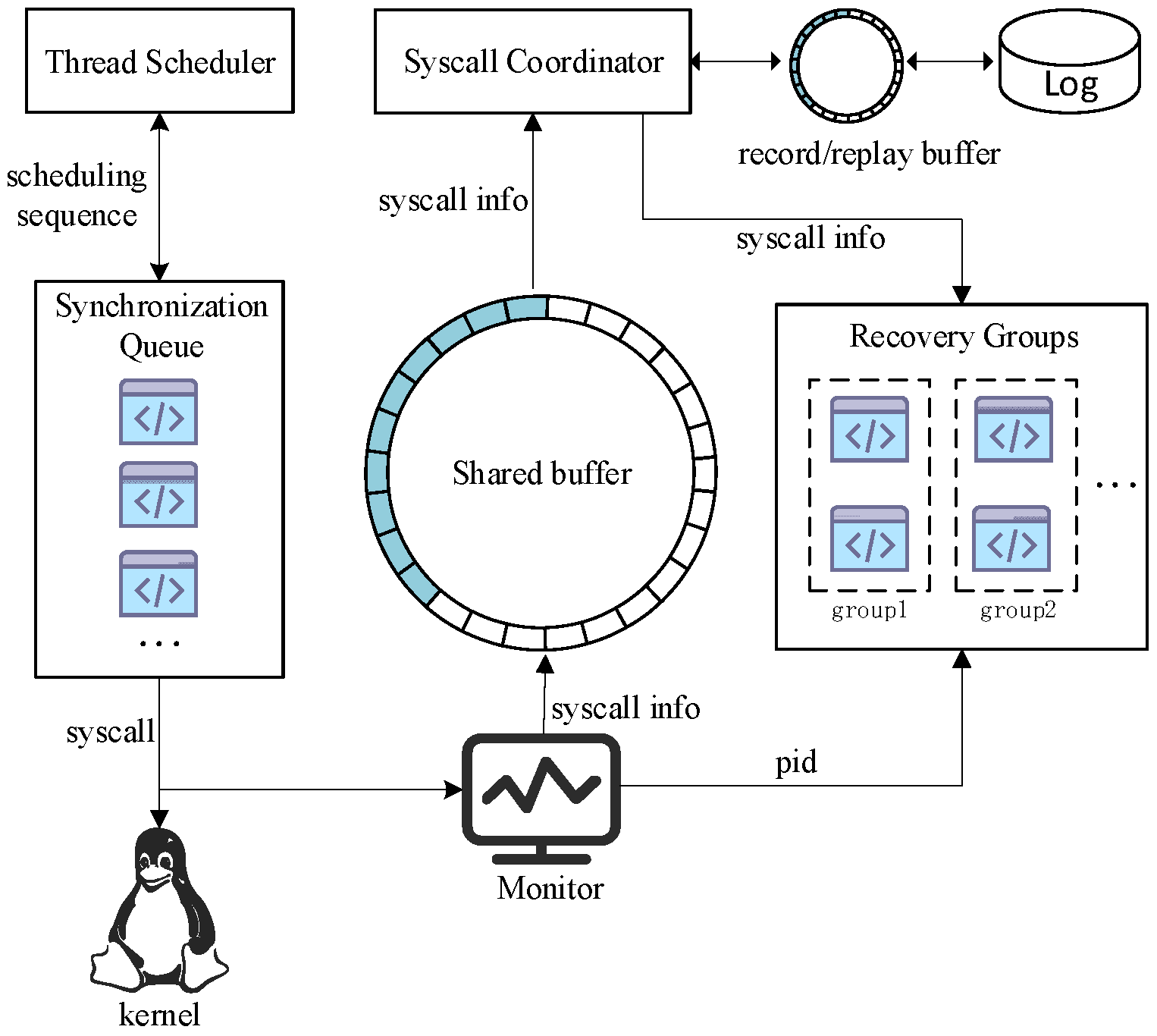

- Monitor: This is the core component of the MVX system. It intercepts system calls issued by variants in the Synchronization Queue and controls both variant synchronization and majority voting.

- Syscall Coordinator (SSC): The SSC is responsible for the state recovery of new variants launched to replace abnormal ones. It records system calls that have been validated through voting and replays them during recovery (details in Section 3.2).

- Shared Buffer: This component temporarily stores system call records from the variants in the Synchronization Queue. The Monitor accesses these records in the Shared Buffer to perform synchronization and voting.

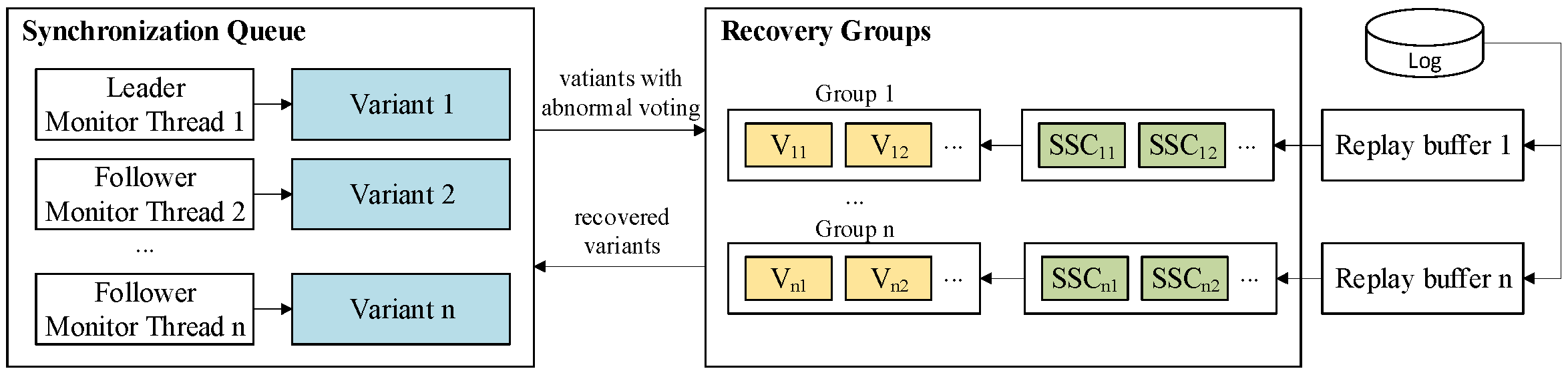

- Synchronization Queue: This queue holds the active variant set, which drives the program’s forward execution. At each system call boundary, these variants must synchronize to perform voting. When voting detects an abnormal variant, that variant is removed from this queue, and its successor is placed in a Recovery Group (details in Section 3.3).

- Recovery Groups: They consist of newly instantiated variants, which we term “successor variants”, that require state recovery. They are created to replace variants that fail in the voting and are grouped together to be recovered. Each group corresponds to a different recovery timestamp and is handled independently. The SSC coordinates the recovery of variants in each group, which runs concurrently with the forward execution of variants (details in Section 3.4).

- Thread Scheduler: This component supports multi-threaded execution across variants. During synchronized execution, it records the thread scheduling sequences of the leading variants and enforces the same sequences across all other variants to ensure consistency.
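The Thread Scheduler's record-and-enforce behaviour could look roughly like the following sketch. It models only the ordering logic, with no real threads; the class names `ScheduleLog` and `FollowerSchedule` and their methods are hypothetical.

```python
class ScheduleLog:
    """Order in which the leading variant's threads were scheduled."""

    def __init__(self) -> None:
        self.order: list[int] = []

    def record(self, thread_id: int) -> None:
        self.order.append(thread_id)


class FollowerSchedule:
    """Lets a follower variant run its threads only in the recorded order."""

    def __init__(self, log: ScheduleLog) -> None:
        self.log = log
        self.cursor = 0  # index of the next recorded scheduling decision

    def may_run(self, thread_id: int) -> bool:
        # A thread may proceed only if it is next in the leader's sequence.
        return (self.cursor < len(self.log.order)
                and self.log.order[self.cursor] == thread_id)

    def advance(self, thread_id: int) -> None:
        if not self.may_run(thread_id):
            raise RuntimeError(f"thread {thread_id} is not next in the recorded order")
        self.cursor += 1
```

A follower thread would call `may_run` before entering a synchronization point and block (or yield) until it returns true.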

3.1.2. Workflow

3.1.3. Parallel Execution Mechanism

3.2. Syscall Coordinator (SSC)

- Recording Module


- Classification Module

- Non-deterministic system calls (e.g., getpid, random, fstat) may return different values across variants and execution times. During recovery, such calls may lead to inconsistent states. The SSC handles them by skipping actual execution: it modifies the syscall number to an unrelated call (e.g., getppid) and replaces the return value with the one recorded in the ret unit.

- Sensitive system calls (e.g., mkdir, write, send) can affect external system states and are assumed to have already been executed correctly before recovery. Re-executing them during recovery could result in duplicated operations or errors. These calls are also skipped during recovery.

- External input system calls (e.g., read, recv) depend on sender-side input operations, which cannot be re-triggered during recovery. To handle this, the SSC caches the input in the Input Unit during synchronized execution. During recovery, the SSC intercepts the call, fetches the target memory address, writes the cached input content to the variant’s memory, modifies the syscall number to a harmless one, and finally returns the input length as the syscall result.

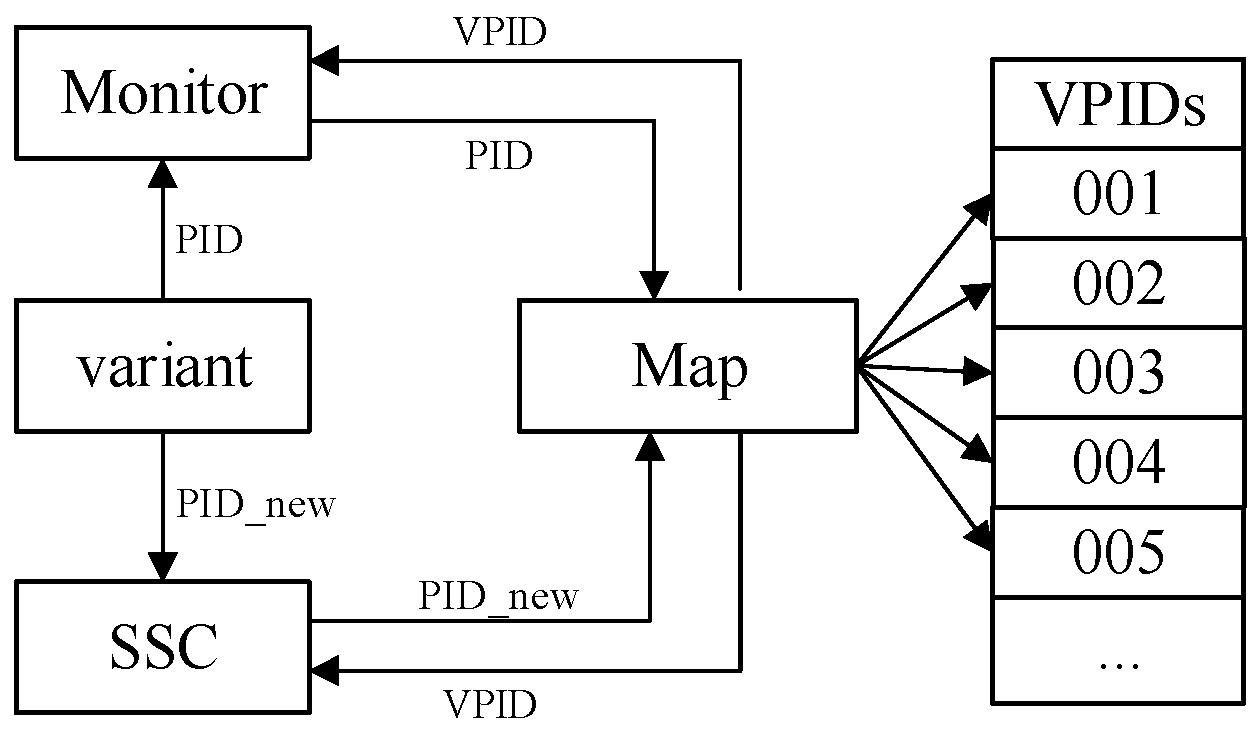

- Process-related system calls, such as fork, pose a unique non-deterministic challenge because each variant receives a different process/thread ID (PID). Unlike other non-deterministic values (e.g., timestamps), these PIDs are crucial for subsequent control operations like wait(PID). Consequently, a naive approach of simply rewriting the PIDs to a common value to pass voting would break program logic, as later operations would reference an invalid PID.
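The four categories above can be read as a lookup from syscall name to replay strategy. The sketch below uses only the example calls named in the text; the enum `ReplayStrategy`, the function `classify`, and the fallback of re-executing unlisted calls are assumptions made for illustration.

```python
from enum import Enum


class ReplayStrategy(Enum):
    PATCH_RETURN = "skip execution, return the recorded value"
    SKIP = "skip execution, side effect was already applied"
    INJECT_INPUT = "skip execution, write cached input into memory"
    PROCESS = "needs PID-aware handling"
    REEXECUTE = "execute normally"


# Example calls taken from the categories in the text; not an exhaustive table.
CATEGORIES = {
    ReplayStrategy.PATCH_RETURN: {"getpid", "random", "fstat"},
    ReplayStrategy.SKIP: {"mkdir", "write", "send"},
    ReplayStrategy.INJECT_INPUT: {"read", "recv"},
    ReplayStrategy.PROCESS: {"fork"},
}


def classify(syscall_name: str) -> ReplayStrategy:
    """Map a syscall name to the strategy used while replaying it."""
    for strategy, names in CATEGORIES.items():
        if syscall_name in names:
            return strategy
    # Assumption: anything unlisted is deterministic and safe to re-execute.
    return ReplayStrategy.REEXECUTE
```

For example, `classify("recv")` yields `ReplayStrategy.INJECT_INPUT`, while an unlisted call such as `brk` falls through to `REEXECUTE`.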


- Replay Module
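As a rough illustration of how a single recorded call might be replayed, the sketch below simulates the variant's memory with a `bytearray` instead of using a tracing interface. `SyscallRecord`, `replay_call`, and the `execute` callback are hypothetical names; a real implementation would rewrite registers and memory of a traced process.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SyscallRecord:
    name: str            # syscall name as recorded
    ret: int             # return value stored at record time
    buf_addr: int = 0    # target buffer address (offset into simulated memory)
    data: bytes = b""    # cached input for read/recv


INPUT_CALLS = {"read", "recv"}
SKIPPED_CALLS = {"getpid", "random", "fstat", "mkdir", "write", "send"}


def replay_call(memory: bytearray, rec: SyscallRecord,
                execute: Callable[[SyscallRecord], int]) -> int:
    """Replay one recorded call and return the value the variant should see."""
    if rec.name in INPUT_CALLS:
        end = rec.buf_addr + len(rec.data)
        if end > len(memory):
            raise ValueError("cached input does not fit in the target buffer")
        # Write the cached input where the original call would have placed it.
        memory[rec.buf_addr:end] = rec.data
        return len(rec.data)
    if rec.name in SKIPPED_CALLS:
        # Not executed again; the variant just receives the recorded result.
        return rec.ret
    # Assumption: remaining calls are deterministic and are really executed.
    return execute(rec)
```

Replaying a recorded `read` of `b"abc"` at offset 2 writes those three bytes into the simulated memory and returns 3, without touching any real file descriptor.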

3.3. Synchronization and Voting

Algorithm 1 Synchronization and Voting Algorithm

Input: Synchronization Queue Qsync, Variant Process ID pid, shared_buffer, Primary Monitor Process leader

Output: result

    syn_count ← 0                              // Initialize the sync counter
    syscallInfo ← SyscallGet(pid)              // Intercept and fetch the syscall data
    syn_count ← syn_count + 1
    while syn_count < GetQueueNumber(Qsync) do
        Wait for other variants to sync        // Wait until all variants are synchronized
    end while
    shared_buffer ← syscallInfo                // Write to shared buffer for voting
    voteRes ← Voting(shared_buffer)
    if voteRes = −1 then
        result ← valid
        if getpid() = leader then
            RecordSyscall(syscallInfo)         // Only the leader calls the recording module
        end if
    else
        result ← invalid
        if getpid() = leader then
            RemoveFromQueue(voteRes, Qsync)
            Pid_new ← scheduling()
            Recovery(Pid_new)                  // Trigger recovery for the successor variant
            while GetQueueNumber(Qsync) = 0 do
                Wait for recovery to complete
            end while
        end if
    end if
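The control flow of Algorithm 1 can be approximated in a single process as follows. This is a sketch under simplifying assumptions: all variants have already reached the syscall boundary, `voting` flags the first variant that deviates from the most common entry, and `spawn_successor` stands in for the scheduling step. None of these Python names come from the paper.

```python
from __future__ import annotations

from collections import Counter
from typing import Callable


def voting(shared_buffer: dict[int, tuple]) -> int:
    """Return -1 if all entries agree, else the pid of a deviating variant."""
    majority, _ = Counter(shared_buffer.values()).most_common(1)[0]
    for pid, info in shared_buffer.items():
        if info != majority:
            return pid
    return -1


def sync_and_vote(q_sync: list[int], syscalls: dict[int, tuple],
                  log: list[tuple], recovering: list[int],
                  spawn_successor: Callable[[], int]) -> str:
    # Every variant in the queue publishes its intercepted syscall.
    shared_buffer = {pid: syscalls[pid] for pid in q_sync}
    vote_res = voting(shared_buffer)
    if vote_res == -1:
        # Consistent vote: the call is recorded once, on behalf of the leader.
        log.append(shared_buffer[q_sync[0]])
        return "valid"
    # Divergence: drop the abnormal variant and queue a successor for recovery.
    q_sync.remove(vote_res)
    recovering.append(spawn_successor())
    return "invalid"
```

Note that the remaining variants stay in `q_sync` after a divergence, which is what allows forward execution to continue while the successor recovers.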

3.4. State Recovery for Variants

Algorithm 2 Parallel Grouped Recovery Algorithm

Input: Process ID of successor variant pid, Synchronization Queue Qsync

Output: Number of replayed system calls count

    count ← 0                                  // Initialize replayed system call counter
    Gi ← NewRecoveryGroup(pid)
    buffer ← InitReplayBuffer(Gi)              // Initialize the replay buffer
    while count < GetState(Qsync) do           // Check the recovery is complete
        UpdateBuffer(buffer)                   // Update buffer contents if needed
        syscallInfo ← ReadCall(count, buffer)
        replayStrategy ← Classify(syscallInfo)
        ReplayCall(pid, syscallInfo, replayStrategy)
        count ← count + 1
    end while
    AddToQueue(Qsync, pid)                     // Add variant back to the queue
    ReleaseGroup(Gi)
    return count
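The catch-up loop of Algorithm 2 can be sketched as below. The sketch assumes that `GetState(Qsync)` corresponds to the number of calls recorded so far, models the record log as a plain list that may grow while recovery runs, and uses a hypothetical `on_step` hook to simulate forward execution happening in parallel.

```python
from __future__ import annotations

from typing import Callable, Optional


def recover(pid: int, log: list, q_sync: list[int],
            replay: Callable[[int, object], None],
            on_step: Optional[Callable[[int], None]] = None) -> int:
    """Replay recorded calls until the successor has caught up, then enqueue it."""
    count = 0
    # len(log) is re-read every iteration because active variants keep recording.
    while count < len(log):
        replay(pid, log[count])
        count += 1
        if on_step is not None:
            on_step(count)  # stand-in for concurrent forward execution
    q_sync.append(pid)  # successor rejoins the Synchronization Queue
    return count
```

Because the loop condition is re-evaluated against the live log, a successor that starts two calls behind may end up replaying more than two calls before it rejoins the queue.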

3.5. Comparison with Representative MVX Systems

4. Evaluation

4.1. Performance

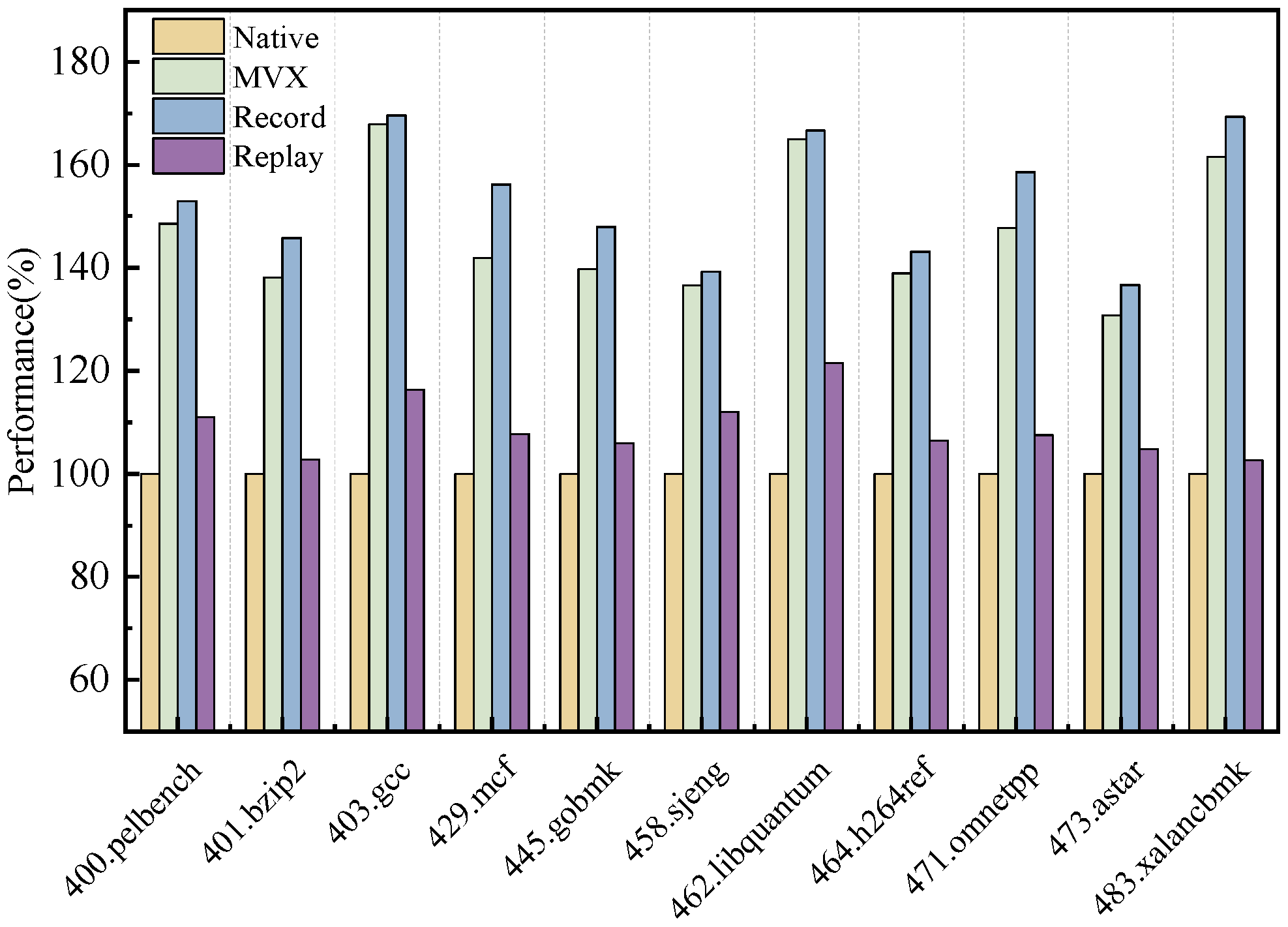

- Performance on Microbenchmark


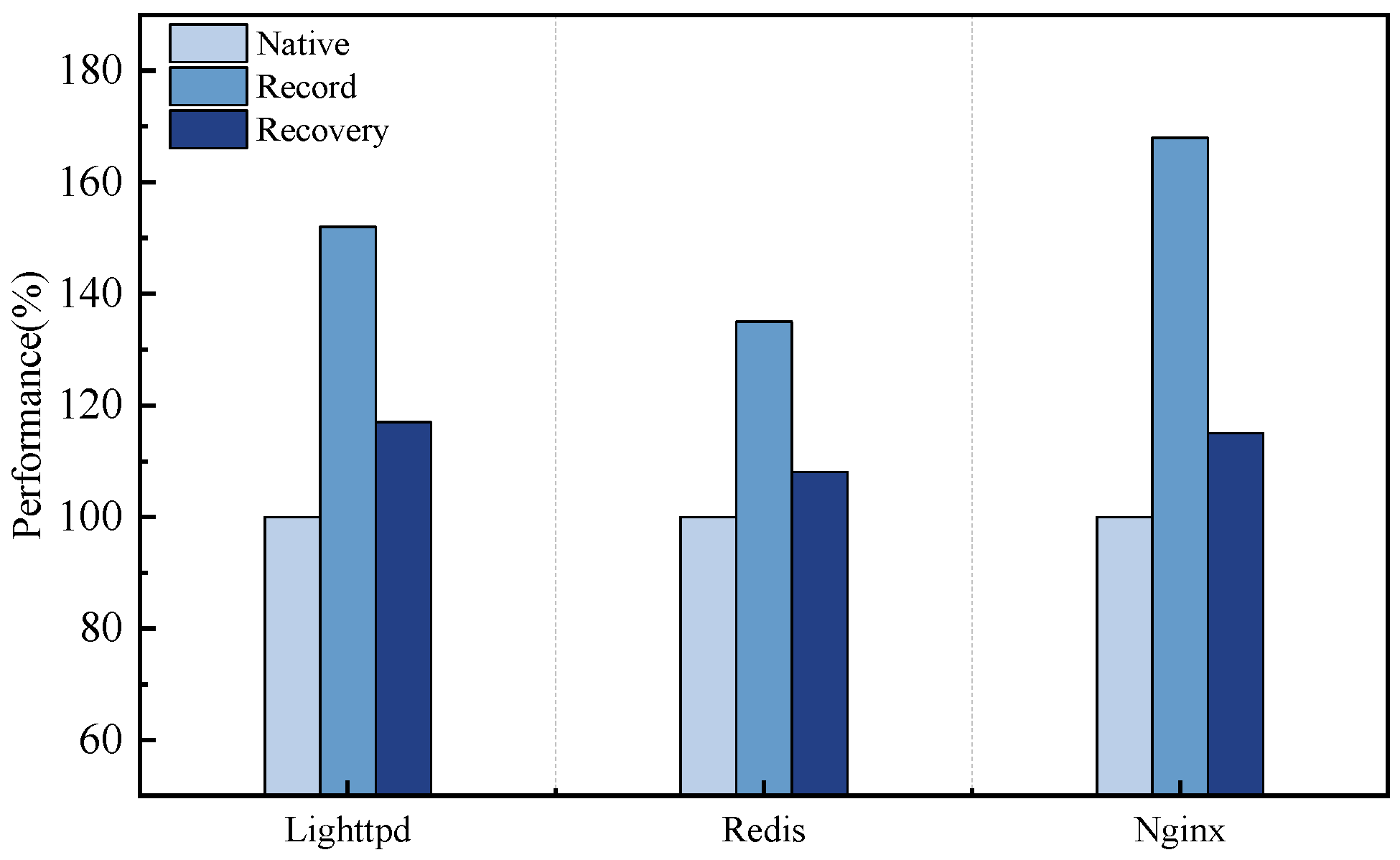

- Performance on Server Application

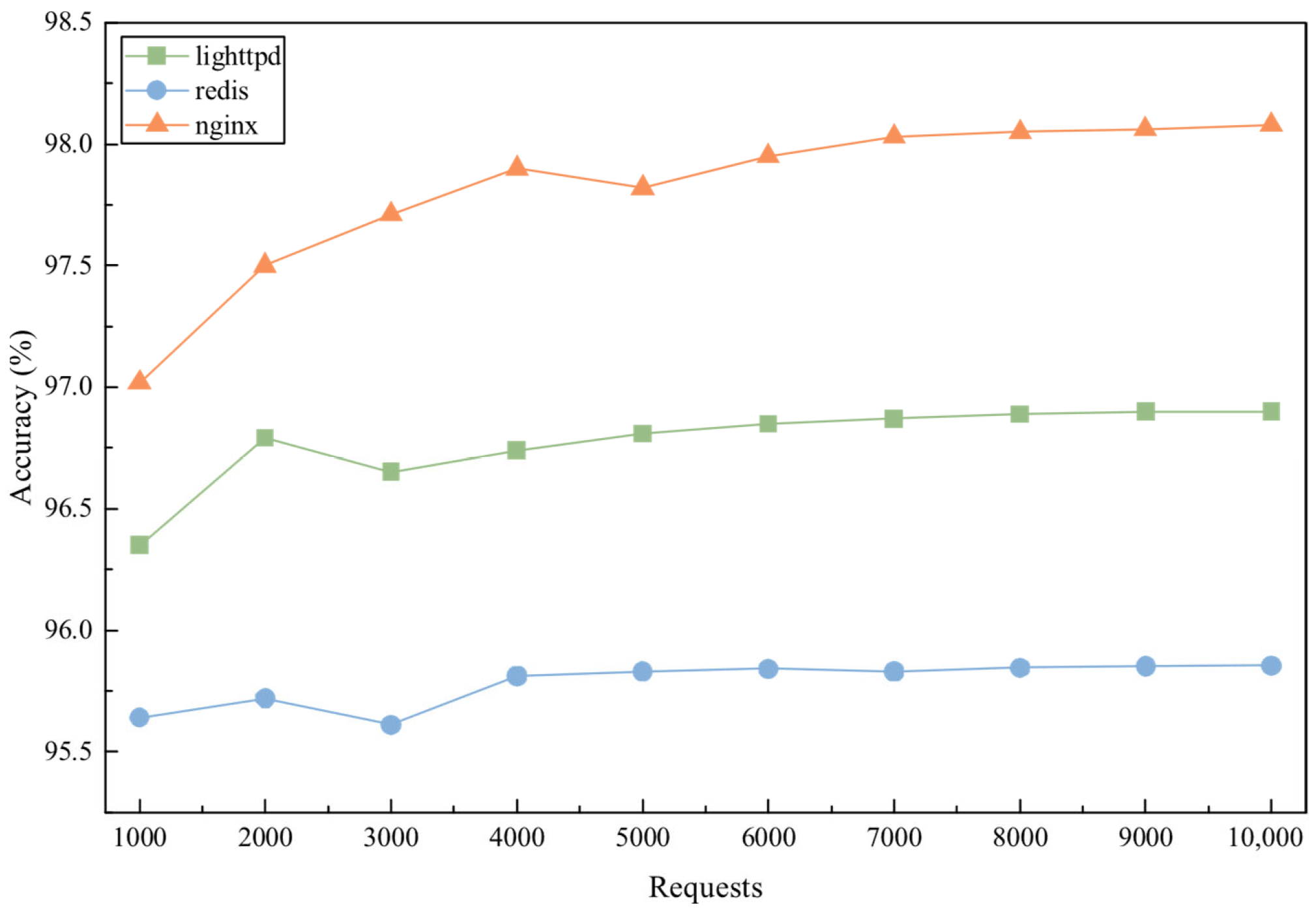

4.2. Effectiveness

4.2.1. Uninterrupted Execution Verification

4.2.2. Recovery Correctness Verification

4.3. Security

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| MVX | Multi-Variant Execution |

| SSC | Syscall Coordinator |

| RR | Record–Replay |

References

- Temizkan, O.; Park, S.; Saydam, C. Software diversity for improved network security: Optimal distribution of software-based shared vulnerabilities. Inf. Syst. Res. 2017, 28, 828–849.

- Albusays, K.; Bjorn, P.; Dabbish, L.; Ford, D.; Murphy-Hill, E.; Serebrenik, A.; Storey, M.-A. The diversity crisis in software development. IEEE Softw. 2021, 38, 19–25.

- Cox, B.; Evans, D.; Filipi, A.; Rowanhill, J.; Hu, W.; Davidson, J.; Knight, J.; Nguyen-Tuong, A.; Hiser, J. N-Variant Systems: A Secretless Framework for Security through Diversity. In Proceedings of the 15th USENIX Security Symposium, Vancouver, BC, Canada, 31 July 2006; pp. 105–120.

- Yao, D.; Zhang, Z.; Zhang, G.; Liu, H.; Pan, C.; Wu, J. A Survey on Multi-Variant Execution Security Defense Technology. J. Cyber Secur. 2020, 5, 77–94.

- Bhamare, D.; Zolanvari, M.; Erbad, A.; Jain, R.; Khan, K.; Meskin, N. Cybersecurity for industrial control systems: A survey. Comput. Secur. 2020, 89, 101677.

- Min, B.H.; Borch, C. Systemic failures and organizational risk management in algorithmic trading: Normal accidents and high reliability in financial markets. Soc. Stud. Sci. 2022, 52, 277–302.

- Ruohonen, J.; Rauti, S.; Hyrynsalmi, S.; Leppänen, V. A case study on software vulnerability coordination. Inf. Softw. Technol. 2018, 103, 239–257.

- Wu, J. Development paradigms of cyberspace endogenous safety and security. Sci. Sin. Informationis 2022, 52, 189–204.

- Chen, N.; Jiang, Y.; Hu, A.Q. An Attack Feedback Dynamic Scheduling Strategy Based on Endogenous Security. J. Inf. Secur. Res. 2023, 9, 2–12.

- Goktas, E.; Kollenda, B.; Koppe, P.; Bosman, E.; Portokalidis, G.; Holz, T.; Bos, H.; Giuffrida, C. Position-independent code reuse: On the effectiveness of ASLR in the absence of information disclosure. In Proceedings of the 2018 IEEE European Symposium on Security and Privacy (EuroS&P), London, UK, 24–26 April 2018; pp. 227–242.

- Chen, Y.; Wang, J.; Pang, J.M.; Yue, F. Diversified Compilation Method Based on LLVM. Comput. Eng. 2025, 51, 275–283.

- Chen, Z.; Lu, Y.; Qin, J.; Cheng, Z. An optimal seed scheduling strategy algorithm applied to cyberspace mimic defense. IEEE Access 2021, 9, 129032–129050.

- Wei, S.; Zhang, H.; Zhang, W.; Yu, H. Conditional Probability Voting Algorithm Based on Heterogeneity of Mimic Defense System. IEEE Access 2020, 8, 188760–188770.

- Salamat, B.; Jackson, T.; Gal, A.; Franz, M. Orchestra: Intrusion detection using parallel execution and monitoring of program variants in user-space. In Proceedings of the 4th ACM European Conference on Computer Systems, Nuremberg, Germany, 1–3 April 2009; pp. 33–46.

- Volckaert, S.; Coppens, B.; Voulimeneas, A.; Homescu, A.; Larsen, P.; De Sutter, B.; Franz, M. Secure and efficient application monitoring and replication. In Proceedings of the 2016 USENIX Annual Technical Conference (USENIX ATC 16), Denver, CO, USA, 22–24 June 2016; pp. 167–179.

- Volckaert, S.; Coppens, B.; De Sutter, B.; De Bosschere, K.; Larsen, P.; Franz, M. Taming parallelism in a multi-variant execution environment. In Proceedings of the Twelfth European Conference on Computer Systems, Belgrade, Serbia, 23–26 April 2017; pp. 270–285.

- Hosek, P.; Cadar, C. Varan the unbelievable: An efficient n-version execution framework. ACM SIGARCH Comput. Archit. News 2015, 43, 339–353.

- Koning, K.; Bos, H.; Giuffrida, C. Secure and efficient multi-variant execution using hardware-assisted process virtualization. In Proceedings of the 2016 46th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN), Toulouse, France, 28 June–1 July 2016; pp. 431–442.

- Yeoh, S.; Wang, X.; Jang, J.-W.; Ravindran, B. sMVX: Multi-Variant Execution on Selected Code Paths. In Proceedings of the 25th International Middleware Conference, Hong Kong, China, 2–6 December 2024; pp. 62–73.

- Yao, D.; Zhang, Z.; Zhang, G.; Wu, J. MVX-CFI: A practical active defense framework for software security. J. Cyber Secur. 2020, 5, 44–54.

- Pan, C.; Zhang, Z.; Ma, B.; Yao, Y.; Ji, X. Method against process control-flow hijacking based on mimic defense. J. Commun. 2021, 42, 37–47.

- Schwartz, D.; Kowshik, A.; Pina, L. Jmvx: Fast Multi-threaded Multi-version Execution and Record-Replay for Managed Languages. Proc. ACM Program. Lang. 2024, 8, 1641–1669.

- Cao, J.; Arya, K.; Garg, R.; Matott, S.; Panda, D.K.; Subramoni, H.; Vienne, J.; Cooperman, G. System-level scalable checkpoint-restart for petascale computing. In Proceedings of the 2016 IEEE 22nd International Conference on Parallel and Distributed Systems (ICPADS), Wuhan, China, 13–16 December 2016; pp. 932–941.

- Savin, G.I.; Shabanov, B.M.; Fedorov, R.S.; Baranov, A.V.; Telegin, P.N. Checkpointing Tools in a Supercomputer Center. Lobachevskii J. Math. 2020, 41, 2603–2613.

- CRIU. Available online: https://criu.org/Main_Page (accessed on 8 May 2025).

- Mashtizadeh, A.J.; Garfinkel, T.; Terei, D.; Mazieres, D.; Rosenblum, M. Towards practical default-on multi-core record/replay. ACM SIGPLAN Not. 2017, 52, 693–708.

- Laadan, O.; Viennot, N.; Nieh, J. Transparent, lightweight application execution replay on commodity multiprocessor operating systems. In Proceedings of the 2010 ACM SIGMETRICS International Conference on Measurement and Modeling of Computer Systems, New York, NY, USA, 14–18 June 2010; pp. 155–166.

- Lidbury, C.; Donaldson, A.F. Sparse record and replay with controlled scheduling. In Proceedings of the 40th ACM SIGPLAN Conference on Programming Language Design and Implementation, Phoenix, AZ, USA, 22–26 June 2019; pp. 576–593.

- Lu, K.; Xu, M.; Song, C.; Kim, T.; Lee, W. Stopping memory disclosures via diversification and replicated execution. IEEE Trans. Dependable Secur. Comput. 2018, 18, 160–173.

- Volckaert, S.; Coppens, B.; De Sutter, B. Cloning your Gadgets: Complete ROP Attack Immunity with Multi-Variant Execution. IEEE Trans. Dependable Secur. Comput. 2016, 13, 437–450.

- Maurer, M.; Brumley, D. TACHYON: Tandem execution for efficient live patch testing. In Proceedings of the 21st USENIX Conference on Security Symposium (Security’12), Bellevue, WA, USA, 8–10 August 2012; pp. 617–639.

- Zhou, D.; Tamir, Y. Hycor: Fault-tolerant replicated containers based on checkpoint and replay. arXiv 2021, arXiv:2101.09584.

| Category | Examples |
|---|---|
| Non-deterministic system calls | getpid, time, random, fstat… |
| Sensitive system calls | mkdir, write, sendfile… |
| External input system calls | read, recv, accept… |
| Process-related system calls | fork, create… |

| Approach | Recovery Support | Parallelism During Recovery | Notes |
|---|---|---|---|
| Ours | Yes | Yes | Enables parallel recovery without halting normal execution |
| Orchestra [14] | Full restart only | No | Stops execution when an anomaly is detected and restarts the variants |
| ReMon [15] | No | No | Implements record–replay for multi-thread parallelism |
| MVEE [16] | No | No | Similar to ReMon |
| Varan [17] | Partial | No | Restricts recovery to slave variants via a leader–follower model |
| sMVX [19] | No | No | Recovery is not considered |

| System | Performance Overhead: Recording | Performance Overhead: Recovery |
|---|---|---|
| Ours | 6.96% | 8.98% |
| Scribe [27] | 5% | / |
| Jmvx [22] | 8% | 13% |

| System | Record: Lighttpd | Record: Redis | Record: Nginx | Replay: Lighttpd | Replay: Redis | Replay: Nginx |
|---|---|---|---|---|---|---|
| Ours | 1.52× | 1.35× | 1.68× | 1.17× | 1.08× | 1.15× |
| MvArmor [18] | 1.77× | / | 1.47× | / | / | / |
| ReMon [15] | 1.55× | 1.45× | 2.94× | / | / | / |
| Tachyon [31] | 1.48× | / | / | / | / | / |
| sMVX [19] | 2.23× | / | 2.66× | / | / | / |
| Castor [26] | / | / | / | 1.13× | / | 1.09× |
| HyCoR [32] | / | / | / | 1.05× | 1.21× | / |

| Program | Correctness | Uninterrupted Operation (25%) | Uninterrupted Operation (50%) | Uninterrupted Operation (75%) |
|---|---|---|---|---|
| perlbench | √ | 2 | 2 | 1 |
| bzip2 | √ | 2 | 2 | 2 |
| gcc | √ | 2 | 2 | 2 |
| mcf | √ | 2 | 2 | 2 |
| gobmk | √ | 2 | 2 | 2 |
| sjeng | √ | 2 | 2 | 2 |
| libquantum | √ | 2 | 2 | 2 |
| h264ref | √ | 2 | 2 | 1 |
| omnetpp | √ | 2 | 2 | 1 |
| astar | √ | 2 | 2 | 2 |
| xalancbmk | √ | 2 | 2 | 2 |

| CVE | Threat | Software Version | Defense Success |
|---|---|---|---|
| CVE-2013-2028 | Stack overflow | Nginx 1.3.9 | √ |
| CVE-2014-0160 | Heap overflow | OpenSSL 1.0.1 | √ |
| CVE-2021-44790 | Stack overflow | Apache HTTP Server ≤ 2.4.51 | √ |
| CVE-2017-13089 | Integer overflow | Wget < 1.19.2 | √ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhong, X.; Zhao, X.; Zhang, B.; Li, J.; Wang, Y.; Li, Y. A Record–Replay-Based State Recovery Approach for Variants in an MVX System. Information 2025, 16, 826. https://doi.org/10.3390/info16100826
