1. Introduction

Quantum computing, as a disruptive technology, has received widespread attention from various fields, since it can efficiently accelerate the solving of some fundamental computational problems. For example, Shor’s algorithm [1] can solve the factorization problem in polynomial time, posing a great threat to modern cryptography in theory. Grover’s algorithm [2] can achieve a square-root speedup in unstructured search. The HHL algorithm [3], proposed in 2009, can provide an exponential speedup in solving linear equations under suitable conditions.

As a linear equation solver, the HHL algorithm consists of three main parts. The first part employs quantum phase estimation to estimate the eigenvalues of the input matrix. The second part applies controlled quantum gates to rotate the auxiliary qubits based on the estimated eigenvalues. The third part uses the inverse of quantum phase estimation to undo the entanglement and restore some of the auxiliary qubits. By measuring the remaining auxiliary qubits, one can obtain the target state. By modifying the second step of the HHL algorithm, we can easily apply the idea of the HHL algorithm to matrix–vector multiplication. It should be noted that the complexity of the HHL algorithm depends not only on the size of the matrix but also on the condition number of the matrix. The condition number measures how sensitive the solution of the corresponding linear system is to perturbations in the input, and is an inherent property of the matrix. Therefore, it is difficult to manually constrain the condition number of the matrix. Some types of matrices may have a high condition number but contain specific structures that allow more efficient computation. Circulant matrices are one of these types and, in this paper, we focus on their computation.
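To make the role of the condition number concrete, the following minimal sketch computes it for a small circulant matrix. It relies on the standard fact that the eigenvalues of a circulant matrix are the discrete Fourier transform of its first column; the helper names and the example vector are illustrative assumptions and do not come from this paper.

```python
import numpy as np

def circulant(c):
    """Dense circulant matrix with first column c, i.e. C[j, k] = c[(j - k) mod N]."""
    c = np.asarray(c)
    n = len(c)
    idx = (np.arange(n)[:, None] - np.arange(n)[None, :]) % n
    return c[idx]

def circulant_condition_number(c):
    """2-norm condition number obtained from the eigenvalues (the DFT of c).

    A circulant matrix is normal, so its singular values are the absolute
    values of its eigenvalues.
    """
    magnitudes = np.abs(np.fft.fft(c))
    return magnitudes.max() / magnitudes.min()

# Illustrative first column (an assumption, not taken from the paper).
c = np.array([4.0, 1.0, 0.0, 1.0])
kappa_fft = circulant_condition_number(c)
kappa_dense = np.linalg.cond(circulant(c))
print(kappa_fft, kappa_dense)
```

Both values agree, which illustrates that the condition number is fixed by the matrix itself and cannot be tuned independently of its entries.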

Circulant matrices have been well studied in both classical and quantum settings. In the classical setting, it was shown that the product of a circulant matrix and a vector of size N can be computed with O(N log N) operations using the FFT (fast Fourier transform) [4]. By embedding a Toeplitz matrix in a circulant matrix, Golub and van Loan showed that a Toeplitz matrix and a vector can also be multiplied in time O(N log N) [4]. Circulant matrices and related structured matrices were also studied in [5,6,7]. In the quantum setting, different quantum expressions for the circulant matrix were presented in [8,9,10]. Reference [8] presents a quantum construction method for circulant matrices. Since a Toeplitz matrix can serve as a submatrix of a circulant matrix, reference [9] provides an asymptotic method for solving Toeplitz systems. Reference [10] applies circulant matrices to quantum string processing.
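The classical FFT-based product mentioned above can be sketched in a few lines. This is a minimal illustration, assuming the common convention in which the circulant matrix is defined by its first column, C[j, k] = c[(j - k) mod N]; the function names are hypothetical.

```python
import numpy as np

def circulant_matvec_fft(c, x):
    """Return C @ x for C[j, k] = c[(j - k) mod N] using O(N log N) FFTs."""
    return np.fft.ifft(np.fft.fft(c) * np.fft.fft(x))

def circulant_matvec_dense(c, x):
    """Reference O(N^2) product that builds the circulant matrix explicitly."""
    c = np.asarray(c)
    n = len(c)
    idx = (np.arange(n)[:, None] - np.arange(n)[None, :]) % n
    return c[idx] @ np.asarray(x)

rng = np.random.default_rng(0)
c = rng.standard_normal(8)
x = rng.standard_normal(8)
y_fft = circulant_matvec_fft(c, x)
y_dense = circulant_matvec_dense(c, x)
print(np.allclose(y_fft, y_dense))
```

The FFT version never forms the matrix, which is where the saving over the dense product comes from.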

In this paper, we propose two new quantum algorithms for circulant matrix–vector multiplication, which take advantage of the features of quantum mechanics and have better computational complexity than classical algorithms. For the first algorithm we propose, the complexity is in most cases. For the second algorithm, if the elements in the circulant matrix and vector are randomly chosen from or with a norm no larger than d, then the proportion of matrices and vectors that can be computed with complexity approaches 1 as the dimension of the matrix increases. Similarly, if the elements are randomly chosen from with a norm no larger than d, then the proportion of matrices that can be computed with complexity also approaches 1 as the dimension increases.

Theorem 1. There exist two quantum algorithms which compute the multiplication of a circulant matrix and a vector. The first algorithm computes the multiplication of a circulant matrix and a vector over (or with a probability of at least and complexity , where N is the dimension of the matrix, and the proportion of the circulant matrix and vector combinations that can be effectively computed approaches 1 as the dimension increases. The second algorithm computes the multiplication of most circulant matrices and vectors over (or with a probability of at least and complexity , where N is the dimension of the matrix, and the proportion of the circulant matrix and vector combinations that can be effectively computed approaches 1 as the dimension increases.

Compared to the approach similar to the HHL algorithm, our second algorithm has two advantages. Firstly, our second algorithm directly performs calculations on quantum states without the need for Hamiltonian simulation, thus reducing computational complexity and errors. Secondly, our second algorithm does not involve quantum phase estimation (QPE), thus reducing the use of auxiliary qubits and errors. Our second algorithm also has a measurement step and has the same complexity as the HHL approach during measurement. The comparison is shown in Table 1. In particular, when the circulant matrix and vector values are in , the algorithm complexity is logarithmic with respect to the size of the matrix.

Furthermore, we apply our algorithms in convolution computation, which is an important and fundamental problem in quantum machine learning (QML). The field of quantum machine learning has been developing rapidly in recent years. Machine learning algorithms suffer from computational bottlenecks as the dimensionality increases. To promote experimental progress towards realizing quantum information processors, the approach of using quantum computers to enhance conventional machine learning tasks was proposed, and this led to the development of quantum convolutional neural networks (QCNNs). The earliest QCNN was proposed in [11] for solving quantum many-body problems. Subsequently, in [12,13], QCNNs for image recognition were discussed. The difference between their results is that the quantum circuit in [12] is a purely random quantum circuit, while the quantum circuit in [13] has a simple design. In [12], the authors presented real experiments to confirm that their algorithm still has some effect even when using a fully random quantum circuit. The quantum circuit designed in [13] only entangles different qubits without a more specific definition of the quantum circuit. In this paper, we present an effective quantum circuit for computing convolutions, which may be used as a sub-circuit in the quantum circuit of QCNNs.

The structure of this article is as follows. In Section 2, we introduce circulant matrices and some basics of quantum computing, and present some quantum circuit structures that will be used in the quantum algorithms for circulant matrix–vector multiplication. In Section 3, we describe the details of our new algorithms and analyze their running times. As both algorithms involve quantum measurements, Section 4 presents a probability analysis of the measurements and, for parts where theoretical analysis is not available, we present experimental results to support our algorithms. In Section 5, we discuss the specific application of circulant matrix–vector multiplication in convolution computation. Finally, conclusions and future directions are presented in Section 6.

3. Multiplication of Circulant Matrices and Vectors

We present two different algorithms for computing the product of a circulant matrix and a vector, which result in different representations. It should be noted that, when the entries of the circulant matrix and the vector are all in , our second algorithm can achieve exponential acceleration compared to classical algorithms. In this section, the computation of the indices and the binary numbers (or integers) corresponding to basis states is in the ring , where and n is the number of qubits. For the sake of simplicity, we omit the “” symbol in their expression. Specifically, for a variable or a basis state with or , we mean or , with mod .

3.1. The First Algorithm

Theorem 2. There exists a quantum algorithm that computes the multiplication of a circulant matrix and a vector over (or with a probability of at least and complexity , where N is the dimension of the matrix, and the proportion of the circulant matrix and vector combinations that can be effectively computed approaches 1 as the dimension increases.

We suppose that , and is the corresponding circulant matrix for . Let . Then, the input of our algorithm is

where and are the quantum states corresponding to the vectors and , respectively.

If we remove the + sign on the right-hand side, then we can achieve a matrix with . Then, the output of our algorithm is the quantum state , whose amplitudes are equal to .

Our algorithm is presented in Algorithm 1. In the following, we provide a detailed analysis of each step of this algorithm.

| Algorithm 1 Multiplication of a circulant matrix and a vector |

- Input: The quantum state
- Output: The quantum state
- 1: Apply the transformation on the first register.
- 2: Apply the transformation on the second register, then get the state
- 3: Double the basis state of the first register, then get the state
- 4: Apply the quantum adder for the states of the first two registers with the result stored in the first register, then get the state
- 5: Run the quantum state extraction circuit in Figure 6. Then, with some probability, the state of the first three registers is
- 6: Reverse the quantum arithmetic operations performed on the first register, then get the state
- 7: Apply the transformation on the second register, and then measure the second register. When the measurement result for the second register is , get the state
- 8: return.

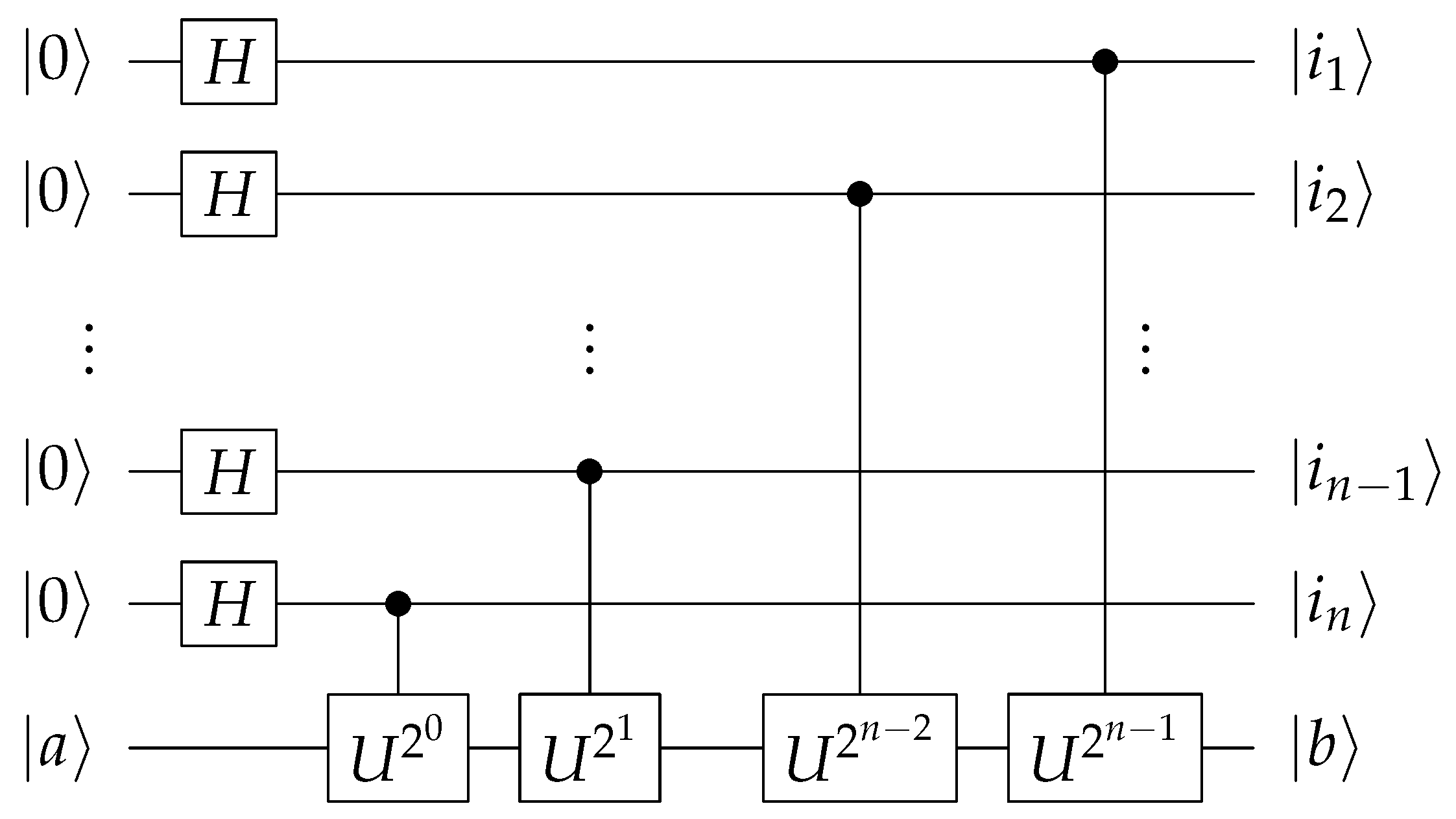

Step 1. We apply the transformation on the first register.

Step 2. We apply the transformation to the second register, with the qubits in the first register serving as the control qubits. Based on the circuit in Figure 4 and the properties of the matrix , we can easily obtain the circuit of the controlled-T operations as shown in Figure 7:

In this circuit, implementing and requires operations as mentioned earlier, and implementing each controlled- requires operations. Therefore, Step 1 requires operations. It should be noted that, for a basis state , ; hence, . Therefore, by modifying the indices of these s, we can obtain the expression in Equation (13).

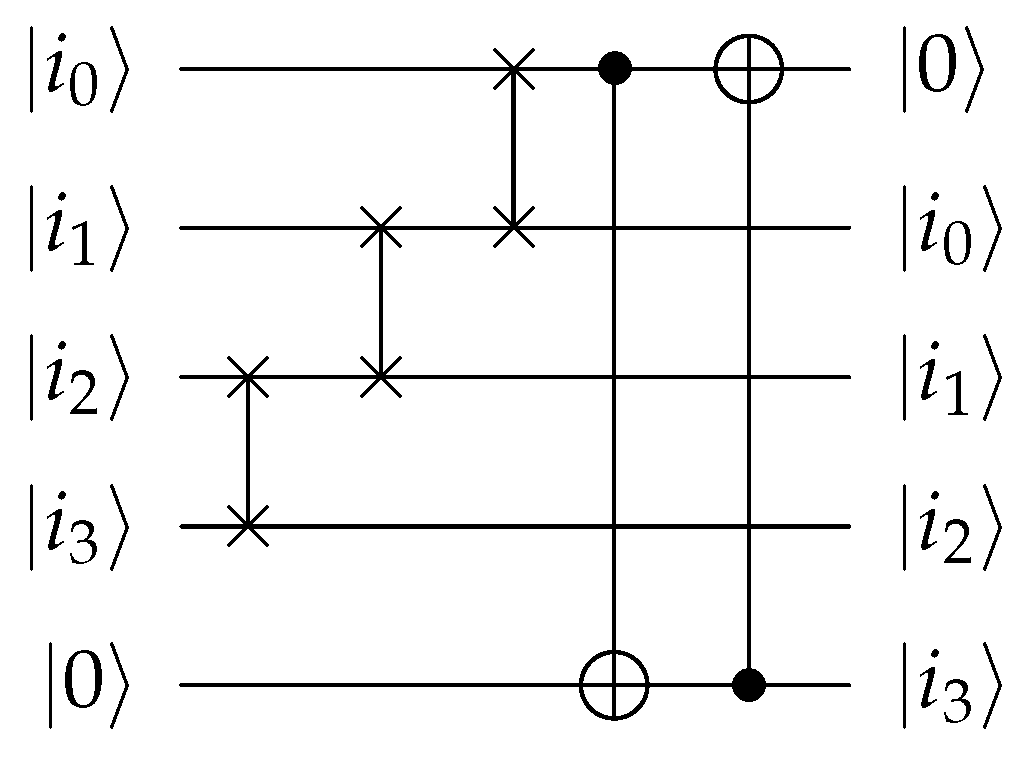

Step 3. We double the basis states of the first register: . This can be implemented directly by some swap gates, CNOT gates, and one auxiliary qubit.

In Figure 8, we present the circuit for doubling the basis state of four qubits. In this circuit, we want to double . By using one auxiliary qubit, we convert to , and the output we need is . This means that on the auxiliary qubit is a garbage output. Here, we do not restore it to immediately, since its value will not affect the following steps. In Step 5, this auxiliary qubit will be restored to by uncomputation. Therefore, we omit this qubit in the description of our algorithm.
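The doubling in Step 3 can be checked classically at the level of bit strings. The sketch below is a classical analogue under stated assumptions, not the circuit of Figure 8: it assumes the index is stored in n bits, that doubling is taken modulo 2^n, and that the displaced top bit is what ends up on the auxiliary qubit. The function name is hypothetical.

```python
def double_basis_state(j, n):
    """Map an n-bit index j to (2*j mod 2**n, ancilla) by moving each bit up one place."""
    bits = [(j >> i) & 1 for i in range(n)]      # bits[0] is the least significant bit
    ancilla = bits[n - 1]                        # the top bit is pushed out to the ancilla
    shifted = [0] + bits[: n - 1]                # every other bit moves one position up
    doubled = sum(b << i for i, b in enumerate(shifted))
    return doubled, ancilla

n = 4
table = [double_basis_state(j, n) for j in range(2 ** n)]
print(table[5], table[11])
```

Because the map only relocates bits, it is a permutation of wires plus one fresh qubit, which is consistent with an implementation by swap and CNOT gates.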

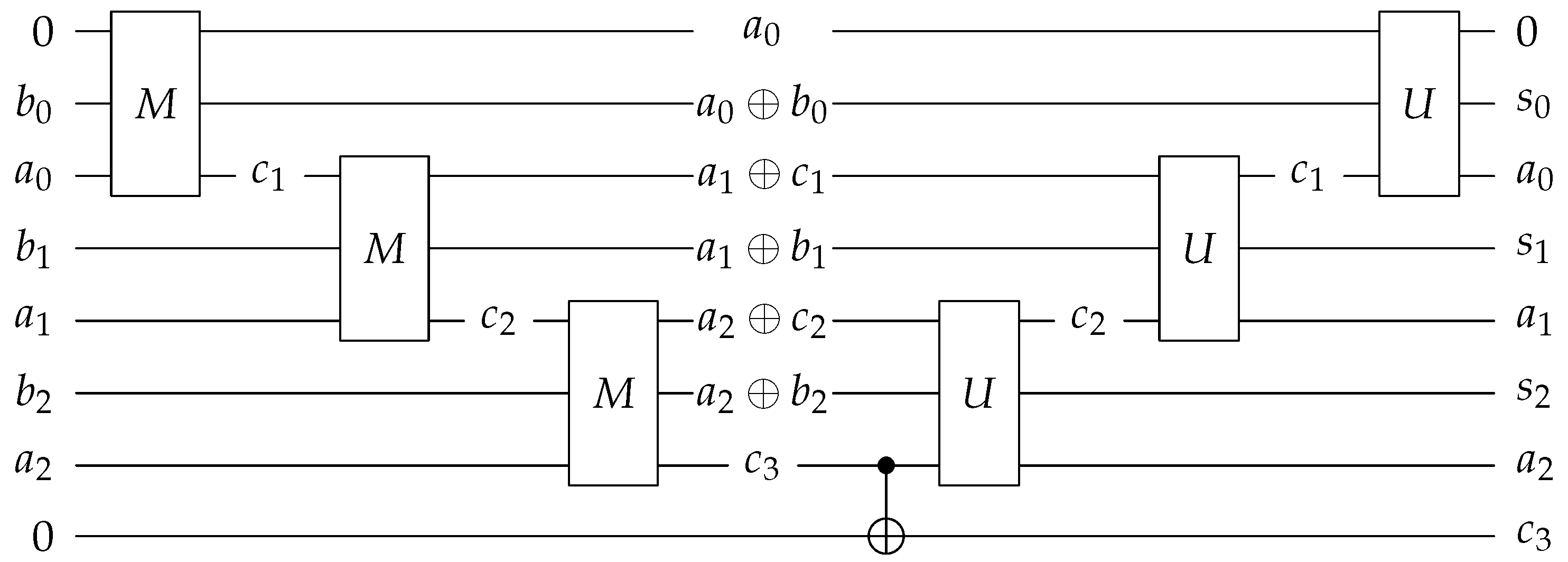

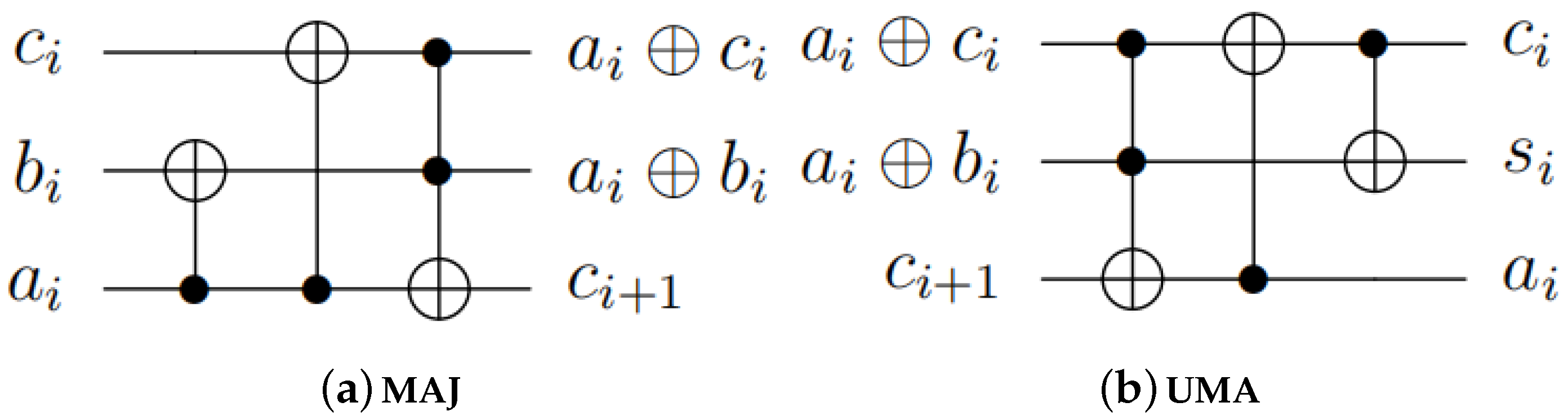

Step 4. We use the quantum adder in Figure 2 to apply the modular addition for the first two registers and store the result in the first register. It should be noted that the mod operation can be easily implemented by removing the operations that generate the highest carry in the quantum adder circuit. Therefore, the complexity of this step is .
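The remark about dropping the highest carry can be illustrated with a classical ripple-carry adder. This is a minimal sketch assuming n-bit operands and a modulus of 2^n; it mirrors the idea only and is not the quantum adder of Figure 2.

```python
def add_mod_2n(a, b, n):
    """Add two n-bit integers bit by bit and discard the final carry (sum mod 2**n)."""
    carry = 0
    result = 0
    for i in range(n):
        ai = (a >> i) & 1
        bi = (b >> i) & 1
        result |= (ai ^ bi ^ carry) << i               # sum bit at position i
        carry = (ai & bi) | (carry & (ai ^ bi))        # carry into position i + 1
    # The carry out of the top position is never written anywhere,
    # which is exactly what reduces the sum modulo 2**n.
    return result

n = 4
sums = {(a, b): add_mod_2n(a, b, n) for a in range(2 ** n) for b in range(2 ** n)}
print(sums[(9, 12)])
```

Omitting the top carry removes work rather than adding it, so the modular version costs no more than the plain adder.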

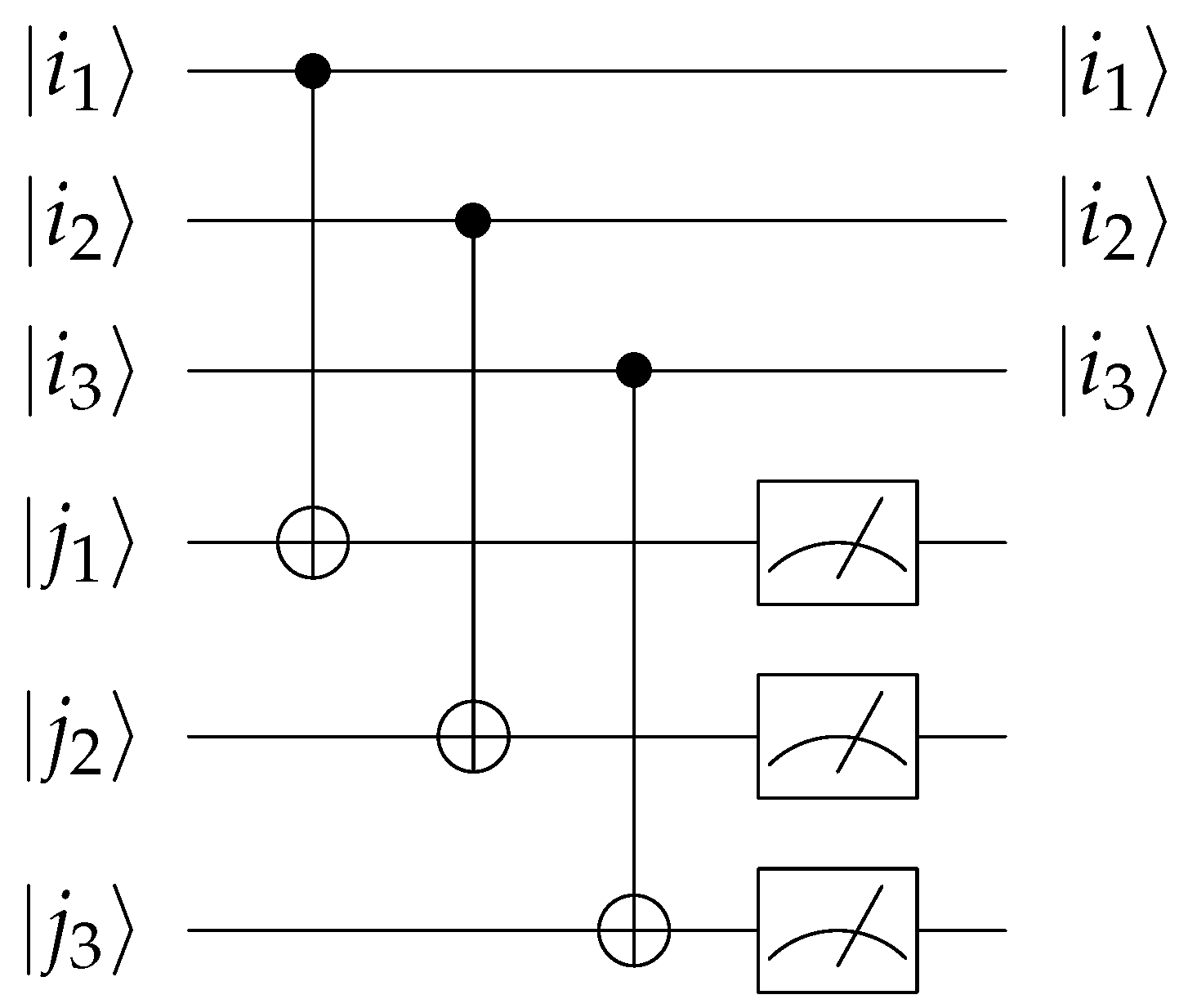

Step 5. We run the quantum state extraction circuit in Figure 6 for the first and third registers in order to obtain the desired state. The complexity of this step is . The probability of obtaining this result is . Since the state of the third register collapses to , the normalized state is multiplied by a coefficient c, which is equal to and can be cancelled out with the denominator. As the probability of the measurement outcome being is , the quantum amplitude amplification algorithm can increase the probability to over with complexity . A detailed analysis of the measurement success probability will be presented in Section 4.
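To show how the cost of amplitude amplification scales with the initial success probability, the sketch below uses the textbook relation: if the initial probability is p = sin^2(theta), then after k rounds the success probability is sin^2((2k + 1) theta). The probabilities passed in are hypothetical values chosen for illustration and are not results from this paper.

```python
import math

def amplification_plan(p):
    """Return (k, boosted) for an initial success probability 0 < p < 1.

    k is the number of amplification rounds, roughly pi / (4 * sqrt(p)) for
    small p, and boosted is the success probability after those rounds.
    """
    theta = math.asin(math.sqrt(p))
    k = math.floor(math.pi / (4 * theta))
    boosted = math.sin((2 * k + 1) * theta) ** 2
    return k, boosted

rounds_small, prob_small = amplification_plan(0.01)
rounds_quarter, prob_quarter = amplification_plan(0.25)
print(rounds_small, round(prob_small, 4))
print(rounds_quarter, round(prob_quarter, 4))
```

The number of rounds grows like the inverse square root of p, compared with roughly 1/p repetitions when the circuit is simply rerun until it succeeds.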

Step 6. We reverse the quantum arithmetic operations performed on the first register. This step has a complexity of .

Step 7. We apply the transformation on the second register, and then measure the second register; the normalized state is multiplied by a coefficient ; the probability of the measurement outcome being is in most cases. A detailed analysis of the measurement success probability will be presented in Section 4.

Step 8. We return the quantum state of the three registers.

Summing the complexities of the preceding steps, the overall complexity of the algorithm is . The output is a quantum state that encodes the result. Compared with a classical result, this quantum state may have more applications, for example, when used in quantum convolutional neural networks.

In this section, we also present a new quantum representation method for circulant matrices, which is given through controlled matrices and related quantum states, with the expectation of having better applications.

3.2. The Second Algorithm

Theorem 3. There exists a quantum algorithm which computes the multiplication of most circulant matrices and vectors over (or with a probability of at least and complexity , where N is the dimension of the matrix, and the proportion of the circulant matrix and vector combinations that can be effectively computed approaches 1 as the dimension increases.

We suppose that , and is the corresponding circulant matrix for . Let ; then, we have that computing is equivalent to computing . Moreover, we have , so can be computed by and . We can then use a quantum extraction circuit to extract the target state, and the whole algorithm is presented in Algorithm 2.

| Algorithm 2 Multiplication of a circulant matrix and a vector |

- Input: The quantum state
- Output: The quantum state
- 1: Apply the inverse quantum Fourier transform ( ) to and the quantum Fourier transform (QFT) to , then get the state
- 2: Use the quantum extraction circuit in Figure 6 to extract our target state from the first two registers. Then, with some probability, we get the state
- 3: Perform on the first register, then get the state
- 4: Return the resulting quantum state.
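The structure of Algorithm 2 can be mimicked with a classical state-vector simulation. The sketch below rests on several assumptions that are not confirmed by the text: the circulant matrix is taken as C[j, k] = c[(k - j) mod N] (suggested by the remark in Section 5 that the convolution matrix is its transpose), the QFT is applied to the state of c and the inverse QFT to the state of x, and the extraction circuit is modelled as a pointwise product of amplitudes followed by renormalization. The success probability of the extraction is not modelled, and all function names are hypothetical.

```python
import numpy as np

def qft(v):
    """Unitary QFT on amplitudes: (F v)_j = sum_k v_k exp(+2*pi*i*j*k/N) / sqrt(N)."""
    return np.fft.ifft(v, norm="ortho")

def inverse_qft(v):
    """Inverse of qft, i.e. the same sum with exp(-2*pi*i*j*k/N)."""
    return np.fft.fft(v, norm="ortho")

def simulate_second_algorithm(c, x):
    """Return the normalized state proportional to C @ x, with C[j, k] = c[(k - j) mod N]."""
    c_state = c / np.linalg.norm(c)
    x_state = x / np.linalg.norm(x)
    a = qft(c_state)                 # transform of the matrix-defining vector (assumed)
    b = inverse_qft(x_state)         # transform of the input vector (assumed)
    extracted = a * b                # extraction modelled as a pointwise product
    extracted = extracted / np.linalg.norm(extracted)
    return qft(extracted)            # final transform back to the index basis

rng = np.random.default_rng(1)
N = 8
c = rng.standard_normal(N)
x = rng.standard_normal(N)
idx = (np.arange(N)[None, :] - np.arange(N)[:, None]) % N   # idx[j, k] = (k - j) mod N
y = c[idx] @ x
expected = y / np.linalg.norm(y)
out = simulate_second_algorithm(c, x)
print(np.allclose(out, expected))
```

Under these assumptions the simulated output matches the normalized classical product, because the Fourier transform diagonalizes every circulant matrix and the product of the two transformed vectors carries the same information as the matrix–vector product.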

Here, we analyze the complexity of each step in detail.

Step 1. We apply to and QFT to . Obviously, the complexity of this step is .

Step 2. We use the quantum extraction circuit in Figure 6 to extract our target state from the first two registers. This step has a complexity of . When the measurement outcome is , we obtain the target state. Since the state of the second register collapses to , the normalized state is multiplied by a coefficient c. If the elements of vectors and (not normalized) are randomly chosen from (over ), or the ball (over ) with a norm of 1, then, for most cases, the probability of the measurement outcome being exceeds . With a complexity of , the quantum amplitude amplification algorithm can increase this probability to at least . Moreover, if the elements of and are randomly chosen from , then, for most cases, the probability of obtaining exceeds . The specific analysis will be discussed in the next section.

Step 3. We perform on the first register, with a complexity of . Then, the amplitude of is

Step 4. We return the quantum state

In summary, the output of this algorithm is exactly the result of the circulant matrix–vector product given in the first algorithm. If the components of the vectors and (not normalized) are randomly selected from (over ), or the ball (over ) with a norm of 1, then, for most cases, the complexity of this algorithm is . If the components of and are randomly selected from , then the complexity of this algorithm is , achieving exponential acceleration compared to classical algorithms.

4. Measurement Success Probability Calculation

In this section, we present a detailed analysis of the probability of obtaining the target states after measurements in the algorithms of Section 3.1 and Section 3.2. Here, we also omit the “mod N” symbol in the indices with . The vectors and in this section are no longer normalized, and the conditions they satisfy will be given below.

For Algorithm 1, we have the following success rate of measurement in Step 4:

In Step 7, we have the state

where is orthogonal to so the success rate of measurement in Step 7 is and is in most cases. We will use experiments later to illustrate.

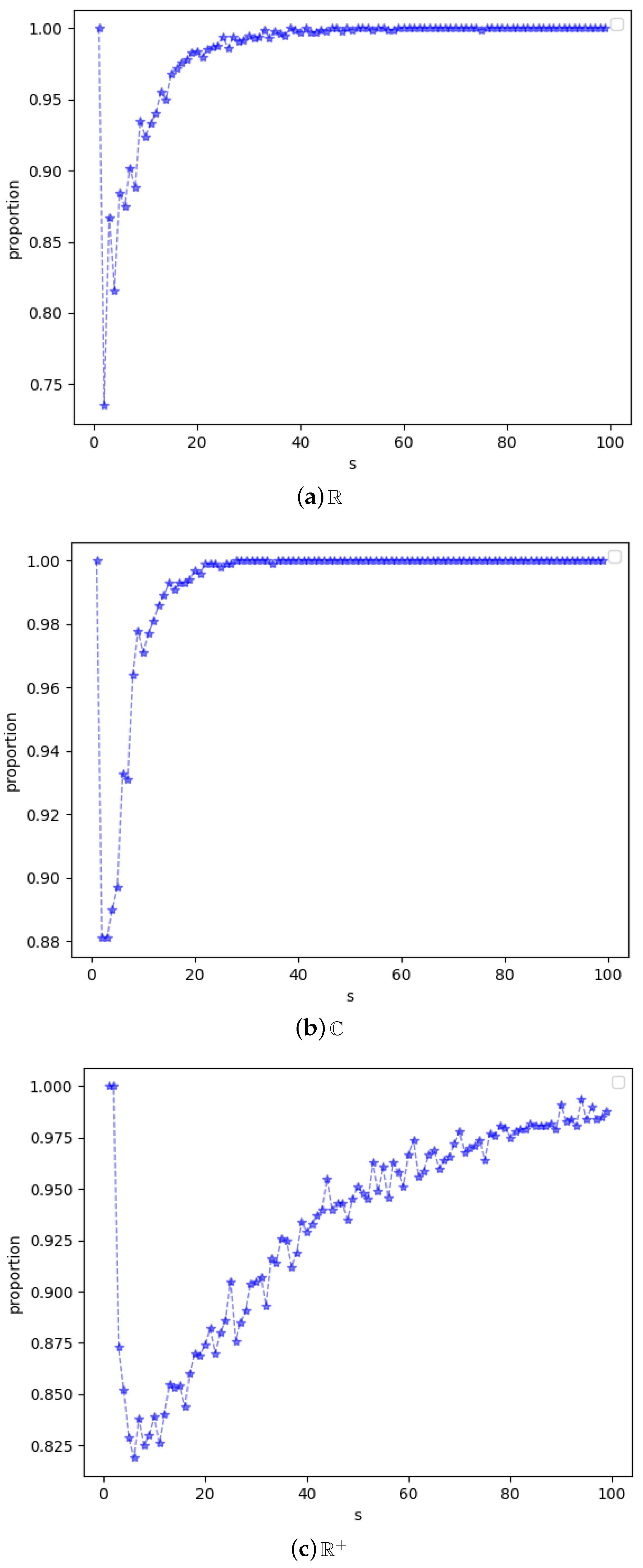

For the probability calculation of Algorithm 2, unfortunately, we were unable to present an explicit formula. Therefore, we used experimental results to demonstrate that, for most matrices, we can effectively compute them in Algorithm 2. Our goal was to estimate the proportion of circulant matrix–vector combinations that our algorithm could effectively compute, in all circulant matrix–vector combinations. We randomly generated matrices and vectors by selecting elements from a given interval, and then used classical algorithms to calculate the required probability. We then recorded the proportion of circulant matrix–vector combinations that we could effectively compute, and observed how this proportion changes with the interval and dimension.

We first considered the circulant matrix–vector multiplication on with the norm of the elements not exceeding d. Let and be two vectors with each component randomly chosen from . To satisfy the condition of a quantum state, we need to normalize them, which gives

If is randomly chosen from , then is randomly chosen from . Therefore, to simplify the computation, we can assume . Similarly, we have

We consider the trend of the proportion of circulant matrix–vector combinations whose probability of obtaining the target state under this interval condition is greater than as the dimension s varies. We randomly selected 1000 sets of and for each in the manner described above, and plotted Figure 9a based on the proportion of circulant matrix–vector combinations whose probability of obtaining the target state was greater than .

We notice that, as s increases, the values of the proportions from Figure 9a gradually approach 1. Therefore, we believe that our algorithm can effectively compute most of the circulant matrix–vector combinations on with the norm of the elements in the matrix and vector not exceeding d, and the proportion of circulant matrix–vector combinations that can be effectively computed gradually approaches 1 as s increases. (It is obvious that the success rate is 1 when ).

Further, we consider the computation of circulant matrix–vector products with the norm of elements not exceeding 1 over , and discuss it in the same way as over , obtaining Figure 9b. We also believe that our algorithm can effectively compute most circulant matrix–vector combinations over with the norm of the elements in the matrix and vector not exceeding 1, and the proportion of circulant matrix–vector combinations that can be effectively computed gradually approaches 1 as s increases.

When the elements are all in , we obtain stronger results than in the first two domains under the same conditions. We raised the baseline probability of the target to . The proportion of circulant matrix and vector combinations whose probability of measuring the target state is greater than changes with the dimension s, as shown in Figure 9c. We find that this proportion also approaches 1 as s increases. Since is a constant, for matrix–vector combinations that satisfy the condition of having a probability greater than , we need k measurements to get the target state with a probability of more than .
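The relation between a constant per-run success probability and the number of repetitions can be sketched as follows. The example assumes independent runs, so that k repetitions succeed at least once with probability 1 - (1 - p)^k; the values of p and the target are hypothetical and are not the thresholds used in the experiments.

```python
import math

def repetitions_needed(p, target):
    """Smallest k with 1 - (1 - p)**k >= target, for 0 < p < 1 and 0 < target < 1."""
    return math.ceil(math.log(1 - target) / math.log(1 - p))

def success_after(p, k):
    """Probability that at least one of k independent runs succeeds."""
    return 1 - (1 - p) ** k

k = repetitions_needed(0.5, 0.99)
print(k, success_after(0.5, k))
```

Since k depends only on p and the target, a constant p gives a constant number of repetitions, independent of the matrix dimension.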

Here, we also present some theoretical analysis results that we obtained. Let ; then, we have

The coefficients of the basis state in the aforementioned state can be denoted as . Let ; then, we have

The probability of obtaining the target state is

We look forward to someone providing an explicit formula for the probability distribution that corresponds to the above expression when the entries of and satisfy some specific distributions.

If the approach modified by the HHL algorithm is used, we only need to calculate the multiplication instead of finding the inverse, so we have the state

where is chosen to be and . Since , . The complexity of quantum state extraction is . So, our second algorithm has the same probability of obtaining the target state as the approach modified by the HHL algorithm.

5. Quantum Convolution Computation

Quantum machine learning is a rapidly developing direction in quantum computing. Quantum convolutional neural networks have been proposed for image recognition and classical information classification. An example of a quantum convolutional neural network was first proposed in [11]. For a typical image recognition problem, the input image undergoes convolution to generate a feature map, which is then classified. Rather than completing general convolution calculations like classical convolutional neural networks, existing quantum convolutional neural networks use one of the following three approaches to entangle different qubits and improve parameters through optimization algorithms: use a fully random circuit, treat the circuit as a black box, or simply use CNOT gates. In contrast, we can use a quantum circuit to complete general convolution calculations.

Here, we focus on the problem of image recognition. First, we consider the conversion from classical information to quantum information. For this goal, Venegas-Andraca et al. [19] proposed a storage method based on a “qubit lattice”, where each pixel in an input image is represented by a qubit, requiring at least bits of storage. Le et al. [20] proposed an FRQI model, which associates pixel values and positions through the tensor product of quantum states. One qubit is used to encode pixel values, and color information is encoded in the probability amplitude. In [21], a NEQR model was proposed, which also associates pixel values and positions through tensor products, but uses d qubits to encode pixel values and encodes grayscale information in the basis state. In [22], a QImR model is proposed to encode 2D images into quantum pure states. In this model, pixel values are represented by the probability amplitude of the quantum state, and pixel positions are represented by the basis state of the quantum state.

We used the QImR model and assumed that we completed the representation of the image. We considered a one-dimensional convolution calculation and, below, we give the definition of convolution calculation.

and are sequences of length m and n, respectively. Their convolution is denoted as , where .

We set D to be the minimal integer satisfying and for certain . According to the definition of the QImR model, the quantum states and are given as follows: and , where and satisfy

Their convolution is denoted as , where satisfies

We plan to use circulant matrix–vector multiplication to compute the convolution. The circulant matrix corresponding to and required for convolution computation should be of the form

It should be noted that the matrix here is the transpose of the matrix we previously introduced. Therefore, we need to construct from , where and . We can first apply an X gate to each qubit of the state to obtain , then apply to obtain the target state . The complexity of this part is .

Then, our two different multiplication algorithms can be used according to different result requirements. Compared with the classical one-dimensional convolution with a complexity of , our algorithm can effectively accelerate it. The computation of two-dimensional convolution can also be extended in the same way.
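The reduction from a one-dimensional convolution to a circulant matrix–vector product can be checked classically. The sketch below assumes real sequences of lengths m and n, zero-padded to the smallest power of two that is at least m + n - 1 so that the cyclic wrap-around never mixes terms; this padding rule and the function name are assumptions and may differ from the choice of D above.

```python
import numpy as np

def linear_convolution_via_circulant(a, b):
    """Compute the linear convolution of a and b as a circulant matrix-vector product."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    m, n = len(a), len(b)
    size = 1
    while size < m + n - 1:          # pad so that the cyclic product has no wrap-around
        size *= 2
    a_pad = np.zeros(size)
    a_pad[:m] = a
    b_pad = np.zeros(size)
    b_pad[:n] = b
    idx = (np.arange(size)[:, None] - np.arange(size)[None, :]) % size
    conv_matrix = a_pad[idx]         # conv_matrix[j, k] = a_pad[(j - k) mod size]
    return (conv_matrix @ b_pad)[: m + n - 1]

a = [1.0, 2.0, 3.0]
b = [0.0, 1.0, 0.5]
result = linear_convolution_via_circulant(a, b)
print(result)
```

The matrix built here has first column a_pad, which is the transposed form referred to in the text; the dense product is used only for checking and would be replaced by one of the two multiplication algorithms.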

In recent years, quantum neural networks have continuously developed, and improving their performance is a worthwhile research topic. For example, reference [23] proposes a new framework, ResQuNNs, as an improvement direction. The algorithm presented in this paper can improve the circuit of each layer and can be combined with other methods, which we expect to yield good results. Quantum machine learning also poses significant challenges, such as the existence of barren plateaus. For this issue, improvements can be made in terms of framework and structure, as seen in references [24,25]. Improvements can also be made to each layer of the circuit, and combining the two aspects may further reduce the impact of barren plateaus.