Abstract

Current AI detection systems often struggle to distinguish Arabic human-written text (HWT) from AI-generated text (AIGT) because of diacritics, the small marks placed above and below Arabic letters. This study introduces robust Arabic text detection models built on Transformer-based pre-trained models, specifically AraELECTRA, AraBERT, XLM-R, and mBERT. Our primary goal is to detect AIGTs in essays and to overcome the challenges posed by diacritics, which usually appear in Arabic religious texts. We created several novel datasets of diacritized and non-diacritized texts comprising up to 9666 HWT and AIGT training examples. We evaluated the detection models on out-of-domain (OOD) datasets to assess their robustness and generalizability. Our detection models trained on diacritized examples achieved up to 98.4% accuracy, compared with GPTZero’s 62.7%, on the AIRABIC benchmark dataset. Our experiments reveal that, while including diacritics in training improves the recognition of diacritized HWTs, duplicating examples with and without diacritics is inefficient despite the high accuracy achieved. Applying a dediacritization filter during evaluation significantly improved model performance. It yielded the best results, outperforming both GPTZero and the detection models that were trained on diacritized examples but evaluated without dediacritization. Although we focused on Arabic because of its writing challenges, our detector architecture is adaptable to any language.

1. Introduction

Artificial intelligence-generated texts (AIGTs) are synthetic texts created by large language models (LLMs) from a user prompt or input. In recent years, LLMs such as GPT-3 [1], introduced in 2020, and PaLM [2], released in 2022, have demonstrated remarkable proficiency in generating coherent and well-structured texts. These models have excelled across diverse natural language processing (NLP) tasks, reflecting significant advances in machine understanding and text generation. ChatGPT [3] subsequently arrived as a variant of GPT-3, enhanced through a training process involving supervised and reinforcement learning. ChatGPT has been adopted well beyond the NLP research community and is available to users across platforms. ChatGPT, particularly its GPT-4 model, has distinguished itself through its ability to closely mimic human writing. Newer generative models such as BARD [4], recently renamed “Gemini” [5], have also attracted attention for their ability to replicate human-like expression and to enhance content generation through internet access and search capabilities.

Prior to the advent of ChatGPT, substantial research focused on the ethical risks associated with LLMs, especially in natural language generation (NLG). These studies explored the potential for misuse and highlighted concerns about the widespread dissemination of false information [6,7].

Since the arrival of ChatGPT, these concerns have intensified, as highlighted by a study of its risks [8]. Advances in generative language models (GLMs) have inadvertently facilitated a range of unethical uses across different sectors. In education, plagiarism has emerged as a significant issue and is investigated in depth in [9]. In scientific research, the use of ChatGPT to write abstracts is critically examined in [10]. The repercussions of these technologies in the medical field are explored in [11].

A growing number of businesses are attempting to detect texts generated by ChatGPT and other multimodal GLMs, and [12] surveys various commercial and readily available online tools designed for this purpose. However, most studies focus on detecting AIGTs written in Latin-based scripts such as English, French, and Spanish. The only study that has targeted the detection of Arabic AIGT versus human-written text (HWT) is [13]. An earlier study [14] showed that current detection systems lack robustness in recognizing Arabic HWTs, especially when diacritics are involved. In the following sections, we address the challenges that Arabic text poses for detection systems designed mostly for Latin-based scripts.

1.1. Arabic Diacritized Texts Background

Arabic, a Semitic language, presents numerous challenges for NLP, ranging from its morphological complexity to the diversity of its dialects and writing styles. The presence or omission of diacritics can contribute significantly to ambiguity in NLP interpretation [15,16,17,18]. Diacritics are small marks written above or below Arabic letters to help the reader pronounce them correctly. Changing the diacritics can also change the meaning of a word. For example, the three-letter word ‘سلم’ (slm) changes meaning depending on where the diacritics are placed. ‘سَلَّمَ’ (pronounced sallama) means he handed over/surrendered. In contrast, ‘سُلَّم’ (pronounced sullam), another form from the same root, means ladder/stairway. Diacritics are integral to the Arabic language. However, most Arabic texts available online or in print carry minimal diacritics, which leads some native speakers to regard them as optional. Such texts are usually understood from context or with only a few diacritics present. From this perspective, diacritics are helpful but not essential for comprehension among native speakers.

However, another viewpoint emphasizes the importance of diacritization for accurate understanding and interpretation. A study [19] examining the impact of diacritics on word embeddings revealed that the diacritized corpus had a significantly higher number of unique words (1,036,989) compared to the non-diacritized corpus (498,849). This increased lexical diversity underscores the complexity and specificity that diacritics add to the Arabic language, helping to disambiguate words that would otherwise appear identical in non-diacritized form.

1.2. The Impact of Diacritics on AI Detection of Arabic Texts

The presence of diacritics in Arabic texts significantly affects AI detection systems, which often struggle to classify diacritics-laden texts accurately for several reasons.

First, AI detection systems are frequently trained on non-diacritized Arabic texts, since these are more common online. Such classifiers have seen diacritized texts far less often, the main exception being religious texts, where diacritics are more prevalent. When they do encounter diacritized texts, the unfamiliar marks may lead them to misclassify the text. This mismatch in training data can cause inaccuracies, because the models are not equipped to handle the additional complexity that diacritics introduce.

Second, researchers commonly apply preprocessing steps that remove diacritics from the dataset or otherwise exclude them from downstream tasks, as in [20,21,22,23]. For example, the documentation for AraBERT [24] and AraELECTRA [25] recommends a preprocessing function that prepares text for downstream tasks. Among other steps, it maps Indian (Eastern Arabic) numerals to Arabic numerals; removes HTML artifacts; replaces URLs, emails, and mentions with special tokens; and removes elongation (tatweel). One of these steps also removes diacritics. This simplifies the text, but it also means that the resulting models are not exposed to diacritics during training. As a result, they may struggle to process and accurately classify diacritized texts.

Moreover, some diacritization tools are built with machine learning (ML) and deep learning (DL) techniques designed to apply the correct diacritic to every letter. Notable tools include Farasa [26], MADAMIRA [27], and the CAMeL tools [16], which use advanced algorithms to perform diacritization. Because these tools produce heavily diacritized output, heavily diacritized text is often the product of AI-based tools. AI detection systems therefore tend to classify heavily diacritized texts as AIGT, since such texts are likely to have been produced or enhanced using AI technologies. This association further complicates the ability of AI systems to distinguish between HWTs and AIGTs.
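The preprocessing pattern described above can be illustrated with a minimal, hypothetical sketch. It is not the official AraBERT/AraELECTRA preprocessing code. The regular expressions, the `[URL]`/`[EMAIL]`/`[USER]` placeholder tokens, and the `strip_diacritics` switch are assumptions chosen for illustration. The sketch treats U+064B to U+0652 (tanween, short vowels, shadda, and sukun) as the diacritic range.

```python
import re

# Hypothetical patterns; the official AraBERT preprocessor uses its own rules.
HTML_TAG = re.compile(r"<[^>]+>")
URL = re.compile(r"https?://\S+|www\.\S+")
EMAIL = re.compile(r"\S+@\S+\.\S+")
MENTION = re.compile(r"@\w+")
DIACRITICS = re.compile(r"[\u064B-\u0652]")  # tanween, short vowels, shadda, sukun
TATWEEL = "\u0640"  # elongation character

# Map Eastern Arabic-Indic digits (U+0660..U+0669) to ASCII digits 0..9.
EASTERN_TO_ASCII_DIGITS = str.maketrans(
    "".join(chr(c) for c in range(0x0660, 0x066A)), "0123456789"
)


def preprocess(text: str, strip_diacritics: bool = True) -> str:
    """Apply AraBERT-style normalization steps (illustrative only)."""
    text = text.translate(EASTERN_TO_ASCII_DIGITS)
    text = HTML_TAG.sub(" ", text)
    text = URL.sub("[URL]", text)
    text = EMAIL.sub("[EMAIL]", text)  # before MENTION so "a@b.com" stays whole
    text = MENTION.sub("[USER]", text)
    text = text.replace(TATWEEL, "")
    if strip_diacritics:
        # This is the step that hides diacritics from the model during fine-tuning.
        text = DIACRITICS.sub("", text)
    return " ".join(text.split())  # collapse repeated whitespace
```

With `strip_diacritics=True`, the word sallama from Section 1.1 reaches the model as the bare letters س ل م. A model fine-tuned only on such output never sees the marks it later encounters in diacritized HWTs.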

To illustrate, Table 1 presents part of an example that was written by a human but classified by GPTZero as AIGT because of its diacritics. When the diacritics are removed, GPTZero classifies the same text as HWT. Only a portion is shown because the full text tested against GPTZero is too long to reproduce. The table compares diacritized and non-diacritized versions of an Arabic text from the AIRABIC benchmark dataset (https://github.com/Hamed1Hamed/AIRABIC/blob/main/Human-written%20texts.xlsx, accessed on 27 June 2024).

Table 1.

Comparison of diacritized and non-diacritized Arabic texts and their classification by GPTZero.

Balancing these perspectives, it becomes clear that, while diacritics enrich the Arabic language and provide essential phonetic and grammatical information, they also introduce challenges for AI detection systems. On the one hand, the clarity provided by diacritics is important for the accurate interpretation and disambiguation of words. On the other hand, the minimal use of diacritics in everyday texts means that AI models should be robust enough to handle both diacritized and non-diacritized forms.

Therefore, the main objective of this research is to tackle the challenges posed by diacritics by developing the first AI detector specifically trained on diacritized HWTs and AIGTs. This novel approach aims to improve detection accuracy compared to previous efforts [13] and provide deeper insights into the impact of diacritization on AI detection systems. By training models on both diacritized and non-diacritized texts, we seek to enhance the robustness of AI detectors, ensuring their effectiveness across various forms of Arabic text.

Considering the above, this study seeks to address the following critical questions:

- Will training the classifier on diacritized texts enhance the detector’s robustness towards such texts?

- Does training the detector on diacritics-laden texts prove more effective than implementing a dediacritization filter prior to the classification step?

- Should the dataset include a diverse range of HWTs from various domains/fields to enhance recognition capabilities, or is it sufficient to focus on one domain with multiple writing styles?

- How do different transformer-based, pre-trained models (AraELECTRA, AraBERT, XLM-R, and mBERT) compare in terms of performance when trained on diacritized versus dediacritized texts?

- What is the impact of using a dediacritization filter during evaluation on the overall accuracy and robustness of the detection models across different OOD datasets?

This study investigates these research questions through extensive evaluation and analysis of multiple encoder-based models and novel datasets.

The rest of this paper is structured as follows. First, we review studies on AIGT detection conducted across several languages since the arrival of ChatGPT. Next, we detail our methodology, including the creation of novel datasets and the fine-tuning of transformer-based models. Following this, we discuss the results of our experiments. We compare the performance of models trained on diacritized versus dediacritized texts and examine the impact of applying a dediacritization filter during evaluation. We then present our findings, discuss their implications, and provide practical recommendations for improving the detection of Arabic AIGT while reducing the misclassification of HWTs. Finally, we conclude by summarizing our key findings.

2. Related Works

Since 2023, research interest in detecting AIGTs generated by ChatGPT and other advanced multimodal technologies has increased significantly. Some researchers are developing new datasets for this task and exploring which attributes and algorithms improve classification accuracy. In this section, we summarize the main studies that attempt to detect AIGTs in the ChatGPT era, together with the datasets available across different languages. Our review focuses exclusively on academic studies. We deliberately exclude web-based, non-academic tools because their training methods and internal mechanisms are unclear. The studies are grouped by the languages they target: English; Chinese and English; French and English; Japanese; Arabic; and, finally, a study covering four languages (English, Spanish, German, and French).

One of the earliest studies on detecting ChatGPT-generated texts [28] applied the ML algorithm XGBoost to a dataset of HWTs and ChatGPT-generated texts and achieved an accuracy of 96%. However, the study was limited to only 126 English examples each of HWT and AIGT. The authors also did not test their classifier on OOD datasets to assess its robustness.

Another early study [29] contributed to this field by introducing various detection models and two extensive datasets containing both HWT and AIGT in English and Chinese. Although detection was not the study’s sole aim, it also compared HWT with ChatGPT outputs. The authors released their datasets under the name HC3 (Human ChatGPT Comparison Corpus), comprising approximately 40,000 question-answer pairs. The questions were drawn from existing datasets such as OpenQA [30] and Reddit ELI5 [31] and answered with an early release of ChatGPT 3.5. However, an examination of the English AIGT portion of HC3 revealed that some entries were incomplete or missing ChatGPT-generated content. This left approximately 20,982 usable examples rather than the 26,903 claimed by its authors [13]. For detection, the researchers used Transformer-based models, specifically RoBERTa-base [32] for the English dataset and hfl-chinese-roberta-wwm-ext for the Chinese version, as well as a logistic regression ML model. Their results indicate that the Transformer-based pre-trained models outperformed the ML model. However, they trained the models for only one epoch on the English dataset and two epochs on the Chinese dataset. Although the results were satisfactory, they could likely be improved by training for more epochs and adjusting the learning rate.

Another study [33] focused on detecting ChatGPT-generated texts in English and French. The authors used the English version of the HC3 dataset and introduced their own French dataset. For this dataset, they drew HWTs from several existing datasets and generated AIGTs with ChatGPT and BingChat. They fine-tuned Transformer-based pre-trained models for binary classification, using CamemBERTa [34] and CamemBERT [35] for the French dataset and RoBERTa and ELECTRA [36] for the English dataset. They also combined the two datasets and used the multilingual XLM-RoBERTa-base (XLM-R) model [37]. This was the first study to evaluate on an OOD dataset and to introduce a small dataset for adversarial attacks. The authors noted that the models’ accuracy decreased on OOD texts.

One of the earliest studies targeting Japanese was presented in [38]. The authors used stylometric analysis, which examines linguistic style, to compare 72 HWT examples from academic papers with 144 AIGT examples produced by ChatGPT (GPT-3.5 and GPT-4). The study employed a classical ML algorithm, random forest, to classify texts as human- or AI-written. However, it did not use an OOD dataset to test the classifier’s effectiveness.

The first study to attempt AIGT detection for Arabic is presented in [13]. The authors introduced two datasets: a native handcrafted dataset containing AIGT and HWT, and a large translated AIGT dataset derived from HC3 and supplemented with existing HWT datasets. The study used two encoder-based pre-trained models, AraELECTRA and XLM-R, and evaluated each on three OOD test sets, including the AIRABIC benchmark [14]. The authors compared their results against existing detection tools such as GPTZero [39] and OpenAI Text Classifier [40]. The detection models trained on the native Arabic dataset outperformed both GPTZero and OpenAI Text Classifier on the AIRABIC benchmark. The study also highlighted the challenges posed by diacritics in HWT. The authors concluded that a detection model’s robustness depends heavily on its training dataset, as the model trained on the translated version of HC3 performed poorly in detecting OOD AIGTs.

The first study to attempt AIGT detection in four languages was conducted in [41]. The authors examined whether ML could distinguish HWT from AIGT or AI-rephrased text in English, Spanish, French, and German. They created a dataset covering 10 topics, with 100 HWT examples, 100 AIGT examples, and 100 AI-rephrased articles for each language. The study used a variety of features:

- perplexity-based features
- semantic features
- list lookup features
- document features (e.g., the number of words per sentence)
- error-based features
- readability features
- AI feedback features (asking ChatGPT to classify the text)
- text vector features (based on the semantic content of the text)

The best-performing classifier combined all of these features and reached high accuracy, but the study did not report an evaluation on an OOD dataset.

3. Methodology

3.1. Data Collection and Preparation

This study used several datasets derived from two primary sources. The first was the Custom dataset introduced in [13], to which we applied diacritics to create the Diacritized Custom dataset. The second source was the Ejabah website (https://www.ejaba.com/, accessed on 19 February 2024). It is the largest Arabic question-answering platform, where users can ask questions and receive answers from other community members and from LLMs. To maintain balance, we ensured an even distribution of samples between HWT and AIGT across all datasets.

When preparing our datasets, we allocated 80% of the data for training, 10% for validation, and 10% for testing. We prioritized a larger training set because our aim was not merely high performance on held-out data from the same dataset. Rather, we wanted the model to be robust on OOD datasets, that is, to generalize effectively to unseen data. The details of these datasets are described in the following sections. The software and equipment used in this research are listed in Appendix A.
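An 80/10/10 split that keeps HWT and AIGT balanced in every split can be sketched as follows. The function name, the `(text, label)` tuple format, and the use of Python's `random` module are assumptions for illustration. The default seed of 1 mirrors the seed reported in Section 3.2.3.

```python
import random
from collections import defaultdict


def stratified_split(samples, train_frac=0.8, val_frac=0.1, seed=1):
    """Split (text, label) pairs into train/val/test, stratified by label."""
    by_label = defaultdict(list)
    for text, label in samples:
        by_label[label].append((text, label))

    rng = random.Random(seed)
    train, val, test = [], [], []
    for label in sorted(by_label):
        group = by_label[label][:]
        rng.shuffle(group)
        n_train = round(len(group) * train_frac)
        n_val = round(len(group) * val_frac)
        train.extend(group[:n_train])
        val.extend(group[n_train:n_train + n_val])
        test.extend(group[n_train + n_val:])  # remainder goes to test

    for split in (train, val, test):
        rng.shuffle(split)  # interleave the two classes within each split
    return train, val, test
```

With 4500 examples per class, this yields 7200/900/900 examples, which matches the Custom Plus sizes given later in this section.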

3.1.1. Diacritized Custom Dataset

We applied diacritics to the Custom dataset using Farasa, a comprehensive toolkit for Arabic language processing (https://farasa.qcri.org/diacritization/, accessed on 6 February 2024). The result was a dataset that included both diacritized and non-diacritized writing styles. The Diacritized Custom dataset contained twice as many examples as the original Custom dataset, with 4932 samples for training, 612 for validation, and 612 for testing.
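The doubling described above, in which each original example is kept alongside a diacritized copy with the same label, can be sketched as follows. The `diacritize` argument is a hypothetical stand-in for a call to Farasa or any other diacritizer, and no Farasa API is assumed. `toy_diacritize` is a hypothetical stub used only to make the sketch runnable.

```python
from typing import Callable, List, Tuple

Sample = Tuple[str, int]  # (text, label); the label convention is an assumption


def add_diacritized_copies(
    samples: List[Sample], diacritize: Callable[[str], str]
) -> List[Sample]:
    """Return the original samples followed by a diacritized copy of each one."""
    doubled = list(samples)
    doubled.extend((diacritize(text), label) for text, label in samples)
    return doubled


def toy_diacritize(text: str) -> str:
    # Hypothetical stub: appends a fatha (U+064E) to every Arabic letter.
    return "".join(ch + "\u064e" if "\u0621" <= ch <= "\u064a" else ch for ch in text)
```

Applied to a training split of 2466 examples, this yields 4932 samples, matching the count above.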

No tool for diacritizing Arabic text is without flaws. Some tools introduce grammatical errors, while others require careful post-processing. When we applied Farasa diacritization to 2466 examples, 6 examples produced empty outputs. We could not determine the cause, given that the tool successfully diacritized the vast majority of examples. We therefore repeated the diacritization process for these 6 examples only. We also observed that, after diacritizing the final letter of a sentence, Farasa tends to insert a space before the closing full stop (.). We therefore implemented post-processing in Python to correct this issue and ensure that the diacritized Arabic text was rendered correctly.
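The two post-processing issues above could be handled along the following lines: empty outputs and a stray space before the final full stop. This is a hypothetical sketch rather than the authors' script. Here, `diacritize` stands in for the diacritizer call, and the retry count and regular expression are assumptions.

```python
import re
from typing import Callable, List

# One or more spaces or tabs immediately before a full stop.
SPACE_BEFORE_FULL_STOP = re.compile(r"[ \t]+\.")


def fix_space_before_full_stop(text: str) -> str:
    return SPACE_BEFORE_FULL_STOP.sub(".", text)


def diacritize_with_retry(
    texts: List[str], diacritize: Callable[[str], str], max_attempts: int = 2
) -> List[str]:
    """Diacritize texts, re-running only those whose output came back empty."""
    results = [diacritize(t) for t in texts]
    for _ in range(max_attempts - 1):
        empty = [i for i, r in enumerate(results) if not r.strip()]
        if not empty:
            break
        for i in empty:
            results[i] = diacritize(texts[i])
    # Any output that is still empty at this point should be inspected manually.
    return [fix_space_before_full_stop(r) for r in results]
```

Re-running only the failed items avoids re-diacritizing, and possibly altering, the examples that already succeeded.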

3.1.2. Ejabah-Driven Datasets

(1) AIGT Samples

We collected 5968 AIGT responses from the Ejabah platform, generated by three LLMs: 2039 by GPT-4, 1518 by GPT-3.5, and 2411 by BARD.

- Inclusion Criteria:
  - Length: We included responses that exceeded 350 characters. This criterion was established because shorter responses often do not exhibit the distinct characteristics of AIGTs. For instance, a response to a simple query like “What is the capital city of the USA?” may yield a brief answer such as “Washington DC” (13 characters), which lacks the detailed features typical of LLM responses.
  - Balance: We ensured that the number of AIGT examples matched the number of HWT examples for a balanced comparison.

- Exclusion Criteria:
  - Short Responses: Responses under 350 characters were excluded as they typically fail to demonstrate the detailed and coherent characteristics of LLM outputs.
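The AIGT length criterion and the balancing step can be expressed as a short filter. This is an illustrative sketch with hypothetical names. It assumes that length is measured in Python characters (Unicode code points), which the text does not specify. It also assumes that balancing is done by randomly downsampling the larger class.

```python
import random
from typing import List, Tuple

MIN_AIGT_CHARS = 350  # AIGT responses must exceed this many characters


def filter_and_balance(
    aigt_texts: List[str], hwt_texts: List[str], seed: int = 1
) -> Tuple[List[str], List[str]]:
    """Drop short AIGT responses, then downsample so both classes are equal in size."""
    long_aigt = [t for t in aigt_texts if len(t) > MIN_AIGT_CHARS]
    # HWTs are kept regardless of length (see the HWT inclusion criteria).
    n = min(len(long_aigt), len(hwt_texts))
    rng = random.Random(seed)
    return rng.sample(long_aigt, n), rng.sample(hwt_texts, n)
```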

(2) HWT Samples

We obtained more than 5494 examples from diverse community answers on the Ejabah platform. The HWT examples were the most difficult to vet, as each required careful examination to confirm its authenticity. After this inspection, we excluded approximately 936 examples whose origin was uncertain, particularly those that might be AIGT or a mix of AIGT and HWT, as in adversarial attacks. These 936 examples were not included in our final dataset counts.

- Inclusion Criteria:
  - Authenticity: Only responses clearly identifiable as HWTs were included. If a response did not cite its source (such as books or website links), we searched Google for the reply text. This consistently revealed that the answers had been copied from human-written online sources.
  - Length: Unlike AIGT samples, human responses were accepted regardless of character count. Humans tend to give shorter answers or supplement brief answers with readily available internet text (from forums and Wikipedia), especially in community Q&A formats.

- Exclusion Criteria:
  - Uncertain Origin: To maintain the integrity of the HWT dataset, examples that could not be definitively classified as HWT were excluded.
  - Adversarial Texts: Human responses showing signs of being a combination of AIGT and HWT, as might occur in adversarial attacks, were also excluded.

Religious Dataset

We built a specialized dataset whose HWTs in the training, validation, and testing sets were exclusively religious texts, while its AIGTs were drawn from various fields. We focused on religious texts because they contain diacritics more frequently than other Arabic texts. This configuration was designed to address two objectives:

(1) Generalization Capability: This assessment determines whether a model trained solely on HWTs from a single field (religious texts) can generalize its recognition capabilities to HWTs from diverse other fields in real-world scenarios. This setting tests the model’s broader applicability, which is important for practical deployments where the model may encounter HWTs on various subjects and in various styles.

(2) Impact of Diacritization: We evaluated whether training the model on a proportion of diacritized Arabic texts improved its ability to generalize to diacritized texts. Specifically, we aimed to determine whether this training approach helped the model distinguish diacritized HWTs from AIGT better than a model trained on diacritic-free texts.

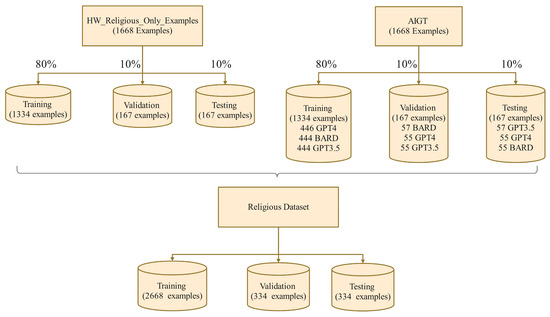

To achieve these objectives, we compiled a dataset of 2668 training examples, 334 validation examples, and 334 testing examples. Each set was evenly split between religious HWTs and AIGTs from diverse fields, as shown in Figure 1.

Figure 1.

Religious dataset configuration.

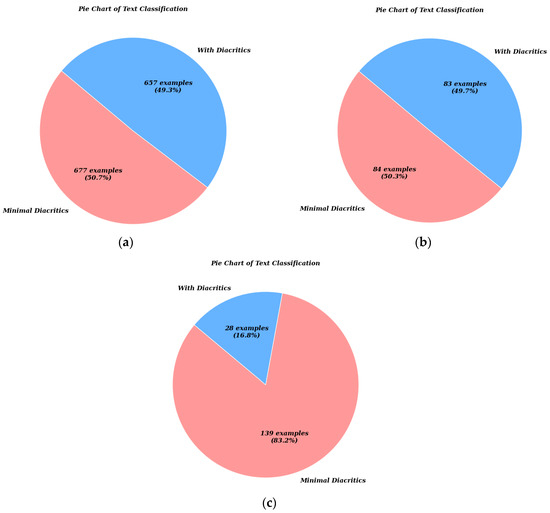

While preparing the religious dataset, we ensured an appropriate distribution of diacritized HWT samples across the training, validation, and testing sets. A key decision was the threshold for categorizing a text as diacritized. We classified an HWT sample as diacritized if it contained more than 10% of the possible diacritics relative to the total number of characters in the text. Otherwise, it was categorized as having minimal diacritics. The AIGT samples in the religious dataset contained no diacritics, as the LLMs used to generate them did not produce diacritized text. Figure 2 shows the percentage of diacritized HWTs in each set.
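The sketch below implements one reading of the 10% threshold: the count of diacritic marks divided by the total number of characters, spaces included. Both this reading and the U+064B to U+0652 diacritic range are assumptions, and the helper names are hypothetical.

```python
DIACRITIC_MARKS = frozenset(chr(c) for c in range(0x064B, 0x0653))  # U+064B..U+0652
THRESHOLD = 0.10


def diacritic_ratio(text: str) -> float:
    """Fraction of characters in `text` that are diacritic marks."""
    if not text:
        return 0.0
    return sum(ch in DIACRITIC_MARKS for ch in text) / len(text)


def diacritization_category(text: str, threshold: float = THRESHOLD) -> str:
    # Only a ratio strictly above the threshold counts as diacritized.
    return "diacritized" if diacritic_ratio(text) > threshold else "minimal"
```

For the fully diacritized word sallama (7 characters, 4 of them marks), the ratio is 4/7, so the word counts as diacritized. A text with exactly 10% marks falls into the minimal category.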

Figure 2.

Distribution of diacritization in HWTs across all sets. (a) Training set, showing 49.3% with diacritics and 50.7% with minimal diacritics. (b) Validation set, showing 49.7% with diacritics and 50.3% with minimal diacritics. (c) Testing set, showing 16.8% with diacritics and 83.2% with minimal diacritics.

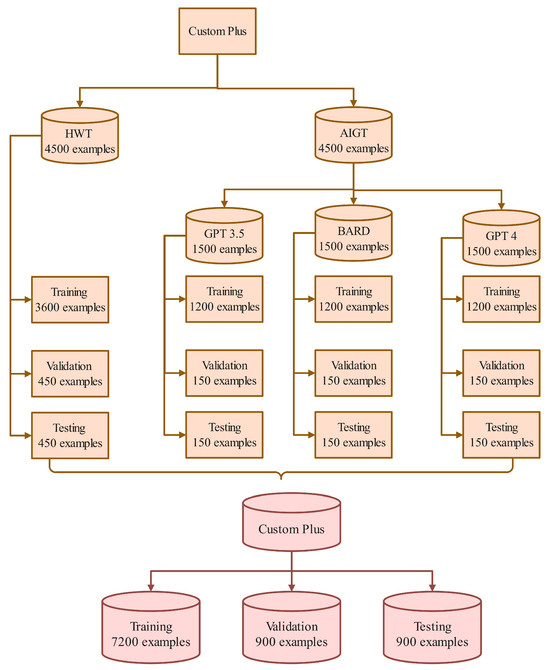

Custom Plus Dataset

To further advance our research and enhance the robustness of our detection models, we needed a larger and more diverse training corpus, so we developed the Custom Plus dataset. It is derived from the Ejabah website and contains a much broader and more varied collection of HWTs and AIGTs than our earlier datasets. It is also considerably larger than both the religious and Custom datasets. It was designed both to train models on a wide range of examples and to thoroughly evaluate the models developed from the Custom dataset. It consists of 7200 training examples and 900 examples each for validation and testing, balanced between HWTs and AIGTs. Because the AIGT samples come from three distinct LLMs, we ensured that each source was equally represented in every dataset split, as detailed in Figure 3.
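Equal representation of the three LLM sources in every split amounts to drawing a fixed quota per source for each split. Custom Plus has 3600 AIGTs in training and 450 each in validation and testing. That is 1200, 150, and 150 examples per source. The sketch below assumes this per-split quota scheme, and the function name and data layout are hypothetical.

```python
import random
from typing import Dict, List, Tuple


def allocate_by_source(
    aigt_by_source: Dict[str, List[str]],
    per_source_quota: Dict[str, int],
    seed: int = 1,
) -> Dict[str, List[Tuple[str, str]]]:
    """Give every split the same number of AIGT examples from each source."""
    rng = random.Random(seed)
    splits: Dict[str, List[Tuple[str, str]]] = {name: [] for name in per_source_quota}
    needed = sum(per_source_quota.values())
    for source in sorted(aigt_by_source):
        pool = list(aigt_by_source[source])
        if len(pool) < needed:
            raise ValueError(f"{source} has {len(pool)} examples; {needed} required")
        rng.shuffle(pool)
        start = 0
        for name, quota in per_source_quota.items():
            splits[name].extend((text, source) for text in pool[start:start + quota])
            start += quota  # disjoint slices, so no example lands in two splits
    return splits
```

With the source counts reported in Section 3.1.2, the smallest pool (1518 GPT-3.5 responses) still covers the 1500 examples that each source must contribute.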

Figure 3.

Custom Plus dataset configuration.

To avoid overrepresenting religious texts in the Custom Plus dataset, we included only 689 diacritized religious examples. This selective inclusion served two purposes. First, it incorporated a specific portion of diacritized text into training, preparing the model to handle diacritized text effectively during inference. Second, it ensured the inclusion of reliably human-written texts. Diacritized texts, especially in religious contexts, are not typically generated by LLMs except when quoting holy books.

Custom Max Dataset

The final step in our dataset preparation was to merge the Custom and Custom Plus datasets. This allowed our models to be trained on a substantially larger number of examples, with the aim of improving robustness and accuracy. The resulting dataset contains 9666 training examples, 1206 validation examples, and 1206 testing examples, all evenly distributed between HWTs and AIGTs.

3.2. Detector Architecture

In developing our detector, we fine-tuned pre-trained transformer-based models designed for language understanding. We selected four state-of-the-art models with robust support for Arabic. Two are tailored specifically to Arabic: AraBERT [24] (AraBERTv2) and the AraELECTRA discriminator [25]. The other two, XLM-R [37] and multilingual BERT (mBERT) [42], are multilingual models whose supported languages include Arabic. This selection allowed us to combine the nuanced capabilities of language-specific models with the broader linguistic coverage of multilingual architectures.

We utilized the AutoModel class from the Hugging Face Transformers library and built our custom classification head on top of it. This head consisted of a dense layer, dropout for regularization, and an output projection layer for binary classification. The output of this head was passed through a sigmoid activation function to produce probabilities for the binary classes.
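A classification head of the kind described above can be sketched in PyTorch. Several details are assumptions rather than facts from the text: the layer sizes, the tanh activation after the dense layer, the dropout rate, and the use of the first-token ([CLS]) hidden state as the pooled representation. `BinaryClassificationHead` and `Detector` are hypothetical names.

```python
import torch
from torch import nn


class BinaryClassificationHead(nn.Module):
    """Dense layer, dropout, output projection, then sigmoid."""

    def __init__(self, hidden_size: int, dropout: float = 0.1):
        super().__init__()
        self.dense = nn.Linear(hidden_size, hidden_size)
        self.dropout = nn.Dropout(dropout)
        self.out_proj = nn.Linear(hidden_size, 1)  # one logit for the binary task

    def forward(self, pooled: torch.Tensor) -> torch.Tensor:
        x = torch.tanh(self.dense(pooled))  # activation choice is an assumption
        x = self.dropout(x)
        logits = self.out_proj(x).squeeze(-1)
        return torch.sigmoid(logits)  # probability of the positive class


class Detector(nn.Module):
    """Pre-trained encoder plus the custom head (hypothetical wrapper)."""

    def __init__(self, model_name: str, dropout: float = 0.1):
        super().__init__()
        # Imported here so the head above can be used without `transformers`.
        from transformers import AutoModel

        self.encoder = AutoModel.from_pretrained(model_name)
        self.head = BinaryClassificationHead(self.encoder.config.hidden_size, dropout)

    def forward(self, input_ids: torch.Tensor, attention_mask: torch.Tensor) -> torch.Tensor:
        outputs = self.encoder(input_ids=input_ids, attention_mask=attention_mask)
        cls_state = outputs.last_hidden_state[:, 0]  # first-token pooling (assumption)
        return self.head(cls_state)
```

For example, `Detector("aubmindlab/bert-base-arabertv2")` would load AraBERTv2 from the Hugging Face Hub. Because the head already ends in a sigmoid, it pairs with `nn.BCELoss` rather than `nn.BCEWithLogitsLoss`.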

3.2.1. Training

Our classifier’s training and evaluation strategy was designed to achieve strong performance and generalization. For training, we used the AdamW optimizer (Adam with decoupled weight decay) [43]. It is widely adopted and well regarded in the deep-learning community [44,45] for its effectiveness in training complex models, owing to its combination of adaptive learning rates and decoupled weight decay. We paired this optimizer with the binary cross-entropy loss function, the standard choice for binary classification tasks [46,47]. This loss measures how well the model predicts the probability that a text is human-written or AI-generated.
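The optimizer and loss pairing can be illustrated with a training step in PyTorch. The tiny linear model and random features below are hypothetical stand-ins for the fine-tuned encoder and its tokenized inputs. The learning rate and weight decay are illustrative values, not the paper's settings. Because the model already outputs probabilities through a sigmoid, `nn.BCELoss` is used.

```python
import torch
from torch import nn


def train_step(model, features, labels, optimizer, loss_fn) -> float:
    """One optimization step: forward pass, BCE loss, backward pass, AdamW update."""
    model.train()
    optimizer.zero_grad()
    probs = model(features)        # probabilities in (0, 1)
    loss = loss_fn(probs, labels)  # binary cross-entropy on probabilities
    loss.backward()
    optimizer.step()               # AdamW applies decoupled weight decay here
    return loss.item()


# Toy stand-in for encoder + head: 8 features -> 1 probability per example.
torch.manual_seed(1)
features = torch.randn(64, 8)
labels = (features[:, 0] > 0).float()  # synthetic, linearly separable labels
model = nn.Sequential(nn.Linear(8, 1), nn.Flatten(0), nn.Sigmoid())
optimizer = torch.optim.AdamW(model.parameters(), lr=0.05, weight_decay=0.01)
loss_fn = nn.BCELoss()

losses = [train_step(model, features, labels, optimizer, loss_fn) for _ in range(50)]
```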

A key feature of our training approach is a custom learning rate scheduler. We first experimented separately with linear and cosine annealing learning rate schedules. The linear schedule performed better in some scenarios and cosine annealing in others, but combining the two gave better results than either method alone. In our experiments, the combined schedule appeared to help the model escape poor local minima and converge to a better minimum. The scheduler begins with a linear warmup phase, during which the learning rate increases gradually from an initial value to the main learning rate over a specified number of epochs (a design decision). This phase helps stabilize training and prevent early divergence. After the warmup period, the scheduler switches to cosine annealing, decreasing the learning rate along a cosine curve.
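The warmup-then-cosine schedule can be written as a per-epoch function. This sketch assumes that the learning rate is updated once per epoch and decays toward a `min_lr` of 0 by default. The function name is hypothetical, and the example learning rates are placeholders, not the paper's values.

```python
import math


def warmup_cosine_lr(
    epoch: int,
    warmup_epochs: int,
    total_epochs: int,
    initial_lr: float,
    peak_lr: float,
    min_lr: float = 0.0,
) -> float:
    """Learning rate for a 0-based `epoch`: linear warmup, then cosine annealing."""
    if epoch < warmup_epochs:
        # Linear ramp from initial_lr (epoch 0) toward peak_lr.
        return initial_lr + (peak_lr - initial_lr) * epoch / warmup_epochs
    # Fraction of the post-warmup phase completed, in [0, 1) for epoch < total_epochs.
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


# Placeholder values: 2 warmup epochs out of 8, within the reported ranges.
for epoch in range(8):
    print(epoch, f"{warmup_cosine_lr(epoch, 2, 8, 1e-6, 2e-5):.2e}")
```

In PyTorch, the same curve could be applied through `torch.optim.lr_scheduler.LambdaLR`. To do so, set the optimizer's base learning rate to `peak_lr` and return `warmup_cosine_lr(...) / peak_lr` as the multiplicative factor.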

To avoid overfitting and help the model generalize to new data, we implemented a custom early stopping mechanism based on validation loss. The early stopping functions in some available libraries may react to local minima. Our approach instead tracked the global minimum. It monitored the validation loss and halted training if the lowest loss observed so far did not improve over a predefined number of epochs, referred to as the patience parameter. This was intended to stop training only once it was highly likely that the model had reached its best state.
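The global-minimum early stopping rule can be sketched as a small class. It remembers the lowest validation loss seen so far and counts the epochs since that minimum last improved. The class name and the optional `min_delta` tolerance are assumptions for illustration.

```python
class GlobalMinEarlyStopping:
    """Stop when the best validation loss seen so far has not improved for `patience` epochs."""

    def __init__(self, patience: int, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.epochs_without_improvement = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record this epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            # New global minimum: reset the counter (a checkpoint would be saved here).
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience
```

Consider a patience of 5, as reported for 8-epoch runs, and validation losses of 0.50, 0.40, 0.45, 0.42, 0.41, 0.43, 0.44. Training would stop after the seventh epoch (index 6), and the weights from the second epoch, the global minimum, would be kept.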

3.2.2. Evaluation

During evaluation, the classifier computed a comprehensive range of performance metrics: precision, recall (sensitivity), F1 score, accuracy, the receiver operating characteristic (ROC) curve, the area under the ROC curve (AUC), loss, and the confusion matrix. Together, these metrics highlight the model’s strengths and potential areas for improvement. Performance metrics were logged and visualized, providing a detailed account of the model’s behavior during training and evaluation. Checkpointing captured the best-performing model to ensure reproducibility. Upon completion of training and evaluation, the final trained model and the best model weights were saved for future inference, ready for deployment or further fine-tuning if needed.
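Most of the threshold-based metrics above, plus AUC, can be computed from labels and predicted probabilities without external libraries. This sketch assumes that label 1 denotes AIGT (the positive class) and uses a decision threshold of 0.5. The function name is hypothetical, and in practice a library such as scikit-learn would typically be used.

```python
from typing import List


def binary_metrics(y_true: List[int], y_prob: List[float], threshold: float = 0.5) -> dict:
    """Precision, recall, F1, accuracy, AUC, and confusion matrix for binary labels."""
    y_pred = [1 if p >= threshold else 0 for p in y_prob]
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(y_true) if y_true else 0.0

    # AUC as the probability that a random positive scores higher than a random
    # negative (ties count half), which equals the area under the ROC curve.
    pos = [p for t, p in zip(y_true, y_prob) if t == 1]
    neg = [p for t, p in zip(y_true, y_prob) if t == 0]
    pairs = [1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg]
    auc = sum(pairs) / len(pairs) if pairs else float("nan")

    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": accuracy,
        "auc": auc,
        "confusion_matrix": [[tn, fp], [fn, tp]],  # rows: true 0/1, cols: predicted 0/1
    }
```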

3.2.3. Hyperparameters

Extensive experiments were conducted to tune a range of hyperparameters and identify the most effective configuration for each model. Because of the unique characteristics of each model and the diverse nature of the datasets, no single set of hyperparameters was universally optimal. The hyperparameters were therefore adjusted to the specific requirements of each dataset, while a fixed random seed kept the experiments consistent. The learning rates ranged from to , with initial learning rates set between and . Warmup epochs were adjusted between 2 and 4; the total number of training epochs ranged from 6 to 10, depending on the model; and batch sizes for training, validation, and testing were set between 32 and 64. A batch size of 64 was used for out-of-domain (OOD) testing (inference). Although the batch size during inference does not directly affect detection accuracy, experiments showed that a batch size of 64 slightly reduced the loss compared with a batch size of 32; we therefore used a batch size of 64 for inference. Early stopping was used to prevent overfitting, with the patience parameter adjusted to the total number of epochs: for instance, a patience of 5 epochs was applied when training for 8 epochs, and a patience of 6 epochs when training for 10 epochs. To ensure reproducibility across all trials, the random seed was set to 1 in most experiments and to 10 in a few. All hyperparameter details are attached to their respective experiment folders in our repository. Table 2 summarizes the hyperparameters used.

Table 2.

Summary of hyperparameters used.

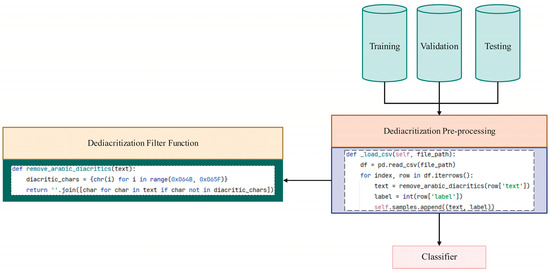
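The reported search space can be captured in a small, validated configuration object so that each run’s settings are explicit and can be stored alongside its results. This is a hypothetical sketch: the class `RunConfig`, its field names, and the range checks are ours, the learning rates are left as required arguments with no defaults, and the values in the usage line are placeholders.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class RunConfig:
    """Hypothetical per-run hyperparameters; ranges follow Section 3.2.3."""
    initial_lr: float         # learning rate at the start of warmup
    main_lr: float            # learning rate reached at the end of warmup
    warmup_epochs: int        # reported range: 2-4
    total_epochs: int         # reported range: 6-10
    batch_size: int           # train/validation/test, reported range: 32-64
    patience: int             # e.g. 5 for 8 epochs, 6 for 10 epochs
    ood_batch_size: int = 64  # batch size used for OOD inference
    seed: int = 1             # 1 in most runs, 10 in a few

    def __post_init__(self) -> None:
        # Reject settings outside the reported search space.
        if not 2 <= self.warmup_epochs <= 4:
            raise ValueError("warmup_epochs outside the reported 2-4 range")
        if not 6 <= self.total_epochs <= 10:
            raise ValueError("total_epochs outside the reported 6-10 range")
        if not 32 <= self.batch_size <= 64:
            raise ValueError("batch_size outside the reported 32-64 range")
        if not 0 < self.patience < self.total_epochs:
            raise ValueError("patience must be positive and shorter than the run")
        if not 0 <= self.initial_lr <= self.main_lr:
            raise ValueError("warmup must rise from initial_lr to main_lr")


def seed_everything(seed: int) -> None:
    """Seed Python's RNG. A PyTorch run would also seed NumPy and torch,
    e.g. with transformers.set_seed(seed)."""
    random.seed(seed)


# Placeholder learning rates; the 8-epoch / patience-5 pairing follows the text.
config = RunConfig(initial_lr=1e-6, main_lr=2e-5, warmup_epochs=2,
                   total_epochs=8, batch_size=32, patience=5)
seed_everything(config.seed)
```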

3.2.4. Dediacritization Filter Function

In addition to our primary detector architecture, we explored the impact of diacritics on model performance by developing a diacritics-free variant of our dataset. This exploration aimed to determine whether removing diacritics from Arabic text could potentially improve the model’s performance compared to including diacritics during training. While diacritics provide important phonetic information, their removal simplifies the text. We hypothesized that this simplification could potentially improve the model’s learning efficiency and performance on unseen data. To investigate this, we created a new branch of our code, named “Diacritics-Free”, which retained the same overall architecture but included a modification in the dataset preparation process. To evaluate the impact of this change, we conducted comparative experiments using the original and diacritics-free datasets. It is important to note that this diacritics-free version was employed only after completing all initial experiments related to inspecting the impact of diacritization during training. This ensured that our primary detector architecture and its evaluations were based on the original dataset that did not have a dediacritization filter.

In the diacritics-free branch, we prepared the dataset by removing Arabic diacritics from the text. Rather than relying on available tools or libraries, we implemented the dediacritization process ourselves to ensure efficiency and precision. This was achieved by leveraging the Unicode values of the diacritic characters. Figure 4 illustrates the preprocessing workflow for generating diacritic-free datasets.

Figure 4.

Dediacritization preprocessing applied to training, validation, and testing datasets.
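A dediacritization filter based on Unicode code points can be written as a single `str.translate` call. The sketch below is hypothetical in its details: the exact set of code points removed in the study is not listed, so we assume the tanween marks, short vowels, shadda, and sukun (U+064B to U+0652) plus the superscript alef (U+0670), and the helper names `dediacritize` and `dediacritize_splits` are ours.

```python
from typing import Dict, List, Tuple

# Assumption: tanween, short vowels, shadda and sukun (U+064B..U+0652) plus the
# superscript (dagger) alef U+0670. The study's exact list is not given here.
DIACRITIC_CODEPOINTS = frozenset(range(0x064B, 0x0652 + 1)) | {0x0670}

# str.translate deletes every code point that maps to None.
_DELETE_DIACRITICS = dict.fromkeys(DIACRITIC_CODEPOINTS)

Example = Tuple[str, int]  # (text, label)


def dediacritize(text: str) -> str:
    """Return text with the assumed Arabic diacritics removed; other characters are untouched."""
    return text.translate(_DELETE_DIACRITICS)


def dediacritize_splits(splits: Dict[str, List[Example]]) -> Dict[str, List[Example]]:
    """Apply the filter to every split (e.g. train/validation/test), keeping labels."""
    return {
        name: [(dediacritize(text), label) for text, label in examples]
        for name, examples in splits.items()
    }
```

For example, `dediacritize("عُلِم")` returns `"علم"`. Applying the same function to every split, as in Figure 4, keeps training and evaluation text under one normalization.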

3.3. Evaluation Strategy and Methodology

3.3.1. Diacritization Impact Evaluation

To evaluate the impact of diacritized texts on model performance, we conducted a series of experiments using various datasets. Initially, each model was trained on the Diacritized Custom dataset comprising 4932 examples. The performance of each model was then evaluated on the following diacritized datasets:

- Diacritized Validation set: Contains 612 examples.

- Diacritized Testing set: Contains 612 examples.

- Religious Testing set: Contains 334 examples.

- AIRABIC Benchmark Testing set: Contains 1000 examples.

Next, we trained each model on the Religious dataset, consisting of 2668 examples, and evaluated each one against several datasets to examine the impact of diacritization:

- Religious Validation set: Contains 334 examples.

- Religious Testing set: Contains 334 examples.

- Diacritized Custom Testing set: Contains 612 examples.

- Custom Testing dataset (diacritics-free): Contains 306 examples.

- AIRABIC Benchmark Testing set: Contains 1000 examples.

- AIRABIC Benchmark Testing set with dediacritization filter: The diacritics are removed from the testing set using a dediacritization filter.

The purpose of testing the model trained on the Religious dataset against the Custom Testing dataset (diacritics-free) and the AIRABIC Benchmark Testing set with a dediacritization filter was to assess the impact of removing diacritics and compare the results with datasets that included diacritics.

3.3.2. Evaluation of Larger and More Diverse Training Corpus and Comparative Analysis of Preprocessing Approaches

After assessing the strengths and weaknesses associated with diacritization, we aimed to advance our detection models further and ensure their applicability in real-world scenarios. Consequently, the models trained on the Custom Plus dataset were tested on five distinct datasets to evaluate their effectiveness and generalizability. We used two approaches for this:

Non-Preprocessing Approach

Initially, the models were trained on the Custom Plus dataset without applying a dediacritization filter to its training, validation, or testing sets. The only exception was the AIRABIC dataset, which was evaluated both with and without the dediacritization filter. The models’ performance was evaluated on the following datasets:

- Validation set: Contains 900 examples.

- Testing set: Contains 900 examples.

- Custom dataset: Contains 306 examples.

- AIRABIC Benchmark Testing set: Contains 1000 examples used to benchmark the model’s performance against the standard dataset.

- AIRABIC Benchmark Testing set with dediacritization filter: The diacritics are removed from the testing set using a dediacritization filter to assess the model’s adaptability to diacritic-free text.

We chose not to test the model against the Diacritized Custom testing set for two reasons. Firstly, the primary purpose of the Custom Plus dataset evaluation was to assess the models’ effectiveness and readiness for practical deployment, focusing on performance across diverse and OOD datasets rather than solely on diacritized texts. Secondly, both the Diacritized Custom testing set and the AIRABIC Benchmark Testing set served similar purposes by containing both diacritics-laden and diacritics-free texts. We selected the AIRABIC Benchmark Testing set because it provided a standardized performance measure and was larger than the Custom Plus testing set, allowing for more robust statistical analysis. This enabled us to compare the results with other leading detection models, such as GPTZero, facilitating broader and more meaningful benchmarks in the field.

For the AIRABIC evaluation, we tested the models on both the diacritized and dediacritized versions of the dataset to compare the models’ robustness across different text conditions.
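Applying the filter only at evaluation time leaves the trained model unchanged and transforms only the test inputs, so the same model can be scored on both versions of AIRABIC. The sketch below illustrates this with a deliberately naive, hypothetical stand-in for a detector (`toy_predict`) that only matches the undiacritized spelling of a word, mimicking a vocabulary that lacks diacritized forms; the diacritic set (U+064B to U+0652 and U+0670), the helper names, and the label convention are assumptions for illustration.

```python
from typing import Callable, Dict, List, Tuple

Example = Tuple[str, int]  # (text, label)

# Assumed diacritic set: U+064B..U+0652 (tanween, short vowels, shadda, sukun)
# and U+0670 (superscript alef). dict.fromkeys maps each one to None = delete.
_DIACRITICS = dict.fromkeys([*range(0x064B, 0x0653), 0x0670])


def strip_diacritics(text: str) -> str:
    return text.translate(_DIACRITICS)


def accuracy_raw_and_filtered(predict: Callable[[str], int],
                              examples: List[Example]) -> Dict[str, float]:
    """Score one trained model on the raw and the dediacritized test inputs."""
    def accuracy(transform: Callable[[str], str]) -> float:
        correct = sum(predict(transform(text)) == label for text, label in examples)
        return correct / len(examples)

    return {"raw": accuracy(lambda text: text),
            "dediacritized": accuracy(strip_diacritics)}


# Hypothetical stand-in for a detector: it only recognizes the bare spelling
# of one word, mimicking a vocabulary with no diacritized entries.
def toy_predict(text: str) -> int:
    return 1 if "\u0639\u0644\u0645" in text else 0


scores = accuracy_raw_and_filtered(
    toy_predict,
    [("\u0639\u064f\u0644\u0650\u0645", 1),  # diacritized spelling
     ("\u0639\u0644\u0645", 1)])             # bare spelling
```

With the toy detector, accuracy rises from 0.5 on the raw inputs to 1.0 after dediacritization, because the filter maps the diacritized spelling onto the form the detector knows.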

Preprocessing Approach

To further refine the evaluation, we incorporated a dediacritization filter as a preprocessing step, applying it to all datasets (training, validation, and testing) to remove diacritics. The models were then retrained on this dediacritized Custom Plus dataset and subsequently evaluated using the same datasets as previously described.

3.3.3. Combining All Datasets for Maximizing the Corpus Size

After determining the most effective approach from the previous experiments, we created the Custom Max dataset by combining the Custom Plus and Custom datasets. This combined dataset aimed to train the models on the largest collection of non-translated Arabic HWTs and AIGTs available on the internet, thereby maximizing the corpus size and enhancing the robustness and generalizability of our models. We applied the dediacritization preprocessing function to all sets (training, validation, and testing) to optimize performance.

4. Results

We conducted a series of experiments on the four selected models to evaluate their performance. The validation set results in the following tables correspond to the best-performing model weights, selected as those achieving the lowest validation loss.

4.1. Diacritized Datasets Results

The first experiment aimed to investigate the impact of including diacritized text during training to determine its effectiveness and highlight its pros and cons in terms of the detection models’ performance. Table 3 presents the performance metrics for this evaluation.

Table 3.

Performance metrics of different models evaluated in the diacritization impact evaluation.

4.2. Evaluation of Custom Plus Results

The second set of experiments evaluated the models trained on the Custom Plus dataset. It is important to note that the results displayed in bold font indicate better performance than those from the alternative approach. Additionally, the same hyperparameters were used for both sets of experiments to ensure a fair comparison.

4.2.1. Non-Preprocessing Approach

In this evaluation, the models were trained on the Custom Plus dataset without applying a dediacritization filter. Table 4 presents the results of the four models trained on the data as collected, which includes some diacritics. The table includes results for the AIRABIC dataset both with and without a dediacritization filter applied during evaluation. The results for “AIRABIC using dediacritization filter” are included in Table 4 for direct comparison with the AIRABIC results of the second approach, presented in Table 5.

Table 4.

Performance metrics of models trained on the Custom Plus dataset without preprocessing.

4.2.2. Preprocessing Approach

For this evaluation, the models were trained on the Custom Plus dataset after a dediacritization filter was applied to remove any diacritics from the training, validation, and testing sets. Table 5 presents the results of the four models trained with this preprocessing approach.

Table 5.

Performance metrics of models trained on the dediacritized Custom Plus dataset.

4.3. Combined Dataset Evaluation

In the final evaluation phase, the models were trained on the Custom Max dataset, which combines examples from the Custom Plus and Custom datasets and therefore contains the largest collection of non-translated Arabic HWTs and AIGTs. The primary objective of this evaluation was to train and assess the models on the largest dataset available, thereby maximizing the robustness of the detection models. This phase also included an experiment to further investigate the impact of including diacritics in the training process versus applying a dediacritization filter across all sets (training, validation, and testing). One run trained, validated, and tested the models on text with diacritics as they naturally occur in the HWTs, whereas the other run applied a dediacritization filter to all sets. As in the Custom Plus evaluation, AIRABIC was evaluated both with and without dediacritization. The performance metrics for this evaluation are presented in Table 6 and Table 7.

Table 6.

Performance metrics of models trained on the Custom Max dataset and evaluated against AIRABIC.

Table 7.

Performance metrics of models trained on the dediacritized Custom Max dataset and evaluated against AIRABIC.

5. Discussion

5.1. Comparison of Our Detection Models with GPTZero on the AIRABIC Benchmark Dataset

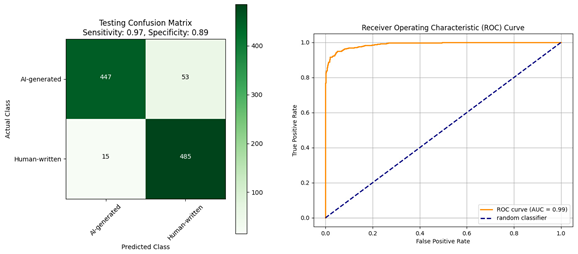

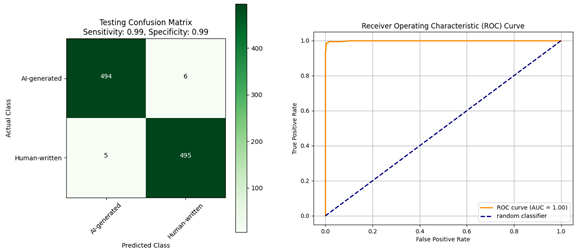

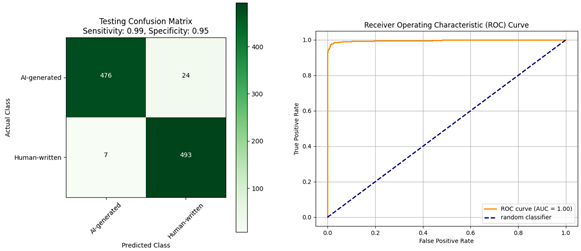

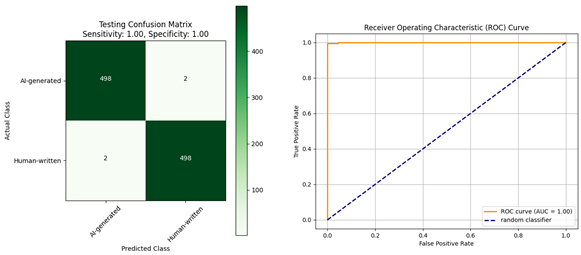

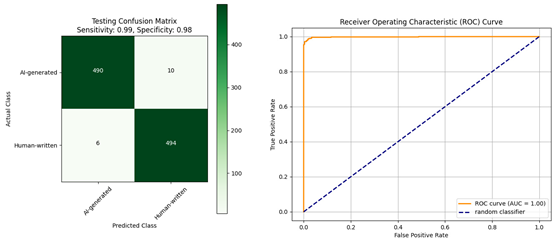

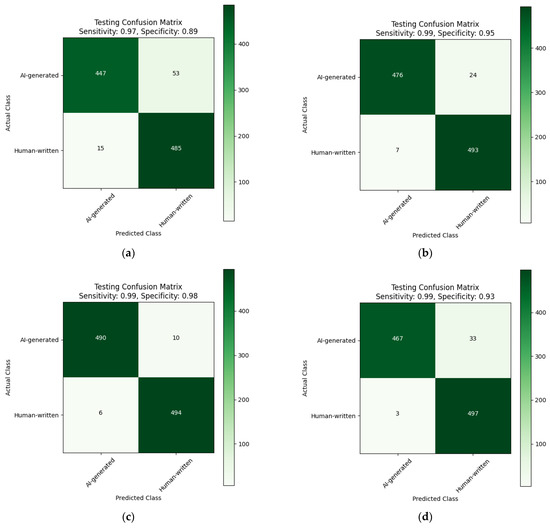

The primary objective of employing the Diacritized Custom dataset was to train our models on both diacritized and non-diacritized Arabic text examples, to determine whether such training would enhance the models’ robustness when evaluated against the AIRABIC Benchmark dataset [14]. A prior study [13] demonstrated that the AraELECTRA and XLM-R models, after being trained on the Custom dataset, achieved accuracies of 81% when evaluated against the AIRABIC dataset, underscoring the challenges posed by diacritics. Our study aimed to address these challenges further by training the models specifically to handle diacritized texts. Table 8 presents a comparative analysis of the performance of our four detection models trained on the Diacritized Custom dataset against GPTZero, with the best performance metrics highlighted in bold, and Figure 5 shows the corresponding confusion matrices. The GPTZero results were obtained from [14].

Table 8.

Comparative analysis of the performance of our four detection models trained on the Diacritized Custom dataset against GPTZero on the AIRABIC benchmark dataset.

Figure 5.

Performance of the models trained on the Diacritized Custom dataset against the AIRABIC benchmark dataset. (a) AraELECTRA; (b) AraBERT; (c) XLM-R; (d) mBERT.

The detection models trained on the Diacritized Custom dataset achieved better results on AIRABIC than GPTZero and the models trained on the Custom dataset in [13]. However, it remains an open question whether including diacritized texts during training consistently helps detection models handle diacritized texts, or whether incorporating a dediacritization filter is more effective. We address this question in detail in the following sections.

5.2. Analysis of the Diacritized Datasets Results

Evaluating the models against the Religious, AIRABIC, and Diacritized Custom datasets yielded several findings, which we group into the following subsections.

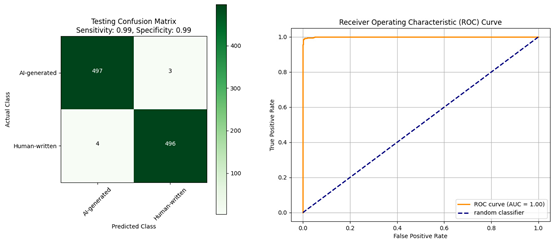

5.2.1. Diacritization Involvement Benefit

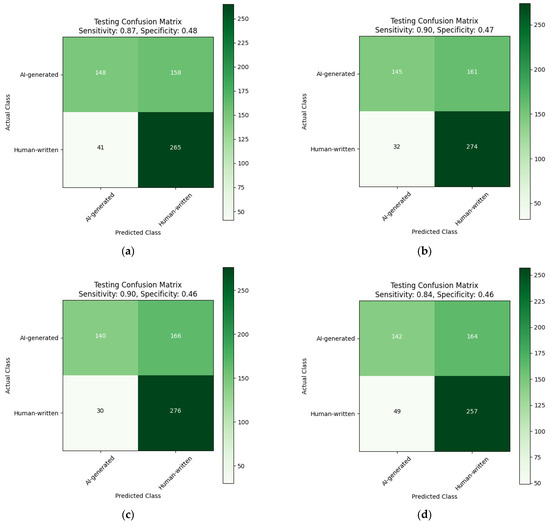

Firstly, including diacritics can enhance the detection models’ ability to handle diacritized texts. This is evident in our evaluation of the models trained on the Diacritized Custom dataset against the AIRABIC benchmark dataset, where they outperformed GPTZero, as reported previously. The same advantage was observed when the models trained on the Religious dataset were tested against the Diacritized Custom testing set: they recognized the HWTs in this set more successfully than the AIGTs. This is likely because the Religious dataset, compiled from original sources, included some diacritized HWTs but no diacritized AIGTs, as the AIGTs were generated by ChatGPT and BARD, which did not add diacritics to their outputs. The confusion matrices of the models trained on the Religious dataset and evaluated against the Diacritized Custom testing set are presented in Figure 6.

Figure 6.

Confusion matrices of the models trained on the Religious dataset and evaluated against the Diacritized Custom testing set. (a) AraELECTRA; (b) AraBERT; (c) XLM-R; (d) mBERT.

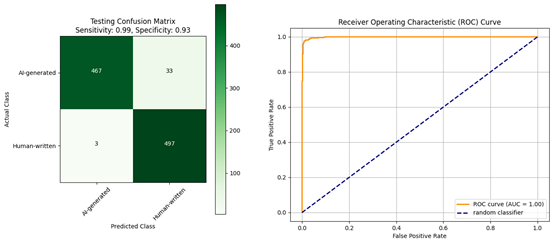

To examine this effect further, we evaluated the models trained on the Religious dataset against the Custom testing set, which contained only the non-diacritized texts from the Diacritized Custom dataset and was half the size of the Diacritized Custom testing set. The improved recognition of AIGTs in this evaluation suggests that the presence of diacritics in the testing set had hindered the models’ ability to identify AIGTs. Because the only diacritized examples in the training data were HWTs, the models appear to have associated diacritics with human authorship, and they therefore performed better on a non-diacritized testing set. This improvement is illustrated in Figure 7.

Figure 7.

The confusion matrix for the detection models trained on the Religious dataset and evaluated against the Custom testing set: (a) AraELECTRA; (b) AraBERT; (c) XLM-R; (d) mBERT.

5.2.2. Importance of Diverse Examples

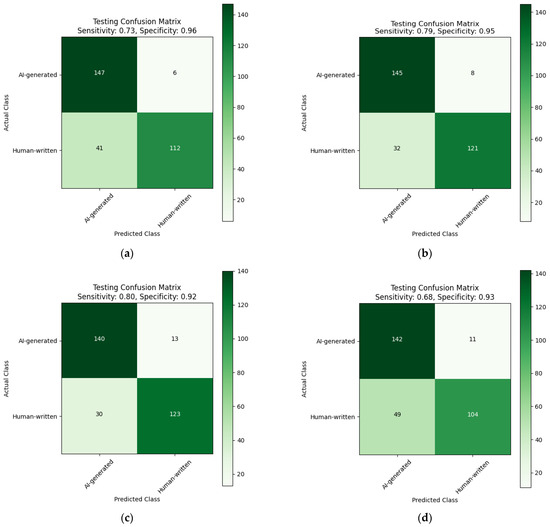

Robust detection requires a diverse range of examples from various fields and writing styles, which enhances the models’ ability to capture the distinguishing features of HWTs and AIGTs. The Religious dataset, comprising HWTs from a single domain with uniform writing styles, produced models that did not perform well on other datasets containing a wider variety of HWTs and writing styles. As a result, the models trained on the Religious dataset underperformed on the benchmark dataset compared with the models trained on the Diacritized Custom dataset, even after the dediacritization filter was applied, as shown in Table 3. Both the scope of content and the variety of writing styles, such as dense paragraphs, bullet points, formal and informal tones, and technical and narrative structures, are crucial for the model’s ability to generalize across different types of text. For instance, given an example on a geographical topic but with a structure similar to the religious texts, the models would likely recognize and classify it correctly. Moreover, despite the high performance shown in the confusion matrices in Figure 8, the models trained on the Religious dataset still misclassified some HWTs in both the validation and testing sets.

Figure 8.

Confusion matrices of the models trained on the Religious dataset and evaluated against the Religious testing set: (a) AraELECTRA; (b) AraBERT; (c) XLM-R; (d) mBERT.

Although the number of misclassifications (six to eight HWTs) may seem small, it highlights an important point: models trained on a narrow range of examples may perform well within that specific domain but struggle to generalize to more diverse datasets. The diversity of subject matter and the variety of writing styles are therefore crucial for enhancing the detection models’ performance and achieving true robustness. High accuracy within a single domain does not by itself demonstrate robustness across text types: while the models exhibit high sensitivity and specificity within the religious domain, their robustness can only be validated through evaluations on more varied and comprehensive datasets.

5.3. Analysis of the Results from Custom Plus and Custom Max

The evaluations of models trained on the Custom Plus and Custom Max datasets provided comprehensive insights into the impact of diacritization and dediacritization on model performance. Both datasets underwent two sets of experiments: one without any preprocessing and one with a dediacritization filter applied across all training, validation, and testing sets. It is important to note that all experiments were conducted using the same hyperparameters to ensure a fair comparison.

The results from both datasets indicate that diacritization and dediacritization have nuanced effects on model performance, which can vary with the dataset and evaluation conditions. We summarize the general observations in the following points.

- (1) Impact of Diacritics: Including diacritics in the training data generally enhances the models’ ability to recognize HWTs, as evidenced by higher recall values across different datasets. This suggests that including diacritized texts during training can be beneficial, especially in scenarios where recall is important. However, the presence of diacritics can sometimes reduce precision, particularly when the training and evaluation text formats do not match.

- (2) Precision vs. Recall Trade-off: The dediacritization preprocessing approach typically improves precision at the cost of recall. This trade-off was observed for both the Custom Plus and Custom Max datasets. Applying a dediacritization filter can therefore be advantageous in scenarios where precision is important; conversely, if recall is more important, retaining diacritics in the training data may be preferable.

- (3) Marginal Differences: Although the performance differences between the two approaches were marginal in some cases, the overall trends varied. On the Custom Plus dataset, models trained with diacritics showed higher recall but lower precision. In contrast, on the Custom Max dataset, models trained with dediacritization achieved higher precision while maintaining competitive recall. These variations indicate that the impact of diacritization versus dediacritization can depend on the dataset’s size and diversity.

Despite these marginal differences, the results suggest that applying dediacritization during evaluation is beneficial for OOD datasets, even when the model has been trained on text containing some diacritics. The relative importance of precision and recall should guide the decision to include diacritics in the training data or to apply dediacritization.

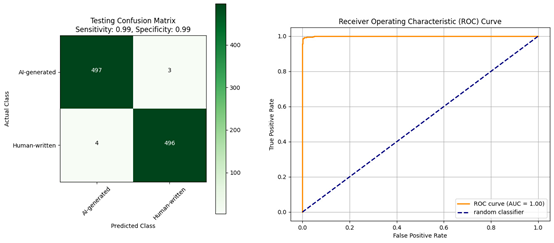

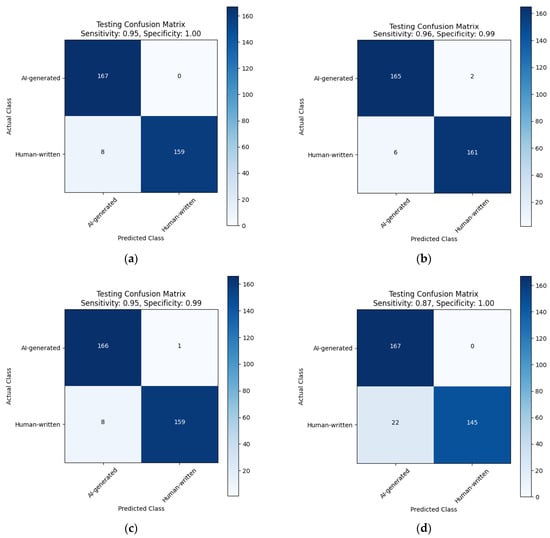

5.4. Takeaway Recommendations for Handling Diacritics in Arabic Text Detection

After an extensive evaluation and analysis of the four models with varying proportions of diacritized samples in the Diacritized Custom, Religious, Custom Plus, and Custom Max datasets, we make the following recommendations. First, we encourage including diacritized texts as they naturally appear in Arabic, since this enhances the models’ ability to recognize HWTs. However, we advise against heavily diacritizing the datasets, as was done for the Diacritized Custom dataset, because this also requires adding diacritics-free versions of the same samples to keep the dataset balanced. Duplicating diacritized and non-diacritized versions of HWTs and AIGTs in this way is inefficient, and the outcome remains suboptimal compared with an approach that requires no such duplication. Instead, better results can be achieved by applying a dediacritization filter during evaluation, which lets the models process Arabic text without the distraction of diacritics. To demonstrate this, we first evaluated the four models fine-tuned on the Custom dataset, which contains only non-diacritized examples, against the AIRABIC benchmark dataset with a dediacritization filter applied during evaluation. We then evaluated the four models trained on the Diacritized Custom dataset, which contains twice as many examples as the Custom dataset because both diacritized and non-diacritized versions are included, against the AIRABIC benchmark dataset without the dediacritization filter, since these models had already been trained on diacritized texts. These results are presented in Table 9.

Table 9.

Comparative performance metrics of the four models against the AIRABIC benchmark dataset: trained on diacritized text versus text with a dediacritization filter applied.

All four models performed best with the second approach, represented in the (b) figures, which required only applying a dediacritization filter before evaluation. For instance, AraELECTRA improved markedly with the dediacritization filter. When trained on the Diacritized Custom dataset, it achieved a sensitivity of 0.97 and a specificity of 0.89, with an ROC AUC of 0.98. In contrast, training on the Custom dataset and evaluating with a dediacritization filter yielded a sensitivity of 0.99 and a specificity of 0.99, with an ROC AUC of 1.00. This indicates that the dediacritization filter substantially enhances performance by reducing both false positives and false negatives. AraBERT likewise benefited from the filter: training on the Diacritized Custom dataset yielded a sensitivity of 0.99 and a specificity of 0.95, with an ROC AUC of 1.00, whereas with the dediacritization filter applied during evaluation, both sensitivity and specificity rose to 1.00 and the ROC AUC remained 1.00. These results support the dediacritization filter as the most effective of the tested approaches for addressing the challenges posed by diacritics. XLM-R, known for its robustness, also benefited from the dediacritization filter, with both sensitivity and specificity improving to 0.99 and an ROC AUC of 1.00. Lastly, mBERT also improved with the filter: trained on the Diacritized Custom dataset, it achieved a sensitivity of 0.99 and a specificity of 0.93, with an ROC AUC of 1.00, and with the dediacritization filter, its specificity rose to 0.99 while its sensitivity remained at 0.99 and its ROC AUC at 1.00. This suggests that, while mBERT already performs well, the dediacritization filter enhances its overall reliability.

5.5. In-Depth Analysis of Models’ Performance

5.5.1. Preliminary Investigation of Models’ Tokenization and Embeddings

Our preliminary investigation examines the tokenization and embeddings produced by the four pre-trained models when processing diacritized Arabic text. The purpose was to identify tokenization differences and understand how the models process diacritized and non-diacritized texts. By examining their tokenization, embeddings, and the datasets they were pre-trained on, we aim to uncover why some models are more sensitive to diacritics, which affects their performance on diacritized Arabic texts.

We selected one diacritized word, “عُلِم” (transliterated as “ʿulim”), which means “understood”, to inspect how each model handles diacritics. Table 10 summarizes the tokenization results for each model.

Table 10.

Tokenization results for the diacritized Arabic word “عُلِم” by four pre-trained models.

In mBERT, the special tokens [CLS] and [SEP] mark the beginning and the end of the input sequence, respectively; the [CLS] token is typically used to aggregate the representation of the entire sequence for classification tasks. The ## prefix in mBERT’s WordPiece tokenization marks a subword token as a continuation of the preceding subword. For instance, the tokenizer splits the diacritized word ‘عُلِم’ into subwords, and the tokens prefixed with ## are continuations of the same word. Similarly, XLM-R uses the <s> and </s> tokens, following the RoBERTa methodology. Both AraELECTRA and AraBERT represent the diacritized input as [UNK] (the unknown token), indicating that these models do not handle diacritics well.
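The root of these tokenization differences is visible at the code-point level: each diacritic is a separate Unicode combining mark (general category Mn) following the letter it modifies, so “عُلِم” and “علم” are different strings. The short sketch below lists the code points of the example word using only the standard `unicodedata` module; the helper name is ours. Reproducing Table 10 itself would require loading each model’s tokenizer (for example through Hugging Face `AutoTokenizer.from_pretrained`), which is not shown. In the reference BERT WordPiece implementation, a word that cannot be fully segmented into vocabulary pieces is replaced by a single [UNK], which is consistent with the AraBERT and AraELECTRA outputs if their vocabularies lack pieces containing these marks.

```python
import unicodedata
from typing import List, Tuple


def describe_code_points(word: str) -> List[Tuple[str, str, str]]:
    """(code point, Unicode name, general category) for every character."""
    return [(f"U+{ord(ch):04X}", unicodedata.name(ch, "<unnamed>"),
             unicodedata.category(ch)) for ch in word]


WORD = "\u0639\u064f\u0644\u0650\u0645"  # the diacritized example word

if __name__ == "__main__":
    for code, name, category in describe_code_points(WORD):
        print(code, category, name)
```

The output alternates letters (category Lo: ain, lam, meem) with combining marks (category Mn: damma, kasra).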

Further analysis compared sentence-level embeddings by calculating the cosine similarity between the embeddings of diacritized and non-diacritized versions of a longer text. Using a passage containing multiple paragraphs, we obtained the similarity scores summarized in Table 11.

Table 11.

Cosine similarity scores between diacritized and non-diacritized text embeddings.
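This section does not state how the sentence-level embeddings were pooled. The sketch below assumes mean pooling of the final-layer token vectors over non-padding positions (taking the [CLS] or <s> vector is a common alternative), followed by cosine similarity; with Hugging Face models, the token vectors would come from `outputs.last_hidden_state`. The helper names are ours, and the test vectors are toy values.

```python
import math
from typing import List, Sequence


def mean_pool(token_vectors: Sequence[Sequence[float]],
              attention_mask: Sequence[int]) -> List[float]:
    """Average the token vectors whose mask entry is 1 (padding is skipped)."""
    kept = [vec for vec, keep in zip(token_vectors, attention_mask) if keep]
    if not kept:
        raise ValueError("attention mask selects no tokens")
    # zip(*kept) walks over embedding dimensions, one column at a time.
    return [sum(column) / len(kept) for column in zip(*kept)]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """cos(a, b) = a.b / (|a| |b|); 1 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / norm
```

Under this assumption, the diacritized and non-diacritized passages would each be encoded, pooled with `mean_pool`, and compared with `cosine_similarity`; a score near 1 means the two versions map to nearly the same sentence representation.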

Our observations revealed the following:

- Multilingual Models (mBERT and XLM-R):

Both models demonstrated a robust ability to handle diacritics by splitting the word into subword tokens, with diacritics represented as separate entities. The high cosine similarity scores (0.9853 for mBERT and 0.9988 for XLM-R) indicate that these models produce very similar embeddings for diacritized and non-diacritized text, maintaining consistent semantic representations despite diacritic variations. XLM-R follows the RoBERTa [32] methodology, using <s> and </s> tokens instead of [CLS] and [SEP], and still effectively captured the semantic meaning of the text. These models’ ability to handle diacritics can likely be attributed to the datasets used for their pre-training. XLM-R was pre-trained on a large and diverse dataset known as CC-100, which spans 100 languages, including Arabic, and includes a substantial amount of text from CommonCrawl and Wikipedia [37]. mBERT, in turn, was pre-trained on Wikipedia dumps from 104 languages. The Arabic texts on Wikipedia and CommonCrawl likely contain some diacritics or, at the very least, have not undergone preprocessing that strips away diacritical marks.

- Arabic-Specific Models (AraBERT and AraELECTRA):

Both models failed to handle diacritics effectively, as indicated by the low cosine similarity scores (0.6100 for AraBERT and 0.6281 for AraELECTRA). These models represented diacritized input as unknown tokens ([UNK]), leading to substantial differences between the embeddings of diacritized and non-diacritized text. Although the Arabic-specific models were trained on more extensive Arabic corpora, their pre-training data underwent preprocessing that removed diacritics. This preprocessing likely explains their difficulty in tokenizing and processing diacritized texts.

These findings reveal that some pre-trained models are not adequately equipped for diacritized texts, while others can recognize diacritics but are not fully prepared for diacritics-laden texts. The Arabic texts used for training the multilingual models (such as mBERT and XLM-R) likely contained minimal diacritics. In contrast, the Arabic-specific models (such as AraBERT and AraELECTRA) were trained on texts that had undergone preprocessing to remove diacritics entirely. This made their representations sensitive to diacritized texts.

5.5.2. Performance Evaluation of Fine-Tuned Models

The performance evaluation of the four fine-tuned models (AraELECTRA, AraBERT, XLM-R, and mBERT) across various datasets provides insights into their capabilities and limitations. Overall, AraELECTRA and XLM-R demonstrated robust performance, with consistently high precision and recall values, particularly when a dediacritization filter was applied during evaluation. AraBERT showed balanced performance, excelling in both precision and recall in some cases, while mBERT, although strong in certain aspects, exhibited more variable performance than the other models.

The results highlight XLM-R as the best overall performer, owing to its consistent and superior metrics across datasets and preprocessing conditions. XLM-R’s superior performance can likely be attributed to its RoBERTa-based pre-training procedure, which has been shown to be more effective than the original BERT pre-training used by mBERT. In particular, XLM-R employs dynamic masking during training, as opposed to the static masking in mBERT, which exposes the model to more diverse and contextually rich training scenarios and enhances its ability to generalize across tasks and datasets. Moreover, XLM-R’s extensive and diverse training corpus, which includes 2.5 TB of cleaned CommonCrawl data and Wikipedia text across 100 languages, enables it to capture a wider range of linguistic patterns. A subword vocabulary shared across languages facilitates effective knowledge transfer between languages, further boosting its performance in cross-lingual tasks and its ability to handle diverse textual environments.
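The difference between static and dynamic masking can be sketched in a few lines. The helpers below are a simplified, hypothetical illustration, not either model’s training code: they only choose which positions to mask, selecting each position independently with probability 0.15 (BERT and RoBERTa target about 15% of tokens), and they ignore the 80/10/10 rule for what replaces a selected token. Under static masking the mask is fixed once during preprocessing (BERT’s preprocessing can partly offset this by duplicating the training data with different masks); under dynamic masking a new mask is drawn every time a sequence is seen.

```python
import random
from typing import List

MASK_PROB = 0.15  # approximate share of positions selected for prediction


def sample_mask(num_tokens: int, rng: random.Random,
                prob: float = MASK_PROB) -> List[int]:
    """Positions chosen for prediction; each is selected independently
    with probability `prob` (a simplification of exact-count sampling)."""
    return [i for i in range(num_tokens) if rng.random() < prob]


def static_masks(num_tokens: int, epochs: int, seed: int = 0) -> List[List[int]]:
    """Static masking: one mask fixed at preprocessing time, reused every epoch."""
    mask = sample_mask(num_tokens, random.Random(seed))
    return [list(mask) for _ in range(epochs)]


def dynamic_masks(num_tokens: int, epochs: int, seed: int = 0) -> List[List[int]]:
    """Dynamic masking: a fresh mask each time the sequence is fed to the model."""
    rng = random.Random(seed)
    return [sample_mask(num_tokens, rng) for _ in range(epochs)]
```

Over several epochs, `static_masks` returns the same positions every time, whereas `dynamic_masks` asks the model to predict different positions of the same sentence, which is the source of the more varied training signal described above.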

Among the Arabic-specific models, AraELECTRA outperformed AraBERT despite being pre-trained on the same dataset, which we attribute to the ELECTRA [36] pre-training approach. Unlike BERT’s Masked Language Modeling (MLM), which masks tokens and predicts them, ELECTRA uses Replaced Token Detection (RTD): a generator replaces some tokens with plausible alternatives, and a discriminator identifies which tokens were replaced. This objective encourages the model to develop a deeper contextual understanding, making it more effective at distinguishing real tokens from replaced ones. Consequently, AraELECTRA likely learned a more nuanced and robust representation of the input text, leading to superior performance.
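The RTD objective reduces to a per-token binary target: the discriminator is trained to predict, for every position, whether the token differs from the original. The hypothetical helper below builds those targets; following ELECTRA’s convention, a position where the generator happens to sample the original token is labeled as original. Because every position carries a target, the discriminator receives a training signal from all input tokens, whereas MLM computes its loss only on the masked subset (about 15%).

```python
from typing import List, Sequence


def rtd_labels(original: Sequence[str], corrupted: Sequence[str]) -> List[int]:
    """Replaced-token-detection targets: 1 = replaced, 0 = original.

    A position counts as replaced only if the token actually changed, so a
    generator sample that reproduces the original token is labeled 0.
    """
    if len(original) != len(corrupted):
        raise ValueError("sequences must be aligned token by token")
    return [int(o != c) for o, c in zip(original, corrupted)]


original = ["the", "chef", "cooked", "the", "meal"]
# Positions 2 and 3 were sampled by the generator; position 3 reproduced "the".
corrupted = ["the", "chef", "ate", "the", "meal"]
labels = rtd_labels(original, corrupted)
```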

These findings suggest that, while each model has its strengths, the fine-tuned XLM-R and AraELECTRA models are the most reliable at distinguishing AIGTs from HWTs, particularly in diverse and variable textual environments. The dynamic masking of XLM-R and the RTD objective of AraELECTRA likely contribute significantly to their effectiveness.

5.6. Limitations

While our study leveraged the AIRABIC benchmark to evaluate the performance of our detection models, several limitations inherent in this benchmark may have influenced the results. First, the AIGTs in the AIRABIC dataset were generated only by ChatGPT 3.5, because BARD/Gemini did not support text generation in Arabic when the dataset was created. The benchmark may therefore not capture the full diversity of AIGT characteristics produced by other advanced models, which limits the comprehensiveness of our evaluation. Second, only the OpenAI Text Classifier [40] and GPTZero have been evaluated on the AIRABIC benchmark. While these provide useful reference points, other AI text detection systems should also be evaluated on this benchmark for a more comprehensive comparison. Given the lack of existing studies that evaluate these detection systems on Arabic, comparing our results with those of other detectors is not feasible without a separate study that analyzes all current detection systems in depth in the context of the Arabic language. We intentionally excluded the OpenAI Text Classifier results because that classifier showed a significant bias toward classifying Arabic texts as AI-generated. Furthermore, OpenAI has since discontinued the classifier, making it impractical for comparison. Excluding it avoids skewed results and misleading conclusions about the performance of our models. By acknowledging these limitations, we aim to provide clearer context for our findings and to highlight areas where future research can enhance the robustness of AI text detection methods for Arabic and other languages.

5.7. Ethical Considerations

The development and deployment of AI text detection technologies have ethical implications that must be carefully considered. While these technologies offer substantial benefits in detecting AIGTs and preventing plagiarism, they also raise important concerns. One of the primary considerations is data privacy and security: user data must be handled responsibly, anonymized, and protected against unauthorized access. In our research, we adhered strictly to data privacy guidelines and used publicly available data, ensuring that individuals’ privacy rights were respected and that their data were used ethically. Furthermore, open-access AI detection systems should not store the texts that users submit for AIG checking, which further protects user privacy and security.

We recommend several guidelines for developers, users, and policymakers to ensure the ethical development and deployment of AI text detection systems. Developers should ensure data privacy and security, regularly assess and mitigate biases, and maintain transparency in the AI development process. Users should understand the system’s limitations and potential errors and use the technology responsibly to avoid unfairly accusing individuals of misconduct. Policymakers should establish regulations and guidelines that promote the ethical use of AI text detection technologies, protecting individual rights and promoting fairness. Additionally, AI detection systems should not claim that their detection results are 100% accurate in all scenarios, as there is always potential for misclassification.

6. Conclusions and Future Work

This study introduced robust Arabic AI detection models built on the transformer-based pre-trained models AraELECTRA, AraBERT, XLM-R, and mBERT. Although we focused on Arabic because of its writing challenges, the model architecture can be adapted to other languages. Our primary goal was to detect AIGTs in essays, to help prevent plagiarism, and to overcome the challenges posed by the diacritics that often appear in Arabic religious texts. We extensively evaluated the impact of diacritization and dediacritization on the performance of the detection models using various datasets, including Diacritized Custom, Religious, Custom Plus, and Custom Max. Our findings reveal nuanced effects of diacritization on model performance, highlighting trade-offs between precision and recall that depend on the preprocessing approach. A key takeaway is that, while including diacritics in the training data enhances recall, duplicating examples to include both diacritized and non-diacritized versions is inefficient and does not significantly improve performance. Instead, applying a dediacritization filter during evaluation is a more effective and cost-efficient strategy, yielding the best performance in our experiments without extensive duplication of training data. Our detection models trained on diacritized examples achieved up to 98.4% accuracy, compared to GPTZero’s 62.7%, on the AIRABIC benchmark dataset. Among the models evaluated, XLM-R emerged as the best candidate owing to its consistent adaptability and superior performance across datasets and preprocessing conditions. AraELECTRA also showed strong results, particularly in precision, when dediacritization was applied. These findings offer practical guidance for practitioners detecting AI-generated Arabic text.
We recommend retaining diacritics in the training data, which can enhance recall, while applying a dediacritization filter during evaluation to achieve high precision.
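A dediacritization filter of this kind can be very small. The sketch below assumes that "diacritics" means the tanwin, short-vowel, shadda, and sukun marks (U+064B to U+0652) plus the superscript alef (U+0670) common in Quranic text. The character set used in the experiments reported here may differ, and the names `dediacritize` and `classify_with_filter` are hypothetical. PyArabic, listed in Appendix A, also offers a helper for this purpose (`araby.strip_tashkeel`).

```python
import re
from typing import Callable, Iterable, List, TypeVar

Label = TypeVar("Label")

# Assumed diacritic set: tanwin, fatha, damma, kasra, shadda, sukun (U+064B-U+0652)
# and the superscript (dagger) alef U+0670. Base letters are left untouched.
ARABIC_DIACRITICS = re.compile("[\u064B-\u0652\u0670]")


def dediacritize(text: str) -> str:
    """Remove the assumed set of Arabic diacritics from `text`."""
    return ARABIC_DIACRITICS.sub("", text)


def classify_with_filter(texts: Iterable[str], classifier: Callable[[str], Label]) -> List[Label]:
    """Evaluation-time filter: strip diacritics, then call the (hypothetical) classifier.

    Training data is not touched here, so diacritized examples can stay in the training set.
    """
    return [classifier(dediacritize(text)) for text in texts]


if __name__ == "__main__":
    # "bismi" written with explicit code points: ba, kasra, sin, sukun, mim, kasra.
    sample = "\u0628\u0650\u0633\u0652\u0645\u0650"
    print(dediacritize(sample) == "\u0628\u0633\u0645")  # True: only the three base letters remain
```

Because the filter runs only on inputs at evaluation time, it adds no training cost, which matches the recommendation not to duplicate every training example in diacritized and non-diacritized form.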

Future work should explore transfer learning with pre-trained multilingual models. Because models like XLM-R support many languages, it would be worth investigating how well a detector trained on one language performs on another. In a preliminary experiment, we tested our XLM-R-based detection model on English AIGTs, and it successfully detected them, suggesting that cross-lingual transfer learning is promising. However, we caution against generalizing this result, as the test used a limited number of examples rather than a comprehensive dataset. A systematic evaluation using larger and more diverse datasets across various languages is necessary to fully understand the potential and limitations of cross-lingual transfer learning for AI text detection.

Furthermore, future research should examine the models’ performance against adversarial text mimicking, where HWTs and AIGTs are mixed to challenge the detection systems. This approach will help to evaluate the detection models’ robustness and effectiveness in more complex and realistic scenarios. Understanding how well the models can discern subtle differences in adversarial settings will provide deeper insights into their practical applications and limitations.

Author Contributions

Conceptualization, H.A. and K.E.; methodology, H.A.; software, H.A.; validation, H.A. and K.E.; formal analysis, H.A.; investigation, H.A.; resources, H.A.; data curation, H.A.; writing—original draft preparation, H.A.; writing—review and editing, H.A. and K.E.; visualization, H.A.; supervision, K.E.; project administration, K.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All materials related to our study, including the detection models, all narrative results, logs, performance metrics, source code, and our novel datasets, are publicly accessible via our dedicated GitHub repository: https://github.com/Hamed1Hamed/AI_Generated_Text_Detector (accessed on 3 June 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Software

- EdrawMax (version 13.5.0, Wondershare Technology, Shenzhen, China): used for drawing the diagrams

- Python (version 3.9.18, Python Software Foundation, Beaverton, OR, USA)

- PyTorch (version 2.1.0+cu121, Facebook, Inc., Menlo Park, CA, USA)

- Farasa (version 1.0, QCRI, Doha, Qatar)

- NumPy (version 1.23.5, NumPy Developers)

- pandas (version 1.5.3, pandas Development Team)

- transformers (version 4.31.0, Hugging Face, Inc., New York, NY, USA)

- scikit-learn (version 1.3.2, Scikit-learn Developers)

- matplotlib (version 3.8.0, Matplotlib Development Team)

- seaborn (version 0.13.2, Seaborn Developers)

- tqdm (version 4.66.1, tqdm Developers)

- openpyxl (version 3.1.2, OpenPyXL Developers)

- farasapy (version 0.0.14, QCRI, Doha, Qatar)

- PyArabic (version 0.6.15, ArabTechies)

Equipment

- NVIDIA GeForce RTX 3090 GPU (NVIDIA Corporation, Santa Clara, CA, USA)

References

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Chowdhery, A.; Narang, S.; Devlin, J.; Bosma, M.; Mishra, G.; Roberts, A.; Barham, P.; Chung, H.W.; Sutton, C.; Gehrmann, S. PaLM: Scaling language modeling with pathways. arXiv 2022, arXiv:2204.02311.

- OpenAI. ChatGPT (Mar 14 Version) [Large Language Model]. Available online: https://chat.openai.com/chat (accessed on 30 March 2023).

- Bard, G.A. BARD. Available online: https://bard.google.com/ (accessed on 10 October 2023).

- Gemini. Available online: https://gemini.google.com/app (accessed on 1 February 2024).

- Weidinger, L.; Uesato, J.; Rauh, M.; Griffin, C.; Huang, P.-S.; Mellor, J.; Glaese, A.; Cheng, M.; Balle, B.; Kasirzadeh, A. Taxonomy of risks posed by language models. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, Seoul, Republic of Korea, 21–24 June 2022; pp. 214–229.

- Sheng, E.; Chang, K.-W.; Natarajan, P.; Peng, N. Societal biases in language generation: Progress and challenges. arXiv 2021, arXiv:2105.04054.

- Zhuo, T.Y.; Huang, Y.; Chen, C.; Xing, Z. Exploring AI ethics of ChatGPT: A diagnostic analysis. arXiv 2023, arXiv:2301.12867.

- Cotton, D.R.; Cotton, P.A.; Shipway, J.R. Chatting and cheating: Ensuring academic integrity in the era of ChatGPT. Innov. Educ. Teach. Int. 2023, 61, 228–239.

- Gao, C.A.; Howard, F.M.; Markov, N.S.; Dyer, E.C.; Ramesh, S.; Luo, Y.; Pearson, A.T. Comparing scientific abstracts generated by ChatGPT to original abstracts using an artificial intelligence output detector, plagiarism detector, and blinded human reviewers. bioRxiv 2022.

- Anderson, N.; Belavy, D.L.; Perle, S.M.; Hendricks, S.; Hespanhol, L.; Verhagen, E.; Memon, A.R. AI did not write this manuscript, or did it? Can we trick the AI text detector into generated texts? The potential future of ChatGPT and AI in Sports & Exercise Medicine manuscript generation. BMJ Open Sport Exerc. Med. 2023, 9, e001568.

- Pegoraro, A.; Kumari, K.; Fereidooni, H.; Sadeghi, A.-R. To ChatGPT, or not to ChatGPT: That is the question! arXiv 2023, arXiv:2304.01487.

- Alshammari, H.; El-Sayed, A.; Elleithy, K. AI-generated text detector for Arabic language using encoder-based transformer architecture. Big Data Cogn. Comput. 2024, 8, 32.

- Alshammari, H.; Ahmed, E.-S. AIRABIC: Arabic Dataset for Performance Evaluation of AI Detectors. In Proceedings of the 2023 International Conference on Machine Learning and Applications (ICMLA), Jacksonville, FL, USA, 15–17 December 2023; pp. 864–870.

- Farghaly, A.; Shaalan, K. Arabic natural language processing: Challenges and solutions. ACM Trans. Asian Lang. Inf. Process. 2009, 8, 1–22.

- Obeid, O.; Zalmout, N.; Khalifa, S.; Taji, D.; Oudah, M.; Alhafni, B.; Inoue, G.; Eryani, F.; Erdmann, A.; Habash, N. CAMeL tools: An open source python toolkit for Arabic natural language processing. In Proceedings of the Twelfth Language Resources and Evaluation Conference; European Language Resources Association: Marseille, France, 2020; pp. 7022–7032.

- Darwish, K.; Habash, N.; Abbas, M.; Al-Khalifa, H.; Al-Natsheh, H.T.; Bouamor, H.; Bouzoubaa, K.; Cavalli-Sforza, V.; El-Beltagy, S.R.; El-Hajj, W. A panoramic survey of natural language processing in the Arab world. Commun. ACM 2021, 64, 72–81.

- Habash, N.Y. Introduction to Arabic natural language processing. Synth. Lect. Hum. Lang. Technol. 2010, 3, 1–187.

- Abbache, M.; Abbache, A.; Xu, J.; Meziane, F.; Wen, X. The Impact of Arabic Diacritization on Word Embeddings. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2023, 22, 1–30.

- Al-Khalifa, S.; Alhumaidhi, F.; Alotaibi, H.; Al-Khalifa, H.S. ChatGPT across Arabic Twitter: A Study of Topics, Sentiments, and Sarcasm. Data 2023, 8, 171.

- Alshalan, R.; Al-Khalifa, H. A deep learning approach for automatic hate speech detection in the Saudi Twittersphere. Appl. Sci. 2020, 10, 8614.

- El-Alami, F.-z.; El Alaoui, S.O.; Nahnahi, N.E. Contextual semantic embeddings based on fine-tuned AraBERT model for Arabic text multi-class categorization. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 8422–8428.