Abstract

Distantly supervised relation extraction (DSRE) was introduced to overcome the scarcity of manually annotated data by automatically labeling text with triplet facts, but the resulting annotations are noisy and frequently mislabeled. To reduce the interference of this noise, we leverage knowledge distillation (KD) in a way that differs from conventional DSRE models. More specifically, we propose a model-agnostic KD method, Multi-Level Knowledge Distillation with Adaptive Temperature (MKDAT), which mainly involves two modules: Adaptive Temperature Regulation (ATR) and Multi-Level Knowledge Distilling (MKD). ATR assigns an adaptive, entropy-based distillation temperature to each training instance so that the student receives moderately softened supervision; for instances with high entropy, the labels may even be hardened. MKD combines the bag-level and instance-level knowledge of the teacher as supervision for the student, and trains the teacher at the bag level and the student at the instance level, which aims at mitigating the effects of noisy annotations and improving sentence-level prediction performance. In addition, we implement three MKDAT models based on the CNN, PCNN, and ATT-BiLSTM neural networks, respectively, and the experimental results show that our distillation models outperform the baseline models in both bag-level and instance-level evaluations.

1. Introduction

Information extraction (IE) is one of the most important tasks in natural language processing (NLP). It focuses on automatically extracting structured information from unstructured or semi-structured machine-readable documents or web pages, and plays a crucial role in applications such as text mining and financial investigation. As a fundamental part of IE and information retrieval, relation extraction (RE) is mainly responsible for identifying the relational facts of entity pairs in plain text, and it underpins modern artificial intelligence applications such as question answering and knowledge graph construction. With the advancement of the semantic understanding ability of deep learning technologies, RE has seen many breakthroughs. Nevertheless, the development of RE faces a severe challenge: the limited amount of manually annotated data.

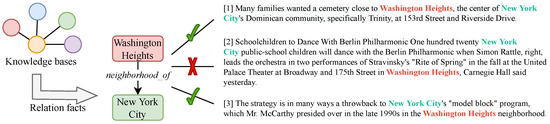

To this end, ref. [1] proposed a novel learning paradigm, distant supervision, which automatically labels each instance by aligning the entity pair it mentions with relation facts in knowledge bases (e.g., DBpedia [2] and Freebase [3]). Distantly supervised relation extraction (DSRE) is based on an overarching assumption: if two entities participate in a relation, then all sentences that mention the two entities are assumed to express that relation. This assumption significantly increases the quantity of annotated data. Unfortunately, the quantity of incorrect annotations increases as well, which creates a new obstacle. As the example in Figure 1 shows, all the sentences in the corpus that contain “Washington Heights” and “New York City” would be presumed to express the relation “neighborhood_of”, which is not always the case.

Figure 1.

Example of distantly supervised relation extraction, in which “Washington Heights” is the head entity and “New York City” is the tail entity, and the relation between them from knowledge bases is neighborhood_of. However, the second instance does not reveal the relation.

Recently, a refined assumption was proposed: if two entities participate in a relation, at least one sentence that mentions these two entities might express that relation. Some researchers [4,5,6,7] attempted to model the DSRE task at the bag-level based on multi-instance learning [8], and achieved good results on a bag-level evaluation. However, limited by their modeling intention, the aforementioned models fall short in sentence-level prediction evaluation. Some researchers [9,10,11,12] focused on noise reduction via filtering out instances with incorrect annotations to improve the accuracy of DSRE at the sentence level, with varying results.

Nevertheless, ref. [13] pointed out that even sentences that do not directly describe the relation between the mentioned entities still provide useful information for RE. Therefore, instead of filtering out noisy sentences, alternative methods have been developed to retain this valuable information. Some researchers [14,15,16] worked on knowledge distillation (KD) for noise reduction. KD usually follows a teacher–student architecture in which the student model mimics the teacher model in order to obtain competitive or even superior performance, and it has the characteristic of label softening. Label softening not only reduces the impact of noisy labels but also provides more useful information than hard (one-hot) labels. Benefiting from label softening, some distilled models achieved positive results. However, some distillation frameworks are limited by a unified distillation temperature or “softening” process, or ignore multi-level knowledge. Therefore, there is still potential to improve the performance of DSRE.

In this work, we focus on KD for DSRE. To overcome the weaknesses of KD mentioned above, we propose a model-agnostic knowledge distillation method, Multi-Level Knowledge Distillation with Adaptive Temperature (MKDAT), which aims at distilling strong relation extraction models and primarily involves two modules: the Adaptive Temperature Regulation module (ATR) and the Multi-Level Knowledge Distilling module (MKD). The main goal of ATR is to improve on the unified temperature method: because instances differ from one another, the temperature should be adaptive rather than fixed. According to the distribution of predictions, the model should be able to determine whether the labels need to be softened or hardened, because labels that are too hard or too soft are poor carriers of dark knowledge. MKD combines multi-instance learning and KD: the teacher model is trained at the bag level to provide multi-level knowledge to the student, and the student model is trained at the sentence level to improve sentence-level evaluation performance.

To sum up, our contributions are listed as follows:

- To the best of our knowledge, this paper is the first to propose the possibility of label hardening, alongside label softening, in knowledge distillation.

- We explored the possibility of combining multi-instance learning and knowledge distillation to train a teacher and student model at the bag and/or sentence levels.

- We explored the effects of multi-level training modes for the teacher and student model in knowledge distillation.

- We implemented three distillation instances based on the CNN, PCNN, and ATT-BiLSTM neural networks, which outperformed the baselines.

- Our distilled student models, which are trained on distantly supervised datasets, achieved a passable improvement of sentence-level evaluation performance on manually corrected distantly supervised data.

2. Related Work

2.1. Distantly Supervised Relation Extraction

As a core task of information extraction, RE has been widely used in question-answering systems, knowledge graph construction, semantic analysis, summarization, and other fields. However, RE heavily relies on manually annotated data. To overcome this drawback, ref. [1] proposed distant supervision to automatically annotate instances by aligning the relation facts of entity pairs to knowledge bases. This yields large-scale training data, but inevitably also a large number of mislabeled (noisy) annotations.

From the perspective of noise handling, there are two main kinds of methods for modeling distantly supervised relation extraction (DSRE) datasets. The first kind is noise filtering methods, which focus on identifying noisy instances and then filtering them out. Ref. [17] assumed that each bag contained at least one sentence that revealed the true relation, and ignored the other, noisy samples when finding the true instance. Refs. [9,18] proposed adversarial-training-based methods to enable the model to recognize noisy data and reduce the introduction of noisy annotations. Refs. [10,12,19] proposed reinforcement-learning-based methods to train a high-quality sentence selector for noise filtering, and then modeled RE on the selected clean data. Ref. [20] adopted a semantic knowledge base and semantic representations of entity pairs to select informative positive instances for model training. It must be noted that, while noise filtering may seem natural and beneficial, ref. [13] pointed out that even when sentences do not directly describe the relation between the mentioned entities, they still provide useful information for RE.

Hence, a second approach in handling noisy annotations was introduced, noise reduction methods, which focus on alleviating the effects of noisy data or correcting noisy annotations to some extent. Ref. [21] introduced an entity-pair-level denoising method which exploits semantic information from correctly labeled entity pairs to correct wrong labels dynamically during training. Refs. [22,23,24,25] proposed various multi-level and different attention mechanisms to model a noisy corpus more reasonably. Refs. [4,26,27,28] proposed knowledge-enhanced methods to mitigate the effects of noisy data via introducing additional knowledge, and improved the performance of DSRE. Ref. [29] extended a generative pre-trained transformer (GPT) to handle bag-level, multi-instance training and prediction for distantly supervised datasets by aggregating sentence-level information with selective attention to produce bag-level predictions. Lastly, Refs. [30,31] focused on the correlation of relations to further handle long-tail relations and improve the accuracy of RE.

2.2. Knowledge Distillation for DSRE

Knowledge distillation (KD), a transfer learning technology, has received increasing attention from the research community in recent years. The initial intention of KD is model compression and acceleration; specifically, KD methods distill the knowledge from a larger deep neural network into a small network ([32,33,34]), also known as the teacher–student framework, where the “teacher” usually is the larger deep neural network and the “student” is the lightweight network. When ref. [35] discovered that the label softening ability of KD could be used to weaken the interference of noisy annotations for image classification models, the label softening functionality of KD was widely adopted in a variety of deep learning tasks to confront noisy annotations [36,37].

Furthermore, according to the findings of [38], students are easier to train if they have the same learning capacity as teachers; hence, researchers prefer students whose parameter count is at the same level as the teacher’s rather than lightweight ones. Recently, some KD frameworks achieved positive results within the DSRE field. Ref. [14] proposed a teacher–student co-training mechanism, which utilized information on data credibility and sample confidence and iteratively distilled knowledge from external neural networks into an existing RE model. Ref. [15] proposed a cooperative distillation method, which took useful information expressed in a knowledge graph into account and adopted a mutual learning approach based on adaptive bi-directional knowledge distillation and a dynamic ensemble with noise-varying instances. Ref. [16] proposed a model-agnostic framework, which employed multi-instance dynamic temperature distilling and target fusion mechanisms.

Although these KD frameworks achieved positive results, they are still constrained by the conventional label softening mechanism and by distilling knowledge at a single level. Thus, we propose a model-agnostic distillation method for DSRE that breaks the constraints of “softening”, provides multi-level knowledge to the student, and adopts different training levels for the teacher and student. The experimental results in Section 5 demonstrate the effectiveness of our approach.

3. Method

3.1. Task Definition

In this work, we focus on distantly supervised relation extraction (DSRE) modeled as a multi-instance learning problem. We denote a bag consisting of n sentences (instances) which contain the mention of an entity pair , in which is the number of words of sentence and . Noting that for simplicity we assume that the mention of the entity is a single word, such as . According to the assumption of DSRE, namely, that a relation exists between an entity pair, e.g., . There is at least one sentence in the bag reflecting that relation of the given entity pair. The task is thus to identify the relation of on the given B.

3.2. Preliminary

There are bag-level and sentence-level DSRE models: bag-level models are trained and evaluated on bags, each containing a few instances that share the same entity pair, whereas sentence-level models are trained and evaluated on individual sentences. Although both kinds of models can make predictions at the bag and sentence levels, each is constrained by its own characteristics. A model is usually trained and used for inference on the same task; otherwise, its training and inference performance are inconsistent. More specifically, models trained on bag-level data with multi-instance learning methods perform poorly when making predictions on sentence-level data, because they need to aggregate the information of multiple instances in a bag to make predictions. However, bag-level training can effectively alleviate noise when the bags contain wrongly labeled instances. Conversely, the bag-level performance of sentence-level models is usually adequate under some additional prediction mechanisms, e.g., adopting the most confident sentence prediction as the result of the bag. However, it is difficult to train a sentence-level model on noisy data without additional assistance, e.g., noise reduction and label softening.

Which method should we choose? The answer is both. As mentioned above, the benefit of bag-level models is that they alleviate the interference of noisy annotations in each bag; however, their sentence-level performance is constrained by their modeling intuition. Sentence-level models, in contrast, are more appropriate for sentence-level prediction and can use the knowledge learned from distantly supervised datasets to make predictions at the sentence level. This bridges the gap between distant supervision and standard supervision (in general, the former makes a prediction on a bag consisting of a few samples and the latter on each sample), but noisy annotations harm training at the sentence level. The two kinds of models thus compensate for each other’s shortcomings. Therefore, we take a mixed approach and train models at both the bag and sentence levels; specifically, we adopt a knowledge distillation method in which the teacher model is trained at the bag level and the student model at the sentence level. For more details, refer to Section 3.6 and the experiments in Section 5.2, which show that our mixed training method is effective. We also find that the bag-level performance of our proposed sentence-level RE model is even better than that of the bag-level RE model.

3.3. Method Overview

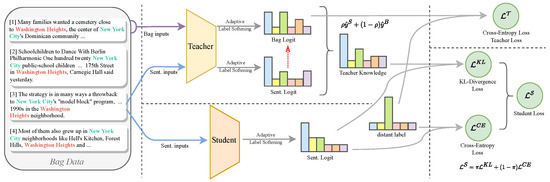

To achieve the goal mentioned above, we propose a model-agnostic knowledge distillation method, Multi-Level Knowledge Distillation with Adaptive Temperature (MKDAT), which aims at distilling an excellent relation extraction model at the bag and sentence levels and mainly involves two modules: the Adaptive Temperature Regulation module (ATR) and the Multi-Level Knowledge Distilling module (MKD). The entire method is shown in Figure 2.

Figure 2.

The entire distillation framework for distantly supervised relation extraction, including how the teacher and student models should be trained, and the red dashed arrow denotes that the bag logit is based on the sentence logit.

The Adaptive Temperature Regulation module (ATR) addresses a drawback of the original temperature mechanism of knowledge distillation, in which label softening is usually controlled by a single fixed hyperparameter. However, different relations and instances lead to different prediction distributions, so the differences among instances should be taken into account. The experiments show that our adaptive temperature module improves RE performance.

The Multi-Level Knowledge Distilling module (MKD) aims at improving the sentence-level evaluation performance of models trained on distantly supervised datasets. To this end, we combine multi-instance learning and knowledge distillation, training the teacher model at the bag level and the student model at the sentence level. Note that although the teacher model is pre-trained at the bag level, it can also provide sentence-level supervision to the student via MKD. We describe the two modules in more detail in the following subsections.

3.4. Adaptive Temperature Regulation

The most popular form of knowledge in knowledge distillation is known as soft targets. Specifically, soft targets are the probabilities that an instance belongs to each of the $m$ classes, and they can be estimated by a softmax function with temperature as follows:

$$p_j = \frac{\exp(z_j/\tau)}{\sum_{k=1}^{m} \exp(z_k/\tau)}$$

where $z$ is a logit vector, $p_j$ is the probability of the $j$-th class, and $\tau$ is the temperature, which is introduced to control the softness of each prediction. As stated in [32], soft targets contain the informative dark knowledge from the teacher model. Because different relations and instances lead to different prediction distributions, it is more reasonable to choose a dynamic temperature rather than a fixed one. Meanwhile, label softening is not always beneficial: it flattens the distribution of predictions, and the softest prediction is the worst case, because the predicted probability of every class then equals $1/m$ and conveys no meaningful information to the student.
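As an illustration of how the temperature reshapes a prediction, the following minimal Python sketch applies a temperature-scaled softmax to one logit vector. The function name `softmax_with_temperature` and the example logits are assumptions made for illustration; they are not taken from the implementation evaluated in this paper.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Return softmax(logits / temperature) as a list of probabilities."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Illustrative logits only (hypothetical values for three relations).
logits = [4.0, 1.0, 0.5]
raw = softmax_with_temperature(logits, 1.0)        # ordinary softmax
softened = softmax_with_temperature(logits, 4.0)   # flatter distribution
hardened = softmax_with_temperature(logits, 0.5)   # sharper distribution
uniform_like = softmax_with_temperature(logits, 1e6)  # approaches 1/m per class
```

A temperature above 1 lowers the largest probability, a temperature below 1 raises it, and a very large temperature drives every class toward the uninformative uniform value.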

Accordingly, considering the two points above, we introduce a novel learnable, entropy-based method to calculate an adaptive temperature for each instance and thereby control the degree of softening (label hardening is possible for predictions that are too soft). The proposed softmax function with adaptive temperature is as follows:

$$p_i = \mathrm{softmax}\left(\frac{z_i}{\tau_i}\right)$$

where $p_i$ is the soft prediction, $z_i$ is the logit vector, and $\tau_i$ is the adaptive temperature for the i-th instance. $\tau_i$ is a crucial factor for each instance because it decides the direction and degree of softening. $\tau_i$ can be calculated as follows:

In which can be learnable or a hyperparameter, m is the number of relations, and is the entropy of the i-th instance. Noting that and , and we can adjust the anchor which decides whether to increase or decrease among . For the logit vector , the entropy can be calculated as follows:

$$H(z) = -\sum_{j=1}^{m} p_j \log p_j$$

where $p_j$ is the probability of the $j$-th relation without softening. According to Equation (2), the Adaptive Temperature Regulation module assigns lower temperatures to predictions with higher entropy, and vice versa. Notably, the softmax function with temperature is the same as the raw softmax function when $\tau = 1$, and it tends to soften (or harden) predictions when $\tau > 1$ (or $\tau < 1$). Therefore, the ATR module provides the flexible softening we desire: it applies label softening when the prediction is too uneven, and label hardening when the prediction is too flat.
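The qualitative behavior described above (a lower temperature for higher entropy, softening for peaked predictions, hardening for flat ones) can be sketched as follows. The linear rule inside `adaptive_temperature` is a hypothetical stand-in chosen only for illustration and is not the formula used by ATR; the parameter names `beta`, `anchor`, and `tau_min` are likewise assumptions that loosely mirror the scaling factor, anchor, and minimal temperature mentioned in the text.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy (natural log) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def adaptive_temperature(logits, beta=1.0, anchor=0.5, tau_min=0.1):
    """Hypothetical rule: the temperature falls linearly as entropy rises.

    The entropy of the unsoftened prediction is normalized by log(m) so that
    it lies in [0, 1]; predictions below the anchor get tau > 1 (softening),
    predictions above it get tau < 1 (hardening). tau_min keeps the
    temperature away from zero, which would make the scaled logits blow up.
    """
    m = len(logits)
    normalized = entropy(softmax(logits)) / math.log(m)
    return max(tau_min, 1.0 + beta * (anchor - normalized))


peaked = [8.0, 0.0, 0.0]   # confident prediction, low entropy
flat = [0.1, 0.0, 0.0]     # near-uniform prediction, high entropy
tau_peaked = adaptive_temperature(peaked)
tau_flat = adaptive_temperature(flat)
soft_peaked = softmax(peaked, tau_peaked)
hard_flat = softmax(flat, tau_flat)
```

Under this assumed rule the confident prediction is softened and the near-uniform prediction is sharpened, which is the direction of adjustment the section argues for.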

3.5. Multi-Level Knowledge Distilling

In MKDAT, knowledge distillation is based on the general teacher–student architecture, and we leverage the same network for the teacher and student, in which the teacher is a bag-level RE model and the student is a sentence-level RE model. More precisely, the teacher is trained with distantly supervised bag-level annotations, and the student is supervised by the combination of the teacher’s soft predictions and distantly supervised annotations of sentences.

More specifically, we denote the teacher and student models as -net and -net, respectively, and their predictions for sentence as and , in which the are logit vectors and are the temperatures in the last prediction layers of -net and -net. Moreover, since -net is a bag-level model, we introduce an attention combination mechanism to obtain the prediction of the i-th bag annotation, shown as follows:

where is the soft prediction and is the logit matrix of all n instances within the i-th bag, is the softmax function and is a learnable transformation operation which transforms into importance weights of instances with shape . As a consequence, for the i-th instance within the j-th bag, the soft supervision containing dark knowledge, which is the -net output to -net, can be calculated as follows:

in which is a hyperparameter used to control the importance weights of sentence-level and bag-level dark knowledge, and is a combination of the teacher’s soft predictions and one of the supervisions of the i-th instance in -net. The separated training methods for teacher-net and student-net, training -net at the bag-level and -net at the sentence-level, empowers the knowledge distillation framework to properly combine the bag-level and sentence-level knowledge. Namely, bag-level training can alleviate the interference of noisy sentences for -net, because it would harm the performance if we directly adopted the mislabeled sentences to train the sentence-level model, and sentence-level training will avoid incorrect annotations to some extent by regarding the soft predictions of -net as supervisions.
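A minimal sketch of the two steps described above, under simplifying assumptions: the learnable transformation is replaced by a fixed scoring function (`score_instance`, hypothetical), the bag-level prediction is taken as a softmax over the attention-weighted sentence logits, and the two levels of knowledge are fused by a convex combination weighted by `alpha`. None of these specific forms is confirmed by the text; they only illustrate the data flow from sentence logits to a fused soft target.

```python
import math


def softmax(values, temperature=1.0):
    scaled = [v / temperature for v in values]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def score_instance(logits):
    # Hypothetical stand-in for the learnable transformation:
    # score a sentence by its largest logit.
    return max(logits)


def teacher_bag_prediction(bag_logits, temperature=1.0):
    """Attention-weighted combination of sentence logits, then softmax."""
    weights = softmax([score_instance(z) for z in bag_logits])
    num_relations = len(bag_logits[0])
    pooled = [
        sum(w * z[r] for w, z in zip(weights, bag_logits))
        for r in range(num_relations)
    ]
    return softmax(pooled, temperature)


def fuse_soft_targets(sentence_probs, bag_probs, alpha=0.5):
    """Assumed fusion: alpha weights sentence-level knowledge, 1 - alpha bag-level."""
    return [alpha * s + (1.0 - alpha) * b for s, b in zip(sentence_probs, bag_probs)]


# One bag with two sentences and three relations (illustrative logits).
bag_logits = [[2.0, 0.1, -1.0], [0.3, 0.2, 0.1]]
bag_probs = teacher_bag_prediction(bag_logits)
soft_targets = [
    fuse_soft_targets(softmax(z), bag_probs, alpha=0.6) for z in bag_logits
]
```

Because both inputs are probability distributions and the weights sum to one, each fused target remains a valid distribution that the student can be trained against.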

3.6. Loss and Training

We follow the offline knowledge distillation method, which firstly pre-trains the bag-level teacher model, and then, trains the sentence-level student model, additionally supervised with the teacher’s predictions. Specifically, we train the teacher model with cross-entropy loss:

where is the number of bags contained in the dataset, for a given bag, is the bag-level distantly supervised annotation, and is the bag-level prediction of the teacher model. Notably, for a bag with relation instance , and , and is the predicted probability that relation r exists in the bag.

Considering -net is asked to imitate the predictions of -net, we adopt Kullback–Leibler divergence (KL divergence for short) as the loss function of the sentence-level student, which measures how one probability distribution is different from a second. The loss can be calculated as follows:

In which S is the number of sentences in the dataset, for a given i-th sentence, is the soft prediction of -net, which combines bag-level and sentence-level predictions, and is the prediction of -net. In addition, we take the distantly supervised annotations into account via regarding the bag-level annotation as the annotations of sentences which are contained in the bag, and we leverage cross-entropy as the loss function, which can be calculated as follows:

where S is the number of sentences in the dataset, and is the same as but works at the sentence level. Accordingly, we obtain the final loss function of -net:

where is a hyperparameter which indicates the importance weights of the KL loss supervised by the fused predictions of -net and cross-entropy loss supervised by distantly supervised annotations.
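The student objective described in this subsection can be sketched for a single sentence as below. This is an assumption-laden illustration: the direction of the KL divergence (teacher target relative to student prediction), the convex weighting `weight * KL + (1 - weight) * CE`, and the names `kl_divergence`, `cross_entropy`, `student_loss`, and `weight` are all hypothetical choices, not the exact formulation of the paper.

```python
import math


def kl_divergence(target, predicted, eps=1e-12):
    """KL(target || predicted) for two discrete distributions."""
    return sum(
        t * math.log((t + eps) / (p + eps))
        for t, p in zip(target, predicted)
        if t > 0.0
    )


def cross_entropy(predicted, label_index, eps=1e-12):
    """Cross-entropy against a one-hot distantly supervised label."""
    return -math.log(predicted[label_index] + eps)


def student_loss(soft_target, student_probs, label_index, weight=0.7):
    """Assumed combination of distillation loss and label loss."""
    kd = kl_divergence(soft_target, student_probs)
    ce = cross_entropy(student_probs, label_index)
    return weight * kd + (1.0 - weight) * ce


teacher_target = [0.7, 0.2, 0.1]   # fused soft prediction (illustrative)
student_probs = [0.5, 0.3, 0.2]    # student prediction (illustrative)
loss = student_loss(teacher_target, student_probs, label_index=0)
```

Setting `weight` to 0 reduces the objective to plain cross-entropy on the noisy labels, while setting it to 1 trains the student on the teacher’s soft predictions alone.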

3.7. Implementation of MKDAT

Notably, MKDAT is a model-agnostic knowledge distillation method, so we adopt CNN, PCNN, and ATT-BLSTM neural networks to implement the MKDAT models for evaluation. In particular, the convolutional neural network (CNN) is able to capture local features effectively; the piecewise convolutional neural network (PCNN) is a variant of CNN that can retain richer information than CNN; and the attention-based bi-directional long short-term memory network (ATT-BLSTM) is an RNN-based model that can capture global features. In addition, we leverage the same network architecture for the teacher and student networks to clearly evaluate the performance of MKDAT.

For simplicity, we adopt the same text preprocessing method for the CNN, PCNN, and ATT-BLSTM models. Specifically, for a sentence, we firstly insert the special tokens and in front of the mentions of head and tail entities, respectively, and and at the end of them. Moreover, we calculate the relative positions which are some distance away from the mentions of the head and tail entities of each token. For example, assuming that and can be indexed by j and k, for a token indexed by i, the relative position to the mention of the head entity is , and to the mentions of the tail entity is . Eventually, we leverage the pre-trained word embeddings model GloVe [39] to convert tokens to vectors, which are trained on aggregated global word–word co-occurrence statistics from a corpus. Remarkably, we additionally introduce randomly initialized embeddings for four special mark tokens and relative positions.
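The marker insertion and relative-position computation described above can be sketched as follows. The marker strings `<h>`, `</h>`, `<t>`, and `</t>` are hypothetical placeholders for the four special tokens, and the spans are assumed to be non-overlapping `(start, end)` token ranges with an exclusive end; the relative position of token `i` is taken as its signed offset from the opening marker of each entity.

```python
def mark_entities(tokens, head_span, tail_span):
    """Wrap the head and tail mentions with (hypothetical) marker tokens."""
    opens = {head_span[0]: "<h>", tail_span[0]: "<t>"}
    closes = {head_span[1]: "</h>", tail_span[1]: "</t>"}
    marked = []
    for i, token in enumerate(tokens):
        if i in closes:          # close a mention that ended before token i
            marked.append(closes[i])
        if i in opens:           # open a mention that starts at token i
            marked.append(opens[i])
        marked.append(token)
    if len(tokens) in closes:    # mention that ends with the sentence
        marked.append(closes[len(tokens)])
    return marked


def relative_positions(marked, head_marker="<h>", tail_marker="<t>"):
    """Signed offsets (i - j, i - k) of every token to the two opening markers."""
    j = marked.index(head_marker)
    k = marked.index(tail_marker)
    return [(i - j, i - k) for i in range(len(marked))]


tokens = "Washington Heights is a neighborhood of New York City".split()
marked = mark_entities(tokens, head_span=(0, 2), tail_span=(6, 9))
positions = relative_positions(marked)
```

Each offset pair would then index into two randomly initialized position-embedding tables, whose outputs are concatenated with the word embedding of the token.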

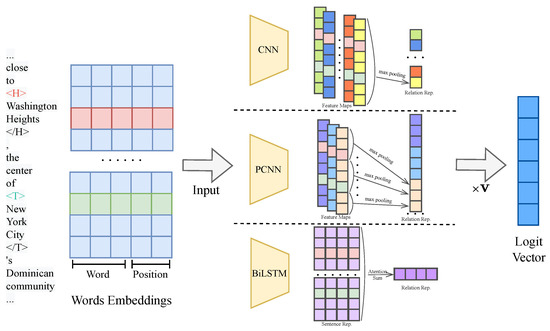

After the preprocessing, we obtain the sentence representations , where n is the number of tokens, d is the dimension of the word embeddings, and is the dimension of the relative position embeddings. The relative position embeddings provide the position-dependent features to models, which helps position-insensitive models (e.g., CNNs) to achieve better semantic understanding. The architectures of the three models are shown in Figure 3. The difference between CNN and PCNN is the pooling process after convolution; for the feature maps , k is the number of convolution filters, the CNN directly makes a max pooling to obtain the relation representations . However, in PCNN each feature map is divided into three parts according to the positions of entity tokens and , and the three divided feature maps are reduced by a pooling function (e.g., max or mean pooling). As a result, we obtain the relation representations of PCNN. Likewise, as shown in Figure 3, we obtain the relation representations of the ATT-BLSTM model, where is the dimensionality of the output space of ATT-BLSTM.
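The difference between the two pooling schemes can be sketched on a small feature map with one row per token position and one column per convolution filter. The segment boundaries used here (each entity position closes its segment) and the zero fill for an empty segment are assumptions for illustration; the function names are hypothetical.

```python
def max_pool(feature_maps):
    """CNN-style pooling: one maximum per filter over all positions."""
    return [max(column) for column in zip(*feature_maps)]


def piecewise_max_pool(feature_maps, head_index, tail_index):
    """PCNN-style pooling: pool three segments split at the entity positions."""
    first, second = sorted((head_index, tail_index))
    num_filters = len(feature_maps[0])
    segments = [
        feature_maps[: first + 1],
        feature_maps[first + 1 : second + 1],
        feature_maps[second + 1 :],
    ]
    pooled = []
    for segment in segments:
        if segment:
            pooled.extend(max_pool(segment))
        else:
            pooled.extend([0.0] * num_filters)  # assumed fill for an empty segment
    return pooled


# Five token positions, two filters (illustrative values).
feature_maps = [[1, 0], [2, 5], [0, 3], [4, 1], [3, 2]]
cnn_repr = max_pool(feature_maps)
pcnn_repr = piecewise_max_pool(feature_maps, head_index=1, tail_index=3)
```

With `k` filters, the CNN representation has `k` values while the piecewise representation has `3k`, which is how PCNN keeps coarse positional structure that a single global maximum discards.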

Figure 3.

The CNN-, PCNN-, and ATT-BLSTM-based models, in which the input word embeddings and output logit vector are the same for the three models, but their relation representations are different and relative to their hyperparameters.

The aforementioned processes aim at obtaining the sentence-level logit vectors. The bag-level teacher-net is trained by additionally applying Equation (5), whereas the sentence-level logit vectors alone are enough to train student-net; refer to Equations (8) and (9) for details. In general, the prediction process follows the training process; in other words, teacher-net and student-net are commonly used for bag-level and sentence-level prediction, respectively. However, teacher-net is able to make sentence-level predictions by regarding the sentence-level logit vectors as the final outputs. Likewise, student-net can make bag-level predictions with an additional step: after student-net makes predictions for all sentences in a bag, we adopt the most confident sentence prediction as the result of the bag.
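The additional step that turns sentence-level predictions into a bag-level prediction can be sketched as below. The function name `predict_bag` and the example probabilities are hypothetical, and ties are resolved in favor of the earlier sentence, which is an arbitrary choice.

```python
def predict_bag(sentence_probs):
    """Return (relation index, confidence) of the most confident sentence."""
    best_relation, best_confidence = None, -1.0
    for probs in sentence_probs:
        confidence = max(probs)
        if confidence > best_confidence:
            best_confidence = confidence
            best_relation = probs.index(confidence)
    return best_relation, best_confidence


# Three sentences in one bag, three candidate relations (illustrative values).
bag = [
    [0.6, 0.3, 0.1],
    [0.2, 0.1, 0.7],
    [0.4, 0.5, 0.1],
]
relation, confidence = predict_bag(bag)
```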

4. Experiments

4.1. Datasets

We evaluate our model on the NYT-H dataset proposed by [40], an enhanced distantly supervised dataset with human annotation, which contains DS-generated training data and human-annotated test data. With the human-annotated test data, we can avoid the inaccurate evaluation caused by the distant supervision assumption and estimate performance against true facts. NYT-H is a large-scale distantly supervised dataset for relation extraction, which contains 22 relation facts, 667,806 instances, and 320,668 sentences. The training set contains 107,093 instances and 17,515 bags, and the test set contains 9,955 human-annotated instances and 3,735 bags. It is worth noting that the training and test sets both contain 22 relation facts; i.e., the relations in the two sets fully overlap. Hence, the dataset does not face an inconsistency problem. We refer readers to the original paper [40] for more statistical details.

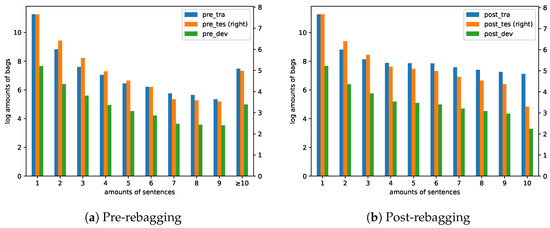

Nevertheless, as Figure 4a shows, the number of instances within bags is extremely imbalanced. The biggest bags in the training and test datasets contain 2216 and 210 instances, respectively, and 1764 and 146 bags, respectively, contain at least 10 instances. Accordingly, we apply a rebagging process to reduce the number of instances within bags while preserving the assumption of distant supervision; precisely, we split the instances of a bag into new bags with the same annotation. We follow the principle that the number of bags is inversely proportional to the number of sentences within them, and we limit the maximum number of sentences in a bag to 10. As a consequence, we obtain a sizeable rebagged dataset, with the distribution of bags containing different numbers of instances shown in Figure 4b.

Figure 4.

The distributions of the number of instances within bags in the training, development, and test data before and after rebagging. For clearer plotting, bags that contain at least 10 instances before rebagging are combined into one group. Note that the Y-axis is on a log scale; pre_tra and post_tes indicate pre-rebagging training data and post-rebagging test data, and the other labels follow the same pattern.
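The size cap used in rebagging can be illustrated with a simple fixed-size split. This sketch only enforces the limit of 10 sentences per bag and keeps the annotation of the original bag; it does not reproduce the bag-size distribution principle stated in Section 4.1, and the tuple layout `(entity_pair, relation, sentences)` is an assumed data format.

```python
def rebag(sentences, max_size=10):
    """Split one bag into consecutive chunks of at most max_size sentences."""
    if max_size < 1:
        raise ValueError("max_size must be at least 1")
    return [sentences[i : i + max_size] for i in range(0, len(sentences), max_size)]


def rebag_dataset(bags, max_size=10):
    """Apply rebag to every bag, copying the entity pair and relation label."""
    new_bags = []
    for entity_pair, relation, sentences in bags:
        for chunk in rebag(sentences, max_size):
            new_bags.append((entity_pair, relation, chunk))
    return new_bags


# One oversized bag with 23 placeholder sentences (illustrative data).
bags = [
    (("Washington Heights", "New York City"), "neighborhood_of",
     ["sentence %d" % i for i in range(23)]),
]
rebagged = rebag_dataset(bags)
sizes = [len(chunk) for _, _, chunk in rebagged]
```

Every new bag still mentions the same entity pair and carries the same relation label, so the distant supervision assumption holds for each of them exactly as it did for the original bag.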

4.2. Comparative Models and Evaluation Metrics

We compare the performance of MKDAT against the models adopted by the original paper [40] that proposed the NYT-H dataset. More specifically, we follow [40] in evaluating our distillation models under two test settings: Distantly Supervised Labels as Ground Truths (DSGT), which assumes that all the DS labels are ground truths, and Manual Annotation as Ground Truth (MAGT), where the metrics are calculated on the same evaluation set but use the human-annotated labels as ground truths. We also report the sentence-level and bag-level evaluation performance of both teacher-net and student-net. To directly observe the effect of our distillation framework, we also report the performance of the CNN, PCNN, and ATT-BLSTM models described in Section 3.7, trained by the standard multi-instance learning method, as baselines.

Note that we adopt the micro-averaged F1 score as the metric throughout all sentence-level and bag-level evaluations. The bag-level micro-averaged F1 score can be calculated as follows:

$$P = \frac{\sum_{i=1}^{m} TP_i}{\sum_{i=1}^{m} (TP_i + FP_i)}, \quad R = \frac{\sum_{i=1}^{m} TP_i}{\sum_{i=1}^{m} (TP_i + FN_i)}, \quad F1 = \frac{2PR}{P + R}$$

where $P$ and $R$ are the micro-averaged precision and recall, and $m$ denotes the number of relations. For a specific relation $i$, $TP_i$ indicates the number of true positive predictions, where the model correctly predicts that relation $i$ exists in the bag; $FP_i$ indicates the number of false positive predictions, where the model wrongly predicts that relation $i$ exists in the bag; and $FN_i$ indicates the number of false negative predictions, where the model wrongly predicts that relation $i$ does not exist in the bag. The sentence-level micro-averaged F1 score is defined in the same way, but calculated at the sentence level.
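As a sanity check on the metric, the following sketch computes a micro-averaged F1 score from gold and predicted labels, pooling true positives, false positives, and false negatives over all relations. It assumes one label per bag (or per sentence); the optional `negative_label` argument, which excludes a no-relation class from the counts, is a hypothetical convenience and not a setting stated in the paper.

```python
def micro_f1(gold, predicted, negative_label=None):
    """Micro-averaged F1 over single-label predictions."""
    tp = fp = fn = 0
    for g, p in zip(gold, predicted):
        if p == g and p != negative_label:
            tp += 1                      # relation p correctly predicted
        else:
            if p != negative_label and p != g:
                fp += 1                  # relation p wrongly predicted
            if g != negative_label and p != g:
                fn += 1                  # relation g wrongly missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0.0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


gold = [0, 1, 2, 1]        # illustrative relation indices
predicted = [0, 2, 2, 1]
score = micro_f1(gold, predicted)
```

In this example three of the four predictions are correct and the single error counts once as a false positive and once as a false negative, so precision, recall, and F1 all equal 0.75.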

4.3. Experimental Settings

We firstly conduct hyperparameter (HP) searching experiments, and denote the teacher models of the three instances as CNN-, PCNN-, and BLSTM-; the student models are CNN-, PCNN-, and BLSTM-. For each distillation model, we divide the parameters into three parts: instance parameters, distillation parameters, and training parameters. For the CNN and PCNN models, the instance parameters are the same, which are convolution window size w and number of filters n; and the instance parameters of the BLSTM model are hidden units d, input dropout , and attention dropout . In addition, all distillation models have the same distillation parameters and training parameters, where the distillation parameters are logit dropout , the weight of the teacher’s prediction , the weight of the student’s loss , and the minimal temperature ; the training parameters are batch size b and learning rate . It is worth noting that the minimal temperature is crucial to avoid NaN loss; according to Equation (2), if the temperature is equal to 0 it will lead to infinite logit.

Moreover, in addition to the above-mentioned hyperparameters, we fix the other settings: we use the 50-dimensional GloVe embeddings, which contain a vocabulary of 300,000 words, to initialize the word embeddings, and we use Adam as the optimizer. We set the dimension of the position embeddings to five and the maximum sentence length to 128, and we use an early stopping mechanism when training the distillation models. Specifically, the loss on the evaluation data must decrease by more than 0.01 within at most two epochs; otherwise, training stops. Meanwhile, we evaluate the performance using micro-averaged F1 scores calculated on the MAGT data.
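The early stopping rule can be sketched as follows. This is one possible reading of the rule, in which training stops once the evaluation loss has failed to improve by more than 0.01 for two consecutive epochs; the function name `epochs_until_stop` and the loss values are hypothetical.

```python
from typing import Iterable


def epochs_until_stop(eval_losses: Iterable[float], min_delta: float = 0.01, patience: int = 2) -> int:
    """Return how many epochs are run before early stopping triggers."""
    best = float("inf")
    stale = 0   # consecutive epochs without a sufficient decrease in loss
    epochs = 0
    for loss in eval_losses:
        epochs += 1
        if best - loss > min_delta:
            best = loss  # sufficient improvement: remember it and reset
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                break    # no sufficient improvement for `patience` epochs
    return epochs


# Hypothetical evaluation losses, one per epoch.
losses = [0.90, 0.70, 0.65, 0.645, 0.644, 0.60]
stopped_at = epochs_until_stop(losses)
```

In this toy run the fourth and fifth epochs improve on the best loss by less than 0.01, so training stops after the fifth epoch and the sixth is never reached.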

The searched parameters are shown in Table 1; the first column gives the hyperparameter search space, and the other columns give the experimentally optimal parameters of the corresponding distillation model. Because exhaustively searching all parameter combinations is impractical, we randomly search 32 models for CNN and PCNN, and 64 models for BLSTM.
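A random search of this kind can be sketched in a few lines. The search space below is purely hypothetical (the actual space is the one listed in Table 1), and `dummy_evaluate` is a stand-in for training a distillation model and returning its micro-averaged F1 score on the MAGT data.

```python
import random
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical search space, for illustration only.
SEARCH_SPACE: Dict[str, Sequence] = {
    "window_size": [3, 5, 7],
    "num_filters": [128, 230, 256],
    "batch_size": [32, 64, 128],
    "learning_rate": [1e-4, 5e-4, 1e-3],
    "min_temperature": [0.1, 0.5, 1.0],
}


def random_search(
    space: Dict[str, Sequence],
    evaluate: Callable[[Dict[str, float]], float],
    n_trials: int,
    seed: int = 0,
) -> Tuple[Dict[str, float], float]:
    """Sample `n_trials` configurations uniformly and keep the best one."""
    rng = random.Random(seed)
    best_config: Dict[str, float] = {}
    best_score = float("-inf")
    for _ in range(n_trials):
        config = {name: rng.choice(list(values)) for name, values in space.items()}
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def dummy_evaluate(config: Dict[str, float]) -> float:
    """Placeholder objective; a real run would train and evaluate a model."""
    return -abs(config["learning_rate"] - 5e-4) - 1e-3 * abs(config["window_size"] - 3)


best_config, best_score = random_search(SEARCH_SPACE, dummy_evaluate, n_trials=32)
```

With 32 trials over a space of this size, only a fraction of the combinations is visited, which is the trade-off accepted in exchange for a tractable search.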

Table 1.

Searched hyperparameters. Notably, we employ the same minimal temperature constraint for teacher and student.

5. Results and Analysis

5.1. Main Results

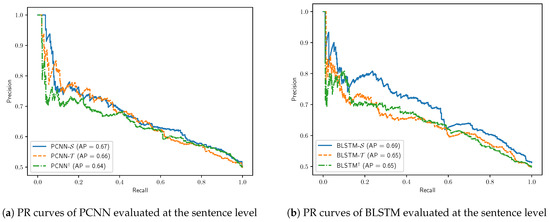

To obtain stable results, we average 10 micro-averaged F1 scores for each model and use the averages as the final scores for performance evaluation. The main results in Table 2 and the PR curves in Figure 5 indicate that our distillation framework is effective and robust. Although there is an inevitable performance gap between the DSGT and MAGT data, our distillation framework improves the performance on both.

Table 2.

The micro-averaged F1 scores calculated on the preprocessed NYT-H dataset. The results of models with a * superscript are taken directly from the original paper [40] and are calculated via the macro-averaged method; the results of models with a † superscript are our baselines, which are trained merely via the multi-instance learning method. Models carrying the teacher and student suffixes correspond to the teacher and student models in the distillation models, respectively.

Figure 5.

The PR curves of PCNN and BLSTM models evaluated at the sentence level, where the annotations in the legend are the same as the annotations in Table 2.

Our distillation models exceed the baselines, which are trained merely via the multi-instance learning method. Benefiting from label softening, the teacher models not only alleviate the interference of noisy distant supervision annotations, but also provide useful knowledge that enriches the annotations used by the student models. Our distillation models outperform the models evaluated in [40] by a large margin at both the sentence and bag levels; specifically, on the MAGT data, our best model improves the F1 score by 19.351 at the bag level and by 20.871 at the sentence level. Our distillation models also outperform the baselines that we trained ourselves; notably, they improve sentence-level prediction, where the best teacher and student models improve the F1 score by 1.879 and 2.508, respectively.

Comparing our teacher and student models, the student models improve sentence-level relation extraction considerably more than bag-level relation extraction. This is what we expected: the student models benefit from the Multi-Level Knowledge Distilling module, which uses the combination of the bag-level and sentence-level predictions of the teacher model as partial supervision to reduce the sentence-level noise induced by hard distant annotations. According to the results shown in Table 2, on the MAGT data our best student model improves the F1 score by 0.580 at the bag level and by 0.629 at the sentence level compared with the teacher models. Although the student models focus on sentence-level modeling, they benefit from noise reduction, so the bag-level performance also improves when the student models are used for bag-level evaluation. We conclude that our knowledge distillation framework improves both the bag-level and sentence-level prediction performance of distantly supervised relation extraction.

5.2. Ablation Study

We conduct additional experiments to determine the contributions of the different modules, namely the Adaptive Temperature Regulation (ATR) module and the Multi-Level Knowledge Distilling (MKD) module. For simplicity, we only train a distillation model based on PCNN instances with the experimentally optimal parameters shown in Table 1; we train the model for 10 epochs and evaluate the bag-level and sentence-level performance on the MAGT data. To clearly separate the contributions of the two modules, we conduct the ablation study of ATR on the PCNN teacher model and that of MKD on the PCNN student model. The ablation study results are shown in Table 3.

Table 3.

The micro-averaged F1 scores of the ablation study on the MAGT data. The ablation study of the PCNN teacher model focuses on how label softening works; namely, no label softening (no temp.), original label softening (fixed temp.), and entropy-based label softening (fixed anchor), obtained by fixing the anchor in Equation (3). The ablation study of the PCNN student model focuses on how the student model uses the predictions of the teacher as supervision; namely, only sentence predictions (only sent.), only bag predictions (only bag), and averaged sentence predictions within a bag (averaged sent.).

According to the results shown in Table 3, the ATR and MKD modules contribute to the improved performance of the teacher and student models, respectively. In particular, the ablation study of the PCNN teacher model shows that our ATR module improves the F1 scores. Compared with modeling on hard distant annotations, ATR improves the F1 score by 1.735 thanks to label softening. Moreover, owing to the adaptive temperature, ATR improves the F1 score on the sentence-level evaluation by 0.948 and 0.650 compared with common and entropy-based label softening, respectively. This indicates that ATR handles label softening better than these alternatives. The ablation study of the PCNN student model shows that our MKD module provides an effective way to make full use of the teacher’s knowledge, which improves the performance of the student model. Compared with the three alternative ways of using the teacher’s knowledge, MKD improves the F1 scores on the sentence-level evaluation by 1.820, 1.227, and 0.536. Because MKD takes both sentence-level and bag-level predictions into account, and adopts an attention mechanism to fuse the sentence-level predictions into the bag level, it provides an effective method for noise reduction.
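The supervision variants compared in the student ablation can be made concrete with a short sketch. The function below builds the soft targets given to each sentence of a bag under the three ablated settings. The fourth mode, `combined`, is only a simplified stand-in for multi-level supervision: it mixes the two levels with a fixed, hypothetical weight and does not reproduce the attention-based fusion used by MKD. All names and numbers are illustrative assumptions.

```python
from typing import List

Vector = List[float]


def average(vectors: List[Vector]) -> Vector:
    """Element-wise mean of equally sized probability vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def student_targets(sent_probs: List[Vector], bag_probs: Vector,
                    mode: str, bag_weight: float = 0.5) -> List[Vector]:
    """Return one soft target per sentence of a bag.

    sent_probs: teacher predictions for each sentence in the bag.
    bag_probs:  teacher prediction for the bag as a whole.
    """
    if mode == "only_sent":      # each sentence keeps its own teacher prediction
        return [list(p) for p in sent_probs]
    if mode == "only_bag":       # every sentence inherits the bag prediction
        return [list(bag_probs) for _ in sent_probs]
    if mode == "averaged_sent":  # every sentence gets the mean sentence prediction
        mean = average(sent_probs)
        return [list(mean) for _ in sent_probs]
    if mode == "combined":       # hypothetical fixed-weight mix of both levels
        return [[bag_weight * b + (1.0 - bag_weight) * s for s, b in zip(p, bag_probs)]
                for p in sent_probs]
    raise ValueError(f"unknown mode: {mode}")


# Hypothetical teacher outputs for a bag of three sentences and two relations.
sent_probs = [[0.9, 0.1], [0.8, 0.2], [0.2, 0.8]]
bag_probs = [0.85, 0.15]
targets = {m: student_targets(sent_probs, bag_probs, m)
           for m in ("only_sent", "only_bag", "averaged_sent", "combined")}
```

In this toy bag the third sentence disagrees with the bag-level prediction; the bag-only and averaged variants erase that disagreement, whereas a mix of both levels keeps part of the sentence-specific signal.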

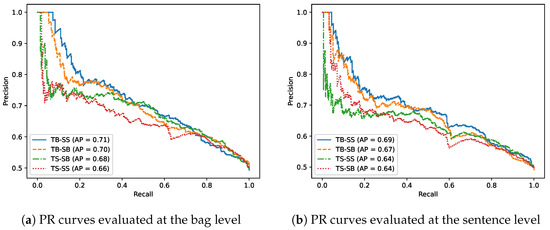

To further examine the contribution of the multi-level training in MKD, we additionally conduct experiments on how training the teacher and student models at the bag or sentence level affects the performance. More specifically, we train the teacher and the student models at both the sentence and bag levels, and the PR curves of the four resulting student models, evaluated at the bag and sentence levels, are shown in Figure 6. The PR curves indicate that our multi-level distillation method, which trains the teacher at the bag level and the student at the sentence level, is a reasonable choice. According to both the bag-level PR curves in Figure 6a and the sentence-level PR curves in Figure 6b, training sentence-level models on a distantly supervised dataset without additional knowledge for handling noisy annotations is risky, because it violates the assumption of distantly supervised learning. Namely, not all instances in a bag express the annotation of the bag, so directly training models at the sentence level with bag annotations is infeasible. Therefore, a teacher trained at the sentence level would convey incorrect knowledge to the student and harm the student's performance. Nevertheless, training the student at the sentence level is reasonable: in this case, the knowledge of the teacher effectively mitigates the effects of noisy annotations, and sentence-level training substantially improves the sentence-level performance.

Figure 6.

The PR curves of the student models evaluated at the bag and sentence levels, where TB-SS indicates that the teacher is trained at the bag level and the student is trained at the sentence level, and likewise for the other combinations.

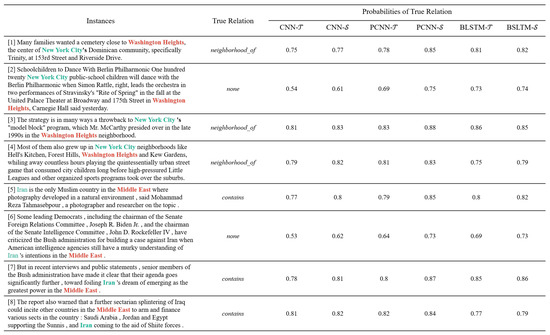

5.3. Case Study

The predictions for manually annotated bags are shown in Figure 7; we use these examples to illustrate how our distillation model works and how the teacher and student models differ. We list the predictions of the three distillation models; the figure shows that the student models usually perform better than the teacher models and that their predictions are more confident. More specifically, we use two bags, each containing four sentences. In the first bag, all four sentences contain the entity mentions ‘Washington Heights’ and ‘New York City’. The manual annotation of the bag is neighborhood_of; three sentences express this relation, but one sentence does not. The four sentences in the second bag mention the entities ‘Middle East’ and ‘Iran’, and the manual annotation of the bag is contains. Similarly, three sentences express the relation, while one sentence does not. Comparing the predictions of the teacher and the student, we find that, benefiting from the knowledge of the teacher model, the student model increases the probability of the true relation for positive instances and decreases it for negative instances. As a consequence, the student model makes more correct and more confident predictions at both the bag and sentence levels, which supports the effectiveness of our distillation framework.

Figure 7.

There are two types of entity combinations in the cases for illustrating predictions of our method. The first type consists of ‘Washington Heights’ and ‘New York City’, while the second type comprises ‘Middle East’ and ‘Iran’. The head entity and tail entity are the first and second entities in each combination, respectively. The true relations between these entities are manually annotated.

6. Conclusions

6.1. Theoretical and Practical Implications

Relation extraction (RE) is receiving increased attention and plays an important role in research on information extraction, but most supervised RE methods rely on manually annotated clean data, which is labor-intensive to produce. Ref. [1] proposed distant supervision to automatically annotate large-scale datasets and thereby reduce the cost of data annotation; however, it inevitably introduced a noisy-annotation problem. Furthermore, the multi-instance learning method is mainly adopted to model DS data; however, because it is trained at the bag level, its instance-level predictions cannot be expected to work as well as its bag-level predictions. Our distillation framework is proposed to deal with the noisy annotations and to improve the performance of instance-level predictions. In particular, we employ a novel knowledge distillation framework with Adaptive Temperature Regulation to reduce the effects of noisy annotations, and we leverage a Multi-Level Knowledge Distilling method to train an instance-level model that is additionally supervised by bag-level and instance-level knowledge, which improves the performance of the predictions at the instance level.

6.2. Summary and Future Work

In this work, we introduced a multi-level knowledge distillation method with adaptive temperature for distantly supervised relation extraction (DSRE). The distillation framework contains two parts: the Adaptive Temperature Regulation (ATR) module and the Multi-Level Knowledge Distilling (MKD) module. The ATR module takes the differences between instances into account to provide adaptive label softening for noise reduction. The MKD module distills multi-level knowledge into instance-level training to improve the performance of predictions at the instance level. According to the results shown in Table 2, we conclude that our model-agnostic distillation method is effective in improving the performance at both the bag and instance levels, and we expect it to reach better performance if equipped with SOTA language-understanding models.

Although our distillation framework achieved an acceptable improvement in evaluation performance, there is an inevitable performance gap between the DSGT and MAGT data; this means that there is still room for improvement in modeling distantly supervised datasets. There are a few drawbacks that we should address in future work. Firstly, a larger corpus would be preferable. According to the results shown in Figure 4, most bags in the training data contain only one sentence, which to some extent violates the assumption and intention of distantly supervised learning. Furthermore, other kinds of knowledge and other distillation algorithms are promising. Our distillation framework is a common offline teacher–student architecture based on response-based knowledge. However, feature-based knowledge [41] from the intermediate layers is a good supplement to response-based knowledge and could provide more useful information for knowledge transfer. In addition, exploring more distillation algorithms is reasonable. For instance, a multi-teacher distillation framework is promising, since different teachers can each provide their own knowledge to a student, and the individual or combined knowledge can be used for distillation. Online distillation [42] is also suggested as a way to enhance the performance of the student model, particularly in cases where a high-performance teacher model with a large capacity is unavailable.

Author Contributions

Conceptualization, Z.Y. and J.L.; methodology, Z.Y.; software, Z.Y.; validation, Z.Y., J.L. and W.H.; formal analysis, Z.Y.; investigation, Z.Y.; resources, Z.Y.; data curation, Z.Y.; writing—original draft preparation, Z.Y. and J.L.; writing—review and editing, J.L., W.H. and Y.H.; visualization, Z.Y.; supervision, J.L., W.H. and Y.H.; project administration, J.L., W.H. and Y.H.; funding acquisition, J.L., W.H. and Y.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Scientific Research Fund of Hunan Provincial Education Department under Grant No. 21B0462, and in part by the Natural Science Foundation of Hunan Province of China under Grant No. 2024JJ6221, and in part by the Joint Funds of the National Natural Science Foundation of China under Grant No. U2003208.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing does not apply to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Mintz, M.; Bills, S.; Snow, R.; Jurafsky, D. Distant supervision for relation extraction without labeled data. In Proceedings of the Joint Conference of the 47th Annual Meeting of the ACL and the 4th International Joint Conference on Natural Language Processing of the AFNLP, Suntec, Singapore, 2 July–7 August 2009; pp. 1003–1011.

2. Auer, S.; Bizer, C.; Kobilarov, G.; Lehmann, J.; Cyganiak, R.; Ives, Z. Dbpedia: A nucleus for a web of open data. In The Semantic Web; Springer: Berlin/Heidelberg, Germany, 2007; pp. 722–735.

3. Bollacker, K.; Evans, C.; Paritosh, P.; Sturge, T.; Taylor, J. Freebase: A collaboratively created graph database for structuring human knowledge. In Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data, Vancouver, BC, Canada, 10–12 June 2008; pp. 1247–1250.

4. Jiang, H.; Cui, L.; Xu, Z.; Yang, D.; Chen, J.; Li, C.; Liu, J.; Liang, J.; Wang, C.; Xiao, Y.; et al. Relation Extraction Using Supervision from Topic Knowledge of Relation Labels. In Proceedings of the IJCAI, Macao, China, 10–16 August 2019; pp. 5024–5030.

5. Zhang, N.; Deng, S.; Sun, Z.; Wang, G.; Chen, X.; Zhang, W.; Chen, H. Long-tail Relation Extraction via Knowledge Graph Embeddings and Graph Convolution Networks. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 3016–3025.

6. Li, Y.; Long, G.; Shen, T.; Zhou, T.; Yao, L.; Huo, H.; Jiang, J. Self-attention enhanced selective gate with entity-aware embedding for distantly supervised relation extraction. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 8269–8276.

7. Lin, X.; Liu, T.; Jia, W.; Gong, Z. Distantly Supervised Relation Extraction using Multi-Layer Revision Network and Confidence-based Multi-Instance Learning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Online, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 165–174.

8. Riedel, S.; Yao, L.; McCallum, A. Modeling relations and their mentions without labeled text. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Barcelona, Spain, 20–24 September 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 148–163.

9. Wu, Y.; Bamman, D.; Russell, S. Adversarial training for relation extraction. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 9–11 September 2017; pp. 1778–1783.

10. Feng, J.; Huang, M.; Zhao, L.; Yang, Y.; Zhu, X. Reinforcement learning for relation classification from noisy data. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

11. He, Z.; Chen, W.; Wang, Y.; Zhang, W.; Wang, G.; Zhang, M. Improving neural relation extraction with positive and unlabeled learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 7927–7934.

12. Chen, J.; Guo, Z.; Yang, J. Distant Supervision for Relation Extraction via Noise Filtering. In Proceedings of the 2021 13th International Conference on Machine Learning and Computing, Shenzhen, China, 26 February–1 March 2021; pp. 361–367.

13. Shang, Y.; Huang, H.Y.; Mao, X.L.; Sun, X.; Wei, W. Are noisy sentences useless for distant supervised relation extraction? In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 8799–8806.

14. Tang, S.; Zhang, J.; Zhang, N.; Wu, F.; Xiao, J.; Zhuang, Y. ENCORE: External neural constraints regularized distant supervision for relation extraction. In Proceedings of the 40th International ACM SIGIR Conference on Research and Development in Information Retrieval, Tokyo, Japan, 7–11 August 2017; pp. 1113–1116.

15. Lei, K.; Chen, D.; Li, Y.; Du, N.; Yang, M.; Fan, W.; Shen, Y. Cooperative denoising for distantly supervised relation extraction. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 426–436.

16. Li, R.; Yang, C.; Li, T.; Su, S. MiDTD: A Simple and Effective Distillation Framework for Distantly Supervised Relation Extraction. ACM Trans. Inf. Syst. (TOIS) 2022, 40, 1–32.

17. Zeng, D.; Liu, K.; Chen, Y.; Zhao, J. Distant Supervision for Relation Extraction via Piecewise Convolutional Neural Networks. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1753–1762.

18. Shi, G.; Feng, C.; Huang, L.; Zhang, B.; Ji, H.; Liao, L.; Huang, H. Genre Separation Network with Adversarial Training for Cross-genre Relation Extraction. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 1018–1023.

19. Zeng, X.; He, S.; Liu, K.; Zhao, J. Large scaled relation extraction with reinforcement learning. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

20. Zhao, H.; Li, R.; Li, X.; Tan, H. CFSRE: Context-aware based on frame-semantics for distantly supervised relation extraction. Knowl.-Based Syst. 2020, 210, 106480.

21. Liu, T.; Wang, K.; Chang, B.; Sui, Z. A soft-label method for noise-tolerant distantly supervised relation extraction. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 1790–1795.

22. Wu, S.; Fan, K.; Zhang, Q. Improving distantly supervised relation extraction with neural noise converter and conditional optimal selector. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 7273–7280.

23. Ye, Z.X.; Ling, Z.H. Distant Supervision Relation Extraction with Intra-Bag and Inter-Bag Attentions. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 2810–2819.

24. Yuan, Y.; Liu, L.; Tang, S.; Zhang, Z.; Zhuang, Y.; Pu, S.; Wu, F.; Ren, X. Cross-relation cross-bag attention for distantly-supervised relation extraction. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 419–426.

25. Wang, J.; Liu, Q. Distant supervised relation extraction with position feature attention and selective bag attention. Neurocomputing 2021, 461, 552–561.

26. Shen, S.; Duan, S.; Gao, H.; Qi, G. Improved distant supervision relation extraction based on edge-reasoning hybrid graph model. J. Web Semant. 2021, 70, 100656.

27. Huang, W.; Mao, Y.; Yang, L.; Yang, Z.; Long, J. Local-to-global GCN with knowledge-aware representation for distantly supervised relation extraction. Knowl.-Based Syst. 2021, 234, 107565.

28. Zhou, Q.; Zhang, Y.; Ji, D. Distantly supervised relation extraction with KB-enhanced reconstructed latent iterative graph networks. Knowl.-Based Syst. 2022, 260, 110108.

29. Alt, C.; Hübner, M.; Hennig, L. Fine-tuning pre-trained transformer language models to distantly supervised relation extraction. arXiv 2019, arXiv:1906.08646.

30. Peng, T.; Han, R.; Cui, H.; Yue, L.; Han, J.; Liu, L. Distantly supervised relation extraction using global hierarchy embeddings and local probability constraints. Knowl.-Based Syst. 2022, 235, 107637.

31. Gou, Y.; Lei, Y.; Liu, L.; Zhang, P.; Peng, X. A dynamic parameter enhanced network for distant supervised relation extraction. Knowl.-Based Syst. 2020, 197, 105912.

32. Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

33. Kang, M.; Kang, S. Data-free knowledge distillation in neural networks for regression. Expert Syst. Appl. 2021, 175, 114813.

34. Jose, A.; Shetty, S.D. DistilledCTR: Accurate and scalable CTR prediction model through model distillation. Expert Syst. Appl. 2022, 193, 116474.

35. Li, Y.; Yang, J.; Song, Y.; Cao, L.; Luo, J.; Li, L.J. Learning from noisy labels with distillation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1910–1918.

36. Sarfraz, F.; Arani, E.; Zonooz, B. Knowledge distillation beyond model compression. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 6136–6143.

37. Helong, Z.; Liangchen, S.; Jiajie, C.; Ye, Z.; Guoli, W.; Junsong, Y.; Zhang, Q. Rethinking soft labels for knowledge distillation: A bias-variance tradeoff perspective. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual Event, Austria, 3–7 May 2021.

38. Furlanello, T.; Lipton, Z.; Tschannen, M.; Itti, L.; Anandkumar, A. Born again neural networks. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; PMLR: New York, NY, USA, 2018; pp. 1607–1616.

39. Pennington, J.; Socher, R.; Manning, C.D. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

40. Zhu, T.; Wang, H.; Yu, J.; Zhou, X.; Chen, W.; Zhang, W.; Zhang, M. Towards Accurate and Consistent Evaluation: A Dataset for Distantly-Supervised Relation Extraction. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 6436–6447.

41. Sepahvand, M.; Abdali-Mohammadi, F.; Taherkordi, A. Teacher–student knowledge distillation based on decomposed deep feature representation for intelligent mobile applications. Expert Syst. Appl. 2022, 202, 117474.

42. Tzelepi, M.; Passalis, N.; Tefas, A. Online subclass knowledge distillation. Expert Syst. Appl. 2021, 181, 115132.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).