A Comparison of Bias Mitigation Techniques for Educational Classification Tasks Using Supervised Machine Learning

Abstract

1. Introduction

2. Literature Review

2.1. Fairness in Machine Learning: Definition, Causes of Bias, and Dilemmas

2.1.1. Definition

2.1.2. Causes of Bias

2.1.3. Dilemmas

2.2. Potential Harms and Consequences from Lack of Fairness in ML

2.3. The Fairness Module in DALEX

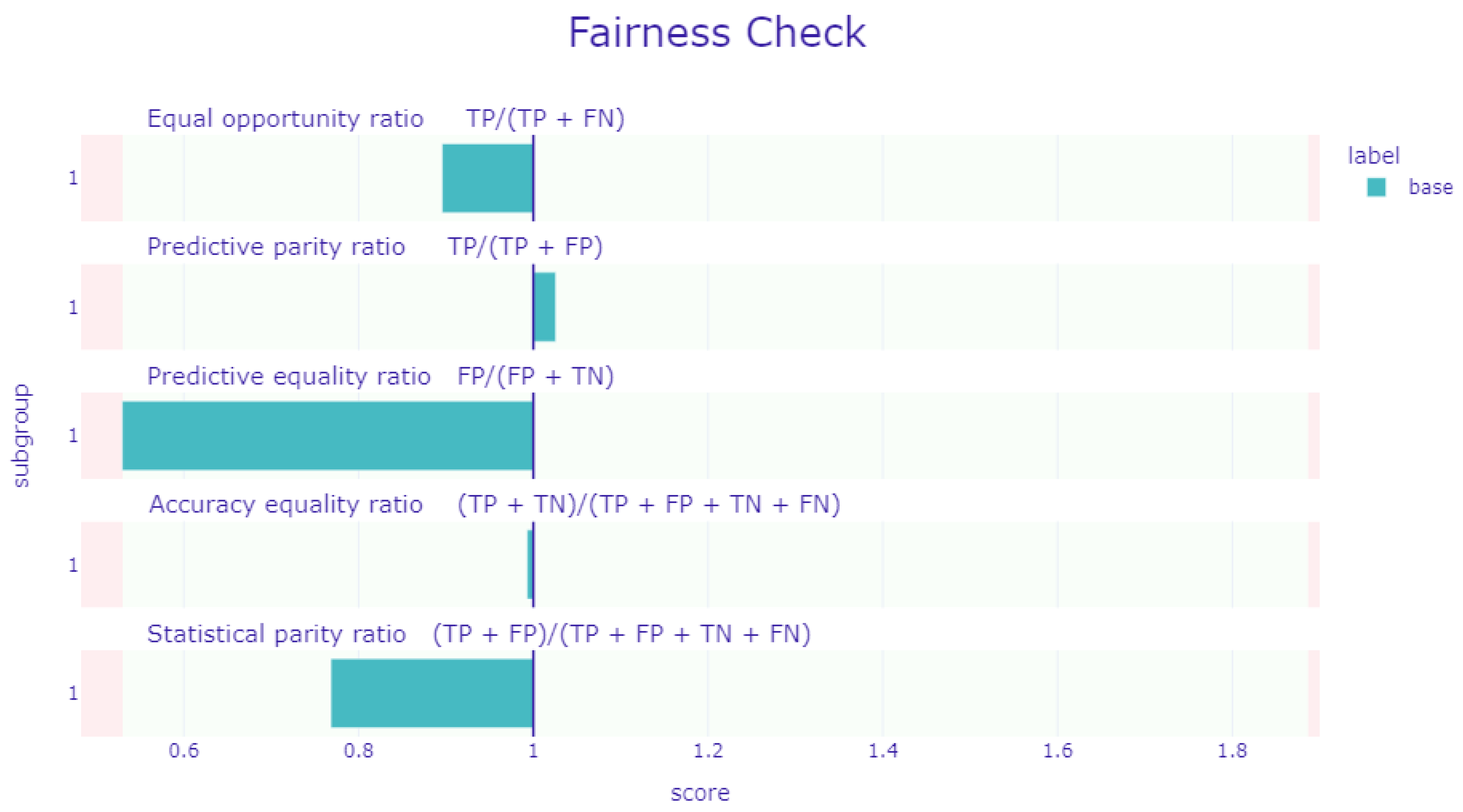

2.3.1. Fairness Check

2.3.2. Bias Mitigation Techniques

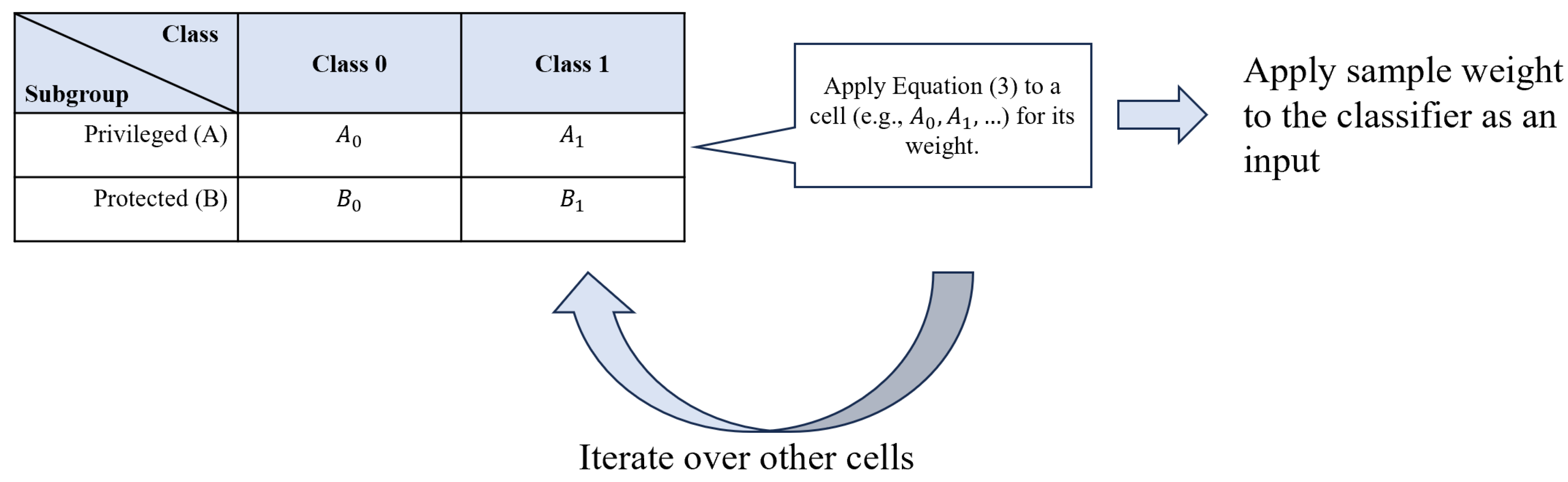

Reweighting

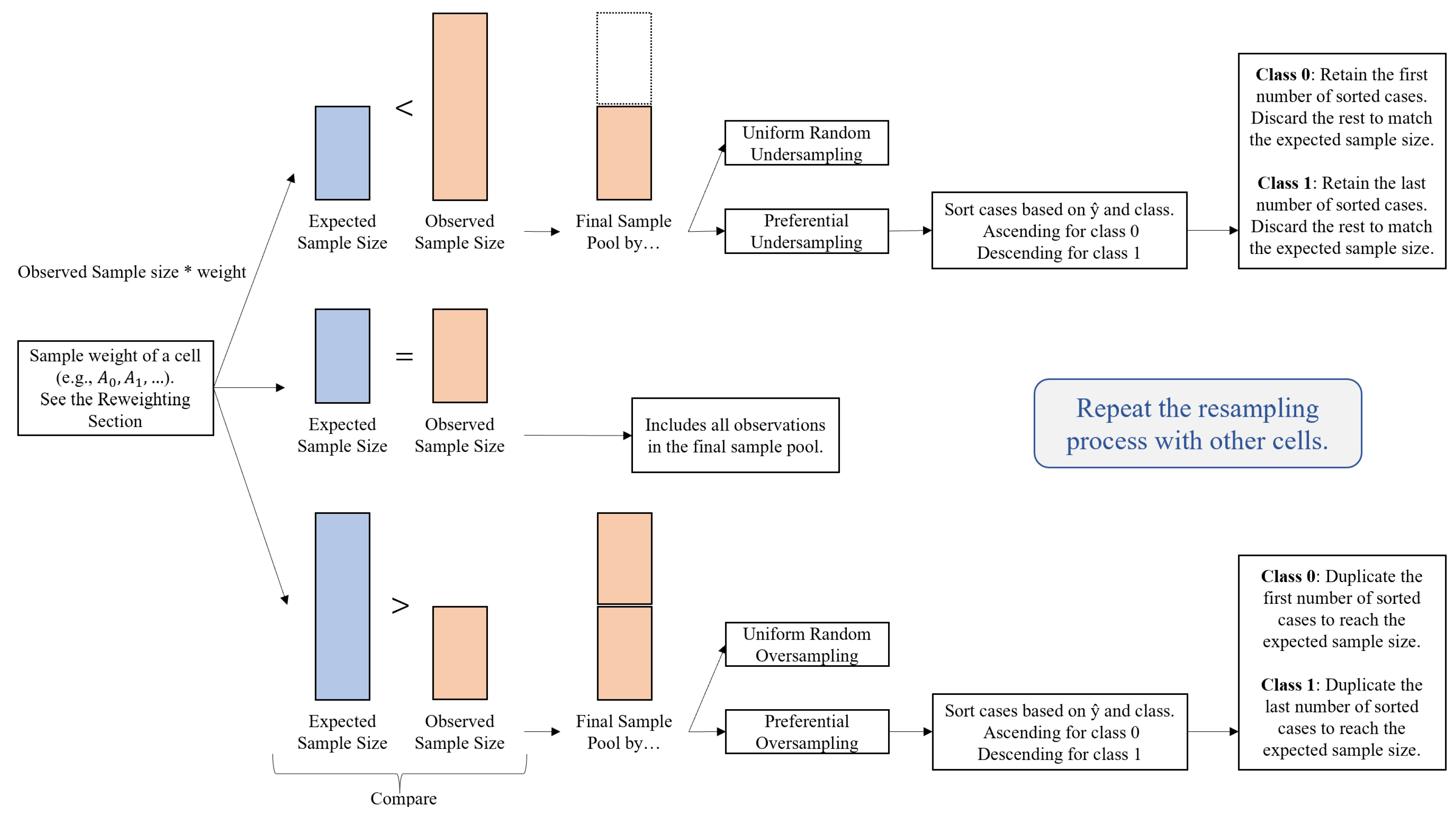

Resampling

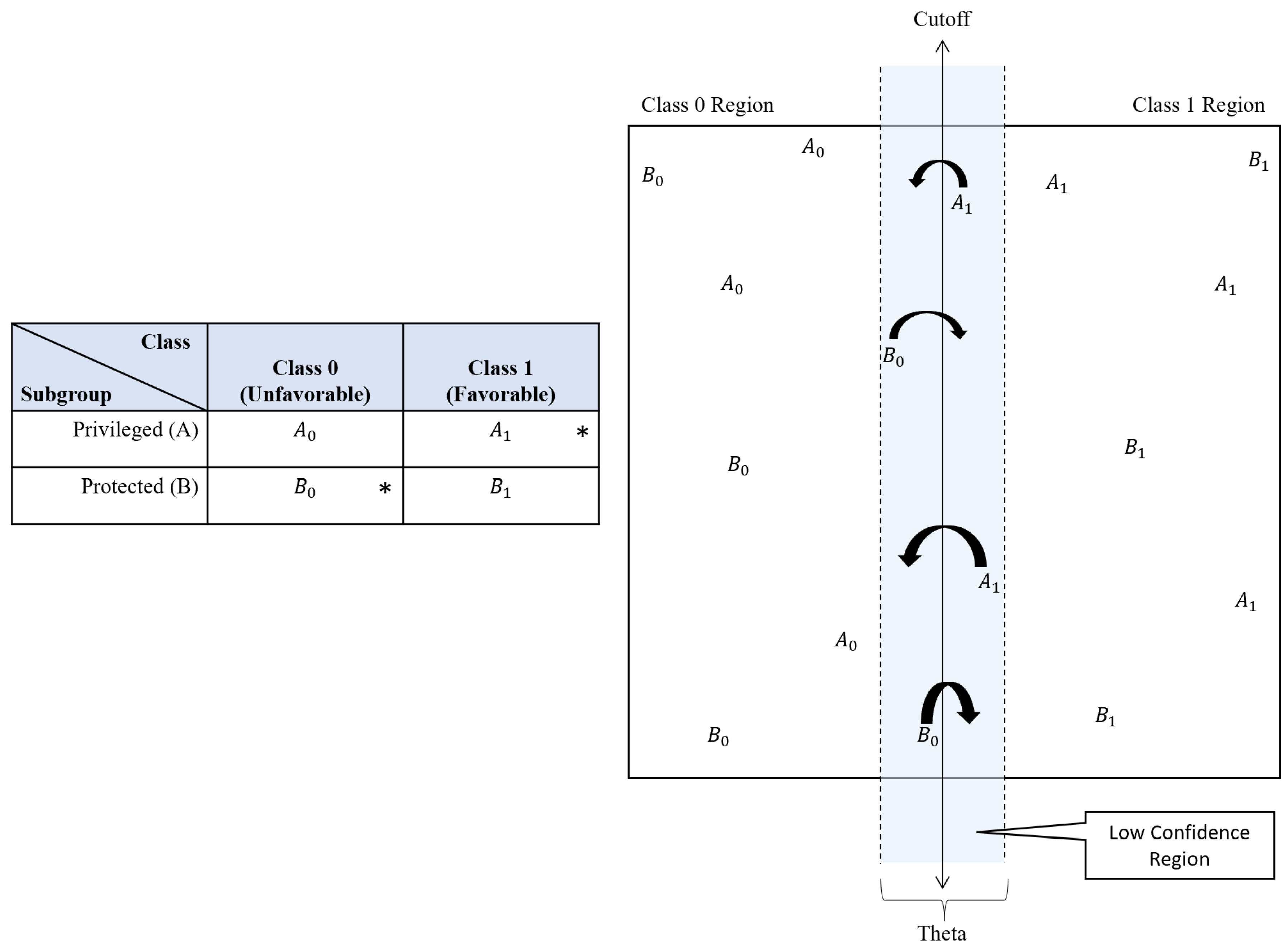

ROC Pivot

3. Present Study

4. Methods

4.1. Dataset and Data Preprocessing

4.2. Classification Model

4.3. Bias Detection and Mitigation

5. Results

5.1. Classification Results

5.2. Bias Detection and Mitigation

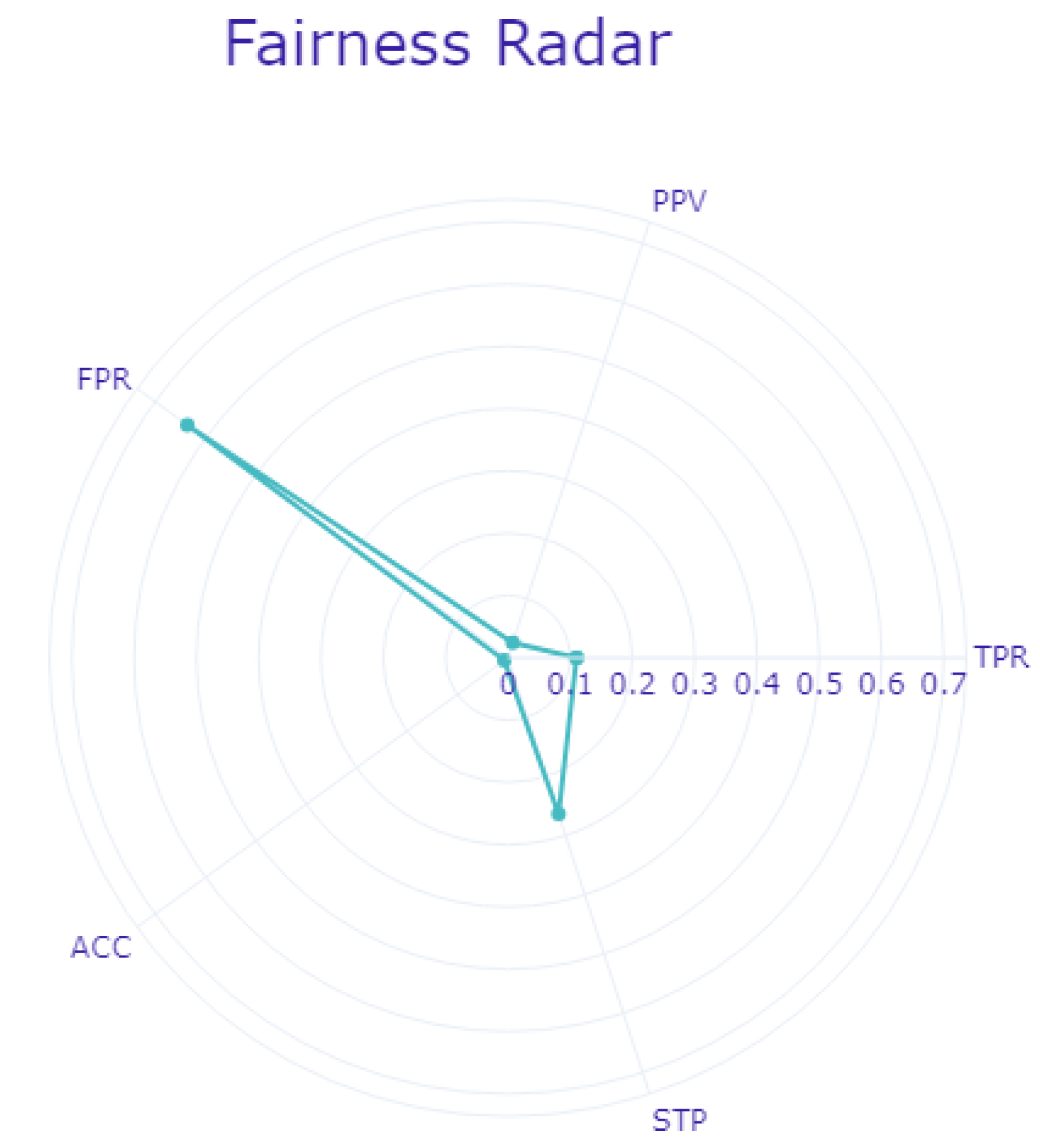

5.2.1. Bias Detection Results

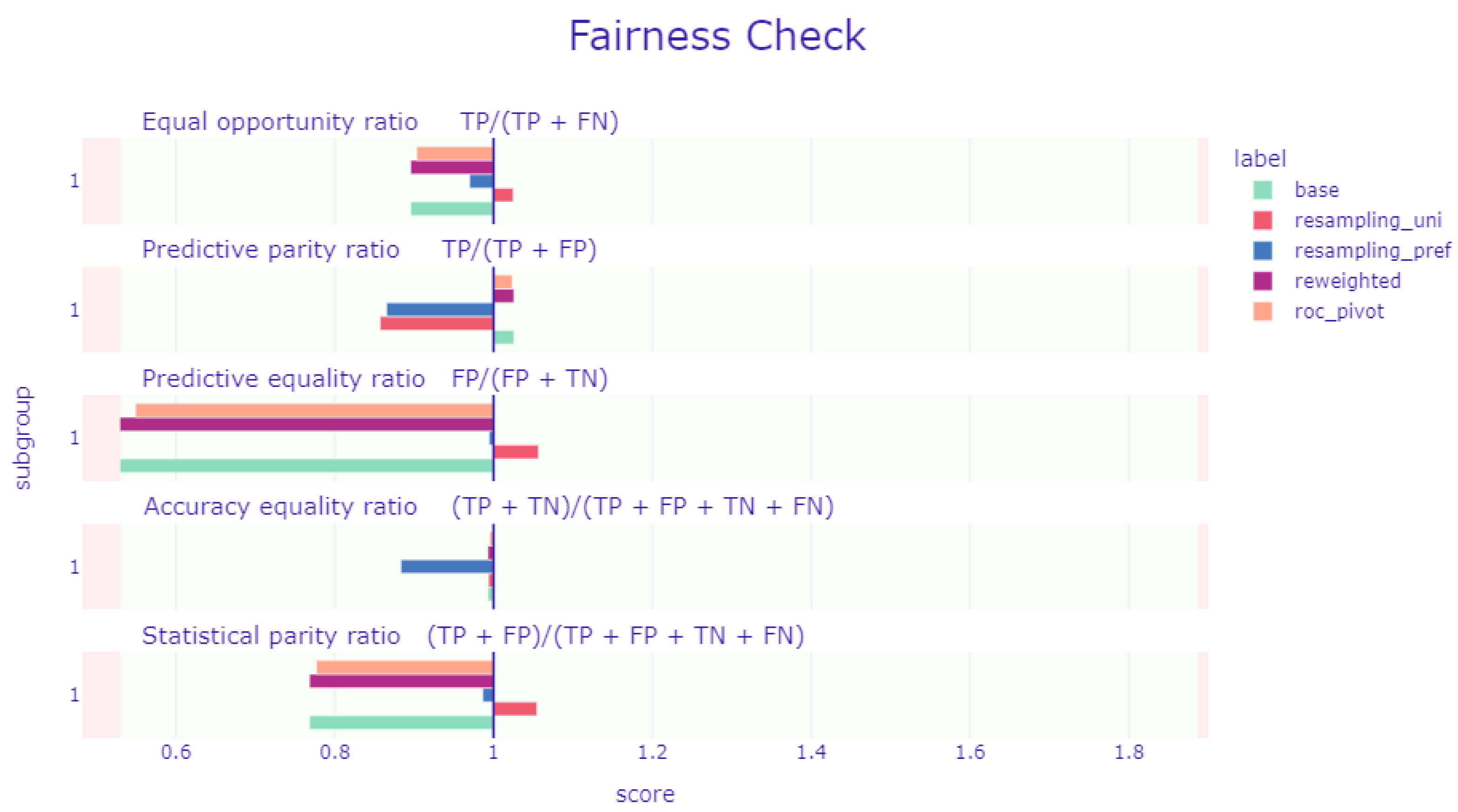

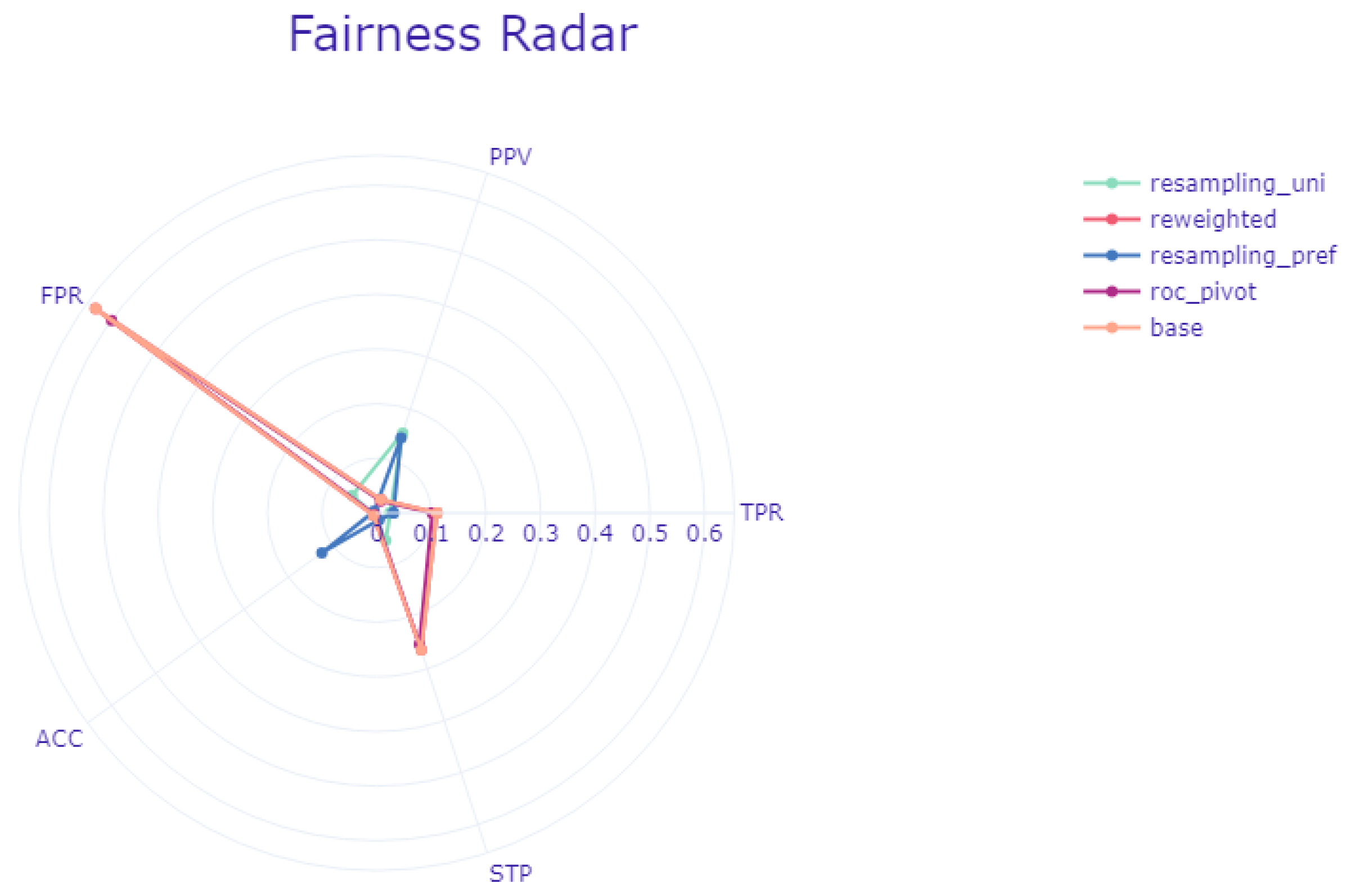

5.2.2. Bias Mitigation Results

5.2.3. Performance Comparison between Bias Mitigation Conditions

6. Discussion and Conclusions

Limitations and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ACC | Accuracy |
| AUC | Area Under Curve |
| COMPAS | Correctional Offender Management Profiling for Alternative Sanctions |
| DALEX | Model Agnostic Language for Exploration and Explanation |
| FPR | False Positive Rate |
| HSLS:09 | High School Longitudinal Study of 2009 |
| ML | Machine Learning |
| PPV | Positive Predictive Value |
| ROC | Reject Option-based Classification |
| RUS | Random Undersampling |
| SD | Standard Deviation |
| SES | Socio-economic Status |
| SMOTE-NC | Synthetic Minority Oversampling Technique for nominal and continuous data |
| STP | Statistical Parity |
| TPR | True Positive Rate |

Appendix A. List of Utilized Variables

| Type | Variable Code | Variable Name |
|---|---|---|
| Continuous | X1SES | Students’ socio-economic status composite score |
| Continuous | X1MTHEFF | Students’ mathematics self-efficacy |
| Continuous | X1MTHINT | Students’ interest in fall 2009 math course |
| Continuous | X1SCIUTI | Students’ perception of science utility |
| Continuous | X1SCIEFF | Students’ science self-efficacy |
| Continuous | X1SCIINT | Students’ interest in fall 2009 science course |
| Continuous | X1SCHOOLBEL | Students’ sense of school belonging |
| Continuous | X1SCHOOLENG | Students’ school engagement |
| Continuous | X1SCHOOLCLI | Scale of school climate assessment |
| Continuous | X1COUPERTEA | Scale of counselor’s perceptions of teacher’s expectations |
| Continuous | X1COUPERCOU | Scale of counselor’s perceptions of counselor’s expectations |
| Continuous | X1COUPERPRI | Scale of counselor’s perceptions of principal’s expectations |
| Continuous | X3TGPA9TH | Students’ GPA in ninth grade |
| Categorical | S1HROTHHOMWK | Hours spent on homework/studying on typical school day |
| Categorical | X1MOMEDU | Mother’s/female guardian’s highest level of education |
| Categorical | X1DADEDU | Father’s/male guardian’s highest level of education |
| Categorical | X1SEX | What is [your 9th grader name]’s sex? |


| Method | Advantages | Disadvantages |
|---|---|---|
| Reweighting | Involves merely adding weights to the machine learning algorithm. Minimal changes to the existing ML workflow. | Different ML algorithms require different arguments to apply the technique. |
| Resampling | Can be applied consistently across different machine learning algorithms. | Potential data representativeness issue due to dataset alteration. |
| ROC Pivot | Does not alter the algorithm or the dataset, only the results. | There are no predefined guidelines for selecting the low-confidence region. |
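The ROC Pivot row describes a post-processing step that touches only the model's output. A minimal sketch of the idea follows, under stated assumptions: scores are oriented so that values above `cutoff` mean the favorable outcome, and `theta` is the half-width of the low-confidence region. The function name and the toy scores are hypothetical; this is not the dalex implementation, only an illustration of how scores near the cutoff might be mirrored across it.

```python
def roc_pivot_sketch(probs, is_privileged, cutoff=0.5, theta=0.05):
    """Mirror low-confidence scores across the cutoff (illustrative only).

    Assumption: a score above `cutoff` is the favorable outcome.
    Only scores within `theta` of the cutoff are changed.
    """
    adjusted = []
    for p, privileged in zip(probs, is_privileged):
        near_cutoff = abs(p - cutoff) < theta
        if near_cutoff and privileged and p > cutoff:
            # Privileged and narrowly favorable: move just below the cutoff.
            p = cutoff - (p - cutoff)
        elif near_cutoff and not privileged and p < cutoff:
            # Unprivileged and narrowly unfavorable: move just above the cutoff.
            p = cutoff + (cutoff - p)
        adjusted.append(p)
    return adjusted


# Hypothetical scores: only the two borderline cases should change side.
scores = [0.52, 0.48, 0.52, 0.48, 0.90]
privileged_flags = [True, True, False, False, True]
pivoted = roc_pivot_sketch(scores, privileged_flags)
print([round(p, 2) for p in pivoted])
```

The choice of `theta` is exactly the open question the table lists as a disadvantage: a wider region changes more predictions, and nothing in this sketch suggests a principled value.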

| Condition | TPR | ACC | PPV | FPR | STP |
|---|---|---|---|---|---|
| Baseline | 0.895 | 0.992 | 1.025 | 0.529 | 0.768 |
| Reweighting | 0.895 | 0.992 | 1.025 | 0.529 | 0.768 |
| Uniform Resampling * | 1.024 | 0.993 | 0.857 | 1.056 | 1.054 |
| Preferential Resampling * | 0.969 | 0.883 | 0.865 | 0.994 | 0.986 |
| ROC Pivot * | 0.903 | 0.995 | 1.023 | 0.549 | 0.776 |
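If the values in this table are read as between-group metric ratios, they can be screened with a four-fifths style rule, in which a ratio outside the interval (epsilon, 1/epsilon) is flagged. The sketch below hard-codes the table's numbers and assumes epsilon = 0.8; the function name is hypothetical and the reading of the table as ratios is an assumption, since the table carries no caption here.

```python
# Values copied from the table above; interpreted as group-metric ratios (assumption).
RATIOS = {
    "Baseline": {"TPR": 0.895, "ACC": 0.992, "PPV": 1.025, "FPR": 0.529, "STP": 0.768},
    "Reweighting": {"TPR": 0.895, "ACC": 0.992, "PPV": 1.025, "FPR": 0.529, "STP": 0.768},
    "Uniform Resampling": {"TPR": 1.024, "ACC": 0.993, "PPV": 0.857, "FPR": 1.056, "STP": 1.054},
    "Preferential Resampling": {"TPR": 0.969, "ACC": 0.883, "PPV": 0.865, "FPR": 0.994, "STP": 0.986},
    "ROC Pivot": {"TPR": 0.903, "ACC": 0.995, "PPV": 1.023, "FPR": 0.549, "STP": 0.776},
}


def flag_metrics(ratios, epsilon=0.8):
    """Return the metric names whose ratio lies outside (epsilon, 1/epsilon)."""
    lower, upper = epsilon, 1.0 / epsilon
    return sorted(name for name, value in ratios.items() if not lower < value < upper)


flags = {condition: flag_metrics(values) for condition, values in RATIOS.items()}
for condition, flagged in flags.items():
    print(f"{condition}: {flagged if flagged else 'no metric flagged'}")
```

Under these assumptions, FPR and STP are flagged for the Baseline, Reweighting, and ROC Pivot rows, while neither resampling row has a flagged metric.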

| Condition | TPR | ACC | PPV | FPR | STP |
|---|---|---|---|---|---|
| Baseline | 0.110 | 0.007 | 0.025 | 0.636 | 0.264 |
| Reweighting | 0.110 | 0.007 | 0.025 | 0.636 | 0.264 |
| Uniform Resampling * | 0.024 | 0.007 | 0.154 | 0.055 | 0.053 |
| Preferential Resampling * | 0.031 | 0.124 | 0.145 | 0.006 | 0.014 |
| ROC Pivot * | 0.102 | 0.005 | 0.023 | 0.600 | 0.253 |
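The values in this table appear to equal the absolute natural logarithm of the corresponding values in the preceding table, which is how a parity loss is commonly defined when there is a single non-privileged group. The sketch below checks that relationship on the reported numbers. The relationship is an inference from the figures, not a statement taken from the text, and the tolerance only accounts for the three-decimal rounding of both tables.

```python
import math

METRICS = ["TPR", "ACC", "PPV", "FPR", "STP"]

# Rows copied from the two tables: (values from the first table, values from this table).
ROWS = {
    "Baseline": ([0.895, 0.992, 1.025, 0.529, 0.768], [0.110, 0.007, 0.025, 0.636, 0.264]),
    "Reweighting": ([0.895, 0.992, 1.025, 0.529, 0.768], [0.110, 0.007, 0.025, 0.636, 0.264]),
    "Uniform Resampling": ([1.024, 0.993, 0.857, 1.056, 1.054], [0.024, 0.007, 0.154, 0.055, 0.053]),
    "Preferential Resampling": ([0.969, 0.883, 0.865, 0.994, 0.986], [0.031, 0.124, 0.145, 0.006, 0.014]),
    "ROC Pivot": ([0.903, 0.995, 1.023, 0.549, 0.776], [0.102, 0.005, 0.023, 0.600, 0.253]),
}


def parity_loss(ratio):
    """Absolute log-ratio: 0 when the two groups have identical metric values."""
    return abs(math.log(ratio))


# Largest gap between |ln(ratio)| and the reported loss, over every cell.
max_gap = max(
    abs(parity_loss(ratio) - loss)
    for ratios, losses in ROWS.values()
    for ratio, loss in zip(ratios, losses)
)
print(f"largest discrepancy across all cells: {max_gap:.4f}")
```

A loss of 0 corresponds to a ratio of exactly 1, so smaller values in this table indicate closer agreement between groups on that metric.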

| Sex (Code) | Predicted Class (Code) | Sample Weight |
|---|---|---|
| Female (1) | Non-dropout (0) | 0.9942 |
| Male (0) | Dropout (1) | 1.0057 |
| Female (1) | Dropout (1) | 0.9937 |
| Male (0) | Non-dropout (0) | 1.0065 |
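Weights of this kind are typically derived with the reweighing scheme of Kamiran and Calders, where each (group, class) cell receives the weight P(group) · P(class) / P(group, class). The sketch below computes such weights on a small hypothetical sample. The data and the function name are illustrative assumptions, and the code is not claimed to reproduce the table's values or the exact routine used to produce them.

```python
from collections import Counter


def reweighting_weights(groups, labels):
    """Weight per (group, class) cell: expected count under independence / observed count."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, c): (group_counts[g] * label_counts[c]) / (n * joint_counts[(g, c)])
        for (g, c) in joint_counts
    }


# Hypothetical toy sample: sex coded 1 = female, 0 = male; class 1 = dropout.
sex = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
dropout = [0, 0, 0, 0, 1, 0, 0, 0, 1, 1]

weights = reweighting_weights(sex, dropout)
sample_weight = [weights[(g, c)] for g, c in zip(sex, dropout)]

for cell, w in sorted(weights.items()):
    print(cell, round(w, 4))
```

The per-row `sample_weight` list would then be handed to the learner through whatever argument it exposes for observation weights; many scikit-learn estimators accept `sample_weight` in `fit`, for example. Weights as close to 1 as those in the table would be expected to change training very little.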

| Condition | FPR | Accuracy | F1 Score |
|---|---|---|---|
| Baseline | 0.636 | 0.841 | 0.826 |
| Reweighting | 0.636 | 0.841 | 0.826 |
| Uniform Resampling * | 0.055 | 0.459 | 0.428 |
| Preferential Resampling * | 0.006 | 0.469 | 0.613 |
| ROC Pivot * | 0.600 | 0.842 | 0.827 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wongvorachan, T.; Bulut, O.; Liu, J.X.; Mazzullo, E. A Comparison of Bias Mitigation Techniques for Educational Classification Tasks Using Supervised Machine Learning. Information 2024, 15, 326. https://doi.org/10.3390/info15060326
