1. Introduction

The advent of advanced large language models (LLMs), particularly the Generative Pre-trained Transformer (GPT) family exemplified by GPT-3, has profoundly influenced the landscape of academic writing. These technologies have demonstrated considerable utility in scholarly work, supporting idea generation, drafting, and overcoming writer’s block [1, 2, 3]. However, a comprehensive understanding of their implications in the academic context requires acknowledging the interplay between their benefits and limitations, as evidenced by scholarly investigations [4, 5]. The scholarly discourse on GPT and LLMs reveals a dichotomy wherein their application in academic writing is accompanied by notable advantages and inherent challenges [1, 2, 3]. Noteworthy studies examine the dynamics of human–machine interaction, emphasizing the need to integrate AI tools judiciously into writing practices [4]. Furthermore, recent contributions extend the conversation to copywriting, describing the impact of AI on diverse professional roles and creative processes [5]. Thus, while these technologies offer promising prospects for enhancing research writing, their conscientious and responsible use is paramount.

The primary challenges identified in recent scholarship on the use of GPT and LLMs in research writing converge on accuracy, potential biases, and ethical considerations [6]. Addressing these challenges requires a concerted effort to establish ethical guidelines and norms, ensuring the judicious use of LLMs in research [6]. The academic discourse underscores the significance of upholding scientific rigor and transparency, particularly in light of the potential biases embedded in LLM outputs [3, 4, 5]. The studies in [7, 8, 9] collectively suggest that while LLMs offer innovative tools for research writing, their use must be accompanied by careful consideration of ethical standards, methodological rigor, and the mitigation of biases. As highlighted in [7, 8, 9], one of the most concerning issues of using GPT or LLM-based technology in authoring academic publications is the use of AI-based paraphrasing to hide potential plagiarism in scientific publications.

Notwithstanding the concerns associated with the authoring aspect of research, the review at hand strategically narrows its focus to explore alternative dimensions of GPT and LLM applications in scholarly pursuits. Specifically, the examination focuses on data augmentation, where GPT and LLMs play a pivotal role in enhancing research data, generating features, and synthesizing data [

10,

11,

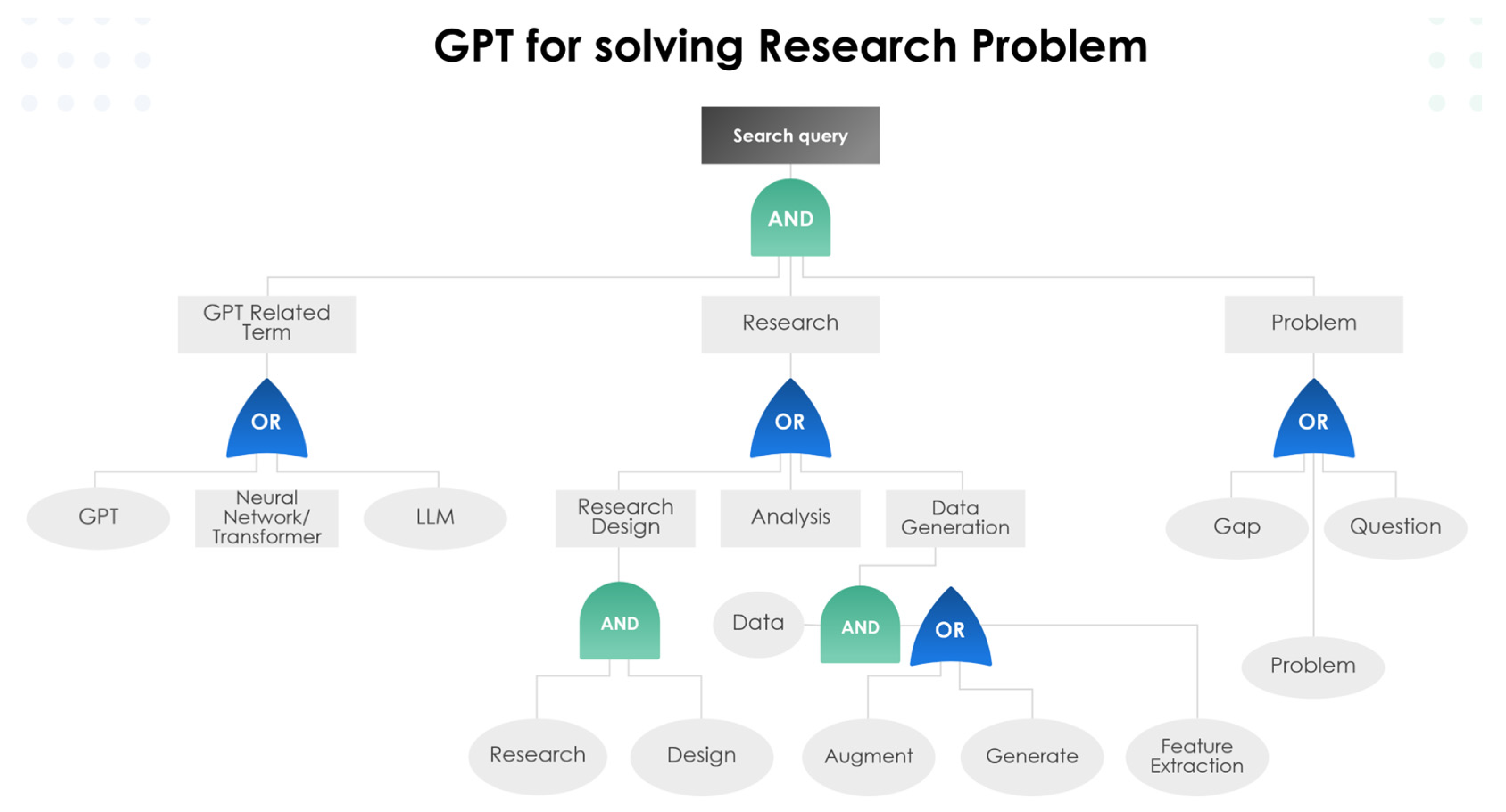

12]. As shown in

Figure 1, with GPT’s advanced language understanding capabilities, features can be extracted from plain text information. It should be noted that previously, feature extraction from plain texts involved various natural language processing techniques like entity recognition, sentiment analysis, classification, and others, as shown in [

13,

14,

15,

16,

17,

18]. With the introduction of GPT and associated technologies, a simple prompt can extract various features from plain text (

Figure 1). Moreover, as depicted in

Figure 1. Semantically similar content could be added by GPT being part of the data augmentation process, improving the diversity and robustness of the data. Furthermore, rows of data could be synthetically generated by GPT, facilitating the training of the machine learning process during times of data scarcity or confidentiality (shown in

Figure 1).
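The prompt-based feature extraction described above can be sketched as follows. This is a minimal illustration rather than the method of any cited study: the feature names, the prompt wording, and the example reply are all hypothetical, and no model is actually called. The sketch only shows how a prompt might be composed and how a JSON reply could be turned into one row of features.

```python
import json

# Hypothetical feature names, chosen only for illustration.
FEATURES = ["sentiment", "entities", "topic"]


def build_extraction_prompt(text, features=FEATURES):
    """Compose a prompt asking a model to return the named features as JSON."""
    keys = ", ".join(f'"{name}"' for name in features)
    return (
        "Extract the following features from the text and reply with a single "
        f"JSON object using exactly these keys: {keys}.\n\n"
        f"Text: {text}"
    )


def parse_features(reply, features=FEATURES):
    """Parse a JSON reply into a flat row; keys missing from the reply become None."""
    data = json.loads(reply)
    return {name: data.get(name) for name in features}


# A hand-written stand-in for a model reply (assumption: the model returns valid JSON).
example_reply = '{"sentiment": "positive", "entities": ["Coursera"], "topic": "education"}'
row = parse_features(example_reply)
```

In practice the reply would come from whichever GPT interface a study uses, and malformed JSON would need to be handled before the row is added to a dataset.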

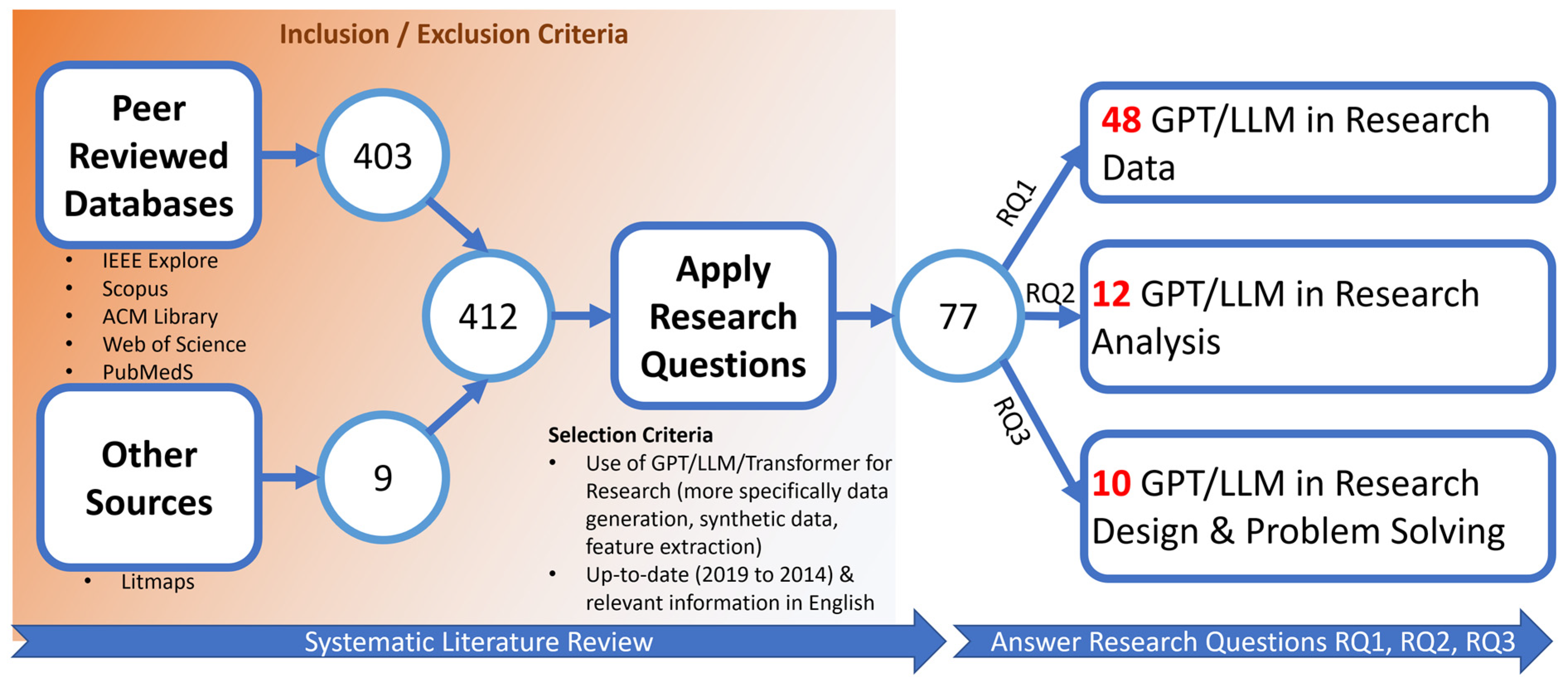

In the recent literature, various reviews have emerged concerning the adoption of GPT in research [19, 20, 21]. However, a critical research gap persists, as none of these reviews comprehensively explores GPT’s capacity for generating, processing, and analyzing research data. To address this gap, this literature review examines 412 scholarly works and applies rigorous exclusion criteria to arrive at a curated selection of 77 research contributions. These selected studies address three research questions, delineated as follows:

RQ1: How can GPT and associated technology assist in generating and processing research data?

RQ2: How can GPT and associated technology assist in analyzing research data?

RQ3: How can GPT and associated technology assist in research design and problem solving?

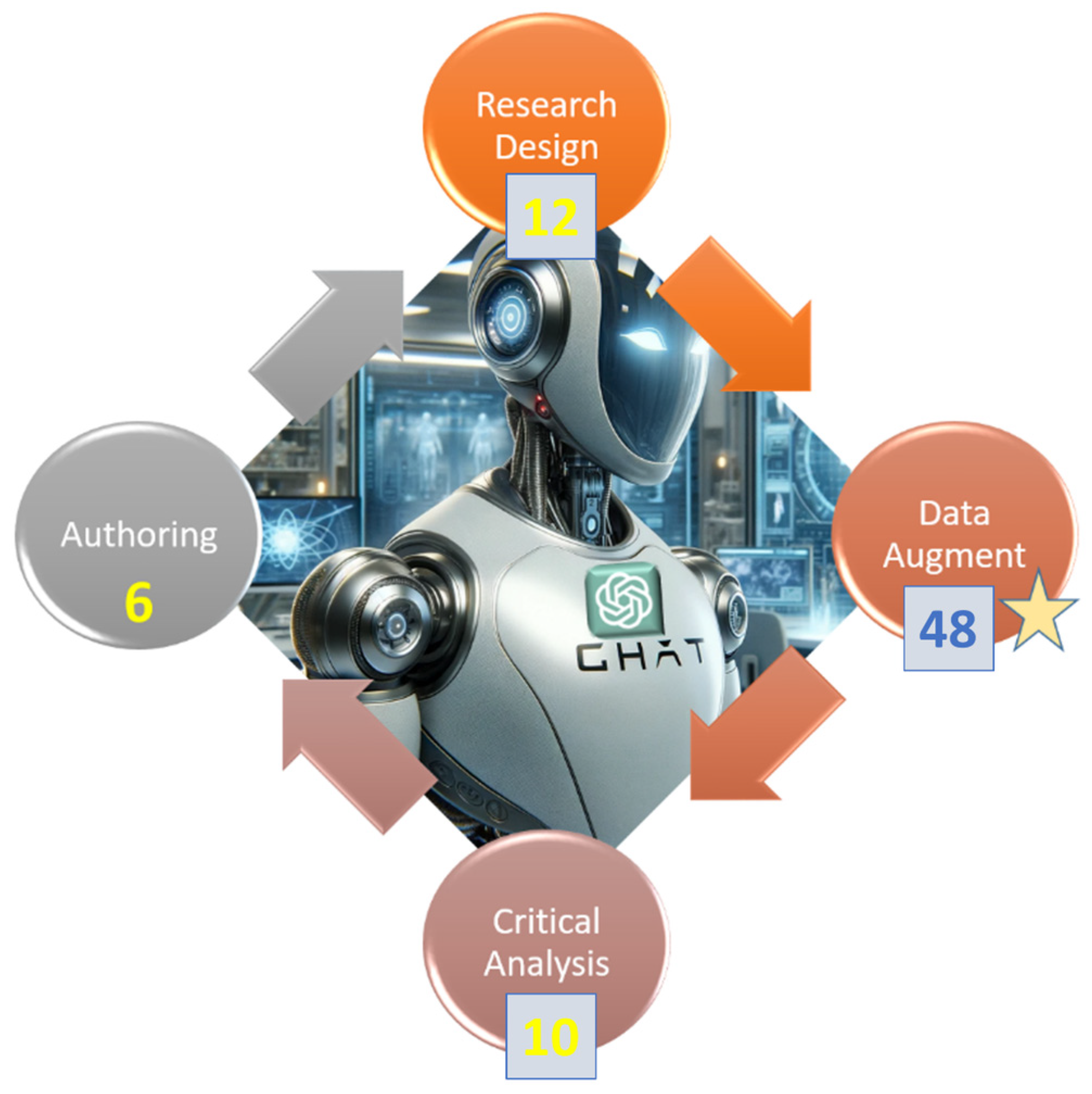

As shown in Figure 2, the systematic literature review centers on data augmentation with GPT, covering 45 highly pertinent scholarly contributions. Beyond this primary focus, the study examines the role of GPT in facilitating critical analysis of research data [22, 23, 24, 25] and in shaping research design [26, 27, 28]. This study also develops a comprehensive classification framework for “GPT’s use of research data”. By critically examining existing research on data augmentation, the review establishes a classification system encompassing six overarching categories and 14 sub-categories, providing a systematic perspective on the applications of GPT in research data. Furthermore, the placement of all 45 works within these defined sub-categories serves to validate the classification framework and substantiate its rationale. Finally, the comparative analysis of 54 existing works, which evaluates research domains, methodological approaches, and attendant advantages and disadvantages, provides insights to scientists and researchers contemplating the integration of GPT across diverse phases of their research.

3. Existing Research on GPT’s Use in Research Data

As shown in Figure 2 and Figure 4, the major focus of this review is on the use of GPT in research data. As a result of the systematic literature review process, 48 existing pieces of literature were found to be highly relevant to “GPT on Research Data”. This section summarizes these papers.

GPT and other large language models (LLMs) provide versatile tools for various data-related tasks. They excel at generating coherent, contextually relevant textual data, making them ideal for content creation across diverse fields. LLMs can synthesize realistic synthetic data, which is especially valuable in domains with privacy concerns or data scarcity. In data augmentation, these models enhance existing datasets by adding new, synthesized samples, thereby improving the robustness of machine learning models. Furthermore, LLMs are capable of extracting and generating features from complex datasets, aiding in more efficient and insightful data analysis. The adaptability of these models to different data types and their ability to tailor their output make them powerful tools in data science and AI development.

The study in [31] presents the GReaT (Generation of Realistic Tabular data) approach, which uses transformer-based large language models (LLMs) for the generation of synthetic tabular data. This method addresses challenges in tabular data generation by leveraging the generative capabilities of LLMs. Ref. [32] discusses a method that combines GPT technology with blockchain technology for secure and decentralized data generation. This approach can be beneficial in scenarios where data privacy and security are paramount. Ref. [26] explores the use of GPT for augmenting existing datasets. It demonstrates how GPT can be used to expand datasets by generating new, realistic samples, which can be particularly useful in fields where data is scarce or expensive to obtain. A study in [33] presents a novel application of GPT for feature extraction from unstructured data. It showcases how GPT models can be fine-tuned to identify and extract relevant features from complex datasets, enhancing data analysis and machine learning tasks. Ref. [34] details an early version of a GPT-based system for data anonymization, highlighting its potential to protect sensitive information in datasets while retaining its utility for analysis. Research in [35] focuses on using GPT for generating synthetic datasets for training machine learning models. This is especially useful in domains where real-world data is limited or sensitive. The work in [36] investigates the potential of Foundation Models (FMs), like GPT-3, in handling classical data tasks such as data cleaning and integration. It explores the applicability of these models to various data tasks, demonstrating that large FMs can adapt to these tasks with minimal or no task-specific fine-tuning, achieving state-of-the-art performance in certain cases. The study in [37] discusses advanced techniques for text data augmentation, focusing on improving the diversity and quality of the generated text data. It emphasizes methods that can generate nuanced and contextually appropriate data, enhancing the performance of machine learning models, particularly in natural language processing tasks. Ref. [38] explores the use of GPT-based models for feature extraction and generation, showcasing their effectiveness in identifying and creating relevant features from complex datasets, which is critical for enhancing data analysis and predictive modeling.
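To make LLM-based tabular generation more concrete, the sketch below shows one way a table row can be turned into a sentence that a language model can be trained on or prompted with, and then parsed back into a row. It is written in the spirit of the textual encoding used for tabular generation in [31], but the exact format, the function names, and the sample record are assumptions made for illustration only.

```python
import random


def row_to_sentence(row, rng=None):
    """Serialize one table row as 'column is value' clauses in shuffled order."""
    rng = rng or random.Random()
    items = list(row.items())
    rng.shuffle(items)  # shuffling avoids imposing a fixed column order on the model
    return ", ".join(f"{col} is {val}" for col, val in items)


def sentence_to_row(sentence):
    """Invert row_to_sentence; all values come back as strings.

    Assumes column names and values contain neither ', ' nor ' is '.
    """
    row = {}
    for clause in sentence.split(", "):
        col, _, val = clause.partition(" is ")
        row[col] = val
    return row


# Hypothetical record used only to exercise the two functions.
record = {"age": 39, "education": "Bachelors", "income": "<=50K"}
text = row_to_sentence(record, random.Random(0))
restored = sentence_to_row(text)
```

A generated sentence in the same format can be parsed with `sentence_to_row` to obtain a synthetic row; type conversion and validation of generated values are left out of this sketch.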

The work in [39] introduces LAMBADA, a novel method for text data augmentation. It leverages language models for synthesizing labeled data to improve text classification tasks, particularly in scenarios with limited labeled data. Ref. [40] focuses on the augmentation of medical datasets using transformer-based text generation models. It particularly addresses the challenge of data scarcity in the medical domain by generating synthetic clinical notes, which are then evaluated for their utility in downstream NLP tasks like unplanned readmission prediction and phenotype classification. A study in [41] presents a method called PREDATOR for text data augmentation, which improves the quality of textual datasets through the synthesis of new, high-quality text samples. This method is particularly useful for text classification tasks and demonstrates a significant improvement in model performance. Work in [42] presents a novel approach for enhancing hate speech detection on social networks. It combines DeBERTa models with back-translation and GPT-3 augmentation techniques during both training and testing. This method significantly improves hate speech detection across various datasets and metrics, demonstrating robust and accurate results. The work in [43] presents DHQDA, a novel method for data augmentation in Named Entity Recognition (NER). It uses GPT and a small-scale neural network for prompt-based data generation, producing diverse and high-quality augmented data. This approach enhances NER performance across different languages and datasets, particularly in low-resource scenarios. The study in [44] introduces the I-WAS method, a data-augmentation approach using GPT-2 for simile detection. It focuses on generating diverse simile sentences through iterative word replacement and sentence completion, significantly enhancing simile detection capabilities in natural language processing. Ref. [45] explores generation-based data augmentation for offensive language detection, focusing on its effectiveness and potential bias introduction. It critically analyzes the feasibility and impact of generative data augmentation in various setups, particularly addressing the balance between model performance improvement and the risk of bias amplification. Ref. [46] explores the use of GPT-2 in generating synthetic biological signals, specifically EEG and EMG, to improve classification in biomedical applications. It demonstrates that models trained on synthetic data generated by GPT-2 can achieve high accuracy in classifying real biological signals, thus addressing data scarcity issues in biomedical research. The work in [47] describes a study on fine-grained claim detection in financial documents. The research team from Chaoyang University of Technology uses MacBERT and RoBERTa with BiLSTM and AWD-LSTM classifiers, coupled with data resampling and GPT-2 augmentation, to address data imbalance. They demonstrate that data augmentation significantly improves prediction accuracy in financial text analysis, particularly in the Chinese Analyst’s Report section. Ref. [48] discusses the role of ChatGPT in data science, emphasizing its potential in automating workflows, data cleaning, preprocessing, model training, and result interpretation. It highlights the advantages of ChatGPT’s architecture and its ability to generate synthetic data, and addresses limitations and concerns such as bias and plagiarism.

Research work in [49] details an approach for automating the extraction and classification of technical requirements from complex systems’ specifications. It utilizes data augmentation methods, particularly GPT-J, to generate a diverse dataset, thereby enhancing the training of AI models for better classification accuracy and efficiency in requirements engineering. Ref. [50] investigates data augmentation for hate speech classification using a single class-conditioned GPT-2 language model. It focuses on the multi-class classification of hate, abuse, and normal speech and examines how the quality and quantity of generated data impact classifier performance. The study demonstrates significant improvements in macro-averaged F1 scores on hate speech corpora using the augmented data. The study in [51] explores the use of GPT models for generating synthetic data to enhance machine learning applications. It emphasizes the creation of diverse and representative synthetic data to improve machine learning model robustness. The paper in [52] focuses on augmenting existing datasets using GPT models. The method involves generating additional data that complements the original dataset, thereby enhancing the richness and diversity of data available for machine learning training.

The research in [12] examines the generation of synthetic educational data using GPT models, specifically for physics education. It involves creating responses to physics concept tests, aiming to produce diverse and realistic student-like responses for educational research and assessment design. The reference in [53] discusses the revolutionary role of artificial intelligence (AI), particularly large language models (LLMs) like GPT-4, in generating original scientific research, including hypothesis formulation, experimental design, data generation, and manuscript preparation. The study showcases GPT-4’s ability to create a novel pharmaceutics manuscript on 3D printed tablets using pharmaceutical 3D printing and selective laser sintering technologies. GPT-4 managed to generate a research hypothesis, experimental protocol, photo-realistic images of 3D printed tablets, believable analytical data, and a publication-ready manuscript in less than an hour. Ref. [54] focuses on the application of GPT models to augment existing datasets in the field of healthcare. It presents a method for generating synthetic clinical notes in Electronic Health Records (EHRs) to predict patient outcomes, addressing challenges in healthcare data such as privacy concerns and data scarcity. The paper in [11] deals with textual data augmentation in the context of patient outcome prediction. The study introduces a novel method for generating artificial clinical notes in EHRs using GPT-2, aimed at improving patient outcome prediction, especially the 30-day readmission rate. It employs a teacher–student framework for noise control in the generated data. The study in [55] focuses on leveraging large language models (LLMs) like GPT-3 for generating synthetic data to address data scarcity in biomedical research. It discusses various strategies and applications of LLMs in synthesizing realistic and diverse datasets, highlighting their potential for enhancing research and decision-making in the biomedical field. Ref. [56] discusses the use of GPT models for enhancing data quality in the context of social science research. It focuses on generating synthetic responses to survey questionnaires, aiming to address issues of data scarcity and respondent bias.

The work in [57] explores the use of GPT-3 for creating synthetic data for conversational AI applications. It evaluates the effectiveness of synthetic data in training classifiers by comparing it with real user data and analyzing semantic similarities and differences. The paper in [58] presents a system developed for SemEval-2023 Task 3, focusing on detecting genres and persuasion techniques in multilingual texts. The system combines machine translation and text generation for data augmentation. Specifically, genre detection is enhanced using synthetic texts created with GPT-3, while persuasion technique detection relies on text translation augmentation using DeepL. The approach demonstrates effectiveness by achieving top-ten rankings across all languages in genre detection, and notable ranks in persuasion technique detection. The paper outlines the system architecture utilizing DeepL and GPT-3 for data augmentation, the experimental setup, and the results, highlighting the strengths and limitations of the methods used. Ref. [59] explores using GPT-3 for generating synthetic responses in psychological surveys. It focuses on enhancing the diversity of responses to understand a broader range of human behaviors and emotions. The study in [60] investigates the use of GPT-3 in augmenting data for climate change research. It generates synthetic data representing various climate scenarios to aid in predictive modeling and analysis.

The research in [61] leverages GPT-3.5 for augmenting Dutch multi-label datasets in vaccine hesitancy monitoring. The paper discusses how synthetic tweets are generated and used to improve classification performance, especially for underrepresented classes. Romero-Sandoval et al. [62] investigate using GPT-3 for text simplification in Spanish financial texts, demonstrating effective data augmentation to improve classifier performance. Rebboud et al. [63] explore GPT-3’s ability to generate synthetic data for event relation classification, enhancing system accuracy with prompt-based, manually validated synthetic sentences. Quteineh et al. [64] present a method combining GPT-2 with Monte Carlo Tree Search for textual data augmentation, significantly boosting classifier performance in active learning with small datasets. Suhaeni et al. [65] explore using GPT-3 for generating synthetic reviews to address class imbalances in sentiment analysis, specifically for Coursera course reviews. They show how synthetic data can enhance the balance and quality of training datasets, leading to improved sentiment classification model performance. Singh et al. [66] introduce a method to augment interpretable models using large language models (LLMs). The approach, focusing on transparency and efficiency, shows that LLMs can significantly enhance the performance of linear models and decision trees in text classification tasks. Sawai et al. discuss using GPT-2 for sentence augmentation in neural machine translation. The approach aims to improve translation accuracy and robustness, especially for languages with different linguistic structures. The method demonstrated significant improvements in translation quality across various language pairs.
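Several of the studies above use synthetic text to rebalance underrepresented classes. As a rough illustration of the bookkeeping involved, the sketch below computes how many synthetic samples each class would need in order to match the largest class. The function name and the label counts are hypothetical, and balancing to the majority class is only one possible target; the cited studies may use different strategies.

```python
from collections import Counter


def synthetic_quota(labels):
    """Return how many synthetic samples each class needs to match the largest class."""
    counts = Counter(labels)
    target = max(counts.values())
    return {label: target - n for label, n in counts.items()}


# Hypothetical, deliberately imbalanced label distribution.
labels = ["positive"] * 6 + ["neutral"] * 3 + ["negative"] * 1
quota = synthetic_quota(labels)
```

The resulting quota per class could then be passed to a generation step that prompts a model for that many examples of the class.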

The paper in [10] focuses on using GPT-2 to generate complement sentences for aspect term extraction (ATE) in sentiment analysis. The study introduces a multi-step training procedure that optimizes complement sentences to augment ATE datasets, addressing the challenge of data scarcity. This method significantly improves the performance of ATE models. A study in [67] explores data augmentation for text classification using transformer models like BERT and GPT-2. It presents four variants of augmentation, including masking words with BERT and sentence expansion with GPT-2, demonstrating their effectiveness in improving the performance of text classification models. Another recent work by Veyseh et al. focuses on enhancing open-domain event detection using GPT-2-generated synthetic data [68]. The study introduces a novel teacher–student architecture to address the noise in synthetic data and improve model performance for event detection. The experiments demonstrate significant improvements in accuracy, showcasing the effectiveness of this approach. Waisberg et al. discuss the potential of ChatGPT (GPT-3) in medicine [69]. The study highlights ChatGPT’s ability to perform medical tasks like writing discharge summaries, generating images from descriptions, and triaging conditions [69]. It emphasizes the model’s capacity for democratizing AI in medicine, allowing clinicians to develop AI techniques. The paper also addresses ethical concerns and the need for compliance with healthcare regulations [69].

The paper “Investigating Paraphrasing-Based Data Augmentation for Task-Oriented Dialogue Systems” by Liane Vogel and Lucie Flek explores data augmentation in task-oriented dialogue systems using paraphrasing techniques with GPT-2 and Conditional Variational Autoencoder (CVAE) models [70]. The study demonstrates how these models can effectively generate paraphrased template phrases, significantly reducing the need for manually annotated training data while maintaining or even improving the performance of a natural language understanding (NLU) system [70]. Shuohua Zhou and Yanping Zhang focus on improving medical question-answering systems [71]. Their approach employs a combination of BERT, GPT-2, and T5-Small models, leveraging GPT-2 for question augmentation and T5-Small for topic extraction [71]. The approach demonstrates enhanced prediction accuracy, showcasing the model’s potential in medical question-answering and generation tasks.

7. Results and Discussion

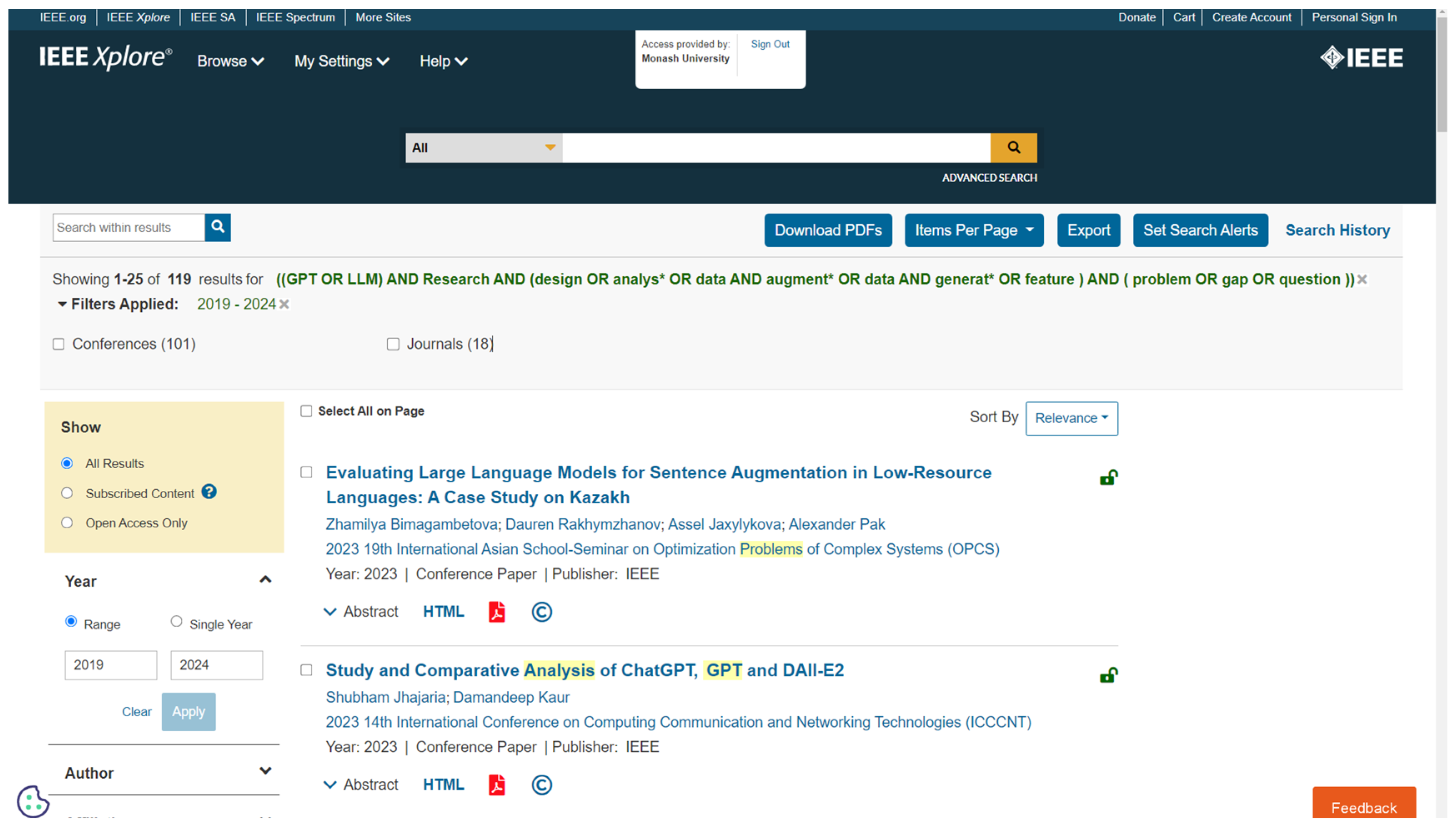

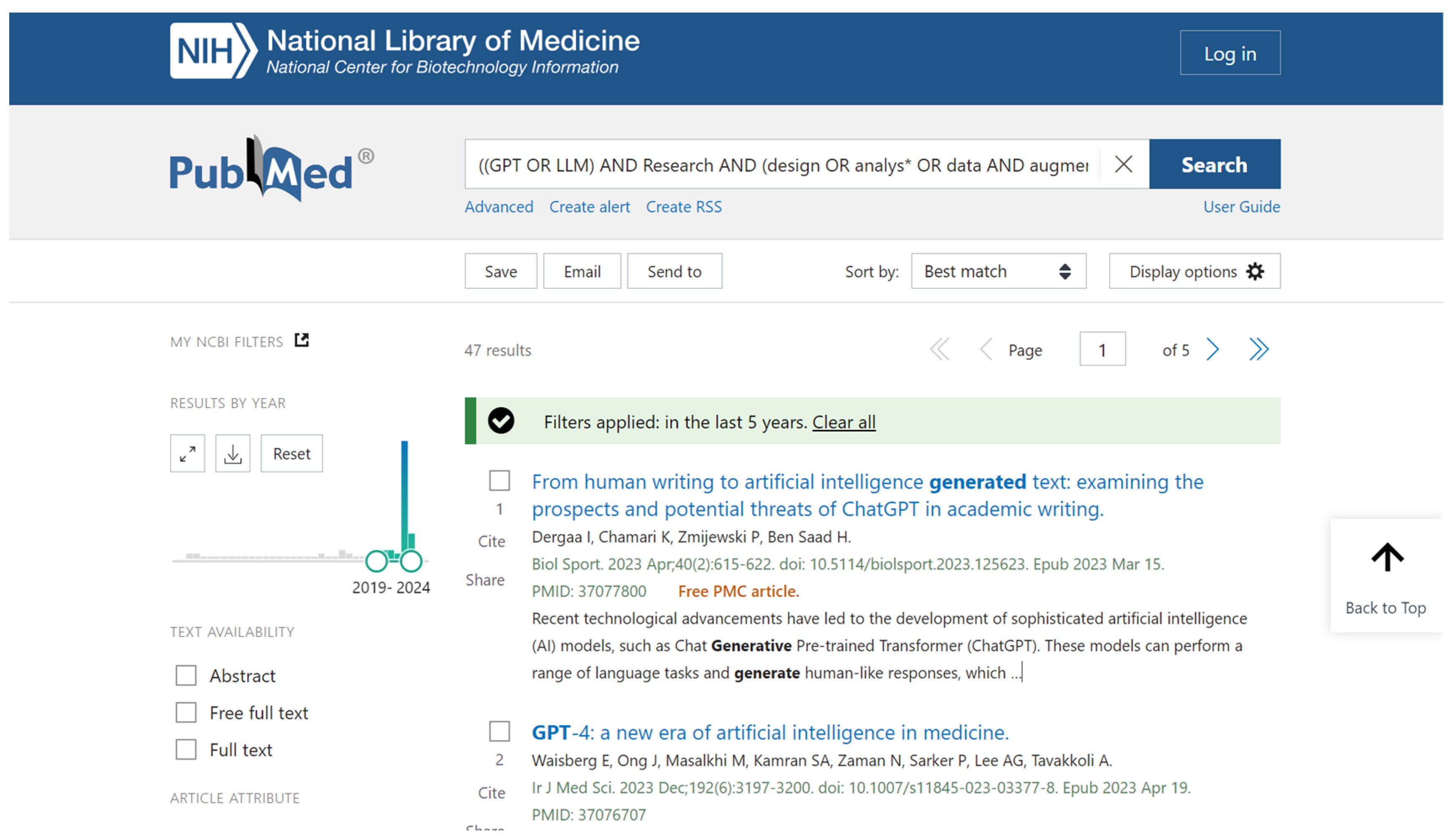

In accordance with the research questions delineated in Section 2, a sophisticated query mechanism was introduced in Figure 3. The implementation of this query method varies across databases used to obtain literature, as each database adheres to its own prescribed formulation for queries. This study utilized popular databases supporting advanced queries, including Scopus, IEEE Xplore, PubMed, Web of Science, and the ACM Digital Library, to compile a comprehensive list of literature. Table 9 details the advanced query applied to each database, along with the number of records retrieved on 30 January 2024, when these advanced queries were executed.

Notably, certain databases like Google Scholar were excluded due to the limitations of their advanced query mechanism. As elucidated in [81], Google Scholar lacks advanced search capabilities and the ability to download data, and it faces challenges related to quality control and clear indexing guidelines. Consequently, Google Scholar is recommended for use as a supplementary source rather than a primary source for writing systematic literature reviews [82]. As shown in Table 9, the number of records retrieved from Scopus, IEEE Xplore, PubMed, Web of Science, and the ACM Digital Library was 99, 119, 47, 306, and 102, respectively. Conversely, the use of the same advanced query in Google Scholar yielded over 30,000 results, underscoring the challenges associated with quality control and indexing guidelines within Google Scholar, as noted in [81, 82].

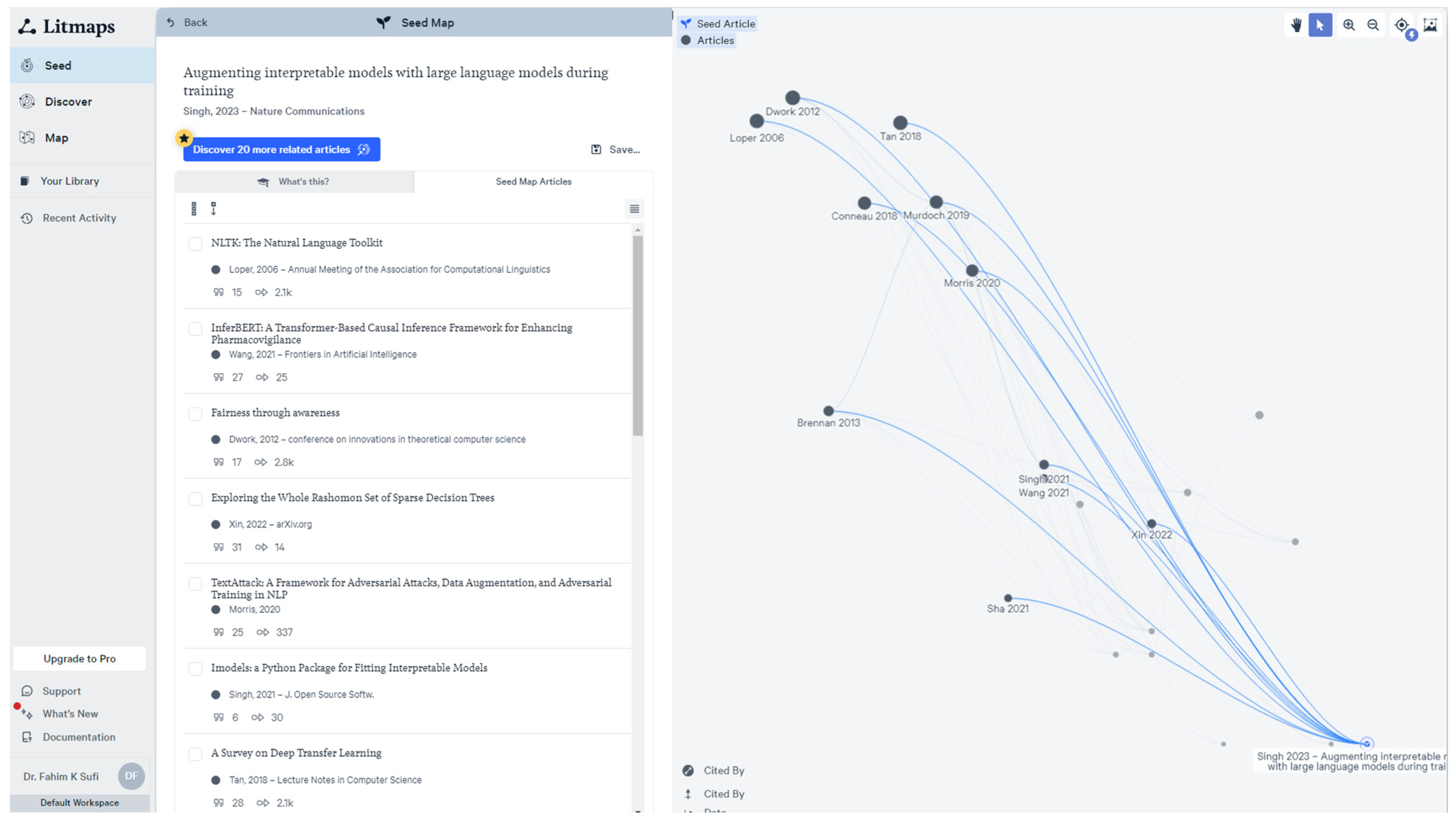

In addition to the databases outlined in Table 9, an advanced citation visualization tool, Litmaps, was employed to identify additional relevant studies [29]. Litmaps supports a systematic literature review by improving the efficiency and efficacy of the review process. It functions as an analytical tool for the systematic organization, categorization, and visualization of an extensive corpus of scholarly literature, encompassing both citing and cited articles. As depicted in Figure 8, Litmaps identified nine studies not present in Scopus, IEEE Xplore, PubMed, Web of Science, or the ACM Digital Library.

The PRISMA flow diagram in Figure 8 illustrates the identification process, where 412 studies were initially identified after the deduplication of 275 studies. Following a screening process based on titles and abstracts, 306 records were excluded due to their lack of relevance to the theme of “using GPT in performing research”, despite the inclusion of keywords like GPT or research in the abstract or title. These studies were found to belong to entirely different areas, with some using the term “research” merely to denote future research endeavors or directions. Following the initial screening, full texts were obtained for 106 studies. Subsequently, a detailed analysis led to the exclusion of 29 full-text articles as they did not address the original research questions regarding GPT’s role in generating and processing research data, analyzing research data, or contributing to research design and problem-solving. Ultimately, 77 studies were included in the systematic literature review. While a few of these studies did not directly answer the original research questions, they provided valuable insights into the limitations, issues, and challenges associated with the adoption of GPT technologies in research activities.
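The screening arithmetic reported above can be checked with a few lines of code. The sketch below uses only the counts stated in the text; the variable names are chosen here for illustration.

```python
# Counts taken from the PRISMA description in the text.
identified = 412             # records remaining after deduplication
excluded_on_screening = 306  # removed after title and abstract screening
excluded_on_full_text = 29   # removed after full-text analysis

# Records that proceeded to full-text assessment.
full_text_assessed = identified - excluded_on_screening

# Studies finally included in the review.
included = full_text_assessed - excluded_on_full_text
```

With these inputs, `full_text_assessed` evaluates to 106 and `included` to 77, matching the figures reported for the review.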

This study conducted a thorough examination of over 77 peer-reviewed papers within the domain of “GPT in Research.” Given the recent and trending nature of the GPT topic, a majority of the relevant papers span the past four years. Consequently, a significant 65% of the scrutinized papers were published in the years 2023 and 2024. The distribution includes 2 papers in 2024, 48 papers in 2023, 18 in 2022, 7 in 2021, and 4 in 2020, as illustrated in Figure 9.

To ensure the rigor and reliability of this systematic literature review, the following measures were meticulously implemented:

Adherence to Established Guidelines: The review methodologically aligned with established guidelines and recommendations from seminal academic works, particularly the study in [82]. The work in [82] categorized Scopus, PubMed, Web of Science, ACM Digital Library, etc. as “Principal Sources”. Hence, we exclusively used these databases.

Exclusion of Unreliable Sources: A stringent criterion was applied to exclude sources lacking in quality control and those without clear indexing guidelines to maintain the overall reliability of the review. Existing scholarly work in [81, 82] identified Google Scholar as one of the sources that lacks clear indexing guidelines. Hence, Google Scholar was not used as a source.

Utilization of Advanced Visualization Tools: Advanced visualization tools, exemplified by Litmaps, played a pivotal role in assessing the alignment of identified studies with the predetermined domain of interest [29]. Litmaps facilitated a comprehensive evaluation, highlighting potential gaps and identifying relatively highly cited studies crucial for inclusion in the review process. Figure 8 illustrates the outcomes of utilizing Litmaps, showcasing the identification of nine significantly cited studies, thereby enhancing the comprehensiveness of the systematic literature review.

Strategic Citation Analysis: In addition to advanced visualization tools, a strategic citation analysis was conducted to ascertain the prominence and impact of selected studies within the scholarly landscape. High-quality studies with a substantial number of citations were accorded due attention, contributing to the refinement and validation of the literature survey (using Litmaps).