Abstract

Attentional orienting is a crucial process in perceiving our environment and guiding human behavior. Recent studies have suggested a forward attentional bias, with faster responses when spatial cues indicate information appearing in front rather than behind. This study investigated how body position affects attentional orienting, using a modified version of the Posner cueing task within a virtual reality environment. Participants, seated at 90° or reclined at 45°, followed arrows directing their attention to one of four spatial positions where a spaceship would appear, visible either through transparent windows (front space) or in mirrors (rear space). Their task was to quickly identify the spaceship’s color as red or blue. Participants responded faster when the cue correctly indicated the target’s location (valid cues) and when targets appeared in front rather than in the rear. Moreover, the “validity effect” on early eye movements (the advantage of valid over invalid cues) varied with both the participant’s body position and the target’s location (front or rear). These findings suggest that body position may modulate the forward attentional bias and should be taken into account when studying attentional orienting. Implications are discussed for contexts such as aviation and space exploration, where precise and rapid responses to stimuli across diverse spatial environments are essential.

1. Introduction

Visual attention orienting is a fundamental process in perceiving the environment and guiding human behaviors [1]. The capacity to direct attention to relevant stimuli is crucial, enabling us to effectively navigate and engage with our complex environment. In everyday scenarios, this skill allows individuals to focus on crucial information while filtering out distractions, thereby ensuring safety and efficiency in activities such as driving, working, or studying. It is therefore important to understand how it works outside the laboratory (see, for example, ref. [2]). Notably, in high-demand professions like aviation and medicine, attentional orienting becomes paramount, facilitating swift decision making and precise responses to sudden changes or emergencies (see, for example, [3,4]).

Posner’s spatial cueing paradigm [5] is the most widely used experimental approach for examining how spatial cues influence attention, in particular, their ability to enhance information processing. This paradigm has been used to explore many factors that influence attentional orienting, such as cognitive load [6], emotion [7], and aging [8]. In this paradigm, a spatial cue is briefly presented before a target stimulus, and participants must respond as quickly and/or accurately as possible when the target appears. The cue may correctly indicate the target’s location (“valid cue”) or point to an incorrect location (“invalid cue”). Comparing the two allows researchers to gauge how effectively the cue orients participants’ attention. Essentially, the paradigm evaluates the effectiveness of attentional direction and its subsequent impact on the speed and precision of responses to the target.

Studies using this paradigm, such as [9,10,11], have traditionally focused on the visual field in front of individuals. With advances in virtual reality technology, however, the research landscape has expanded considerably. Virtual reality provides a more naturalistic framework for investigating cognitive processes [12,13] and attentional orienting [14], and it makes it possible to examine previously unexplored factors that influence attention. For instance, in a virtual reality cueing paradigm, it has been observed that when attention is directed forward or backward, response times are shorter for targets located in the front space than for those located in the rear space. This observation suggests an attentional bias in favor of the space in front of the participant. Specifically, [15] adapted Posner’s paradigm: participants, seated within a simulated spaceship, were tasked with quickly and accurately identifying the color (red or blue) of an approaching spaceship. The target could appear in four locations: two in front (visible through transparent glass) and two reflecting the rear space (visible through rearview mirrors). Before the target appeared, participants were presented with a predictive or non-predictive cue. Responses were faster when the target appeared in front (through the transparent glass) than behind (through the rearview mirrors), suggesting faster attentional orienting to the space in front of us than to the space behind us. This finding is particularly relevant for human–computer interfaces, where understanding attentional dynamics can lead to more intuitive designs.

The impact of body position on cognitive function, and in particular the role of the vestibular system, has also gained attention (for example, [16,17]). Traditional research has often limited participants to seated positions facing computer screens, whereas recent studies emphasize the role of body position and body orientation in attentional processes [18,19]. This is particularly pertinent in contexts such as aviation and spaceflight, where body position and movement are variable and critical for performance. In these operational contexts, visual stimuli can appear unexpectedly, and body position, body rotation, or aircraft orientation can vary considerably. Pilots must be able to react quickly to unforeseen and dangerous situations, even when the aircraft is tilted forward or backward or experiencing turbulence [20]. Similarly, astronauts must adapt quickly to unexpected situations while maintaining adequate visual orientation in microgravity, where body position changes constantly and spatial cues may be unreliable [21,22].

In summary, integrating virtual reality with Posner’s paradigm has markedly enhanced our understanding of attentional orienting by enabling the analysis of the entire perceptual space, including both front and rear space. Such advances have substantial implications for human–computer interface design. In this context, our study explores a novel factor that may affect attentional orienting: body position. To this end, we used a modified version of the Posner paradigm in virtual reality with two body orientations: seated at 90° and reclined at 45°. We hypothesized that body position could modulate the forward attentional bias observed by Soret [14] and Soret et al. [15], owing to changes in vestibular input induced by the different body positions. Specifically, in the seated position, we expected to replicate their findings, with significantly faster responses to targets viewed directly (front) than to those seen via mirror reflection (rear). In the reclined position, the altered posture could affect attentional orienting, potentially changing the pattern of response times for front and rear targets.

2. Materials and Methods

2.1. Participants

Thirty-two healthy volunteers (14 females, aged 28 ± 5 years) participated in the study. All participants had normal or corrected-to-normal vision. In line with the Declaration of Helsinki, all participants provided written consent before the experiment. They did not receive any compensation for their participation.

2.2. Apparatus

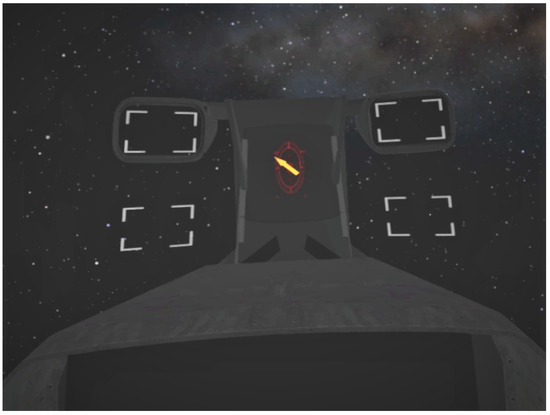

For the study, we used an HTC Vive virtual reality headset equipped with an integrated Tobii eye-tracking system, as well as HTC Vive controllers. The modified Posner task was developed using Unity3D, C#, and OpenVR, with the addition of SteamVR, Tobii, and Tobii Pro Stimuli plugins. We utilized a virtual environment identical to that featured in the study by Soret et al. [15]. See Figure 1 for an overview of the environment.

Figure 1.

A screenshot of the experimental environment as projected through the virtual reality headset. The arrow corresponds to the attentional cue indicating one of the four possible target positions: two corresponding to the direct vision and two corresponding to the rearview mirrors.

However, our research diverged in several key aspects. While the original study employed both auditory and visual cues, we exclusively focused on the use of visual cues in the form of directional arrows. Moreover, we chose to manipulate cue validity rather than predictivity, as the latter did not appear to exert any significant impact on attentional orienting or ocular responses [15]. Cue validity refers to whether a cue correctly indicates the target location on each trial (valid or invalid), while cue predictivity relates to the proportion of valid versus invalid cues within a set of trials (e.g., 50% valid/50% invalid being non-predictive, more than 60% valid being predictive).

The arrangement of rearview mirrors, transparent sights, and response buttons was counterbalanced across participants. This means that half of the participants experienced the setup with rearview mirrors positioned above and sights below, while the other half had the sights above and rearview mirrors below. Additionally, for half of the participants, the right grip button was associated with the red spaceship and the left grip button with the blue spaceship and vice versa for the other half.

We did not provide any specific instructions regarding hand placement. To record eye responses, we used the Tobii eye-tracking system, which operates on the raycast principle. This system determines the direction of the user’s gaze by drawing a virtual line from the eyes to the object being viewed in the virtual reality environment. The eye-tracking system was essential to ensure that participants complied with the instruction not to move their eyes before the target appeared, an important condition in the study of covert orienting of attention. If premature eye movement was detected, the trial was automatically restarted.
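The raycast principle can be illustrated with a minimal sketch that casts a gaze ray from the eye and reports the nearest object it intersects. This is not the Tobii or Unity API: objects are approximated here by hypothetical spherical colliders, and every name, position, and radius is an illustrative assumption.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class SphereCollider:
    """Hypothetical spherical stand-in for an object's collider in the scene."""
    name: str
    center: Vec3
    radius: float


def hit_distance(origin: Vec3, unit_dir: Vec3, sphere: SphereCollider) -> Optional[float]:
    """Distance along a unit-length ray to the sphere surface, or None if missed."""
    oc = (origin[0] - sphere.center[0],
          origin[1] - sphere.center[1],
          origin[2] - sphere.center[2])
    b = dot(oc, unit_dir)
    c = dot(oc, oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None  # the ray passes beside the sphere
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearest intersection in front of the eye
        if t >= 0:
            return t
    return None  # the sphere lies entirely behind the eye


def gazed_object(origin: Vec3, direction: Vec3,
                 colliders: Sequence[SphereCollider]) -> Optional[str]:
    """Name of the nearest collider hit by the gaze ray, or None."""
    norm = math.sqrt(dot(direction, direction))
    unit = (direction[0] / norm, direction[1] / norm, direction[2] / norm)
    hits = []
    for sphere in colliders:
        t = hit_distance(origin, unit, sphere)
        if t is not None:
            hits.append((t, sphere.name))
    return min(hits)[1] if hits else None


# Hypothetical scene: names, positions (m), and radii are illustrative only.
scene = [
    SphereCollider("fixation_point", (0.0, 0.0, 2.0), 0.05),
    SphereCollider("front_left_sight", (-0.5, -0.3, 2.0), 0.2),
]
print(gazed_object((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), scene))  # fixation_point
```

A real engine would test against the scene’s actual colliders; spheres simply keep the intersection arithmetic short.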

The predictability of the cue was set at 80%; i.e., it was valid, accurately indicating the target position, in 80% of the trials and invalid, indicating an incorrect position, in the remaining 20% of trials. Trials were randomized within each block, with the stipulation that the target could not appear in the directly opposite diagonal position for invalid trials. We selected this 80% predictability to motivate participants to use the cue to orient their attention, given that a non-predictive cue could be dismissed as unreliable. In the discrimination task, participants were required to identify the color of the target (a virtual spaceship, either blue or red) and choose the appropriate response (teleport the blue ones and destroy the red ones). The target was colored (blue or red) for 250 ms and then turned white.

Participants were positioned on a medical bed with an adjustable backrest set at either 45° or 90°. When participants were reclined at 45°, the virtual reality view was adjusted so that they perceived themselves as sitting upright at 90°. In other words, the virtual scene was identical in both positions; only the participant’s real body position changed. Each participant performed the experiment in both positions, and the starting position (reclined or seated) was counterbalanced across participants.
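The counterbalanced factors (mirror/sight layout, grip-button color mapping, and starting body position) can be laid out as in the sketch below. The text states only that each factor was counterbalanced, not that the three were fully crossed; this sketch assumes, for illustration, that participants cycle through all 2 × 2 × 2 combinations, which with 32 participants gives four per combination. All labels are hypothetical.

```python
from itertools import product

LAYOUTS = ("mirrors_above", "sights_above")
GRIP_MAPPINGS = ("right_grip_red", "right_grip_blue")
START_POSITIONS = ("reclined_45", "seated_90")


def assign_counterbalancing(n_participants: int) -> list:
    """Cycle participants through every layout x mapping x start-position combination.

    Assumption: full 2 x 2 x 2 crossing (illustrative; not stated in the paper).
    """
    combos = list(product(LAYOUTS, GRIP_MAPPINGS, START_POSITIONS))
    plan = []
    for i in range(n_participants):
        layout, mapping, start = combos[i % len(combos)]
        plan.append({
            "participant": i + 1,
            "layout": layout,
            "grip_mapping": mapping,
            "start_position": start,
            # the second session uses the other body position
            "second_position": START_POSITIONS[1 - START_POSITIONS.index(start)],
        })
    return plan


plan = assign_counterbalancing(32)
```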

2.3. Procedure

The participants were instructed to react to the targets as quickly and accurately as possible, using the provided cue. The instruction was to destroy the red ships and teleport the blue ships as quickly as possible without destroying the blue ones or teleporting the red ones. Before the start of each block, participants were informed about the cue’s predictability (80%) and tried the response buttons multiple times to understand the corresponding response and associated animation (spaceship destruction vs. spaceship teleportation).

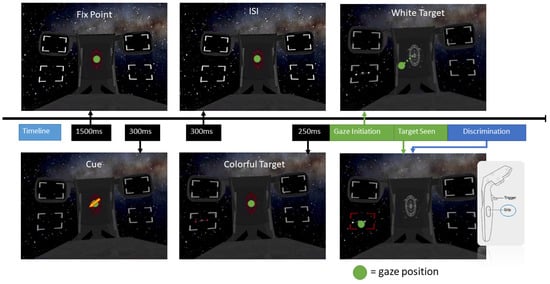

Each trial started with the participant fixating a central point for 1.5 s, followed by a cue displayed for 300 ms. To ensure that attention was oriented covertly, the trial was restarted if the participant’s gaze left the fixation point before the target (the spaceship) appeared. The spaceship appeared 300 ms after cue offset at one of four possible locations: the cued location on valid trials or an uncued location on invalid trials. Participants were instructed to identify the spaceship’s color and to lock onto the target by looking at it and pressing the “grip” controller button (left or right) associated with the spaceship’s color. They could perform these two actions in either order, looking at the target and then pressing the grip, or vice versa. Behavioral data recording ended at this stage; the subsequent actions were designed to give participants situational awareness of the actual positions of objects around them. Without these actions, participants might process the information seen in the mirrors as lying solely in front of them and fail to associate it with the rear space. See Figure 2 for an example of a trial sequence.

Figure 2.

Example of a valid trial sequence: after a 1500 ms fixation point, a directional arrow presented for 300 ms indicates that the target will appear in the left transparent sight. After an inter-stimulus interval of 300 ms, the target appears at the indicated location. It remains red for 250 ms and then turns white. The response sequence is as follows: moving the eyes away from the central fixation point (gaze initiation), looking at the target (target seen), and pressing the “grip” button associated with the spaceship’s color (discrimination response). The green dot shows the position of the participant’s gaze.
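The trial timing and the covert-orienting check can be summarized in a short sketch. The durations are taken from the text; the gaze-sample format (a timestamp in ms from trial start plus a flag for whether gaze is on the fixation point) is a hypothetical simplification of the eye-tracker stream.

```python
FIXATION_MS = 1500
CUE_MS = 300
ISI_MS = 300           # blank interval between cue offset and target onset
TARGET_COLOR_MS = 250  # the target is colored, then turns white


def trial_schedule() -> dict:
    """Event onsets in ms from trial start, following the sequence in Figure 2."""
    cue_onset = FIXATION_MS
    cue_offset = cue_onset + CUE_MS
    target_onset = cue_offset + ISI_MS
    return {
        "cue_onset": cue_onset,
        "cue_offset": cue_offset,
        "target_onset": target_onset,
        "target_turns_white": target_onset + TARGET_COLOR_MS,
    }


def must_restart(gaze_samples, target_onset_ms: int) -> bool:
    """True if gaze left the fixation point at any time before target onset.

    gaze_samples: iterable of (time_ms, on_fixation) pairs (hypothetical format).
    """
    return any(t < target_onset_ms and not on_fix for t, on_fix in gaze_samples)
```

Gaze samples after target onset are ignored by `must_restart`, since leaving fixation at that point is the expected gaze initiation response.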

The participants’ actions after the recorded response were as follows:

- If the spaceship appeared in the front view (through a transparent sight), they would immediately press the “trigger” button to either destroy or teleport the spaceship.

- If the spaceship was located behind them (visible through a rearview mirror), they were instructed to turn around, fix their gaze on the actual location of the spaceship, and then press the “trigger” to engage with it.

The experimental phase comprised 160 trials, a number chosen within the constraints imposed by virtual reality. To ensure participant comfort and prevent VR-induced fatigue or discomfort, we used 80 trials for each body position (45°/90°), comprising 60 valid and 20 invalid trials: 40 front trials (30 valid, 10 invalid) and 40 rear trials (30 valid, 10 invalid). The experiment lasted approximately 45 min, including 8 practice trials and a 30 min main phase. This trial count was chosen to maximize data quality while respecting the practical limits on VR session duration.
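A minimal sketch of how one body-position block could be generated from these counts, applying the constraint that on invalid trials the target never appears diagonally opposite the cued position. How targets were split between left and right, and how invalid cues were distributed over the two permitted locations, is not reported; the even splits below are assumptions, as are all names. With these counts, 60 of 80 trials (75%) are valid.

```python
import random

SPACES = ("front", "rear")
SIDES = ("left", "right")


def other(value, pair):
    """Return the other member of a two-element tuple."""
    return pair[1] if value == pair[0] else pair[0]


def diagonal(loc):
    space, side = loc
    return (other(space, SPACES), other(side, SIDES))


def allowed_invalid_cues(target):
    """Cue locations for an invalid trial: anything except the target and its diagonal."""
    space, side = target
    return [(space, other(side, SIDES)), (other(space, SPACES), side)]


def make_block(n_valid_per_space=30, n_invalid_per_space=10, seed=None):
    """Build and shuffle the trial list for one body position (one block)."""
    rng = random.Random(seed)
    trials = []
    for space in SPACES:
        for i in range(n_valid_per_space):
            target = (space, SIDES[i % 2])  # assumption: targets alternate left/right
            trials.append({"target": target, "cue": target, "valid": True})
        for i in range(n_invalid_per_space):
            target = (space, SIDES[i % 2])
            # assumption: alternate between the two permitted (non-diagonal) cue locations
            cue = allowed_invalid_cues(target)[(i // 2) % 2]
            trials.append({"target": target, "cue": cue, "valid": False})
    rng.shuffle(trials)  # randomize order within the block
    return trials


block = make_block(seed=1)
```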

For data collection, two ocular response times, “gaze initiation” and “target seen”, were recorded, both measured from target onset. Gaze initiation corresponds to the moment when participants move their gaze away from the central fixation point. “Target seen” corresponds to the moment when the participant fixates the sight or mirror in which the target appeared. We also recorded the “discrimination response”, which corresponds to the moment when the participant presses the “grip” button to select the response matching the spaceship’s color. Note that the eye responses (gaze initiation, target seen), like the “grip” motor response, represent reactions to a stimulus, not measures of overt attentional orienting. The eye-tracking system ensured that participants did not move their eyes before the target appeared and therefore relied on covert orienting to prepare their response. All of these measures are recorded on the target as seen in the transparent sights or rearview mirrors; the actions performed afterward for situational awareness are not included in the ocular and motor response times.
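The three response times can be derived from per-trial event timestamps, as in the sketch below. The field names are hypothetical; each response time is simply the event time minus target onset, and an event preceding target onset is treated as an error because such a trial would have been restarted.

```python
from dataclasses import dataclass


@dataclass
class TrialEvents:
    """Hypothetical per-trial timestamps in ms from trial start."""
    target_onset: float
    gaze_leaves_fixation: float   # first sample with gaze off the central point
    gaze_on_target_region: float  # first sample on the sight/mirror holding the target
    grip_press: float             # color-discrimination button press


def response_times(ev: TrialEvents) -> dict:
    """The three response times, each measured from target onset."""
    rts = {
        "gaze_initiation": ev.gaze_leaves_fixation - ev.target_onset,
        "target_seen": ev.gaze_on_target_region - ev.target_onset,
        "discrimination_response": ev.grip_press - ev.target_onset,
    }
    if any(rt < 0 for rt in rts.values()):
        # an event before target onset would have triggered a trial restart
        raise ValueError("event precedes target onset")
    return rts
```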

2.4. Data Processing

To ensure the robustness of our data, we applied rigorous response time filtering criteria. First, we excluded response times shorter than 50 ms from our analysis, as these were considered below the plausible limits of human performance, suggesting potential measurement errors. Second, response times exceeding the calculated threshold (mean response time + 3 × standard deviation) were discarded as outliers, which we assumed were a result of excessive delay or inattention. The implementation of these exclusion criteria resulted in the removal of less than 1% of response times from our analysis.
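The two exclusion criteria can be sketched as a single filter. The text does not specify the grouping level at which the mean and standard deviation were computed (for example, per participant and condition); this sketch assumes the 50 ms floor is applied first and that the cutoff is computed from the remaining response times of whatever set is passed in, using the sample standard deviation.

```python
import statistics


def filter_rts(rts, floor_ms=50.0, n_sd=3.0):
    """Drop implausibly fast RTs, then slow outliers above mean + n_sd * SD.

    Assumptions: the floor is applied first, and the mean and sample SD are
    computed on the remaining RTs of the set passed in.
    """
    plausible = [rt for rt in rts if rt >= floor_ms]
    if len(plausible) < 2:
        return plausible  # SD undefined; nothing more to filter
    cutoff = statistics.mean(plausible) + n_sd * statistics.stdev(plausible)
    return [rt for rt in plausible if rt <= cutoff]
```

Because a single extreme value also inflates the SD, the 3 SD rule can only flag an outlier when the set contains enough observations, which is one reason the grouping level matters.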

We used JASP (version 0.17.2.1) to perform a three-factor repeated-measures analysis of variance (RM-ANOVA) on mean response times for each dependent variable (gaze initiation, target seen, and discrimination response). This analysis assessed the effects of our manipulated factors: cue validity (valid/invalid), target location (front/rear), and body position (90°/45°).
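The ANOVA itself was run in JASP; the sketch below only illustrates the preceding step of reducing trial-level response times to one mean per participant and design cell (2 × 2 × 2 = 8 cells), and checking that each participant has every cell, as a repeated-measures ANOVA requires. Field names and level labels are hypothetical.

```python
from collections import defaultdict
from statistics import mean

FACTORS = ("validity", "location", "position")
LEVELS = {"validity": ("valid", "invalid"),
          "location": ("front", "rear"),
          "position": ("90", "45")}


def cell_means(trials):
    """Mean RT per participant and validity x location x position cell.

    trials: iterable of dicts with keys participant, validity, location,
    position, and rt (hypothetical field names).
    """
    buckets = defaultdict(list)
    for t in trials:
        key = (t["participant"],) + tuple(t[f] for f in FACTORS)
        buckets[key].append(t["rt"])
    return {key: mean(values) for key, values in buckets.items()}


def complete_participants(means):
    """Participants with data in all 8 cells."""
    n_cells = 1
    for f in FACTORS:
        n_cells *= len(LEVELS[f])
    counts = defaultdict(int)
    for key in means:
        counts[key[0]] += 1
    return sorted(p for p, n in counts.items() if n == n_cells)
```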

3. Results

3.1. Gaze Initiation

The analysis revealed a main effect of cue validity, as expected: participants had significantly shorter mean response times on valid than on invalid trials. A main effect of target location was also observed, with significantly shorter mean response times when the target appeared in front rather than in the rear. The main effect of body position was not significant.

A significant three-way interaction was observed between cue validity, target location, and body position, showing that the difference in response times between valid and invalid trials (the validity effect) depended on both target location and body position. Descriptive statistics are reported in Table 1.

Table 1.

Descriptive statistics of gaze initiation response times by body position, target location, and cue validity.

Analysis of Cueing Effect

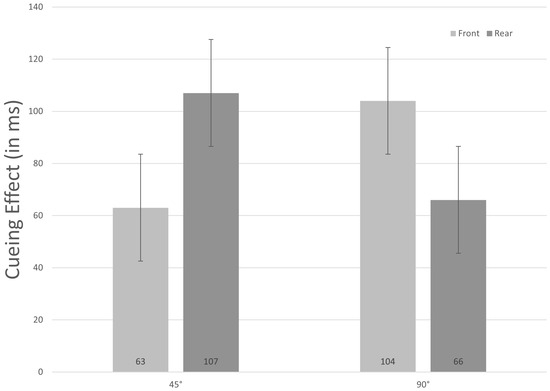

To probe this interaction, we analyzed the cueing effect, defined as the mean response time on invalid trials minus that on valid trials. This analysis showed a significant interaction between target location and body position on the cueing effect. Analysis of the simple effect of target location at each body position showed that, when participants were reclined at 45°, the cueing effect was significantly larger for targets in the rear than for targets in front. Conversely, when participants were seated at 90°, the cueing effect was larger for targets in front than for targets in the rear. Figure 3 illustrates the mean cueing effect for the gaze initiation measure.

Figure 3.

Cueing effect (in milliseconds) for gaze initiation based on target location and body position. A greater effect means more efficient orientation for valid cues and/or higher cost for invalid cues.
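For two-level within-subject factors, the F(1, n − 1) test of an effect in a repeated-measures ANOVA equals the square of a one-sample t-test on the corresponding per-participant difference score. The sketch below applies that equivalence to the cueing-effect analysis. It is not JASP’s implementation; the data layout and names are hypothetical, and JASP’s simple-effects output may differ in detail.

```python
import math
from statistics import mean, stdev


def one_sample_t(scores):
    """t statistic and df for testing whether the mean of scores differs from 0."""
    n = len(scores)
    return mean(scores) / (stdev(scores) / math.sqrt(n)), n - 1


def interaction_contrast(ce):
    """Per-participant location x position contrast on the cueing effect.

    ce maps (location, position) -> invalid minus valid mean RT (ms).
    Positive values: the rear-minus-front difference is larger when reclined.
    """
    return (ce[("rear", "45")] - ce[("front", "45")]) - (ce[("rear", "90")] - ce[("front", "90")])


def simple_effect(ce, position):
    """Rear-minus-front cueing effect at one body position."""
    return ce[("rear", position)] - ce[("front", position)]
```

Squaring the t from `one_sample_t([interaction_contrast(p) for p in participants])` gives the F for the location × position interaction on the cueing effect, which is the same test as the three-way interaction in the full validity × location × position ANOVA.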

3.2. Target Seen

The analysis revealed a pattern of results similar to that for gaze initiation. A main effect of cue validity was observed, with significantly shorter mean response times on valid than on invalid trials. The main effect of target location was also significant, with faster responses to targets in front. However, the three-way interaction between the experimental factors was not significant for this measure.

3.3. Discrimination Response

The analysis revealed a main effect of cue validity, with significantly shorter mean response times on valid than on invalid trials. A main effect of target location was also observed, with significantly shorter mean response times for targets appearing in front rather than in the rear. No other main effects or interactions were significant. Accuracy was not affected by any factor (all p > 0.05), and mean accuracy was 95% correct.

4. Discussion

This study explored whether the body position impacts the orienting of attention between the front and rear space. Using a modified Posner paradigm in a virtual reality setting, participants were either seated at 90° or reclined at 45°. The orientation of participants’ attention was guided by an arrow with 80% validity, with both ocular and motor response times measured.

4.1. Primary Findings and Replication

The results demonstrated an effect of cue validity and target location on all measurements. Participants responded more quickly when the cue accurately indicated the target location, highlighting their ability to efficiently orient their attention using the cue. Moreover, faster reactions occurred when the target appeared directly in front of participants rather than in the rearview mirrors, indicating a forward bias in orienting, consistent with Soret et al. [15].

Interestingly, in contrast to our study and previous work [23], Soret et al. [15] found no significant effects on ocular measures. They suggested that this was because they manipulated cue predictability rather than cue validity. Our results are consistent with this idea: we observed effects on eye movements when cue validity was manipulated, in line with the findings of Soret et al. [23]. However, the second hypothesis of Soret et al. [15], that the absence of an effect on eye responses was due to the added motor response requirement, is not supported by our results. Although our paradigm required both ocular and motor responses, we observed effects of cue validity and target location on both types of measure. This contradicts the hypothesis that a motor response requirement diminishes observable effects on eye responses.

4.2. Novel Interactions and Implications

For gaze initiation, the three-way interaction shows that the difference in response time between valid and invalid trials (the cueing effect) varies with body position and target location, indicating that orienting toward front and rear space differed between the seated and reclined positions. The cueing effect, which indexes the cue’s ability to orient attention, was larger in the front space than in the rear space when participants were seated, suggesting that they oriented their attention more efficiently to the front space in this condition. When participants were reclined at 45°, however, the cueing effect was larger in the rear space than in the front space, suggesting that they oriented their attention more efficiently to the rear space in this position.

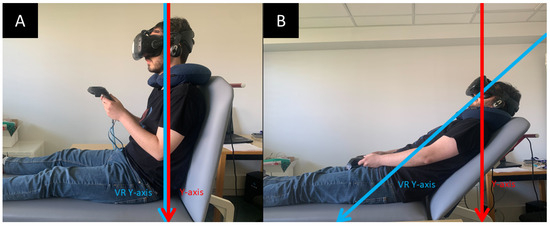

This difference in orienting could result from the interplay between spatial representations in virtual reality and in the real world. When participants are seated (90°), the vertical (Y) axis of the virtual environment matches the real-world vertical axis, so their perception of virtual space aligns with their real-world experience. Conversely, when participants are reclined (45°), the virtual Y-axis is tilted along with the body. As a result, the virtual front space partially overlaps with the real upper space, and the virtual rear space with the real lower space (see Figure 4).

Figure 4.

Experimental configurations illustrating the alignment of participants’ perception in real (red axis) and virtual spaces (blue axis). (A) In the seated position, the orientation of the virtual environment (blue axis) aligns with the upright orientation of the participant, which is also in alignment with the direction of real-world gravity (red axis). (B) In the reclined position, the orientation of the virtual environment (blue axis) is adjusted to align with the body orientation of the participant. However, the direction of real-world gravity (red axis) remains constant and does not align with the virtual environment’s orientation. As a result, a portion of the “front” in the virtual environment overlaps with the space above in the real-world orientation, and a part of the “rear” in the virtual environment overlaps with the space below in the real-world orientation.
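The geometry in Figure 4 can be checked with a short rotation sketch. It assumes a body frame with x pointing right, y up, and z forward, treats reclining as a backward rotation of the body about the x-axis by 90° minus the backrest angle, and asks how far the virtual “front” and “rear” directions point up or down in the real world. These frame conventions are assumptions made for illustration.

```python
import math


def recline_from_backrest(backrest_deg: float) -> float:
    """Backward tilt of the trunk from vertical (a 90 deg backrest is upright)."""
    return 90.0 - backrest_deg


def body_to_world(vec, recline_deg: float):
    """Express a body-frame direction in world coordinates.

    Assumed frames: x = right, y = up, z = forward. Reclining rotates the body
    backwards about the x-axis, so body "up" maps to (0, cos, -sin).
    """
    th = math.radians(recline_deg)
    x, y, z = vec
    return (x,
            y * math.cos(th) + z * math.sin(th),
            -y * math.sin(th) + z * math.cos(th))


VIRTUAL_FRONT = (0.0, 0.0, 1.0)
VIRTUAL_REAR = (0.0, 0.0, -1.0)

# World-vertical component (y) of the virtual front and rear for each posture.
elevation = {
    backrest: (body_to_world(VIRTUAL_FRONT, recline_from_backrest(backrest))[1],
               body_to_world(VIRTUAL_REAR, recline_from_backrest(backrest))[1])
    for backrest in (90.0, 45.0)
}
```

Upright, both directions stay horizontal; at a 45° backrest, the virtual front acquires an upward component of about 0.71 (sin 45°) and the virtual rear an equal downward component, matching the partial overlap described above.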

Previous research has demonstrated that the lower visual field is often associated with more efficient orienting and information processing than the upper visual field [24,25], potentially due to evolutionary adaptations favoring attention to potential threats typically coming from the ground rather than the sky. This bias towards the lower field could explain the results observed when participants were reclined at 45°. Indeed, in this condition, the rear space in the virtual environment partially overlaps with the lower space in the real world, while the front space overlaps with the upper space. As the rear space aligns with the actual lower space, it might be prioritized in this reclined position. Thus, this rearward bias could reflect a downward bias in the real world. These findings underscore the complex interaction between real-world spatial cognition and virtual reality representation, revealing how the alignment of virtual and real-world axes can influence the orienting of spatial attention.

However, the interaction effects we observed were evident only in “gaze initiation” response times, not in “target seen” or “discrimination response” times. This suggests that the effect of body position on orienting may have influenced only the earliest response. Since participants could perform the “target seen” and “discrimination response” actions in either order, the attentional orienting effect may have been captured only in the first recorded response, gaze initiation.

4.3. Limitations and Future Directions

One limitation of the present study is that it did not control the position of the participants’ hands during the experiment. Hands play an important role in the perception of personal space, and their position and movements could potentially influence attentional orienting and the representation of space [26,27,28,29]. For instance, research has shown that a disconnected hand avatar can be integrated into peripersonal space, suggesting that a person’s representation of space can extend beyond their physical body to include virtual body representations [27,28]. Therefore, in the context of virtual reality, the unconstrained position of participants’ hands might have affected attentional orienting. Future studies could control or manipulate the position and representation of the hands in virtual space to further elucidate their role in attentional orienting and spatial perception.

Furthermore, the interpretation of the cueing effect would benefit from including neutral cues in future studies. In our interpretation, we assume that a larger cueing effect reflects a greater processing benefit on valid trials, but it could also reflect an additional processing cost on invalid trials. Neutral cues, which provide no information about where the target will appear, would allow these two possibilities to be distinguished. In the absence of neutral cues, we cannot determine whether the observed difference between valid and invalid trials results from more efficient orienting on valid trials or from an increased cost on invalid trials.
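The standard way a neutral condition separates the two accounts is to split the cueing effect into a benefit (neutral minus valid) and a cost (invalid minus neutral), whose sum is the cueing effect. The sketch below uses hypothetical response times, not data from this study, to show that two quite different benefit/cost profiles can produce the same cueing effect.

```python
def decompose_cueing_effect(rt_valid: float, rt_neutral: float, rt_invalid: float) -> dict:
    """Split the cueing effect into a benefit and a cost relative to neutral cues."""
    benefit = rt_neutral - rt_valid   # speed-up produced by a valid cue
    cost = rt_invalid - rt_neutral    # slow-down produced by an invalid cue
    return {"benefit": benefit, "cost": cost, "cueing_effect": benefit + cost}


# Hypothetical mean RTs (ms): same valid and invalid RTs, different neutral RTs.
mostly_benefit = decompose_cueing_effect(400, 440, 460)
mostly_cost = decompose_cueing_effect(400, 410, 460)
```

Without the neutral condition, only `rt_invalid - rt_valid` is observable, so these two scenarios would be indistinguishable.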

Another limitation of our study is the possibility that motor preparation influences reaction times, particularly with regard to the distinction between reactions to front and rear cues. Our behavioral measurements were recorded prior to any physical movement of the participants to avoid artificially lengthening reaction times, particularly for the rear targets. The turning movement required for rear targets was intended to create situational awareness, enabling participants to correctly associate information perceived in the rearview mirror with a rear origin. However, motor preparation for these movements, even if not executed at the time of recording, may occur between cue and target onset and subtly affect reaction times. This anticipatory preparation to turn around for rear targets could therefore influence the results, so the differences observed between forward and backward orienting may reflect not only attentional capture but also differences in motor preparation between forward and backward orientations. Future studies should explore this hypothesis to better understand the impact of motor preparation on attentional orienting in similar contexts.

Finally, a potential avenue for future research lies in exploring a wider range of body positions. In our study, we restricted our participants to two positions: seated at 90° or reclined at 45°. However, in real-world scenarios, especially in aviation and spaceflight, individuals may experience a vast array of body orientations. It would be interesting to see how these varying orientations might impact attentional orienting and the perception of space. Moreover, it would be valuable to investigate how these findings can be incorporated into training programs to help individuals adapt to different body orientations, particularly in aviation and space contexts where individuals are often required to maintain attentional focus despite unusual body positions.

5. Conclusions

In summary, this research offers new insights into how body position affects the orienting of visual attention: the cueing effect on early eye movements varied with participants’ body position. These findings highlight the need to consider body position when examining attentional orienting, not only in everyday contexts but also in specialized settings.

For example, for airplane pilots, who often need to switch attention rapidly between spatial locations while managing the aircraft’s movement, understanding how body position may influence attention could be crucial. Traditional flight training programs may need to be re-evaluated to incorporate these findings, possibly leading to improved training techniques that help manage cognitive load more effectively [30,31]. Similarly, astronauts adopt unusual body positions in microgravity, which could also influence their visual attention. Findings on how body position affects attentional orienting could inform the design of spacecraft user interfaces and training simulations, helping astronauts adapt to the extreme conditions they experience, particularly when rapid attentional shifts are necessary [22].

Given the specific effects we observed, further research is required to better understand the mechanisms by which body position affects the orienting of visual attention and to explore potential strategies for improving performance.

Author Contributions

Conceptualization, R.S. and V.P.; software, R.S.; validation, V.P.; formal analysis, R.S.; investigation, R.S. and N.P.; data curation, R.S. and N.P.; writing—original draft preparation, R.S. and V.P.; writing—review and editing, R.S. and V.P.; visualization, R.S. and V.P.; supervision, V.P.; project administration, V.P.; funding acquisition, V.P. All authors have read and agreed to the published version of this manuscript.

Funding

This work was supported by the AID/DGA program (ATTARI project).

Institutional Review Board Statement

This study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Institutional Review Board of ISAE-SUPAERO.

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Carrasco, M. Visual attention: The past 25 years. Vis. Res. 2011, 51, 1484–1525.

- Kaushik, P.; Moye, A.; Vugt, M.V.; Roy, P.P. Decoding the cognitive states of attention and distraction in a real-life setting using EEG. Sci. Rep. 2022, 12, 20649.

- Stiegler, M.P.; Tung, A. Cognitive processes in anesthesiology decision making. Anesthesiology 2014, 120, 204–217.

- Azuma, R.; Daily, M.; Furmanski, C. A review of time critical decision making models and human cognitive processes. In Proceedings of the 2006 IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2006; IEEE: Piscataway, NJ, USA, 2006.

- Posner, M.I. Orienting of attention. Q. J. Exp. Psychol. 1980, 32, 3–25.

- Lee, Y.C.; Lee, J.D.; Ng Boyle, L. The interaction of cognitive load and attention-directing cues in driving. Hum. Factors 2009, 51, 271–280.

- Müller-Oehring, E.M.; Schulte, T. Cognition, emotion, and attention. Handb. Clin. Neurol. 2014, 125, 341–354.

- Erel, H.; Levy, D.A. Orienting of visual attention in aging. Neurosci. Biobehav. Rev. 2016, 69, 357–380.

- Posner, M.I.; Cohen, Y. Components of visual orienting. Atten. Perform. Control. Lang. Process. 1984, 32, 531–556.

- Rizzolatti, G.; Riggio, L.; Dascola, I.; Umiltá, C. Reorienting attention across the horizontal and vertical meridians: Evidence in favor of a premotor theory of attention. Neuropsychologia 1987, 25, 31–40.

- Klein, R.M. Inhibition of return. Trends Cogn. Sci. 2000, 4, 138–147.

- Pieri, L.; Tosi, G.; Romano, D. Virtual reality technology in neuropsychological testing: A systematic review. J. Neuropsychol. 2023, 17, 382–399.

- Reggente, N.; Essoe, J.K.Y.; Aghajan, Z.M.; Tavakoli, A.V.; McGuire, J.F.; Suthana, N.A.; Rissman, J. Enhancing the ecological validity of fMRI memory research using virtual reality. Front. Neurosci. 2018, 12, 408.

- Soret, R. Posner Paradigm from Laboratory to Real Life: Attentional Orienting in Front and Rear Space (Paradigme de Posner du Laboratoire au Monde Réel: Orientation de L’attention en Espace Avant et Arrière). Ph.D. Thesis, ISAE-SUPAERO, University of Toulouse, Toulouse, France, 2021.

- Soret, R.; Charras, P.; Hurter, C.; Peysakhovich, V. Attentional Orienting in Front and Rear Spaces in a Virtual Reality Discrimination Task. Vision 2022, 6, 3.

- Bigelow, R.T.; Agrawal, Y. Vestibular involvement in cognition: Visuospatial ability, attention, executive function, and memory. J. Vestib. Res. 2015, 25, 73–89.

- Hanes, D.A.; McCollum, G. Cognitive-vestibular interactions: A review of patient difficulties and possible mechanisms. J. Vestib. Res. 2006, 16, 75–91.

- McAuliffe, J.; Johnson, M.J.; Weaver, B.; Deller-Quinn, M.; Hansen, S. Body position differentially influences responses to exogenous and endogenous cues. Atten. Percept. Psychophys. 2013, 75, 1342–1346.

- Kaliuzhna, M.; Serino, A.; Berger, S.; Blanke, O. Differential effects of vestibular processing on orienting exogenous and endogenous covert visual attention. Exp. Brain Res. 2019, 237, 401–410.

- Stokes, A.F.; Kite, K. Flight Stress: Stress, Fatigue and Performance in Aviation; Routledge: Oxfordshire, UK, 2017.

- Clark, T.K. Effects of spaceflight on the vestibular system. In Handbook of Space Pharmaceuticals; Springer: Berlin/Heidelberg, Germany, 2022; pp. 273–311.

- Oman, C. Spatial orientation and navigation in microgravity. In Spatial Processing in Navigation, Imagery and Perception; Springer: Boston, MA, USA, 2007; pp. 209–247.

- Soret, R.; Charras, P.; Khazar, I.; Hurter, C.; Peysakhovich, V. Eye-tracking and Virtual Reality in 360-degrees: Exploring two ways to assess attentional orienting in rear space. In Proceedings of the ACM Symposium on Eye Tracking Research and Applications, Stuttgart, Germany, 2–5 June 2020; pp. 1–7.

- Danckert, J.; Goodale, M.A. Superior performance for visually guided pointing in the lower visual field. Exp. Brain Res. 2001, 137, 303–308.

- Previc, F.H. Functional specialization in the lower and upper visual fields in humans: Its ecological origins and neurophysiological implications. Behav. Brain Sci. 1990, 13, 519–542.

- Amemiya, T.; Ikei, Y.; Kitazaki, M. Remapping peripersonal space by using foot-sole vibrations without any body movement. Psychol. Sci. 2019, 30, 1522–1532.

- Mine, D.; Yokosawa, K. Remote hand: Hand-centered peripersonal space transfers to a disconnected hand avatar. Atten. Percept. Psychophys. 2021, 83, 3250–3258.

- Mine, D.; Yokosawa, K. Disconnected hand avatar can be integrated into the peripersonal space. Exp. Brain Res. 2021, 239, 237–244.

- Mine, D.; Yokosawa, K. Adaptation to delayed visual feedback of the body movement extends multisensory peripersonal space. Atten. Percept. Psychophys. 2021, 84, 576–582.

- Casner, S.M.; Schooler, J.W. Vigilance impossible: Diligence, distraction, and daydreaming all lead to failures in a practical monitoring task. Conscious. Cogn. 2015, 35, 33–41.

- Wickens, C.D.; McCarley, J.S.; Gutzwiller, R.S. Applied Attention Theory; CRC Press: Boca Raton, FL, USA, 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).