Integrating Knowledge Graphs into Autonomous Vehicle Technologies: A Survey of Current State and Future Directions

Abstract

1. Introduction

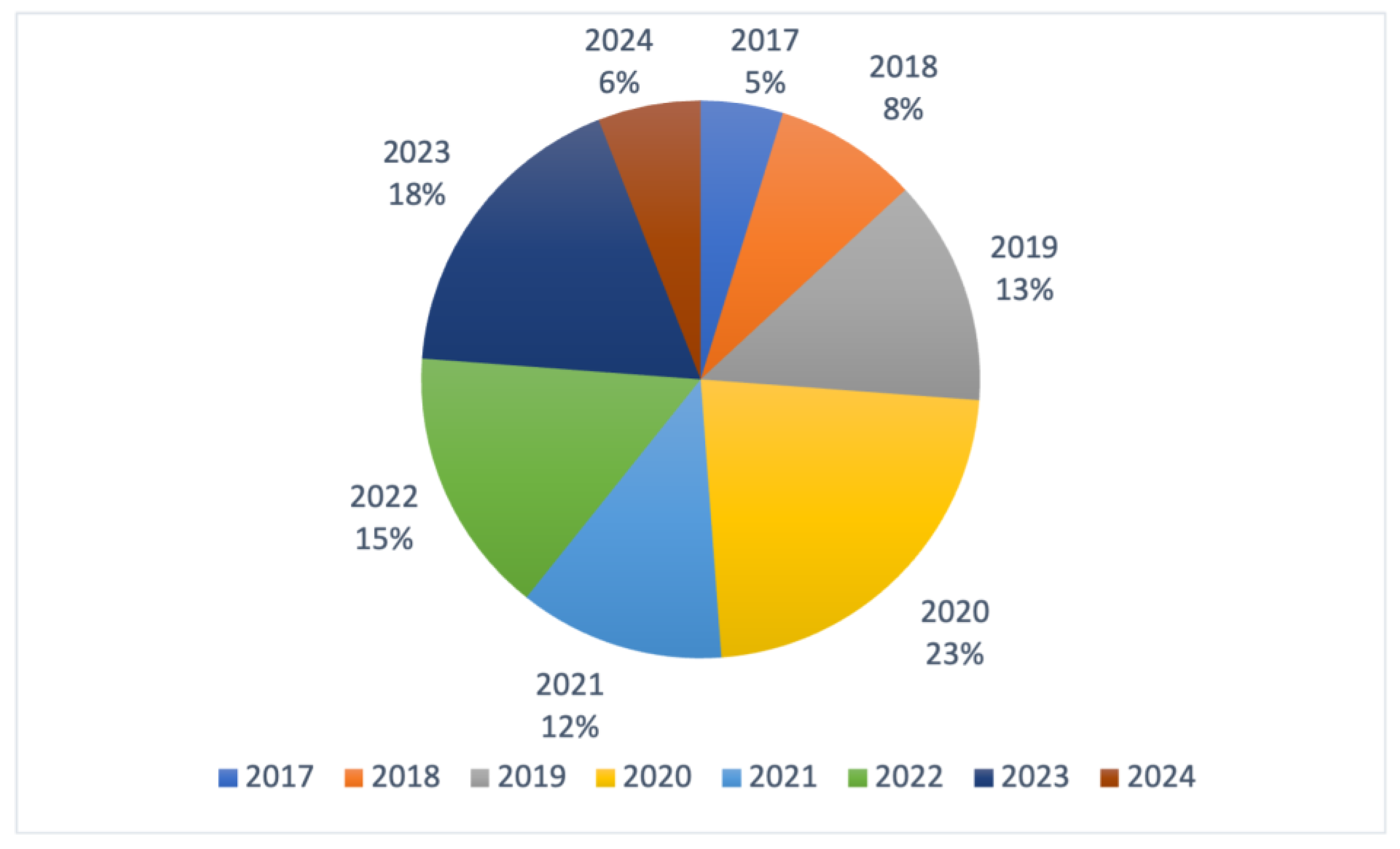

2. Review Strategy

- Relevance: Articles were selected not only for their focus on the integration of KGs with autonomous vehicle technologies but also for their contribution to understanding the background and fundamentals of AV technologies.

- Manual Screening: Abstracts of the identified articles were manually reviewed to assess alignment with the research questions. Only studies that were directly relevant to AV technologies and KG integration or offered valuable insights into AV technologies were retained for further analysis.

- Institutional Expertise: To capture cutting-edge research and institutional expertise, we specifically included sources from Toyota Research Institute, Kanazawa University, The University of Tokyo, and the National Institute of Advanced Industrial Science and Technology (AIST) as part of the filtering process.

- Peer-Review Status: We ensured that the review was based on peer-reviewed sources. Preprint articles from repositories such as arXiv were excluded unless their final, peer-reviewed versions were available. Articles with discrepancies between preprint and published versions were cross-checked, and only the final published versions were retained for analysis.
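The screening criteria above can be read as a simple filter over candidate articles. The following is a minimal sketch only: the `Article` fields, the example titles, and the way the criteria are combined (relevance AND peer-review status, with institution recorded as a tag rather than a hard requirement) are assumptions made for illustration, not a description of the tooling actually used in the review.

```python
from dataclasses import dataclass

# Institutions named in the review strategy; the short labels are illustrative.
FOCUS_INSTITUTIONS = {
    "Toyota Research Institute",
    "Kanazawa University",
    "The University of Tokyo",
    "AIST",
}


@dataclass(frozen=True)
class Article:
    """Hypothetical record produced by manual abstract screening."""
    title: str
    addresses_kg_av_integration: bool  # judged from the abstract
    offers_av_background: bool         # judged from the abstract
    peer_reviewed: bool                # a final published version exists
    institution: str = ""


def passes_screening(article: Article) -> bool:
    """Assumed rule: relevant to the research questions AND peer reviewed."""
    relevant = (
        article.addresses_kg_av_integration or article.offers_av_background
    )
    return relevant and article.peer_reviewed


def from_focus_institution(article: Article) -> bool:
    """Tag articles from the institutions singled out in the strategy."""
    return article.institution in FOCUS_INSTITUTIONS


# Hypothetical candidates, not real bibliography entries.
candidates = [
    Article("KG-based scene understanding", True, False, True,
            "Toyota Research Institute"),
    Article("LiDAR mapping study", False, True, True, "Kanazawa University"),
    Article("Preprint without published version", True, False, False),
    Article("Unrelated robotics paper", False, False, True),
]

retained = [a for a in candidates if passes_screening(a)]
retained_titles = [a.title for a in retained]
focus_titles = [a.title for a in retained if from_focus_institution(a)]
```

Keeping the institution check separate from `passes_screening` reflects one possible reading of the strategy, in which institutional origin guides the search rather than excluding other work.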

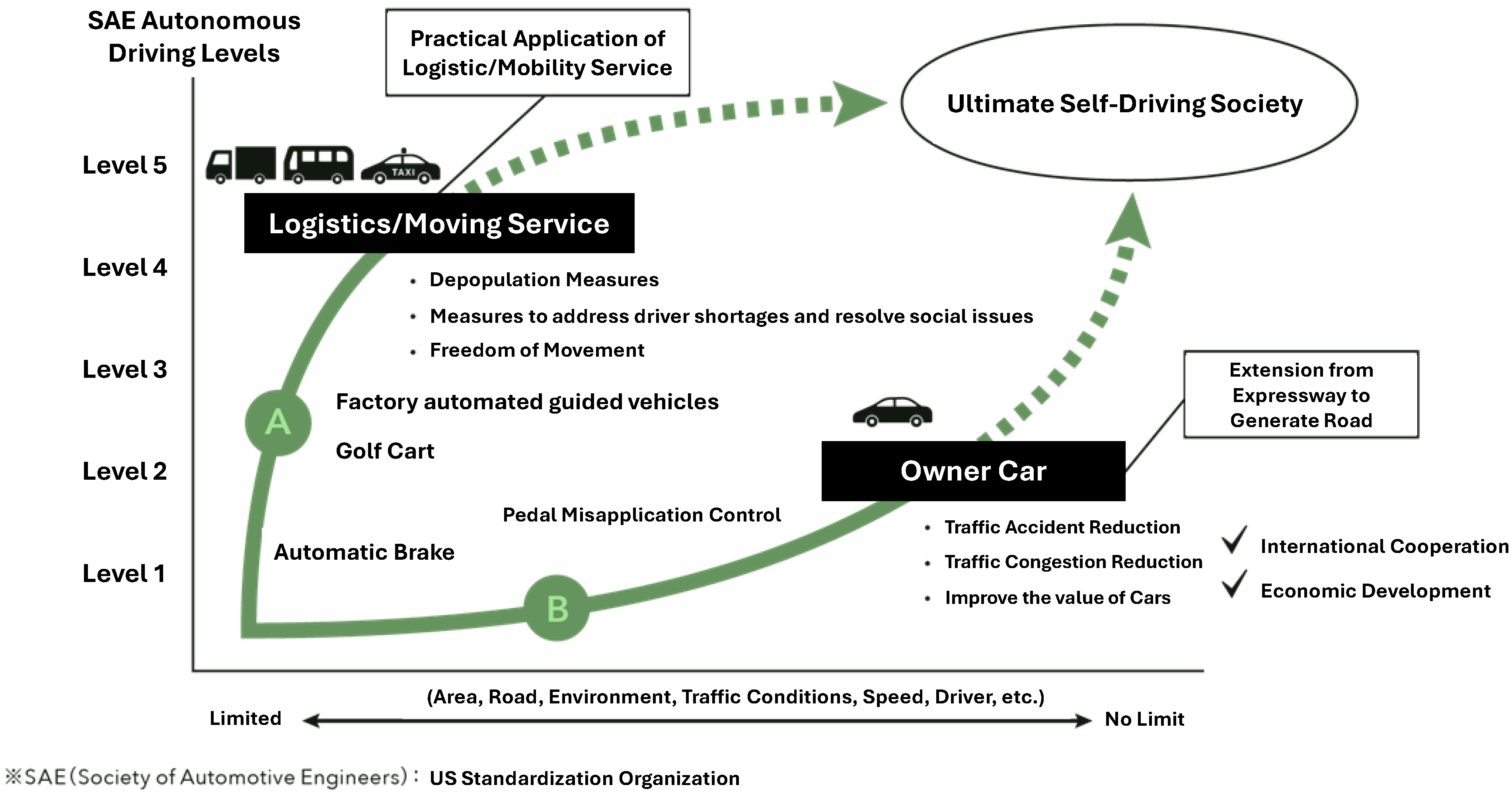

3. Background and Fundamentals

3.1. Perception

3.2. Localization and Mapping

3.3. Path Planning

3.4. Control

3.5. Decision-Making

3.6. Human–Machine Interaction (HMI)

4. Knowledge Graphs and Ontologies

4.1. Knowledge Graphs Integrated into AV Technologies

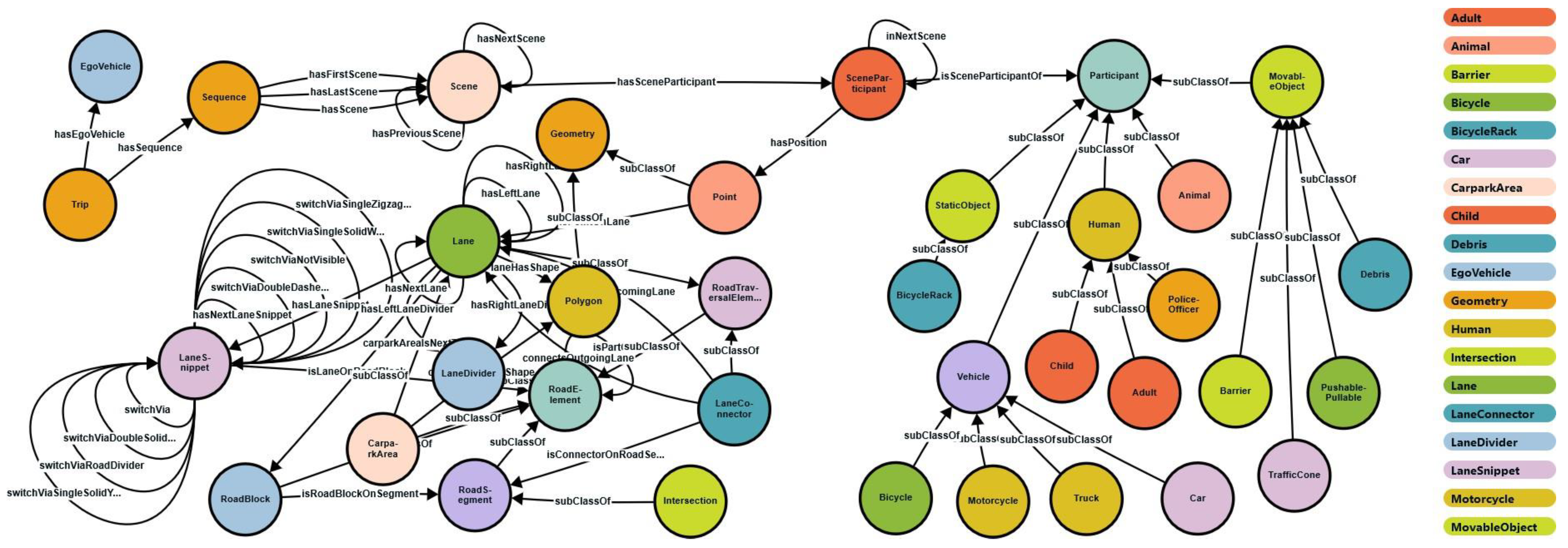

4.1.1. Scene Representation

- Scene: Refers to a snapshot of the environment, including both static and dynamic elements, as well as the self-representations of actors and observers and the relationships among these entities.

- Situation: Represents the complete set of circumstances considered when choosing an appropriate behavioral pattern at a specific moment. It includes all relevant conditions, options, and factors influencing behavior.

- Scenario: Describes the progression over time across multiple scenes, including actions, events, and goals that define this temporal development.

- Observation: The act of carrying out a procedure to estimate or determine the value of a property of a feature of interest.

- Driver: A user with attributes specific to the driving context.

- Profile: A structured representation of user characteristics.

- Preference: A concept used in psychology, economics, and philosophy to describe a choice between alternatives. For instance, a person shows a preference for A over B if they would opt for A rather than B.
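The concepts listed above map naturally onto a graph encoding. As a minimal sketch, assuming a plain subject, predicate, object representation, the example below stores one scene, a two-scene scenario, and a driver profile in a tiny in-memory triple store. All identifiers and predicate names (`scene_001`, `hasParticipant`, `hasPreference`, and so on) are hypothetical and are not taken from any ontology cited in this survey.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


class SceneGraph:
    """Tiny in-memory triple store indexed by subject (illustrative only)."""

    def __init__(self, triples):
        self._by_subject = {}
        for triple in triples:
            self._by_subject.setdefault(triple.subject, []).append(triple)

    def objects(self, subject, predicate):
        """Return objects linked from `subject` via `predicate`, in insertion order."""
        return [
            t.obj
            for t in self._by_subject.get(subject, [])
            if t.predicate == predicate
        ]


# All identifiers and predicate names below are hypothetical.
triples = [
    # Scene: a snapshot with dynamic and static elements and their relations.
    Triple("scene_001", "hasParticipant", "ego_vehicle"),
    Triple("scene_001", "hasParticipant", "pedestrian_1"),
    Triple("scene_001", "hasStaticElement", "crosswalk_1"),
    Triple("pedestrian_1", "isOn", "crosswalk_1"),
    # A later snapshot of the same environment.
    Triple("scene_002", "hasParticipant", "ego_vehicle"),
    # Driver, Profile, and Preference.
    Triple("driver_1", "hasProfile", "profile_1"),
    Triple("profile_1", "hasPreference", "smooth_braking"),
]
graph = SceneGraph(triples)

# Scenario: the progression over time across multiple scenes.
scenario = ["scene_001", "scene_002"]

participants_per_scene = {
    scene: graph.objects(scene, "hasParticipant") for scene in scenario
}
driver_preferences = [
    preference
    for profile in graph.objects("driver_1", "hasProfile")
    for preference in graph.objects(profile, "hasPreference")
]
```

A production system would more likely rely on an RDF store and a published ontology; the dictionary index here only illustrates how scenes, scenarios, and driver profiles can share one graph.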

4.1.2. Object Tracking

4.1.3. Road Sign Detection

4.1.4. Scene Graph Augmented Risk Assessment

4.1.5. Scene Creation

4.1.6. Lane Graph Estimation for Urban Driving

4.2. Challenges and Potential Solutions in Existing Work

4.2.1. Maturity of Semantic Technologies

4.2.2. Knowledge Graph Embeddings (KGE) and Data Preparation

4.2.3. Handling Long Frame Gaps and Occlusions in Tracking

4.2.4. Intersection and Lane Detection Challenges

4.2.5. Expanding Predictive Capabilities and Scalability

4.2.6. Validation of Automated Driving Systems

5. Discussion for Ethical and Practical Considerations in AV Technologies

5.1. Challenges of Knowledge Graphs in AVs

5.2. Ethical Decision-Making in AVs: Moving Beyond Human Analogy

5.3. Addressing Fake Ethics in AVs

5.4. Accountability in AV Decision-Making

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Guizilini, V.C.; Li, J.; Ambrus, R.; Gaidon, A. Geometric Unsupervised Domain Adaptation for Semantic Segmentation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 8517–8527.

- Hou, R.; Li, J.; Bhargava, A.; Raventós, A.; Guizilini, V.C.; Fang, C.; Lynch, J.P.; Gaidon, A. Real-Time Panoptic Segmentation from Dense Detections. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 8520–8529.

- Faisal, A.; Kamruzzaman, M.; Yigitcanlar, T.; Currie, G. Understanding autonomous vehicles: A systematic literature review on capability, impact, planning and policy. J. Transp. Land Use 2019, 12, 45–72.

- Waymo Safety Report; Waymo LLC: Mountain View, CA, USA, 2020.

- 2023 Sustainability Report; Journey to Zero; General Motors (GM): Detroit, MI, USA, 2023.

- Automated Driving Systems; A Vision for Safety; U.S. Department of Transportation, National Highway Traffic Safety Administration (NHTSA): Washington, DC, USA, 2017.

- Ministry of Industrial and Information Technology of the People’s Republic of China. Available online: https://wap.miit.gov.cn/jgsj/zbys/qcgy/art/2022/art_6fae62605ce34907939028daf6021c48.html (accessed on 30 September 2024).

- Society 5.0 and SIP Autonomous Driving. Available online: https://www.sip-adus.go.jp/exhibition/a2.html (accessed on 30 September 2024).

- Automated Driving Safety Evaluation Framework Ver. 3.0; Sectional Committee of AD Safety Evaluation, Automated Driving Subcommittee; Japan Automobile Manufacturers Association, Inc.: Tokyo, Japan, 2022.

- Xu, R.; Xia, X.; Li, J.; Li, H.; Zhang, S.; Tu, Z.; Meng, Z.; Xiang, H.; Dong, X.; Song, R.; et al. V2V4Real: A Real-World Large-Scale Dataset for Vehicle-to-Vehicle Cooperative Perception. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 13712–13722.

- Ogunrinde, I.O.; Bernadin, S. Deep Camera–Radar Fusion with an Attention Framework for Autonomous Vehicle Vision in Foggy Weather Conditions. Sensors 2023, 23, 6255.

- Alaba, S.Y.; Gurbuz, A.C.; Ball, J.E. Emerging Trends in Autonomous Vehicle Perception: Multimodal Fusion for 3D Object Detection. World Electr. Veh. J. 2024, 15, 20.

- Baczmanski, M.; Synoczek, R.; Wasala, M.; Kryjak, T. Detection-segmentation convolutional neural network for autonomous vehicle perception. In Proceedings of the 2023 27th International Conference on Methods and Models in Automation and Robotics (MMAR), Miedzyzdroje, Poland, 22–25 August 2023; pp. 117–122.

- Luettin, J.; Monka, S.; Henson, C.; Halilaj, L. A Survey on Knowledge Graph-Based Methods for Automated Driving. In Knowledge Graphs and Semantic Web. KGSWC 2022. Communications in Computer and Information Science; Villazón-Terrazas, B., Ortiz-Rodriguez, F., Tiwari, S., Sicilia, M.A., Martín-Moncunill, D., Eds.; Springer: Cham, Switzerland, 2022; Volume 1686.

- Rezwana, S.; Lownes, N. Interactions and Behaviors of Pedestrians with Autonomous Vehicles: A Synthesis. Future Transp. 2024, 4, 722–745.

- Sayed, S.A.; Abdel-Hamid, Y.; Hefny, H.A. Artificial intelligence-based traffic flow prediction: A comprehensive review. J. Electr. Syst. Inf. Technol. 2023, 10, 1–42.

- Liu, Q.; Li, X.; Tang, Y.; Gao, X.; Yang, F.; Li, Z. Graph Reinforcement Learning-Based Decision-Making Technology for Connected and Autonomous Vehicles: Framework, Review, and Future Trends. Sensors 2023, 23, 8229.

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A Survey of Autonomous Driving: Common Practices and Emerging Technologies. IEEE Access 2019, 8, 58443–58469.

- Badue, C.S.; Guidolini, R.; Carneiro, R.V.; Azevedo, P.; Cardoso, V.B.; Forechi, A.; Jesus, L.F.; Berriel, R.; Paixão, T.M.; Mutz, F.W.; et al. Self-Driving Cars: A Survey. Expert Syst. Appl. 2021, 165, 113816.

- Zhao, J.; Zhao, W.; Deng, B.; Wang, Z.; Zhang, F.; Zheng, W.; Cao, W.; Nan, J.; Lian, Y.; Burke, A.F. Autonomous driving system: A comprehensive survey. Expert Syst. Appl. 2023, 242, 122836.

- Guizilini, V.C.; Vasiljevic, I.; Chen, D.; Ambrus, R.; Gaidon, A. Towards Zero-Shot Scale-Aware Monocular Depth Estimation. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 9199–9209.

- Irshad, M.; Zakharov, S.; Liu, K.; Guizilini, V.C.; Kollar, T.; Gaidon, A.; Kira, Z.; Ambrus, R. NeO 360: Neural Fields for Sparse View Synthesis of Outdoor Scenes. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 9153–9164.

- Codevilla, F.; Santana, E.; López, A.M.; Gaidon, A. Exploring the Limitations of Behavior Cloning for Autonomous Driving. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9328–9337.

- DeCastro, J.A.; Liebenwein, L.; Vasile, C.I.; Tedrake, R.; Karaman, S.; Rus, D. Counterexample-Guided Safety Contracts for Autonomous Driving. In Proceedings of the Workshop on the Algorithmic Foundations of Robotics, Mérida, Mexico, 9–11 December 2018.

- Alcantarilla, P.F.; Stent, S.; Ros, G.; Arroyo, R.; Gherardi, R. Street-view change detection with deconvolutional networks. Auton. Robot. 2016, 42, 1301–1322.

- Guizilini, V.C.; Li, J.; Ambrus, R.; Pillai, S.; Gaidon, A. Robust Semi-Supervised Monocular Depth Estimation with Reprojected Distances. In Proceedings of the Conference on Robot Learning, Virtual, 16–18 November 2020; Volume 100, pp. 503–512.

- Ambrus, R.; Guizilini, V.C.; Li, J.; Pillai, S.; Gaidon, A. Two Stream Networks for Self-Supervised Ego-Motion Estimation. In Proceedings of the Conference on Robot Learning, Osaka, Japan, 30 October–1 November 2019.

- Kanai, T.; Vasiljevic, I.; Guizilini, V.C.; Gaidon, A.; Ambrus, R. Robust Self-Supervised Extrinsic Self-Calibration. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 1932–1939.

- Lee, K.; Kliemann, M.; Gaidon, A.; Li, J.; Fang, C.; Pillai, S.; Burgard, W. PillarFlow: End-to-end Birds-eye-view Flow Estimation for Autonomous Driving. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 2007–2013.

- Guizilini, V.C.; Ambrus, R.; Pillai, S.; Gaidon, A. 3D Packing for Self-Supervised Monocular Depth Estimation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 2482–2491.

- Manhardt, F.; Kehl, W.; Gaidon, A. ROI-10D: Monocular Lifting of 2D Detection to 6D Pose and Metric Shape. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2064–2073.

- Chiu, H.; Li, J.; Ambrus, R.; Bohg, J. Probabilistic 3D Multi-Modal, Multi-Object Tracking for Autonomous Driving. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 14227–14233.

- Guizilini, V.C.; Senanayake, R.; Ramos, F.T. Dynamic Hilbert Maps: Real-Time Occupancy Predictions in Changing Environments. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 4091–4097.

- Amini, A.; Rosman, G.; Karaman, S.; Rus, D. Variational End-to-End Navigation and Localization. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 8958–8964.

- Koide, K.; Yokozuka, M.; Oishi, S.; Banno, A. Globally Consistent and Tightly Coupled 3D LiDAR Inertial Mapping. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 5622–5628.

- Koide, K.; Oishi, S.; Yokozuka, M.; Banno, A. Tightly Coupled Range Inertial Localization on a 3D Prior Map Based on Sliding Window Factor Graph Optimization. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 1745–1751.

- McAllister, R.T.; Wulfe, B.; Mercat, J.; Ellis, L.; Levine, S.; Gaidon, A. Control-Aware Prediction Objectives for Autonomous Driving. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 1–8.

- Lee, K.; Ros, G.; Li, J.; Gaidon, A. SPIGAN: Privileged Adversarial Learning from Simulation. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.

- Guizilini, V.C.; Hou, R.; Li, J.; Ambrus, R.; Gaidon, A. Semantically-Guided Representation Learning for Self-Supervised Monocular Depth. In Proceedings of the International Conference on Learning Representations (ICLR), Addis Ababa, Ethiopia, 26–30 April 2020; 27 February 2020. Available online: https://openreview.net/pdf?id=ByxT7TNFvH (accessed on 1 August 2024).

- DeCastro, J.A.; Leung, K.; Aréchiga, N.; Pavone, M. Interpretable Policies from Formally-Specified Temporal Properties. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–7.

- Honda, K.; Koide, K.; Yokozuka, M.; Oishi, S.; Banno, A. Generalized LOAM: LiDAR Odometry Estimation With Trainable Local Geometric Features. IEEE Robot. Autom. Lett. 2022, 7, 12459–12466.

- Koide, K.; Yokozuka, M.; Oishi, S.; Banno, A. Globally Consistent 3D LiDAR Mapping With GPU-Accelerated GICP Matching Cost Factors. IEEE Robot. Autom. Lett. 2021, 6, 8591–8598.

- Liu, B.; Adeli, E.; Cao, Z.; Lee, K.; Shenoi, A.; Gaidon, A.; Niebles, J. Spatiotemporal Relationship Reasoning for Pedestrian Intent Prediction. IEEE Robot. Autom. Lett. 2020, 5, 3485–3492.

- Ghaffari Jadidi, M.; Clark, W.; Bloch, A.M.; Eustice, R.M.; Grizzle, J.W. Continuous Direct Sparse Visual Odometry from RGB-D Images. In Proceedings of the Robotics: Science and Systems (RSS), Freiburg im Breisgau, Germany, 22–26 June 2019; Available online: https://www.roboticsproceedings.org/rss15/p44.pdf (accessed on 1 August 2024).

- Cao, Z.; Biyik, E.; Wang, W.Z.; Raventós, A.; Gaidon, A.; Rosman, G.; Sadigh, D. Reinforcement Learning based Control of Imitative Policies for Near-Accident Driving. In Proceedings of the Robotics: Science and Systems (RSS), Virtual, 12–16 July 2020.

- Gideon, J.; Stent, S.; Fletcher, L. A Multi-Camera Deep Neural Network for Detecting Elevated Alertness in Drivers. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 2931–2935.

- Mangalam, K.; Adeli, E.; Lee, K.; Gaidon, A.; Niebles, J. Disentangling Human Dynamics for Pedestrian Locomotion Forecasting with Noisy Supervision. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020; pp. 2773–2782.

- Aldibaja, M.; Suganuma, N.; Yoneda, K.; Yanase, R. Challenging Environments for Precise Mapping Using GNSS/INS-RTK Systems: Reasons and Analysis. Remote Sens. 2022, 14, 4058.

- Aldibaja, M.; Suganuma, N. Graph SLAM-Based 2.5D LIDAR Mapping Module for Autonomous Vehicles. Remote Sens. 2021, 13, 5066.

- Aldibaja, M.; Suganuma, N.; Yanase, R. 2.5D Layered Sub-Image LIDAR Maps for Autonomous Driving in Multilevel Environments. Remote Sens. 2022, 14, 5847.

- Yoneda, K.; Kuramoto, A.; Suganuma, N.; Asaka, T.; Aldibaja, M.; Yanase, R. Robust Traffic Light and Arrow Detection Using Digital Map with Spatial Prior Information for Automated Driving. Sensors 2020, 20, 1181.

- Yanase, R.; Hirano, D.; Aldibaja, M.; Yoneda, K.; Suganuma, N. LiDAR- and Radar-Based Robust Vehicle Localization with Confidence Estimation of Matching Results. Sensors 2022, 22, 3545.

- Aldibaja, M.; Yanase, R.; Suganuma, N. Waypoint Transfer Module between Autonomous Driving Maps Based on LiDAR Directional Sub-Images. Sensors 2024, 24, 875.

- Yan, Z.; Yang, B.; Wang, Z.; Nakano, K. A Predictive Model of a Driver’s Target Trajectory Based on Estimated Driving Behaviors. Sensors 2023, 23, 1405.

- Nacpil, E.J.; Nakano, K. Surface Electromyography-Controlled Automobile Steering Assistance. Sensors 2020, 20, 809.

- Yoneda, K.; Suganuma, N.; Yanase, R.; Aldibaja, M. Automated driving recognition technologies for adverse weather conditions. IATSS Res. 2019, 43, 253–262.

- Aldibaja, M.; Suganuma, N.; Yoneda, K.; Yanase, R.; Kuramoto, A. Supervised Calibration Method for Improving Contrast and Intensity of LIDAR Laser Beams. In Proceedings of the International Conference on Multisensor Fusion and Integration for Intelligent Systems, Daegu, South Korea, 16–18 November 2017.

- Yoneda, K.; Kuramoto, A.; Suganuma, N. Convolutional neural network based vehicle turn signal recognition. In Proceedings of the International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Okinawa, Japan, 24–26 November 2017; pp. 204–205.

- Cheng, S.; Wang, Z.; Yang, B.; Li, L.; Nakano, K. Quantitative Evaluation Methodology for Chassis-Domain Dynamics Performance of Automated Vehicles. IEEE Trans. Cybern. 2022, 53, 5938–5948.

- Hou, L.; Li, S.E.; Yang, B.; Wang, Z.; Nakano, K. Structural Transformer Improves Speed-Accuracy Trade-Off in Interactive Trajectory Prediction of Multiple Surrounding Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 24778–24790.

- Cheng, S.; Yang, B.; Wang, Z.; Nakano, K. Spatio-Temporal Image Representation and Deep-Learning-Based Decision Framework for Automated Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 24866–24875.

- Huang, C.; Yang, B.; Nakano, K. Impact of duration of monitoring before takeover request on takeover time with insights into eye tracking data. Accid. Anal. Prev. 2023, 185, 107018.

- Shimizu, T.; Koide, K.; Oishi, S.; Yokozuka, M.; Banno, A.; Shino, M. Sensor-independent Pedestrian Detection for Personal Mobility Vehicles in Walking Space Using Dataset Generated by Simulation. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 1788–1795.

- Wang, Z.; Guan, M.; Lan, J.; Yang, B.; Kaizuka, T.; Taki, J.; Nakano, K. Classification of Automated Lane-Change Styles by Modeling and Analyzing Truck Driver Behavior: A Driving Simulator Study. IEEE Open J. Intell. Transp. Syst. 2022, 3, 772–785.

- Hogan, A.; Blomqvist, E.; Cochez, M.; d’Amato, C.; de Melo, G.; Gutierrez, C.; Gayo, J.E.; Kirrane, S.; Neumaier, S.; Polleres, A.; et al. Knowledge Graphs. ACM Comput. Surv. (CSUR) 2020, 54, 1–37.

- Cai, H.; Zheng, V.W.; Chang, K.C. A Comprehensive Survey of Graph Embedding: Problems, Techniques, and Applications. IEEE Trans. Knowl. Data Eng. 2017, 30, 1616–1637.

- Ji, S.; Pan, S.; Cambria, E.; Marttinen, P.; Yu, P.S. A Survey on Knowledge Graphs: Representation, Acquisition, and Applications. IEEE Trans. Neural Netw. Learn. Syst. 2020, 33, 494–514.

- Lilis, Y.; Zidianakis, E.; Partarakis, N.; Antona, M.; Stephanidis, C. Personalizing HMI Elements in ADAS Using Ontology Meta-Models and Rule Based Reasoning. In Universal Access in Human–Computer Interaction. Design and Development Approaches and Methods, Proceedings of the 11th International Conference, UAHCI 2017, Held as Part of HCI International 2017, Vancouver, BC, Canada, 9–14 July 2017; Springer: Cham, Switzerland, 2017.

- Velickovic, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio’, P.; Bengio, Y. Graph Attention Networks. In Proceedings of the 6th International Conference on Learning Representations (ICLR), Conference Track Proceedings, Vancouver, BC, Canada, 30 April–3 May 2018.

- Monka, S.; Halilaj, L.; Rettinger, A. A Survey on Visual Transfer Learning using Knowledge Graphs. Semant. Web 2022, 13, 477–510.

- Halilaj, L.; Dindorkar, I.; Lüttin, J.; Rothermel, S. A Knowledge Graph-Based Approach for Situation Comprehension in Driving Scenarios. In Proceedings of the Extended Semantic Web Conference, Heraklion, Greece, 6–10 June 2021.

- Malawade, A.V.; Yu, S.Y.; Hsu, B.; Kaeley, H.; Karra, A.; Faruque, M.A. roadscene2vec: A Tool for Extracting and Embedding Road Scene-Graphs. Knowl. Based Syst. 2021, 242, 108245.

- Zipfl, M.; Zöllner, J.M. Towards Traffic Scene Description: The Semantic Scene Graph. In Proceedings of the 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), Macau, China, 8–12 October 2022; pp. 3748–3755.

- Mlodzian, L.; Sun, Z.; Berkemeyer, H.; Monka, S.; Wang, Z.; Dietze, S.; Halilaj, L.; Luettin, J. nuScenes Knowledge Graph—A comprehensive semantic representation of traffic scenes for trajectory prediction. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Paris, France, 2–6 October 2023; pp. 42–52.

- Zaech, J.; Dai, D.; Liniger, A.; Danelljan, M.; Gool, L.V. Learnable Online Graph Representations for 3D Multi-Object Tracking. IEEE Robot. Autom. Lett. 2022, 7, 5103–5110.

- Kim, J.E.; Henson, C.; Huang, K.; Tran, T.A.; Lin, W.Y. Accelerating Road Sign Ground Truth Construction with Knowledge Graph and Machine Learning. In Intelligent Computing. Lecture Notes in Networks and Systems; Arai, K., Ed.; Springer: Cham, Switzerland, 2021; Volume 284.

- Yu, S.Y.; Malawade, A.V.; Muthirayan, D.; Khargonekar, P.P.; Faruque, M.A. Scene-Graph Augmented Data-Driven Risk Assessment of Autonomous Vehicle Decisions. IEEE Trans. Intell. Transp. Syst. 2020, 23, 7941–7951.

- Bagschik, G.; Menzel, T.; Maurer, M. Ontology based Scene Creation for the Development of Automated Vehicles. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Suzhou, China, 26–30 June 2018; pp. 1813–1820.

- Urbieta, I.R.; Nieto, M.; García, M.; Otaegui, O. Design and Implementation of an Ontology for Semantic Labeling and Testing: Automotive Global Ontology (AGO). Appl. Sci. 2021, 11, 7782.

- Zürn, J.; Vertens, J.; Burgard, W. Lane Graph Estimation for Scene Understanding in Urban Driving. IEEE Robot. Autom. Lett. 2021, 6, 8615–8622.

- Li, T.; Chen, L.; Geng, X.; Wang, H.; Li, Y.; Liu, Z.; Jiang, S.; Wang, Y.; Xu, H.; Xu, C.; et al. Graph-based Topology Reasoning for Driving Scenes. arXiv 2023, arXiv:2304.05277.

- Wickramarachchi, R.; Henson, C.A.; Sheth, A. An Evaluation of Knowledge Graph Embeddings for Autonomous Driving Data: Experience and Practice. In Proceedings of the AAAI Spring Symposium Combining Machine Learning with Knowledge Engineering, Palo Alto, CA, USA, 23–25 March 2020.

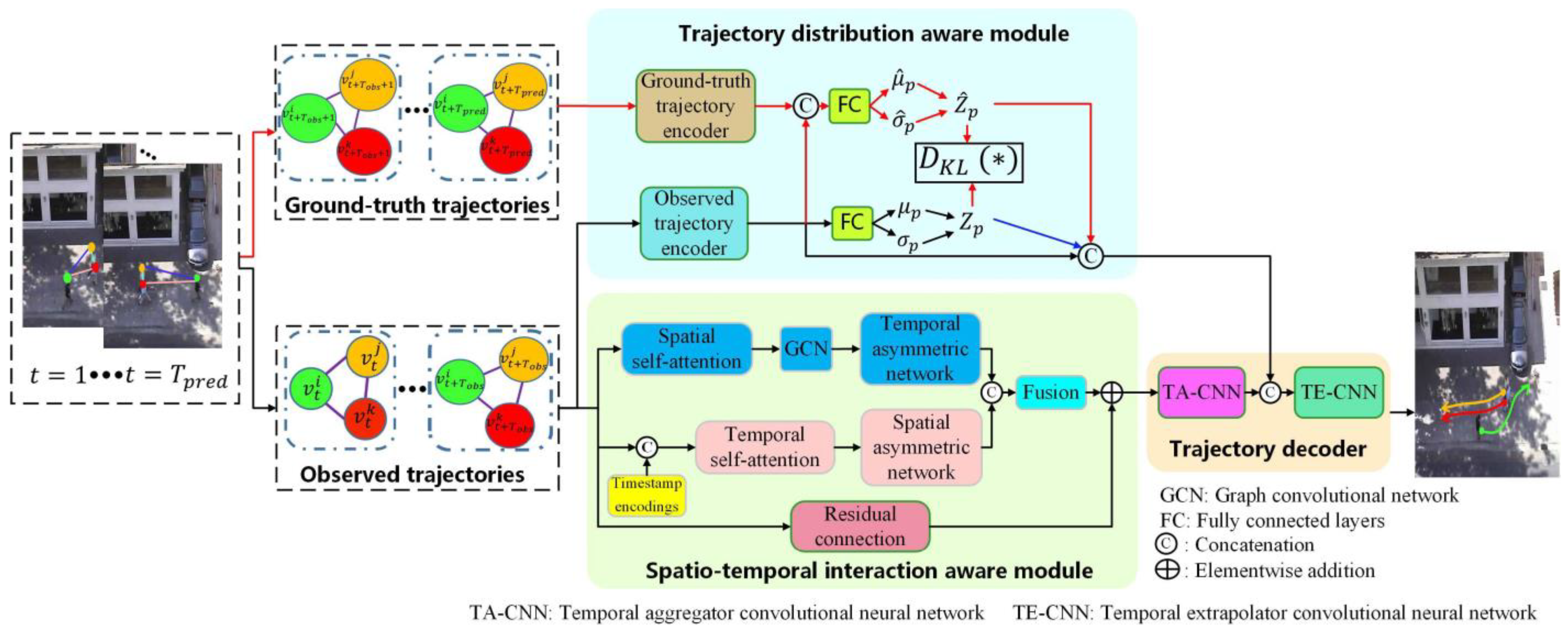

- Wang, R.; Song, X.; Hu, Z.; Cui, Y. Spatio-Temporal Interaction Aware and Trajectory Distribution Aware Graph Convolution Network for Pedestrian Multimodal Trajectory Prediction. IEEE Trans. Instrum. Meas. 2023, 72, 1–11.

- Sun, Z.; Wang, Z.; Halilaj, L.; Luettin, J. SemanticFormer: Holistic and Semantic Traffic Scene Representation for Trajectory Prediction Using Knowledge Graphs. IEEE Robot. Autom. Lett. 2024, 9, 7381–7388.

- Sun, R.; Lingrand, D.; Precioso, F. Exploring the Road Graph in Trajectory Forecasting for Autonomous Driving. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Paris, France, 2–6 October 2023; pp. 71–80.

- Herkert, J.; Borenstein, J.; Miller, K. The Boeing 737 MAX: Lessons for Engineering Ethics. Sci. Eng. Ethics 2020, 26, 2957–2974.

| Survey Coverage | [18] | [19] | [20] | [14] | Ours |

|---|---|---|---|---|---|

| Perception | √ | √ | √ | √ | √ |

| Localization | √ | √ | √ | √ | √ |

| Mapping | √ | √ | √ | √ | √ |

| Moving Object Detection and Tracking | √ | √ | √ | √ | √ |

| Traffic Signalization Detection | √ | ||||

| Path Planning | √ | √ | √ | ||

| Behavior Selection | √ | ||||

| Motion Planning | √ | √ | √ | ||

| Obstacle Avoidance | √ | ||||

| Control | √ | √ | |||

| Sensors and Hardware | √ | ||||

| Road and Lane Detection | √ | ||||

| Assessment | √ | ||||

| Decision-Making | √ | √ | √ | √ | √ |

| Human–Machine Interaction | √ | √ | |||

| Datasets and Tools | √ | ||||

| Semantic Segmentation | √ | ||||

| Trajectory Prediction | √ | ||||

| Simulator and Scenario Generation | √ | √ | |||

| KGs Applied to AVs | √ | √ | |||

| √ | ||||

| √ | √ | |||

| √ | ||||

| √ | √ | |||

| √ | ||||

| √ | ||||

| √ | ||||

| √ | ||||

| √ | ||||

| √ | ||||

| √ | ||||

| √ | ||||

| Current Challenges and Limitations | √ | √ | |||

| Future Directions | √ | ||||

| Development in Industry | √ | ||||

| Ethical and Practical Considerations in AV Technologies | √ |

| Research Question | Focus |
|---|---|
| What are the key applications in AV technologies and KGs? | Application |
| Which aspects of KG integration does the article address? | Contribution |
| What methods does the article discuss for integrating KGs with AV systems? | Methodology |
| What are the limitations of AVs, and what future research does the article suggest? | Limitations and Future Work |

| Conferences/Journals | Publisher | References/Published Year |
|---|---|---|
| IEEE/CVF International Conference on Computer Vision (ICCV) | IEEE | [1] 2021, [21] 2023, [22] 2023, [23] 2019 |
| Workshop on the Algorithmic Foundations of Robotics | Springer | [24] 2018 |
| Autonomous Robots | Springer | [25] 2016 |
| Conference on Robot Learning (CoRL) | PMLR | [26] 2020, [27] 2019 |
| IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) | IEEE | [28] 2023, [29] 2020 |
| IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) | IEEE | [2] 2020, [10] 2023, [30] 2019, [31] 2018 |
| International Conference on Robotics and Automation (ICRA) | IEEE | [32] 2020, [33] 2019, [34] 2018, [35] 2022, [36] 2024, [37] 2022 |
| International Conference on Learning Representations (ICLR) | ICLR | [38] 2018, [39] 2020 |
| IEEE International Conference on Intelligent Transportation Systems (ITSC) | IEEE | [40] 2020 |
| IEEE Robotics and Automation Letters | IEEE | [41] 2022, [42] 2021, [43] 2020 |
| Robotics: Science and Systems (RSS) | MIT Press | [44] 2019, [45] 2020 |
| IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) | IEEE | [46] 2018 |
| IEEE Winter Conference on Applications of Computer Vision (WACV) | IEEE | [47] 2019 |
| Remote Sensing | MDPI | [48] 2022, [49] 2021, [50] 2022 |
| Sensors (Basel, Switzerland) | MDPI | [11] 2023, [17] 2023, [51] 2020, [52] 2022, [53] 2024, [54] 2023, [55] 2020 |
| International Association of Traffic and Safety Sciences (IATSS) | Elsevier | [56] 2019 |
| International Conference on Multisensor Fusion and Integration for Intelligent Systems | IEEE | [57] 2017 |
| International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS) | IEEE | [58] 2017 |
| IEEE Transactions on Cybernetics | IEEE | [59] 2022 |
| IEEE Transactions on Intelligent Transportation Systems | IEEE | [60] 2022, [61] 2022 |
| Accident Analysis and Prevention | Elsevier | [62] 2023 |
| International Conference on Pattern Recognition (ICPR) | IEEE | [63] 2020 |

| Scholar | Research Focus | No. of Published Papers | Conferences/Journals |
|---|---|---|---|
| V.C. Guizilini | Semantic segmentation [1,2,39]; Monocular Depth Estimation [21,26,30,39]; Sparse View Synthesis [22]; Calibration [28]; Ego-Motion Estimation [27]; Occupancy Prediction [33] | 10 | ICCV, IROS, ICLR, CVPR, CoRL, ICRA |
| A. Gaidon | Semantic segmentation [1,2,39]; Monocular Depth Estimation [21,26,30,39]; Sparse View Synthesis [22]; Calibration [28]; Object Detection [31]; Flow Estimation [29]; Ego-Motion Estimation [27]; Occupancy Prediction [33]; Sparse Visual Odometry [44]; Pedestrian Locomotion Forecasting [47]; Near-Accident Driving [45]; Behavior Cloning [23]; Pedestrian Intent Prediction [43] | 18 | ICCV, IROS, ICLR, CVPR, CoRL, ICRA, IEEE Robotics and Automation Letters, RSS, WACV |
| R. Ambrus | Semantic segmentation [1,39]; Monocular Depth Estimation [21,26,30,39]; Sparse View Synthesis [22]; Calibration [28]; Multi-Object Tracking [32]; Ego-Motion Estimation [27] | 9 | ICCV, CoRL, IROS, ICRA, ICLR, CVPR |

| Key Component | Popularity | Reasons |
|---|---|---|
| Sensors and perception systems | Very high | High research volume on sensor accuracy and data processing. |
| Localization and mapping | High | Significant focus on SLAM and GPS-based localization. |
| Path planning | High | Advancements in planning algorithms for efficient AV navigation. |
| Control systems | Moderate to high | Ongoing research in control mechanisms integral to AV operation. |
| Decision-making | High | Significant focus on machine learning and AI-based decision-making. |
| Human–machine interface (HMI) | Moderate | Increasing considerations for user experience. |
| Communication systems | Moderate | Growing interest in 5G and V2X technologies. |
| Safety and redundancy | Moderate to high | Substantial interest in AV reliability and public acceptance. |
| Ethical and legal considerations | Moderate | Rising importance due to regulatory and societal impact. |
| Societal impact and infrastructure | Moderate | Long-term AV integration amid growing policy discussions. |

| Key Component | Research Focus |
|---|---|
| Perception | Segmentation [1,2,38]; Street-View Change Detection [25]; Monocular Depth Estimation [21,26,30,39]; Sparse View Synthesis [22]; Calibration [28,57]; Multi-Object Tracking [32]; Object Detection [31]; Flow Estimation [29]; Ego-Motion Estimation [27]; Occupancy Prediction [33]; Visual Odometry and Image Registration [44]; Driver Alertness Detection [46]; Pedestrian Locomotion Prediction [47,63]; Traffic Lights and Arrow Detection [51]; Interpreting Environmental Conditions [56]; Recognition and Matching Road Surface Features [52]; Turn Signal Recognition [58]; Gaze Tracking [62]; Spatio-Temporal Image Representation [61] |
| Localization and Mapping | Updating and Maintaining Maps [25]; Depth-Aware Map [26]; Multi-Camera Maps [28]; Ego-Motion Estimation [27]; Creating and Updating Occupancy Maps [33]; Visual Odometry [41,44]; Probabilistic Localization [34]; Mapping with GNSS/INS-RTK [48]; Transferring Lane Graphs and Different Map Representation [53]; Generating 2.5D maps using LIDAR and Graph SLAM [49]; 2.5D Maps for Multilevel Environments and Vehicle Localization [50]; Map Generation and Localization [57]; 3D LiDAR Mapping and Location [35]; 3D Mapping [36,42] |
| Path Planning | Flow Estimation [29]; Point-to-Point Navigation [34]; Control-Aware Prediction [37]; Planning Near-Accident Driving Scenarios [45]; Safety Trajectory Generation [58]; Driver’s Target Trajectory [54]; Interactive Trajectory Prediction [60]; Lane-Change Styles Classification [64] |
| Control | Generating Control Commands [34]; Control-Aware Prediction [37]; Controlling Vehicle’s Actions [45]; Automated Lane-Change Control [64]; Safety Verification [24]; Interpretable Policies [40]; Behavior Cloning [23]; Chassis Performance [59]; Controlling Vehicle Steering [55] |
| Decision-Making | Weather Conditions in Decision-Making [56]; Predicted Probability Distribution [34]; Control-Aware Prediction [37]; Switching Between Different Driving Modes [45]; Decision-Making on Predicted Driver’s Behavior [58]; Driver’s Target Trajectory [54]; Decision-Making on Lane-Changes [64]; Interpretable Policies [40]; Behavior Cloning [23]; Chassis Performance [59]; Decision-Based Dynamic Traffic Conditions [61]; Pedestrian Intent Prediction [43]; Monitoring Duration on Takeover Time [62] |
| Human–Machine Interaction (HMI) | Sparse View Synthesis and Scene Visualization [22]; Real-Time Feedback based on Driver State [46]; Driving Simulation and User Interaction [64]; Controlling Vehicle Steering [55]; Monitoring Duration and Eye Tracking [62] |

| Sensor Type | Placement in Automated Car | Example Use Cases |
|---|---|---|
| Cameras | Front, sides, rear, roof | Lane-keeping, pedestrian detection, object recognition |
| LiDAR¹ | Roof, bumpers, sides | 3D object detection, terrain mapping, localization |
| Radar² | Front and rear bumpers | Adaptive cruise control, collision detection |
| Ultrasonic Sensors | Front and rear bumpers, sides | Parking assistance, close-range obstacle detection |
| GPS | Roof, dashboard | Route planning, navigation, localization |
| IMU | Integrated in-vehicle systems | Stabilization, motion tracking, localization |
| Odometry Sensors | Wheels or chassis | Localization, motion planning, distance tracking |
| V2X³ Sensors | Roof, exterior antennas | Traffic management, safety alerts, cooperative driving |
| Infrared (IR) Sensors | Front bumper, roof | Night vision, obstacle detection in low visibility |
| Magnetic Sensors | Bottom of the vehicle | Lane-keeping in autonomous shuttles |
| Barometric Pressure Sensors | Inside vehicle sensor suite | Altitude measurement, terrain planning |
| Laser Rangefinders | Front and rear of the vehicle | Object detection, parking assistance |
| Proximity Sensors | Front and rear bumpers | Parking, collision avoidance |
| Environmental Sensors | Exterior, often on the roof | Adjusting driving in response to weather |

| Research Focus | Approach | Key Contributions |

|---|---|---|

| Scene Representation | CoSI [71] | Integrates heterogeneous sources into a unified KG structure for situation classification, difficulty assessment, and trajectory prediction using GNN architecture. |

| roadscene2vec [72] | Generates scene graphs for risk assessment, collision prediction, and model explainability. | |

| Semantic Scene Graph [73] | Captures traffic participants’ interactions and relative positions. | |

| nSKG [74] | Represents scene participants and road elements, including semantic and spatial relationships. | |

| Object Tracking | 3D multi-object tracking [75] | Graph structures integrate detection and track states to improve 3D multi-object tracking accuracy and stability. |

| Road Sign Detection | KGs with VPE [76] | Combines KGs with variational prototyping encoder (VPE) for improved road sign classification and accurate annotation. |

| Scene Graph-Augmented Risk Assessment | Scene graph sequence [77] | Scene graphs with multi-relation GCN, LSTM, and attention layers assess driving maneuver risks, improving object recognition and scene comprehension. |

| Scene Creation | Ontologies [78] | Ontologies model expert knowledge for generating diverse traffic scenes and enhancing scenario creation for AV testing. |

| AGO [79] | Automotive global ontology (AGO) as a knowledge organization system (KOS) for semantic labeling and scenario-based testing. | |

| Lane Graph Estimation | LaneGraphNet [80] | Estimates lane geometry from BEV images by framing it as a graph estimation problem. |

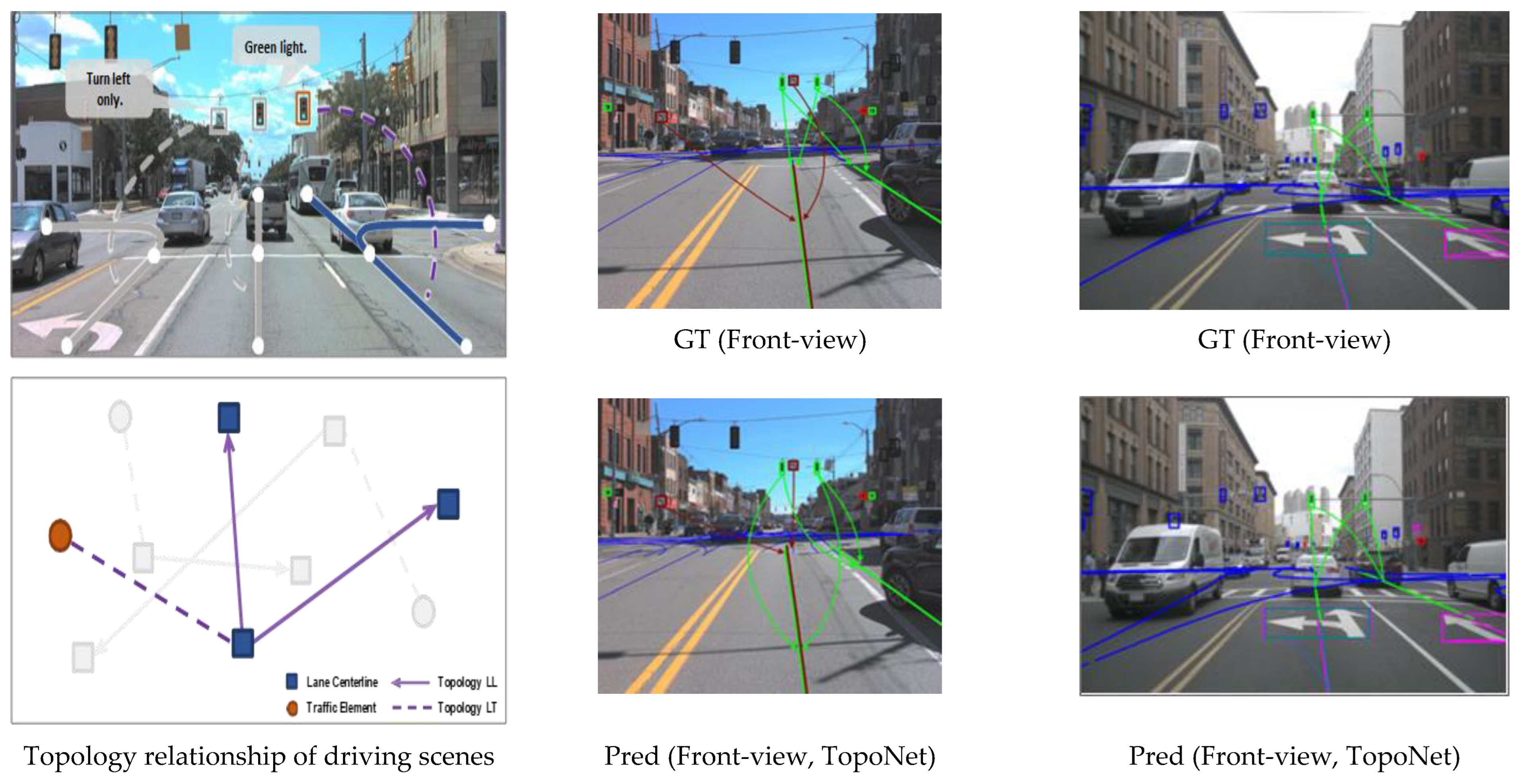

| TopoNet [81] | Uses a scene graph neural network to model relationships in driving scenes, understanding traffic element connections. |
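The scene-representation approaches in the table above all encode a driving scene as entities connected by typed relations. As a minimal sketch of that idea only, the snippet below stores a scene snapshot as (subject, relation, object) triples and runs one simple query over it. The entity names, relation names, and helper functions are hypothetical and are not taken from CoSI, roadscene2vec, nSKG, or any other cited system.

```python
from collections import defaultdict

# Hypothetical scene snapshot as (subject, relation, object) triples.
# All names are illustrative assumptions, not the schema of any cited work.
TRIPLES = [
    ("ego_vehicle", "isIn", "lane_1"),
    ("car_7", "isIn", "lane_1"),
    ("car_7", "inFrontOf", "ego_vehicle"),
    ("pedestrian_3", "near", "crosswalk_2"),
    ("crosswalk_2", "intersects", "lane_1"),
]


def build_scene_graph(triples):
    """Index triples as subject -> list of (relation, object) edges."""
    graph = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return graph


def participants_in_lane(graph, lane):
    """Return, sorted, every entity with an 'isIn' edge to the given lane."""
    return sorted(
        subject
        for subject, edges in graph.items()
        if ("isIn", lane) in edges
    )


scene = build_scene_graph(TRIPLES)
print(participants_in_lane(scene, "lane_1"))  # ['car_7', 'ego_vehicle']
```

A production system would more likely use an RDF store or a graph neural network over such a structure; the plain dictionary here is chosen only to keep the sketch self-contained.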

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Htun, S.N.N.; Fukuda, K. Integrating Knowledge Graphs into Autonomous Vehicle Technologies: A Survey of Current State and Future Directions. Information 2024, 15, 645. https://doi.org/10.3390/info15100645
