1. Introduction

In today’s continually developing world, demand for bespoke products has surged. A traditional factory (TF) setup cannot support customisable and autonomous roles using conventional resources [1]. Conventional manufacturing cannot produce personalised and small-lot items economically and efficiently because it is unable to monitor and regulate automated and complex operations. Consequently, the challenges posed by rapidly evolving technologies are beyond the reach of conventional manufacturing [2]. The advent of Industry 4.0 has transformed the industrial sector by bringing automation and digitisation in smart factories to the fore. A fundamental component of smart factories is the integration of cutting-edge technologies, such as big data analytics, digital information and predictive analytics, which provides opportunities for improved production and operational efficiency [3].

Robots have historically been employed in industrial processes to carry out laborious, precise and repetitive tasks. However, as technology has advanced, researchers have begun to merge human skills, decision-making and critical thinking with the strength, repeatability and accuracy of robots to build intelligent systems able to execute complex tasks [4]. As automation technologies are progressively adopted in industrial setups, robots need to coexist with humans to achieve the desired levels of efficiency, quality and customisation [5]. A practical solution is human–robot collaboration (HRC), in which humans and robots work together and their respective capabilities are combined to maximise productivity and achieve the required level of automation. The inclusion of HRC in manufacturing setups is therefore changing the conventional approach of using primitive robots [6]. To adapt to variable consumer needs and the demand for bespoke products, manufacturers are upgrading their workplaces. HRC strategies with adaptable solutions can substantially boost productivity and efficiency in smart manufacturing setups [7].

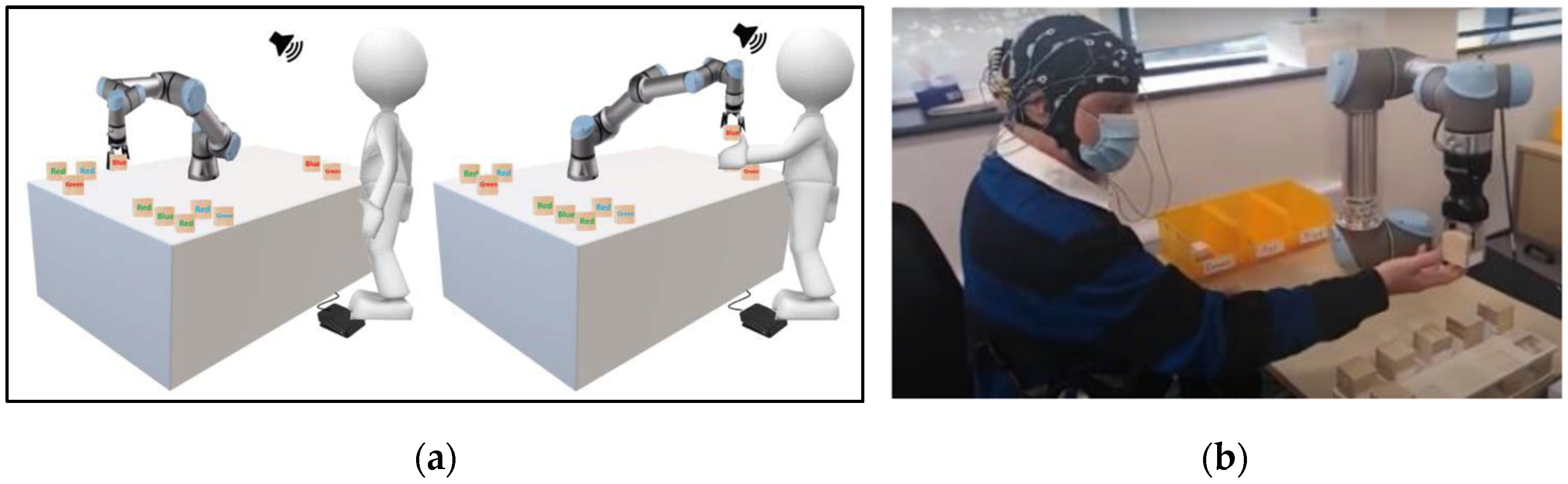

Cobots, or collaborative robotic systems, are made to operate safely alongside human workers in collaborative workspaces [8]. In contrast to conventional robots, cobots use advanced sensors, intelligent algorithms and safety features to avoid collisions and enable safe interaction. Their versatility, ease of programming and adaptability to variable tasks make them appropriate for a wide range of applications in sectors like manufacturing, healthcare and logistics [9].

Although HRC is an emerging research field, little is known about the psycho-physical impact of close interaction with cobots on human workers’ efficiency and productivity [10]. HRC can lead to cognitive stress and ergonomic issues for several reasons. Psychological stress and compromised performance of human workers in close proximity to cobots can result from cobots’ inability to give cues and respond to unforeseen circumstances [11]; however, as technology advances, cobots may become capable of giving audio and visual reactions. The inability to immediately control undesired rapid robot movements may be overwhelming for human employees. Enhancing human productivity and occupational safety in the context of HRC requires understanding these stressors in depth and proposing ways to mitigate them.

Many researchers have assessed mental stress using traditional measures, which include subjective and behavioural measures. Behavioural measures focus on observable actions, such as changes in task performance and physical actions like speech patterns, facial expressions and gaze variables, indicating how mental stress manifests physically [12,13,14]. In 2017, Aylward, J. and Robinson, O. J. used behavioural measures such as accuracy and target response time to analyse threat-intensified performance on an inhibitory control task [15]. Psychological domains related to attention, execution and psychomotor speed are often assessed using reaction time (RT) as the behavioural measure. Khode, V. et al. examined auditory and visual reaction times to assess the mental state of hypertensive and non-hypertensive participants, using the mini-mental state examination (MMSE) as the task [16]. In a 2016 study, Huang, M., et al. explored the impact of mental stress on the gaze-click pattern by investigating trends in gaze data, as a behavioural parameter, during a mouse-click task [17]. Subjective measures, on the other hand, depend on self-reported information collected from individuals through tools such as questionnaires, interviews, surveys, self-report diaries, rating scales and psychological evaluations to gauge their degree of cognitive stress [18]. Perceived stress and mental workload are frequently measured using tools like the Perceived Stress Scale (PSS) [19] and the NASA Task Load Index (NASA-TLX) questionnaire [20]. Lesage, F. X., and Berjot, S. used a 14-item PSS self-report questionnaire, which gauges respondents’ feelings of overload, unpredictability and uncontrollability, to validate the visual analogue scale (VAS) for stress assessment in clinical occupational health setups [19,21]. Instantaneous Self-Assessment (ISA) is another subjective assessment tool for mental workload, validated by Leggatt, A., et al. in 2023 [22]. In 2019, Jost, J., et al. administered the user experience questionnaire (UEQ) [23], the negative attitude towards robots scale (NARS) [24] and the Godspeed questionnaire [25] to investigate how human behaviour towards other humans can be translated into robot behaviour [26]. Legler, F., et al. used the state-trait anxiety inventory (STAI) in a virtual-reality (VR) experiment that created a human–robot collaborative environment, comparing the outcome with a real-world experiment to analyse versatility and validity [27]. Using the technology acceptance model (TAM) and NASA-TLX, which measure a user’s acceptance of technology and cognitive task load, respectively, Rossato et al. compared the subjective experiences of senior and younger employees in HRC [28].

While subjective and behavioural assessments provide genuine insights into cognitive stress, physiological measures are a more objective way to monitor mental stress by tracking physical changes in the human body. Since the last decade, researchers have been using either physiological measures or multimodal approaches to combine conventional measures with physiological measures to achieve a comprehensive understanding of the mental state of humans [

29,

30]. Heart rate variability (HRV) is one of the physiological measures that is usually reduced due to cognitive stress, indicating reduced parasympathetic activity [

31]. Cortisol levels, the stress hormone elevate as a result of mental stress and can be measured through saliva, blood or urine [

32]. Other physiological measures include electrodermal activity (EDA), gaze variables, body temperature etc. [

33,

34]. In 2021, ECG signals have been administered for real-time stress monitoring using deep learning methods including convolutional neural networks (CNN) and bidirectional long short-term memory (BiLSTM) [

35]. In another study of 2022, ECG, voice and facial expressions have been employed for acute stress detection using a real-time deep learning framework, where stress-related features are extracted using ResNet50 and I3D and the temporal attention module highlighting the differentiating temporal representation for facial expressions about stress [

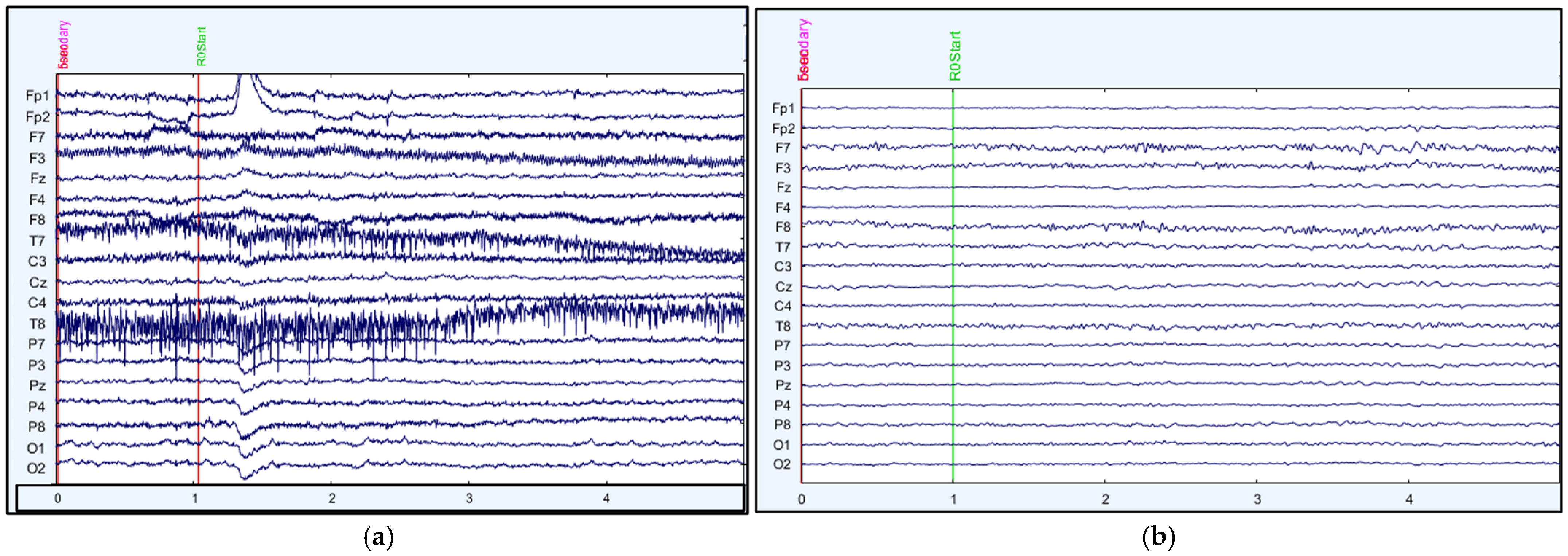

36]. Brain signals are one of the most important physiological indicators for evaluating mental stress, offering insights into the neurological processes involved in mental stress responses. Several techniques including electroencephalography (EEG) [

37], functional magnetic resonance imaging (fMRI) [

38] and functional near-infrared spectroscopy (fNIRS) [

39], can be used to monitor the electrical and hemodynamic activity of the brain.
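As a rough illustration of the HRV reduction described above, the sketch below computes RMSSD (the root mean square of successive differences between RR intervals), a common time-domain HRV index associated with parasympathetic activity. The choice of RMSSD, the function name `rmssd` and the RR-interval values are assumptions made for illustration only; the cited studies may rely on other HRV indices.

```python
from math import sqrt


def rmssd(rr_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between each pair of consecutive RR intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical RR-interval series in milliseconds (illustrative values only).
rest_rr = [800, 850, 790, 860, 805, 845]
stress_rr = [700, 705, 698, 703, 700, 702]

rest_hrv = rmssd(rest_rr)
stress_hrv = rmssd(stress_rr)
print(f"RMSSD at rest: {rest_hrv:.1f} ms, under stress: {stress_hrv:.1f} ms")
```

In this made-up example the beat-to-beat variation is smaller in the second series, so its RMSSD is lower, which is the direction of change the text attributes to cognitive stress.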

The assessment of mental stress has been a long-standing challenge in the field of psychology and healthcare. As stated earlier, traditional measures can produce inaccurate results due to problems such as issues of recall, attention, falsification and fabrication, specifically in case of subjective measures [

40]. The participant has full control over his responses when filling out a subjective assessment questionnaire as well as his actions during behavioural assessment. While using the behavioural measures, results can come out to be inaccurate as a consequence of biased behaviour and intentional false performance of the participant [

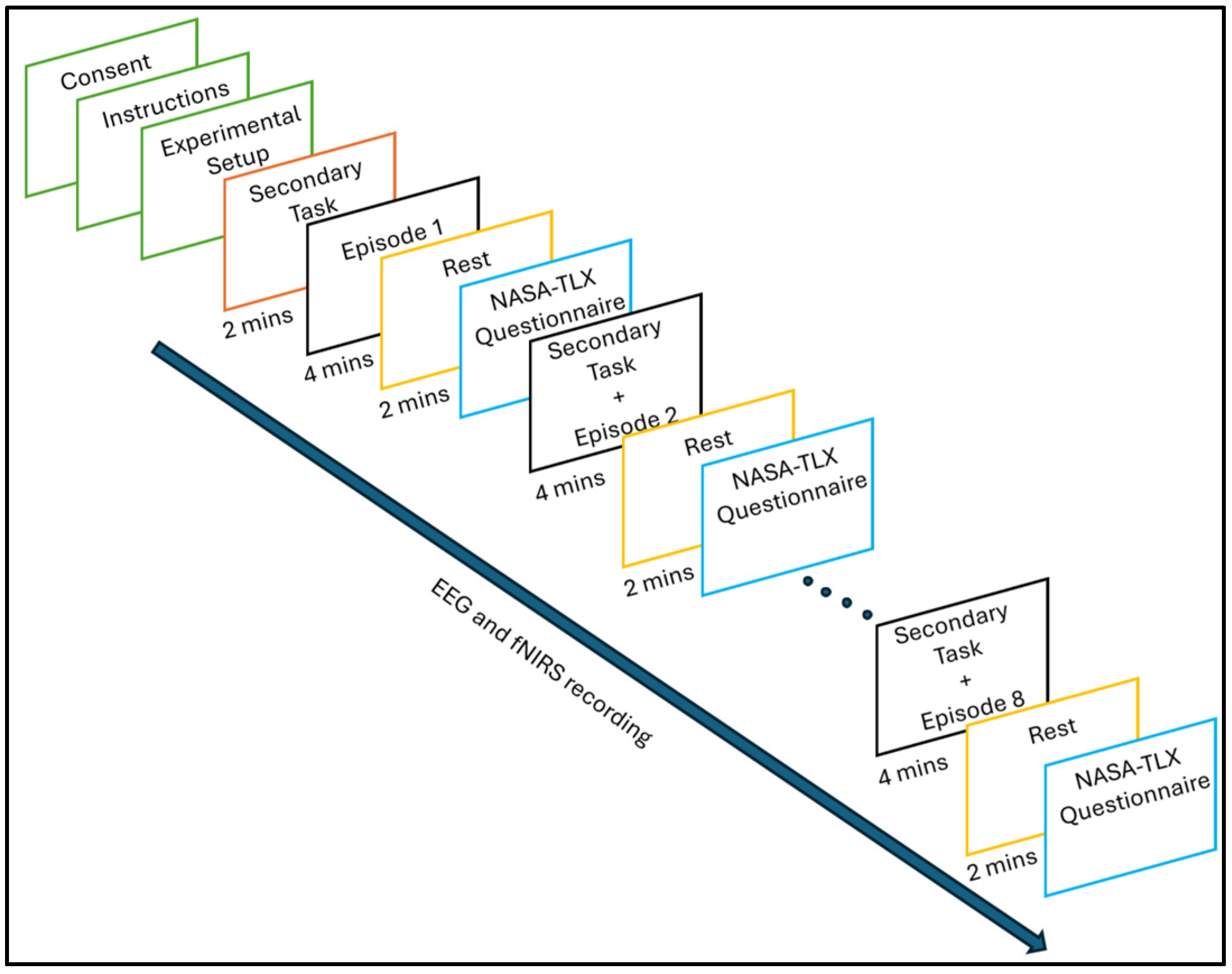

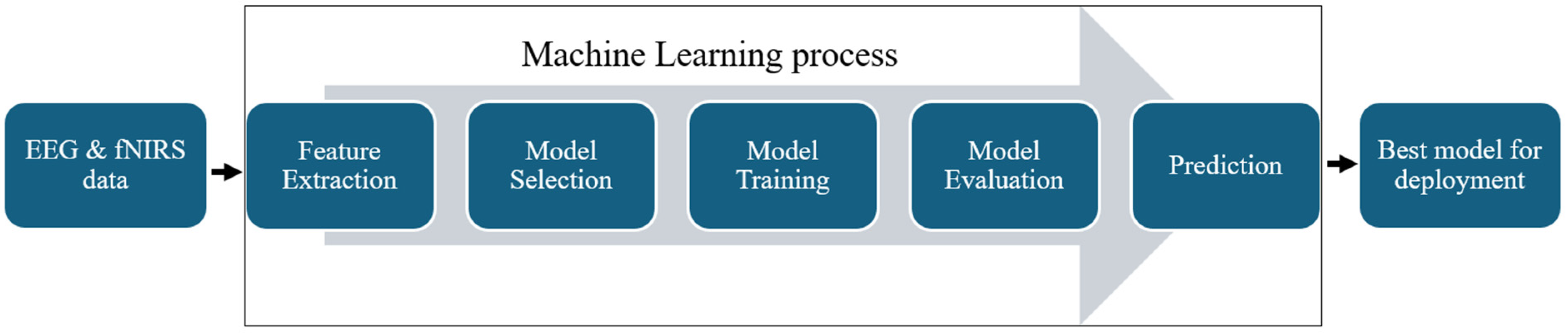

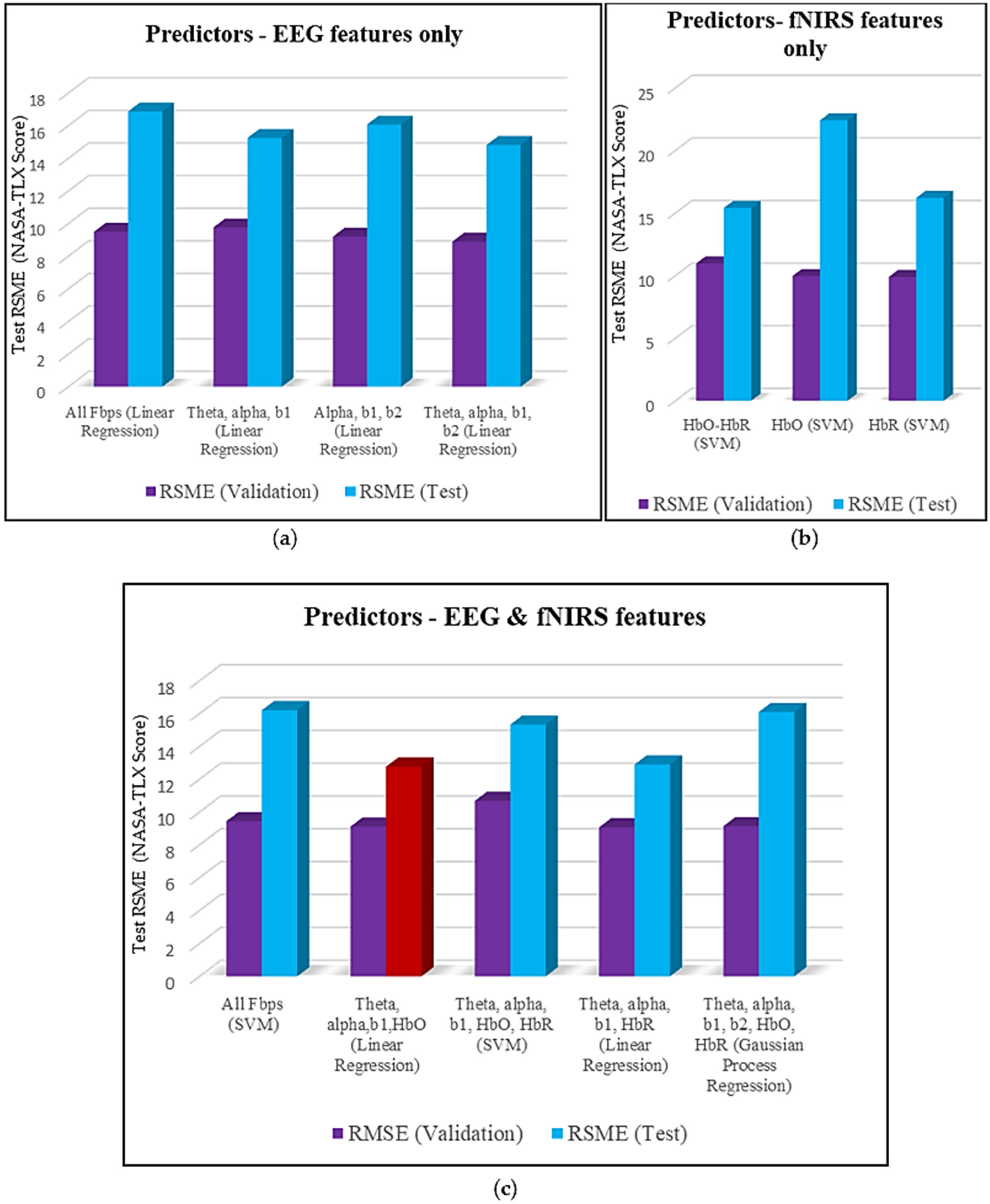

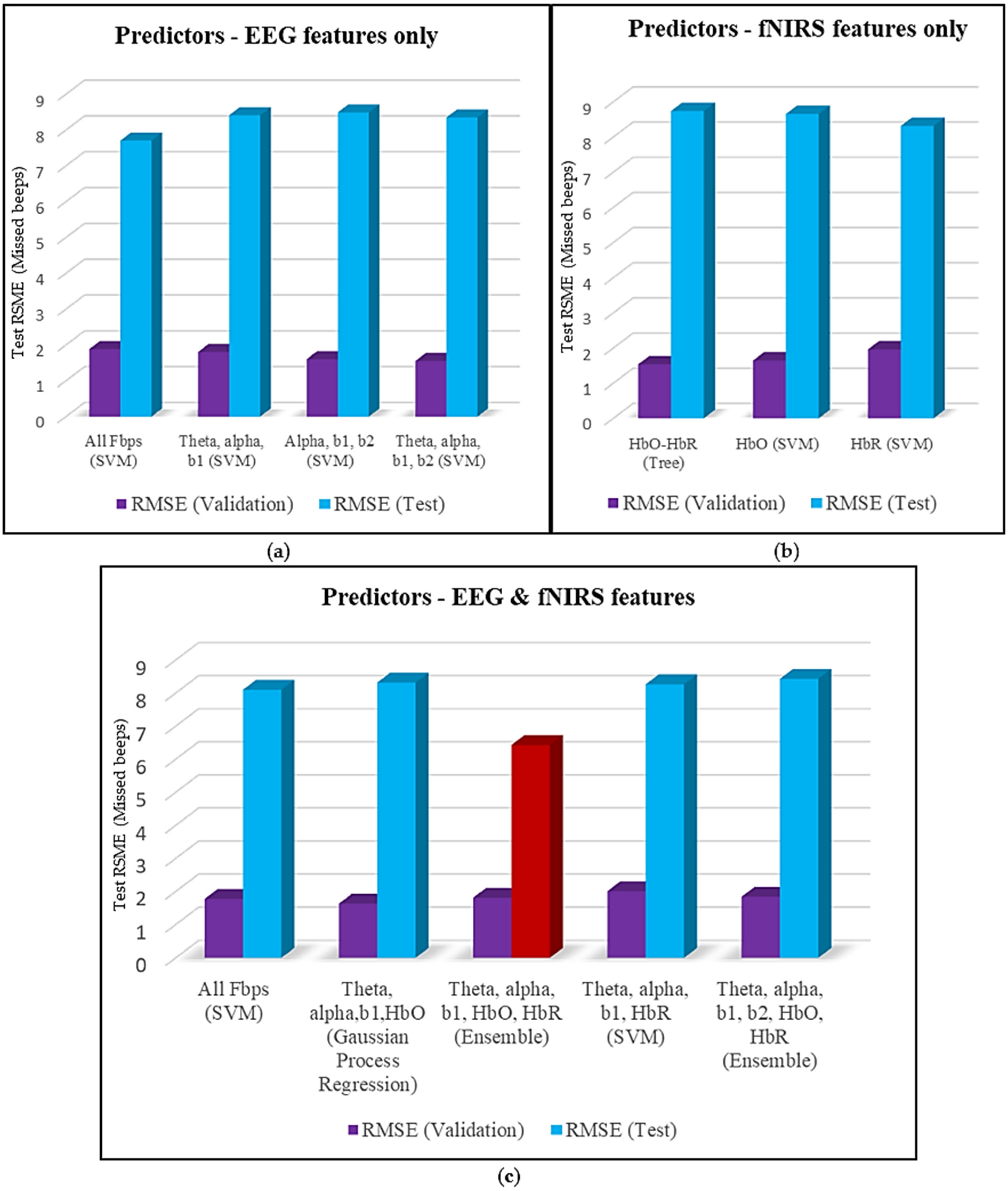

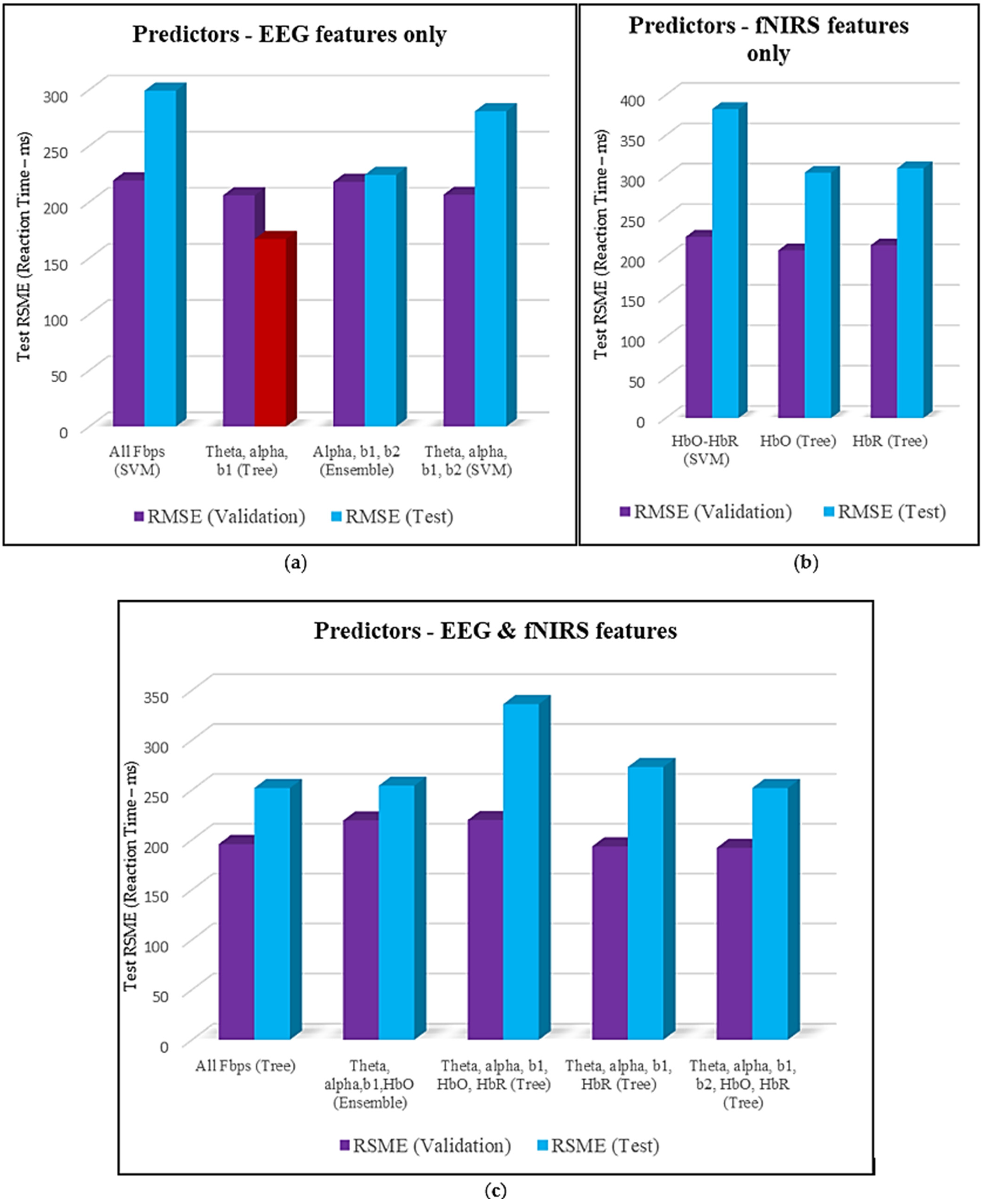

41]. As subjective and behavioural measures lack the ability to provide real-time assessment of the perceptual state of a person, the need for physiological measures arises there. The aim of this analysis is to determine whether physiological measures are powerful enough to replace the conventional measures for monitoring the cognitive stress of factory workers. If physiological measures (neuroimaging variables) have the potential to quantify cognitive stress, they can circumvent the limitations of using conventional measures. To explore this potential of neuroimaging, a correlation study is required for physiological measures and conventional measures. Therefore, the main goal of this study is to predict subjective and behavioural measures using physiological variables. There are 3 categories of data-driven decision models including rule-based (using a set of rules or a decision tree), shallow statistical (shallow models like linear regression) and deep learning models (several-layered classification, regression and reinforcement models) [

42]. Rule-based and shallow statistical models are often outperformed in performance by deep learning models, but the implementation of deep learning techniques requires big datasets [

42]. For this research the dataset is not large enough to get the desired results using deep learning models, therefore rule-based and shallow statistical models are selected [

42]. In our previous study, the correlation between physiological measures and conventional measures was found using only 2 machine learning algorithms including linear regression and artificial neural networks (ANN) [

43]. Regression proved to be the better one for most of the targets but there was still a requirement to test multiple machine learning models and deploy the best one for each target [

43]. Therefore, this research intends to find a correlation between physiological (EEG and fNIRS) and traditional measures (i.e., behavioural and subjective measures) using rule-based and shallow statistical models and learn the impact of using individual EEG and fNIRS features and combinations of EEG and fNIRS features on the prediction of targets. As per the authors’ knowledge, this is the first study to explore and compare all these machine-learning techniques, especially for multimodal brain data. Another novelty of this analysis is that a unique and personalised model has been chosen for each target instead of using a single model for predicting all targets. The strengths of the chosen machine learning algorithms for predicting different targets are also evident from this research.
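The idea of choosing a separate model for each target can be sketched as follows. This is a minimal illustration under stated assumptions, not the pipeline used in this study: the single feature, the two targets, the toy values and the helper names (`fit_linear`, `fit_stump`, `loo_mse`, `select_per_target`) are all hypothetical. A one-rule decision stump stands in for the rule-based family and a least-squares line for the shallow statistical family; each is scored by leave-one-out mean squared error, and the lower-error model is kept for each target.

```python
from statistics import mean


def fit_linear(xs, ys):
    """Least-squares line y = a + b*x (stand-in for a shallow statistical model)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx if sxx else 0.0
    a = my - b * mx
    return lambda x: a + b * x


def fit_stump(xs, ys):
    """One-rule model: if x <= t predict the left mean, else the right mean."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2  # candidate threshold between neighbouring values
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        ml, mr = mean(left), mean(right)
        sse = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, ml, mr)
    if best is None:  # all x identical: fall back to predicting the mean
        m = mean(ys)
        return lambda x: m
    _, t, ml, mr = best
    return lambda x: ml if x <= t else mr


def loo_mse(fit, xs, ys):
    """Leave-one-out cross-validated mean squared error of a fitting function."""
    errors = []
    for i in range(len(xs)):
        predict = fit(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
        errors.append((predict(xs[i]) - ys[i]) ** 2)
    return mean(errors)


MODELS = {"linear": fit_linear, "stump": fit_stump}


def select_per_target(xs, targets):
    """Map each target name to the model name with the lowest LOO error."""
    return {
        name: min(MODELS, key=lambda m: loo_mse(MODELS[m], xs, ys))
        for name, ys in targets.items()
    }


# Hypothetical toy data: one brain-signal feature and two targets.
feature = [1.0, 1.2, 1.4, 1.6, 1.8, 2.0, 5.0, 5.2, 5.4, 5.6, 5.8, 6.0]
targets = {
    # Roughly proportional to the feature.
    "reaction_time": [2.1, 2.3, 2.9, 3.1, 3.7, 4.0,
                      10.1, 10.3, 10.9, 11.1, 11.7, 12.0],
    # Step-like: low for small feature values, high for large ones.
    "stress_rating": [1.0] * 6 + [5.0] * 6,
}
best = select_per_target(feature, targets)
print(best)
```

On this toy data the roughly linear target is best served by the line and the step-like target by the stump, which is the kind of per-target difference that motivates selecting models individually rather than deploying one model for all targets.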