Abstract

An effective approach to the speech separation problem is to use a time–frequency (T-F) mask. The ideal binary mask (IBM) and ideal ratio mask (IRM) have long been widely used to separate speech signals. The IBM is better at improving speech intelligibility, whereas the IRM is better at improving speech quality. To leverage their respective strengths and overcome their weaknesses, we propose an ideal threshold-based mask (ITM) that combines these two masks. By adjusting two thresholds, the two masks are combined to act jointly on speech separation. We report the impact of different threshold combinations on speech separation performance under ideal conditions and discuss a reasonable range for fine-tuning the thresholds. To evaluate the effectiveness of the proposed method, we conducted supervised speech separation experiments that use the masks as training targets for a deep neural network (DNN) and a long short-term memory (LSTM) network, and measured the results with three objective indicators: the signal-to-distortion ratio (SDR), signal-to-interference ratio (SIR), and signal-to-artifact ratio (SAR). Experimental results show that the proposed mask combines the strengths of the IBM and IRM, suggesting that the accuracy of speech separation can be further improved by effectively leveraging the advantages of different masks.

1. Introduction

Speech separation [1,2,3,4] aims to extract the target speech signal from a noisy speech signal that may contain not only background noise but also non-target speech signals. It can be applied to speech recognition [5,6], mobile communication [7,8], speaker recognition [9,10], and so forth. Speech separation is classified as single-channel speech separation, which uses only one microphone [11,12], or multi-channel speech separation, which uses multiple microphones [13,14]. Single-channel speech separation is more challenging than multi-channel speech separation because it cannot leverage spatial information, such as differences in the arrival times and arrival directions of the speech signals; such spatial cues are also of little use when the target and interfering sound sources are co-located or close to each other. The single-channel speech separation discussed in this paper refers to the separation of speech from multiple speakers.

Over the past few decades, several approaches to single-channel speech separation have been proposed, including speech enhancement techniques [15,16] (the most widely used of which is spectral subtraction [17]) and model-based techniques (such as those based on the hidden Markov model (HMM) [18] and the Gaussian mixture model (GMM) [19]). These methods require some prior knowledge about the speech or the background interference, and they have difficulty improving speech intelligibility. Computational auditory scene analysis (CASA) [20,21,22] has also been developed; it uses a set of perceptual cues, such as pitch, voice onset/offset, temporal continuity, harmonic structure, and modulation correlation, to separate the desired speech by masking the interfering sources.

In CASA systems, time–frequency masks are typically used to retain as much of the target speech signal as possible while removing interference. The ideal binary mask (IBM) [23,24] extracts the T-F components in which the target speech dominates the mixture by assigning them a value of 1 and discards the remaining components by assigning them a value of 0. In contrast, the ideal ratio mask (IRM) [25,26] attempts to preserve as many target speech components as possible by assigning each T-F unit a value between 0 and 1. These two types of masks are widely used and perform differently under various speech separation conditions. Generally, the IBM is more conducive to improving speech intelligibility [27,28], while the IRM is more conducive to improving speech quality [29,30]. It is therefore feasible to construct a combined mask that exploits their respective advantages to improve speech separation performance.

In this paper, we propose a threshold-based combination mask for single-channel speech separation. Because the relative strength of the target and interfering speech varies across the T-F plane of a mixture, thresholds are used to determine, for each T-F unit, the critical point at which the binary or the ratio mask value is applied, thereby combining the advantages of the IBM and IRM. With the development of deep learning (DL), masks have been used as training targets in speech separation with good performance [3,29,30,31]. We conducted speech separation experiments both under ideal conditions and with DL models to evaluate the effectiveness of the proposed method.

This paper is organized as follows. Section 2 introduces the notation and formulation of this study and reviews the IBM and IRM. Section 3 proposes single-channel speech separation using the threshold-based combination mask. Section 4 describes the evaluation experiments. Finally, Section 5 concludes the paper and discusses future work.

2. Ideal Binary Mask (IBM) and Ideal Ratio Mask (IRM)

In this section, we first introduce the relevant notation and formulation of using masks for speech separation. Then, we review the two commonly used masks, the IBM and IRM, and discuss their respective advantages and disadvantages.

2.1. Notation and Formulation

Single-channel speech separation is commonly formulated as an estimation problem based on the following mixture model:

$$x(n) = \sum_{i=1}^{C} s_i(n), \tag{1}$$

where $x(n)$ is the acquired (mixed) signal, $n$ is the time index, and $s_i(n)$ is the clean speech signal of speaker $i = 1, 2, \ldots, C$. To simplify the discussion, we consider $C = 2$, i.e., two speakers talking at the same time.

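The short-time Fourier transform (STFT) of the mixed signal $x(n)$ is given by

$$X(k,l) = \sum_{n=0}^{N-1} w(n)\, x(n + lH)\, e^{-j 2\pi k n / N}, \tag{2}$$

where $w(n)$ is the window function and $k$, $l$, $H$, $j$, and $N$ are the frequency bin index, frame index, window shift, imaginary unit, and window length, respectively.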
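For concreteness, Equation (2) can be sketched in NumPy as below. Only the 512-sample frame length (which yields 257 non-negative frequency bins) comes from the text; the periodic Hann window and the 128-sample hop are assumptions for illustration, since the actual STFT parameters are those listed in Table 1.

```python
import numpy as np

def stft(x, n_fft=512, hop=128):
    """Sketch of Eq. (2): X(k, l) = sum_n w(n) x(n + l*H) exp(-j*2*pi*k*n/N).

    Assumptions (not taken from the paper): periodic Hann window, hop H = 128.
    Returns a complex array of shape (n_fft // 2 + 1, num_frames), i.e., (K, L).
    """
    w = np.hanning(n_fft + 1)[:-1]                # periodic Hann window w(n)
    num_frames = 1 + (len(x) - n_fft) // hop      # frames that fit entirely inside x
    frames = np.stack([w * x[l * hop:l * hop + n_fft] for l in range(num_frames)])
    # For real-valued x(n), bins k = 0..N/2 carry all the information
    return np.fft.rfft(frames, n=n_fft, axis=1).T


rng = np.random.default_rng(0)
x = rng.standard_normal(16000)   # 1 s of white noise at 16 kHz as a stand-in signal
X = stft(x)
print(X.shape)                   # (257, 122)
```

With 512-sample frames, the 257 rows of `X` correspond to the 257-dimensional spectral features used for training in Section 4.1.2.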

To separate the mixed speech signal into the individual speech signals, we treat one of them as the target speech signal (e.g., $s_1(n)$). To obtain the target speech signal, a T-F mask is used in this study. As mentioned in Section 1, the IBM and IRM are widely used. The IBM (also known as the hard mask) of the target speech signal is defined by

$$M^{\mathrm{IBM}}_t(k,l) = \begin{cases} 1, & |S_t(k,l)| > |S_i(k,l)|,\ i \neq t, \\ 0, & \text{otherwise}, \end{cases} \tag{3}$$

where $S_i(k,l)$ denotes the STFT of the clean speech signal $s_i(n)$, $|S_i(k,l)|$ denotes its amplitude spectrum, and $t$ denotes the index of the target speaker. The IRM (also known as the soft mask) of the target speech signal is defined by

$$M^{\mathrm{IRM}}_t(k,l) = \frac{|S_t(k,l)|}{\sum_{i=1}^{C} |S_i(k,l)|}. \tag{4}$$

By using the T-F mask, the target speech signal can be extracted from the mixed speech as follows:

$$\hat{\mathbf{S}}_t = \mathbf{M}_t \odot \mathbf{X}. \tag{5}$$

Accordingly, the other (non-target) speech signal can be represented as

$$\hat{\mathbf{S}}_i = (\mathbf{1} - \mathbf{M}_t) \odot \mathbf{X}, \quad i \neq t, \tag{6}$$

where $\hat{\mathbf{S}}_i$ is the estimated spectrum of $s_i(n)$, $\mathbf{M}_t$ is the T-F mask for the target signal $s_t(n)$, $\mathbf{X}$ is the STFT of the mixed signal, and ⊙ is the Hadamard product. As shown in Equations (3) and (4), the IBM and IRM have different characteristics, so the spectra they estimate also differ. Finally, after the speech signals are separated by Equations (5) and (6), the time-domain signal of each speaker is obtained by the inverse STFT.
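The following sketch implements the two-speaker IBM, IRM, and masking steps described above. It assumes the amplitude-ratio form of the IRM, |S_t| / (|S_t| + |S_i|), and uses random complex arrays in place of real STFTs; the function names are illustrative only.

```python
import numpy as np

def ibm(S_t, S_i):
    """Binary mask: 1 where the target amplitude exceeds the interferer's, else 0."""
    return (np.abs(S_t) > np.abs(S_i)).astype(float)

def irm(S_t, S_i, eps=1e-12):
    """Ratio mask for two speakers: |S_t| / (|S_t| + |S_i|), a value in [0, 1]."""
    a_t, a_i = np.abs(S_t), np.abs(S_i)
    return a_t / (a_t + a_i + eps)

def apply_mask(M_t, X):
    """Element-wise (Hadamard) masking of the mixture STFT."""
    S_t_hat = M_t * X               # estimated target spectrum
    S_i_hat = (1.0 - M_t) * X       # complementary estimate of the other speaker
    return S_t_hat, S_i_hat


rng = np.random.default_rng(1)
shape = (257, 100)
S1 = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)
S2 = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)
X = S1 + S2                         # the STFT is linear, so the mixture STFT is S1 + S2

for name, M in (("IBM", ibm(S1, S2)), ("IRM", irm(S1, S2))):
    S1_hat, S2_hat = apply_mask(M, X)
    err = np.linalg.norm(S1_hat - S1) / np.linalg.norm(S1)
    print(name, "relative target error:", round(float(err), 3))
```

Because the two-speaker ratio masks sum to one, the complementary mask `1 - M_t` is exactly the other speaker's mask in this setting.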

2.2. Advantages and Disadvantages of IBM and IRM

As described above, the IBM is a hard mask that takes only two values, 0 and 1. It preserves only the dominant signal in the mixture, as shown in Figure 1a,c. This mask is therefore effective for T-F components in which the two signals differ significantly in strength, but not for components in which their strengths are comparable. In other words, the target signal is difficult to separate from the mixture when the two signals have almost the same power, as shown in Figure 1b.

Figure 1.

Examples of target signals with different intensities relative to the interference signals, shown as complex spectra. Here, $S_t$ is the target and $S_i$ ($i \neq t$) is the interference. (a) The target signal dominates. (b) The target signal is comparable to or slightly weaker than the interfering signal. (c) The interfering signal dominates.

The IRM, as a soft mask, can take any value between 0 and 1. Based on the above definition and description, it preserves the T-F components of the target signal as much as possible, regardless of whether the target signal dominates in these regions. This indiscriminate preservation can leave more residual interference, because it is largely redundant in T-F regions where one signal clearly dominates, as shown in Figure 1a,c.

In summary, the IBM is effective for removing interference signals but not for recovering the target signal, whereas the IRM is advantageous for preserving the target signal but not for removing interference signals. How much interference is retained is therefore a key concern in mask-based speech separation and strongly affects the quality of the extracted signals. This motivates a new method that combines the advantages of the two masks to control the retention of interference signals and thereby improve the accuracy of speech separation.
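To make this trade-off concrete, the sketch below takes a single T-F unit for each situation in Figure 1, using hypothetical amplitudes chosen for illustration (not values from the experiments), and reports how much target is kept and how much interference leaks through each mask. It assumes the amplitude-ratio IRM |S_t| / (|S_t| + |S_i|); because masking is linear, applying a mask value m to the mixture keeps m·S_t of the target and passes m·S_i of the interference.

```python
def ibm_unit(a_t, a_i):
    """Binary mask value for one T-F unit."""
    return 1.0 if a_t > a_i else 0.0

def irm_unit(a_t, a_i):
    """Amplitude-ratio mask value for one T-F unit."""
    return a_t / (a_t + a_i)


# Hypothetical (target, interference) amplitudes for the cases in Figure 1a-c
cases = {
    "(a) target dominates": (1.0, 0.1),
    "(b) comparable": (0.9, 1.0),
    "(c) interference dominates": (0.1, 1.0),
}

for label, (a_t, a_i) in cases.items():
    for name, m in (("IBM", ibm_unit(a_t, a_i)), ("IRM", irm_unit(a_t, a_i))):
        print(f"{label:28s} {name}: mask={m:.3f}  "
              f"target kept={m * a_t:.3f}  interference leaked={m * a_i:.3f}")
```

In case (b), the IBM assigns 0 and discards the whole target component, while the IRM keeps about half of it; in case (c), the IRM lets a small amount of interference through where the IBM removes it entirely.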

3. Proposed T-F Mask for Speech Separation

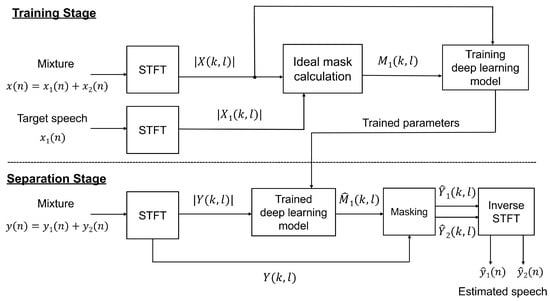

In this section, we present the proposed combination mask and describe the use of masks as training targets for speech separation. Figure 2 shows an overview of the proposed method, which uses the T-F mask as the training target of a DL-based model.

3.1. Threshold-Based Combination Mask

On the basis of the discussion in Section 2.2, we consider the different intensities of the target and interference signals in the mixture. As shown in Figure 1a,c, when one signal dominates, the IBM is entirely adequate, which implies that the much weaker signal can be discarded directly. However, when the target signal is comparable to the interference signal, as shown in Figure 1b, neither can be discarded directly. Therefore, to improve the performance of speech separation, the IRM should be used so that the energy of both signals is preserved.

Based on the above analysis, considering the differing relative strengths of the target and interference signals, it is reasonable to combine these two masks for speech separation. When one signal dominates in strength, the IBM can be used to effectively discard the interference signal; when the strengths of both signals are comparable, the IRM can be used to preserve the desired target signal. We therefore use thresholds to quantify the difference in signal strength and select the mask accordingly. By setting upper and lower limit values, the target signal can be retained as much as possible while the interference signal is discarded more effectively. We define the ideal threshold-based mask (ITM) as

$$M^{\mathrm{ITM}}_t(k,l) = \begin{cases} 1, & M^{\mathrm{IRM}}_t(k,l) \ge \alpha, \\ M^{\mathrm{IRM}}_t(k,l), & \beta \le M^{\mathrm{IRM}}_t(k,l) < \alpha, \\ 0, & M^{\mathrm{IRM}}_t(k,l) < \beta, \end{cases} \tag{7}$$

where $M^{\mathrm{IRM}}_t(k,l)$ is the IRM of Equation (4), and $\alpha$ and $\beta$ ($0 \le \beta \le \alpha \le 1$) are the upper and lower thresholds, applied to all T-F units. The IRM is compared with these thresholds to determine whether the binary (IBM-like) value or the ratio (IRM) value is used in the ITM. In T-F units where the target and interfering signals differ significantly in strength, the upper threshold assigns a value of 1 so that the dominant target is fully retained, and the lower threshold assigns a value of 0 so that the dominant interference is discarded. In T-F units where the strengths of the target and interfering signals differ only slightly, the ratio mask is adopted to preserve the energy of both signals rather than discarding either outright.
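A minimal NumPy sketch of the ITM follows, with an upper threshold `alpha` and a lower threshold `beta`. It assumes the two-speaker amplitude-ratio IRM |S_t| / (|S_t| + |S_i|) and random complex arrays in place of real STFTs; the function names and default thresholds are illustrative.

```python
import numpy as np

def irm(S_t, S_i, eps=1e-12):
    """Two-speaker amplitude-ratio IRM: |S_t| / (|S_t| + |S_i|)."""
    a_t, a_i = np.abs(S_t), np.abs(S_i)
    return a_t / (a_t + a_i + eps)

def itm(S_t, S_i, alpha=0.7, beta=0.3):
    """Threshold-based mask: 1 where IRM >= alpha, 0 where IRM < beta, IRM in between."""
    if not 0.0 <= beta <= alpha <= 1.0:
        raise ValueError("expected 0 <= beta <= alpha <= 1")
    r = irm(S_t, S_i)
    return np.where(r >= alpha, 1.0, np.where(r < beta, 0.0, r))


rng = np.random.default_rng(2)
S1 = rng.standard_normal((257, 50)) + 1j * rng.standard_normal((257, 50))
S2 = rng.standard_normal((257, 50)) + 1j * rng.standard_normal((257, 50))

M = itm(S1, S2, alpha=0.7, beta=0.3)
r = irm(S1, S2)
print("fraction of units set to 1 / kept as IRM / set to 0:",
      float(np.mean(r >= 0.7)), float(np.mean((r >= 0.3) & (r < 0.7))), float(np.mean(r < 0.3)))
```

Two limiting cases help check the construction: with `alpha = beta = 0.5` the mask reduces to the binary mask, and with `alpha = 1.0, beta = 0.0` it reduces to the ratio mask.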

3.2. Using Masks as Training Targets

Deep neural networks (DNNs) [32,33,34] and long short-term memory (LSTM) networks [35,36,37] are popular DL architectures for estimating T-F masks. Supervised speech separation consists of two stages, a training stage and a separation stage, as shown in Figure 2. In the training stage, the STFT is used to extract features of the speech signals and to compute the ideal mask, and the DL model is trained to estimate the T-F mask of the target speaker. In the separation stage, the trained DL model estimates the mask, which is applied to the mixture to extract the spectrum of the target speech. The target spectrum is then converted into a time-domain waveform by the inverse STFT.

Figure 2.

Diagram of the deep learning-based speech separation system using a mask as the training target.
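The separation stage described above (estimate a mask from the mixture spectrum, apply it, and return to the time domain with the inverse STFT) can be sketched as follows. The periodic Hann window, the 128-sample hop, and the weighted overlap-add inverse are assumptions for illustration, and `mask_fn` is a hypothetical placeholder for the trained DNN or LSTM.

```python
import numpy as np

N, H = 512, 128                  # frame length from the text; the hop H is an assumption
w = np.hanning(N + 1)[:-1]       # periodic Hann window (assumed) for analysis and synthesis

def stft(x):
    num_frames = 1 + (len(x) - N) // H
    frames = np.stack([w * x[l * H:l * H + N] for l in range(num_frames)])
    return np.fft.rfft(frames, axis=1).T                      # shape (K, L) = (257, L)

def istft(X, length):
    """Weighted overlap-add: window each IFFT frame, sum, divide by the summed squared window."""
    frames = np.fft.irfft(X.T, n=N, axis=1) * w               # synthesis windowing
    y = np.zeros(length)
    norm = np.zeros(length)
    for l, frame in enumerate(frames):
        y[l * H:l * H + N] += frame
        norm[l * H:l * H + N] += w ** 2
    return y / np.maximum(norm, 1e-8)                          # guard against ~0 at the edges

def separate(x, mask_fn):
    """Separation stage: estimate a mask from |X|, apply it to X, then invert the STFT."""
    X = stft(x)
    M = mask_fn(np.abs(X))       # hypothetical stand-in for the trained DNN/LSTM
    return istft(M * X, len(x))


rng = np.random.default_rng(3)
x = rng.standard_normal(16000)
y = separate(x, lambda A: np.ones_like(A))   # an all-ones mask should return the input
print(float(np.max(np.abs(y[N:-N] - x[N:-N]))))
```

An all-ones mask is a convenient sanity check: away from the first and last frame, the reconstruction matches the input to floating-point precision.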

In this paper, the DL network is designed to estimate the mask defined by Equation (7) (or, for comparison, the IBM or IRM). The input features are the amplitude spectra of the mixed speech signal. The masks of the target speech signal are computed in advance and used as the training targets, and the network is trained so that its estimated mask approximates the ideal mask, using the mean-square error as the loss function:

$$J = \frac{1}{KL} \sum_{k=1}^{K} \sum_{l=1}^{L} \left( M_t(k,l) - \hat{M}_t(k,l) \right)^2, \tag{8}$$

where $M_t(k,l)$ is the ideal T-F mask (IBM, IRM, or ITM) for the target speaker, $\hat{M}_t(k,l)$ is the estimated T-F mask for the target speaker (i.e., the output of the separation model), $K$ is the number of frequency bins, and $L$ is the number of frames. In addition, because the hard mask is not smooth, we use the soft mask as the training target of the network and then obtain a hard mask by thresholding: values greater than or equal to 0.5 are set to 1, and all others are set to 0.
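The loss and the post-processing step described above amount to a few lines; the sketch below uses a toy 2 × 2 mask (hypothetical values) so the arithmetic is easy to follow.

```python
import numpy as np

def mse_loss(M_ideal, M_est):
    """Mean of the squared mask errors over all K x L T-F units."""
    K, L = M_ideal.shape
    return float(np.sum((M_ideal - M_est) ** 2) / (K * L))

def binarize(M_soft, threshold=0.5):
    """Hard mask from a soft network output: values >= threshold -> 1, otherwise 0."""
    return (M_soft >= threshold).astype(float)


M_ideal = np.array([[1.0, 0.0], [0.6, 0.2]])   # toy ideal mask
M_est = np.array([[0.9, 0.1], [0.5, 0.4]])     # toy network output
print(mse_loss(M_ideal, M_est))   # about (0.01 + 0.01 + 0.01 + 0.04) / 4 = 0.0175
print(binarize(M_est))            # [[1. 0.] [1. 0.]]
```

Note that a unit exactly at 0.5 is mapped to 1, matching the "greater than or equal to 0.5" rule stated above.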

4. Experiments and Results

We conducted experiments to evaluate the effectiveness of the proposed method. The experiments consist of two parts. In the first part, the performance of the conventional and proposed masks was evaluated under ideal conditions, i.e., with the masks computed directly from the target and interference signals. In the second part, the performance was evaluated with masks estimated by the DL models.

4.1. Experimental Setup

All experiments were implemented in MATLAB R2022b. The experimental conditions are listed in Table 1.

Table 1.

Experimental conditions.

4.1.1. Dataset

We conducted speech separation experiments to verify the effectiveness of the proposed method. Three male and three female speakers were chosen from the TIMIT Acoustic-Phonetic Continuous Speech Corpus [38]. Speech signals from two speakers were mixed to create 15 different two-speaker sets (male+male, male+female, and female+female). For each speaker, nine TIMIT sentences were used for training, and the remaining sentence was used for testing [39]. The mixtures were created at mixing ratios of −5, 0, and 5 dB [40]. The entire database used in this evaluation exceeds three hours.
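The pair count and the mixing step can be sketched as follows. The speaker labels are hypothetical, and the text does not specify how the mixing ratios were imposed; this sketch assumes the interferer is rescaled so that the utterance-level power ratio of target to interferer equals the requested value, with both signals already trimmed or padded to the same length.

```python
import itertools
import numpy as np

# Hypothetical labels for the three male (M*) and three female (F*) TIMIT speakers
speakers = ["M1", "M2", "M3", "F1", "F2", "F3"]
pairs = list(itertools.combinations(speakers, 2))   # unordered two-speaker sets

labels = {0: "male+male", 1: "male+female", 2: "female+female"}
counts = {}
for a, b in pairs:
    n_female = sum(s.startswith("F") for s in (a, b))
    counts[labels[n_female]] = counts.get(labels[n_female], 0) + 1
print(len(pairs), counts)

def mix_at_ratio(target, interferer, ratio_db):
    """Scale the interferer so that 10*log10(P_target / P_interferer) = ratio_db, then add."""
    p_t = np.mean(target ** 2)
    p_i = np.mean(interferer ** 2)
    gain = np.sqrt(p_t / (p_i * 10 ** (ratio_db / 10)))
    scaled = gain * interferer
    return target + scaled, scaled
```

Choosing two of six speakers gives 15 sets: 3 male+male, 9 male+female, and 3 female+female, consistent with the 15 sets stated above.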

4.1.2. Experimental Configurations

We used the STFT (with a frame length of 512 samples) to obtain 257-dimensional spectral features for training the DL models; the STFT parameters are listed in Table 1. DNN and LSTM models were each used as the DL model in the speech separation experiments. For the DNN model, a feed-forward neural network was applied. The input and output sizes were set to 257 × 10 units, i.e., the input and output layers cover a 10-frame context. The network has three layers and uses the sigmoid activation function. A dropout layer with a rate of 10% is used during training to reduce overfitting, and the network is trained with the adaptive moment estimation (Adam) optimizer [41]. For the LSTM model, the network has two layers, with 640 units in hidden layer 1 and 500 units in hidden layer 2. A dropout layer with a rate of 20% is used during training to reduce overfitting, and Adam is also used to train the LSTM network.
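The 257 × 10 input and output layers imply that each network vector holds ten consecutive 257-bin frames. One way to build such vectors is sketched below; whether the paper's context windows overlap is not stated, so non-overlapping blocks (with leftover frames dropped) are an assumption of this sketch.

```python
import numpy as np

def stack_context(features, context=10):
    """Turn a (K, L) spectrogram into (L // context, K * context) network vectors.

    Non-overlapping blocks of `context` consecutive frames are used, and trailing
    frames that do not fill a whole block are dropped (both are assumptions).
    """
    K, L = features.shape
    B = L // context
    blocks = features[:, :B * context].reshape(K, B, context)   # [k, b, c] = frame b*context + c
    return blocks.transpose(1, 2, 0).reshape(B, context * K)     # row b holds `context` whole frames

def unstack_context(vectors, K):
    """Inverse of stack_context: (B, K * context) -> (K, B * context)."""
    B, CK = vectors.shape
    context = CK // K
    return vectors.reshape(B, context, K).transpose(2, 0, 1).reshape(K, B * context)


mag = np.abs(np.random.default_rng(5).standard_normal((257, 95)))  # stand-in |X(k, l)|
inputs = stack_context(mag)
print(inputs.shape)              # (9, 2570)
```

`unstack_context` maps the network's 2570-dimensional output vectors back to a 257-bin mask per frame before the mask is applied.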

The IBM and IRM in this experiment were calculated using Equations (3) and (4), respectively. The proposed mask of Equation (7) was calculated with the upper threshold α varying from 0.5 to 0.9 and the lower threshold β varying from 0.1 to 0.5 (both in steps of 0.1), giving 25 different combinations. We obtained the separated target and interference speech on the test set, evaluated the separation results with objective metrics, and averaged them to compare speech separation performance.
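The 25 threshold settings can be enumerated as below, with `alpha` as the upper and `beta` as the lower threshold. `evaluate` is a hypothetical callback standing in for the separate-and-score pipeline; it returns per-utterance scores for one (alpha, beta) pair, which are then averaged.

```python
import itertools

# Upper threshold alpha: 0.5..0.9 and lower threshold beta: 0.1..0.5, both in steps of 0.1
alphas = [round(0.5 + 0.1 * i, 1) for i in range(5)]
betas = [round(0.1 + 0.1 * i, 1) for i in range(5)]
grid = list(itertools.product(alphas, betas))
print(len(grid))                 # 25

def sweep(evaluate):
    """Average the per-utterance scores returned by evaluate(alpha, beta) for each setting."""
    results = {}
    for alpha, beta in grid:
        scores = evaluate(alpha, beta)
        results[(alpha, beta)] = sum(scores) / len(scores)
    return results
```

Rounding keeps the grid values exact at one decimal place, so settings such as (0.7, 0.3) can be looked up directly; the corner (0.5, 0.5) corresponds to the binary mask.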

4.1.3. Evaluation Metrics

Three indicators are used to evaluate the separation performance: the signal-to-distortion ratio (SDR), signal-to-interference ratio (SIR), and signal-to-artifact ratio (SAR) [42]. The SDR is the ratio of the power of the target signal to the power of the difference between the estimated signal and the target signal; it reflects the overall separation performance. The SIR reflects errors due to incomplete removal of the interfering signal, while the SAR reflects errors caused by artifacts introduced during the separation process. These metrics are calculated [42] as follows:

$$\mathrm{SDR} = 10 \log_{10} \frac{\|s_{\mathrm{target}}\|^2}{\|e_{\mathrm{interf}} + e_{\mathrm{artif}}\|^2}, \tag{9}$$

$$\mathrm{SIR} = 10 \log_{10} \frac{\|s_{\mathrm{target}}\|^2}{\|e_{\mathrm{interf}}\|^2}, \tag{10}$$

$$\mathrm{SAR} = 10 \log_{10} \frac{\|s_{\mathrm{target}} + e_{\mathrm{interf}}\|^2}{\|e_{\mathrm{artif}}\|^2}, \tag{11}$$

where $s_{\mathrm{target}}$ is the target speech component, $e_{\mathrm{interf}}$ is the interference component, $e_{\mathrm{artif}}$ is the artifact component, and $\|\cdot\|^2$ denotes the power of a signal. When evaluating separation performance, $s_1(n)$ (or $s_2(n)$) and $s_2(n)$ (or $s_1(n)$) are treated as the target speech signal and the interfering speech signal, respectively. All these components are obtained by decomposing [42] the estimated signal. Higher values of these indicators indicate more accurate speech separation.
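The decomposition behind the SDR, SIR, and SAR can be sketched with orthogonal projections: the target component is the projection of the estimate onto the target signal, the interference component is the extra part explained by the interferer, and the remainder is treated as artifacts. This is a simplified version that allows only a scalar gain per source; the toolbox of [42] also allows short distortion filters, so for reported numbers the full implementation (e.g., the BSS Eval toolbox or `mir_eval.separation.bss_eval_sources` in Python) should be used.

```python
import numpy as np

def _project(y, basis):
    """Least-squares (orthogonal) projection of y onto the column span of `basis`."""
    coef, *_ = np.linalg.lstsq(basis, y, rcond=None)
    return basis @ coef

def sdr_sir_sar(estimate, target, interferer):
    """Simplified BSS Eval-style metrics using gain-only projections."""
    s_target = _project(estimate, target[:, None])
    p_sources = _project(estimate, np.stack([target, interferer], axis=1))
    e_interf = p_sources - s_target          # part explained by the interfering source
    e_artif = estimate - p_sources           # part explained by neither source

    def energy(v):
        return float(np.sum(v ** 2))

    sdr = 10 * np.log10(energy(s_target) / energy(e_interf + e_artif))
    sir = 10 * np.log10(energy(s_target) / energy(e_interf))
    sar = 10 * np.log10(energy(s_target + e_interf) / energy(e_artif))
    return sdr, sir, sar


s = np.array([1.0, 0.0, 0.0])          # toy target
i = np.array([0.0, 1.0, 0.0])          # toy interferer
est = np.array([1.0, 0.5, 0.1])        # target + 0.5 * interferer + an "artifact"
print([round(float(v), 2) for v in sdr_sir_sar(est, s, i)])   # [5.85, 6.02, 20.97]
```

In the toy example the three components are orthogonal, so the metrics can be checked by hand: SIR = 10 log10(1 / 0.25) and SAR = 10 log10(1.25 / 0.01).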

4.2. Experimental Results and Discussions

4.2.1. Comparison of Proposed and Conventional Masks in Ideal Conditions

First, we compared the separation results by using various masks under ideal conditions (using the ideal masks).

Table 2 lists the results of the speech separation experiments using the conventional IBM and IRM. Table 2 shows that the IBM removes interference signals better but causes greater signal distortion, whereas the IRM causes less signal distortion but retains more interference. Specifically, the IBM achieves a better SIR (8.77 dB higher than the IRM), whereas the IRM achieves a better SDR (0.35 dB higher than the IBM) and a better SAR (1.12 dB higher than the IBM).

Table 2.

Evaluation metrics [dB] of the conventional ideal masks. (IBM: ideal binary mask, IRM: ideal ratio mask).

Table 3, Table 4 and Table 5 show the results of the speech separation experiments using the proposed combination mask with various threshold combinations, and the results differ across these combinations. As shown in Table 3, better restoration of the target signal can be achieved by adjusting the thresholds, and in certain threshold regions the ITM improves the quality of the target speech compared with both the IBM and IRM. The best SDR results are obtained when the upper threshold α is in the range [0.6, 0.8] and the lower threshold β is in the range [0.2, 0.4]. Averaged over this region, the SDR is 0.71 dB higher than that of the IBM and 0.36 dB higher than that of the IRM.

Table 3.

SDR [dB] under ideal conditions at different values of the upper threshold α and the lower threshold β.

Table 4.

SIR [dB] under ideal conditions at different values of the upper threshold α and the lower threshold β.

Table 5.

SAR [dB] under ideal conditions at different values of the upper threshold α and the lower threshold β.

For the removal of interference signals, as shown in Table 4, when the upper threshold α is fixed, the SIR increases as the lower threshold β increases; when β is fixed, the SIR generally decreases as α increases. From the preliminary experiments, we found that the smaller the difference between the two thresholds, the stronger the ability to remove interference signals. The best SIR was achieved by the ITM with α = 0.5 and β = 0.5, which gives the same result as the IBM.

Table 5 shows the SAR results, which are highly consistent with the SDR results: threshold combinations with higher SDR values also yield higher SAR values. As with the SDR, the best SAR results lie in the region where the upper threshold α is in the range [0.6, 0.8] and the lower threshold β is in the range [0.2, 0.4]. Averaged over this region, the SAR is 0.86 dB higher than that of the IBM but 0.26 dB lower than that of the IRM. The above results and analysis show that, with fine-tuned thresholds, the proposed threshold-based combination mask achieves a better balance among the objective metrics (SDR, SIR, and SAR) under ideal conditions, with the highest SDR of the masks compared. In particular, when the two thresholds are set near the middle of their ranges (in this paper, α = 0.7 and β = 0.3), the advantages of combining the IBM and IRM are maximized, thereby improving speech separation performance in this experiment.
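The region averages quoted above can be cross-checked against the IBM-versus-IRM gaps reported for Table 2; the arithmetic below uses only numbers stated in this section, where the bar denotes the average ITM value over the region with α in [0.6, 0.8] and β in [0.2, 0.4].

$$
\begin{aligned}
\mathrm{SDR}_{\mathrm{IRM}} - \mathrm{SDR}_{\mathrm{IBM}} &= (\overline{\mathrm{SDR}}_{\mathrm{ITM}} - \mathrm{SDR}_{\mathrm{IBM}}) - (\overline{\mathrm{SDR}}_{\mathrm{ITM}} - \mathrm{SDR}_{\mathrm{IRM}}) = 0.71 - 0.36 = 0.35~\mathrm{dB},\\
\mathrm{SAR}_{\mathrm{IRM}} - \mathrm{SAR}_{\mathrm{IBM}} &= (\overline{\mathrm{SAR}}_{\mathrm{ITM}} - \mathrm{SAR}_{\mathrm{IBM}}) - (\overline{\mathrm{SAR}}_{\mathrm{ITM}} - \mathrm{SAR}_{\mathrm{IRM}}) = 0.86 - (-0.26) = 1.12~\mathrm{dB}.
\end{aligned}
$$

Both differences match the 0.35 dB SDR and 1.12 dB SAR advantages of the IRM over the IBM in Table 2.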

4.2.2. Comparison of Proposed and Conventional Masks Based on Deep Learning Model for Speech Separation

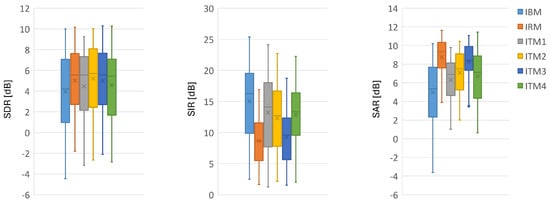

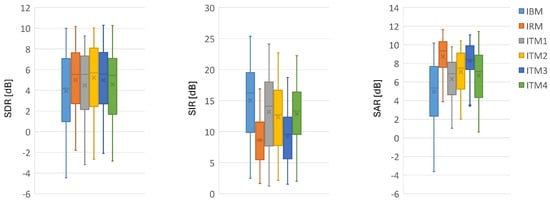

We performed speech separation experiments with different masks using the DNN and LSTM models. Based on the previous discussion, we selected several representative ITMs (with different threshold combinations) for presentation and comparison. The results are shown in Figure 3, Figure 4 and Figure 5.

Figure 3.

Evaluation metrics [dB] of the conventional and proposed methods using the DNN. (IBM: ideal binary mask, IRM: ideal ratio mask, ITM: ideal threshold-based mask with different combinations of the upper threshold α and lower threshold β; ITM1: α = 0.5, β = 0.1; ITM2: α = 0.7, β = 0.3; ITM3: α = 0.9, β = 0.1; ITM4: α = 0.9, β = 0.5).

Figure 4.

Evaluation metrics [dB] of the conventional and proposed methods using the LSTM. (IBM: ideal binary mask, IRM: ideal ratio mask, ITM: ideal threshold-based mask with different combinations of the upper threshold α and lower threshold β; ITM1: α = 0.5, β = 0.1; ITM2: α = 0.7, β = 0.3; ITM3: α = 0.9, β = 0.1; ITM4: α = 0.9, β = 0.5).

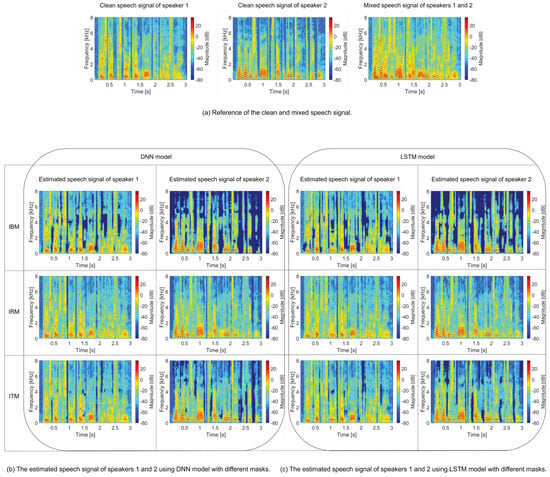

Figure 5.

Examples of power spectrograms of the conventional and proposed methods using the DNN and LSTM. (Mixed speech at a mixing ratio of −5 dB; ITM with upper threshold α = 0.7 and lower threshold β = 0.3).

Figure 3 and Figure 4 show that the IBM is superior to the IRM at removing interference signals, while the IRM is superior to the IBM at preserving the target signal. The proposed ITM yields different separation results depending on the threshold combination. Specifically, ITM1 (α = 0.5, β = 0.1) and ITM4 (α = 0.9, β = 0.5) behave more like the IBM, while ITM2 (α = 0.7, β = 0.3) and ITM3 (α = 0.9, β = 0.1) behave more like the IRM. ITM2 gives the best overall result. With the DNN model, ITM2 changes the SDR, SIR, and SAR by +1.28, −2.70, and +2.12 dB, respectively, relative to the IBM, and by +0.21, +3.69, and −1.65 dB relative to the IRM. With the LSTM model, the corresponding changes are +0.96, −2.88, and +1.77 dB relative to the IBM and +0.29, +2.75, and −1.14 dB relative to the IRM. All of these results are consistent with those obtained under ideal conditions, as described in Section 4.2.1.
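Because both comparisons share ITM2, the reported differences also imply the IBM-versus-IRM gap for each model, since (ITM2 − IBM) − (ITM2 − IRM) = IRM − IBM. The short calculation below uses only the numbers stated above and makes the consistency with the ideal-condition results explicit.

```python
# ITM2 (alpha = 0.7, beta = 0.3) minus each baseline, in dB, as reported in the text
reported = {
    "DNN": {"vs_IBM": {"SDR": 1.28, "SIR": -2.70, "SAR": 2.12},
            "vs_IRM": {"SDR": 0.21, "SIR": 3.69, "SAR": -1.65}},
    "LSTM": {"vs_IBM": {"SDR": 0.96, "SIR": -2.88, "SAR": 1.77},
             "vs_IRM": {"SDR": 0.29, "SIR": 2.75, "SAR": -1.14}},
}

# (ITM2 - IBM) - (ITM2 - IRM) = IRM - IBM
gaps = {}
for model, d in reported.items():
    gaps[model] = {m: round(d["vs_IBM"][m] - d["vs_IRM"][m], 2) for m in ("SDR", "SIR", "SAR")}
    print(model, "IRM - IBM [dB]:", gaps[model])
```

For both models the implied gap is positive for the SDR and SAR and negative for the SIR, i.e., the IRM leads in SDR and SAR while the IBM leads in SIR, as in Table 2.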

To further validate these results, we compare the power spectrograms of the separated signals in Figure 5. For example, around 2 s into the speech signal of speaker 1 and around 1.5 s into that of speaker 2, differences in separation performance among the masks can be observed. The IBM removes the interfering speech more effectively, but its spectrum shows significant distortion compared with the clean speech. The IRM better preserves the original target speech, but its spectrum retains more interference components than that of the clean speech. The ITM leaves fewer interference components while retaining the spectral structure of the original target signal. The spectrograms thus also indicate that the ITM combines the advantages of the IBM and IRM while mitigating their shortcomings, supporting the effectiveness of the proposed method for speech separation.

5. Conclusions and Future Works

In this paper, we proposed an ideal threshold-based mask (ITM) that combines the strengths of the ideal binary mask (IBM) and the ideal ratio mask (IRM) to further improve single-channel speech separation. The IBM is advantageous for improving speech intelligibility but loses more of the target signal's energy, whereas the IRM is beneficial for enhancing speech quality but retains more interference energy. The proposed method aims to exploit the strengths of both while mitigating their weaknesses. Experiments on two-speaker speech separation were conducted, and three objective metrics were used to evaluate the results: the signal-to-distortion ratio (SDR), signal-to-interference ratio (SIR), and signal-to-artifact ratio (SAR). We discussed the impact of different threshold combinations on speech separation performance and suggested thresholds that achieve an overall improvement. The experimental results show that, with appropriate thresholds, the proposed method can exploit the advantages of both the IBM and IRM and further improve the accuracy of speech separation.

This study demonstrates that combining the strengths of different masks can enhance speech separation performance. In future work, we will consider the characteristics of specific speakers as well as the differences among speaker combinations, and we will explore more effective ways of combining different masks to further improve the accuracy of speech separation.

Author Contributions

Writing—original draft, P.C.; Writing—review and editing, P.C., B.T.N., K.I. and T.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Makino, S.; Lee, T.; Sawada, H. Blind Speech Separation; Springer: Berlin/Heidelberg, Germany, 2007; Volume 615.

- Qian, Y.; Weng, C.; Chang, X.; Wang, S.; Yu, D. Past review, current progress, and challenges ahead on the cocktail party problem. Front. Inf. Technol. Electron. Eng. 2018, 19, 40–63.

- Wang, D.; Chen, J. Supervised speech separation based on deep learning: An overview. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 1702–1726.

- Agrawal, J.; Gupta, M.; Garg, H. A review on speech separation in cocktail party environment: Challenges and approaches. Multimed. Tools Appl. 2023, 82, 31035–31067.

- Yu, D.; Deng, L. Automatic Speech Recognition; Springer: Berlin/Heidelberg, Germany, 2016; Volume 1.

- Malik, M.; Malik, M.K.; Mehmood, K.; Makhdoom, I. Automatic speech recognition: A survey. Multimed. Tools Appl. 2021, 80, 9411–9457.

- Stüber, G.L. Principles of Mobile Communication; Springer: Berlin/Heidelberg, Germany, 2001; Volume 2.

- Onnela, J.P.; Saramäki, J.; Hyvönen, J.; Szabó, G.; Lazer, D.; Kaski, K.; Kertész, J.; Barabási, A.L. Structure and tie strengths in mobile communication networks. Proc. Natl. Acad. Sci. USA 2007, 104, 7332–7336.

- Campbell, J.P. Speaker recognition: A tutorial. Proc. IEEE 1997, 85, 1437–1462.

- Hansen, J.H.; Hasan, T. Speaker recognition by machines and humans: A tutorial review. IEEE Signal Process. Mag. 2015, 32, 74–99.

- Davies, M.E.; James, C.J. Source separation using single channel ICA. Signal Process. 2007, 87, 1819–1832.

- Cooke, M.; Hershey, J.R.; Rennie, S.J. Monaural speech separation and recognition challenge. Comput. Speech Lang. 2010, 24, 1–15.

- Weinstein, E.; Feder, M.; Oppenheim, A.V. Multi-channel signal separation by decorrelation. IEEE Trans. Speech Audio Process. 1993, 1, 405–413.

- Nugraha, A.A.; Liutkus, A.; Vincent, E. Multichannel audio source separation with deep neural networks. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 24, 1652–1664.

- Loizou, P.C. Speech Enhancement: Theory and Practice; CRC Press: Boca Raton, FL, USA, 2007.

- Jensen, J.; Hansen, J.H.L. Speech enhancement using a constrained iterative sinusoidal model. IEEE Trans. Speech Audio Process. 2001, 9, 731–740.

- Boll, S. Suppression of acoustic noise in speech using spectral subtraction. IEEE Trans. Acoust. Speech, Signal Process. 1979, 27, 113–120.

- Sameti, H.; Sheikhzadeh, H.; Deng, L.; Brennan, R.L. HMM-based strategies for enhancement of speech signals embedded in nonstationary noise. IEEE Trans. Speech Audio Process. 1998, 6, 445–455.

- Reynolds, D.A.; Quatieri, T.F.; Dunn, R.B. Speaker verification using adapted Gaussian mixture models. Digit. Signal Process. 2000, 10, 19–41.

- Brown, G.J.; Cooke, M. Computational auditory scene analysis. Comput. Speech Lang. 1994, 8, 297–336.

- Wang, D.; Brown, G.J. Computational Auditory Scene Analysis: Principles, Algorithms, and Applications; Wiley-IEEE Press: Hoboken, NJ, USA, 2006.

- Liu, Y.; Wang, D. A CASA approach to deep learning based speaker-independent co-channel speech separation. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5399–5403.

- Wang, D. On ideal binary mask as the computational goal of auditory scene analysis. In Speech Separation by Humans and Machines; Springer: Berlin/Heidelberg, Germany, 2005; pp. 181–197.

- Kjems, U.; Boldt, J.B.; Pedersen, M.S.; Lunner, T.; Wang, D. Role of mask pattern in intelligibility of ideal binary-masked noisy speech. J. Acoust. Soc. Am. 2009, 126, 1415–1426.

- Narayanan, A.; Wang, D. Ideal ratio mask estimation using deep neural networks for robust speech recognition. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 7092–7096.

- Hummersone, C.; Stokes, T.; Brookes, T. On the ideal ratio mask as the goal of computational auditory scene analysis. In Blind Source Separation: Advances in Theory, Algorithms and Applications; Springer: Berlin/Heidelberg, Germany, 2014; pp. 349–368.

- Li, N.; Loizou, P.C. Factors influencing intelligibility of ideal binary-masked speech: Implications for noise reduction. J. Acoust. Soc. Am. 2008, 123, 1673–1682.

- Wang, D.; Kjems, U.; Pedersen, M.S.; Boldt, J.B.; Lunner, T. Speech intelligibility in background noise with ideal binary time-frequency masking. J. Acoust. Soc. Am. 2009, 125, 2336–2347.

- Wang, Y.; Narayanan, A.; Wang, D. On training targets for supervised speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1849–1858.

- Minipriya, T.; Rajavel, R. Review of ideal binary and ratio mask estimation techniques for monaural speech separation. In Proceedings of the 2018 Fourth International Conference on Advances in Electrical, Electronics, Information, Communication and Bio-Informatics (AEEICB), Chennai, India, 27–28 February 2018; pp. 1–5.

- Chen, J.; Wang, D. DNN Based Mask Estimation for Supervised Speech Separation. In Audio Source Separation; Springer: Cham, Switzerland, 2018; pp. 207–235.

- Xu, Y.; Du, J.; Dai, L.R.; Lee, C.H. An experimental study on speech enhancement based on deep neural networks. IEEE Signal Process. Lett. 2013, 21, 65–68.

- Du, J.; Tu, Y.; Dai, L.R.; Lee, C.H. A Regression Approach to Single-Channel Speech Separation Via High-Resolution Deep Neural Networks. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 24, 1424–1437.

- Delfarah, M.; Wang, D. Features for masking-based monaural speech separation in reverberant conditions. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 1085–1094.

- Weninger, F.; Erdogan, H.; Watanabe, S.; Vincent, E.; Le Roux, J.; Hershey, J.R.; Schuller, B. Speech enhancement with LSTM recurrent neural networks and its application to noise-robust ASR. In Proceedings of the Latent Variable Analysis and Signal Separation: 12th International Conference, LVA/ICA 2015, Liberec, Czech Republic, 25–28 August 2015; Proceedings 12. pp. 91–99.

- Chen, J.; Wang, D. Long short-term memory for speaker generalization in supervised speech separation. J. Acoust. Soc. Am. 2017, 141, 4705–4714.

- Strake, M.; Defraene, B.; Fluyt, K.; Tirry, W.; Fingscheidt, T. Separated noise suppression and speech restoration: LSTM-based speech enhancement in two stages. In Proceedings of the 2019 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 20–23 October 2019; pp. 239–243.

- Garofolo, J.S. Timit Acoustic Phonetic Continuous Speech Corpus; Linguistic Data Consortium: Philadelphia, PA, USA, 1993.

- Huang, P.S.; Kim, M.; Hasegawa-Johnson, M.; Smaragdis, P. Deep learning for monaural speech separation. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014.

- Grais, E.M.; Sen, M.U.; Erdogan, H. Deep neural networks for single channel source separation. In Proceedings of the IEEE International Conference on Acoustic, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Vincent, E.; Gribonval, R.; Fevotte, C. Performance measurement in blind audio source separation. IEEE Trans. Audio Speech Lang. Process. 2006, 14, 1462–1469.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).