Abstract

Researchers have increasingly focused on techniques for the automatic detection of dementia from the speech of individuals. Leveraging the rapid advancements in Deep Learning (DL) and Natural Language Processing (NLP), these techniques have shown great potential in dementia detection. In this context, this paper proposes a method for dementia detection from the transcribed speech of subjects. Unlike conventional methods that rely on advanced language models to assess the ability of the subject to form coherent and meaningful sentences, our approach relies on the center of focus of the subjects and how it changes over time as the subject describes the content of the cookie theft image, a commonly used image for evaluating one’s cognitive abilities. To do so, we divide the cookie theft image into regions of interest, and identify, in each sentence spoken by the subject, which regions are being talked about. We employed a Long Short-Term Memory (LSTM) neural network to learn the different patterns of dementia subjects and control subjects, and used it to perform a classification evaluated with 10-fold cross-validation. Our experiments on the Pitt corpus of the DementiaBank yielded an accuracy of 82.9% at the subject level and 81.0% at the sample level. By employing data-augmentation techniques, the accuracy at the two levels was increased to 83.6% and 82.1%, respectively. Our proposed method outperforms most of the conventional methods, which reach, at best, an accuracy equal to 81.5% at the subject level.

1. Introduction

Dementia, a neurodegenerative disease, gradually deteriorates cognitive functioning, affecting language, memory, executive functions, motivation, motor skills, and emotional well-being [1]. With Alzheimer’s Disease (AD) being the most common form of dementia and with it being mainly correlated with age, the number of dementia cases has been drastically rising in our modern aging society [2]. Today, as of 2023, over 55 million people are estimated to have dementia worldwide, with it being the seventh leading cause of death and one of the main causes of disability and dependency among the elderly population [3].

Currently, dementia, in particular AD, has no known cure. However, early detection and prevention have been proven to be effective in enhancing therapy outcomes and improving the quality of life for patients. This is by no means an easy task to perform though. AD is characterized by the intracellular accumulation of tau-protein fibers and the extracellular accumulation of beta-amyloid plaques, which can remain silent for up to 20 years before noticeable symptoms appear. By this stage, treatment becomes less effective.

That being said, Mild Cognitive Impairment (MCI) can be an early indicator of the potential development of AD and dementia. Generally speaking, cognition encompasses memory, attention, language, and decision-making abilities. Identifying MCI narrows down the search space and allows resources to be dedicated to assessing and slowing down the cognitive decline of subjects with MCI, thereby improving their quality of life.

Motivated by this, the research community has dedicated much attention to the task of the early detection of dementia. The vast majority of the research effort is driven by advances and expertise in the medical field [4,5,6] and imaging technologies [7]. However, with the recent advances in Natural Language Processing (NLP) owing to the proliferation of Deep Learning (DL) technologies, more and more attention has been devoted to the detection of dementia from natural spoken language [8,9,10,11,12]. In this context, researchers have addressed the language and cognitive thinking deficiency symptoms of dementia. A common observation is that people with dementia find it difficult to form coherent sentences or find the appropriate vocabulary to describe a certain situation. Moreover, it is common for people with late-stage dementia to forget part of their sentence by the time they reach its end. With this idea in mind, if we refer to a language model trained on the speech of healthy people, identifying the deficiencies in the speech of dementia patients is similar to identifying anomalies in normal speech. The idea of language models has been deeply studied, with various approaches [9,10,11,12] proposed for modeling dementia patients’ behavior.

However, in addition to their inability to make coherent sentences or find the appropriate vocabulary (as evaluated using the lexical frequencies in [10,12]), dementia patients suffer from a lack of focus and from not being able to connect things within a certain context [13]. Motivated by this idea, rather than addressing the former issue (i.e., an inability to make coherent sentences or find the appropriate vocabulary), in this paper, we address the latter one (i.e., a lack of focus and an inability to connect things). In our work, we make use of a particular type of data commonly collected for dementia diagnosis: the image description task. In this task, subjects are given an image, the most famous one being the cookie theft image, and are asked to describe its contents. By dividing the image into regions of interest, and by tracking, over time, the regions the subject is focusing on, we can identify whether the subject in question is a dementia patient or one of the control subjects. Given the limited amount of data available in the data set used (i.e., the Pitt corpus of the DementiaBank [14]), we propose an augmentation technique that queries GPT-3 [15] to increase the number of samples from subjects with mild and severe dementia, allowing us to balance the data set for a better classification performance.

Our approach outperforms the conventional methods [8,9,11,12], reaching a subject-level accuracy of 83.6% when data augmentation is used.

The remainder of this paper is structured as follows: Section 2 discusses some of the recent works related to the detection of dementia, and presents our motivations for conducting this research. Section 3 describes our proposed method in detail. Section 4 presents the data set used, the conventional methods used for comparison, and our evaluation metrics. Section 5 shows the experimental results of our proposed method. Finally, Section 6 concludes this work and opens directions for future work.

2. Related Work and Motivations

2.1. Related Work

In recent years, various approaches have been proposed for dementia detection, each trying to exploit some of the manifestations of symptoms exhibited by dementia patients.

Roark et al. [16] annotated speech features using NLP and automatic speech recognition tools. Their best model, combining speech and language features with cognitive test scores, achieved an AUC of 0.86 in classifying individuals with MCI and healthy subjects from 74 speech recordings. Zhu et al. [17] explored different transfer learning techniques for dementia detection by utilizing pre-trained models and fine-tuning them with dementia data sets. Jarrold et al. [18] utilized a data set consisting of semi-structured interviews from individuals with various forms of dementia and healthy individuals. They extracted 41 features using an Automatic Speech Recognition (ASR) system, including the speech rate, pause length, and vowel and consonant duration. Using a multilayered perceptron network, they achieved an 88% classification accuracy for Alzheimer’s Disease (AD) vs. healthy participants based on lexical and auditory data. Luz et al. [19] extracted features from the Carolina Conversations Collection, a data set containing interviews with dementia patients and healthy individuals. They used a graph-based approach to encode turn patterns and speech rates, and created an additive logistic regression model that could differentiate speech between healthy subjects and dementia patients.

More recently, benefiting from advances in signal processing techniques, novel approaches were introduced to detect indicators of dementia that humans might overlook. Tóth et al. [20] found that acoustic parameters, such as the hesitation ratio, speech speed, and pauses, extracted through an ASR system, outperformed manually computed features when combined with machine learning approaches. In a similar way, König et al. [21] achieved high accuracy rates (79% for MCI vs. healthy individuals, 94% for AD vs. healthy individuals, and 80% for MCI vs. AD) using similar machine learning methods on non-spontaneous speech data collected under controlled settings. Wankerl et al. [8] proposed a method that combines perplexity values from language models trained on speech samples from dementia patients and healthy individuals. Perplexity was used to estimate the fit between a probabilistic language model and unseen text samples. N-gram language models, widely used in processing speech and written language, calculate word sequence frequencies to create a probability distribution from training text data. The simplest form is the unigram (1-gram) model, which assigns probabilities to individual words based on their frequencies.
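To make the notion of perplexity more concrete, the following minimal sketch fits a unigram model and scores two unseen token sequences. The add-one smoothing, the function names, and the toy sentences are illustrative assumptions and are not taken from [8].

```python
import math
from collections import Counter


def train_unigram(tokens):
    """Return a function giving add-one-smoothed unigram probabilities."""
    counts = Counter(tokens)
    total = len(tokens)
    vocab_size = len(counts) + 1  # one extra slot shared by unseen words

    def prob(word):
        return (counts.get(word, 0) + 1) / (total + vocab_size)

    return prob


def perplexity(prob, tokens):
    """Perplexity is the exponential of the mean negative log-probability."""
    log_sum = sum(math.log(prob(t)) for t in tokens)
    return math.exp(-log_sum / len(tokens))


# Toy training text (assumption, not from the Pitt corpus).
train = "the boy takes a cookie the girl laughs".split()
model = train_unigram(train)

seen = perplexity(model, "the boy takes a cookie".split())
unseen = perplexity(model, "water overflows sink floor wet".split())
```

A sample made of words the model has seen during training obtains a lower perplexity than a sample made only of unseen words, which is the property that perplexity-based methods exploit.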

Fritsch et al. [9] used a statistical n-gram language model that they enhanced by using the rwthlm toolkit [22] to create Neural Network Language Models (NNLMs) with Long Short-Term Memory (LSTM) cells. The model was used to evaluate the perplexity of the subjects’ speech to derive who has dementia and who does not. Cohen et al. [10] interrogated two neural language models, one trained exclusively on texts collected from subjects who have dementia and the other on texts from subjects who do not. In their method, which they referred to as “A tale of two perplexities”, they used the two language models to measure the perplexity of an unknown subject, and depending on the perplexities measured for both models, they could identify whether the subject had dementia or not. Bouazizi et al. [11] used the language model AWD-LSTM [23], which they fine-tuned for classification to distinguish dementia subjects from control subjects. Their approach aimed to address the limited amount of data available in the DementiaBank data set [14] by first learning the language patterns from larger corpora, and then fine-tuning the model to the particularities of the data set at hand. Zheng et al. [12] introduced the concept of simplified language models, which they developed with reference to part-of-speech tags to train the two-perplexity method [10]. They used such simplified language models to overcome the limited amount of training data in the corpus used (i.e., the Pitt corpus of the DementiaBank [14]).

2.2. Motivations

In recent years, with the rapid advances in machine learning-related technologies, in particular deep learning, more focus has been placed on exploiting such technologies in the medical field. In the context of the identification of mental health problems, the idea of using language models, in particular, has attracted a large amount of attention over the past few years. The automatic detection of depression [24,25,26,27], anxiety [28], bipolar disorder [29], and even schizophrenia [30] using advanced language models such as BERT [31], GPT-3, and GPT-4 [15] are some of the research directions in this context. However, the power of these language models comes at a cost. To begin with, these language models are massive and require a tremendous amount of data and computation power to train. Not only are the resources required for this training beyond the reach of most researchers, but they are also unjustifiable when performing simple classification tasks. That being said, it is possible to reduce such costs by employing techniques such as transfer learning, where the models are initially trained on a large corpus unrelated to the task at hand and acquired from any source, and are then fine-tuned to the task using the data set at hand, regardless of its size [32]. However, this does not solve the second issue, which is the overfitting of these models on small data sets. When classifying data with very small numbers of instances and where each instance is very small in size, such language models tend to learn specific patterns in the training samples, making the classification of the test samples unreliable and sometimes non-reproducible. Finally, the use of such language models assumes that the information to be extracted from the samples lies within the grammar and vocabulary-related contexts of the samples, which is not always the case.

In the context of dementia detection, a few attempts have aimed to use language models for a more accurate detection compared to conventional methods, notably the aforementioned works of Zheng et al. [12], Cohen et al. [10], and Bouazizi et al. [11]. However, the aforementioned limitations of the use of language models for a task with very scarce and small data sets are applicable here.

In addition, we believe that language, manifested in the richness of the vocabulary and the grammatical correctness, is not the only component that can be extracted from the speech of the subjects. A key component that can be extracted is the topics the subjects are talking about, how often they change (from one sentence to the next, or within the same sentence), and how they relate to one another. This is, in particular, true for a common task in mental health assessment, which is the picture description task, where the subjects are given a known image and are asked to describe what they see.

Motivated by these observations, our work aims to perform the task of dementia detection using a novel approach that addresses the topics being talked about when subjects are performing an image description task in the Pitt corpus in the DementiaBank data set [14].

3. Proposed Approach

3.1. Problem Statement

In a previous work of ours [12], we aimed to evaluate the importance of grammar and vocabulary in the detection of dementia. In the current work, we aim to identify other clues that might help identify individuals with dementia. Given the cookie theft image description task, we aim to use such clues to classify a group of subjects into ones with dementia and control ones.

3.2. Proposed Approach

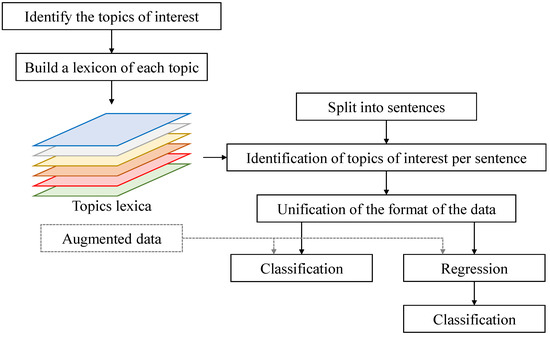

In Figure 1, we show the flowchart of our proposed approach. In our work, we first defined the main objects of interest present in the image shown to the subjects. In the case of the cookie theft image, these include the mother, the boy, the girl, the cookie jar, etc. In addition, extra topics that are not directly identifiable in the image were considered, such as when the subjects are referring to themselves, or to animate and inanimate objects that are not present in the image. We automatically collected words that could be associated with each of the topics into a separate set using online sources (independently from the data set at hand). The transcribed speech of each subject was then split into sentences. In each of the sentences we checked for the presence of (and counted) the words associated with each of the topics. By observing the change of topics of interest over time (i.e., from one sentence to the next), we aimed to distinguish behaviors of subjects with dementia from control subjects. In addition, information such as the length of the sentence, the grammatical structure correctness, etc., was extracted for each sentence, and its change was observed over time and used in the distinction. To observe and track these data over time, we trained an LSTM neural network either for classification, where the output classes are “dementia” and “control”, or regression, where the output is the Mini-Mental State Examination (MMSE) score, which was then used for class identification. In addition, to improve the classification and regression performance, an augmentation technique was used, where GPT-3 [15] was queried to generate samples of data, as we will explain later.

Figure 1.

A flowchart of the proposed method.

In the following, we describe in more detail each of the steps described above.

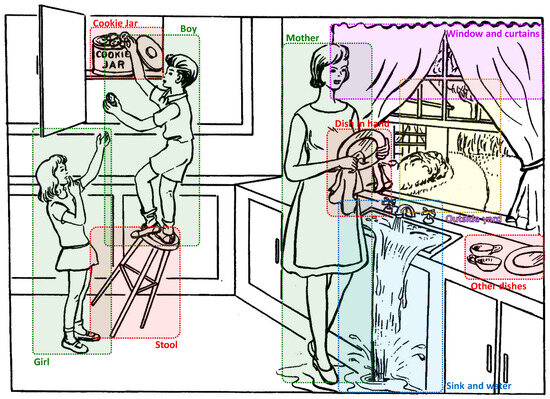

3.2.1. Dividing the Image into Regions

The first step of our approach consisted of dividing the cookie theft image into regions of interest, as shown in Figure 2. In total, 10 regions were defined. For each of the regions, we manually defined a set of seed words that we believed to be associated with its content. For instance, for the mother, we defined a small set of words including “woman”, “mother”, “lady”, etc. Similarly, for the view outside the window, we defined a small set of words including “yard”, “garden”, “bush”, etc. In addition to the seed words of regions identified in the cookie theft image, we considered extra sets of seed words, containing the following information:

Figure 2.

Different regions of the cookie theft image.

- Set 1: This is the set of words used by the subjects when referring to themselves, using expressions such as “I think”, “I might”, “my opinion”, etc.

- Set 2: This is the set of animate human objects that are referred to by the subject but are not present in the image, such as “father”, “husband”, etc.

- Set 3: This is the set of animate non-human objects that are referred to by the subject but are not present in the image, such as “pet”, etc.

- Set 4: This is the set of inanimate objects that are referred to by the subject but are not present in the image, such as “sun”, “sky”, etc.

- Set 5: This is the set of interjections showing hesitation or thinking, such as “umm”, “oh”, etc.

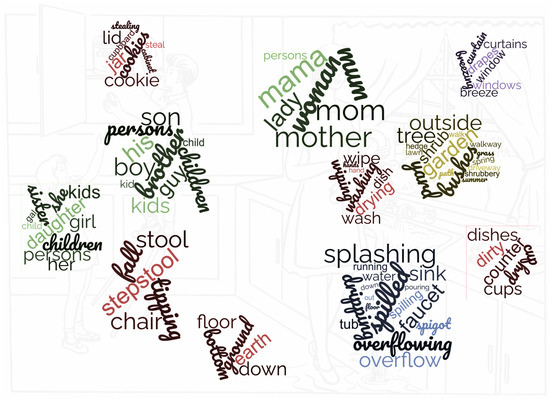

We used an online thesaurus (https://www.thesaurus.com/) as well as the Natural Language Toolkit (https://www.nltk.org/) (NLTK) to collect synonyms, hypernyms, and hyponyms of the seed words, which we used to enrich our sets of words associated with the regions of interest or the other topics referred to. This allowed us to associate a certain number of words (mainly nouns) with each of the regions, as shown in Figure 3 (words of other topics are not displayed in the image). Note that words that were extracted from the thesauri but did not appear in the data set were discarded, since they would never be matched in any sentence.

Figure 3.

Sets of words associated with the different regions.
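The enrichment of a seed set can be sketched as follows. This is a minimal illustration only: the dictionary `related` is a hypothetical stand-in for the thesaurus and NLTK look-ups, and the function name and example words are assumptions rather than the actual sets used in this work.

```python
def expand_topic(seed_words, related_words, corpus_vocabulary):
    """Grow a seed set with related words, keeping only words seen in the corpus."""
    expanded = set(seed_words)
    for seed in seed_words:
        # Add the synonyms, hypernyms, and hyponyms found for this seed word.
        expanded.update(related_words.get(seed, ()))
    # Words that never occur in the data set are discarded.
    return expanded & set(corpus_vocabulary)


# Hypothetical look-up table standing in for the thesaurus / NLTK queries.
related = {
    "mother": ["mom", "mama", "parent", "matriarch"],
    "woman": ["lady", "female"],
}
# Hypothetical vocabulary observed in the transcripts.
vocabulary = {"mother", "mom", "lady", "woman", "sink", "cookie"}

mother_set = expand_topic(["mother", "woman"], related, vocabulary)
```

Here, `mother_set` keeps “mother”, “woman”, “mom”, and “lady”, while “mama”, “parent”, “matriarch”, and “female” are dropped because they do not occur in the toy vocabulary.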

In addition to the contextual information extracted above, we added a few extra pieces of information extracted at the sentence level. They included the sentence structure correctness, the repetition of the same word multiple times, the length of the sentence (in words), and the presence of indications of location (e.g., “here”, “there”, etc.).

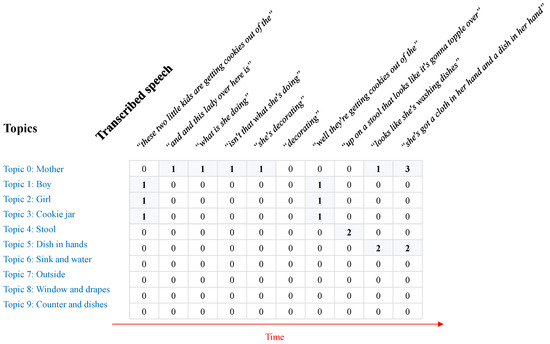

3.2.2. Tracking the Subject’s Focus over Time

Since we are dealing with transcribed text in this work, we split the speech of the subject into sentences. Each sentence is considered as a time step, and the topics addressed by the subject in the sentence are considered to be the topics of interest during that particular time step. As shown in Figure 4, it is possible to create a matrix $M_i$ for the i-th subject, of size $N_T \times N_S^{(i)}$, where $N_T$ is the total number of topics and $N_S^{(i)}$ is the number of sentences spoken by the i-th subject. The cell $M_i(x, y)$ shows the number of occurrences of words of the topic x in the sentence y. Let us denote the set of tokens collected for the topic x by $T_x$, such as $T_x = \{w_1^x, w_2^x, \dots\}$. Let us also denote a sentence from the subject’s speech by $s_y$, which can be tokenized into a set of tokens, such as $s_y = \{t_1^y, t_2^y, \dots, t_{n_y}^y\}$. The value of the cell $M_i(x, y)$ will thus be:

$$M_i(x, y) = \sum_{k=1}^{n_y} \mathbb{1}\left[t_k^y \in T_x\right],$$

where $\mathbb{1}[\cdot]$ is equal to 1 when its condition holds and to 0 otherwise.

In brief, this matrix tracks the topics of focus/interest of the subject over time.

Figure 4.

An example of the matrix collected for a subject using our proposed method.

We also considered a second alternative, in which the cell simply indicates whether the topic is mentioned in the sentence or not. As such, instead of counting the number of words related to the topic present in the sentence, we set the cell value to 1 if the topic is present, and to 0 otherwise.
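Both variants of the matrix can be sketched in a few lines. The regular-expression tokenizer, the function names, and the three toy topics below are assumptions made for illustration; the actual word sets are those collected in Section 3.2.1.

```python
import re


def tokenize(sentence):
    """Lowercase a sentence and split it into word tokens."""
    return re.findall(r"[a-z']+", sentence.lower())


def topic_matrix(sentences, topic_words, binary=False):
    """Return the topic names and a matrix with one row per topic and one column per sentence."""
    topics = list(topic_words)
    matrix = []
    for topic in topics:
        row = []
        for sentence in sentences:
            # Count the tokens of this sentence that belong to the topic's word set.
            count = sum(1 for tok in tokenize(sentence) if tok in topic_words[topic])
            row.append(min(count, 1) if binary else count)
        matrix.append(row)
    return topics, matrix


# Toy topic sets and sentences (assumptions).
topic_words = {
    "mother": {"mother", "woman", "lady"},
    "boy": {"boy", "son"},
    "cookie_jar": {"cookie", "cookies", "jar"},
}
sentences = [
    "The boy is taking cookies from the cookie jar.",
    "The mother is washing dishes.",
]

topics, counts = topic_matrix(sentences, topic_words)
_, presence = topic_matrix(sentences, topic_words, binary=True)
```

In this toy example, the count-based matrix stores 3 for the cookie jar in the first sentence, whereas the presence-based matrix stores 1 in the same cell.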

In both cases, we also added to the generated matrix a few rows containing the information previously described, computed using the same method (i.e., Sets 1 to 5 as well as the non-contextual information).

It is worth mentioning that some words might overlap between different sets associated with different topics (i.e., regions in the original cookie theft image). In particular, words such as “she”, “her”, etc. appear in the sets of words associated with both the mother and the girl. To handle this, we assigned such a word to the candidate topic that was mentioned most recently in the previous sentences. Obviously, more elaborate techniques can be used to make sure the topic is correctly identified. However, for simplicity, and to keep our approach reproducible, we opted for such a simple approach.
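A minimal sketch of this rule is given below. The function and variable names are hypothetical, and one detail is an assumption: an unambiguous mention that appears earlier in the same sentence is also taken into account when looking for the most recent topic.

```python
def resolve_ambiguous(sentence_tokens, exclusive_words, ambiguous_words):
    """Assign each ambiguous word to the candidate topic mentioned most recently."""
    last_seen = {}  # topic -> position of its latest unambiguous mention
    position = 0
    resolved = []  # tuples of (sentence index, word, topic or None)
    for idx, tokens in enumerate(sentence_tokens):
        for tok in tokens:
            position += 1
            if tok in exclusive_words:
                last_seen[exclusive_words[tok]] = position
            elif tok in ambiguous_words:
                candidates = [t for t in ambiguous_words[tok] if t in last_seen]
                # None means that no candidate topic has been mentioned so far.
                topic = max(candidates, key=last_seen.get) if candidates else None
                resolved.append((idx, tok, topic))
    return resolved


# Toy word-to-topic tables (assumptions).
exclusive = {"mother": "mother", "woman": "mother", "girl": "girl"}
ambiguous = {"she": ["mother", "girl"], "her": ["mother", "girl"]}
speech = [
    ["the", "girl", "laughs"],
    ["the", "mother", "washes", "dishes"],
    ["she", "is", "not", "looking"],
]

resolved = resolve_ambiguous(speech, exclusive, ambiguous)
```

In this example, “she” in the third sentence is attributed to the mother, since the mother is the candidate topic mentioned last.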

3.2.3. Direct Classification of the Subjects

To identify whether a subject has dementia or is a control subject, we use an LSTM neural network, which we train for classification. However, to train such a network, the data need to be standardized first. The data acquired from the previous steps result in matrices of different sizes for each subject. While the number of topics is the same throughout the entire process, each subject describes the image in their own way. This means that some would briefly describe it while others describe it in more detail. This makes the dimension specifying the number of sentences differ from one subject to another (it is, therefore, referred to as $N_S^{(i)}$, where i is the index of the subject in the data set). To address this issue, we pad the data to the highest number of sentences in our data set. The padding is performed at the beginning of the matrix, meaning that we assume that a certain number of empty sentences preceded the subject’s speech. This results, finally, in a set of equally sized matrices of size $N_T \times N_S^{\max}$, where $N_T$ is the number of topics and $N_S^{\max}$ is the number of sentences in the longest image description in the data set.
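The padding step can be illustrated with the following minimal sketch, in which each matrix is stored as a list of topic rows. The function names and the toy matrices are assumptions made for illustration.

```python
def pad_front(matrix, target_len):
    """Left-pad every topic row with zeros up to target_len sentences."""
    return [[0] * (target_len - len(row)) + list(row) for row in matrix]


def pad_dataset(matrices):
    """Pad all subject matrices to the longest description in the data set."""
    longest = max(len(m[0]) for m in matrices)
    return [pad_front(m, longest) for m in matrices]


subject_a = [[1, 0, 2], [0, 1, 0]]  # 2 topics x 3 sentences
subject_b = [[1], [1]]              # 2 topics x 1 sentence

padded = pad_dataset([subject_a, subject_b])
```

Padding at the beginning keeps the sentences actually spoken by the subject at the last time steps seen by the LSTM.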

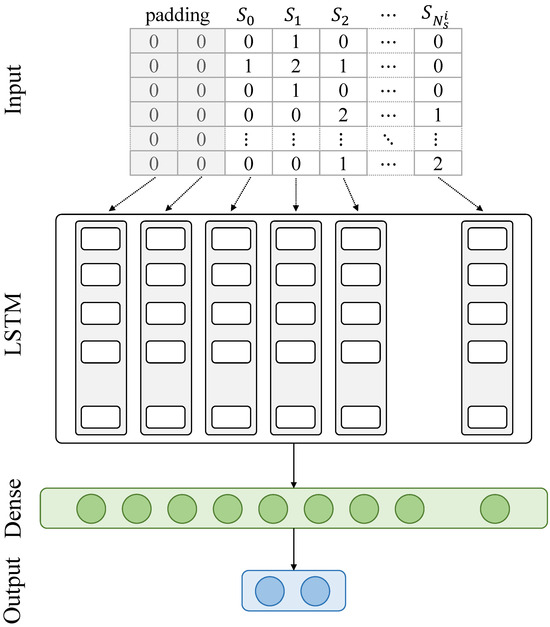

The LSTM neural network used for classification is shown in Figure 5. It is composed of an input layer whose shape is equal to $(N_S^{\max}, N_T)$, where $N_S^{\max}$ refers to the maximum number of sentences and $N_T$ refers to the number of topics. The input layer feeds the LSTM layer, whose number of hidden units is set to $N_h$, and which is connected to a first dense layer with $N_d$ neurons followed by a second dense layer with two neurons, which returns the class probabilities (i.e., dementia vs. control). In practice, the values of the different parameters were set as shown in Table 1.

Figure 5.

The LSTM neural network used for classification.

Table 1.

Parameters of the neural network used for classification.

3.2.4. Regression-Based Classification

In the data set at hand, 82.8% of the samples come not only with their associated classes, but also with an MMSE score attributed by a doctor to that particular sample. MMSE scores range between 0 and 30 and indicate the mental state of a subject. A score below 10 indicates that the subject has a severe impairment. A score between 10 and 20 indicates that the subject has a mild/moderate impairment. A score higher than 20 usually indicates a small or non-existent impairment. A person with dementia in its early stage tends to have scores in the range of 19–24 [33]. However, this is a one-directional observation, meaning that a subject who scores in this range does not necessarily have a mild cognitive impairment or early dementia. Through multiple observations, it is possible to make a more accurate judgement of the mental state.

With that in mind, in addition to the direct classification explained in Section 3.2.3, we train a similar LSTM neural network for regression, where the output is the MMSE score. In this second neural network, the last layer is made of a single neuron (instead of two) and its activation is set to be linear rather than a softmax. By selecting the appropriate threshold, which is, according to [33], within the range of 19–24, we separate the samples into two groups indicating whether their members correspond to a subject with dementia or to a control subject.

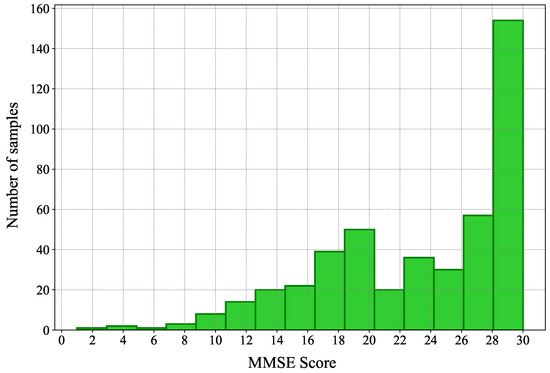

3.2.5. Data Augmentation

As stated above, the number of samples with severe dementia (i.e., with a low MMSE score) is very limited in our data set. In the next section, we will describe in more detail the data set at hand. However, to justify the data augmentation, we present the distribution of the samples in the data set. In Figure 6, we show the distribution of the MMSE scores among samples in the Pitt corpus. As can be seen, the number of samples with low and moderate MMSE scores is far smaller than that of samples with high MMSE scores. Unsurprisingly, during the classification phase, our classification and regression neural networks both had difficulty identifying samples in the lowest range of MMSE scores.

Figure 6.

The distribution of the MMSE scores among samples in the Pitt corpus.

To address this issue, we opted for a data-augmentation technique to create more samples for the lower end of the spectrum. We queried GPT-3 for such samples, and observed the data generated. Below are two examples of data generated:

- Example 1: “In this … picture, there’s a … um … room, I believe. It’s disorganized, with water … spilling from the sink. And there are … um … children, yes, children present. They seem to be … doing something near the counter. Sorry, it’s a bit fuzzy.”

- Example 2: “I can see … a room, maybe a kitchen. There is this one … um … the boy … and water. Two children … yes, children there. They’re … um … A woman here.”

While not similar to the samples we have in our data set, the generated samples are representative of what a person with severe dementia might say. In total, we generated 50 samples of data for people with severe dementia. GPT-3 was queried to automatically attribute scores between 1 and 9 depending on the grammatical correctness of the sentences they contain. For the above two samples, the first was attributed a score of 9, whereas the second was attributed a score equal to 3. In addition, we queried GPT-3 for 30 samples for subjects with MMSE scores between 10 and 18, and 30 samples with MCI, which were attributed scores from 19 to 24. GPT-3 was asked to attribute the scores to these samples as well.

It is important to keep in mind that the augmented data were used purely for training, and none of them were used as part of the test set during any of the classification phases.

4. Data Collection and Evaluation Methods

4.1. Data Set

This work uses the DementiaBank subset of the TalkBank data set [14] to assess the performance of our proposed method against existing ones [8,9,11,12] in detecting dementia. TalkBank is a comprehensive collection of multilingual data established in 2002 to foster research in human and animal communication. It encompasses diverse domains such as first/second language acquisition, conversation analysis, and dementia, among others. The DementiaBank subset comprises video and audio recordings, along with transcribed texts in different languages, featuring individuals with dementia and individuals without it (i.e., control subjects). For our analysis, we focused exclusively on the Pitt corpus within DementiaBank, which contains audio samples and manually annotated transcripts of picture-description tasks. In this task, patients were asked to describe the content of a given picture with the assistance of an interviewer. The corpus encompasses 309 recordings from 194 AD patients and 243 recordings from 98 healthy individuals. The average word count in the AD patients’ text samples is 91, while it is 97 in the text samples of healthy subjects. For augmentation purposes, we added to these data only the samples generated for low and mild MMSE scores (i.e., samples with an MMSE score below 10 and samples with an MMSE score between 10 and 24, respectively), and considered every five samples to have been generated by a different subject. In Table 2, we show the structure of the data set before and after augmentation.

Table 2.

The structure of the data set used for classification.

The same data set was also used for regression. However, not all subjects were given accurate MMSE scores. In addition, the same subject might have scores in different ranges. For instance, a subject with MCI might sometimes have scores higher than 24, which makes the decision as to how to categorize such subjects harder. In Table 3, we show the distribution of samples before and after augmentation in our data set. Note that the same subject could belong to different groups, whereas samples are exclusive to each group. When running the regression-based classification, rather than opting for a voting approach, we considered a subject to have dementia if at least one of their samples has an MMSE score below a certain threshold.

Table 3.

The structure of the data set used for regression.
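The subject-level decision rule used in the regression-based classification can be sketched as follows. The threshold value, the subject identifiers, and the scores are hypothetical and serve only to illustrate the rule.

```python
def sample_has_dementia(mmse_score, threshold):
    """A sample is flagged when its MMSE score falls below the threshold."""
    return mmse_score < threshold


def subject_has_dementia(sample_scores, threshold):
    """A subject is flagged if at least one of their samples is flagged (no voting)."""
    return any(sample_has_dementia(score, threshold) for score in sample_scores)


# Hypothetical estimated MMSE scores, grouped by subject.
estimated = {
    "subject_01": [27.5, 22.0, 26.1],
    "subject_02": [28.3, 29.0],
}
THRESHOLD = 24  # hypothetical value chosen within the 19-24 range

labels = {
    subject: subject_has_dementia(scores, THRESHOLD)
    for subject, scores in estimated.items()
}
```

With this rule, a single low score is enough to flag a subject, which differs from a majority vote over the samples of that subject.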

4.2. Conventional Methods

In Section 2.1, we introduced a few works related to the automatic detection of dementia. Some of these works will be used to position the performance of our proposed method in this field. In the following, we briefly describe the conventional methods against which we evaluate our proposal:

- Wankerl et al. [8] proposed a simple approach that relies on n-grams to detect AD from spoken language.

- Fritsch et al. [9] used a statistical n-gram language model that they enhanced by using the rwthlm toolkit [22] to create NNLMs with LSTM cells. The model is used to evaluate the perplexity of the subjects to derive who has dementia and who does not.

- Bouazizi et al. [11] used the language model AWD-LSTM [23], which they fine-tuned for classification to identify dementia vs. control subjects.

- Zheng et al. [12] introduced the concept of simplified language models, which they developed with reference to part-of-speech tags to train the two-perplexity method [10]. They used such simplified language models to overcome the issue of the limitation of training data in the corpus used (i.e., the Pitt corpus of the DementiaBank [14]).

- GPT-4 was also queried with the text samples for all subjects with the purpose of identifying whether the sample is generated by a subject with dementia or a healthy one.

Since the aforementioned works were evaluated on the same data set as ours, we refer to the results reported in their respective papers during the evaluation.

4.3. Evaluation Metrics

To assess the performance of our proposed method in detecting dementia, we used the following classification evaluation metrics:

- Accuracy: this metric measures the ratio of the number of instances (subjects or samples) correctly classified to the total number of instances.

- Precision: this metric is calculated for each class. It measures the ratio of instances correctly classified for that class to the instances classified as part of that class.

- Recall: this metric is also calculated for each class. It measures the ratio of instances correctly classified for that class to the total instances of that class.

- F1-Score: this metric combines the precision and the recall and is usually used when the data set is unbalanced, or to compare approaches to one another.

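For a given class, let us denote the number of true positives, true negatives, false positives, and false negatives as $TP$, $TN$, $FP$, and $FN$, respectively. The aforementioned metrics are calculated as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$\text{F1-Score} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$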

In addition to the aforementioned metrics, during the regression phase, we use the Mean Absolute Error (MAE) between the estimated MMSE score and the ground-truth one as a measure of correctness of the regression. Obviously, the classification based on the regression is evaluated using the accuracy, precision, recall, and F1-Score.
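As an illustration, the metrics used in this section can be computed with the short sketch below. The function names and the toy labels and scores are assumptions; returning 0 when a denominator is empty is also a convention chosen here.

```python
def classification_metrics(y_true, y_pred, positive):
    """Compute accuracy, precision, recall, and F1-Score for one class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def mean_absolute_error(y_true, y_pred):
    """Average absolute gap between ground-truth and estimated scores."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy labels and MMSE scores (assumptions).
true_labels = ["dementia", "dementia", "control", "control", "dementia"]
pred_labels = ["dementia", "control", "control", "dementia", "dementia"]
metrics = classification_metrics(true_labels, pred_labels, positive="dementia")

mae = mean_absolute_error([28, 12, 20], [26, 15, 20])
```

For the dementia class in this toy example, there are two true positives, one false positive, one false negative, and one true negative.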

These metrics are measured at the subject level and the sample level. As mentioned, in the Pitt corpus, each subject has multiple samples collected at different times. Given that the amount of data available in the Pitt corpus is very limited (i.e., 552 samples in total), splitting the data set into a training set, a validation set, and a test set would reduce the amount of data used for training to a point where it becomes hard for the neural network to find patterns that are valid for enough samples. Therefore, we opted for a 10-fold cross-validation instead. In brief, we split the data set into 10 subsets. In every fold, we used nine of the subsets to train the neural network and evaluated its performance on the remaining one. The split of the data set was carried out at the subject level (i.e., samples of a given subject were all used exclusively for training or for validation). In addition, to ensure that the selected models are reliable, each model was selected at an epoch whose results were stable over the three preceding and the three following epochs.
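A subject-level split of this kind can be sketched as follows. The round-robin assignment of shuffled subjects to folds, the random seed, and the toy subject identifiers are assumptions made for illustration and do not reproduce the exact partition used in our experiments.

```python
import random


def subject_level_folds(sample_subjects, n_folds=10, seed=0):
    """Yield (train_idx, test_idx) pairs so that no subject spans both sides."""
    subjects = sorted(set(sample_subjects))
    random.Random(seed).shuffle(subjects)
    # Assign whole subjects, not individual samples, to folds.
    fold_of = {s: i % n_folds for i, s in enumerate(subjects)}
    for fold in range(n_folds):
        test_idx = [i for i, s in enumerate(sample_subjects) if fold_of[s] == fold]
        train_idx = [i for i, s in enumerate(sample_subjects) if fold_of[s] != fold]
        yield train_idx, test_idx


# Toy data: 60 samples produced by 25 hypothetical subjects.
sample_subjects = [f"subj_{i % 25:02d}" for i in range(60)]
folds = list(subject_level_folds(sample_subjects))
```

Each sample index appears in exactly one held-out subset, and all the samples of a given subject always fall on the same side of the split.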

5. Experimental Results

5.1. Performance of the Proposed Method

5.1.1. Direct Classification-Based Dementia Detection

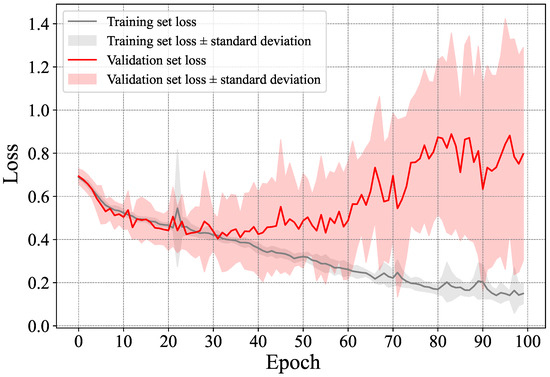

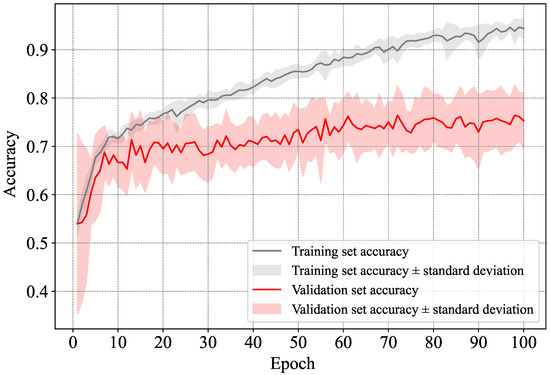

As previously stated, we ran our method using a 10-fold cross-validation approach. For every sub-set, we trained the LSTM network from scratch using the data from the remaining ones. The network was trained for 100 epochs, and the average loss and accuracy are reported for all the epochs. In Figure 7 and Figure 8, we show, respectively, the average loss and accuracy as well as their standard deviation in all the folds for both the training and validation sets. Note that the validation set, for each fold, is made up of a limited number of subjects, which explains the high standard deviation in the figures. As can be seen in Figure 7, the trained models started overfitting (on average) after epoch 40 or so, as the training and validation losses started to diverge, with the divergence becoming more noticeable after epoch 60. However, the accuracy itself was rather stable. This means that the models generated around the 40th epoch are more reliable than the ones at the end of the training (i.e., after 100 epochs). With that in mind, as can be seen in Figure 8, the accuracy reached over 75% starting from epoch 60 and over 77% around the end of the 100 epochs. The overfitting is in part due to the very limited amount of training data used in each fold. Overfitting is expected to occur when using so little data, despite opting for a simple neural network and avoiding using time-distributed dense and convolutional layers before the LSTM one. This observation is in line with prior works such as that of Fritsch et al. [9], where it has been suggested to stop the training at epoch 20, as such a problem is unavoidable. Note that these results are reported at the sample level, and the results for subject-level classification were derived after the classification of samples was performed.

Figure 7.

Average and standard deviation of the loss in the training and validation sets.

Figure 8.

Average and standard deviation of the accuracy in the training and validation sets.
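The model selection rule mentioned in Section 4 (an epoch whose results are stable over the three epochs before and after it) could be sketched as below. The paper does not define "stable" numerically, so the spread criterion and the `tolerance` value are assumptions made purely for illustration, as is the choice of the highest-accuracy epoch among the stable ones. Epochs are 0-indexed in this sketch.

```python
def select_stable_epoch(val_accuracy, half_window=3, tolerance=0.03):
    """Return the index of the best epoch whose neighbourhood is stable.

    An epoch counts as stable when the validation accuracy varies by at
    most `tolerance` (assumed value) over the `half_window` epochs before
    and after it. Returns None when no epoch qualifies.
    """
    best_epoch, best_accuracy = None, float("-inf")
    for epoch in range(half_window, len(val_accuracy) - half_window):
        window = val_accuracy[epoch - half_window : epoch + half_window + 1]
        is_stable = max(window) - min(window) <= tolerance
        if is_stable and val_accuracy[epoch] > best_accuracy:
            best_epoch, best_accuracy = epoch, val_accuracy[epoch]
    return best_epoch
```

A rule of this kind avoids picking an isolated accuracy spike, which is a real risk when each validation fold contains few subjects.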

To avoid encumbering the figure, we did not include the accuracy and loss of the training and validation sets after augmentation. However, they followed very similar patterns to those before augmentation.

For a better evaluation, in Table 4, we show the performance at its best for both classes (i.e., dementia and control) as well as the overall performance, at the subject level and at the sample level. We also show the performance of the classification after data augmentation. Note that the augmented data were added in their entirety to the training set in each fold, and were not split among the folds.

Table 4.

Classification accuracy, precision, recall, and F1-Score of the proposed method.
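Since subject-level results are derived from the sample-level predictions, one simple way to aggregate them is a majority vote over the samples of each subject. The paper does not state which aggregation rule was used, so the vote and the tie-breaking label in the sketch below are assumptions made for illustration only.

```python
from collections import Counter, defaultdict


def subject_level_predictions(sample_subjects, sample_predictions,
                              tie_label="dementia"):
    """Aggregate per-sample labels into one label per subject.

    Majority vote is an assumed rule; ties fall back to `tie_label`,
    which is also an assumption.
    """
    votes = defaultdict(Counter)
    for subject, label in zip(sample_subjects, sample_predictions):
        votes[subject][label] += 1

    decisions = {}
    for subject, counter in votes.items():
        ranked = counter.most_common()
        tied = len(ranked) > 1 and ranked[0][1] == ranked[1][1]
        decisions[subject] = tie_label if tied else ranked[0][0]
    return decisions
```

For instance, a subject with three samples predicted as dementia, control, and dementia would be labeled as dementia under this rule.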

As can be seen, the classification reached an accuracy equal to 80.98% at the sample level and 82.88% at the subject level. The class “dementia”, in particular, presented an accuracy equal to 84.02% with a precision equal to 89.56%. Note that the high precision is partially due to the imbalance in the data set between the two classes. More interestingly, despite the amount of augmented data that have been added to the training set throughout all of the folds, the accuracy has increased by less than 2%. This increase in accuracy is mainly attributed to the better detection of the dementia class. For this particular class, the accuracy has increased from 81.23% to 84.14% at the sample level, and from 84.02% to 85.57% at the subject level. Recall that we augmented the data set by solely adding samples of the dementia class, mainly for the lower end of the spectrum (i.e., samples with MMSE scores below 10). This led to an improvement in the detection of such samples in our data set.

In Section 3.2.2, we proposed a small alteration of our approach where, instead of counting the number of words related to a topic in the sentence, we simply set the cell value to 1 if at least one such word is present, and to 0 otherwise. In Table 5, we report the results of the original method against the alternative one, with and without augmentation. As can be seen, even though both approaches present good performance, counting the words of a topic rather than merely checking for the presence of one of its words yields a much better accuracy.

Table 5.

A comparison between the classification accuracy, precision, recall, and F1-Score of the two alternatives of the proposed method.
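The difference between the two encodings compared in Table 5 can be sketched as follows. The topic names and keyword lists here are hypothetical placeholders, not the vocabulary used in the paper, and the whitespace tokenization is a simplification.

```python
# Hypothetical topic vocabulary, for illustration only.
TOPIC_KEYWORDS = {
    "boy": {"boy", "stool", "falling"},
    "cookie_jar": {"cookie", "cookies", "jar"},
    "mother": {"mother", "woman", "drying"},
    "sink": {"sink", "water", "overflowing"},
}


def encode_sentence(sentence, binary=False):
    """Return one value per topic for a single sentence.

    binary=False: number of topic-related words in the sentence.
    binary=True:  1 if at least one topic-related word occurs, else 0.
    """
    tokens = [tok.strip(".,!?") for tok in sentence.lower().split()]
    row = []
    for keywords in TOPIC_KEYWORDS.values():
        count = sum(1 for tok in tokens if tok in keywords)
        row.append(min(count, 1) if binary else count)
    return row


counts = encode_sentence("The boy is taking cookies from the cookie jar.")
flags = encode_sentence("The boy is taking cookies from the cookie jar.",
                        binary=True)
```

With this toy vocabulary, the count-based row keeps the information that the cookie jar was mentioned three times, whereas the binary row only records that it was mentioned.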

5.1.2. Regression-Based Dementia Detection

Here, we report the regression results from which we derived the classes of samples by setting a threshold above which a sample was considered to belong to a control (i.e., healthy) subject, and below which a sample was considered to belong to a subject with dementia. We first report the results prior to data augmentation, and then observe the improvements when using data augmentation.

Before Data Augmentation

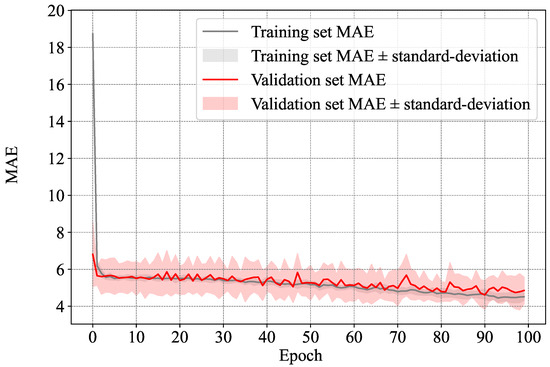

As previously described in Section 3.2 and Figure 1, we evaluated the MMSE score of the different samples through regression, and used the resulting scores to evaluate whether a subject has dementia or not. In Figure 9, we show the average loss in terms of Mean Absolute Error (MAE) as well as its standard deviation in all of the folds for both the training and validation sets.

Figure 9.

Average and standard deviation of the MAE between the estimated MMSE score and the ground-truth one in the training and validation sets.

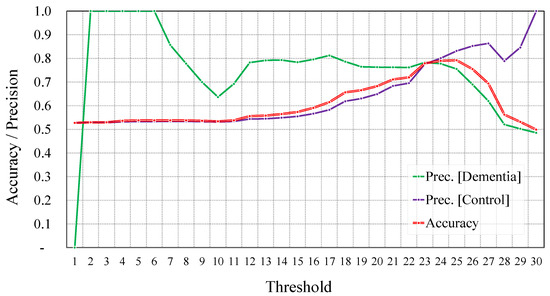

As stated above, it has been observed in [33] that people with MCI tend to obtain an MMSE score in the range of 19–24. Setting a threshold equal to 24 to identify subjects with dementia vs. healthy ones did indeed yield a good classification accuracy of 78.99%. However, to identify the optimal results, rather than fixing the threshold, we varied it and observed the overall accuracy as well as the precision of the two classes. In Figure 10, we show the overall accuracy as well as the precision of the two classes of classification for different thresholds with a step of one point in the MMSE score.

Figure 10.

Overall accuracy and precision per class using different thresholds of the MMSE score before augmentation.

Obviously, for lower threshold values, most samples will be classified as healthy (i.e., control subjects). However, samples that are detected as belonging to the dementia class are very likely to be correctly classified. In a similar way, for very high threshold values, most samples will be classified as being from subjects with dementia. However, samples that are detected as belonging to the healthy class are also very likely to be correctly classified. This justifies the high precision for the dementia class for low thresholds, and the high precision for the healthy class for high thresholds.
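The threshold sweep behind Figures 10 and 11 can be illustrated with the sketch below. It assumes that a sample is assigned to the dementia class when its estimated MMSE score is less than or equal to the threshold; how ties at the threshold were handled in the paper is not stated, so this is an assumption. The function name and the toy data in the usage note are hypothetical.

```python
def sweep_thresholds(predicted_mmse, true_labels, thresholds):
    """Accuracy and per-class precision for each MMSE threshold.

    Assumed rule: score <= threshold -> "dementia", else "control".
    Precision is None when no sample was assigned to the class.
    """
    results = {}
    for threshold in thresholds:
        predictions = [
            "dementia" if score <= threshold else "control"
            for score in predicted_mmse
        ]
        correct = sum(p == t for p, t in zip(predictions, true_labels))
        row = {"accuracy": correct / len(true_labels)}
        for cls in ("dementia", "control"):
            truths = [t for p, t in zip(predictions, true_labels) if p == cls]
            row["precision_" + cls] = (
                sum(t == cls for t in truths) / len(truths) if truths else None
            )
        results[threshold] = row
    return results
```

Running the sweep over `range(10, 31)` on a set of estimated scores reproduces the qualitative behavior described above: at low thresholds few samples are labeled as dementia but those few are mostly correct, and the reverse holds for the control class at high thresholds.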

Accuracy-wise, the highest accuracy was achieved when the threshold was equal to 25, and it reached 79.2%. For the same threshold, the precision of the dementia and control classes reached 75.5% and 83.2%, respectively. However, this discrepancy in terms of precision was minimal when setting the threshold to 23. The overall accuracy reached 77.9%, with a precision equal to 78.4% and 77.6% for the dementia and control classes, respectively.

After Data Augmentation

Since the MAE pattern during the training exhibited a pattern very similar to that reported in Figure 9, we do not show it in this section to avoid encumbering the paper.

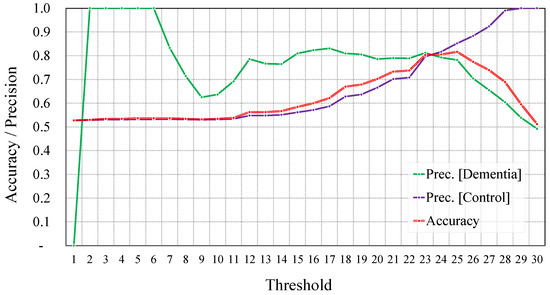

Similar to what we did before augmentation, to identify the optimal results, we varied the threshold and observed the overall accuracy as well as the precision of the two classes. In Figure 11, we report these metrics for different thresholds with a step of one point in the MMSE score.

Figure 11.

Overall accuracy and precision per class using different thresholds of the MMSE score after augmentation.

As we can observe, the highest accuracy was also obtained when we set the threshold to 25. The accuracy obtained for this threshold was equal to 81.2%, with a precision equal to 78.2% and 85.2% for the dementia and control classes, respectively. The optimal balance between accuracy and precision was obtained for threshold values equal to 23 and 24. For consistency with the settings from before augmentation, we report only the results for a threshold value equal to 23. Here, the overall accuracy reached 80.3%, and the precision reached 81.2% and 79.6% for the dementia and control classes, respectively.

Summary of Regression-Based Classification

We summarize the results of classification using these two thresholds (i.e., 25 and 23) to segregate dementia samples from healthy ones in Table 6.

Table 6.

A comparison between the classification accuracy, precision, recall, and F1-Score for the regression-based identification before and after data augmentation.

As can be seen, in this context (i.e., the context of regression-based classification), the data augmentation has contributed more to improving the classification than it did in the direct classification approach. Here, the data augmentation improved the classification accuracy by about 2.5%. This can be attributed to the fact that the regression benefits more from the augmentation of samples at the lower end of the spectrum of MMSE scores. Prior to augmentation, there were very few samples with very low MMSE scores. This makes it hard for the neural network to learn useful patterns from these samples. After augmentation, the number of such samples increased remarkably in the training set, allowing the network to learn useful patterns. On the other hand, in the case of direct classification, samples with MMSE scores ranging from 1 to 24 are all put together within the same class. This hinders the network’s ability to find useful patterns that are common to all these samples.

5.2. Proposed Approach against Conventional Ones

In Table 7, we compare our proposed method against the conventional ones described in Section 4.2. As can be seen, our approach outperformed the conventional ones, except for that of Fritsch et al. [9], whose accuracy reached 85.6%. Our obtained accuracy at the subject level was equal to 83.6%, whereas that of Wankerl et al. [8], Bouazizi et al. [11], and Zheng et al. [12] reached 77.1%, 81.5%, and 75.3%, respectively.

Table 7.

A comparison between the proposed approach and the conventional ones.

The approach of Fritsch et al. [9], relying on sophisticated language models, benefits from the power of the language model itself. A language model is meant to analyze bodies of text data to provide a basis for its word predictions. As such, it is able to identify when a given text follows correct grammar or uses the appropriate words in the text’s context. This explains the good accuracy of this approach, which reached 85.6%. The same can be said for the results obtained using GPT-4. GPT-4 is the leading Large Language Model (LLM) in today’s NLP tasks and has shown good results in almost all of the classification tasks that it was challenged with. The results obtained using GPT-4 reached 86.2%, which does not outperform our method or the conventional ones by a large margin. As stated previously, the gain in performance might not justify the use of such LLMs, in particular if the models are to be used by regular users who do not have access to such models.

Our approach, on the other hand, relies on the topics on which the subject is focusing in the image they are describing. This goes beyond the reach of the language model and addresses how often the subject switches from one topic to another, and their ability to stay focused on a single subject until they fully describe it. It also addresses how they relate things to one another in the image. For instance, a healthy subject might relate the overflowing water to the mother’s lack of attention, whereas a dementia subject might put it in the same sentence as the falling boy.

5.3. Discussion

Our approach is built on the idea that dementia subjects and control subjects have different behaviors when it comes to how focused they are on a single topic, and how frequently they move from one topic to another. They also exhibit different behaviors when it comes to the topics of interest themselves. In this sense, our method tracks, over time, the topics a given subject is focusing on. By analyzing two components, namely the overall distribution of topics of interest as well as how they change from one sentence to the next, the LSTM neural network is meant to capture the trend differences between control subjects and dementia subjects.

Our observations show that control subjects spend more time (compared to dementia subjects) describing the actions of the boy interacting with the cookie jar and the mother interacting with the dish in her hand. Dementia subjects, on the other hand, seem to spend, relative to the control ones, more time describing the window and drapes, the outside, and the kitchen overall. In addition, the jump from one topic to another is much more noticeable for dementia subjects than for control subjects.

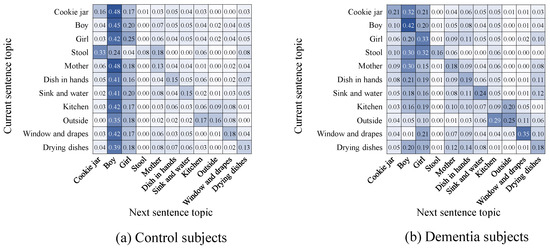

This can be quantitatively measured using the matrices showing topics of interest in two (non-directly) consecutive sentences as shown in the tables given by Figure 12. This figure shows the next appearing topics given a topic discussed in a sentence. As can be seen, control subjects had a higher tendency to talk about the boy in consecutive frames and to get back to him after talking about other topics. Control subjects seemed to switch between talking about the boy and the girl more often. In addition, dementia subjects seemed also to focus more on topics that are not so dominant from the perspective of control subjects (e.g., the drying dishes and the dishes in the hands of the mother).

Figure 12.

Probabilities of topic change from one sentence to the next.
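A transition matrix of the kind shown in Figure 12 can be estimated from the per-sentence topic annotations roughly as follows. This sketch makes simplifying assumptions: each sentence is reduced to the set of topics it mentions, only directly consecutive sentences are paired, and every pair of topics across the two sentences counts as one transition. The exact counting scheme used in the paper may differ.

```python
from collections import defaultdict


def transition_probabilities(sentence_topics):
    """Estimate P(next topic | current topic) from one transcript.

    sentence_topics is a list with one set of topic names per sentence.
    Returns a nested dict: probs[current][next] -> probability.
    """
    counts = defaultdict(lambda: defaultdict(int))
    for current, following in zip(sentence_topics, sentence_topics[1:]):
        for topic_a in current:
            for topic_b in following:
                counts[topic_a][topic_b] += 1

    probabilities = {}
    for topic_a, row in counts.items():
        total = sum(row.values())
        # Normalize each row so that it sums to 1.
        probabilities[topic_a] = {b: n / total for b, n in row.items()}
    return probabilities
```

Accumulating the counts over all transcripts of a group (dementia or control) before normalizing would give one matrix per group, which is what a group-level comparison requires.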

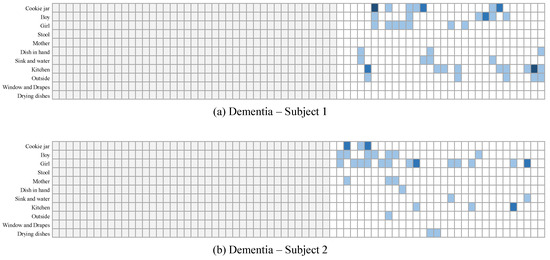

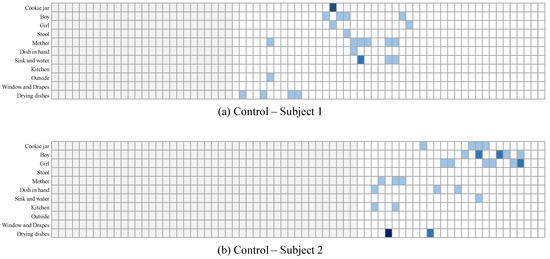

It is important to keep in mind that the observations made above are for consecutive sentences in general. While this shows some aspects of the differences, more important aspects require analyzing the transition between topics over a more extended period of time. To visualize this, in Figure 13 and Figure 14, we show the topics of interest discussed in two samples collected from dementia subjects, and two samples collected from control subjects, respectively. In these figures, the gray areas are the padding added at the beginning. The blue areas are where the subjects used words related to each topic. The darker the color is, the more words are used in the sentence. A clear difference can be seen between the patterns of the two groups. Control subjects seemed to discuss most of the topics in a clear order: the two subjects were first interested in the center of the image where they described the mother and her actions, and then were interested in the children and their actions. The dementia subjects, on the other hand, seemed to have their attention alternate quite often between the mother and her actions and the children and their actions. They also seemed to repeat, quite often, the same words related to a given topic in the same sentence, or over consecutive sentences. While this is just a qualitative observation of ours, the LSTM is more capable of finding such patterns and building classifications based on them.

Figure 13.

Topics discussed for 2 samples collected from dementia subjects.

Figure 14.

Topics discussed for 2 samples collected from control subjects.
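The padding visible as gray areas in Figures 13 and 14 brings every sample to the same number of sentences before it is fed to the LSTM. A minimal sketch of padding at the beginning is given below; the padding value, the fixed length, and the decision to keep only the first `max_len` sentences of longer samples are assumptions made for illustration.

```python
def pad_at_beginning(rows, max_len, n_topics, pad_value=0):
    """Left-pad a list of per-sentence topic rows to exactly max_len rows.

    rows:      one list of n_topics values per sentence.
    pad_value: value used for padding cells (assumed; not from the paper).
    """
    kept = [list(row) for row in rows[:max_len]]  # assumed truncation rule
    n_missing = max_len - len(kept)
    padding = [[pad_value] * n_topics for _ in range(n_missing)]
    return padding + kept
```

Padding at the beginning rather than at the end keeps the actual sentences adjacent to the final LSTM time step, which is a common choice when the last hidden state is used for classification.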

6. Conclusions

In this paper, we proposed a method for dementia detection from the transcribed speech of subjects. Unlike conventional methods [8,9,10,11,12], which rely on language models to address the ability of the subject to make coherent and meaningful sentences, our approach relies on the center of focus of the subjects and how it changes over time as the subject describes the content of the cookie theft picture. To do so, we divide the cookie theft image into regions of interest, and identify, in each sentence spoken by the subject, which regions are being talked about. We employed an LSTM neural network to learn different patterns of dementia subjects and control ones and used it to perform a 10-fold cross-validation-based classification. Our experimental results on the Pitt corpus from the DementiaBank resulted, after data augmentation, in an 83.56% accuracy at the subject level and 82.07% at the sample level. Our proposed method outperformed the conventional methods [8,11,12], which reached, at best, an accuracy equal to 81.5% at the subject level.

Author Contributions

Conceptualization, M.B. and C.Z.; methodology, M.B.; software, M.B.; validation, M.B., C.Z. and S.Y.; formal analysis, M.B. and C.Z.; investigation, M.B. and C.Z.; resources, M.B. and C.Z.; data curation, M.B. and C.Z.; writing—original draft preparation, M.B. and C.Z.; writing—review and editing, S.Y. and T.O.; visualization, M.B. and S.Y.; supervision, T.O.; project administration, T.O.; funding acquisition, M.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Japanese Grants-in-Aid for Scientific Research (KAKENHI) under Grant YYH3Y07.

Data Availability Statement

This research uses a data set offered by TalkBank which can be obtained upon request from the DementiaBank page.

Conflicts of Interest

The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AD | Alzheimer’s Disease |
| ASR | Automatic Speech Recognition |
| AUC | Area Under the Curve |
| DL | Deep Learning |
| FN | False Negative |
| FP | False Positive |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MCI | Mild Cognitive Impairment |
| MMSE | Mini-Mental State Examination |
| NLP | Natural Language Processing |
| NLTK | Natural Language Toolkit |
| NNLM | Neural Network Language Model |
| TP | True Positive |
| TN | True Negative |

References

1. Bourgeois, J.A.; Seaman, J.; Servis, M.E. Delirium dementia and amnestic and other cognitive disorders. In The American Psychiatric Publishing Textbook of Psychiatry; American Psychiatric: Washington, DC, USA, 2008; p. 303.
2. Saxena, S.; Funk, M.; Chisholm, D. World health assembly adopts comprehensive mental health action plan 2013–2020. Lancet 2013, 381, 1970–1971.
3. World Health Organization. Dementia—Key Facts. Available online: https://www.who.int/news-room/fact-sheets/detail/dementia (accessed on 1 November 2023).
4. Valcour, V.G.; Masaki, K.H.; Curb, J.D.; Blanchette, P.L. The detection of dementia in the primary care setting. Arch. Intern. Med. 2000, 160, 2964–2968.
5. Ólafsdóttir, M.; Skoog, I.; Marcusson, J. Detection of dementia in primary care: The Linköping study. Dement. Geriatr. Cogn. Disord. 2000, 11, 223–229.
6. Grande, G.; Vetrano, D.L.; Mazzoleni, F.; Lovato, V.; Pata, M.; Cricelli, C.; Lapi, F. Detection and prediction of incident Alzheimer dementia over a 10-year or longer medical history: A population-based study in primary care. Dement. Geriatr. Cogn. Disord. 2021, 49, 384–389.
7. Murugan, S.; Venkatesan, C.; Sumithra, M.; Gao, X.Z.; Elakkiya, B.; Akila, M.; Manoharan, S. DEMNET: A deep learning model for early diagnosis of Alzheimer diseases and dementia from MR images. IEEE Access 2021, 9, 90319–90329.
8. Wankerl, S.; Nöth, E.; Evert, S. An N-Gram Based Approach to the Automatic Diagnosis of Alzheimer’s Disease from Spoken Language. In Proceedings of the Interspeech, Stockholm, Sweden, 20–24 August 2017; pp. 3162–3166.
9. Fritsch, J.; Wankerl, S.; Nöth, E. Automatic diagnosis of Alzheimer’s disease using neural network language models. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 5841–5845.
10. Cohen, T.; Pakhomov, S. A tale of two perplexities: Sensitivity of neural language models to lexical retrieval deficits in dementia of the Alzheimer’s type. arXiv 2020, arXiv:2005.03593.
11. Bouazizi, M.; Zheng, C.; Ohtsuki, T. Dementia Detection Using Language Models and Transfer Learning. In Proceedings of the 2022 5th International Conference on Software Engineering and Information Management (ICSIM), ICSIM 2022, New York, NY, USA, 21–23 January 2022; pp. 152–157.
12. Zheng, C.; Bouazizi, M.; Ohtsuki, T. An Evaluation on Information Composition in Dementia Detection Based on Speech. IEEE Access 2022, 10, 92294–92306.
13. Jang, H.; Soroski, T.; Rizzo, M.; Barral, O.; Harisinghani, A.; Newton-Mason, S.; Granby, S.; Stutz da Cunha Vasco, T.M.; Lewis, C.; Tutt, P.; et al. Classification of Alzheimer’s disease leveraging multi-task machine learning analysis of speech and eye-movement data. Front. Hum. Neurosci. 2021, 15, 716670.
14. MacWhinney, B. TalkBank. 1999. Available online: http://talkbank.org (accessed on 1 December 2023).
15. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 1877–1901.
16. Roark, B.; Mitchell, M.; Hosom, J.P.; Hollingshead, K.; Kaye, J. Spoken language derived measures for detecting mild cognitive impairment. IEEE Trans. Audio Speech Lang. Process. 2011, 19, 2081–2090.
17. Zhu, Y.; Liang, X.; Batsis, J.A.; Roth, R.M. Exploring deep transfer learning techniques for Alzheimer’s dementia detection. Front. Comput. Sci. 2021, 3, 624683.
18. Jarrold, W.; Peintner, B.; Wilkins, D.; Vergryi, D.; Richey, C.; Gorno-Tempini, M.L.; Ogar, J. Aided diagnosis of dementia type through computer-based analysis of spontaneous speech. In Proceedings of the Workshop on Computational Linguistics and Clinical Psychology: From Linguistic Signal to Clinical Reality, Baltimore, MD, USA, 22–27 June 2014; pp. 27–37.
19. Luz, S.; de la Fuente, S.; Albert, P. A method for analysis of patient speech in dialogue for dementia detection. arXiv 2018, arXiv:1811.09919.
20. Tóth, L.; Hoffmann, I.; Gosztolya, G.; Vincze, V.; Szatlóczki, G.; Bánréti, Z.; Pákáski, M.; Kálmán, J. A speech recognition-based solution for the automatic detection of mild cognitive impairment from spontaneous speech. Curr. Alzheimer Res. 2018, 15, 130–138.
21. König, A.; Satt, A.; Sorin, A.; Hoory, R.; Toledo-Ronen, O.; Derreumaux, A.; Manera, V.; Verhey, F.; Aalten, P.; Robert, P.H.; et al. Automatic speech analysis for the assessment of patients with predementia and Alzheimer’s disease. Alzheimer’s Dement. Diagn. Assess. Dis. Monit. 2015, 1, 112–124.
22. Sundermeyer, M.; Schlüter, R.; Ney, H. rwthlm—The RWTH Aachen University neural network language modeling toolkit. In Proceedings of the Fifteenth Annual Conference of the International Speech Communication Association, Singapore, 14–18 September 2014.
23. Merity, S.; Keskar, N.S.; Socher, R. Regularizing and optimizing LSTM language models. arXiv 2017, arXiv:1708.02182.
24. Rodrigues Makiuchi, M.; Warnita, T.; Uto, K.; Shinoda, K. Multimodal fusion of bert-cnn and gated cnn representations for depression detection. In Proceedings of the 9th International on Audio/Visual Emotion Challenge and Workshop, Nice, France, 21 October 2019; pp. 55–63.
25. Rutowski, T.; Shriberg, E.; Harati, A.; Lu, Y.; Chlebek, P.; Oliveira, R. Depression and anxiety prediction using deep language models and transfer learning. In Proceedings of the 2020 7th International Conference on Behavioural and Social Computing (BESC), Bournemouth, UK, 5–7 November 2020; pp. 1–6.
26. Orabi, A.H.; Buddhitha, P.; Orabi, M.H.; Inkpen, D. Deep learning for depression detection of twitter users. In Proceedings of the Fifth Workshop on Computational Linguistics and Clinical Psychology: From Keyboard to Clinic, New Orleans, LA, USA, 5 June 2018; pp. 88–97.
27. Hayati, M.F.M.; Ali, M.A.M.; Rosli, A.N.M. Depression Detection on Malay Dialects Using GPT-3. In Proceedings of the 2022 IEEE-EMBS Conference on Biomedical Engineering and Sciences (IECBES), Kuala Lumpur, Malaysia, 7–9 December 2022; pp. 360–364.
28. Attas, D.; Power, N.; Smithies, J.; Bee, C.; Aadahl, V.; Kellett, S.; Blackmore, C.; Christensen, H. Automated Detection of the Competency of Delivering Guided Self-Help for Anxiety via Speech and Language Processing. Appl. Sci. 2022, 12, 8608.
29. Jan, Z.; Ai-Ansari, N.; Mousa, O.; Abd-Alrazaq, A.; Ahmed, A.; Alam, T.; Househ, M. The role of machine learning in diagnosing bipolar disorder: Scoping review. J. Med. Internet Res. 2021, 23, e29749.
30. Zhang, T.; Schoene, A.M.; Ji, S.; Ananiadou, S. Natural language processing applied to mental illness detection: A narrative review. NPJ Digit. Med. 2022, 5, 46.
31. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.
32. Howard, J.; Ruder, S. Universal language model fine-tuning for text classification. arXiv 2018, arXiv:1801.06146.
33. Pradier, C.; Sakarovitch, C.; Le Duff, F.; Layese, R.; Metelkina, A.; Anthony, S.; Tifratene, K.; Robert, P. The Mini Mental State Examination at the Time of Alzheimer’s Disease and Related Disorders Diagnosis, According to Age, Education, Gender and Place of Residence: A Cross-Sectional Study among the French National Alzheimer Database. PLoS ONE 2014, 9, e103630.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).