Automatic 3D Building Model Generation from Airborne LiDAR Data and OpenStreetMap Using Procedural Modeling

Abstract

1. Introduction

2. Materials and Methods

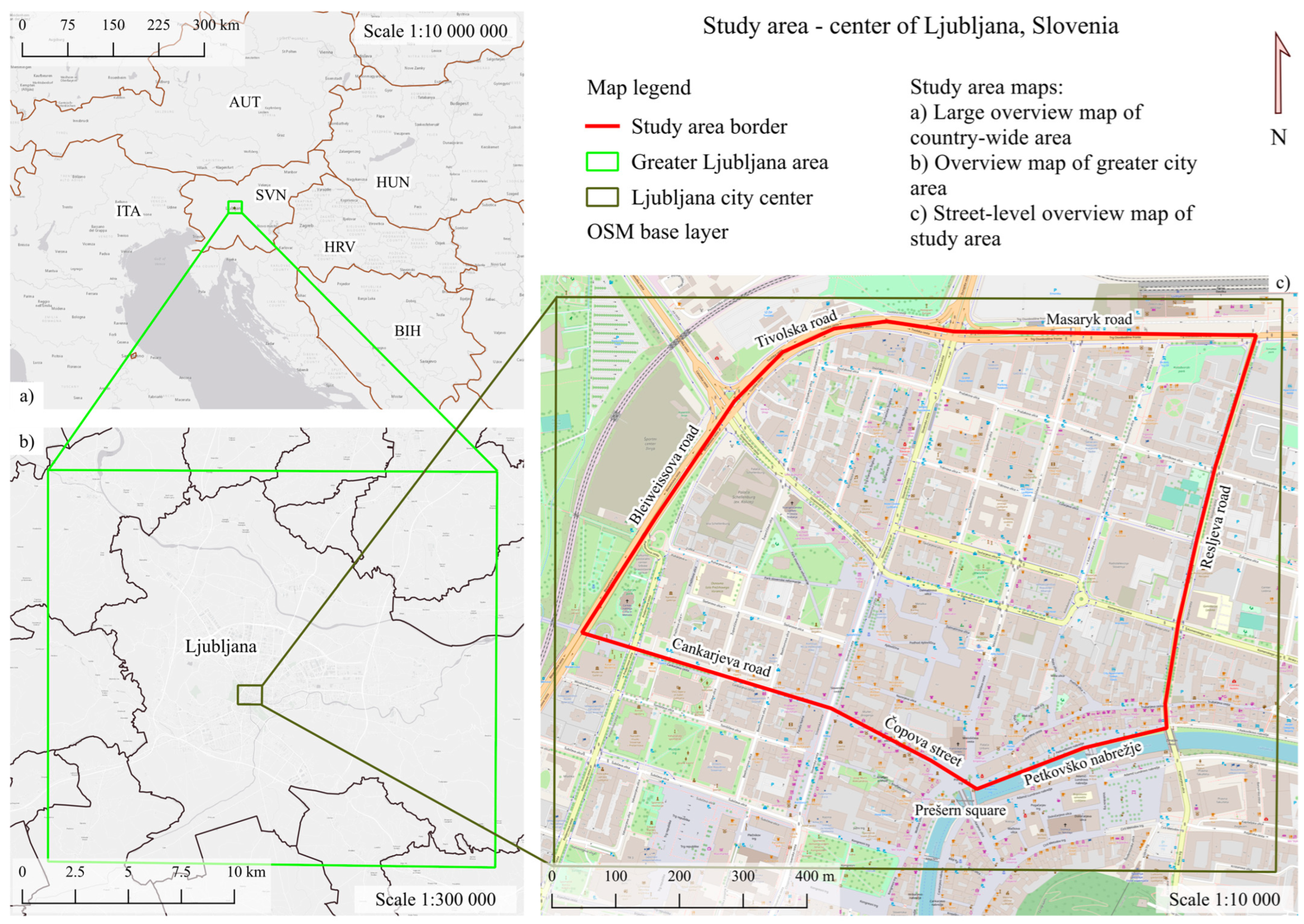

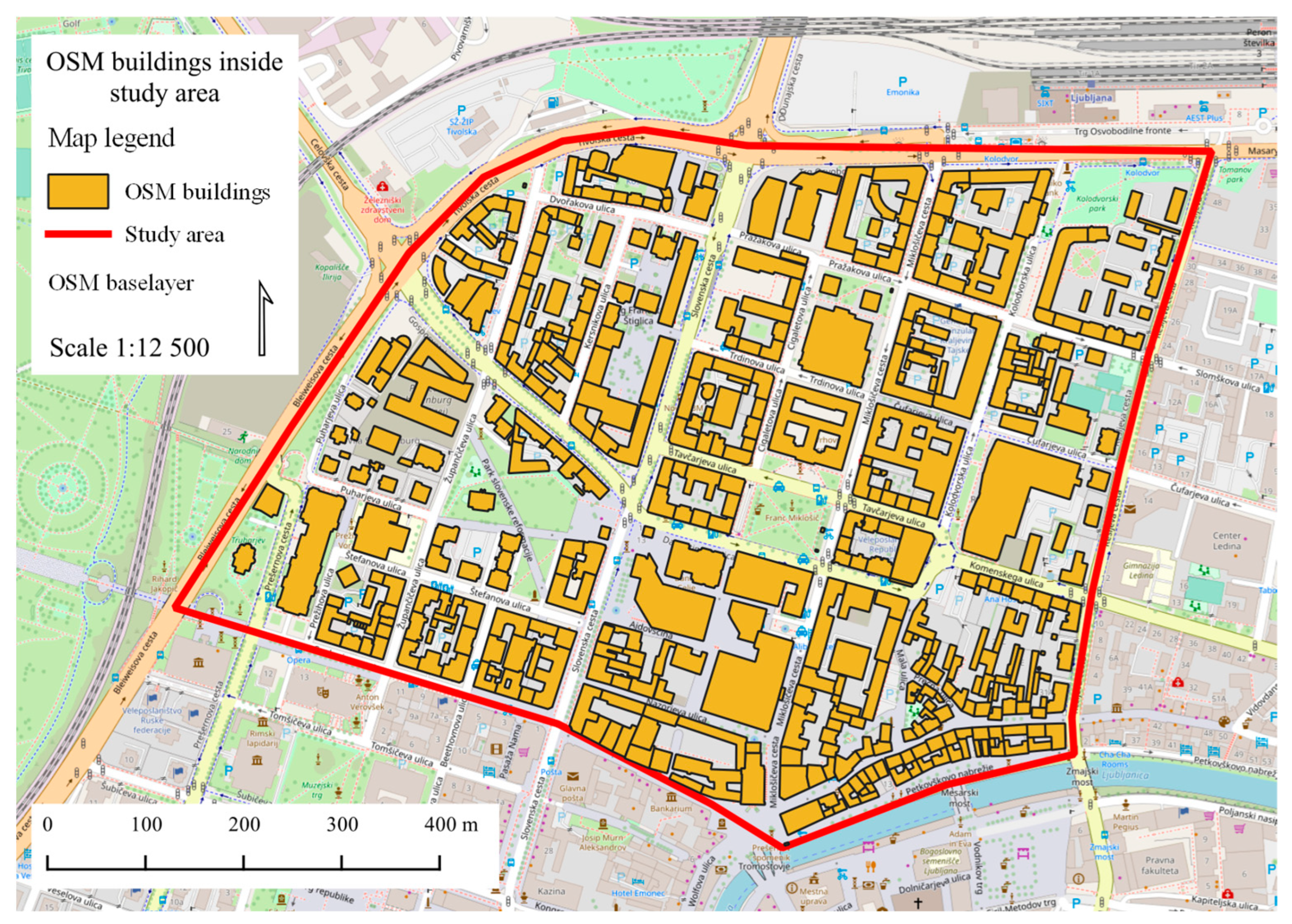

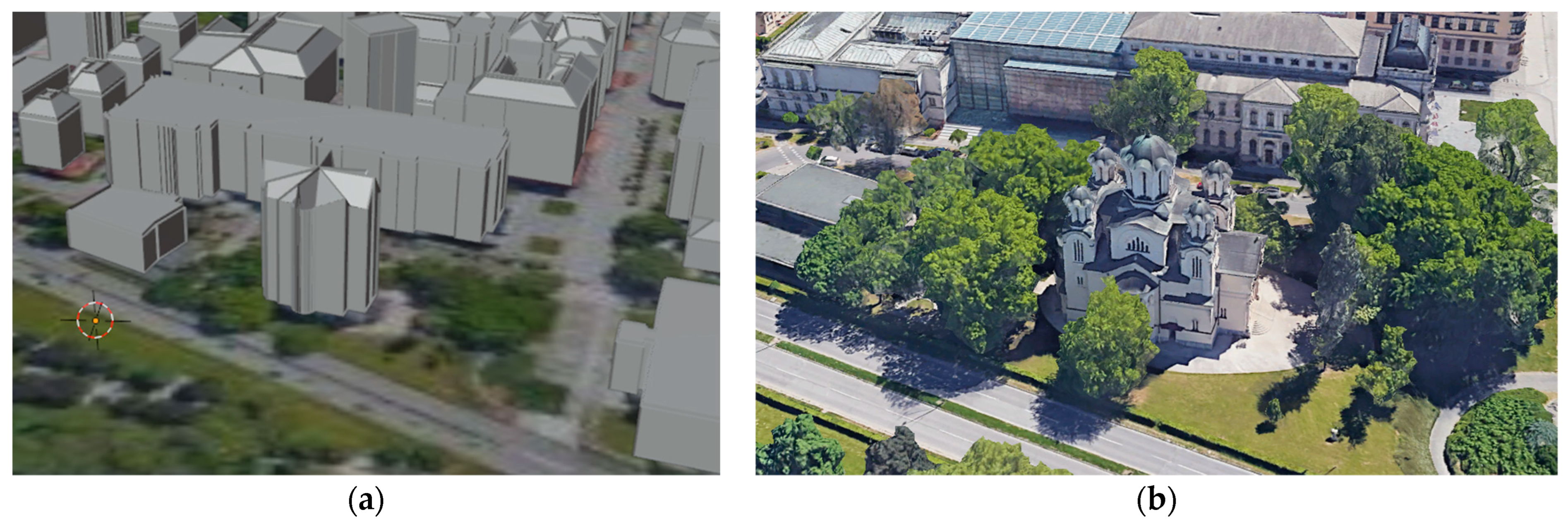

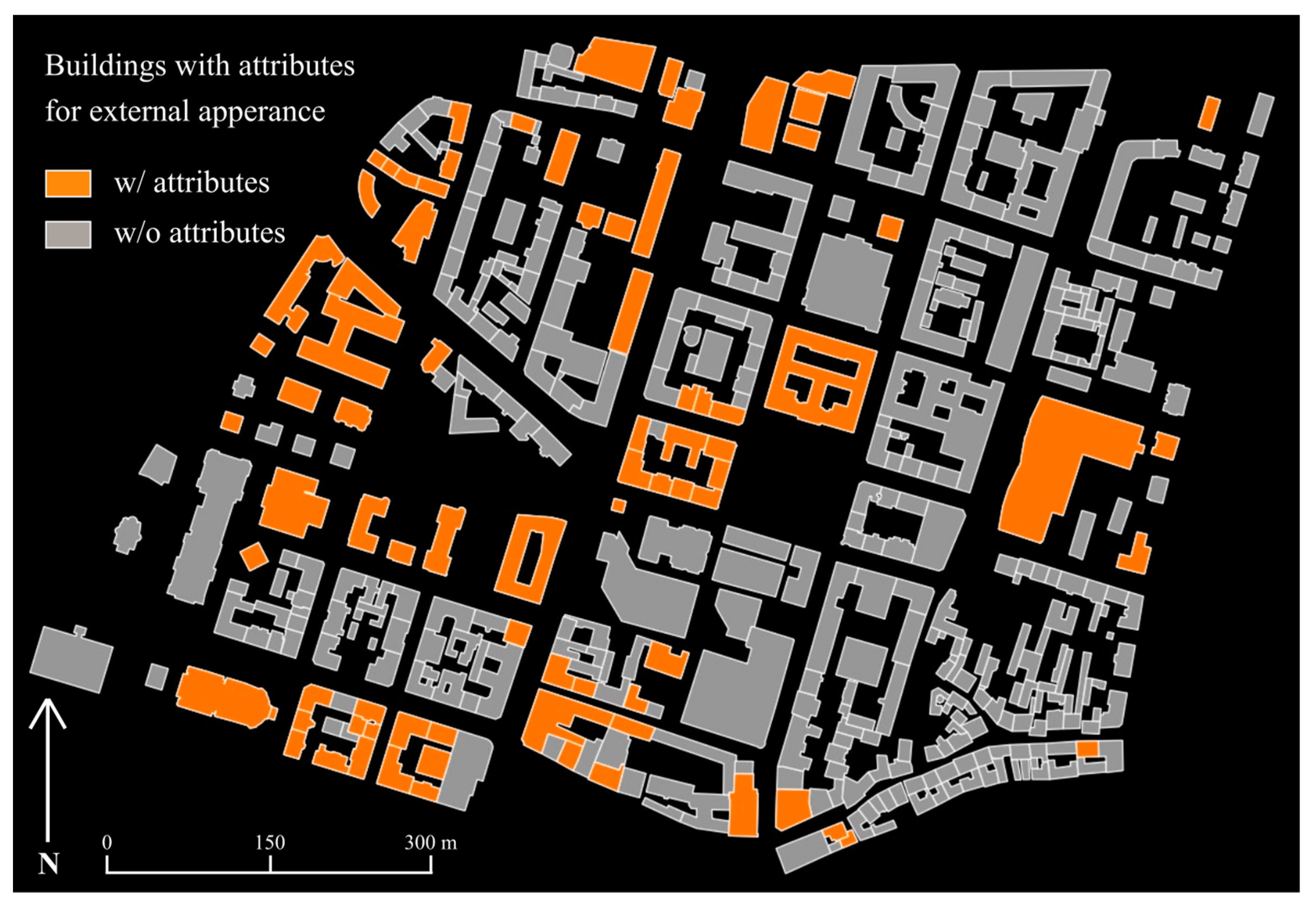

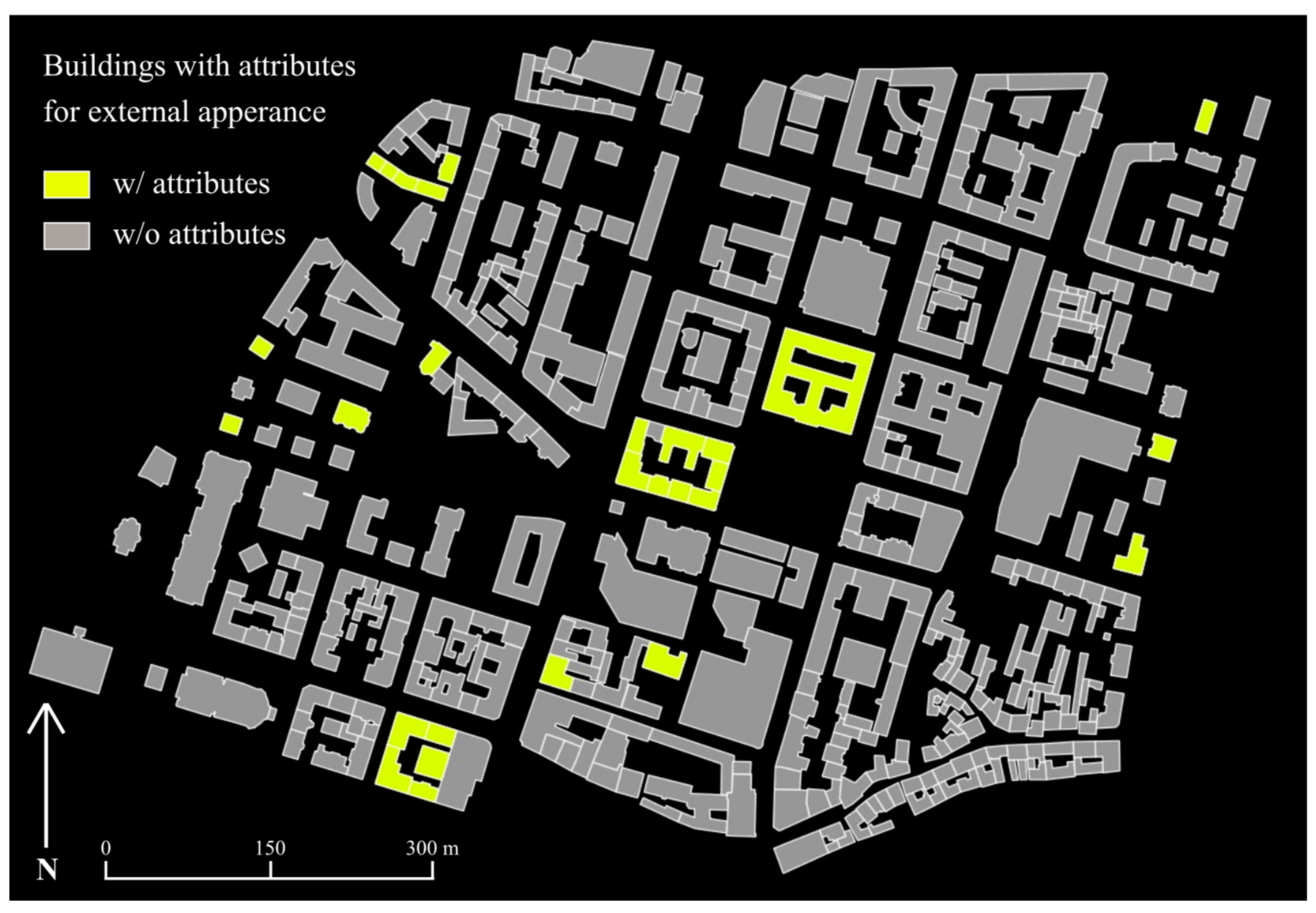

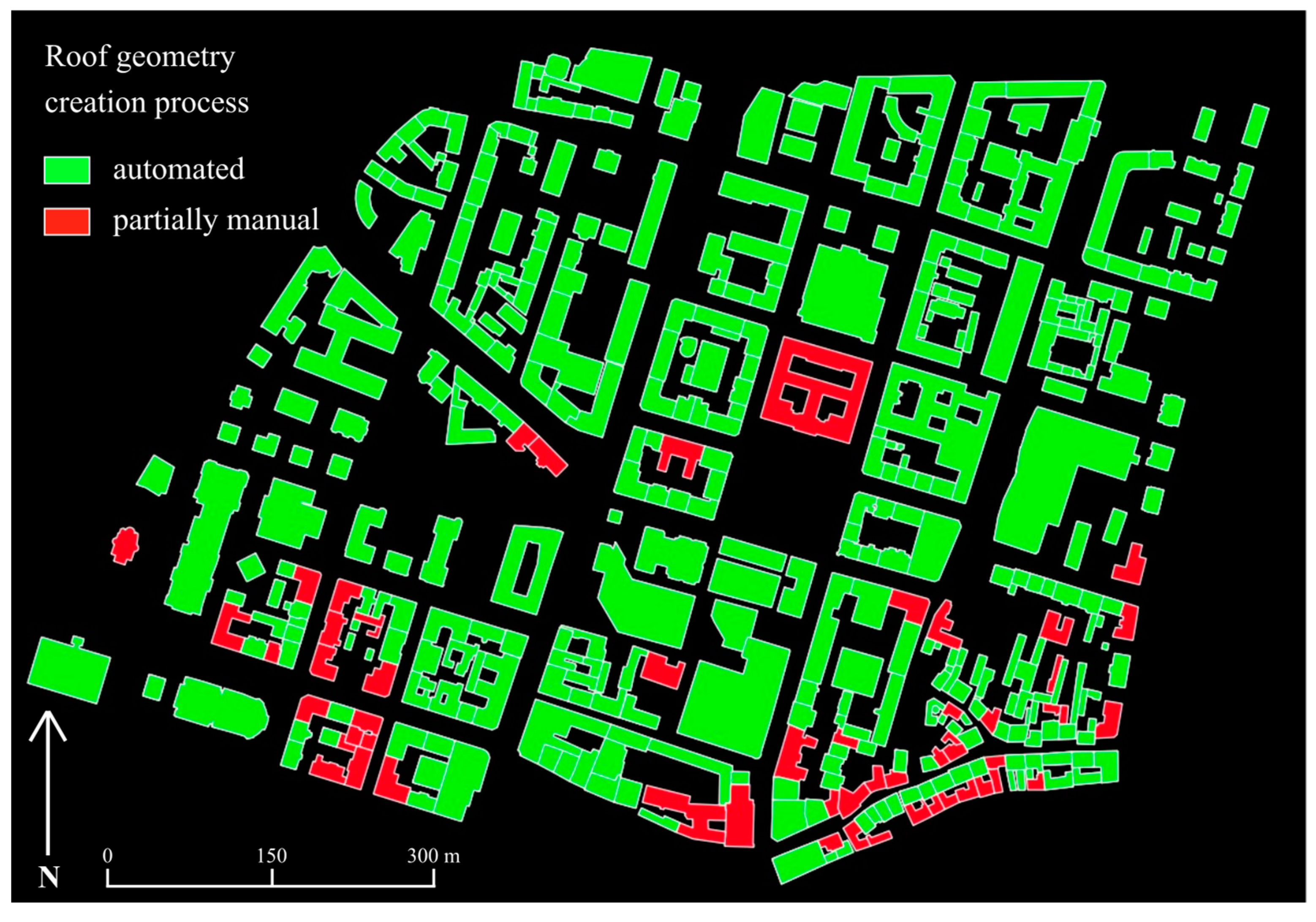

2.1. Study Area

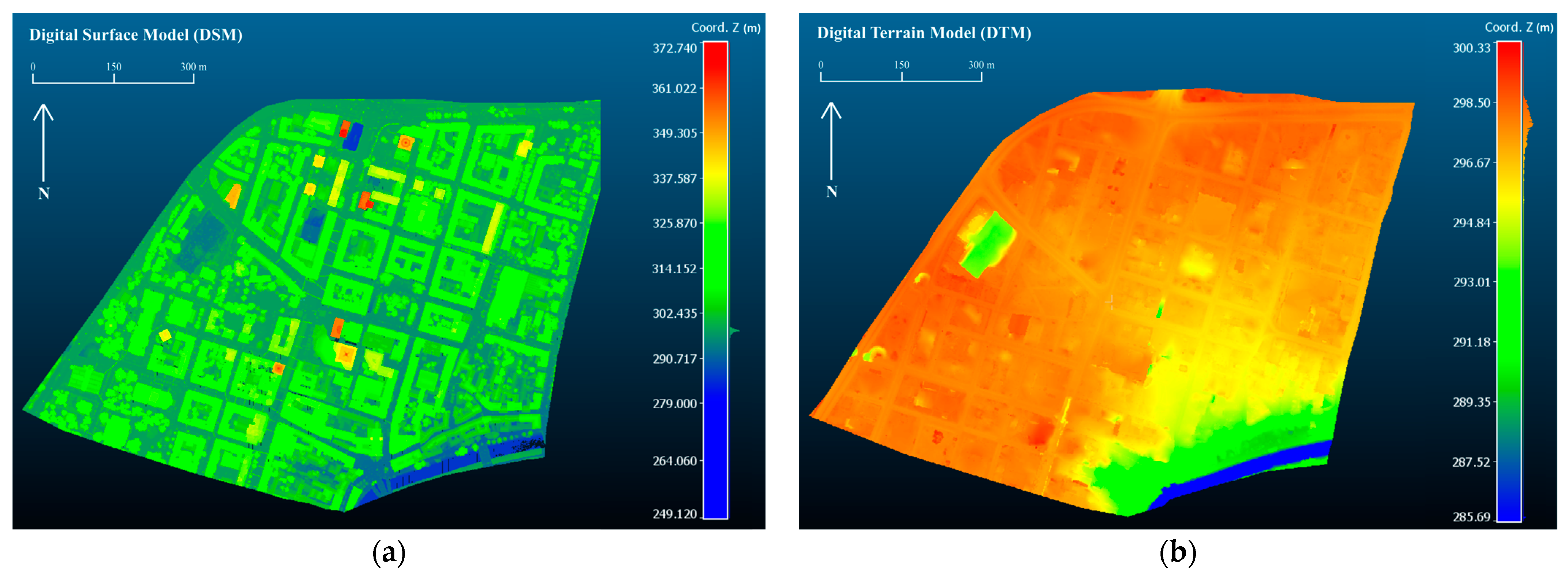

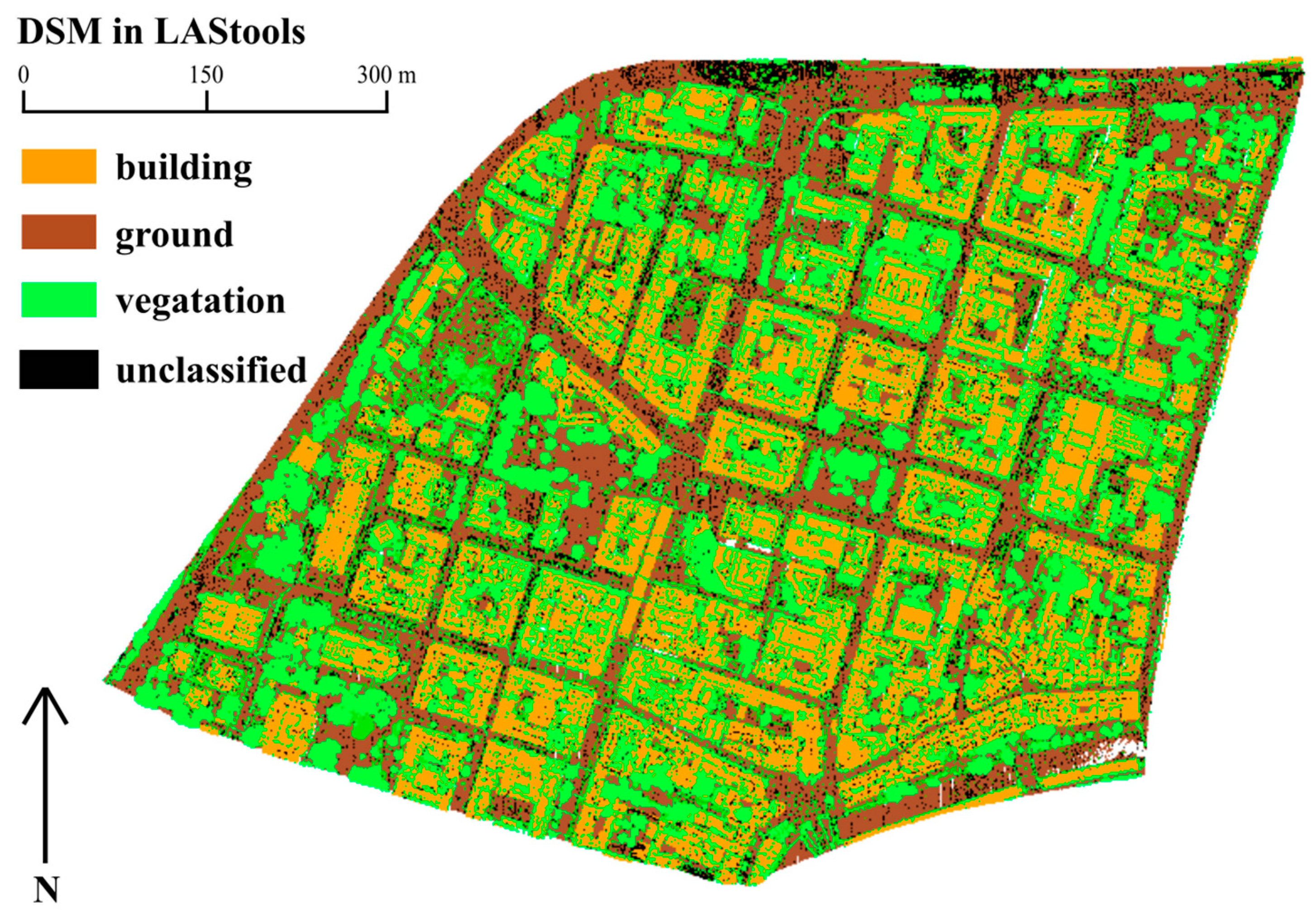

2.2. Data Processing

- OTR (Oblak Točaka Reljefa): a georeferenced relief point cloud containing only points classified as ground, stored in zLAS format;

- GKOT (Georeferencirani i Klasificirani Oblak Točaka): a georeferenced and classified point cloud that includes points from the ground, buildings, and three different types of vegetation, stored in zLAS format;

- DEM (digital elevation model): an interpolation of the relief based on the OTR points, stored as an ASCII file on a regular 1 m × 1 m grid.
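
One plausible way to combine these datasets is to subtract the DEM ground elevation from the elevation of each building-classified point, which gives a height above ground per point. The sketch below illustrates that idea on toy data and is not the procedure used in this study. It assumes that the point cloud has already been converted from zLAS into plain `(x, y, z, class)` tuples, that the classes follow the ASPRS LAS codes (2 for ground, 6 for building), and that the DEM has been parsed into a list of rows ordered north to south. The function names and the grid origin parameters are hypothetical.

```python
from statistics import mean

GROUND_CLASS = 2    # ASPRS LAS classification code for ground (assumed for GKOT)
BUILDING_CLASS = 6  # ASPRS LAS classification code for building (assumed for GKOT)


def ground_elevation(dem, x, y, x_origin, y_origin, cell_size=1.0):
    """Return the DEM elevation of the cell containing (x, y).

    dem is a list of rows ordered north to south; (x_origin, y_origin) is the
    lower-left corner of the grid. This layout is an assumption of the sketch.
    """
    col = int((x - x_origin) // cell_size)
    row_from_south = int((y - y_origin) // cell_size)
    row = len(dem) - 1 - row_from_south
    if not (0 <= row < len(dem) and 0 <= col < len(dem[0])):
        raise ValueError("point lies outside the DEM extent")
    return dem[row][col]


def building_heights(points, dem, x_origin, y_origin, cell_size=1.0):
    """Return (mean, max) height above ground of building-classified points."""
    heights = [
        z - ground_elevation(dem, x, y, x_origin, y_origin, cell_size)
        for x, y, z, cls in points
        if cls == BUILDING_CLASS
    ]
    if not heights:
        raise ValueError("no building points in the input")
    return mean(heights), max(heights)


# Toy data: a 2 x 2 DEM at 1 m resolution and four classified points.
dem = [[120.0, 120.5],   # northern row
       [119.5, 120.0]]   # southern row
points = [
    (0.5, 0.5, 129.5, BUILDING_CLASS),  # 10.0 m above 119.5
    (1.5, 0.5, 132.0, BUILDING_CLASS),  # 12.0 m above 120.0
    (0.5, 1.5, 134.0, BUILDING_CLASS),  # 14.0 m above 120.0
    (1.5, 1.5, 120.5, GROUND_CLASS),    # ignored: ground point
]
mean_h, max_h = building_heights(points, dem, x_origin=0.0, y_origin=0.0)
```

A nearest-cell lookup is used here for brevity; bilinear interpolation of the DEM would be a reasonable refinement on sloping terrain.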

2.3. Comparison of Software for Procedural Modeling

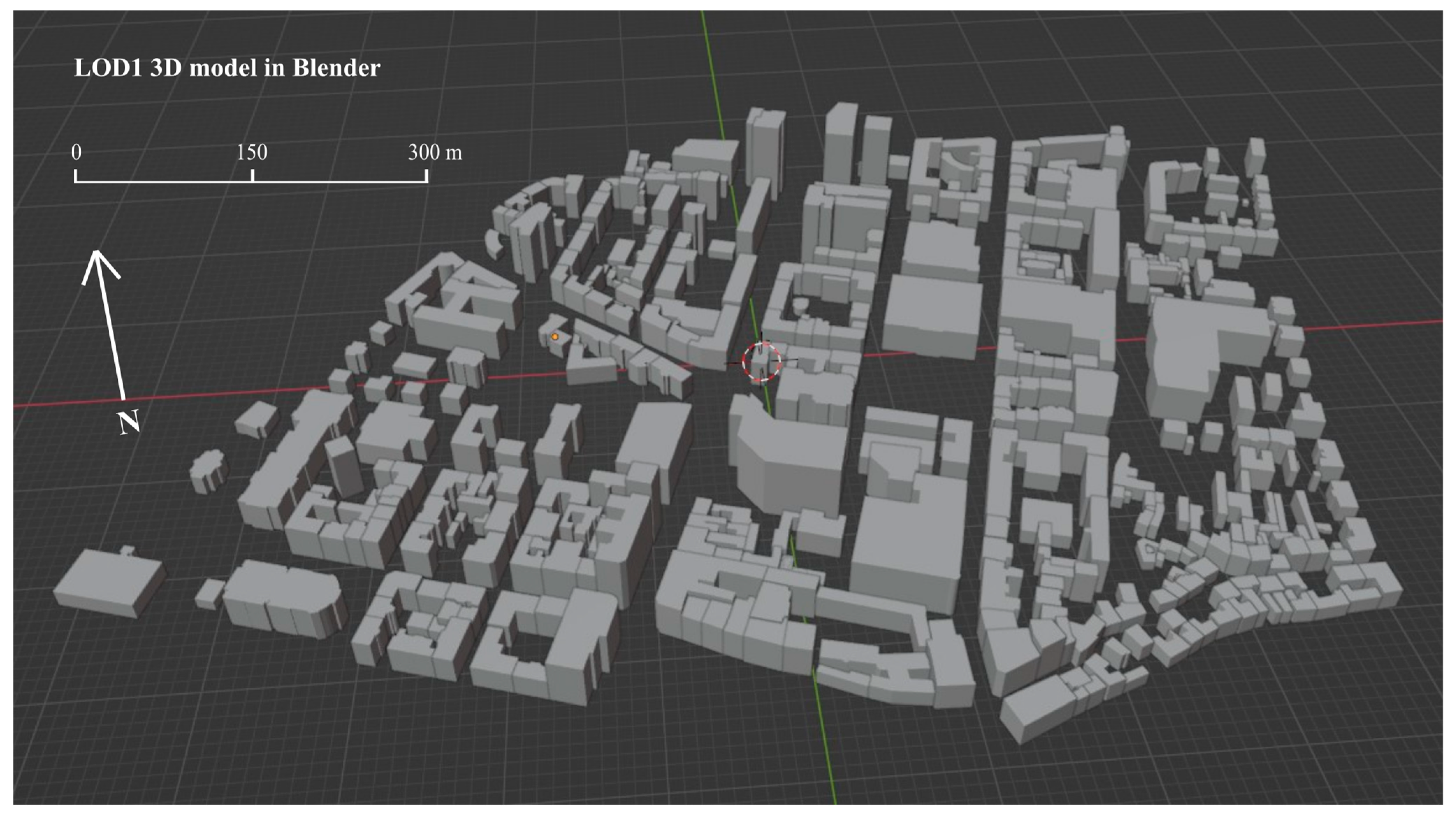

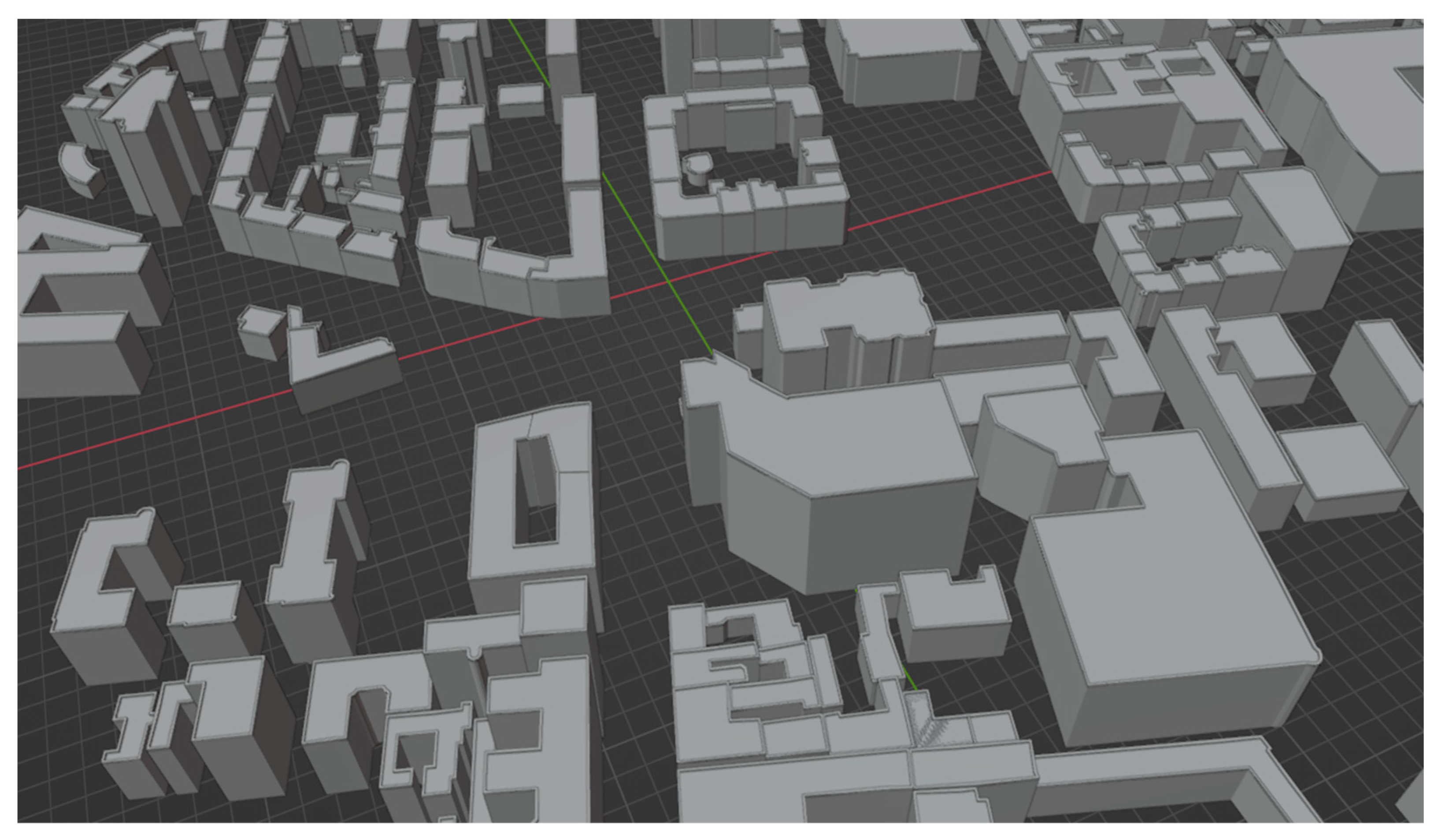

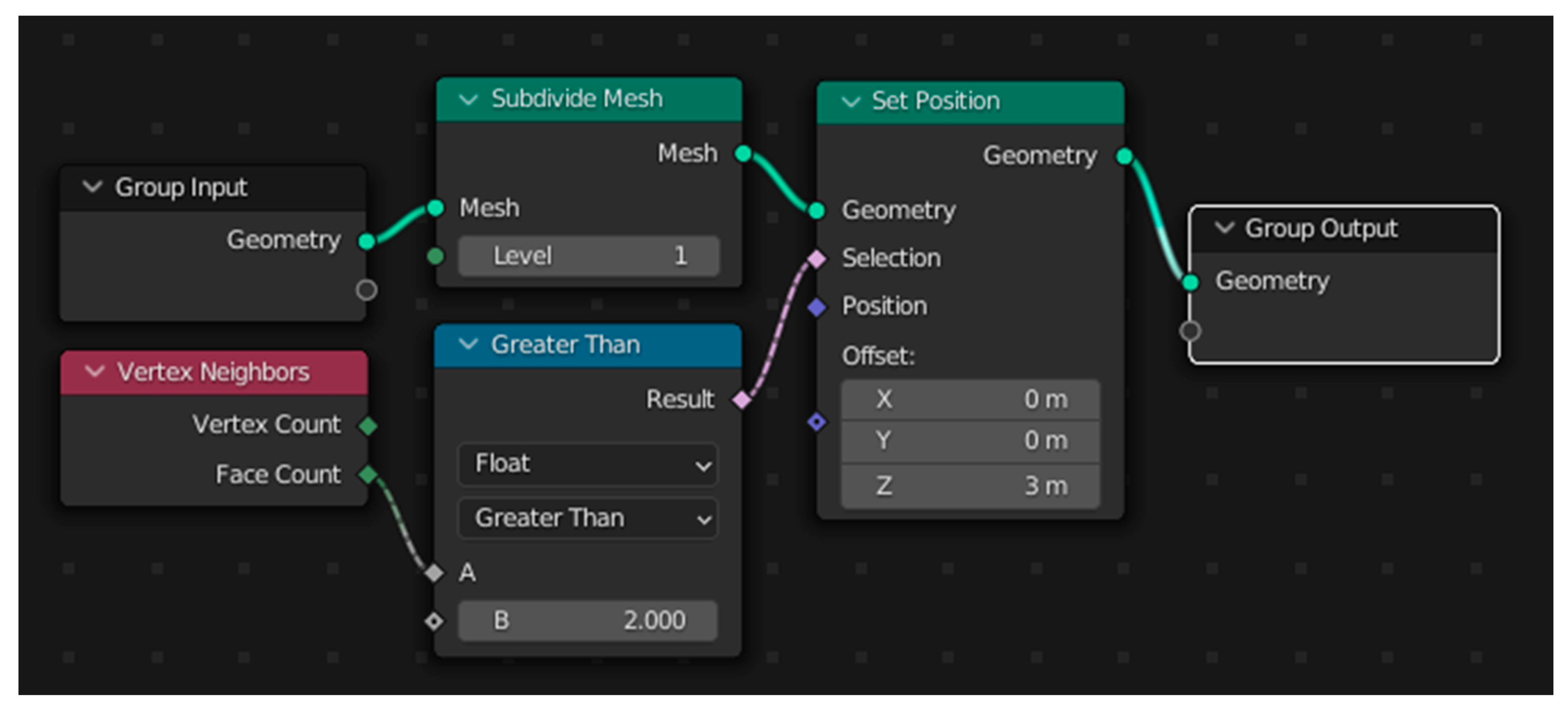

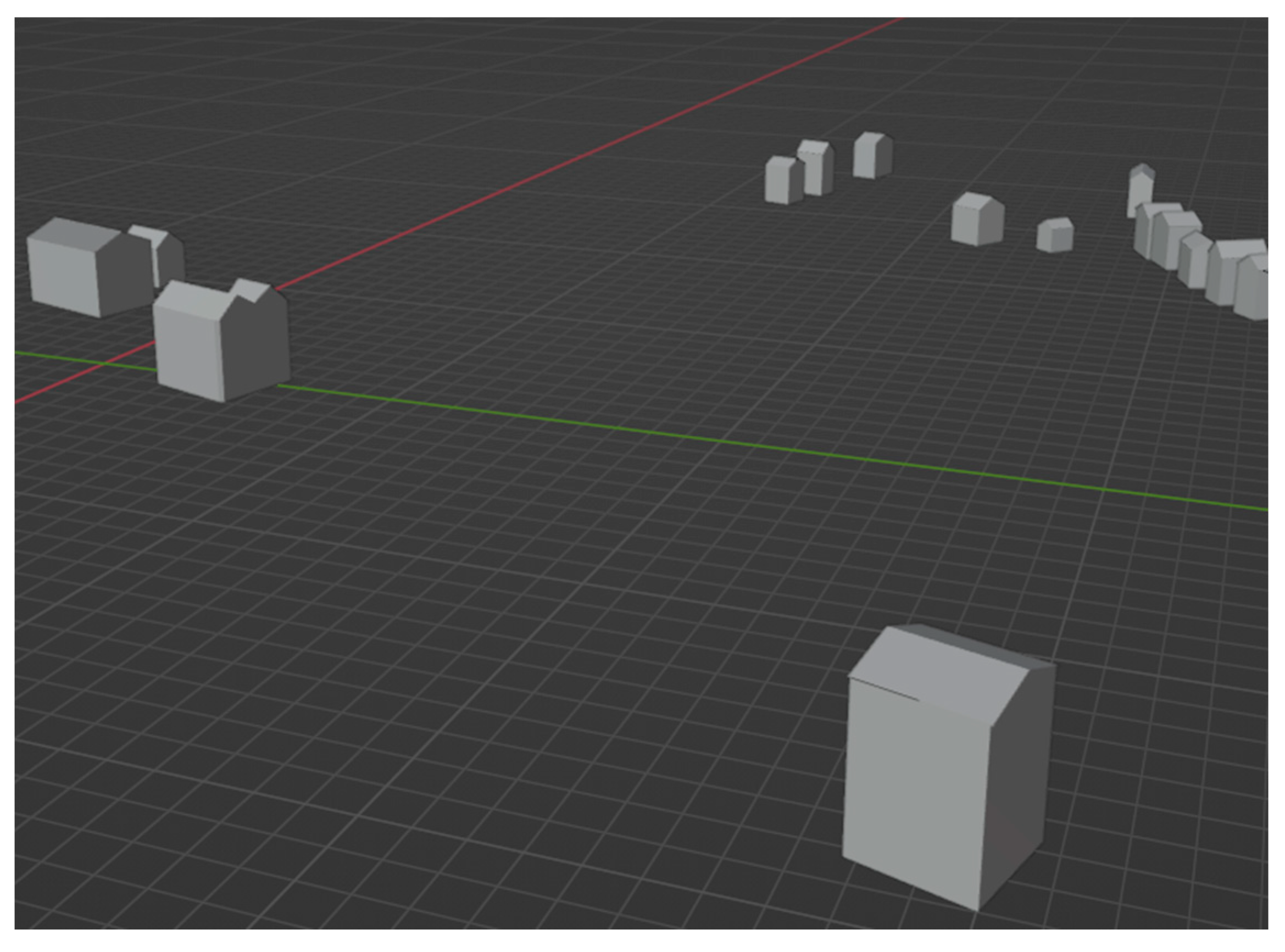

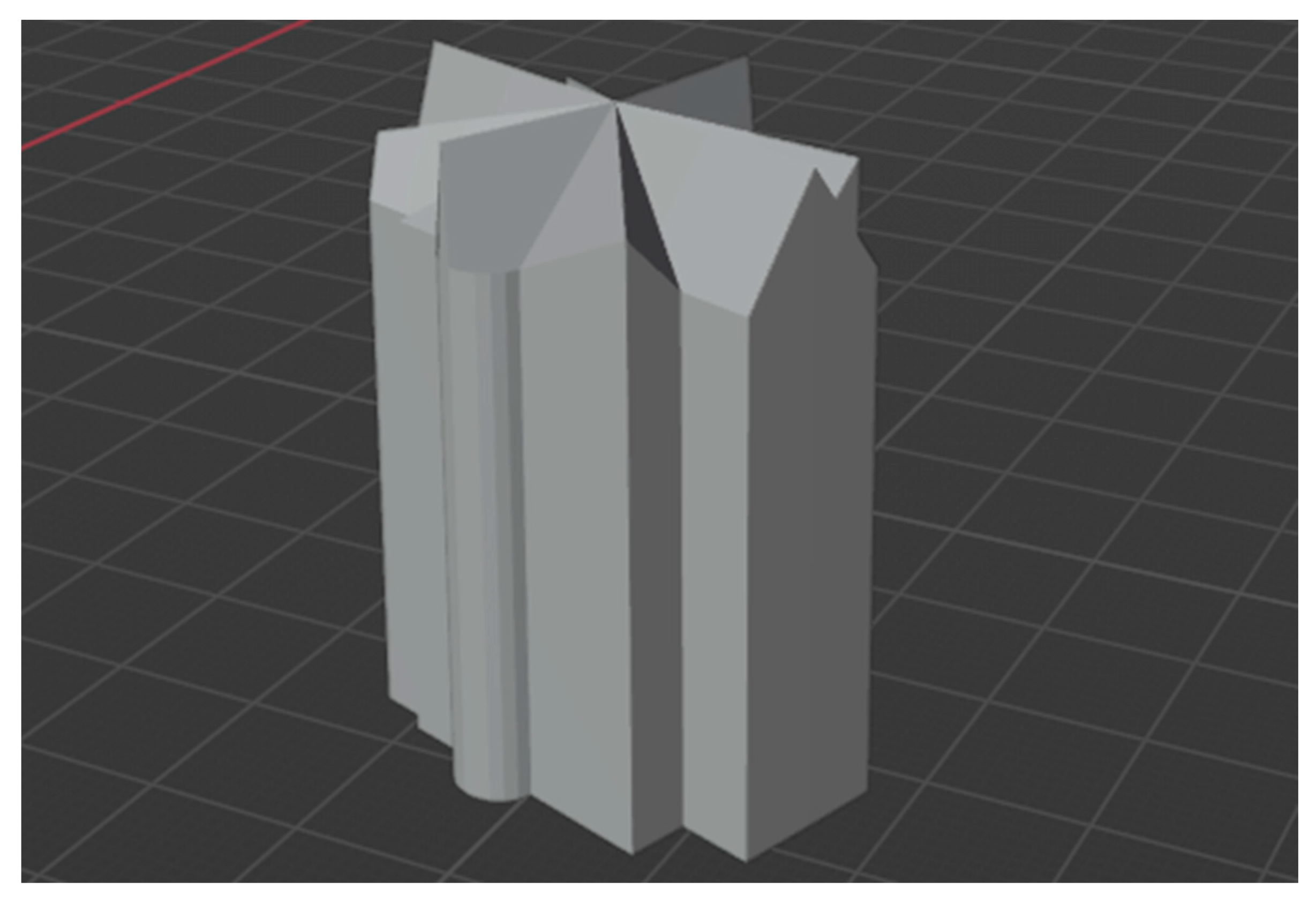

3. Procedural Modeling in Blender

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| OSM ID | OSM Building Levels | OSM Building Height (m) | LiDAR Mean Building Height (m) | Height Difference, LiDAR Mean − OSM (m) | LiDAR Max Building Height (m) | Height Difference, LiDAR Max − OSM (m) |
|---|---|---|---|---|---|---|
| 2512227 | 1 | 2.60 | 28.4 | 25.8 | 37.4 | 34.8 |
| 4089569 | 4 | 10.40 | 21.5 | 11.1 | 27.8 | 17.4 |
| 24785631 | 4 | 10.40 | 15.3 | 4.9 | 24.2 | 13.8 |
| 24786527 | 2 | 5.20 | 7.2 | 2.0 | 9.2 | 4.0 |
| 24786528 | 14 | 36.40 | 38.5 | 2.1 | 52.4 | 16.0 |
| 61024039 | 12 | 31.20 | 35.3 | 4.1 | 43.9 | 12.7 |
| 111584761 | 4 | 10.40 | 16.4 | 6.0 | 29.8 | 19.4 |
| 153059527 | 13 | 33.80 | 10.8 | −23.0 | 16.1 | −17.7 |
| 176487482 | 4 | 10.40 | 14.4 | 4.0 | 17.9 | 7.5 |
| 176487486 | 4 | 10.40 | 20.2 | 9.8 | 26.2 | 15.8 |
| 177270343 | 3 | 7.80 | 14.7 | 6.9 | 17.4 | 9.6 |
| 179744268 | 0 | 0.00 | 7.3 | 7.3 | 20.0 | 20.0 |
| 179744269 | 8 | 20.80 | 22.4 | 1.6 | 30.3 | 9.5 |
| 179746610 | 4 | 10.40 | 16.1 | 5.7 | 27.8 | 17.4 |
| 186332693 | 4 | 10.40 | 16.8 | 6.4 | 19.0 | 8.6 |
| 186335765 | 15 | 39.00 | 54.8 | 15.8 | 71.0 | 32.0 |
| 186335774 | 6 | 15.60 | 24.1 | 8.5 | 28.9 | 13.3 |
| 186513050 | 11 | 28.60 | 32.4 | 3.8 | 37.6 | 9.0 |
| 186513051 | 11 | 28.60 | 34.7 | 6.1 | 37.3 | 8.7 |
| 186515083 | 6 | 15.60 | 16.3 | 0.7 | 33.3 | 17.7 |
| 186518586 | 4 | 10.40 | 19.8 | 9.4 | 23.8 | 13.4 |
| 186518625 | 4 | 10.40 | 19.7 | 9.3 | 24.4 | 14.0 |
| 186547097 | 3 | 7.80 | 15.7 | 7.9 | 21.5 | 13.7 |
| 186547098 | 14 | 36.40 | 41.0 | 4.6 | 44.5 | 8.1 |
| 186547099 | 2 | 5.20 | 14.6 | 9.4 | 33.9 | 28.7 |
| 186547100 | 6 | 15.60 | 19.3 | 3.7 | 24.3 | 8.7 |
| 186547113 | 8 | 20.80 | 24.7 | 3.9 | 29.0 | 8.2 |
| 186547122 | 10 | 26.00 | 24.7 | −1.3 | 40.0 | 14.0 |
| 186547124 | 14 | 36.40 | 41.6 | 5.2 | 45.7 | 9.3 |
| 186547129 | 7 | 18.20 | 27.0 | 8.8 | 31.4 | 13.2 |
| 186547134 | 7 | 18.20 | 22.6 | 4.4 | 27.2 | 9.0 |
| 186547137 | 2 | 5.20 | 9.4 | 4.2 | 13.1 | 7.9 |
| 186549962 | 7 | 18.20 | 19.4 | 1.2 | 23.9 | 5.7 |
| 196794006 | 21 | 54.60 | −12.1 | −66.7 | 81.0 | 26.4 |
| 197017824 | 4 | 10.40 | 22.9 | 12.5 | 26.1 | 15.7 |
| 235168944 | 13 | 33.80 | 49.8 | 16.0 | 60.4 | 26.6 |
| 248803106 | 3 | 7.80 | 16.4 | 8.6 | 21.4 | 13.6 |
| 248803110 | 5 | 13.00 | 3.4 | −9.6 | 12.7 | −0.3 |
| 496313466 | 3 | 7.80 | 15.9 | 8.1 | 20.8 | 13.0 |
| 496313467 | 6 | 15.60 | 22.1 | 6.5 | 27.2 | 11.6 |
| 778984182 | 5 | 13.00 | 19.1 | 6.1 | 24.3 | 11.3 |
| 824372657 | 7 | 18.20 | 23.3 | 5.1 | 28.3 | 10.1 |
| 824372661 | 3 | 7.80 | 12.5 | 4.7 | 17.6 | 9.8 |
| 936397467 | 22 | 57.20 | 4.4 | −52.8 | 81.0 | 23.8 |
| 976077230 | 7 | 18.20 | 0.2 | −18.0 | 27.0 | 8.8 |
| 976077231 | 8 | 20.80 | 0.2 | −20.6 | 30.0 | 9.2 |
| 976077232 | 7 | 18.20 | 0.1 | −18.1 | 7.9 | −10.3 |
| 1030934401 | 2 | 5.20 | 13.6 | 8.4 | 17.8 | 12.6 |
| 1030934402 | 2 | 5.20 | 11.4 | 6.2 | 17.7 | 12.5 |
| 1036836792 | 3 | 7.80 | 14.0 | 6.2 | 19.0 | 11.2 |
| 1040050251 | 4 | 10.40 | 18.1 | 7.7 | 22.7 | 12.3 |
| 1040050252 | 4 | 10.40 | 20.1 | 9.7 | 24.1 | 13.7 |
| 1040050253 | 4 | 10.40 | 20.4 | 10.0 | 23.9 | 13.5 |
| 1040050254 | 4 | 10.40 | 18.7 | 8.3 | 22.2 | 11.8 |
| 1040050255 | 4 | 10.40 | 19.5 | 9.1 | 23.2 | 12.8 |
| 1040050256 | 4 | 10.40 | 19.5 | 9.1 | 23.0 | 12.6 |
| 1040050257 | 4 | 10.40 | 18.0 | 7.6 | 23.5 | 13.1 |
| 1055837765 | 4 | 10.40 | 20.6 | 10.2 | 24.3 | 13.9 |
| 1055837766 | 4 | 10.40 | 20.6 | 10.2 | 23.6 | 13.2 |
| 1055837767 | 4 | 10.40 | 20.5 | 10.1 | 24.7 | 14.3 |
| 1055837768 | 4 | 10.40 | 19.9 | 9.5 | 25.0 | 14.6 |
| 1118759071 | 4 | 10.40 | 15.8 | 5.4 | 18.9 | 8.5 |
| 1118759076 | 4 | 10.40 | 12.3 | 1.9 | 18.7 | 8.3 |
| 1118759078 | 4 | 10.40 | 8.7 | −1.7 | 22.3 | 11.9 |
| 1118759079 | 4 | 10.40 | 19.8 | 9.4 | 22.9 | 12.5 |
| 1118759081 | 4 | 10.40 | 19.1 | 8.7 | 22.8 | 12.4 |
| 1118759082 | 4 | 10.40 | 18.8 | 8.4 | 22.7 | 12.3 |
| 1118759083 | 4 | 10.40 | 14.5 | 4.1 | 23.4 | 13.0 |
| 1118759084 | 4 | 10.40 | 12.0 | 1.6 | 19.6 | 9.2 |
| 1118759085 | 4 | 10.40 | 13.5 | 3.1 | 21.6 | 11.2 |
| 1120198927 | 4 | 10.40 | 20.3 | 9.9 | 24.7 | 14.3 |
| 1120210047 | 7 | 18.20 | 20.8 | 2.6 | 23.7 | 5.5 |
| 1120210048 | 7 | 18.20 | 23.8 | 5.6 | 25.7 | 7.5 |
| 1120210049 | 7 | 18.20 | 22.2 | 4.0 | 24.4 | 6.2 |
| 1121513868 | 3 | 7.80 | 14.1 | 6.3 | 17.3 | 9.5 |
| 186547113 | 8 | 20.80 | 23.4 | 2.6 | 34.6 | 13.8 |
| 153059527 | 13 | 33.80 | 41.6 | 7.8 | 70.6 | 36.8 |
| 153059527 | 13 | 33.80 | 8.0 | −25.8 | 12.4 | −21.4 |
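
The arithmetic behind the height columns can be reproduced with a short sketch. It assumes a storey height of 2.6 m, which is inferred from the tabulated values (each OSM building height equals the level count multiplied by 2.6) rather than stated in this appendix, and it assumes that each difference is the LiDAR height minus the OSM height, which matches the rows checked below. The function names are illustrative only.

```python
LEVEL_HEIGHT_M = 2.6  # assumed storey height, inferred from the table rows


def osm_height(levels):
    """Estimate building height in metres from the OSM level count."""
    return round(levels * LEVEL_HEIGHT_M, 2)


def height_difference(lidar_height, levels):
    """Return LiDAR height minus OSM-derived height, rounded to 0.1 m."""
    return round(lidar_height - osm_height(levels), 1)


# Three rows copied from the table: (OSM ID, levels, LiDAR mean, LiDAR max).
rows = [
    (2512227, 1, 28.4, 37.4),
    (24786528, 14, 38.5, 52.4),
    (196794006, 21, -12.1, 81.0),
]

for osm_id, levels, lidar_mean, lidar_max in rows:
    print(
        osm_id,
        osm_height(levels),
        height_difference(lidar_mean, levels),
        height_difference(lidar_max, levels),
    )
```

Positive differences mean that the LiDAR height exceeds the height implied by the OSM level count; negative values indicate the opposite.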



© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Župan, R.; Vinković, A.; Nikçi, R.; Pinjatela, B. Automatic 3D Building Model Generation from Airborne LiDAR Data and OpenStreetMap Using Procedural Modeling. Information 2023, 14, 394. https://doi.org/10.3390/info14070394
