Scene Text Recognition Based on Improved CRNN

Abstract

1. Introduction

2. Related Work

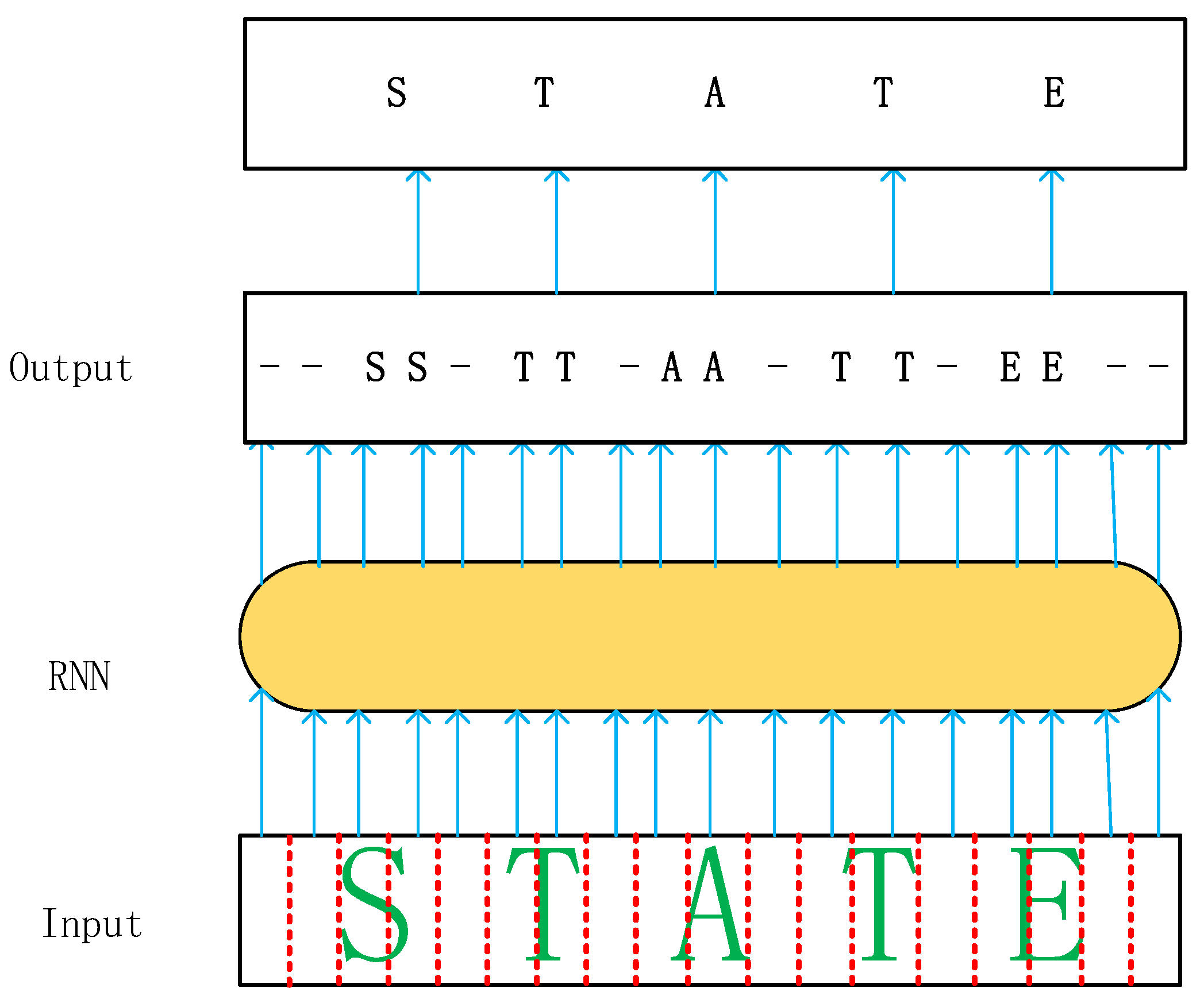

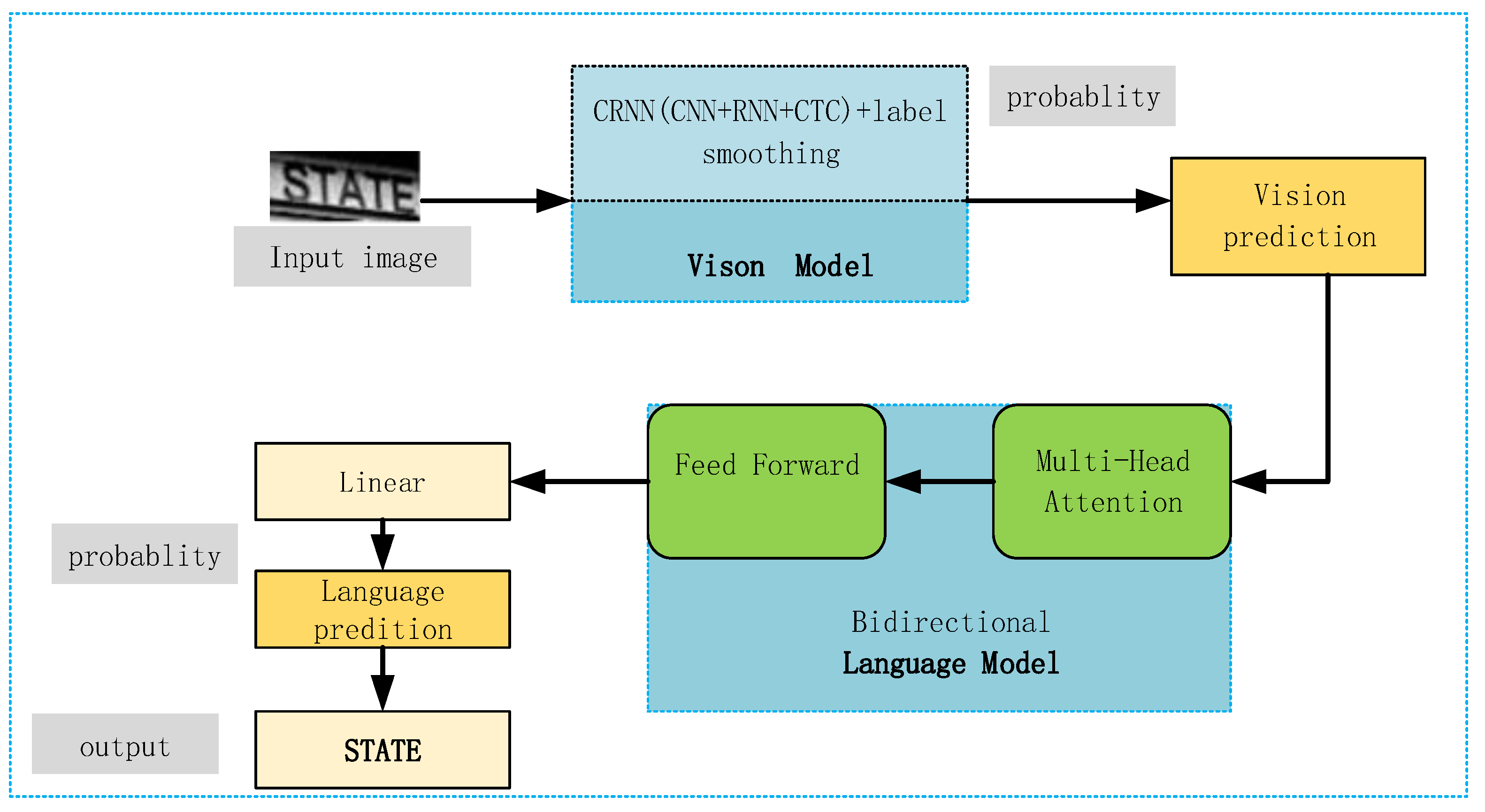

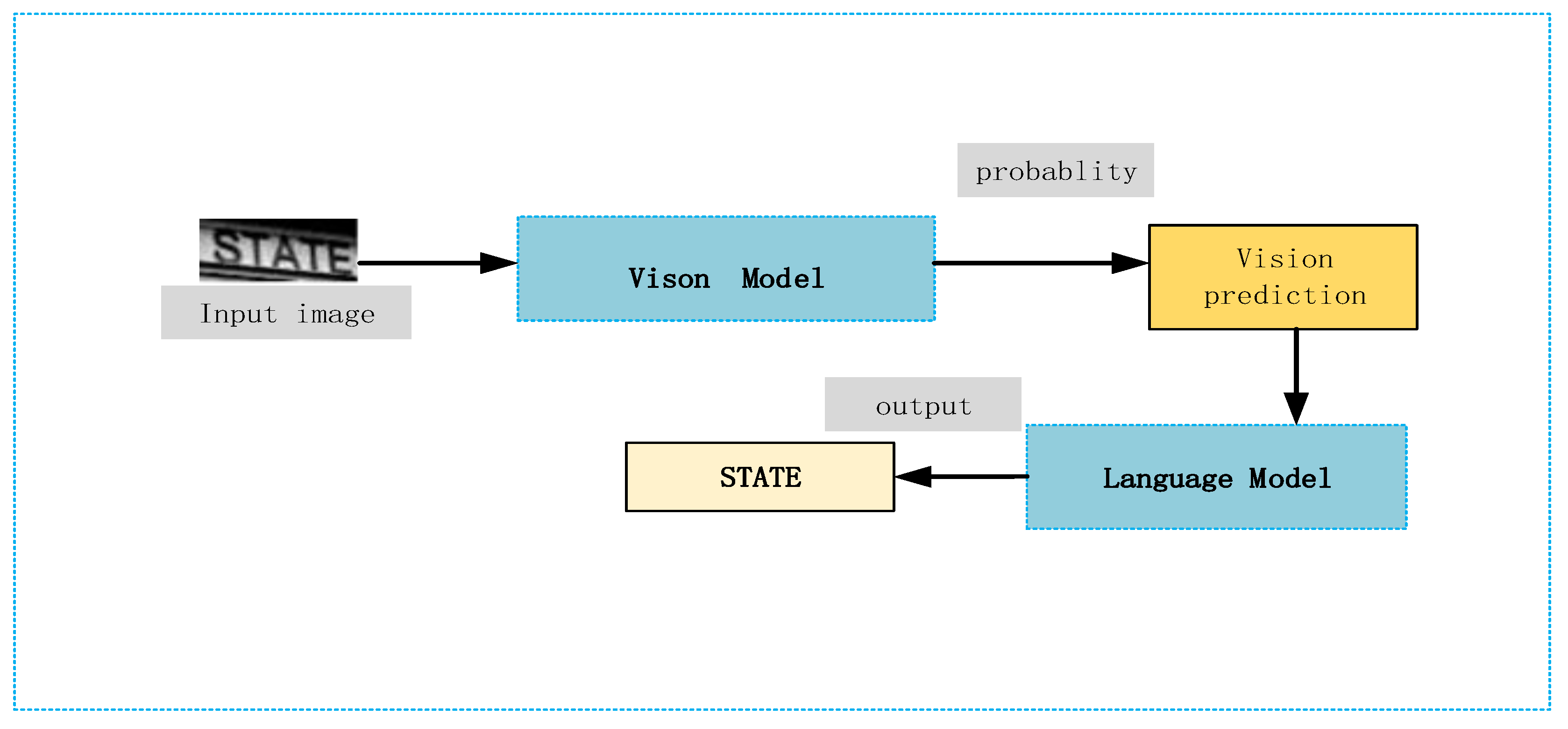

3. Methods

3.1. Label Smoothing (LS)

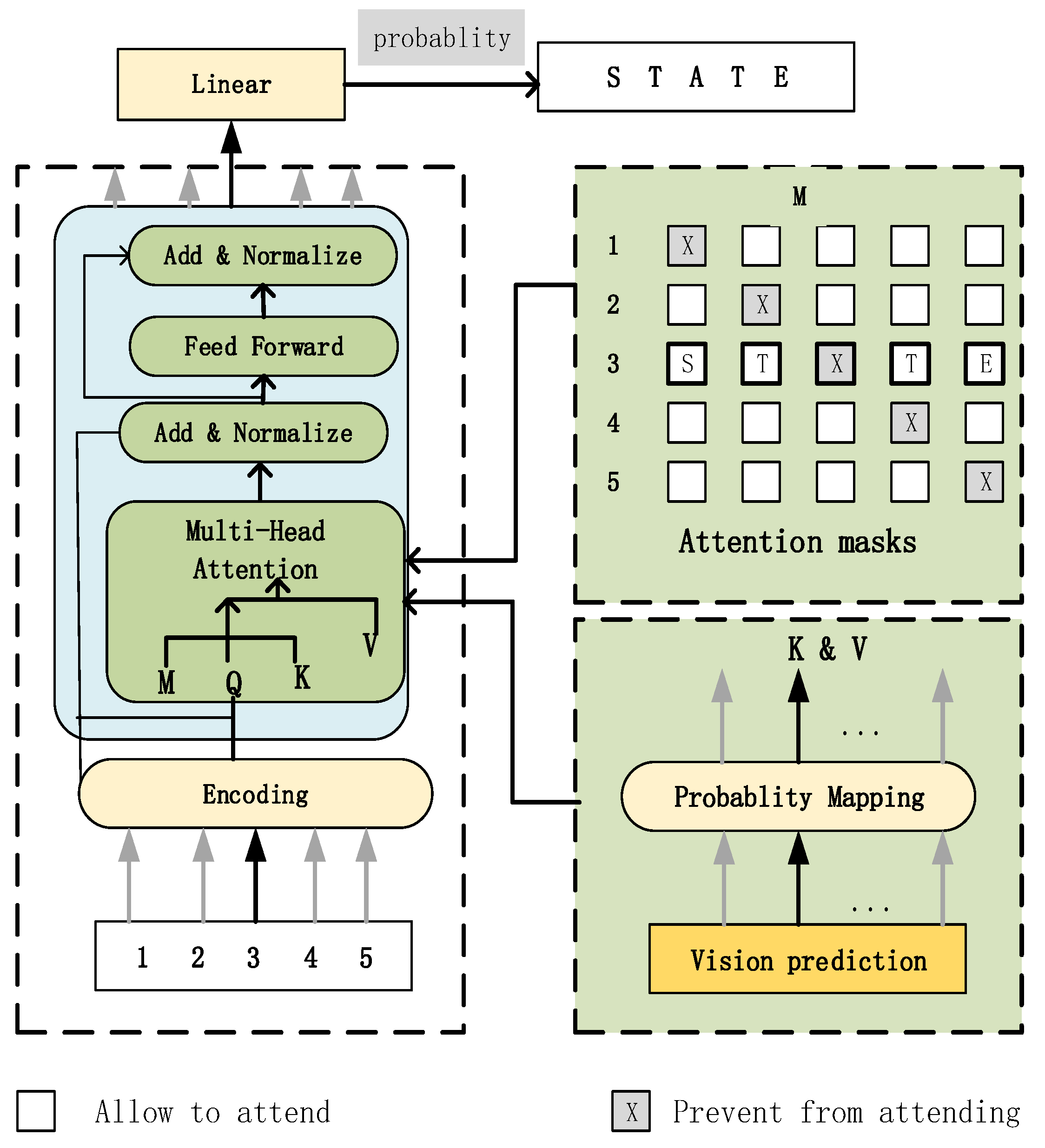
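As a rough illustration of the label smoothing idea named in this section, the sketch below builds a smoothed target distribution from a single class label, using the common formulation in which the one-hot vector is mixed with a uniform distribution. It is a generic sketch, not the authors' implementation: the function names, the 37-class character set, and the way smoothing would be combined with the CTC loss of CRNN are all assumptions that this excerpt does not confirm. Only the value α = 0.005 is taken from the results tables.

```python
import math


def smooth_labels(target_index, num_classes, alpha):
    """Mix a one-hot target with a uniform distribution.

    Each class k receives (1 - alpha) * onehot[k] + alpha / num_classes,
    so the true class ends up with 1 - alpha + alpha / num_classes.
    """
    if not 0.0 <= alpha < 1.0:
        raise ValueError("alpha must be in [0, 1)")
    if not 0 <= target_index < num_classes:
        raise ValueError("target_index out of range")
    uniform_share = alpha / num_classes
    return [
        (1.0 - alpha) * (1.0 if k == target_index else 0.0) + uniform_share
        for k in range(num_classes)
    ]


def cross_entropy(predicted, target):
    """Cross-entropy between a predicted distribution and a (soft) target."""
    return -sum(t * math.log(p) for p, t in zip(predicted, target) if t > 0.0)


if __name__ == "__main__":
    # Hypothetical setup: 37 output classes, true class at index 3.
    num_classes = 37
    target = smooth_labels(target_index=3, num_classes=num_classes, alpha=0.005)
    # A uniform prediction, used only to exercise the loss function.
    uniform_prediction = [1.0 / num_classes] * num_classes
    print(sum(target))
    print(cross_entropy(uniform_prediction, target))
```

With a small α the target stays close to one-hot, which is in line with the results tables, where α = 0.1 falls below the baseline on every benchmark while α between 0.005 and 0.05 improves on it.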

3.2. Language Model

4. Experiments

4.1. Datasets

4.2. Implementation Details

4.2.1. Comparison of Experimental Results between the Actual Run Baseline Model and the Original Baseline Model

4.2.2. Comparison of Adding Label Smoothing with the Baseline Model Approach

4.2.3. Comparison of Adding Language Model with the Baseline Model Approach

4.2.4. Comparison of Ablation Experiments

4.2.5. Comparison of the Improved CRNN Model with Other Methods

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Liu, C.; Chen, X.; Luo, C.; Jin, L.; Xue, Y.; Liu, Y. A deep learning approach for natural scene text detection and recognition. Chin. J. Graph. 2021, 26, 1330–1367.

2. Shi, B.; Bai, X.; Yao, C. An End-to-End Trainable Neural Network for Image-based Sequence Recognition and Its Application to Scene Text Recognition. arXiv 2015, arXiv:1507.05717.

3. Graves, A.; Fernández, S.; Gomez, F.; Schmidhuber, J. Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural networks. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 369–376.

4. Liu, Y.; Wang, Y.; Shi, H. A Convolutional Recurrent Neural-Network-Based Machine Learning for Scene Text Recognition Application. Symmetry 2023, 15, 849.

5. Lei, Z.; Zhao, S.; Song, H.; Shen, J. Scene text recognition using residual convolutional recurrent neural network. Mach. Vis. Appl. 2018, 29, 861–871.

6. Bengio, Y.; Simard, P.; Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 1994, 5, 157–166.

7. Graves, A.; Mohamed, A.; Hinton, G. Speech recognition with deep recurrent neural networks. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 6645–6649.

8. Shi, B.; Wang, X.; Lyu, P.; Yao, C.; Bai, X. Robust scene text recognition with automatic rectification. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4168–4176.

9. Lee, C.-Y.; Osindero, S. Recursive recurrent nets with attention modeling for OCR in the wild. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2231–2239.

10. Liu, W.; Chen, C.; Wong, K.-Y.K.; Su, Z.; Han, J. Star-net: A spatial attention residue network for scene text recognition. BMVC 2016, 2, 7.

11. Wang, J.; Hu, X. Gated recurrent convolution neural network for OCR. Adv. Neural Inf. Process. Syst. 2017, 30, 334–343.

12. Borisyuk, F.; Gordo, A.; Sivakumar, V. Rosetta: Large scale system for text detection and recognition in images. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 71–79.

13. Baek, J.; Kim, G.; Lee, J.; Park, S.; Han, D.; Yun, S.; Oh, S.J.; Lee, H. What is wrong with scene text recognition model comparisons? Dataset and model analysis. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4715–4723.

14. Qiao, Z.; Zhou, Y.; Yang, D.; Zhou, Y.; Wang, W. Seed: Semantics enhanced encoder-decoder framework for scene text recognition. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13528–13537.

15. Atienza, R. Vision transformer for fast and efficient scene text recognition. In Proceedings of the Document Analysis and Recognition—ICDAR 2021: 16th International Conference, Lausanne, Switzerland, 5–10 September 2021; Proceedings, Part I 16. Springer International Publishing: Cham, Switzerland, 2021; pp. 319–334.

16. Baek, J.; Matsui, Y.; Aizawa, K. What if we only use real datasets for scene text recognition? Toward scene text recognition with fewer labels. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3113–3122.

17. Zhang, M.; Ma, M.; Wang, P. Scene text recognition with cascade attention network. In Proceedings of the 2021 International Conference on Multimedia Retrieval, New York, NY, USA, 21–24 August 2021; pp. 385–393.

18. Bhunia, A.K.; Sain, A.; Chowdhury, P.N.; Song, Y.-Z. Text is text, no matter what: Unifying text recognition using knowledge distillation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 983–992.

19. Liu, C.; Yang, C.; Yin, X.C. Open-Set Text Recognition via Character-Context Decoupling. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4523–4532.

20. Liu, M.; Zhou, L. A cervical cell classification method based on migration learning and label smoothing strategy. Mod. Comput. 2022, 28, 1–9+32.

21. Müller, R.; Kornblith, S.; Hinton, G.E. When does label smoothing help? Adv. Neural Inf. Process. Syst. 2019, 32, 422.

22. Zhao, L. Research on User Behavior Recognition Based on CNN and LSTM. Master’s Thesis, Nanjing University of Information Engineering, Nanjing, China, 2021.

23. Kim, S.; Seltzer, M.L.; Li, J.; Zhao, R. Improved training for online end-to-end speech recognition systems. arXiv 2017, arXiv:1711.02212.

24. Qin, C. Research on End-to-End Speech Recognition Technology. Ph.D. Thesis, Strategic Support Force Information Engineering University, Zhengzhou, China, 2020.

25. Fang, S.; Xie, H.; Wang, Y.; Mao, Z.; Zhang, Y. Read like humans: Autonomous, bidirectional and iterative language modeling for scene text recognition. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7098–7107.

26. Cheng, L.; Yan, J.; Chen, M.; Lu, Y.; Li, Y.; Hu, L. A multi-scale deformable convolution network model for text recognition. In Proceedings of the Thirteenth International Conference on Graphics and Image Processing (ICGIP 2021); SPIE: Paris, France, 2022; Volume 12083, pp. 627–635.

27. Gupta, A.; Vedaldi, A.; Zisserman, A. Synthetic data for text localisation in natural images. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2315–2324.

28. Mishra, A.; Alahari, K.; Jawahar, C.V. Top-down and bottom-up cues for scene text recognition. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2687–2694.

29. Wang, K.; Babenko, B.; Belongie, S. End-to-end scene text recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1457–1464.

30. Karatzas, D.; Shafait, F.; Uchida, S.; Iwamura, M.; Bigorda, L.G.; Mestre, S.R.; Mas, J.; Mota, D.F.; Almazan, J.A.; de las Heras, L.P. ICDAR 2013 robust reading competition. In Proceedings of the 2013 12th International Conference on Document Analysis and Recognition, Washington, DC, USA, 25–28 August 2013; pp. 1484–1493.

31. Phan, T.Q.; Shivakumara, P.; Tian, S.; Tan, C.L. Recognizing text with perspective distortion in natural scenes. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 569–576.

32. Risnumawan, A.; Shivakumara, P.; Chan, C.S.; Tan, C.L. A robust arbitrary text detection system for natural scene images. Expert Syst. Appl. 2014, 41, 8027–8048.

33. Cheng, Z.; Xu, Y.; Bai, F.; Niu, Y.; Pu, S.; Zhou, S. Aon: Towards arbitrarily-oriented text recognition. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5571–5579.

| Methods | IC13 | SVT | IIIT5K | IC15 | SVTP | CUTE |
|---|---|---|---|---|---|---|
| CRNN | 91.10 | 81.60 | 82.90 | 69.40 | 70.00 | 65.50 |
| CRNN-base | 91.15 | 85.43 | 85.91 | 75.93 | 75.07 | 66.25 |

| Methods | IC13 | SVT | IIIT5K | IC15 | SVTP | CUTE |
|---|---|---|---|---|---|---|
| CRNN-base | 91.15 | 85.43 | 85.91 | 75.93 | 75.07 | 66.25 |
| LS (α = 0.005) | 94.79 (+3.64) | 90.44 (+5.01) | 89.61 (+3.70) | 82.15 (+6.22) | 80.70 (+5.63) | 73.40 (+7.15) |
| LS (α = 0.01) | 93.42 (+2.27) | 89.30 (+3.87) | 89.27 (+3.36) | 82.28 (+6.35) | 80.04 (+4.97) | 72.40 (+6.15) |
| LS (α = 0.05) | 94.12 (+2.97) | 90.12 (+4.69) | 90.56 (+4.65) | 81.70 (+5.77) | 79.22 (+4.15) | 73.34 (+7.09) |
| LS (α = 0.1) | 89.71 (−1.44) | 83.30 (−2.13) | 83.91 (−2.00) | 71.98 (−3.95) | 69.61 (−5.46) | 60.48 (−5.77) |
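The parenthesised values in the label smoothing table are differences from the CRNN-base row. The following sketch recomputes them from the tabulated accuracies and picks the best-scoring α for each benchmark. The numbers are copied from the table; the variable and function names are illustrative choices, not part of the paper.

```python
BENCHMARKS = ["IC13", "SVT", "IIIT5K", "IC15", "SVTP", "CUTE"]

# Accuracies copied from the table above.
BASELINE = [91.15, 85.43, 85.91, 75.93, 75.07, 66.25]
LS_RESULTS = {
    0.005: [94.79, 90.44, 89.61, 82.15, 80.70, 73.40],
    0.01: [93.42, 89.30, 89.27, 82.28, 80.04, 72.40],
    0.05: [94.12, 90.12, 90.56, 81.70, 79.22, 73.34],
    0.1: [89.71, 83.30, 83.91, 71.98, 69.61, 60.48],
}


def deltas(row, baseline):
    """Per-benchmark difference from the baseline, rounded to two decimals."""
    return [round(value - base, 2) for value, base in zip(row, baseline)]


def best_alpha_per_benchmark(results):
    """Map each benchmark name to the alpha with the highest accuracy on it."""
    best = {}
    for column, name in enumerate(BENCHMARKS):
        best[name] = max(results, key=lambda alpha: results[alpha][column])
    return best


if __name__ == "__main__":
    for alpha, row in LS_RESULTS.items():
        formatted = ", ".join(f"{d:+.2f}" for d in deltas(row, BASELINE))
        print(f"alpha={alpha}: {formatted}")
    print(best_alpha_per_benchmark(LS_RESULTS))
```

Recomputing the differences this way is a quick consistency check on the table: every reported delta matches the subtraction from the baseline row.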

| Methods | IC13 | SVT | IIIT5K | IC15 | SVTP | CUTE |
|---|---|---|---|---|---|---|
| CRNN-base | 91.15 | 85.43 | 85.91 | 75.93 | 75.07 | 66.25 |
| CRNN+BCN | 93.50 (+2.35) | 88.67 (+3.24) | 88.53 (+2.62) | 80.64 (+4.71) | 79.46 (+4.39) | 71.46 (+5.21) |

| Methods | IC13 | SVT | IIIT5K | IC15 | SVTP | CUTE |
|---|---|---|---|---|---|---|
| CRNN-base | 91.15 | 85.43 | 85.91 | 75.93 | 75.07 | 66.25 |
| LS (α = 0.005) | 94.79 | 90.44 | 89.61 | 82.15 | 80.70 | 73.40 |
| CRNN+BCN | 93.50 | 88.67 | 88.53 | 80.64 | 79.46 | 71.46 |
| CRNN+BCN+LS (α = 0.005) | 95.15 | 90.70 | 91.13 | 83.70 | 82.44 | 75.28 |

| Methods | IC13 | SVT | IIIT5K | IC15 | SVTP | CUTE |
|---|---|---|---|---|---|---|
| CRNN-base | 91.15 | 85.43 | 85.91 | 75.93 | 75.07 | 66.25 |
| RARE [8] | 92.60 | 85.80 | 86.20 | 74.50 | 76.20 | 70.40 |
| R2AM [9] | 90.20 | 82.40 | 83.40 | 68.90 | 72.10 | 64.90 |
| STAR-Net [10] | 92.80 | 86.90 | 87.00 | 76.10 | 77.50 | 71.70 |
| GRCNN [11] | 90.90 | 83.70 | 84.20 | 71.40 | 73.60 | 68.10 |
| Rosetta [12] | 90.90 | 84.70 | 84.30 | 71.20 | 73.80 | 69.20 |
| Benchmark [13] | 93.60 | 87.50 | 87.90 | 77.60 | 79.20 | 74.00 |
| SEED [14] | 92.80 | 89.60 | 93.80 | 80.00 | 81.40 | 83.60 |
| ViTSTR [15] | 92.40 | 87.70 | 88.40 | 78.50 | 81.80 | 81.30 |
| TRBA [16] | 93.10 | 88.90 | 92.10 | - | 79.50 | 78.20 |
| Cascade Attention [17] | 96.80 | 89.50 | 90.30 | - | 78.50 | 78.90 |
| Text is Text [18] | 93.30 | 89.90 | 92.30 | 76.90 | 84.40 | 86.30 |
| Character Context Decoupling [19] | 92.21 | 85.93 | 91.90 | - | - | 83.68 |
| CRNN+BCN+LS (ours) | 95.15 | 90.70 | 91.13 | 83.70 | 82.44 | 75.28 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yu, W.; Ibrayim, M.; Hamdulla, A. Scene Text Recognition Based on Improved CRNN. Information 2023, 14, 369. https://doi.org/10.3390/info14070369
