A Systematic Literature Review on Human Ear Biometrics: Approaches, Algorithms, and Trend in the Last Decade

Abstract

1. Introduction

- The structure of the ear shows fewer age-related inconsistencies than the face.

- Reliable ear outline throughout an individual's life cycle.

- The distinctiveness of the external ear shape is not affected by moods, emotions, other expressions, etc.

- The restricted surface area of the ear leads to faster processing compared with a face.

- It is easier to capture the human ear even at a distance.

- The procedure is non-invasive. Beards, spectacles, and makeup cannot alter the appearance of the ear.

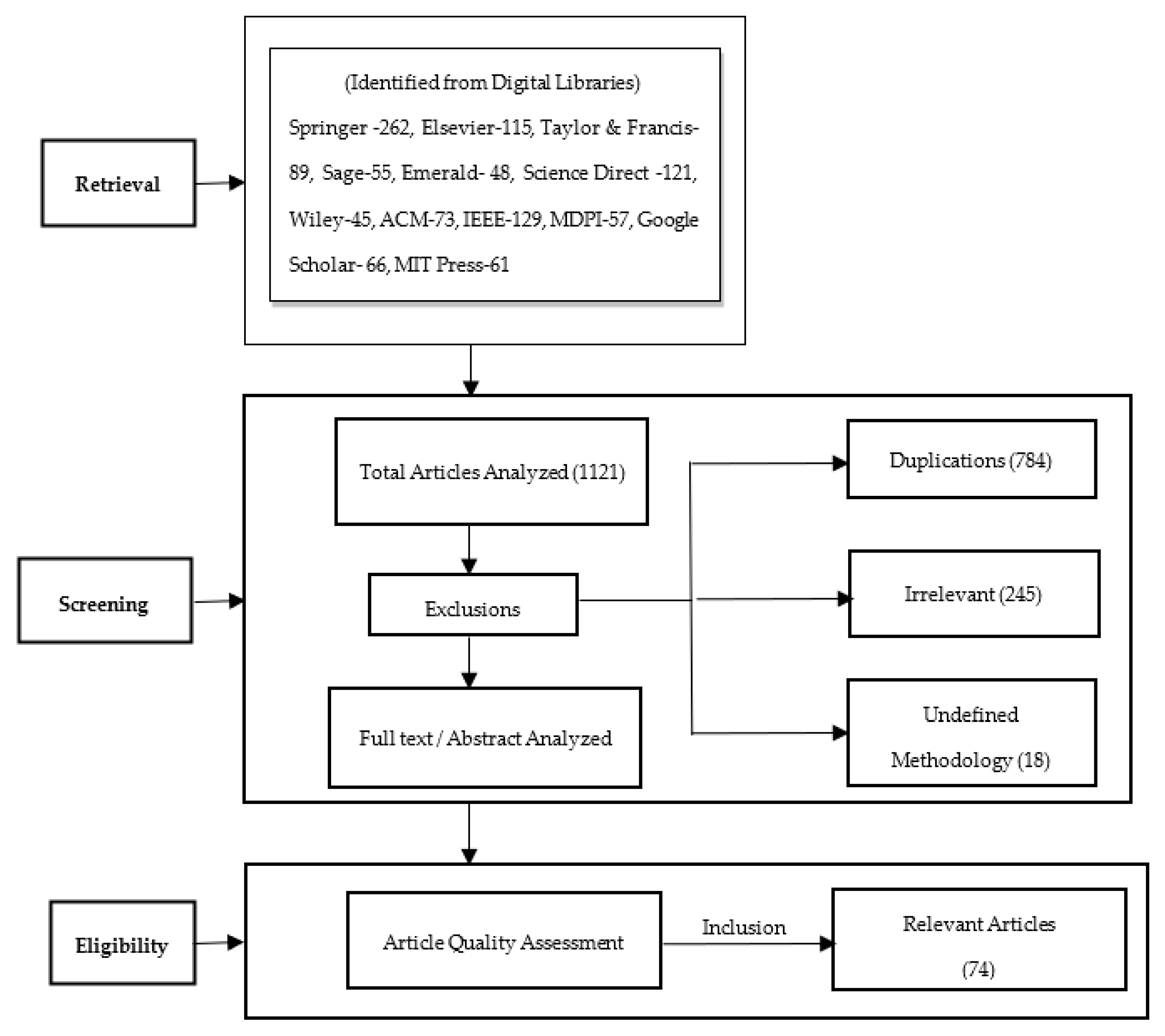

2. Research Method

2.1. Search Attributes

2.2. Search Queries

- Boolean operators “OR” and “AND” to retrieve data.

- Keywords generated from the research question as search parameters.

- Restriction to some publication types and publishers.

- Identifiers from related work.
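The way Boolean operators and keywords combine into a search string can be illustrated with a minimal sketch. The function name `build_query` and the keyword groups below are hypothetical and chosen only for illustration; they are not the queries used in this review. Synonyms within one concept are joined with OR, and the concepts are then joined with AND.

```python
def build_query(concept_groups):
    """Join synonyms with OR inside each group, then join groups with AND.

    concept_groups is a list of lists of keywords (hypothetical input format).
    Multi-word keywords are wrapped in double quotes so they match as phrases.
    """
    clauses = []
    for group in concept_groups:
        terms = [f'"{t}"' if " " in t else t for t in group]
        clause = " OR ".join(terms)
        # Parenthesize only when a group holds more than one term.
        clauses.append(f"({clause})" if len(terms) > 1 else clause)
    return " AND ".join(clauses)


# Illustrative keyword groups (assumed, not taken from the review protocol).
query = build_query([
    ["ear recognition", "ear biometrics"],
    ["deep learning", "CNN"],
])
print(query)
```

The exact syntax accepted for phrases and operators differs between digital libraries, so a string built this way would normally be adapted to each source before use.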

2.3. Search Strategy

2.4. Article Source (AS)

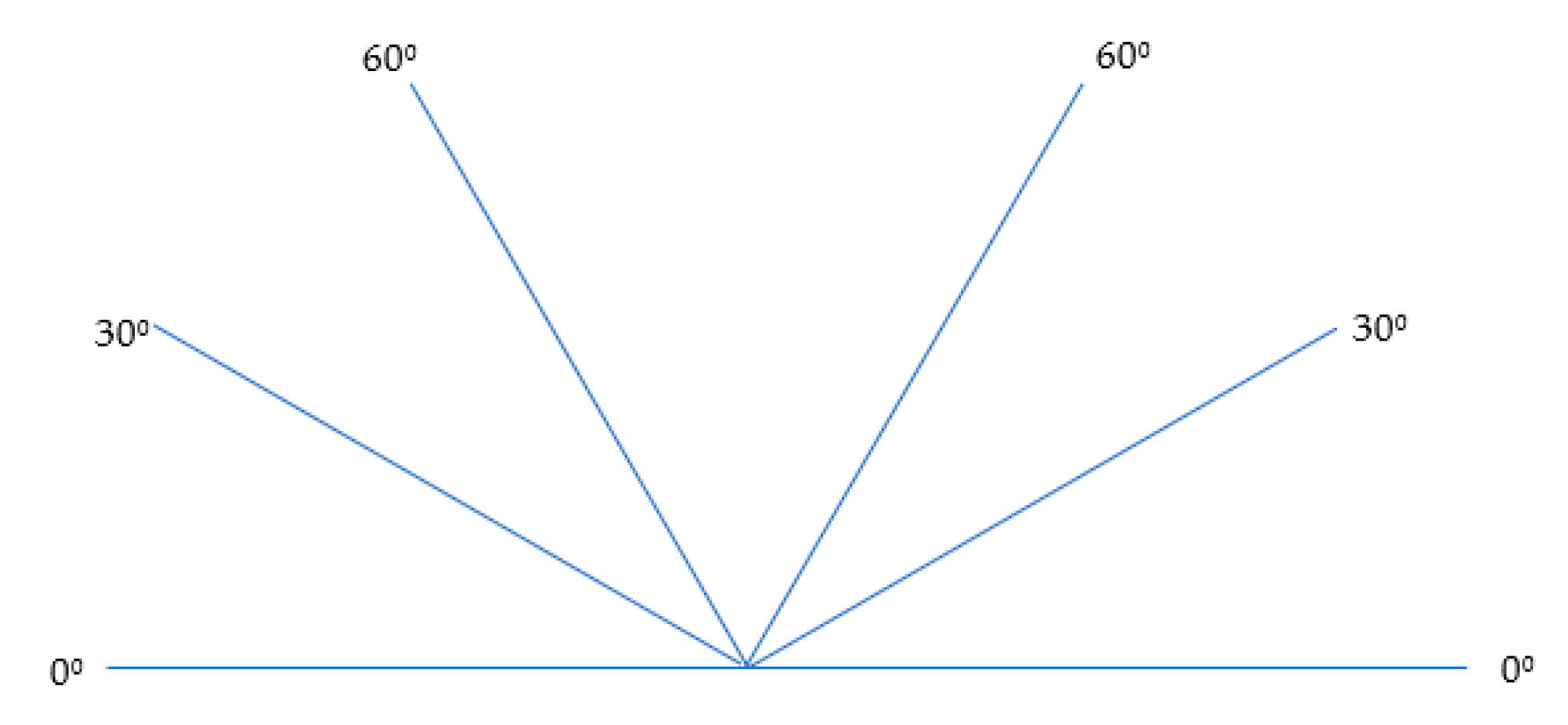

2.5. Ear Databases

2.6. Methods of Classification

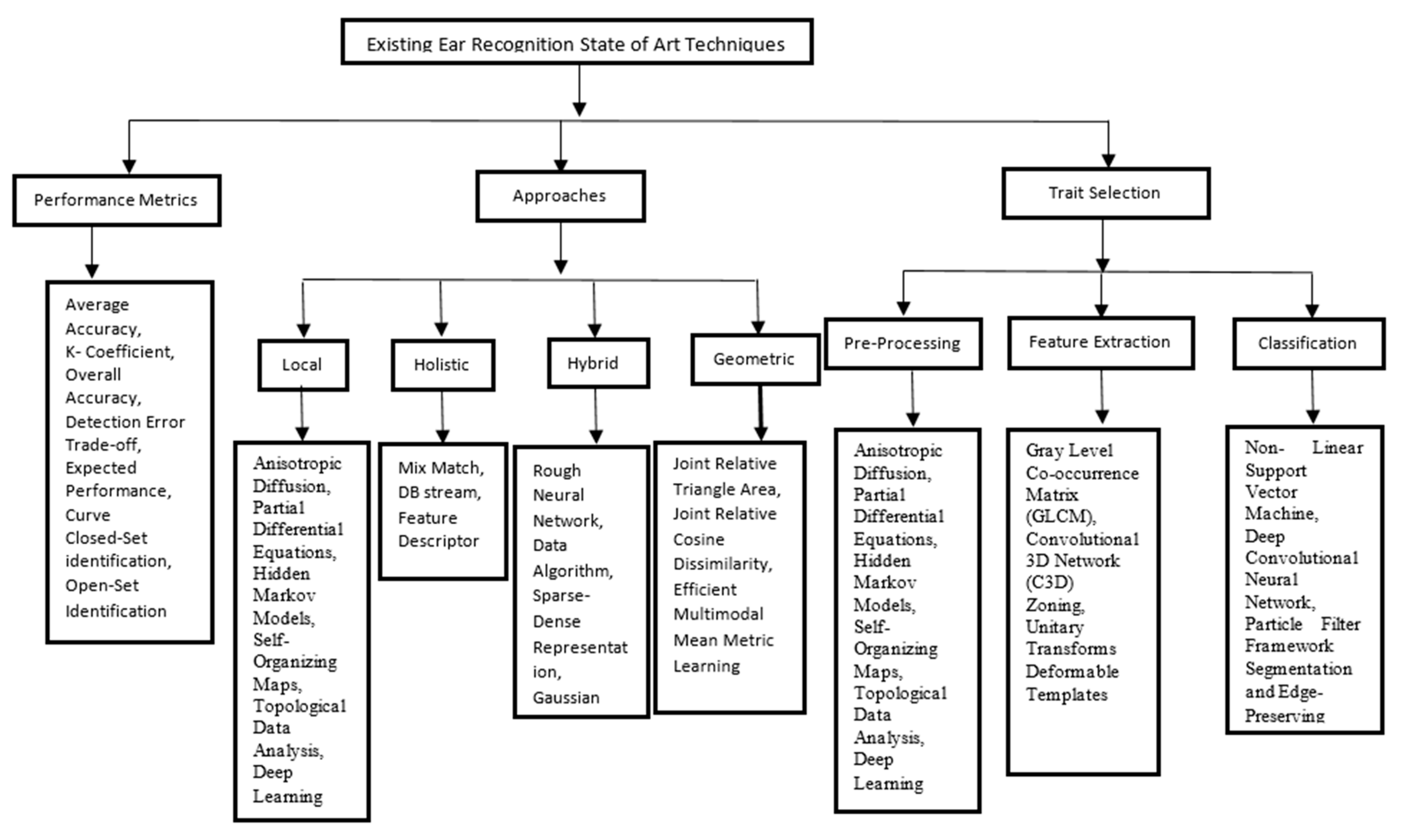

2.6.1. Geometric Approach

2.6.2. Holistic Approach

2.6.3. Local Approach

2.6.4. Hybrid Approach

2.7. Ear Recognition Stages

2.7.1. Pre-Processing

2.7.2. Feature Extraction

2.7.3. Classification

2.8. Deep Learning Approaches in Ear Recognition

3. Results Analysis

3.1. Search Strategy 1: Source

- RQ1: What is the state of the art in ear recognition research?

3.2. Relevance of Publication

3.3. Search Strategy 3: (Method)

- an assessment of existing algorithms on a given dataset (A);

- proposed or yet-to-be-evaluated techniques (S);

- templates designed using existing procedures (D);

- planning and assessment with studies based on established procedures (PA);

- newly proposed and executed techniques (PE).

- RQ2: What are the contributions of deep learning to ear recognition in the last decade?

- RQ3: Is there sufficient publicly available data for ear recognition research?

3.4. Comparison with Related Surveys

- Poor feature selection: feature selection is applied in very diverse ways, as it aims to reduce the factors that can affect the performance of classifiers. Many images are acquired with inherent background noise. Invariably, poor feature selection results in poor classification.

- Hardware dependence: a common drawback identified in the selected literature is the resource-intensive nature of neural networks and the associated costs. They often require large volumes of data for training, placing heavy computational demand on processors.

- Gaps between industry, implementation, research, and deployment: the reviewed articles revealed a missing link between industry, researchers, and other stakeholders. The majority of the related experimental studies were performed for purely academic purposes, which limits the potential to fine-tune existing technologies to suit user requirements.

3.5. State of the Art in Ear Biometrics over the Last Decade

3.6. Threats to Validity

4. Discussions, Limitations, and Taxonomy

4.1. Limitations

4.2. Specific Contributions

- This study identifies a need to evaluate the performance of ear recognition systems with ear images of different races before they are deployed in real-world scenarios. However, existing ear recognition databases contain mostly Caucasian ear images, while other minority ethnic groups such as blacks, Asians, and Arabs are ignored [169].

- Black people form 18.2% of the total world population; however, no prior research effort on black ear recognition has been established, and there is no publicly available dataset dedicated to black ear recognition in the literature reviewed.

- This study observed that ear images are often partially or fully occluded by hair, clothing, headphones, hats/caps, scarves, rings, and other obstacles [170]. Such occlusions and viewpoint changes may cause a significant decline in the performance of the ear recognition algorithm (ERA) during identification or verification tasks [171]. Therefore, a reliable ear recognition system should be equipped with automated occlusion detection to avoid misclassification due to occluded samples [51].

5. Conclusions and Future Direction

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- World Bank Group. Identification for Development Strategic Framework; Working Paper; World Bank Group: Washington, DC, USA, 2016. [Google Scholar]

- Atick, J. The Identity Ecosystem of Rwanda. A Case Study of a Performant ID System in an African Development Context. ID4Africa Rep. 2016, 1–38. Available online: https://citizenshiprightsafrica.org/the-identity-ecosystem-of-rwanda-a-case-study-of-a-performant-id-system-in-an-african-development-context/ (accessed on 17 December 2022).

- Saranya, M.; Cyril, G.L.I.; Santhosh, R.R. An approach towards ear feature extraction for human identification. In Proceedings of the International Conference on Electrical, Electronics and Optimization Techniques (ICEEOT 2016), Chennai, India, 3–5 March 2016; pp. 4824–4828. [Google Scholar] [CrossRef]

- Unar, J.; Seng, W.C.; Abbasi, A. A review of biometric technology along with trends and prospects. Pattern Recognit. 2014, 47, 2673–2688. [Google Scholar] [CrossRef]

- Emersic, Z.; Stepec, D.; Struc, V.; Peer, P. Training Convolutional Neural Networks with Limited Training Data for Ear Recognition in the Wild. In Proceedings of the International Conference on Automatic Face Gesture Recognition, Washington, DC, USA, 30 May–3 June 2017; pp. 987–994. [Google Scholar] [CrossRef]

- Wang, Z.; Yang, J.; Zhu, Y. Review of Ear Biometrics. Arch. Comput. Methods Eng. 2019, 28, 149–180. [Google Scholar] [CrossRef]

- Alaraj, M.; Hou, J.; Fukami, T. A neural network based human identification framework using ear images. In Proceedings of the International Technical Conference of IEEE Region 10, Fukuoka, Japan, 21–24 November 2010; pp. 1595–1600. [Google Scholar]

- Song, L.; Gong, D.; Li, Z.; Liu, C.; Liu, W. Occlusion Robust Face Recognition Based on Mask Learning with Pairwise Differential Siamese Network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 773–782. [Google Scholar] [CrossRef]

- Li, P.; Prieto, L.; Mery, D.; Flynn, P.J. On Low-Resolution Face Recognition in the Wild: Comparisons and New Techniques. IEEE Trans. Inf. Forensics Secur. 2019, 14, 2000–2012. [Google Scholar] [CrossRef]

- Emersic, Z.; Struc, V.; Peer, P. Ear recognition: More than a survey. Neurocomputing 2017, 255, 26–39. [Google Scholar] [CrossRef]

- Abayomi-Alli, O.; Misra, S.; Abayomi-Alli, A.; Odusami, M. A review of soft techniques for SMS spam classification: Methods, approaches and applications. Eng. Appl. Artif. Intell. 2019, 86, 197–212. [Google Scholar] [CrossRef]

- Youbi, Z.; Boubchir, L.; Boukrouche, A. Human ear recognition based on local multi-scale LBP features with city-block distance. Multi. Tools Appl. 2019, 78, 14425–14441. [Google Scholar] [CrossRef]

- Madhusudhan, M.V.; Basavaraju, R.; Hegde, C. Secured Human Authentication Using Finger-Vein Patterns. In Data Management, Analytics, and Innovation. Advances in Intelligent Systems and Computing; Balas, V., Sharma, N., Chakrabarti, A., Eds.; Springer: Singapore, 2019; pp. 311–320. [Google Scholar] [CrossRef]

- Lei, Y.; Qian, J.; Pan, D.; Xu, T. Research on Small Sample Dynamic Human Ear Recognition Based on Deep Learning. Sensors 2022, 22, 1718. [Google Scholar] [CrossRef]

- Chen, Y.; Chen, W.; Wei, C.; Wang, Y. Occlusion-aware face inpainting via generative adversarial networks. In Proceedings of the Image Processing (ICIP), International Conference on IEEE, Beijing, China, 17–20 September 2017; pp. 1202–1206. [Google Scholar]

- Tian, L.; Mu, Z. Ear recognition based on deep convolutional network. In Proceedings of the 9th International Congress on Image and Signal Processing, Biomedical Engineering, and Informatics (CISP-BMEI 2016), Datong, China, 15–17 October 2016; pp. 437–441. [Google Scholar] [CrossRef]

- Labati, R.D.; Muñoz, E.; Piuri, V.; Sassi, R.; Scotti, F. Deep-ECG: Convolutional Neural Networks for ECG biometric recognition. Pattern Recognit. Lett. 2019, 126, 78–85. [Google Scholar] [CrossRef]

- Ramos-Cooper, S.; Gomez-Nieto, E.; Camara-Chavez, G. VGGFace-Ear: An Extended Dataset for Unconstrained Ear Recognition. Sensors 2022, 22, 1752. [Google Scholar] [CrossRef]

- Guo, Y.; Xu, Z. Ear Recognition Using a New Local Matching Approach. In Proceedings of the 15th IEEE International Conference on Image Processing (ICIP), San Diego, CA, USA, 12–15 October 2008; pp. 289–293. [Google Scholar]

- Raghavendra, R.; Raja, K.B.; Venkatesh, S.; Busch, C. Improved ear verification after surgery—An approach based on collaborative representation of locally competitive features. Pattern Recognit. 2018, 83, 416–429. [Google Scholar] [CrossRef]

- Bertillon, A. La Photographie Judiciaire, Avec un Appendice sur la Classification et l'Identification Anthropométriques; Technical Report; Gauthier-Villars: Paris, France, 1890. [Google Scholar]

- Burge, M.; Burger, W. Ear biometrics in computer vision. In Proceedings of the 15th International Conference on Pattern Recognition. ICPR-2000, Barcelona, Spain, 3–7 September 2000; pp. 822–826. [Google Scholar]

- Alva, M.; Srinivasaraghavan, A.; Sonawane, K. A Review on Techniques for Ear Biometrics. In Proceedings of the IEEE International Conference on Electrical, Computer and Communication Technologies (ICECCT), Coimbatore, India, 20–22 February 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Chowdhury, M.; Islam, R.; Gao, J. Robust ear biometric recognition using neural network. In Proceedings of the 12th IEEE Conference on Industrial Electronics and Applications (ICIEA), Siem Reap, Cambodia, 18–20 June 2017; pp. 1855–1859. [Google Scholar]

- Kumar, R.; Dhenakaran, S. Pixel based feature extraction for ear biometrics. In Proceedings of the IEEE International Conference on Machine Vision and Image Processing (MVIP), Coimbatore, India, 14–15 December 2012; pp. 40–43. [Google Scholar]

- Rahman, M.; Islam, R.; Bhuiyan, I.; Ahmed, B.; Islam, A. Person identification using ear biometrics. Int. J. Comput. Internet Manag. 2007, 15, 1–8. [Google Scholar]

- El-Naggar, S.; Abaza, A.; Bourlai, T. On a taxonomy of ear features. In Proceedings of the IEEE Symposium on Technologies for Homeland Security; HST2016, Waltham, MA, USA, 10–11 May 2016; pp. 1–6. [Google Scholar] [CrossRef]

- Damer, N.; Führer, B. Ear Recognition Using Multi-Scale Histogram of Oriented Gradients. In Proceedings of the Eighth International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Piraeus-Athens, Greece, 18–20 July 2012; pp. 21–24. [Google Scholar]

- Tiwari, S.; Singh, A.; Singh, S. Comparison of Adult and Infant Ear Images for Biometric Recognition. In Proceedings of the Fourth International Conference on Parallel Distribution Grid Computing, Waknaghat, India, 22–24 December 2016; pp. 4–9. [Google Scholar]

- Tariq, A.; Anjum, M.; Akram, M. Personal identification using computerized human ear recognition system. In Proceedings of the 2011 International Conference on Computer Science and Network Technology, Harbin, China, 24–26 December 2011; pp. 50–54. [Google Scholar]

- Chang, K.; Bowyer, K.; Sarkar, S.; Victor, B. Comparison and combination of ear and face images in appearance-based biometrics. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1160–1165. [Google Scholar] [CrossRef]

- Pflug, A.; Busch, C. Ear biometrics: A survey of detection, feature extraction and recognition methods. IET Biom. 2012, 1, 114–129. [Google Scholar] [CrossRef]

- Dong, J.; Mu, Z. Multi-pose ear recognition based on force field transformation. In Proceedings of the 2nd International Symposium on Intelligence in Information Technology Applications, Shanghai, China, 20–22 December 2008; pp. 771–775. [Google Scholar]

- Xiao, X.; Zhou, Y. Two-Dimensional Quaternion PCA and Sparse PCA. IEEE Trans. Neural Networks Learn. Syst. 2018, 30, 2028–2042. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.; Mu, C.; Qu, W.; Liu, M.; Zhang, Y. A novel approach for ear recognition based on ICA and RBF network. In Proceedings of the International Conference on Machine Learning and Cybernetics, Guangzhou, China, 18–21 August 2005; pp. 4511–4515. [Google Scholar]

- Yuan, L.; Mu, C.; Zhang, Y.; Liu, K. Ear recognition using improved non-negative matrix factorization. In Proceedings of the International Conference on Pattern Recognition, Hong Kong, China, 20–24 August 2006; pp. 501–504. [Google Scholar]

- Sana, A.; Gupta, P.; Purkai, R. Ear Biometrics: A New Approach. In Advances in Pattern Recognition; Pal, P., Ed.; World Scientific Publishing: Singapore, 2007; pp. 46–50. [Google Scholar]

- Naseem, I.; Togneri, R.; Bennamoun, M. Sparse Representation for Ear Biometrics; Bebis, G., Boyle, R., Parvin, B., Koracin, D., Remagnino, P., Porikli, F., Eds.; Advances in Visual Computing: San Diego, CA, USA, 2008; pp. 336–345. [Google Scholar]

- Wang, X.-Q.; Xia, H.-Y.; Wang, Z.-L. The Research of Ear Identification Based On Improved Algorithm of Moment Invariant. In Proceedings of the 2010 Third International Conference on Information and Computing, Wuxi, China, 4–6 June 2010; Volume 1, pp. 58–60. [Google Scholar] [CrossRef]

- Bustard, J.D.; Nixon, M.S. Toward Unconstrained Ear Recognition From Two-Dimensional Images. IEEE Trans. Syst. Man Cybern. Part A Syst. Humans 2010, 40, 486–494. [Google Scholar] [CrossRef]

- Arbab-Zavar, B.; Nixon, S.; Hurley, J. On model-based analysis of ear biometrics. In Proceedings of the Conference on Biometrics: Theory, Applications and Systems, Crystal City, VA, USA, 27–29 September 2007; pp. 1–5. [Google Scholar]

- Kisku, R.; Mehrotra, H.; Gupta, P.; Sing, K. SIFT-Based ear recognition by fusion of detected key-points from color similarity slice regions. In Proceedings of the IEEE International Conference on Advances in Computational Tools for Engineering Applications (ACTEA), Beirut, Lebanon, 15–17 July 2009; pp. 380–385. [Google Scholar]

- Emersic, Z.; Playa, O.; Struc, V.; Peer, P. Towards accessories-aware ear recognition. In Proceedings of the 2018 IEEE International Work Conference on Bioinspired Intelligence (IWOBI), San Carlos, Costa Rica, 18–20 July 2018; pp. 1–8. [Google Scholar]

- Jeges, E.; Mate, L. Model-Based Human Ear Localization and Feature Extraction. Int. J. Intell. Comput. Med. Sci. Image Pro. 2007, 1, 101–112. [Google Scholar]

- Liu, H.; Yan, J. Multi-view Ear Shape Feature Extraction and Reconstruction. In Proceedings of the Third International IEEE Conference on Signal-Image Technologies and Internet-Based System (SITIS), Shanghai, China, 16–18 December 2007; pp. 652–658. [Google Scholar]

- Lakshmanan, L. Efficient person authentication based on multi-level fusion of ear scores. IET Biom. 2013, 2, 97–106. [Google Scholar] [CrossRef]

- Nosrati, M.S.; Faez, K.; Faradji, F. Using 2D wavelet and principal component analysis for personal identification based On 2D ear structure. In Proceedings of the International Conference on Intelligent and Advanced Systems, Kuala Lumpur, Malaysia, 25–28 November 2007; pp. 616–620. [Google Scholar] [CrossRef]

- Kumar, A.; Chan, T.-S. Robust ear identification using sparse representation of local texture descriptors. Pattern Recognit. 2013, 46, 73–85. [Google Scholar] [CrossRef]

- Galdamez, P.; Arrieta, A.G.; Ramon, M. Ear recognition using a hybrid approach based on neural networks. In Proceedings of the International Conference on Information Fusion, Salamanca, Spain, 7–10 July 2014; pp. 1–6. [Google Scholar]

- Mahajan, A.S.B.; Karande, K.J. PCA and DWT based multimodal biometric recognition system. In Proceedings of the International Conference on Pervasive Computing (ICPC), Pune, India, 8–10 January 2015; pp. 1–4. [Google Scholar] [CrossRef]

- Quoc, H.N.; Hoang, V.T. Real-Time Human Ear Detection Based on the Joint of Yolo and RetinaFace. Complexity 2021, 2021, 7918165. [Google Scholar] [CrossRef]

- Panchakshari, P.; Tale, S. Performance analysis of fusion methods for EAR biometrics. In Proceedings of the 2016 IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT), Bangalore, India, 20–21 May 2016; pp. 1191–1194. [Google Scholar] [CrossRef]

- Ghoualmi, L.; Draa, A.; Chikhi, S. An ear biometric system based on artificial bees and the scale invariant feature transform. Expert Syst. Appl. 2016, 57, 49–61. [Google Scholar] [CrossRef]

- Mishra, S.; Kulkarni, S.; Marakarkandy, B. A neoteric approach for ear biometrics using multilinear PCA. In Proceedings of the International Conference and Workshop on Electronics and Telecommunication Engineering (ICWET 2016), Mumbai, India, 26–27 February 2016. [Google Scholar] [CrossRef]

- Kumar, A.; Hanmandlu, M.; Kuldeep, M.; Gupta, H.M. Automatic ear detection for online biometric applications. In Proceedings of the 2011 3rd International Conference on Computer Vision, Pattern Recognition, Image Processing and Graphics, Hubli, India, 15–17 December 2011. [Google Scholar]

- Anwar, A.S.; Ghany, K.K.A.; Elmahdy, H. Human Ear Recognition Using Geometrical Features Extraction. Procedia Comput. Sci. 2015, 65, 529–537. [Google Scholar] [CrossRef]

- Cintas, C.; Quinto-Sánchez, M.; Acuña, V.; Paschetta, C.; de Azevedo, S.; de Cerqueira, C.C.S.; Ramallo, V.; Gallo, C.; Poletti, G.; Bortolini, M.C.; et al. Automatic ear detection and feature extraction using Geometric Morphometrics and convolutional neural networks. IET Biom. 2017, 6, 211–223. [Google Scholar] [CrossRef]

- Rahman, M.; Sadi, R.; Islam, R. Human ear recognition using geometric features. In Proceedings of the International Conference on Electrical Information and Communication Technology (EICT), Khulna, Bangladesh, 5–8 March 2014; pp. 1–4. [Google Scholar]

- Canny Edge Detection. Fourier.eng.hmc.edu. 2018. Available online: http://fourier.eng.hmc.edu/e161/lectures/canny/node1.html (accessed on 18 December 2022).

- Omara, I.; Li, X.; Xiao, G.; Adil, K.; Zuo, W. Discriminative Local Feature Fusion for Ear Recognition Problem. In Proceedings of the 2018 8th International Conference on Bioscience, Biochemistry and Bioinformatics (ICBBB), Tokyo, Japan, 18–20 January 2018; pp. 139–145. [Google Scholar] [CrossRef]

- Omara, I.; Li, F.; Zhang, H.; Zuo, W. A novel geometric feature extraction method for ear recognition. Expert Syst. Appl. 2016, 65, 127–135. [Google Scholar] [CrossRef]

- Hurley, D.; Nixon, M.; Carter, J. Force Field Energy Functionals for Ear Biometrics. Comput. Vis. Image Underst. 2005, 98, 491–512. [Google Scholar] [CrossRef]

- Polin, Z.; Kabir, E.; Sadi, S. 2D human-ear recognition using geometric features. In Proceedings of the 7th International Conference on Electrical and Computer Engineering, Dhaka, Bangladesh, 20–22 December 2012; pp. 9–12. [Google Scholar]

- Benzaoui, A.; Adjabi, I.; Boukrouche, A. Person identification based on ear morphology. In Proceedings of the International Conference on Advanced Aspects of Software Engineering (ICAASE), Constantine, Algeria, 29–30 October 2016; pp. 1–5. [Google Scholar]

- Benzaoui, A.; Hezil, I.; Boukrouche, A. Identity recognition based on the external shape of the human ear. In Proceedings of the 2015 International Conference on Applied Research in Computer Science and Engineering (ICAR), Beirut, Lebanon, 8–9 October 2015; pp. 1–5. [Google Scholar]

- Sharkas, M. Ear recognition with ensemble classifiers: A deep learning approach. Multi. Tools Appl. 2022, 81, 43919–43945. [Google Scholar] [CrossRef]

- Korichi, A.; Slatnia, S.; Aiadi, O. TR-ICANet: A Fast Unsupervised Deep-Learning-Based Scheme for Unconstrained Ear Recognition. Arab. J. Sci. Eng. 2022, 47, 9887–9898. [Google Scholar] [CrossRef]

- Pflug, A.; Busch, C.; Ross, A. 2D ear classification based on unsupervised clustering. In Proceedings of the International Joint Conference on Biometrics, Clearwater, FL, USA, 29 September–2 October 2014; pp. 1–8. [Google Scholar]

- Dodge, S.; Mounsef, J.; Karam, L. Unconstrained ear recognition using deep neural networks. IET Biom. 2018, 7, 207–214. [Google Scholar] [CrossRef]

- Ying, T.; Shining, W.; Wanxiang, L. Human ear recognition based on deep convolutional neural network. In Proceedings of the 30th Chinese Control and Decision Conference (2018 CCDC), Shenyang, China, 9–11 June 2018; pp. 1830–1835. [Google Scholar] [CrossRef]

- Zarachoff, M.M.; Sheikh-Akbari, A.; Monekosso, D. Multi-band PCA based ear recognition technique. Multi. Tools Appl. 2022, 82, 2077–2099. [Google Scholar] [CrossRef]

- Alshazly, H.; Linse, C.; Barth, E.; Martinetz, T. Handcrafted versus CNN Features for Ear Recognition. Symmetry 2019, 11, 1493. [Google Scholar] [CrossRef]

- Moreno, B.; Sanchez, A.; Velez, J. On the use of outer ear images for personal identification in security applications. In Proceedings of the IEEE 33rd Annual International, Carnahan Conference on Security Technology, Madrid, Spain, 5–7 October 1999; pp. 469–476. [Google Scholar] [CrossRef]

- Mu, Z.; Yuan, L.; Xu, Z.; Xi, D.; Qi, S. Shape and Structural Feature Based Ear Recognition; Springer: Berlin/Heidelberg, Germany, 2004; pp. 663–670. [Google Scholar] [CrossRef]

- Choras, M. Ear biometrics based on geometrical feature extraction. Electron. Lett. Comput. Vis. Image Anal. 2005, 5, 84–95. [Google Scholar] [CrossRef]

- Tomczyk, A.; Szczepaniak, P.S. Ear Detection Using Convolutional Neural Network on Graphs with Filter Rotation. Sensors 2019, 19, 5510. [Google Scholar] [CrossRef] [PubMed]

- Abdellatef, E.; Omran, E.M.; Soliman, R.F.; Ismail, N.A.; Elrahman, S.E.S.E.A.; Ismail, K.N.; Rihan, M.; El-Samie, F.E.A.; Eisa, A.A. Fusion of deep-learned and hand-crafted features for cancelable recognition systems. Soft Comput. 2020, 24, 15189–15208. [Google Scholar] [CrossRef]

- Traore, I.; Alshahrani, M.; Obaidat, M.S. State of the art and perspectives on traditional and emerging biometrics: A survey. Secur. Priv. 2018, 1, e44. [Google Scholar] [CrossRef]

- Prakash, S.; Gupta, P. Human recognition using 3D ear images. Neurocomputing 2014, 140, 317–325. [Google Scholar] [CrossRef]

- Raposo, R.; Hoyle, E.; Peixinho, A.; Proenca, H. UBEAR: A dataset of ear images captured on-the-move in uncontrolled conditions. In Proceedings of the 2011 IEEE Workshop on Computational Intelligence in Biometrics and Identity Management (CIBIM), Paris, France, 11–15 April 2011; pp. 84–90. [Google Scholar] [CrossRef]

- Abaza, A.; Bourlai, T. On ear-based human identification in the mid-wave infrared spectrum. Image Vis. Comput. 2013, 31, 640–648. [Google Scholar] [CrossRef]

- Pandiar, A.; Ntalianis, K. Palanisamy, Intelligent Computing, Information and Control Systems, Advances in Intelligent Systems and Computing 1039; Springer: Berlin/Heidelberg, Germany, 2019; pp. 176–185. [Google Scholar]

- Nait-Ali, A. (Ed.) Hidden Biometrics; Springer: Berlin/Heidelberg, Germany, 2020. [Google Scholar] [CrossRef]

- Srinivas, N.; Flynn, P.J.; Bruegge, R.W.V. Human Identification Using Automatic and Semi-Automatically Detected Facial Marks. J. Forensic Sci. 2015, 61, S117–S130. [Google Scholar] [CrossRef]

- Almisreb, A.; Jamil, N. Advanced Technologies in Robotics and Intelligent Systems; Proceedings of ITR 2019; Springer: Berlin/Heidelberg, Germany, 2012; pp. 199–203. [Google Scholar] [CrossRef]

- Almisreb, A.; Jamil, N. Automated Ear Segmentation in Various Illumination Conditions. In Proceedings of the IEEE 8th International Colloquium on Signal Processing and Its Applications, Malacca, Malaysia, 23–25 March 2012; pp. 199–203. [Google Scholar]

- Kang, J.S.; Lawryshyn, Y.; Hatzinakos, D. Neural Network Architecture and Transient Evoked Otoacoustic Emission (TEOAE) Biometrics for Identification and Verification. IEEE Trans. Inf. Forensics Secur. 2019, 15, 2291–2301. [Google Scholar] [CrossRef]

- Rane, M.E.; Bhadade, U.S. Multimodal score level fusion for recognition using face and palmprint. Int. J. Electr. Eng. Educ. 2020. [Google Scholar] [CrossRef]

- Saini, R.; Rana, N. Comparison of Various Biometrics Methods. Int. J. Adv. Sci. Technol. 2014, 2, 24–30. [Google Scholar]

- Patil, S. Biometric Recognition Using Unimodal and Multimodal Features. Int. J. Innov. Res. Comput. Commun. Eng. 2014, 2, 6824–6829. [Google Scholar]

- Khan, B.; Khan, M.; Alghathbar, K. Biometrics and identity management for homeland security applications in Saudi Arabia. Afr. J. Bus. Manag. 2010, 4, 3296–3306. [Google Scholar]

- Zhang, J.; Ma, Q.; Cui, X.; Guo, H.; Wang, K.; Zhu, D. High-throughput corn ear screening method based on two-pathway convolutional neural network. Comput. Electron. Agric. 2020, 179, 105525. [Google Scholar] [CrossRef]

- Bansal, J.; Das, K.N.; Nagar, A.; Deep, K.; Ojha, A. Soft Computing for Problem Solving. In Advances in Intelligent Systems and Computing; Springer: Singapore, 2017; pp. 1–9. [Google Scholar] [CrossRef]

- Earnest, E.; Hansley, E.; Segundo, P.; Sarkar, S. Employing fusion of learned and handcrafted Feature for unconstrained ear recognition. IET Biom. 2018, 7, 215–223. [Google Scholar]

- Chen, L.; Mu, Z. Partial Data Ear Recognition From One Sample per Person. IEEE Trans. Hum. Mach. Syst. 2016, 46, 799–809. [Google Scholar] [CrossRef]

- Wang, X.; Yuan, W. Gabor wavelets and General Discriminant analysis for ear recognition. In Proceedings of the 8th World Congress on Intelligent Control and Automation, Jinan, China, 7–9 July 2010; pp. 6305–6308. [Google Scholar] [CrossRef]

- Fahmi, P.A.; Kodirov, E.; Choi, D.J.; Lee, G.S.; Azli, A.M.F.; Sayeed, S. Implicit Authentication based on Ear Shape Biometrics using Smartphone Camera during a call. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Seoul, Korea, 14–17 October 2012; pp. 2272–2276. [Google Scholar]

- Ariffin, S.M.Z.S.Z.; Jamil, N. Cross-band ear recognition in low or variant illumination environments. In Proceedings of the International Symposium on Biometrics and Security Technologies (ISBAST), Kuala Lumpur, Malaysia, 26–27 August 2014; pp. 90–94. [Google Scholar] [CrossRef]

- Al Rahhal, M.M.; Mekhalfi, M.L.; Guermoui, M.; Othman, E.; Lei, B.; Mahmood, A. A Dense Phase Descriptor for Human Ear Recognition. IEEE Access 2018, 6, 11883–11887. [Google Scholar] [CrossRef]

- Oravec, M. Feature extraction and classification by machine learning methods for biometric recognition of face and iris. In Proceedings of the ELMAR-2014, Zadar, Croatia, 10–12 September 2014; pp. 1–4. [Google Scholar] [CrossRef]

- Wu, Y.; Chen, Z.; Sun, D.; Zhao, L.; Zhou, C.; Yue, W. Human Ear Recognition Using HOG with PCA Dimension Reduction and LBP. In Proceedings of the 2019 IEEE 9th International Conference on Electronics Information and Emergency Communication (ICEIEC), Beijing, China, 12–14 July 2019; pp. 72–75. [Google Scholar] [CrossRef]

- Sable, A.H.; Talbar, S.N. An Adaptive Entropy Based Scale Invariant Face Recognition Face Altered by Plastic Surgery. Pattern Recognit. Image Anal. 2018, 28, 813–829. [Google Scholar] [CrossRef]

- Mali, K.; Bhattacharya, S. Comparative Study of Different Biometric Features. Int. J. Adv. Res. Comput. Commun. Eng. 2013, 2, 30–35. [Google Scholar]

- Kandgaonkar, T.V.; Mente, R.S.; Shinde, A.R.; Raut, S.D. Ear Biometrics: A Survey on Ear Image Databases and Techniques for Ear Detection and Recognition. IBMRD’s J. Manag. Res. 2015, 4, 88–103. [Google Scholar] [CrossRef]

- Sikarwar, R.; Yadav, P. An Approach to Face Detection and Feature Extraction using Canny Method. Int. J. Comput. Appl. 2017, 163, 1–5. [Google Scholar] [CrossRef]

- Maity, S. 3D Ear Biometrics and Surveillance Video Based Biometrics. Ph.D. Thesis, University of Miami, Miami, FL, USA, 2017; p. 1789, Open Access Dissertations. Available online: https://scholarlyrepository.miami.edu/oa_dissertations/1789 (accessed on 5 December 2022).

- Mamta; Hanmandlu, M. Robust ear based authentication using Local Principal Independent Components. Expert Syst. Appl. 2013, 40, 6478–6490. [Google Scholar] [CrossRef]

- Galdámez, P.L.; Raveane, W.; Arrieta, A.G. A brief review of the ear recognition process using deep neural networks. J. Appl. Log. 2017, 24, 62–70. [Google Scholar] [CrossRef]

- Li, L.; Zhong, B.; Hutmacher, C.; Liang, Y.; Horrey, W.J.; Xu, X. Detection of driver manual distraction via image-based hand and ear recognition. Accid. Anal. Prev. 2020, 137, 105432. [Google Scholar] [CrossRef] [PubMed]

- Nguyen, K.; Fookes, C.; Sridharan, S.; Tistarelli, M.; Nixon, M. Super-resolution for biometrics: A comprehensive survey. Pattern Recognit. 2018, 78, 23–42. [Google Scholar] [CrossRef]

- HaCohen-Kerner, Y.; Hagege, R. Language and Gender Classification of Speech Files Using Supervised Machine Learning Methods. Cybern. Syst. 2017, 48, 510–535. [Google Scholar] [CrossRef]

- Kaur, P.; Krishan, K.; Sharma, S.K.; Kanchan, T. Facial-recognition algorithms: A literature review. Med. Sci. Law 2020, 60, 131–139. [Google Scholar] [CrossRef] [PubMed]

- Pedrycz, W.; Chen, S. Deep Learning: Algorithms and Applications. In Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2020; pp. 157–170. [Google Scholar] [CrossRef]

- Zhang, Y.; Mu, Z. Ear Detection under Uncontrolled Conditions with Multiple Scale Faster Region-Based Convolutional Neural Networks. Symmetry 2017, 9, 53. [Google Scholar] [CrossRef]

- Eyiokur, F.I.; Yaman, D.; Ekenel, H.K. Domain adaptation for ear recognition using deep convolutional neural networks. IET Biom. 2018, 7, 199–206. [Google Scholar] [CrossRef]

- Kandaswamy, C.; Monteiro, J.C.; Silva, L.M.; Cardoso, J.S. Multi-source deep transfer learning for cross-sensor biometrics. Neural Comput. Appl. 2016, 28, 2461–2475. [Google Scholar] [CrossRef]

- Sinha, H.; Manekar, R.; Sinha, Y.; Ajmera, P.K. Convolutional Neural Network-Based Human Identification Using Outer Ear Images. In Soft Computing for Problem Solving; Springer: Berlin/Heidelberg, Germany, 2018; pp. 707–719. [Google Scholar] [CrossRef]

- Xu, X.; Liu, Y.; Cao, S.; Lu, L. An Efficient and Lightweight Method for Human Ear Recognition Based on MobileNet. Wirel Commun. Mob. Comput. 2022, 2022, 9069007. [Google Scholar] [CrossRef]

- Hidayati, N.; Maulidah, M.; Saputra, E.P. Ear Identification Using Convolution Neural Network. Available online: www.iocscience.org/ejournal/index.php/mantik/article/download/2263/1800 (accessed on 18 December 2022).

- Madec, S.; Jin, X.; Lu, H.; De Solan, B.; Liu, S.; Duyme, F.; Heritier, E.; Baret, F. Ear density estimation from high resolution RGB imagery using deep learning technique. Agric. For. Meteorol. 2018, 264, 225–234. [Google Scholar] [CrossRef]

- Mikolajczyk, M.; Grochowski, M. Data augmentation for improving deep learning in image classification. In Proceedings of the 2018 International Interdisciplinary PhD Workshop (IIPhDW), Świnoujście, Poland, 9–12 May 2018; pp. 215–224. [Google Scholar]

- Jiang, R.; Tsun, L.; Crookes, D.; Meng, W.; Rosenberger, C. Deep Biometrics, Unsupervised and Semi-Supervised Learning; Springer: Berlin/Heidelberg, Germany, 2020; pp. 238–322. [Google Scholar] [CrossRef]

- Pereira, T.M.; Conceição, R.C.; Sencadas, V.; Sebastião, R. Biometric Recognition: A Systematic Review on Electrocardiogram Data Acquisition Methods. Sensors 2023, 23, 1507. [Google Scholar] [CrossRef]

- Raveane, W.; Galdámez, P.L.; Arrieta, M.A.G. Ear Detection and Localization with Convolutional Neural Networks in Natural Images and Videos. Processes 2019, 7, 457. [Google Scholar] [CrossRef]

- Martinez, A.; Moritz, N.; Meyer, B. Should Deep Neural Nets Have Ears? The Role of Auditory Features in Deep Learning Approaches; Interspeech: Incheon, Korea, 2014; pp. 1–5. [Google Scholar]

- Jamil, N.; Almisreb, A.; Ariffin, S.; Din, N.; Hamzah, R. Can Convolution Neural Network (CNN) Triumph in Ear Recognition of Uniform Illumination Variant? Indones. J. Electr. Eng. Comput. Sci. 2018, 11, 558–566. [Google Scholar]

- de Campos, L.M.L.; de Oliveira, R.C.L.; Roisenberg, M. Optimization of neural networks through grammatical evolution and a genetic algorithm. Expert Syst. Appl. 2016, 56, 368–384. [Google Scholar] [CrossRef]

- El-Bakry, H.; Mastorakis, N. Ear Recognition by using Neural networks. J. Math. Methods Appl. Comput. 2010, 770–804. Available online: https://www.researchgate.net/profile/Hazem-El-Bakry/publication/228387551_Ear_recognition_by_using_neural_networks/links/553fa82c0cf2320416eb244b/Ear-recognition-by-using-neural-networks.pdf (accessed on 18 December 2022).

- Victor, B.; Bowyer, K.; Sarkar, S. An evaluation of face and ear biometrics. In Proceedings of the International Conference on Pattern Recognition, Quebec City, QC, Canada, 11–15 August 2002; Volume 1, pp. 429–432. [Google Scholar]

- Jacob, L.; Raju, G. Ear recognition using texture features-a novel approach. In Advances in Signal Processing and Intelligent Recognition Systems; Springer International Publishing: New York, NY, USA, 2014; pp. 1–12. [Google Scholar]

- Kumar, A.; Zhang, D. Ear authentication using Log-Gabor wavelets. In Proceedings of the Symposium on Defense and Security, International Society for Optics and Photonics, Orlando, FL, USA, 9–13 April 2007; pp. 1–5. [Google Scholar]

- Lumini, A.; Nanni, L. An improved BioHashing for human authentication. Pattern Recognit. 2007, 40, 1057–1065. [Google Scholar] [CrossRef]

- Arbab-Zavar, B.; Nixon, M. Robust Log-Gabor Filter for Ear Biometrics. In Proceedings of the International Conference on Pattern Recognition (ICPR 2008), Tampa, FL, USA, 8–11 December 2008; pp. 1–4. [Google Scholar]

- Wang, Y.; Mu, Z.-C.; Zeng, H. Block-based and multi-resolution methods for ear recognition using wavelet transform and uniform local binary patterns. In Proceedings of the 19th International Conference on Pattern Recognition (ICPR 2008), Tampa, FL, USA, 8–11 December 2008; pp. 1–4. [Google Scholar] [CrossRef]

- Xie, Z.; Mu, Z. Ear recognition using LLE and IDLLE algorithm. In Proceedings of the 19th International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; pp. 1–4. [Google Scholar] [CrossRef]

- Zhang, Z.; Liu, H. Multi-view ear recognition based on B-Spline pose manifold construction. In Proceedings of the 7th World Congress on Intelligent Control and Automation, Chongqing, China, 25–27 June 2008; pp. 2416–2421. [Google Scholar] [CrossRef]

- Nanni, L.; Lumini, A. Fusion of color spaces for ear authentication. Pattern Recognit. 2009, 42, 1906–1913. [Google Scholar] [CrossRef]

- Xiaoyun, W.; Weiqi, Y. Human ear recognition based on block segmentation. In Proceedings of the Cyber-Enabled Distributed Computing and Knowledge Discovery, Zhangjiajie, China, 10–11 October 2009; pp. 262–266. [Google Scholar]

- Chan, S.; Kumar, A. Reliable ear identification using 2-D quadrature filters. Pattern Recognit. Lett. 2012, 33, 1870–1881. [Google Scholar] [CrossRef]

- Ganapathi, I.I.; Prakash, S.; Dave, I.R.; Bakshi, S. Unconstrained ear detection using ensemble-based convolutional neural network model. Concurr. Comput. Pract. Exp. 2019, 32, e5197. [Google Scholar] [CrossRef]

- Baoqing, Z.; Zhichun, M.; Chen, J.; Jiyuan, D. A robust algorithm for ear recognition under partial occlusion. In Proceedings of the Chinese Control Conference, Xi’an, China, 26–28 July 2013; pp. 3800–3804. [Google Scholar]

- Kacar, U.; Kirci, M. ScoreNet: Deep Cascade Score Level Fusion for Unconstrained Ear Recognition. Available online: https://ietresearch.onlinelibrary.wiley.com/doi/pdfdirect/10.1049/iet-bmt.2018.5065 (accessed on 18 December 2022).

- Wang, Y.; Cheng, K.; Zhao, S.; Xu, E. Human Ear Image Recognition Method Using PCA and Fisherface Complementary Double Feature Extraction. Available online: https://ojs.istp-press.com/jait/article/download/146/159 (accessed on 18 December 2022).

- Basit, A.; Shoaib, M. A human ear recognition method using nonlinear curvelet feature subspace. Int. J. Comput. Math. 2014, 91, 616–624. [Google Scholar] [CrossRef]

- Benzaoui, A.; Hadid, A.; Boukrouche, A. Ear biometric recognition using local texture descriptors. J. Electron. Imaging 2014, 23, 053008. [Google Scholar] [CrossRef]

- Khorsandi, R.; Abdel-Mottaleb, M. Gender classification using 2-D ear images and sparse representation. In Proceedings of the 2013 IEEE Workshop on Applications of Computer Vision (WACV), Clearwater Beach, FL, USA, 15–17 January 2013; pp. 461–466. [Google Scholar] [CrossRef]

- Pflug, A.; Paul, N.; Busch, N. A comparative study on texture and surface descriptors for ear biometrics. In Proceedings of the International Carnahan Conference on Security Technology, Rome, Italy, 13–16 October 2014; pp. 1–6. [Google Scholar]

- Ying, T.; Debin, Z.; Baihuan, Z. Ear recognition based on weighted wavelet transform and DCT. In Proceedings of the Chinese Conference on Control and Decision, Changsha, China, 31 May–2 June 2014; pp. 4410–4414. [Google Scholar]

- Chattopadhyay, P.K.; Bhatia, S. Morphological examination of ear: A study of an Indian population. Leg. Med. 2009, 11, S190–S193. [Google Scholar] [CrossRef] [PubMed]

- Krishan, K.; Kanchan, T.; Thakur, S. A study of morphological variations of the human ear for its applications in personal identification. Egypt. J. Forensic Sci. 2019, 9, 1–11. [Google Scholar] [CrossRef]

- Houcine, B.; Hakim, D.; Amir, B.; Hani, B.A.; Bourouba, H. Ear recognition based on Multi-bags-of-features histogram. In Proceedings of the International Conference on Control, Engineering Information Technology, Tlemcen, Algeria, 25–27 May 2015; pp. 1–6. [Google Scholar] [CrossRef]

- Meraoumia, A.; Chitroub, S.; Bouridane, A. An automated ear identification system using Gabor filter responses. In Proceedings of the International Conference on New Circuits and Systems, Grenoble, France, 7–10 June 2015; pp. 1–4. [Google Scholar]

- Morales, A.; Diaz, M.; Llinas-Sanchez, G.; Ferrer, M. Ear print recognition based on an ensemble of global and local features. In Proceedings of the International Carnahan Conference on Security Technology, Taipei, Taiwan, 21–24 September 2015; pp. 253–258. [Google Scholar]

- Sánchez, D.; Melin, P.; Castillo, O. Optimization of modular granular neural networks using a firefly algorithm for human recognition. Eng. Appl. Artif. Intell. 2017, 64, 172–186. [Google Scholar] [CrossRef]

- Almisreb, A.; Jamil, N.; Din, M. Utilizing AlexNet Deep Transfer Learning for Ear Recognition. In Proceedings of the 4th International Conference on Information Retrieval and Knowledge Management (CAMP), Kota Kinabalu, Malaysia, 26–28 March 2018; pp. 1–5. [Google Scholar]

- Wiseman, K.B.; McCreery, R.W.; Walker, E.A. Hearing Thresholds, Speech Recognition, and Audibility as Indicators for Modifying Intervention in Children With Hearing Aids. Ear Hear. 2023. [Google Scholar] [CrossRef]

- Khan, M.A.; Kwon, S.; Choo, J.; Hong, S.J.; Kang, S.H.; Park, I.-H.; Kim, S.K. Automatic detection of tympanic membrane and middle ear infection from oto-endoscopic images via convolutional neural networks. Neural Netw. 2020, 126, 384–394. [Google Scholar] [CrossRef] [PubMed]

- Ma, Y.; Huang, Z.; Wang, X.; Huang, K. An Overview of Multimodal Biometrics Using the Face and Ear. Math. Probl. Eng. 2020, 2020, 6802905. [Google Scholar] [CrossRef]

- Hazra, A.; Choudhury, S.; Bhattacharyya, N.; Chaki, N. An Intelligent Scheme for Human Ear Recognition Based on Shape and Amplitude Features. In Advanced Computing Systems for Security: 13; Lecture Notes in Networks and Systems, Volume 241; Chaki, R., Chaki, N., Cortesi, A., Saeed, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2022. [Google Scholar] [CrossRef]

- Jeyabharathi, J.; Devi, S.; Krishnan, B.; Samuel, R.; Anees, M.I.; Jegadeesan, R. Human Ear Identification System Using Shape and structural feature based on SIFT and ANN Classifier. In Proceedings of the International Conference on Communication, Computing and Internet of Things (IC3IoT), Chennai, India, 10–11 March 2022; pp. 1–6. [Google Scholar] [CrossRef]

- Xu, X.; Lu, L.; Zhang, X.; Lu, H.; Deng, W. Multispectral palmprint recognition using multiclass projection extreme learning machine and digital shearlet transform. Neural Comput. Appl. 2016, 27, 143–153. [Google Scholar] [CrossRef]

- Borodo, S.; Shamsuddin, S.; Hasan, S. Big Data Platforms and Techniques. Indones. J. Electr. Eng. Comput. Sci. 2016, 1, 191–200. [Google Scholar] [CrossRef]

- Hubel, D.H.; Wiesel, T.N. Receptive fields of single neurones in the cat’s striate cortex. J. Physiol. 1959, 148, 574–591. [Google Scholar] [CrossRef] [PubMed]

- Booysens, A.; Viriri, S. Ear biometrics using deep learning: A survey. Appl. Comput. Intell. Soft Comput. 2022, 2022. [Google Scholar] [CrossRef]

- Zhao, H.M.; Yao, R.; Xu, L.; Yuan, Y.; Li, G.Y.; Deng, W. Study on a Novel Fault Damage Degree Identification Method Using High-Order Differential Mathematical Morphology Gradient Spectrum Entropy. Entropy 2018, 20, 682. [Google Scholar] [CrossRef] [PubMed]

- Gonzalez, E.; Alvarez, L.; Mazorra, L. Normalization and feature extraction on ear images. In Proceedings of the IEEE International Carnahan Conference on Security, Newton, MA, USA, 15–18 October 2012; pp. 97–104. [Google Scholar] [CrossRef]

- Zhang, J.; Yu, W.; Yang, X.; Deng, F. Few-shot learning for ear recognition. In Proceedings of the 2019 International Conference on Image, Video and Signal Processing, New York, NY, USA, 25–28 February 2019; pp. 50–54. [Google Scholar] [CrossRef]

- Zou, Q.; Wang, C.; Yang, S.; Chen, B. A compact periocular recognition system based on deep learning framework AttenMidNet with the attention mechanism. Multimed. Tools Appl. 2022. [Google Scholar] [CrossRef]

- Shafi’I, M.; Latiff, M.; Chiroma, H.; Osho, O.; Abdul-Salaam, G.; Abubakar, A.; Herawan, T. A Review on Mobile SMS Spam Filtering Techniques. IEEE Access 2017, 5, 15650–15666. [Google Scholar] [CrossRef]

- Perkowitz, S. The Bias in the Machine: Facial Recognition Technology and Racial Disparities. MIT Case Stud. Soc. Ethic Responsib. Comput. 2021. [Google Scholar] [CrossRef]

- Kamboj, A.; Rani, R.; Nigam, A. A comprehensive survey and deep learning-based approach for human recognition using ear biometric. Vis. Comput. 2021, 38, 2383–2416. [Google Scholar] [CrossRef]

- Othman, R.; Alizadeh, F.; Sutherland, A. A novel approach for occluded ear recognition based on shape context. In Proceedings of the 2018 International Conference on Advanced Science and Engineering (ICOASE), Duhok, Iraq, 9–11 October 2018; pp. 93–98. [Google Scholar]

- Zangeneh, E.; Rahmati, M.; Mohsenzadeh, Y. Low resolution face recognition using a two-branch deep convolutional neural network architecture. Expert Syst. Appl. 2020, 139, 112854. [Google Scholar] [CrossRef]

- Toprak, I.; Toygar, Ö. Detection of spoofing attacks for ear biometrics through image quality assessment and deep learning. Expert Syst. Appl. 2021, 172, 114600. [Google Scholar] [CrossRef]

- Rahim, M.; Rehman, A.; Kurniawan, F.; Saba, T. Biometrics for Human Classification Based on Region Features Mining. Biomed. Res. 2017, 28, 4660–4664. [Google Scholar]

- Hurley, D.; Nixon, M.; Carter, J. Automatic ear recognition by force field transformations. In Proceedings of the IEE Colloquium on Visual Biometrics, Ref. No. 2000/018, London, UK, 2 March 2000; pp. 1–5. [Google Scholar]

- Abaza, A.; Ross, A.; Herbert, C.; Harrison, M.; Nixon, M. A survey on ear biometrics. ACM Comput. Surv. 2013, 45, 1–35. [Google Scholar] [CrossRef]

- Chowdhury, D.P.; Bakshi, S.; Sa, P.K.; Majhi, B. Wavelet energy feature based source camera identification for ear biometric images. Pattern Recognit. Lett. 2018, 130, 139–147. [Google Scholar] [CrossRef]

- Miccini, R.; Spagnol, S. HRTF Individualization using Deep Learning. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; pp. 390–395. [Google Scholar]

- Bargal, S.A.; Welles, A.; Chan, C.R.; Howes, S.; Sclaroff, S.; Ragan, E.; Johnson, C.; Gill, C. Image-based Ear Biometric Smartphone App for Patient Identification in Field Settings. In Proceedings of the 10th International Conference on Computer Vision Theory and Applications, Berlin, Germany, 11–14 March 2010; pp. 171–179. [Google Scholar] [CrossRef]

- Agarwal, R. Local and Global Features Based on Ear Recognition System. In International Conference on Artificial Intelligence and Sustainable Engineering; Sanyal, G., Travieso-González, C.M., Awasthi, S., Pinto, C.M., Purushothama, B.R., Eds.; Lecture Notes in Electrical Engineering; Springer: Singapore, 2022; p. 837. [Google Scholar] [CrossRef]

- Chowdhury, D.P.; Bakshi, S.; Pero, C.; Olague, G.; Sa, P.K. Privacy Preserving Ear Recognition System Using Transfer Learning in Industry 4.0. In IEEE Transactions on Industrial Informatics; IEEE: New York, NY, USA, 2022; pp. 1–10. [Google Scholar] [CrossRef]

- Minaee, S.; Abdolrashidi, A.; Su, H.; Bennamoun, M.; Zhang, D. Biometrics recognition using deep learning: A survey. Artif. Intell. Rev. 2023, 1–49. [Google Scholar] [CrossRef]

- Kamboj, A.; Rani, R.; Nigam, A.; Jha, R.R. CED-Net: Context-aware ear detection network for unconstrained images. Pattern Anal. Appl. 2020, 24, 779–800. [Google Scholar] [CrossRef]

- Ganapathi, I.I.; Ali, S.S.; Prakash, S.; Vu, N.S.; Werghi, N. A Survey of 3D Ear Recognition Techniques. ACM Comput. Surv. 2023, 55, 1–36. [Google Scholar] [CrossRef]

- Alkababji, A.M.; Mohammed, O.H. Real time ear recognition using deep learning. TELKOMNIKA Telecommun. Comput. Electron. Control 2021, 19, 523–530. [Google Scholar] [CrossRef]

- Hamdany, A.H.S.; Ebrahem, A.T.; Alkababji, A.M. Earprint recognition using deep learning technique. TELKOMNIKA Telecommun. Comput. Electron. Control 2021, 19, 432–437. [Google Scholar] [CrossRef]

- Hadi, R.A.; George, L.E.; Ahmed, Z.J. Automatic human ear detection approach using modified adaptive search window technique. TELKOMNIKA Telecommun. Comput. Electron. Control 2021, 19, 507–514. [Google Scholar] [CrossRef]

- Mussi, E.; Servi, M.; Facchini, F.; Furferi, R.; Governi, L.; Volpe, Y. A novel ear elements segmentation algorithm on depth map images. Comput. Biol. Med. 2021, 129, 104157. [Google Scholar] [CrossRef]

- Kamboj, A.; Rani, R.; Nigam, A. CG-ERNet: A lightweight Curvature Gabor filtering based ear recognition network for data scarce scenario. Multimed. Tools Appl. 2021, 80, 26571–26613. [Google Scholar] [CrossRef]

- Emersic, Z.; Susanj, D.; Meden, B.; Peer, P.; Struc, V. ContexedNet: Context–Aware Ear Detection in Unconstrained Settings. IEEE Access 2021, 9, 145175–145190. [Google Scholar] [CrossRef]

- El-Naggar, S.; Abaza, A.; Bourlai, T. Ear Detection in the Wild Using Faster R-CNN Deep Learning. In Proceedings of the 2018 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), Barcelona, Spain, 28–31 August 2018; pp. 1124–1130. [Google Scholar] [CrossRef]

- Tang, X.; Du, D.K.; He, Z.; Liu, J. PyramidBox: A Context-Assisted Single Shot Face Detector; Springer: Berlin/Heidelberg, Germany, 2018; pp. 812–828. [Google Scholar] [CrossRef]

- Najibi, M.; Samangouei, P.; Chellappa, R.; Davis, L.S. SSH: Single Stage Headless Face Detector. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4875–4884. [Google Scholar] [CrossRef]

- Khaldi, Y.; Benzaoui, A.; Ouahabi, A.; Jacques, S.; Taleb-Ahmed, A. Ear Recognition Based on Deep Unsupervised Active Learning. IEEE Sens. J. 2021, 21, 20704–20713. [Google Scholar] [CrossRef]

- Khaldi, Y.; Benzaoui, A. A new framework for grayscale ear images recognition using generative adversarial networks under unconstrained conditions. Evol. Syst. 2021, 12, 923–934. [Google Scholar] [CrossRef]

- Omara, I.; Hagag, A.; Ma, G.; El-Samie, F.E.A.; Song, E. A novel approach for ear recognition: Learning Mahalanobis distance features from deep CNNs. Mach. Vis. Appl. 2021, 32, 38. [Google Scholar] [CrossRef]

- Alejo, M.B. Unconstrained Ear Recognition Using Transformers. Jordanian J. Comput. Inf. Technol. 2021, 7, 326–336. [Google Scholar] [CrossRef]

- Alshazly, H.; Linse, C.; Barth, E.; Idris, S.A.; Martinetz, T. Towards Explainable Ear Recognition Systems Using Deep Residual Networks. IEEE Access 2021, 9, 122254–122273. [Google Scholar] [CrossRef]

- Priyadharshini, R.A.; Arivazhagan, S.; Arun, M. A deep learning approach for person identification using ear biometrics. Appl. Intell. 2020, 51, 2161–2172. [Google Scholar] [CrossRef] [PubMed]

- Lavanya, B.; Inbarani, H.H.; Azar, A.T.; Fouad, K.M.; Koubaa, A.; Kamal, N.A.; Lala, I.R. Particle Swarm Optimization Ear Identification System. In Soft Computing Applications. SOFA 2018. Advances in Intelligent Systems and Computing; Balas, V., Jain, L., Balas, M., Shahbazova, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2020; pp. 372–384. [Google Scholar] [CrossRef]

- Sarangi, P.P.; Panda, M.; Mishra, S.; Mishra, B.S.P. Multimodal biometric recognition using human ear and profile face: An improved approach. In Cognitive Data Science in Sustainable Computing, Machine Learning for Biometrics; Sarangi, P.P., Ed.; Elsevier: Amsterdam, The Netherlands; pp. 47–63. [CrossRef]

- Sarangi, P.P.; Nayak, D.R.; Panda, M.; Majhi, B. A feature-level fusion based improved multimodal biometric recognition system using ear and profile face. J. Ambient. Intell. Humaniz. Comput. 2022, 13, 1867–1898. [Google Scholar] [CrossRef]

- Abayomi-Alli, A.; Bioku, E.; Folorunso, O.; Dawodu, G.A.; Awotunde, J.B. An Occlusion and Pose Sensitive Image Dataset for Black Ear Recognition. Available online: https://zenodo.org/record/7715970#.ZBPQjPZBxPZ (accessed on 18 December 2022).

| S/n | Digital Library | No. of Articles | Percentage (%) |
|---|---|---|---|
| 1 | Taylor & Francis | 89 | 7.9 |
| 2 | Science Direct | 157 | 14.0 |
| 3 | IEEE | 255 | 22.7 |
| 4 | Emerald | 48 | 4.2 |
| 5 | ACM | 73 | 6.5 |
| 6 | Sage | 55 | 4.9 |
| 7 | Springer | 201 | 17.9 |
| 8 | Elsevier | 137 | 12.2 |
| 9 | Wiley | 45 | 4.0 |
| 10 | MIT | 61 | 5.4 |
| Total | | 1121 | 100 |
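The percentage column can be cross-checked against the article counts. The short sketch below recomputes each library's share from the counts in the table; it assumes the shares were truncated (not rounded) to one decimal place, which is an inference from the printed figures rather than something the review states. The helper name `share_truncated` is illustrative only.

```python
# Article counts per digital library, copied from the table above.
counts = {
    "Taylor & Francis": 89,
    "Science Direct": 157,
    "IEEE": 255,
    "Emerald": 48,
    "ACM": 73,
    "Sage": 55,
    "Springer": 201,
    "Elsevier": 137,
    "Wiley": 45,
    "MIT": 61,
}

total = sum(counts.values())


def share_truncated(n, total):
    """Percentage share of n in total, truncated (assumed) to one decimal place."""
    # Integer arithmetic avoids floating-point surprises before truncation.
    return (n * 1000 // total) / 10


shares = {name: share_truncated(n, total) for name, n in counts.items()}

for name, pct in shares.items():
    print(f"{name:18s} {counts[name]:5d} {pct:5.1f}")
print(f"{'Total':18s} {total:5d} {sum(shares.values()):5.1f}")
```

Under this assumption the individual shares add up to slightly less than 100, which would explain why the listed percentages do not sum exactly to the total shown in the last row.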

| S/n | Catalogue | Year | Total Images | Sides | Volunteers | Description | Availability |
|---|---|---|---|---|---|---|---|
| 1 | VGGFace-Ear | 2022 | 234651 | Both | 660 | Inter- and intra-subject variations in pose, age, illumination, and ethnicity | Free |
| 2 | UERC | 2019 | 11000 | Both | 3690 | Three image datasets to train and test images under varied parameters | Free |
| 3 | EarVN1.0 | 2018 | 28412 | N/A | 164 | Images captured under varied pose, illumination, and occlusion conditions | Free |
| 4 | USTB-HELLOEAR (A) | 2017 | 336572 | Both | 104 | Pose variations | Free |
| 5 | USTB-HELLOEAR (B) | 2017 | 275909 | Both | 466 | Left and right images captured in uncontrolled conditions | Free |
| 6 | WebEars | 2017 | 1000 | N/A | N/A | Images captured under varied conditions | Free |
| 7 | HelloEars | 2017 | 610000 | Both | 1570 | Images captured in a controlled environment | Free |
| 8 | AWE | 2016 | 1000 | Both | 100 | Images captured in the wild in an uncontrolled environment | Free |
| 9 | UND | 2014 | N/A | Both | N/A | Different image collections with varied images captured in 3D | Free |
| 10 | XM2VTS | 2014 | 4 Footages | Both | 295 | 32 kHz 16-bit audio/video files | Not Free |
| 11 | UMIST | 2014 | 564 | Both | 20 | Head rotation from the left-hand side to the frontal view | Free |
| 12 | UBEAR | 2011 | 4497 | Both | 127 | Images captured in an uncontrolled environment with different poses and occlusion | Free |
| 13 | WPUT | 2010 | 2071 | Both | 501 | Varied illumination | Free |
| 14 | YSU | 2009 | 2590 | | 259 | Images at angles between 0 and 90 degrees | Free |
| 15 | IIT Delhi | 2007 | 493 | Right | 125 | 3 images taken indoors | Free |
| 16 | WVU | 2006 | 460 | Both | 402 | 2 min audio-visual from both sides | Free |
| 17 | USTB (4) | 2005 | 8500 | Both | 500 | 15-degree differences using 17 cameras | Free |
| 18 | USTB (3) | 2004 | 1738 | Right | 79 | Dual images at 5-degree variation up to 60 degrees | Free |
| 19 | USTB (2) | 2003 | 308 | Right | 77 | Varying degrees of illumination at +30 and −30 degrees | Free |
| 20 | USTB (1) | 2002 | 180 | Right | 60 | Different illumination conditions at a trivial angle | Free |
| 21 | UND (E) | 2002 | 942 | Both | 302 | Both 2D and 3D pictures | Free |
| 22 | UND (F) | 2003 | 464 | Side | 114 | Side profile appearance | Free |
| 23 | UND (G) | 2005 | 738 | Side | 235 | 2D and 3D pictures | Free |
| 24 | UND (J2) | 2005 | 1800 | Both | 415 | 2D and 3D pictures | Free |
| 25 | IITD | 2007 | 663 | Right | 121 | Greyscale images with slight angle variations | Free |
| 26 | PERPINAN | 1995 | 102 | Left | 17 | Images with minor pose variations captured in a controlled environment | Free |
| 27 | AMI | N/A | 700 | Both | 100 | Fixed illumination | Free |
| 28 | NCKU | N/A | 330 | Both | 90 | 37 images for each respondent | Free |

| Pre-Processing | Feature Extraction | Decision-Making and Classification |
|---|---|---|
| Filter method: Log-Gabor filter [54]; Gaussian filter [55]; Middle filter [55,56]; Fuzzy filter [24]. Intensity method: Histogram equalization [53,57]; RGB to grayscale conversion [25,55] | Geometric method: Numerical technique [58]; Ear contour [25]; Edge detection [59]. Appearance-based method: Feature descriptors [60]; Dimension reduction [61]; Force field transformations [62]; Wavelet method [63] | Neural networks [64]; Normalized cross-correlation [53]; SVM classifier [64,65]; K-nearest neighbours [28]; Minimum distance classifier [50] |
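As a rough illustration of the two intensity methods listed under pre-processing, the sketch below converts an RGB image to grayscale and then applies histogram equalization, using only the Python standard library. It is a minimal sketch, not the procedure of any cited study: the luma weights (ITU-R BT.601), the function names, and the tiny 2×2 test image are all assumptions made for the example.

```python
def rgb_to_gray(pixel):
    """Luma-weighted gray level for one (R, G, B) pixel (BT.601 weights, assumed)."""
    r, g, b = pixel
    return int(round(0.299 * r + 0.587 * g + 0.114 * b))


def equalize_histogram(gray, levels=256):
    """Histogram-equalize a 2-D list of integer gray levels in [0, levels)."""
    flat = [v for row in gray for v in row]
    hist = [0] * levels
    for v in flat:
        hist[v] += 1
    # Cumulative distribution of gray levels.
    cdf, running = [], 0
    for h in hist:
        running += h
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    n = len(flat)
    if n == cdf_min:
        # Constant image: there is no contrast to stretch.
        return [row[:] for row in gray]
    scale = (levels - 1) / (n - cdf_min)
    return [[int(round((cdf[v] - cdf_min) * scale)) for v in row] for row in gray]


# Hypothetical low-contrast 2x2 image, used only to exercise the functions.
rgb_image = [
    [(52, 52, 52), (60, 60, 60)],
    [(60, 60, 60), (70, 70, 70)],
]
gray_image = [[rgb_to_gray(p) for p in row] for row in rgb_image]
equalized = equalize_histogram(gray_image)
print(gray_image)
print(equalized)
```

The equalized output spreads the three original gray levels across the full 0 to 255 range, which is the contrast-stretching effect that makes this step useful before feature extraction.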

| Traditional Learning Technique | | | |
|---|---|---|---|
| True Acceptance Rate [6,78,79,80,81,82,83] | Template capacity [5,84,85,86] | False Acceptance Rate [4,6,21,23,83,87,88,89,90,91] | Equal Error Rate [92,93,94] |
| Matching Speed [3,95] | Recognition Accuracy [14,15,24,28,68,85,96,97,98,99,100,101,102,103,104,105] | Recall [106,107,108] | Precision [40,95,102,109,110,111] |
| Deep Learning Techniques | | | |
| True Acceptance Rate [110,111,112,113,114] | Template capacity [115] | False Acceptance Rate [110,111,112,113,114] | Equal Error Rate [72,114] |
| Matching Speed [61,115,116,117] | Recognition Accuracy [70,118,119,120,121] | Recall [57,77,122,123,124,125] | Precision [126,127] |
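Several of the metrics named in this table follow directly from comparison scores. The sketch below shows one common way to compute the false acceptance rate, false rejection rate, true acceptance rate, an approximate equal error rate, and precision and recall. It assumes a higher score means a better match and that a comparison is accepted when its score is at or above the threshold; the score lists and function names are hypothetical, and individual studies in the table may define or estimate these quantities differently.

```python
def error_rates(genuine, impostor, threshold):
    """Return (FAR, FRR) when a comparison is accepted if score >= threshold."""
    far = sum(s >= threshold for s in impostor) / len(impostor)
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr


def equal_error_rate(genuine, impostor):
    """Approximate EER: scan observed scores for the threshold where FAR and FRR are closest."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = error_rates(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]


def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    return tp / (tp + fp), tp / (tp + fn)


# Hypothetical similarity scores, for illustration only.
genuine = [0.9, 0.8, 0.75, 0.6, 0.4]   # same-ear comparisons
impostor = [0.7, 0.5, 0.3, 0.2, 0.1]   # different-ear comparisons

far, frr = error_rates(genuine, impostor, threshold=0.6)
tar = 1 - frr  # true acceptance rate is the complement of FRR
eer, eer_threshold = equal_error_rate(genuine, impostor)
precision, recall = precision_recall(tp=8, fp=2, fn=2)
print(far, frr, tar, eer, eer_threshold, precision, recall)
```

Scanning only the observed scores keeps the example short; with real score distributions the EER is usually interpolated between neighbouring thresholds.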

| Reference | Year | Method | Type | Dataset | Performance (%) |

|---|---|---|---|---|---|

| [7] | 2010 | PCA and NN | Holistic | UBEAR | 96 |

| [18] | 2022 | Deep Learning | CNN | VGGFace | NA |

| [23] | 2019 | NA | NA | NA | NA |

| [27] | 2016 | Geometric features | Geometric features | CP | 88 |

| [31] | 2003 | Force field transform | Holistic | Own | NA |

| [31] | 2003 | PCA | Holistic | UND(E) | 71.6 |

| [35] | 2005 | Matrix factorization | Holistic | USTB II | 91 |

| [38] | 2008 | Sparse representation | Holistic | UND | 96.9 |

| [39] | 2010 | Moment invariant method | Holistic | Own | 91.8 |

| [40] | 2010 | SIFT | Local | XM2VTS | 96 |

| [41] | 2007 | Combination of pre-filtered points and SIFT | Local | XM2VTS | 91.5 |

| [47] | 2007 | PCA and wavelet transformation | Hybrid | USTB II, CP | 90.5 |

| [47] | 2007 | Inpainting techniques, neural networks | CNN, Traditional learning | UERC | 75 |

| [48] | 2013 | SIFT | Local | CP | 78.8 |

| [49] | 2014 | Hybrid based on SURF, LDA, and NN | Hybrid | Own | 97 |

| [49] | 2014 | Neural networks | Deep CNN | UERC | 99.7 |

| [72] | 2019 | Neural Networks | CNN | AMI | 75.6 |

| [73] | 1999 | Orthogonal log-Gabor filter pairs | Local | IITD II | 95.9 |

| [75] | 2005 | Ear framework geometry | Geometric | Own | 86.2 |

| [81] | 2013 | Not Applicable (NA) | NA | NA | NA |

| [85] | 2019 | NA | NA | NA | NA |

| [87] | 2019 | Neural networks | CNN | - | - |

| [92] | 2020 | Deep learning | CNN | NA | 97 |

| [98] | 2014 | Edge image dimension | Geometric | USTB II | 85 |

| [107] | 2016 | CNN | Local | Avila Police School & Bisite Video | 80.5 & 79.2 |

| [107] | 2013 | Deep neural network | CNN | Avila Police School | 84 |

| [108] | 2017 | Traditional Machine Learning | YOLO, Multilayer perceptron | Own | 82 |

| [117] | 2018 | Maximum and minimum height lines | Geometric | USTDB&IIT Delhi | 98.3 & 99.6 |

| [119] | 2018 | Deep Learning | CNN | Open image dataset | 85 |

| [123] | 2023 | Neural networks | CNN | AMI, UND, Video Dataset, UBEAR | 98 |

| [128] | 2010 | PCA | Holistic | Own | 40 |

| [129] | 2002 | ICA | Holistic | Own | 94.1 |

| [130] | 2014 | Log-Gabor wavelets | Local | UND | 90 |

| [131] | 2007 | Multi-Matcher | Hybrid | UND(E) | 80 |

| [132] | 2007 | Log-Gabor filters | Local | XM2VTS | 85.7 |

| [133] | 2008 | LBP and Haar Wavelet transformation | Hybrid | USTB III | 92.4 |

| [134] | 2008 | Improved locally linear embedding | Holistic | USTB III | 90 |

| [135] | 2008 | Null Kernel discriminant analysis | Holistic | USTB I | 97.7 |

| [136] | 2008 | Gabor filters | Local | UND(E) | 84 |

| [137] | 2009 | Block portioning and Gabor transformation | Local | USTB II | 100 |

| [138] | 2009 | 2D quadrature filter | Local | IITD I | 96.5 |

| [140] | 2013 | Sparse representation classification | Holistic | USTB III | 90 |

| [141] | 2019 | Multi-level fusion | Hybrid | USTB II | 99.2 |

| [142] | 2014 | Enhanced SURF with NN | Local | IITK 1 | 2.8 |

| [143] | 2014 | Non-linear curvelet features | Local | IITD II | 96.2 |

| [144] | 2014 | BSIF | Local | IITD II | 97.3 |

| [145] | 2014 | LPQ | Local | Several | 93.1 |

| [146] | 2014 | LPQ, BSIF, LBP, HOG with LDA | Hybrid | UND-J2, AMI, IITK | 98.7 |

| [147] | 2014 | Weighted wavelet transforms and DCT | Hybrid | Own | 98.1 |

| [148] | 2015 | Haar wavelet and LBP | Hybrid | IITD | 94.5 |

| [149] | 2016 | BSIF | Local | IITD I, IITD II | 96.7 & 97.3 |

| [150] | 2015 | Multi-bags-of-features histogram | Local | IITD I | 6.3 |

| [151] | 2015 | Gabor filters | Local | IITD II | 92.4 |

| [153] | 2017 | Modular neural network | Hybrid | USTB | 99 |

| [154] | 2018 | Biased normalized cut and morphological operations | Deep Neural Network | Own | 95 |

| [155] | 2018 | Traditional machine learning | Local | NA | NA |

| [156] | 2020 | Deep learning | CNN | Own | 95 |

| [157] | 2020 | Traditional Machine Learning | Sparse Representation | USTB III | NA |

| [158] | 2022 | Traditional Machine Learning | Hybrid | IITDelhi | NA |

| [159] | 2022 | Deep Learning | SIFT and ANN | IITDelhi | NA |

| [180] | 2022 | Global and local ear prints | Hybrid | FEARID | 91.3 |

| Stage | Sub-Area | Pros | Cons |
|---|---|---|---|
| Pre-processing | Filter method | No need for object segmentation | Aligned ears are at a disadvantage |
| | | Graceful degradation is a major boost | Some details may be lost |
| | | Suitable for non-aligned images | Limited bandwidth is a drawback |
| | Intensity method | Reduced computational difficulty | Distorted uniform images are concealed |
| | | Spin and reflection invariant | Poor performance against scaling |
| | | Limited false matches | Copy and paste regions of an image cannot be detected |
| Feature Extraction | Geometric method | Suitable for obtaining a non-varying feature | Increased computation requirements |
| | | Methods are easy to implement | Results can sometimes be inaccurate |
| | | Image orientations are detected | Susceptible to noise |
| | Appearance Method | Very robust, particularly in 2-dimensional space | Performance decreases with size |
| | | Any image characteristic can be extracted as a feature | Average accuracy is lower than with other methods |
| | | Minimized false matches | Cannot handle certain compressions |
| | | It can be used with a few selected features | Illumination is a significant factor |
| | | Recognition accuracy is high | Good-quality images are required |
| Classification | Neural Networks | Non-linear problems are easily resolved | Poorly suited to small training datasets |
| | Support Vector | Increased performance with gap in classes | Unsuitable for large datasets |
| | | Improved memory utilization | Noise is not effectively controlled |
| | | Improved memory utilization | Limited explanation for classification |

| Year | Authors | Dataset | Approaches | Methods | Architecture | Status | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Holistic | Local | Geometric | Hybrid | TL | DL | CNN | Others | Unspecified | Assessment (A) | Proposed (S) | Designed (D) | Planned & Assessed (P&A) | Proposed & Executed (P&E) | |||

| 2016 | [3] | x | x | x | ||||||||||||

| 2017 | [5] | x | x | |||||||||||||

| 2019 | [6] | x | x | x | x | |||||||||||

| 2010 | [7] | x | x | |||||||||||||

| 2017b | [10] | x | x | x | x | |||||||||||

| 2019 | [12] | x | x | x | ||||||||||||

| 2016 | [16] | x | x | x | x | |||||||||||

| 2018 | [17] | x | x | x | x | |||||||||||

| 2022 | [18] | x | x | x | ||||||||||||

| 2018 | [20] | x | x | x | x | |||||||||||

| 2017 | [24] | x | x | x | ||||||||||||

| 2012 | [25] | x | x | x | x | x | ||||||||||

| 2012 | [28] | x | x | x | x | |||||||||||

| 2016 | [29] | x | x | x | ||||||||||||

| 2018 | [34] | x | x | x | x | |||||||||||

| 2010 | [39] | x | x | x | x | |||||||||||

| 2010 | [40] | x | x | x | x | |||||||||||

| 2018 | [43] | x | x | x | x | |||||||||||

| 2013 | [46] | x | x | x | x | x | ||||||||||

| 2013 | [48] | x | x | x | x | x | ||||||||||

| 2014 | [49] | x | x | x | x | x | ||||||||||

| 2015 | [50] | x | x | x | x | x | ||||||||||

| 2021 | [51] | x | x | |||||||||||||

| 2016 | [52] | x | x | x | x | |||||||||||

| 2016 | [53] | x | x | x | ||||||||||||

| 2011 | [55] | x | x | x | x | x | ||||||||||

| 2015 | [56] | x | x | x | x | x | ||||||||||

| 2016 | [57] | x | x | x | x | x | ||||||||||

| 2014 | [58] | x | x | |||||||||||||

| 2018 | [59] | x | x | x | x | |||||||||||

| 2018 | [60] | x | x | x | x | |||||||||||

| 2016 | [61] | x | x | x | x | |||||||||||

| 2016 | [64] | x | x | x | ||||||||||||

| 2015 | [65] | x | x | x | x | x | ||||||||||

| 2022 | [66] | x | x | x | x | |||||||||||

| 2018 | [69] | x | x | x | x | |||||||||||

| 2019 | [72] | x | x | x | x | |||||||||||

| 2019 | [76] | x | x | x | x | |||||||||||

| 2020 | [77] | x | x | |||||||||||||

| 2018 | [78] | x | x | x | x | |||||||||||

| 2014 | [79] | x | x | x | x | |||||||||||

| 2011 | [80] | x | x | |||||||||||||

| 2013 | [81] | x | x | x | x | |||||||||||

| 2020 | [83] | x | x | x | ||||||||||||

| 2019 | [87] | x | x | x | x | x | ||||||||||

| 2020 | [88] | x | x | x | x | |||||||||||

| 2010 | [91] | x | x | x | x | x | ||||||||||

| 2020 | [92] | x | x | x | x | |||||||||||

| 2017 | [93] | x | x | |||||||||||||

| 2018 | [94] | x | x | x | x | |||||||||||

| 2016 | [95] | x | x | x | x | |||||||||||

| 2014 | [98] | x | x | x | x | |||||||||||

| 2018 | [99] | x | x | x | x | |||||||||||

| 2014 | [100] | x | x | x | ||||||||||||

| 2019 | [101] | x | x | x | x | |||||||||||

| 2018 | [102] | x | x | x | x | |||||||||||

| 2017 | [104] | x | x | |||||||||||||

| 2013 | [106] | x | x | x | x | |||||||||||

| 2016 | [107] | x | x | x | ||||||||||||

| 2020 | [108] | x | x | x | ||||||||||||

| 2017 | [109] | x | x | x | x | |||||||||||

| 2017 | [110] | x | x | x | x | |||||||||||

| 2020 | [111] | x | x | |||||||||||||

| 2020 | [112] | x | x | x | ||||||||||||

| 2017 | [113] | x | x | x | ||||||||||||

| 2019 | [116] | x | x | x | x | |||||||||||

| 2018 | [119] | x | x | x | ||||||||||||

| 2020 | [121] | x | x | x | x | |||||||||||

| 2019 | [123] | x | x | x | x | |||||||||||

| 2014 | [124] | x | x | x | x | x | ||||||||||

| 2016 | [126] | x | x | x | x | |||||||||||

| 2010 | [127] | x | x | x | x | x | ||||||||||

| 2013 | [140] | x | x | x | ||||||||||||

| 2013 | [141] | x | x | x | x | x | ||||||||||

| 2014 | [142] | x | x | x | x | x | ||||||||||

| 2014 | [143] | x | x | x | x | |||||||||||

| 2015 | [150] | x | x | x | x | |||||||||||

| 2020 | [156] | x | x | x | x | |||||||||||

| 2020 | [157] | x | x | x | x | |||||||||||

| 2019 | [166] | x | x | x | x | |||||||||||

| 2018 | [167] | x | x | x | x | |||||||||||

| 2010 | [179] | x | x | x | x | x | ||||||||||

| 2020 | [183] | x | x | x | x | |||||||||||

| 2021 | [184] | x | ||||||||||||||

| 2021 | [185] | x | x | x | x | |||||||||||

| 2021 | [186] | x | x | x | ||||||||||||

| 2021 | [187] | x | x | x | ||||||||||||

| 2021 | [188] | x | x | |||||||||||||

| 2021 | [189] | x | x | x | x | |||||||||||

| 2021 | [190] | x | x | x | x | |||||||||||

| 2021 | [194] | x | x | x | ||||||||||||

| 2021 | [195] | x | x | x | x | |||||||||||

| 2021 | [196] | x | x | x | x | |||||||||||

| 2021 | [198] | x | x | x | x | |||||||||||

| 2021 | [199] | x | x | x | x | |||||||||||

| 2022 | [202] | x | x | x | x | |||||||||||

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Oyebiyi, O.G.; Abayomi-Alli, A.; Arogundade, O.‘T.; Qazi, A.; Imoize, A.L.; Awotunde, J.B. A Systematic Literature Review on Human Ear Biometrics: Approaches, Algorithms, and Trend in the Last Decade. Information 2023, 14, 192. https://doi.org/10.3390/info14030192
