Enhanced Learning and Forgetting Behavior for Contextual Knowledge Tracing

Abstract

1. Introduction

- Can exercise embeddings and students’ answering performance be enriched to increase the learnable information of models?

- Can contextual information for exercises be derived by considering students’ historical learning sequences?

- Can the learning and forgetting behavior of students be modeled more accurately by incorporating pedagogical theory?

- To distinguish exercises involving the same knowledge concept (KC), we incorporated item response theory (IRT) [26] to enrich the exercise embeddings with difficulty information. In addition, we present an expanded Q matrix and an exercise–KC relation layer to reduce the subjective bias of the human-calibrated Q matrix. We then incorporated students’ answer time and hint times into the embeddings of their answering performance, because both reflect proficiency in the corresponding KC: the higher the proficiency, the less answer time and the fewer hints a student needs.

- Inspired by self-attention KT models such as AKT, we modeled the contextual information of learned sequences with an LSTM network to represent the impact of students’ historical learning sequences.

- Combining our KT model with educational psychology theories, we split the students’ learning process into two parts: knowledge acquisition and knowledge retention. Knowledge acquisition simulates the expansion of knowledge gained by students’ learning behavior, and knowledge retention simulates students’ knowledge absorption and forgetting to determine the degree of knowledge retention. Furthermore, we modeled three factors affecting knowledge acquisition and retention: students’ repeated learning times, sequential learning time intervals, and current knowledge state.
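As background for the first contribution, the simplest IRT formulation is the one-parameter (Rasch) model, in which the probability of a correct answer depends only on the gap between a student's ability and an exercise's difficulty. The sketch below is a generic illustration of that formula, not the embedding used in LFEKT; the function name and the ability and difficulty values are assumptions chosen for the example.

```python
import math


def rasch_probability(ability: float, difficulty: float) -> float:
    """Probability of a correct answer under the one-parameter (Rasch) IRT model."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


# Two hypothetical exercises on the same KC that differ only in difficulty.
easy = rasch_probability(ability=0.5, difficulty=-1.0)
hard = rasch_probability(ability=0.5, difficulty=2.0)
print(f"easy exercise: {easy:.3f}, hard exercise: {hard:.3f}")
```

For the same student, the harder exercise yields a lower predicted probability of success, which is the kind of signal a difficulty-aware embedding can use to tell apart exercises that share a KC.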

2. Related Works

2.1. Knowledge Tracing

2.2. Learning and Forgetting

2.3. The Context of Learning Sequence

2.4. Item Response Theory

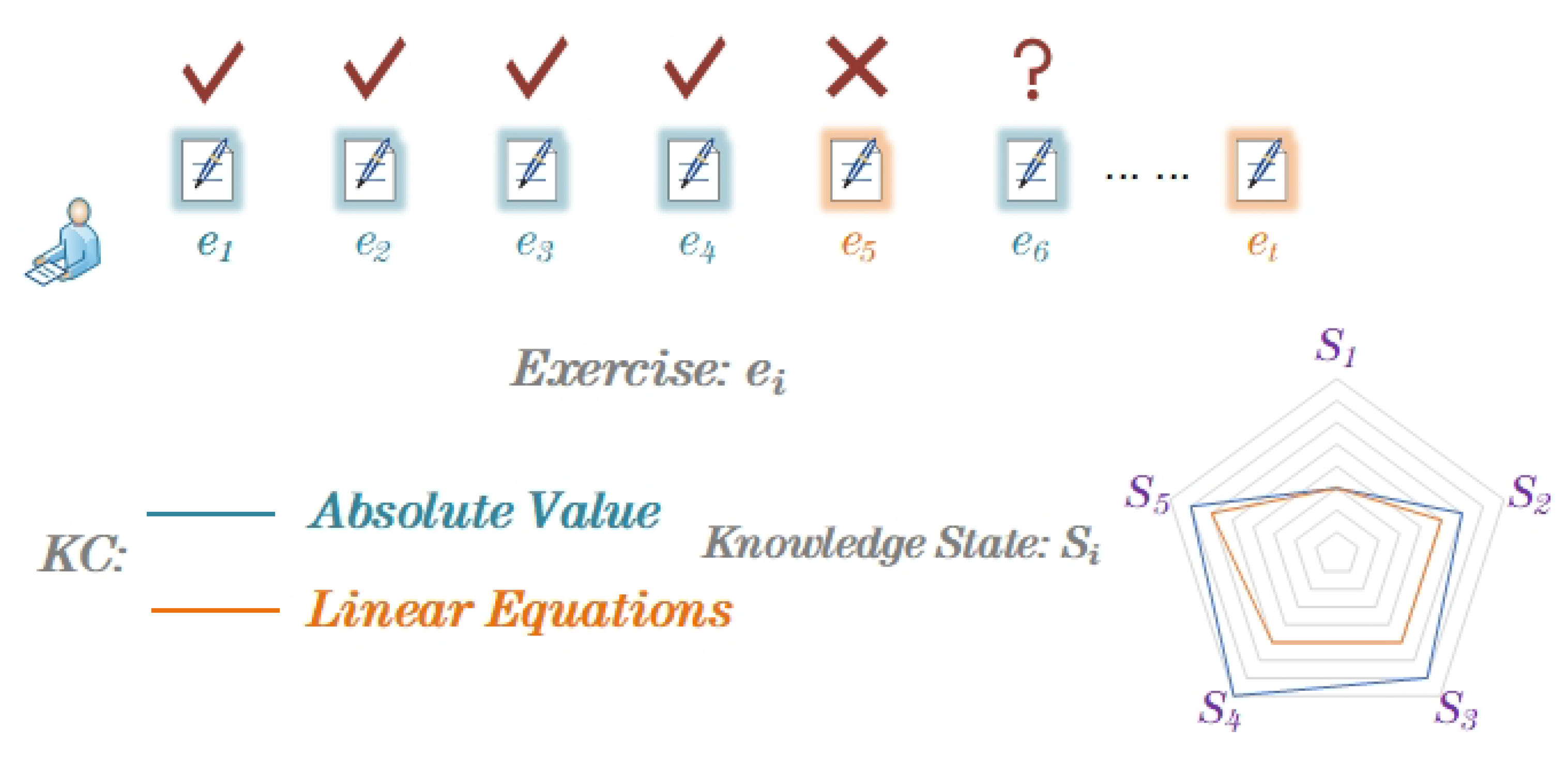

3. Problem Definition

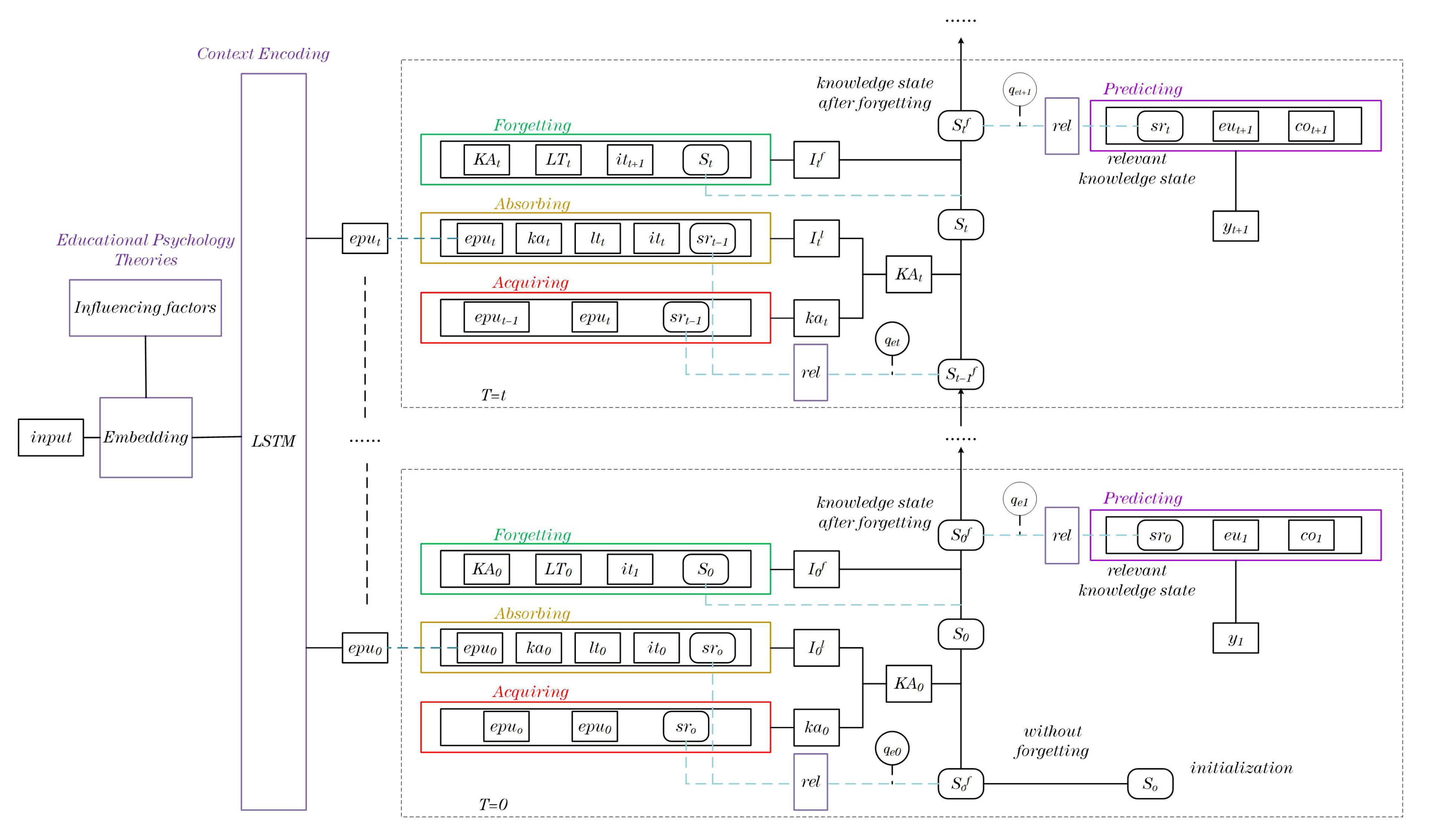

4. Methodology

4.1. Embedding Module

4.1.1. Exercise Embedding

4.1.2. Answering Performance Embedding

4.1.3. Exercise Performance Embedding

4.1.4. Historical Behavior Embedding

4.1.5. Knowledge Embedding

4.2. Knowledge Acquisition Module

4.3. Knowledge Retention Module

4.3.1. Knowledge Absorption Module

4.3.2. Knowledge Forgetting Module

4.4. Predicting Module

5. Experiments

5.1. Training Details

5.2. Datasets

- ASSISTments 2009 (ASSIST2009) (https://sites.google.com/site/assistmentsdata/home (accessed on 1 March 2022)) was collected by the online intelligent tutoring system ASSISTments [64] and has been widely used to evaluate KT models.

- ASSISTments 2012 (ASSIST2012) (https://sites.google.com/site/assistmentsdata/home (accessed on 1 March 2022)) was also collected from ASSISTments and contains data and impact forecasts for the 2012–2013 school year.

- ASSISTments Challenge (ASSISTChall) (https://sites.google.com/site/assistmentsdata/home (accessed on 1 March 2022)) comes from the same source as ASSIST2009 and ASSIST2012. The researchers gathered these data from a study that traced secondary school students’ use of teaching assistant blended learning platforms between 2004 and 2007. The average learning sequence length of students is the longest in this dataset.

- Statics2011 (https://pslcdatashop.web.cmu.edu/DatasetInfo?datasetId=507 (accessed on 1 April 2022)) was collected from a university-level Engineering Statics course and has the most KCs of the four datasets.

5.3. Baseline Models

- BKT [5] is a classic KT model that uses a hidden Markov model (HMM) to trace and represent students’ mastery of KCs.

- DKT [6] was the first to apply the RNN and LSTM to KT. We used the LSTM variant to model students’ changing knowledge state.

- The DKVMN [13] borrowed the idea of a memory network to obtain an interpretable representation of students’ knowledge state. When updating students’ knowledge state, the forgetting mechanism is also considered.

- CKT [34], the convolutional knowledge tracing model, addresses student-personalized KT by using hierarchical convolutional layers to extract personalized learning rates from continuous learning interactions.

- SAKT [31] introduced a self-attention mechanism to the KT task and used a transformer model to capture the relationship between students’ learning interactions over time.

- AKT [23] adopted two self-attention encoders to learn context-aware representations of exercises and answers and combined self-attention with a monotonic attention mechanism to capture long-term temporal information. AKT also generated an embedding for exercises based on the Rasch model.

- LPKT [14] recorded the changes after each learning interaction of students, taking into account the impact of students’ learning and forgetting.
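To make the HMM formulation behind the BKT baseline concrete, the sketch below applies the standard BKT update: a Bayesian posterior over mastery given the observed answer, followed by a learning transition. It is a minimal illustration under stated assumptions; the function names and the slip, guess, and learn values are hypothetical and are not the settings used in the experiments.

```python
def bkt_update(p_mastery: float, correct: bool,
               p_slip: float = 0.1, p_guess: float = 0.2,
               p_learn: float = 0.15) -> float:
    """Return the mastery probability for one KC after observing one answer."""
    if correct:
        # The student either knew the KC and did not slip, or guessed correctly.
        numerator = p_mastery * (1.0 - p_slip)
        denominator = numerator + (1.0 - p_mastery) * p_guess
    else:
        # The student either knew the KC and slipped, or did not know and failed.
        numerator = p_mastery * p_slip
        denominator = numerator + (1.0 - p_mastery) * (1.0 - p_guess)
    posterior = numerator / denominator
    # Learning transition: an unmastered KC may become mastered after practice.
    return posterior + (1.0 - posterior) * p_learn


def bkt_predict_correct(p_mastery: float,
                        p_slip: float = 0.1, p_guess: float = 0.2) -> float:
    """Probability that the next answer on this KC is correct."""
    return p_mastery * (1.0 - p_slip) + (1.0 - p_mastery) * p_guess


# Trace a hypothetical answer sequence on a single KC.
p = 0.3
for answer in [True, False, True, True]:
    p = bkt_update(p, answer)
print(f"estimated mastery: {p:.3f}, next-answer probability: {bkt_predict_correct(p):.3f}")
```

Note that this classic formulation has no forgetting transition: mastery can only be revised downward through the Bayesian posterior, never through elapsed time.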

5.4. Evaluation Methodology

5.5. Experimental Results and Analysis

- The KT models based on deep learning outperformed the traditional methods. On all four datasets, DKT, the DKVMN, SAKT, AKT, LPKT, and LFEKT improved significantly over BKT, which demonstrates the effectiveness of deep-learning-based KT models.

- Students’ learning and forgetting behavior cannot be ignored. Compared to the traditional KT model, the LSTM-based DKT exhibited excellent performance. However, DKT represents the overall knowledge state of students through the latent vector of the LSTM, so it cannot provide students’ mastery of each KC. The DKVMN can represent students’ mastery of each KC through a value matrix, but it does not consider the forgetting behavior in the learning process. The DKVMN assumes by default that students’ mastery of KCs remains unchanged over time and models learning behavior in a relatively simple way, so its prediction performance was not as good as that of LFEKT. Both SAKT and AKT use a self-attention mechanism to optimize their performance. AKT uses the Rasch model to enrich the exercise information, so its prediction performance was better than that of SAKT. However, AKT only uses a decaying kernel function to simulate the forgetting behavior of students, whereas LFEKT, which comprehensively models students’ learning and forgetting behavior, performed better and predicted students’ future performance more accurately. Both CKT and LFEKT performed well on the Statics2011 dataset; however, LFEKT performed significantly better than CKT on the other datasets, demonstrating that generality is an advantage of our model.

- The exercise performance units, which combine exercise information with students’ answering performance, were effective. Compared with LPKT, which also models learning and forgetting effects, LFEKT showed advantages on all four datasets. This indicates that enhancing the exercise unit and the performance unit was effective and that encoding the context of the learned sequence helped to improve the prediction performance.
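The comparison above is reported in terms of the two metrics that head the results table, AUC and ACC. As a reminder of what they measure, the sketch below computes both from binary labels and predicted probabilities in plain Python. It is an illustrative assumption rather than the evaluation code used in the experiments; in particular, the 0.5 decision threshold for ACC and the toy data are hypothetical.

```python
def accuracy(labels, scores, threshold=0.5):
    """Fraction of answers whose thresholded prediction matches the label."""
    hits = sum((s >= threshold) == (y == 1) for y, s in zip(labels, scores))
    return hits / len(labels)


def auc(labels, scores):
    """AUC as the probability that a correct answer outranks an incorrect one."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Toy predictions for four answers (1 = correct, 0 = incorrect).
labels = [1, 0, 1, 0]
scores = [0.9, 0.4, 0.6, 0.7]
print(f"AUC = {auc(labels, scores):.2f}, ACC = {accuracy(labels, scores):.2f}")
```

The pairwise AUC above is quadratic in the number of answers; for full datasets a rank-based implementation from a standard library would be the more practical choice.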

5.6. Ablation Experiments

- LFEKT-NF refers to the LFEKT that does not consider knowledge forgetting; that is, the knowledge forgetting layer was removed.

- LFEKT-NL refers to the LFEKT that does not consider knowledge retention; that is, the knowledge absorption layer was removed.

- LFEKT-NCT refers to the LFEKT that does not use LSTM to capture contextual information as set by Equation (5).

- LFEKT-NQ refers to the LFEKT that does not use the enhanced Q matrix and the exercise–KC relation (rel) layer.

- LFEKT-ND refers to the LFEKT that does not introduce difficulty information to enrich the exercise embedding.

- LFEKT-NP refers to the LFEKT that does not introduce other answering performances; that is, it does not include the answering time and the hint times.
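One way to organize these variants in an experiment script is as a set of feature flags, with each ablation switching off exactly one component of the full model. The sketch below is a hypothetical configuration layout; the class name, field names, and helper are assumptions introduced for illustration and do not come from the authors' code.

```python
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class LFEKTConfig:
    """Hypothetical feature flags; every component is enabled in the full model."""
    use_forgetting_layer: bool = True
    use_absorption_layer: bool = True
    use_lstm_context: bool = True
    use_enhanced_q_matrix: bool = True
    use_difficulty: bool = True
    use_answer_time_and_hints: bool = True


FULL = LFEKTConfig()

# Each ablation variant disables a single component of the full model.
ABLATIONS = {
    "LFEKT-NF": replace(FULL, use_forgetting_layer=False),
    "LFEKT-NL": replace(FULL, use_absorption_layer=False),
    "LFEKT-NCT": replace(FULL, use_lstm_context=False),
    "LFEKT-NQ": replace(FULL, use_enhanced_q_matrix=False),
    "LFEKT-ND": replace(FULL, use_difficulty=False),
    "LFEKT-NP": replace(FULL, use_answer_time_and_hints=False),
}


def disabled_components(config: LFEKTConfig) -> list:
    """Names of the flags that are switched off in a configuration."""
    return [name for name, enabled in asdict(config).items() if not enabled]


for variant, config in ABLATIONS.items():
    print(variant, "disables", disabled_components(config))
```

Keeping the variants as single-flag differences from one baseline configuration makes it harder to change two components at once by accident, which would blur the attribution of any performance drop.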

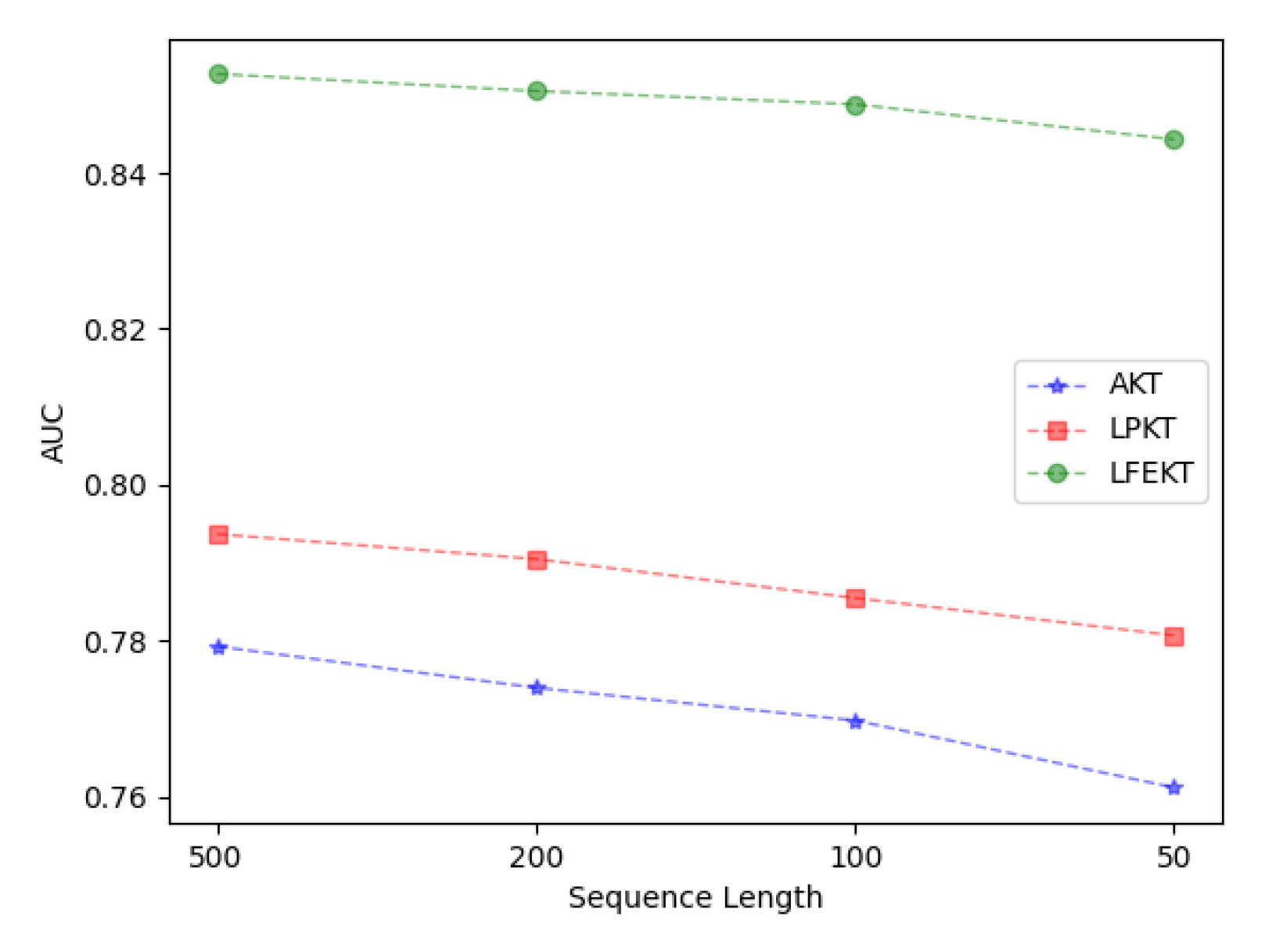

5.7. The Effect of the Length of the Learned Sequence

5.8. Knowledge State Visualization

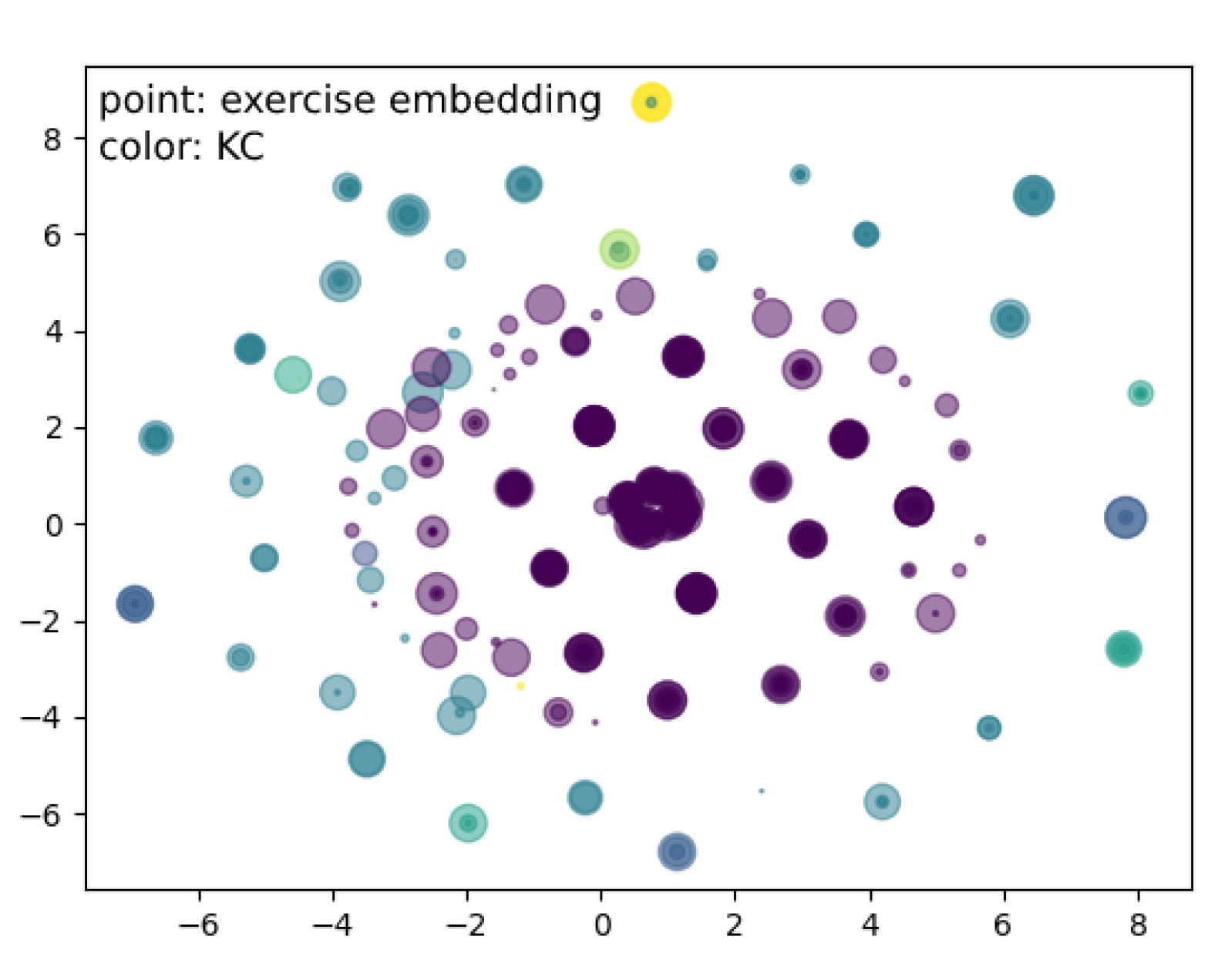

5.9. Effectiveness of Exercise Embedding

6. Conclusions

- The definition of exercise difficulty is relatively simple and may not be applicable in all educational scenarios. Currently, Bloom’s taxonomy [66] is a popular method for determining difficulty. In addition, difficulty may be determined by performing a semantic extraction of the question text. For programming questions with little text information, it is feasible to extract information from suggested answers (i.e., the code).

- Although our model is robust to changes in sequence length, the prediction performance will still decrease if the length of sequences becomes shorter. In a real learning environment, it is difficult to obtain the complete learning sequences of students. Therefore, achieving better results in short-sequence KT scenarios will also be a very challenging topic.

- In this paper, we integrated educational psychology theory and some neurological theories into our KT model. However, several other learning-related theories should be explored. Bruner’s learning theory highlights the significance of learning motivation [67]: the learning process of students is motivated by their high cognitive requirements. Integrating students’ learning motivation into the KT model is a direction that may be explored. Furthermore, students’ learning behavior is a kind of physiological activity, so more biologically inspired approaches are also worth exploring.

- Most of the public datasets used in current research are balanced, but real-world data are likely to be unbalanced. How to deal with unbalanced data and perform the corresponding preprocessing are questions to be studied.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Nguyen, T. The effectiveness of online learning: Beyond no significant difference and future horizons. MERLOT J. Online Learn. Teach. 2015, 11, 309–319.

2. Wyse, A.E.; Stickney, E.M.; Butz, D.; Beckler, A.; Close, C.N. The potential impact of COVID-19 on student learning and how schools can respond. Educ. Meas. Issues Pract. 2020, 39, 60–64.

3. Anderson, A.; Huttenlocher, D.; Kleinberg, J.; Leskovec, J. Engaging with massive online courses. In Proceedings of the 23rd International Conference on World Wide Web, Seoul, Republic of Korea, 7–11 April 2014; pp. 687–698.

4. Lan, A.S.; Studer, C.; Baraniuk, R.G. Time-varying learning and content analytics via sparse factor analysis. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014; pp. 452–461.

5. Corbett, A.T.; Anderson, J.R. Knowledge tracing: Modeling the acquisition of procedural knowledge. User Model. User-Adapt. Interact. 1994, 4, 253–278.

6. Piech, C.; Bassen, J.; Huang, J.; Ganguli, S.; Sahami, M.; Guibas, L.J.; Sohl-Dickstein, J. Deep knowledge tracing. Adv. Neural Inf. Process. Syst. 2015, 28, 1–12.

7. Liu, Q.; Shen, S.; Huang, Z.; Chen, E.; Zheng, Y. A survey of knowledge tracing. arXiv 2021, arXiv:2105.15106.

8. Abdelrahman, G.; Wang, Q.; Nunes, B.P. Knowledge tracing: A survey. ACM Comput. Surv. 2022, 55, 1–37.

9. Rabiner, L.; Juang, B. An introduction to hidden Markov models. IEEE ASSP Mag. 1986, 3, 4–16.

10. Elman, J.L. Finding structure in time. Cogn. Sci. 1990, 14, 179–211.

11. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

12. Jafari, R.; Razvarz, S.; Gegov, A. End-to-End Memory Networks: A Survey. In Proceedings of the Intelligent Computing; Arai, K., Kapoor, S., Bhatia, R., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 291–300.

13. Zhang, J.; Shi, X.; King, I.; Yeung, D.Y. Dynamic key–value memory networks for knowledge tracing. In Proceedings of the 26th International Conference on World Wide Web, Perth, Australia, 3–7 May 2017; pp. 765–774.

14. Shen, S.; Liu, Q.; Chen, E.; Huang, Z.; Huang, W.; Yin, Y.; Su, Y.; Wang, S. Learning process-consistent knowledge tracing. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Virtual, 14–18 August 2021; pp. 1452–1460.

15. Boaler, J. Mistakes grow your brain. Youcubed Stanf. Univ. Grad. Sch. Educ. 2016, 14, 2016.

16. Steuer, G.; Dresel, M. A constructive error climate as an element of effective learning environments. Psychol. Test Assess. Model. 2015, 57, 262–275.

17. Ebbinghaus, H. Memory: A contribution to experimental psychology. Ann. Neurosci. 2013, 20, 155.

18. Ricker, T.J.; Vergauwe, E.; Cowan, N. Decay theory of immediate memory: From Brown (1958) to today (2014). Q. J. Exp. Psychol. 2016, 69, 1969–1995.

19. Ryan, T.J.; Frankland, P.W. Forgetting as a form of adaptive engram cell plasticity. Nat. Rev. Neurosci. 2022, 23, 173–186.

20. de Souza Pereira Moreira, G.; Rabhi, S.; Lee, J.M.; Ak, R.; Oldridge, E. Transformers4rec: Bridging the gap between nlp and sequential/session-based recommendation. In Proceedings of the 15th ACM Conference on Recommender Systems, Amsterdam, The Netherlands, 27 September–1 October 2021; pp. 143–153.

21. Liu, Q.; Huang, Z.; Yin, Y.; Chen, E.; Xiong, H.; Su, Y.; Hu, G. Ekt: Exercise-aware knowledge tracing for student performance prediction. IEEE Trans. Knowl. Data Eng. 2019, 33, 100–115.

22. Nagatani, K.; Zhang, Q.; Sato, M.; Chen, Y.Y.; Chen, F.; Ohkuma, T. Augmenting knowledge tracing by considering forgetting behavior. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 3101–3107.

23. Ghosh, A.; Heffernan, N.; Lan, A.S. Context-aware attentive knowledge tracing. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual, 6–10 July 2020; pp. 2330–2339.

24. Bailey, C.D. Forgetting and the learning curve: A laboratory study. Manag. Sci. 1989, 35, 340–352.

25. Chen, M.; Guan, Q.; He, Y.; He, Z.; Fang, L.; Luo, W. Knowledge Tracing Model with Learning and Forgetting Behavior. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, Atlanta, GA, USA, 17–21 October 2022; pp. 3863–3867.

26. Embretson, S.E.; Reise, S.P. Item Response Theory; Psychology Press: Mahwah, NJ, USA, 2013.

27. Yeung, C.K.; Yeung, D.Y. Addressing two problems in deep knowledge tracing via prediction-consistent regularization. In Proceedings of the Fifth Annual ACM Conference on Learning at Scale, London, UK, 26–28 June 2018; pp. 1–10.

28. Chen, P.; Lu, Y.; Zheng, V.W.; Pian, Y. Prerequisite-driven deep knowledge tracing. In Proceedings of the 2018 IEEE International Conference on Data Mining (ICDM), Singapore, 17–20 November 2018; pp. 39–48.

29. Abdelrahman, G.; Wang, Q. Knowledge tracing with sequential key–value memory networks. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; pp. 175–184.

30. Sun, X.; Zhao, X.; Ma, Y.; Yuan, X.; He, F.; Feng, J. Muti-behavior features based knowledge tracking using decision tree improved DKVMN. In Proceedings of the ACM Turing Celebration Conference, Chengdu, China, 17–19 May 2019; pp. 1–6.

31. Pandey, S.; Karypis, G. A self-attentive model for knowledge tracing. arXiv 2019, arXiv:1907.06837.

32. Pandey, S.; Srivastava, J. RKT: Relation-aware self-attention for knowledge tracing. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Virtual, 19–23 October 2020; pp. 1205–1214.

33. Zhang, X.; Zhang, J.; Lin, N.; Yang, X. Sequential self-attentive model for knowledge tracing. In Proceedings of the International Conference on Artificial Neural Networks, Bratislava, Slovakia, 14–17 September 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 318–330.

34. Shen, S.; Liu, Q.; Chen, E.; Wu, H.; Huang, Z.; Zhao, W.; Su, Y.; Ma, H.; Wang, S. Convolutional knowledge tracing: Modeling individualization in student learning process. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 25–30 July 2020; pp. 1857–1860.

35. Hooshyar, D.; Huang, Y.M.; Yang, Y. GameDKT: Deep knowledge tracing in educational games. Expert Syst. Appl. 2022, 196, 116670.

36. Huang, Z.; Liu, Q.; Chen, Y.; Wu, L.; Xiao, K.; Chen, E.; Ma, H.; Hu, G. Learning or forgetting? a dynamic approach for tracking the knowledge proficiency of students. ACM Trans. Inf. Syst. (TOIS) 2020, 38, 1–33.

37. Qiu, Y.; Qi, Y.; Lu, H.; Pardos, Z.A.; Heffernan, N.T. Does Time Matter? Modeling the Effect of Time with Bayesian Knowledge Tracing. In Proceedings of the EDM, Eindhoven, The Netherlands, 6–8 July 2011; pp. 139–148.

38. Khajah, M.; Lindsey, R.V.; Mozer, M.C. How deep is knowledge tracing? arXiv 2016, arXiv:1604.02416.

39. Wang, C.; Ma, W.; Zhang, M.; Lv, C.; Wan, F.; Lin, H.; Tang, T.; Liu, Y.; Ma, S. Temporal cross-effects in knowledge tracing. In Proceedings of the 14th ACM International Conference on Web Search and Data Mining, Virtual, 8–12 March 2021; pp. 517–525.

40. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

41. Tang, D.; Qin, B.; Feng, X.; Liu, T. Effective LSTMs for target-dependent sentiment classification. arXiv 2015, arXiv:1512.01100.

42. Huang, T.; Liang, M.; Yang, H.; Li, Z.; Yu, T.; Hu, S. Context-Aware Knowledge Tracing Integrated with the Exercise Representation and Association in Mathematics. In Proceedings of the International Conference on Educational Data Mining (EDM), Online, 29 June–2 July 2021.

43. Wong, C.S.Y.; Yang, G.; Chen, N.F.; Savitha, R. Incremental Context Aware Attentive Knowledge Tracing. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 3993–3997.

44. Shin, D.; Shim, Y.; Yu, H.; Lee, S.; Kim, B.; Choi, Y. Saint+: Integrating temporal features for ednet correctness prediction. In Proceedings of the LAK21: 11th International Learning Analytics and Knowledge Conference, Irvine, CA, USA, 12–16 April 2021; pp. 490–496.

45. Choi, Y.; Lee, Y.; Cho, J.; Baek, J.; Kim, B.; Cha, Y.; Shin, D.; Bae, C.; Heo, J. Towards an appropriate query, key, and value computation for knowledge tracing. In Proceedings of the Seventh ACM Conference on Learning@ Scale, Virtual, 12–14 August 2020; pp. 341–344.

46. Wang, F.; Liu, Q.; Chen, E.; Huang, Z.; Chen, Y.; Yin, Y.; Huang, Z.; Wang, S. Neural cognitive diagnosis for intelligent education systems. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 6153–6161.

47. Minn, S.; Zhu, F.; Desmarais, M.C. Improving knowledge tracing model by integrating problem difficulty. In Proceedings of the 2018 IEEE International conference on Data Mining Workshops (ICDMW), Singapore, 17–20 November 2018; pp. 1505–1506.

48. Yudelson, M.V.; Koedinger, K.R.; Gordon, G.J. Individualized bayesian knowledge tracing models. In Proceedings of the International Conference on Artificial Intelligence in Education, Memphis, TN, USA, 9–13 July 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 171–180.

49. Converse, G.; Pu, S.; Oliveira, S. Incorporating item response theory into knowledge tracing. In Proceedings of the International Conference on Artificial Intelligence in Education, Utrecht, The Netherlands, 14–18 June 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 114–118.

50. Yeung, C.K. Deep-IRT: Make deep learning based knowledge tracing explainable using item response theory. arXiv 2019, arXiv:1904.11738.

51. Shen, S.; Huang, Z.; Liu, Q.; Su, Y.; Wang, S.; Chen, E. Assessing Student’s Dynamic Knowledge State by Exploring the Question Difficulty Effect. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022; pp. 427–437.

52. Liu, Y.; Yang, Y.; Chen, X.; Shen, J.; Zhang, H.; Yu, Y. Improving knowledge tracing via pre-training question embeddings. arXiv 2020, arXiv:2012.05031.

53. Kim, P.; Kim, P. Convolutional neural network. In MATLAB Deep Learning: With Machine Learning, Neural Networks and Artificial Intelligence; Apress: Berkeley, CA, USA, 2017; pp. 121–147.

54. Yang, B.; Li, J.; Wong, D.F.; Chao, L.S.; Wang, X.; Tu, Z. Context-aware self-attention networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 387–394.

55. Li, H.; Min, M.R.; Ge, Y.; Kadav, A. A context-aware attention network for interactive question answering. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 927–935.

56. Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019, 31, 1235–1270.

57. Pelánek, R. Modeling Students’ Memory for Application in Adaptive Educational Systems. In Proceedings of the International Conference on Educational Data Mining (EDM), Madrid, Spain, 26–29 June 2015.

58. Settles, B.; Meeder, B. A trainable spaced repetition model for language learning. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; pp. 1848–1858.

59. McGrath, C.H.; Guerin, B.; Harte, E.; Frearson, M.; Manville, C. Learning Gain in Higher Education; RAND Corporation: Santa Monica, CA, USA, 2015.

60. Käfer, J.; Kuger, S.; Klieme, E.; Kunter, M. The significance of dealing with mistakes for student achievement and motivation: Results of doubly latent multilevel analyses. Eur. J. Psychol. Educ. 2019, 34, 731–753.

61. Kliegl, O.; Bäuml, K.H.T. The Mechanisms Underlying Interference and Inhibition: A Review of Current Behavioral and Neuroimaging Research. Brain Sci. 2021, 11, 1246.

62. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

63. Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010; pp. 249–256.

64. Feng, M.; Heffernan, N.; Koedinger, K. Addressing the assessment challenge with an online system that tutors as it assesses. User Model. User-Adapt. Interact. 2009, 19, 243–266.

65. Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

66. Huitt, W. Bloom et al.’s taxonomy of the cognitive domain. Educ. Psychol. Interact. 2011, 22, 1–4.

67. Clark, K.R. Learning theories: Constructivism. Radiol. Technol. 2018, 90, 180–182.

| Model | Deep Learning | Forgetting | Context | Exercise Embedding | Answering Performance |

|---|---|---|---|---|---|

| BKT | |||||

| DKT | ✓ | ||||

| DKT+ | ✓ | ||||

| DKVMN | ✓ | ||||

| PDKT-C | ✓ | ✓ | |||

| SKVMN | ✓ | ✓ | ✓ | ||

| SAKT | ✓ | ✓ | |||

| DKVMN-DT | ✓ | ✓ | |||

| RKT | ✓ | ✓ | ✓ | ✓ | |

| AKT | ✓ | ✓ | ✓ | ✓ | |

| SSAKT | ✓ | ✓ | ✓ | ✓ | |

| CKT | ✓ | ||||

| GameDKT | ✓ | ||||

| KPT | ✓ | ✓ | |||

| DKT-Forgetting | ✓ | ✓ | |||

| LPKT | ✓ | ✓ | ✓ | ||

| HawkesKT | ✓ | ✓ | |||

| iAKT | ✓ | ✓ | |||

| ERAKT | ✓ | ✓ | |||

| EKT | ✓ | ✓ | ✓ | ||

| SAINT | ✓ | ✓ | ✓ | ||

| SAINT+ | ✓ | ✓ | ✓ | ||

| DKT-IRT | ✓ | ✓ | |||

| Deep-IRT | ✓ | ✓ | |||

| DIMKT | ✓ | ✓ | |||

| PEBG | ✓ | ✓ | |||

| LFEKT | ✓ | ✓ | ✓ | ✓ | ✓ |

| Statistic | ASSISTChall | ASSIST2012 | ASSIST2009 | Statics2011 |
|---|---|---|---|---|
| Students | 1709 | 29,018 | 4151 | 333 |
| Exercises | 3162 | 50,803 | 17,751 | 278 |
| Concepts | 102 | 198 | 123 | 1178 |
| Answer Time | 1326 | 26,747 | 140 | 2031 |
| Interval Time | 2839 | 29,538 | 25,290 | 4241 |
| Learning Times | 745 | 335 | 290 | 24 |
| Hint Times | 41 | 11 | 10 | 50 |

| Model | ASSISTChall AUC | ASSISTChall ACC | ASSIST2012 AUC | ASSIST2012 ACC | ASSIST2009 AUC | ASSIST2009 ACC | Statics2011 AUC | Statics2011 ACC |
|---|---|---|---|---|---|---|---|---|
| BKT | | | | | | | | |
| DKT | | | | | | | | |
| DKVMN | | | | | | | | |
| CKT | | | | | | | | |
| SAKT | | | | | | | | |
| AKT | | | | | | | | |
| LPKT | | | | | | | | |
| LFEKT | | | | | | | | |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, M.; Bian, K.; He, Y.; Li, Z.; Zheng, H. Enhanced Learning and Forgetting Behavior for Contextual Knowledge Tracing. Information 2023, 14, 168. https://doi.org/10.3390/info14030168