Complex Causal Extraction of Fusion of Entity Location Sensing and Graph Attention Networks

Abstract

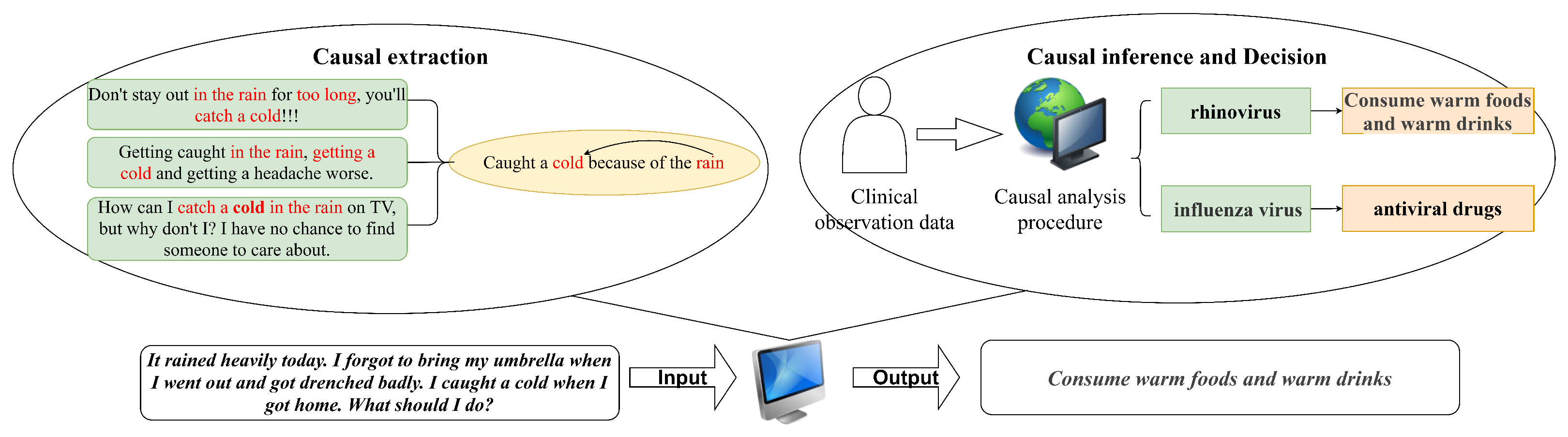

1. Introduction

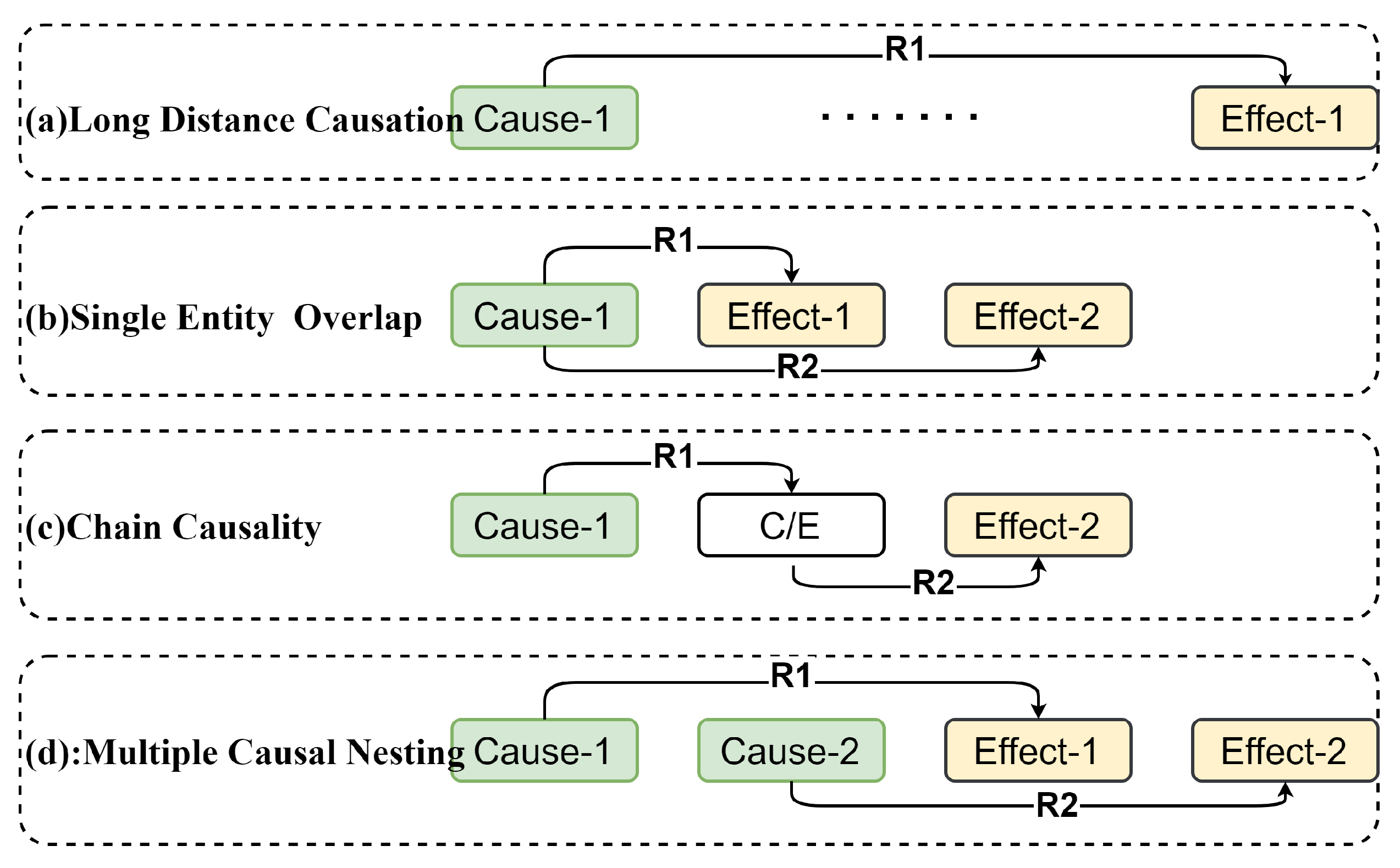

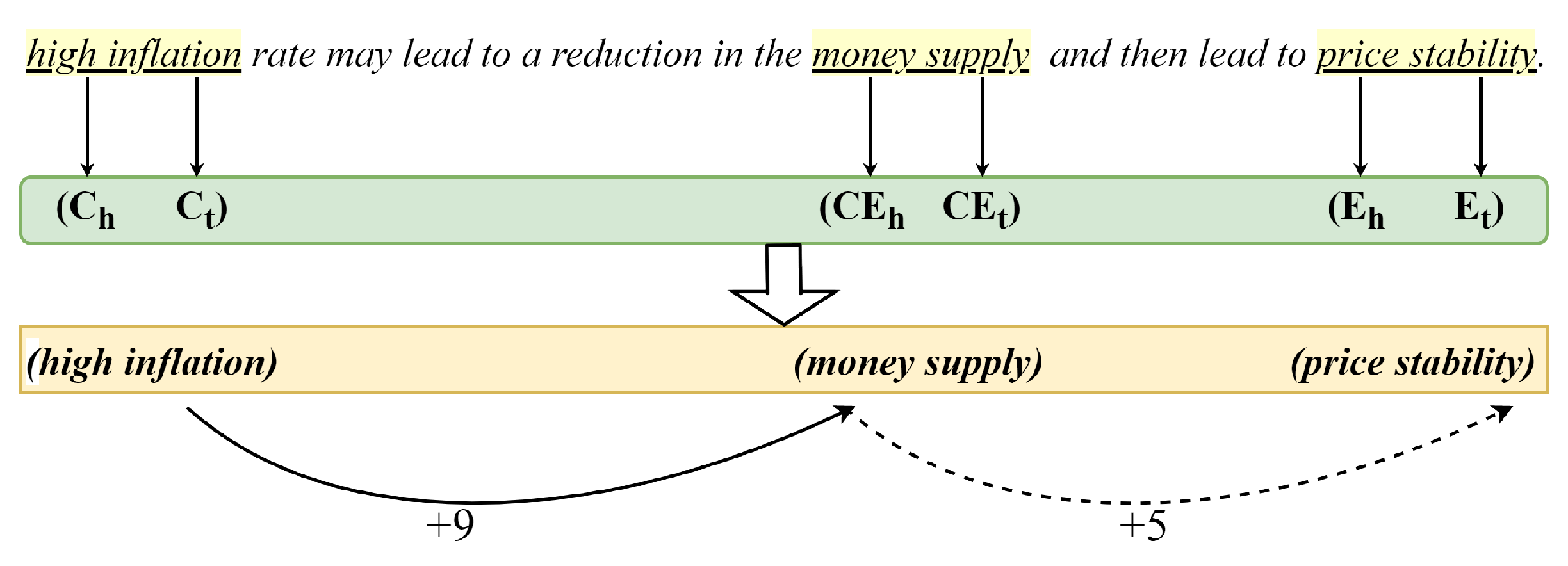

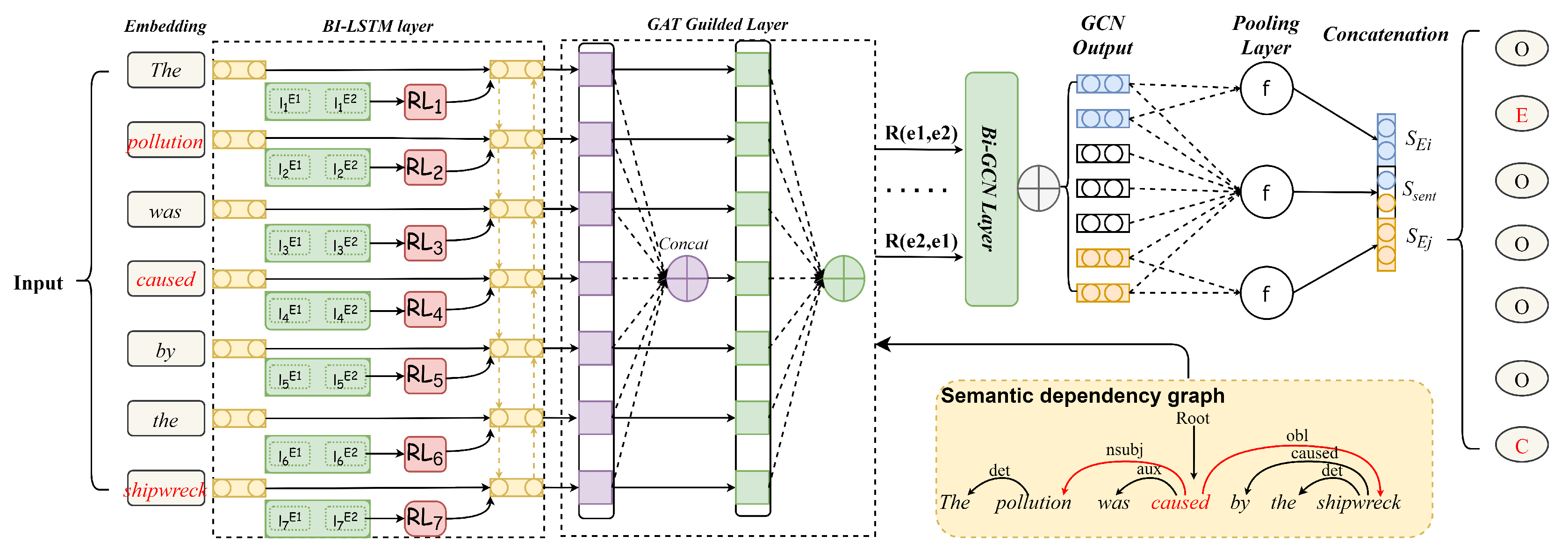

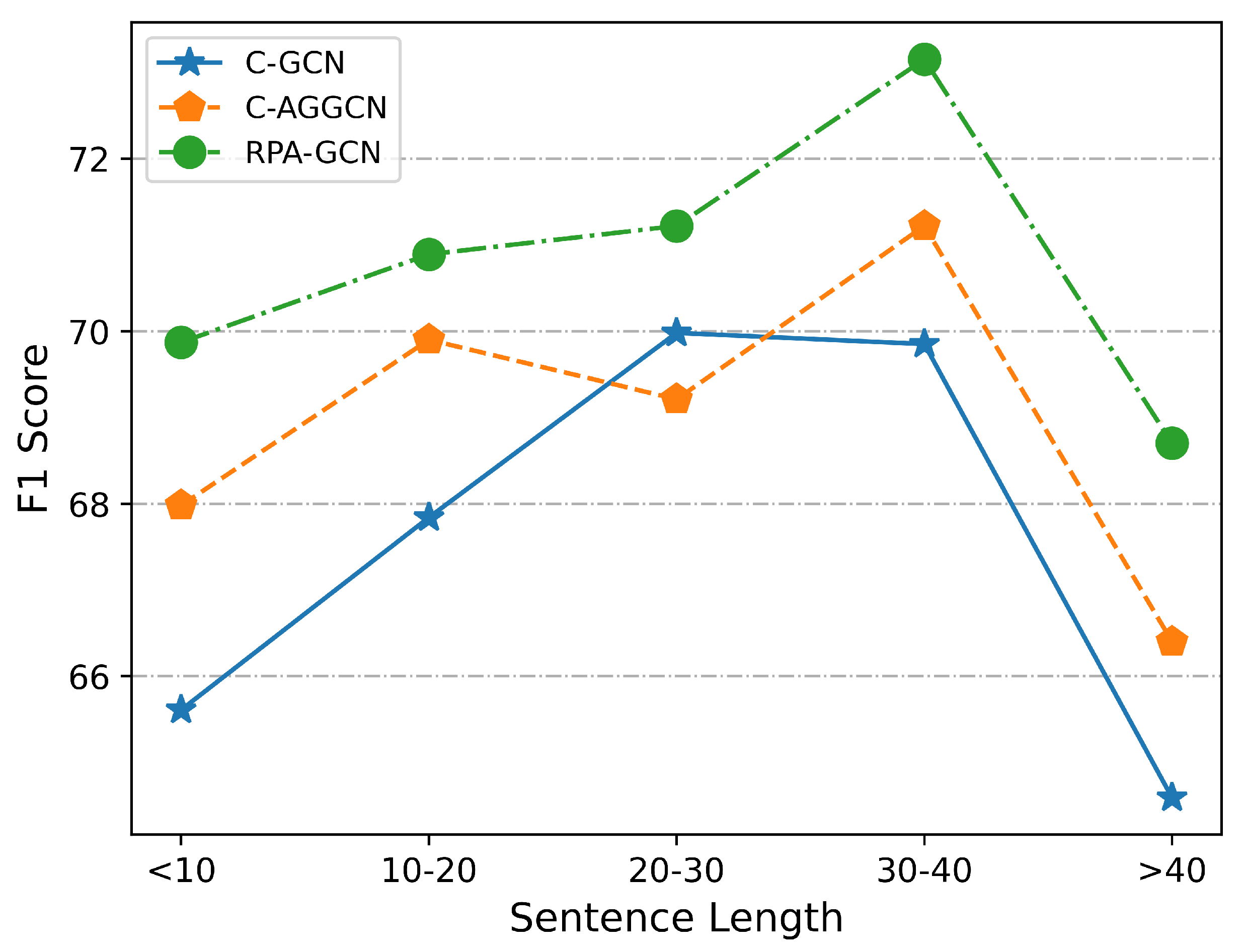

- To address overlapping relationship groups and long-span dependencies in causal-relationship extraction, we propose a novel head-and-tail annotation scheme for relationship entities. The scheme marks head and tail nodes for causal entities and relationship words, and divides the triples in the text into several small, simple sets by relationship category, which reduces the complexity of subsequent entity recognition. The head and tail pointers capture boundary information and define scoring functions, so entities of arbitrary span can be detected. All possible mentions of a causal entity in a sentence are detected iteratively, fully integrating the interaction information between entities and relationships. This offers notable advantages for complex and long-span causal sentences without elaborate feature engineering.

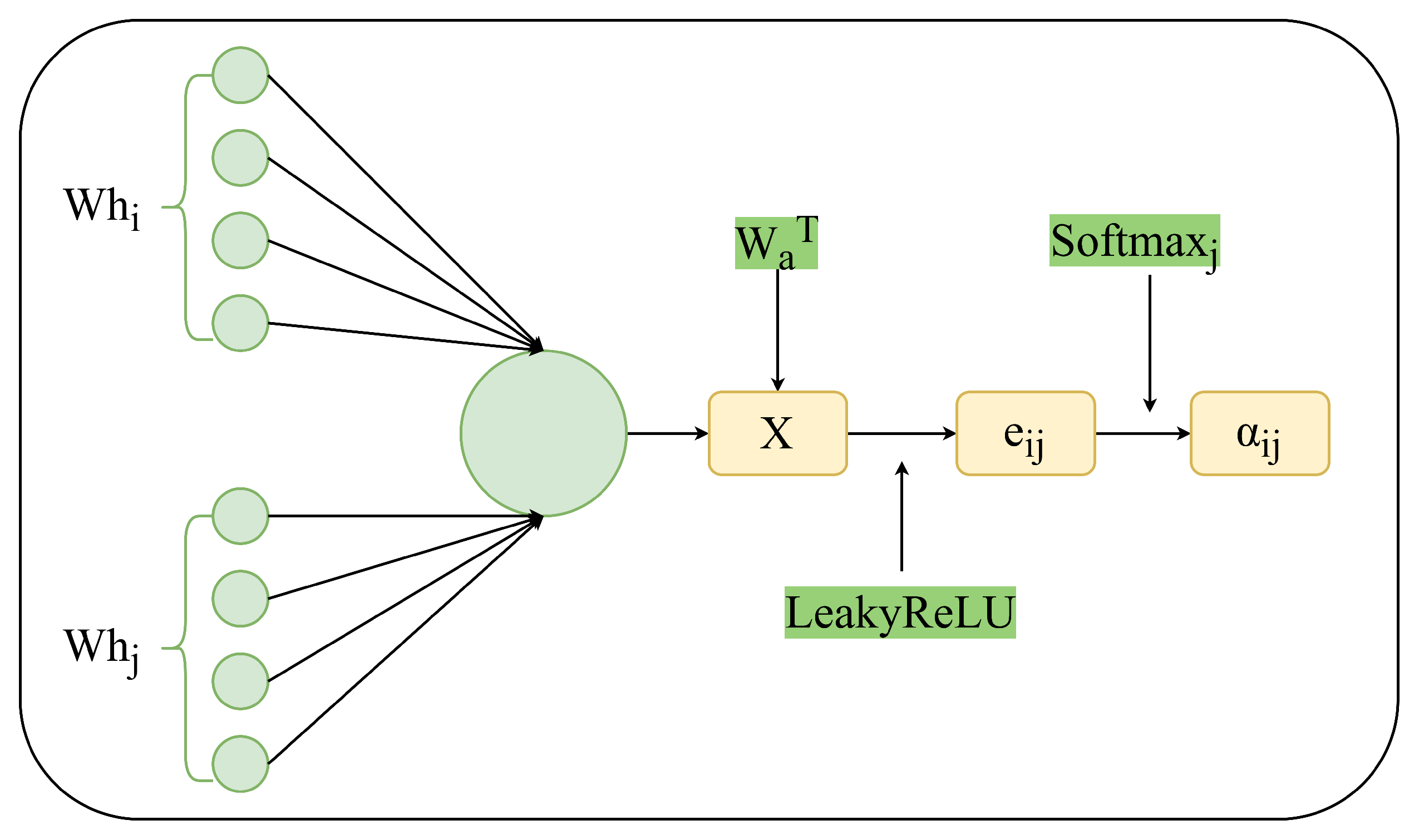

- We propose a GAT mechanism that incorporates entity-location perception. The entity-location perception strategy constrains and guides the graph-dependency semantics, so that the model learns relationships between long-span nodes and suppresses redundant interference. This better captures long-range dependencies between entities and strengthens the dependency-association features between causal pairs.

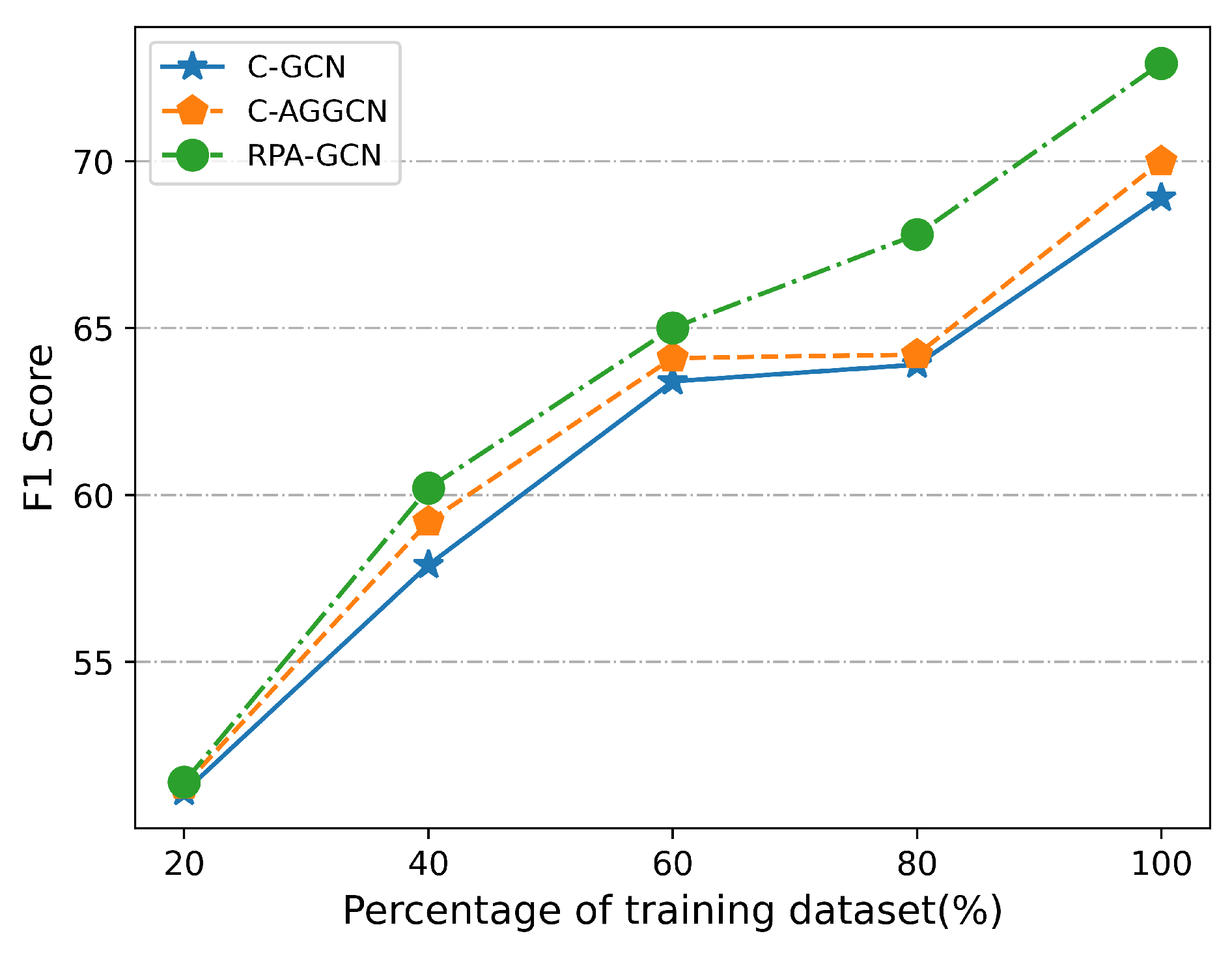

- We build a bidirectional graph convolutional network (GCN) to mine the implicit relationship features between each word pair output by the attention layer. This improves the directionality of subject and object in relationship extraction. A classifier iteratively predicts the relationship between each pair of words in a sentence, and scoring functions are then applied to identify all causal pairs in the sentence. Experiments were conducted on a sentence-level explicit-relationship-extraction corpus. The results show that the proposed method achieves the highest F1 value among the compared state-of-the-art models, and that it effectively improves extraction accuracy for complex cause–effect and long-span sentences.

2. Related Work

2.1. Causal-Sequence Labeling Method

2.2. Relationship Extraction Technology

3. Methods

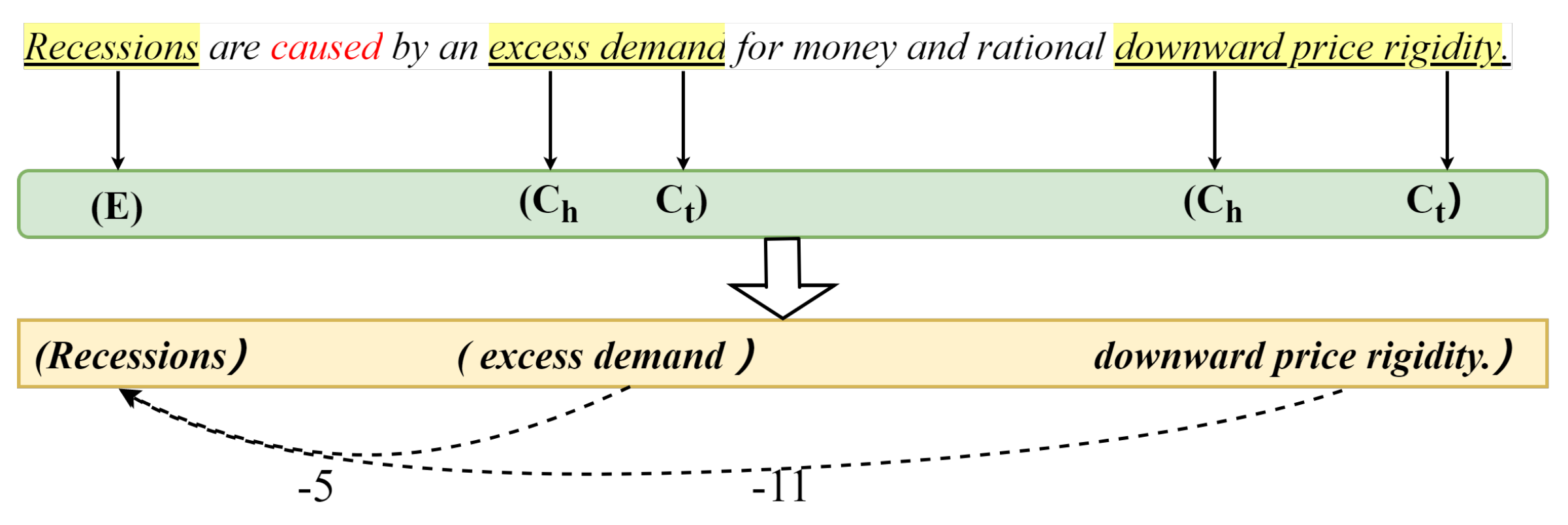

3.1. Cause–Effect Sequence Labeling Method

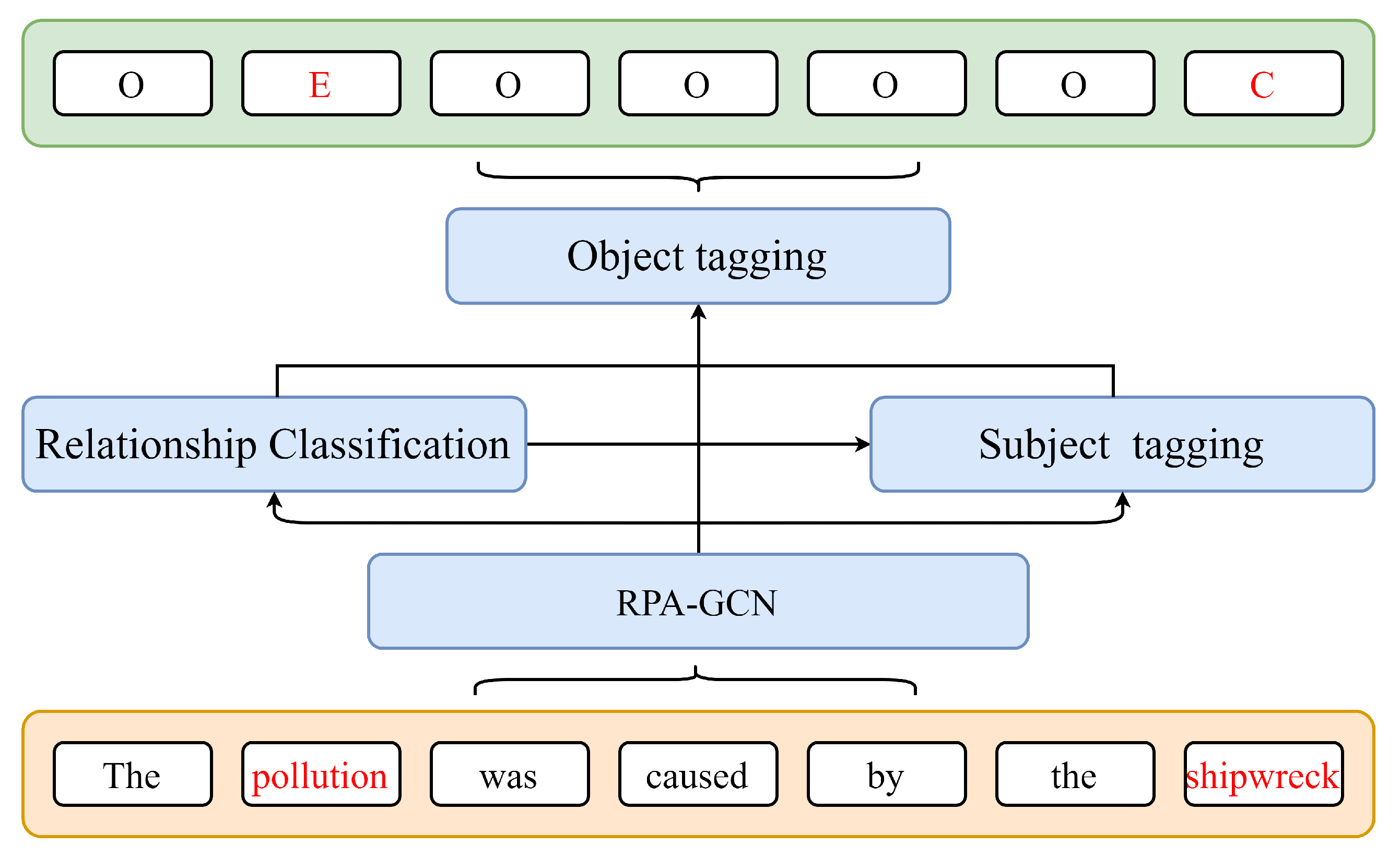
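
The head-and-tail annotation scheme outlined in the Introduction marks where each cause, effect, or relationship-word span begins and ends. The following is a minimal sketch of how such pointers might be decoded into spans. It assumes that per-token head and tail scores are already available, that a score of at least 0.5 counts as a prediction, and that each head is paired with the nearest tail at or after it. The function name `decode_spans`, the threshold, and the pairing rule are illustrative assumptions, not the scoring function used in this paper.

```python
from typing import List, Tuple


def decode_spans(head_scores: List[float],
                 tail_scores: List[float],
                 threshold: float = 0.5) -> List[Tuple[int, int]]:
    """Pair each predicted head with the nearest predicted tail at or after it.

    Hypothetical decoding rule for illustration only; the paper's own
    scoring function may differ.
    """
    heads = [i for i, s in enumerate(head_scores) if s >= threshold]
    tails = [i for i, s in enumerate(tail_scores) if s >= threshold]
    spans = []
    for head in heads:
        # First tail position that does not precede the head.
        tail = next((c for c in tails if c >= head), None)
        if tail is not None:
            spans.append((head, tail))
    return spans


tokens = ["heavy", "rain", "caused", "severe", "flooding"]
# Toy scores for the "cause" role: one span covering "heavy rain".
cause_heads = [0.9, 0.1, 0.0, 0.1, 0.0]
cause_tails = [0.1, 0.8, 0.0, 0.0, 0.1]

cause_spans = decode_spans(cause_heads, cause_tails)
cause_text = [" ".join(tokens[h:t + 1]) for h, t in cause_spans]
print(cause_text)
```

Because the head and tail positions are scored independently, a span can be arbitrarily long, which is the property the annotation scheme relies on for long-span entities. Running the decoder once per role and relationship category would yield the small per-category sets described in the Introduction.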

3.2. Causality-Extraction Model (RPA-GCN)

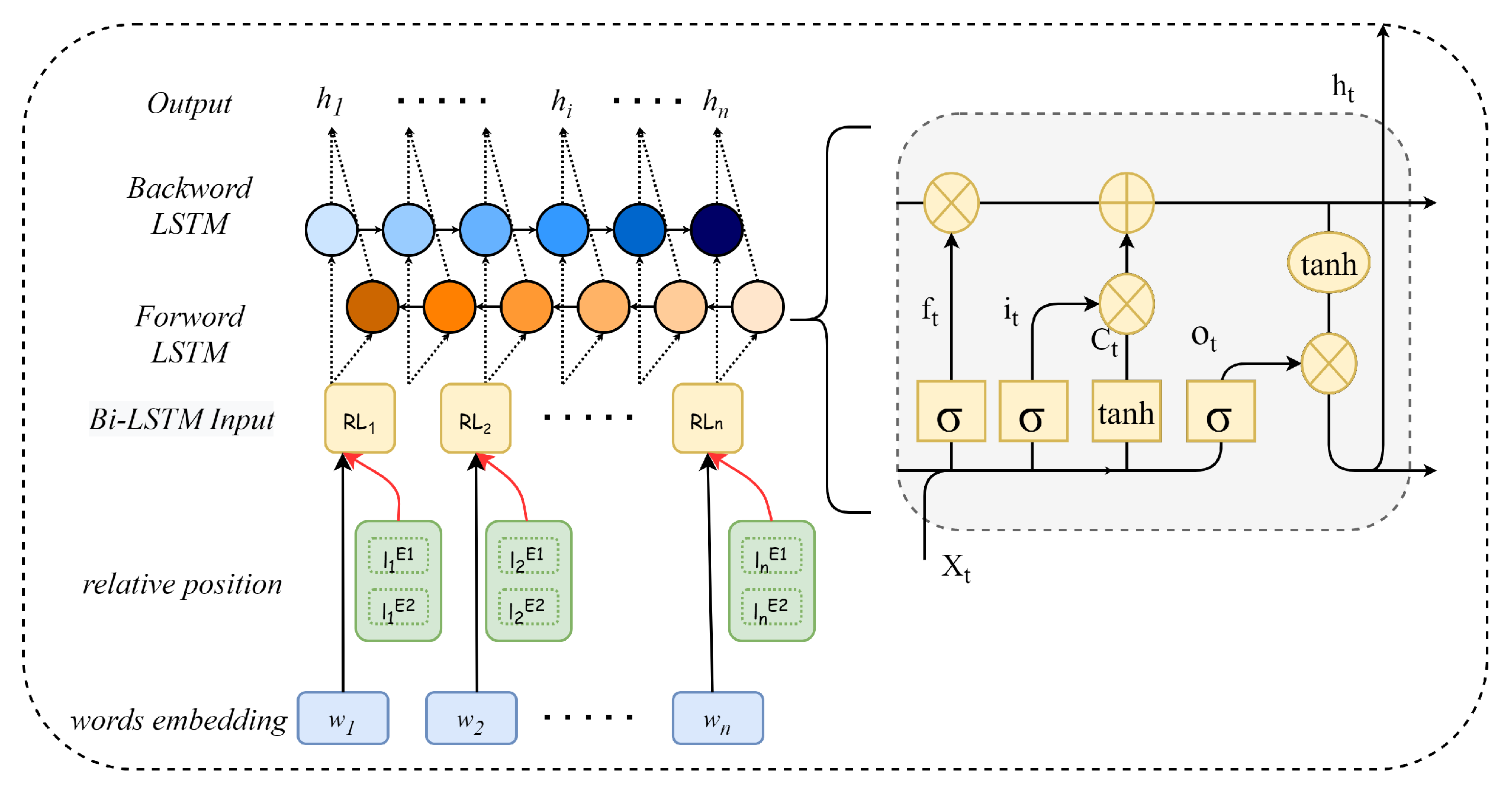

3.2.1. Bi-LSTM Layer (Fused Entity Location Information)

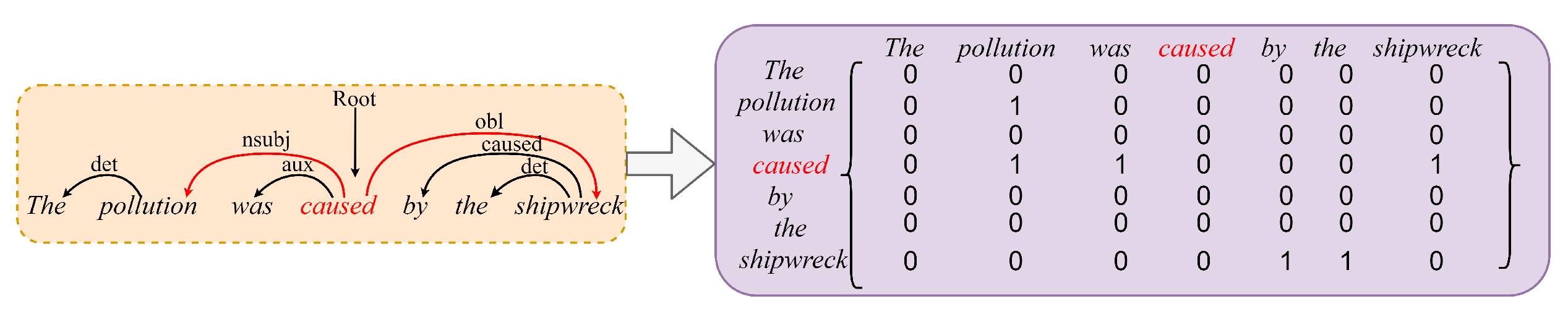

3.2.2. GAT Layer
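
As a rough illustration of how entity-location information could constrain graph attention, the sketch below computes attention weights over dependency-graph neighbours and penalizes neighbours that lie far from an entity token. The scoring rule (dot-product similarity minus a distance penalty), the `decay` parameter, and the function name `position_aware_attention` are assumptions made for this example and are not taken from the model definition.

```python
import math
from typing import List


def position_aware_attention(features: List[List[float]],
                             adjacency: List[List[int]],
                             entity_index: int,
                             decay: float = 0.5) -> List[List[float]]:
    """Attention weights over graph neighbours, biased toward the entity.

    Assumed scoring (not from the paper): dot-product similarity minus
    `decay` times the neighbour's token distance to the entity.
    """
    n = len(features)
    weights = [[0.0] * n for _ in range(n)]
    for i in range(n):
        neighbours = [j for j in range(n) if adjacency[i][j]]
        if not neighbours:
            continue
        scores = []
        for j in neighbours:
            similarity = sum(a * b for a, b in zip(features[i], features[j]))
            scores.append(similarity - decay * abs(j - entity_index))
        # Numerically stable softmax restricted to the neighbours of node i.
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        for j, e in zip(neighbours, exps):
            weights[i][j] = e / total
    return weights


# Toy 3-token sentence with a chain dependency graph 0 - 1 - 2 (self-loops included).
features = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]
adjacency = [[1, 1, 0],
             [1, 1, 1],
             [0, 1, 1]]
attn = position_aware_attention(features, adjacency, entity_index=0)
```

With identical token features, the only difference between neighbours is their distance to the entity at index 0, so the middle token attends most strongly to the entity and least to the far token. Nodes that are not connected in the graph receive zero weight.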

3.2.3. GCN Layer
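
The idea of a bidirectional GCN can be sketched as two graph-convolution passes over a directed dependency graph, one along the edges and one against them, whose outputs are concatenated. The version below is a simplified pure-Python illustration: it assumes mean aggregation with self-loops, a ReLU activation, and separate weight matrices per direction. The helper names (`gcn_direction`, `bi_gcn_layer`) and these design choices are assumptions, not the exact layer used in RPA-GCN.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of two nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def gcn_direction(adj: Matrix, feats: Matrix, weight: Matrix) -> Matrix:
    """One pass: add self-loops, mean-aggregate neighbours, project, ReLU."""
    n = len(adj)
    with_loops = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
                  for i in range(n)]
    normalised = [[v / sum(row) for v in row] for row in with_loops]
    hidden = matmul(matmul(normalised, feats), weight)
    return [[max(0.0, v) for v in row] for row in hidden]


def bi_gcn_layer(adj: Matrix, feats: Matrix,
                 w_forward: Matrix, w_backward: Matrix) -> Matrix:
    """Concatenate features aggregated along edges and against them."""
    forward = gcn_direction(adj, feats, w_forward)
    backward = gcn_direction(transpose(adj), feats, w_backward)
    return [f + b for f, b in zip(forward, backward)]


# Toy graph with a single directed edge 0 -> 1 and one feature per node.
adj = [[0.0, 1.0],
       [0.0, 0.0]]
feats = [[1.0], [3.0]]
identity = [[1.0]]
out = bi_gcn_layer(adj, feats, identity, identity)
print(out)
```

In the toy graph, node 0 sees node 1 only in the forward pass and node 1 sees node 0 only in the backward pass, so the two halves of each output vector differ. This asymmetry is what lets a downstream classifier distinguish the subject from the object of a relationship.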

4. Experiments and Analysis

4.1. Experimental Data

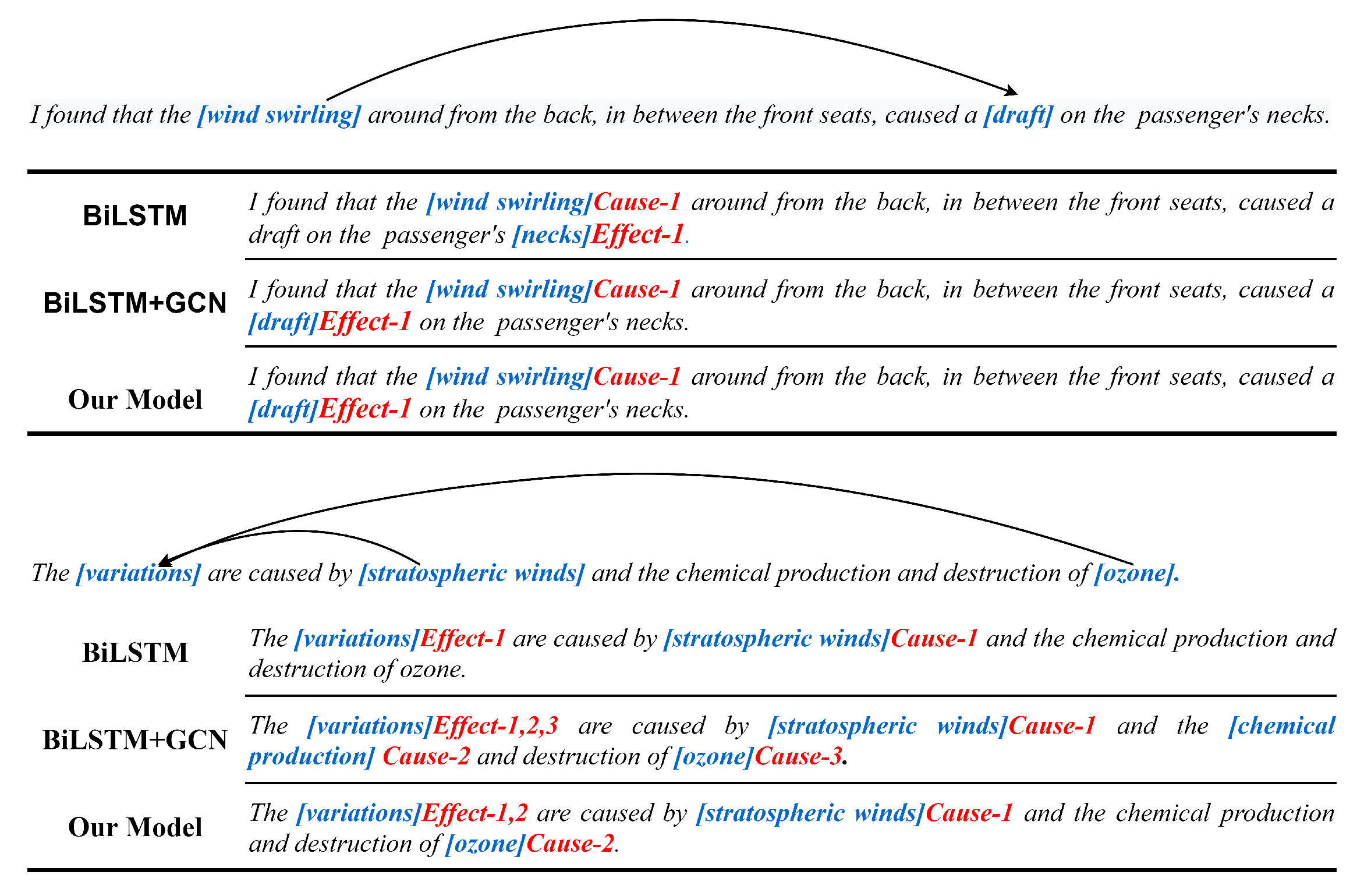

- Multiple cause–effect relationships. As in the example of Figure 9, the relationship labeling of the original corpus is limited to one cause and one effect per sentence. This ignores the multiple cause–effect relationships that may exist in most of the sentences. We therefore expand the candidate cause–effect pairs for each sentence.

- Chain causality. As in the example in Figure 4, a word can simultaneously be a cause or an effect in multiple causal pairs; herein, such a sentence is treated as an extraction of multiple sets of causal pairs. The model is more general than that of a previous study [29], which focused only on the most basic causes in chained causal sentences.
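
The two cases above can be illustrated with a small data structure. In the sketch below, a sentence annotation is a list of (cause, effect) pairs; a mention that appears as an effect in one pair and as a cause in another marks a causal chain, and a cause with several effects marks a multi-relationship sentence. The example sentence, its annotation, and the helper names are hypothetical and are not drawn from the corpus.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Pair = Tuple[str, str]  # (cause, effect)


def chain_links(pairs: List[Pair]) -> Set[str]:
    """Mentions that are an effect in one pair and a cause in another."""
    causes = {cause for cause, _ in pairs}
    effects = {effect for _, effect in pairs}
    return causes & effects


def effects_by_cause(pairs: List[Pair]) -> Dict[str, List[str]]:
    """Group effects under their cause to expose multi-relationship causes."""
    grouped = defaultdict(list)
    for cause, effect in pairs:
        grouped[cause].append(effect)
    return dict(grouped)


# Hypothetical annotation for:
# "The earthquake triggered a tsunami, which caused flooding and power outages."
pairs = [
    ("earthquake", "tsunami"),
    ("tsunami", "flooding"),
    ("tsunami", "power outages"),
]

links = chain_links(pairs)
grouped = effects_by_cause(pairs)
print(links, grouped)
```

Here "tsunami" is both the effect of "earthquake" and the cause of two further effects, so a scheme limited to one cause and one effect per sentence would lose two of the three pairs.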

4.2. Experimental Parameter Setting

4.3. Evaluation Indicators

4.4. Baseline Model Comparison

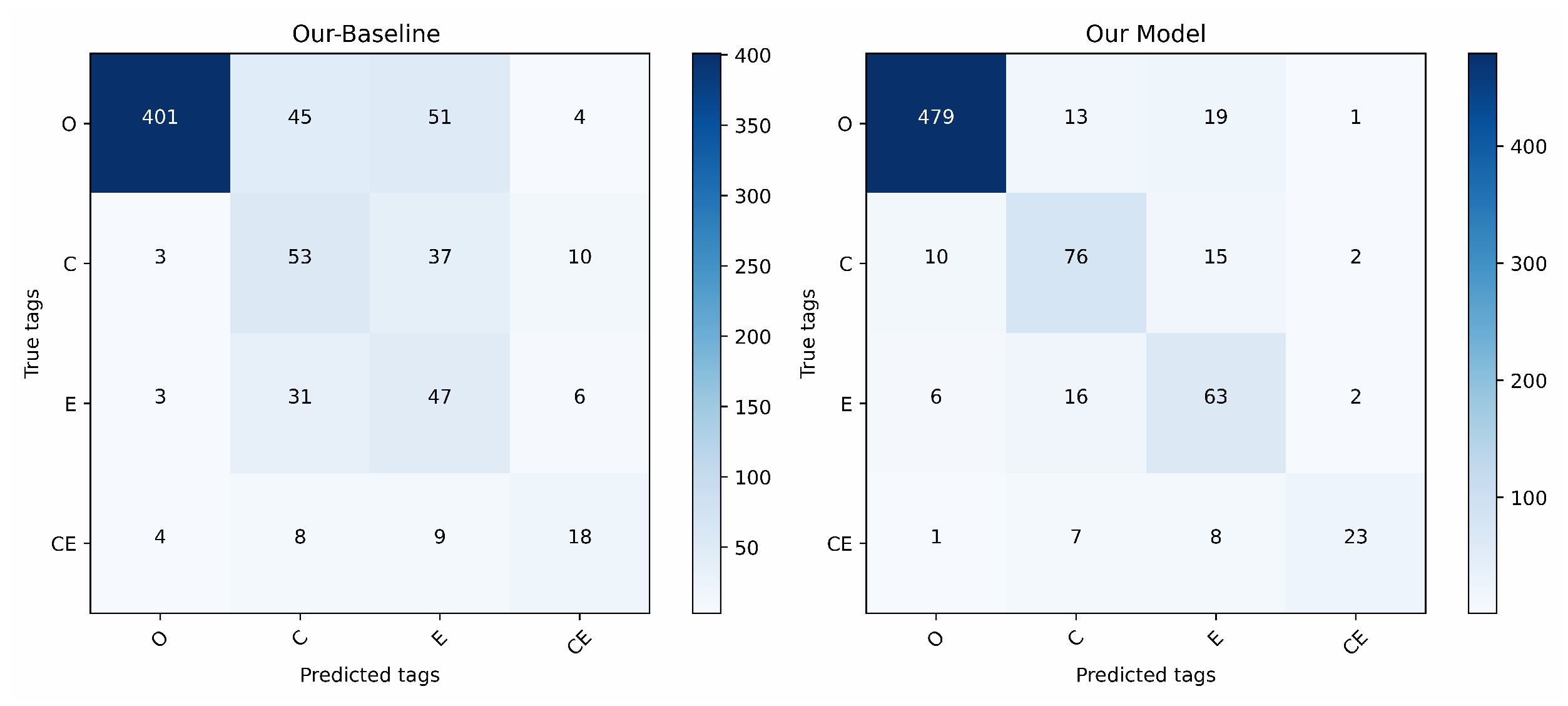

5. Analysis of Complex Cause-and-Effect Sentences

5.1. Complex Relational Data

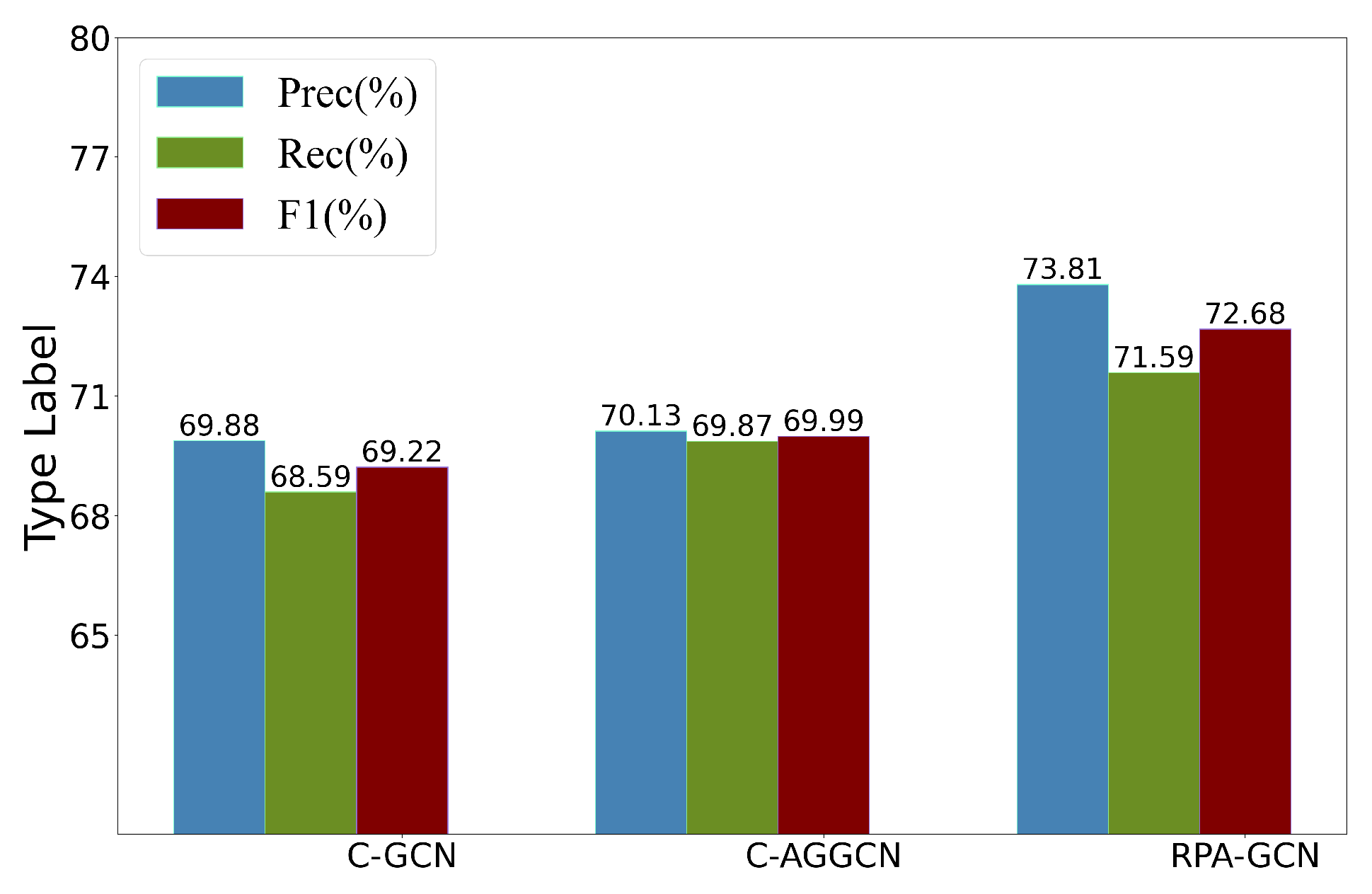

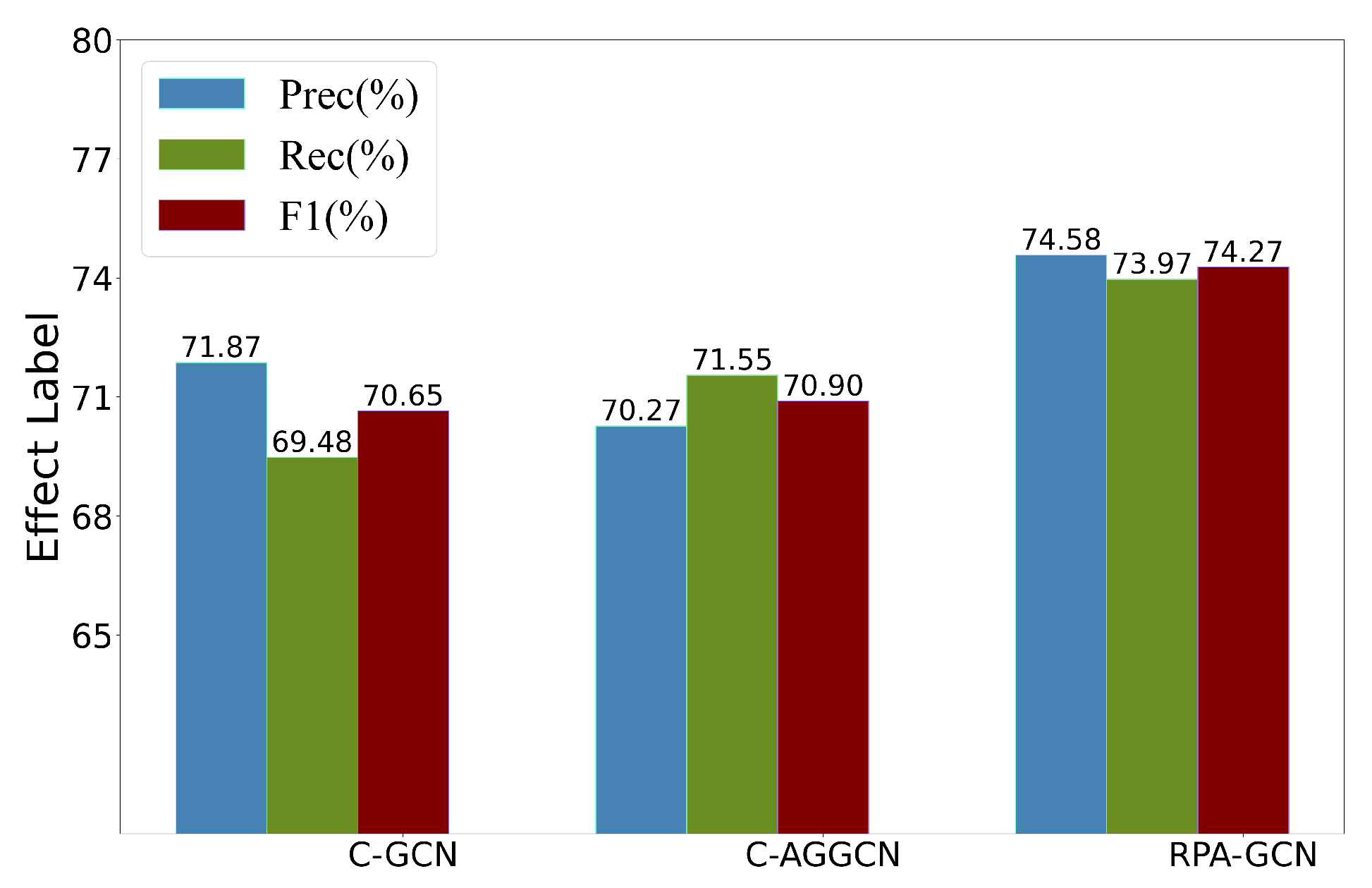

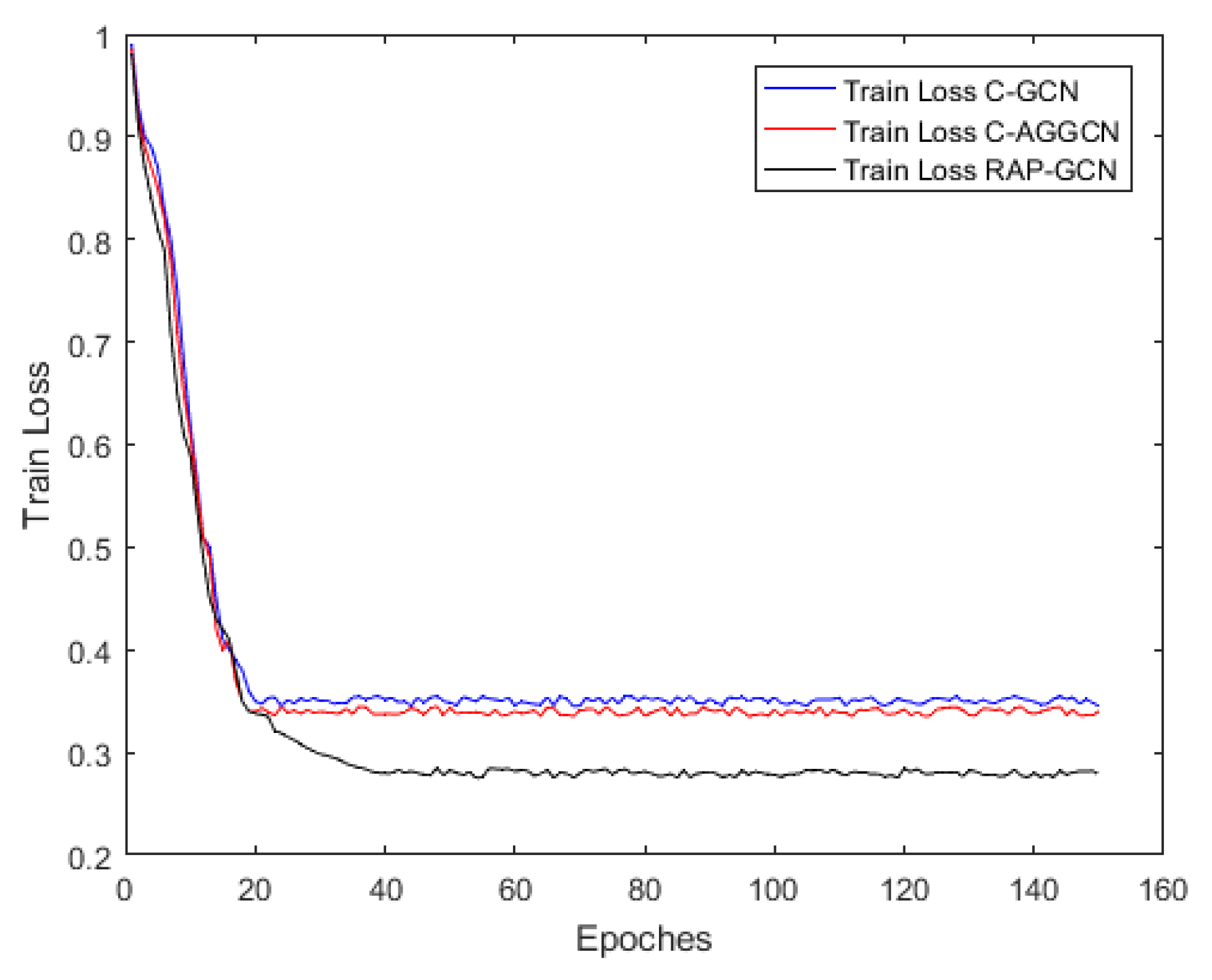

5.2. Model Performance Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RPA-GCN | relation position and attention-graph convolutional networks |
| GAT | graph attention network |
| Bi-GCN | bi-directional graph convolutional network |
| CNN | convolutional neural network |
| Bi-LSTM | bi-directional long short-term memory |

References

1. Pearl, J. The seven tools of causal inference, with reflections on machine learning. Commun. ACM 2019, 62, 54–60.

2. Zybin, S.; Bielozorova, Y. Risk-based decision-making system for information processing systems. Int. J. Inf. Technol. Comput. Sci. 2021, 13, 1–18.

3. Young, I.J.B.; Luz, S.; Lone, N. A systematic review of natural language processing for classification tasks in the field of incident reporting and adverse event analysis. Int. J. Med. Inform. 2019, 132, 103971.

4. Jones, N.D.; Azzam, T.; Wanzer, D.L.; Skousen, D.; Knight, C.; Sabarre, N. Enhancing the effectiveness of logic models. Am. J. Eval. 2020, 41, 452–470.

5. Jun, E.J.; Bautista, A.R.; Nunez, M.D.; Allen, D.C.; Tak, J.H.; Alvarez, E.; Basso, M.A. Causal role for the primate superior colliculus in the computation of evidence for perceptual decisions. Nat. Neurosci. 2021, 24, 1121–1131.

6. Dasgupta, T.; Saha, R.; Dey, L.; Naskar, A. Automatic extraction of causal relations from text using linguistically informed deep neural networks. In Proceedings of the 19th Annual SIGdial Meeting on Discourse and Dialogue, Melbourne, Australia, 12–14 July 2018.

7. Fu, J.; Liu, Z.; Liu, W.; Guo, Q. Using dual-layer CRFs for event causal relation extraction. IEICE Electron. Express 2011, 8, 306–310.

8. Wei, Z.; Su, J.; Wang, Y.; Tian, Y.; Chang, Y. A novel cascade binary tagging framework for relational triple extraction. arXiv 2019, arXiv:1909.03227.

9. Garcia, D. COATIS, an NLP system to locate expressions of actions connected by causality links. In International Conference on Knowledge Engineering and Knowledge Management; Springer: Berlin/Heidelberg, Germany, 1997; pp. 347–352.

10. Radinsky, K.; Davidovich, S.; Markovitch, S. Learning causality for news events prediction. In Proceedings of the 21st International Conference on World Wide Web, Lyon, France, 16–20 April 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 909–918.

11. Zhao, S.; Liu, T.; Zhao, S.; Chen, Y.; Nie, J.-Y. Event causality extraction based on connectives analysis. Neurocomputing 2016, 173, 1943–1950.

12. Kim, H.D.; Castellanos, M.; Hsu, M. Mining causal topics in text data: Iterative topic modeling with time series feedback. In Proceedings of the 22nd ACM International Conference on Information and Knowledge Management, San Francisco, CA, USA, 27 October–1 November 2013.

13. Lin, Z.; Kan, M.-Y.; Ng, H.T. Recognizing implicit discourse relations in the Penn Discourse Treebank. In Proceedings of the 2009 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–7 August 2009.

14. Wang, L.; Cao, Z.; de Melo, G.; Liu, Z. Relation classification via multi-level attention CNNs. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016.

15. Li, P.; Mao, K. Knowledge-oriented convolutional neural network for causal relation extraction from natural language texts. Expert Syst. Appl. 2019, 115, 512–523.

16. Xu, Y.; Mou, L.; Li, G.; Chen, Y.; Peng, H.; Jin, Z. Classifying relations via long short term memory networks along shortest dependency paths. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015.

17. Zhao, S.; Wang, Q.; Massung, S.; Qin, B.; Liu, T.; Wang, B.; Zhai, C. Constructing and embedding abstract event causality networks from text snippets. In Proceedings of the Tenth ACM International Conference on Web Search and Data Mining, Cambridge, UK, 6–10 February 2017; pp. 335–344.

18. Li, Z.; Li, Q.; Zou, X.; Ren, J. Causality extraction based on self-attentive BiLSTM-CRF with transferred embeddings. Neurocomputing 2021, 423, 207–219.

19. Zhang, Y.; Qi, P.; Manning, C.D. Graph convolution over pruned dependency trees improves relation extraction. arXiv 2018, arXiv:1809.10185.

20. Xu, J.; Zuo, W.; Liang, S.; Wang, Y. Causal relation extraction based on graph attention network. Comput. Res. Dev. 2020, 57, 159. (In Chinese)

21. Dai, D.; Xiao, X.; Lyu, Y.; Dou, S.; She, Q.; Wang, H. Joint extraction of entities and overlapping relations using position-attentive sequence labeling. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019.

22. Dixit, K.; Al-Onaizan, Y. Span-level model for relation extraction. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019.

23. Zhang, Y.; Zhong, V.; Chen, D.; Angeli, G.; Manning, C.D. Position-aware attention and supervised data improve slot filling. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017.

24. de Marneffe, M.-C.; Manning, C.D. Stanford Typed Dependencies Manual; Technical report; Stanford University: Stanford, CA, USA, 2008.

25. Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

26. Saha, S.; Kumar, K.A. Emoji Prediction Using Emerging Machine Learning Classifiers for Text-based Communication. J. Math. Sci. Comput. 2022, 1, 37–43.

27. Hendrickx, I.; Kim, S.N.; Kozareva, Z.; Nakov, P.; Séaghdha, D.Ó.; Padó, S.; Pennacchiotti, M.; Romano, L.; Szpakowicz, S. SemEval-2010 task 8: Multi-way classification of semantic relations between pairs of nominals. arXiv 2019, arXiv:1911.10422.

28. Hidey, C.; McKeown, K. Identifying causal relations using parallel Wikipedia articles. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; pp. 1424–1433.

29. Zheng, Y.; Zuo, X.; Zuo, W.; Liang, S.; Wang, Y. Bi-LSTM+GCN Causal Relationship Extraction Based on Time Relationship. J. Jilin Univ. (Sci. Ed.) 2021, 59, 643–648. (In Chinese)

30. Manning, C.; Surdeanu, M.; Bauer, J.; Finkel, J.; Bethard, S.; McClosky, D. The Stanford CoreNLP natural language processing toolkit. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Baltimore, MD, USA, 23–24 June 2014.

31. Guo, Z.; Zhang, Y.; Lu, W. Attention guided graph convolutional networks for relation extraction. arXiv 2019, arXiv:1906.07510.

32. Huang, Z.; Xu, W.; Yu, K. Bidirectional LSTM-CRF models for sequence tagging. arXiv 2015, arXiv:1508.01991.

| Model | F1 (%) |
|---|---|
| LR [23] | 82.2 |
| SDP-LSTM [16] | 83.7 |
| PA-LSTM [23] | 82.7 |
| C-GCN [19] | 84.8 |
| C-AGGCN [31] | 85.1 |
| RPA-GCN (this paper) | 85.67 |
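
For readers who want to relate the F1 values in the comparison table to extraction output, the sketch below computes precision, recall, and F1 over sets of (cause, effect) pairs. It assumes exact-match scoring at the pair level, which may differ from the matching criterion used in the experiments; the function name `pair_prf1` and the toy pairs are hypothetical.

```python
from typing import Set, Tuple

Pair = Tuple[str, str]  # (cause, effect)


def pair_prf1(gold: Set[Pair], predicted: Set[Pair]) -> Tuple[float, float, float]:
    """Precision, recall, and F1 under exact pair matching (an assumption)."""
    true_positives = len(gold & predicted)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Toy example: three gold pairs, four predictions, two of them correct.
gold = {("rain", "flood"), ("flood", "damage"), ("storm", "outage")}
predicted = {("rain", "flood"), ("flood", "damage"), ("wind", "fire"), ("heat", "drought")}

precision, recall, f1 = pair_prf1(gold, predicted)
print(f"P={precision:.3f} R={recall:.3f} F1={f1:.3f}")
```

F1 is the harmonic mean of precision and recall, so a model that predicts many spurious pairs or misses many gold pairs is penalized on the same scale.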

| Model | F1 (%) |
|---|---|
| Final Model | 72.68 |
| - Position aware | 70.88 |
| - Graph attention networks | 70.41 |
| - Bi-directional GCN | 69.21 |
| - All of the above | 67.58 |
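
The ablation results are easier to compare as absolute drops from the final model. The short script below recomputes those differences from the values in the ablation table; the dictionary keys are shortened labels chosen for this example.

```python
# F1 scores copied from the ablation table.
FULL_MODEL_F1 = 72.68
ablations = {
    "position aware": 70.88,
    "graph attention networks": 70.41,
    "bi-directional GCN": 69.21,
    "all of the above": 67.58,
}

# Absolute F1 drop when each component is removed, rounded to two decimals.
drops = {name: round(FULL_MODEL_F1 - f1, 2) for name, f1 in ablations.items()}

for name, drop in drops.items():
    print(f"- {name}: {drop:.2f}")
```

The drops are 1.80, 2.27, 3.47, and 5.10 points, respectively. Removing the bi-directional GCN costs the most among the single components, and the three individual drops sum to more than the combined drop, which suggests that the components contribute partly overlapping information.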

| Dataset | Chain of Cause and Effect | Multi-Relationship Causation |
|---|---|---|
| All | 244 | 65 |
| Train set | 147 | 39 |
| Validation set | 49 | 13 |
| Test set | 48 | 13 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Y.; Wan, W.; Hu, J.; Wang, Y.; Huang, B. Complex Causal Extraction of Fusion of Entity Location Sensing and Graph Attention Networks. Information 2022, 13, 364. https://doi.org/10.3390/info13080364
