Computational Offloading of Service Workflow in Mobile Edge Computing

Abstract

1. Introduction

- We study the offloading and scheduling of workflow tasks in a mobile edge computing (MEC) scenario with multiple mobile devices (MDs) and multiple virtual machines (VMs). We propose a workflow model based on a directed acyclic graph (DAG) that captures the execution order and execution location of workflow tasks.

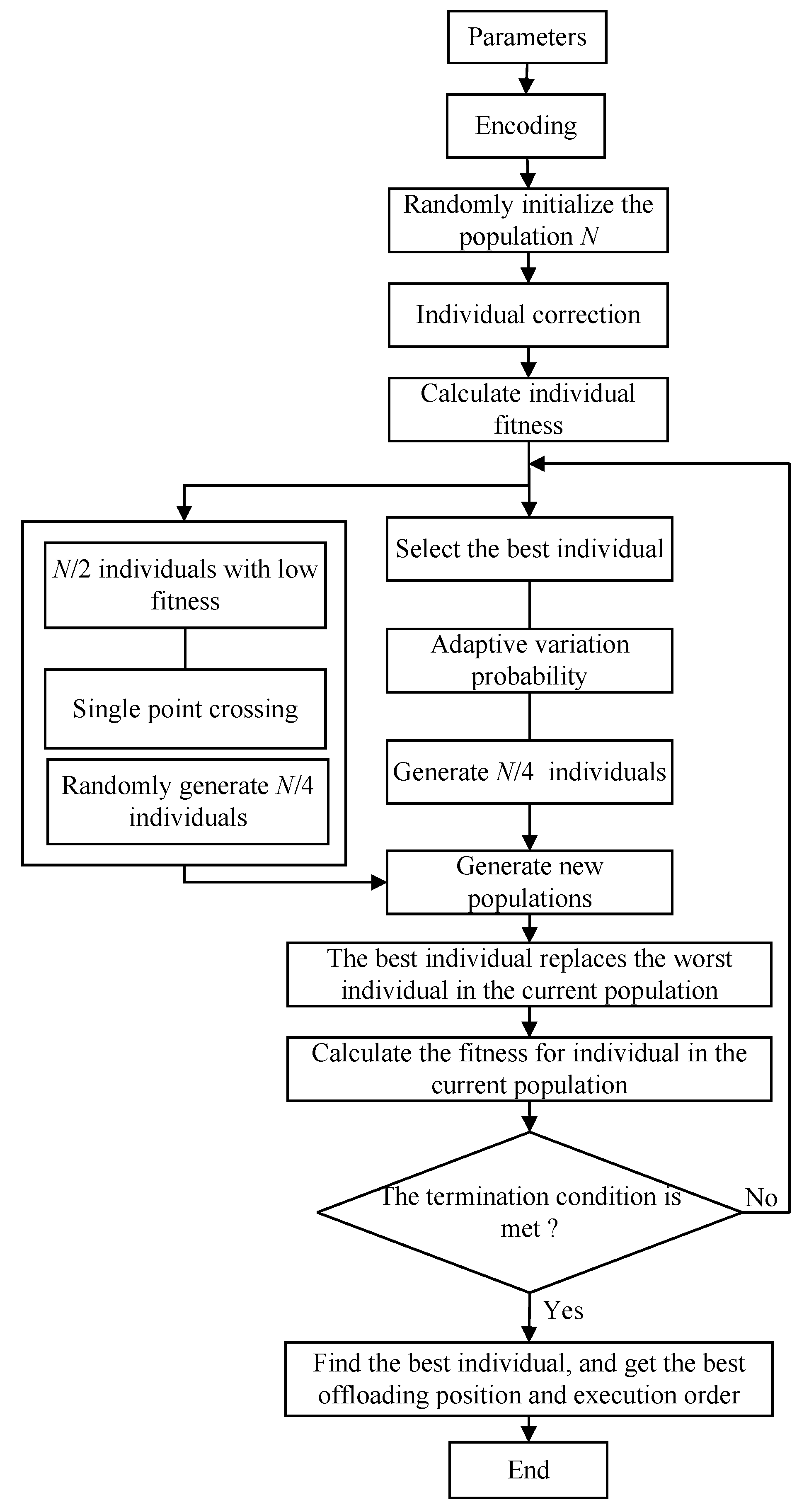

- We propose a workflow scheduling strategy based on an adaptive genetic algorithm. In the genetic algorithm, an individual is defined as an offloading schedule consisting of the execution order and execution location of the workflow tasks. The optimal scheduling strategy for workflows in multi-user and multi-task scenarios is finally obtained through individual correction, competition for survival, selection, crossover, and mutation operations.

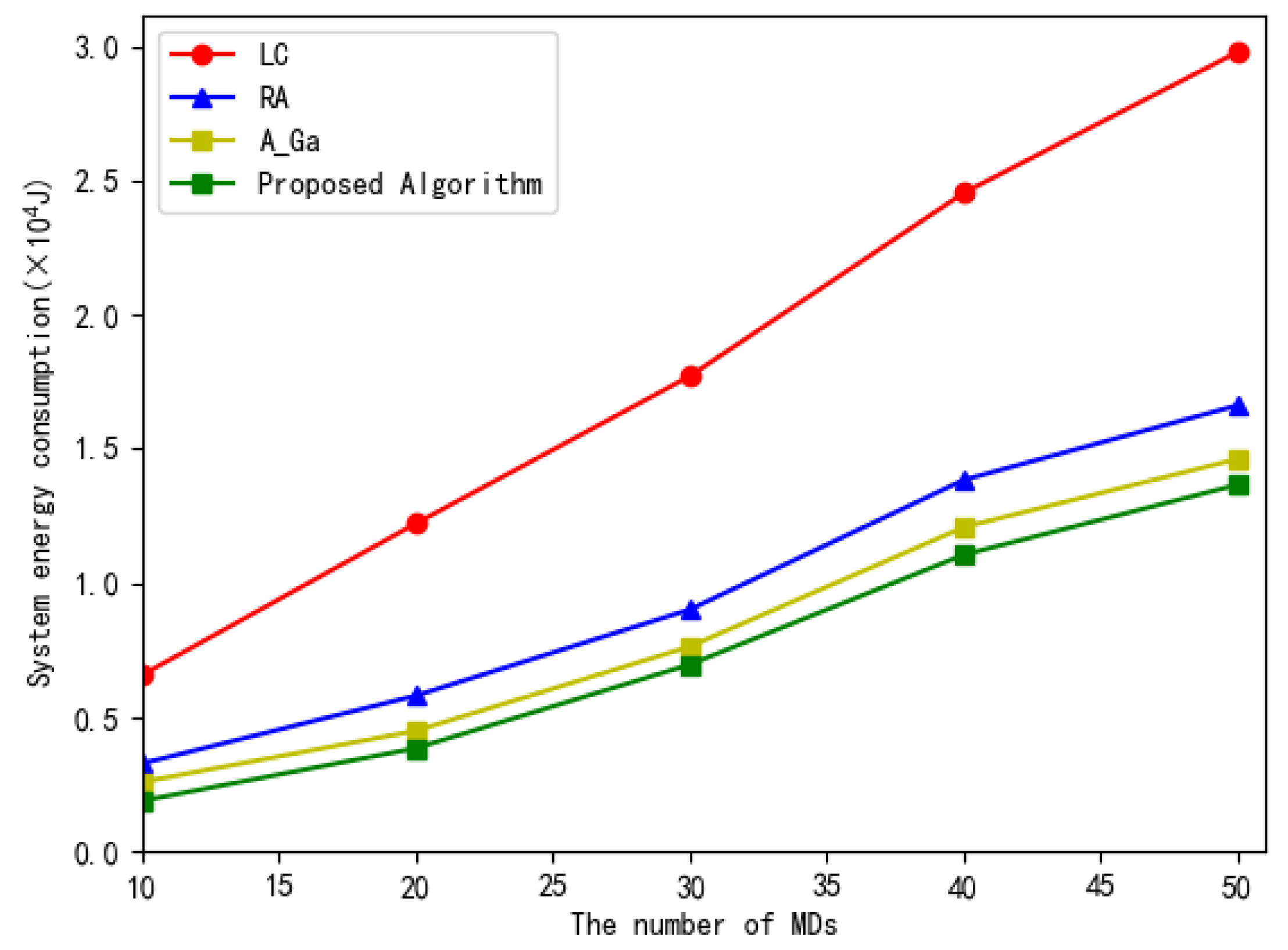

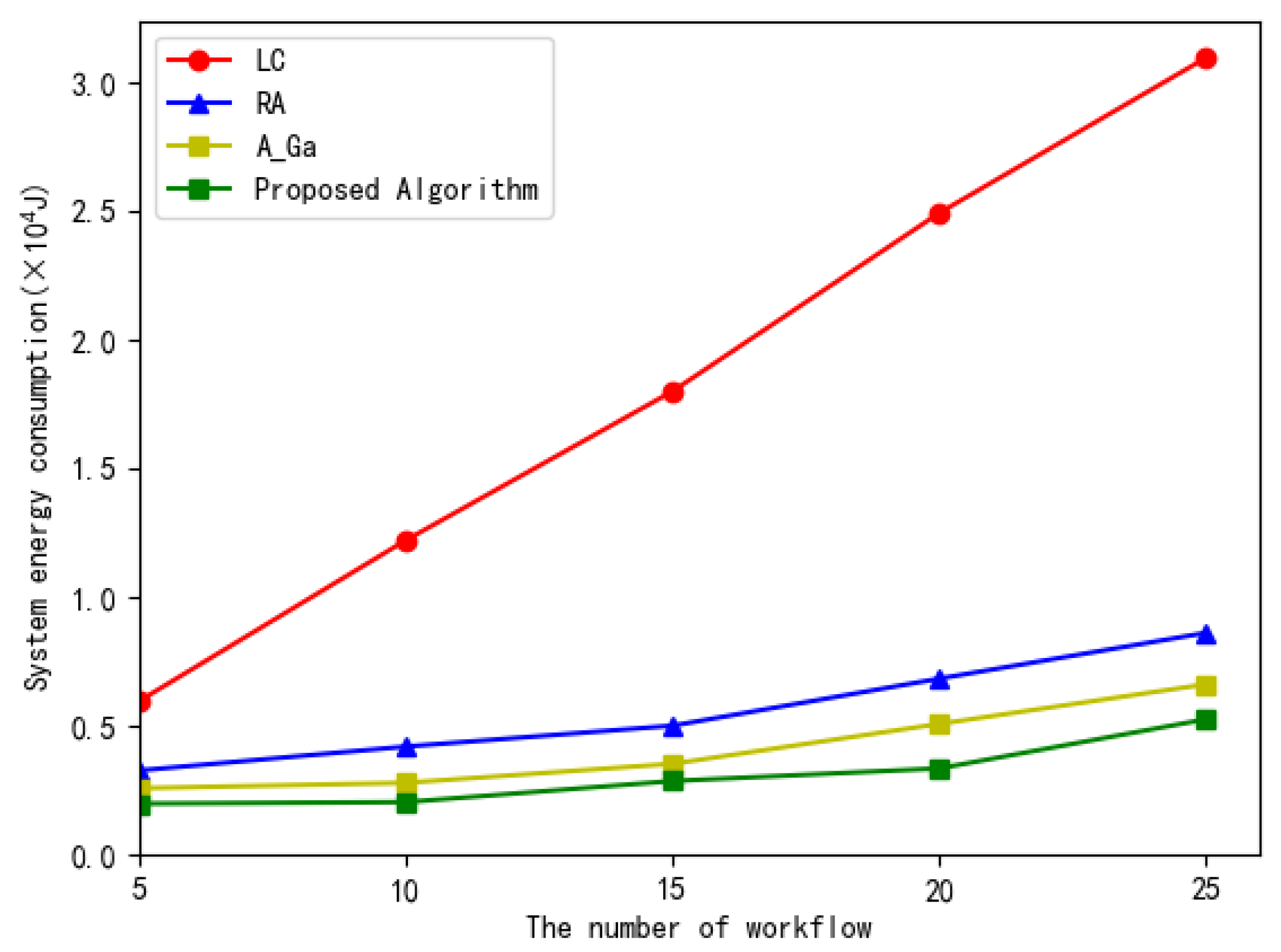

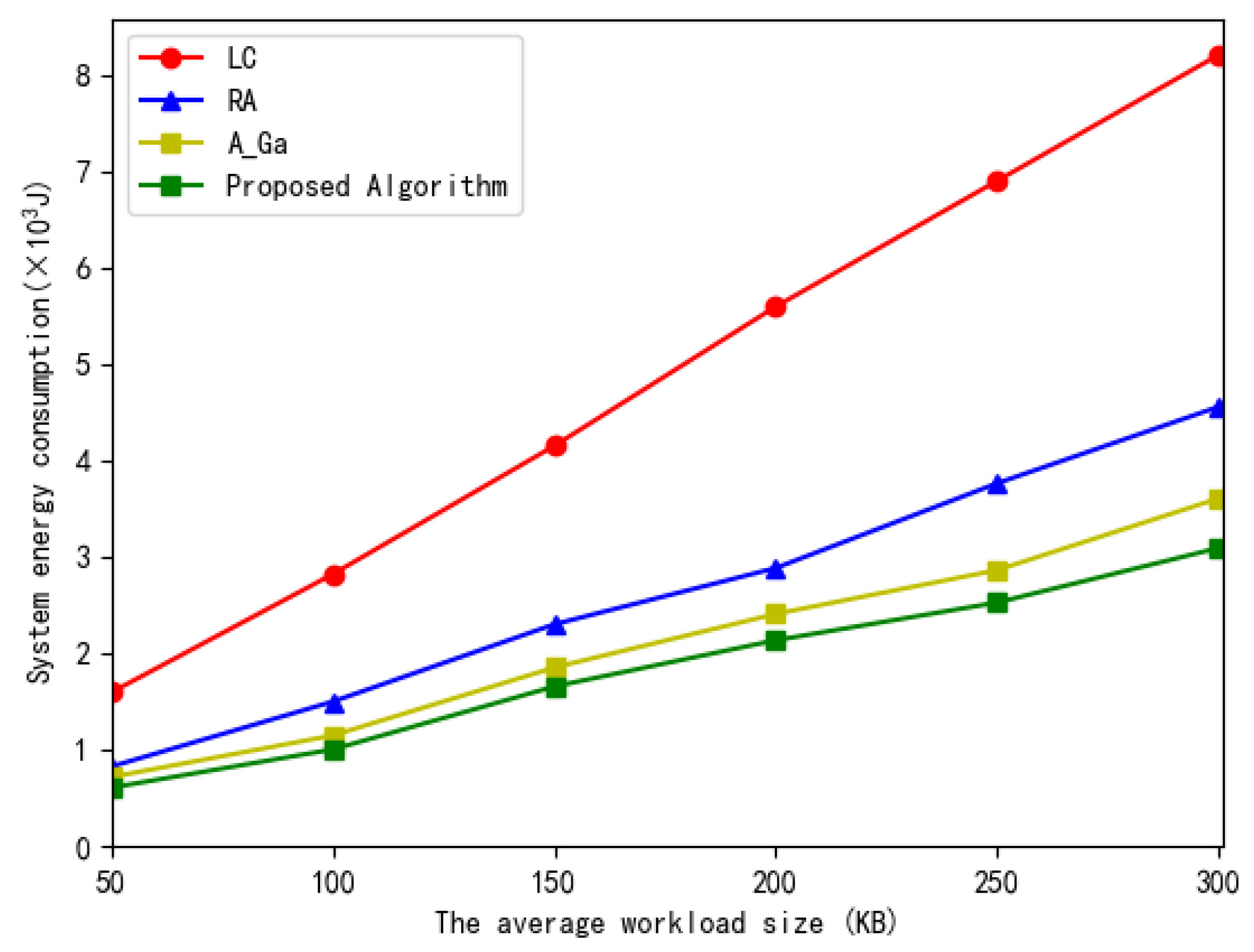

- The simulation results show that, compared with benchmark methods such as local computing and random offloading, the proposed method can achieve an optimal task schedule for multi-user workflows that minimizes the total energy consumption of the system.
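As a minimal sketch of the workflow model and the individual described in the contributions above, the following Python fragment represents a workflow as a DAG and an individual as a pair of lists: an execution order and an execution location per subtask. The names `Workflow`, `random_order`, `is_valid_order`, and `random_individual`, and the convention that location 0 means local execution while 1..M means VM m, are illustrative assumptions and not notation taken from the paper.

```python
import random
from dataclasses import dataclass


@dataclass
class Workflow:
    """A workflow DAG: subtasks are 0..n_tasks-1, edges are (predecessor, successor)."""
    n_tasks: int
    edges: list[tuple[int, int]]

    def predecessors(self, task: int) -> set[int]:
        return {u for u, v in self.edges if v == task}


def random_order(wf: Workflow, rng: random.Random) -> list[int]:
    """Random topological order: repeatedly pick a subtask whose predecessors are done.

    Assumes the graph is acyclic; a cycle would leave no ready subtask.
    """
    done: set[int] = set()
    order: list[int] = []
    while len(order) < wf.n_tasks:
        ready = [t for t in range(wf.n_tasks)
                 if t not in done and wf.predecessors(t) <= done]
        task = rng.choice(ready)
        order.append(task)
        done.add(task)
    return order


def is_valid_order(wf: Workflow, order: list[int]) -> bool:
    """True if `order` is a permutation of the subtasks that respects every edge."""
    if sorted(order) != list(range(wf.n_tasks)):
        return False
    pos = {t: i for i, t in enumerate(order)}
    return all(pos[u] < pos[v] for u, v in wf.edges)


def random_individual(wf: Workflow, n_vms: int, rng: random.Random):
    """An individual = (execution order, execution location per subtask).

    Assumed encoding: location 0 is the MD itself, 1..n_vms is a VM index.
    """
    order = random_order(wf, rng)
    location = [rng.randint(0, n_vms) for _ in range(wf.n_tasks)]
    return order, location
```

Keeping the order and the location in two separate lists lets the genetic operators act on each part independently, which matches the separate crossover and mutation operators listed for order and offloading position.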

2. Related Work

3. System Model

3.1. Workflow Task Model

3.2. Communication Model

3.3. Computation Model

- (1) Local computing: Each MD k has a local computation capability (its CPU frequency). When a subtask is executed locally, the local computation time is the number of CPU cycles the subtask requires divided by this capability. The energy consumption of computing the subtask is the number of required CPU cycles multiplied by the energy consumption in each CPU cycle, which is governed by an energy consumption factor related to the CPU chip architecture [20].

- (2) Offloading computing: If a subtask is offloaded to a VM for computing, the total execution time consists of two parts: the transmission time for the MD to offload the subtask to the MEC server, and the computation time on the VM. The transmission time is the data size of the subtask divided by the uplink transmission rate of MD k, and the uplink energy consumption is the transmission power of MD k multiplied by this transmission time. The computation time of the subtask on the VM is its required CPU cycles divided by the CPU frequency of VM m. The total execution time for offloading is therefore the sum of the transmission time and the VM computation time.

Similarly, when a subtask is offloaded to a VM, the energy consumption of the MD includes the transmission energy consumption and the circuit loss of the local device. As in [21], we consider only the energy consumption for offloading and ignore the circuit loss, so the energy consumption of the MD for an offloaded subtask equals its uplink transmission energy.

The execution positions of all subtasks form an execution position set. Since a subtask can be performed by only one VM, an offloading decision is used to indicate whether the subtask is offloaded to VM m for computation or not. The total time and energy consumption of a subtask for computation therefore take the local values when the subtask runs on the MD and the offloading values when it runs on a VM.

In the workflow, consider a subtask and its immediate successor. When the subtask finishes computing, its output data is transmitted to the successor. Assume that the subtask is computed locally and its successor is computed on VM m. The output data transmission time is then the output data size divided by the transmission rate of MD k, and the transmission energy consumption is the transmission power of MD k multiplied by this time.

Conversely, when the subtask is executed on VM m and its successor is executed locally, the local device needs to download the data from the VM. The download energy consumption is the downloading power of MD k multiplied by the data downloading time.

The total computation time of the workflow on MD k is the sum of the computation time and the transmission time of the data exchanged between associated subtasks. The total energy consumption of the workflow on MD k is the sum of the local computing energy consumption, the offloading energy consumption, and the energy consumption for data transmission between associated subtasks.
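The per-subtask costs in the computation model above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function and parameter names are hypothetical, the energy per CPU cycle is passed in as a given coefficient rather than derived from the chip factor, the transmission rate is taken as an input because the communication model is not reproduced here, and all numbers in the example are made up.

```python
def local_cost(cycles, f_local, energy_per_cycle):
    """Time (s) and MD energy (J) for executing a subtask on the MD itself."""
    return cycles / f_local, energy_per_cycle * cycles


def offload_cost(data_bits, cycles, uplink_rate, p_tx, f_vm):
    """Time (s) and MD energy (J) for offloading a subtask to a VM.

    Time is upload time plus VM computation time. The MD pays only for
    the upload, because circuit loss is ignored in the text.
    """
    t_up = data_bits / uplink_rate
    t_vm = cycles / f_vm
    return t_up + t_vm, p_tx * t_up


def transfer_cost(output_bits, rate, power):
    """Time (s) and MD energy (J) for moving output data between a subtask
    and its successor when one runs locally and the other on a VM."""
    t = output_bits / rate
    return t, power * t


def subtask_cost(offloaded, data_bits, cycles, f_local, energy_per_cycle,
                 uplink_rate, p_tx, f_vm):
    """Select the offloading or the local cost according to the decision."""
    if offloaded:
        return offload_cost(data_bits, cycles, uplink_rate, p_tx, f_vm)
    return local_cost(cycles, f_local, energy_per_cycle)


# Illustrative values only (assumptions, not the paper's settings):
# a 100 kB subtask needing 1e9 cycles, a 1 GHz MD, a 4 GHz VM,
# an 8 Mbit/s uplink and 0.6 W transmission power.
example = dict(data_bits=800_000, cycles=1e9, f_local=1e9,
               energy_per_cycle=1e-9, uplink_rate=8e6, p_tx=0.6, f_vm=4e9)
print("local  :", subtask_cost(False, **example))
print("offload:", subtask_cost(True, **example))
```

With these made-up numbers offloading is both faster and cheaper for the MD, but the balance shifts as the data size grows relative to the required cycles, which is the trade-off the scheduling strategy has to resolve per subtask.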

4. Problem Formulation

4.1. Algorithm Implementation

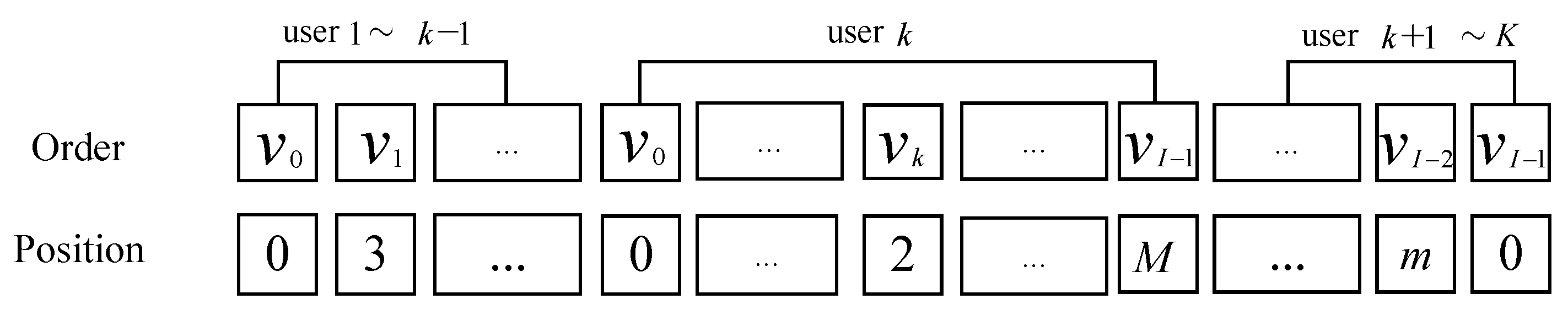

4.1.1. Encoding

4.1.2. Population Initialization and Individual Correction

4.1.3. Select

4.1.4. Competition for Survival

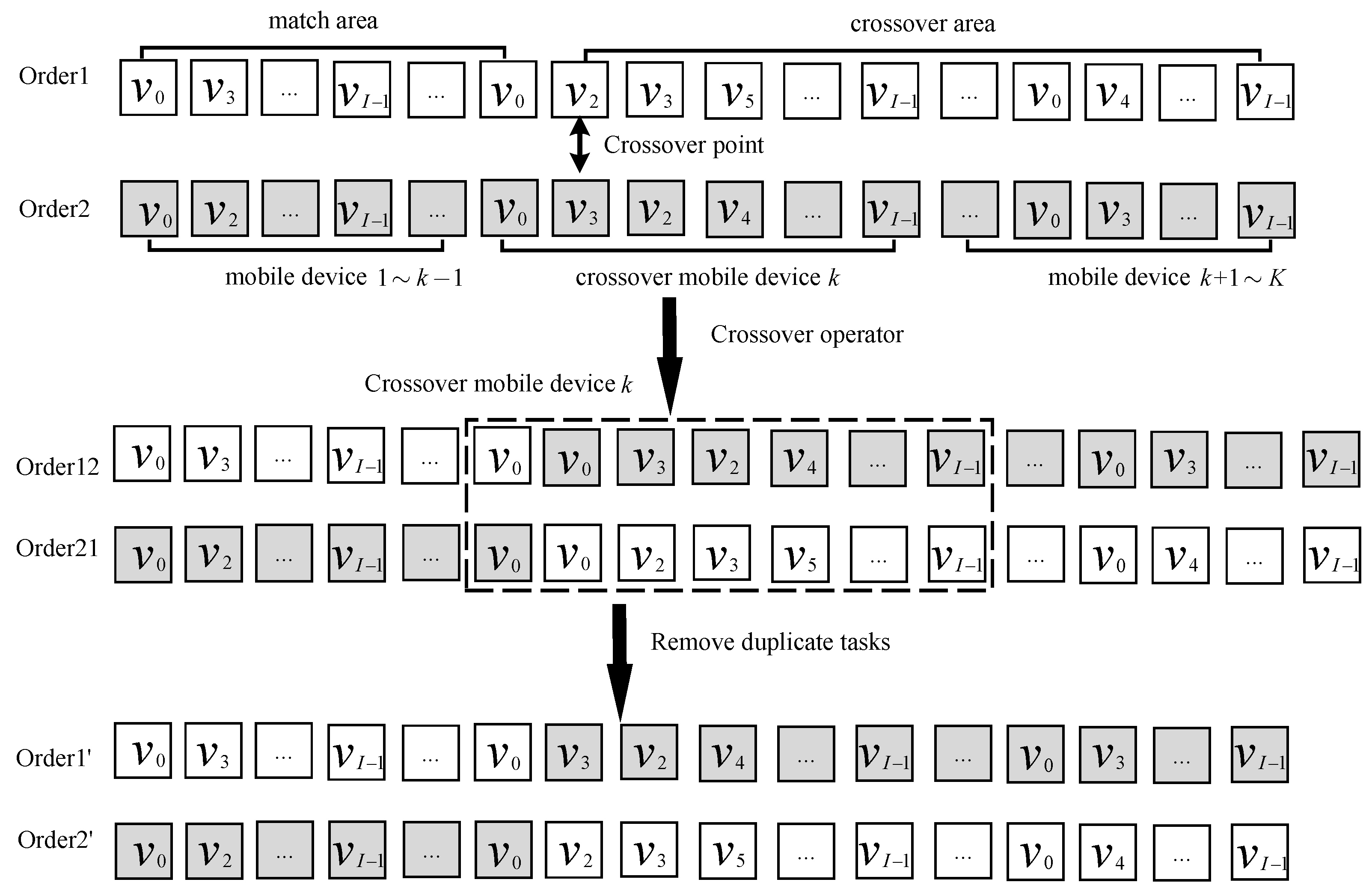

4.1.5. Crossover

Algorithm 1. Task execution order single-point crossover algorithm.

Algorithm 2. Single-point crossover algorithm for task offloading position.

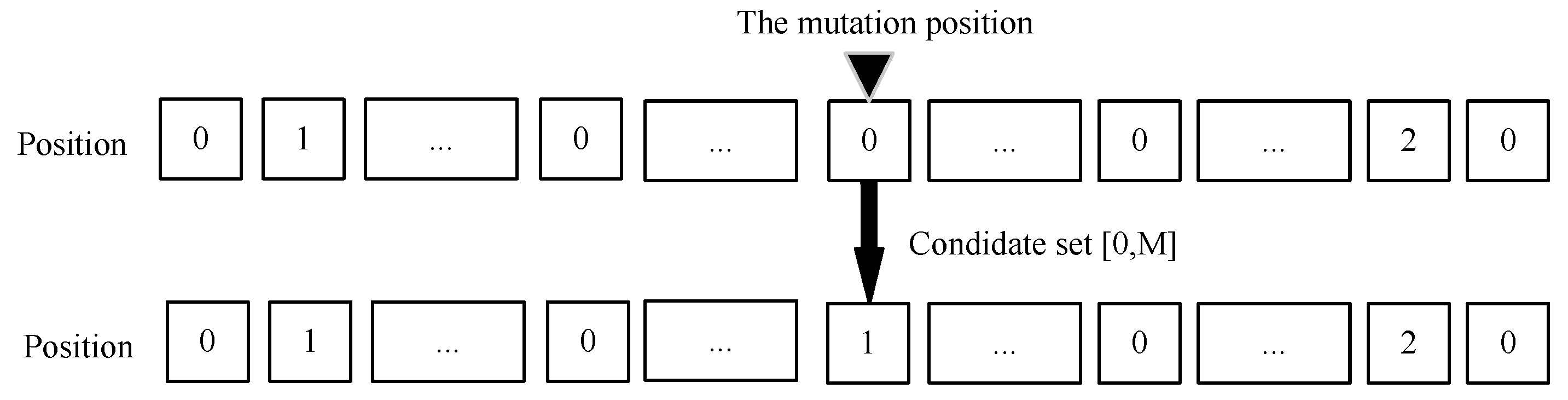

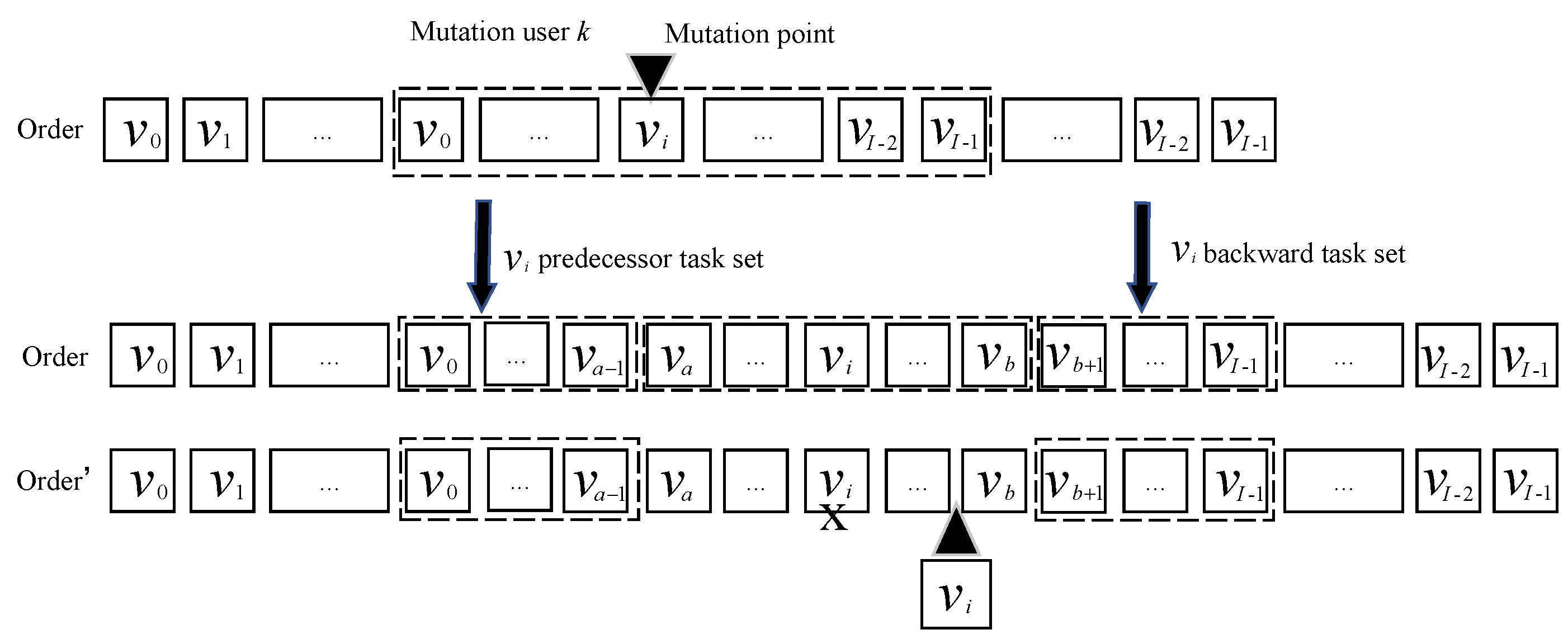
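The bodies of the two crossover algorithms are not reproduced in this text, so the following is only a sketch of one common way to realize single-point crossover on the two parts of an individual; it should not be read as the authors' exact procedure. The function names and the list encoding (an execution order as a list of subtask indices, an offloading position list with 0 for local and 1..M for VM m) are assumptions.

```python
def crossover_order(parent_a, parent_b, cut):
    """Single-point crossover on execution orders.

    The child keeps the first `cut` subtasks of parent_a and appends the
    remaining subtasks in the order they appear in parent_b. If both
    parents respect the DAG precedence constraints, so does the child.
    """
    head = parent_a[:cut]
    taken = set(head)
    return head + [t for t in parent_b if t not in taken]


def crossover_location(parent_a, parent_b, cut):
    """Single-point crossover on offloading positions: swap the tails."""
    child_a = parent_a[:cut] + parent_b[cut:]
    child_b = parent_b[:cut] + parent_a[cut:]
    return child_a, child_b
```

Filling the tail from the second parent in its own relative order, rather than copying it by index, avoids duplicated or missing subtasks and keeps the child a valid topological order, so no repair step is needed after this particular operator.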

4.1.6. Mutation

Algorithm 3. Offloading position single-point mutation algorithm.

Algorithm 4. Single-point mutation for task execution order algorithm.
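Since the bodies of the mutation algorithms are likewise missing here, the following sketch shows one plausible form of single-point mutation for each part of an individual. It is an assumption-laden illustration, not the authors' algorithm: the function names are hypothetical, positions are encoded as 0 for local and 1..`n_vms` for a VM, and the order mutation moves one subtask within the window allowed by its predecessors and successors.

```python
import random


def mutate_location(location, n_vms, rng):
    """Change the offloading position of one randomly chosen subtask.

    Requires n_vms >= 1 so that an alternative position exists.
    """
    child = list(location)
    i = rng.randrange(len(child))
    choices = [p for p in range(n_vms + 1) if p != child[i]]
    child[i] = rng.choice(choices)
    return child


def mutate_order(order, edges, rng):
    """Move one randomly chosen subtask to another feasible slot.

    The subtask may be re-inserted anywhere after its last predecessor and
    before its first successor, so a valid order stays valid.
    """
    child = list(order)
    task = child.pop(rng.randrange(len(child)))
    pos = {t: i for i, t in enumerate(child)}
    lo = max((pos[u] + 1 for u, v in edges if v == task), default=0)
    hi = min((pos[v] for u, v in edges if u == task), default=len(child))
    child.insert(rng.randint(lo, hi), task)
    return child
```

Restricting the insertion window keeps every mutated order feasible by construction; an alternative design would mutate freely and rely on the individual correction step to repair precedence violations.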

5. Simulation Results and Discussion

- (1) Local computing (LC): No offloading is involved; all tasks are executed locally on the MDs.

- (2) Random offloading (RA): Each subtask in the workflow is randomly either offloaded to an MEC server for execution or executed locally.

- (3) Adaptive genetic algorithm (AGA): All tasks of the workflow are executed locally or offloaded to the MEC for execution according to the adaptive genetic algorithm in [24].
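The two simplest baselines in the list above can be expressed as generators of execution locations. The sketch below assumes a hypothetical encoding in which each subtask has one integer position, 0 for local execution and 1..`n_vms` for a VM; the function names are illustrative, and the AGA baseline from [24] is not reproduced.

```python
import random

LOCAL = 0  # assumed encoding: 0 = run on the MD, 1..n_vms = run on that VM


def local_computing(n_tasks):
    """LC baseline: every subtask is executed on the MD."""
    return [LOCAL] * n_tasks


def random_offloading(n_tasks, n_vms, rng):
    """RA baseline: each subtask gets a uniformly random position,
    which may be local execution or any of the VMs."""
    return [rng.randint(LOCAL, n_vms) for _ in range(n_tasks)]
```

Both baselines produce only the location part of a schedule; to compare them with an evolved schedule they would be evaluated with the same time and energy model and the same feasible execution order.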

6. Conclusions

7. Work Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Hoang, D.T.; Lee, C.; Niyato, D.T.; Wang, P. A survey of mobile cloud computing: Architecture, applications, and approaches. Wirel. Commun. Mob. Comput. 2013, 13, 1587–1611.

2. Sahni, J.; Vidyarthi, D.P. A Cost-Effective Deadline-Constrained Dynamic Scheduling Algorithm for Scientific Workflows in a Cloud Environment. IEEE Trans. Cloud Comput. 2018, 6, 2–18.

3. Wang, X.; Yang, L.T.; Chen, X.; Han, J.; Feng, J. A Tensor Computation and Optimization Model for Cyber-Physical-Social Big Data. IEEE Trans. Sustain. Comput. 2019, 4, 326–339.

4. Shi, W.; Cao, J.; Zhang, Q.; Liu, W. Edge Computing—An Emerging Computing Model for the Internet of Everything Era. J. Comput. Res. Dev. 2017, 54, 907–924.

5. Peng, K.; Leung, V.C.M.; Xu, X.; Zheng, L.; Wang, J.; Huang, Q. A Survey on Mobile Edge Computing: Focusing on Service Adoption and Provision. Wirel. Commun. Mob. Comput. 2018, 2018, 8267838.

6. Mobile Edge Computing—A Key Technology towards 5G; ETSI White Paper No. 11; ETSI: Valbonne, France, 2015; ISBN 979-10-92620-08-5.

7. Sun, X.; Ansari, N. EdgeIoT: Mobile Edge Computing for the Internet of Things. IEEE Commun. Mag. 2016, 54, 22–29.

8. Peng, Q.; Jiang, H.; Chen, M.; Liang, J.; Xia, Y. Reliability-aware and Deadline-constrained workflow scheduling in Mobile Edge Computing. In Proceedings of the 2019 IEEE 16th International Conference on Networking, Sensing and Control (ICNSC), Banff, AB, Canada, 9–11 May 2019; pp. 236–241.

9. Leymann, F.; Roller, D. Workflow-based applications. IBM Syst. J. 1997, 36, 102–123.

10. Pandey, S.; Wu, L.; Guru, S.M.; Buyya, R. A Particle Swarm Optimization-Based Heuristic for Scheduling Workflow Applications in Cloud Computing Environments. In Proceedings of the 2010 24th IEEE International Conference on Advanced Information Networking and Applications, Perth, Australia, 20–23 April 2010; pp. 400–407.

11. Li, X.; Chen, T.; Yuan, D.; Xu, J.; Liu, X. A Novel Graph-based Computation Offloading Strategy for Workflow Applications in Mobile Edge Computing. arXiv 2021, arXiv:2102.12236.

12. Zhang, G.; Zhang, W.; Cao, Y.; Li, D.; Wang, L. Energy-Delay Tradeoff for Dynamic Offloading in Mobile-Edge Computing System With Energy Harvesting Devices. IEEE Trans. Ind. Inform. 2018, 14, 4642–4655.

13. Dong, H.; Zhang, H.; Li, Z.; Liu, H. Computation Offloading for Service Workflow in Mobile Edge Computing. Comput. Eng. Appl. 2019, 55, 36–43.

14. Li, W.; Liu, H.; Li, Z.; Yuan, Y. Security and energy aware scheduling for service workflow in mobile edge computing. Comput. Integr. Manuf. Syst. 2020, 26, 1831–1842.

15. Sundar, S.; Liang, B. Offloading Dependent Tasks with Communication Delay and Deadline Constraint. In Proceedings of the IEEE INFOCOM 2018—IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018; pp. 37–45.

16. Guo, S.; Liu, J.; Yang, Y.; Xiao, B.; Li, Z. Energy-Efficient Dynamic Computation Offloading and Cooperative Task Scheduling in Mobile Cloud Computing. IEEE Trans. Mob. Comput. 2019, 18, 319–333.

17. Ning, Z.; Dong, P.; Kong, X.; Xia, F. A Cooperative Partial Computation Offloading Scheme for Mobile Edge Computing Enabled Internet of Things. IEEE Internet Things J. 2019, 6, 4804–4814.

18. Sun, J.; Yin, L.; Zou, M.; Zhang, Y.; Zhang, T.; Zhou, J. Makespan-minimization workflow scheduling for complex networks with social groups in edge computing. J. Syst. Archit. 2020, 108, 101799.

19. Wang, Z.; Zheng, W.; Chen, P.; Ma, Y.; Xia, Y.; Liu, W.; Li, X.; Guo, K. A Novel Coevolutionary Approach to Reliability Guaranteed Multi-Workflow Scheduling upon Edge Computing Infrastructures. Secur. Commun. Netw. 2020, 2020, 6697640.

20. Elgendy, I.A.; Zhang, W.Z.; Zeng, Y.; He, H.; Tian, Y.C.; Yang, Y. Efficient and Secure Multi-User Multi-Task Computation Offloading for Mobile-Edge Computing in Mobile IoT Networks. IEEE Trans. Netw. Serv. Manag. 2020, 17, 2410–2422.

21. Chen, X. Decentralized Computation Offloading Game for Mobile Cloud Computing. IEEE Trans. Parallel Distrib. Syst. 2015, 26, 974–983.

22. Srinivas, M.; Patnaik, L.M. Adaptive probabilities of crossover and mutation in genetic algorithms. IEEE Trans. Syst. Man Cybern. 1994, 24, 656–667.

23. Rappaport, T.S. Wireless Communications: Principles and Practice; Prentice Hall: Hoboken, NJ, USA, 1996.

24. Yan, W.; Shen, B.; Liu, X. Offloading and resource allocation of MEC based on adaptive genetic algorithm. Appl. Electron. Tech. 2020, 46, 95–100.

| Simulation Parameter | Value |
|---|---|
| Bandwidth | 5 MHz |
| Transmission power of mobile device | 600 mW |
| Receive power of mobile device | 100 mW |
| Background noise | −113 dBm |
| Mobile device execution power consumption coefficient | Joule/cycle |
| MEC execution power consumption coefficient | Joule/cycle |
| Data subtask | [50, 300] kB |
| The weight between two subtasks | [300, 500] kB |
| Needed CPU cycles to calculate 1 bit task | 1000–1200 (cycles/byte) |
| MD’s local computation capability | [0.1, 1] GHz |
| MEC computation capability | [2, 4] GHz |
| 0.9, 0.4, 0.1, 0.05 | |
| Population Size | 80 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fu, S.; Ding, C.; Jiang, P. Computational Offloading of Service Workflow in Mobile Edge Computing. Information 2022, 13, 348. https://doi.org/10.3390/info13070348
