Abstract

Content and metadata concerning a specialized field such as Art and Cultural Heritage are often scattered throughout the World Wide Web, making them hard for end-users to find, especially amid the vast and often commercialized general content of the Web. This paper presents the process of designing and developing a Federated Search Engine (FSE) that collects such content from multiple credible sources of the world of Art and Culture and presents it to the user in a unified, user-oriented manner, enhancing it with added functionality. The study focuses on the challenges such an endeavor presents and on the technological tools, design decisions and methodology that led to a fully functional, Web-based platform. The implemented search engine was evaluated by a group of stakeholders from the wider fields of art, culture and media during a closed test, and the insights and feedback gained from this test are analyzed and presented herein. These insights comprise both the quantitative metrics of user engagement during the testing period and the qualitative information provided by the stakeholders through interviews. The findings are thoroughly discussed and lead to conclusions regarding the usefulness and viability of Web applications in the aggregation and diffusion of Art and Cultural Heritage related content.

1. Introduction

Undeniably, the Internet as we know and use it today is more than a network; it is the primary and most pervasive mass medium of our generation. Its repercussions continue to be far-reaching and revolutionary. The Internet permeates every aspect of our lives and is currently pervading many fields and industries, including Arts and Culture [1]. Eva Respini [1] mentions that since its birth, the Internet has significantly, and perhaps irreversibly, changed all forms of art, profoundly affecting numerous artists and artworks, whether in painting or moving images, sculpture or photography.

Although it is debated whether the Internet is preserving or killing Arts and Culture, Manjoo [2] argues that it acts as a preserving factor for already existing art and artists and as a promoting factor for new artists who are using social media to promote their work. What we need to keep in mind is that the Internet is a pool of information that includes only pieces that someone has decided to put into it, as pointed out by Jones [3]. When it comes to finding Arts and Culture on the Internet, Jones [3] identifies six types of web presence: websites of museums; websites of academic institutions, corporate sponsors and individuals; websites of cultural, civic and professional organizations, national trusts and foundations; websites of serials and indexes for art and literature; websites for buying and selling art; and the web presence of libraries (overviews, search strategies and services). Although presenting Arts and Cultural objects and information in a digital manner might at first sound counter-intuitive, a study by Di Franco et al. showed that people were more engaged with online museum artifacts and enjoyed a better interaction with them than with physical museum artifacts [4]. After all, a physical visit to a museum also has its limitations, since in the majority of cases you cannot touch the objects. Digital environments in Cultural Heritage aim to promote and preserve art artifacts and to educate the public in an engaging manner [5].

One function that revolutionized the Internet and rendered it accessible and user friendly to almost everyone is the search engine, a tool that gives the user the ability to find specific information in the vast world of the Internet. In order to find information, users need to enter a web query into the search engine. A web query consists of one or more search terms. Spink and Jansen claim that queries are an essential component of web searching and that they express an information problem that a searcher encounters [6]. The purpose of a web search is not always informational. It can also be navigational (for instance, to find the URL of a specific website the searcher wants to reach) or transactional (to find sites related to a particular transaction such as an e-shop, downloadable material, etc.), according to Broder [7]. The same applies when someone searches for Arts and Culture: they might search for the URL of a specific library, they might want to download audio and video material related to artifacts, or they might simply want to learn more about them. An older study by Purcell et al. showed that, in general, more than 90% of users eventually find what they are searching for in a web search engine, with more than half evaluating the results as becoming more relevant over time [8].

Although the problem is not unique to it, Cultural Heritage information is frequently dispersed across the World Wide Web, making it difficult for end-users to locate, particularly among the large and often commercialized general content of the Web. At the same time, the information that a searcher receives might not be highly relevant to what they were searching for, regardless of whether this insufficiency is related to their search query or to the search engine itself. These problems can be solved to a large extent by using metasearch engines and federated search. Metasearch engines are an intuitive method for optimizing web search performance by expanding coverage, returning a large number of relevant results and presenting various perspectives on information demands, as noted by Jansen et al. [9].

Federated search (also known as distributed information retrieval or federated information retrieval) is a technique for simultaneously searching different collections. Shokouhi and Si [10] mention that queries are routed to a selection of the collections with the highest probability of returning relevant results. The results of the selected collections are then combined into a single list. Federated search is frequently preferred over centralized search in a variety of situations. For example, commercial search engines cannot easily index hidden-web collections that cannot be crawled, whereas federated search systems can search the contents of such collections through the use of appropriate web services or APIs. Federated search solutions can also allow for concurrent searches over numerous collections in business situations where each organization maintains its own search engine [10].

Searching for Arts and Culture is not something new or limited to the World Wide Web. In fact, the desire to seek art or art-related artifacts and content predates the birth of the Internet and is a fundamental part of the general public’s interaction with the arts. It was a significant factor in the development of art collections, which began as a collection of items from royalty, nobles and religious institutions displayed in palaces, temples, monasteries, and subsequently grew into private collections and museums [11]. As art began transitioning to digital, more people began searching for it. As in other fields, searching for Arts and Culture poses certain challenges. Web search engines face a slew of severe challenges in order to maintain or improve the quality of their performance according to Henzinger et al. [12]. One of the key challenges is that of spam. Silverstein and his team [13], in a much older study, found that more than 80% of users only look at the first page’s results upon using a web search engine. This did not seem to change as the years passed, since a more recent study by Beus has shown that more than 25% of Google searchers are clicking the first organic (not advertisement) result they get, and more than 50% click on the three top organic results [14]. Taking into account that a first page consists of about 7–8 organic results, we can see that almost 80% do not go to the next page, verifying the results of Silverstein’s research [13] more than 20 years later. What is of importance here, however, is that the top results are not always the most relevant, and sometimes they intentionally mislead the searchers themselves. By using modern Search Engine Optimization techniques, some websites can click-bait their way into the top results.

Other challenging factors for search engines are content quality, quality evaluation, web conventions and duplicate hosts [12]. Content quality refers to the overall quality of the result, even if it is not spam. This might include, for example, outdated or contradictory information. Quality evaluation refers to the way the search engine’s algorithms evaluate the results. Web convention issues are related to the practices that a search engine takes for granted in order to produce results. For instance, if there is a referral link on a web page, it is assumed that it will be of high importance to those who have visited that page, but this is not always the case. Last, but not least, duplicate hosts refer to the inclusion of the same host twice, something that search engines in general try to avoid, but do not always manage [12].

As far as Arts and Culture is concerned, one web convention that is of high relevance is the use of meta tags to convey metadata. Metadata is content that describes other content. For instance, for Leonardo da Vinci’s Mona Lisa, the metadata will include descriptions such as painting, Leonardo da Vinci, year, country or institution. This will result in a relevant and high quality search result for the web page that contains the painting. However, many metadata and meta tag authors abuse their purpose and include irrelevant information in an effort to increase the web page’s search engine rankings, although this practice has decreased significantly since 2009, when major search engines stopped using meta keywords as the basis for indexing a page, according to Gudivada et al. [15].

Another development that emerged recently in the world of search engines is the use of voice search. Due to the rise of mobile devices, Song et al. point out that more and more systems are utilizing voice search [16] in an effort to offer their users a better experience that does not involve typing on small device screens.

As technology and algorithms become more sophisticated, the development of search engines becomes even more complex, as noticed by Chau and Chen [17]. In order to gain insight that will help developers achieve a better result, traditional feedback methods can be utilized. Such methods may include the collection of not only qualitative feedback through focus groups or interviews with stakeholders, but also quantitative feedback collected during a pilot or test run of the search engine under development.

Focus group interviews are a very popular method for qualitative data collection and are used for exploring what individuals believe or feel and why they behave in the way they do, according to Rabiee [18]. They mainly aim to comprehend and explain the meanings, beliefs and cultures that influence individuals’ feelings, attitudes and behaviors [18]. Linda Lederman [19] describes focus group interviews as the technique of using the detailed, in-depth interviews of groups who are focused on a given topic. The participants are selected as a purposive, but not necessarily representative, sample of a specific population. The idea is that people who face a particular problem will be willing to discuss it with other people who are also facing the same issue. Rabiee [18] mentions that focus groups provide an effective mechanism for including users in care management and strategy formulation, as well as in needs assessment, participatory planning and the evaluation of health promotion and nutrition intervention programs. Twin also mentions the contribution of focus groups as a method of data collection in nursing research [20]. In the same sense, the focus group method can be, and has been, used as a tool to obtain feedback and experiences from software engineering practitioners and application users [21]. Kontio et al. [21] mention that if the technique is used properly and with adequate planning, it can provide “a valuable complementary empirical experience quickly and at low cost”.

Focus group interviews are a beneficial empirical method to acquire feedback. However, especially in software engineering where quantitative data is available, they are best used in conjunction with other means of information gathering, as is also supported by Kontio et al. [21]. Qualitative data can be a useful asset if it is combined with a method of gathering actual usage metrics, such as a pilot of the application itself. A pilot is a test application that is delivered to a specific audience sample in order to evaluate its effectiveness. Alpha testing (or quality assurance testing) usually involves an early version of the app that is functional but distributed only to a few people related to it, most likely the engineers, who use automated and regimented tests, according to Fine [22]. Wurangian clarifies that alpha testing takes place in-house [23]. After successful alpha testing, beta testing is scheduled. Beta testing is the last step before a product is released commercially [23]. A beta test is used to determine whether a product is ready for release by distributing it to members of the general public and gathering feedback [22].

In this study, the researchers employed the principles of federated search to create a web-based meta-search engine platform that aims to collect information related to Arts and Cultural Heritage from various repositories and present it to any interested parties. In order to create a product that would better cover the needs of such interested parties, preliminary research was conducted, consisting of a series of semi-structured interviews with members of the platform’s target group such as artists, art historians, curators, students of art and more [24]. During that research, questions were asked regarding the motives of stakeholders, the temporal and topical parameters of their searches and their overall experiences, and the key challenges faced by people searching for art related content were identified and discussed. These included the objective difficulty of properly presenting art in the digital space, the business and marketing oriented nature of the Web, the prevalent role of social media and the often fragmented and incomplete state of information [24]. These challenges affected design and implementation decisions throughout the creation of the search engine presented here.

Essentially, the research presented is a case study of a software system prototype. The principles and lessons learned during the development and evaluation of this specific case can be transferred to other cases and situations according to Schoch [25]. The study is focused around answering the following research questions:

- RQ1: Is it feasible to create a search engine for Arts and Cultural Heritage that uses content openly available to different entities, and what are the challenges that need to be overcome?

- RQ2: What features and technologies contribute most to the effectiveness of such a software system, both from a functionality perspective and from a user experience perspective?

- RQ3: Is the functionality provided by such a platform considered useful by the stakeholders, and can this platform cover the existing needs in the field of Arts and Culture?

In order to gain better insight concerning these questions, a pilot run of the application took place in the form of a beta test. During this beta test, quantitative data was gathered by the system. Additionally, the participants of the test provided qualitative feedback by participating in focus group interviews. Both the development and the evaluation process are presented in the next section.

2. Methodology

2.1. Research Design

The research was conducted in three stages. It started with the development of the prototype Federated Search Engine (FSE), continued with its public testing in order to collect quantitative data, and concluded with mini focus group interviews with the test participants.

The development of the FSE was deemed necessary in order to evaluate the technical challenges and feasibility of such an endeavor. Software prototyping for research purposes aims to investigate new ideas and explore promising research directions, according to Winkler et al. [26] in their comparison between industrial and research-oriented prototyping. Its usual outcomes in a research environment are feasibility studies of novel approaches, as well as applications created for demonstration purposes [26]. Research through software prototypes can cover a vast array of different fields. Pissinati et al. [27] used it to research retirement planning, Carvajal–Ortiz et al. [28] used it to support design and evaluation in curriculum management in education and Begic et al. [29] used it to study the use of augmented reality in mastering vocabulary. In this case, the prototyping approach was selected because it offers flexibility in the development cycle length and team size, as indicated by Terho [30]. The purpose of the first stage was to reach a conclusion concerning the feasibility of aggregating Art and Cultural Heritage related content from open access repositories and offering it to the end-user through a search mechanism.

In order to reach conclusions regarding both the evaluation of various features of the FSE and the overall value of such a platform to the target group, the FSE presented in this study was designed to implement user-interaction data gathering mechanisms that collected various metrics concerning user engagement with the different features of the FSE. During the second stage of this research, which consisted of the public testing of the FSE, these mechanisms collected data from real world usage of the software prototype. According to Terho et al. [30], the prototyping process should involve several stakeholders, with the purpose of providing feedback on usability and on the prototype’s feature set. This feedback does not have to be limited to qualitative data but can be expanded to incorporate user interaction data, as seen in Suonsyrja’s work [31]. According to Suonsyrja, measurements collected during user interaction can evolve into metrics, which, in turn, and through analysis, offer useful insights to the developers. Hence, the user-interaction data gathering approach was deemed suitable for this research. The purpose of this second stage was to collect unbiased, usage-based, quantitative data to use during the evaluation of the FSE.
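The step from raw interaction measurements to per-feature metrics can be illustrated with a minimal sketch. It is written in Python for brevity rather than in the PHP used by the FSE, and the event fields and feature names (`session_id`, `text_search`, `bookmark`, and so on) are hypothetical; the paper does not specify the logging schema that was actually used.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class InteractionEvent:
    """One logged user action; all field names are illustrative assumptions."""
    session_id: str
    feature: str        # e.g. "text_search", "voice_search", "bookmark"
    timestamp: datetime


def feature_usage(events):
    """Count how many times each feature was used in total."""
    return Counter(event.feature for event in events)


def sessions_per_feature(events):
    """Count the distinct sessions that used each feature at least once."""
    seen = {}
    for event in events:
        seen.setdefault(event.feature, set()).add(event.session_id)
    return {feature: len(sessions) for feature, sessions in seen.items()}


# A tiny made-up log covering two sessions.
now = datetime.now(timezone.utc)
log = [
    InteractionEvent("s1", "text_search", now),
    InteractionEvent("s1", "text_search", now),
    InteractionEvent("s1", "bookmark", now),
    InteractionEvent("s2", "voice_search", now),
    InteractionEvent("s2", "text_search", now),
]
usage = feature_usage(log)          # total uses per feature
reach = sessions_per_feature(log)   # how many sessions touched each feature
```

Keeping total usage and per-session reach apart is a deliberate choice in this sketch: a feature used heavily by one participant and a feature tried once by every participant tell different stories during evaluation.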

In addition to this data, after the public testing of the FSE concluded, the third stage of the research was carried out, which involved all stakeholders participating in focus group interviews in order to provide, as Kontio et al. put it [21], “qualitative insights and feedback”. Kontio et al. [21] also argued that such feedback is better when accompanied by other evaluation methods, which in this case are the quantitative user-interaction metrics of stage two. Qualitative feedback was deemed necessary to paint a more thorough picture of both user engagement and usability. During the third stage of the research, the collection of qualitative metrics was achieved through mini focus group interviews. Since the goal of these interviews was to explore a complex software platform and detailed accounts of each user’s experience were necessary, the focus group sizes were limited, as suggested by Litosseliti [32]. The product of these mini focus groups was expected to be especially helpful in assessing the value of the FSE to stakeholders and whether it has a place in the landscape of searching for Arts and Cultural Heritage related content.

2.2. Development of the Federated Search Engine

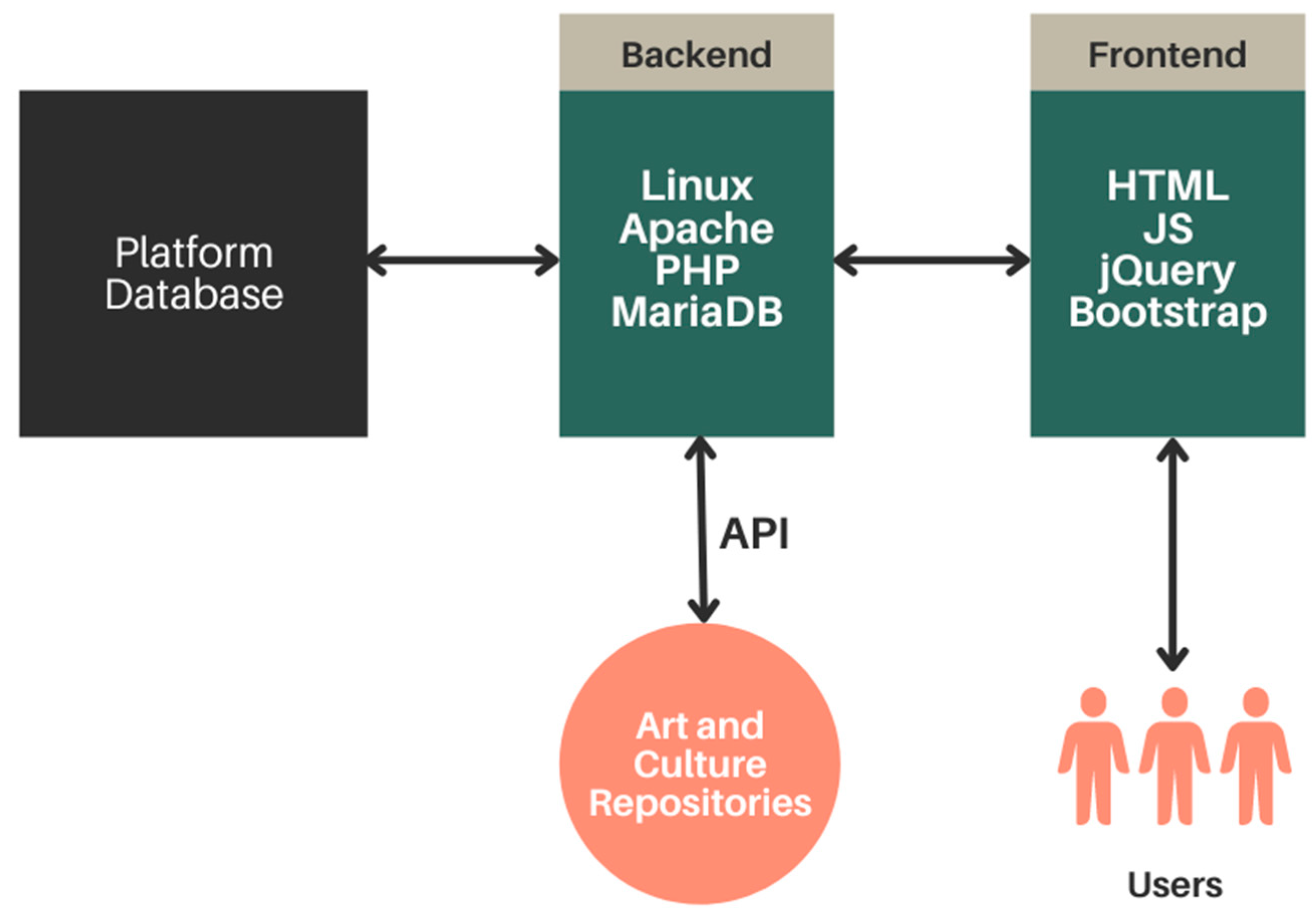

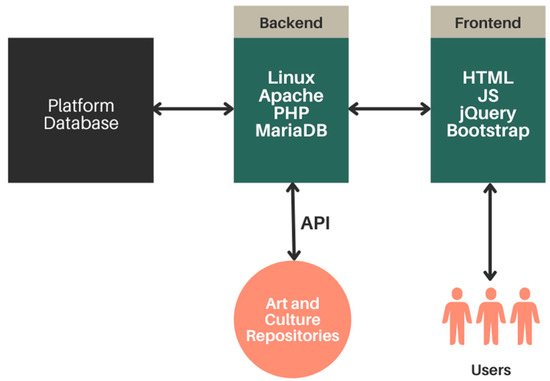

The cornerstone of this research is the FSE that was developed and deployed, which is described in detail in this section. The FSE’s core functionality was built using the PHP general-purpose scripting language and makes use of a relational database created with the MariaDB database management system. The FSE’s interface was implemented using standard World Wide Web technologies, namely HTML5 and CSS3. Compatibility with multiple devices and screen sizes was achieved by adhering to the standards of responsive design through the use of the Bootstrap CSS framework. User interactivity with the FSE was enhanced with the use of the client-side scripting language JavaScript and its jQuery library.

The platform was deployed on a dedicated server running the CentOS 7 distribution of the Linux operating system and the Apache Web server, thus completing a variant of the LAMP (Linux, Apache, MySQL, PHP) software stack in which MariaDB takes the place of MySQL. LAMP is one of the most popular comprehensive solutions for running web applications. All of the above technologies were chosen because of their wide adoption, which makes future support likely. Using popular and proven software packages both for the implementation and for the deployment of the FSE means that development can be expanded and continued in the future by incorporating new developers in the research team, or even by establishing an open source development model.

2.2.1. Core Functionality of the FSE

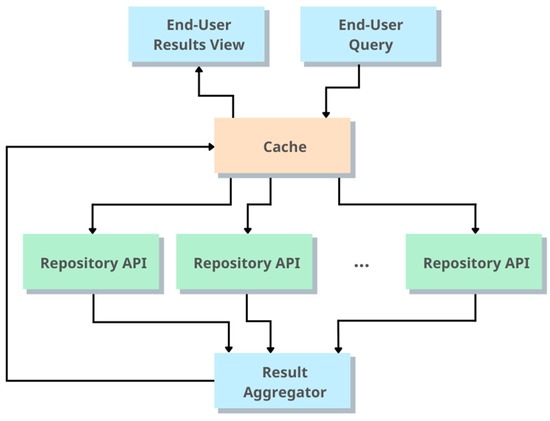

The basic function of the FSE is receiving search queries from the end user and forwarding them to a series of Arts and Culture repositories through the use of the various application programming interfaces (APIs) provided by the repositories. It then proceeds to collect and present the results from the various repositories in a unified manner. In Figure 1, a basic overview of the FSE’s technologies and operation is presented.

Figure 1.

A basic overview of the technologies of the FSE.
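The fan-out and unification step described above can be sketched as follows. This is a minimal illustration in Python, not the FSE's PHP implementation: the adapter functions are stubs that return canned data instead of calling the real repository APIs, and the round-robin interleaving of results is an assumption, since the paper does not state how results from different repositories are ordered.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import zip_longest


def search_europeana_stub(query):
    # Stand-in for a real API call; returns canned data for illustration.
    return [{"title": f"{query} (Europeana 1)"}, {"title": f"{query} (Europeana 2)"}]


def search_met_stub(query):
    return [{"title": f"{query} (Met 1)"}]


def search_failing_stub(query):
    # Simulates a repository that does not answer in time.
    raise TimeoutError("repository did not answer")


# Hypothetical adapter registry: provider name -> search function.
ADAPTERS = {
    "europeana": search_europeana_stub,
    "met": search_met_stub,
    "unreachable": search_failing_stub,
}


def safe_search(name, adapter, query):
    """Query one repository; a failing repository contributes no results."""
    try:
        results = adapter(query)
    except Exception:
        return []
    # Tag each result with its provider so the origin stays visible.
    return [dict(item, provider=name) for item in results]


def federated_search(query, adapters):
    """Send the query to every adapter concurrently and interleave the results."""
    with ThreadPoolExecutor(max_workers=max(1, len(adapters))) as pool:
        futures = [
            pool.submit(safe_search, name, adapter, query)
            for name, adapter in adapters.items()
        ]
        per_source = [future.result() for future in futures]
    merged = []
    # Round-robin merge: first result of each source, then the second, and so on.
    for row in zip_longest(*per_source):
        merged.extend(item for item in row if item is not None)
    return merged


results = federated_search("Mona Lisa", ADAPTERS)
```

Isolating failures per adapter means that one slow or unavailable repository does not prevent results from the others from being shown.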

The repositories that were selected to be incorporated in the FSE were:

- Europeana Collections

- Harvard Art Museums

- The Metropolitan Museum of Art

- The National Gallery of Denmark

- Artsy

- Crossref

- The Open Library

Europeana is a digital library that grants digital access to European Cultural Heritage information in order to inspire and inform enthusiasts, professionals, educators and researchers.

The Harvard Art Museums repository is the online presence of the Harvard Art Museums, three museums that are part of Harvard University: the Fogg Museum, the Busch–Reisinger Museum and the Arthur M. Sackler Museum. All three aim to support and advance learning not only at Harvard University, but also in the local community and around the world.

The Metropolitan Museum of Art is one of the most popular museums around the world. Its online version provides access to six of the museum’s historic American period rooms via virtual reality technology that enables Internet users to “navigate” the rooms via all-encompassing, three-dimensional perspectives.

The National Gallery of Denmark is the biggest Art museum in the country, featuring more than 260,000 pieces. Out of these, almost 70,000 artworks have been registered digitally with many types of metadata such as title and artist name. Approximately 40,000 artworks have been photographed (15,000 of which are of a high contemporary quality).

Artsy is the most popular art marketplace, comprised of more than 1 million artworks from a network of over 4000 partners.

Crossref is a not-for-profit association and acts as an official digital object identifier (DOI) Registration Agency of the International DOI Foundation. Its goal is to make research objects easy to find, cite, link, assess and reuse.

Last, but not least, the Open Library is an open, editable library catalog, aiming to form and provide a web page for every book ever published.

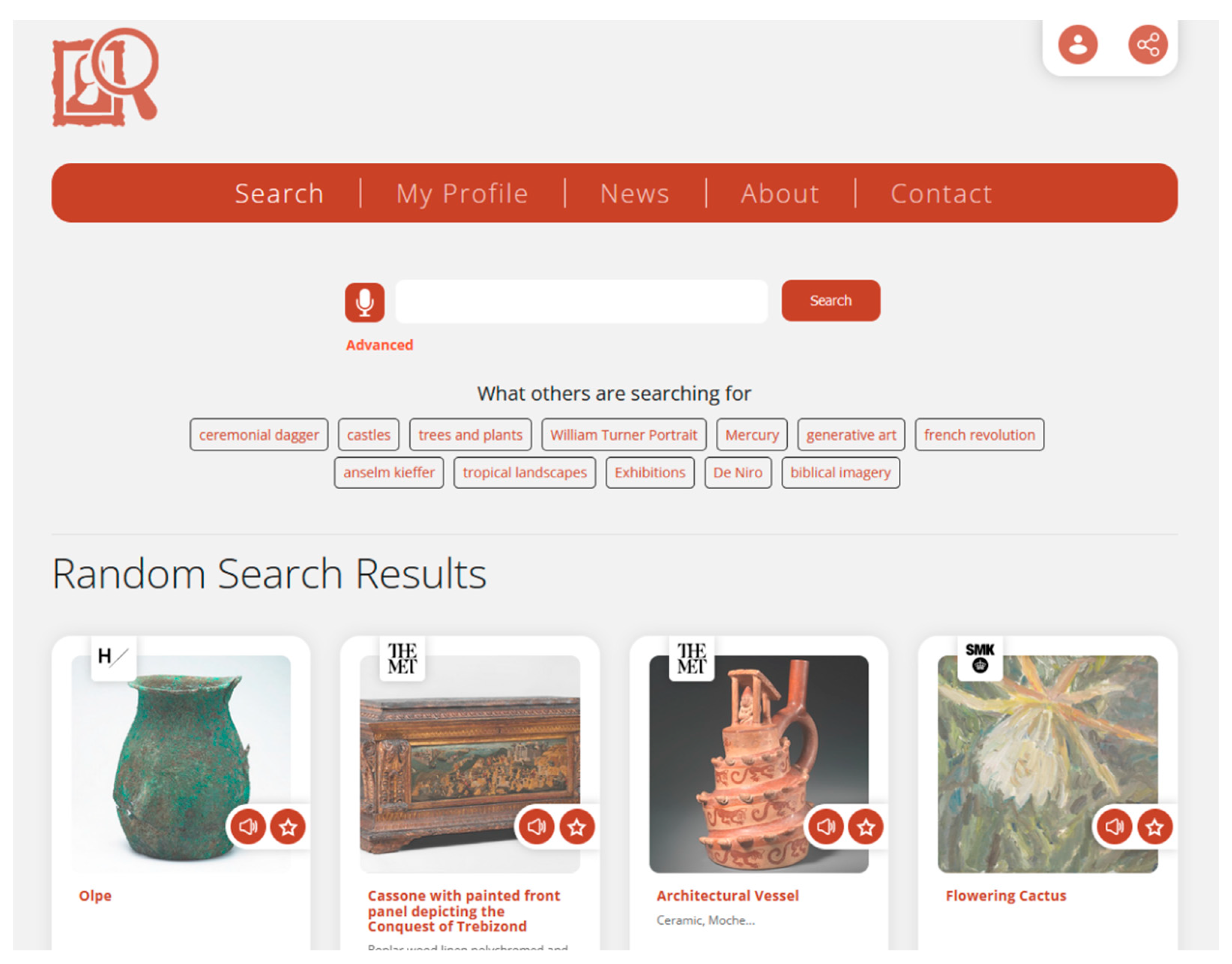

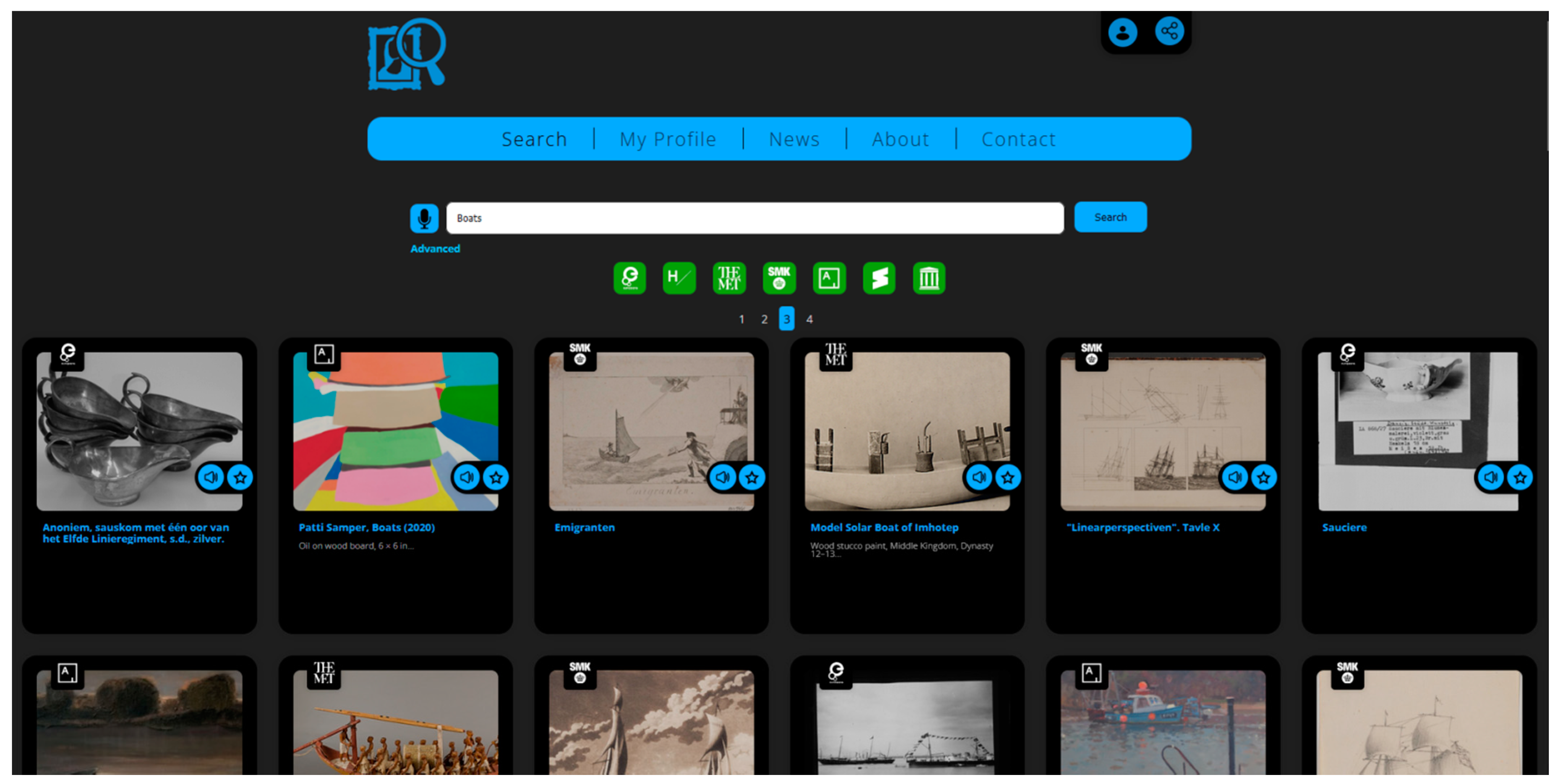

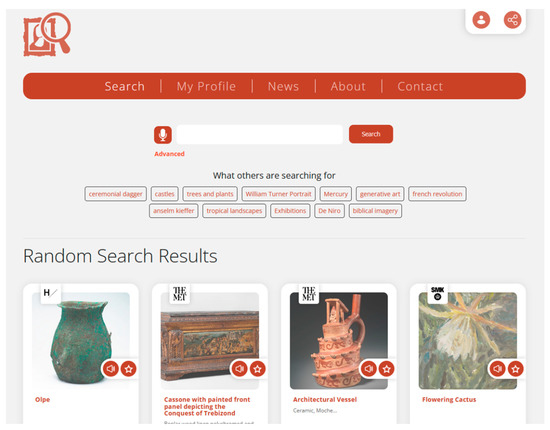

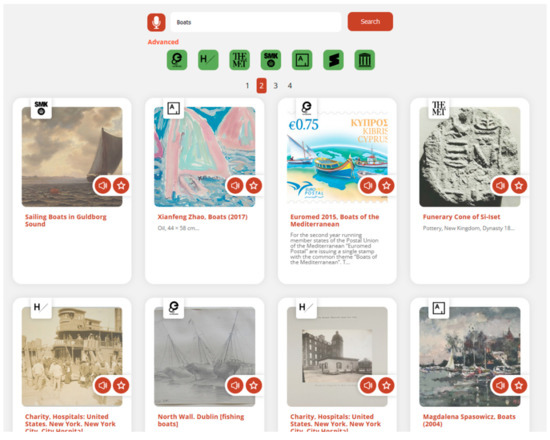

The above selection was based on two main criteria: the quality and abundance of relevant content provided by these repositories and the existence of a RESTful API that would provide all or part of this content to the general public with reasonable restrictions on the number of requests allowed. Most of the repositories provide mainly artifacts or Cultural Heritage objects. In order to provide the end-user with a more comprehensive view of the object of the search query, Crossref, a repository providing research articles, was added, as well as Open Library, a repository providing access to books. The article and book parts of the search were tuned to focus on content relating to the Arts and Humanities in order to remain focused on the FSE’s main purpose of serving Arts and Culture related content. Figure 2 presents a screenshot of the FSE’s front page, with the Search Query input element of the interface prominent at the top center.

Figure 2.

A screenshot of the first page of the FSE’s interface.

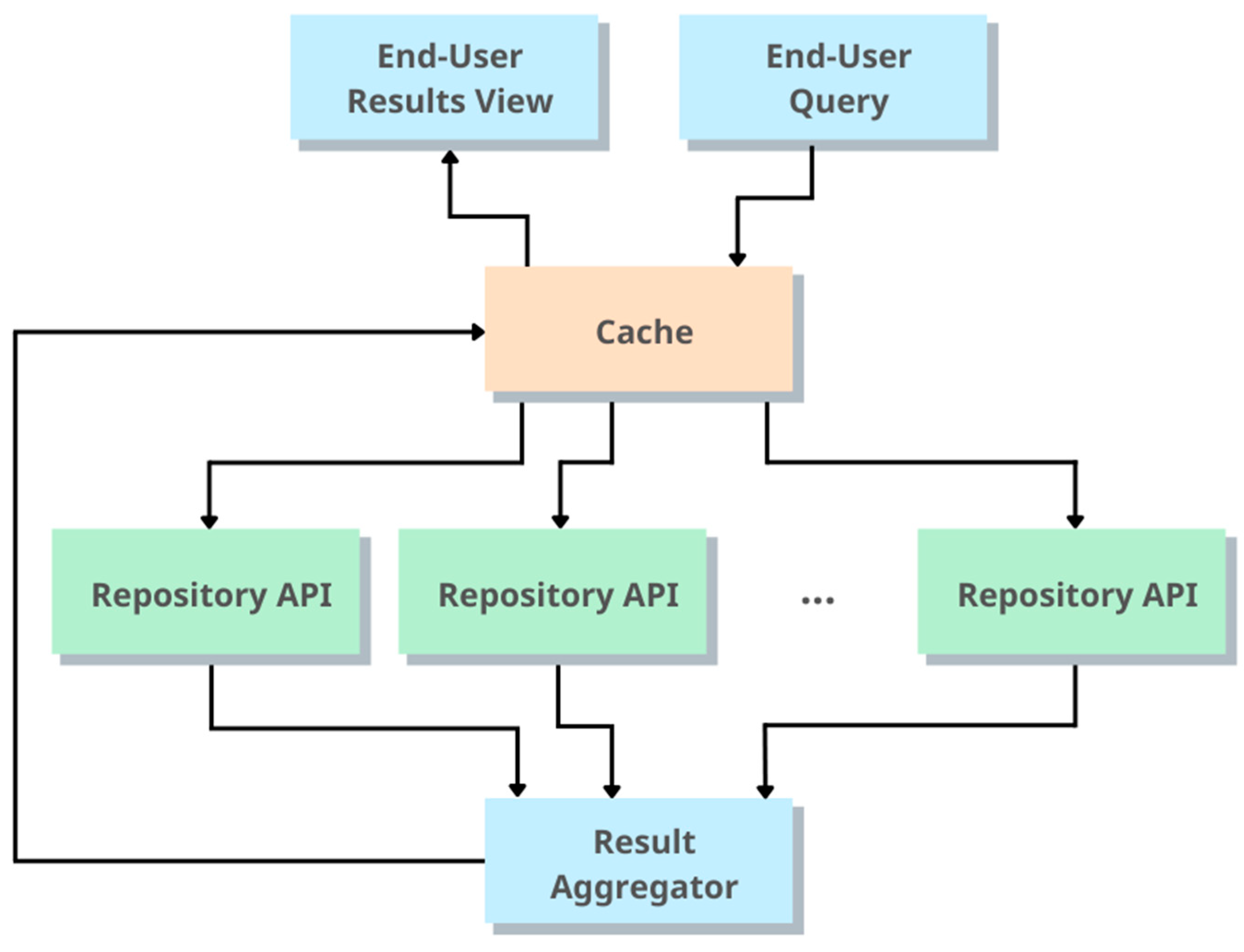

The best practices for creating authentication keys or providing request identification, as dictated by each API’s documentation, were followed in order to avoid becoming a nuisance to the content providers. Additionally, the mass scraping of content was avoided: each call to a repository API occurs only upon the request of an end-user. Moreover, the results are fetched in small groups, using pagination, in order to spread the load on the content providers. In tandem with these precautions, a caching system was implemented which ensures that repeated requests for the same content are avoided. Each individual search result is cached in a local database for at least 24 h, and its contents are renewed only upon a new request after that period, which is essential in order to keep the results current. In Figure 3, a visualization of the search query process is presented.

Figure 3.

A simplified visualization of the query process.
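The combination of pagination and time-limited caching described above can be sketched as follows. This is a minimal sketch in Python under stated assumptions: an in-memory dictionary stands in for the local MariaDB cache, entries are keyed per repository, query and page (the paper caches individual results, so the granularity here is a simplification), and `fetch_remote` is a hypothetical placeholder for a real API call.

```python
import time


class ResultCache:
    """In-memory stand-in for the local database cache (an assumption)."""

    def __init__(self, ttl_seconds=24 * 60 * 60, clock=time.time):
        self.ttl_seconds = ttl_seconds
        self.clock = clock          # injectable so expiry can be tested
        self._entries = {}          # key -> (stored_at, value)

    def get_or_fetch(self, key, fetch):
        """Return the cached value if it is still fresh, otherwise refetch it."""
        entry = self._entries.get(key)
        now = self.clock()
        if entry is not None and now - entry[0] < self.ttl_seconds:
            return entry[1]
        value = fetch()             # contact the provider only when needed
        self._entries[key] = (now, value)
        return value


def fetch_page(cache, repository, query, page, fetch_remote, page_size=10):
    """Fetch one small page of results, going through the cache first."""
    key = (repository, query, page, page_size)
    return cache.get_or_fetch(key, lambda: fetch_remote(query, page, page_size))


# Demonstration with a fake remote API and a controllable clock.
calls = []


def fake_remote(query, page, page_size):
    calls.append((query, page))
    start = (page - 1) * page_size
    return [f"{query}-{i}" for i in range(start, start + page_size)]


fake_now = [0.0]
cache = ResultCache(clock=lambda: fake_now[0])
first = fetch_page(cache, "met", "vase", 1, fake_remote)
again = fetch_page(cache, "met", "vase", 1, fake_remote)   # served from the cache
fake_now[0] += 24 * 60 * 60 + 1                            # a little over 24 h later
fresh = fetch_page(cache, "met", "vase", 1, fake_remote)   # expired, fetched again
```

Because an entry is only refreshed when a user asks for it again after expiry, content that nobody requests never generates traffic toward the providers.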

2.2.2. Result Aggregation and Homogenization

In order to present the results to the end-user in a unified manner, a simple result data model was developed and the various results from the repositories were mapped to fit this new data model. The data model was designed to be as simple as possible, since the FSE’s purpose is not to replace the actual repositories but to act as a facilitator, guiding the users and driving traffic toward the actual content providers. Only a small part of each item’s metadata is inserted into the data model and presented to the user in order to enrich their searching process by providing related results and other general, spatial and temporal concepts referring to the items. The rest of the metadata is always available on the repository’s actual view of the result, which is provided through a prominent link in each result’s presentation page.

In the process of developing this simple data model, the Dublin Core Metadata Element Set (DCMES) was used as a starting point. The DCMES has been formally standardized by multiple standards organizations and consists of fifteen elements, as detailed by Kunze and Baker in the set’s resource description [33]. The Title and Description elements of the DCMES were retained in our simple data model. Europeana’s Europeana Data Model (EDM), which Windhager et al. [34] claim is one of the most mature efforts in the field and incorporates the DCMES, requires a mandatory Title or Description for every Cultural Heritage Object (ProvidedCHO), as dictated in the data model’s guidelines by Clayphan et al. [35], thus indicating the importance of these fields for any digital representation of such content.

The digital depiction of the item, as represented by the DCMES’s Source and Format elements, is replaced in our simple data model by the View, Image and Thumbnail elements. The intention of these fields remains the same: they aim to provide access to the digital resource of the object of Arts or Cultural Heritage.

The Contributor, Creator and Publisher elements are consolidated in our People element for simplicity. This approach is common and was also suggested by Windhager et al. [34], who similarly consolidated said fields in the “Actor” category of their classification schema.

Additionally, the dcterms:spatial and dcterms:temporal elements of the EDM are also partially mandatory (at least one of these two, dc:subject and dc:type is required). Windhager et al. [34] also create the Time and Place categories to consolidate metadata relating to the temporal or spatial presence of an object. This information can be derived from these EDM fields, from the original Dublin Core’s Coverage and Date fields or from any relevant elements of other similar schemata. Following suit, our simple data model establishes the Time and Place elements to store such metadata.

The various other aspects that describe an object are consolidated in the General Concepts element of our data model. Each API and each content provider might offer different types of descriptive metadata and, additionally, they might or might not be applicable to each individual object. Organizing such information is beyond the scope of the FSE, so it is stored uniformly in the General Concepts field.

The current ownership and provenance information (if available) is stored in our simple data model in the Provenance element. Especially in works of Arts and Culture, provenance acts as a “social biography” of the work, according to Feigenbaum et al. [36] and, as such, is of great significance.

Finally, the model incorporates the Rights and Content Provider elements to store information concerning the origin of the objects and their usage rights. This aims to ensure that each object’s dissemination through the FSE does not harm the original content providers or content owners. The importance of respecting the creator’s rights is paramount in artistic works and goes beyond securing financial incentives, as is argued by Ng [37]. Table 1 presents the elements that make up the FSE’s simple data model, with a short explanation of each one.

Table 1.

Elements of the simple data model used by the FSE to represent results.

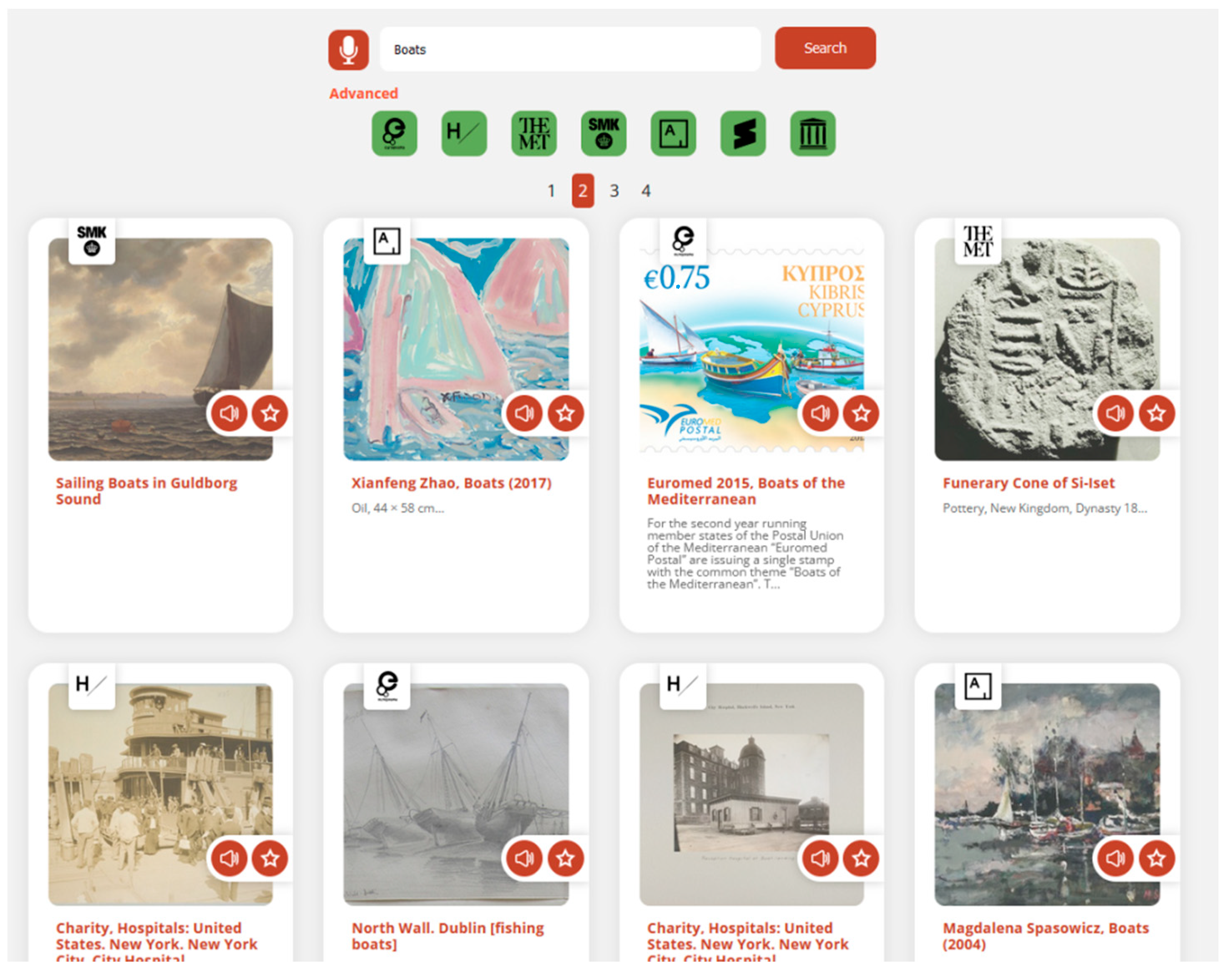

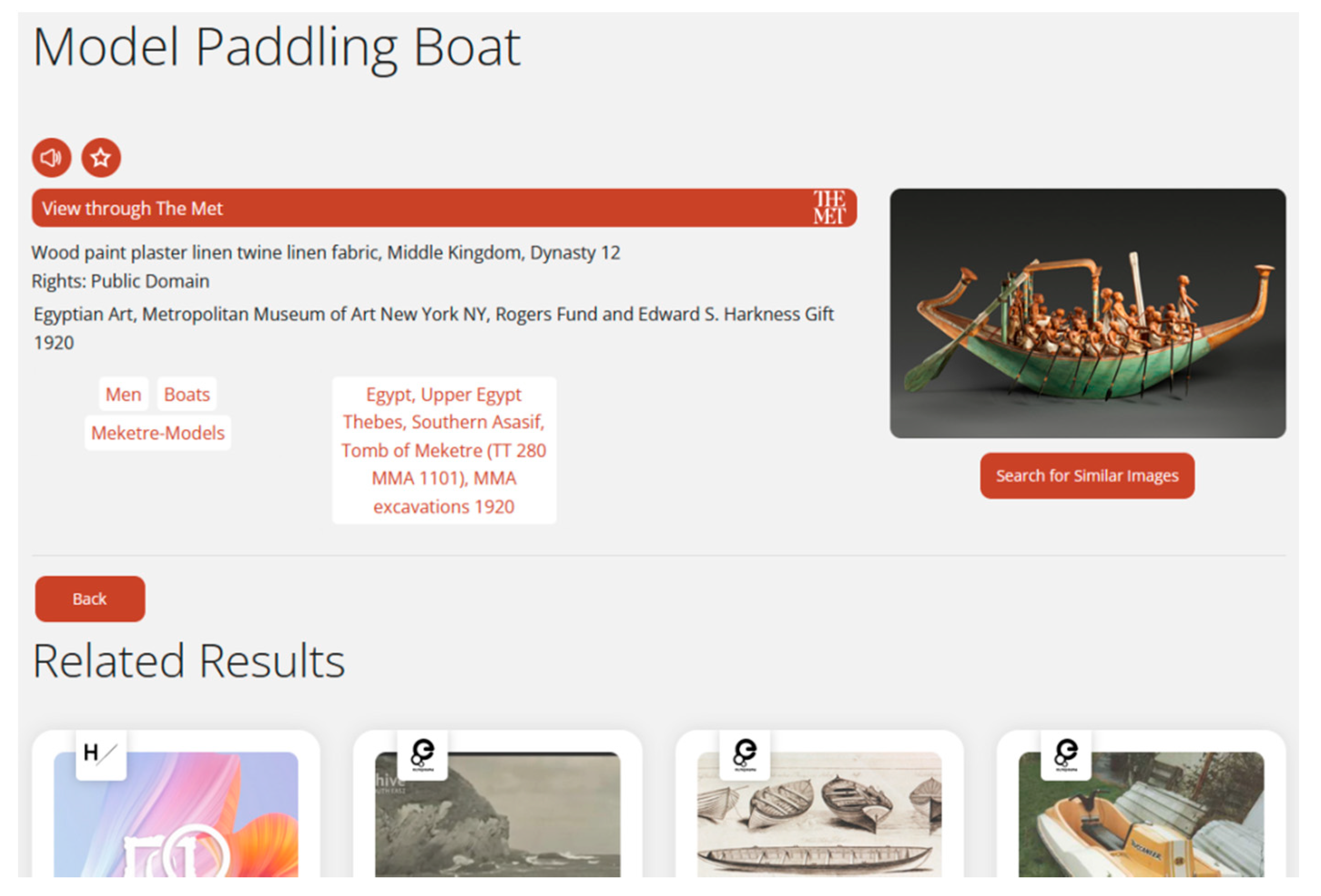

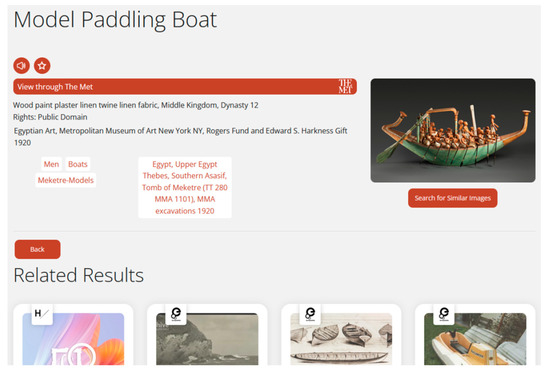
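The mapping from a provider's record to the simple data model can be sketched as follows. The element names follow the ones described in this section; everything else is an assumption made for illustration: the sketch is in Python rather than the FSE's PHP, the input is a plain dictionary with lowercase Dublin Core element names, Date is mapped to Time and Coverage to Place as a simplification, and the sample record is invented.

```python
from dataclasses import dataclass, field


@dataclass
class ResultItem:
    """The simple data model; element names follow the text above."""
    title: str
    description: str = ""
    view: str = ""                 # link to the item on the provider's own site
    image: str = ""
    thumbnail: str = ""
    people: list = field(default_factory=list)
    time: list = field(default_factory=list)
    place: list = field(default_factory=list)
    general_concepts: list = field(default_factory=list)
    provenance: str = ""
    rights: str = ""
    content_provider: str = ""


def as_list(value):
    """Normalize a missing, scalar or list value to a list without empty entries."""
    if value is None:
        return []
    if isinstance(value, (list, tuple)):
        return [v for v in value if v]
    return [value] if value else []


def from_dublin_core(record, content_provider):
    """Map a Dublin Core style dictionary (assumed input shape) to a ResultItem."""
    people = []
    # Creator, Contributor and Publisher are consolidated into People.
    for key in ("creator", "contributor", "publisher"):
        for name in as_list(record.get(key)):
            if name not in people:
                people.append(name)
    return ResultItem(
        title=record.get("title", ""),
        description=record.get("description", ""),
        view=record.get("source", ""),
        people=people,
        time=as_list(record.get("date")),
        place=as_list(record.get("coverage")),
        general_concepts=as_list(record.get("subject")) + as_list(record.get("type")),
        rights=record.get("rights", ""),
        content_provider=content_provider,
    )


# Invented sample record, not taken from any real repository.
record = {
    "title": "Example Portrait",
    "creator": "A. Painter",
    "publisher": ["Example Museum", "A. Painter"],
    "date": "1650",
    "coverage": "Exampleland",
    "subject": ["portrait"],
    "type": "painting",
    "source": "https://example.org/items/example-portrait",
}
item = from_dublin_core(record, content_provider="Example Museum")
```

In practice each repository would need its own mapping function of this kind, since field names and value shapes differ between APIs.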

Figure 4 depicts a screenshot of the FSE’s interface presenting a list of results to the end-user. Figure 5 depicts a screenshot of the FSE’s interface providing the presentation page for a specific search result.

Figure 4.

A screenshot of a list of search results, as presented by the FSE’s interface.

Figure 5.

A screenshot of the presentation page of a specific search result, as presented by the FSE’s interface.

A limited advanced search functionality was also implemented. This includes a series of options for the user to improve the specificity of their search. The options correspond to the four elements that describe each item in various terms, as presented in Table 1: People, Time, Place and General Concepts. By selecting one such option before submitting a query, the user has the opportunity to focus on finding results that best fit the specific intended use of the query string. For example, searching for a name as a general term will prioritize finding results depicting the specified person, while when searching by choosing “People” as the search’s advanced option, the focus will shift to finding works created or published by this person.
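The effect of choosing an advanced option can be illustrated with a small local filter over already homogenized items. This is only a sketch under stated assumptions: it is written in Python, the items are plain dictionaries with invented content, and the option names are hypothetical. In the FSE itself the chosen option presumably shapes the requests sent to each repository API, a detail the paper does not describe.

```python
# Hypothetical mapping from advanced-search option to data model element.
FIELD_OPTIONS = {
    "people": "people",
    "time": "time",
    "place": "place",
    "concepts": "general_concepts",
}


def matches(item, query, option=None):
    """Check whether the query occurs in the fields selected by the option."""
    needle = query.casefold()
    if option is None:
        # General search: look at what the item is about.
        haystack = [item["title"], item["description"]]
    else:
        # Advanced search: look only at the chosen descriptive element.
        haystack = item[FIELD_OPTIONS[option]]
    return any(needle in text.casefold() for text in haystack)


def search(items, query, option=None):
    return [item for item in items if matches(item, query, option)]


# Invented items: one depicts "Ada Example", the other was created by her.
items = [
    {
        "title": "Portrait of Ada Example",
        "description": "Oil on canvas",
        "people": ["B. Painter"],
        "time": [], "place": [], "general_concepts": ["portrait"],
    },
    {
        "title": "Harbour at Dusk",
        "description": "Seascape",
        "people": ["Ada Example"],
        "time": [], "place": [], "general_concepts": ["seascape"],
    },
]
general = search(items, "ada example")
by_person = search(items, "ada example", option="people")
```

The same query string thus yields the work depicting the person in a general search and the work created by the person when the “People” option is selected.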

2.2.3. Additional Technologies

In addition to the search functionality that was described above, the FSE was enriched with a series of features based on modern web technologies, aiming to create a more well-rounded and satisfying user experience. These are:

- Text to Speech functionality

- The ability to perform a Voice Search

- The ability to perform a Visual Search

- A simple User System

The implementation of text-to-speech functionality is an effective way of providing both ease of use and accessibility. As established by Szarkowska’s [38] findings on film audio description, the usage of automated text-to-speech can be an acceptable solution when narration is not available. With that in mind, all titles and descriptions of the various results, as well as any other text in the platform, can be read aloud by the user’s browser using automated speech synthesis. In order to achieve this, the platform uses the Web Speech API, a JavaScript API published by the Web Platform Incubator Community Group under the W3C Community Contributor License Agreement. Icons for initiating the text-to-speech function are available in all result lists and result presentation pages, as seen in Figure 4 and Figure 5.

Voice search is based on the ability of users to provide voiced queries by using their device’s microphone. This is useful not only with regard to user convenience, especially on mobile devices, but, according to Corbett et al. [39], it can also allow for greater accessibility, especially for people with hand dexterity issues. The conversion of user speech into a usable query was achieved through the Speech to Text cloud service provided by Microsoft’s Azure Cognitive Services platform. The text created by the cloud service is further processed to remove common words that are of low value to the final search query, commonly known as stop words. The final version of this text is then provided to the system as a basic search query string, and results are collected and displayed as they would be for a normal text search query. The voice search function can be initiated by clicking on the microphone icon in the FSE’s interface, as seen in Figure 2.
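
The stop-word step described above can be illustrated with a minimal Python sketch. The stop-word list and the function name `transcript_to_query` are assumptions made for illustration only; the text does not specify the list or the language in which the FSE implements this step.

```python
# Hypothetical stop-word list; the list actually used by the FSE is not given.
STOP_WORDS = {
    "a", "an", "and", "by", "find", "for", "in", "me",
    "of", "on", "please", "show", "the", "to",
}


def transcript_to_query(transcript: str) -> str:
    """Turn a speech-to-text transcript into a basic search query string."""
    kept = []
    for raw in transcript.split():
        # Drop punctuation that speech services often attach to words.
        word = raw.strip(".,!?;:\"'")
        if word and word.lower() not in STOP_WORDS:
            kept.append(word)
    return " ".join(kept)
```

Under these assumptions, a transcript such as "Show me paintings by Claude Monet, please." is reduced to the query string `paintings Claude Monet`, which is then handled like any typed query.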

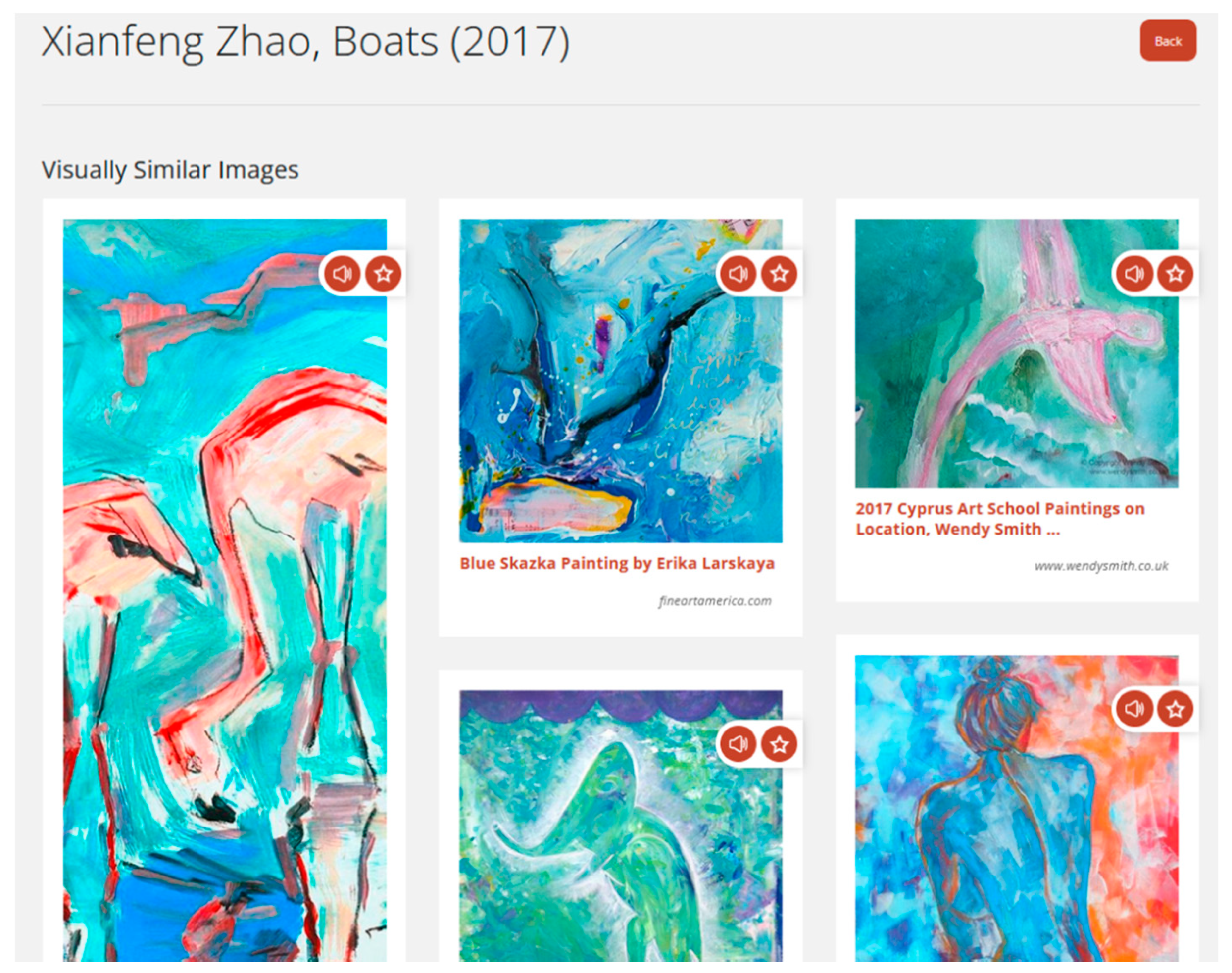

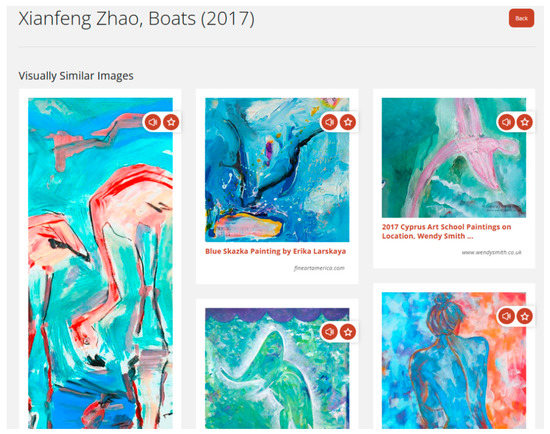

Visual search, often referred to as reverse image search, is the function of searching the Web for images that are visually similar to an image used as the search’s input. While browsing a search result’s presentation page, the user can press a button labeled “Search for Similar Images”, as seen in Figure 5. The platform then takes the representative image from the item’s simple data model and uses it as the input for a visual search, using the Bing Visual Search API provided by Microsoft’s Azure Bing Search Services. The platform collects results from across the World Wide Web and presents them to the user alongside links to the original websites presenting these images. Figure 6 depicts a list of visual search results, as presented by the FSE’s interface.

Figure 6.

A screenshot of the FSE’s interface presenting visual search results.

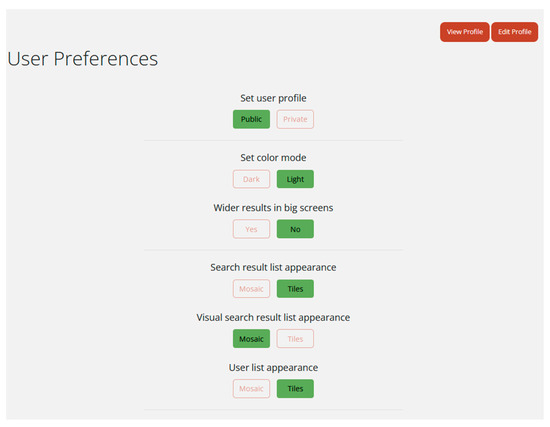

The FSE also implements a simple user system in an effort both to increase user engagement, as defined with regard to the social landscape by Sharma [40], and to satisfy Arts and Culture consumers’ need for a social environment [24]. The end-users can create their own user accounts either by registering in the FSE’s platform or through the use of their Google or Facebook accounts. A user that is logged into an account has the ability to customize their experience through a series of preferences, as seen in Figure 7. Additionally, they can choose to bookmark any search result or visual search result by clicking on the star icon, as seen in Figure 4, Figure 5 and Figure 6. The bookmarked results then appear in the user’s personal profile page.

Figure 7.

A screenshot of a user’s personalization options, as seen in the FSE’s interface.

Furthermore, each user can choose to create a public profile for their account by providing a nickname and an optional personal avatar and short self-description. The public profile version of their account will be available for other users to browse and will display their bookmarked results. Checking out other users’ public profiles can present a different avenue of discovering content related to Arts and Cultural Heritage that works alongside the traditional search query based content discovery. Finally, a user can select to bookmark another user’s public profile and “follow” the other user’s updates regarding their bookmarked results in a way similar to social media.

2.2.4. User Interface and Aesthetics

According to Gaona–Garcia et al. [41], simplicity and maximizing user satisfaction are important principles in building search interfaces for digital libraries and repositories. Extending that to the present prototype that has a lot in common with such repositories, the FSE’s user interface was designed with simplicity and functionality as its main goals. The engine’s main functions were contained in the following web pages:

- The front page

- A page listing search results

- A presentation page for each search result

- A visual search results’ list page

- The User profile pages

The front page, as can be seen in Figure 2, consists of the main search input element, which includes the voice search and advanced search functionality. Further below, a series of popular queries appears. In addition, random results from other queries, as well as visual search results and public user profiles, are also presented on the FSE’s front page. This series of queries and results is meant to provide the user with an alternative to the classic method of typing a search query.

When a search is submitted, the results appear in a list page, as displayed in Figure 4. Each result is presented with its title and a small part of its description, a thumbnail (if one exists), an icon indicating the content provider that supplied this result and two buttons, one shaped like a star for bookmarking the result and one shaped like a megaphone for initiating the text-to-speech function for the specific result. The results are ranked based on the ranking they were given by their original repositories. Additional pages of results can be viewed by using the pagination links at the top and bottom of the result list.
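
One way to combine several repository-ranked lists into a single page while respecting each repository’s own ranking is to interleave them by rank. The Python sketch below illustrates that idea only; the text does not state how the FSE orders results across repositories, so the interleaving strategy, the function name `interleave_by_rank` and the `per_repo` limit of 10 are assumptions.

```python
from itertools import zip_longest

_MISSING = object()  # sentinel marking "this repository has no result at this rank"


def interleave_by_rank(results_by_repo: dict, per_repo: int = 10) -> list:
    """Merge ranked result lists so that rank-1 items come first, then rank-2, etc.

    `results_by_repo` maps a repository name to its results, already ordered
    by that repository's own ranking.
    """
    trimmed = [results[:per_repo] for results in results_by_repo.values()]
    merged = []
    for tier in zip_longest(*trimmed, fillvalue=_MISSING):
        merged.extend(item for item in tier if item is not _MISSING)
    return merged
```

With this approach no repository’s internal order is changed, and a repository that returns fewer results simply drops out of the later tiers.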

By clicking on a result, the user visits its presentation page in the FSE, as depicted in Figure 5. This page contains all the elements of this specific result that were already on the list page, as well as the provenance and rights elements. Moreover, the various terms that describe the result, as contained in the elements People, Time, Place and General Concepts of the data model, are presented as links. When clicked, these links initiate a new search based on the specific term. Finally, at the bottom of the presentation page, a series of related results from the FSE’s cached results appears, as well as the user’s most recently viewed results. This aims to make discovery and navigation between results easier, without requiring a constant input of search queries from the user.

The visual results list page depicts each result found, as seen in Figure 6. Each result is accompanied by its thumbnail, its title and the URL of the domain that the image appears in. The bookmark and text-to-speech icons appear as well, providing their already mentioned functionality. While normal search results are presented in a tile pattern, which is achieved by cropping their thumbnail images, the visual search results are presented in a mosaic pattern, thus retaining their original aspect ratio. This can help users better judge the content of each result. In any case, both display methods (tiles and mosaic) are available for both list pages and a user can choose their preferred method in their user preferences page, as presented in Figure 7.

The user profile pages are only available if a user is logged in and include the preference page, as seen in Figure 7, a profile edit page that allows the user to change their personal data and provide a nickname, an avatar and a description for their public profile, and a personal page that includes the user’s latest searches, as well as their bookmarked results, visual search results and users.

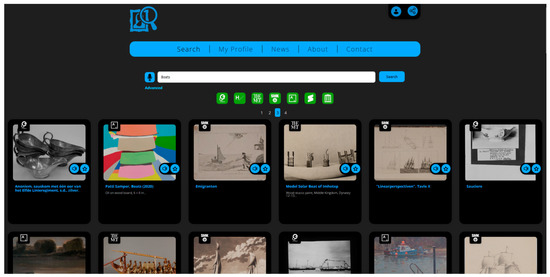

Concerning aesthetics, a limited color palette was used based on shades of black and white alongside a single theme color. The intention behind this decision was to allow the various aesthetics presented by the search results themselves to dominate the final visual product, as opposed to the platform’s aesthetics. Rectangular shapes with rounded edges were used for the majority of interface elements, providing a modern feel. Standard sans serif fonts were used for the entire typography, keeping true to the core tenet of the FSE’s interface, which is functional and visual simplicity. A dark mode for the interface was also implemented, which makes the FSE easier to use in rooms with low illumination. Additionally, in an effort to make better use of high resolutions, support for displaying search results on wider screens was also implemented. Both the dark mode and the wide result list are presented in Figure 8.

Figure 8.

A screenshot of the FSE’s interface using dark mode and the wide result list.

2.3. Beta Testing of the FSE

The closed beta test of the FSE took place in late March and early April of 2022. The participants were selected using purposive sampling and were chosen for being stakeholders, meaning part of the intended target group of the platform. They covered a wide range of ages, with the youngest stakeholder being in their early 20s, while the oldest one was in their late 50s. The group of stakeholders included 7 women and 5 men. Since the research was based in Greece, all of the stakeholders currently reside in Greece. In Table 2, the desirable related attributes of each participant are presented in a vague manner in order to preserve their privacy.

Table 2.

The desirable related attributes of the stakeholders.

The stakeholders were presented with an invitation to participate and a series of guidelines regarding the usage of the FSE. While some suggestions about use cases were made, there were no specific requirements on what the participants were supposed to do during the beta testing period and they were left to engage to the extent they desired.

During their engagement, the FSE collected quantitative metrics regarding their usage. The collected metrics were in the form of a timeline of actions taken by a user over the course of a single session of interaction with the platform. A total of 20 different metrics were monitored, including submitting a query, submitting a voice query, performing a visual search, viewing a result, viewing a visual search result, updating an avatar, editing a user profile or changing user preferences, bookmarking and removing bookmarks of results and visual search results, using text-to-speech on any of the various elements, opening the source view of a result and clicking a concept tag. All metrics included the timestamp of the event taking place and the value of the related event (e.g., the term of a search query). Using the collected data, a user’s interaction with the platform could be recreated to a high degree, thus providing the researchers with a very clear understanding of system-user interactions.
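
The kind of record described above, and the way a session can be replayed from it, can be sketched in Python as follows. The class name, field names and event type labels are hypothetical; the text only states that each metric carries a timestamp and a related value and is tied to a session.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class InteractionEvent:
    """One recorded action; all field names here are illustrative assumptions."""
    session_id: int
    event_type: str      # e.g. "query", "voice_query", "view_result"
    timestamp: datetime
    value: str = ""      # e.g. the term of a search query


def session_timeline(events, session_id):
    """Return the events of one session in chronological order."""
    return sorted(
        (e for e in events if e.session_id == session_id),
        key=lambda e: e.timestamp,
    )
```

Sorting a session’s events by timestamp yields the timeline from which a user’s interaction with the platform can be reconstructed step by step.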

2.4. Evaluation Interviews

Despite the great amount of detail in quantitative metrics collected, the sample of stakeholders is limited, which makes the quantitative part of the beta testing secondary to the main feedback method, which was the collection of qualitative data regarding the FSE’s usage through two mini focus group meetings. The focus group meetings took place in April 2022 using the Zoom online platform. Each meeting hosted a subgroup of the stakeholders and had a duration of over one hour in which the stakeholders introduced themselves and held an open conversation guided by a series of open-ended questions. The participants were encouraged to interact with each other and establish rapport, which led to very interesting results that will be presented in the Results section. Table 3 presents the questions that guided the mini focus group conversation.

Table 3.

The questions which guided the discussion of the mini focus groups.

The main purpose of the mini focus group interviews was to evaluate each feature of the FSE individually, as well as provide an overall assessment of its usefulness to the stakeholders and of the stakeholders’ interest in its use. Following the reasoning established by Sunikka [42] and evaluated by Kontio [21], the focus groups started with a series of warm up questions, including the self-introduction of the stakeholders, continued with the core functionality questions (Q1–Q7) and ended with general feedback questions (Q8–Q9). Similar to Sunikka [42], the core functionality questions included questions regarding technical issues, aesthetics, interface and ideas for further development.

Q1–Q3 each investigate a portion of the FSE’s functionality. Q4–Q5 proceed to the evaluation of the user experience based on the interaction of the stakeholders with the FSE, both in terms of interactivity and in terms of aesthetics. Q6 revolves around the technical issues and limitations of the FSE and is an important question in assessing the feasibility of the FSE, as stated in RQ1. A system with technical issues that hindered user experience would raise questions about its viability. Q7 allows stakeholders to provide feedback concerning missing features and helps assess the importance of various features with regard to the effectiveness of such a platform, as proposed by RQ2. Q8 is a direct evaluation question aiming to provide insight into the usefulness of the FSE, which is the main objective of RQ3. Finally, Q9 prompts the stakeholders to express any additional considerations regarding the FSE and also acts as a general feedback question for the whole process.

3. Results

The results of this study are two-fold. First, we have the quantitative metrics that were collected by the FSE during its use by the beta testers, and which correspond with their actions while interacting with the platform. Secondly, we have the oral feedback of the stakeholders themselves, as they provided it during the two mini focus group meetings that were held after the platform’s beta testing period had concluded.

3.1. Quantitative Metrics concerning the Use of the FSE Collected by the System

During the beta test period, a total of 61 usage sessions were identified by the FSE’s data gathering algorithm. To qualify as a proper usage session, an interaction had to contain at least two HTTPS requests and have a total duration of more than 5 s. Information collected concerning each such session included the client’s IP address, its country of origin, the user agent text, an identification of the type of device used for the session (mobile or desktop) based on the user agent, the total duration of the session and the date and time of the first and last interaction of the session. Additionally, if a user chose to log in during a session, that session was marked as belonging to that specific user. Table 4 presents a sample of this gathered information, with any sensitive information redacted.

Table 4.

A sample of information gathered per session.
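
The two qualification criteria stated above (at least two requests and a duration above 5 s) can be expressed as a short Python check. This is a sketch only: the function name and constants are assumptions, and the way the FSE groups individual requests into candidate sessions is not described in the text, so it is not modeled here.

```python
from datetime import datetime, timedelta

MIN_REQUESTS = 2                      # at least two HTTPS requests
MIN_DURATION = timedelta(seconds=5)   # total duration must exceed 5 s


def is_usage_session(request_times) -> bool:
    """Decide whether a candidate session's request timestamps qualify."""
    if len(request_times) < MIN_REQUESTS:
        return False
    duration = max(request_times) - min(request_times)
    return duration > MIN_DURATION
```

The duration is taken as the span between the first and last request, matching the "first and last interaction" fields recorded for each session.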

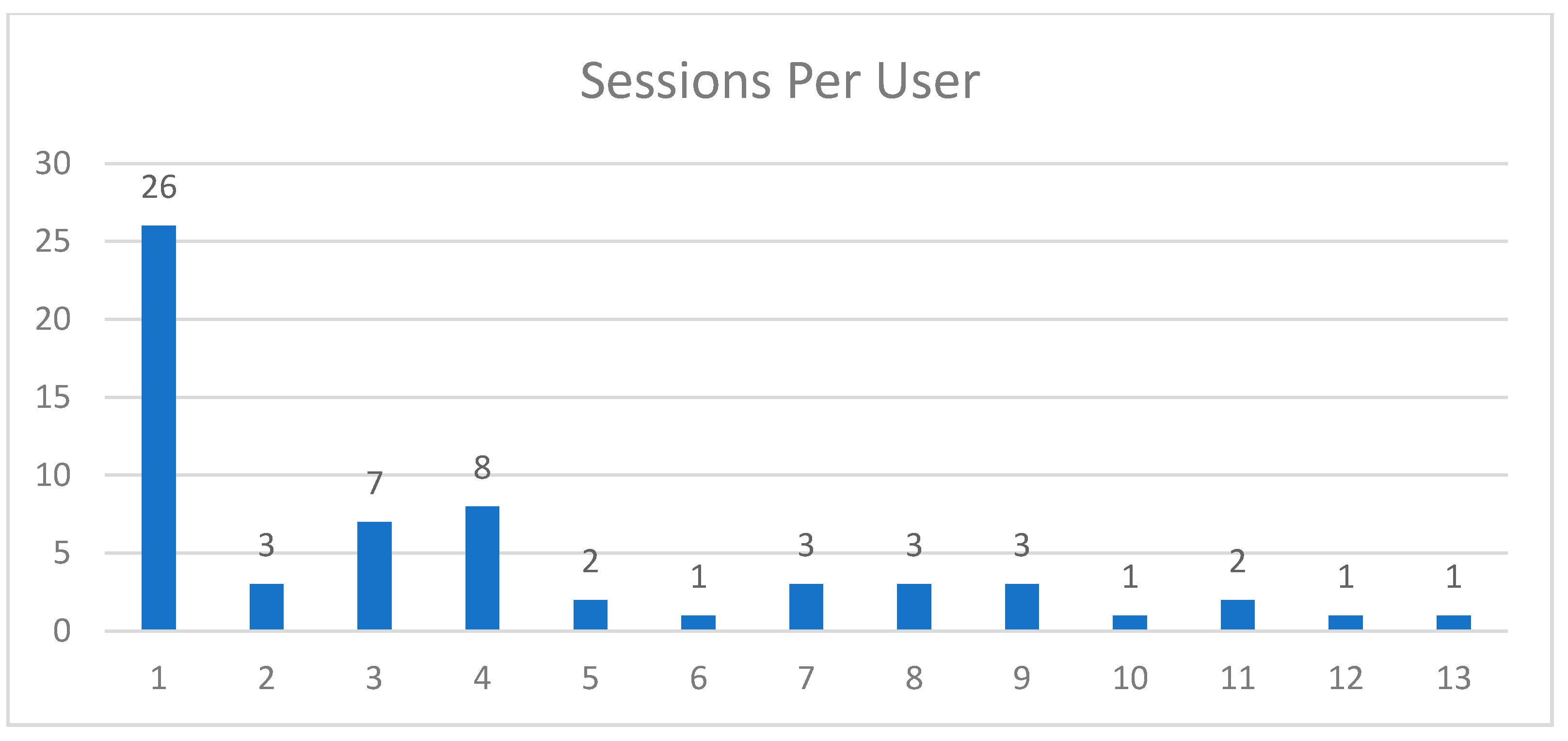

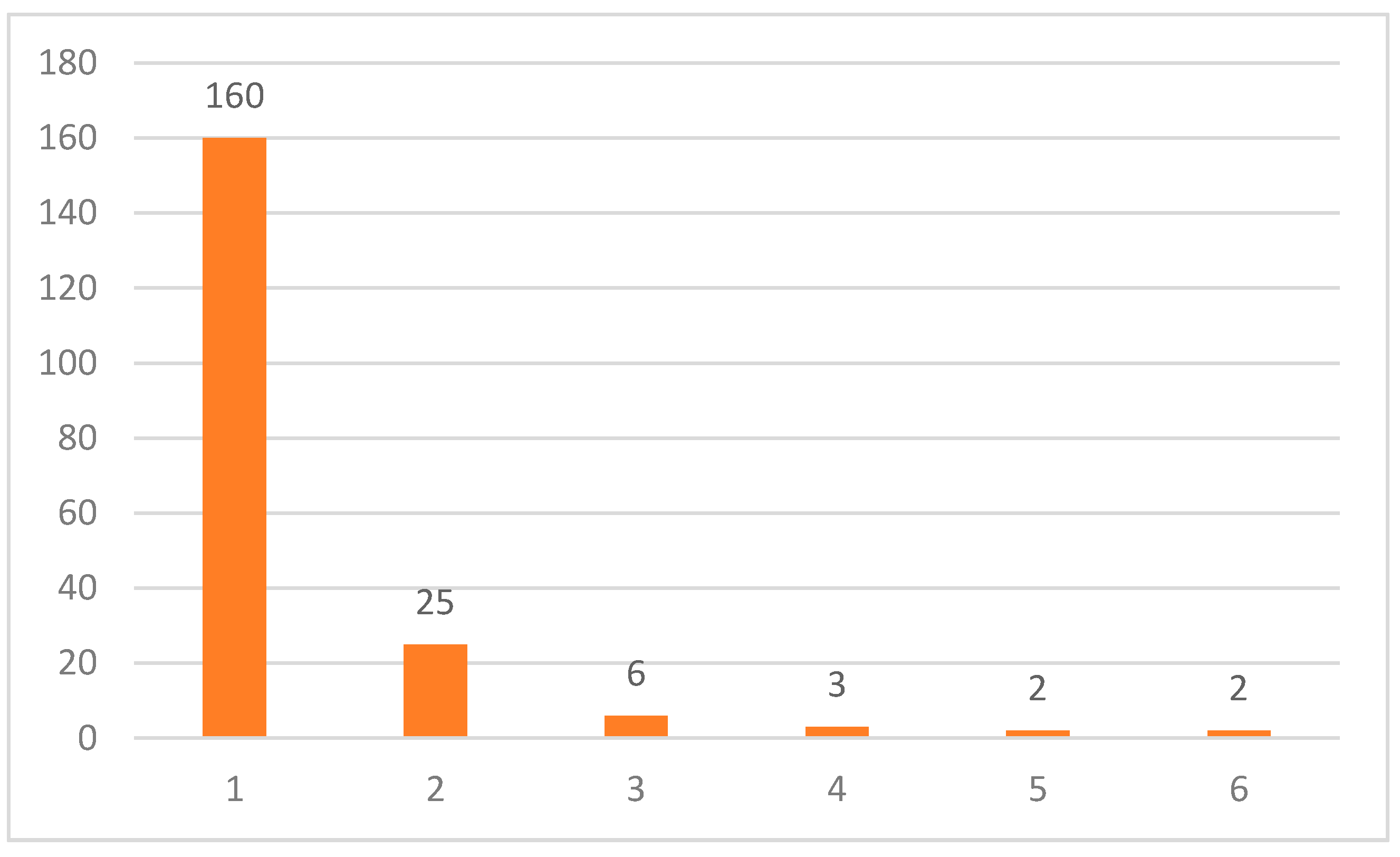

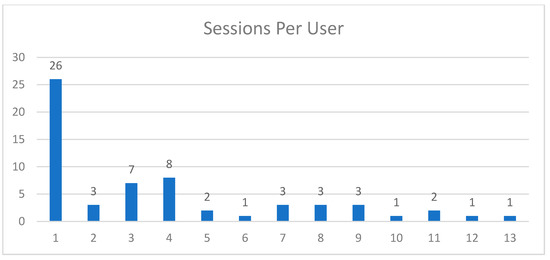

During the recorded usage sessions, twelve unique users were registered and logged in. Some of the sessions did not involve a user login and are attributed to user 1. These represent sessions of searching using the FSE as an anonymous user. In Figure 9, the distribution of sessions per user is presented, including the anonymous user.

Figure 9.

The sessions per user. User 1 is the anonymous user.

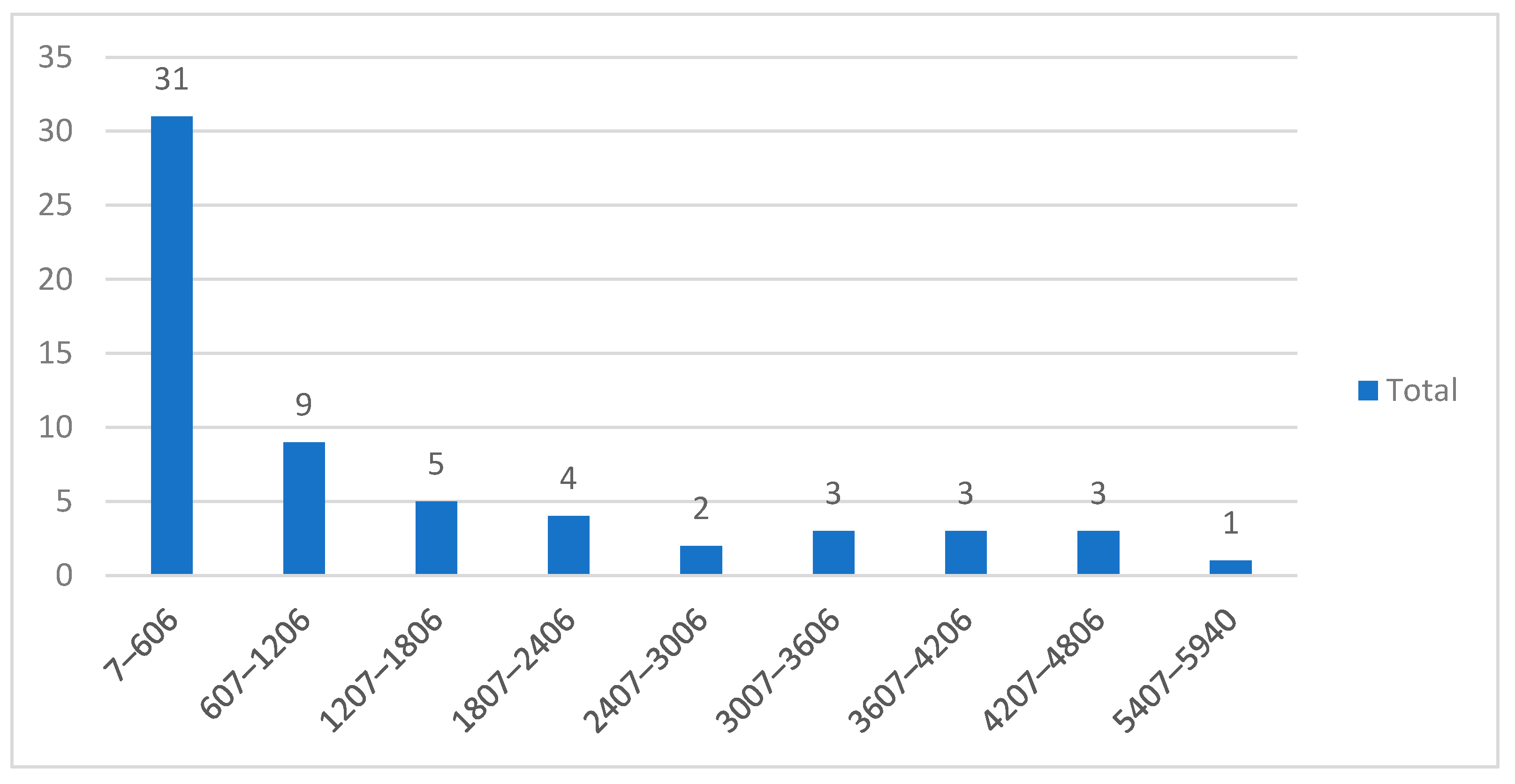

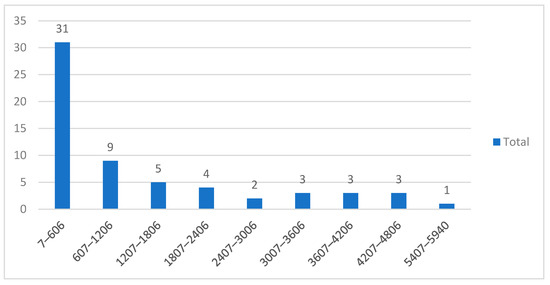

A total of 26 out of the 61 sessions were anonymous, while the other 35 included a user login. On average, each named user accounted for 2.9 sessions. Of the 61 sessions, 53 were conducted using a desktop or laptop computer, while 8 were conducted using a mobile device such as a smartphone or tablet. In Figure 10, the sessions are presented by duration. Ten brackets were created, each representing a 10 min time span. Of the 61 sessions, 31 were less than 10 min long, with the shortest one being 7 s. The longest session lasted 99 min. The average session duration was 19.6 min, while the median was 8.9 min. When studying only the sessions that included a user login, the average duration increased to 25.6 min and the median to 16.9 min.

Figure 10.

The sessions and their duration in seconds.
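
As an illustration of the figures above, the following Python sketch assigns a session duration to one of the ten 10 min brackets and reproduces the reported sessions-per-user average from the stated counts. The function and constant names are assumptions, and durations are assumed to be measured in seconds.

```python
BRACKET_MINUTES = 10   # each bracket spans 10 min
NUM_BRACKETS = 10      # ten brackets in total


def duration_bracket(duration_seconds: float) -> int:
    """Return the 0-based index of the 10 min bracket a session falls into."""
    index = int(duration_seconds // (BRACKET_MINUTES * 60))
    # Guard: anything at or beyond 100 min is placed in the last bracket.
    return min(index, NUM_BRACKETS - 1)


# Counts taken from the text: 61 sessions, 26 anonymous, 12 named users.
logged_in_sessions = 61 - 26
sessions_per_named_user = round(logged_in_sessions / 12, 1)
```

Under these assumptions the shortest session (7 s) falls into the first bracket and the longest (99 min) into the last, while 35 logged-in sessions over 12 named users round to 2.9 sessions per user.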

In addition to session related information, the FSE’s algorithm recorded a series of interactions between the users and the FSE. For each one of them, the session, the type of interaction, the time of interaction and any value relevant to that specific interaction (e.g., query string, link, result id, etc.) were recorded. Table 5 presents a series of interaction events, as measured during these usage sessions and their absolute quantities, as well as their derived frequency per session.

Table 5.

The interaction events recorded.

Out of the 300 search queries submitted, 160 were a unique combination of search string and advanced search type (item, time, place, concept), and 152 were completely unique query strings. The advanced search functionality was used on 12 queries in order to designate an advanced search type. The 152 unique query strings contained an average of 1.76 words per query.
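
The three query statistics reported above can be derived from a query log along the following lines. This Python sketch assumes that each logged query is a pair of query string and advanced search type, that strings are compared exactly as logged, and that words are separated by whitespace; none of these details are specified in the text, and the function name is hypothetical.

```python
def query_statistics(queries):
    """Summarize a log of (query_string, advanced_type) pairs.

    Returns the number of unique (string, type) combinations, the number of
    unique query strings, and the average word count of the unique strings.
    """
    unique_pairs = set(queries)
    unique_strings = {query for query, _ in queries}
    if not unique_strings:
        return 0, 0, 0.0
    total_words = sum(len(query.split()) for query in unique_strings)
    return len(unique_pairs), len(unique_strings), total_words / len(unique_strings)
```

For example, a log containing "monet" twice as a general query, "monet" once as a "people" query and "greek vases" once yields three unique combinations, two unique strings and an average of 1.5 words per unique string.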

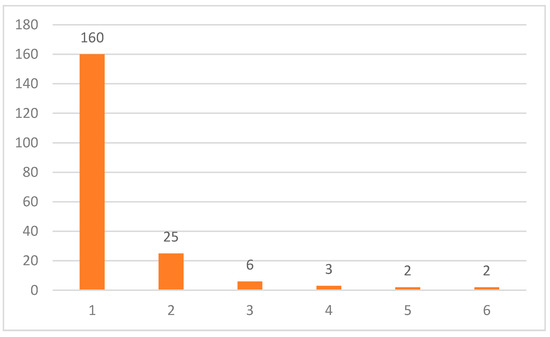

Users occasionally viewed more than one page of search results while conducting a content search. Figure 11 presents the number of times a specific page of search results was viewed during the beta test. The first page of results was viewed in 160 queries, the second page of results in 25 queries and so on.

Figure 11.

The frequency of specific page views.

3.2. Qualitative Feedback concerning the Use of the FSE Provided by the Stakeholders

The major points of the feedback provided by the testers during the mini focus group interviews are presented below, per question, in the order in which the questions appear in Table 3 of the methodology section.

3.2.1. Q1: Make a First Evaluation of the Search Process—Which Are the Strong and Weak Points of the Basic Search Functionality?

Respondents seemed overall satisfied with the basic search functionality results. Positive opinions were expressed on the number of results, on the variety of result types (books, articles, etc.) and on the focused nature of the results. Respondents that searched for broader terms and notions were generally more satisfied than respondents that searched for specific individuals (people) or specific works that were not in any of the source collections. Results that were not on point were not always considered a drawback since they were seen as a tool for broadening the scope of the search.

S5 focused a lot on searching for modern artists and was disappointed by the FSE’s limited data pool. They expressed the least satisfaction in the basic search functionality.

S12 expressed concern about the imbalance between artifact results and article/book results. They felt that images of artifacts were overshadowing the other results.

S7 pointed out that searching for “nude” in this engine would result in content involving nudity in art, while this query in Google would result in inappropriate content (porn, commercialization, abuse). This was evaluated as one of the most desirable elements of the platform: curated content, as opposed to general purpose content.

During Q1, a lot of respondents drifted into other issues regarding their overall user experience and not just the basic search functionality. Answers and quotes regarding these other issues will be presented where appropriate below.

3.2.2. Q2: Did You Use the Voice Search and Visual Search Features? Why? Why Not?

Most respondents used both the voice search and the visual search functionalities. The voice search was criticized by some for not successfully comprehending their speech, but others were defensive of its capabilities. Voice search for names proved unsuccessful more often than not. Visual search was highly acclaimed. Most respondents claimed to be very interested in the functionality and praised the quality of the results it provided.

S8 expressed concerns about visual search expanding the data pool to the wider web. They claimed results from commercial sites were troubling.

S4 declared that visual search was “one of the most interesting features of the platform”.

Accent and word pronunciation might have caused trouble in voice search since none of the respondents were native English speakers.

3.2.3. Q3: Did You Use the User System and Public Profile Feature? Why? Why Not?

All respondents created a user profile, and the general consensus was that the bookmarking, user preferences and search history functionality were very helpful.

Having a public profile was controversial. Some respondents were interested in interaction with other users and viewing bookmarked results of others. On the other hand, some users avoided making a public profile and found that functionality unnecessary.

Moreover, there was no consensus on the scope of the public aspect of the user system, as some respondents thought it was too limited to be of value, while others praised its simplicity and the fact that it does not try to become a full-fledged social media system.

S6 claimed that having a clear choice between a private or public profile was exceptional.

S8 argued that they have no interest in seeing what other users bookmark since they do not really know who the other users are.

Some of the user functionality appeared to not have been made clear to a few of the respondents.

3.2.4. Q4: What Are the Advantages and Shortcomings of the User Interface of the Platform?

Respondents spoke positively of the front page of the FSE that provides random results and popular searches. They also commented positively on the clear and simple nature of the UI. The text-to-speech components also received some praise but there were concerns about the synthetic speech’s ability to properly pronounce some content. More than one respondent commented that there was no clear information on the original content providers other than their icons and suggested a clearer reference to the original content providers. Moreover, respondents agreed that having a summary indicator of the search results (number of results, distribution per type: item, book, article) would be desirable.

S4 noted that the UI “focuses on the items themselves”.

S1 expressed concern that the related results that appear in the presentation page are based on the item being viewed and do not take into account the initial search query that led the user there.

There were no complaints of the FSE being hard to figure out or non-intuitive. This is a very strong indicator that the respondents did not encounter interface-induced frustration.

3.2.5. Q5: What Is Your Personal Opinion on the Aesthetic Look of the Platform?

The respondents reacted positively to the aesthetics of the FSE. It was described as “easy on the eye” and “elegant without being overly simplistic”. Some minor refinements were suggested concerning the appearance of specific UI elements such as the advanced search toggle and the top menu. The existence of a light theme and a dark theme was viewed positively by several respondents.

S3 suggested the addition of more themes beyond the light and dark ones since “the platform is art related”.

S1 was concerned that the option to view items in tiles or in mosaic form was unnecessary.

Many of the respondents had expertise in visual arts and digital design. Neither the colors nor the fonts were negatively criticized.

3.2.6. Q6: Did You Encounter Technical Difficulties While Using the Platform? What Kind and How Did That Affect Your Experience?

The respondents noted some technical difficulties concerning the profile editing process, which had been resolved by the time of the interviews. Some concerns were also raised regarding the speed of the basic search process, which was considered somewhat slow. There were some concerns that visual search based on low quality images returned visual results that were intentionally blurry or distorted.

S5 was concerned about the basic search functions not correcting misspellings like popular general purpose search engines do.

S1 noticed some dysfunction in the social media sharing functionality that was the result of the use of an ad blocking browser plugin.

The respondents’ main goal was to test the FSE’s main functions and evaluate its use. They were not tasked with hunting down technical issues or trying to create intentional problems. As such, it was to be expected that any technical issues mentioned would be limited.

3.2.7. Q7: What Additional Functionality or Interface Features Would You Like to See Added to the Platform?

Respondents largely agreed that the advanced search functionality could use some improvements. Most expected the user to be able to provide different search terms for each advanced search field such as person, time, etc. and combine them to create one unified search query. Additional concerns were voiced regarding the related terms searches that were initiated from the result presentation page. There was no consensus on whether they should be used as a new query or as a specification of the query that produced the result, but some respondents supported that specializing the existing query would help with discovering more obscure results.

Finally, a major suggestion that had multiple supporters was the inclusion of more languages. The discussion covered a lot of alternative solutions to the language issue, such as automatic translation or including different repositories from different languages. Special mention was made of how the platform is suitable for teaching and of how students or trainees would benefit from wider language support.

S2 suggested a system of keeping bookmarks in different bookmark folders for easier organization.

S4 suggested the ability to filter results based on type (item, book, article); not from the user preferences, but from the results list.

S1 suggested a method of notifying the users about queries, users and results in which they might be interested through emails as a way to create an interactive relationship with the users that will act as an incentive for them to return to the platform.

A lot of respondent suggestions throughout the evaluation of the FSE, but especially in this question, were made without technical knowledge of the difficulties a new feature might impose. Respondents were explicitly encouraged to voice any and all concerns and to make any suggestion regardless of feasibility or cost effectiveness, since these are matters that the research team will evaluate at a later stage.

3.2.8. Q8: Would You Use This Platform If It Was Made Openly Available? Why? Why Not?

The consensus was that the respondents would use the platform if openly available.

The FSE was evaluated as innovative in this specific field and already operational to a large extent. A lot of the respondents were explicitly supportive of the FSE using words such as “exciting”, “presenting enormous interest”, “unprecedented” and more. Special mention was made by multiple respondents about the value the FSE would have for students of all levels in various fields such as History, Art and Cultural Heritage. They also stated that they would suggest the use of the platform to students or ask students to use it as a tool for school or university projects.

S7 mentioned that the platform is especially useful because the field of Arts and Culture has specific needs that general purpose tools have a hard time meeting.

S2 specifically claimed that the platform could see a lot of use in secondary education, in a school environment.

3.2.9. Q9: What Other Comment Would You Like to Add That Wasn’t Covered by the Previous Points?

Since this question focuses on individual commentary, there were no common or unified responses, but rather a series of relevant matters that were discussed between the respondents.

S3 presented a case for expanding the platform to offer news coverage concerning relevant fields such as open access events, Arts and Culture news, articles presenting similar tools or tools targeting a similar audience and so on. They claimed this would serve as an incentive for people to revisit the platform and keep the interest and engagement levels high.

S5 also supported this feature, requesting a focus specifically on activities of museums, artist groups or art collectives.

S8 also showed interest in adding news related content to the platform to work alongside the search functionality and to create an art related portal.

S1 and S4 both focused on the inclusion of other institutions as content providers and the expansion of the data provided by the FSE.

4. Discussion

4.1. RQ1: Is It Feasible to Create a Search Engine for Art and Cultural Heritage That Uses Content Openly Available by Different Entities and What Challenges Need to Be Overcome?

As presented above, the development of the software prototype was successful. Through the use of the aforementioned APIs, the FSE was able to present a significant amount of content related to Arts and Cultural Heritage. The success of the task of producing a functional FSE for Arts and Cultural Heritage was not self-evident, considering that, as noted in Kapsalis’s work [43], repositories are often skeptical about offering their content publicly in order to avoid additional burden on their digital infrastructure, increased workload for personnel, the potential loss of revenue and the chance of a loss of intellectual control. Despite that, a sufficient number of providers were identified that offered a significant amount of content through their APIs. An important challenge was posed by the lack of standardization in the content repository APIs used, which is a problem similar to what Premchand et al. [44] identified in the field of Financial Institutions. The various differences and approaches of the different art APIs were presented in detail by the research team in 2022 [45].

The integration of various technologies, such as voice and visual search, was accomplished through the use of Microsoft’s Azure cloud services. The incorporation of cloud services in the FSE delivered the benefit of faster time to market, which is one of the important benefits of the cloud-as-a-service platform, according to Krishna et al. [46].

4.2. RQ2: What Features and Technologies Contribute Most to the Effectiveness of Such a Software System, Both from a Functionality and from a User Experience Perspective?

Studying the collected user-interaction metrics concerning the FSE’s usage can provide a good idea of which features were of most interest to the users. Table 5 presents the various other metrics that the FSE’s system recorded. Overall, metrics concerning the basic search functionality, such as submitting queries and viewing result presentation pages, show the most use. The main feature of a search engine is searching for content, and this notion is reinforced by the findings in this case. From the secondary functionality, the viewing of each user’s own profile seems to be popular. The profile page is not only where users see their bookmarked content, but also where they can review their public profile (avatar, nickname, etc.) and this seems to be the source of its popularity. As noted by Sharma, managing, bookmarking and making information public can be considered a more intense form of user engagement [40]. Additionally, changing user preferences is also a popular metric. Both of the above observations indicate that a user system—even a very simple one, such as the one implemented in this platform—is great for increasing user engagement. Users take the time to craft their public profiles and also to personalize their experience, or to at least experiment with the personalization options offered. Despite the benefits of user registration (for both the user and the website) and its effect on engagement, Li et al. point out that there are concerns, often stemming from worries about information privacy and lack of trust [47]. Li et al. detail how these concerns can be mitigated through brand awareness and word of mouth regarding the trustworthiness of the website [47]. Gafni et al. discuss how the Social Login mechanism, despite still presenting concerns about trust and privacy, might mitigate registration concerns by helping with “password fatigue”, but they also mention concerns over the loss of anonymity [48].

Visual search follows in usage popularity according to the metrics. The visual search functionality is often difficult to use in tools such as Bing Visual Search, Google reverse image search or TinEye because the user is expected to provide a URL or an actual file containing the image. As Gaona–Garcia et al. concluded, “simplicity should be one of the fundamental principles of building search interfaces” [41]. In this case, directly connecting this functionality with the images found by the basic search in the various content repositories employed by the FSE provides simplicity by eliminating that intermediate step and makes stakeholders more interested in its use.

Device usage was heavily skewed towards desktops. Out of a total of 8 mobile sessions, two were anonymous and the others belonged to two specific users. This indicates a clear preference for desktops, although the users who preferred mobile devices used them exclusively across all of their sessions. While in general purpose engines such as Google, mobile search seems to be overtaking desktop search in most countries as observed by Beus [14], this was not the case in this study’s metrics. The preferred use of desktops and laptops for art related searches, as opposed to mobile devices, was also a finding in previous research conducted by this team [24]. The fact that art-related searches are more often than not informational, as opposed to navigational or transactional, plays a big part in this differentiation. Church and Oliver [49] point out that “the popularity of stationary mobile web access is increasing” from both home and work, which would create the expectation of higher mobile usage in our findings. On the other hand, they discuss how mobile search in particular might be dictated by the user’s social interactions (e.g., the motive for a search might manifest during conversation) [49]. This suggests that if the FSE’s user pool were larger, the effect of “social mobile search” might have led to an increase in mobile use.

Figure 11, which depicts the frequency of specific page views during the FSE’s beta testing, shows that most participants were satisfied with the first page of results and did not delve any further. It should be noted that the FSE may present up to 70 results on its first page (10 from each repository), which further decreases the chances of a user going beyond the first page. In general purpose search engines, that chance is already less than 20%, as both Beus and Silverstein et al. observed [13,14]. In this beta test, results on the second (or a later) page were viewed in only 16% of the total number of unique search queries.
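The cap of 10 results per repository on the first page can be illustrated with a minimal sketch. The text does not describe how the FSE orders results from different repositories, so the round-robin interleaving below is an assumption, as are the function name `build_first_page` and the repository labels.

```python
from itertools import zip_longest

# Stated in the text: up to 10 results from each repository on the first page.
PER_REPOSITORY_LIMIT = 10


def build_first_page(results_by_repo, per_repo_limit=PER_REPOSITORY_LIMIT):
    """Cap each repository's results, then interleave them round-robin.

    The interleaving order is an illustrative assumption, not the FSE's
    documented behaviour.
    """
    capped = [results[:per_repo_limit] for results in results_by_repo.values()]
    page = []
    # zip_longest pads shorter lists with None, which is filtered out here.
    for tier in zip_longest(*capped):
        page.extend(item for item in tier if item is not None)
    return page


# Hypothetical example: 7 repositories returning 15 hits each.
repos = {f"repo{i}": [f"r{i}-{j}" for j in range(15)] for i in range(7)}
first_page = build_first_page(repos)
print(len(first_page))
```

With seven repositories that each return at least 10 hits, the page reaches the maximum of 70 results mentioned above; repositories that return fewer hits simply contribute fewer entries.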

The average query length of 1.76 that was recorded is smaller than what is found in most general purpose search engines, but a smaller average query length is something that is often reported in searches that relate closely to Arts and Culture, as observed in searches in Europeana made by Ceccarelli et al. [50].

Besides observing the features that were most used in the prototype FSE, a second way to gain insight into which features might contribute to the effectiveness of such a system was through the feature related feedback of the stakeholders. Most of the feedback regarding improvements and feature requests revolved around the FSE’s success in helping the user make their search more targeted to specifics. The fact that the engine uses a limited pool of resources and content works to its advantage when eliminating irrelevant, commercial or even controversial content that can be found in the uncurated general web. As Dimoulas et al. [51] state, credibility and quality of the results may be just as important as popularity, and in this context, what Dimoulas et al. attempt to achieve through alternative Search Engine Optimization models, this FSE achieves by limiting its scope to trusted sources. Unfortunately, this is not without its drawbacks. The limited pool of resources might not be able to satisfy the end users’ requests for specific information. Widening the search parameters when results are limited is something that is employed by some of the APIs integrated in the FSE. This tactic, on the one hand, ensures that at least some results will be presented, but on the other hand, greatly reduces the engine’s accuracy and creates a lot of noise. De Groote and Appelt, in their work concerning federated search in the field of Health Sciences, conclude that the increased number of results an FSE produces is not useful if it contains an unwieldy number of irrelevant citations [52]. It is up to the FSE to make sure search fuzziness behaviour is consistent across the board, and though this iteration of the FSE attempts to accomplish this, there is always room for improvement. Allowing the user to create more specificity in their searches through mechanisms such as the advanced search might be a solution to the issue of high inaccuracy.
Additionally, using the links that are generated by a result’s relevant terms regarding People, Time, Place and General Concepts as they appear in Figure 5, to make a search query more specific instead of more general, might also help. That can be accomplished by including the search query that brought the user to said result into the new search that begins when a term is clicked.
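The idea of carrying the original query into the search triggered by a clicked term can be sketched as follows. This is only an illustration under stated assumptions: the function name `refine_query` is hypothetical, and wrapping multi-word terms in double quotes assumes that the underlying repositories treat quoted strings as phrases, which the text does not confirm.

```python
def refine_query(original_query: str, clicked_term: str) -> str:
    """Combine the query that led to a result with a clicked related term.

    Appending the term narrows the search instead of replacing it.
    Quoting multi-word terms is an assumed phrase-search convention.
    """
    original = " ".join(original_query.split())  # normalize whitespace
    term = " ".join(clicked_term.split())
    if not term or term.casefold() in original.casefold():
        return original  # nothing new to add
    if not original:
        return term
    phrase = f'"{term}"' if " " in term else term
    return f"{original} {phrase}"


print(refine_query("sunflowers", "Vincent van Gogh"))
```

A term that already appears in the original query leaves the query unchanged, so repeated clicks do not keep lengthening it.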

Another major point of respondent feedback had to do with the inclusion of more languages in the FSE. Accessibility was always one of the main design goals when implementing the FSE, as is apparent from the use of TTS, light/dark themes and the high-contrast and simple presentation of the UI. However, the language barrier is also a big factor in accessibility and one that is often overlooked. As Rehm et al. mention, “language barriers impacting business, cross-lingual and cross-cultural communication are still omnipresent” [53]. English is the “Lingua Franca” of the web, at least in the west, and because of this, most content providers put effort into offering their content at least in English. Despite that, there is still plenty of content in other languages, and on top of that, there are people who do not speak English to the level required to understand the information conveyed to them by the FSE. Including more languages in the platform would increase the platform’s reach and, through it, the actual reach of the content itself. Systems of automatic translation might be a useful tool to move toward that goal, although the cost effectiveness and feasibility of such an endeavor should be carefully considered. In the future, a language-centric AI approach, as outlined by Rehm et al. [53], might make such an undertaking less time consuming and more cost effective.

4.3. RQ3: Is the Functionality Provided by Such a Platform Considered Useful by the Stakeholders and Can This Platform Cover Existing Needs in the Field of Art and Culture?

The foremost metric for the usefulness of the FSE is user engagement. With a quick glance at the quantitative metrics, it becomes apparent that the 12 stakeholders who participated in the beta test of the FSE showed adequate interest in the platform. All of the participants created user accounts and 8 out of 12 visited the platform more than once, with the most interested user going as high as 8 unique use sessions, as depicted in Figure 9. In their analysis of the search logs of archival systems, Zhang and Kamps found that one-time users account for almost 80% of the total user-base, while on the other end of the spectrum, a small number of users (1.26%) constituted repeat visitors that were responsible for almost 20% of the total search sessions [54]. In our limited data, the pattern of repeat visitors seems to also emerge, while on the other hand there were fewer one-time users (approximately 33%). The 2.9 sessions per logged in user is high considering the limited duration of the beta test. Moreover, there were a lot of anonymous sessions that also indicate further engagement beyond that measured by sessions per user.

The duration of the sessions, as depicted in Figure 10, is also a good indicator of engagement. The average of 25.6 min on sessions that involved a logged in user is encouraging. Barifah et al. measured an average session duration of about 20 min in their evaluation of user experience in a digital library [55]. In the guidelines the stakeholders received prior to the testing, a modest suggestion of 15 min of total engagement was made. The total hours of engagement across all users was very close to 20 h, which translates to an average of almost 100 min of engagement per user including the anonymous visits, or almost 75 min per user when including only sessions that involved a user login. This is much higher than the guideline suggestion. The least involved stakeholder only used the platform for 170 s, while the most involved used it for over 180 min. While session duration distribution usually follows a declining trend, as depicted in Figure 10, a small peak of long duration sessions was noticed by Barifah et al. [55], as well as other researchers [56,57]. Such a peak did not occur in our findings; Barifah et al. suggest that, where it does appear, it might be the result of users taking breaks while searching.
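The per-user averages quoted above follow from figures already reported in this section (12 participants, roughly 20 h of total engagement, 2.9 sessions per logged in user and 25.6 min per logged in session). The short check below reproduces the arithmetic; the variable names are illustrative, and the 20 h total is the rounded value from the text, so the results are approximate.

```python
# Figures taken from the text; the total is reported as "very close to 20 h".
participants = 12
total_engagement_hours = 20
sessions_per_logged_in_user = 2.9
avg_logged_in_session_min = 25.6

# All sessions, including anonymous visits, averaged over the participants.
minutes_per_user_all = total_engagement_hours * 60 / participants

# Only sessions that involved a user login.
minutes_per_user_logged_in = sessions_per_logged_in_user * avg_logged_in_session_min

print(f"All sessions: {minutes_per_user_all:.0f} min per user")
print(f"Logged in sessions only: {minutes_per_user_logged_in:.1f} min per user")
```

Both values are several times the 15 min suggested in the guidelines, which is the comparison the paragraph draws.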

The moderate to high user engagement that was observed from the quantitative metrics was explicitly stated in the focus group interviews that were the source of the qualitative insight. As presented in detail in Section 3.2, most respondents praised the FSE in terms of functionality, interface design and aesthetics. Overall, impressions were positive and future use of the platform was supported by the vast majority of beta test participants. Additionally, special mention was made by multiple participants as to the value that the FSE could provide in education for learners at various academic stages.

5. Conclusions

As the vastness of the World Wide Web continues to expand, searching for useful information becomes more and more difficult. Content relevant to specialized fields, such as Arts and Cultural Heritage, is especially often marginalized by commercial content, which makes extensive use of Search Engine Optimization techniques and other means, both legitimate and occasionally illicit, to increase its visibility. In this study, in an effort to facilitate the discovery of such content, an innovative Federated Search Engine was developed and evaluated by a group of stakeholders selected through purposive sampling.

With regard to feasibility, the endeavor to develop a fully functioning prototype was successful. The process of the study highlighted the challenges that its development presented. Collecting information from multiple sources, unifying it and presenting it to the end-user poses not only technical difficulties but also difficulties in understanding the users’ needs and wants. From a technical perspective, the FSE implemented a simple data model that was used to map the basic information made available by the various APIs, while the rest of that information remained in the original repositories and was accessed through a link to the original source. Additionally, it made sure the content was enhanced with features such as added accessibility through a clean UI, text-to-speech, voice search and a user system to allow users to bookmark and organize their results. A visual search function directly connected to the results without the need for intermediary steps was implemented to help users widen their search reach beyond the initial repositories.
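The mapping of repository-specific API responses onto a simple shared data model can be sketched as below. This is a hypothetical illustration, not the FSE's actual schema: the class `UnifiedResult`, its fields, the repository labels and the raw field names in `FIELD_MAPS` are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UnifiedResult:
    """Basic information kept by the engine; everything else stays at the source."""
    repository: str
    title: str
    image_url: Optional[str]
    source_url: str  # link back to the full record in the original repository


# Hypothetical per-repository field names; real APIs will differ.
FIELD_MAPS = {
    "repo_a": {"title": "title", "image_url": "thumbnail", "source_url": "link"},
    "repo_b": {"title": "name", "image_url": "img", "source_url": "url"},
}


def to_unified(repository: str, raw: dict) -> UnifiedResult:
    """Map one raw API record onto the shared model."""
    mapping = FIELD_MAPS[repository]
    return UnifiedResult(
        repository=repository,
        title=raw.get(mapping["title"], ""),
        image_url=raw.get(mapping["image_url"]),  # may be missing
        source_url=raw[mapping["source_url"]],    # required
    )


record = to_unified("repo_b", {"name": "Sunflowers", "url": "https://example.org/1"})
print(record)
```

Keeping only a few common fields and a link to the source is what lets records from differently structured APIs be presented side by side.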

Concerning the various features that could contribute to the effectiveness of such a tool, the basic search functionality and the user system drew most of the stakeholders’ interest, while additional technologies such as voice and visual search also helped with engagement. From the qualitative feedback that the mini focus group interviews provided, the issues of advanced search accuracy and language accessibility were identified. In the future, the development of the FSE should move towards accomplishing the goal of improved results on specific informational queries by widening the content provider base, while at the same time improving the tools of targeted search such as the advanced search options and the related links. Moreover, the goal of increasing the platform’s outreach through the inclusion of multiple language support must be evaluated for feasibility.

The overall evaluation of the FSE was focused on user engagement. The presented platform, with all its basic and extended features, was positively evaluated by the vast majority of the stakeholders. Engagement metrics based on user interaction and qualitative responses from the mini focus group interviews both emphatically validated the usefulness of the FSE, as well as the value of its present implementation both in terms of functionality and in terms of user experience. The vast majority of participants confirmed that they would be interested in using this tool in the future.

Further development of the FSE beyond the prototype stage, as well as a better understanding of user behaviour and the user needs when they are searching for content relating to Arts and Cultural Heritage on the web, are imperative. Collecting quantitative data from a wider sample of users, as well as devising and implementing more metrics, must be one of the goals of this research moving forward. Comprehending and analyzing user needs, in tandem with aggregating, organizing, enhancing and assisting in the diffusion of data that can cover these needs, can create a more timely, more accurate and more fruitful search experience. This, in turn, may result in an upgrade of the secondary product of the process of searching the web for Arts and Culture, which is essentially the knowledge and understanding that this search produces, as well as whatever work is produced with their aid.

Author Contributions

Conceptualization, M.P., I.V. and A.G.; methodology, M.P. and A.G.; software, M.P.; validation, M.P. and I.V.; formal analysis, M.P. and I.V.; investigation, M.P. and A.G.; resources, A.G.; data curation, M.P.; writing—original draft preparation, M.P.; writing—review and editing, M.P.; I.V. and A.G.; visualization, M.P.; supervision, I.V. and A.G.; project administration, M.P. and A.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in Zenodo at [doi.org/10.5281/zenodo.6510049], reference number [10.5281/zenodo.6510049], both accessed on 30 April 2022.