An Accurate Refinement Pathway for Visual Tracking

Abstract

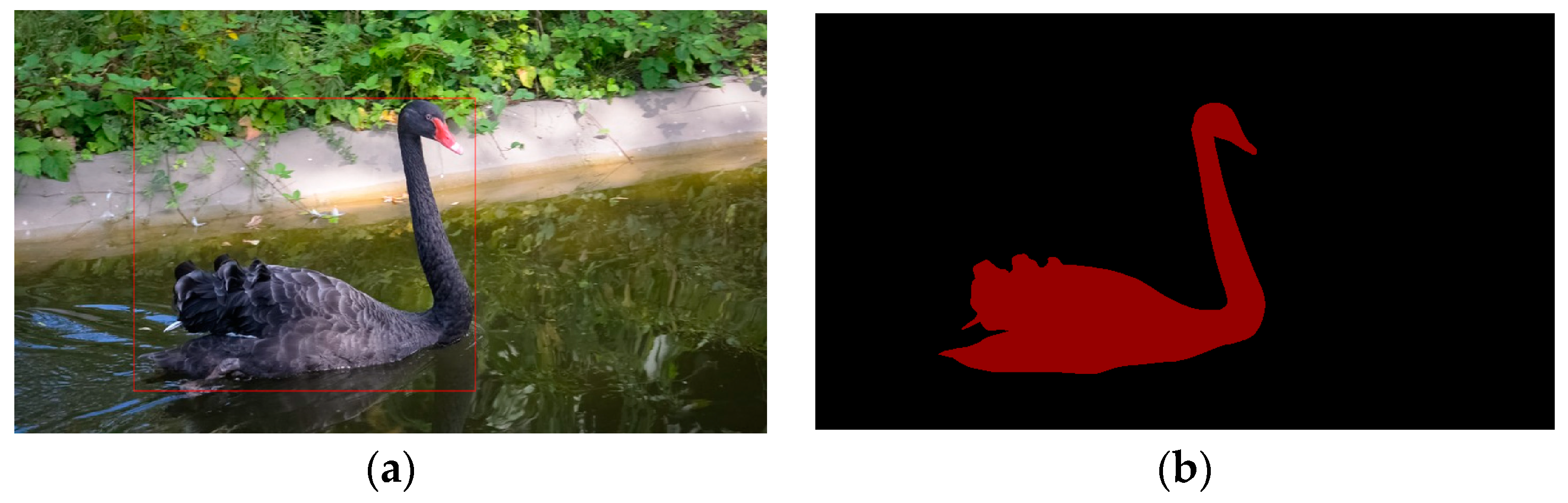

1. Introduction

2. Related Work

2.1. Segmentation-Based Object Tracker

2.2. Skip Connection

3. The Proposed Method

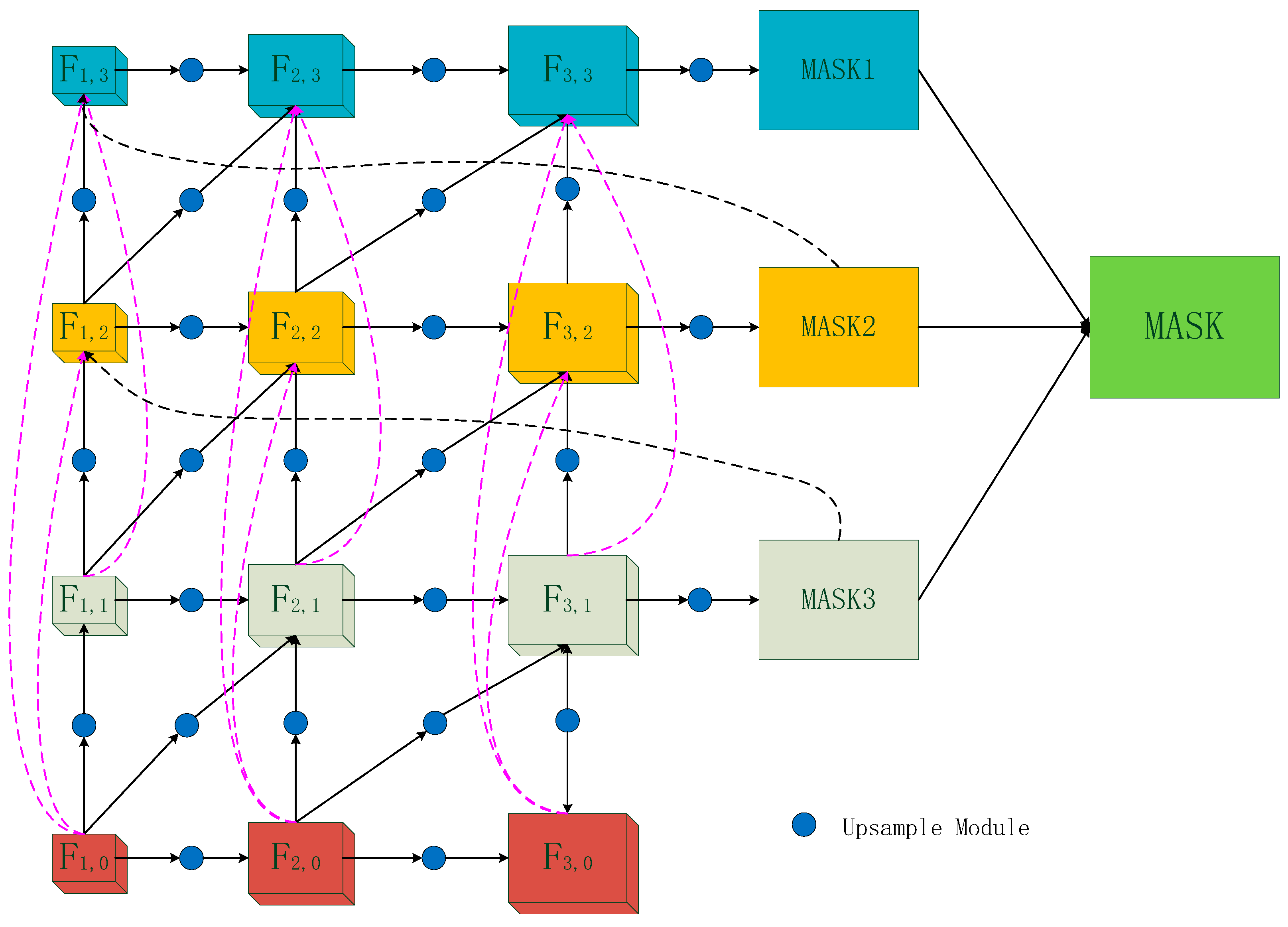

3.1. Cross-Stage Feature Fusion

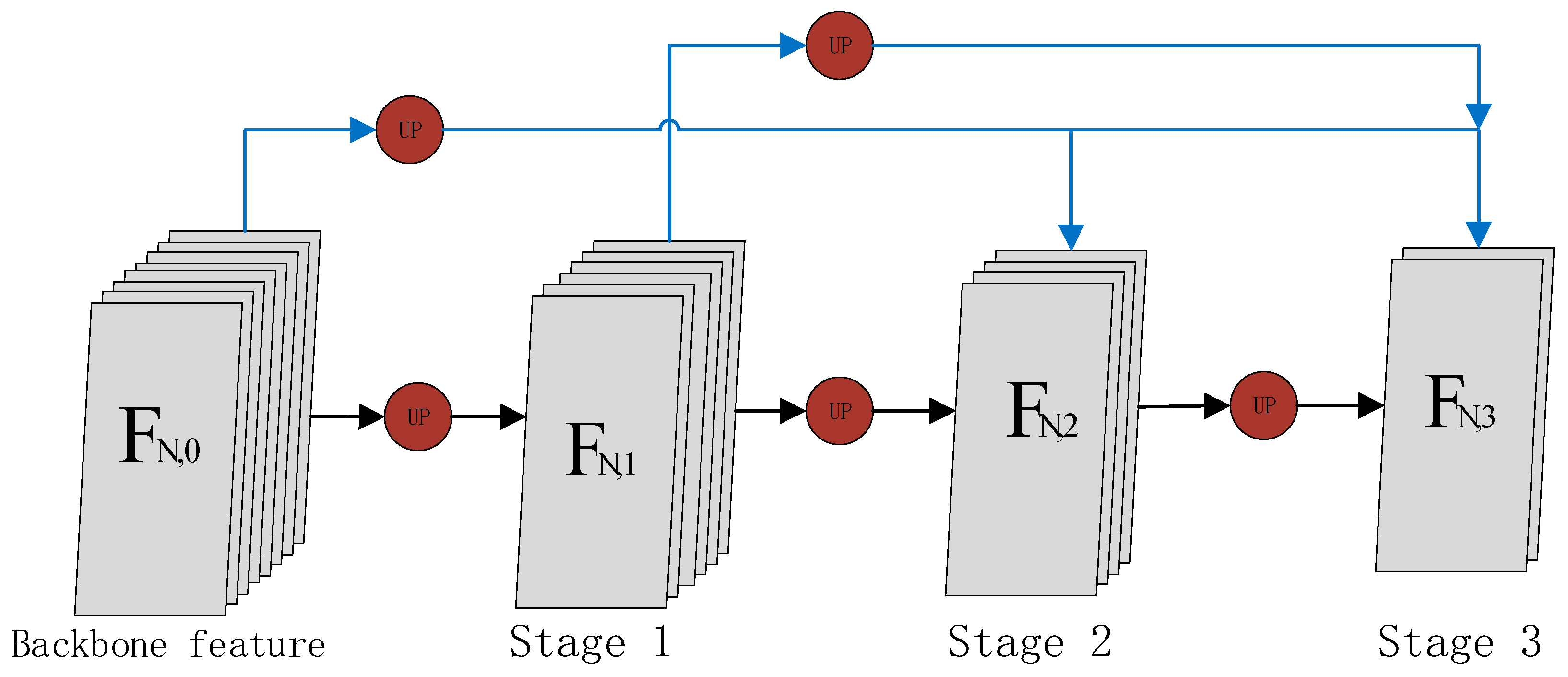

3.2. Cross-Resolution Feature Fusion

3.3. Cross-Stage and Cross-Resolution Feature Fusion

3.4. The Segmentation-Based Tracker

4. Experiments

4.1. Experimental Environment and Training Details

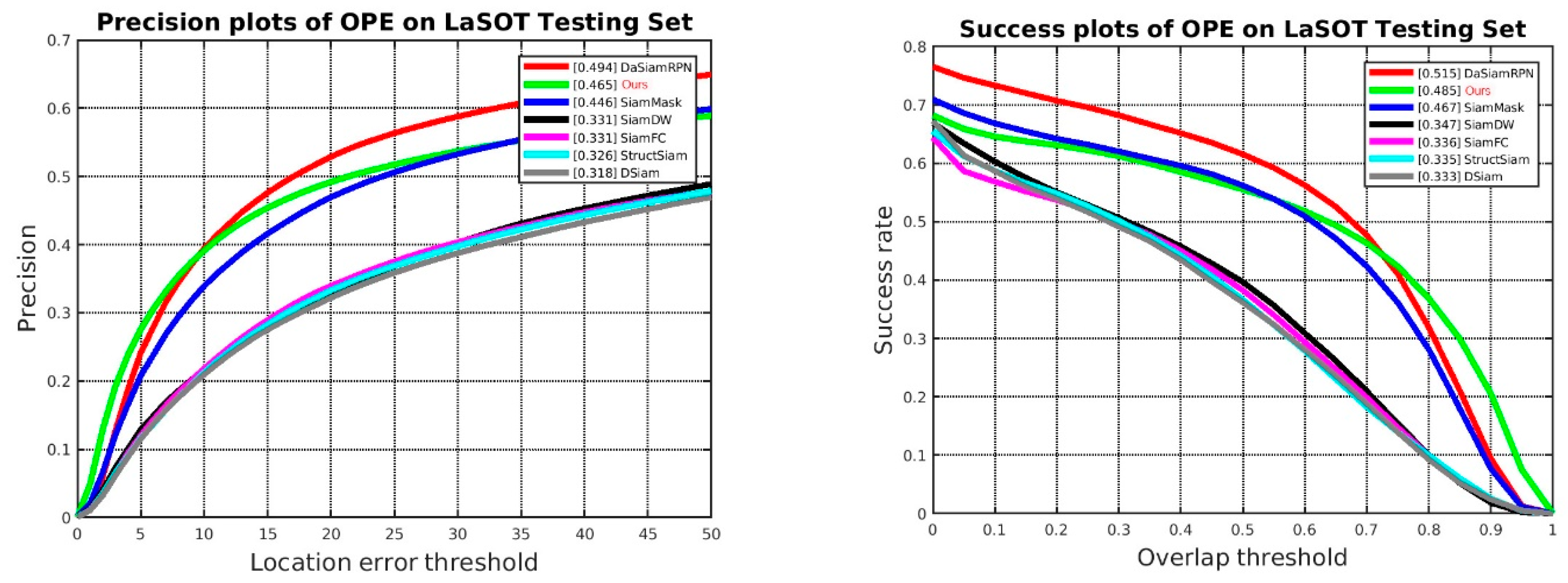

4.2. Comparison on VOT

4.3. Comparison on VOS

5. Ablation Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Metric | SiamMask | SiamRPN | ATOM | SiamFC | DaSiamRPN | SiamDW | D3S | Ours |
|---|---|---|---|---|---|---|---|---|
| Acc ↑ | 0.640 | 0.560 | 0.610 | 0.530 | 0.610 | 0.580 | 0.660 | 0.670 |
| Rob ↓ | 0.214 | 0.302 | 0.180 | 0.460 | 0.220 | 0.240 | 0.131 | 0.126 |
| EAO ↑ | 0.433 | 0.344 | 0.430 | 0.235 | 0.411 | 0.370 | 0.493 | 0.514 |

| Metric | SiamMask | ATOM | D3S | SiamRPN | DiMP | Ocean | SiamRPN++ | Ours |
|---|---|---|---|---|---|---|---|---|
| Acc ↑ | 0.602 | 0.590 | 0.640 | 0.490 | 0.597 | 0.598 | 0.600 | 0.652 |
| Rob ↓ | 0.288 | 0.204 | 0.150 | 0.460 | 0.153 | 0.169 | 0.234 | 0.164 |
| EAO ↑ | 0.347 | 0.401 | 0.489 | 0.244 | 0.440 | 0.467 | 0.414 | 0.475 |

| Metric | SiamMask | ATOM | D3S | SiamFC | DiMP | Ocean | RPT | Ours |
|---|---|---|---|---|---|---|---|---|
| Acc ↑ | 0.624 | 0.462 | 0.699 | 0.418 | 0.457 | 0.693 | 0.700 | 0.707 |
| Rob ↑ | 0.648 | 0.734 | 0.769 | 0.502 | 0.740 | 0.754 | 0.869 | 0.760 |
| EAO ↑ | 0.321 | 0.271 | 0.439 | 0.179 | 0.274 | 0.430 | 0.530 | 0.445 |

| Metric | SiamFC | ATOM | D3S | DiMP | SiamRPN | Ocean | SiamRPN++ | Ours |
|---|---|---|---|---|---|---|---|---|
| AO ↑ | 0.348 | 0.556 | 0.597 | 0.611 | 0.463 | 0.611 | 0.518 | 0.600 |
| SR0.5 ↑ | 0.353 | 0.634 | 0.676 | 0.717 | 0.549 | 0.721 | 0.618 | 0.681 |
| SR0.75 ↑ | 0.098 | 0.402 | 0.462 | 0.492 | 0.253 | 0.473 | - | 0.471 |

| Method | J&F Mean | J Mean | J Recall | J Decay | F Mean | F Recall | F Decay | FPS |
|---|---|---|---|---|---|---|---|---|
| OSVOS | 80.2 | 79.8 | 93.6 | 14.9 | 80.6 | 92.6 | 15.0 | 0.2 |
| FAVOS | 80.8 | 82.4 | - | - | 79.5 | - | - | 0.8 |
| RGMP | 81.8 | 81.5 | 91.7 | 10.9 | 82.0 | 90.8 | 10.1 | 8 |
| SAT | 83.1 | 82.6 | - | - | 83.9 | - | - | 39 |
| SiamMask | 69.8 | 71.7 | 86.8 | 3.0 | 67.8 | 79.8 | 2.1 | 35 |
| D3S | 74 | 75.4 | - | - | 72.6 | - | - | 25 |
| Ours | 74.4 | 75.9 | 90.5 | 4.3 | 72.8 | 84.5 | 5.8 | 20 |

| Method | EAO ↑ | Accuracy ↑ | Robustness ↓ |
|---|---|---|---|
| D3S | 0.493 | 0.660 | 0.131 |
| D3S-MS | 0.500 | 0.677 | 0.142 |
| RPVT | 0.514 | 0.670 | 0.126 |
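The ablation figures above can be read as relative changes against the D3S baseline. The following is a minimal sketch of that arithmetic, not part of the original evaluation code: the dictionary layout and the helper name `relative_change` are illustrative assumptions, and the numbers are copied directly from the table. Because robustness is marked ↓ (lower is better), a negative relative change in that column indicates an improvement.

```python
# Values copied from the ablation table (EAO, accuracy, robustness).
# The dictionary layout is an illustrative assumption, not the authors' code.
ablation = {
    "D3S":    {"eao": 0.493, "accuracy": 0.660, "robustness": 0.131},
    "D3S-MS": {"eao": 0.500, "accuracy": 0.677, "robustness": 0.142},
    "RPVT":   {"eao": 0.514, "accuracy": 0.670, "robustness": 0.126},
}


def relative_change(baseline: float, value: float) -> float:
    """Return the change of `value` relative to `baseline`, in percent."""
    return 100.0 * (value - baseline) / baseline


# Compare every non-baseline row against D3S, metric by metric.
base = ablation["D3S"]
changes = {
    name: {
        metric: round(relative_change(base[metric], row[metric]), 2)
        for metric in base
    }
    for name, row in ablation.items()
    if name != "D3S"
}

for name, row in changes.items():
    print(name, row)
```

Under these numbers, RPVT raises EAO by roughly 4.3% relative to D3S while also lowering the robustness value, whereas D3S-MS raises accuracy but its robustness value increases.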

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, L.; Cheng, S.; Wang, L. An Accurate Refinement Pathway for Visual Tracking. Information 2022, 13, 147. https://doi.org/10.3390/info13030147