COVID-19 and Science Communication: The Recording and Reporting of Disease Mortality

Abstract

1. Introduction

2. On the Quantification of Mortality Rates

2.1. Survival Analysis

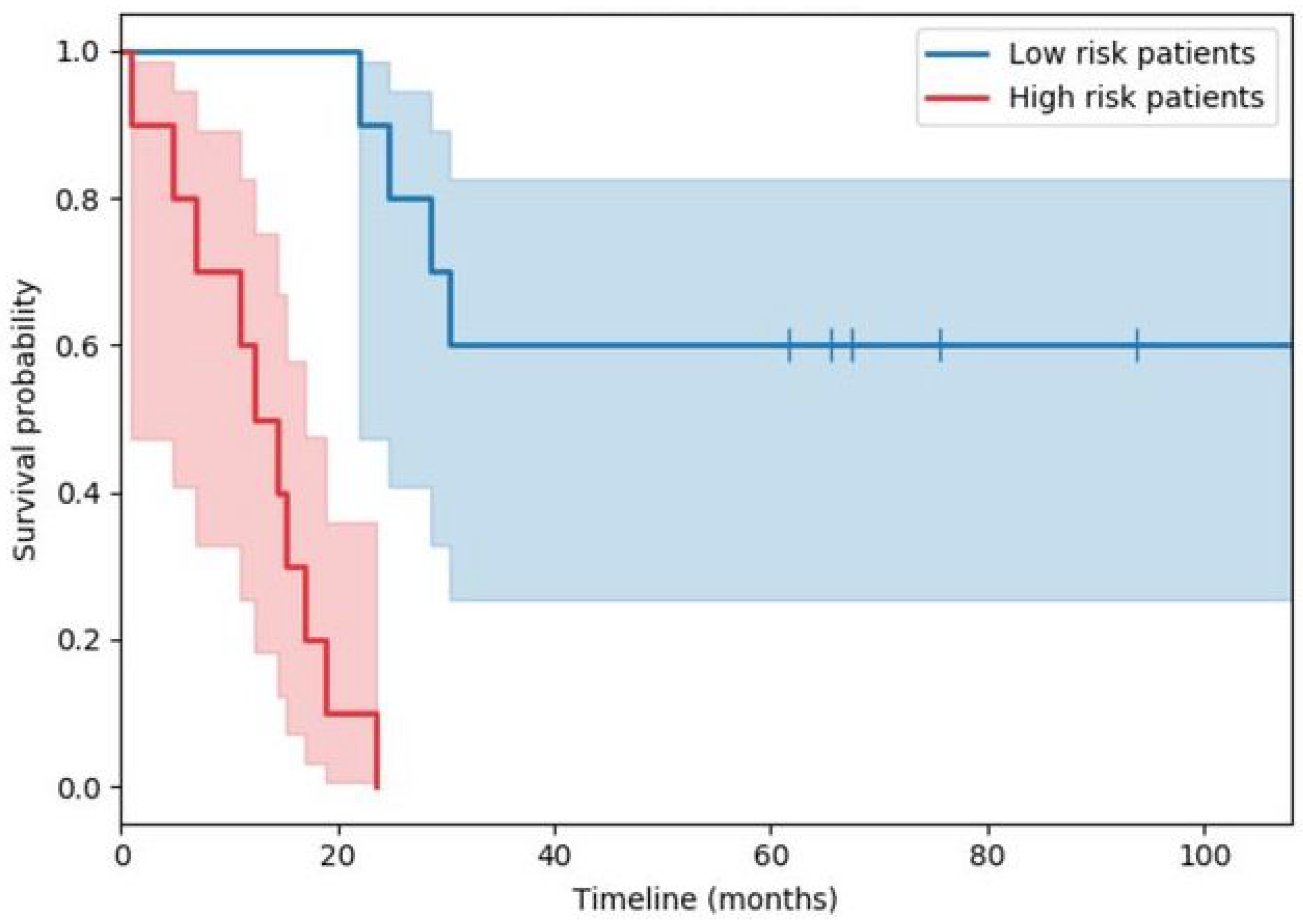

2.1.1. Kaplan–Meier Estimation
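
As an illustration of the estimator named in this heading, the following is a minimal sketch of the standard Kaplan–Meier product-limit computation in plain Python. It is not taken from the article: the function name `kaplan_meier` and the toy data are hypothetical, and the sketch assumes right-censoring only, with subjects censored at time t still counted as at risk at t.

```python
from collections import Counter


def kaplan_meier(durations, observed):
    """Return [(t, S(t))] at each distinct time with at least one observed event.

    durations: follow-up time for each subject
    observed:  True if the event (e.g. death) was observed, False if censored
    """
    deaths = Counter(t for t, e in zip(durations, observed) if e)
    exits = Counter(durations)  # every subject leaves the risk set at its own time
    at_risk = len(durations)
    survival = 1.0
    curve = []
    for t in sorted(exits):
        d = deaths.get(t, 0)
        if d:
            # Product-limit step: multiply by the fraction surviving time t.
            survival *= 1.0 - d / at_risk
            curve.append((t, survival))
        # Events and censored subjects both drop out after time t.
        at_risk -= exits[t]
    return curve


# Hypothetical toy data: six subjects, times in days; False marks a censored subject.
durations = [2, 3, 3, 5, 8, 8]
observed = [True, True, False, True, False, True]

for t, s in kaplan_meier(durations, observed):
    print(f"t = {t}: S(t) = {s:.4f}")
```

A censored subject lowers the number at risk for later times without adding a step to the curve, which is how the estimator uses partial follow-up without treating it as a death.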

2.1.2. Cox’s Regression

2.2. The Recording and Reporting of Deaths

2.2.1. England

2.2.2. Survival Analysis…Again

2.2.3. Piecing It All Together

“...if, for example, somebody tested positive in April but recovered and was then hit by a bus in July, they would still be counted as a Covid-19 victim.”

“Essentially, there is no way to recover, statistically. So, if I tested positive for COVID-19 today and then I got hit by a bus tomorrow, then COVID-19 would be listed as my cause of death.”

“You could have been tested positive in February, have no symptoms, then be hit by a bus in July and you’d be recorded as a Covid death.”

“…such a scenario would ‘technically’ be counted as a coronavirus death, ‘though the numbers where that situation would apply are likely to be very small’.”

“Nearly 800,000 people are known to have died of COVID-19. [all emphasis added]”

3. Analysis of Mainstream Media

3.1. Data Collection

3.2. Data Analysis

3.3. Discussion

4. Summary and Conclusions

“Today we mark a truly grim, heartbreaking milestone—500,071 dead.”,

the second one from UK Prime Minister Boris Johnson’s statement on coronavirus delivered on 26 January 2021 [42]:

“I am sorry to have to tell you that today the number of deaths recorded from Covid in the UK has surpassed 100,000... [all emphasis added]”,

and the last one from the speech by Keir Starmer, then Leader of the Labour Party, at the Labour Party Conference 2021 [43]:

“We have now lost 133,000 people to Covid. [all emphasis added]”

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Walsh, J. Public Attitude Toward Science Is Yes, but— Survey results in NSF report show backing is still strong, indicate that the best informed are the most favorably disposed. Science 1982, 215, 270–272.

- Claessens, M. Public perception of science in Eastern and Central Europe. In Proceedings of the 8th International Conference on Public Communication of Science and Technology (PCST), Barcelona, Spain, 3–6 June 2004; pp. 427–429.

- Cobb, M.D.; Macoubrie, J. Public perceptions about nanotechnology: Risks, benefits and trust. J. Nanoparticle Res. 2004, 6, 395–405.

- Skinner, G.; Clemence, M. Veracity Index 2016: Trust in Professions; Ipsos MORI: London, UK, 2016.

- Bredahl, L. Determinants of consumer attitudes and purchase intentions with regard to genetically modified food–results of a cross-national survey. J. Consum. Policy 2001, 24, 23–61.

- Allum, N.; Sturgis, P.; Tabourazi, D.; Brunton-Smith, I. Science knowledge and attitudes across cultures: A meta-analysis. Public Underst. Sci. 2008, 17, 35–54.

- Brown, R.C.; Savulescu, J.; Williams, B.; Wilkinson, D. Passport to freedom? Immunity passports for COVID-19. J. Med. Ethics 2020, 46, 652–659.

- Gaglione, C.; Purificato, I.; Rymkevich, O.P. Covid-19 and labour law: Italy. Ital. Labour Law e-J. 2020, 13, 306–313.

- Brzezinski, A.; Kecht, V.; Van Dijcke, D.; Wright, A.L. Belief in Science Influences Physical Distancing in Response to Covid-19 Lockdown Policies; BFI Working Paper; University of Chicago, Becker Friedman Institute for Economics: Chicago, IL, USA, 2020.

- Goldman, A.I. Reliabilism, veritism, and epistemic consequentialism. Episteme 2015, 12, 131–143.

- Pritchard, D. Knowledge and understanding. In Virtue Epistemology Naturalized; Springer: Cham, Switzerland, 2014; pp. 315–327.

- Arandjelović, O. AI, democracy, and the importance of asking the right questions. AI Ethics J. 2021, 2, 2.

- Cooper, J.; Dimitriou, N.; Arandjelović, O. How Good is the Science That Informs Government Policy? A Lesson From the UK’s Response to 2020 CoV-2 Outbreak. J. Bioethical Inq. 2021, 4, 561–568.

- Lavazza, A.; Farina, M. The role of experts in the Covid-19 pandemic and the limits of their epistemic authority in democracy. Front. Public Health 2020, 8, 356.

- Gerbaudo, P. The pandemic crowd. J. Int. Aff. 2020, 73, 61–76.

- Burns, T.W.; O’Connor, D.J.; Stocklmayer, S.M. Science communication: A contemporary definition. Public Underst. Sci. 2003, 12, 183–202.

- Fischhoff, B. The sciences of science communication. Proc. Natl. Acad. Sci. USA 2013, 110, 14033–14039.

- Bubela, T.; Nisbet, M.C.; Borchelt, R.; Brunger, F.; Critchley, C.; Einsiedel, E.; Geller, G.; Gupta, A.; Hampel, J.; Hyde-Lay, R.; et al. Science communication reconsidered. Nat. Biotechnol. 2009, 27, 514–518.

- de Bruin, W.B.; Bostrom, A. Assessing what to address in science communication. Proc. Natl. Acad. Sci. USA 2013, 110, 14062–14068.

- Trench, B. Towards an analytical framework of science communication models. In Communicating Science in Social Contexts; Cheng, D., Claessens, M., Gascoigne, T., Metcalfe, J., Schiele, B., Shi, S., Eds.; Springer: Dordrecht, The Netherlands, 2008; pp. 119–135.

- Scheufele, D.A. Science communication as political communication. Proc. Natl. Acad. Sci. USA 2014, 111, 13585–13592.

- Leung, K.M.; Elashoff, R.M.; Afifi, A.A. Censoring issues in survival analysis. Annu. Rev. Public Health 1997, 18, 83–104.

- Dimitriou, N.; Arandjelović, O.; Harrison, D.J.; Caie, P.D. A principled machine learning framework improves accuracy of stage II colorectal cancer prognosis. NPJ Digit. Med. 2018, 1, 52.

- Gavriel, C.G.; Dimitriou, N.; Brieu, N.; Nearchou, I.P.; Arandjelović, O.; Schmidt, G.; Harrison, D.J.; Caie, P.D. Assessment of Immunological Features in Muscle-Invasive Bladder Cancer Prognosis Using Ensemble Learning. Cancers 2021, 13, 1624.

- Nearchou, I.P.; Soutar, D.A.; Ueno, H.; Harrison, D.J.; Arandjelović, O.; Caie, P.D. A comparison of methods for studying the tumor microenvironment’s spatial heterogeneity in digital pathology specimens. J. Pathol. Inform. 2021, 12, 6.

- Goel, M.K.; Khanna, P.; Kishore, J. Understanding survival analysis: Kaplan-Meier estimate. Int. J. Ayurveda Res. 2010, 1, 274.

- Wallin, L.; Strandberg, E.; Philipsson, J.; Dalin, G. Estimates of longevity and causes of culling and death in Swedish warmblood and coldblood horses. Livest. Prod. Sci. 2000, 63, 275–289.

- Malatack, J.J.; Schaid, D.J.; Urbach, A.H.; Gartner Jr, J.C.; Zitelli, B.J.; Rockette, H.; Fischer, J.; Starzl, T.E.; Iwatsuki, S.; Shaw, B.W. Choosing a pediatric recipient for orthotopic liver transplantation. J. Pediatr. 1987, 111, 479–489.

- Prentice, R.L.; Breslow, N.E. Retrospective studies and failure time models. Biometrika 1978, 65, 153–158.

- Copas, A.J.; Farewell, V.T. Incorporating retrospective data into an analysis of time to illness. Biostatistics 2001, 2, 1–12.

- Matt Hancock Launches Urgent Review into Fiasco at Public Health England as It’s Revealed Anyone Who Has Ever Died after Testing Positive for COVID-19 Has Been Recorded as a ‘Coronavirus Death’—Even If They Were Hit by a Bus. Available online: https://www.dailymail.co.uk/news/article-8531765/Matt-Hancock-launches-urgent-review-way-PHE-counts-coronavirus-deaths.html (accessed on 25 December 2021).

- Coronavirus Death Numbers: Health Secretary Matt Hancock Orders Urgent Review into Public Health England Data. Available online: https://news.sky.com/story/coronavirus-health-secretary-matt-hancock-orders-urgent-review-into-public-health-england-death-data-12030392 (accessed on 25 December 2021).

- Are Official Figures Overstating England’s Covid-19 Death Toll? Available online: https://www.theguardian.com/world/2020/jul/21/analysis-why-englands-covid-19-death-toll-is-wrong-but-not-by-much (accessed on 25 December 2021).

- Montalvan, V.; Lee, J.; Bueso, T.; De Toledo, J.; Rivas, K. Neurological manifestations of COVID-19 and other coronavirus infections: A systematic review. Clin. Neurol. Neurosurg. 2020, 194, 105921.

- Thrasher, S.W. Why COVID Deaths Have Surpassed AIDS Deaths in the U.S. Available online: https://www.scientificamerican.com/article/why-covid-deaths-have-surpassed-aids-deaths-in-the-u-s/ (accessed on 25 December 2021).

- Kaner, H.C.; Mohanty, S.G.; Lyons, J. Critical values of the Kolmogorov-Smirnov one-sample tests. Psychol. Bull. 1980, 88, 498.

- Friedrich, T.; Schellhaas, H. Computation of the percentage points and the power for the two-sided Kolmogorov-Smirnov one sample test. Stat. Pap. 1998, 39, 361–375.

- Huang, C.; Wang, Y.; Li, X.; Ren, L.; Zhao, J.; Hu, Y.; Zhang, L.; Fan, G.; Xu, J.; Gu, X.; et al. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet 2020, 395, 497–506.

- WHO Director-General’s Opening Remarks at the Media Briefing on COVID-19—11 March 2020. Available online: https://www.who.int/director-general/speeches/detail/who-director-general-s-opening-remarks-at-the-media-briefing-on-covid-19---11-march-2020 (accessed on 25 December 2021).

- Nielsen, R.K.; Kalogeropoulos, A.; Fletcher, R. Most in the UK say news media have helped them respond to COVID-19, but a third say news coverage has made the crisis worse. Reuters Inst. Study J. 2020, 25, 1–8.

- Covid: Biden Calls 500,000 Death Toll a Heartbreaking Milestone. Available online: https://www.bbc.co.uk/news/world-us-canada-56159756 (accessed on 25 December 2021).

- Prime Minister’s Statement on Coronavirus (COVID-19): 26 January 2021. Available online: https://www.gov.uk/government/speeches/prime-ministers-statement-on-coronavirus-covid-19-26-january-2021 (accessed on 29 December 2021).

- Conference Speech: Keir Starmer. Available online: https://labour.org.uk/press/conference-speech-keir-starmer/ (accessed on 25 December 2021).

| | Brand Name | ‘with’ | ‘from’ | ‘of’ | ‘from’ + ‘of’ |
|---|---|---|---|---|---|
| Newspapers | The Metro | 96 | 431 | 2820 | 3251 |
| Newspapers | The Sun | 59 | 416 | 288 | 704 |
| Newspapers | Daily Mail | 463 | 9120 | 16,000 | 25,120 |
| Newspapers | The Times | 36 | 147 | 275 | 422 |
| Newspapers | The Mirror | 3360 | 10,700 | 41,400 | 52,100 |
| Newspapers | The Telegraph | 222 | 627 | 788 | 1415 |
| TV | BBC | 4210 | 5850 | 4200 | 10,050 |
| TV | ITV | 1650 | 5860 | 3440 | 9300 |

| | Brand Name | ‘with’ | ‘from’ | ‘of’ | ‘from’ + ‘of’ |
|---|---|---|---|---|---|
| Newspapers | USA Today | 64 | 657 | 861 | 1518 |
| Newspapers | WSJ | 1 | 250 | 3780 | 4030 |
| Newspapers | NY Times | 4 | 5250 | 16,600 | 21,850 |
| Newspapers | New York Post | 1 | 403 | 612 | 1015 |
| Newspapers | LA Times | 1 | 1540 | 9720 | 11,260 |
| Newspapers | Washington Post | 91 | 2670 | 2000 | 4670 |
| Newspapers | Star Tribune | 246 | 2380 | 2480 | 4860 |
| Newspapers | Newsday | 0 | 263 | 1140 | 1403 |
| Newspapers | Chicago Tribune | 9 | 2400 | 7780 | 10,180 |
| Newspapers | The Boston Globe | 7 | 229 | 872 | 1101 |
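
The arithmetic behind the two tables above can be checked and summarized with a short script. This is a sketch rather than part of the article's analysis: the helper names `totals_consistent` and `with_share` are hypothetical, and because the surviving text does not say exactly what is counted, the entries are treated simply as counts per wording (‘with’, ‘from’, ‘of’).

```python
# Counts copied from the two tables above:
# (brand, 'with', 'from', 'of', reported 'from' + 'of').
UK = [
    ("The Metro", 96, 431, 2820, 3251),
    ("The Sun", 59, 416, 288, 704),
    ("Daily Mail", 463, 9120, 16000, 25120),
    ("The Times", 36, 147, 275, 422),
    ("The Mirror", 3360, 10700, 41400, 52100),
    ("The Telegraph", 222, 627, 788, 1415),
    ("BBC", 4210, 5850, 4200, 10050),
    ("ITV", 1650, 5860, 3440, 9300),
]
US = [
    ("USA Today", 64, 657, 861, 1518),
    ("WSJ", 1, 250, 3780, 4030),
    ("NY Times", 4, 5250, 16600, 21850),
    ("New York Post", 1, 403, 612, 1015),
    ("LA Times", 1, 1540, 9720, 11260),
    ("Washington Post", 91, 2670, 2000, 4670),
    ("Star Tribune", 246, 2380, 2480, 4860),
    ("Newsday", 0, 263, 1140, 1403),
    ("Chicago Tribune", 9, 2400, 7780, 10180),
    ("The Boston Globe", 7, 229, 872, 1101),
]


def totals_consistent(rows):
    """Check that each reported 'from' + 'of' entry equals the sum of its parts."""
    return all(f + o == total for _, _, f, o, total in rows)


def with_share(rows):
    """Return {brand: 'with' / ('with' + 'from' + 'of')} for each row."""
    return {brand: w / (w + total) for brand, w, _, _, total in rows}


for label, rows in (("UK", UK), ("US", US)):
    print(f"{label}: reported totals consistent = {totals_consistent(rows)}")
    for brand, share in with_share(rows).items():
        print(f"  {brand:16} 'with' share = {share:.1%}")
```

The share is only one possible summary of the tabulated counts; it makes the relative rarity of the ‘with’ wording comparable across outlets of very different sizes.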

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Arandjelović, O. COVID-19 and Science Communication: The Recording and Reporting of Disease Mortality. Information 2022, 13, 97. https://doi.org/10.3390/info13020097
