Abstract

The ongoing COVID-19 pandemic has brought science to the fore of public discourse and, considering the complexity of the issues involved, with it also the challenge of effective and informative science communication. This is a particularly contentious topic, in that it is highly emotional in and of itself; sits at the nexus of the decision-making process regarding the handling of the pandemic, which has effected lockdowns, social behaviour measures, business closures, and others; and concerns the recording and reporting of disease mortality. To clarify a point that has caused much controversy and anger in the public debate, the first part of the present article discusses the very fundamentals underlying the issue of causative attribution with regard to mortality, lays out the foundations of the statistical means of mortality estimation, and concretizes these by analysing the recording and reporting practices adopted in England and their widespread misrepresentations. The second part of the article is empirical in nature. I present data and an analysis of how COVID-19 mortality has been reported in the mainstream media in the UK and the USA, including a comparative analysis both across the two countries and across different media outlets. The findings clearly demonstrate a uniform and worrying lack of understanding of the relevant technical subject matter by the media in both countries. Of particular interest is the finding that, with a remarkable regularity, the greater the number of articles a media outlet has published on COVID-19 mortality, the greater the proportion of its articles that misrepresented the disease mortality figures.

1. Introduction

The crucial role that science plays in our everyday lives is hardly something that needs to be emphasised even to the general public [1,2]. Moreover, in the economically developed world, science is, for the most part, seen as a positive actor [3]: it is science that has helped us avoid or overcome previously widespread diseases and illnesses; facilitated our being able to communicate across vast distances using video and audio; made travel fast, efficient, and accessible, allowing many to explore—relatively cheaply—distant parts of the globe; made large swaths of knowledge freely and readily accessible to most; and so on. Unsurprisingly, polling consistently shows that scientists too are seen in a positive light [4]. The negative aspects of science, which the public does recognise (correctly or incorrectly), are largely well-confined to specific realms: the pace of lifestyle change [1], applications seen as ‘playing God’ or ‘playing with nature’ (e.g., genetic modification, creation of new life forms) [5,6], or rogue actors’ misuse thereof [2].

However, over the last two years, that is, since the emergence of COVID-19, the place that science occupies in our lives appears to have changed substantially. From the largely benevolent supporting actor working in the background, facilitating and enhancing the various everyday pursuits we undertake, science has come to the fore and is being used to justify (for better or worse; I state this in a value-free sense) restrictions on people’s freedoms that are, in modern times, virtually if not literally unprecedented in countries with historically liberal values. Science is used to justify the prohibition on leaving one’s residence [7], to legislate compulsory cessation of normal business operations [8], to impose bans on socialising with others [7], etc. When science is placed at the crux of decision-making that brings about such severely harmful effects (I am referring to the aforementioned restrictions themselves only, which are undoubtedly harmful, rather than the net effect thereof, which may very well be beneficial), it is unsurprising that the public starts to take interest in the relevant science, and seeks to understand and scrutinise it [9]. Yet, this endeavour is fraught with difficulty. Firstly, considering the breadth and the depth of competence required to understand the relevant processes and phenomena to an extent whereby this understanding (and thereby I am not referring merely to the knowledge of procedural or factual matters that is veritism [10], but actual understanding [11]) is sufficient to facilitate a meaningful critical assessment of experts’ views, the notion that this competence could be attained in a short period of time by the general public is rather absurdly naïve. 
Secondly, and this is perhaps where the greatest danger lies, the lack of understanding of science and the scientific method makes the lay public unaware of the limitations of its knowledge, making ill-founded arguments appear convincing and credible, and decreasing trust in rational scientific authority [12]. In this context, effective science communication is crucial, whether it comes from politicians, scientists themselves, or the media. The overarching message of the present paper is that science communication in these delicate and febrile times has been found wanting. Inept and misleading communication, often stemming from a lack of understanding of the subject matter itself, has resulted in undue (in the specific context considered herein, which is not to dismiss other, possibly correct criticisms [13,14]) public scepticism towards scientific advice, a reluctance to adopt and follow guidance, increased discontent [15], etc.

The realm of science communication research is broad and multifaceted, with a large and ever-increasing body of published work on its different aspects, ranging from the underlying philosophical principles to issues of a more practical nature. A reader interested in this wider context is advised to refer to a number of comprehensive reviews on the subject (please note that the citations do not imply the present author’s endorsement of all or any specific arguments, views, or conclusions put forward in the referenced articles) [16,17,18,19,20,21]. Herein, I restrict my focus to the specific issue of communication regarding COVID-19 mortality—a particularly emotive topic at the nexus of the decision-making processes that led to a great number of the aforementioned restrictive and far-reaching measures aimed at dealing with the pandemic and pivotal in shaping the public’s attitude and behaviour. I start by discussing the very fundamentals underlying the issue of causative attribution with regards to mortality—that is, what it means that a person ‘has died of’ something—in Section 2. Having clarified this notion, which has caused much controversy, to say nothing of anger on all sides of the debate played out in public, and in particular having explained why the phrasing is epistemologically inappropriate when applied on a personal level, I lay out the foundations for the statistical means of mortality estimation on the cohort or population level in Section 2.1. In Section 2.2, I concretize these statistical approaches by analysing the COVID-19 recording and reporting practices adopted in England, and explain why certain measures were adopted and how they were misrepresented first and foremost by the media but also by some scientists when communicating with the public. The second part of the article, namely, Section 3, is empirical in nature. 
Specifically, I present data and analyse how COVID-19 mortality has been reported in the mainstream media in the UK and the USA, including a comparative analysis both across the two countries and across different media outlets. The findings of the analysis clearly demonstrate a uniform and worrying lack of understanding of the relevant technical subject matter by the media in both countries. Finally, Section 4 presents a summary of the key points of the article and its conclusions.

2. On the Quantification of Mortality Rates

It is unsurprising that the mortality of the novel coronavirus SARS-CoV-2—that is, the disease it causes, COVID-19—has quickly become the primary measure of the virus’s direct impact. Mortality is a simple measure in that it is underlain by a binary outcome (death or survival) and is easily understood by the general public. It is also a highly emotive one.

To a nonspecialist, the mortality of a disease also appears to be easily measurable: it is a simple process involving little more than the counting of deaths deemed to have resulted from an infection with SARS-CoV-2. However, underneath this seemingly straightforward task lie a number of nuances. The primary one of these is presented by the question of when a death can be attributed to the virus. Even to laypersons who may not be able to express the reasons behind their reckoning, it is readily evident that this question is different from, say, that of asking when somebody has died of, e.g., a gunshot wound, as used for the reporting of firearm murders. The key difference between the two stems from the former being a distal and the latter a proximal factor. In seeking a link between distal causes and the corresponding outcomes of interest, the analysis of causality is complicated by numerous intervening and confounding factors. Thus, to give a simple example, while it may be a relatively straightforward matter to establish respiratory failure as the proximal cause of death, it is far less clear when COVID-19 can be linked to it as the distal cause and to what extent, especially if the patient has pre-existing conditions.

The key insight stemming from the above is that it is fundamentally impossible to claim with certainty that any particular death was caused by COVID-19. Rather, the approach has to be based on cohort- or population-level analysis. The basic idea is reasonably simple: in order to assess how a particular factor of interest affects survival, a comparison is made between cohorts that differ in the aforementioned factor but are otherwise statistically matched in the potentially relevant characteristics. Indeed, the entire field of study usually termed ‘survival analysis’ [22], widely used in biomedical sciences and engineering amongst others, is focused on the development of techniques that can be utilised for such analysis and that are suited to different scenarios (for example, for problem settings when not all data are observable, or for different types of factor of interest such as discrete or continuous, etc.). In the specific case that we consider here, the situation is rather straightforward in principle in that the factor of interest is also binary, namely, an individual in a cohort either has had or has not had a positive SARS-CoV-2 diagnosis in the past.

While an in-depth overview of survival analysis is out of scope of the present article, for completeness and clarity, it is useful to illustrate some of the more common methods used to this end in the literature and in practice. An understanding of these will help set the ground for the topics discussed thereafter, namely, how SARS-CoV-2 deaths should be recorded, how they should be reported, and why the two are different.

2.1. Survival Analysis

2.1.1. Kaplan–Meier Estimation

The frequently used Kaplan–Meier estimator [23,24,25] is a non-parametric estimator of the survival function within a cohort. The survival function $S(t)$ captures the probability of an individual’s death being no earlier than $t$:

$$S(t) = P(\tau \geq t),$$

where $\tau$ is the time of death of an individual in the cohort, treated as an outcome of a random variable. In the simplest setting, the challenge is thus of estimating $S(t)$ given the set $\{\tau_j\}_{j=1}^{n}$, where $\tau_j$ is the time of death of the $j$-th individual in a cohort numbering $n$. It is assumed that the outcomes corresponding to different individuals are independent from one another, and identically distributed. However, usually not all $\tau_j$ are available because the time of analysis precedes the death of at least some individuals in a cohort. In our specific example, many of the individuals whose data are analysed, whether previously infected with SARS-CoV-2 or not, will not die for many years to come. To account for this, rather than assuming that all $\tau_j$ are known, the estimate is sought from a set of pairs $\{(\tau_j, c_j)\}_{j=1}^{n}$, where $c_j$ are the censoring times. The censoring time $c_j$ is the latest time for which the survival or non-survival of the $j$-th individual is known; thus, $\tau_j$ is meaningful only if $\tau_j \leq c_j$ (e.g., the time of death of those individuals who have not died by the time of analysis is not known). It is important to emphasise the assumption that censoring is non-informative, i.e., that censoring statistics in both cohorts are identical.

It is then a straightforward matter to derive the following estimate for (for further technical detail and a step by step derivation see e.g., the work of Goel et al. [26]):

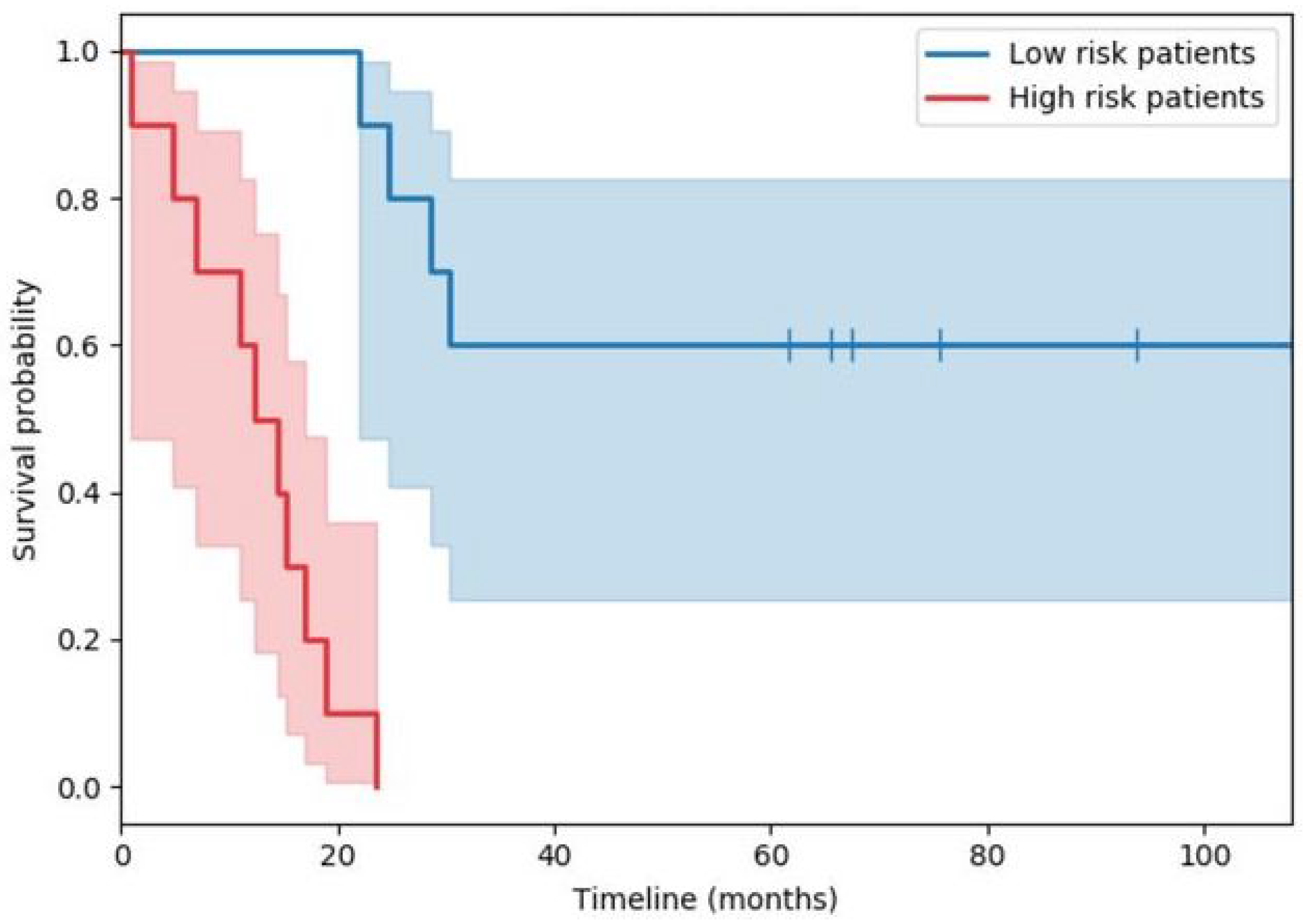

with a time when at least one death occurred, the number of deaths that happened at time , and the individuals known to have survived up to time . The plot in Figure 1 shows a typical example of two survival functions obtained in this manner (the specific example is from a study of immunological features in muscle-invasive bladder cancer). Both estimates, the red and the blue one, start at 1, as all participants in the study are initially alive (and hence, by design, the probability of being alive is 1). Thereafter, the faster decline of the red curve as compared with the blue one, captures a more rapid death rate in the cohort corresponding to the former, i.e., a lower probability of survival past a certain point in the future.

Figure 1.

Example of the Kaplan–Meier derived estimate of two survival functions (red and blue lines). The corresponding shaded areas indicate the standard deviations of the estimates across time.
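The product-limit estimate described in this section can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in any of the cited works: the function name `kaplan_meier` and the toy follow-up data are hypothetical, and each individual is represented by a follow-up time together with a flag saying whether that time is an observed death or a censoring time.

```python
from collections import Counter


def kaplan_meier(times, observed):
    """Return [(t_i, S_hat(t_i))] for every distinct time with at least one death.

    times[j]    -- time of death if observed[j] is True, otherwise the censoring time
    observed[j] -- True if the death of individual j was observed
    """
    deaths = Counter(t for t, d in zip(times, observed) if d)
    survival, curve = 1.0, []
    for t in sorted(deaths):
        # Individuals still under observation just before t (neither dead nor censored).
        at_risk = sum(1 for u in times if u >= t)
        # Multiply the running product by (1 - d_i / n_i).
        survival *= 1.0 - deaths[t] / at_risk
        curve.append((t, survival))
    return curve


# Hypothetical toy cohort: follow-up times in months, invented for illustration.
times = [2, 3, 3, 5, 8, 8, 9, 12]
observed = [True, True, False, True, True, False, False, True]

for t, s in kaplan_meier(times, observed):
    print(f"S_hat({t}) = {s:.3f}")
```

Censored individuals never contribute a death, but they do remain in the at-risk count until their censoring time, which is how the estimator uses partial follow-up information.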

2.1.2. Cox’s Regression

Another widely used technique for survival analysis is Cox’s regression (also often referred to as Cox’s proportional hazards model), a semi-parametric method that differs from the Kaplan–Meier estimator in several important ways. Firstly, unlike in the case of the latter, no explicit stratification of the entire patient cohort is performed. Rather, the same effect is achieved statistically. Secondly, the approach is multivariate rather than univariate in nature, which makes the method more appropriate for many real-world analyses in which it is not possible to perform randomization or to ensure the satisfaction of other criteria required for Kaplan–Meier analysis.

Cox’s regression for survival analysis in its general form is a method for investigating the effect of several variables on the time of death. Central to it is the concept of the hazard function, $h(t)$, also called the hazard rate, which is the instantaneous death rate in a cohort (often, it is incorrectly described as the probability of death at a certain time [27,28]). It is modelled as a product of the baseline hazard function, $h_0(t)$, and the exponential of a linear combination of covariates (that is, factors of interest), $x_1, \ldots, x_m$:

$$h(t) = h_0(t) \exp\left(\beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_m x_m\right).$$

The coefficients $\beta_k$ can be interpreted as quantifying the effect of the corresponding covariates and can be inferred from data by means of partial maximum likelihood estimation over all observed deaths. The resulting hazard ratios, $\exp(\beta_k)$, provide a simple way of interpreting the findings, with values around 1 indicating a lack of effect of the factor, and those greater or lesser than 1, respectively, increased and decreased associated hazard of death. A factor in this analysis can be, for example, the presence of a specific disease (e.g., COVID-19), a particular demographic characteristic, a comorbidity of interest, etc. Thus, Cox’s analysis allows us to interrogate the data as regards the effect of specifically, say, a past positive test for COVID-19, on mortality, adjusted for other factors which too may affect it.
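To make the partial maximum likelihood estimation concrete, the following sketch fits a Cox model with a single binary covariate by Newton–Raphson iteration on the partial log-likelihood. It is an illustration under simplifying assumptions that are mine rather than the article’s: there is one covariate only, there are no tied death times, and the data are invented. The names `score_and_hessian` and `fit_cox_single` are hypothetical.

```python
import math


def score_and_hessian(beta, times, observed, x):
    """First and second derivatives of the Cox partial log-likelihood (one covariate)."""
    grad, hess = 0.0, 0.0
    for t_i, d_i, x_i in zip(times, observed, x):
        if not d_i:
            continue  # censored individuals contribute only through risk sets
        # Risk set: everyone still under observation at time t_i.
        risk = [x_j for t_j, x_j in zip(times, x) if t_j >= t_i]
        weights = [math.exp(beta * x_j) for x_j in risk]
        s0 = sum(weights)
        s1 = sum(w * x_j for w, x_j in zip(weights, risk))
        s2 = sum(w * x_j * x_j for w, x_j in zip(weights, risk))
        mean = s1 / s0
        grad += x_i - mean
        hess -= s2 / s0 - mean * mean
    return grad, hess


def fit_cox_single(times, observed, x, iterations=25):
    """Newton-Raphson estimate of beta, starting from beta = 0."""
    beta = 0.0
    for _ in range(iterations):
        grad, hess = score_and_hessian(beta, times, observed, x)
        if hess == 0.0:
            break
        beta -= grad / hess
    return beta


# Hypothetical data: x = 1 marks a past positive test, x = 0 its absence.
times = [1, 2, 3, 4, 5, 6, 7, 8]
observed = [True] * 8
x = [1, 1, 0, 1, 0, 1, 0, 0]

beta_hat = fit_cox_single(times, observed, x)
print(f"beta = {beta_hat:.3f}, hazard ratio = {math.exp(beta_hat):.3f}")
```

A hazard ratio above 1 for these invented data reflects only that the individuals with x = 1 tend to die earlier in the toy cohort; with real data, additional covariates would be included to adjust for other factors.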

Note that both methods described, namely, both Cox’s regression and Kaplan–Meier estimation, are statistical and therefore phenomenological in nature, as opposed to mechanistic—neither approach models the underlying processes that effect the connection of observable predictor data (e.g., the presence of a historical positive test for COVID-19, or one’s sex, age, etc.) with the outcome of interest (time of death).

2.2. The Recording and Reporting of Deaths

An understanding of the technical basics of survival analysis covered in the previous section provides a solid basis for the consideration of how the recording and the reporting of deaths due to a distal cause should be performed. Indeed, the latter have attracted considerable attention and criticism.

The recording of SARS-CoV-2 deaths during the ongoing pandemic has varied across different jurisdictions in a multitude of ways. My focus here is not on the many practical aspects of this process (e.g., how deaths in different settings such as hospitals, homes, and care homes are aggregated), as important as they are, but on its fundamental, methodological underpinnings that are unaffected by geographical, social, and similar factors. In this regard, we find rather more uniformity; so, I will use England as a representative example.

2.2.1. England

Up to August 2020, for England, the COVID-19 Data Dashboard reported all deaths in people who had a prior laboratory-confirmed positive SARS-CoV-2 test. Thereafter, two further indicators were included: the numbers of deaths of individuals (i) who had their first positive test within 28 days of dying; (ii) either those who had their first positive test within 60 days of dying or who had COVID-19 on the death certificate (as recorded by a registered medical practitioner).

The reasoning underlying these choices and the evolution of the reporting system is straightforward to understand. The original intention was to ensure a high degree of confidence in there having been a SARS-CoV-2 infection in a deceased person, hence the requirement of a laboratory-confirmed positive SARS-CoV-2 test. The subsequent expansion of the reporting parameters can be seen as an attempt to account for those deceased individuals (possibly many of them) who were infected but never actually tested for the virus. It is difficult to argue that these reporting choices are anything other than sensible. It is in the use and the interpretation of them that the nuance lies, as I discuss shortly.

2.2.2. Survival Analysis…Again

As I noted earlier, although the techniques outlined in Section 2.1 provide a good basis for contextualising the recording of SARS-CoV-2-related deaths in England, it is important to observe that neither Kaplan–Meier estimation nor Cox’s regression can be applied in the context just described out of the proverbial box; further thought is needed to adapt the methods to the problem at hand. In particular, note that, as described, both approaches are prospective in nature, in that observation begins at a set time for a known cohort. In contrast, in the problem setting of interest, the question is retrospectively posed. This challenge is not new and can be addressed in a principled and robust manner by extending the original statistical models. The relevant technical details are involved and need not be covered herein; the interested reader would be well-advised to consult the work of Prentice and Breslow [29] or Copas et al. [30] for examples.

Nevertheless, there is an important difficulty of a practical nature that has emerged in the context of COVID-19 and that often has to be contended with in many other epidemiological settings. Specifically, the selection of nondiseased individuals is far from straightforward. The reason for this lies in the potentially asymptomatic presentation of the disease. As a consequence, there is a possibility of the nondiseased cohort actually containing individuals who had COVID-19 at some point but were unaware of it. An important yet subtle observation here is that this data contamination does not merely reduce the accuracy of the analysis or increase the uncertainty of the conclusions; rather, it introduces a systematic bias. In particular, observe that because it is the asymptomatic individuals (i.e., those with the least disease severity and thus the most optimistic prognosis) who are removed from the COVID-19 positive cohort, the overall prognosis of the nominal COVID-19 positive cohort is made to appear worse than it would have been had the asymptomatic cases been included. As a corollary, any analysis applied is likely to produce an overestimate, rather than an underestimate or an unbiased estimate, of COVID-19 mortality. Including a model of the source of bias in the overall statistical model is difficult because the key variables underlying the phenomenon are latent by their very nature.
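The direction of this bias can be illustrated with simple arithmetic. The sketch below uses entirely hypothetical counts, invented for illustration and not drawn from any data set, to compare the crude mortality of the true COVID-19 positive cohort with that of the nominal one from which undetected asymptomatic cases have been moved into the nondiseased cohort.

```python
def crude_mortality(deaths, size):
    """Proportion of a cohort that died during follow-up."""
    return deaths / size


# Hypothetical cohorts (all numbers are assumptions made for this example).
symptomatic = {"size": 600, "deaths": 29}      # infected and tested positive
asymptomatic = {"size": 400, "deaths": 4}      # infected but never tested
never_infected = {"size": 1000, "deaths": 10}

# True cohorts: everyone who was actually infected versus everyone who was not.
true_positive = crude_mortality(
    symptomatic["deaths"] + asymptomatic["deaths"],
    symptomatic["size"] + asymptomatic["size"],
)
true_negative = crude_mortality(never_infected["deaths"], never_infected["size"])

# Nominal cohorts: asymptomatic cases are wrongly counted as nondiseased.
nominal_positive = crude_mortality(symptomatic["deaths"], symptomatic["size"])
nominal_negative = crude_mortality(
    never_infected["deaths"] + asymptomatic["deaths"],
    never_infected["size"] + asymptomatic["size"],
)

print(f"true risk ratio:    {true_positive / true_negative:.2f}")
print(f"nominal risk ratio: {nominal_positive / nominal_negative:.2f}")
```

In this toy setting the nominal risk ratio exceeds the true one, because the individuals with the best prognosis have been removed from the positive cohort. The size of the effect depends wholly on the assumed counts.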

2.2.3. Piecing It All Together

My closing remark in Section 2.2.1 that the COVID-19 reporting choices in England are eminently sensible may have resulted in some readers raising an eyebrow. Indeed, when the reporting protocol was first published, the mainstream (as well as nonmainstream) media were quick to point out that somebody who died after being hit by a bus and who had had a recent positive COVID-19 test would be included in the reported numbers. This has been widely repeated and a few examples serve well to illustrate the gist of the argument. Thus, the Daily Mail, the highest circulation daily newspaper in the UK following the Sun, reported the following [31]:

“...if, for example, somebody tested positive in April but recovered and was then hit by a bus in July, they would still be counted as a Covid-19 victim.”

Rowland Manthorpe, an editor at Wired magazine who has written for the Guardian, Observer, Sunday Telegraph, Spectator, etc., speaking for Sky News [32], echoed the thoughts:

“Essentially, there is no way to recover, statistically. So, if I tested positive for COVID-19 today and then I got hit by a bus tomorrow, then COVID-19 would be listed as my cause of death.”

The reiteration was not limited to media personalities. For example, in an article provocatively entitled “Are official figures overstating England’s Covid-19 death toll?” the Guardian [33] reported that an unnamed Department of Health and Social Care source summed up the process as follows:

“You could have been tested positive in February, have no symptoms, then be hit by a bus in July and you’d be recorded as a Covid death.”

At first sight, these criticisms do not seem entirely unreasonable. Why would a person who was killed by being hit by a bus be counted as a COVID-19 death? The Guardian, to its credit, did report an apparent defence by a ‘source at Public Health England’, who was quoted as saying that

“…such a scenario would ‘technically’ be counted as a coronavirus death, ‘though the numbers where that situation would apply are likely to be very small’.”

This is a rather feeble response and a misleading one too. The implication is that those deaths indeed should not have been recorded but that their infrequency renders the matter of little practical significance. That is incorrect. If these deaths are indeed entirely confounding, statistical analyses such as those outlined earlier would have found them to be such; in these cases, they would indeed present as noise in the data and be practically insignificant. However, there is another possibility, which is particularly important when dealing with novel and poorly understood diseases. Imagine if statistical analysis did reveal that previously COVID-19-positive people die in greater numbers by being hit by vehicles than their disease-free counterparts; in other words, that there was statistical significance to this observation. This would have suggested possibly new knowledge about the virus and its effects. For example, it could have indicated that the virus has long-lasting neurological effects that would affect one’s ability to respond in traffic. Indeed, now we do know that SARS-CoV-2 is a neurotropic virus with a whole host of neuropathological effects including dizziness, decreased alertness, headaches, seizures, nausea, cognitive impairment, encephalopathy, encephalitis, meningitis, anosmia, etc. [34]. Yet, an explanation of this kind was woefully missing from the mainstream coverage. Further, still working within the premises of this hypothetical scenario, even before any mechanistic understanding is developed, the mere new knowledge that in some way or another past COVID-19 positivity predicts pedestrian deaths in traffic, like any knowledge, can only be advantageous. For example, it could lead to timely advice to the public to take additional care in appropriate situations.

To summarise, the recording of COVID-19-related deaths should indeed include all deaths, whatever their proximal cause may be or appear to be, of individuals who tested positive at any time in the past, and these numbers should be reported to the relevant bodies. Thus, if anything, the criteria for the recording of COVID-19-related deaths were insufficiently rather than excessively inclusive. However, the reporting, i.e., the communication of COVID-19 mortality to the general public, should be based on robust statistical analyses at the cohort level. It should be a cause of profound concern that even such publications as Scientific American failed to observe what is little more than rudimentary rigour in this regard [35], incorrectly claiming

“Nearly 800,000 people are known to have died of COVID-19. [all emphasis added]”

3. Analysis of Mainstream Media

Having equipped ourselves with the understanding of how data on disease-effected mortality ought to be collected and recorded, and interpreted and reported, we are now in a good position to turn our attention to the analysis of the reporting of COVID-19 mortality by the mainstream media. I do so in the present section. Its structure follows the usual pattern: I start by describing my data collection protocol in Section 3.1; then, report my analysis of these data in Section 3.2; and finally, discuss the findings in Section 3.3.

3.1. Data Collection

Data were collected from the web sites of the top circulation national daily newspapers in the UK and the USA, as well as the web sites of the two leading UK television broadcasters, namely, the BBC (British Broadcasting Corporation) and ITV (Independent Television; legally, Channel 3). Sister publications, e.g., the Sun and the Sun on Sunday or the Daily Mail and Mail On Sunday, were considered jointly under the name of the main brand. The collection of all data was conducted on 23 August 2020. In particular, I recorded the number of articles on each web site containing the exact phrases ‘deaths with COVID’, ‘deaths from COVID’, ‘deaths of COVID’ (n.b. the search was not case-sensitive). A summary of the raw data can be found in Table 1 and Table 2. For completeness, I note that, by design, the collected corpus does not include any articles that use alternative phrasings, though it is unlikely that there is a significant number of these.

Table 1.

Summary of UK data.

Table 2.

Summary of USA data.

3.2. Data Analysis

I started by looking at the proportion of articles by each publication that used the incorrect ‘deaths of COVID’ or ‘deaths from COVID’ phrasing. Averaged across publishers, this proportion is

$$\bar{p} = \frac{1}{N} \sum_{i=1}^{N} \frac{w_i}{c_i + w_i},$$

where $N$ is the number of different publishers, and $c_i$ and $w_i$ are the numbers of publisher $i$’s articles with the correct (‘with COVID’) and incorrect (‘from COVID’ or ‘of COVID’) phrasings, respectively. For the UK-based publishers, 89.4% of articles contained an incorrect formulation by this measure, with the corresponding standard deviation being 9.0%. In the USA, the corresponding average was found to be 98.8%, with a standard deviation of 1.8%. Taking into account the different numbers of articles that contained any of the search terms, that is, computing

$$p = \frac{\sum_{i=1}^{N} w_i}{\sum_{i=1}^{N} (c_i + w_i)},$$

the overall proportion of incorrectly phrased articles in the UK was 91.0% and in the USA was 99.3%.
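The difference between the two summary figures, the per-publisher average and the overall proportion pooled over all articles, can be seen in a short sketch. The outlet names and article counts below are hypothetical and are not the values in Table 1 or Table 2; each outlet is represented by a pair of counts of correctly and incorrectly phrased articles.

```python
# Hypothetical counts: outlet -> (correctly phrased, incorrectly phrased).
counts = {
    "Outlet A": (30, 70),
    "Outlet B": (5, 95),
    "Outlet C": (10, 990),
}


def per_publisher_average(counts):
    """Mean of each outlet's own proportion of incorrectly phrased articles."""
    proportions = [w / (c + w) for c, w in counts.values()]
    return sum(proportions) / len(proportions)


def pooled_proportion(counts):
    """Proportion of incorrectly phrased articles over all outlets combined."""
    incorrect = sum(w for _, w in counts.values())
    total = sum(c + w for c, w in counts.values())
    return incorrect / total


print(f"per-publisher average: {per_publisher_average(counts):.4f}")
print(f"pooled proportion:     {pooled_proportion(counts):.4f}")
```

The per-publisher average weights every outlet equally, whereas the pooled proportion is dominated by the outlets that published the most articles, which is why the two figures generally differ.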

Using the one-sample Kolmogorov–Smirnov test [36], the distributions of per-publisher proportions of incorrectly phrased articles were confirmed to be log-normal, both for the UK and the USA, at the confidence levels and , respectively. The two-sample Kolmogorov–Smirnov test [37] further confirmed that the UK and the USA distributions differ significantly—that is, the null hypothesis of the two sample sets coming from the same log-normal distribution was rejected at the confidence level .

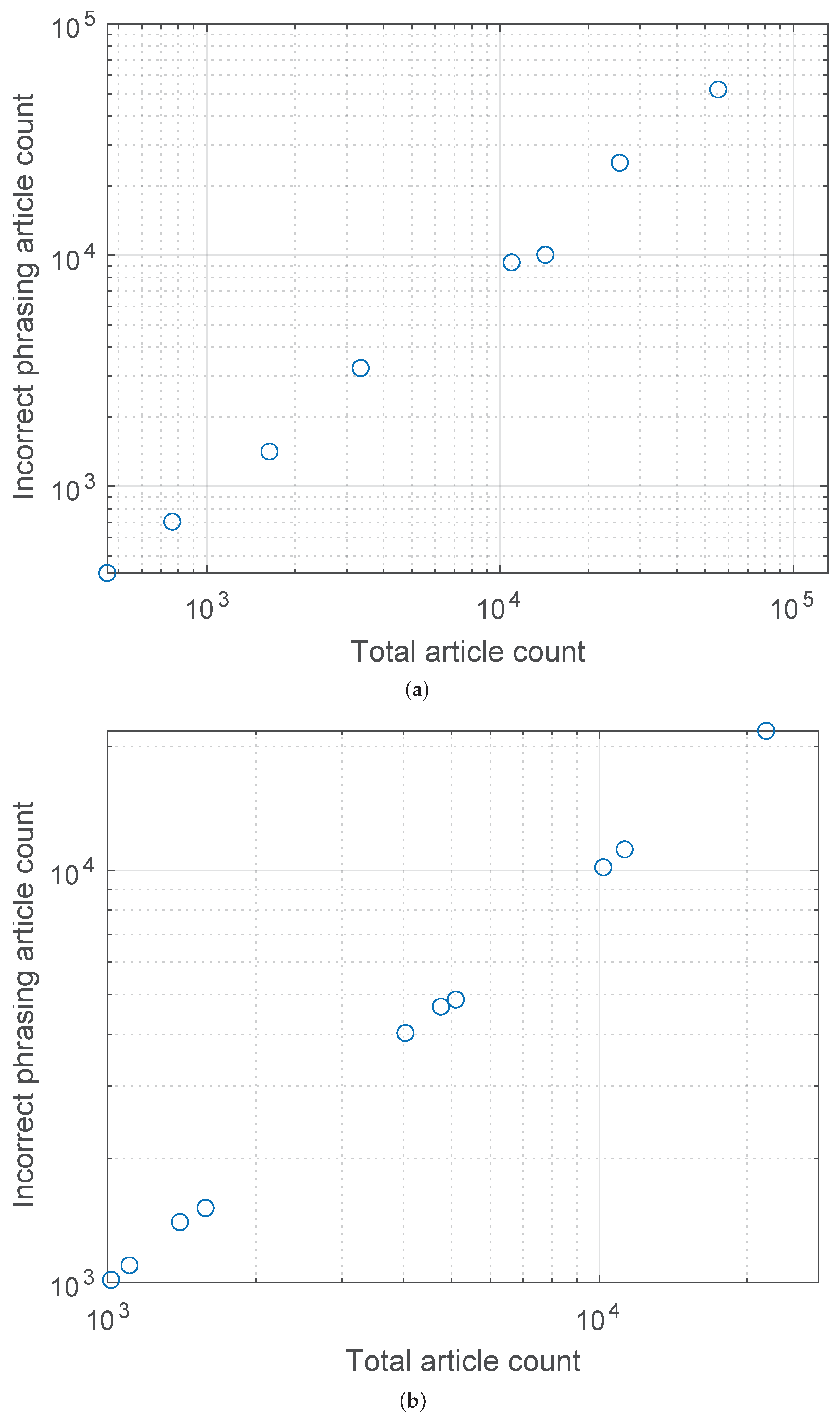

Lastly, the plots in Figure 2a,b show the relationships between the number of incorrectly phrased articles and the total number of articles reporting COVID-related deaths for the UK and the USA, respectively. The corresponding Pearson’s was found to be for the UK and for the USA.

Figure 2.

Relationship between the number of incorrectly phrased articles and the total number of articles reporting COVID-related deaths for (a) the UK and (b) the USA. A highly linear behaviour is readily observed, with the corresponding Pearson’s equal to and , respectively.
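For readers who wish to reproduce this kind of summary statistic, Pearson’s correlation coefficient between two per-outlet series can be computed as sketched below. The function name `pearson` and the article counts are hypothetical and chosen only to illustrate the calculation; they are not the data plotted in Figure 2.

```python
import math


def pearson(xs, ys):
    """Pearson's correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical per-outlet counts (assumptions made for illustration).
total_articles = [100, 100, 1000]
incorrect_articles = [70, 95, 990]

r = pearson(total_articles, incorrect_articles)
print(f"Pearson's correlation coefficient: {r:.4f}")
```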

3.3. Discussion

To begin with, I present a few brief but important comments on the data collection. Firstly, it should be noted that for the sake of uniformity and like-for-like comparison, all data were collected on the same day, namely, 23 August 2020, and include all historical articles, i.e., all articles published on or before that date. Although the exact date in question was selected at random, it was deliberately chosen not to be too early in the pandemic, as a reasonable argument could have been made that journalists (largely unequipped with the kind of expertise needed to understand, communicate, and report such highly technical and novel information) needed a period of adjustment and learning. This possible objection is fully addressed by the choice of 23 August 2020, which is some 8 months following the first identification of COVID-19 in December 2019 [38] and nearly 6 months following the declaration of a pandemic by the World Health Organization (WHO) on 11 March 2020 [39].

The most immediately apparent finding of my analysis in the previous section is the strikingly high proportion of articles that incorrectly described COVID-19 deaths as being ‘from’ or ‘of’ COVID-19. Perhaps even more remarkably, this was found to be the case across all analysed media outlets, both in the UK and the USA. Interestingly, although in both countries all but a few articles used the incorrect phrasings, the transgression in communication was significantly worse in the USA (as I also confirmed statistically in the previous section): in the UK, approximately 1 in 11 articles used the correct ‘with’ phrasing, whereas in the USA, it was fewer than 1 in 100. Indeed, USA’s Newsday did not have a single correctly worded article despite reporting on COVID-19 mortality in 1403 articles, and WSJ, New York Post, and LA Times had only a single correctly worded article each, despite having 4030, 21,850, and 11,260 articles, respectively, on the topic. The only significant outlier, in a positive sense yet with a still exceedingly high proportion of incorrectly phrased articles of approximately 70.5%, is the BBC; the BBC was approximately twice as likely to use the correct phrasing in reporting COVID-19 mortality as the next best outlet and more than 3 times as likely as the analysed UK media outlets on average. None of the USA outlets stands out from the rest, with even the most accurate one, USA Today, using the correct wording in only approximately 4% of its articles.

Perhaps the most concerning finding of my analysis concerns the relationship between the proportion of incorrect reporting by a media outlet and the outlet’s volume of COVID-19 mortality reporting, summarised by the plots in Figure 2a,b. As stated in the previous section, we find that with a remarkable regularity (Pearson’s for the UK and the USA outlets being and , respectively) the greater the number of articles an outlet published on COVID-19 mortality, the greater the proportion (n.b. not the absolute number, which would be expected) of articles that used incorrect phrasing with respect to it. There are multiple reasons that could explain this. For example, it is possible that outlets whose journalists’ collective values are more caution-driven have, as a consequence, published more on the topic. This would make the transgression—for a transgression has certainly taken place in the sense that an incorrect claim was made—entirely of an intellectual, rather than ethical nature. On the other hand, it is impossible to dismiss the possibility of a more sinister cause, such as increased fear-oriented reporting being driven by commercial or other interests. Numerous other more complex reasons are possible too. Considering that the available data do not allow us to favour one hypothesis over another, it would be inappropriate to speculate on the topic; nevertheless, it is important to note the trend and highlight it as an important one for future research. Lastly, note that my analysis found no relationship between the circulation of a newspaper and the corresponding COVID-19 mortality reporting phrasing accuracy.

4. Summary and Conclusions

The coronavirus disease 2019 pandemic has placed science in the spotlight. However, science does not exist as an immaterial Platonic form: research is performed by humans, science-based decisions are made by humans, and science is communicated by humans. Human fallibility cannot be taken out of science. Scientists, decision-makers, and communicators not only make genuine errors but also have egos, compete for jobs and prestige, exhibit biases, and hold political and broader philosophical beliefs, all of which affect how science is materialised and used. A failure to recognise faults in these processes when they occur can only serve to undermine the general public’s trust in science and its application [13,40].

In this article, I focused on a specific and highly relevant aspect of science communication in the context of the COVID-19 pandemic, namely, that of disease mortality reporting. Considering the amount of confusion and anger across the spectrum that the issue has caused, I began with a discussion of what it means to say that a person has died from a disease. In particular, I explained that while this may be an acceptable phrasing in some circumstances, strictly speaking, it is not a meaningful one and as such should generally be avoided. Indeed, this nuance is crucial in situations when there is a significant interaction between different causal factors, as is the case regarding COVID-19 mortality. Hence, I clarified how mortality ought to be assessed, namely, on a cohort (population) basis, and summarised the technical fundamentals that underpin the analysis needed to arrive at such estimates. The developed insight was concretized with an analysis of the controversy surrounding the COVID-19 mortality recording and reporting process in England. I showed how the decisions made were widely misunderstood and incorrectly interpreted in the media, and demystified the decisions underlying the process.

The second part of the article turned its attention to the quantitative analysis of COVID-19 mortality reporting by the mainstream media, namely, by the top-circulation daily newspapers in the UK and the USA, as well as the UK’s two leading TV broadcasters, the BBC and ITV. The results are striking: in both countries, the vast majority of articles incorrectly reported COVID-19 deaths, erroneously attributing a causative link between COVID-19 and the death of any person with a past positive COVID-19 test. This effect was observed with remarkable uniformity over the different outlets considered, with the transgression being significantly worse in the USA than in the UK (approximately 99% vs. 89% of articles, respectively).

The effects of poor science communication of the kind considered in this paper must not be underestimated. Not only does incorrect information provide a faulty basis for individual decision-making, but it can also penetrate the highest levels of the legislative and executive branches of government. Indeed, I leave the reader with three poignant examples. The first of these is from the address to the nation in February 2021 by the president of the USA, Joe Biden [41]:

“Today we mark a truly grim, heartbreaking milestone—500,071 dead.”,

the second one from the statement on coronavirus delivered by the UK’s Prime Minister, Boris Johnson, on 26 January 2021 [42]:

“I am sorry to have to tell you that today the number of deaths recorded from Covid in the UK has surpassed 100,000... [all emphasis added]”,

and the last one from the speech by Keir Starmer, then Leader of the Labour Party, at the Labour Party Conference 2021 [43]:

“We have now lost 133,000 people to Covid. [all emphasis added]”

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The author declares no conflict of interest.

References

- Walsh, J. Public Attitude Toward Science Is Yes, but— Survey results in NSF report show backing is still strong, indicate that the best informed are the most favorably disposed. Science 1982, 215, 270–272.

- Claessens, M. Public perception of science in Eastern and Central Europe. In Proceedings of the 8th International Conference on Public Communication of Science and Technology (PCST), Barcelona, Spain, 3–6 June 2004; pp. 427–429.

- Cobb, M.D.; Macoubrie, J. Public perceptions about nanotechnology: Risks, benefits and trust. J. Nanoparticle Res. 2004, 6, 395–405.

- Skinner, G.; Clemence, M. Veracity Index 2016: Trust in Professions; Ipsos MORI: London, UK, 2016.

- Bredahl, L. Determinants of consumer attitudes and purchase intentions with regard to genetically modified food–results of a cross-national survey. J. Consum. Policy 2001, 24, 23–61.

- Allum, N.; Sturgis, P.; Tabourazi, D.; Brunton-Smith, I. Science knowledge and attitudes across cultures: A meta-analysis. Public Underst. Sci. 2008, 17, 35–54.

- Brown, R.C.; Savulescu, J.; Williams, B.; Wilkinson, D. Passport to freedom? Immunity passports for COVID-19. J. Med. Ethics 2020, 46, 652–659.

- Gaglione, C.; Purificato, I.; Rymkevich, O.P. Covid-19 and labour law: Italy. Ital. Labour Law e-J. 2020, 13, 306–313.

- Brzezinski, A.; Kecht, V.; Van Dijcke, D.; Wright, A.L. Belief in Science Influences Physical Distancing in Response to Covid-19 Lockdown Policies; BFI Working Paper; University of Chicago, Becker Friedman Institute for Economics: Chicago, IL, USA, 2020.

- Goldman, A.I. Reliabilism, veritism, and epistemic consequentialism. Episteme 2015, 12, 131–143.

- Pritchard, D. Knowledge and understanding. In Virtue Epistemology Naturalized; Springer: Cham, Switzerland, 2014; pp. 315–327.

- Arandjelović, O. AI, democracy, and the importance of asking the right questions. AI Ethics J. 2021, 2, 2.

- Cooper, J.; Dimitriou, N.; Arandjelović, O. How Good is the Science That Informs Government Policy? A Lesson From the UK’s Response to 2020 CoV-2 Outbreak. J. Bioethical Inq. 2021, 4, 561–568.

- Lavazza, A.; Farina, M. The role of experts in the Covid-19 pandemic and the limits of their epistemic authority in democracy. Front. Public Health 2020, 8, 356.

- Gerbaudo, P. The pandemic crowd. J. Int. Aff. 2020, 73, 61–76.

- Burns, T.W.; O’Connor, D.J.; Stocklmayer, S.M. Science communication: A contemporary definition. Public Underst. Sci. 2003, 12, 183–202.

- Fischhoff, B. The sciences of science communication. Proc. Natl. Acad. Sci. USA 2013, 110, 14033–14039.

- Bubela, T.; Nisbet, M.C.; Borchelt, R.; Brunger, F.; Critchley, C.; Einsiedel, E.; Geller, G.; Gupta, A.; Hampel, J.; Hyde-Lay, R.; et al. Science communication reconsidered. Nat. Biotechnol. 2009, 27, 514–518.

- de Bruin, W.B.; Bostrom, A. Assessing what to address in science communication. Proc. Natl. Acad. Sci. USA 2013, 110, 14062–14068.

- Trench, B. Towards an analytical framework of science communication models. In Communicating Science in Social Contexts; Cheng, D., Claessens, M., Gascoigne, T., Metcalfe, J., Schiele, B., Shi, S., Eds.; Springer: Dordrecht, The Netherlands, 2008; pp. 119–135.

- Scheufele, D.A. Science communication as political communication. Proc. Natl. Acad. Sci. USA 2014, 111, 13585–13592.

- Leung, K.M.; Elashoff, R.M.; Afifi, A.A. Censoring issues in survival analysis. Annu. Rev. Public Health 1997, 18, 83–104.

- Dimitriou, N.; Arandjelović, O.; Harrison, D.J.; Caie, P.D. A principled machine learning framework improves accuracy of stage II colorectal cancer prognosis. NPJ Digit. Med. 2018, 1, 52.

- Gavriel, C.G.; Dimitriou, N.; Brieu, N.; Nearchou, I.P.; Arandjelović, O.; Schmidt, G.; Harrison, D.J.; Caie, P.D. Assessment of Immunological Features in Muscle-Invasive Bladder Cancer Prognosis Using Ensemble Learning. Cancers 2021, 13, 1624.

- Nearchou, I.P.; Soutar, D.A.; Ueno, H.; Harrison, D.J.; Arandjelovic, O.; Caie, P.D. A comparison of methods for studying the tumor microenvironment’s spatial heterogeneity in digital pathology specimens. J. Pathol. Inform. 2021, 12, 6.

- Goel, M.K.; Khanna, P.; Kishore, J. Understanding survival analysis: Kaplan-Meier estimate. Int. J. Ayurveda Res. 2010, 1, 274.

- Wallin, L.; Strandberg, E.; Philipsson, J.; Dalin, G. Estimates of longevity and causes of culling and death in Swedish warmblood and coldblood horses. Livest. Prod. Sci. 2000, 63, 275–289.

- Malatack, J.J.; Schaid, D.J.; Urbach, A.H.; Gartner Jr, J.C.; Zitelli, B.J.; Rockette, H.; Fischer, J.; Starzl, T.E.; Iwatsuki, S.; Shaw, B.W. Choosing a pediatric recipient for orthotopic liver transplantation. J. Pediatr. 1987, 111, 479–489.

- Prentice, R.L.; Breslow, N.E. Retrospective studies and failure time models. Biometrika 1978, 65, 153–158.

- Copas, A.J.; Farewell, V.T. Incorporating retrospective data into an analysis of time to illness. Biostatistics 2001, 2, 1–12.

- Matt Hancock Launches Urgent Review into Fiasco at Public Health England as It’s Revealed Anyone Who Has Ever Died after Testing Positive for COVID-19 Has Been Recorded as a ‘Coronavirus Death’—Even If They Were Hit by a Bus. Available online: https://www.dailymail.co.uk/news/article-8531765/Matt-Hancock-launches-urgent-review-way-PHE-counts-coronavirus-deaths.html (accessed on 25 December 2021).

- Coronavirus Death Numbers: Health Secretary Matt Hancock Orders Urgent Review into Public Health England Data. Available online: https://news.sky.com/story/coronavirus-health-secretary-matt-hancock-orders-urgent-review-into-public-health-england-death-data-12030392 (accessed on 25 December 2021).

- Are Official Figures Overstating England’s Covid-19 Death Toll? Available online: https://www.theguardian.com/world/2020/jul/21/analysis-why-englands-covid-19-death-toll-is-wrong-but-not-by-much (accessed on 25 December 2021).

- Montalvan, V.; Lee, J.; Bueso, T.; De Toledo, J.; Rivas, K. Neurological manifestations of COVID-19 and other coronavirus infections: A systematic review. Clin. Neurol. Neurosurg. 2020, 194, 105921.

- Thrasher, S.W. Why COVID Deaths Have Surpassed AIDS Deaths in the U.S. Available online: https://www.scientificamerican.com/article/why-covid-deaths-have-surpassed-aids-deaths-in-the-u-s/ (accessed on 25 December 2021).

- Kaner, H.C.; Mohanty, S.G.; Lyons, J. Critical values of the Kolmogorov-Smirnov one-sample tests. Psychol. Bull. 1980, 88, 498.

- Friedrich, T.; Schellhaas, H. Computation of the percentage points and the power for the two-sided Kolmogorov-Smirnov one sample test. Stat. Pap. 1998, 39, 361–375.

- Huang, C.; Wang, Y.; Li, X.; Ren, L.; Zhao, J.; Hu, Y.; Zhang, L.; Fan, G.; Xu, J.; Gu, X.; et al. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet 2020, 395, 497–506.

- WHO Director-General’s Opening Remarks at the Media Briefing on COVID-19—11 March 2020. Available online: https://www.who.int/director-general/speeches/detail/who-director-general-s-opening-remarks-at-the-media-briefing-on-covid-19---11-march-2020 (accessed on 25 December 2021).

- Nielsen, R.K.; Kalogeropoulos, A.; Fletcher, R. Most in the UK say news media have helped them respond to COVID-19, but a third say news coverage has made the crisis worse. Reuters Inst. Study J. 2020, 25, 1–8.

- Covid: Biden Calls 500,000 Death Toll a Heartbreaking Milestone. Available online: https://www.bbc.co.uk/news/world-us-canada-56159756 (accessed on 25 December 2021).

- Prime Minister’s Statement on Coronavirus (COVID-19): 26 January 2021. Available online: https://www.gov.uk/government/speeches/prime-ministers-statement-on-coronavirus-covid-19-26-january-2021 (accessed on 29 December 2021).

- Conference Speech: Keir Starmer. Available online: https://labour.org.uk/press/conference-speech-keir-starmer/ (accessed on 25 December 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).