Deep-Learning Image Stabilization for Adaptive Optics Ophthalmoscopy

Abstract

1. Introduction

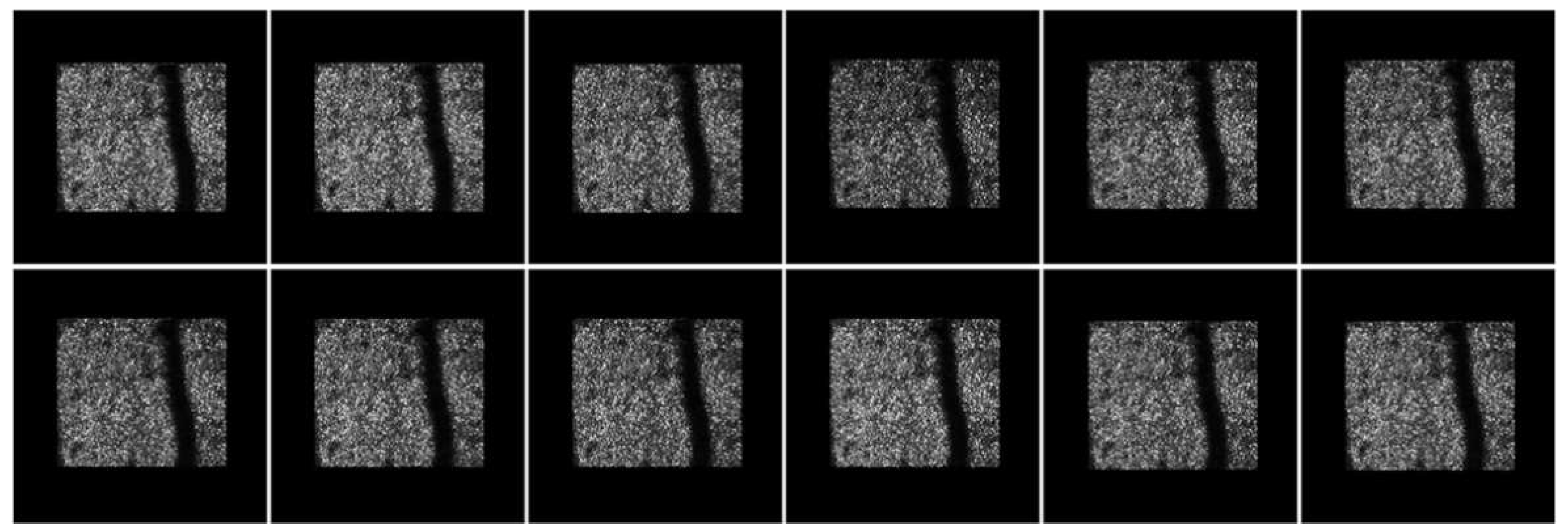

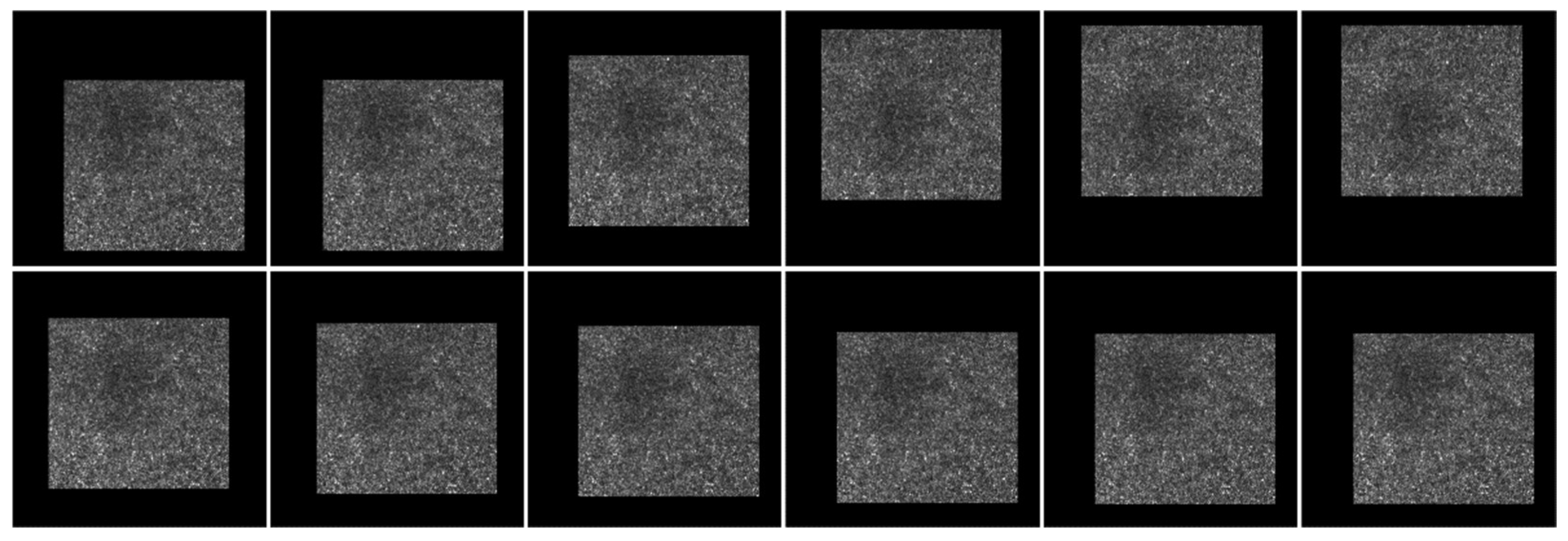

2. Materials and Methods

2.1. Data

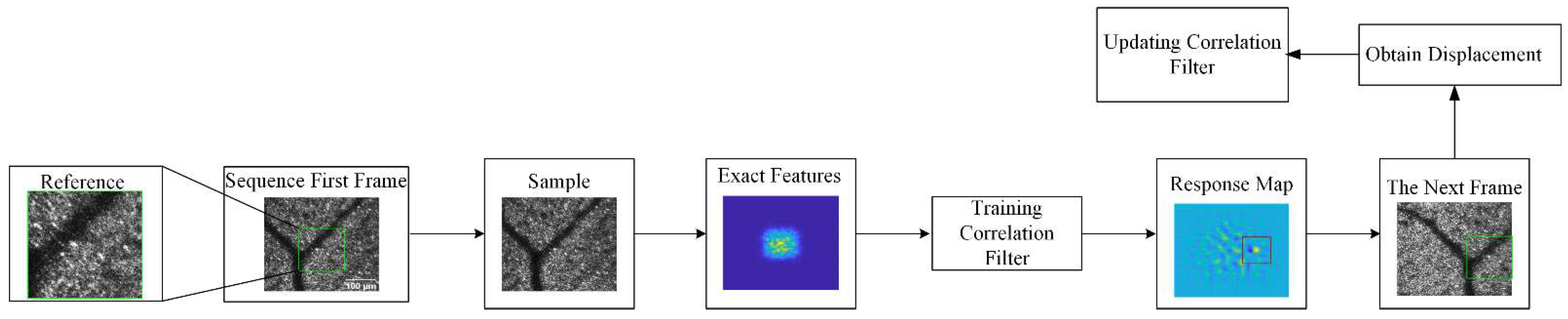

2.2. Baseline Approach: ECO (Efficient Convolution Operators for Tracking)

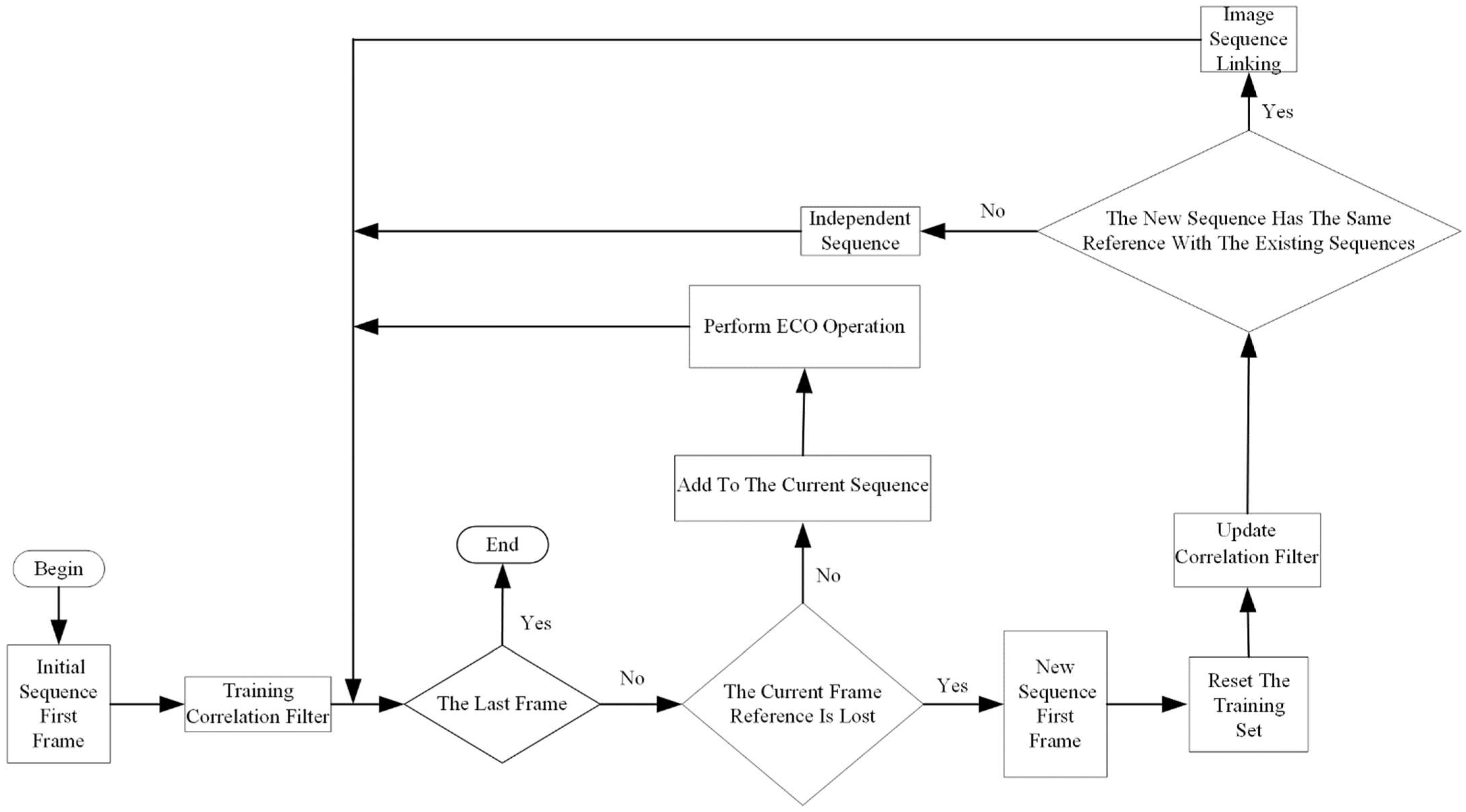

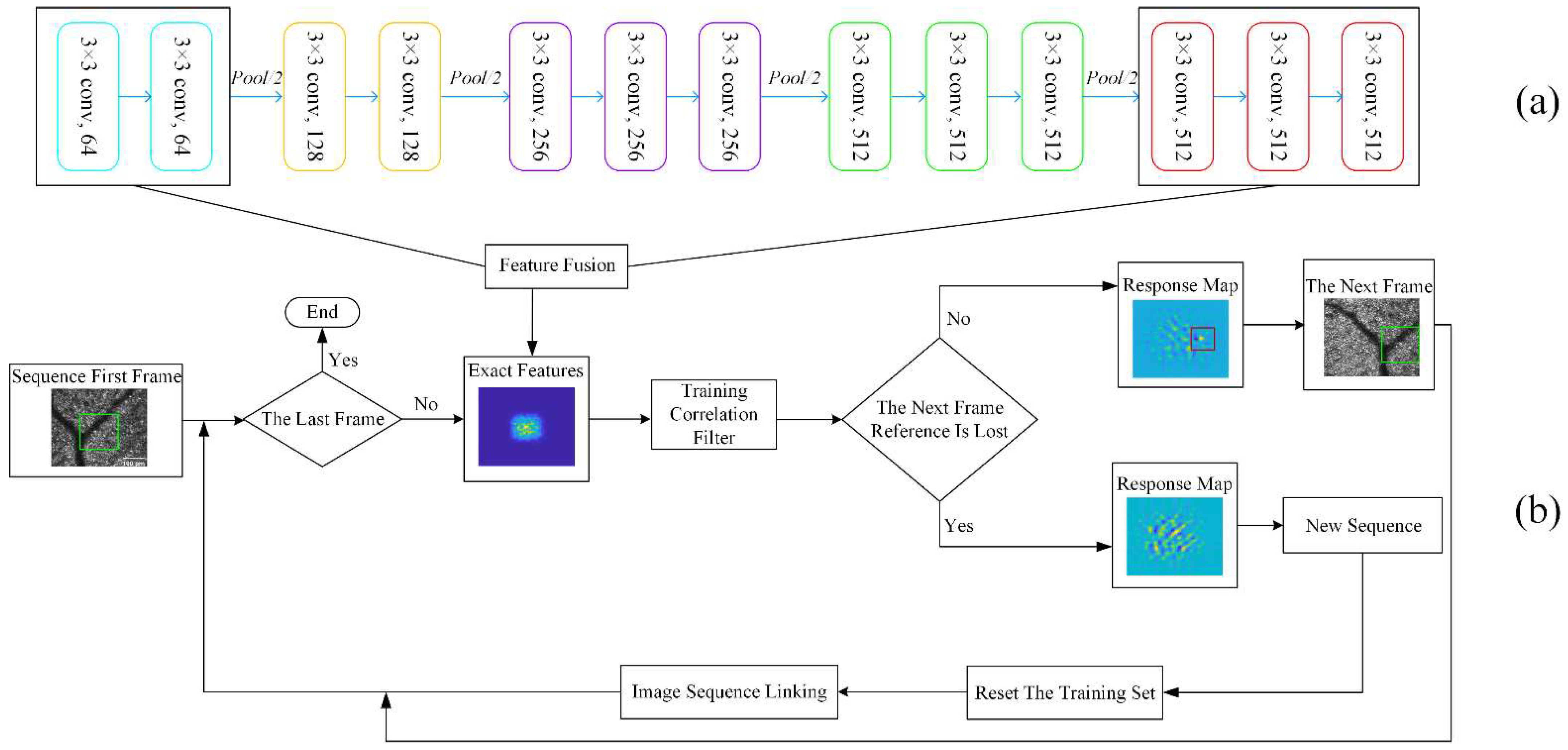

2.3. Our Approach

2.3.1. Preprocessing

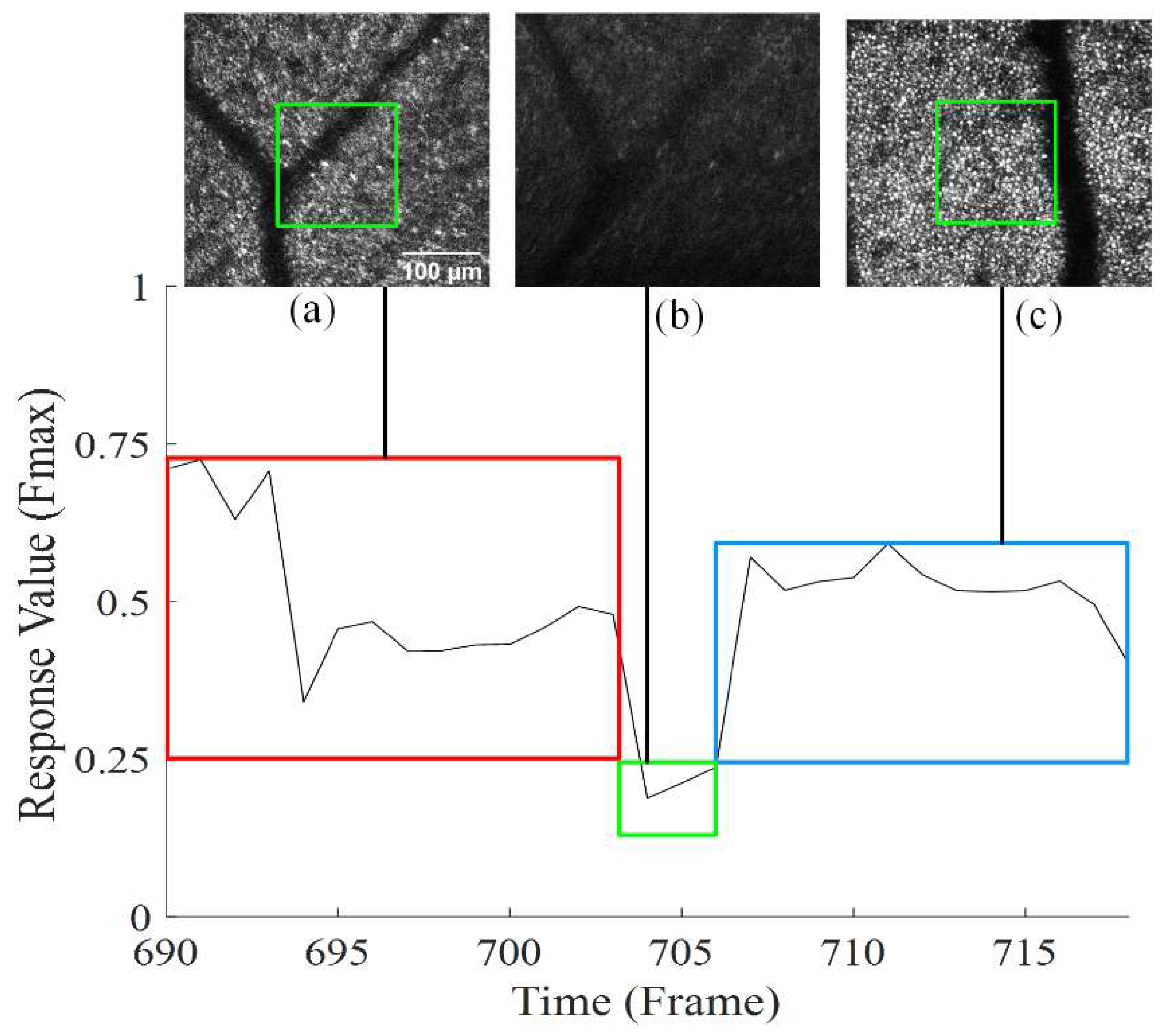

2.3.2. Reset Training Set

2.3.3. Image Sequence Linking

3. Results

3.1. Experimental Environment

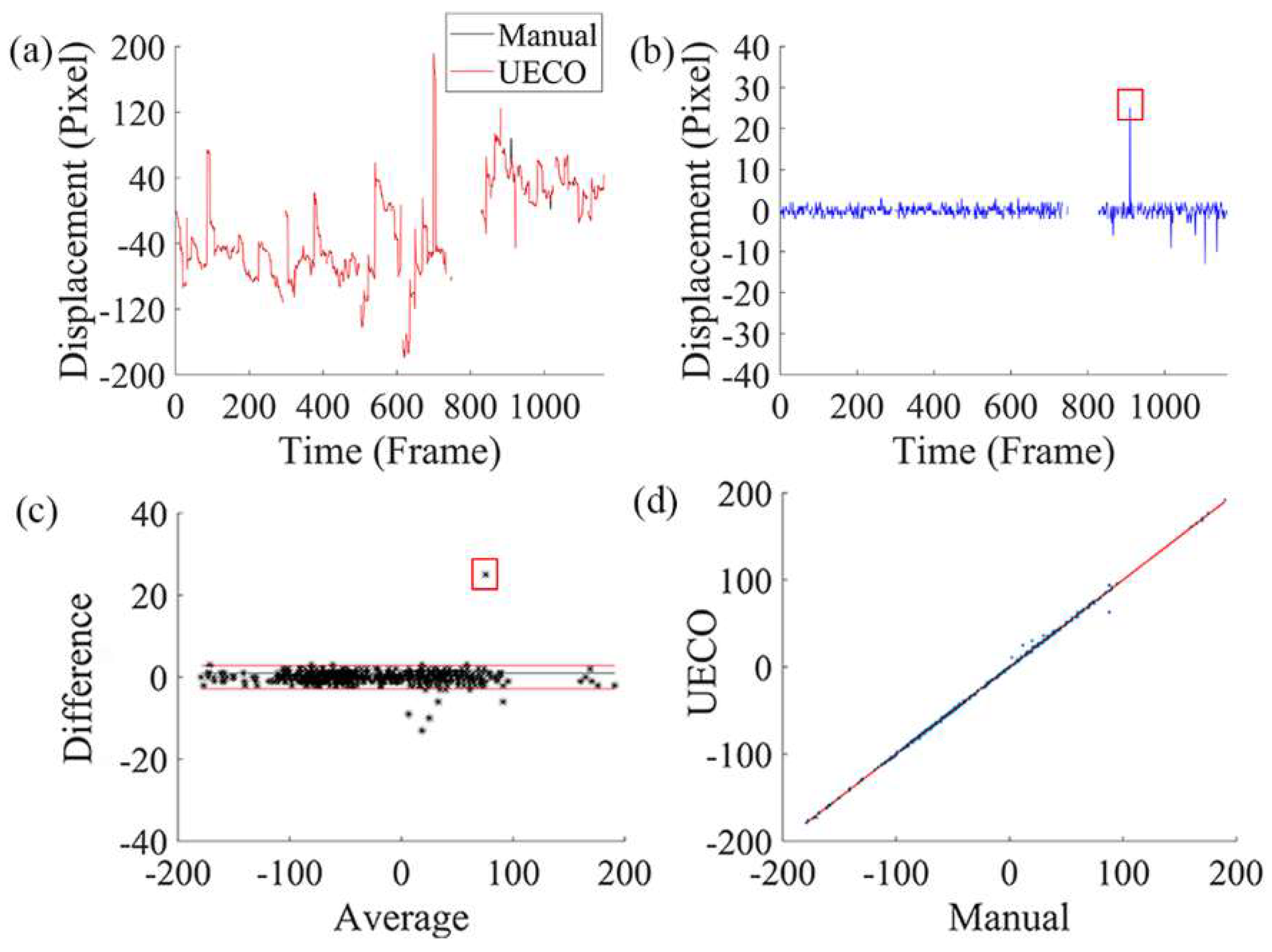

3.2. Comparison with Manual Registration

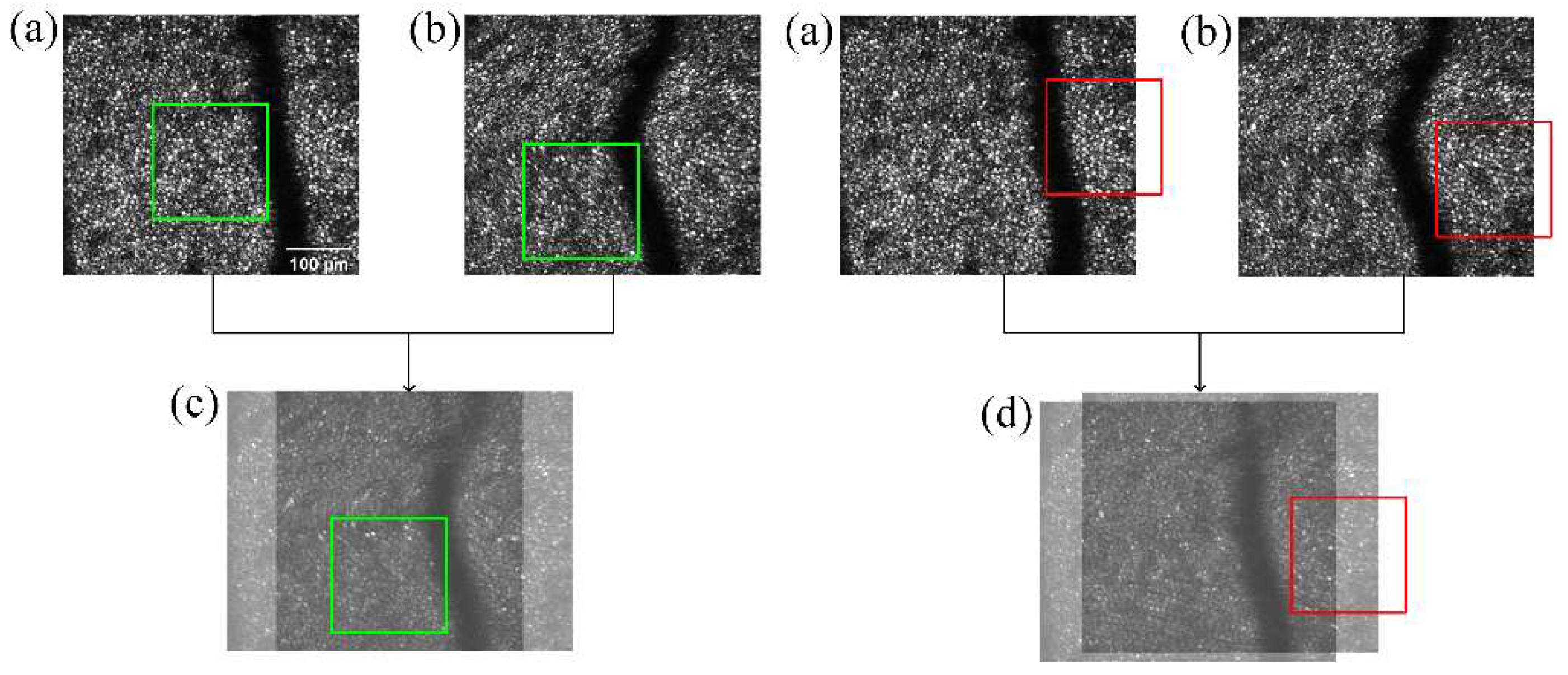

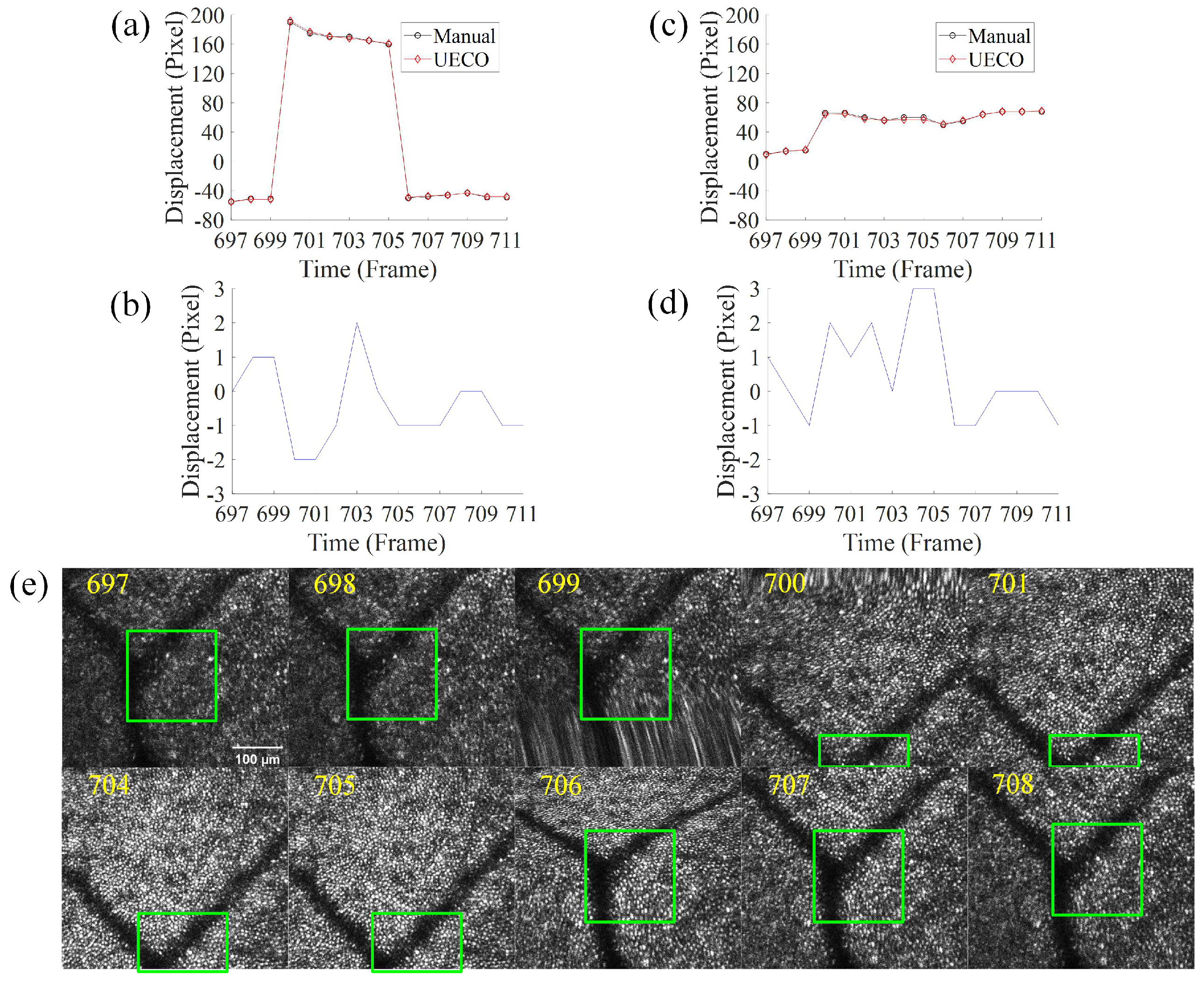

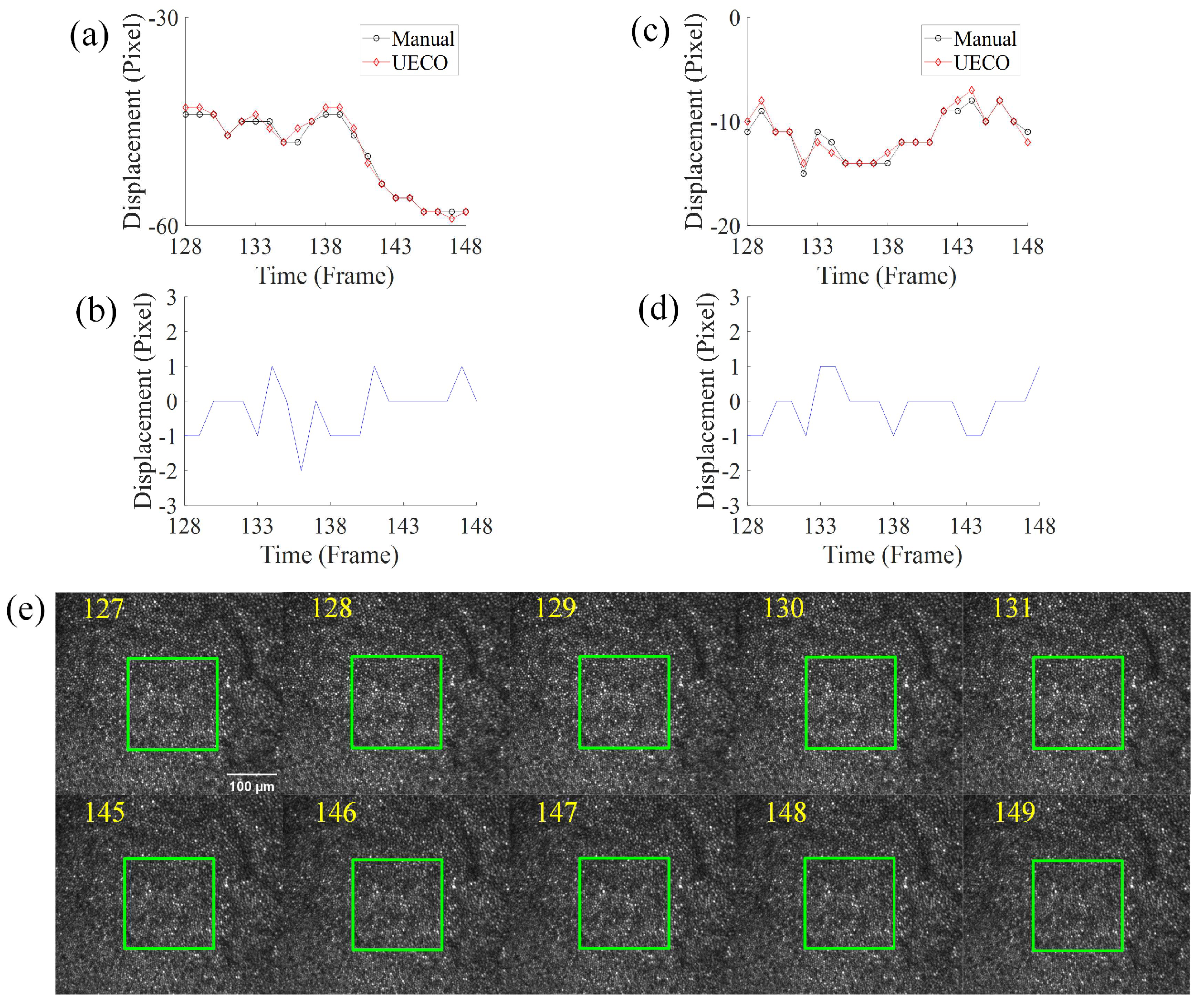

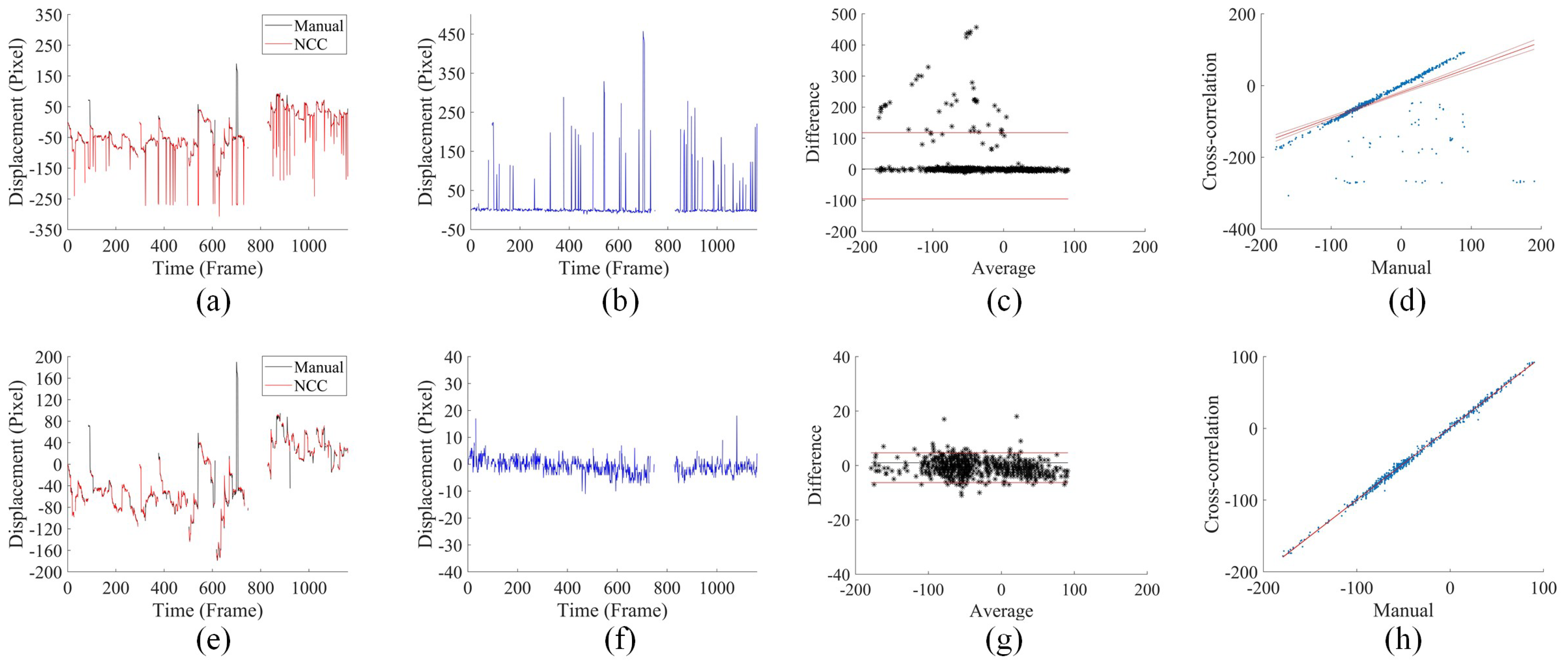

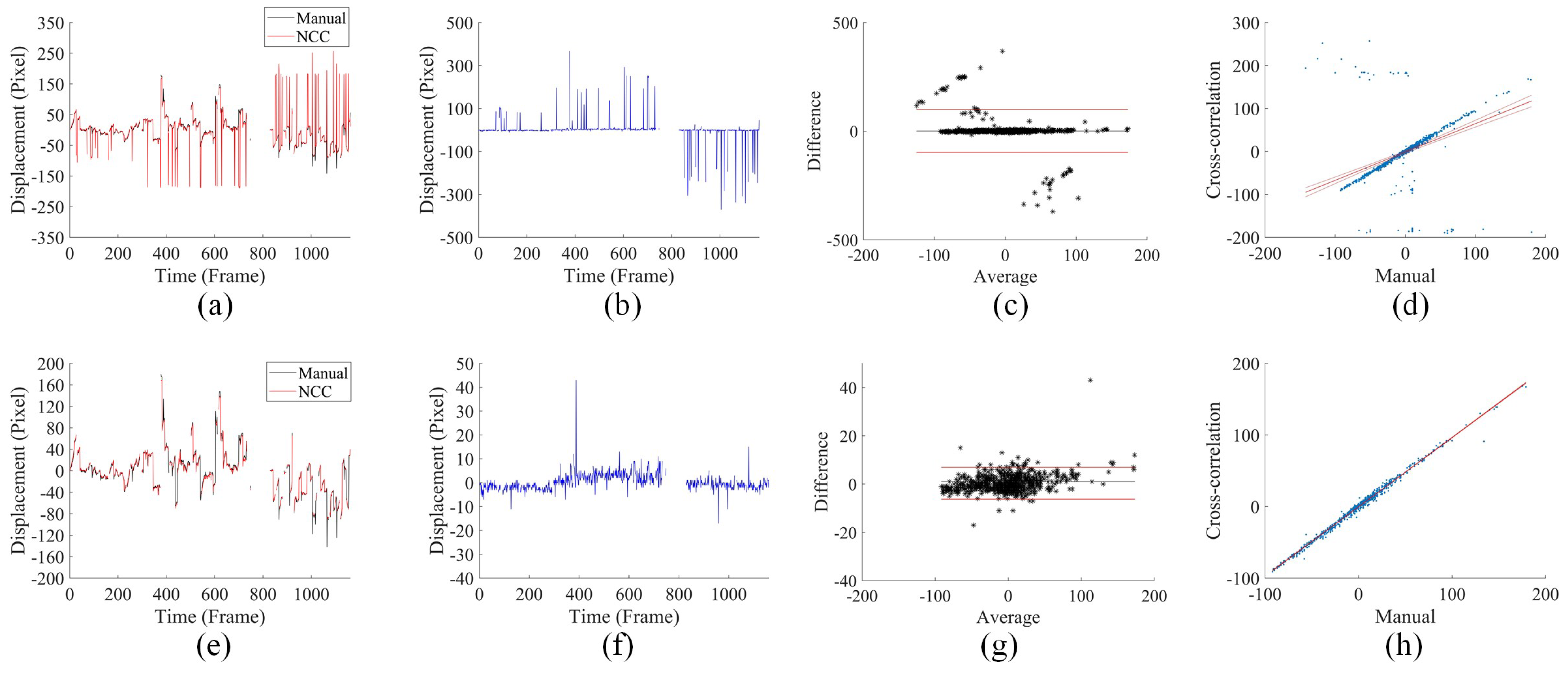

3.3. Displacement Analysis under Fast Saccadic Eye Motion and Slow Drifts

3.4. Comparison with Cross-Correlation-Based Method
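
Cross-correlation registration generally locates a reference template inside each incoming frame at the position that maximizes the normalized cross-correlation (NCC) score. Briechle and Hanebeck describe a fast variant of this kind of template matching. The sketch below is a minimal brute-force version in plain Python. Its only purpose is to illustrate the NCC score and the exhaustive peak search. It is not the authors' implementation: the helper names `ncc` and `match_template` are hypothetical, and the sketch omits the FFT and running-sum optimizations that make the fast variant practical on full-size frames.

```python
import math
from typing import List, Tuple

# A grayscale image as a list of rows (assumption: plain nested lists for illustration).
Image = List[List[float]]


def ncc(patch: Image, template: Image) -> float:
    """Zero-mean normalized cross-correlation of two equally sized 2D arrays.

    Returns a score in [-1, 1]. Returns 0.0 if either input is constant,
    because the correlation is undefined in that case.
    """
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    mean_p = sum(p) / len(p)
    mean_t = sum(t) / len(t)
    # Covariance term (unnormalized).
    num = sum((a - mean_p) * (b - mean_t) for a, b in zip(p, t))
    # Product of the two (unnormalized) standard deviations.
    den = math.sqrt(sum((a - mean_p) ** 2 for a in p) * sum((b - mean_t) ** 2 for b in t))
    return num / den if den > 0 else 0.0


def match_template(frame: Image, template: Image) -> Tuple[int, int, float]:
    """Slide the template over every valid position in the frame (no padding)
    and return (row, col, score) for the top-left corner with the highest NCC."""
    th, tw = len(template), len(template[0])
    fh, fw = len(frame), len(frame[0])
    best = (0, 0, -math.inf)
    for r in range(fh - th + 1):
        for c in range(fw - tw + 1):
            patch = [row[c:c + tw] for row in frame[r:r + th]]
            score = ncc(patch, template)
            if score > best[2]:  # keep the first maximum in raster order
                best = (r, c, score)
    return best
```

For stabilization, you could estimate a frame's displacement by subtracting the template's position in the reference frame from the matched `(row, col)`. The score is computed on mean-subtracted, variance-normalized data, so uniform changes in brightness and contrast do not affect it. The exhaustive search, however, costs on the order of (frame area × template area) operations per frame.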

3.5. Comparison of the Accuracy of the Cross-Correlation-Based Method and UECO

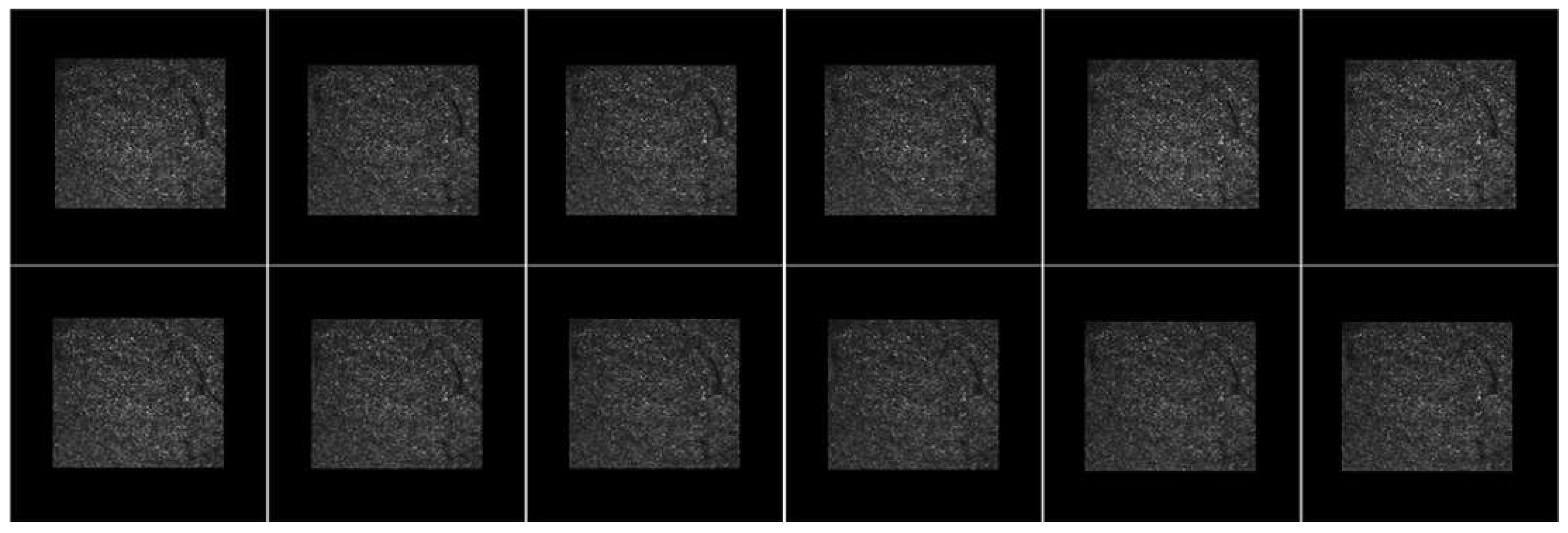

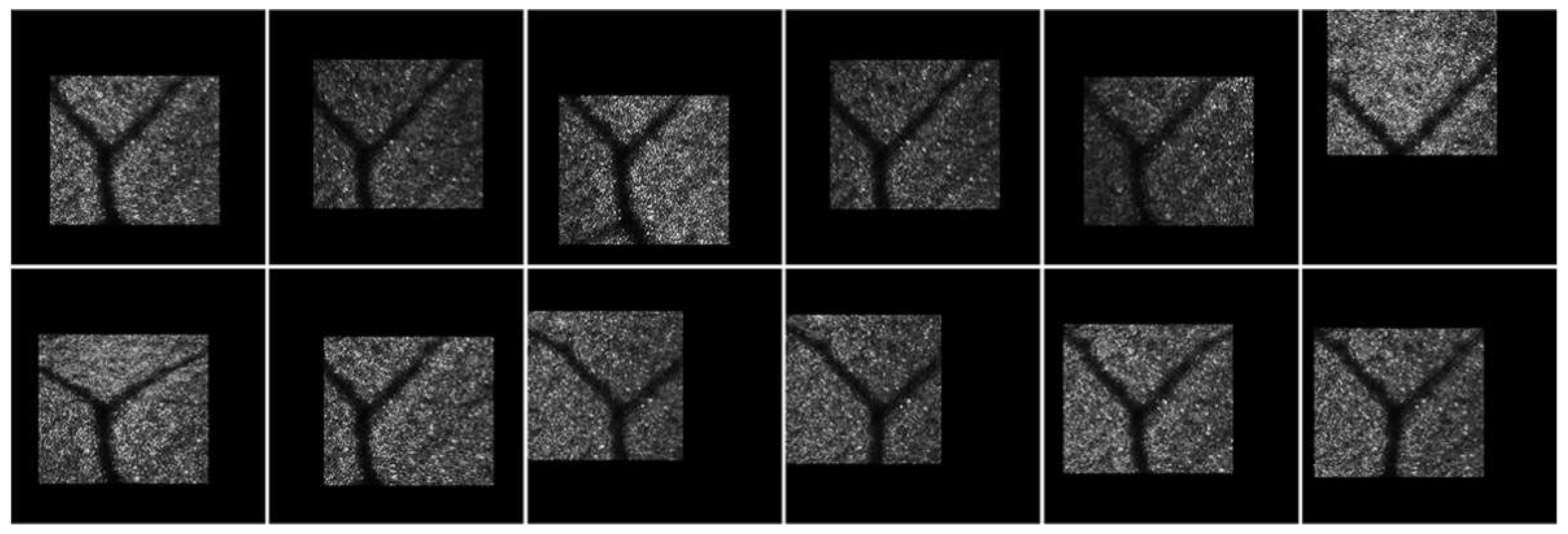

3.6. AOSLO Image Stabilization Results

4. Discussion

4.1. Difference in Experiments

4.2. Limitation

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Method | Saccade | Drift | Total |
|---|---|---|---|
| UECO | (−1.52, 0.72) | (−1.01, 0.53) | (−1.47, 1.41) |
| NCC | N/A | (−1.03, 3.01) | (−42.94, 65.72) |
| NCC (erroneous data removed) | N/A | (−1.03, 3.01) | (−3.61, 1.99) |

| Method | Saccade | Drift | Total |
|---|---|---|---|
| UECO | (−0.87, 1.94) | (−0.80, 0.51) | (−1.85, 1.68) |
| NCC | N/A | (−2.48, −0.64) | (−49.52, 51.03) |
| NCC (erroneous data removed) | N/A | (−2.48, −0.64) | (−3.02, 3.71) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, S.; Ji, Z.; He, Y.; Lu, J.; Lan, G.; Cong, J.; Xu, X.; Gu, B. Deep-Learning Image Stabilization for Adaptive Optics Ophthalmoscopy. Information 2022, 13, 531. https://doi.org/10.3390/info13110531
