Abstract

Business-oriented decision support systems have been proposed for several decades in the e-commerce and textile industries. However, these Decision Support Systems (DSS) have not been very productive in terms of business decision delivery. In our proposed model, we introduce a content-based image retrieval (CBIR) model built on a DSS and recommendation system for the textile industry, usable either offline or online. We used the Fashion MNIST dataset developed by Zalando to train our deep learning model. Our proposed hybrid model demonstrates how a DSS can be integrated with a system that separates customers based on their personal characteristics in order to tailor product recommendations using behavioral analytics, which is trained on MBTI personality data and DEAP EEG data containing numerous EEG brain-wave recordings. With this hybrid, a DSS can also show product usage analytics. Our proposed model achieved the highest accuracy among the state-of-the-art models compared, which we attribute to its qualitative analysis. In the first part of our analysis, we used deep learning to train our CBIR model with different classifiers such as VGG-net, Inception-Net, and U-net, which achieved an accuracy of 98.2% with an error rate of about 2%. The result was validated using different performance metrics such as F-score, F-weight, Precision, and Recall. The second part of our model was tested with different machine learning algorithms and reached an accuracy of 89.9%. Thus, the entire model has been trained, validated, and tested separately to gain maximum efficiency. Our proposal for a DSS, which integrates several subsystems with distinct functional sets and several model subsystems, is what makes this study special. Customer preference is one of the major problems facing merchants in the textile industry. Additionally, it can be extremely difficult for retailers to predict customer interests and preferences and to create products that fulfill those needs. The three innovations presented in this work are a conceptual model for personality characterization that uses an amalgamation of an ECG classification model, a proposal for a textile image retrieval model using a Denoising Auto-Encoder, and a language model based on the MBTI for customer rating. Additionally, we include a section showing how blockchain integration in data pre-processing can enhance security, and how AI-based software quality assurance applies in a multi-model system.

1. Introduction

The Decision Support System (DSS) is widely used for various kinds of business industries. Any DSS system is compiled with a large-scale database system under a precise level of the user interface. These knowledge-based systems are used in businesses for forecasting, product planning, supply chain management, analytics, prediction, and many more. The textile industry is well suited for these DSSs due to its large area of coverage as a multi-production market. The textile industry’s e-commerce platform increases customer adoption by selecting and optimizing these preferences based on their behavior analytics, searching patterns, and click stream generated data. The implementation of such a content-based data-based system can be adapted in the textile industry for a keyword-based searching method followed by a user-provided interface used to retrieve content and context-based textile images from a database [1]. Deep learning models have gained significant recognition in the area of business for classification and prediction efficiency. When a user wants to search data-based or content-based image retrieval, a definite system is required to support this user interface, followed by natural language processing-based personality and EEG-based emotion classifiers integrated into a hybrid system. The query is to be followed by an image as input or text, which can be specified to search in a database [2,3,4].

Automatic personality classification has been widely discussed in the NLP literature. The retrieved personality is based on the Myers–Briggs personality type indicator. The proposed model combines deep learning and NLP algorithms to extract personality types for customer preference segmentation. It relies on a pre-defined, questionnaire-based corpus of MBTI personality dimensions such as introversion, extroversion, intuition, sensing, judging, and perceiving. This model is tested and validated with algorithms such as LSTM and language transformers for high precision and accuracy. The system is further extended by an EEG-based emotion recognition system, which is divided into four procedures: preprocessing, feature extraction, feature selection, and classification. The brain signature-based features are categorized into time and frequency domains, namely fractal dimension features, entropy, and mean variances [5].

The major drawback of conventional DSS systems is that they do not have replicable search index-based matching algorithms to match and retrieve exact queries delivered as input in the form of responses and images. We aim to propose a hybrid model which can further be improved and compiled into an API-based DSS system. We have trained and tested all three models on publicly available datasets: the DEAP dataset contains EEG recordings; the Fashion MNIST dataset contains images of various clothes; and the MBTI personality dataset has been preprocessed using principal component analysis to reduce its size and remove redundant data. Dataset 1 has been tested and validated using several deep learning classifiers such as U-net, VGG, and an ensemble method to gain the maximum confidence level in the model. A pre-trained transfer learning model has been applied to the EEG dataset, achieving a classification accuracy of 89.9%. Dataset 3 has been optimized and implemented using supervised learning classifiers: logistic regression, decision tree, support vector machine (SVM), and AdaBoost.

Although it has not been solved, Fashion-MNIST is a benchmark dataset that was offered as a substitute for MNIST, and models may consistently reach error rates of 10% or lower on it. Similar to MNIST, it can serve as a helpful starting point for creating and honing an approach to image classification problems using convolutional neural networks. We can create a new model from scratch rather than researching successful models that have been applied to the dataset. We can use the train and test datasets that are already included in the dataset. We can further divide the training set into a train and a validation dataset to calculate a model’s performance for a specific training run. It is thus possible to plot the performance for each run on the train and validation datasets.

An EEG, which is regarded as a physiological indicator, can show the electrical activity of the neuronal cells grouped across the human cerebral cortex. EEG is used to capture this activity and is reliable for emotion identification because it assesses emotions more objectively than non-physiological indicators such as facial expression [6]. It has been suggested that the power spectral bands, which are among the most comprehensive features of EEG, can be used for categorizing basic emotions. Additionally, external interferences such as audio noise or the sense of touch may add artifacts to the brainwave signals during collection, and these artifacts need to be eliminated by applying filtering algorithms [6]. Finally, to examine and evaluate the specific brainwave bands for emotion recognition with machine learning algorithms, the brainwave signals need to be translated from the time domain to the frequency domain using the fast Fourier transform.

We investigated how to categorize personalities using pre-trained language models (BERT) [7]. The proposed model’s accuracy for the classification task was 0.479. Additionally, we obtained losses of roughly 0.02 for language generation. Using more extensive and well-organized datasets could help improve this model. Alternatively, we can use a larger BERT model instead of BERT-base (BERT-large, with 340 million parameters) if compute and memory resources allow [8].
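The time-to-frequency conversion described above for EEG band analysis can be illustrated with a minimal sketch. It assumes a single-channel signal, a sampling rate of 128 Hz, and conventional band edges; the band limits, the function name `band_powers`, and the synthetic test signal are illustrative assumptions, not values taken from this study.

```python
import numpy as np

# Conventional EEG band edges in Hz (an assumption; exact cut-offs vary by study).
BANDS = {
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}

def band_powers(signal, fs):
    """Return the mean spectral power per band for a 1-D signal sampled at fs Hz."""
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()                    # remove the DC offset
    spectrum = np.fft.rfft(signal)                     # time domain -> frequency domain
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)   # frequency of each FFT bin
    power = np.abs(spectrum) ** 2 / signal.size
    return {
        name: float(power[(freqs >= lo) & (freqs < hi)].mean())
        for name, (lo, hi) in BANDS.items()
    }

# Synthetic stand-in for an EEG channel: a 10 Hz sine plus weak noise, 4 s at 128 Hz.
fs = 128
t = np.arange(4 * fs) / fs
rng = np.random.default_rng(0)
eeg = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)

powers = band_powers(eeg, fs)
dominant = max(powers, key=powers.get)
print(dominant, powers)
```

In practice the artifact filtering mentioned above would precede this step, and the per-band powers would be computed per channel and per time window before being passed to a classifier.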

The novel contribution of this research paper is that we propose a DSS system which is the combination of several subsystems with multiple functional set multi-model subsystems. In the textile industry, customer preference is one of the major problems for retailers. Additionally, sometimes it becomes very difficult for the retailers to predict and produce the products as per the customer’s preferences and as per their interests [2]. The contributions in this paper are (1) a proposal for a textile image retrieval model using Denoising Auto-Encoder, (2) a proposal for a conceptual model for personality characterization with the amalgamation of an ECG classification model, and (3) an MBTI-based language model for feeding customer evaluation.

Let us assume that we already have the information that needs to be added to the blockchain. This information is added to the digital ledger as a brand-new block. The data are chained together in chronological sequence: as each block is populated with information, it binds to the one before it. Once recorded, this information cannot be altered in place. A change is technically possible; however, it causes a new block to be created in the chain. This shows that ML and blockchain can forge a strong alliance that focuses on data, its legitimacy, and all the data-driven decisions involved in the process. As a result, blockchain supports data security and may encourage data sharing during the development and testing of ML models. The fact that AI-enabled systems are also software systems and depend on software quality assurance (SQA) is frequently overlooked. This study aims to evaluate the software quality assurance methods used in the creation, integration, and upkeep of AI/ML components and code. Software-based systems that include AI/ML components in addition to standard software components are known as AI-enabled systems [9].
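The chaining of blocks described above can be sketched in a few lines. This is only an illustration of hash chaining under simplifying assumptions (no consensus, no network, SHA-256 over a JSON payload); the function names and the sample records are hypothetical and do not describe the system used in this work.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block

def make_block(data, prev_hash):
    """Build a block whose hash covers its data and the previous block's hash."""
    payload = json.dumps({"data": data, "prev_hash": prev_hash}, sort_keys=True)
    digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    return {"data": data, "prev_hash": prev_hash, "hash": digest}

def build_chain(records):
    """Append records in chronological order, each block binding to the one before it."""
    chain, prev_hash = [], GENESIS_HASH
    for record in records:
        block = make_block(record, prev_hash)
        chain.append(block)
        prev_hash = block["hash"]
    return chain

def is_valid(chain):
    """Recompute every hash and check every link; any in-place edit breaks validation."""
    prev_hash = GENESIS_HASH
    for block in chain:
        expected = make_block(block["data"], block["prev_hash"])["hash"]
        if block["prev_hash"] != prev_hash or block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True

# Hypothetical pre-processing records, e.g. labelled training samples.
chain = build_chain([
    {"sample_id": 1, "label": "dress"},
    {"sample_id": 2, "label": "coat"},
])
valid_before = is_valid(chain)
chain[0]["data"]["label"] = "bag"   # tamper with a recorded value
valid_after = is_valid(chain)
print(valid_before, valid_after)
```

Because each hash depends on the previous one, silently editing a stored training record is detectable, which is the property that makes the ledger attractive for auditing ML data.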

As with any software system, AI-enabled systems need to pay close attention to software quality assurance (SQA) in general and code quality in particular. This paper is organized as follows: Section 1 is the Introduction, Section 2 covers related studies, Section 3 proposes the framework, Section 4 describes the methodology, Section 5 presents the implementation, and Sections 6 and 7 draw together the results and conclusions and outline the scope for future research. Section 8 discusses the limitations of the proposed work.

2. Related Work

Automatic personality classification models have been widely discussed in previous natural language processing research. This research demonstrates that different personality types based on the MBTI can be classified from social media data sources using pre-trained language models and deep learning algorithms. Models built on data such as Twitter or Facebook posts classify personality against a predefined core corpus with a maximum reported accuracy of 98%. Various other models, such as AlexNet and ImageNet-based models, and other machine learning algorithms are also utilized [1]. For EEG emotion classification, the combination of wavelet scattering and machine learning has also been predominantly used in human–computer interface systems based on the difficult interfering system and machine learning algorithm. Additionally, the features extracted in this scheme have been collected from the AMIGOS database, which consists of predefined EEG signal waves with different patterns and features. It reports 90% for arousal in the two-dimensional classification of emotions, in a comparison based on the convolutional neural network and long short-term memory (LSTM) algorithms [2]. Content-based image retrieval systems have gained popularity in business and medical content retrieval based on the input queries generated by users. The content-based image retrieval system in [3] operates on fabric image data, for which content-based descriptors have been used [4]. In scripting and mining, the query-based matching algorithm relies on an internal database that contains thousands of fabric images and hash codes. The best scheme in the layer has been used to reduce the retrieval time [3]. A matching algorithm based on local features is used to compare low-level dataset images with low-level Haar feature-based multimedia image classification, which is built from the low-level features of the image and captures the various textures of the images within the dataset, in order to retrieve a similar query-based image. Additionally, research on mean value model-based and content-based image retrieval centered on various standard deviations has been conducted [4,5].

The decision support system is very versatile in terms of industrial adaptability and its performance domains, which include services in sectors such as agriculture, medicine, healthcare, and manufacturing. Several decision support systems in the e-commerce framework rely on fuzzy logic theory. Analysis of consumer purchasing trends and B2C manufacturing system operations forms the basis of market research risk analysis, which is meant to optimize scheduling and transportation [10]. In decision support models, knowledge sharing and knowledge management are crucial for enterprise-based frameworks. The system is then approached with a reasoning and representation system built on the enterprise decision models and a semantic engine, which perform day-to-day forecasting and business transactional operations. This system supports various database and back-end approaches with a high-level model-view-controller design to adapt to the customer’s user interface requirements [7]. Convolutional neural network-based algorithms have achieved state-of-the-art performance in many computer vision problems, relying on different kinds of manual annotation that have been used in fine-tuning the hyper-parameters of the network layers. Various state-of-the-art retrieval systems have demonstrated content retrieval and pattern discovery with CNN-based fine-tuning methods [8]. A comprehensive survey of deep learning-based developments in content-based image retrieval covers the research of the decade from 2011 to 2020 and presents a detailed taxonomy. It summarizes chronologically the different types of supervision, neural networks, descriptors, and retrieval architectures, together with performance analysis on large-scale common datasets for image retrieval using deep learning methods [9].

The textile and fiber industries use decision support systems as a class of management system for different organizational decision-making activities. Recent research has used fuzzy-based methods such as TOPSIS and the AHP method in decision support systems for the cotton and textile industries. Cotton fiber is measured for quality analysis with respect to yarn quality and validated in the manufacturing system [10]. Different datasets with supervised and semi-supervised algorithms, sentiment analysis, and NLP detection problems for user behavior, such as personality traits, have been the focus of study. In much of the research, deep learning-based text classification has been used, and the effectiveness of different statistical analyses is evaluated to maximize the total throughput of the algorithm [11]. In recent years, graph-based and textual TR-DLR-based summarization methods have been used in the rating predictions of different decision support systems [12]. The rating recommendation system in the textile industry therefore uses different granularity allocation proportion weights, with different representations of the factor-based embedding algorithm and extensive experiments on real-world datasets, to project the effectiveness of decisions in a real-time business framework [13]. Brain signal-based EEG emotion classification is one of the leading state-of-the-art approaches to emotion analysis in current research. The differences in human emotions are governed by the different patterns of the brain, which generate waves when transmitting messages. These emotion features can be combined through the analysis of brain wave messages. Brain waves, emotion, and classification are critical in terms of cultural differences in human roles and research needs. In recent research, different features such as wavelet features, frequency features, and different entropies have been used. 
With the combination of statistical-based features and wavelet-based features, based on feature vectors and preprocessing, the principal component analysis is used to maximize the throughput of the algorithms instead of using deep learning algorithms, as shown in [14,15]. Different decision-support tools in the supply chain framework, or, in the case of the textile sector, various parametric assessment standards for more accuracy and decision-support tools to raise performance measurement with the stakeholders in various financial business proposals. For this conceptual-based model, we utilize a framework that will be based on customer personality pattern segmentation using an ECG wavelet, followed by a questionnaire by the system customer segmentation problems in the decision support system. Various studies in decision support systems in the supply chain have demonstrated that the implications of textile supply chain and managerial applications, as well as different novel approaches such as AHP and other methods with comprehensive coverage, have been used for this purpose, adding the mean value to supply chain partners [16]. Web analytics and web-based data mining techniques are used to share and monitor user online activity across various digital platforms. In the conversion of the state-of-the-art opinions into the last textual data [11], this model uses ontology approaches and various machine learning methods by using different questionnaire-based systems. It poses different alternative methods and legacy approaches for personality trait classification and measurement using these two distinct features of the ontology methods: using the shorting algorithm to pass the textual data and sourcing the mechanism facilities in the algorithm of auditions and filtering [11]. 
Previously, various deep learning-based classification models were developed using a combination of convolutional neural networks for classifying EEG-based emotions on a predefined dataset, with accuracy on various performance metrics ranging from 70 to 78% at the binary classification level. The two-level binary classification selects the best feasible optimized classifier for the multiclass classification of the valence and arousal models, evaluated with four-fold and 10-fold cross-validation, which raises the accuracy of the model to 91% [17,18]. Various gesture-based methods have been used for emotion recognition based on descriptive human expressions such as facial gestures and speech. In various methods, correlation analysis and frequency bands have been used to enhance prediction performance by employing two different CNN kernel sizes that are assembled into a single convolution block and combined with all the features. A 10-fold cross-validation was conducted on the data and showed an average accuracy of 97 to 98% for the arousal and valence multiclass classification [19]. Table 1 summarizes the major focus of a few state-of-the-art models, i.e., Content-Based Image Retrieval (CBIR) systems, which provide an intuitive, AI-based solution to assist customers in finding what they desire. The critical factor that determines the performance of CBIR systems is feature extraction, which corresponds to how we represent an image at a high level. These features include the color, texture, and shapes in an image. Personality-based classification has been carried out in previous papers; however, their extent was limited to a certain small group, as in the case of “Testing the Effectiveness of Retrieval-Based Learning in Naturalistic School Settings” [11], which focused on retrieval-based learning. 
Based on the ECG wavelets, our goal is to obtain the customer’s preferences for their attire and their dresses. We acquired the EEG signal personality dataset, which is based on the AMIGOS EEG signal dataset, from an Internet source and use it for customer preference classification, which in turn helps us to classify the personality of a person. Classification of personalities through image retrieval was the aim of “Embodied Reflection of Images as an Arts-Based Research Method: Teaching Experiment in Higher Education” [20]; however, it was limited to 20 students and an automation system. In Ref. [21], the author proposed a color feature extraction algorithm for representing the color vector of an image. In Ref. [4], the authors proposed the AMIGOS data collection for research purposes, which contains ECG data classified by mood and personality.

Table 1.

State-of-the-art summary and comparison.

3. Proposed Framework

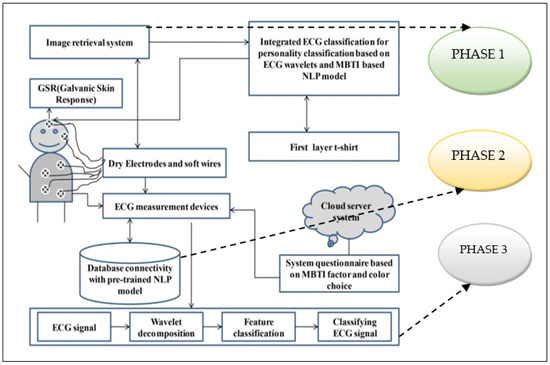

To characterize consumer preferences, we utilize the ECG wavelet in Figure 1 of the suggested architecture. For this conceptual-based model, we utilize a framework that will be based on customer personality pattern segmentation using an ECG wavelet, followed by a questionnaire by the system that will operate on the language model of sentiment analysis. Using the MBTI Factors as a basis, the questionnaire’s feelings will be accessible using a model of natural language comprehension [12,13]. The increase in video quality will be tested on participants in a lab-controlled environment to track any changes in physiological signals. Following that, experts in enthusiasm and valence elements will remotely rate the face video recording with a time objective of 20 s (duration of each described time frame) for both short and long tests. In addition, after each brief video display due to short tests, a self-comment will be distributed through a poll. The ECG signal wavelet classification will then be performed using a relevant model in the MATLAB environment, where the signal will access the answer provided by the customer in the system. The MBTI parameters of personality characteristics and color preference will then be applied. These wavelets will show a consistent range of a person’s mood as determined by a questionnaire, a physical test, and a color preference. Therefore, using this hybrid model, the machine will extract the preferred attire [15,16].

Figure 1.

Proposed framework for content-based recommendation model.

The proposed framework is divided into different phases which are as follows:

Phase 1: CBIR stands for content-based image retrieval, which searches by image content instead of keywords. The objective of a CBIR system is to retrieve all the images that are relevant to a user query while simultaneously retrieving as few unrelated images as possible. Similar to its text-based predecessor, an image retrieval system needs to be able to understand what is in the images and to sort the documents (or images) in a collection based on how much they resemble the user’s search. Semantic information is extracted from documents during interpretation to better meet user information (image) needs [11]. Users may have trouble describing their search terms when using the content-based image retrieval (CBIR) method, which also leads to subpar retrieval outcomes. Previously, it was suggested that the ideal strategy for CBIR was to annotate images, on the premise that giving images keywords helps users search for them. Image annotation is frequently treated as an image classification problem, in which the mapping between low-level features and high-level concepts (class labels) is learned using supervised learning techniques. This topic is extremely significant since it leads to the discovery of efficient feature representations and similarity metrics in a CBIR system. Feature extraction and similarity assessment are the two main CBIR components. As a result, the goal of this study is to build content-based image retrieval using both feature extraction techniques that are considered traditional for fabric and convolutional neural network (CNN) layers. While traditional descriptors concentrate on low-level attributes, CNNs emphasize high-level or semantic features. Traditional descriptors have lower system requirements and can be computed more quickly. Meanwhile, CNN descriptors, which capture high-level qualities that correspond to human perception, deal with large volumes of data and take a while to compute. The features extracted from the fully connected CNN layers for the database images must be matched against those of the search query. Several studies have exploited the features that CNNs extract with convolution layers to retrieve images [11,12].
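The two CBIR components named above, feature extraction and similarity assessment, meet at the ranking step. The sketch below illustrates that step only, assuming the descriptors already exist as fixed-length vectors and that cosine similarity is the chosen metric; the random vectors stand in for CNN features, and `retrieve` is a hypothetical helper rather than the retrieval routine of this study.

```python
import numpy as np

def retrieve(query_vec, database_vecs, top_k=3):
    """Rank database descriptors by cosine similarity to the query descriptor."""
    q = query_vec / np.linalg.norm(query_vec)
    db = database_vecs / np.linalg.norm(database_vecs, axis=1, keepdims=True)
    scores = db @ q                          # cosine similarity per database image
    order = np.argsort(-scores)[:top_k]      # indices of the best matches first
    return order, scores[order]

# Stand-in descriptors: 100 "images" with 64-dimensional feature vectors.
rng = np.random.default_rng(42)
features = rng.standard_normal((100, 64))

# A query that is a slightly perturbed copy of database image 7.
query = features[7] + 0.01 * rng.standard_normal(64)

indices, similarities = retrieve(query, features, top_k=3)
print(indices, similarities)
```

Swapping the random vectors for activations of a fully connected CNN layer, or for traditional color and texture descriptors, changes only how `features` is produced; the ranking logic stays the same.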

Phase 2: In numerous downstream natural language processing tasks, such as sentiment analysis, author identification, and others, it has been shown that pre-trained language models produce cutting-edge results. In this study, we discuss using these strategies to identify personalities in texts. We have studied research that modifies the popular Bidirectional Encoder Representations from Transformers (BERT) model to carry out MBTI classification, with an emphasis on the Myers–Briggs (MBTI) personality model. In some evaluation situations, our major findings show that the proposed technique consistently outperforms past studies in the field and significantly outperforms popular text classification models based on bag-of-words and static word embeddings.

Phase 3: The most cutting-edge technique for analyzing emotions at the moment is brain wave emotion analysis. According to advances in brain science, human emotions develop in the brain. The subject of brain-wave emotion is therefore covered by several applications. Because of its complexity, human emotion analysis is difficult, and this is equally true of brain wave emotion analysis. Numerous studies have employed current techniques for categorizing brain wave emotions as well as proposed new ones. This article discusses and shows several approaches to classifying brain wave emotions. We use EEG data from the DEAP dataset.

The valence and arousal emotion models were utilized in two-level (low and high) and three-level (low, medium, and high) classification experiments. For valence and arousal, the accuracies for the two-level classification with four-fold cross-validation were 90.1% and 87.9%, respectively. For the three-level classification, the respective accuracies were 83.5% and 82.6%. Additional testing was conducted using an architecture that merged the proposed model with a convolution functional connectivity (CNN) sub-module [14].

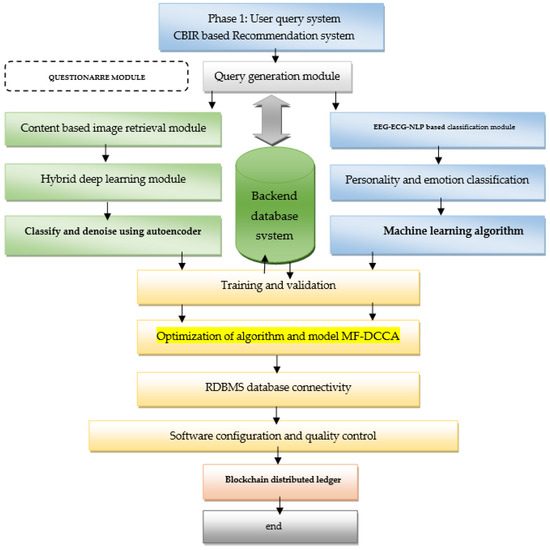

4. Methodology

As seen in Figure 2, one of the key multifractal analysis techniques is MF-DCCA (multifractal detrended cross-correlation analysis), which is mostly employed to examine the cross-correlation and multifractal characteristics of two sequences. Let $\{x(k)\}$ and $\{y(k)\}$ be the two sequences, and let $N$ be their length. The two sequences are used to build two new sequences, $X(s)$ and $Y(s)$, where $\bar{x}$ and $\bar{y}$ represent the mean values of the sequences used for training and testing function mapping, as shown in line number one of the algorithm TaT [15].

$$X(s) = \sum_{k=1}^{s} \left( x(k) - \bar{x} \right), \quad s = 1, 2, \ldots, N$$

$$Y(s) = \sum_{k=1}^{s} \left( y(k) - \bar{y} \right), \quad s = 1, 2, \ldots, N$$

Figure 2.

Flowchart for content-based recommendation model.

The slope of the $\log F_q(s)$ versus $\log(s)$ function is the generalized Hurst exponent $H_{xy}(q)$. If $H_{xy}(q)$ is constant in $q$, the cross-correlation is not multifractal. From lines 2 and 3 in Algorithm 1, when $H_{xy}(q)$ changes with $q$, the cross-correlation between the two sequences possesses multifractal characteristics, and vice versa. Calculating the range of $H_{xy}(q)$ over $q$ allows one to determine how much multifractality there is: a larger range means a stronger multifractal feature. The range of $H_{xy}(q)$ is (0, 1). If $H_{xy}(q) > 0.5$, long-range cross-correlation exists between the two sequences; when $H_{xy}(q) < 0.5$, the two sequences have inverse persistence, or negative long-range cross-correlation. Neither of the two sequences exhibits long-range cross-correlation when $H_{xy}(q) = 0.5$.

Calculate the multifractal scaling exponent to characterize the multifractal properties.
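The MF-DCCA steps above can be made concrete with a small numerical sketch. It assumes non-overlapping windows, a first-order polynomial detrend, and the commonly used scaling-exponent definition $\tau_{xy}(q) = qH_{xy}(q) - 1$; since the source does not show its own formula for the scaling exponent, that definition, the window sizes, and the function name `mfdcca_hurst` are all assumptions made for illustration.

```python
import numpy as np

def mfdcca_hurst(x, y, scales, q=2.0, order=1):
    """Estimate the generalized Hurst exponent H_xy(q) of two equal-length sequences."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    X = np.cumsum(x - x.mean())          # profile X(s)
    Y = np.cumsum(y - y.mean())          # profile Y(s)
    log_fq = []
    for s in scales:
        n_seg = X.size // s              # number of non-overlapping windows
        t = np.arange(s)
        f2 = np.empty(n_seg)
        for v in range(n_seg):
            seg_x = X[v * s:(v + 1) * s]
            seg_y = Y[v * s:(v + 1) * s]
            # Remove the local polynomial trend from each profile segment.
            res_x = seg_x - np.polyval(np.polyfit(t, seg_x, order), t)
            res_y = seg_y - np.polyval(np.polyfit(t, seg_y, order), t)
            f2[v] = np.mean(res_x * res_y)   # detrended covariance of the window
        f2 = np.abs(f2)
        if q == 0:
            fq = np.exp(0.5 * np.mean(np.log(f2)))
        else:
            fq = np.mean(f2 ** (q / 2.0)) ** (1.0 / q)
        log_fq.append(np.log(fq))
    # H_xy(q) is the slope of log F_q(s) against log(s).
    slope, _ = np.polyfit(np.log(scales), log_fq, 1)
    return float(slope)

# Sanity check on uncorrelated noise, where the exponent should be close to 0.5.
rng = np.random.default_rng(0)
noise = rng.standard_normal(4096)
scales = np.array([16, 32, 64, 128, 256])
h2 = mfdcca_hurst(noise, noise, scales, q=2.0)
tau2 = 2.0 * h2 - 1.0                    # assumed definition: tau(q) = q * H(q) - 1
print(h2, tau2)
```

Repeating the call over a grid of `q` values and taking the spread of the resulting exponents gives the range used above as a measure of multifractal strength.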

From Algorithm 1, the creation of a graph is necessary to accurately represent the collaborations between the subjects. In lines 4 and 5, |FM| < |FO| and M ← MC denote feature extraction and the corrupting operation on M, respectively.

On the picture pixel data that we extracted from the cropping of ROIs, we created a directed graph. The graph convolution network (GCN) has emerged as a promising method for graph mining because it extends the traditional convolutional neural network from Euclidean data (such as 2D or 3D images) to non-Euclidean spaces (such as graphs and manifolds). Write a graph as $X = (V, E)$, where $V$ is the set of vertices and $E$ is the set of edges. An adjacency matrix in line 7 of Algorithm 1, $A = [a_{ij}] \in \mathbb{R}^{n \times n}$, encodes the connectivity of the vertices, with the component $a_{ij}$ indicating whether the $i$-th and $j$-th vertices are connected ($a_{ij} = 1$) or not ($a_{ij} = 0$). $D = \mathrm{diag}(d_1, d_2, \ldots, d_n)$ represents the degree matrix, where each component, $d_i = \sum_j a_{ij}$, represents the number of edges connected to the $i$-th vertex. The spectral GCN describes the convolution by decomposing a graph signal $s \in \mathbb{R}^n$ (defined on the vertices of graph $X$) in the spectral domain. Then, rather than computing the Laplacian eigenvectors, the signal $s$ is processed by a spectral filter approximated with a first-order polynomial of the Laplacian. To reduce the number of parameters (i.e., $\theta_0$ and $\theta_1$), the spectral GCN model assumes that $\theta = \theta_0 = -\theta_1$, and $l$ denotes the layer in the graph convolution network. In Algorithm 1, lines 10 and 11 show the weight generation function, with encoding ← h = f(W × M + a1) and decoding ← Q = g(W′ × h + a2), where the function relocates the anticipated module and the process is reiterated as outlined in line 15 [16].

In the graph convolution, the adjacency matrix of the graph is used with added self-connections, $H$ is the matrix of the feature vectors of every vertex, and $W$ is the linear projection layer.
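A single graph-convolution step consistent with these definitions can be sketched as follows. The sketch assumes the widely used first-order propagation rule $H^{(l+1)} = \sigma\left(\tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(l)} W^{(l)}\right)$ with $\tilde{A} = A + I$ and $\tilde{D}$ the degree matrix of $\tilde{A}$; whether the source used exactly this form is an assumption, as are the ReLU activation, the toy graph, and the weight values.

```python
import numpy as np

def gcn_layer(A, H, W):
    """One graph-convolution step: ReLU(D~^{-1/2} (A + I) D~^{-1/2} H W)."""
    A_tilde = A + np.eye(A.shape[0])             # adjacency with added self-connections
    d = A_tilde.sum(axis=1)                      # vertex degrees of A_tilde
    D_inv_sqrt = np.diag(1.0 / np.sqrt(d))
    A_hat = D_inv_sqrt @ A_tilde @ D_inv_sqrt    # symmetric normalization
    return np.maximum(A_hat @ H @ W, 0.0)        # linear projection W, then ReLU

# Toy undirected path graph with three vertices: 0 - 1 - 2.
A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)
H = np.eye(3)             # one-hot feature vector per vertex
W = np.ones((3, 2))       # hypothetical projection weights (3 features -> 2 outputs)

out = gcn_layer(A, H, W)
print(out)
```

Stacking several such layers, each with its own `W`, lets information propagate between vertices that are more than one edge apart.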

| Algorithm 1: CBIR query retrieval system. |
| --- |
| 1: Start: M ← Fashion MNIST dataset / DEAP dataset / MBTI dataset |
| 2: Feature FO = {n1m1, n2m2, n3m3, …, nzmz} |
| 3: n = samples; m = features; TrainX, Trainy, TestX, Testy = TestTrain(X, y) |
| 4: Feature extraction \|FM\| < \|FO\| |
| 5: M ← MC corrupting operation on M using |
| 6: P_{W1, W′, a1, a2}(M) ≈ MC |
| 7: W1, W′ → weight matrices of hidden layer; A = [a_ij] ∈ R^(n × n) |
| 8: a1, a2 → bias vectors of hidden layer |
| 9: Use MC as input for coding phase using |
| 10: encoding ← h = f(W × M + a1) |
| 11: decoding ← Q = g(W′ × h + a2) 1 = BaggingDT(T rainx)j) =gh(T rainq) =il(T rainb) |
| 12: Calculate loss ← L(W1, W′, a1, a2, M) = ‖Q − M‖² |
| 13: when Q ≈ M |
| 14: Use reconstruction to optimize the biases and weights |
| 15: Repeat steps 5–12 to calculate loss value |
| 16: end for |
| 17: return |
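The corrupt, encode, decode, and loss steps of Algorithm 1 can be sketched as a small denoising auto-encoder. This is a minimal illustration rather than the trained model of this study: masking noise as the corrupting operation, sigmoid functions for f and g, full-batch gradient descent, and every hyper-parameter and the synthetic data are assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_dae(M, hidden=16, mask_prob=0.3, lr=0.5, epochs=200, seed=0):
    """Train a one-hidden-layer denoising auto-encoder on the rows of M (values in [0, 1])."""
    rng = np.random.default_rng(seed)
    n, m = M.shape
    W = rng.normal(0.0, 0.1, (m, hidden))       # encoder weights (W in Algorithm 1)
    a1 = np.zeros(hidden)                       # encoder bias
    W_dec = rng.normal(0.0, 0.1, (hidden, m))   # decoder weights (W' in Algorithm 1)
    a2 = np.zeros(m)                            # decoder bias
    losses = []
    for _ in range(epochs):
        MC = M * (rng.random(M.shape) > mask_prob)   # corrupt M by zeroing random entries
        h = sigmoid(MC @ W + a1)                     # encoding: h = f(W x MC + a1)
        Q = sigmoid(h @ W_dec + a2)                  # decoding: Q = g(W' x h + a2)
        err = Q - M                                  # compare with the clean input
        losses.append(float(np.mean(np.sum(err ** 2, axis=1))))  # mean of ||Q - M||^2
        # Back-propagate the squared reconstruction error.
        delta_out = 2.0 * err * Q * (1.0 - Q) / n
        delta_hid = (delta_out @ W_dec.T) * h * (1.0 - h)
        W_dec -= lr * (h.T @ delta_out)
        a2 -= lr * delta_out.sum(axis=0)
        W -= lr * (MC.T @ delta_hid)
        a1 -= lr * delta_hid.sum(axis=0)
    return (W, a1, W_dec, a2), losses

# Synthetic stand-in for flattened images: 200 noisy-free copies of 4 binary prototypes.
rng = np.random.default_rng(1)
prototypes = (rng.random((4, 64)) > 0.5).astype(float)
M = prototypes[rng.integers(0, 4, size=200)]

params, losses = train_dae(M)
print(losses[0], losses[-1])
```

After training, the hidden activations `h` of an uncorrupted image can serve as its compact descriptor for retrieval, which is the role the auto-encoder plays in the CBIR pipeline.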

5. Implementation

This section presents the phase-by-phase implementation of the module and of its several subsystems.

5.1. Dataset

For this research, we used the AMIGOS personality dataset [24], which contains ECG and EEG signals, together with video recordings, for different anonymized participants; it is used to build the personality classification models. The publicly available AMIGOS database was used in this work [25]. It was preferred over other databases because of its relatively large number of participants, recent publications, numerous signals and experiments, standardized data collection methods, and accurate, high-resolution annotations. The database contains EEG, ECG, galvanic skin response (GSR), and facial video data of 40 participants, recorded twice in experiments.

For content-based textile image retrieval, we used Fashion-MNIST, a dataset of Zalando’s article images [20], comprising a training set of 60,000 examples and a test set of 10,000 examples. Each example is a 28 × 28 grayscale image associated with a label from one of ten classes: t-shirt/top, trousers, pullover, dress, coat, sandal, shirt, sneaker, bag, and ankle boot. The Fashion-MNIST clothing classification problem is a standard dataset in computer vision and deep learning [17,18]. Although the dataset is relatively simple, it can be used as a basis for learning and practicing how to develop, evaluate, and use deep convolutional neural networks for image classification from scratch. This includes how to develop a robust test harness for estimating the performance of the model, how to explore improvements to the model, and how to save the model and later load it to make predictions on new data [19].

5.2. CBIR-Based Textile Image Retrieval

Content-based image retrieval is widely used in the business sector to retrieve images matching a selection from an unorganized source of image data. In recent years, deep learning for image-based selection and prediction has been widely used for business decisions and for predictive analytics in the medical field [1]. It is very hard to obtain the data that correspond to the required information from an unlabeled data set, which is why data optimization is needed to use the data efficiently [2]. First, the data must be segregated and preprocessed according to the nature of the unlabeled clothing data set. Unsupervised learning is better suited to this type of data, which is why we used it in this article; the autoencoder is one of the most widely used unsupervised learning algorithms for this kind of image retrieval and prediction study. For this research, we acquired data from a local market database and the Internet, preprocessed them, and segregated their labels to make them fit for this research [3,4].

Content-Based Image Retrieval (CBIR) systems provide an intuitive, AI-based solution to assist customers in finding what they desire. The critical factor that determines the performance of CBIR systems is feature extraction, which corresponds to how we represent an image at a high level. These features include the color, texture, and shapes in an image. In online shopping, the databases contain many images, so the features we extract should also allow an efficient retrieval mechanism. Earlier research used what are known as hand-crafted features, which include a histogram-of-colors to define colors and a HOG (histogram-of-oriented gradients) to define shapes. Other descriptors, such as SIFT and SURF, were also found to be very effective in mapping out local features in images [16].

From Figure 3:

(a) Image Retrieval Pre-Processing: In the proposed algorithm, the region of interest (ROI) must first be located in the captured image. The centered ROI method is used to determine the center of each image. Equations (6) and (7) can be used to calculate the center as follows:

where Xc and Yc are the coordinates of the image’s center point, and fx is the input image, with X and Y the width and height of the input image, respectively.

An autoencoder is a neural network trained in an unsupervised way to copy its input to its output. In doing so, autoencoders and other deep models learn a dense representation of the input, often called the latent representation or coding. An autoencoder is composed of two components: an encoder and a decoder. The encoder takes the input data and maps it to the latent representation through the encoding function E. The decoder then takes the latent representation and tries to rebuild the original input from it through the decoding function D, as represented in Equations (8) and (9) [10], where x̂ is the output of the decoder and D is the decoding function, as shown in Figure 3 [20].

where xi is the encoder output E(x) after the activation function.

An alternative to the regular autoencoder is the denoising autoencoder (DAE), which is used where a regular autoencoder is at high risk of over-fitting. In a DAE, the input vector is partially corrupted by noise, and the model is trained to predict the original, uncorrupted data point as its output. The DAE works in three phases. First, an input is sampled from the data set. Second, a corrupted version of that input is generated. Third, the original input and its corrupted version are used together as a training sample. As with convolutional neural networks, the corrupted input is fed to a network that can be trained by minimizing the negative log-likelihood of the original input given its corrupted version [17,18,25].
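The corruption, encoding, decoding, and loss steps of Algorithm 1 (steps 5 and 10–12) can be sketched as a single forward pass. This is a minimal, dependency-free illustration and not the trained model: the masking noise, the sigmoid activations for f and g, and the layer sizes are all assumptions made for the example, and no weight update is shown.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def corrupt(x, drop_prob, rng):
    """Masking noise (assumed): zero each input value with probability drop_prob."""
    return [0.0 if rng.random() < drop_prob else v for v in x]

def affine(W, x, b):
    """Compute W x + b for a weight matrix W given as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + bi for row, bi in zip(W, b)]

def dae_forward(x, W, a1, W_prime, a2, drop_prob, rng):
    x_corrupt = corrupt(x, drop_prob, rng)               # step 5: M -> MC
    # Step 9 says MC is the input to the coding phase, so the corrupted copy is encoded.
    h = [sigmoid(z) for z in affine(W, x_corrupt, a1)]   # step 10: h = f(W x + a1)
    q = [sigmoid(z) for z in affine(W_prime, h, a2)]     # step 11: Q = g(W' h + a2)
    loss = sum((qi - xi) ** 2 for qi, xi in zip(q, x))   # step 12: ||Q - M||^2 vs. clean input
    return h, q, loss

rng = random.Random(0)
x = [0.0, 0.5, 1.0, 0.25]                                      # toy image with 4 pixels in [0, 1]
W = [[rng.uniform(-1, 1) for _ in x] for _ in range(2)]        # 4 inputs -> 2 hidden units
W_prime = [[rng.uniform(-1, 1) for _ in range(2)] for _ in x]  # 2 hidden units -> 4 outputs
a1, a2 = [0.0, 0.0], [0.0] * 4
h, q, loss = dae_forward(x, W, a1, W_prime, a2, drop_prob=0.3, rng=rng)
```

The loss is measured against the clean input rather than the corrupted one, which is what forces the network to denoise instead of simply copying its input.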

(b) Predictive DAN: We created an annotation index from the annotations provided with each image. The index lists the user-annotated and categorized regions of each image. Before requesting new labels, it is important to check for regions that already have reliable labels. Each label in an image is added to the index only once, and every tag visible in each image is described in detail. Tags that already exist are marked with an asterisk, and a label map is also created from the index. A list of synonyms is maintained that contains the corresponding words for each of the eight groups employed. A recurrent neural network (RNN) is a modification that lets a neural network handle sequences of inputs: part of the information from one input is retained to compute the next. Long short-term memory (LSTM) networks extend the RNN with a larger memory, which makes them more powerful than a plain RNN and able to handle longer input sequences (whether or not this property is exploited in our setup). Thanks to the LSTM, the RNN can retain the results of its work over time. We therefore concatenate the data of each log line with the corresponding output of one pooling layer, and the timestamp of each log line with the output of the other pooling layer. These two new tensors take the sequence of log lines into account. We now have a sequence of two tensors, each with 31 features. This sequence is fed to the LSTM, which has a single layer and ten hidden features in its hidden state (although PyTorch allows several LSTMs to be stacked).
The output of the LSTM layer is a set of two tensors of the same size as the hidden state (10 in our example). We can treat this as a single two-dimensional tensor of dimensions 2 and 10. Before passing the output of the LSTM to the linear layer, we apply dropout with a probability of 0.2: each value is randomly replaced by 0 with probability 0.2. The resulting tensor is passed to the linear layer, which produces a one-dimensional tensor of size dictionary_length × log_line_length (where dictionary_length is the number of characters in the dictionary and log_line_length is the number of characters in our log lines). As shown in Figure 3, using the MLP, LSTM, and GNN techniques together with an autoencoder, we built a very basic image retrieval system. To help the autoencoder learn to encode the visual content of each image efficiently, we first trained it on a large dataset. Next, we compared the code of the query image with the codes in the dataset being searched and retrieved the five closest. The visual content of the five retrieved images was fairly similar to that of the query image, and they all showed the same class of item, even though no labels were used, so the system produced rather accurate results [19,20,24].
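The retrieval step, comparing the code of the query image with the stored codes and returning the five closest, can be sketched as follows. The two-dimensional codes are hypothetical stand-ins for autoencoder outputs, and Euclidean distance is assumed as the similarity measure.

```python
import math

def retrieve(query_code, database_codes, k=5):
    """Return indices of the k database codes closest to the query (Euclidean)."""
    distances = [(math.dist(query_code, code), idx)
                 for idx, code in enumerate(database_codes)]
    distances.sort()
    return [idx for _, idx in distances[:k]]

# Hypothetical latent codes; a real system would use the encoder's outputs.
database_codes = [[0.0, 0.0], [1.0, 1.0], [0.1, 0.0], [5.0, 5.0],
                  [0.0, 0.2], [0.3, 0.3], [0.9, 1.1]]
top5 = retrieve([0.0, 0.0], database_codes)
```

A linear scan like this is adequate for small collections; larger catalogues would normally need an approximate nearest-neighbour index instead.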

Figure 3.

The flow of content-based image retrieval module.

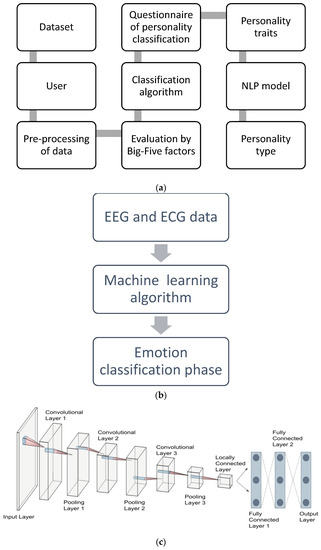

5.3. Personality Classification and Emotion Recognition Module Based on MBTI Factors and EEG Data

The Big Five model is the model that most fully captures how personality and academic behavior are related. It was developed by several independent research teams using factor analysis of verbal descriptors of human behavior. These researchers started by examining the relationships between a wide range of verbal descriptors of personality traits. They reduced the lists of these descriptors by a factor of 5–10 and then used factor analysis to group the remaining traits (using data based mainly on people’s estimations, self-report questionnaires, and peer ratings) to discover the underlying factors of personality.

The MBTI assessment is a psychometric questionnaire designed to measure psychological preferences in how people perceive the world and make decisions. The MBTI sorts some of these psychological differences into four opposite pairs, or dichotomies, resulting in sixteen possible psychological types. None of these types is superior or inferior; however, Briggs and Myers hypothesized that people naturally prefer one overall combination of type differences. In the same way that writing with the left hand is hard work for a right-handed person, people tend to find using their opposite psychological preferences more difficult, even if they can become more proficient (and, consequently, behaviorally flexible) with practice and development.

To resolve the problems with the current approach, an automated personality classification system is proposed that uses machine learning algorithms and data mining approaches to classify the personalities of different users [26]. Among others, the Big Five personality model, logistic regression, decision tree, and support vector machine are used. By recognizing trends in historical data, new methods can readily be used to determine personalities and circumvent the restrictions of the current system, as shown in Figure 4a–c.

- Naive Bayes: The naive Bayes classifier assumes strong independence between attributes: the probability of one attribute does not affect the probability of another. Given a set of n attributes, the naive Bayes classifier makes 2n independent assumptions. Despite this, its output is frequently accurate. There are three sources of error: training data noise, bias, and variance. Noise can be reduced by choosing high-quality training data. The machine learning algorithm needs to divide the training data into groups; bias is the error that results when these groups are too large, and variance is the error that results when they are too small, as shown in one of the steps in Figure 4a [26].

- Random forest: A decision tree is a tree-like graph composed of leaf nodes that represent a class label, internal nodes that represent tests on attributes, and branches that represent the test outcomes. Classification rules are formed by the paths from the root node to the leaves. The tree is built by choosing the attributes and values that will be used to test the input data. Once built, the tree can classify incoming data by traversing it from the root node to a leaf node, testing the relevant attribute at each internal node along the way. Decision trees can analyze data and identify important characteristics. A random forest combines the predictions of many such trees.

- EfficientNet scales convolutional neural networks more efficiently than traditional models such as ResNet and VGGNet. Greater network depth is commonly used when training neural networks, because a deeper structure can extract features of higher complexity and semantic level. EfficientNet instead adjusts the CNN with a compound coefficient that uniformly scales the depth, width, and image resolution to change the dimensions of the network layers. Figure 1 shows the architecture of the EfficientNet-b4 model. There are more than 7 blocks and more than 31 modules in this network. In this paper, we follow the design of this model and make some modifications to make it more suitable for the dataset.

- In this model, we use the EfficientNet-b4 backbone. As shown in Figure 4b, the segmentation architecture of our proposed model is based on the nested U-Net model, also called UNet++. It is an encoder-decoder network in which the encoder and decoder sub-networks are connected through a series of nested, dense skip pathways. In many medical image segmentation tasks, the UNet++ architecture outperforms the U-Net and wide U-Net architectures. However, the UNet++ backbones are based on VGG19, ResNet152, and DenseNet201, which are inferior to EfficientNet-B4. To improve the segmentation performance, we replaced the original backbone in UNet++ and modified the number of layers and neuron units of this network, as shown in Figure 4c.

The sixteen types are typically referred to by an abbreviation of four letters: the initial letters of each of their four type preferences (except in the case of intuition, which uses the abbreviation N to distinguish it from introversion) [25]. For instance:

- ESTJ: extraversion (E), sensing (S), thinking (T), judgment (J)
- INFP: introversion (I), intuition (N), feeling (F), perception (P)
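The four-letter naming scheme is easy to make concrete. The sketch below expands a type code into its four preferences and enumerates all sixteen combinations; the names `DICHOTOMIES`, `decode_type`, and `ALL_TYPES` are illustrative and not part of the proposed system.

```python
from itertools import product

# One dictionary per dichotomy, in the order the letters appear in a type code.
DICHOTOMIES = [
    {"E": "extraversion", "I": "introversion"},
    {"S": "sensing", "N": "intuition"},
    {"T": "thinking", "F": "feeling"},
    {"J": "judgment", "P": "perception"},
]

def decode_type(code):
    """Expand a four-letter MBTI code into its four preferences."""
    if len(code) != 4:
        raise ValueError("an MBTI code has exactly four letters")
    return [pair[letter] for pair, letter in zip(DICHOTOMIES, code.upper())]

# Every combination of one letter per dichotomy: 2 * 2 * 2 * 2 = 16 types.
ALL_TYPES = ["".join(letters) for letters in product(*DICHOTOMIES)]
```

A classifier that predicts each dichotomy separately needs only four binary outputs, whereas predicting the full type directly is a sixteen-class problem.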

Figure 4.

(a) personality classification architecture (b) EEG-based emotion classification model (c) proposed network model with three hidden layers.

In Figure 4c, consider a second-layer neuron named JJ. Its activation is:

This loss function simply pushes down the energy of the correct answer. Because it tries to make the energy of the correct answer small without pushing up the energy elsewhere, a network that is not properly designed may end up with a mostly flat energy function. As a result, the system can fail.

When the absolute element-wise error is less than 1, this function uses the L2 term; when it is greater than 1, it uses the L1 term.
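The piecewise behaviour described here (quadratic below an absolute error of 1, linear above it) matches what is commonly called the smooth L1 loss. The sketch below assumes that form, with the usual 0.5 scaling that makes the two pieces meet at an error of 1; the exact constants used in the proposed model are not given in the text.

```python
def smooth_l1(prediction, target):
    """Mean smooth L1 loss with the transition at an absolute error of 1."""
    total = 0.0
    for p, t in zip(prediction, target):
        err = abs(p - t)
        # Quadratic (L2-like) for small errors, linear (L1-like) for large ones.
        total += 0.5 * err ** 2 if err < 1.0 else err - 0.5
    return total / len(prediction)

small = smooth_l1([0.5], [0.0])   # quadratic branch
large = smooth_l1([2.0], [0.0])   # linear branch
```

The linear branch keeps large errors from dominating the gradient, which makes the loss less sensitive to outliers than a pure L2 loss.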

(A) ECG wavelet analysis: The wavelet transform of an ECG signal is defined in analog form, where p represents the scaling factor that controls the dilation of the wavelet φ(t), and is expressed as:

where the factor 1/p is used for normalization.

(B) Wavelet decomposition from the viewpoint of denoising: Wavelets come in a variety of shapes and sizes. The first step in wavelet decomposition is to choose a wavelet appropriate for the signal being analyzed; an appropriate wavelet has a waveform similar to the signal being filtered. Combining the wavelet function with the input signal acts as a high-pass filter (or a low-pass filter), which yields the signal’s details (or approximation). In the context of denoising, this analysis is usually carried out with the discrete wavelet transform, given by the following equation:

where D(a,b) are the wavelet coefficients, a is the dilation, b is the translation, x(n) is the input signal, and gj,k(n) is the discrete wavelet.
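To make the low-pass and high-pass split concrete, the sketch below performs one level of a discrete wavelet transform and a simple threshold-based denoising step. It assumes the Haar wavelet purely because it is the simplest to write out; the text does not say which wavelet family was used for the ECG signals, and hard thresholding is likewise an assumption.

```python
import math

def haar_dwt(signal):
    """One level of the Haar DWT: returns (approximation, detail) coefficients."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    s = math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]  # low-pass
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]  # high-pass
    return approx, detail

def denoise(signal, threshold):
    """Zero detail coefficients at or below the threshold, then invert the transform."""
    approx, detail = haar_dwt(signal)
    detail = [d if abs(d) > threshold else 0.0 for d in detail]
    s = math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / s, (a - d) / s])
    return out

signal = [4.0, 2.0, 5.0, 5.0]
approx, detail = haar_dwt(signal)
smoothed = denoise(signal, threshold=10.0)   # every detail coefficient is removed
```

Applying `haar_dwt` again to the approximation coefficients gives the next decomposition level.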

(C) Vector Quantization: In the proposed system, the energy and entropy of the signal are used as the feature vector. The energy is calculated as:

The entropy is calculated as:

where n is the number of samples in the signal, i is the sample number, P is the sample mean, and N is the number of decomposition levels.
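A possible reading of the energy and entropy features is sketched below. Because the defining equations are not reproduced in the text, the code assumes the common choices: energy as the sum of squared coefficients in a sub-band, and Shannon entropy of the normalised squared coefficients. Both definitions, and the function names, are assumptions.

```python
import math

def energy(coeffs):
    """Energy of a sub-band: sum of squared coefficients (assumed definition)."""
    return sum(c * c for c in coeffs)

def shannon_entropy(coeffs):
    """Shannon entropy, in bits, of the normalised squared coefficients (assumed)."""
    total = energy(coeffs)
    if total == 0.0:
        return 0.0
    probs = [c * c / total for c in coeffs if c != 0.0]
    return -sum(p * math.log2(p) for p in probs)

def feature_vector(subbands):
    """(normalised energy, entropy) for each decomposition level."""
    total = sum(energy(band) for band in subbands) or 1.0
    return [(energy(band) / total, shannon_entropy(band)) for band in subbands]

features = feature_vector([[1.0, 1.0], [2.0, 0.0]])
```

Normalising each sub-band's energy by the total makes the features comparable across recordings with different amplitudes.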

(D) Feature classification of ECG signals: The feature classification stage is the next step. A technique called vector quantization was used to classify the features extracted in the first stage. Normalized energy and entropy are now two aspects of each decomposed signal. Clusters of vector points can be obtained by visualizing the feature vectors of several types of known signals. Each cluster represents a different class, such as normal ECG or selection ECG in the case of ECG signals. The feature vectors can then be extracted from unknown ECG signals using the same approach. Unknown signals can be categorized by determining which cluster these feature vectors belong to [27].
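The cluster-based classification described here can be sketched as a nearest-centroid rule: each known class is summarised by the centroid of its feature vectors, and an unknown signal is assigned to the closest centroid. The class names and feature values below are hypothetical, and Euclidean distance is assumed.

```python
import math

def centroid(vectors):
    """Mean of a list of equal-length feature vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def build_codebook(labelled_vectors):
    """Map each class label to the centroid of its training feature vectors."""
    return {label: centroid(vecs) for label, vecs in labelled_vectors.items()}

def classify(vector, codebook):
    """Assign the label whose centroid is nearest in Euclidean distance."""
    return min(codebook, key=lambda label: math.dist(vector, codebook[label]))

# Hypothetical (normalised energy, entropy) pairs for two known signal classes.
training = {
    "normal": [[0.80, 0.30], [0.75, 0.35]],
    "other": [[0.40, 0.90], [0.45, 0.85]],
}
codebook = build_codebook(training)
label = classify([0.78, 0.33], codebook)
```

A full vector quantizer would usually learn several codewords per class, for example with k-means; one centroid per class is the simplest case.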

5.4. Blockchain-Based Security and Software Quality Assurance Module

Since the blockchain has no central authority, the network must resolve issues itself. The records in the blockchain need a dependable and secure mechanism to guarantee that every transaction occurring inside the network is authentic and not fraudulent. The consensus mechanism handles this task of ensuring that transactions are genuine. Consensus is the set of rules that decides the validity of any transaction in the network. In basic terms, consensus is the agreement on any data entry or state change in the system, reached among the different nodes taking part in the system. Several consensus algorithms exist today, and each works on a different principle. The best known and most commonly used are the proof-of-work and proof-of-stake consensus algorithms. In proof of work, before adding any record, a node needs to solve a complex mathematical puzzle [27]. This demonstrates that the node has invested a significant amount of computational energy before changing the state of the blockchain. Once the puzzle is solved, the solution is sent to the other nodes, which check it and, if it is correct, update their own copies of the ledger. The other consensus mechanism, proof of stake, is viewed as a substitute for proof of work, since it requires less CPU power. In proof of stake, the creator of the new block is chosen by the network depending on how much stake it puts up to be selected as the next validating node. Whatever the consensus algorithm, the primary focus of each of these mechanisms is to provide security: they guarantee that all nodes in the network agree on a single state of the blockchain and that the records are safe and secure [23,28,29].
Blockchain uses digital signatures, based on asymmetric key cryptography, to solve the problem of authentication. Each user possesses a pair of keys: a public key and a private key. The private key is secret, while the public key is available to all. When sending any data, the user signs it with the private key, and the public key is used for verification. If the public key verifies the signature, it implies that the data were signed by the matching private key and, hence, that the source of the transaction is authentic. A digital signature thus provides proof that the transaction originates from its genuine holder. Transactions are the reason blockchains are created, and they are the smallest building blocks of the blockchain. They can be viewed like everyday credit card transactions. The data stored in the blockchain can be financial or non-financial, depending on why the blockchain was created [30,31,32]. A blockchain is a distributed and decentralized system, and transactions change the state of this system. When a transaction occurs, it is publicly announced in the network. Special nodes select these transactions and add them to their own blocks. When a block is found, it is broadcast to the network, where the other nodes check it and update their copies of the ledger. Every transaction is time-stamped. A transaction contains inputs and other required data [21].
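The proof-of-work idea, costly to solve but cheap for other nodes to check, can be illustrated with a hash puzzle. The sketch assumes a puzzle in which the SHA-256 hash of the block contents must start with a given number of zero hex digits; the block layout, function names, and difficulty are illustrative and do not describe the module's actual implementation.

```python
import hashlib

def block_hash(previous_hash, transactions, nonce):
    """SHA-256 over a simple, hypothetical block layout."""
    payload = f"{previous_hash}|{transactions}|{nonce}".encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def mine(previous_hash, transactions, difficulty):
    """Search for a nonce whose hash starts with `difficulty` zero hex digits."""
    prefix = "0" * difficulty
    nonce = 0
    while not block_hash(previous_hash, transactions, nonce).startswith(prefix):
        nonce += 1
    return nonce

def verify(previous_hash, transactions, nonce, difficulty):
    """Other nodes need only one hash to check the claimed solution."""
    return block_hash(previous_hash, transactions, nonce).startswith("0" * difficulty)

nonce = mine("00ab", "alice->bob:5", difficulty=3)
is_valid = verify("00ab", "alice->bob:5", nonce, difficulty=3)
```

Each additional zero digit multiplies the expected mining work by sixteen, while verification always costs a single hash.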

5.5. Software Quality Measures

Software quality can be divided into functional requirements and operational requirements. Characteristics such as usefulness, usability, integrity, correctness, efficiency, and reliability fall under functional requirements, whereas characteristics that interest developers, such as portability, reusability, and maintainability, fall under operational requirements. Some of these characteristics can be measured directly, whereas others are measured indirectly [21]. Quality metrics are another aspect of software quality assurance: they are the indicators produced by evaluating the various software quality aspects. These measurements can be direct, such as speed, memory, and lines of code, or indirect, such as usefulness and user-friendliness. The factors that affect system performance can be grouped into demand factors and system factors. The demand factors are the number of events that are streamed, the arrival rates of those events, and the resource usage associated with each event. The system factors are the properties of the scheduler that allocates resources, the properties of the software operations that make up the responses to the events, and the relationships between the responses [9]. Another factor that should not be missed when discussing software quality is error. An error is a system state that leads to failure if not corrected; a fault is the hypothesized cause of an error [8]. Quality assurance is sometimes confused with quality control. Quality assurance includes a set of activities, such as facilitation, training, and measurement, that provide adequate confidence that the product being built is continuously improved and fit for use; it is a managerial tool.
Quality control, on the other hand, compares product quality with the requirements and takes action when nonconformance is detected; it is a corrective tool [21]. A very important aspect of software quality assurance is software testing. Testing is the process of identifying bugs in an application or software product. It is a never-ending process and plays an important role in determining software quality. The testing criteria should cover all the requirements specified in the software requirement specification document as well as the industry criteria. Together, testing and quality assurance ensure that the software meets its performance requirements [33]. Testing can be further classified into dynamic testing and static analysis. Dynamic testing executes a program and examines the results produced. The specification of program behavior is the set of output data produced by the program in response to the supplied input data, and it varies according to what is being tested. The advantage of dynamic testing is the ease of executing tests. Static analysis examines the program structure to show which properties hold regardless of the execution path the program takes [33]. Quality assurance also enhances the security of the software. Software that is continuously tested has its vulnerabilities checked, so appropriate action can be taken in time to safeguard the software and improve its quality. Quality checks can prevent data leaks and strengthen the company’s cyber security.

5.6. Feature Selection and Performance Evaluation

Methods for feature selection: Feature selection is the process of picking out a dataset’s most important features. In specific situations, reducing the number of input variables is expected to improve the performance of the model while also lowering the computational cost of modeling. A high-dimensional feature set frequently contains redundant features, which are not actual features but rather extensions of other important features. These redundant features do not adequately support model training. To achieve the best predictive model performance, the most important and relevant features of a dataset must be extracted.

- Backward elimination: All the candidate features are added to the model at the beginning, and its performance is evaluated. The worst-performing features are then removed one at a time until the model’s overall performance reaches an acceptable level.

- Recursive feature elimination (RFE): This method works by iteratively removing features and building a model from those that remain. It uses an accuracy metric to rank the features according to their importance. The inputs to the RFE technique are the number of required features and the model to be used. It then gives the ranking of each variable, with 1 denoting the most important, and also gives the support, with True marking a relevant feature and False an irrelevant one.

The class predictions generated by the classifiers are displayed in a tabular format that distinguishes between correct and incorrect predictions, both positive and negative. This is called a confusion matrix [11]. Accuracy, precision, recall, and F-measure are a few commonly used metrics that can be computed from this matrix. The confusion matrix shown below is an example. The four cells of the matrix contain the true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN).

Log loss and cross-entropy: For a good binary classification model, the log loss should be close to zero. The log loss increases as the predicted value deviates from the actual value, so the smaller the log loss, the more accurate the model. The cross-entropy for binary classification is computed from the actual outcome (y) and the predicted outcome (p):

$$L = -\left(y \log p + (1 - y)\log(1 - p)\right)$$

Precision: The number of true positive samples relative to the total number of predicted positives.

Recall: The number of true positive samples relative to the total number of actual positive instances. F1-measure: The weighted average of precision and recall, which represents both measures. It may be preferred over accuracy when the classes are imbalanced.
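The metrics above follow directly from the four confusion-matrix counts. The sketch below computes accuracy, precision, recall, and F1 (as the harmonic mean of precision and recall), together with the binary log loss; the counts and probabilities are made-up values for illustration.

```python
import math

def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

def log_loss(y_true, p_pred, eps=1e-15):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(y_true)

# Hypothetical counts: 40 TP, 10 FP, 5 FN, 45 TN.
metrics = classification_metrics(tp=40, fp=10, fn=5, tn=45)
```

With these counts the accuracy is 0.85 while precision is 0.80 and recall is about 0.89, which shows why accuracy alone can hide the difference between the two error types.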

6. Result and Discussion

The results are divided into subsections: Section 6.1 discusses image data classification accuracy and evaluation, Section 6.2 discusses the validation of the various models used for personality classification based on MBTI factor data, Section 6.3 discusses the EEG emotion classification modules based on the DEAP dataset, and Section 6.4 evaluates the multi-model accuracy parameters.

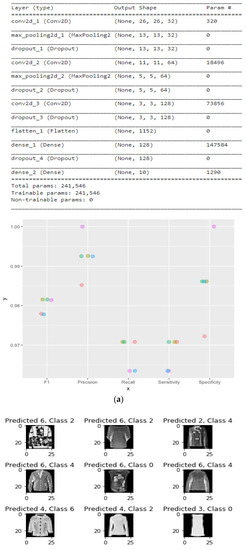

6.1. CBIR Result

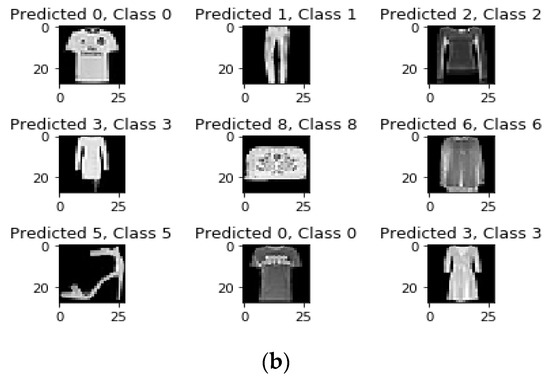

Since our dataset consists of images, it makes sense to use convolution layers for encoding and decoding. The encoder is a stack of Conv2D and MaxPooling2D layers (a max-pooling layer down-samples the spatial dimensions of the images by taking the maximum value over an input window for each channel of the input). The decoder is a stack of Conv2D and UpSampling2D layers (which up-sample the input by doubling its dimensions). The structure of our model is shown in Figure 5a. We normalize the pixel values to between 0 and 1 and use the original (samples, 3, 28, 28) shape of our input images for training. The model uses binary cross-entropy as the loss function and the Adam optimizer, and it is trained for 100 epochs with a batch size of 128. The change in loss over the epochs is shown in Figure 5 [13]. The model converges to a loss value of 0.2489 and is reasonably successful in encoding the images and reconstructing them, as shown in Figure 5b. To test the trained autoencoder in a practical scenario, a noise factor is added to the images to corrupt them slightly and see whether the autoencoder can recover the original images; the noisy images are shown in Figure 5. The autoencoder is fitted to the noisy data, and predictions are made accordingly. The model is reasonably successful in finding the right kind of clothing even with considerable noise, as shown in Figure 5b.

Figure 5.

(a) model summary (b) MNIST output.

To evaluate the model, we use the label_ranking_average_precision_score function from the scikit-learn library. This function takes two arrays as input: an array of zeros and ones, and an array of relevance scores. In our implementation, we compute the relevance score from the distance between the query image and the database images, so that a higher relevance score indicates a smaller distance between the images. The first array is populated using the following rule: for each image in the database, we append a “1” if the database image and the query image have the same label and a “0” otherwise. The scoring function returns its maximum score of 1 if the closest images have the same label as the query image; if images with a different label are found close to the query image, the score decreases. We compute the Euclidean distance between each query image’s features and the training dataset image features, and then apply label_ranking_average_precision_score to the results. The graph shows the results of our scoring mechanism: the y-axis corresponds to the score computed with the label ranking average precision function, and the x-axis corresponds to the first n results assessed, as shown in Figure 6 and Figure 7 [33].
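The scoring procedure can be followed by hand with a small, dependency-free sketch. It re-implements label ranking average precision for a single query instead of calling scikit-learn, and it assumes the negated Euclidean distance as the relevance score; the feature vectors and labels are hypothetical.

```python
import math

def relevance_scores(query, database):
    """Higher score for smaller Euclidean distance (negated distance, assumed)."""
    return [-math.dist(query, item) for item in database]

def ranking_average_precision(y_true, scores):
    """Label ranking average precision for a single query."""
    relevant = [i for i, y in enumerate(y_true) if y == 1]
    if not relevant or len(relevant) == len(y_true):
        return 1.0
    total = 0.0
    for j in relevant:
        rank = sum(1 for s in scores if s >= scores[j])             # position of item j
        hits = sum(1 for i in relevant if scores[i] >= scores[j])   # relevant items up to j
        total += hits / rank
    return total / len(relevant)

query_label, query = 3, [0.0, 0.0]
database = [[0.1, 0.0], [0.5, 0.5], [1.0, 1.0]]   # hypothetical image features
labels = [3, 7, 3]
y_true = [1 if lab == query_label else 0 for lab in labels]
score = ranking_average_precision(y_true, relevance_scores(query, database))
```

Here the second-closest image carries a different label, so the score falls below 1; it would be exactly 1 if both same-label images ranked ahead of it.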

Figure 6.

Training and validation curve.

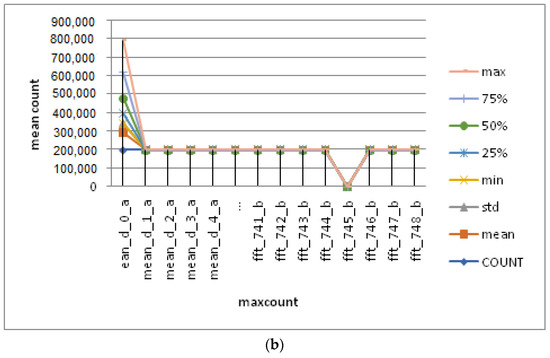

Figure 7.

Class and count instance comparison.

Here, we show the CNN’s preliminary findings (intermediate activations). Visualizing intermediate activations consists of displaying the feature maps output by the various convolutional layers in a network for a specific input (the output of a layer, that is, the output of its activation function, is often called its activation). This shows how the inputs are decomposed by the different filters the network has learned, as shown in Figure 6 [29,30]. In Figure 5a, dropout with probability p causes nodes to be removed. Consider an input x = {1, 2, 3, 4, 5} to the fully connected layer, with a dropout layer of probability p = 0.2 (that is, a retain probability of 0.8). During forward propagation in training, 20% of the nodes of the input x would be dropped, so x may become {1, 0, 3, 4, 5}, {1, 2, 0, 4, 5}, and so on. The same applies to the hidden layers. For the input layers, the retain probability (1 − drop probability) is typically around 0.8, as recommended by the authors. For the hidden layers, the higher the drop probability, up to a maximum of 0.5, the sparser the model becomes. In the original version of the dropout layer, each unit (node/neuron) in a layer is kept with the retain probability (1 − drop probability) during training. As a result, the architecture used for each training batch is thinner and differs each time.
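The dropout example with x = {1, 2, 3, 4, 5} and p = 0.2 can be reproduced with the short sketch below. It follows the original formulation described in the text, in which units are dropped during training and activations are scaled by the retain probability at inference; many frameworks instead rescale during training, which is not what is shown here. The seed and function name are arbitrary.

```python
import random

def dropout(values, drop_prob, rng, training=True):
    """Original-style dropout: mask units in training, scale by keep_prob at inference."""
    keep_prob = 1.0 - drop_prob
    if training:
        # Each unit survives independently with probability keep_prob.
        return [v if rng.random() < keep_prob else 0.0 for v in values]
    # At inference every unit is kept, scaled to match its expected training value.
    return [v * keep_prob for v in values]

rng = random.Random(42)
x = [1.0, 2.0, 3.0, 4.0, 5.0]
dropped = dropout(x, drop_prob=0.2, rng=rng)              # some entries may become 0
inference = dropout(x, drop_prob=0.2, rng=rng, training=False)
```

Because units are dropped independently, the number of zeros varies from batch to batch; 20% is the expected proportion, not a fixed count.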

The following steps are taken to manage unbalanced data:

The theoretical foundations of over- and under-sampling are fairly straightforward. With under-sampling, we equalize the number of samples in each class by randomly choosing a subset of samples from the class with more occurrences. In our example, 241 of the 458 benign cases were chosen at random. The biggest drawback of under-sampling is that the samples that are left out may contain useful information. Oversampling equalizes the number of samples in each class by randomly duplicating samples from the class with fewer instances, or by creating additional instances based on the data we already have. This approach prevents information loss, but it also puts our model at risk of overfitting, because the model is trained repeatedly on copies of the same samples, so predictions and accuracy may still reflect the imbalanced data, as demonstrated in Figure 5a.
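Both strategies can be sketched in a few lines of NumPy. The helper names, the random seed, and the feature matrix below are hypothetical; the class sizes (458 and 241) follow the example in the text, with the size of the smaller class assumed to be 241.

```python
import numpy as np


def random_undersample(X, y, rng):
    """Keep a random subset of every class, sized to the smallest class."""
    classes, counts = np.unique(y, return_counts=True)
    n_min = counts.min()
    keep = np.concatenate([
        rng.choice(np.flatnonzero(y == c), size=n_min, replace=False)
        for c in classes
    ])
    return X[keep], y[keep]


def random_oversample(X, y, rng):
    """Duplicate random samples of every class up to the largest class size."""
    classes, counts = np.unique(y, return_counts=True)
    n_max = counts.max()
    parts = []
    for c, n in zip(classes, counts):
        members = np.flatnonzero(y == c)
        # Draw the missing samples with replacement (duplicates).
        extra = rng.choice(members, size=n_max - n, replace=True)
        parts.append(np.concatenate([members, extra]))
    keep = np.concatenate(parts)
    return X[keep], y[keep]


rng = np.random.default_rng(42)                 # hypothetical seed
y = np.array([0] * 458 + [1] * 241)             # 0 = benign, 1 = other class
X = rng.normal(size=(y.size, 5))                # placeholder features
X_under, y_under = random_undersample(X, y, rng)
X_over, y_over = random_oversample(X, y, rng)
```

Resampling of this kind is normally applied to the training split only, so that duplicated samples do not leak into the evaluation data.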

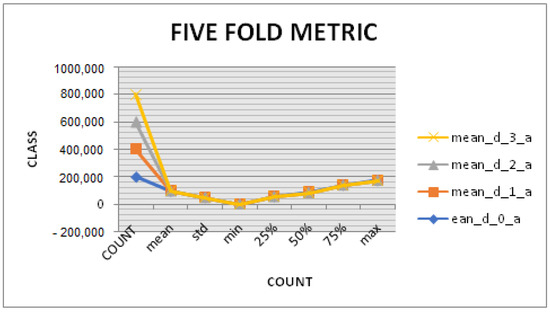

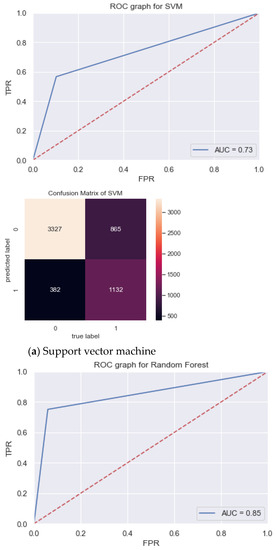

6.2. Personality Classification

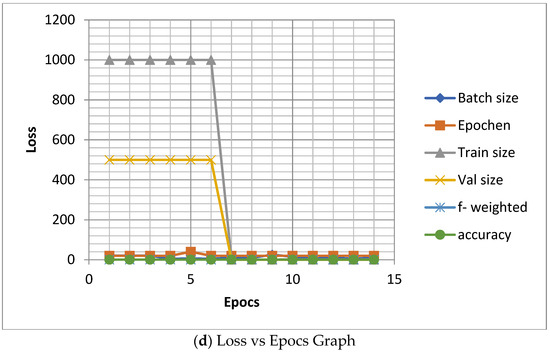

We have portrayed the MBTI-based personality classification based on five-fold cross-validation metrics, where the length of each class is depicted as the width, size, concatenated, and terminal transitions. A total of 6000 examples of the various personality types are shown in the length portion of the right column of the data set. We applied principal component analysis to the basic state set to obtain its entropy in terms of its cross-validation and train/test split data sets. Additionally, the confusion matrices and classification reports, which depict the precision and recall, were generated in a Python Jupyter notebook. Figure 8 shows the confusion matrices and ROC graphs of the different classifiers used for personality type classification: a support vector machine, a random forest, and a decision tree. For personality type classification, the random forest has the highest accuracy, with a precision of 0.79, a recall of 0.90, an F-score of 0.84, and a support of 3709 to 5706. Figure 9 shows the confusion matrix at the positive predictive level, indicating the personality types with the most instances. The most frequent is depicted as the IMFG personality type in the personality distribution metrics, and the least frequent as the ESFJ personality type. We show the classification reports of the three classifiers used for MBTI personality type classification (Table 2). The random forest achieves the highest accuracy of 87%, the decision tree induction model achieves a moderate accuracy of 84%, and the support vector machine gives an average accuracy of 78%. These results are validated with five-fold cross-validation accuracy metrics. The precision-recall and weighted metrics show the difference between predicted and correctly classified instances, as shown in Figure 8d [34].
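A five-fold cross-validated comparison of the three classifiers can be sketched with scikit-learn as follows. This is only an illustration under stated assumptions: the data are synthetic (generated with make_classification, not the MBTI data set), the hyperparameters are arbitrary, and the resulting accuracies are not the values reported in Table 2.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the vectorized personality data (assumption).
X, y = make_classification(n_samples=300, n_features=20, n_informative=8,
                           n_classes=4, random_state=0)

classifiers = {
    "support vector machine": SVC(),
    "random forest": RandomForestClassifier(n_estimators=50, random_state=0),
    "decision tree": DecisionTreeClassifier(random_state=0),
}

# Mean accuracy over five stratified folds for each classifier.
cv_accuracy = {
    name: cross_val_score(clf, X, y, cv=5, scoring="accuracy").mean()
    for name, clf in classifiers.items()
}

for name, acc in cv_accuracy.items():
    print(f"{name}: {acc:.3f}")
```

Using the same folds and the same scoring metric for every classifier keeps the comparison consistent across models.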

Figure 8.

Model comparison: (a) Support vector machine, (b) Random forest, (c) Decision tree, (d) Loss vs. Epochs graph.

Figure 9.

Correctly classified personality type.

Table 2.

Comparison of different classifiers.

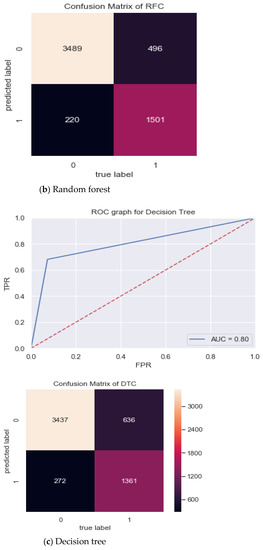

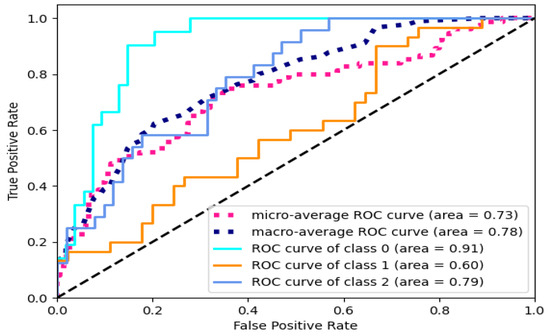

6.3. EEG Emotion Classification

In this work, we explored an end-to-end model while minimizing the preprocessing stage, to ensure the accuracy of emotion recognition in general. In addition, we proposed a paradigm to improve emotion recognition accuracy by supporting more than two levels of emotion recognition and using the evolution of emotion over time. To this end, we proposed two emotion classification models: one that incorporates an attention mechanism into an LSTM, and another that combines an attention LSTM with a CNN. From our analysis and experimentation, we concluded that the CNN-LSTM model and the two-level classification performed similarly, except for the attention-based classification, which showed a performance improvement of about 1.6–1.7%. The results of 10-fold cross-validation and three-level arousal classification are shown in Figure 10. The average accuracy over the 10-fold cross-validation was 91.64 ± 0.34%. For arousal, the accuracy was 82.28 ± 2.07% for the low class, 92.78 ± 0.94% for the middle class, and 93.39 ± 0.92% for the high class. Overall, the LSTM + Attention + CNN model performs better under 10-fold cross-validation than under four-fold cross-validation, with the middle-class result improving most noticeably. In four-fold cross-validation, the average arousal and valence accuracies were 84.1% and 86.93%, respectively. Compared with four-fold cross-validation, arousal accuracy increased by 7.54% and valence accuracy by 4.84%, although both were generally disappointing, as illustrated in Figure 11 [35].
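To make the role of the attention mechanism concrete, the sketch below pools a sequence of recurrent hidden states into a single context vector. The form of attention is not specified in the text, so additive (tanh) attention is assumed here; the function name, the parameter shapes, and the random arrays standing in for LSTM outputs and learned weights are all hypothetical.

```python
import numpy as np


def attention_pool(hidden_states, W, v):
    """Additive attention over time steps.

    hidden_states: (T, d) sequence of hidden states (e.g., LSTM outputs)
    W: (d, a) projection matrix, v: (a,) scoring vector
    Returns the (d,) context vector and the (T,) attention weights.
    """
    scores = np.tanh(hidden_states @ W) @ v        # one score per time step
    scores = scores - scores.max()                 # numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()  # softmax over time
    context = weights @ hidden_states              # weighted sum of states
    return context, weights


rng = np.random.default_rng(0)
H = rng.normal(size=(10, 16))      # 10 time steps, 16 hidden units (stand-in)
W = rng.normal(size=(16, 8))       # stand-in for learned parameters
v = rng.normal(size=8)
context, weights = attention_pool(H, W, v)
```

The context vector would then be passed to the classification layer, so time steps with larger weights contribute more to the predicted emotion level.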

Figure 10.

(a) Confusion matrix of the DEAP dataset; (b) mean count validation on result.

Figure 11.

ROC graph of logistic regression classifier on the DEAP dataset.

Table 3 shows the different parameters used for the analysis of the proposed method, along with those of other methods. Concerning architecture complexity, the system as a whole would not exist without enough internal complexity to keep it running and maintain its structural integrity. The visual surface information of some man-made architectural designs is kept low for stylistic reasons; however, hiding complexity is not being honest about the design. What do simplicity and complexity mean in architecture? Because simplicity and complexity are typically seen as the two extreme ends of the same continuum, it is very difficult to produce pure simplicity or pure complexity without the two coexisting. Typically, the word “simplicity” is used to describe architecture. How long a memory can persist without being retrieved is determined by a long-term memory characteristic called stability of memory. The probability of forgetting in a given amount of time depends on stability (the higher the stability, the lower the probability). Qualitative data is not based on formal structures because it is non-statistical and frequently semi-structured or unstructured. It is not always necessary to quantify these data to produce graphs and charts. Instead, they are organized into groups based on their qualities, traits, labels, and other identifiers. Qualitative data can be used to address the “why” question. It is an exploratory process that frequently leaves an opportunity for additional research. These qualitative research findings are useful for theorizing, analyzing, developing hypotheses, and starting to understand something [33,34,35].

Table 3.

Comparison with other state-of-the-art methods.

6.4. Data Fusion Result of Multimodality

Machine learning and multimodal data fusion have a variety of use cases, as was previously mentioned. The essential elements for creating multimodal data fusion models are summarized here for clarity. In the following section of this review, we cover the topics that need to be taken into account before beginning data fusion research and development. The data fusion method, as described before, merges data from several modalities using machine learning, deep learning, or even basic arithmetic operations (e.g., simple concatenation). Fusion can occur at various stages of a modeling process and is primarily carried out at three levels: early fusion, late fusion, and joint fusion. Early fusion is the process of merging the features of the different modalities at the input layer of the model, primarily by combining the various forms of data before applying a particular technique (Figure 1). A challenge in early fusion is that the data can be difficult to merge when the formats of the modalities differ significantly, for instance when tabular data must be mixed with other forms of data. To assess the added performance that results from data fusion, multimodal ML models are often compared with models that use fewer data modalities. In general, evaluation metrics across ML domains are comparable and include assessments of accuracy, positive predictive value, negative predictive value, specificity, sensitivity, calibration, AUC, and AUCPR. The dataset and the study’s objectives play a large role in determining which evaluation metric to use. Bringing together data from several sources with various intrinsic distributions and degrees of structure can be difficult. Data fusion techniques try to combine several data observations into a coherent, varied depiction of an event in a way that a single modality cannot. However, noisy and irrelevant data that could impair model performance present a barrier to fusion itself.
Table 4 and Table 5 describe the specific multimodal results: Table 4 shows the multimodal evaluation on different metrics, and Table 5 shows the model summary used in data fusion [22].
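The difference between early and late fusion can be sketched in NumPy as follows. The function names, the feature dimensions, and the probability values are hypothetical and serve only to illustrate the two operations; joint fusion, which learns the combined representation inside the model, is not shown.

```python
import numpy as np


def early_fusion(*modalities):
    """Concatenate per-sample feature vectors from each modality."""
    return np.concatenate(modalities, axis=1)


def late_fusion(*class_probs, weights=None):
    """Average the class probabilities predicted by per-modality models."""
    stacked = np.stack(class_probs, axis=0)   # (n_models, n_samples, n_classes)
    return np.average(stacked, axis=0, weights=weights)


# Early fusion: image and EEG features for the same 4 samples (stand-ins).
rng = np.random.default_rng(0)
image_feats = rng.normal(size=(4, 8))
eeg_feats = rng.normal(size=(4, 3))
fused_input = early_fusion(image_feats, eeg_feats)

# Late fusion: probabilities from two separately trained models (stand-ins).
p_image = np.array([[0.8, 0.2], [0.4, 0.6]])
p_eeg = np.array([[0.6, 0.4], [0.2, 0.8]])
fused_probs = late_fusion(p_image, p_eeg)
```

Early fusion requires the modalities to be aligned per sample before training, whereas late fusion only requires that the per-modality models predict over the same classes.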

Table 4.

Multi-model evaluation metrics.

Table 5.

Multi-model summary.

7. Conclusions and Future Scope

We have used the Fashion MNIST dataset in our implementation, which is similar to the original MNIST dataset for benchmarking machine learning applications. The dataset contains images for ten different labels: T-shirt/top, trouser, pullover, dress, coat, sandal, shirt, sneaker, bag, and ankle boot. It contains 60,000 training examples and 10,000 test examples consisting of 28 × 28 grayscale images of these ten clothing items. For our proposed solution, we have trained a convolutional denoising autoencoder, which can encode and decode an input image with some level of noise (a slight difference from the original image). Since our model is already trained on the ten items of clothing in the Fashion MNIST dataset, it will be able to decode any input image that closely resembles the features (color, texture, shape) of those clothing items. This way, we can compare any input image with all the images in our database according to their encoded features and filter out all those images whose features resemble it. The proposed work might not just change the landscape of the fashion industry, but also provide the foundation for shaping the e-commerce industry in a whole new way. If online shopping could be made more intelligent and interactive, it could become the new normal. People may opt out of going to physical stores entirely and choose to buy what they desire with a click from the comfort of their own homes. Additionally, we have proposed and tested the ECG wavelet on the AMIGOS dataset for preference segmentation. This research area opens up various decision support systems for business perspectives as a research opportunity.

8. Limitation of Proposed Work

This technique needs extensive domain knowledge because the feature representation of the items is hand-engineered to some extent. Therefore, the quality of the model is limited by the quality of the hand-engineered features. The model can give recommendations only on the basis of the user’s current interests. In other words, the model has limited ability to expand on the consumers’ existing interests. In addition, a combination of impairments can increase the complexity of a multimodal system to the point where it becomes impossible to produce a workable solution.

Author Contributions

Conceptualization, A.S., A.B., N.G., N.K.S., N.G. and J.K.; methodology, N.G. and A.B.; software, A.S., A.B. and N.K.S.; validation, A.S., A.B., N.G. and N.K.S.; formal analysis, A.B. and J.K.; investigation, A.S. and N.G.; resources, A.S.; data curation, A.S., A.B., J.K., N.K.S. and N.G.; writing—original draft preparation, A.S., A.B., N.G. and N.K.S.; writing—review and editing, A.S.; visualization, A.S., A.B., J.K. and N.K.S.; supervision, A.B. and J.K.; project administration, A.B. and J.K.; funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data may be available upon request.

Acknowledgments

We want to acknowledge the group involved in this project for providing us with tools for implementation.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ahmad, H.; Asghar, M.U.; Asghar, M.Z.; Khan, A.; Mosavi, A.H. A Hybrid Deep Learning Technique for Personality Trait Classification from Text. IEEE Access 2021, 9, 146214–146232.

- Ali, I.; Lashari, H.N.; Hassan, S.M.; Maitlo, A.; Qureshi, B.H. Image Denoising with Color Scheme by Using Autoencoders. Int. J. Comput. Sci. Netw. Secur. 2018, 18, 5.

- Anju, J.; Shreelekshmi, R. FSeCBIR: A Faster Secure Content-Based Image Retrieval for Cloud. Softw. Impacts 2022, 11, 100224.

- Babenko, A.; Slesarev, A.; Chigorin, A.; Lempitsky, V. Neural Codes for Image Retrieval. arXiv 2014, arXiv:1404.1777 [cs]. Available online: http://arxiv.org/abs/1404.1777 (accessed on 30 November 2021).

- Chen, Y.; Wang, J.Z.; Krovetz, R. An unsupervised learning approach to content-based image retrieval. In Proceedings of the Seventh International Symposium on Signal Processing and Its Applications, 2003, Paris, France, 4 July 2003; Volume 1, pp. 197–200.

- Christian, H.; Suhartono, D.; Chowanda, A.; Zamli, K.Z. Text based personality prediction from multiple social media data sources using pre-trained language model and model averaging. J. Big Data 2021, 8, 68.

- Dagnew, T.M.; Castellani, U. Advanced Content Based Image Retrieval for Fashion. In Image Analysis and Processing—ICIAP; Murino, V., Puppo, E., Eds.; Springer International Publishing: Cham, Switzerland, 2015; Volume 9279, pp. 705–715.

- Deshmane, M.; Madhe, S. ECG Based Biometric Human Identification Using Convolutional Neural Network in Smart Health Applications. In Proceedings of the 2018 Fourth International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 16–18 August 2018; pp. 1–6.