Interfaces for Searching and Triaging Large Document Sets: An Ontology-Supported Visual Analytics Approach

Abstract

1. Introduction

- What are the criteria for the design of visual analytics tool (VAT) interfaces that support the process of searching and triaging large document sets?

- If these criteria can be distilled, can they be used to help guide the design of a VAT interface that integrates progressive disclosure and ontology support elements in multi-stage information-seeking tasks?

2. Background

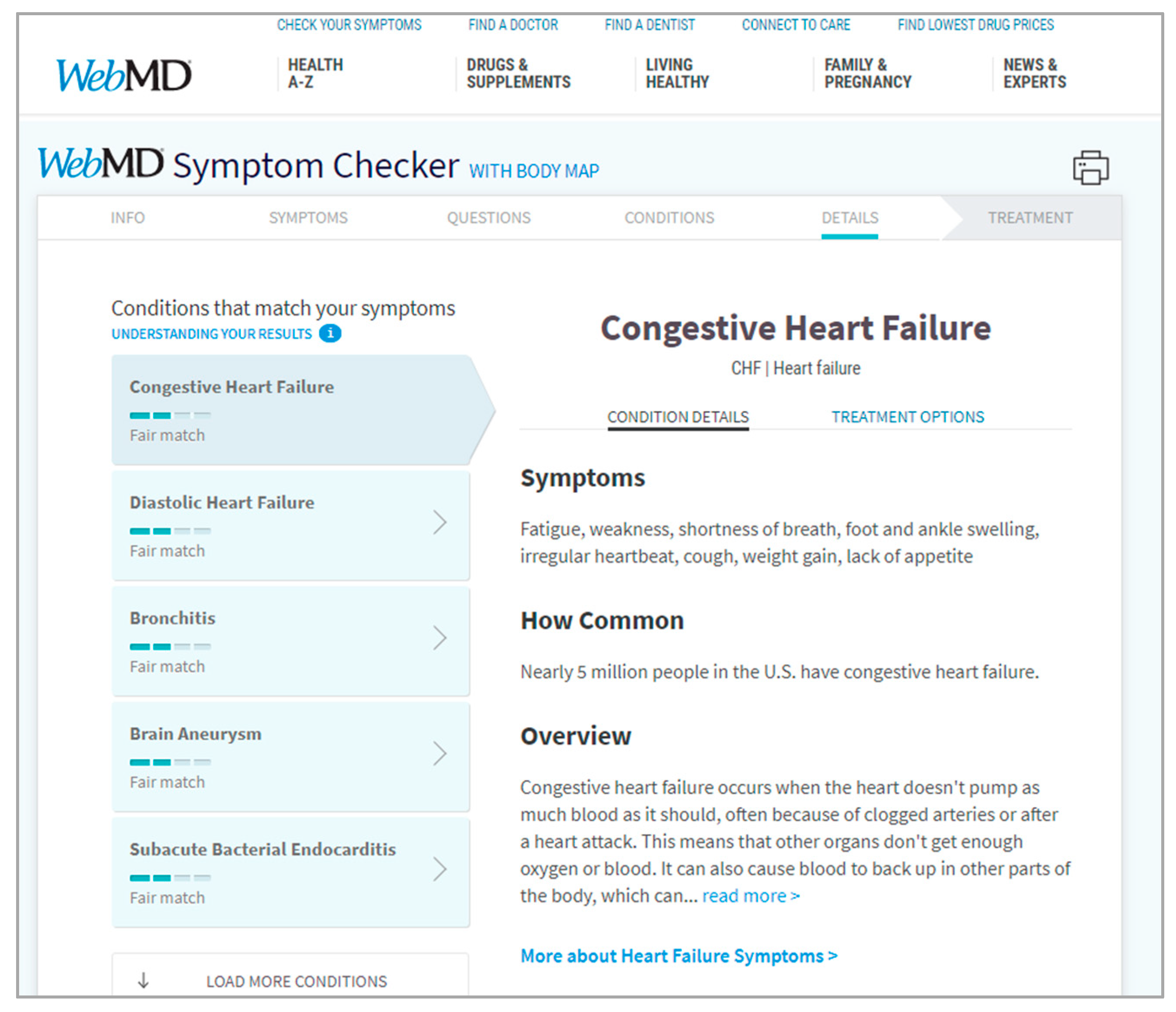

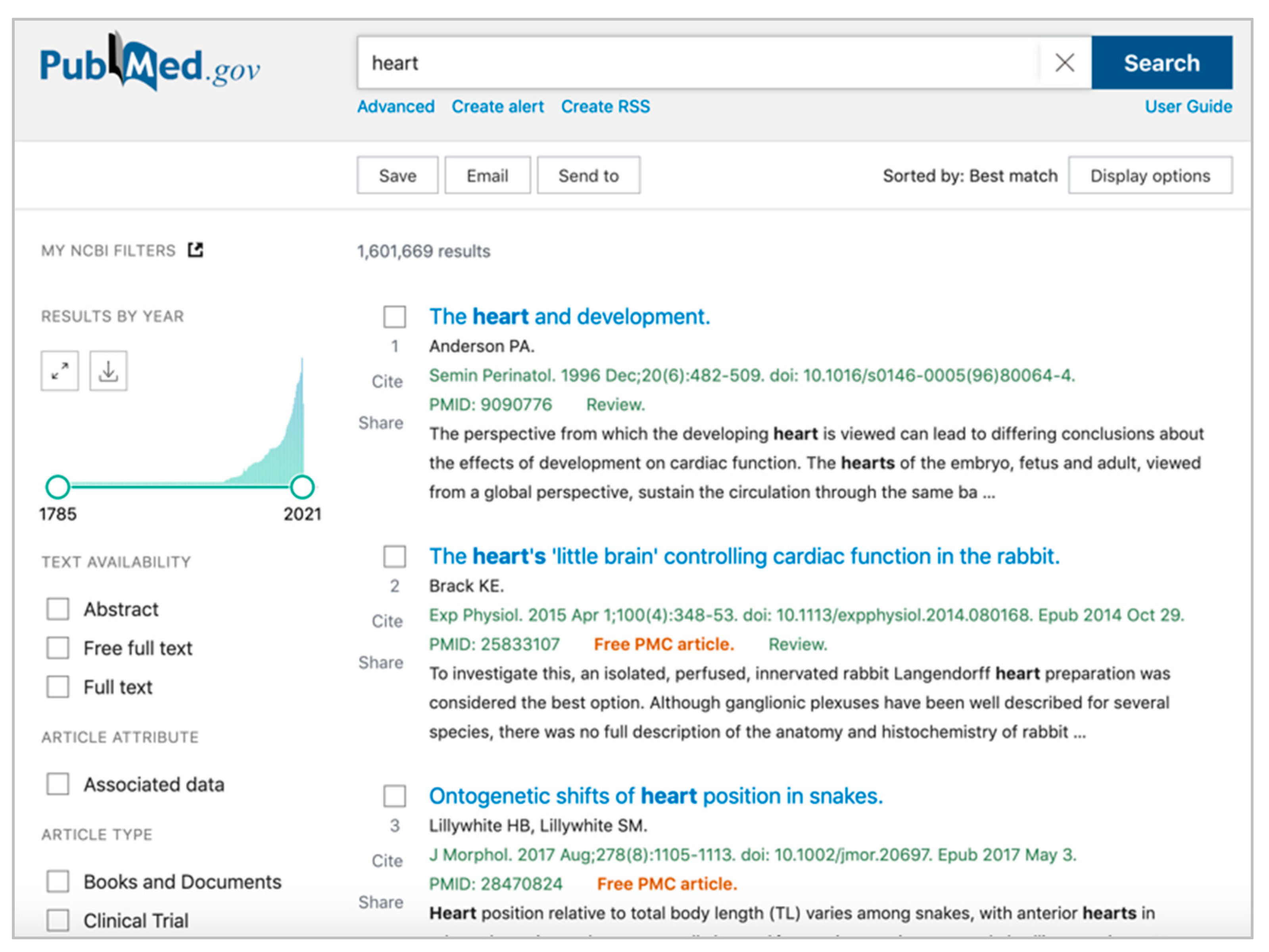

2.1. Information Search and Triage

2.2. Machine Learning

- Users specify their needs as a set of terms understood by the tool.

- Users ask the tool to apply these terms as input features within its computational components.

- The tool performs computation to map the features against the document set.

- The tool displays the computational results and how it arrived at them.

- Users assess whether they are satisfied with the results or would like to adjust their set of terms to generate an alternate mapping.

- Users either restart the interaction loop or complete the task.
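The interaction loop listed above can be sketched in a few lines of Python. This is a minimal illustration, not the tool's implementation: the function names (`map_terms`, `explain`, `interaction_loop`), the `adjust` callback that stands in for the user, and the toy documents are all assumptions, and plain term counting stands in for the ML computation.

```python
import re
from collections import Counter


def map_terms(terms, documents):
    """Computation step: count how often each term occurs in each document."""
    mapping = {}
    for doc_id, text in documents.items():
        tokens = Counter(re.findall(r"[a-z]+", text.lower()))
        mapping[doc_id] = {term: tokens[term] for term in terms}
    return mapping


def explain(mapping):
    """Display step: rank documents and keep the per-term counts behind the ranking."""
    ranked = sorted(mapping, key=lambda d: sum(mapping[d].values()), reverse=True)
    return [(doc_id, mapping[doc_id]) for doc_id in ranked]


def interaction_loop(initial_terms, documents, adjust, max_rounds=5):
    """Repeat specify -> compute -> display -> assess until the user is satisfied.

    `adjust` is a hypothetical callback standing in for the user: it receives the
    current terms and displayed results, and returns a new term list or None.
    """
    terms = list(initial_terms)
    results = []
    for _ in range(max_rounds):
        results = explain(map_terms(terms, documents))
        new_terms = adjust(terms, results)
        if new_terms is None:  # user is satisfied: complete the task
            break
        terms = new_terms      # user adjusts terms: restart the loop
    return terms, results


# Toy document set (invented for illustration).
docs = {
    "d1": "Seizure onset in infancy with recurrent seizure episodes",
    "d2": "Hearing loss and delayed speech",
    "d3": "Febrile seizure and hearing impairment",
}


def simulated_user(terms, results):
    # Adds "hearing" once, then accepts the results.
    return None if "hearing" in terms else terms + ["hearing"]


final_terms, final_results = interaction_loop(["seizure"], docs, simulated_user)
```

Returning the per-term counts alongside the ranking is one simple way to show "how the tool arrived at" its results; a real tool would expose whatever evidence its own computation produces.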

2.3. Ontologies

3. Methods

3.1. Literature Search

3.1.1. Search Strategy

3.1.2. Inclusion and Exclusion Criteria

3.1.3. Selection and Analysis

3.1.4. Results

3.2. Task Analysis

3.2.1. Models of Information-Seeking Process and Progressive Disclosure

3.2.2. Stages of Query Building and Search

- Difficulty understanding the domain being searched.

- Inability to apply domain expertise.

- Inability to accurately formulate queries matching information-seeking objectives.

- Difficulty assessing whether search results satisfy objectives or whether adjustments are required.

- Users are people, not oracles.

- Users should not be expected to repeatedly answer if ML results are right or wrong without an opportunity to explore and understand the results.

- Users tend to give more positive than negative feedback to interactive ML.

- Users need a demonstration of the behavior of ML components.

- Users value transparency in ML components of tools, as transparency helps users provide better labels to ML components.

3.2.3. Stages of High-Level and Low-Level Triage

- Underload documents: little to no content of each document is displayed, making it difficult to compare documents.

- Overload documents: too much of each document is displayed, making it difficult to rapidly understand each document.

- Distort documents: a summarization, weighting, or filtering strategy demotes or promotes certain document attributes. This brings tradeoffs: some attributes become easier to perceive, but the strategy can also create poor, decontextualized generalizations, hide valuable content, and sometimes promote harmful attributes.
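To make the three display strategies concrete, the sketch below contrasts them on a single toy document. The function names, the document fields (`title`, `body`), and the sentence-scoring rule are assumptions chosen for illustration only; they are not taken from any specific triage tool.

```python
import re


def underload(doc):
    """Show only the title: compact, but gives little basis for comparison."""
    return doc["title"]


def overload(doc):
    """Show everything: complete, but slow to scan."""
    return f'{doc["title"]}\n{doc["body"]}'


def distort(doc, terms, max_sentences=1):
    """Show the title plus the sentences mentioning the most query terms.

    Term-bearing sentences are promoted and the rest demoted, so surrounding
    context can be lost.
    """
    sentences = re.split(r"(?<=[.!?])\s+", doc["body"].strip())

    def score(sentence):
        words = re.findall(r"[a-z]+", sentence.lower())
        return sum(words.count(term) for term in terms)

    best = sorted(sentences, key=score, reverse=True)[:max_sentences]
    return f'{doc["title"]}\n' + " ".join(best)


# Toy document (invented for illustration).
doc = {
    "title": "Case report",
    "body": "The patient was admitted in May. "
            "Seizure activity was recorded twice. "
            "Hearing was normal.",
}

preview = distort(doc, ["seizure"])
```

Here `preview` keeps the one sentence about seizures and drops the admission date and the hearing result, which is exactly the kind of tradeoff the third strategy carries.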

3.3. Design Criteria

4. Materials

4.1. Technical Scope

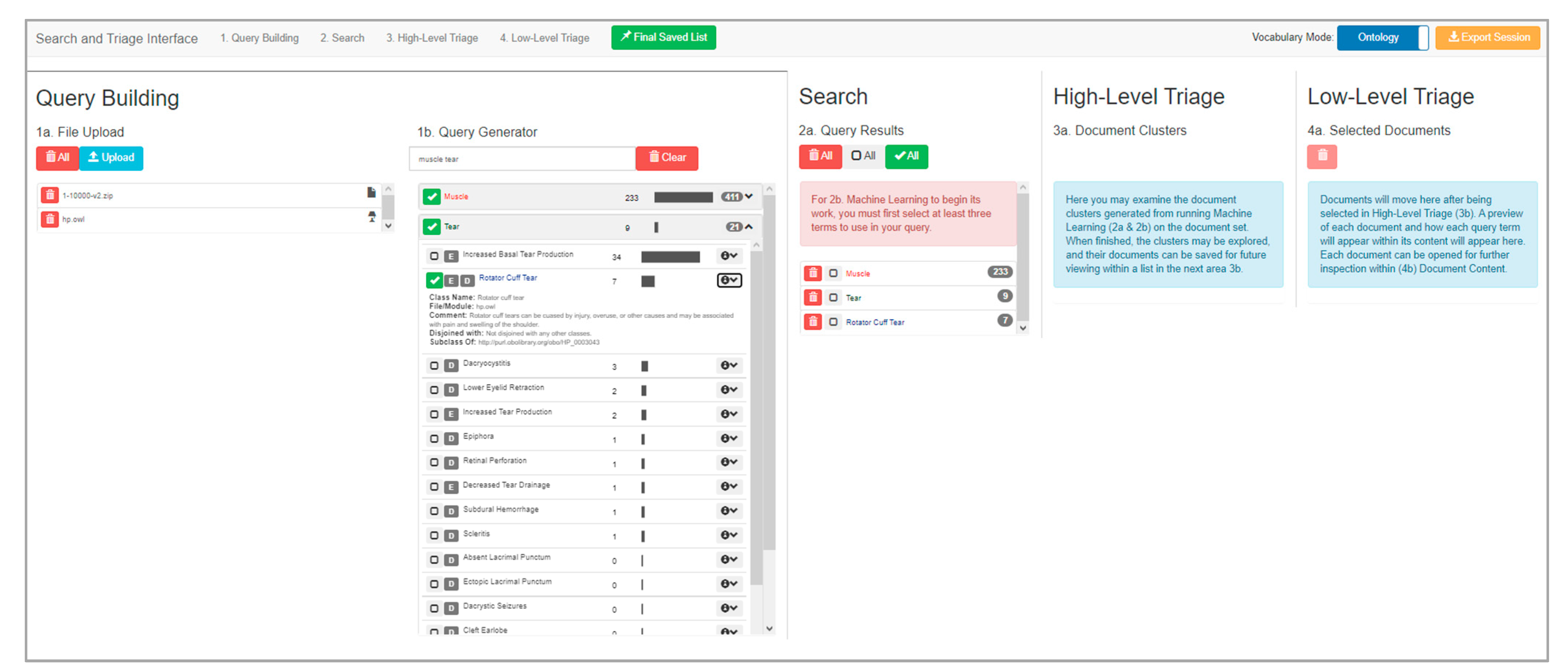

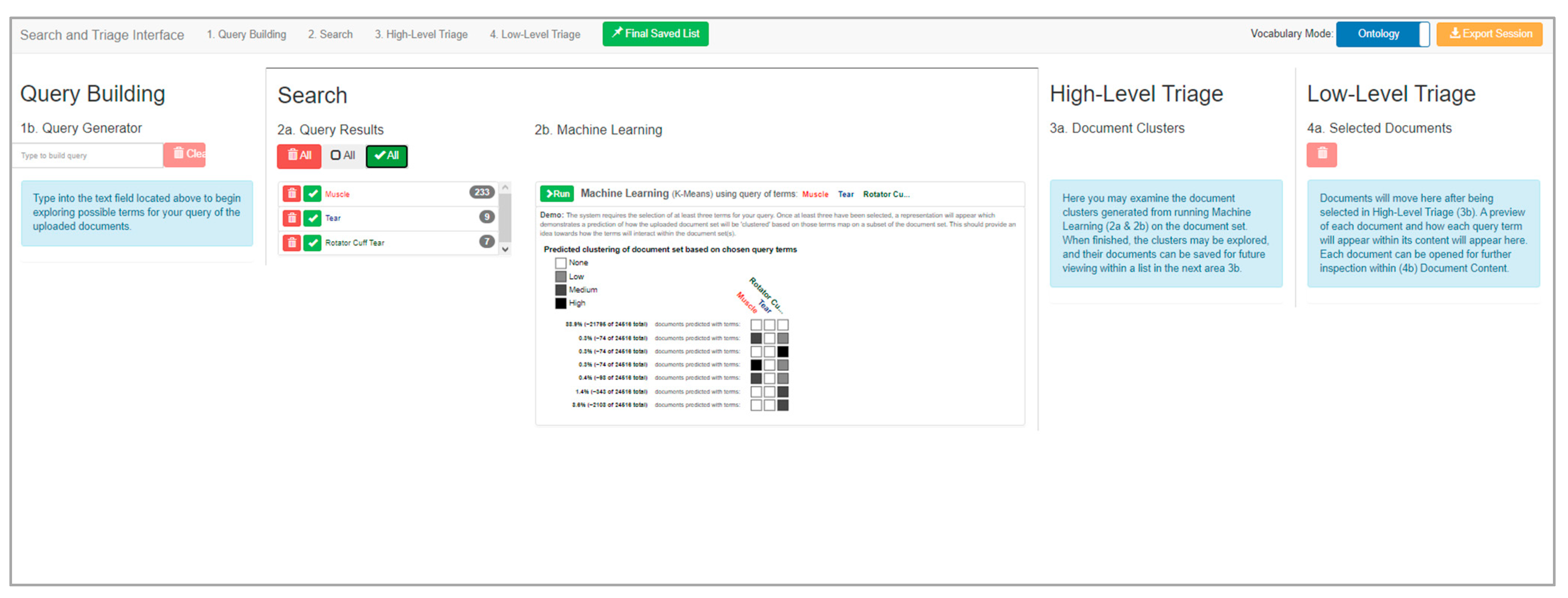

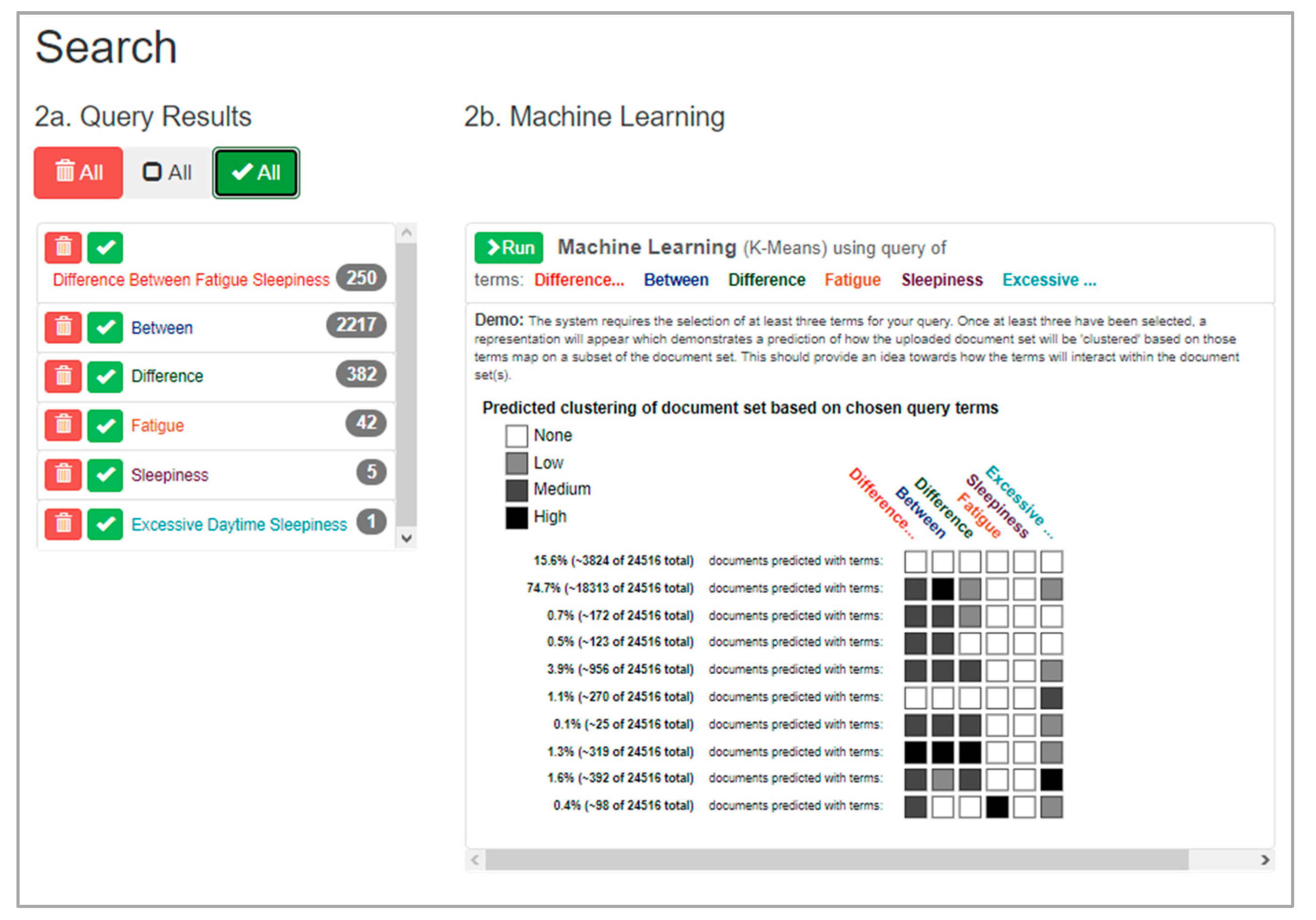

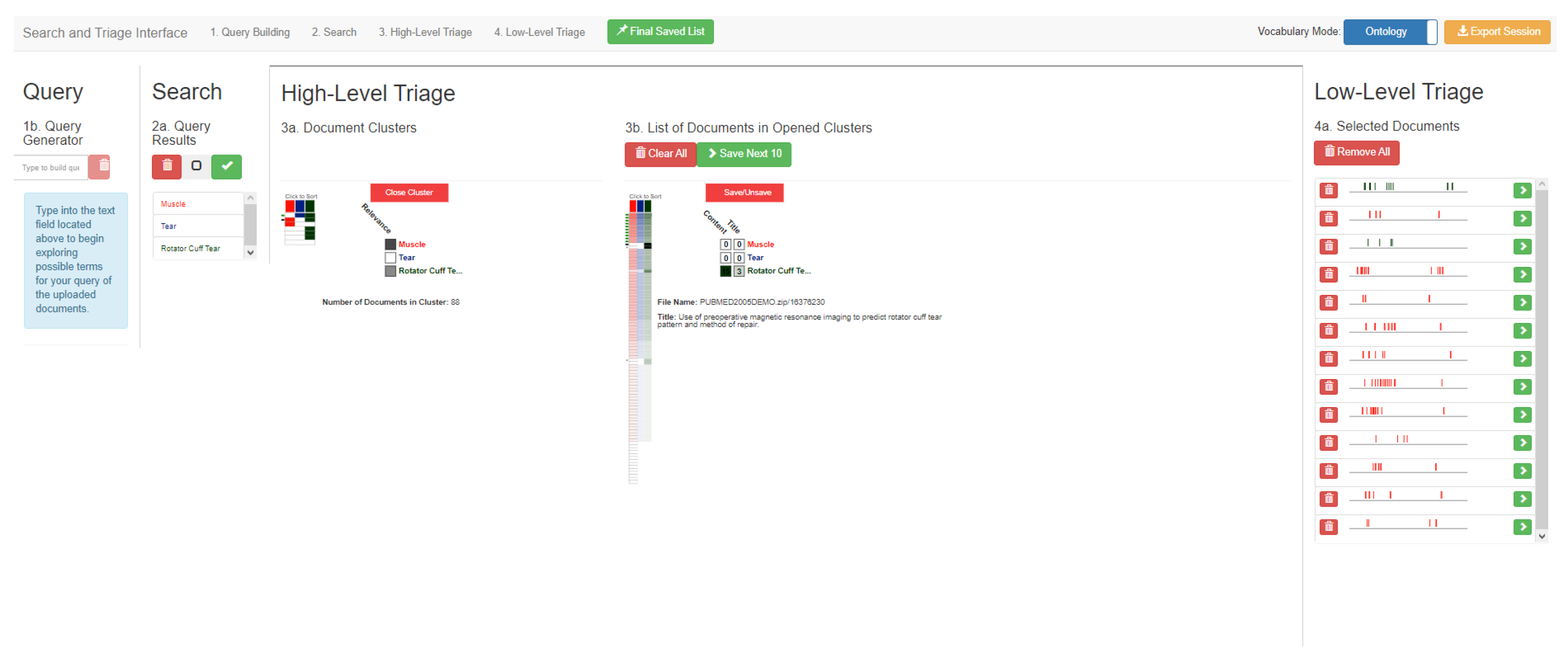

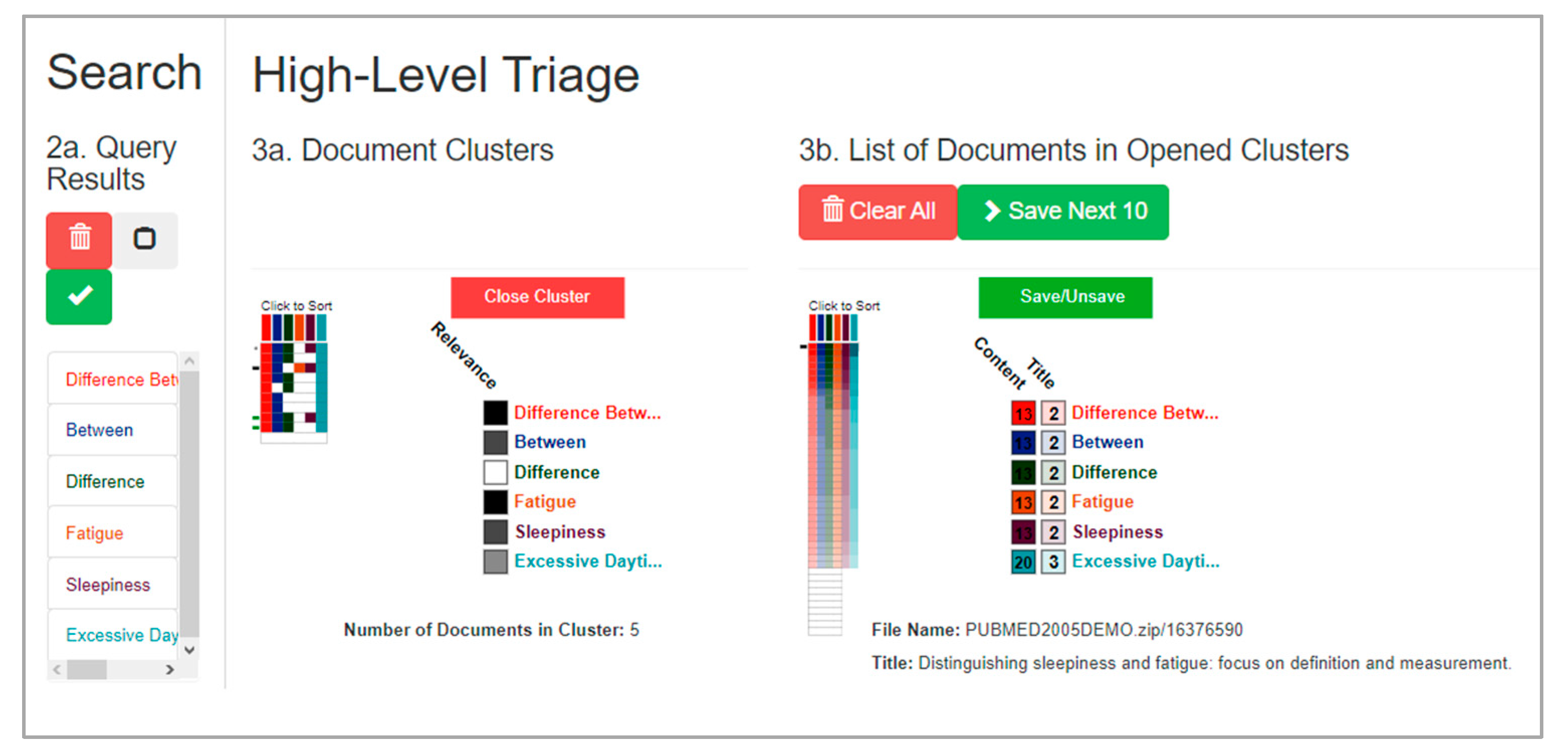

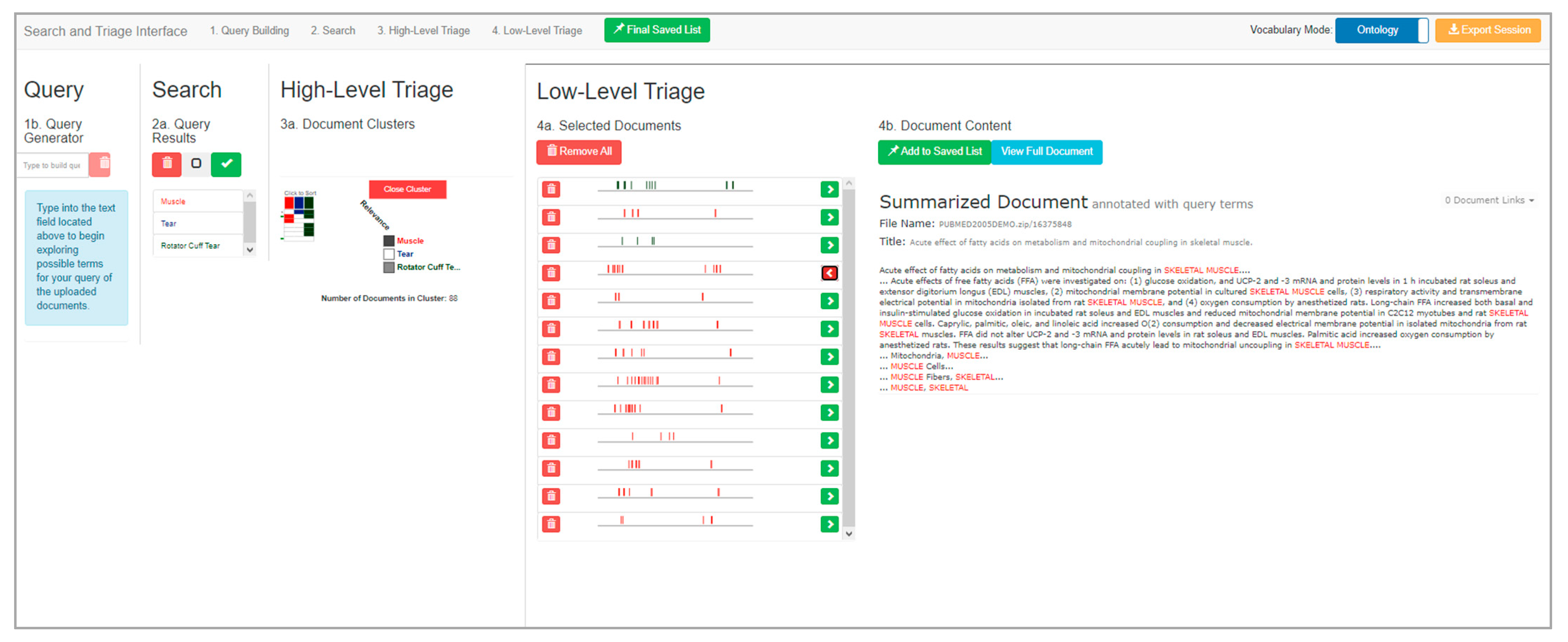

4.2. VisualQUEST Functional Workflow

4.3. Back-End Systems

4.3.1. Analytics Server

Algorithm 1. Pseudocode of clustering functionality spanning the workflow of VisualQUEST (front-end), Analytics Server, and Document Server.

    Input: A set Q of user inputted queries.
    Output: Signal to update interface with cluster assignments

    targets ← chain(Q).unique().difference(getStopWords())
    documents ← getDocuments()

    /* Prepare bag of words using target, related entities, and their generated WordNet synsets */
    for i = 0 to targets.length do
        target = targets[i]
        targetCoverage ← target + target.getDirectlyRelatedEntities()
        targetSpread[target] ← targetCoverage + targetCoverage.getWordNetSynsets()
        targetSpread[target] ← targetSpread[target].unique().difference(getStopWords())

    /* Gather counts from pre-indexed documents, then fit and predict clusters using scikit-learn KMeans clustering */
    documentCounts ← getIndexesFromSolrAPI(targetSpread, documents).scaleRange(0, 1)
    reducedPCA ← SciKitLearn.PCA(nComponents = 2).fit_transform(documentCounts)
    kmeansPCA ← SciKitLearn.KMeans(init = 'k-means++', nClusters = 7, nInit = 10)
    clusterAssignments ← kmeansPCA.fit_predict(reducedPCA)

    for i = 0 to targets.length do
        target = targets[i]
        for j = 0 to clusterAssignments.length do
            cluster = clusterAssignments[j]
            yPred = cluster.yPred[target]
            /* Generate weighting scale using x5 multiplier */
            clusterAssignments[j].weighting[target] ← generateClusterWeighting(yPred)

    return signalInterfaceUpdate(clusterAssignments)
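The scaling, PCA, and k-means steps of Algorithm 1 map onto scikit-learn roughly as follows. This is a sketch under stated assumptions: the count matrix is synthetic (in the pseudocode it comes from the Solr index), the scaling is applied per term column (the pseudocode does not say whether `scaleRange` works per column or globally), and `random_state` is added here only to make the run repeatable. The parameter values for PCA and KMeans follow the pseudocode.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA
from sklearn.preprocessing import MinMaxScaler

# Synthetic stand-in for per-document term counts
# (rows: documents, columns: terms). Invented for illustration.
rng = np.random.default_rng(0)
document_counts = rng.integers(0, 20, size=(40, 5)).astype(float)

# Scale each term column into the range [0, 1] (per-column scaling is an assumption).
scaled = MinMaxScaler(feature_range=(0, 1)).fit_transform(document_counts)

# Reduce to two principal components, as in the pseudocode.
reduced = PCA(n_components=2).fit_transform(scaled)

# Fit k-means with the parameters named in the pseudocode and assign clusters.
kmeans = KMeans(init="k-means++", n_clusters=7, n_init=10, random_state=0)
cluster_assignments = kmeans.fit_predict(reduced)
```

`cluster_assignments` holds one cluster label per document. The per-target weighting step at the end of Algorithm 1 is not reproduced here because the pseudocode does not define `generateClusterWeighting`.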

4.3.2. Document Server

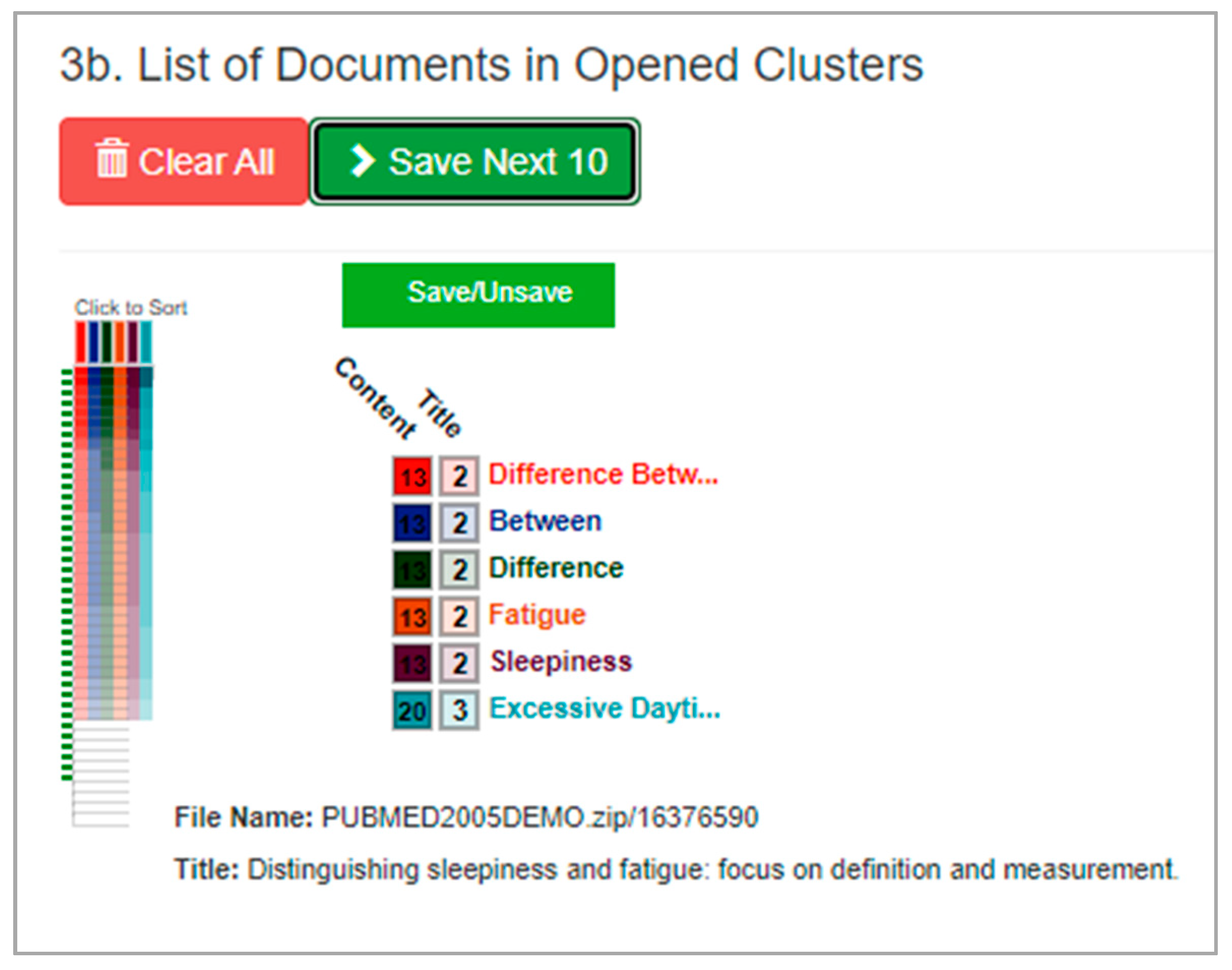

4.4. Front-End Subviews

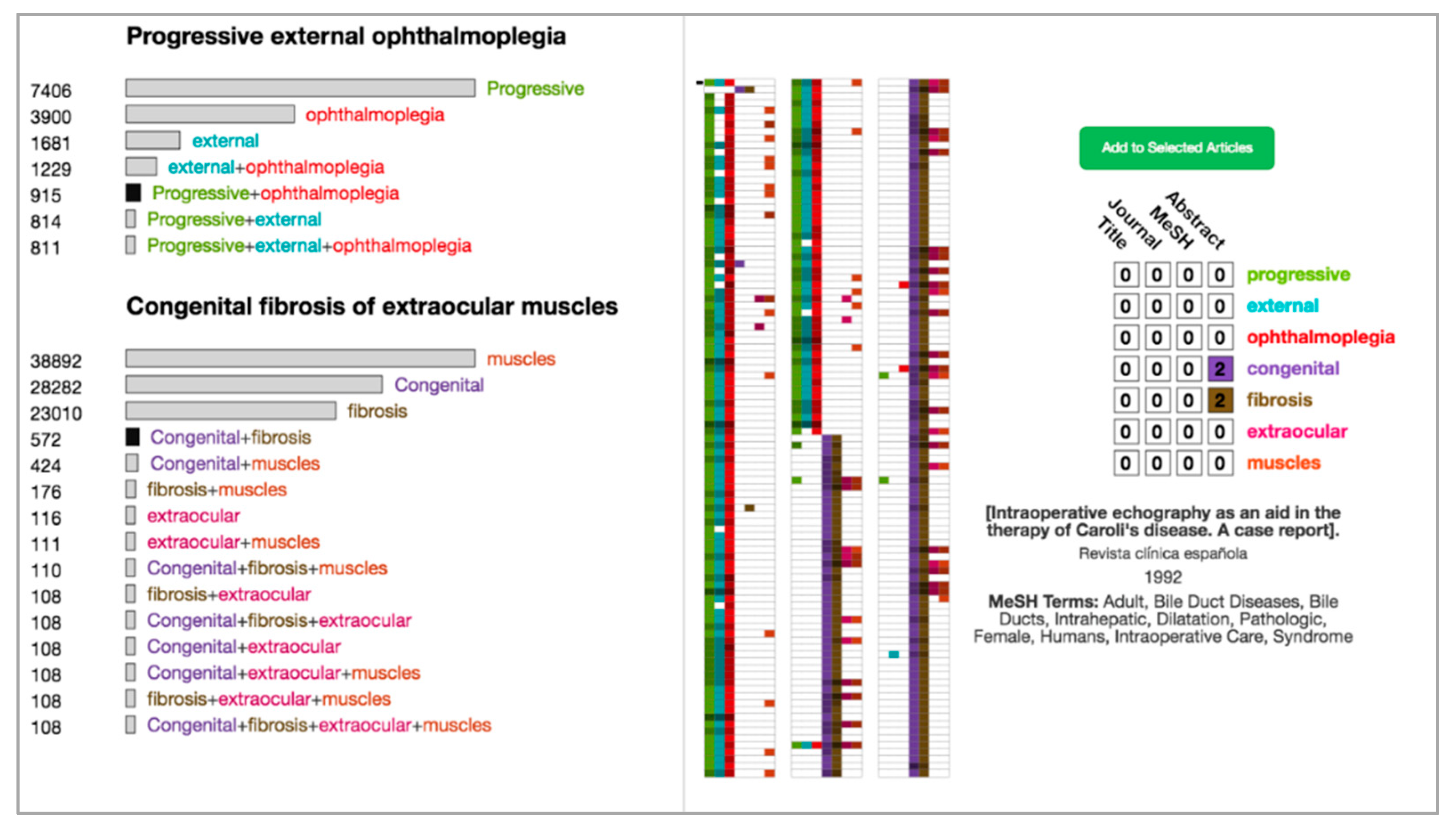

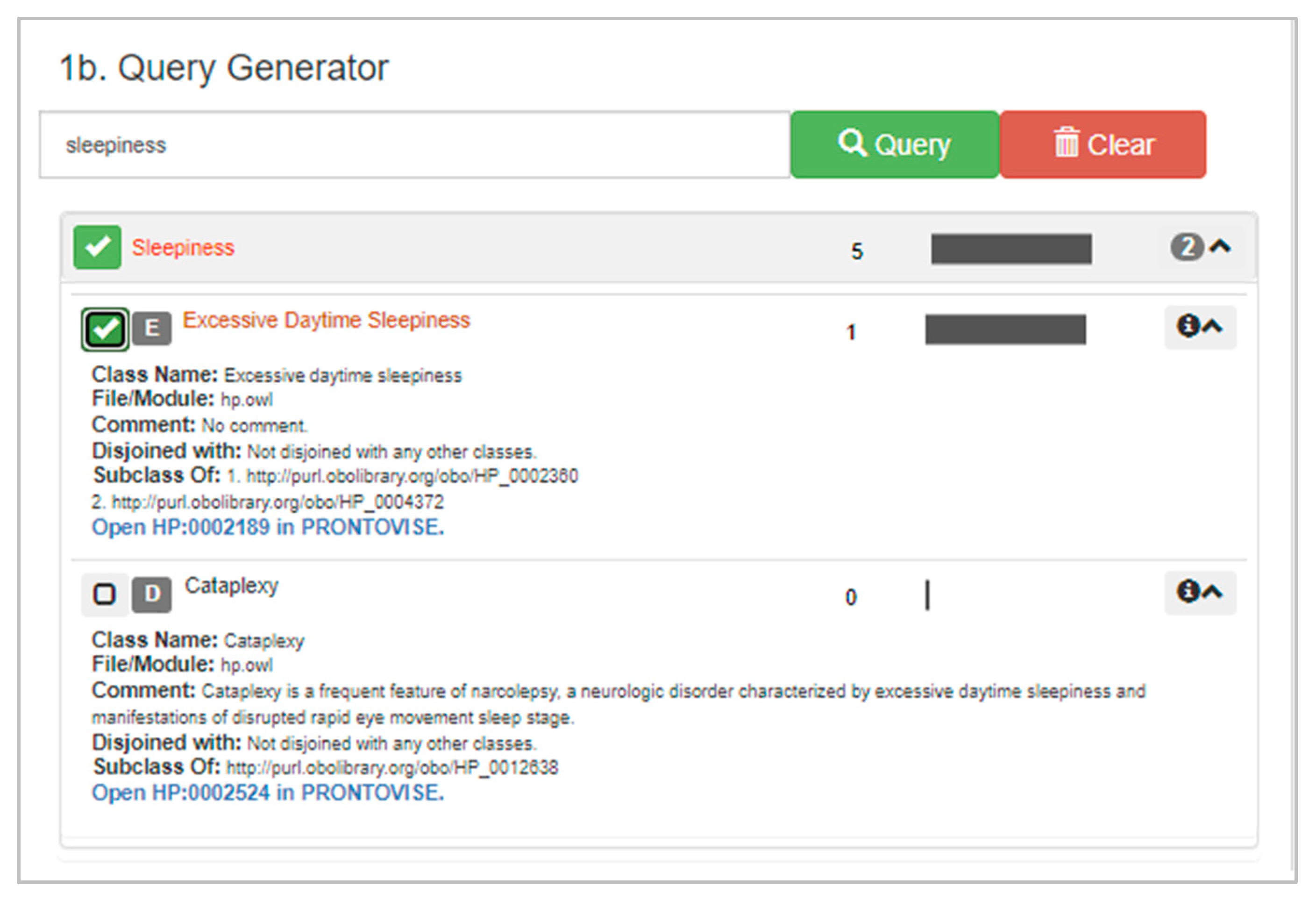

4.4.1. Query Building Subview

4.4.2. Search Subview

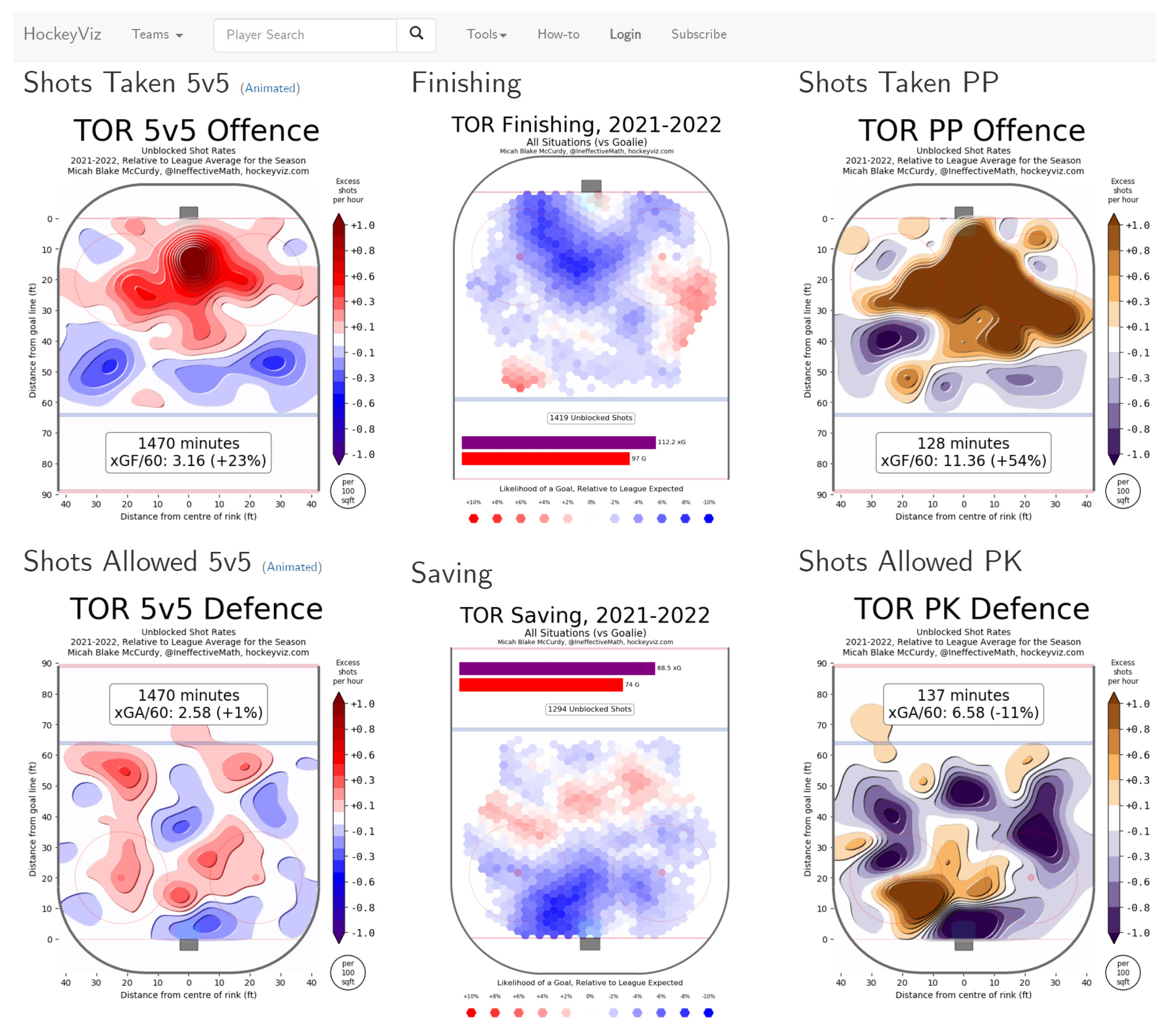

4.4.3. High-Level Triage Subview

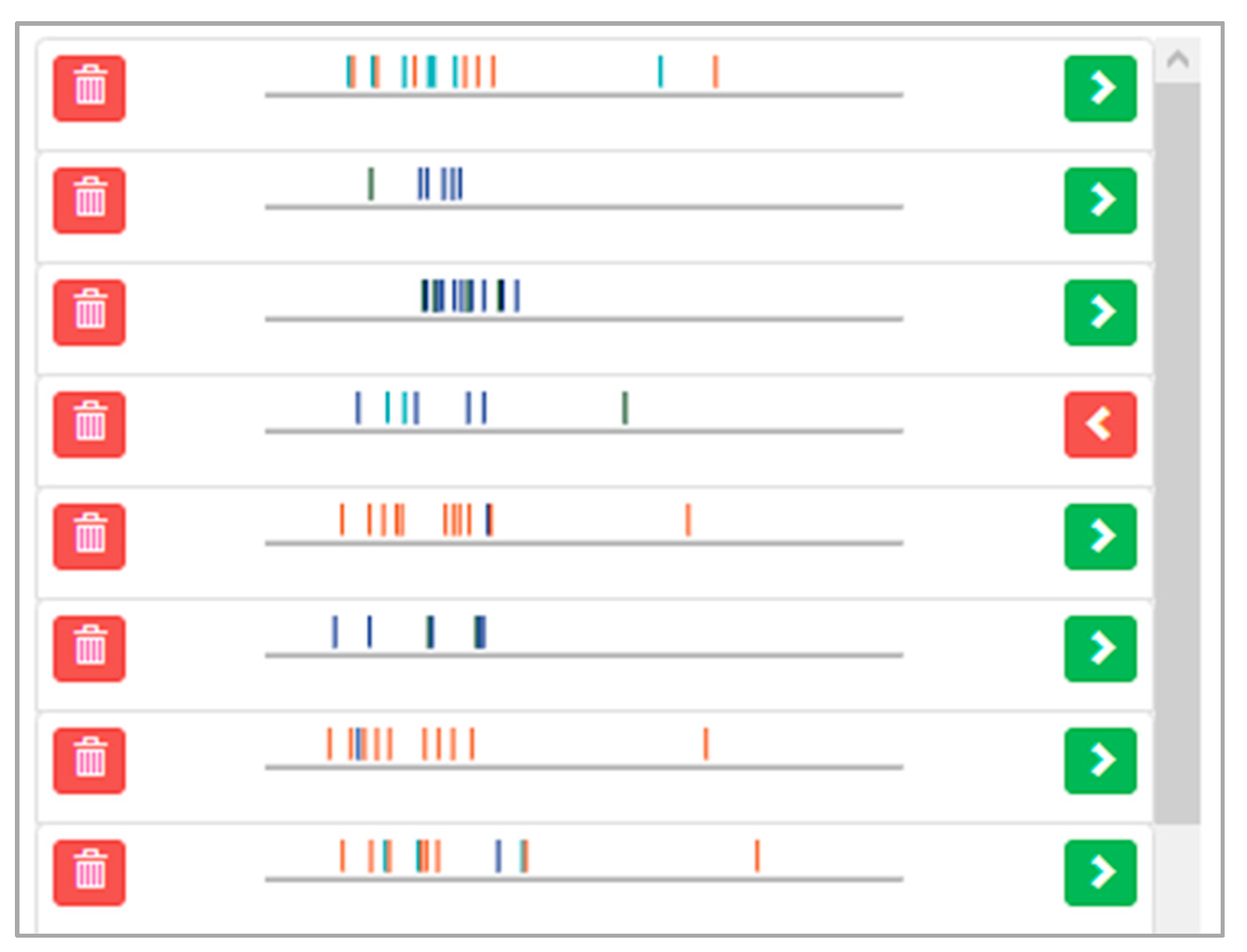

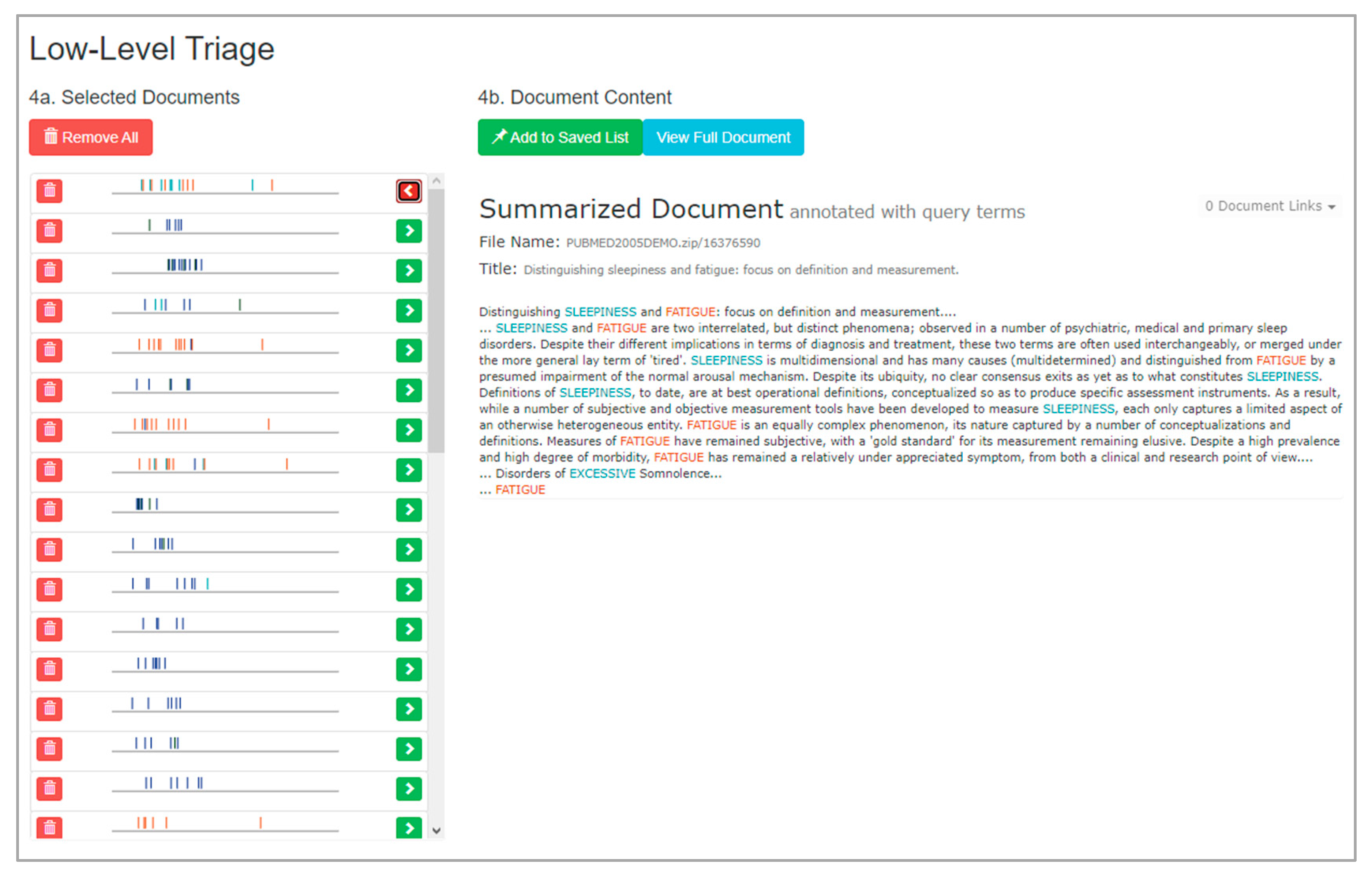

4.4.4. Low-Level Triage Subview

5. Discussion and Summary

5.1. Formative Evaluations of VisualQUEST

5.1.1. Tasks

5.1.2. Formative Evaluations and Findings

- (1) Users were able to learn how to use VisualQUEST without much difficulty (e.g., A, B).

- (2) Users were able to interpret the visual abstractions in VisualQUEST to engage with the ML component of the tool (e.g., C, D).

- (3) Users were able to differentiate between the individual stages of the information-seeking process and used VisualQUEST’s domain-independent, progressively disclosed interface to search and triage MEDLINE’s large document set (e.g., E, F, G).

- (4) Users were able to use the Human Phenotype Ontology to align their vocabulary with the vocabulary of the medical domain, even though they were not initially familiar with the ontology’s domain or its structure and content (e.g., H, I, J).

- (5) Users felt that mediating ontologies make search tasks more manageable and easier, and that not having them would negatively affect their task performance (e.g., H, I, J).

5.2. Limitations

5.3. Future Work

5.4. Summary

- Users are able to transfer their knowledge of traditional interfaces to use VisualQUEST.

- Users are able to interpret VisualQUEST’s abstract representations to use its ML component more effectively, as compared to traditional “black box” approaches.

- Users are able to differentiate between the individual stages of their information-seeking process and use VisualQUEST’s domain-independent, progressively disclosed interface to search and triage large document sets.

- Users are able to use ontology files to align their vocabulary with the domain, even when they are not initially familiar with the ontology’s domain, its structure, or its content.

- Users feel that mediating ontologies make search tasks more manageable and easier.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Search Categories | Keywords | Metadata Screening | Abstract Screening |
|---|---|---|---|
| Information-seeking process | Information seeking model, information seeking process, and information seeking stages. | Google Scholar: 95; IEEE Xplore: 60; Total: 155 | Google Scholar: 18; IEEE Xplore: 4; Total: 22 |
| Information search interface | Information search interface, search interface, and search interface design. | Google Scholar: 90; IEEE Xplore: 27; Total: 117 | Google Scholar: 10; IEEE Xplore: 1; Total: 11 |
| Information triage interface | Information triage, information triage interface, document triage, and triage interface design. | Google Scholar: 38; IEEE Xplore: 3; Total: 41 | Google Scholar: 6; IEEE Xplore: 0; Total: 6 |
| Ontology use within user-facing information-seeking interfaces | Ontology interface, ontology integration, ontology-based interface, and user-facing interfaces with ontologies. | Google Scholar: 214; IEEE Xplore: 12; Total: 226 | Google Scholar: 2; IEEE Xplore: 0; Total: 2 |

| Stage | Associated Task | Alignment with Existing Models | Functional Descriptions |
|---|---|---|---|
| Query building | Information search | Pre-focus (initiation, selection, exploration) | Users communicate their information-seeking objectives via the tool’s interface. |
| Search | Information search | Focus formulation (formulation) | Users specify the formulation of their search and, when satisfied, initiate the performance of computational search. |
| High-level triage | Information triage | Low-specificity post-formulation (collection and presentation) | Users encounter sets of similar information entities generated from computational search, make initial high-level assessments of general alignment with information-seeking objectives, and direct further triaging encounters. |
| Low-level triage | Information triage | High-specificity post-formulation (collection and presentation) | Users encounter individual information entities previously encountered in high-level triage to perform final, low-level assessments of relevance to information-seeking objectives. |
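The four stages in the table above lend themselves to a small state model in which only the active stage is fully disclosed. The sketch below is one possible reading, not VisualQUEST's implementation: the `Workflow` class, the "full / summary / hidden" disclosure levels, and the idea of keeping a one-line summary of each completed stage are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

STAGES = ["Query building", "Search", "High-level triage", "Low-level triage"]


@dataclass
class Workflow:
    """Tracks the active stage and decides how much of each stage to disclose."""

    active: int = 0
    summaries: dict = field(default_factory=dict)  # stage name -> short summary

    def advance(self, summary):
        """Record a short summary of the active stage, then move to the next one."""
        self.summaries[STAGES[self.active]] = summary
        if self.active < len(STAGES) - 1:
            self.active += 1

    def view(self):
        """Active stage in full, completed stages pruned to a summary, later ones hidden."""
        rows = []
        for i, stage in enumerate(STAGES):
            if i == self.active:
                rows.append((stage, "full"))
            elif i < self.active:
                rows.append((stage, f"summary: {self.summaries[stage]}"))
            else:
                rows.append((stage, "hidden"))
        return rows


wf = Workflow()
wf.advance("terms: seizure, hearing")  # finish query building, enter search
layout = wf.view()
```

After one `advance`, `layout` shows query building reduced to its summary, search in full, and both triage stages still hidden.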

| # | Design Criteria | Integration | Potential Uses of Ontologies within User-Facing Interfaces |
|---|---|---|---|
| 1 | Use progressive disclosure when sequencing the stages of the information-seeking process. | All stages | Ontology entities and relations can be consistent and transparent guideposts between stages, particularly for non-active stages, which must be pruned of unnecessary elements. |
| 2 | Attune users to the characteristics and domain of the document set before beginning search formulation. | Query building | Ontology entities and relations can promote the characteristics and domain of document sets. |
| 3 | Be cognizant of users’ domain expertise. | Query building | Ontology entities and relations can provide a bridge between task vocabularies and the common vocabularies of non-expert users, as well as previously formed domain vocabularies of expert users. |
| 4 | Create search formulation and refinement environments supplemented by query building. | Search | Ontology entities and relations can be useful within interface elements that suggest expansions and refinements to users’ search formulations. |
| 5 | Leverage sensitivity encoding when previewing the document set mappings of search formulations. | Search | Ontology entities and relations can be useful within sensitivity-encoded displays, which can suggest refinement opportunities for re-aligning users’ search formulations with the document set being searched and with their information-seeking objectives. |
| 6 | Present overview displays which arrange and compare document groupings using shared characteristics. | High-level triage | Ontology entities and relations can be useful in abstraction, such as when locating shared document characteristics and when forming document groupings. |
| 7 | Utilize non-linear inspection flows which support actions for traversing, previewing, contrasting, and judging relevance. | High-level triage | Ontology entities and relations can help users connect to and assess the general characteristics and contents of a document grouping, allowing them to inspect, assess, and judge the relevance of multiple documents at a time. |
| 8 | Offer document-level displays that allow users to apply domain expertise during relevance decision making. | Low-level triage | Ontology entities and relations can be useful for directing summation and annotation actions, as well as for providing familiar cues for interactions like sorting and relevance judgment. |
| 9 | Persist relevance decision-making results externally to allow for repeat information-seeking sequences. | Low-level triage | Ontology entities and relations can be useful for indexing document selections as well as for recordkeeping users’ prior search and triage sequences. |
| 10 | Allow users to encounter search results without a demand for immediate appraisal. | All triage | Ontology entities and relations can help direct search formulation previews and the results of a full mapping of the document set, allowing users to quickly compare their predictions against search results. |
| 11 | Promote positive feedback over negative feedback. | All stages | Ontology entities and relations can provide familiar cues to direct positive feedback interactions within information-seeking sequences. |
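Criterion 5 (sensitivity encoding) can be illustrated with a preview that tells users what each candidate refinement would do before they commit to it. The sketch below is a simplified assumption of how such a cue could be computed: it treats a search formulation as a plain AND over single-word terms, and the function names and toy documents are invented for illustration.

```python
import re


def matches(terms, text):
    """True when every term occurs in the text (a simple AND formulation)."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return all(term in words for term in terms)


def preview(current_terms, candidate_terms, documents):
    """For each candidate term, report how many documents would match if it were added."""
    baseline = sum(matches(current_terms, d) for d in documents)
    cues = {}
    for candidate in candidate_terms:
        count = sum(matches(current_terms + [candidate], d) for d in documents)
        cues[candidate] = {"matches": count, "change": count - baseline}
    return baseline, cues


# Toy document set (invented for illustration).
documents = [
    "Seizure onset in infancy",
    "Febrile seizure and hearing impairment",
    "Hearing loss and delayed speech",
]

baseline, cues = preview(["seizure"], ["hearing", "infancy", "ataxia"], documents)
```

An interface could render `cues` next to each suggested term, for example as a small bar or count, so that a refinement that would empty the result set (here, "ataxia") is visible before it is applied.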

| Task | Target Stage | Task Description |
|---|---|---|
| T1 | Query building | Consider two terms and contrast their rate of occurrence within the document set. |
| T2 | Query building | Consider a term and determine its alignment with a set of provided definitions. |
| T3 | Search | Consider how a provided set of terms aligns with the document set, both individually and in combinations. |
| T4 | High-level triage | Without opening a specific document, predict its alignment to a provided set of terms. |
| T5 | High-level triage | Without opening a specific pair of documents, compare and predict which of them would contain a higher rate of occurrence of a specific term. |
| T6 | Low-level triage | Given a specific document, count and order the rate of occurrences of a provided set of terms within that document. |
| T7 | Multi-stage | Given a domain research question, using all stages and available functionalities of the interface, produce five relevant documents from the document set. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Demelo, J.; Sedig, K. Interfaces for Searching and Triaging Large Document Sets: An Ontology-Supported Visual Analytics Approach. Information 2022, 13, 8. https://doi.org/10.3390/info13010008
