Deep Hybrid Network for Land Cover Semantic Segmentation in High-Spatial Resolution Satellite Images

Abstract

1. Introduction

- Single-scale problem: Current state-of-the-art FCN networks [23,24,25,26,27,28] operate at a single scale and cannot exploit multi-scale information, which results in the loss of valuable information. High-resolution satellite images generally contain a wide variety of objects with different aspect ratios and scales. Furthermore, satellite images of land cover often contain irregular regions, such as agricultural areas, forests, and water. Obtaining a precise and rich semantic map from land cover remote sensing images therefore requires multi-scale contextual information, which helps discriminate targets that look similar but belong to distinct semantic classes.

- Large number of parameters: FCN-based semantic segmentation models have a large number of trainable parameters, which imposes computation and memory constraints.

- Long training time: A large number of redundant convolutional layers causes vanishing-gradient problems and lengthens training [29].

- We design an efficient hybrid network for land cover classification in high-resolution satellite images by carefully integrating two networks.

- The proposed network learns low-level features and high-level contexts in an efficient manner for improved land cover segmentation in satellite images.

- The network is trained end to end, improves the flow of information and parameters, and avoids the problem of a long training time.

- We evaluate the proposed framework on a publicly available benchmark dataset. The experimental results show that it outperforms other state-of-the-art methods.

2. Related Work

3. Methodology

3.1. Loss Function, Training and Testing Strategies

3.1.1. Loss Function

3.1.2. Training Scheme

3.1.3. Testing Scheme

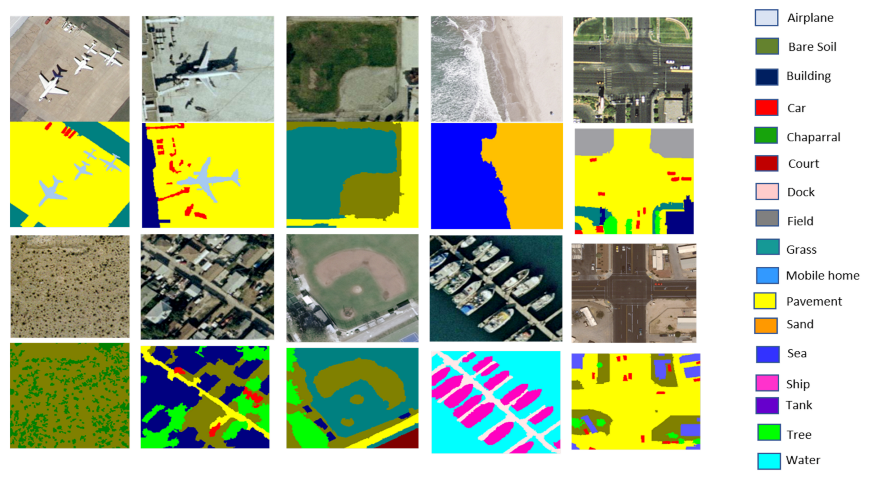

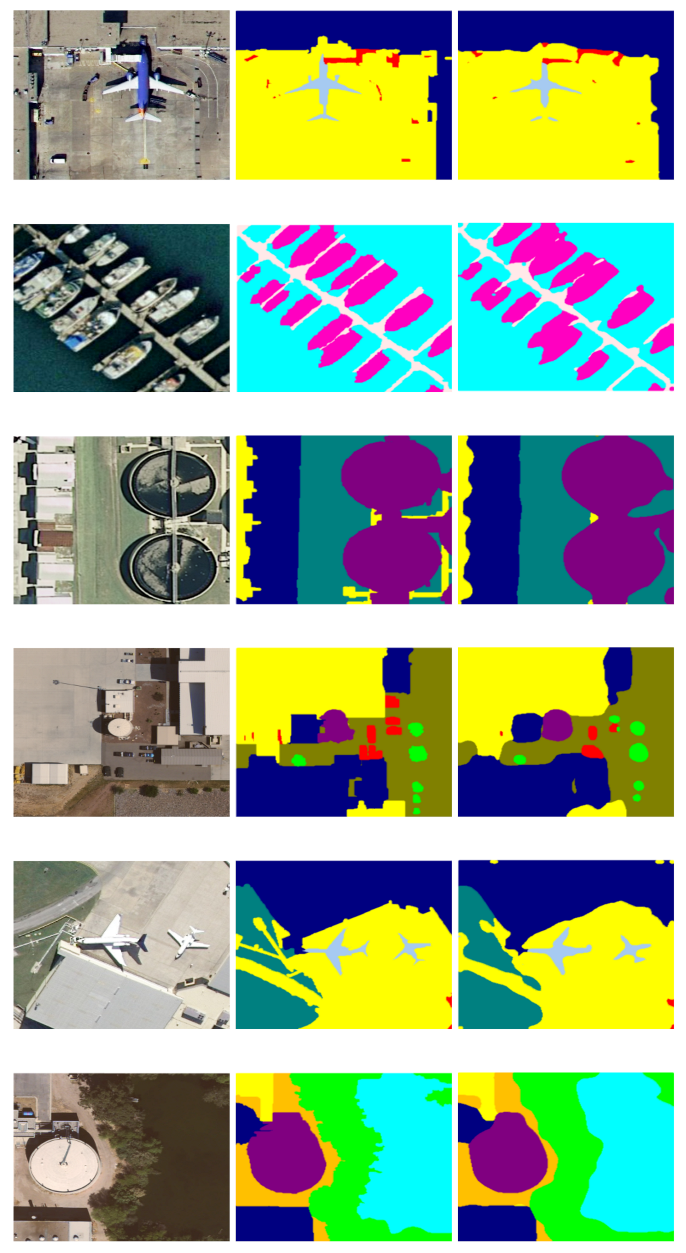

4. Experiment Results

4.1. Hand-Crafted Feature Models

4.2. Deep Learning Models

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Mboga, N.; Georganos, S.; Grippa, T.; Lennert, M.; Vanhuysse, S.; Wolff, E. Fully convolutional networks and geographic object-based image analysis for the classification of VHR imagery. Remote Sens. 2019, 11, 597. [Google Scholar] [CrossRef]

- Seferbekov, S.; Iglovikov, V.; Buslaev, A.; Shvets, A. Feature pyramid network for multi-class land segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 272–275. [Google Scholar]

- Kuo, T.S.; Tseng, K.S.; Yan, J.W.; Liu, Y.C.; Frank Wang, Y.C. Deep aggregation net for land cover classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 252–256. [Google Scholar]

- Rakhlin, A.; Davydow, A.; Nikolenko, S. Land cover classification from satellite imagery with u-net and lovász-softmax loss. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 262–266. [Google Scholar]

- Chiu, M.T.; Xu, X.; Wei, Y.; Huang, Z.; Schwing, A.G.; Brunner, R.; Khachatrian, H.; Karapetyan, H.; Dozier, I.; Rose, G.; et al. Agriculture-vision: A large aerial image database for agricultural pattern analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2828–2838. [Google Scholar]

- Maddikunta, P.K.R.; Hakak, S.; Alazab, M.; Bhattacharya, S.; Gadekallu, T.R.; Khan, W.Z.; Pham, Q.V. Unmanned aerial vehicles in smart agriculture: Applications, requirements, and challenges. IEEE Sens. J. 2021. [Google Scholar] [CrossRef]

- Larsen, S.Ø.; Salberg, A.B.; Eikvil, L. Automatic system for operational traffic monitoring using very-high-resolution satellite imagery. Int. J. Remote Sens. 2013, 34, 4850–4870. [Google Scholar] [CrossRef]

- Drouyer, S.; de Franchis, C. Highway traffic monitoring on medium resolution satellite images. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1228–1231. [Google Scholar]

- Wheeler, B.J.; Karimi, H.A. Deep learning-enabled semantic inference of individual building damage magnitude from satellite images. Algorithms 2020, 13, 195. [Google Scholar] [CrossRef]

- Hu, S.; Lee, G.H. Image-based geo-localization using satellite imagery. Int. J. Comput. Vis. 2020, 128, 1205–1219. [Google Scholar] [CrossRef]

- Sirmacek, B.; Unsalan, C. A probabilistic framework to detect buildings in aerial and satellite images. IEEE Trans. Geosci. Remote Sens. 2010, 49, 211–221. [Google Scholar] [CrossRef]

- Kazemzadeh-Zow, A.; Darvishi Boloorani, A.; Samany, N.N.; Toomanian, A.; Pourahmad, A. Spatiotemporal modelling of urban quality of life (UQoL) using satellite images and GIS. Int. J. Remote Sens. 2018, 39, 6095–6116. [Google Scholar] [CrossRef]

- Su, M.; Guo, R.; Chen, B.; Hong, W.; Wang, J.; Feng, Y.; Xu, B. Sampling Strategy for Detailed Urban Land Use Classification: A Systematic Analysis in Shenzhen. Remote Sens. 2020, 12, 1497. [Google Scholar] [CrossRef]

- MohanRajan, S.N.; Loganathan, A.; Manoharan, P. Survey on Land Use/Land Cover (LU/LC) change analysis in remote sensing and GIS environment: Techniques and Challenges. Environ. Sci. Pollut. Res. 2020, 27, 29900–29926. [Google Scholar] [CrossRef]

- Zhang, C.; Han, Y.; Li, F.; Gao, S.; Song, D.; Zhao, H.; Fan, K.; Zhang, Y. A new CNN-Bayesian model for extracting improved winter wheat spatial distribution from GF-2 imagery. Remote Sens. 2019, 11, 619. [Google Scholar] [CrossRef]

- Basso, B.; Liu, L. Seasonal crop yield forecast: Methods, applications, and accuracies. Adv. Agron. 2019, 154, 201–255. [Google Scholar]

- Davydow, A.; Nikolenko, S. Land cover classification with superpixels and jaccard index post-optimization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 280–284. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241. [Google Scholar]

- Paszke, A.; Chaurasia, A.; Kim, S.; Culurciello, E. Enet: A deep neural network architecture for real-time semantic segmentation. arXiv 2016, arXiv:1606.02147. [Google Scholar]

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–11. [Google Scholar]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef]

- Wang, Y.; Zhou, Q.; Liu, J.; Xiong, J.; Gao, G.; Wu, X.; Latecki, L.J. Lednet: A lightweight encoder-decoder network for real-time semantic segmentation. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1860–1864. [Google Scholar]

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818. [Google Scholar]

- Huang, G.; Sun, Y.; Liu, Z.; Sedra, D.; Weinberger, K.Q. Deep networks with stochastic depth. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 646–661. [Google Scholar]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708. [Google Scholar]

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252. [Google Scholar] [CrossRef]

- Farag, A.; Lu, L.; Roth, H.R.; Liu, J.; Turkbey, E.; Summers, R.M. A bottom-up approach for pancreas segmentation using cascaded superpixels and (deep) image patch labeling. IEEE Trans. Image Process. 2016, 26, 386–399. [Google Scholar] [CrossRef]

- Zhou, Y.; Xie, L.; Fishman, E.K.; Yuille, A.L. Deep supervision for pancreatic cyst segmentation in abdominal CT scans. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Quebec City, QC, Canada, 11–13 September 2017; pp. 222–230. [Google Scholar]

- Roth, H.R.; Lu, L.; Lay, N.; Harrison, A.P.; Farag, A.; Sohn, A.; Summers, R.M. Spatial aggregation of holistically-nested convolutional neural networks for automated pancreas localization and segmentation. Med. Image Anal. 2018, 45, 94–107. [Google Scholar] [CrossRef]

- Çiçek, Ö.; Abdulkadir, A.; Lienkamp, S.S.; Brox, T.; Ronneberger, O. 3D U-Net: Learning dense volumetric segmentation from sparse annotation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Athens, Greece, 17–21 October 2016; pp. 424–432. [Google Scholar]

- Li, X.; Chen, H.; Qi, X.; Dou, Q.; Fu, C.W.; Heng, P.A. H-DenseUNet: Hybrid densely connected UNet for liver and tumor segmentation from CT volumes. IEEE Trans. Med. Imaging 2018, 37, 2663–2674. [Google Scholar] [CrossRef]

- Shah, S.; Ghosh, P.; Davis, L.S.; Goldstein, T. Stacked U-Nets: A no-frills approach to natural image segmentation. arXiv 2018, arXiv:1804.10343. [Google Scholar]

- Lin, G.; Milan, A.; Shen, C.; Reid, I. Refinenet: Multi-path refinement networks for high-resolution semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1925–1934. [Google Scholar]

- Pohlen, T.; Hermans, A.; Mathias, M.; Leibe, B. Full-resolution residual networks for semantic segmentation in street scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4151–4160. [Google Scholar]

- Yang, T.; Wu, Y.; Zhao, J.; Guan, L. Semantic segmentation via highly fused convolutional network with multiple soft cost functions. Cogn. Syst. Res. 2019, 53, 20–30. [Google Scholar] [CrossRef]

- Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. Learning a discriminative feature network for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1857–1866. [Google Scholar]

- Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587. [Google Scholar]

- Yang, M.; Yu, K.; Zhang, C.; Li, Z.; Yang, K. Denseaspp for semantic segmentation in street scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3684–3692. [Google Scholar]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848. [Google Scholar] [CrossRef]

- Liu, Q.; Kampffmeyer, M.; Jenssen, R.; Salberg, A.B. Dense dilated convolutions’ merging network for land cover classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 6309–6320. [Google Scholar] [CrossRef]

- Kampffmeyer, M.; Salberg, A.B.; Jenssen, R. Urban land cover classification with missing data modalities using deep convolutional neural networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1758–1768. [Google Scholar] [CrossRef]

- Pascual, G.; Seguí, S.; Vitria, J. Uncertainty gated network for land cover segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 276–279. [Google Scholar]

- Tian, C.; Li, C.; Shi, J. Dense fusion classmate network for land cover classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 192–196. [Google Scholar]

- Demir, I.; Koperski, K.; Lindenbaum, D.; Pang, G.; Huang, J.; Basu, S.; Hughes, F.; Tuia, D.; Raskar, R. Deepglobe 2018: A challenge to parse the earth through satellite images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 172–181. [Google Scholar]

- Garipov, T.; Izmailov, P.; Podoprikhin, D.; Vetrov, D.; Wilson, A.G. Loss surfaces, mode connectivity, and fast ensembling of dnns. arXiv 2018, arXiv:1802.10026. [Google Scholar]

- Shao, Z.; Yang, K.; Zhou, W. Performance evaluation of single-label and multi-label remote sensing image retrieval using a dense labeling dataset. Remote Sens. 2018, 10, 964. [Google Scholar] [CrossRef]

- Yang, Y.; Newsam, S. Geographic image retrieval using local invariant features. IEEE Trans. Geosci. Remote Sens. 2012, 51, 818–832. [Google Scholar] [CrossRef]

- Ahonen, T.; Hadid, A.; Pietikäinen, M. Face recognition with local binary patterns. In Proceedings of the European Conference on Computer Vision, Prague, Czech Republic, 11–14 May 2004; pp. 469–481. [Google Scholar]

- Mehrotra, R.; Namuduri, K.R.; Ranganathan, N. Gabor filter-based edge detection. Pattern Recognit. 1992, 25, 1479–1494. [Google Scholar] [CrossRef]

- Oliva, A.; Torralba, A. Modeling the shape of the scene: A holistic representation of the spatial envelope. Int. J. Comput. Vis. 2001, 42, 145–175. [Google Scholar] [CrossRef]

- Sivic, J.; Zisserman, A. Video Google: A text retrieval approach to object matching in videos. In Proceedings of the IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; Volume 3, p. 1470. [Google Scholar]

- Idrissa, M.; Acheroy, M. Texture classification using Gabor filters. Pattern Recognit. Lett. 2002, 23, 1095–1102. [Google Scholar] [CrossRef]

- Li, R.; Zheng, S.; Duan, C. Land cover classification from remote sensing images based on multi-scale fully convolutional network. arXiv 2020, arXiv:2008.00168. [Google Scholar]

- Jégou, S.; Drozdzal, M.; Vazquez, D.; Romero, A.; Bengio, Y. The one hundred layers tiramisu: Fully convolutional densenets for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 11–19. [Google Scholar]

- Ji, S.; Zhang, Z.; Zhang, C.; Wei, S.; Lu, M.; Duan, Y. Learning discriminative spatiotemporal features for precise crop classification from multi-temporal satellite images. Int. J. Remote Sens. 2020, 41, 3162–3174. [Google Scholar] [CrossRef]

- Gu, Z.; Cheng, J.; Fu, H.; Zhou, K.; Hao, H.; Zhao, Y.; Zhang, T.; Gao, S.; Liu, J. Ce-net: Context encoder network for 2d medical image segmentation. IEEE Trans. Med. Imaging 2019, 38, 2281–2292. [Google Scholar] [CrossRef]

- Kim, J.H.; Lee, H.; Hong, S.J.; Kim, S.; Park, J.; Hwang, J.Y.; Choi, J.P. Objects segmentation from high-resolution aerial images using U-Net with pyramid pooling layers. IEEE Geosci. Remote Sens. Lett. 2018, 16, 115–119. [Google Scholar] [CrossRef]

- Hoang, H.H.; Trinh, H.H. Improvement for Convolutional Neural Networks in Image Classification Using Long Skip Connection. Appl. Sci. 2021, 11, 2092. [Google Scholar] [CrossRef]

| Layer | Operation | Kernel Size | # of Channels | Stride | Feature Size |
|---|---|---|---|---|---|
| Input | - | - | - | - | 256 × 256 |
| Encoder Part | | | | | |
| Convolution | Conv | 7 × 7 | 96 | 2 | 128 × 128 |
| Pooling | Max pooling | 3 × 3 | - | 2 | 64 × 64 |
| Denseblock1 × 6 | Conv | 1 × 1 | 192 | 1 | 64 × 64 |
| | Conv | 3 × 3 | 48 | 1 | 64 × 64 |
| Transition Layer1 | Conv | 1 × 1 | 48 | 1 | 64 × 64 |
| | Avg Pooling | 2 × 2 | - | 2 | 32 × 32 |
| Denseblock2 × 12 | Conv | 1 × 1 | 192 | 1 | 32 × 32 |
| | Conv | 3 × 3 | 48 | 1 | 32 × 32 |
| Transition Layer2 | Conv | 1 × 1 | 48 | 1 | 32 × 32 |
| | Avg Pooling | 2 × 2 | - | 2 | 16 × 16 |
| Denseblock3 × 48 | Conv | 1 × 1 | 192 | 1 | 16 × 16 |
| | Conv | 3 × 3 | 48 | 1 | 16 × 16 |
| Decoder Part | | | | | |
| Transition Layer3 | Conv | 1 × 1 | 48 | 1 | 16 × 16 |
| | Avg Pooling | 2 × 2 | - | 2 | 8 × 8 |
| Denseblock4 × 32 | Conv | 1 × 1 | 192 | 1 | 8 × 8 |
| | Conv | 3 × 3 | 48 | 1 | 8 × 8 |
| Up sampling layer 1 | D-conv | 2 × 2 | - | - | 16 × 16 |
| | Conv | 3 × 3 | 768 | 1 | 16 × 16 |
| Up sampling layer 2 | D-conv | 2 × 2 | - | - | 32 × 32 |
| | Conv | 3 × 3 | 384 | 1 | 32 × 32 |
| Up sampling layer 3 | D-conv | 2 × 2 | - | - | 64 × 64 |
| | Conv | 3 × 3 | 384 | 1 | 64 × 64 |
| Up sampling layer 4 | D-conv | 2 × 2 | - | - | 128 × 128 |
| | Conv | 3 × 3 | 96 | 1 | 128 × 128 |
| Up sampling layer 5 | D-conv | 2 × 2 | - | - | 256 × 256 |
| | Conv | 3 × 3 | 96 | 1 | 256 × 256 |
| Convolution | Conv | 1 × 1 | 17 | 1 | 256 × 256 |
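The Feature Size column of the architecture table can be reproduced by following the strides stage by stage. The sketch below is a minimal illustration in plain Python, not the authors' implementation: it assumes every stride-2 operation halves the side length, every 2 × 2 D-conv doubles it, and all other layers preserve it. The stage names and the numbering of the five upsampling stages are chosen here for convenience.

```python
from typing import List, Tuple

# (stage name, operation) pairs read off the architecture table.
# "down" = stride-2 conv/pooling, "same" = stride-1 layers, "up" = 2x2 D-conv.
# Names and numbering are illustrative labels, not identifiers from the paper.
STAGES: List[Tuple[str, str]] = [
    ("Convolution 7x7", "down"),
    ("Max pooling", "down"),
    ("Denseblock1", "same"),
    ("Transition 1", "down"),
    ("Denseblock2", "same"),
    ("Transition 2", "down"),
    ("Denseblock3", "same"),
    ("Transition 3", "down"),
    ("Denseblock4", "same"),
    ("Up sampling 1", "up"),
    ("Up sampling 2", "up"),
    ("Up sampling 3", "up"),
    ("Up sampling 4", "up"),
    ("Up sampling 5", "up"),
    ("Convolution 1x1", "same"),
]


def trace_feature_sizes(input_size: int) -> List[Tuple[str, int]]:
    """Return the square feature-map side length after each stage."""
    size = input_size
    trace = []
    for name, op in STAGES:
        if op == "down":
            size //= 2  # stride 2 halves the spatial resolution
        elif op == "up":
            size *= 2   # 2x2 deconvolution doubles it
        trace.append((name, size))
    return trace


for stage, side in trace_feature_sizes(256):
    print(f"{stage:<16} {side} x {side}")
```

Under these assumptions the encoder reduces a 256 × 256 input to 8 × 8 after five halvings, and the five upsampling stages restore the full resolution before the final 1 × 1 convolution produces one channel per class.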

| Method | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Local Binary Pattern | 49.04 | 44.71 | 42.83 | 43.75 |
| Gabor Filter | 51.29 | 49.75 | 43.29 | 46.30 |
| GIST Features | 39.26 | 37.19 | 39.62 | 38.37 |
| Bag-of-Visual-Words | 54.54 | 45.23 | 51.34 | 48.09 |
| Color Histogram | 48.95 | 40.33 | 42.39 | 41.33 |
| Proposed | 77.67 | 75.20 | 70.54 | 72.80 |
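The F1-Score column in this comparison is consistent with the harmonic mean of precision and recall, F1 = 2PR / (P + R). The following Python check is a small sketch that assumes this standard definition (it is not spelled out alongside the table) and recomputes the score for three rows copied from the table; the function and variable names are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table rows.
ROWS = {
    "Local Binary Pattern": (44.71, 42.83, 43.75),
    "Gabor Filter": (49.75, 43.29, 46.30),
    "Proposed": (75.20, 70.54, 72.80),
}

for method, (p, r, reported) in ROWS.items():
    computed = f1_score(p, r)
    print(f"{method}: computed {computed:.2f}, reported {reported:.2f}")
```

Because F1 is a harmonic mean, it is pulled toward the smaller of the two values, so a method cannot score well by maximizing only precision or only recall.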

| Method | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| U-Net | 65.73 | 64.27 | 57.24 | 60.55 |
| U-Net++ | 70.29 | 61.75 | 70.25 | 65.73 |
| SegNet | 63.24 | 65.46 | 57.27 | 61.09 |
| MS-FCN | 71.52 | 68.95 | 65.29 | 67.07 |
| CE-Net | 69.79 | 59.29 | 64.95 | 61.99 |
| U-NetPPL | 68.55 | 55.67 | 66.38 | 60.56 |
| FGC | 63.29 | 52.37 | 65.43 | 58.18 |
| Tiramisu | 69.42 | 60.89 | 62.28 | 61.58 |
| DenseNet | 57.12 | 49.65 | 55.24 | 52.30 |
| Proposed | 77.67 | 75.20 | 70.54 | 72.80 |

| Class | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Airplane | 89.56 | 86.24 | 82.76 | 84.46 |
| Bare soil | 78.94 | 79.14 | 69.45 | 73.98 |
| Building | 83.43 | 78.62 | 81.02 | 79.80 |
| Car | 79.38 | 70.92 | 79.79 | 75.09 |
| Chaparral | 65.95 | 71.69 | 52.76 | 60.79 |
| Court | 78.16 | 77.76 | 69.19 | 73.23 |
| Dock | 82.67 | 83.48 | 72.79 | 77.77 |
| Field | 73.25 | 78.94 | 55.72 | 65.33 |
| Grass | 88.47 | 84.64 | 82.79 | 83.70 |
| Mobile home | 67.73 | 67.65 | 58.76 | 62.89 |
| Pavement | 82.19 | 79.23 | 75.64 | 77.39 |
| Sand | 76.92 | 68.47 | 75.08 | 71.62 |
| Sea | 74.02 | 73.97 | 65.48 | 69.47 |
| Ship | 87.54 | 92.17 | 75.46 | 82.98 |
| Tank | 73.32 | 64.39 | 67.42 | 65.87 |
| Tree | 64.74 | 56.75 | 60.37 | 58.50 |
| Water | 74.25 | 64.38 | 74.76 | 69.18 |
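The text here does not say how the overall scores reported for the proposed network (77.67 accuracy, 75.20 precision, 70.54 recall) are aggregated from the per-class results. One reading that agrees with the numbers to within rounding is an unweighted (macro) average over the 17 classes. The Python sketch below tests that assumption on the values copied from the per-class table; the names `PER_CLASS` and `macro_average` are illustrative.

```python
from statistics import mean
from typing import Dict, Tuple

# (accuracy, precision, recall) per class, copied from the per-class table.
PER_CLASS: Dict[str, Tuple[float, float, float]] = {
    "Airplane": (89.56, 86.24, 82.76),
    "Bare soil": (78.94, 79.14, 69.45),
    "Building": (83.43, 78.62, 81.02),
    "Car": (79.38, 70.92, 79.79),
    "Chaparral": (65.95, 71.69, 52.76),
    "Court": (78.16, 77.76, 69.19),
    "Dock": (82.67, 83.48, 72.79),
    "Field": (73.25, 78.94, 55.72),
    "Grass": (88.47, 84.64, 82.79),
    "Mobile home": (67.73, 67.65, 58.76),
    "Pavement": (82.19, 79.23, 75.64),
    "Sand": (76.92, 68.47, 75.08),
    "Sea": (74.02, 73.97, 65.48),
    "Ship": (87.54, 92.17, 75.46),
    "Tank": (73.32, 64.39, 67.42),
    "Tree": (64.74, 56.75, 60.37),
    "Water": (74.25, 64.38, 74.76),
}


def macro_average(per_class: Dict[str, Tuple[float, float, float]]) -> Tuple[float, ...]:
    """Unweighted mean of each metric column across all classes."""
    columns = zip(*per_class.values())
    return tuple(mean(column) for column in columns)


accuracy, precision, recall = macro_average(PER_CLASS)
print(f"macro accuracy  {accuracy:.2f}")
print(f"macro precision {precision:.2f}")
print(f"macro recall    {recall:.2f}")
```

A macro average weights every class equally, so weaker classes such as Tree and Chaparral lower the overall score as much as a frequent class would, regardless of how many pixels each class covers.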

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Khan, S.D.; Alarabi, L.; Basalamah, S. Deep Hybrid Network for Land Cover Semantic Segmentation in High-Spatial Resolution Satellite Images. Information 2021, 12, 230. https://doi.org/10.3390/info12060230
