Unpaired Underwater Image Enhancement Based on CycleGAN

Abstract

1. Introduction

- We introduce a content loss regularizer into the generator in CycleGAN, which keeps more detailed information in the corresponding generated clear image. This strategy is different from CartoonGAN [24];

- We add a blur-promoting adversarial loss regularizer into the discriminator in CycleGAN, which reduces the effects of blur and noise and enhances the image clarity;

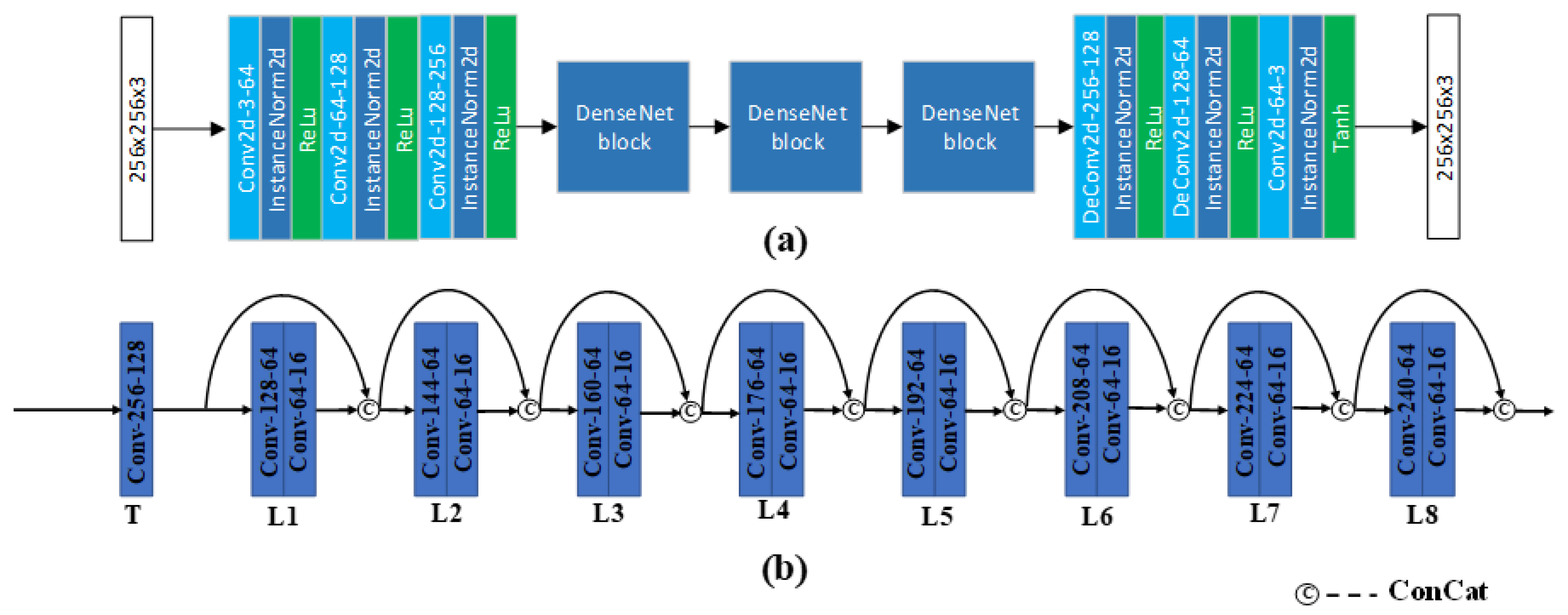

- We exploit the improved DenseNet Block in the generator to strengthen the forward transfer of feature maps, so that every feature map can be utilized;

- We test our proposed UW-CycleGAN on different types of underwater images and obtain a satisfactory performance.

2. Underwater Image Enhancement

3. Underwater CycleGAN (UW-CycleGAN)

- (1) The mapping function G generates the clear image G(x) from the degraded image x.

- (2) Another mapping function F reconstructs the degraded image x by F(G(x)).

- (3) The discriminator D_Y judges whether the generated image G(x) and the clear image y derive from the same distribution.
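The first two steps above (G maps a degraded image to a clear one, F maps it back) can be illustrated with a minimal NumPy sketch. The toy `G` and `F` below are hypothetical stand-ins for the trained generators, and the L1 reconstruction error follows the usual CycleGAN cycle-consistency term; it is not taken from this paper's equations, which are not reproduced in this section.

```python
import numpy as np

def G(x):
    """Toy stand-in for the degraded -> clear generator (hypothetical)."""
    return np.clip(x * 1.25, 0.0, 1.0)

def F(y):
    """Toy stand-in for the clear -> degraded generator (hypothetical)."""
    return y / 1.25

def cycle_consistency_loss(x, G, F):
    """Mean absolute error between x and its reconstruction F(G(x))."""
    return float(np.mean(np.abs(F(G(x)) - x)))

rng = np.random.default_rng(0)
# Pixel values stay below 0.8 so that the toy G never clips.
x = rng.uniform(0.0, 0.8, size=(4, 4, 3))
cycle_loss = cycle_consistency_loss(x, G, F)
```

Because the toy `F` exactly inverts the toy `G` on this input, the loss is numerically zero; with real generators it is a quantity that training tries to reduce.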

3.1. Loss Function

3.1.1. Content Loss
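As a rough illustration of what a content loss measures, the sketch below compares feature maps of an input image and a generated image with an L1 distance. The feature extractor `toy_features` (finite differences) is a hypothetical stand-in for the feature maps of a pretrained network, and the exact form used in UW-CycleGAN may differ; this is only one plausible reading.

```python
import numpy as np

def toy_features(img):
    """Hypothetical feature extractor: horizontal and vertical differences.

    Stands in for the feature maps of a pretrained network.
    """
    dx = img[:, 1:] - img[:, :-1]
    dy = img[1:, :] - img[:-1, :]
    # Crop both maps to the common shape (H-1, W-1).
    return dx[:-1, :], dy[:, :-1]

def content_loss(x, g_x, features=toy_features):
    """Mean L1 distance between the features of x and of the generated g_x."""
    pairs = zip(features(x), features(g_x))
    return float(np.mean([np.mean(np.abs(a - b)) for a, b in pairs]))

rng = np.random.default_rng(0)
x = rng.uniform(size=(8, 8))
loss_same = content_loss(x, x)                    # identical structure
loss_shift = content_loss(x, x + 0.3)             # brightness shift only
loss_flat = content_loss(x, np.zeros_like(x))     # all detail removed
```

With these toy features, a pure brightness shift leaves the loss at (numerically) zero while removing all detail gives a positive loss, which is the behaviour one wants from a term meant to preserve detail.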

3.1.2. Blur-Promoting Adversarial Loss
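One way to read a blur-promoting adversarial loss, by analogy with the edge-promoting loss of CartoonGAN [24], is that the discriminator is also shown blurred versions of clear images and is asked to reject them. The sketch below assumes that reading; `box_blur`, `toy_discriminator` and the log-loss form are illustrative assumptions, not the formulation from this paper.

```python
import numpy as np

def box_blur(img, k=3):
    """k x k mean filter with edge padding (stand-in for any blur)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def toy_discriminator(img):
    """Hypothetical D: maps mean gradient magnitude into [0, 1)."""
    gy, gx = np.gradient(img)
    sharpness = np.mean(np.hypot(gx, gy))
    return sharpness / (1.0 + sharpness)

def discriminator_loss(D, y_clear, y_blur, g_x, eps=1e-8):
    """Clear images count as real; blurred and generated images as fake."""
    real_term = np.log(D(y_clear) + eps)
    blur_term = np.log(1.0 - D(y_blur) + eps)
    fake_term = np.log(1.0 - D(g_x) + eps)
    return float(-(real_term + blur_term + fake_term))

rng = np.random.default_rng(0)
y = rng.uniform(size=(16, 16))      # stand-in for a clear image
y_blur = box_blur(y)                # its blurred counterpart
g_x = rng.uniform(size=(16, 16))    # stand-in for a generated image
d_loss = discriminator_loss(toy_discriminator, y, y_blur, g_x)
```

The extra `blur_term` is what distinguishes this from a plain adversarial loss: a discriminator that penalizes blurred inputs pushes the generator away from blurry outputs.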

3.1.3. Full Loss Function

3.2. Network Architectures
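The dense connectivity that the generator borrows from DenseNet [28] can be sketched as follows: every layer receives the concatenation of all earlier feature maps, so no feature map is discarded. This is the standard DenseNet pattern with 1x1 convolutions written as matrix products; the layer sizes are arbitrary and the paper's "improved" block is not reproduced here.

```python
import numpy as np

def dense_block(x, weights):
    """Dense block on a feature map x of shape (H, W, C0).

    weights[i] has shape (C0 + i * growth, growth) and acts as a
    1x1 convolution on the concatenation of all earlier feature maps.
    """
    features = [x]
    for w in weights:
        stacked = np.concatenate(features, axis=-1)  # reuse every earlier map
        new = np.maximum(stacked @ w, 0.0)           # 1x1 conv followed by ReLU
        features.append(new)
    return np.concatenate(features, axis=-1)

rng = np.random.default_rng(0)
c0, growth, n_layers = 3, 4, 3   # illustrative sizes only
weights = [rng.normal(size=(c0 + i * growth, growth)) for i in range(n_layers)]
x = rng.normal(size=(8, 8, c0))
out = dense_block(x, weights)
```

The output has `c0 + n_layers * growth` channels, and its first `c0` channels are the unchanged input, which shows how the input is carried forward to every later layer.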

4. Experiment and Evaluation

4.1. Datasets and Metrics
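Two of the metrics reported in the tables, average gradient (AG) and information entropy (IE), are simple enough to sketch. The definitions below are common textbook forms (finite-difference gradients and Shannon entropy of the 8-bit gray-level histogram) and are assumptions here; the paper's exact definitions may differ in normalization.

```python
import numpy as np

def average_gradient(gray):
    """Mean of sqrt((dx^2 + dy^2) / 2) over forward differences."""
    gray = gray.astype(float)
    dx = gray[:-1, 1:] - gray[:-1, :-1]
    dy = gray[1:, :-1] - gray[:-1, :-1]
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))

def information_entropy(gray_u8):
    """Shannon entropy (in bits) of the gray-level histogram of a uint8 image."""
    hist = np.bincount(gray_u8.ravel(), minlength=256).astype(float)
    p = hist / hist.sum()
    p = p[p > 0]                      # skip empty bins to avoid log2(0)
    return float(-np.sum(p * np.log2(p)))

flat = np.full((4, 4), 7, dtype=np.uint8)                  # no detail at all
two_level = np.array([[0, 255], [0, 255]], dtype=np.uint8)  # one vertical edge

ag_flat = average_gradient(flat)
ie_flat = information_entropy(flat)
ag_edge = average_gradient(two_level)
ie_edge = information_entropy(two_level)
```

A constant image has zero gradient and zero entropy, while the two-level image has a nonzero gradient and an entropy of one bit, since its two gray levels are equally likely.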

4.2. Experimental Assessment

4.3. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Li, C.; Guo, J.; Guo, C.; Cong, R.; Gong, J. A hybrid method for underwater image correction. Pattern Recognit. Lett. 2017, 94, 62–67.

2. Wang, Y.; Song, W.; Fortino, G.; Qi, L.; Zhang, W.; Liotta, A. An Experimental-Based Review of Image Enhancement and Image Restoration Methods for Underwater Imaging. IEEE Access 2019, 7, 140233–140251.

3. Zhang, W.; Dong, L.; Pan, X.; Zou, P.; Qin, L.; Xu, W. A Survey of Restoration and Enhancement for Underwater Images. IEEE Access 2019, 7, 182259–182279.

4. Schettini, R.; Corchs, S. Underwater Image Processing: State of the Art of Restoration and Image Enhancement Methods. EURASIP J. Adv. Signal Process. 2010, 2010, 746052.

5. Weng, C.C.; Chen, H.; Fuh, C.S. A novel automatic white balance method for digital still cameras. In Proceedings of the IEEE International Symposium on Circuits and Systems, Kobe, Japan, 23–26 May 2005.

6. Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; Romeny, B.T.H.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368.

7. Hitam, M.S.; Awalludin, E.A.; Yussof, W.N.J.H.W.; Bachok, Z. Mixture contrast limited adaptive histogram equalization for underwater image enhancement. In Proceedings of the International Conference on Computer Applications Technology, Sousse, Tunisia, 20–22 January 2013.

8. Cheng, C.; Sung, C.; Chang, H. Underwater image restoration by red-dark channel prior and point spread function deconvolution. In Proceedings of the IEEE International Conference on Signal and Image Processing Applications, Kuala Lumpur, Malaysia, 19–21 October 2015.

9. Sun, F.; Zhang, X.; Wang, G. An Approach for Underwater Image Denoising Via Wavelet Decomposition and High-pass Filter. In Proceedings of the International Conference on Intelligent Computation Technology and Automation, Shenzhen, China, 28–29 March 2011.

10. Shahrizan, A.; Ghani, A. Image contrast enhancement using an integration of recursive-overlapped contrast limited adaptive histogram specification and dual-image wavelet fusion for the high visibility of deep underwater image. Ocean Eng. 2018, 162, 224–238.

11. Khan, A.; Ali, S.S.A.; Malik, A.S.; Anwer, A.; Meriaudeau, F. Underwater image enhancement by wavelet based fusion. In Proceedings of the IEEE International Conference on Underwater System Technology: Theory and Applications, Penang, Malaysia, 13–14 December 2016.

12. Sun, J.; Wang, W. Study on Underwater Image Denoising Algorithm Based on Wavelet Transform. J. Phys. Conf. Ser. 2017, 806, 1–10.

13. Vasamsetti, S.; Mittal, N.; Neelapu, B.C.; Sardana, H.K. Wavelet based perspective on variational enhancement technique for underwater imagery. Ocean Eng. 2017, 141, 88–100.

14. Mukherjee, S.; Valenzise, G.; Cheng, I. Potential of deep features for opinion-unaware, distortion-unaware, no-reference image quality assessment. In Smart Multimedia. ICSM 2019; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; pp. 87–95.

15. Wang, Y.; Zhang, J.; Cao, Y.; Wang, Z. A deep CNN method for underwater image enhancement. In Proceedings of the International Conference on Image Processing, Beijing, China, 17–20 September 2017.

16. Anwar, S.; Li, C.; Porikli, F. Deep Underwater Image Enhancement. arXiv 2018, arXiv:1807.03528.

17. Hou, M.; Liu, R.; Fan, X.; Luo, Z. Joint Residual Learning for Underwater Image Enhancement. In Proceedings of the International Conference on Image Processing, Athens, Greece, 7–10 October 2018.

18. Sun, X.; Liu, L.; Li, Q.; Dong, J.; Lima, E.; Yin, R. Deep Pixel to Pixel Network for Underwater Image Enhancement and Restoration. IET Image Process. 2018, 13, 469–474.

19. Uplavikar, P.; Wu, Z.; Wang, Z. All-In-One Underwater Image Enhancement using Domain-Adversarial Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshop, Long Beach, CA, USA, 16–20 June 2019.

20. Fabbri, C.; Islam, M.J.; Sattar, J. Enhancing Underwater Imagery Using Generative Adversarial Networks. In Proceedings of the IEEE International Conference on Robotics and Automation, Brisbane, Australia, 21–25 May 2018.

21. Yang, M.; Hu, K.; Du, Y.; Wei, Z.; Sheng, Z.; Hu, J. Underwater image enhancement based on conditional generative adversarial network. Signal Process. Image Commun. 2020, 81, 115723.

22. Li, H.; Li, J.; Wang, W. A Fusion Adversarial Underwater Image Enhancement Network with a Public Test Dataset. Comput. Sci. 2019, 95.

23. Hu, K.; Zhang, Y.; Weng, C.; Wang, P.; Deng, Z.; Liu, Y. An Underwater Image Enhancement Algorithm Based on Generative Adversarial Network and Natural Image Quality Evaluation Index. J. Mar. Sci. Eng. 2021, 9, 691.

24. Chen, Y.; Lai, Y.; Liu, Y. CartoonGAN: Generative Adversarial Networks for Photo Cartoonization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

25. Chiang, J.Y.; Chen, Y. Underwater Image Enhancement by Wavelength Compensation and Dehazing. IEEE Trans. Image Process. 2012, 21, 1756–1769.

26. Zhu, J.; Park, T.; Isola, P.; Efros, A. Unpaired Image-To-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

27. Huang, G.; Liu, Z.; Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

28. Liu, R.; Fan, X.; Zhu, M.; Hou, M.; Luo, Z. Real-world Underwater Enhancement: Challenges, Benchmarks, and Solutions under Natural Light. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 4861–4875.

29. Hautiere, N.; Tarel, J.P.; Aubert, D.; Dumont, E. Blind contrast enhancement assessment by gradient ratioing at visible edges. Image Anal. Stereol. 2008, 27, 87–95.

30. Gadre, S.R. Information entropy and Thomas-Fermi theory. Phys. Rev. A 1984, 30, 620–621.

31. Panetta, K.; Gao, C.; Agaian, S. Human-Visual-System-Inspired Underwater Image Quality Measures. IEEE J. Ocean. Eng. 2016, 41, 541–551.

32. Pan, P.; Yuan, F.; Cheng, E. Underwater Image De-scattering and Enhancing using Dehazenet and HWD. J. Mar. Sci. Technol. 2018, 26, 531–540.

33. Berman, D.; Treibitz, T.; Avidan, S. Diving into Haze-Lines: Color Restoration of Underwater Images. In Proceedings of the British Machine Vision Conference, London, UK, 4–7 September 2017.

34. Iqbal, K.; Odetayo, M.; James, A.; Salam, R.A.; Talib, A.Z.H. Enhancing the low quality images using Unsupervised Colour Correction Method. In Proceedings of the 2010 IEEE International Conference on Systems, Man and Cybernetics, Istanbul, Turkey, 10–13 October 2010.

35. Islam, M.J.; Xia, Y.; Sattar, J. Fast Underwater Image Enhancement for Improved Visual Perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

| Method | AG ↑ | IE ↓ | UIQM ↑ |
|---|---|---|---|
| Deunderwater | 7.5047 | 7.8178 | 5.1460 |
| HL | 7.3021 | 7.4033 | 4.0719 |
| UCM | 4.9102 | 7.3955 | 3.8221 |
| FUnIE-GAN-UP | 5.9444 | 7.3819 | 4.2130 |
| CartoonGAN | 4.9079 | 7.2567 | 4.4997 |
| CycleGAN | 6.4737 | 7.2785 | 4.8380 |
| UW-CycleGAN | 7.6345 | 7.1824 | 5.1689 |

| Method | AG ↑ | IE ↓ | UIQM ↑ |
|---|---|---|---|
| Deunderwater | 2.4945 | 7.7830 | 1.5500 |
| HL | 2.0565 | 7.4271 | 1.5769 |
| UCM | 2.5489 | 7.2451 | 2.0124 |
| FUnIE-GAN-UP | 2.6014 | 7.3463 | 0.9782 |
| CartoonGAN | 2.7224 | 6.7422 | 2.0883 |
| CycleGAN | 2.9969 | 6.7452 | 2.6075 |
| UW-CycleGAN | 3.1370 | 6.4827 | 2.7497 |

| Method | AG ↑ | IE ↓ | UIQM ↑ |
|---|---|---|---|
| w/o | 7.3567 | 7.3727 | 5.1268 |
| w/o | 7.5490 | 7.2750 | 5.1530 |
| | 6.9984 | 7.2864 | 5.0271 |
| UW-CycleGAN | 7.6345 | 7.1824 | 5.1689 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Du, R.; Li, W.; Chen, S.; Li, C.; Zhang, Y. Unpaired Underwater Image Enhancement Based on CycleGAN. Information 2022, 13, 1. https://doi.org/10.3390/info13010001
