Abstract

Automatic severity assessment and progression prediction can facilitate admission, triage, and referral of COVID-19 patients. This study aims to explore the potential use of lung lesion features in the management of COVID-19, based on the assumption that lesion features may carry important diagnostic and prognostic information for quantifying infection severity and forecasting disease progression. A novel LesionEncoder framework is proposed to detect lesions in chest CT scans and to encode lesion features for automatic severity assessment and progression prediction. The LesionEncoder framework consists of a U-Net module for detecting lesions and extracting features from individual CT slices, and a recurrent neural network (RNN) module for learning the relationship between feature vectors and collectively classifying the sequence of feature vectors. Chest CT scans of two cohorts of COVID-19 patients from two hospitals in China were used for training and testing the proposed framework. When applied to assessing severity, this framework outperformed baseline methods achieving a sensitivity of 0.818, specificity of 0.952, accuracy of 0.940, and AUC of 0.903. It also outperformed the other tested methods in disease progression prediction with a sensitivity of 0.667, specificity of 0.838, accuracy of 0.829, and AUC of 0.736. The LesionEncoder framework demonstrates a strong potential for clinical application in current COVID-19 management, particularly in automatic severity assessment of COVID-19 patients. This framework also has a potential for other lesion-focused medical image analyses.

1. Introduction

The rapid escalation in the number of COVID-19 infections exceeded the capacity of healthcare systems to respond in many nations, and consequently worsened patient outcomes [1]. In such circumstances, it is of paramount importance to develop efficient diagnostic and prognostic models for COVID-19, so that patient care can be optimized.

Chest CT scans have been found to provide important diagnostic and prognostic information for COVID-19 [2,3,4,5,6,7]. Although there is still debate on the use of chest CT in screening and diagnosing COVID-19 cases [8], a surge of computational methods for chest CT have been developed to support medical decision making during the current pandemic [9,10,11,12,13,14,15]. Study population, model performance, and reporting quality vary substantially between studies. An in-depth comparison of these studies can be found in a recent systematic review [16].

In addition to diagnostic and screening models, several prediction models have been proposed based on an assessment of lung lesions. There are three typical classes of lesions that can be detected in COVID-19 chest CT scans: ground glass opacity (GGO), consolidation, and pleural effusion [3,4]. Imaging features of the lesions including shape, location, extent and distribution of involvement of each abnormality have been found to have good predictive power for mortality [17] or hospital stay [18]. These features, however, are mostly derived from the delineated lesions, and so depend heavily on lesion segmentation. Manual delineation of lesions often takes one to five hours, which substantially undermines clinical applicability of these methods.

Automatic lung lesion segmentation for COVID-19 has been actively investigated in recent studies [19,20]. A VB-Net model based on a neural network was proposed to segment the infection regions in CT scans [19]. This model, when trained using CT scans of 249 COVID-19 patients, achieved a Dice score of 0.92 between automatic and manual segmentations, and successfully reduced the delineation time to less than 4 min. In another recent study [20], a lesion segmentation model based on the 3D-Dense U-Net architecture was proposed and trained on CT scans of a combination of 160 COVID-19, 172 viral pneumonia, and 296 interstitial lung disease patients. Although the lesion masks were not compared voxel-to-voxel, the volumetric measures of lesions, such as percentage of opacity and consolidation, showed a high correlation (0.97–0.98) between automatic and manual segmentations.
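The Dice score quoted above measures the overlap between an automatic and a manual mask. A minimal sketch of the standard definition follows; the flat 0/1 mask representation, the function name, and the convention for two empty masks are illustrative assumptions, not details taken from the cited studies.

```python
def dice_score(pred_mask, true_mask):
    """Dice coefficient between two binary masks given as flat 0/1 sequences."""
    if len(pred_mask) != len(true_mask):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred_mask, true_mask) if p and t)
    total = sum(1 for p in pred_mask if p) + sum(1 for t in true_mask if t)
    # Assumed convention: two empty masks agree perfectly.
    return 1.0 if total == 0 else 2.0 * intersection / total


# Toy 8-voxel example: 4 voxels predicted, 4 annotated, 3 shared.
automatic = [1, 1, 1, 1, 0, 0, 0, 0]
manual = [0, 1, 1, 1, 1, 0, 0, 0]
print(dice_score(automatic, manual))  # 2 * 3 / (4 + 4) = 0.75
```

A Dice score of 1 means the two masks are identical, and 0 means they do not overlap at all.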

Previous studies [19,20] have suggested that lesion features might be a useful biomarker for COVID-19 patient severity assessment, but the effectiveness of lesion features is yet to be verified. Lesion features may have additional applications in the management of COVID-19, which need to be investigated further. In this study, we aim to test the effectiveness of using lesion features in COVID-19 patients for disease severity assessment, and to explore the potential use of lesion features in predicting disease progression.

Automatic severity assessment and progression prediction will substantially facilitate admission, triage, and referral of patients. The first goal of this study is to develop a method for assessing the severity of COVID-19 patients based on their baseline chest CT scans. Four severity types (mild, ordinary, severe, and critical) can be defined based on a core outcome set (COS) encapsulating clinical symptoms, physical and chemical detection, viral nucleic acid detection, disease process, etc. [21]. Supportive treatments, such as supplementary oxygen and mechanical ventilation, are usually required for severe and critical cases [22]. We formulate the severity assessment task as a binary classification problem (i.e., to classify a patient as a mild/ordinary case (mild class) or a severe/critical case (severe class)).

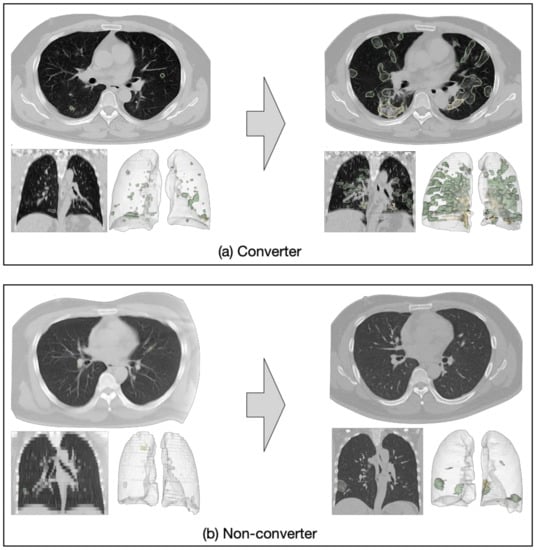

The second goal of this study is to predict disease progression for the mild/ordinary cases based on their baseline CT scans. In other words, we aim to predict which of the mild/ordinary severity patients are likely to progress to the severe/critical category (converter class) in the short term (within seven days), and which patients would remain stable or recover (non-converter class), based on the assumption that lesion features may carry important prognostic information for forecasting disease progression. We again consider the task as a binary classification problem (i.e., to classify the non-converter cases and converter cases). Figure 1a presents an example of a COVID-19 case with mild symptoms. In less than seven days, the patient’s symptoms rapidly worsened and progressed to severe. Figure 1b is an example of a non-converter case whose symptoms progressed slowly and remained mild seven days after the baseline CT scan.

Figure 1. Examples of converter and non-converter cases. (a) A mild case progressed to severe within seven days; (b) a mild case did not progress to severe within seven days.

To achieve the above two goals, a novel LesionEncoder framework is proposed to detect lesions in CT scans and encode lesion features for automatic severity assessment and progression prediction. The LesionEncoder framework consists of two modules: (1) A U-Net module which detects lesions and extracts features from CT slices, and (2) a recurrent neural network (RNN) module for learning the relationship between feature vectors and classifying the sequence of feature vectors as a whole.

We applied the LesionEncoder framework for both severity assessment and progression prediction. With access to data of two COVID-19 confirmed patient cohorts from two hospitals, we trained our proposed model with CT scans of a cohort of patients from one hospital and tested it on an independent cohort from the other hospital. The models built on the LesionEncoder framework outperformed the baseline models that used lesion volumetric features and general imaging features, demonstrating a high potential for clinical applications in the current COVID-19 management, particularly in automatic severity assessment of COVID-19 patients. This framework may also have a strong potential in similar lesion-focused analyses, such as neuroimaging based diagnosis of brain tumors [23,24] and neurological disorders [25,26], CT-based lung nodule classification [27], and retinal imaging based ophthalmic disease detection [28].

2. Datasets

A total of 346 COVID-19 patients confirmed by reverse transcription polymerase chain reaction (RT-PCR) were retrospectively selected from two local hospitals in Hubei Province, China, namely Huang Shi Central Hospital (HSCH) and Xiang Yang Central Hospital (XYCH). Severity types of all patients at baseline and follow-up (within seven days) were assessed and confirmed by clinicians according to the COS for COVID-19 [21]. More details of the demographics and baseline characteristics of patients can be found in our previous study [29]. This analysis was approved by the Institutional Review Boards of both hospitals, and written informed consent was obtained from all the participants.

Table 1 and Table 2 illustrate, respectively, the demographics of patients for the development of a severity assessment model (Task 1—mild vs. severe) and a progression prediction model (Task 2—converter vs. non-converter). For both tasks, CT scans of the HSCH cohort were used for training the models, and CT scans of the XYCH cohort were used as an independent dataset to test the trained models. Patients may have either a lung-window scan, a mediastinal-window scan, or both in their baseline CT examination. All scans were included in the analysis. The total number of CT scans for Task 1 was 639, and that for Task 2 was 601. An internal validation set (20% of the training samples) was split from the training set and used to evaluate the model’s performance during training.
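The internal validation split can be sketched as a rotating hold-out. Section 3.3 states that the 20% validation set is reselected every 20 epochs over 100 epochs of training; the sketch below assumes this means five disjoint folds used in turn, which the text implies but does not state outright. The function name and the interleaved fold assignment are hypothetical, and a real pipeline would likely shuffle the indices first.

```python
def rotating_validation_folds(n_samples, n_epochs=100, epochs_per_fold=20):
    """Yield (first_epoch, train_idx, val_idx), switching fold every `epochs_per_fold` epochs."""
    n_folds = n_epochs // epochs_per_fold  # 100 / 20 = 5 folds of about 20% each
    indices = list(range(n_samples))
    # Interleaved assignment gives disjoint folds of near-equal size.
    folds = [indices[k::n_folds] for k in range(n_folds)]
    for k, val_idx in enumerate(folds):
        held_out = set(val_idx)
        train_idx = [i for i in indices if i not in held_out]
        yield k * epochs_per_fold, train_idx, val_idx


schedule = list(rotating_validation_folds(10))
for first_epoch, train_idx, val_idx in schedule:
    print(first_epoch, val_idx)
```

With this schedule every training sample serves as validation data for exactly one 20-epoch window.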

Table 1. Demographics of the patients in Task 1 dataset.

Table 2. Demographics of the patients in Task 2 dataset.

Note that there is a highly imbalanced distribution of samples in the datasets (i.e., 324 (93.6%) patients in mild class for Task 1, and 300 (92.6%) patients in non-converter class for Task 2). A weighting strategy was used to address the imbalanced distribution in datasets, and the details are presented in Section 3.3.
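To see why this imbalance matters, consider a trivial classifier that always predicts the majority class. The short calculation below uses the patient counts quoted above; the Task 2 total of 324 is inferred from 300 patients being 92.6% and is an assumption, as is applying cohort-wide ratios to a single evaluation set. The function name is hypothetical.

```python
def majority_baseline(n_total, n_majority):
    """Metrics of a classifier that always predicts the majority (negative) class."""
    return {
        "accuracy": n_majority / n_total,  # every majority case counts as correct
        "sensitivity": 0.0,                # no positive case is ever detected
        "specificity": 1.0,                # no negative case is ever flagged
    }


task1 = majority_baseline(346, 324)  # mild vs. severe
task2 = majority_baseline(324, 300)  # non-converter vs. converter (total inferred)
print(f"Task 1 accuracy: {task1['accuracy']:.1%}")  # 93.6%
print(f"Task 2 accuracy: {task2['accuracy']:.1%}")  # 92.6%
```

Such a classifier reaches an accuracy above 92% while detecting no positive case, which is why sensitivity and AUC are reported alongside accuracy and why the positive class is up-weighted during training.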

3. Methods

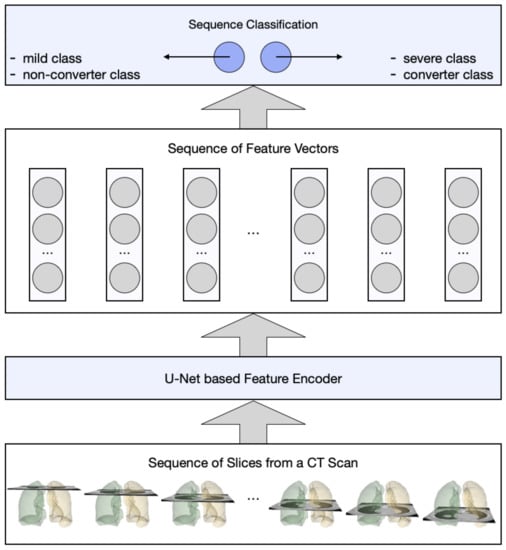

Figure 2 gives an overview of the LesionEncoder framework, which consists of two modules: (1) A lesion encoder module for lesion detection and feature encoding, and (2) an RNN module for sequence classification. The lesion encoder module extracts features from individual CT slices; therefore, a CT scan with multiple CT slices can be represented as a sequence of feature vectors. The sequence classification module takes the sequence of feature vectors as input and then classifies the entire sequence collectively.

Figure 2. An overview of the proposed LesionEncoder framework.

3.1. Image Pre-Processing

All CT scans were pre-processed with intensity normalization, contrast limited adaptive histogram equalization, and gamma adjustment, using the same pre-processing pipeline as in our previous study [30]. We further performed lung segmentation on the CT slices using an established model, R231CovidWeb [31], which was trained on a large and diverse dataset of non-COVID-19 CT scans and further fine-tuned with an additional COVID-19 dataset [32]. The binary executable software for this lung segmentation model is available online at https://github.com/JoHof/lungmask (accessed on 11 February 2021). CT slices with less than 3 mm² of lung tissue were removed from our datasets, since they bear little or no information about the lung.
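The slice-filtering step could look roughly like the sketch below, which converts a binary lung mask to an area in mm² using the in-plane pixel spacing and drops slices under the 3 mm² threshold. The nested-list mask format, the function names, and the `pixel_spacing_mm` parameter are assumptions made for illustration; the actual pipeline is not described at this level of detail.

```python
def lung_area_mm2(lung_mask, pixel_spacing_mm):
    """Lung area of one slice: number of lung pixels times the area of one pixel."""
    row_mm, col_mm = pixel_spacing_mm
    n_lung_pixels = sum(1 for row in lung_mask for value in row if value)
    return n_lung_pixels * row_mm * col_mm


def keep_informative_slices(lung_masks, pixel_spacing_mm, min_area_mm2=3.0):
    """Return the indices of slices whose lung area is at least `min_area_mm2`."""
    return [
        index
        for index, mask in enumerate(lung_masks)
        if lung_area_mm2(mask, pixel_spacing_mm) >= min_area_mm2
    ]


# Three toy 2 x 3 slices with 1 mm x 1 mm pixels: 0, 2, and 4 mm² of lung.
masks = [
    [[0, 0, 0], [0, 0, 0]],
    [[1, 1, 0], [0, 0, 0]],
    [[1, 1, 0], [1, 1, 0]],
]
kept = keep_informative_slices(masks, pixel_spacing_mm=(1.0, 1.0))
print(kept)  # only the last slice reaches the threshold
```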

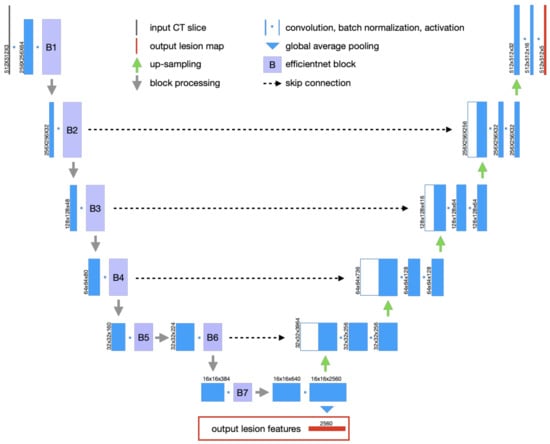

3.2. Lesion Encoder

The U-Net architecture [33] is adopted for the lesion encoder module. It consists of an encoder and a decoder, where the encoder captures the lesion features and the decoder maps lesion features back to the original image space. In other words, the encoder is responsible for extracting features from the input images (i.e., CT slices), whereas the decoder generates the segmentation maps (i.e., lesion masks). Figure 3 illustrates the encoder-decoder architecture of the lesion encoder module.

Figure 3. The U-Net architecture for lesion detection and feature encoding.

We used the EfficientNetB7 model [34] as the backbone to build the lesion encoder module, as it represents the state of the art in image classification while being 8.4 times smaller and 6.1 times faster on inference than the best existing models in the ImageNet Challenge [35]. The ImageNet pre-trained weights were used to initialize the EfficientNetB7 model. There are 7 blocks in the EfficientNetB7 model, as shown in Figure 3. The skip connections were built between the expand activation layers in Blocks 2, 3, 4, and 6 and their corresponding up-sampling layers in our model. The output of the bottom layer is the final output feature vector representing the lesion features of the input slice.

A publicly available dataset, consisting of 100 axial CT slices from 60 COVID-19 patients [32], was used to train the EfficientNetB7 U-Net. All the CT slices were annotated by an experienced radiologist with 3 different lesion classes: GGO, consolidation, and pleural effusion. Since this dataset is very small, we applied different augmentations, including horizontal flip, affine transforms, perspective transforms, contrast manipulation, image blurring and sharpening, Gaussian noise, and random crops, to the dataset using the Albumentations library [36]. The model was trained using the Adam optimizer [37] with a learning rate of 0.0001 for 300 epochs. The TensorFlow implementation of the EfficientNetB7 U-Net is available online at https://github.com/qubvel/segmentation_models (accessed on 11 February 2021).

The lesion encoder module was applied to process individual slices in a CT scan. For each CT slice, a high-dimensional feature vector (d = 2560) was derived. Independent component analysis (ICA) was performed on the training samples to reduce dimensionality (d = 64). The ICA model was then applied to the test samples, so that they have the same feature dimension as the training samples. The output of the lesion encoder is a sequence of feature vectors, which are then classified using a sequence classifier, as explained in the next section.

3.3. Sequence Classification

An RNN model was built for sequence classification. Its input is a sequence of feature vectors generated by the lesion encoder. The structure of the RNN model is illustrated in Table 3: two bidirectional long short-term memory (LSTM) layers, followed by a dense layer with a dropout rate of 0.5, and an output dense layer. For comparison purposes, a pooling model was also created (Table 3), which uses max pooling and average pooling to combine the slice-based feature vectors, as inspired by a previous study [9]. The difference between these two models is that the RNN model captures the relationship between feature vectors in a sequence, whereas the pooling model ignores such relationships.

Table 3. The architectures of the RNN model and the pooling model.
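The feature fusion in the pooling model can be illustrated as below. This is only a sketch: concatenating the max-pooled and average-pooled vectors is an assumption about how the two pooled results are combined, and the function name is hypothetical. The last line shows the property discussed above, namely that pooling is insensitive to slice order, whereas an RNN is not.

```python
def pool_slice_features(slice_features):
    """Concatenate the element-wise max and mean over a scan's slice feature vectors."""
    if not slice_features:
        raise ValueError("a scan needs at least one slice")
    columns = list(zip(*slice_features))  # one tuple per feature dimension
    max_pooled = [max(column) for column in columns]
    avg_pooled = [sum(column) / len(column) for column in columns]
    return max_pooled + avg_pooled  # assumed fusion: simple concatenation


scan = [[0.0, 2.0], [1.0, 0.0], [2.0, 1.0]]  # 3 slices, 2 features each
pooled = pool_slice_features(scan)
print(pooled)  # [2.0, 2.0, 1.0, 1.0]

# Reversing the slice order leaves the pooled representation unchanged.
print(pooled == pool_slice_features(list(reversed(scan))))  # True
```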

The Adam optimizer [37] with a learning rate of 0.001 was used to train the models for 100 epochs. A validation set (20%) was split from the training set for monitoring the training process. Every 20 epochs, the validation set was reselected from the training set, so that the model was internally validated by all training samples during training. To address the imbalanced distribution in the datasets, we assigned different weights to the two classes (mild/non-converter class: 0.2, severe/converter class: 1.8) when training the models. In addition, if a patient had multiple CT scans, the scan with the higher probability of a positive prediction overruled the others when applying the models for inference.
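The class weighting and the patient-level decision rule can be sketched as follows. The weights 0.2 and 1.8 come from the text; the use of binary cross-entropy as the loss, the averaging over samples, and all function names are assumptions for illustration rather than the authors' implementation.

```python
import math

CLASS_WEIGHTS = {0: 0.2, 1: 1.8}  # mild/non-converter: 0.2, severe/converter: 1.8


def weighted_bce(y_true, y_prob, class_weights=CLASS_WEIGHTS, eps=1e-7):
    """Mean binary cross-entropy with each sample scaled by its class weight."""
    total = 0.0
    for label, prob in zip(y_true, y_prob):
        prob = min(max(prob, eps), 1.0 - eps)  # avoid log(0)
        loss = -math.log(prob) if label == 1 else -math.log(1.0 - prob)
        total += class_weights[label] * loss
    return total / len(y_true)


def patient_level_probability(scan_probs):
    """For each patient, keep the highest positive-class probability over their scans."""
    return {patient: max(probs) for patient, probs in scan_probs.items()}


# An equally confident mistake costs 9 times more on a positive than on a negative case.
print(weighted_bce([1], [0.1]) / weighted_bce([0], [0.9]))

# Hypothetical patient P1 has two scans; the more suspicious one decides.
print(patient_level_probability({"P1": [0.2, 0.7], "P2": [0.1]}))
```

Both choices push the model toward fewer missed positive cases at the cost of more false alarms.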

3.4. Performance Evaluation

We tested the LesionEncoder framework with two configurations: (1) Using the pooling model as the classifier (LE_Pooling) and (2) using the RNN model as the classifier (LE_RNN). These methods were compared to 3 baseline methods. The first baseline method (BS_Volumetric) was inspired by a previous study [20], which was based on a Logistic Regression model using 4 lesion volumetric features as input: GGO percentage, consolidation percentage, pleural effusion percentage, and total lesion percentage. The second (BS_Pooling) and third (BS_RNN) baseline methods were based on the same classification models as in LE_Pooling and LE_RNN; however, the features were extracted from an EfficientNetB7 model without a lesion encoder module. The purpose of the second and third baseline models was to estimate the contribution of the lesion encoder. Following previous studies [38,39], sensitivity, specificity, accuracy, and area under curve (AUC) were used to evaluate the methods’ performance. Receiver operating characteristic (ROC) curves were also compared between methods.
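The four inputs of the BS_Volumetric baseline could be derived from a lesion label map roughly as shown below. The label ids, the flat per-voxel representation, and the choice of the lung volume as the denominator are assumptions made for this sketch; the source does not specify how the percentages were normalized.

```python
LESION_LABELS = {"ggo": 1, "consolidation": 2, "pleural_effusion": 3}  # hypothetical ids


def volumetric_features(lesion_labels, lung_mask):
    """Lesion percentages relative to the lung volume, from flat per-voxel sequences."""
    lung_voxels = sum(1 for value in lung_mask if value)
    if lung_voxels == 0:
        raise ValueError("lung mask is empty")
    id_to_name = {label_id: name for name, label_id in LESION_LABELS.items()}
    counts = {name: 0 for name in LESION_LABELS}
    for label in lesion_labels:
        if label in id_to_name:  # 0 (background) and unknown ids are ignored
            counts[id_to_name[label]] += 1
    features = {f"{name}_pct": 100.0 * n / lung_voxels for name, n in counts.items()}
    features["total_lesion_pct"] = 100.0 * sum(counts.values()) / lung_voxels
    return features


# Toy scan of 10 lung voxels: 2 GGO, 1 consolidation, 1 pleural effusion.
labels = [1, 1, 2, 0, 0, 0, 0, 0, 0, 3]
lung = [1] * 10
features = volumetric_features(labels, lung)
print(features)
```

These four numbers per scan would then be the input of the logistic regression model.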

3.5. Development Environment

All the neural network models, including the EfficientNetB7 U-Net, the pooling model, and the RNN model, were implemented in Python (v3.6.9) and TensorFlow (v2.0.0). The models were trained using a Fujitsu server with an Intel Xeon Gold 5218 CPU, 128 GB of memory, and an NVIDIA V100 GPU with 32 GB of memory. The same server was used for image pre-processing, feature extraction, and classification.

4. Results

4.1. Lung and Lesion Segmentation

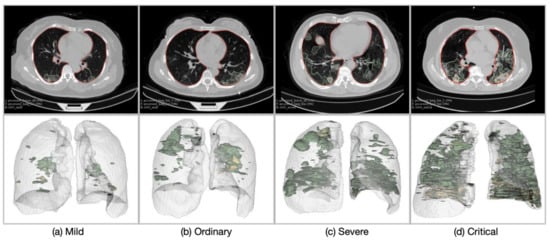

The lung masks generated using the R231CovidWeb model [31] and the lesion masks generated by the lesion encoder module were visually inspected by an experienced image analyst (S.L.). Overall, the lung segmentation results were visually reliable, although infection areas were missed in the lung masks of a few severe and critical cases. The lesion encoder achieved a Dice of 0.92 on the COVID-19 CT segmentation dataset [32]. Figure 4 presents four examples of the lung and lesion segmentation results (reconstructed using 3D Slicer (v4.6.2) [40]) of the COVID-19 patients, one for each severity class. It shows that higher severity of COVID-19 is reflected in CT scans as an increasing number and volume of lesions.

Figure 4. Examples of the patients in different severity groups: (a) Mild, (b) Ordinary, (c) Severe, and (d) Critical. The upper row presents the axial CT slices with the lung (red) and lesion (green: GGO; yellow: consolidation; brown: pleural effusion) boundaries overlaid on the CT slices. The lower row illustrates the 3D models of the lung and lesions.

4.2. Severity Assessment

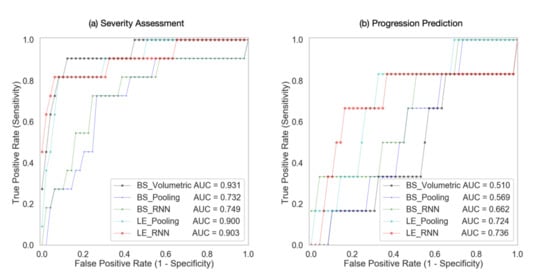

Five different methods were compared in the automatic severity assessment of COVID-19 patients, including three baseline methods and two proposed methods, as described in Section 3.4. Table 4 illustrates the performance metrics of different methods on the severity assessment task, and Figure 5a shows the ROC curves of these methods. The three methods using lesion features (BS_Volumetric, LE_Pooling, and LE_RNN) consistently outperformed the models that did not use lesion features by a marked difference in sensitivity (>9.1%), specificity (>15.3%), accuracy (>14.7%), and AUC (>15.1%). In particular, BS_Volumetric achieved the highest AUC of 0.931, indicating that the lesion volumetric features were highly effective in distinguishing between severe and mild cases.

Table 4. Performance of different methods in baseline severity assessment. Bold font indicates best result in each performance metric achieved by the methods.

Figure 5. ROC curves of different models in (a) severity assessment and (b) progression prediction.
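For reference, the four reported metrics can be computed from labels and predicted probabilities as in the sketch below. The 0.5 decision threshold and the toy data are assumptions for illustration; AUC is computed here through its rank interpretation, i.e., the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, which equals the area under the ROC curve.

```python
def confusion_metrics(y_true, y_pred):
    """Sensitivity, specificity, and accuracy for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must be present")
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / len(pairs),
    }


def auc_score(y_true, y_score):
    """AUC as the fraction of positive/negative pairs ranked correctly (ties count 0.5)."""
    positives = [s for t, s in zip(y_true, y_score) if t == 1]
    negatives = [s for t, s in zip(y_true, y_score) if t == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


# Toy example with 2 positive and 4 negative cases.
y_true = [1, 1, 0, 0, 0, 0]
y_score = [0.9, 0.4, 0.6, 0.3, 0.2, 0.1]
y_pred = [1 if score >= 0.5 else 0 for score in y_score]  # assumed threshold
metrics = confusion_metrics(y_true, y_pred)
auc = auc_score(y_true, y_score)
print(metrics, auc)
```

Unlike the other three metrics, AUC does not depend on the chosen threshold, which is why a method can have the highest AUC without having the highest specificity.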

The proposed LE_RNN method achieved higher specificity (0.952) than the BS_Volumetric method (0.933), showing that the features captured by the lesion encoder might be useful in reducing the false positive rate compared with the volumetric features. When comparing the pooling models and RNN models, we found that the RNN models performed slightly better than the pooling models; and the impact of the sequence classifier on the classification performance was much lower than that of the lesion features.

4.3. Progression Prediction

The results of the different methods on the disease progression prediction task are presented in Table 5, and Figure 5b shows the ROC curves of these methods. The BS_Volumetric method performed poorly (sensitivity = 0.5, specificity = 0.465, accuracy = 0.467, AUC = 0.51), indicating that lesion volumetric features were not predictive of COVID-19 disease progression. This finding was not surprising, since the converter and non-converter cases both showed mild symptoms at baseline and presented a small quantity of lesions in the lungs. The BS_Pooling and BS_RNN methods achieved slightly better performance than BS_Volumetric, although they did not use any lesion features.

Table 5. Performance of different methods in prediction of disease progression. Bold font indicates best result in each performance metric achieved by the methods.

The LE_Pooling and LE_RNN methods outperformed the baseline methods with a substantial increase of 20–30% in specificity. LE_RNN was the best method in all the evaluation metrics (sensitivity = 0.667, specificity = 0.838, accuracy = 0.829, AUC = 0.736). The results indicate that the lesion features extracted by the lesion encoder may bear useful prognostic information for predicting disease progression. However, it is still challenging to predict disease progression using the lesion features, and the low sensitivity (0.667) may restrict clinical applicability of the proposed methods.

5. Discussion

Clinical value in the management of COVID-19. The rapid spread of COVID-19 has put a strain on healthcare systems, necessitating efficient and automatic diagnosis and prognosis to facilitate the admission, triage, and referral of COVID-19 patients. Chest CT plays a key role in COVID-19 management by providing important diagnostic and prognostic information of patients. Several computational models have been developed to support automatic screening and diagnosis of COVID-19 [9,10,11,12,13,14,15]. There are also a few studies [19,20] using CT to quantify infection severity with a focus on development of lesion segmentation models. A few measures based on the lesion volumes have been proposed to quantify infection severity [19]; however, the intricate patterns of the lesion shape, texture, location, extent, and distribution were less investigated.

To capture the complex features in the lesions, we proposed a novel LesionEncoder framework. Two specific applications of this framework (i.e., assessment of severity and prediction of disease progression for COVID-19 patients) were explored in this study. To the best of our knowledge, this work represents the first attempt to predict COVID-19 patient disease progression using chest CT scans. Models built on this framework are able to take CT scans as input, detect lesions, extract features from them, and quantify the severity or predict progression in a fully automated manner. The analysis of a high-resolution CT scan of 512 × 512 × 430 voxels takes less than 1 min, which is substantially faster than radiologists’ reading time. This also removes the burden of manual delineation of the lesions. The quantitative measures based on the features are of high clinical relevance, and can be used to support medical decision making or to track changes in patients.

We should note that this framework is not designed to analyze COVID-19 suspects who are not confirmed by RT-PCR, or covert/asymptomatic cases that are not documented [41,42,43]. Community-acquired pneumonia cases, such as viral pneumonia and interstitial lung disease patients, were also not considered in this study. As pointed out in a systematic review [44], normal controls and diseased controls are needed for the development of screening or diagnostic models, so selection bias in the cohort may lead to a risk of overestimated performance. Since our model focuses on confirmed and hospitalized COVID-19 cases, it is not exposed to such risk.

Models based on lesion features outperformed the baseline models without lesion features in both severity assessment and progression prediction. An interesting finding in severity assessment is that the lesion volumetric features are highly effective in distinguishing the severe cases from the mild cases. The features extracted by the lesion encoder did not improve the sensitivity of detecting the severe cases, but only reduced false positive predictions. This finding indicates that lesion volumetric features, such as GGO percentage and consolidation percentage, are prominent biomarkers in identifying the severe cases. The lesion encoder makes only a marginal contribution to severity assessment. In contrast, in progression prediction the models with the lesion encoder performed much better than those with volumetric features, indicating that the intricate patterns captured by the lesion encoder provide useful prognostic information for identifying the COVID-19 patients at higher risk of converting to the severe type. The LesionEncoder framework demonstrates clinical applicability in COVID-19 management, particularly in the automatic severity assessment of COVID-19 patients (sensitivity = 0.818, specificity = 0.952, AUC = 0.903). However, it is still challenging to predict disease progression using lesion features, and the low sensitivity (0.667) may restrict clinical applicability of the proposed methods.

Technical contributions of the LesionEncoder framework. The technical contributions of this work are two-fold. Most importantly, this framework extends the use of lesion features beyond conventional lesion segmentation and volumetric analysis. There is a wealth of information in the lesions, including shape, texture, location, extent, and distribution of involvement of the abnormality, that can be extracted by the lesion encoder. We demonstrated two novel applications of the lesion features in severity assessment and progression prediction. These features also have a strong potential in a wide range of other clinical and research applications, such as supporting clinical decision making and providing insights into the pathological mechanism.

In addition, the proposed LesionEncoder framework attempts to address a common challenge in medical image analysis: how to reconcile local information and global information to improve medical image perception [45]. In this study, the slices from a CT scan were used as input for classification, but not every slice in the scan carries the same diagnostic/prognostic information. That is, the ground truth label of the entire scan cannot be propagated to label individual slices. For example, a CT slice with no lesion from a severe case might appear more ‘normal’ compared to a slice with some lesions from a mild case. Our proposed framework is a feasible approach to infer the holistic prediction with a focus on the analysis of the region of interest. The RNN module in the framework is also a more sophisticated approach than the conventional feature fusion methods that use average pooling or max pooling to combine the local features. There are many analyses of the same nature, e.g., neuroradiologists may use features such as tumoral infiltration of surrounding tissues in MRI for tumor grading [46]; ophthalmologists may focus on lesions, such as hemorrhages and microaneurysms, hard exudates, and cotton-wool spots, when grading diabetic retinopathy [47]; and pathologists are more likely to fixate on regions of highest diagnostic relevance when interpreting biopsy whole slide images (WSI) [48]. The LesionEncoder framework may be generalizable to these lesion-focused medical image analyses.

Limitations. A limitation of this study is that we only had access to a retrospective cohort. Although it includes 639 CT scans of 346 patients, it is still a relatively small dataset compared to other datasets for development of deep learning models. This also ruled out developing 3D deep learning models for scan-based classification: since 3D models are usually more complicated than 2D models and have substantially more parameters, the small sample size would lead to undertrained models. In addition, there is a highly imbalanced distribution in the datasets. Among the 346 samples for the development of the severity assessment model, 324 (93.6%) patients were in the mild class. For the disease progression model, there were 300 (92.6%) patients in the non-converter class. Although this reflects the real distribution, it would be ideal to have more severe/converter class samples for training. To address this imbalanced distribution problem, we used a class weighting strategy to give the positive class higher weight during training, and used a prediction weighting strategy during inference to enhance the prediction of the positive class if a patient has multiple scans. A larger sample size with more severe and converter cases in the datasets would help train more accurate and robust models as well as produce reliable performance estimates. Other techniques, such as synthetic minority oversampling [29], spherical coordinates transformation [49], and generative adversarial networks [50], will be investigated in further study.

The lung masks generated using the R231CovidWeb model [31] and the lesion masks generated by the lesion encoder module were visually inspected by an experienced image analyst. The segmentation results were visually reliable, but the missed-out lung or lesion regions in the segmentation masks were noted in a few severe and critical cases. Since there were no lesion masks for our datasets, no quantitative analyses were performed to evaluate the automatic segmentation results. Further improvements can be made if the ground truth annotation of the lung and lesion can be provided to optimize the performance of the current lung segmentation model and lesion encoder module on our datasets.

Furthermore, it is still challenging for LesionEncoder alone to predict disease progression using lesion features. However, combining the proposed method with other biomarkers, such as short-term changes in neutrophil-to-lymphocyte ratio and urea-to-creatinine ratio [51], might further help stratify patients’ severity.

There are a few recent studies that used explainable AI in chest CT segmentation and classification of COVID-19 patients, such as those based on class activation maps [52], few-shot learning [53], and the Shapley additive explanations (SHAP) framework [29]. Another potential future extension of this work is to use explainable AI frameworks to explain the model’s logic and decision-making processes, thereby unlocking the black box of deep learning and helping the end users to understand the models better.

6. Conclusions

In this study, a novel LesionEncoder framework was proposed to encode the enriched lesion features in chest CT scans for automatic severity assessment and progression prediction of COVID-19 patients. Models built on this framework outperformed the evaluated baseline models with a marked improvement. The lesion volumetric features were prominent biomarkers in identifying severe/critical cases, but intricate features captured by the lesion encoder were found effective in identifying the COVID-19 patients who have higher risks of converting to the severe or critical type. Overall, the LesionEncoder framework demonstrates a high clinical applicability in the current COVID-19 management, particularly in automatic severity assessment of COVID-19 patients.

An important future direction of this framework lies in the combination of clinical data and imaging data for better prediction performance, especially for the progression prediction, since clinical data may provide essential indicators of the clinical risks of the patients. Furthermore, the applications of the LesionEncoder framework to other types of lesion-focused analyses will be further investigated.

Author Contributions

The project was initially conceptualized and supervised by X.-R.C., X.-M.Q. and L.S. The patient data and imaging data were acquired by Y.-Z.F., Z.-Y.C. and D.R. The analysis methods were designed and implemented by S.L. and J.C.Q. The data were analyzed by S.L. and J.C.Q. The research findings were interpreted by P.-K.C., Q.-T.L., L.Q., X.-F.L., S.B. and E.C. All authors were involved in the design of the work. The manuscript was drafted by Y.-Z.F., S.L. and L.Q., and all authors have substantively revised it. All authors have read and agreed to the published version of the manuscript.

Funding

This project was funded by the Natural Science Foundation of Guangdong Province (grant no. 2017A030313901), the Guangzhou Science, Technology and Innovation Commission (grant no. 201804010239), and the Foundation for Young Talents in Higher Education of Guangdong Province (grant no. 2019KQNCX005).

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board of Xiang Yang Central Hospital and Huang Shi Central Hospital (approval number LL-2020-032-02, on 23 February 2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable; the study does not report any data.

Acknowledgments

We acknowledge Fujitsu Australia Limited for providing the computational resources for this study. Special thanks to Eva Huang for proofreading this paper.

Conflicts of Interest

The authors have no conflict of interest nor any competing interest to declare.

Abbreviations

RNN—recurrent neural network; GGO—ground glass opacity; COS—core outcome set; LSTM—long short term memory; RT-PCR—reverse transcription polymerase chain reaction

References

- Ji, Y.; Ma, Z.; Peppelenbosch, M.P.; Pan, Q. Potential association between COVID-19 mortality and health-care resource availability. Lancet Glob. Health 2020, 8, e480.

- Fang, Y.; Zhang, H.; Xie, J.; Lin, M.; Ying, L.; Pang, P.; Ji, W. Sensitivity of Chest CT for COVID-19: Comparison to RT-PCR. Radiology 2020, 296, E115–E117.

- Shi, H.; Han, X.; Jiang, N.; Cao, Y.; Alwalid, O.; Gu, J.; Fan, Y.; Zheng, C. Radiological findings from 81 patients with COVID-19 pneumonia in Wuhan, China: A descriptive study. Lancet Infect. Dis. 2020, 20, 425–434.

- Ng, M.-Y.; Lee, E.Y.; Yang, J.; Yang, F.; Li, X.; Wang, H.; Lui, M.M.-S.; Lo, C.S.-Y.; Leung, B.; Khong, P.-L.; et al. Imaging Profile of the COVID-19 Infection: Radiologic Findings and Literature Review. Radiol. Cardiothorac. Imaging 2020, 2, e200034.

- Ai, T.; Yang, Z.; Hou, H.; Zhan, C.; Chen, C.; Lv, W.; Tao, Q.; Sun, Z.; Xia, L. Correlation of chest CT and RT-PCR testing in coronavirus disease 2019 (COVID-19) in China: A report of 1014 cases. Radiology 2020, 296, E32–E40.

- Huang, C.; Wang, Y.; Li, X.; Ren, L.; Zhao, J.; Hu, Y.; Zhang, L.; Fan, G.; Xu, J.; Gu, X.; et al. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet 2020, 395, 497–506.

- Inui, S.; Fujikawa, A.; Jitsu, M.; Kunishima, N.; Watanabe, S.; Suzuki, Y.; Umeda, S.; Uwabe, Y. Chest CT Findings in Cases from the Cruise Ship “Diamond Princess” with Coronavirus Disease 2019 (COVID-19). Radiol. Cardiothorac. Imaging 2020, 2, e200110.

- The Royal Australian and New Zealand College of Radiologists. Advice on Appropriate Use of CT Throughout the COVID-19 Pandemic. 2020. Available online: https://www.ranzcr.com/college/document-library/advice-on-appropriate-use-of-ct-throughout-the-covid-19-pandemic (accessed on 9 April 2020).

- Li, L.; Qin, L.; Xu, Z.; Yin, Y.; Wang, X.; Kong, B.; Bai, J.; Lu, Y.; Fang, Z.; Song, Q.; et al. Artificial intelligence distinguishes COVID-19 from community acquired pneumonia on chest CT. Radiology 2020, 296, E65–E71.

- Song, Y.; Zheng, S.; Li, L.; Zhang, X.; Zhang, X.; Huang, Z.; Chen, J.; Wang, R.; Zhao, H.; Zha, Y.; et al. Deep learning Enables Accurate Diagnosis of Novel Coronavirus (COVID-19) with CT images. medRxiv 2020, arXiv:2020.02.23.20026930.

- Gozes, O.; Frid-Adar, M.; Greenspan, H.; Browning, P.D.; Zhang, H.; Ji, W.; Bernheim, A.; Siegel, E. Rapid AI Development Cycle for the Coronavirus (COVID-19) Pandemic: Initial Results for Automated Detection & Patient Monitoring using Deep Learning CT Image Analysis. arXiv 2020, arXiv:2003.05037.

- Xu, X.; Jiang, X.; Ma, C.; Du, P.; Li, X.; Lv, S.; Yu, L.; Ni, Q.; Chen, Y.; Su, J.; et al. Deep Learning System to Screen Coronavirus Disease 2019 Pneumonia. arXiv 2020, arXiv:2002.09334.

- Wang, S.; Kang, B.; Ma, J.; Zeng, X.; Xiao, M.; Guo, J.; Cai, M.; Yang, J.; Li, Y.; Meng, X.; et al. A deep learning algorithm using CT images to screen for Corona Virus Disease (COVID-19). Eur. Radiol. 2021, 31, 6096–6104.

- Chen, J.; Wu, L.; Zhang, J.; Zhang, L.; Gong, D.; Zhao, Y.; Chen, Q.; Huang, S.; Yang, M.; Yang, X.; et al. Deep learning-based model for detecting 2019 novel coronavirus pneumonia on high-resolution computed tomography: A prospective study. medRxiv 2020, arXiv:2020.02.25.20021568.

- Wang, L.; Wong, A. COVID-Net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest radiography images. arXiv 2020, arXiv:2003.09871v1.

- Shi, F.; Wang, J.; Shi, J.; Wu, Z.; Wang, Q.; Tang, Z.; He, K.; Shi, Y.; Shen, D. Review of Artificial Intelligence Techniques in Imaging Data Acquisition, Segmentation, and Diagnosis for COVID-19. IEEE Rev. Biomed. Eng. 2021, 14, 4–15.

- Yuan, M.; Yin, W.; Tao, Z.; Tan, W.; Hu, Y. Association of radiologic findings with mortality of patients infected with 2019 novel coronavirus in Wuhan, China. PLoS ONE 2020, 15, e0230548.

- Qi, X.; Jiang, Z.; Yu, Q.; Shao, C.; Zhang, H.; Yue, H.; Ma, B.; Wang, Y.; Liu, C.; Meng, X.; et al. Machine learning-based CT radiomics model for predicting hospital stay in patients with pneumonia associated with SARS-CoV-2 infection: A multicenter study. medRxiv 2020.

- Shan, F.; Gao, Y.; Wang, J.; Shi, W.; Shi, N.; Han, M.; Xue, Z.; Shen, D.; Shi, Y. Lung Infection Quantification of COVID-19 in CT Images with Deep Learning. arXiv 2020, arXiv:2003.04655.

- Chaganti, S.; Balachandran, A.; Chabin, G.; Cohen, S.; Flohr, T.; Georgescu, B.; Grenier, P.; Grbic, S.; Liu, S.; Mellot, F.; et al. Quantification of tomographic patterns associated with COVID-19 from chest CT. arXiv 2020, arXiv:2004.01279.

- Jin, X.; Pang, B.; Zhang, J.; Liu, Q.; Yang, Z.; Feng, J.; Liu, X.; Zhang, L.; Wang, B.; Huang, Y.; et al. Core outcome set for clinical trials on coronavirus disease 2019 (COS-COVID). Engineering 2020, 6, 1147–1152.

- Cascella, M.; Rajnik, M.; Cuomo, A.; Scott, C. Features, Evaluation and Treatment Coronavirus (COVID-19); StatPearls: Treasure Island, FL, USA, 2020. Available online: https://www.ncbi.nlm.nih.gov/books/NBK554776/ (accessed on 2 September 2020).

- Jian, A.; Jang, K.; Maurizio, M.; Liu, S.; Magnussen, J.; di Ieva, A. Machine learning for the prediction of molecular markers in glioma on magnetic resonance imaging: A systematic review and meta-analysis. Neurosurgery 2021, 89, 1.

- Gao, Y.; Xiao, X.; Han, B.; Li, G.; Ning, X.; Wang, D.; Cai, W.; Kikinis, R.; Berkovsky, S.; Di Ieva, A.; et al. Deep learning methodology for differentiating glioma recurrence from radiation necrosis using magnetic resonance imaging: Algorithm development and validation. JMIR Med. Inform. 2020, 8, e19805.

- Zhang, C.; Song, Y.; Liu, S.; Lill, S.; Wang, C.; Tang, Z.; You, Y.; Gao, Y.; Klistorner, A.; Barnett, M.; et al. MS-GAN: GAN-based semantic segmentation of multiple sclerosis lesions in brain magnetic resonance imaging. In Proceedings of the 2018 Digital Image Computing: Techniques and Applications (DICTA), Canberra, ACT, Australia, 10–13 December 2018; pp. 1–8.

- Liu, S.; Cai, W.; Wen, L.; Feng, D.; Pujol, S.; Kikinis, R.; Fulham, M.; Eberl, S. Multi-channel neurodegenerative pattern analysis and its application in Alzheimer’s disease characterization. Comput. Med. Imaging Graph. 2014, 38, 6–436.

- Zhang, F.; Song, Y.; Cai, W.; Liu, S.; Liu, S.; Pujol, S.; Kikinis, R.; Xia, Y.; Fulham, M.; Feng, D. Pairwise latent semantic association for similarity computation in medical imaging. IEEE Trans. Biomed. Eng. 2016, 63, 5.

- Liu, S.; Graham, S.L.; Schulz, A.; Kalloniatis, M.; Zangerl, B.; Cai, W.; Gao, Y.; Chua, B.; Arvind, H.; Grigg, J.; et al. A deep learning-based algorithm identifies glaucomatous discs using monoscopic fundus photographs. Ophthal. Glaucoma 2018, 1, 15–22.

- Quiroz, J.C.; Feng, Y.-Z.; Cheng, Z.-Y.; Rezazadegan, D.; Chen, P.-K.; Lin, Q.-T.; Qian, L.; Liu, X.-F.; Berkovsky, S.; Coiera, E.; et al. Development and Validation of a Machine Learning Approach for Automated Severity Assessment of COVID-19 Based on Clinical and Imaging Data: Retrospective Study. JMIR Med. Inform. 2021, 9, e24572.

- Liu, S.; Shah, Z.; Sav, A.; Russo, C.; Berkovsky, S.; Qian, Y.; Coiera, E.; Di Ieva, A. Isocitrate dehydrogenase (IDH) status prediction in histopathology images of gliomas using deep learning. Sci. Rep. 2020, 10, 7733.

- Hofmanninger, J.; Prayer, F.; Pan, J.; Rohrich, S.; Prosch, H.; Langs, G. Automatic lung segmentation in routine imaging is a data diversity problem, not a methodology problem. arXiv 2020, arXiv:2001.11767v1.

- COVID-19 CT Segmentation Dataset. Available online: http://medicalsegmentation.com/covid19/ (accessed on 1 April 2020).

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. arXiv 2015, arXiv:1505.04597.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. arXiv 2019, arXiv:1905.11946v3.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Buslaev, A.; Iglovikov, V.I.; Khvedchenya, E.; Parinov, A.; Druzhinin, M.; Kalinin, A.A. Albumentations: Fast and Flexible Image Augmentations. Information 2020, 11, 125.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Do, D.T.; Le, N.Q.K. Using extreme gradient boosting to identify origin of replication in Saccharomyces cerevisiae via hybrid features. Genomics 2020, 112, 2445–2451.

- Le, N.Q.K.; Kha, Q.H.; Nguyen, V.H.; Chen, Y.-C.; Cheng, S.-J.; Chen, C.-Y. Machine Learning-Based Radiomics Signatures for EGFR and KRAS Mutations Prediction in Non-Small-Cell Lung Cancer. Int. J. Mol. Sci. 2021, 22, 9254.

- Fedorov, A.; Beichel, R.; Kalpathy-Cramer, J.; Finet, J.; Fillion-Robin, J.-C.; Pujol, S.; Bauer, C.; Jennings, D.; Fennessy, F.; Sonka, M.; et al. 3D Slicer as an image computing platform for the Quantitative Imaging Network. Magn. Reson. Imaging 2012, 30, 1323–1341.

- Nishiura, H.; Kobayashi, T.; Miyama, T.; Suzuki, A.; Jung, S.-M.; Hayashi, K.; Kinoshita, R.; Yang, Y.; Yuan, B.; Akhmetzhanov, A.R.; et al. Estimation of the asymptomatic ratio of novel coronavirus infections (COVID-19). Int. J. Infect. Dis. 2020, 94, 154–155.

- Li, R.; Pei, S.; Chen, B.; Song, Y.; Zhang, T.; Yang, W.; Shaman, J. Substantial undocumented infection facilitates the rapid dissemination of novel coronavirus (SARS-CoV-2). Science 2020, 368, 489–493.

- Qiu, J. Covert coronavirus infections could be seeding new outbreaks. Nature 2020.

- Wynants, L.; van Calster, B.; Collins, G.S.; Riley, R.D.; Heinze, G.; Schuit, E.; Bonten, M.M.J.; Dahly, D.L.; Damen, J.A.; Debray, T.P.A.; et al. Systematic review and critical appraisal of prediction models for diagnosis and prognosis of COVID-19 infection. BMJ 2020, 369, m1328.

- Krupinski, E.A. Medical image perception: Evaluating the role of experience. Proc. SPIE 2000, 3959, 281–289.

- Castillo, M. History and Evolution of Brain Tumor Imaging: Insights through Radiology. Radiology 2014, 273, S111–S125.

- Nayak, J.; Bhat, P.S.; Acharya, R.; Lim, C.M.; Kagathi, M. Automated Identification of Diabetic Retinopathy Stages Using Digital Fundus Images. J. Med. Syst. 2007, 32, 107–115.

- Brunye, T.; Carney, P.A.; Allison, K.H.; Shapiro, L.G.; Weaver, D.L.; Elmore, J.G. Eye Movements as an Index of Pathologist Visual Expertise: A Pilot Study. PLoS ONE 2014, 9, e103447.

- Russo, C.; Liu, S.; Di Ieva, A. Spherical coordinates transformation pre-processing in Deep Convolution Neural Networks for brain tumor segmentation in MRI. arXiv 2020, arXiv:2008.07090.

- Jose, L.; Liu, S.; Russo, C.; Nadort, A.; Di Ieva, A. Generative adversarial networks in digital pathology and histopathological image processing: A review. J. Pathol. Inform. 2021, 12, 43.

- Solimando, A.G.; Susca, N.; Borrelli, P.; Prete, M.; Lauletta, G.; Pappagallo, F.; Buono, R.; Inglese, G.; Forina, B.M.; Bochicchio, D.; et al. Short-Term Variations in Neutrophil-to-Lymphocyte and Urea-to-Creatinine Ratios Anticipate Intensive Care Unit Admission of COVID-19 Patients in the Emergency Department. Front. Med. 2021, 7, 625176.

- Ye, Q.; Xia, J.; Yang, G. Explainable AI for COVID-19 CT Classifiers: An Initial Comparison Study. In Proceedings of the 2021 IEEE 34th International Symposium on Computer-Based Medical Systems (CBMS), Aveiro, Portugal, 7–9 June 2021; pp. 521–526.

- Voulodimos, A.; Protopapadakis, E.; Katsamenis, I.; Doulamis, A.; Doulamis, N. A Few-Shot U-Net Deep Learning Model for COVID-19 Infected Area Segmentation in CT Images. Sensors 2021, 21, 2215.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).