1. Introduction

As a prominent component of modern agriculture, the pear industry is highly sensitive to product quality and overall profits [1]. Defects are one of the crucial criteria for grading pears; black spot [2], for example, is one of the more pervasive pear defects. If the defect appears and is not treated in time, irreversible fruit cracking and fruit drop follow, resulting in significant economic losses. At present, pear defect detection still relies on manual inspection, which involves long working hours, high cost, and low efficiency. For pear black spot in particular, traditional detection methods are time-consuming and inefficient. With the rapid development of computer vision, many artificial neural network architectures have been proposed and applied in various fields with significant advantages. As convolutional neural networks have flourished in computer vision, various architectures based on them have been applied to pear defect detection with promising results.

Convolutional neural networks can effectively overcome the drawbacks mentioned above. LeCun et al. proposed LeNet in 1998 [3], and in 2012 Krizhevsky et al. won the ImageNet image recognition challenge with AlexNet, sharply reducing the top-5 error [4]. Subsequent research led to a boom in convolutional neural networks, which were rapidly adopted across a wide range of computer vision applications and achieved breakthroughs in agricultural production. For instance, Lichao Zhang et al. [5] improved the LeNet-5 network model and used it for crop variety identification, reaching a detection accuracy of 93.7%, a considerable improvement in this domain. Additionally, Zhaoyong Zhou et al. [6] detected crop lesions with a deep belief network (DBN) and showed that this approach outperformed traditional algorithms.

In recent years, driven by Kaggle and ImageNet visual recognition competitions, several high-performing convolutional neural network architectures have emerged, such as the VGG series [7], ResNet series [8], GoogLeNet series [9], and DenseNet series [10]. These architectures have achieved top results on the ImageNet benchmark and have shown strong robustness and generalization in many recognition and localization tasks. To date, pear defect detection still depends mainly on traditional algorithms such as the support vector machine (SVM) [11], and the use of convolutional neural networks in agriculture remains relatively underexplored [12,13,14].

In this study, we used accuracy as the primary evaluation metric and compared ResNet50 with mainstream CNNs, including ImageNet Challenge winners, as well as with traditional algorithms. Experiments indicated that with image enhancement, data augmentation, warm-up, and other training techniques, ResNet50 reached an accuracy of 97.35%.
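
The text names warm-up without giving its schedule. The sketch below shows one common form, a linear learning-rate warm-up. The cosine decay after warm-up, the helper name `warmup_cosine_lr`, and its parameters are illustrative assumptions. They are not the schedule reported in this study.

```python
import math


def warmup_cosine_lr(step: int, base_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Learning rate for a given step (hypothetical helper).

    Linear warm-up from base_lr / warmup_steps to base_lr over the first
    warmup_steps steps, then (an assumption here) cosine decay to zero
    at total_steps.
    """
    if step < warmup_steps:
        # Warm-up phase: ramp the learning rate up linearly.
        return base_lr * (step + 1) / warmup_steps
    # Decay phase: fraction of the post-warm-up schedule completed, clamped to [0, 1].
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))
```

For example, with `base_lr=0.1` and `warmup_steps=5`, step 0 uses 0.02 and step 4 reaches 0.1. Starting with a small learning rate keeps the early updates of a freshly initialized or newly fine-tuned network from being too large.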

Because convolutional neural networks require large amounts of training data, and images of defective pears are usually difficult to obtain in practice, a deep convolutional generative adversarial network (DCGAN) was trained on the limited set of real defective images. It automatically generated additional defective images as training material for the CNNs. This mitigated the imbalance between positive and negative samples and achieved good results.
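
For reference, a DCGAN keeps the adversarial objective of Goodfellow et al. [21]. Under this objective, a discriminator $D$ learns to separate real images $\mathbf{x}$ from generated images $G(\mathbf{z})$, while the generator $G$ learns to fool it. The notation below follows that paper rather than this section:

$$
\min_{G}\max_{D} V(D,G)=\mathbb{E}_{\mathbf{x}\sim p_{\text{data}}(\mathbf{x})}\big[\log D(\mathbf{x})\big]+\mathbb{E}_{\mathbf{z}\sim p_{\mathbf{z}}(\mathbf{z})}\big[\log\big(1-D(G(\mathbf{z}))\big)\big]
$$

Radford et al. [20] keep this objective and constrain both networks to be convolutional. They use strided and fractionally strided convolutions instead of pooling, apply batch normalization, and remove fully connected hidden layers.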

Author Contributions

Conceptualization, Y.Z. and S.W.; methodology, P.S.; validation, Y.Z., S.W. and P.S.; writing—original draft preparation, Y.Z.; writing—review and editing, Y.Z.; visualization, Y.Z.; supervision, Y.W.; project administration, Y.Z.; funding acquisition, Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Beijing Municipal Natural Science Foundation Youth Project (5214026) and the 2115 Talent Development Program of China Agricultural University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We are grateful to the ECC of CIEE at China Agricultural University for their strong support during our thesis writing.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| DCGAN | Deep convolutional generative adversarial network |
| CNN | Convolutional neural network |
| SVM | Support vector machine |
| RF | Random forest |
| KNN | K-nearest neighbor |
| ML | Machine learning |
| AUC | Area under curve |
| MCC | Matthews correlation coefficient |

References

1. Zhang, S.; Xie, Z. Current status, trends, main problems and the suggestions on development of pear industry in China. In Journal of Fruit Science; Magazines Publishing House: New York, NY, USA, 2019; Volume 36, pp. 1067–1072.

2. Tanaka, S.H. Studies on black spot disease of the Japanese Pear (Pirus serotina Rehd). In Memoirs of the College of Agriculture; Number 28; Kyoto University: Kyoto, Japan, 1933.

3. Jackel, L.; LeCun, Y.; Stenard, C.; Strom, B.; Sharman, D.; Zuckert, D. Optical character recognition for automatic teller machines. In Industrial Applications of Neural Networks; World Scientific: Singapore, 1998; pp. 375–378.

4. Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

5. El-Sawy, A.; El-Bakry, H.; Loey, M. CNN for Handwritten Arabic Digits Recognition Based on LeNet-5; Springer International Publishing: Basel, Switzerland, 2016.

6. Zhaoyong, Z.; Dongjian, H.; Haihui, Z.; Yu, L.; Dong, S.; Ketao, C. Non-Destructive Detection of Moldy Core in Apple Fruit Based on Deep Belief Network. Food Sci. 2017, 38, 304–310.

7. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556v6.

8. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

9. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

10. Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

11. Hearst, M.; Dumais, S.; Osman, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Appl. 1998, 13, 18–28.

12. Tian, H.; Wang, T.; Liu, Y.; Qiao, X.; Li, Y. Computer vision technology in agricultural automation: A review. Inf. Process. Agric. 2020, 7, 1–19.

13. Gomes, J.F.S.; Leta, F.R. Applications of computer vision techniques in the agriculture and food industry: A review. Eur. Food Res. Technol. 2012, 235, 989–1000.

14. Patrício, D.I.; Rieder, R. Computer vision and artificial intelligence in precision agriculture for grain crops: A systematic review. Comput. Electron. Agric. 2018, 153, 69–81.

15. Chen, X.; Guo, C.; Zhang, C.; Liu, Z.; Jiang, H.; Lou, B.; He, Y. Visual detection study on early bruises of Korla pear based on hyperspectral imaging technology. Guang Pu Xue Yu Guang Pu Fen Xi = Guang Pu 2017, 37, 150–155.

16. Jinhe, Z.; Futang, P. A Method of Selective Image Graying. Comput. Eng. 2006, 20, 198–200.

17. Chen, S.; Haralick, R.M. Recursive erosion, dilation, opening, and closing transforms. IEEE Trans. Image Process. 1995, 4, 335–345.

18. Gil, J.Y.; Kimmel, R. Efficient dilation, erosion, opening, and closing algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 1606–1617.

19. Wand, M.P.; Schucany, W.R. Gaussian-based kernels. In Canadian Journal of Statistics; Wiley Online Library: Hoboken, NJ, USA, 1990; Volume 18, pp. 197–204.

20. Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434.

21. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Adv. Neural Inf. Process. Syst. 2014, 3, 2672–2680.

22. Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

23. Pateria, A.; Vyas, V.; Pratyush, M. Enhanced Image Capturing Using CNN, 1990. Int. J. Eng. Adv. Technol. 2019, 8, 320–324.

24. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

25. Blum, A.; Kalai, A.; Langford, J. Beating the hold-out: Bounds for k-fold and progressive cross-validation. In Proceedings of the Twelfth Annual Conference on Computational Learning Theory, Santa Cruz, CA, USA, 7–9 July 1999; pp. 203–208.

26. Bottou, L. Stochastic Gradient Descent Tricks. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2012.

27. Cutkosky, A.; Mehta, H. Momentum improves normalized SGD. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–18 July 2020; pp. 2260–2268.

28. Abdullahi, H.S.; Sheriff, R.; Mahieddine, F. Convolution neural network in precision agriculture for plant image recognition and classification. In Proceedings of the 2017 Seventh International Conference on Innovative Computing Technology (INTECH), Luton, UK, 16–18 August 2017; IEEE: Piscataway, NJ, USA, 2017; Volume 10.

29. Jurman, G.; Riccadonna, S.; Furlanello, C. A Comparison of MCC and CEN Error Measures in Multi-Class Prediction; Public Library of Science: San Francisco, CA, USA, 2012.

30. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

31. Peterson, L.E. K-nearest neighbor. Scholarpedia 2009, 4, 1883.

32. Cristea, P.D. Application of neural networks in image processing and visualization. In GeoSpatial Visual Analytics; Springer: Berlin/Heidelberg, Germany, 2009; pp. 59–71.

33. Yun, S.; Han, D.; Oh, S.J.; Chun, S.; Choe, J.; Yoo, Y. CutMix: Regularization strategy to train strong classifiers with localizable features. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 6023–6032.

34. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

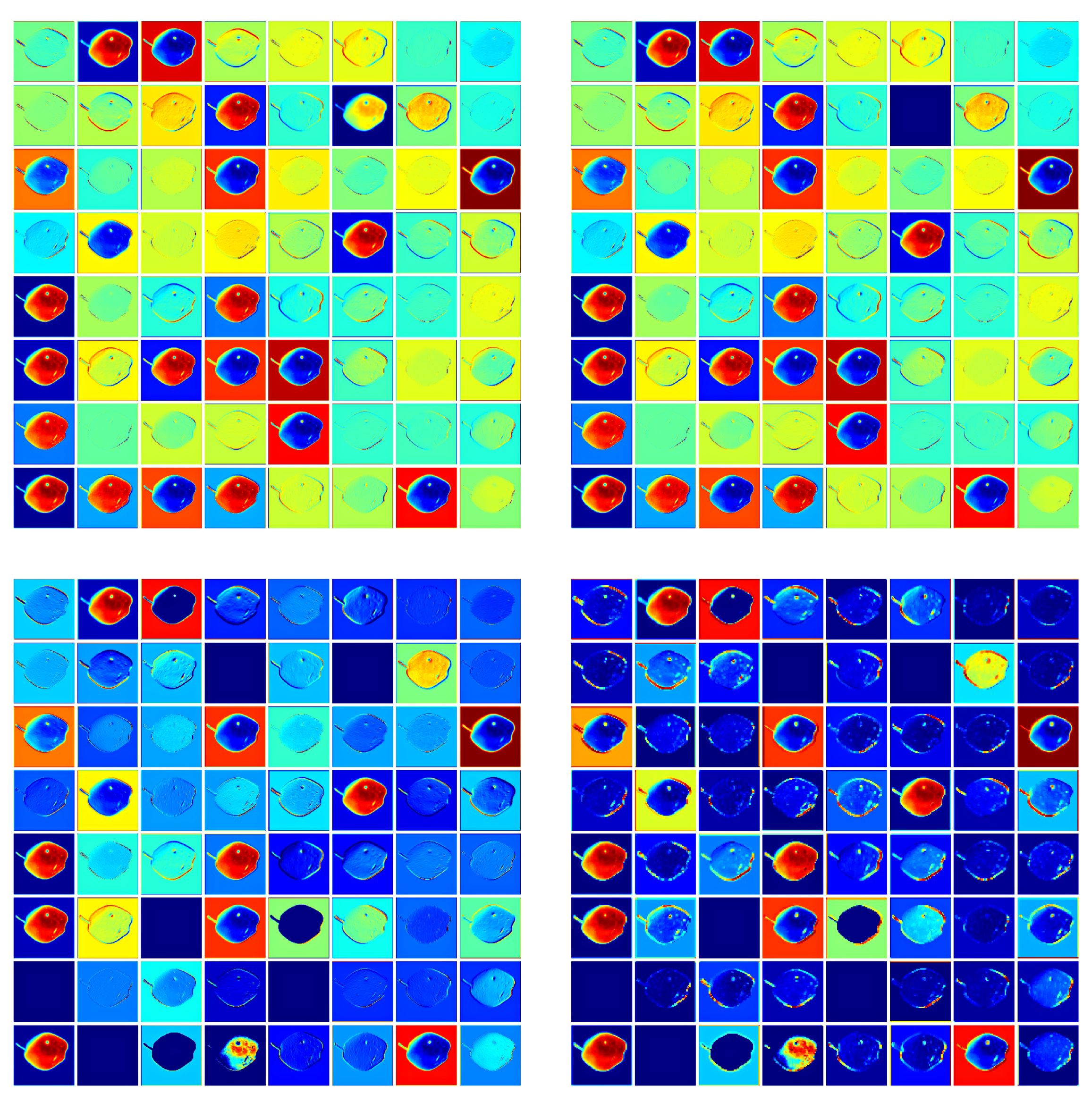

Figure 1. All augmented images generated from a single image.

Figure 2. Comparison of an image before and after grayscale processing.
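
Figure 2 does not state the grayscale formula, and the method cited as [16] (selective image graying) may differ from a plain weighted average. As a minimal point of comparison, the sketch below applies the common ITU-R BT.601 luma weights (0.299, 0.587, 0.114). Both the weights and the helper name `to_gray` are assumptions.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each in 0..255


def to_gray(image: List[List[Pixel]],
            weights: Sequence[float] = (0.299, 0.587, 0.114)) -> List[List[int]]:
    """Weighted-average grayscale conversion (hypothetical helper; BT.601 weights assumed)."""
    wr, wg, wb = weights
    # Each output pixel is a rounded weighted sum of its R, G, B channels.
    return [[round(wr * r + wg * g + wb * b) for (r, g, b) in row] for row in image]


if __name__ == "__main__":
    print(to_gray([[(255, 0, 0), (0, 255, 0), (0, 0, 255)]]))  # [[76, 150, 29]]
```

The green channel gets the largest weight because human brightness perception is most sensitive to green.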

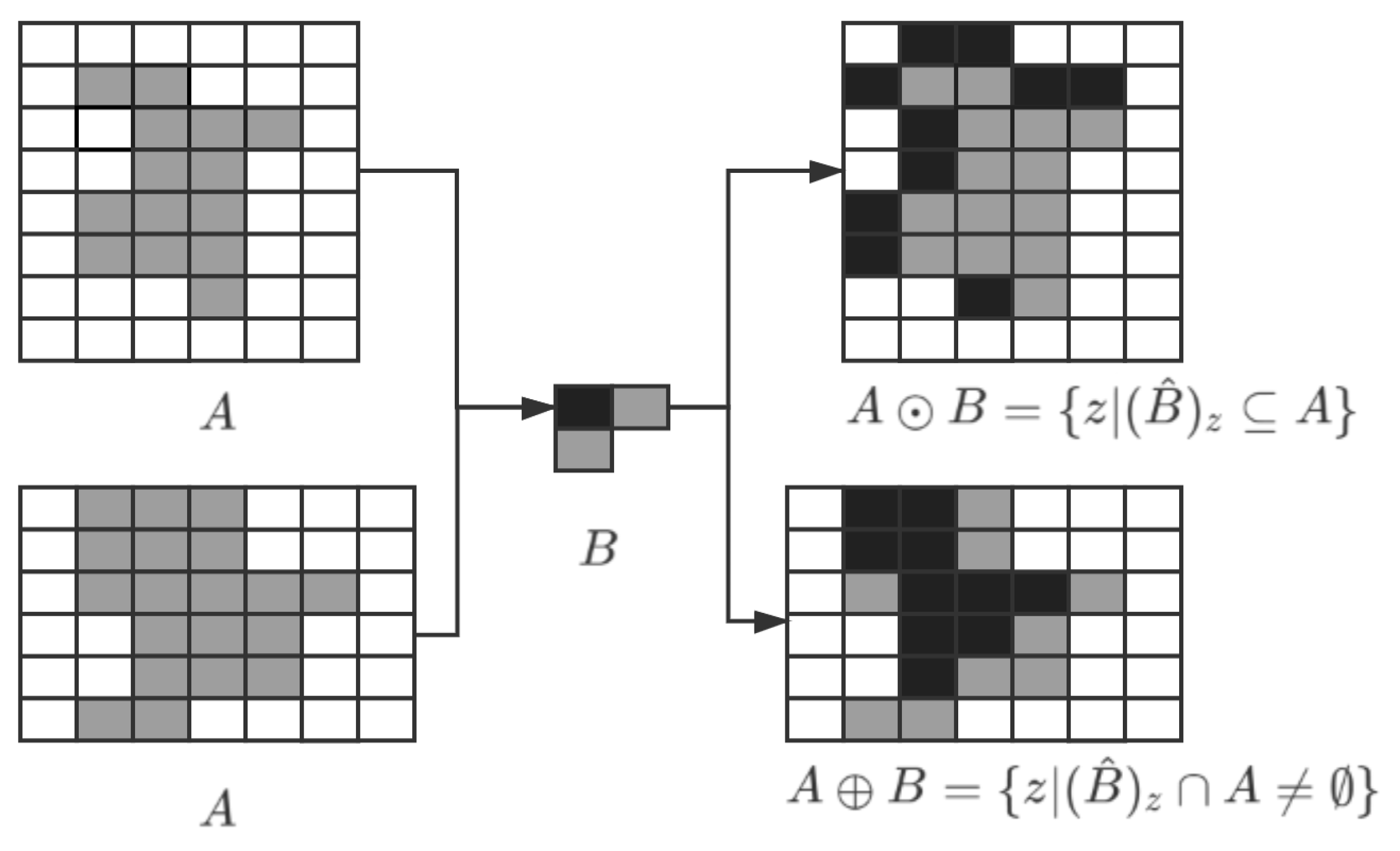

Figure 3. Dilation and erosion processing.

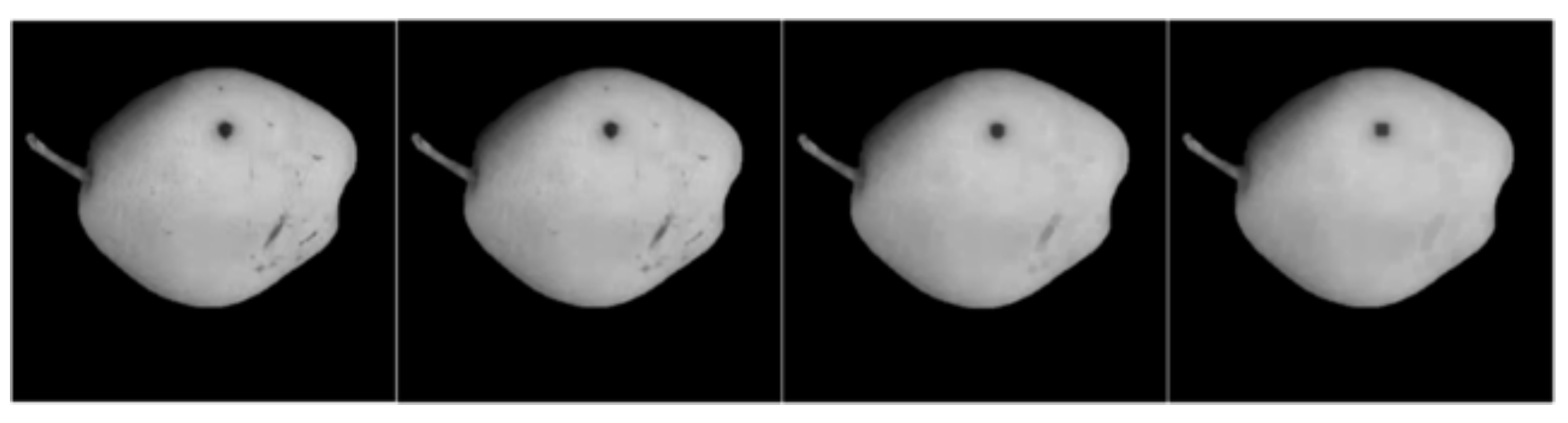

Figure 4. Comparison of a defective pear image before and after the opening operation (kernel coefficients of 1, 3, 6, and 9, respectively).
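
Figures 3 and 4 show erosion, dilation, and their combination, the opening operation (erosion followed by dilation). Opening removes foreground specks smaller than the structuring element while keeping larger regions. Below is a minimal pure-Python sketch on a binary image with a k × k square element. Reading the "kernel coefficients" 1, 3, 6, and 9 as the side length k, ignoring out-of-image pixels at the border, and the helper names are all assumptions. In practice, a library routine such as OpenCV's `cv2.morphologyEx` with `cv2.MORPH_OPEN` would normally be used instead.

```python
from typing import List

Image = List[List[int]]  # binary image: 1 = foreground, 0 = background


def _offsets(k: int) -> range:
    """Offsets covered by a k-wide square structuring element around its anchor."""
    return range(-(k // 2), k - k // 2)


def erode(img: Image, k: int) -> Image:
    """Keep a pixel only if every in-bounds pixel under the element is foreground."""
    h, w = len(img), len(img[0])
    offs = _offsets(k)
    return [[int(all(img[y + dy][x + dx]
                     for dy in offs for dx in offs
                     if 0 <= y + dy < h and 0 <= x + dx < w))
             for x in range(w)] for y in range(h)]


def dilate(img: Image, k: int) -> Image:
    """Set a pixel if any in-bounds pixel under the reflected element is foreground."""
    h, w = len(img), len(img[0])
    offs = _offsets(k)
    return [[int(any(img[y - dy][x - dx]
                     for dy in offs for dx in offs
                     if 0 <= y - dy < h and 0 <= x - dx < w))
             for x in range(w)] for y in range(h)]


def opening(img: Image, k: int) -> Image:
    """Morphological opening: erosion followed by dilation with the same element."""
    return dilate(erode(img, k), k)


if __name__ == "__main__":
    # A 3 x 3 blob plus one isolated noise pixel at (5, 5).
    img = [[0] * 7 for _ in range(7)]
    for y in range(1, 4):
        for x in range(1, 4):
            img[y][x] = 1
    img[5][5] = 1
    for row in opening(img, 3):
        print(row)  # the blob survives, the isolated pixel is removed
```

With k = 1 the element covers only the pixel itself, so opening leaves the image unchanged. Larger k removes progressively larger specks, which matches the trend across the panels of Figure 4.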

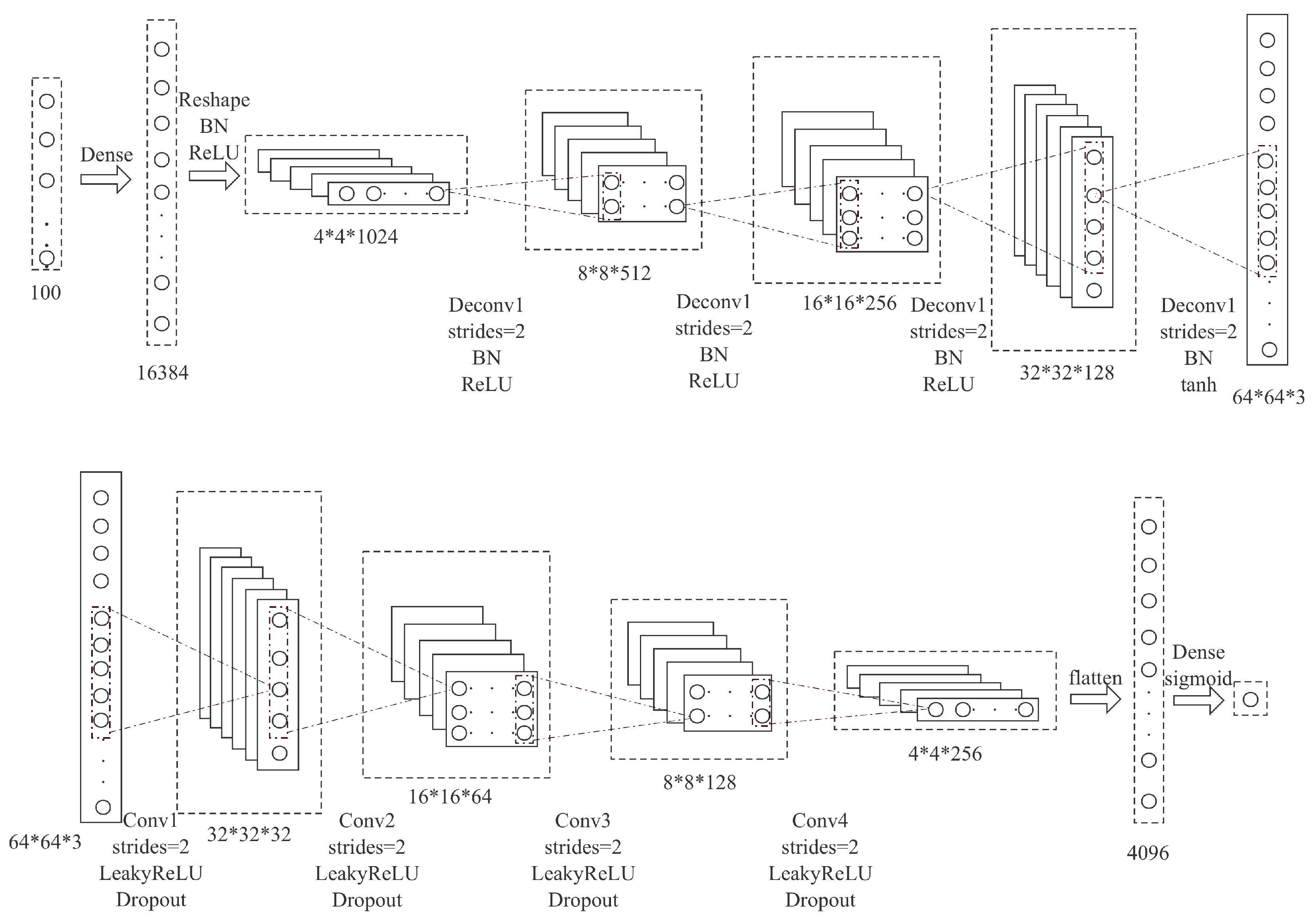

Figure 5. A 100-dimensional uniformly distributed vector z is projected and reshaped.
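
Figure 5 appears to follow the DCGAN generator of Radford et al. [20]. In that design, a 100-dimensional uniform latent vector z is projected and reshaped into a small, deep feature map, and stride-2 transposed ("fractionally strided") convolutions then upsample it into an image. The sketch below traces the tensor shapes under stated assumptions:

- The 4 × 4 × 1024 projection, the channel widths, and the 64 × 64 × 3 output follow the figure in Radford et al.'s paper.
- The sampling range [-1, 1] and kernel 4 / stride 2 / padding 1 are illustrative choices that double the spatial size at each layer.
- The helper names are hypothetical.

None of these details are confirmed for this study's generator.

```python
import random
from typing import List, Optional, Sequence, Tuple


def sample_z(z_dim: int = 100, rng: Optional[random.Random] = None) -> List[float]:
    """Draw a latent vector z uniformly from [-1, 1] (range assumed)."""
    if rng is None:
        rng = random.Random()
    return [rng.uniform(-1.0, 1.0) for _ in range(z_dim)]


def conv_transpose_out(size: int, kernel: int = 4, stride: int = 2, padding: int = 1) -> int:
    """Output height/width of a transposed convolution (no dilation or output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def generator_shapes(z_dim: int = 100, base: int = 4,
                     channels: Sequence[int] = (1024, 512, 256, 128, 3)) -> List[Tuple[int, int, int]]:
    """Trace (channels, height, width) through a DCGAN-style generator."""
    shapes = [(z_dim, 1, 1)]                  # latent vector z
    size = base
    shapes.append((channels[0], size, size))  # "project and reshape" step
    for c in channels[1:]:
        size = conv_transpose_out(size)       # each stride-2 layer doubles H and W
        shapes.append((c, size, size))
    return shapes


if __name__ == "__main__":
    print(len(sample_z()))  # 100
    print(generator_shapes())
    # [(100, 1, 1), (1024, 4, 4), (512, 8, 8), (256, 16, 16), (128, 32, 32), (3, 64, 64)]
```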

Figure 6. Flow chart of generative adversarial networks.

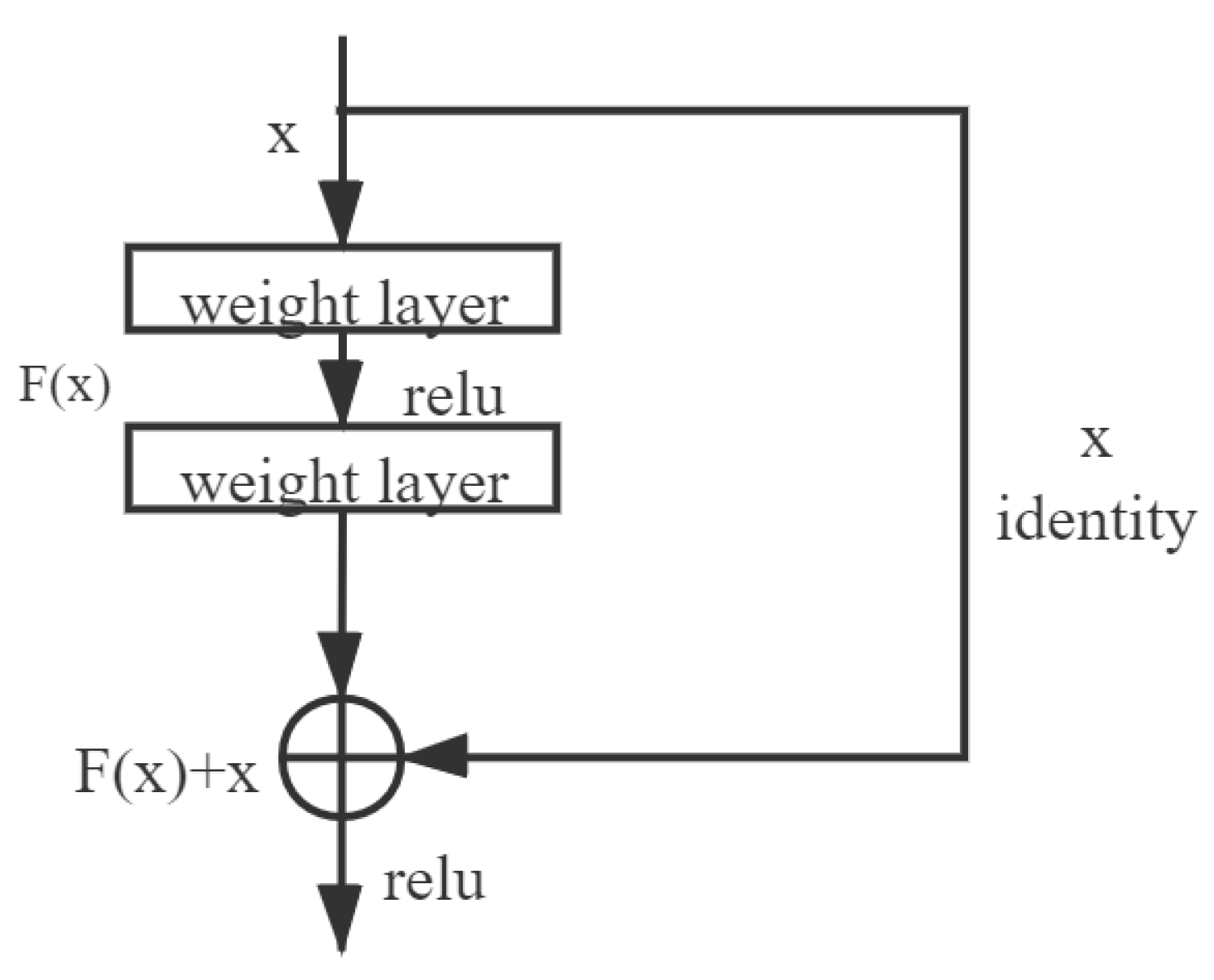

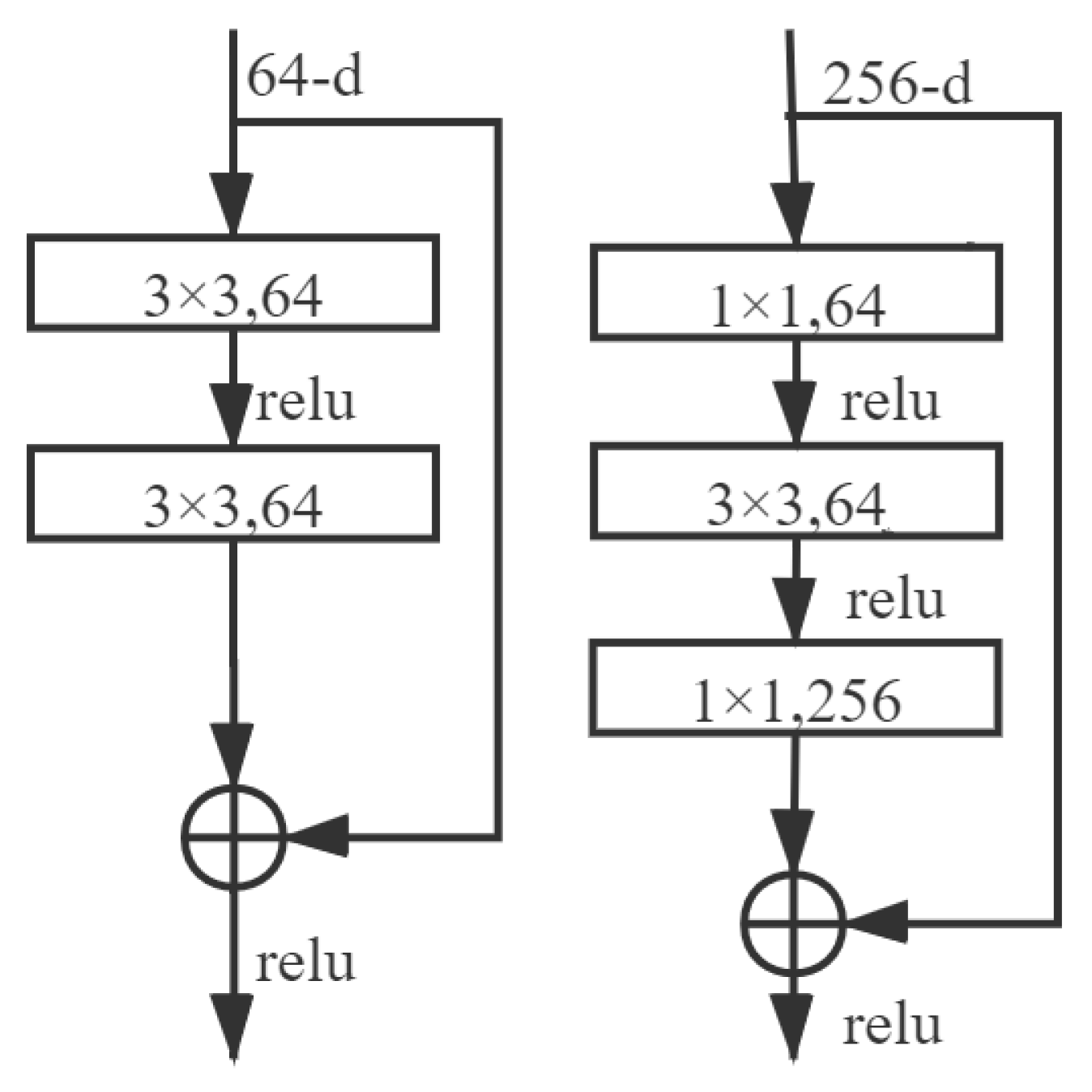

Figure 7. Schematic diagram of the residual structure.
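
The residual structure in Figure 7 is the building block of He et al. [8]. The stacked layers learn a residual mapping $\mathcal{F}$, which is added to an identity shortcut, so the block outputs

$$
\mathbf{y}=\mathcal{F}\left(\mathbf{x},\{W_i\}\right)+\mathbf{x},
$$

where $\mathbf{x}$ and $\mathbf{y}$ are the input and output of the block and $\{W_i\}$ are the weights of its layers. When the dimensions of $\mathbf{x}$ and $\mathcal{F}$ differ, He et al. apply a linear projection $W_s$ on the shortcut, giving $\mathbf{y}=\mathcal{F}(\mathbf{x},\{W_i\})+W_s\mathbf{x}$. If the layers drive $\mathcal{F}$ toward zero, the block reduces to an identity mapping. He et al. argue that learning this residual is easier to optimize than learning the full mapping directly.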

Figure 8. Two building blocks of the ResNet series: BasicBlock (left) and Bottleneck (right).

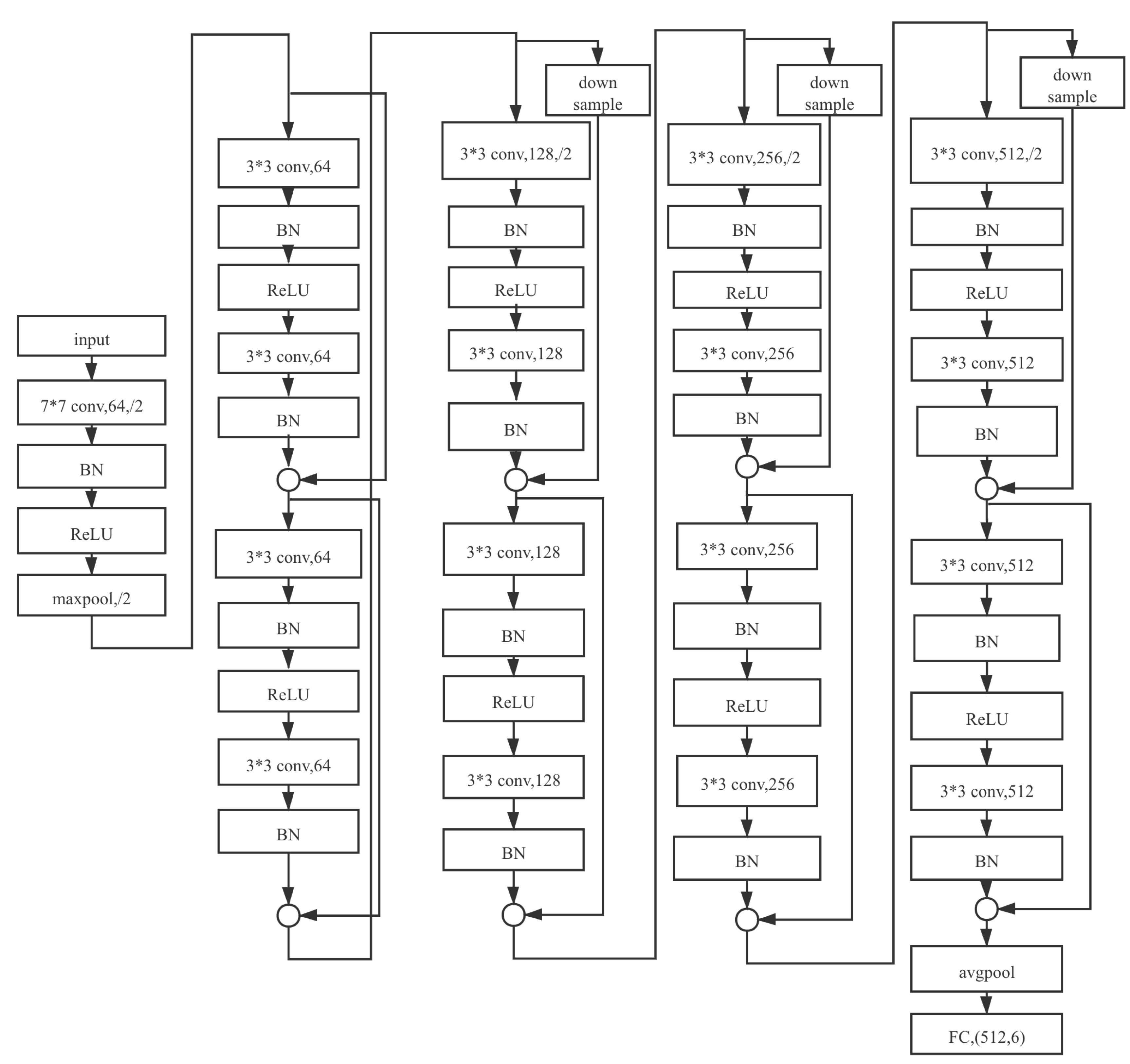

Figure 9. Schematic diagram of ResNet18.

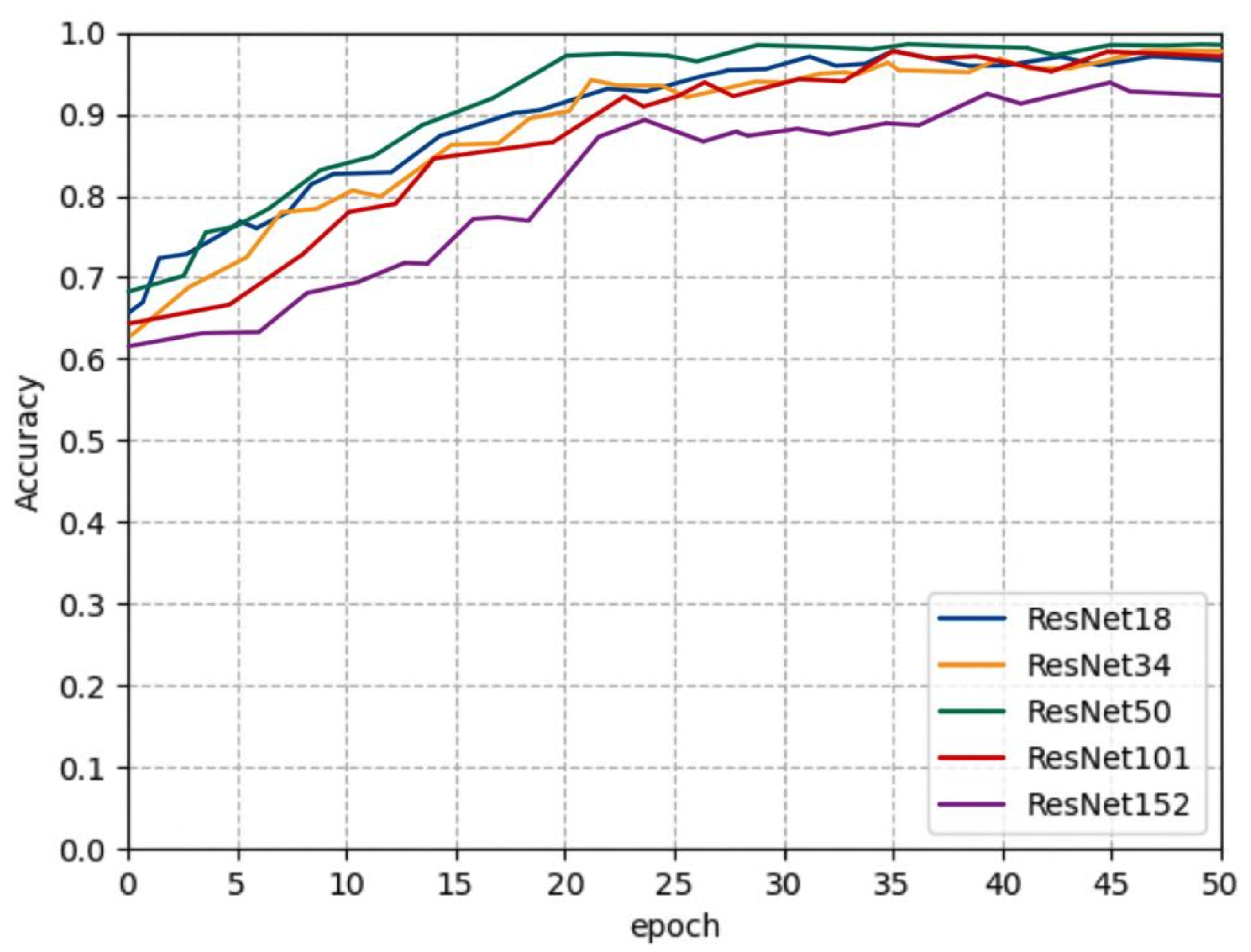

Figure 10. Training process of the ResNet series models.

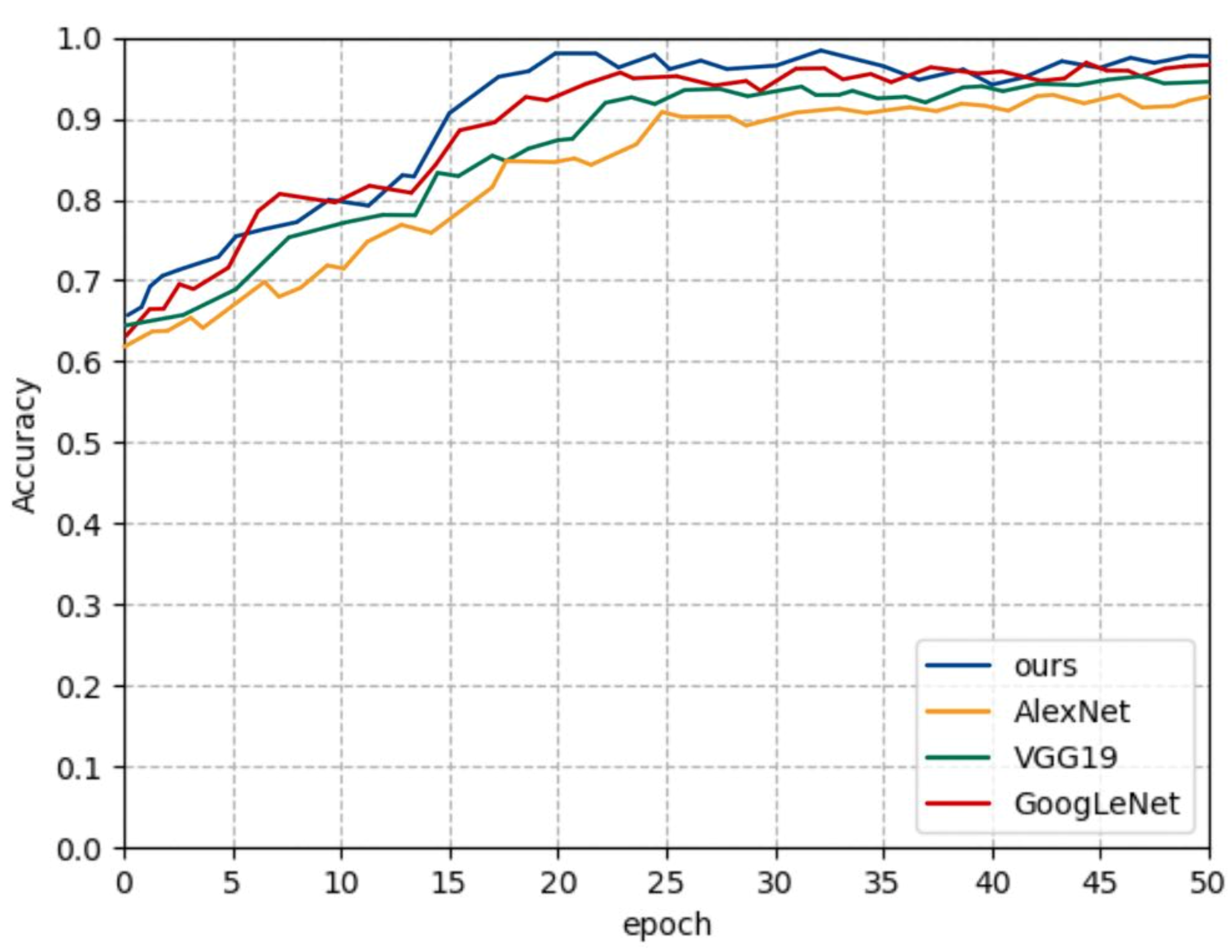

Figure 11. Training process of the CNN models.

Figure 12. Visualization of shallow feature maps.

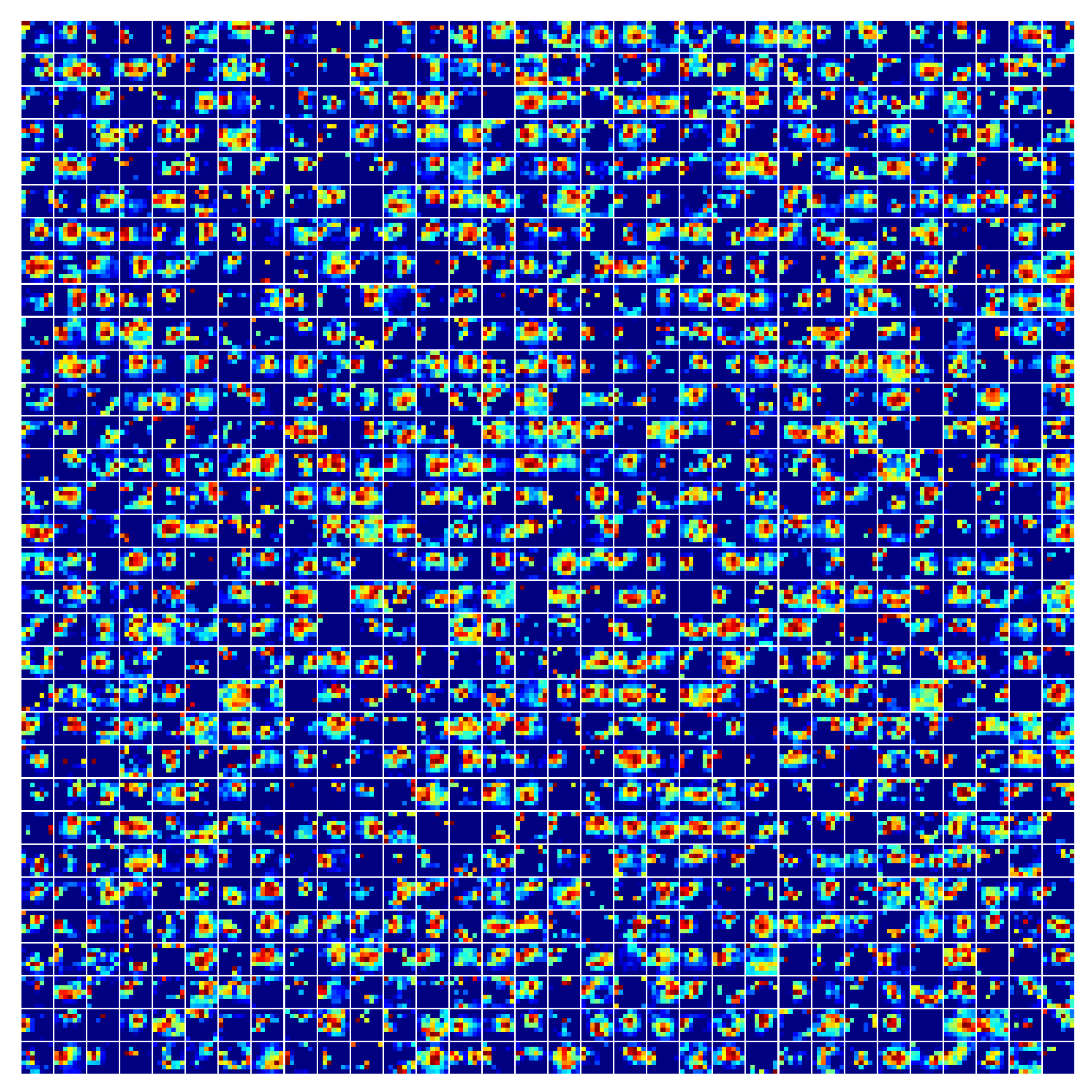

Figure 13. Visualization of deep feature maps.

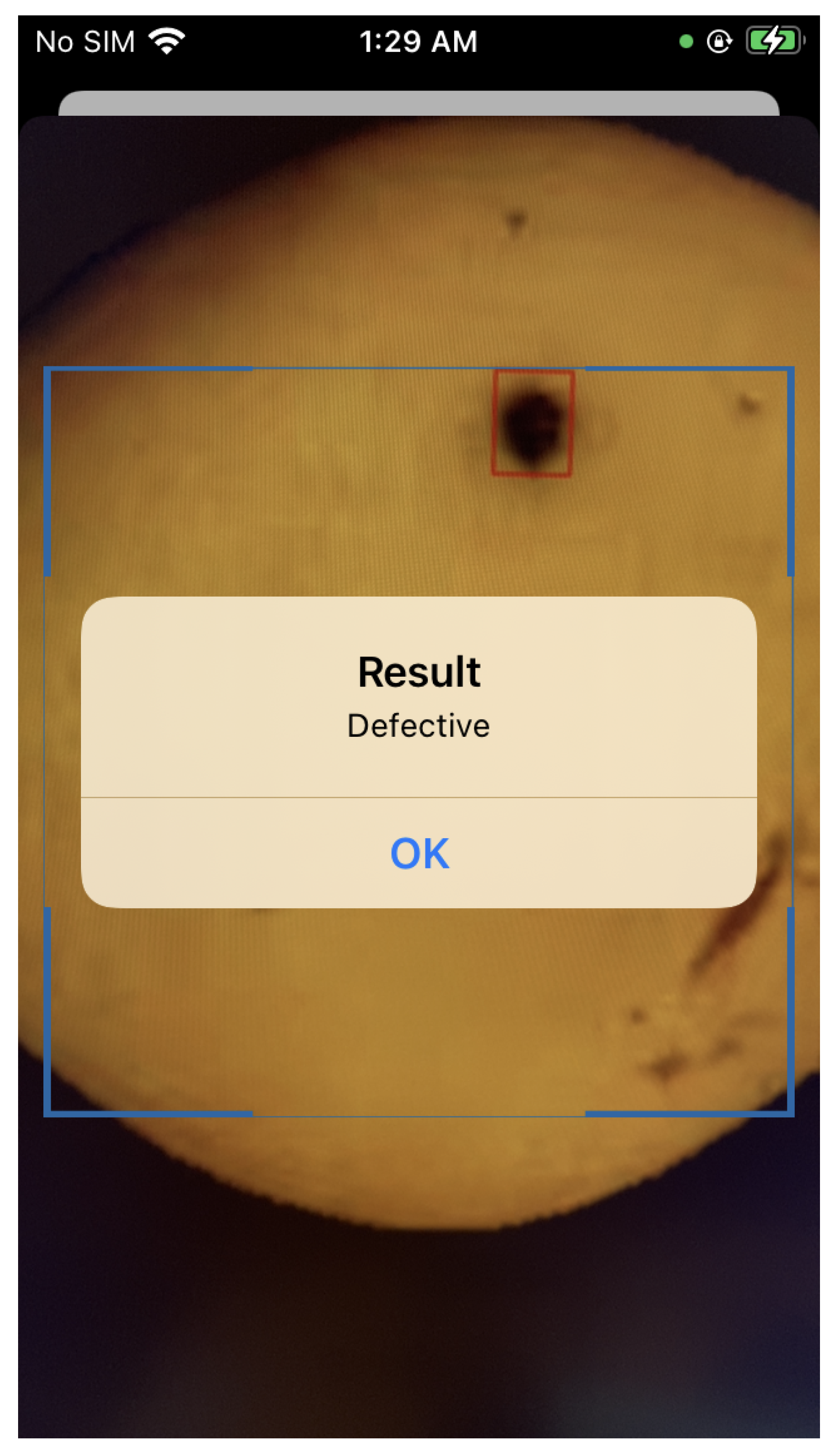

Figure 14. Flow chart of the intelligent pear defect detection system.

Figure 15. Screenshot on an iPhone SE.

Table 1. Distribution of the dataset.

| Subset | Normal Pears | Defective Pears |
|---|---|---|
| Original data | 1500 | 500 |
| After data augmentation | 13,500 | 6750 |
| Training set | 12,150 | 6075 |
| Validation set | 1350 | 675 |
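
The counts in Table 1 correspond to a 9:1 split of the augmented data within each class (13,500 → 12,150 + 1350 and 6750 → 6075 + 675). A minimal sketch of such a class-stratified split is shown below. The helper name, the random shuffling, and the fixed seed are assumptions, since the text does not describe how images were assigned to each subset.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def stratified_split(labels: Sequence[Hashable], train_frac: float = 0.9,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Return (train_indices, val_indices), splitting each class separately (hypothetical helper)."""
    by_class: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train: List[int] = []
    val: List[int] = []
    for label in sorted(by_class, key=str):  # deterministic class order
        idxs = by_class[label]
        rng.shuffle(idxs)                     # random assignment within the class
        n_train = round(len(idxs) * train_frac)
        train.extend(idxs[:n_train])
        val.extend(idxs[n_train:])
    return train, val


if __name__ == "__main__":
    labels = ["normal"] * 13500 + ["defective"] * 6750
    train, val = stratified_split(labels)

    def count(ids: List[int], cls: str) -> int:
        return sum(labels[i] == cls for i in ids)

    print(count(train, "normal"), count(val, "normal"))        # 12150 1350
    print(count(train, "defective"), count(val, "defective"))  # 6075 675
```

Splitting each class separately keeps the 2:1 normal-to-defective ratio identical in the training and validation sets.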

Table 2. Number of blocks in each layer of the ResNet series models.

| | ResNet18 | ResNet34 | ResNet50 | ResNet101 | ResNet152 |
|---|---|---|---|---|---|
| Blocks | 2, 2, 2, 2 | 3, 4, 6, 3 | 3, 4, 6, 3 | 3, 4, 23, 3 | 3, 8, 36, 3 |
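
The depth in each model's name can be recovered from Table 2. Each BasicBlock (Figure 8, left; used in ResNet18 and ResNet34) contains two weighted layers, and each Bottleneck (Figure 8, right; used in ResNet50, ResNet101, and ResNet152) contains three. The stem convolution and the final fully connected layer add two more. The short check below assumes this layer-counting convention from He et al. [8]; the helper name is hypothetical.

```python
from typing import Dict, Sequence

# Blocks per layer, copied from Table 2.
BLOCKS: Dict[str, Sequence[int]] = {
    "ResNet18": (2, 2, 2, 2),
    "ResNet34": (3, 4, 6, 3),
    "ResNet50": (3, 4, 6, 3),
    "ResNet101": (3, 4, 23, 3),
    "ResNet152": (3, 8, 36, 3),
}


def resnet_depth(blocks: Sequence[int], bottleneck: bool) -> int:
    """Weighted layers: 2 per BasicBlock or 3 per Bottleneck, plus stem conv and final FC."""
    layers_per_block = 3 if bottleneck else 2
    return layers_per_block * sum(blocks) + 2


if __name__ == "__main__":
    for name, blocks in BLOCKS.items():
        nominal = int(name[len("ResNet"):])
        print(name, resnet_depth(blocks, bottleneck=nominal >= 50))  # matches the name
```

For example, ResNet50 gives 3 × (3 + 4 + 6 + 3) + 2 = 50. It shares ResNet34's block layout but replaces each two-layer BasicBlock with a three-layer Bottleneck.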

Table 3. Performance comparison of the ResNet series models.

| Metric | ResNet18 | ResNet34 | ResNet50 | ResNet101 | ResNet152 |
|---|---|---|---|---|---|
| Accuracy | 96.03% | 96.81% | 97.35% | 96.17% | 92.96% |
| Precision | 0.961 | 0.974 | 0.973 | 0.953 | 0.917 |
| MCC | 0.854 | 0.859 | 0.863 | 0.861 | 0.819 |
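
For reference, the three metrics reported in Tables 3 to 5 can be computed from a binary confusion matrix as sketched below. Treating "defective" as the positive class is an assumption, as the text does not state which class precision refers to. The helper name is hypothetical.

```python
import math
from typing import Dict


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Accuracy, precision, and Matthews correlation coefficient (hypothetical helper)."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # MCC is conventionally set to 0 when any marginal count is zero.
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "mcc": mcc}


if __name__ == "__main__":
    print(binary_metrics(tp=45, fp=5, tn=45, fn=5))
    # {'accuracy': 0.9, 'precision': 0.9, 'mcc': 0.8}
```

Unlike accuracy, MCC uses all four cells of the confusion matrix. This makes it more informative when the classes are imbalanced, as they are in Table 1.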

Table 4. Comparison of our proposed model with other CNN models.

| Metric | Ours | AlexNet | VGG19 | GoogLeNet |
|---|---|---|---|---|
| Accuracy | 97.35% | 92.25% | 94.88% | 96.04% |
| Precision | 0.973 | 0.927 | 0.943 | 0.958 |
| MCC | 0.863 | 0.848 | 0.851 | 0.859 |

Table 5. Comparison of our model with traditional ML models.

| Metric | Ours | SVM | RF | KNN |
|---|---|---|---|---|
| Accuracy | 97.35% | 91.26% | 87.14% | 73.29% |
| Precision | 0.973 | 0.919 | 0.851 | 0.741 |
| MCC | 0.863 | 0.829 | 0.733 | 0.591 |

Table 6. Accuracy of the models when trained with DCGAN-generated data compared with other data-extension strategies.

| Model | DCGAN | CutMix | Mosaic | Without Data Extension |
|---|---|---|---|---|
| ResNet50 | 97.35% (ours) | 95.71% | 96.93% | 95.37% |
| SVM | 91.26% | 88.31% | 91.14% | 88.39% |
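
Table 6 compares DCGAN generation with CutMix [33] and Mosaic augmentation. To illustrate the CutMix baseline, the sketch below pastes a random rectangle from one image into another and mixes the one-hot labels in proportion to the pasted area. It follows the recipe described by Yun et al.: λ is drawn from Beta(α, α), and the box side is scaled by √(1 − λ). The list-of-lists image format and the helper name are assumptions, and this is not the code used in this study.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Image = List[List[float]]


def cutmix(img_a: Image, img_b: Image,
           label_a: Sequence[float], label_b: Sequence[float],
           alpha: float = 1.0,
           rng: Optional[random.Random] = None) -> Tuple[Image, List[float]]:
    """Paste a random box from img_b into img_a and mix labels by area (hypothetical helper)."""
    if rng is None:
        rng = random.Random()
    h, w = len(img_a), len(img_a[0])
    lam = rng.betavariate(alpha, alpha)          # target share of img_a
    cut_h = int(h * math.sqrt(1.0 - lam))
    cut_w = int(w * math.sqrt(1.0 - lam))
    cy, cx = rng.randrange(h), rng.randrange(w)  # box centre
    y1, y2 = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, h)
    x1, x2 = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, w)
    mixed = [row[:] for row in img_a]
    for y in range(y1, y2):
        for x in range(x1, x2):
            mixed[y][x] = img_b[y][x]
    # Recompute lambda from the clipped box so the labels match the pasted area.
    lam = 1.0 - (y2 - y1) * (x2 - x1) / (h * w)
    label = [lam * a + (1.0 - lam) * b for a, b in zip(label_a, label_b)]
    return mixed, label


if __name__ == "__main__":
    a = [[0.0] * 8 for _ in range(8)]  # e.g., a normal pear, label [1, 0]
    b = [[1.0] * 8 for _ in range(8)]  # e.g., a defective pear, label [0, 1]
    mixed, label = cutmix(a, b, [1.0, 0.0], [0.0, 1.0], rng=random.Random(0))
    print(label)
```

Unlike DCGAN, CutMix creates no new defect appearances. It recombines existing images, which may help explain why generated defective samples can add more information when real defective images are scarce.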

Table 7. Detection accuracy on two pear varieties not used in training.

| Metric | Shanxi Bergamot Pear | Dangshansu Pear |
|---|---|---|
| Accuracy | 97.11% | 96.73% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).