Tool for Measuring Productivity in Software Development Teams

Abstract

1. Introduction

2. Background

2.1. Productivity

- Stakeholders: different stakeholders can value a different set of characteristics and objectives;

- Context: the particularities of the project and the social and cultural characteristics of the context can change the perception of productivity. For example, if developers feel that helping others is an action valued by the team, they will feel that spending time helping others is productive;

- Level: individuals, teams, organizations, and communities have different perceptions of productivity. For example, from a developer’s point of view, refactoring a module of a system that is already working can be considered productive, while other team members may see the same work as unproductive;

- Period: a process change can slow down the development in the present but lead to improved team learning over time. Likewise, short-term speed improvements can lead to fatigue and less developer satisfaction over a long period.

2.2. Gamification

- C1 (Epic Meaning and Calling): seeks to give people the conviction that they are doing something greater than themselves or have been chosen to take action.

- C2 (Development and Accomplishment): represents the internal drive of human beings to progress, develop skills, achieve mastery, and overcome challenges.

- C3 (Empowerment of Creativity and Feedback): applies when users are engaged in a creative process in which new things or new combinations are constantly being discovered.

- C4 (Social Influence and Relatedness): contains the social elements that motivate people, such as orientation, social acceptance, social feedback, companionship, competition, and envy.

- C5 (Unpredictability and Curiosity): some groups of people may feel more engaged by participating in unpredictable processes that stimulate their curiosity.

- C6 (Loss and Avoidance): describes the motivation generated by the desire to avoid a negative event.

- C7 (Scarcity and Impatience): represents the motivation obtained by wanting something that you cannot easily have.

- C8 (Ownership and Possession): represents the motivation obtained by owning something.

2.3. Related Works

3. Study Settings

3.1. Theoretical Study

3.2. Planning

3.2.1. Identified Factors

3.2.2. The Gamification Project

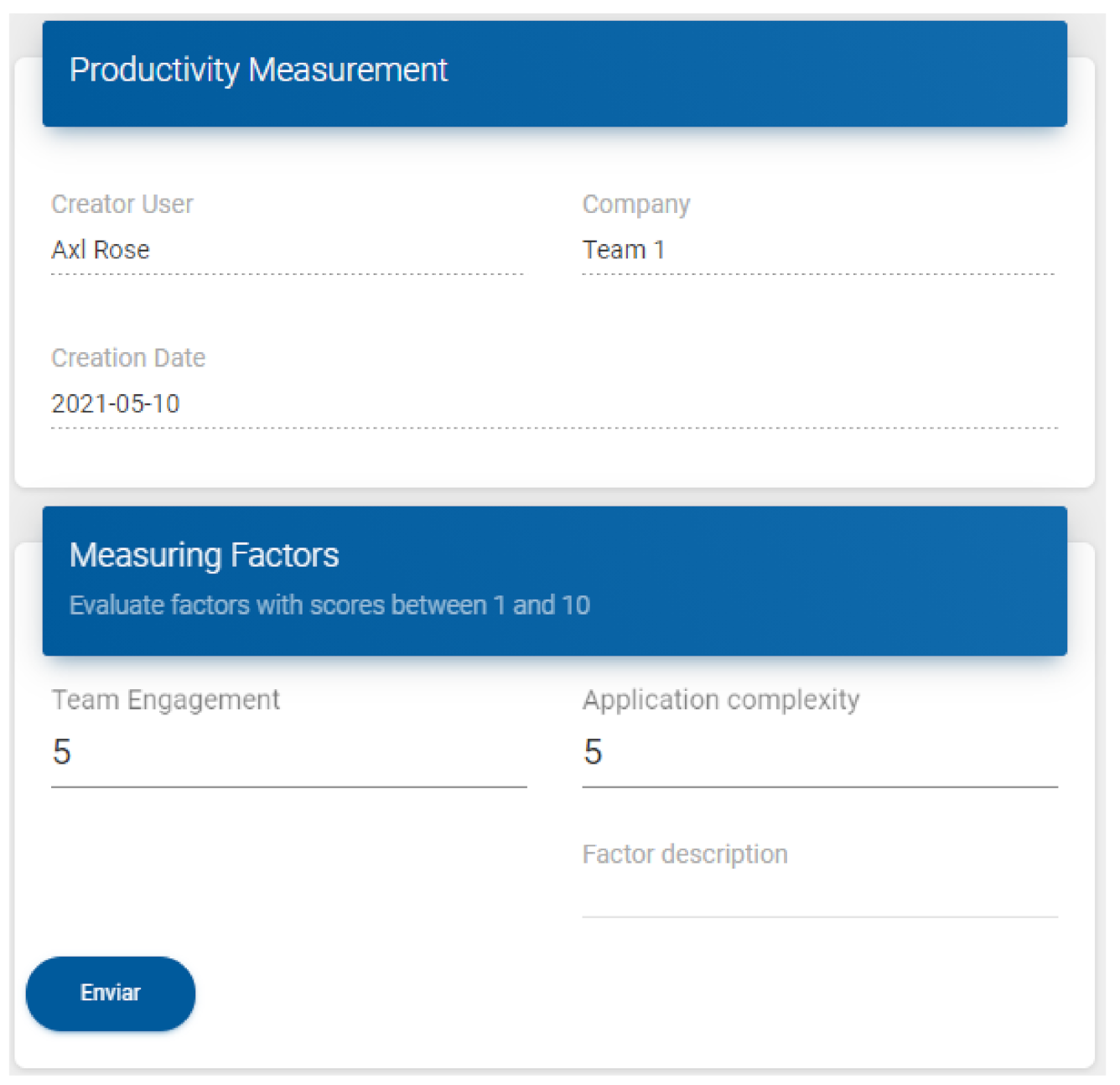

3.2.3. Functionalities of the Web System

3.3. Construction

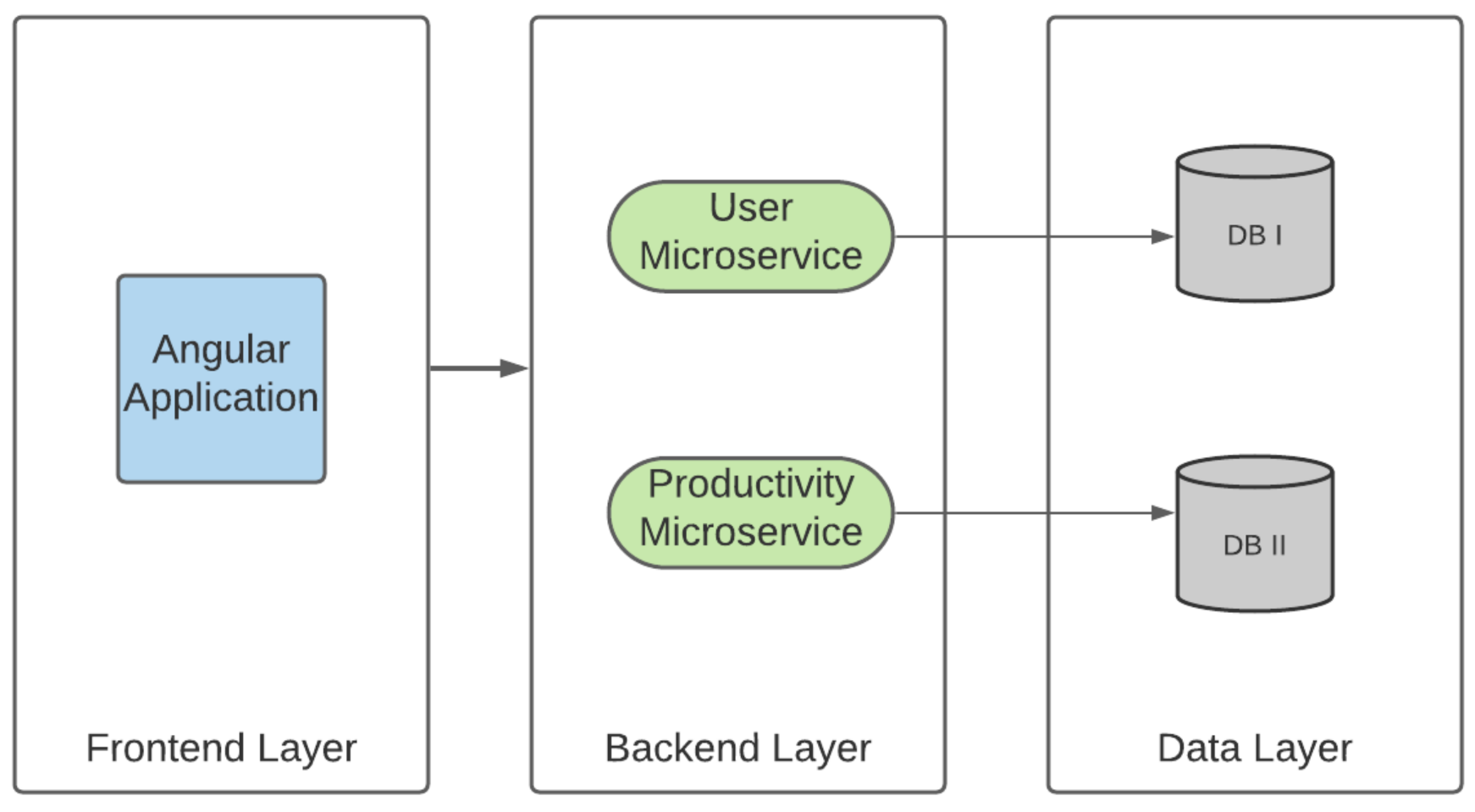

- Frontend Layer: the application interface, developed with Angular, a framework created by Google developers for building application interfaces with HTML, CSS, and JavaScript (https://angular.io/). The code for this layer is available on GitHub.

- Backend Layer: contains the two microservices built in this work. The “User Microservice” (code available on GitHub) is responsible for all operations related to user registration, while the “Productivity Microservice” (also available on GitHub) is responsible for operations related to the calculation of productivity. A microservices architecture is used as an alternative to a monolithic application because microservices are simpler to scale, are more flexible, and allow different contexts to be handled in different code units [51]. The microservices were written in Java with the Spring framework, which “makes programming in Java faster, easier and safer” and describes itself as the most popular Java framework in the world (https://spring.io/why-spring/).

- Data Layer: we used the microservices architecture in conjunction with the Database per Service pattern, which helps ensure that services are loosely coupled and that changes to one service’s database do not affect any other service. The DBMS (Database Management System) selected was PostgreSQL, which “is a powerful open source object-relational database system” (https://www.postgresql.org/).
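The Database per Service idea described in the list above can be illustrated with a minimal Java sketch. It is not the project's code: the class names, the `UserApi` interface, and the in-memory maps (standing in for each service's private PostgreSQL database) are all assumptions made for illustration, and an in-process interface stands in for what would be a network call between microservices.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

public class DatabasePerServiceSketch {

    /** Hypothetical public API of the user service; other services never touch its tables. */
    public interface UserApi {
        Optional<String> findUserName(long userId);
    }

    /** Stand-in for the User Microservice; the map plays the role of its private database. */
    public static final class UserService implements UserApi {
        private final Map<Long, String> userTable = new HashMap<>();

        public void register(long userId, String name) {
            userTable.put(userId, name);
        }

        @Override
        public Optional<String> findUserName(long userId) {
            return Optional.ofNullable(userTable.get(userId));
        }
    }

    /** Stand-in for the Productivity Microservice, with its own private store. */
    public static final class ProductivityService {
        private final Map<Long, Double> assessmentTable = new HashMap<>();
        private final UserApi users;

        public ProductivityService(UserApi users) {
            this.users = users;
        }

        /** Stores an assessment only if the user service knows the user. */
        public boolean record(long userId, double assessment) {
            if (!users.findUserName(userId).isPresent()) {
                return false;
            }
            assessmentTable.put(userId, assessment);
            return true;
        }

        /** Combines data from both services without sharing a database. */
        public String describe(long userId) {
            String name = users.findUserName(userId).orElse("unknown");
            Double value = assessmentTable.get(userId);
            return name + ": " + (value == null ? "no assessment" : value);
        }
    }

    public static void main(String[] args) {
        UserService users = new UserService();
        users.register(1L, "Ana");
        ProductivityService productivity = new ProductivityService(users);
        productivity.record(1L, 4.5);
        System.out.println(productivity.describe(1L)); // prints "Ana: 4.5"
    }
}
```

Because the productivity side keeps only user identifiers and asks the user service for everything else, the user store could change its schema without the productivity store being affected, which is the loose coupling the pattern aims for.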

3.4. Case Study

4. Results

- People: This group contains nineteen factors related to the characteristics of the people who participate in the software development team. The factors in this group include aspects related to the individual.

- Product: It encompasses the fifteen factors related to the characteristics of the software product itself. The factors in this group include business field, application complexity, and programming language.

- Organization: The twenty-eight factors related to the organization include work environment, knowledge management, team size, and maturity.

- Open Source Software Projects: This group of thirteen factors represents those related to free software projects. The factors of this group include investments in Information and Communication Technology (ICT), contractual relations, and team engagement.

4.1. The Application

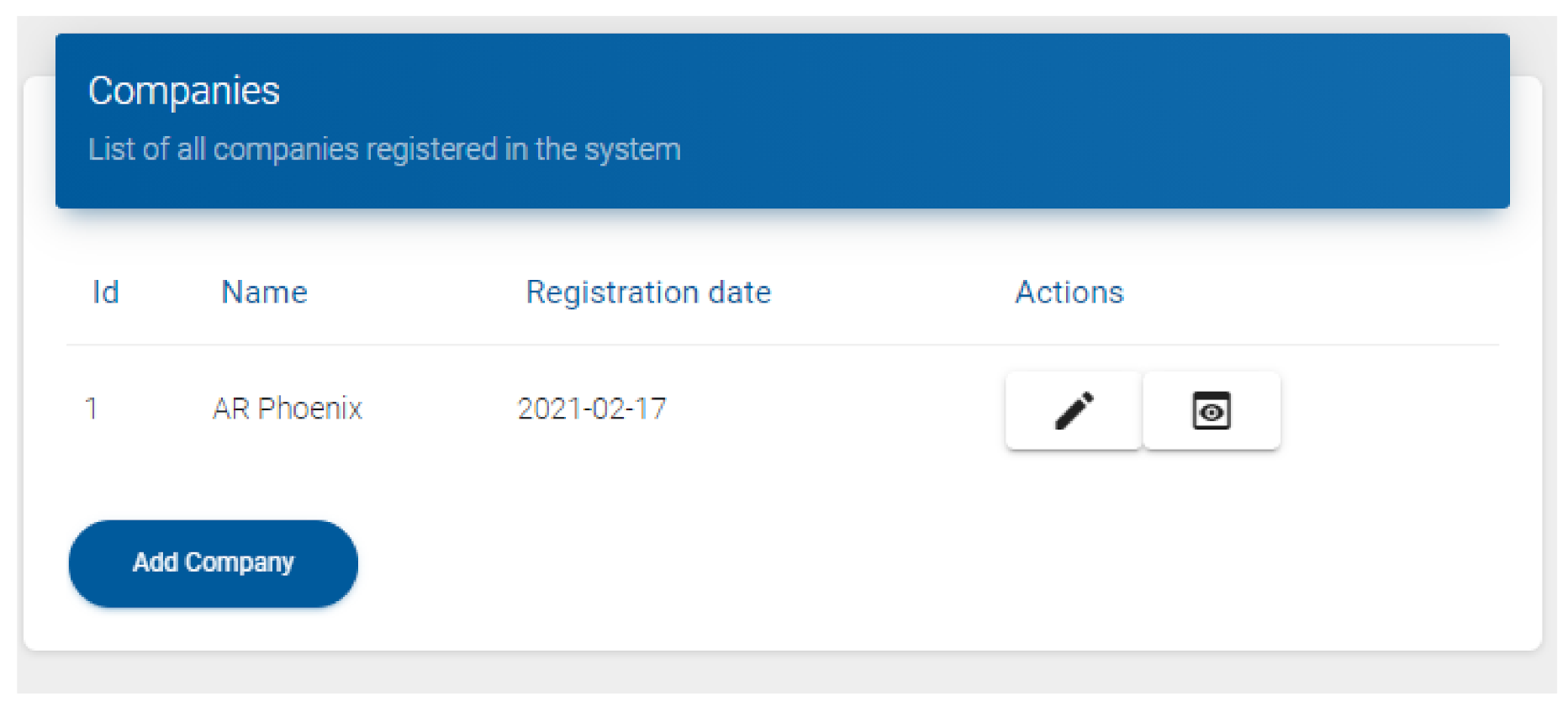

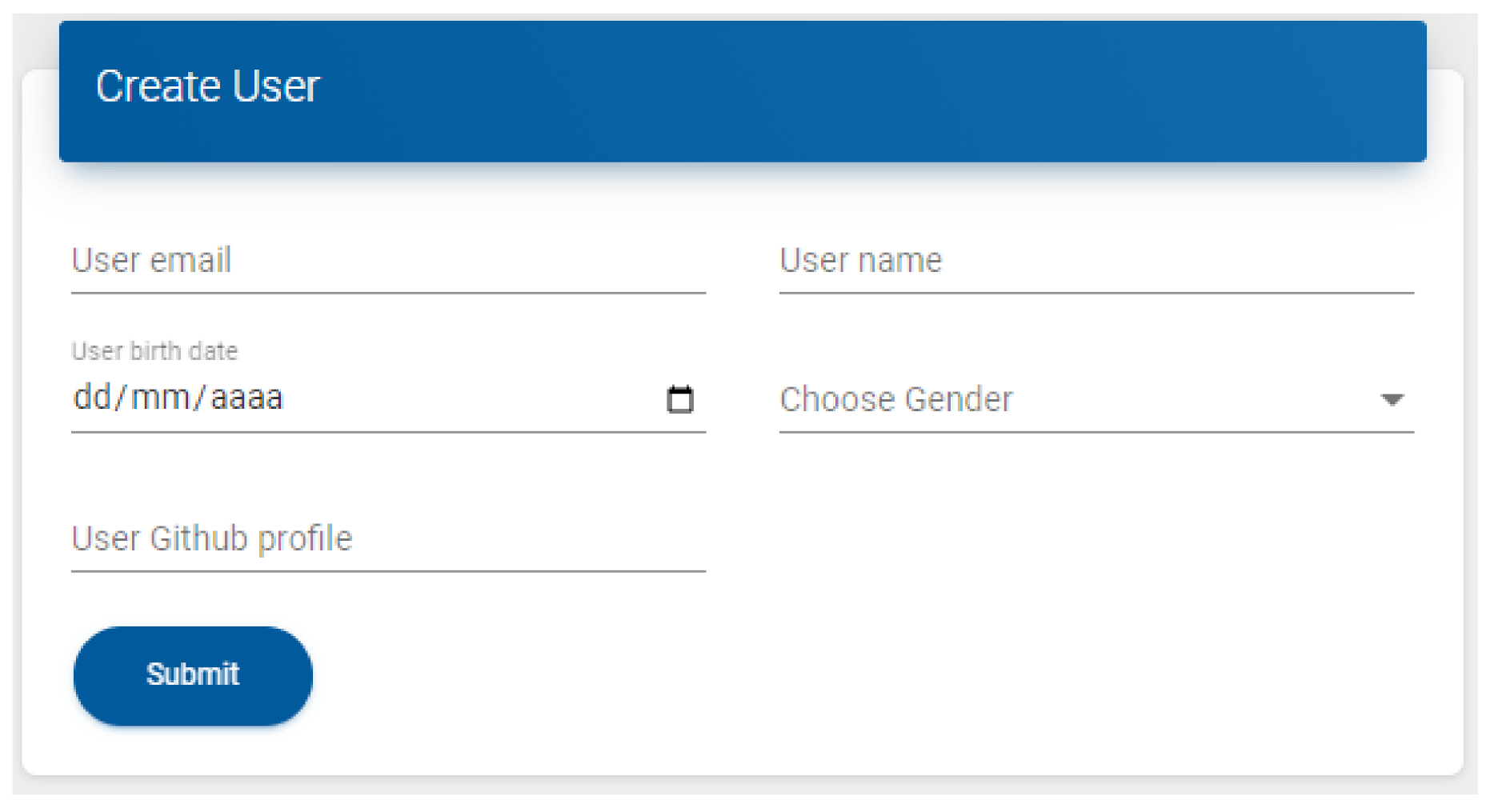

4.1.1. The Admin Features

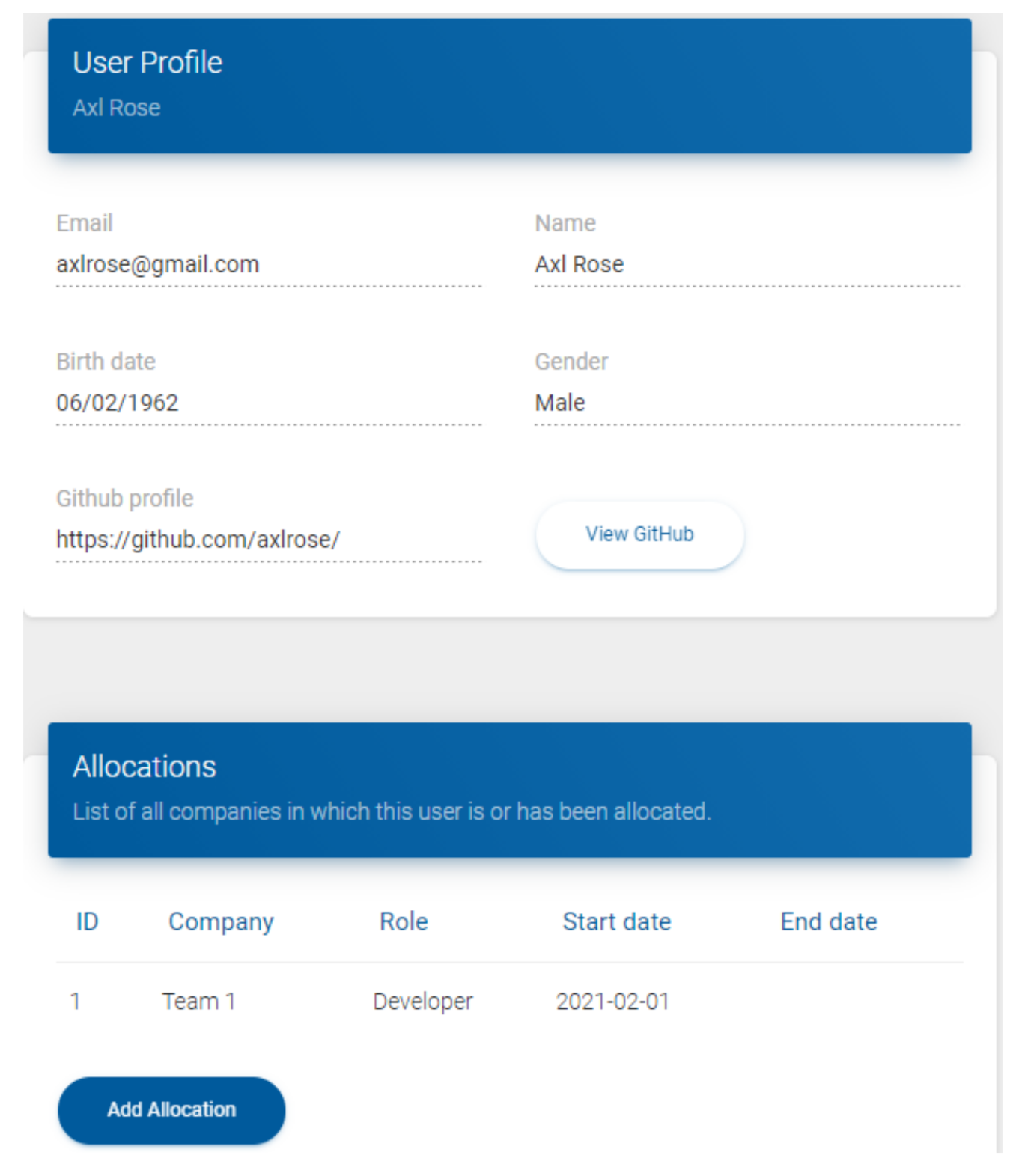

4.1.2. Common Features

    // Requires: java.util.ArrayList, java.util.List, java.util.stream.Collectors
    private List<CompanyAssessment> calculateProductivity(List<UserAssessment> lastMonthItems) {
        // each item of this list represents a company and its productivity
        List<CompanyAssessment> assessmentsByCompany = new ArrayList<>();
        // Iterate over all measurements created in the last month;
        // the list contains one item per user assessment.
        lastMonthItems.forEach(userAssessment -> {
            if (assessmentsByCompany.stream().anyMatch(abc -> abc.getCompanyId()
                    .equals(userAssessment.getCompanyId()))) {
                // the assessment is for a company already in the list: update its item
                CompanyAssessment valComp = assessmentsByCompany.stream()
                        .filter(abc -> abc.getCompanyId().equals(userAssessment.getCompanyId()))
                        .collect(Collectors.toList()).get(0);
                // the productivity of the company is given by the assessments of its allocated users,
                // so the result is the mean of the current value
                // and the value of this user assessment
                double productivity = (valComp.getProductivity() + userAssessment.getAssessment()) / 2;
                // if it's a combo, the company gets 10% extra;
                // a combo happens when the company has measurements in all the weeks of the month
                productivity = isCombo(lastMonthItems, userAssessment.getCompanyId())
                        ? (productivity * 1.1) : productivity;
                valComp.setProductivity(productivity);
            } else {
                // the assessment is for a company not yet in the list: add an item;
                // in this case, the productivity value is simply the user's assessment
                // (assumes a Lombok-style builder on CompanyAssessment)
                CompanyAssessment newItem = CompanyAssessment.builder()
                        .leaderboardId(0L)
                        .empresaId(userAssessment.getCompanyId())
                        .productivity(userAssessment.getAssessment())
                        .build();
                assessmentsByCompany.add(newItem);
            }
        });
        return assessmentsByCompany;
    }
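To see how the update rule in the listing above behaves on concrete numbers, the following self-contained sketch replays it for a single company. It is an illustration under stated assumptions, not the project's code: the `Assessment` class, its `week` field (1 to 4), and this version of `isCombo` are hypothetical, since the original `isCombo` and the `UserAssessment` type are not shown.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class ProductivityFoldSketch {

    /** Hypothetical stand-in for UserAssessment: company, score, and week of the month (1..4). */
    public static final class Assessment {
        final long companyId;
        final double value;
        final int week;

        public Assessment(long companyId, double value, int week) {
            this.companyId = companyId;
            this.value = value;
            this.week = week;
        }
    }

    /** Assumed combo rule: the company has at least one assessment in each of weeks 1..4. */
    public static boolean isCombo(List<Assessment> items, long companyId) {
        Set<Integer> weeks = new HashSet<>();
        for (Assessment a : items) {
            if (a.companyId == companyId) {
                weeks.add(a.week);
            }
        }
        return weeks.containsAll(Arrays.asList(1, 2, 3, 4));
    }

    /** Replays the listing's rule for one company: first value as-is, then pairwise means. */
    public static double companyProductivity(List<Assessment> items, long companyId) {
        boolean combo = isCombo(items, companyId);
        boolean seen = false;
        double productivity = 0.0;
        for (Assessment a : items) {
            if (a.companyId != companyId) {
                continue;
            }
            if (!seen) {
                productivity = a.value; // first assessment: taken unchanged
                seen = true;
            } else {
                productivity = (productivity + a.value) / 2; // mean of running value and new value
                if (combo) {
                    productivity *= 1.1; // 10% bonus, applied on every update
                }
            }
        }
        return productivity;
    }

    public static void main(String[] args) {
        List<Assessment> ascending = Arrays.asList(
                new Assessment(1L, 2.0, 1), new Assessment(1L, 4.0, 2), new Assessment(1L, 5.0, 3));
        List<Assessment> descending = Arrays.asList(
                new Assessment(1L, 5.0, 1), new Assessment(1L, 4.0, 2), new Assessment(1L, 2.0, 3));
        System.out.println(companyProductivity(ascending, 1L));  // prints 4.0
        System.out.println(companyProductivity(descending, 1L)); // prints 3.25
    }
}
```

With the same three scores, the result is 4.0 in one order and 3.25 in the other. Under the rule as listed, later assessments weigh more than earlier ones and the result is not the arithmetic mean of the scores. In this sketch the combo bonus is also applied at every update rather than once per month, which mirrors the listing as extracted.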

4.2. Case Study Results

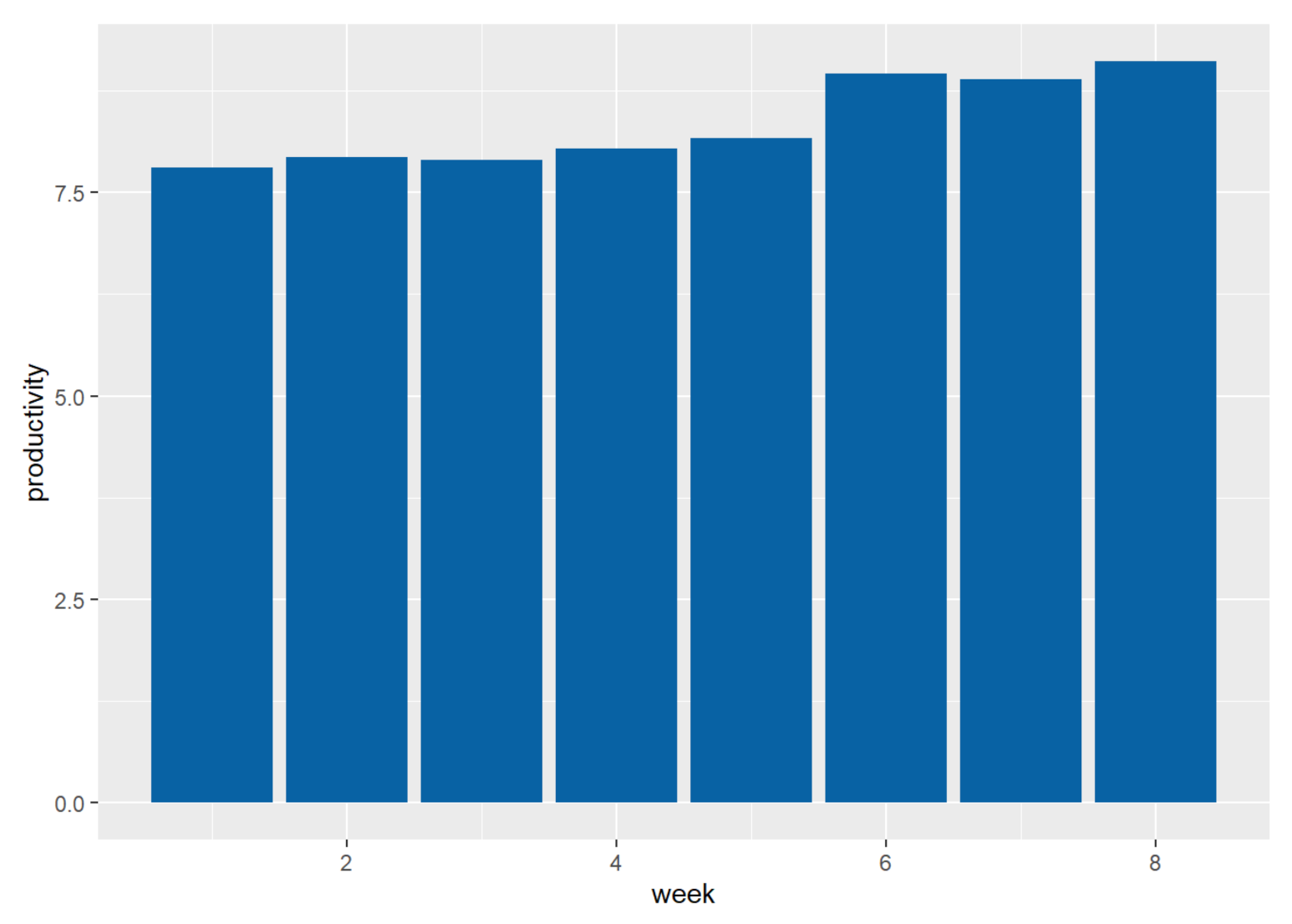

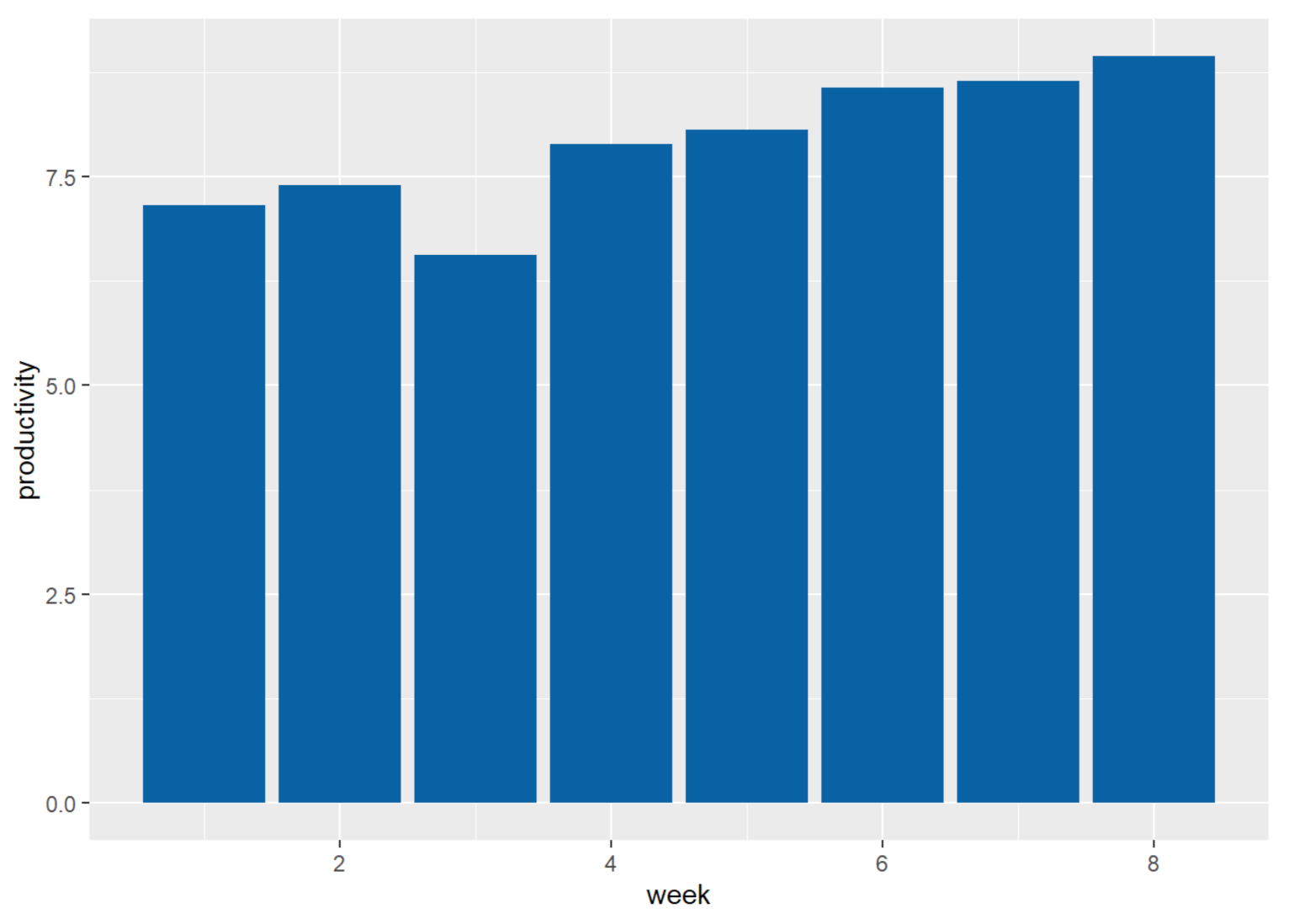

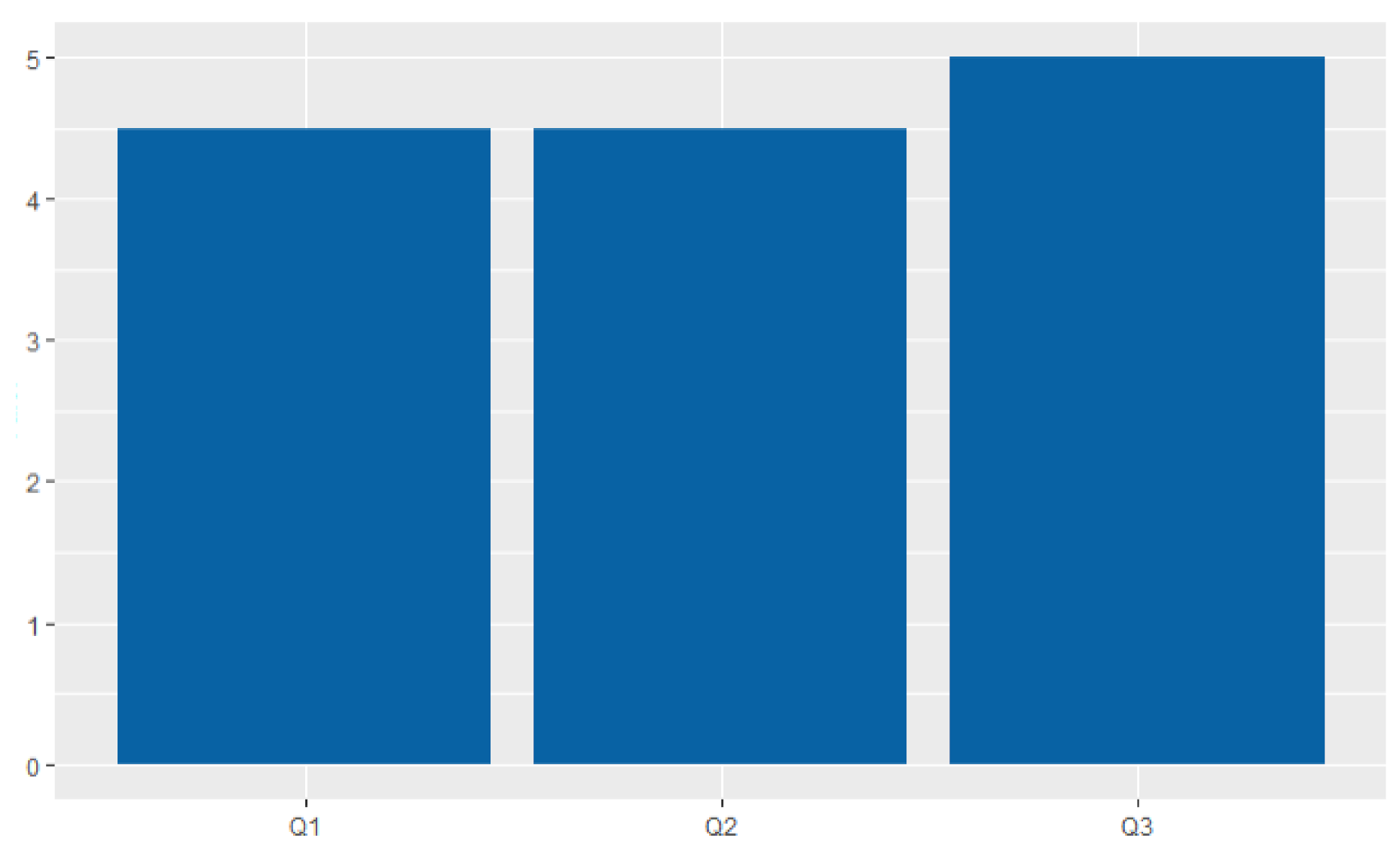

4.2.1. RQ.1. What Is the Effect on Productivity Assessments of Adding Gamification?

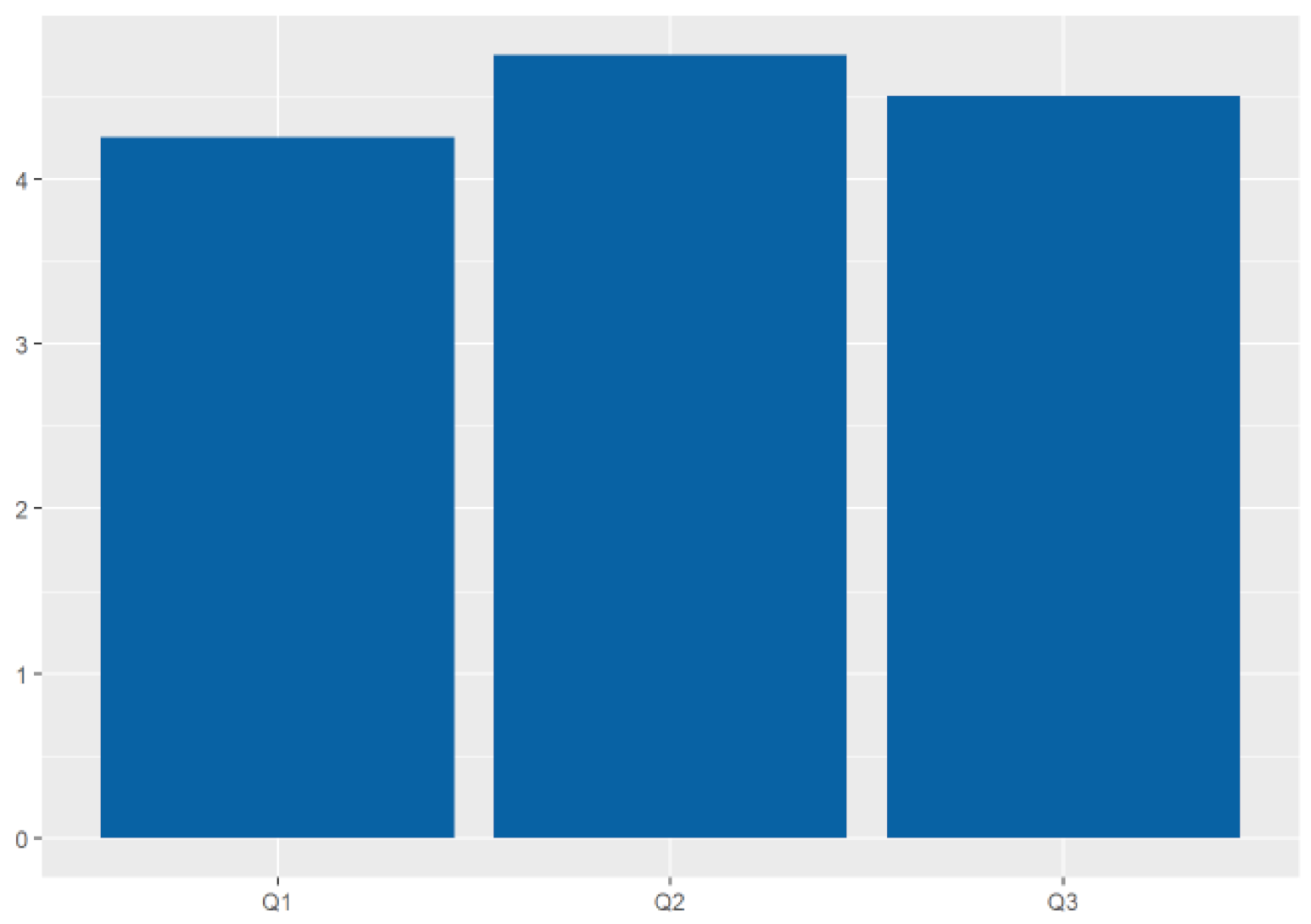

4.2.2. RQ.2. What Is the Perception of Users concerning the Productivity Calculation System?

- Q1: How much do you agree that using the productivity measurement tool helped the team improve productivity?

- Q2: How much do you agree that using gamification helped improve your team’s results?

- Q3: Considering your team’s productivity graph throughout the measurement process, how much do you agree that it represents reality?

5. Threats to Validity

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Factor Detailing

| Category | Factor | Detailing | Rating |

|---|---|---|---|

| People | Focus | the focus factor measures how focused the team is on achieving the iteration goal [53]. | 1.537 |

| People | Collaboration between team members | collaborative work positively influences productivity in software development [22]. | 1.45 |

| People | Ease of communication | in collaborative development environments, the ease of communication between members is an important factor in increasing productivity [22]. | 1.45 |

| People | Motivation | the motivation of those involved in software design positively influences productivity in software development [22]. | 1.425 |

| People | Commitment | it represents the responsibility level that team members are willing to assume in their tasks within their work team [54]. | 1.415 |

| People | Team cohesion | cohesion is one of the factors that positively affect productivity [22]. | 1.325 |

| People | Mental health | the good mental health of employees leads to productivity gains [4]. | 1.3 |

| People | Skills and competences | even without identifying the types of skills and competencies, the relationship between them and productivity is positive [22]. | 1.275 |

| People | Satisfaction | this dimension captures human productivity factors and has several possible subcomponents, including physiological factors, such as fatigue and team comfort measures [5]. | 1.1 |

| People | Enthusiasm | the degree of enthusiasm for the work is the factor that most affects the productivity of developers [55]. | 1.1 |

| People | Experience | the team members’ experience positively influences productivity in software development [22]. | 1.025 |

| People | Physical health | the good physical health of employees leads to productivity gains [4]. | 0.975 |

| People | Technical qualification | technically well-qualified developers can be more productive because that allows them flexibility in the tasks they can perform [56]. | 0.975 |

| People | Happiness | developers’ happiness positively impacts team productivity [25]. | 0.95 |

| People | Quality of life | the literature points out that the quality of life at work is related to the organization’s productivity [57]. | 0.925 |

| People | Behavioral qualification | the good behavioral qualification of the developers involves focus, concentration, tranquility, commitment [56]. | 0.775 |

| People | Availability of members for allocation | having the resources available to the development team at the necessary time is an important aspect and positively influences productivity [22]. | 0.7 |

| People | Home distractions | when working from home, it is common for people to lose productivity because they are distracted from their activities [58]. | 0.488 |

| People | Turnover | the lower the resource turnover within a project, the better for productivity. Turnover is therefore a factor of negative influence [22]. | 0.175 |

| Category | Factor | Detailing | Rating |

|---|---|---|---|

| Product | Quality | represents the quality of the work conducted. Such a metric can be obtained according to internal values (for example, the quality of the code and the number of bugs produced) or external (for example, the quality of the product from the perspective of the end users) [5]. | 1.3 |

| Product | Adequate documentation | this factor represents how well the documentation fits the needs [59]. | 0.95 |

| Product | Requirements | considering the dependence on several factors (such as ambiguity and volatility of requirements) the influence of this factor varies according to the context [22]. | 0.85 |

| Product | Business area | it consists of the business area covered by the software, and its impact varies according to the product area [22]. | 0.725 |

| Product | Completeness of design | it shows that the more complete the design when starting development, the better [59]. | 0.725 |

| Product | Poor code quality | the lack of quality in the code developed directly impacts the motivation and productivity of developers [25]. | 0.7 |

| Product | Technological platform | each platform has a productivity impact, so an organization’s analysis of historical bases is needed to define which is more productive [22]. | 0.675 |

| Product | Programming language | the higher the level of abstraction of the language used in the solution, the better software development productivity [22]. | 0.625 |

| Product | Project duration | the duration of a project is a factor that negatively affects productivity [22]. | 0.6 |

| Product | Application complexity | can be defined as the degree of difficulty for a project or part of it [22]. | 0.575 |

| Product | Speed | is the ratio between the time spent and the amount of work performed. This factor, as presented by [5], resembles Sharpe’s definition of productivity [7]. | 0.575 |

| Product | Lost time | a quarter of developers’ working time is wasted, caused by additional code analysis and technical debt [60]. | 0.5 |

| Product | Number/frequency of commits | Hélie et al. [41] classify the frequency of code commits in an hour interval as a factor to define productivity. According to the authors, a high number means (according to empirical knowledge) that more work is conducted. | 0.4 |

| Product | Software size | the reason for the negative relationship between productivity and software size is the increased complexity of the project [22]. | 0.35 |

| Product | Type of software developed | the different types of systems have different influences on productivity [22]. | 0.25 |

| Category | Factor | Detailing | Rating |

|---|---|---|---|

| Organization | Trust in other members | the ability of team members to trust each other influences productivity [61]. | 1.61 |

| Organization | Work environment | the work environment contains aspects that together positively influence productivity [22]. | 1.415 |

| Organization | Efficient meetings | the efficiency of meetings and their related practices also affects the productivity of development teams [55]. | 1.415 |

| Organization | Access to information | productivity is positively impacted in a software creation environment where the flow of information between humans and the tools involved is optimized [35]. | 1.29 |

| Organization | Feedback Culture | performance feedback influences how well developers produce [55]. | 1.22 |

| Organization | Code reuse | the reuse of code, libraries, or even functionality is a factor that positively impacts productivity in software development [22]. | 1.195 |

| Organization | Maturity | is one of the factors that most positively affect productivity and requires a team with effective communication, high adaptability, conflict management skills, shared decision-making, cohesion, mutual trust, behavioral compliance, clear responsibilities, and shared responsibilities [19]. | 1.195 |

| Organization | Use of best practices in software project management | practices that support the construction of a work environment that favors the commitment and interest of team members are factors of positive impact on productivity [22]. | 1.15 |

| Organization | Merits and rewards system | these systems contribute positively to the productivity of the development team [22]. | 1.098 |

| Organization | Accuracy of information | the accuracy of the information that reaches the development team (such as bug reports, use cases, and change requests) influences its productivity [27]. | 1.098 |

| Organization | Team autonomy level | it subjectively represents the extent to which the software team has authority and control in making decisions to carry out the project [62]. | 1.073 |

| Organization | Stakeholder participation in development | in general, it affects productivity positively, but if excessive, this participation can be negative [22]. | 1.07 |

| Organization | Knowledge management | the lack of knowledge exchange between developers is a factor that negatively influences productivity [22]. | 1.05 |

| Organization | Work Tools | the use of good work tools also influences productivity [55]. | 1.05 |

| Organization | Trainings provided by the company | the existence of training is a factor that improves productivity by allowing the acquisition of significant knowledge for software development [22]. | 0.98 |

| Organization | Use of auxiliary tools | no matter how much using different tools requires effort, its use is considered a factor that positively impacts productivity [22]. | 0.951 |

| Organization | Software processes | the improvement of processes leads to improvements in other aspects, such as reuse, flexibility of adaptation, and the process stability achieved under conditions of high maturity. Process improvement is therefore a positive factor [22]. | 0.951 |

| Organization | Development site | studies indicate that the development location affects productivity (for example, different countries, military or industrial organizations, etc.) [22]. | 0.93 |

| Organization | Sharing members between projects | resource sharing between projects is negative for productivity as developers have to keep different contexts in mind [22]. | 0.829 |

| Organization | Innovative mindset | the existence of a mindset that is always open to new ideas influences the productivity of software developers [55]. | 0.829 |

| Organization | Iteration length | the length of an iteration in days, calculated as the time elapsed between the start and end dates of the iteration, can affect productivity [53]. | 0.756 |

| Organization | Existence of Rework | the existence of rework is negative for productivity, as it indicates other negative aspects such as the existence of defects [22]. | 0.63 |

| Organization | Variety of tasks | the variety of types of tasks is one of the factors that affect the productivity of software developers [55]. | 0.54 |

| Organization | Team size | small teams made up of experienced developers have better levels of productivity [22]. | 0.51 |

| Organization | Possibility of remote work | the possibility of doing the work remotely to perform tasks that require uninterrupted concentration positively affects productivity [55]. | 0.49 |

| Organization | Existence of measurement history | the existence of historical data positively influences productivity, as such data can serve as a basis for comparison and can also allow for a better understanding of the behavior of software projects [22]. | 0.46 |

| Organization | Homogeneity | teams with the highest homogeneity levels are more productive, produce better quality code, and are more effective in testing [63]. | 0.415 |

| Organization | Software risk exposure level | represents the level of project uncertainty, having a noticeable impact on how the software can respond to business needs over time [62]. | 0.075 |

| Category | Factor | Detailing | Rating |

|---|---|---|---|

| OSS | Investments in Information and Communication Technology | comprises investments in software, hardware, and laboratories and is a factor of positive influence on productivity [22]. | 2 |

| OSS | Team engagement | in general, developers of open-source software projects are more motivated to contribute and, in addition, there is a very positive exchange of experiences between these individuals [22]. | 1.25 |

| OSS | Developer base | it is natural for developers to be more interested in contributing to open-source software projects that have more developers contributing [64]. Ref. [65] stated that in larger development communities (with a large number of participants) developers are more active. | 0.89 |

| OSS | Application complexity | a modularized architecture without complexity makes it easier for other people to contribute to the project, thus making it more productive [64]. | 0.82 |

| OSS | User base | it is natural for developers to be more interested in contributing to open-source software projects with a larger user base [64]. | 0.64 |

| OSS | Contractual relations | establish more security for developers and therefore make development more productive [22]. | 0.54 |

| OSS | Entry barriers | barriers to entry can directly impact productivity in open-source software projects [22]. | 0.45 |

| OSS | Organizational diversity | open-source software projects with the best organizational diversity, where people from different companies contribute, have better productivity [65]. | 0.43 |

| OSS | Team disengagement | open-source software developers may lose interest in the project due to several factors they face [22]. | 0.42 |

| OSS | Gender diversity | teams composed of men and women bring different perspectives and, thus, have better results [22]. | 0.32 |

| OSS | Size correlation (commits X contributors) | Jiang et al. [65] considered, in open-source software development projects, the correlation between the number of commits and the number of contributors as the main factor for productivity. | 0.32 |

| OSS | Project age | software productivity gradually decreases after reaching a peak in the project development cycle. That indicates that project age affects productivity [66]. | 0.275 |

| OSS | Lack of contractual relationships | the lack of contractual relationships allows contributors to free software projects to spend their time contributing to activities that directly increase software productivity [22]. | 0.09 |

References

- Macedo, M.d.M. Gestão da produtividade nas empresas. Rev. Organ. Sistêmica 2012, 1, 110–119.

- Ishizaka, A.; Resce, G.; Mareschal, B. Visual management of performance with PROMETHEE productivity analysis. Soft Comput. 2018, 22, 7325–7338.

- Mukred, M.; Yusof, Z.M.; Alotaibi, F.M. Ensuring the Productivity of Higher Learning Institutions Through Electronic Records Management System (ERMS). IEEE Access 2019, 7, 97343–97364.

- Spanbauer, S.J. Reactivating higher education with total quality management: Using quality and productivity concepts, techniques and tools to improve higher education. Total Qual. Manag. 1995, 6, 519–538.

- Sadowski, C.; Storey, M.D.; Feldt, R. A Software Development Productivity Framework. In Rethinking Productivity in Software Engineering; Sadowski, C., Zimmermann, T., Eds.; Apress Open/Springer: Berlin/Heidelberg, Germany, 2019; pp. 39–47.

- Rosen, E.D. Improving Public Sector Productivity: Concepts and Practice; Sage: Thousand Oaks, CA, USA, 1993.

- Sharpe, A. Productivity Concepts, Trends and Prospects: An Overview. In The Review of Economic Performance and Social Progress 2002: Towards a Social Understanding of Productivity; Sharpe, A., St-Hilaire, F., Banting, K., Eds.; Centre for the Study of Living Standards: Ottawa, ON, Canada, 2002; Volume 2.

- Bonelli, R.; Fonseca, R. Ganhos de Produtividade e de Eficiência: Novos Resultados para a Economia Brasileira. Pesqui. Planej. Econômico 1998, 28. Available online: http://repositorio.ipea.gov.br/bitstream/11058/2383/1/td_0557.pdf (accessed on 5 September 2021).

- Moreno, A.; Neumann, M.; Mohebalian, P.M.; Thurnher, C.; Hasenauer, H. The Continental Impact of European Forest Conservation Policy and Management on Productivity Stability. Remote Sens. 2019, 11, 87.

- Triplett, J.E. The Solow Productivity Paradox: What do Computers do to Productivity? Can. J. Econ. Rev. Can. d’Economique 1999, 32, 309–334.

- Ray, D.M.; Samuel, P. Improving the productivity in global software development. In Innovations in Bio-Inspired Computing and Applications; Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2016; Volume 424, pp. 175–185.

- De Aquino Junior, G.S.; de Lemos Meira, S.R. Towards Effective Productivity Measurement in Software Projects. In Proceedings of the Fourth International Conference on Software Engineering Advances, Porto, Portugal, 20–25 September 2009; ICSEA: Porto, Portugal, 2009; pp. 241–249.

- Lavazza, L.; Morasca, S.; Tosi, D. An empirical study on the effect of programming languages on productivity. In Proceedings of the 31st Annual ACM Symposium on Applied Computing, Pisa, Italy, 4–8 April 2016; pp. 1434–1439.

- De Oliveira, E.C.C.; Viana, D.; Cristo, M.; Conte, T. How have Software Engineering Researchers been Measuring Software Productivity? A Systematic Mapping Study. In Proceedings of the 19th International Conference on Enterprise Information Systems (ICEIS 2017), Porto, Portugal, 26–29 April 2017; Volume 2, pp. 76–87.

- Melo, C.D.O.; Cruzes, D.S.; Kon, F.; Conradi, R. Interpretative case studies on agile team productivity and management. Inf. Softw. Technol. 2013, 55, 412–427.

- Morasca, S.; Russo, G. An Empirical Study of Software Productivity. In Proceedings of the 25th International Computer Software and Applications Conference (COMPSAC 2001), Invigorating Software Development, Chicago, IL, USA, 8–12 October 2001; pp. 317–322.

- Yilmaz, M.; O’Connor, R.V.; Clarke, P. Effective Social Productivity Measurements during Software Development: An Empirical Study. Int. J. Softw. Eng. Knowl. Eng. 2016, 26, 457–490.

- Mizuno, O.; Kikuno, T.; Inagaki, K.; Takagi, Y.; Sakamoto, K. Statistical analysis of deviation of actual cost from estimated cost using actual project data. Inf. Softw. Technol. 2000, 42, 465–473.

- Ramirez-Mora, S.L.; Oktaba, H. Team Maturity in Agile Software Development: The Impact on Productivity. In Proceedings of the 2018 IEEE International Conference on Software Maintenance and Evolution, ICSME 2018, Madrid, Spain, 23–29 September 2018; pp. 732–736.

- Fardo, M. A Gamificação Aplicada em Ambientes de Aprendizagem. Renote 2013, 11.

- Schlemmer, E. Gamificação em Espaços de Convivência Híbridos e Multimodais: Design e Cognição em Discussão. Rev. FAEEBA Educ. Contemp. 2014, 23.

- Canedo, E.D.; Santos, G.A. Factors Affecting Software Development Productivity: An Empirical Study. In Proceedings of the XXXIII Brazilian Symposium on Software Engineering, New York, NY, USA, 23–27 September 2019; SBES 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 307–316.

- Tangen, S. Understanding the concept of productivity. In Proceedings of the 7th Asia-Pacific Industrial Engineering and Management Systems Conference, Taipei, Taiwan, 2002; pp. 18–20. Available online: https://shorturl.at/vwBCN (accessed on 5 September 2021).

- Kemerer, C.F. Software Development Productivity Measurement. Data Base 1986, 17, 41.

- Graziotin, D.; Fagerholm, F. Happiness and the Productivity of Software Engineers. In Rethinking Productivity in Software Engineering; Sadowski, C., Zimmermann, T., Eds.; Apress Open/Springer: Berlin/Heidelberg, Germany, 2019; pp. 109–124.

- Petersen, K. Measuring and predicting software productivity: A systematic map and review. Inf. Softw. Technol. 2011, 53, 317–343.

- Vasilescu, B.; Yu, Y.; Wang, H.; Devanbu, P.T.; Filkov, V. Quality and productivity outcomes relating to continuous integration in GitHub. In Proceedings of the 2015 10th Joint Meeting on Foundations of Software Engineering, ESEC/FSE 2015, Bergamo, Italy, 30 August–4 September 2015; pp. 805–816.

- Delaney, S.; Schmidt, D. A Productivity Framework for Software Development Literature Review. In Proceedings of the 2nd International Conference on Software Engineering and Information Management, Bali, Indonesia, 10–13 January 2019; ICSIM 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 69–74.

- Navarro, G. Gamificação: A transformação do conceito do termo jogo no contexto da pós-modernidade. Bibl. Lat. Am. Cult. Comun. 2013, 1, 1–26.

- Zichermann, G.; Cunningham, C. Gamification by Design: Implementing Game Mechanics in Web and Mobile Apps, 1st ed.; O’Reilly Media, Inc.: Newton, MA, USA, 2011.

- Leite, B. Gamificando as aulas de química: Uma análise prospectiva das propostas de licenciandos em química. RENOTE Rev. Novas Tecnol. Educ. 2017, 15, 1–10.

- Chou, Y. Actionable Gamification: Beyond Points, Badges, and Leaderboards; CreateSpace: Scotts Valley, CA, USA, 2015.

- Oliveira, E.; Fernandes, E.; Steinmacher, I.; Cristo, M.; Conte, T.; Garcia, A. Code and commit metrics of developer productivity: A study on team leaders perceptions. Empir. Softw. Eng. 2020, 25, 2519–2549.

- Souza, A.L.M.d.; Evangelista, R.A.; Bueno, A.A.; Silva, L.A.d. A Influência da Qualidade de Vida no Trabalho (QVT) na Produtividade de Equipes de Manutenção; Atena Editora: Ponta Grossa-PR, Brazil, 2019.

- Murphy, G.C.; Kersten, M.; Elves, R.; Bryan, N. Enabling Productive Software Development by Improving Information Flow. In Rethinking Productivity in Software Engineering; Apress: Berkeley, CA, USA, 2019; pp. 281–292.

- Naik, N.; Jenkins, P. Relax, It’s a Game: Utilising Gamification in Learning Agile Scrum Software Development. In Proceedings of the 2019 IEEE Conference on Games (CoG), London, UK, 20–23 August 2019; pp. 1–4.

- Haefner, J.; Makrigeorgis, C. A Study of the Systemic Relationship Between Worker Motivation and Productivity. IJTD 2010, 1, 52–69.

- Moldon, L.; Strohmaier, M.; Wachs, J. How Gamification Affects Software Developers: Cautionary Evidence from a Natural Experiment on GitHub. In Proceedings of the 2021 IEEE/ACM 43rd International Conference on Software Engineering (ICSE), Madrid, Spain, 25–28 May 2021; IEEE/ACM: Piscataway, NJ, USA, 2021; pp. 549–561.

- Coonradt, C.; Nelson, L. The Game of Work: How to Enjoy Work as Much as Play; Shadow Mountain: Layton, UT, USA, 1985.

- De Quadros, G.B.F. Construindo o estado da arte da gamificação. In Anais do Encontro Virtual de Documentação em Software Livre e Congresso Internacional de Linguagem e Tecnologia Online; CILTec: Minas Gerais, Brasil, 2016; Volume 4, pp. 1–6.

- Hélie, J.; Wright, I.; Ziegler, A. Measuring software development productivity: A machine learning approach. In Proceedings of the Conference on Machine Learning for Programming Workshop, Affiliated with FLoC, Oxford, UK, 18–19 July 2018; Volume 18.

- King, N.C.d.O.; Lima, E.P.d.; Costa, S.E.G.d. Produtividade sistêmica: Conceitos e aplicações. Production 2014, 24, 160–176.

- Ciervo, J.; Shen, S.; Stallcup, K.; Thomas, A.; Farnum, M.; Lobanov, V.; Agrafiotis, D. A new risk and issue management system to improve productivity, quality, and compliance in clinical trials. JAMIA Open 2019, 2.

- Palvalin, M.; Vuolle, M.; Jääskeläinen, A.; Laihonen, H.; Lönnqvist, A. SmartWoW: Constructing a tool for knowledge work performance analysis. Int. J. Product. Perform. Manag. 2015, 64, 479–498.

- Balk, B.M.; Barbero, J.; Zofío, J.L. A toolbox for calculating and decomposing Total Factor Productivity indices. Comput. Oper. Res. 2020, 115, 104853.

- Allen, I.E.; Seaman, C.A. Likert scales and data analyses. Qual. Prog. 2007, 40, 64–65.

- Pressman, R. Software Engineering: A Practitioner’s Approach, 7th ed.; McGraw-Hill, Inc.: New York, NY, USA, 2009.

- Martin, R.C. Agile Software Development: Principles, Patterns, and Practices; Prentice Hall PTR: Hoboken, NJ, USA, 2003.

- Ampatzoglou, A.; Tsintzira, A.; Arvanitou, E.; Chatzigeorgiou, A.; Stamelos, I.; Moga, A.; Heb, R.; Matei, O.; Tsiridis, N.; Kehagias, D.D. Applying the Single Responsibility Principle in Industry: Modularity Benefits and Trade-offs. In Proceedings of the Evaluation and Assessment on Software Engineering, EASE 2019, Copenhagen, Denmark, 15–17 April 2019; Ali, S., Garousi, V., Eds.; ACM: Copenhagen, Denmark, 2019; pp. 347–352.

- Rinker, F.; Waltersdorfer, L.; Biffl, S. Towards Test-Driven Model Development in Production Systems Engineering. In Proceedings of the 22nd International Conference on Enterprise Information Systems, ICEIS 2020, Prague, Czech Republic, 5–7 May 2020; Filipe, J., Smialek, M., Brodsky, A., Hammoudi, S., Eds.; SCITEPRESS: Prague, Czech Republic, 2020; Volume 1, pp. 213–219.

- Richardson, C. Microservices Patterns: With Examples in Java; Manning Publications: New York, NY, USA, 2018.

- Sutherland, J. Scrum, a Arte de Fazer o Dobro do Trabalho em Metade do Tempo; LUA DE PAPEL: New York, NY, USA, 2016.

- Scott, E.; Charkie, K.N.; Pfahl, D. Productivity, Turnover, and Team Stability of Agile Teams in Open-Source Software Projects. In Proceedings of the 46th Euromicro Conference on Software Engineering and Advanced Applications, SEAA 2020, Portoroz, Slovenia, 26–28 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 124–131.

- Machuca-Villegas, L.; Gasca-Hurtado, G.P.; Puente, S.M.; Tamayo, L.M.R. An Instrument for Measuring Perception about Social and Human Factors that Influence Software Development Productivity. JUCS J. Univers. Comput. Sci. 2021, 27, 111–134.

- Murphy-Hill, E.; Jaspan, C.; Sadowski, C.; Shepherd, D.; Phillips, M.; Winter, C.; Knight, A.; Smith, E.; Jorde, M. What Predicts Software Developers’ Productivity? IEEE Trans. Softw. Eng. 2019, 47, 582–594.

- Oliveira, E.; Conte, T.; Cristo, M. Fatores de Influência na Produtividade dos Desenvolvedores de Organizações de Software. Ph.D. Thesis, Universidade Federal do Amazonas, Manaus, Brazil, 2017.

- Sauerssig, R.H.S.S.; Sparemberger, A.; Zamberlan, L.; Büttenbender, P.L.; Kuhn, I.N. Impacto e Influência dos Fatores da Qualidade de vida no Desempenho Pessoal: O caso de uma Instituição de Ensino Superior (IES/RS). XIX Coloq. Int. Gest. Univ. 2019.

- Russo, D.; Hanel, P.H.P.; Altnickel, S.; van Berkel, N. Predictors of well-being and productivity among software professionals during the COVID-19 pandemic - a longitudinal study. Empir. Softw. Eng. 2021, 26, 62. [Google Scholar] [CrossRef] [PubMed]

- Wagner, S.; Ruhe, M. A Systematic Review of Productivity Factors in Software Development. arXiv 2018, arXiv:1801.06475. [Google Scholar]

- Besker, T.; Martini, A.; Bosch, J. Software developer productivity loss due to technical debt - A replication and extension study examining developers’ development work. J. Syst. Softw. 2019, 156, 41–61. [Google Scholar] [CrossRef]

- Vargas, P.S.C.; Mauricio, D. New Factors Affecting Productivity of the Software Factory. IJITSA 2020, 13, 1–26. [Google Scholar] [CrossRef]

- Chapetta, W.A.; Travassos, G.H. Towards an evidence-based theoretical framework on factors influencing the software development productivity. Empir. Softw. Eng. 2020, 25, 3501–3543. [Google Scholar] [CrossRef]

- Qamar, N.; Malik, A.A. Birds of a Feather Gel Together: Impact of Team Homogeneity on Software Quality and Team Productivity. IEEE Access 2019, 7, 96827–96840. [Google Scholar] [CrossRef]

- Midha, V.; Palvia, P. Factors affecting the success of Open Source Software. J. Syst. Softw. 2012, 85, 895–905. [Google Scholar] [CrossRef] [Green Version]

- Jiang, Q.; Lee, Y.C.; Davis, J.G.; Zomaya, A.Y. Diversity, Productivity, and Growth of Open Source Developer Communities. arXiv 2018, arXiv:1809.03725. [Google Scholar]

- Liao, Z.; Zhao, Y.; Liu, S.; Zhang, Y.; Liu, L.; Long, J. The Measurement of the Software Ecosystem’s Productivity with GitHub. Comput. Syst. Sci. Eng. 2021, 36, 239–258. [Google Scholar] [CrossRef]

| Feature | Iteration |
|---|---|
| User registration | 1 |
| Login | 1 |
| Companies registration | 2 |
| Factor registration | 2 |
| Measurement with registered factors | 3 |
| Leaderboards | 4 |
| Trophy room | 4 |
| Combos | 4 |

| ID | Team | Role | Experience | Education Level |
|---|---|---|---|---|
| P01 | 1 | Project Manager | more than 30 years | Graduate |
| P02 | 1 | Full-Stack Developer | 7 years | Postgraduate |
| P03 | 1 | Integration Architect | 14 years | Graduate |
| P04 | 1 | Full-Stack Developer | 5 years | Master’s student |
| P05 | 2 | Back-End Developer | 8 years | Postgraduate |
| P06 | 2 | Front-End Developer | 15 years | High school |
| P07 | 2 | Project Manager | more than 20 years | Graduate |
| P08 | 2 | Full-Stack Developer | 5 years | Master’s student |

| Category | Selected Factors |
|---|---|
| People | 1. Collaboration between team members |
| | 2. Ease of communication |
| | 3. Motivation |
| | 4. Team cohesion |
| | 5. Skills and competences |
| Product | 6. Lost time |
| | 7. Poor code quality |
| | 8. Completeness of design |
| | 9. Requirements |
| | 10. Quality |
| | 11. Adequate documentation |
| Organization | 12. Code reuse |
| | 13. Feedback culture |
| | 14. Access to information |
| | 15. Efficient meetings |
| | 16. Working environment |
| | 17. Trust in other members |
| OSS | 18. Organizational diversity |
| | 19. Application complexity |
| | 20. Team engagement |
| | 21. IT investments |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mota, J.S.; Tives, H.A.; Canedo, E.D. Tool for Measuring Productivity in Software Development Teams. Information 2021, 12, 396. https://doi.org/10.3390/info12100396