Exploring Multiple and Coordinated Views for Multilayered Geospatial Data in Virtual Reality

Abstract

1. Introduction

- with a novel spatial multiplexing approach based on a stack of maps, derived from a study of the available design space for comparison tasks and its application in VR; and,

- an evaluation of this stack wholly done in VR, in comparison to two more traditional systems within a controlled user study.

1.1. Related Work

1.1.1. Urban Data Visualization

1.1.2. Immersive Analytics

1.1.3. Multiple and Coordinated Views

1.2. System Design

1.2.1. Visual Composition Design Patterns

- Juxtaposition: placing visualizations side-by-side in one view;

- Superimposition: overlaying visualizations;

- Overloading: utilizing the space of one visualization for another;

- Nesting: nesting the contents of one visualization inside another; and,

- Integration: placing visualizations in the same view with visual links.
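The difference between superimposition and juxtaposition can be made concrete on raster map layers. The following is a minimal sketch, not the authors' implementation: it assumes each layer is a same-sized grayscale raster stored as nested Python lists of values in [0, 1], and the function names `superimpose` and `juxtapose` and the `gap`/`fill` parameters are hypothetical.

```python
from typing import List

# Hypothetical layer format: rows of grayscale values in [0, 1].
Raster = List[List[float]]


def superimpose(base: Raster, overlay: Raster, alpha: float) -> Raster:
    """Superimposition: draw `overlay` on top of `base` in the same space,
    using a constant-opacity 'over' blend for every pixel."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return [
        [(1.0 - alpha) * b + alpha * o for b, o in zip(base_row, over_row)]
        for base_row, over_row in zip(base, overlay)
    ]


def juxtapose(left: Raster, right: Raster, gap: int = 1, fill: float = 0.0) -> Raster:
    """Juxtaposition: place two same-height layers side by side,
    separated by `gap` columns of a neutral `fill` value."""
    if len(left) != len(right):
        raise ValueError("layers must have the same height")
    return [l_row + [fill] * gap + r_row for l_row, r_row in zip(left, right)]


night_lights = [[0.2, 0.9], [0.1, 0.4]]  # toy "light pollution" raster
energy_use = [[0.8, 0.6], [0.3, 0.0]]    # toy "energy consumption" raster

print(superimpose(night_lights, energy_use, alpha=0.5))
print(juxtapose(night_lights, energy_use))
```

Superimposition keeps both layers in one shared coordinate frame but makes them compete for the same pixels; juxtaposition avoids that occlusion at the cost of the eye movements needed to relate corresponding locations across views.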

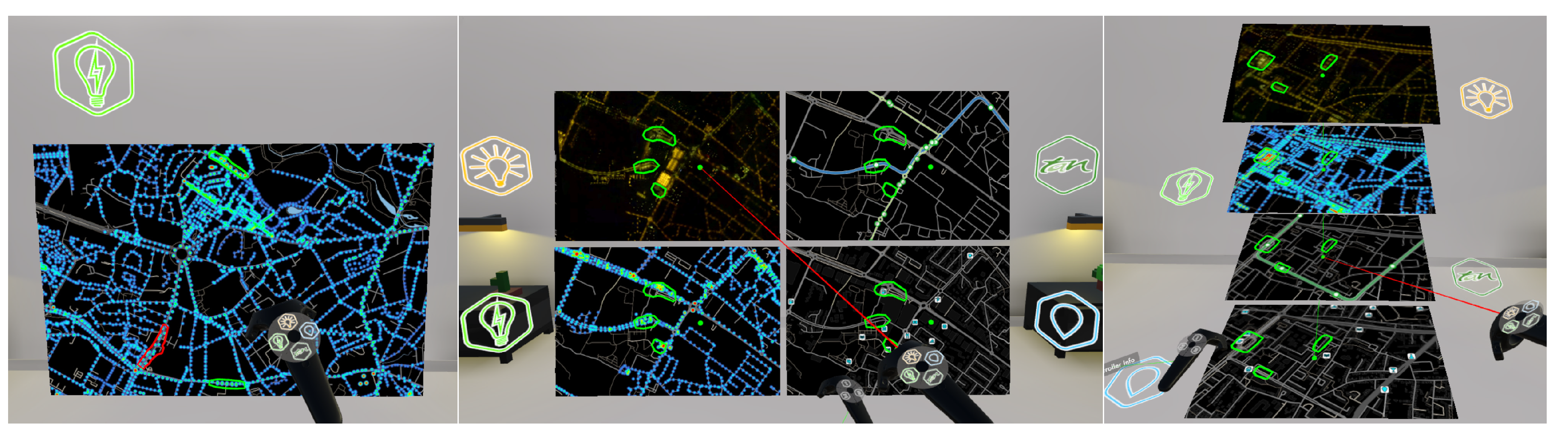

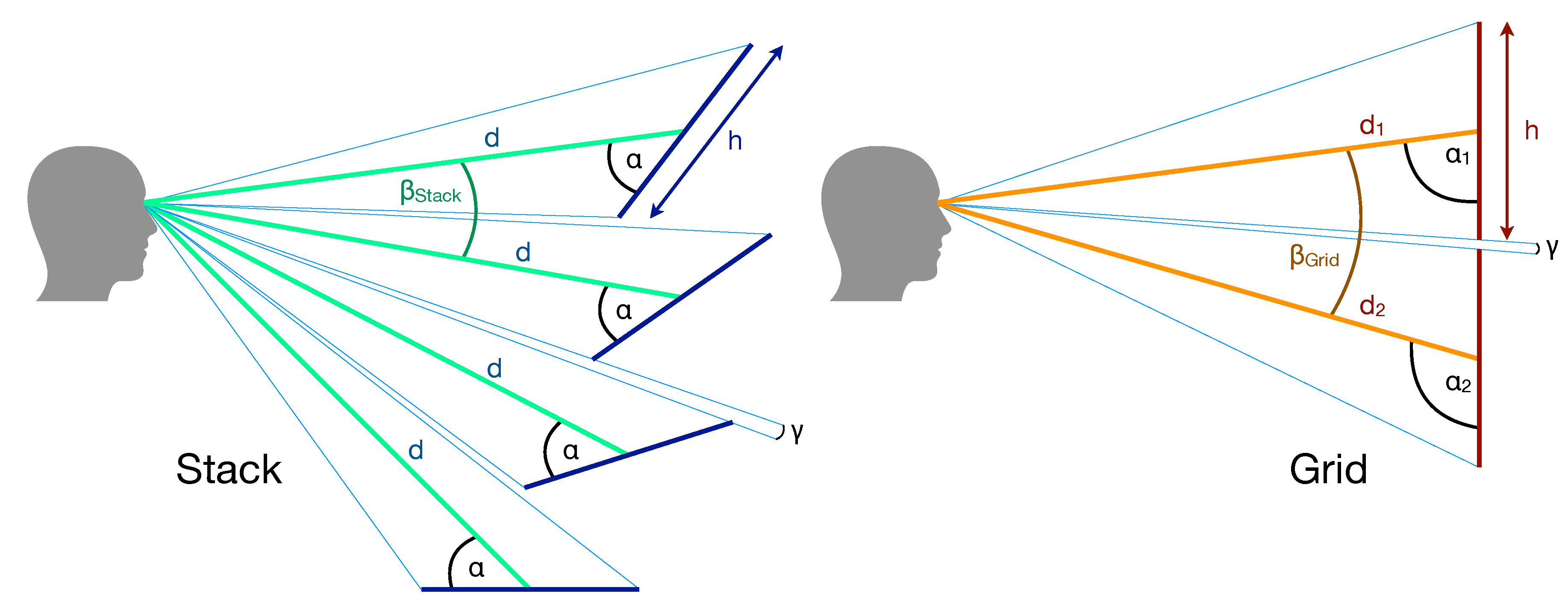

1.2.2. The Stack

2. Materials and Methods

2.1. Task Design and Considerations

- Data + Task = Visualization? and

- Data + Visualization = Task?

- the number of items being compared;

- the size or complexity of the individual items; and,

- the size or complexity of the relationships.
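The first of these factors grows quickly: when any two items may need to be related, the number of candidate pairs is n(n − 1)/2. The sketch below is an illustration rather than part of the study's software; it enumerates the pairs for the four urban-illumination layers listed in the use case, and the helper name `comparison_pairs` is hypothetical.

```python
from itertools import combinations
from typing import List, Sequence, Tuple


def comparison_pairs(items: Sequence[str]) -> List[Tuple[str, str]]:
    """Every unordered pair of items that a comparison task may involve."""
    return list(combinations(items, 2))


layers = ["Light pollution", "Energy consumption", "Night transportation", "Night POIs"]
for a, b in comparison_pairs(layers):
    print(f"{a} <-> {b}")

# The count follows n(n - 1) / 2, so doubling the number of layers roughly quadruples the pairs.
for n in (2, 4, 8, 16):
    print(n, len(comparison_pairs([f"layer{i}" for i in range(n)])), n * (n - 1) // 2)
```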

2.2. Use Case: Urban Illumination

- Light pollution: an orthoimage taken at night over the city;

- Energy consumption: a heatmap visualization of the electrical energy each street lamp consumes;

- Night transportation: a map of public transit lines that operate at night and their stops, including bike-sharing stations; and,

- Night POIs: a map of points of interest that are relevant to nighttime activities.

2.3. Implementation and Interaction

2.3.1. Technology

2.3.2. Configuration

2.3.3. Interaction

2.4. Participants

2.5. Stimuli

2.6. Design and Procedure

2.6.1. Tutorial Phase

2.6.2. Evaluation Phase

2.6.3. Balancing

2.7. Apparatus and Measurements

3. Results

3.1. Preferences

- Map legibility: which system showed the map layers in the clearest way and made them easier to understand for you?

- Ease of use: which system made interacting with the maps and candidate areas easier for you?

- Visual design: which system looked more appealing to you?
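Preferences of this kind are collected as forced choices between pairs of systems, which can be tallied in a pairwise comparison matrix (PCM) and summarized with a Bradley–Terry model. The following is a minimal sketch under stated assumptions: the system labels and choices are invented placeholders, each participant is assumed to contribute one choice per system pair and question, and the estimator is the standard minorization–maximization (MM) iteration for Bradley–Terry strengths, not necessarily the exact procedure used in the study.

```python
from typing import List, Sequence, Tuple


def build_pcm(systems: Sequence[str], choices: Sequence[Tuple[str, str]]) -> List[List[int]]:
    """Tally (winner, loser) choices into a PCM where pcm[i][j] counts
    how often system i was preferred over system j."""
    index = {name: k for k, name in enumerate(systems)}
    pcm = [[0] * len(systems) for _ in systems]
    for winner, loser in choices:
        pcm[index[winner]][index[loser]] += 1
    return pcm


def bradley_terry(pcm: List[List[int]], iterations: int = 500) -> List[float]:
    """Estimate Bradley-Terry strengths with the MM update
    p_i <- W_i / sum_j n_ij / (p_i + p_j), renormalized to a mean of 1."""
    n = len(pcm)
    p = [1.0] * n
    for _ in range(iterations):
        updated = []
        for i in range(n):
            wins = sum(pcm[i][j] for j in range(n) if j != i)
            denom = sum(
                (pcm[i][j] + pcm[j][i]) / (p[i] + p[j])
                for j in range(n)
                if j != i and pcm[i][j] + pcm[j][i] > 0
            )
            updated.append(wins / denom if denom > 0 else p[i])
        total = sum(updated)
        p = [n * x / total for x in updated]
    return p


# Invented toy data (10 choices per pair), not the study's results.
systems = ["Stack", "System B", "System C"]
choices = ([("Stack", "System B")] * 8 + [("System B", "Stack")] * 2
           + [("Stack", "System C")] * 9 + [("System C", "Stack")] * 1
           + [("System B", "System C")] * 6 + [("System C", "System B")] * 4)

strengths = bradley_terry(build_pcm(systems, choices))
print(dict(zip(systems, strengths)))
# Modeled probability that "Stack" is preferred over "System B":
print(strengths[0] / (strengths[0] + strengths[1]))
```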

3.2. Participant Characteristics

3.3. Subgroupings

- (z)ero (never, n = 9),

- (s)ome (some per year, n = 9), or

- (f)requent (at least some per month, n = 8) 3D video (G)aming,

- (r)are (1–3 per year, n = 10),

- (s)ome (>3 per year, n = 7), or

- (f)requent (at least multiple per month, n = 9) city (M)ap consultation.

3.4. System Interactions and Performance

3.5. Visuo-Motor Behavior

3.5.1. Saccade Amplitudes
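In a head-mounted display, gaze is naturally recorded as a 3D direction, so a saccade's amplitude can be measured as the angle between the gaze directions of the fixations it connects. A minimal sketch, assuming 3D direction vectors (they need not be normalized) and hypothetical function names; the `atan2` form is used instead of `acos` of the dot product because it stays accurate for very small angles.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def angle_between_deg(u: Vec3, v: Vec3) -> float:
    """Angle (degrees) between two gaze directions; vectors need not be unit length."""
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    cross_norm = math.sqrt(sum(c * c for c in cross))
    dot = sum(a * b for a, b in zip(u, v))
    return math.degrees(math.atan2(cross_norm, dot))


def saccade_amplitudes(fixation_dirs: Sequence[Vec3]) -> List[float]:
    """Amplitude of each saccade: the angle between consecutive fixation directions."""
    return [angle_between_deg(a, b) for a, b in zip(fixation_dirs, fixation_dirs[1:])]


# Toy fixation directions (x right, y up, z forward), invented for illustration.
fixations = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.0), (0.1, -0.2, 1.0)]
print([round(a, 2) for a in saccade_amplitudes(fixations)])
```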

3.5.2. Saccade Directions
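Saccade directions are typically summarized in polar histograms such as those in Appendix B. The sketch below assumes fixation positions expressed as (longitude, latitude) in degrees on the viewing sphere; direction is measured counter-clockwise from rightward, the 12-bin default is an arbitrary choice, and the function names are hypothetical. As a simplification, the longitude difference is not scaled by cos(latitude).

```python
import math
from typing import List, Sequence, Tuple

LonLat = Tuple[float, float]


def saccade_direction_deg(start: LonLat, end: LonLat) -> float:
    """Direction of a saccade in [0, 360): 0 = rightward, 90 = upward."""
    dlon = (end[0] - start[0] + 180.0) % 360.0 - 180.0  # take the short way around the sphere
    dlat = end[1] - start[1]
    return math.degrees(math.atan2(dlat, dlon)) % 360.0


def direction_histogram(directions: Sequence[float], n_bins: int = 12) -> List[int]:
    """Count directions per angular bin, as drawn in a polar histogram."""
    width = 360.0 / n_bins
    counts = [0] * n_bins
    for d in directions:
        counts[int(d // width) % n_bins] += 1  # modulo guards against d == 360.0 after rounding
    return counts


# Toy (lon, lat) fixation positions, invented for illustration.
gaze = [(0.0, 0.0), (10.0, 0.0), (10.0, 5.0), (-5.0, 5.0), (-5.0, -2.0)]
dirs = [saccade_direction_deg(a, b) for a, b in zip(gaze, gaze[1:])]
print(dirs)
print(direction_histogram(dirs, n_bins=4))
```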

3.5.3. Fixation Durations and Saccade Velocities
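One common way to separate fixations from saccades is the velocity-threshold (I-VT) scheme described by Salvucci and Goldberg: intervals between consecutive gaze samples whose angular velocity exceeds a threshold are labeled saccadic, and the remaining intervals belong to fixations. The sketch below assumes timestamps in seconds and 3D gaze direction vectors; the 80 °/s threshold, the toy 100 Hz samples, and all function names are illustrative assumptions, not the parameters of this study.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _angle_deg(u: Vec3, v: Vec3) -> float:
    """Angle in degrees between two 3D gaze direction vectors."""
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    dot = sum(a * b for a, b in zip(u, v))
    return math.degrees(math.atan2(math.sqrt(sum(c * c for c in cross)), dot))


def ivt_labels(times: Sequence[float], dirs: Sequence[Vec3],
               threshold_deg_s: float = 80.0) -> Tuple[List[float], List[str]]:
    """I-VT: angular velocity of each inter-sample interval, labeled
    'sac' above the (hypothetical) threshold and 'fix' otherwise."""
    velocities: List[float] = []
    labels: List[str] = []
    for i in range(1, len(times)):
        vel = _angle_deg(dirs[i - 1], dirs[i]) / (times[i] - times[i - 1])
        velocities.append(vel)
        labels.append("sac" if vel > threshold_deg_s else "fix")
    return velocities, labels


def summarize(times: Sequence[float], velocities: Sequence[float],
              labels: Sequence[str]) -> Tuple[List[float], List[float]]:
    """Group runs of equal labels into fixation durations (s) and saccade peak velocities (deg/s).
    Interval k spans times[k] to times[k + 1]."""
    fix_durations: List[float] = []
    sac_peaks: List[float] = []
    run_start = 0
    for i in range(1, len(labels) + 1):
        if i == len(labels) or labels[i] != labels[run_start]:
            if labels[run_start] == "fix":
                fix_durations.append(times[i] - times[run_start])
            else:
                sac_peaks.append(max(velocities[run_start:i]))
            run_start = i
    return fix_durations, sac_peaks


angles = [0.0, 0.1, 0.2, 5.0, 10.0, 10.1, 10.2]  # toy horizontal gaze angles (deg)
times = [k * 0.01 for k in range(len(angles))]   # assumed 100 Hz sampling
dirs = [(math.cos(math.radians(a)), math.sin(math.radians(a)), 0.0) for a in angles]
velocities, labels = ivt_labels(times, dirs)
fixation_durations, saccade_peaks = summarize(times, velocities, labels)
print(labels)
print(fixation_durations, saccade_peaks)
```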

3.6. User Feedback

3.7. User Comfort
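User comfort in VR studies is commonly assessed with the Simulator Sickness Questionnaire (SSQ) of Kennedy et al., whose 16 symptoms are each rated from 0 (none) to 3 (severe) and combined into weighted Nausea, Oculomotor, and Disorientation subscores and a Total Score. The sketch below encodes the standard scoring weights; it illustrates the scoring itself and is not necessarily the exact SSQ variant or analysis applied in this study. The function name and the example ratings are invented.

```python
from typing import Dict, Sequence

# The 16 SSQ symptoms in the order of Kennedy et al. (1993); each is rated 0-3.
SSQ_ITEMS = (
    "general discomfort", "fatigue", "headache", "eyestrain",
    "difficulty focusing", "increased salivation", "sweating", "nausea",
    "difficulty concentrating", "fullness of head", "blurred vision",
    "dizziness (eyes open)", "dizziness (eyes closed)", "vertigo",
    "stomach awareness", "burping",
)

# 0-based item indices contributing to each subscale (some items load on two).
NAUSEA = (0, 5, 6, 7, 8, 14, 15)
OCULOMOTOR = (0, 1, 2, 3, 4, 8, 10)
DISORIENTATION = (4, 7, 9, 10, 11, 12, 13)


def ssq_scores(ratings: Sequence[int]) -> Dict[str, float]:
    """Weighted SSQ subscores and Total Score from 16 ratings in item order."""
    if len(ratings) != len(SSQ_ITEMS):
        raise ValueError("expected 16 ratings")
    if any(r not in (0, 1, 2, 3) for r in ratings):
        raise ValueError("ratings must be integers from 0 to 3")
    n = sum(ratings[i] for i in NAUSEA)
    o = sum(ratings[i] for i in OCULOMOTOR)
    d = sum(ratings[i] for i in DISORIENTATION)
    return {
        "nausea": n * 9.54,
        "oculomotor": o * 7.58,
        "disorientation": d * 13.92,
        "total": (n + o + d) * 3.74,
    }


# Invented ratings: a symptom-free baseline and a mild post-exposure report.
before = [0] * 16
after = [1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0]
delta = {k: ssq_scores(after)[k] - ssq_scores(before)[k] for k in ssq_scores(before)}
print(delta)
```

Comparing post-exposure with pre-exposure scores, as in the `delta` above, is one common way to separate symptoms induced by the session from those a participant already had.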

3.8. Urbanism Use-Case Results

4. Discussion

Limitations

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| HMD | Head-Mounted Display |
| MCV | Multiple and Coordinated Views |
| PCM | Pairwise Comparison Matrix |
| VR | Virtual Reality |

Appendix A. Stack Layout Views and Comparisons

Appendix B. Saccade Polar Plots

References

- Bertin, J. Sémiologie Graphique: Les Diagrammes-Les Réseaux-Les cartes; Gauthier-Villars: Paris, France, 1967.

- Ware, C. Information Visualization: Perception for Design; Morgan Kaufmann: Waltham, MA, USA, 2012.

- Munzner, T. Visualization Analysis and Design; AK Peters/CRC Press: Boca Raton, FL, USA, 2014.

- Andrienko, G.; Andrienko, N.; Jankowski, P.; Keim, D.; Kraak, M.; MacEachren, A.; Wrobel, S. Geovisual analytics for spatial decision support: Setting the research agenda. Int. J. Geogr. Inf. Sci. 2007, 21, 839–857.

- Lobo, M.J.; Pietriga, E.; Appert, C. An evaluation of interactive map comparison techniques. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems—CHI ’15, Seoul, Korea, 18–23 April 2015; ACM Press: New York, NY, USA, 2015; pp. 3573–3582.

- Roberts, J.C. State of the art: Coordinated & multiple views in exploratory visualization. In Proceedings of the Fifth International Conference on Coordinated and Multiple Views in Exploratory Visualization (CMV 2007), Zurich, Switzerland, 2 July 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 61–71.

- Goodwin, S.; Dykes, J.; Slingsby, A.; Turkay, C. Visualizing Multiple Variables Across Scale and Geography. IEEE Trans. Vis. Comput. Graph. 2016, 22, 599–608.

- Wang Baldonado, M.Q.; Woodruff, A.; Kuchinsky, A. Guidelines for Using Multiple Views in Information Visualization. In Proceedings of the Working Conference on Advanced Visual Interfaces AVI ’00, Palermo, Italy, 23–26 May 2000; Association for Computing Machinery: New York, NY, USA, 2000; pp. 110–119.

- Healey, C.; Enns, J. Attention and visual memory in visualization and computer graphics. IEEE Trans. Vis. Comput. Graph. 2011, 18, 1170–1188.

- Spur, M.; Tourre, V.; Coppin, J. Virtually physical presentation of data layers for spatiotemporal urban data visualization. In Proceedings of the 2017 23rd International Conference on Virtual System & Multimedia (VSMM), Dublin, Ireland, 31 October–2 November 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–8.

- Spur, M.; Houel, N.; Tourre, V. Visualizing Multilayered Geospatial Data in Virtual Reality to Assess Public Lighting. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLIII-B4-2020, 623–630.

- Javed, W.; Elmqvist, N. Exploring the design space of composite visualization. In Proceedings of the 2012 IEEE Pacific Visualization Symposium, Songdo, Korea, 28 February–2 March 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1–8.

- Zheng, Y.; Wu, W.; Chen, Y.; Qu, H.; Ni, L.M. Visual Analytics in Urban Computing: An Overview. IEEE Trans. Big Data 2016, 2, 276–296.

- Keim, D.; Andrienko, G.; Fekete, J.D.; Görg, C.; Kohlhammer, J.; Melançon, G. Visual analytics: Definition, process, and challenges. In Information Visualization; Springer: Berlin/Heidelberg, Germany, 2008; pp. 154–175.

- Dwyer, T.; Marriott, K.; Isenberg, T.; Klein, K.; Riche, N.; Schreiber, F.; Stuerzlinger, W.; Thomas, B.H. Immersive analytics: An introduction. In Immersive Analytics; Springer: Berlin/Heidelberg, Germany, 2018; pp. 1–23.

- Ferreira, N.; Poco, J.; Vo, H.T.; Freire, J.; Silva, C.T. Visual Exploration of Big Spatio-Temporal Urban Data: A Study of New York City Taxi Trips. IEEE Trans. Vis. Comput. Graph. 2013, 19, 2149–2158.

- Liu, H.; Gao, Y.; Lu, L.; Liu, S.; Qu, H.; Ni, L.M. Visual analysis of route diversity. In Proceedings of the 2011 IEEE Conference on Visual Analytics Science and Technology (VAST), Providence, RI, USA, 23–28 October 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 171–180.

- Ferreira, N.; Lage, M.; Doraiswamy, H.; Vo, H.; Wilson, L.; Werner, H.; Park, M.; Silva, C. Urbane: A 3D framework to support data driven decision making in urban development. In Proceedings of the 2015 IEEE Conference on Visual Analytics Science and Technology (VAST), Chicago, IL, USA, 25–30 October 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 97–104.

- Chen, Z.; Qu, H.; Wu, Y. Immersive Urban Analytics through Exploded Views. In Proceedings of the IEEE VIS Workshop on Immersive Analytics: Exploring Future Visualization and Interaction Technologies for Data Analytics, Phoenix, AZ, USA, 2017; Available online: http://groups.inf.ed.ac.uk/vishub/immersiveanalytics/papers/IA_1052-paper.pdf (accessed on 31 August 2020).

- Edler, D.; Dickmann, F. Elevating Streets in Urban Topographic Maps Improves the Speed of Map-Reading. Cartogr. Int. J. Geogr. Inf. Geovis. 2015, 50, 217–223.

- Fonnet, A.; Prié, Y. Survey of Immersive Analytics. IEEE Trans. Vis. Comput. Graph. 2019, 1.

- Chandler, T.; Cordeil, M.; Czauderna, T.; Dwyer, T.; Glowacki, J.; Goncu, C.; Klapperstueck, M.; Klein, K.; Marriott, K.; Schreiber, F.; et al. Immersive analytics. In Proceedings of the 2015 Big Data Visual Analytics (BDVA), Hobart, Australia, 22–25 September 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–8.

- Yang, Y.; Jenny, B.; Dwyer, T.; Marriott, K.; Chen, H.; Cordeil, M. Maps and globes in virtual reality. In Computer Graphics Forum; Wiley Online Library: Hoboken, NJ, USA, 2018; Volume 37, pp. 427–438.

- Yang, Y.; Dwyer, T.; Jenny, B.; Marriott, K.; Cordeil, M.; Chen, H. Origin-Destination Flow Maps in Immersive Environments. IEEE Trans. Vis. Comput. Graph. 2018, 25, 693–703.

- Hurter, C.; Riche, N.H.; Drucker, S.M.; Cordeil, M.; Alligier, R.; Vuillemot, R. Fiberclay: Sculpting three dimensional trajectories to reveal structural insights. IEEE Trans. Vis. Comput. Graph. 2018, 25, 704–714.

- Chen, Z.; Wang, Y.; Sun, T.; Gao, X.; Chen, W.; Pan, Z.; Qu, H.; Wu, Y. Exploring the Design Space of Immersive Urban Analytics. Vis. Inform. 2017, 1, 132–142.

- Filho, J.A.W.; Stuerzlinger, W.; Nedel, L. Evaluating an Immersive Space-Time Cube Geovisualization for Intuitive Trajectory Data Exploration. IEEE Trans. Vis. Comput. Graph. 2019, 26, 514–524.

- Liu, J.; Prouzeau, A.; Ens, B.; Dwyer, T. Design and evaluation of interactive small multiples data visualisation in immersive spaces. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Atlanta, GA, USA, 22–26 March 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 588–597.

- Knudsen, S.; Carpendale, S. Multiple views in immersive analytics. In Proceedings of the IEEEVIS 2017 Immersive Analytics (IEEEVIS), Phoenix, AZ, USA, 1 October 2017.

- Lobo, M.J.; Appert, C.; Pietriga, E. MapMosaic: Dynamic layer compositing for interactive geovisualization. Int. J. Geogr. Inf. Sci. 2017, 31, 1818–1845.

- Mahmood, T.; Butler, E.; Davis, N.; Huang, J.; Lu, A. Building multiple coordinated spaces for effective immersive analytics through distributed cognition. In Proceedings of the 4th International Symposium on Big Data Visual and Immersive Analytics, Konstanz, Germany, 17–19 October 2018; pp. 119–128.

- Trapp, M.; Döllner, J. Interactive close-up rendering for detail+overview visualization of 3D digital terrain models. In Proceedings of the 2019 23rd International Conference Information Visualisation (IV), Paris, France, 2–5 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 275–280.

- North, C.; Shneiderman, B. A Taxonomy of Multiple Window Coordination; Technical Report; University of Maryland: College Park, MD, USA, 1997.

- Gleicher, M.; Albers, D.; Walker, R.; Jusufi, I.; Hansen, C.D.; Roberts, J.C. Visual comparison for information visualization. Inf. Vis. 2011, 10, 289–309.

- Gleicher, M. Considerations for Visualizing Comparison. IEEE Trans. Vis. Comput. Graph. 2018, 24, 413–423.

- Vishwanath, D.; Girshick, A.R.; Banks, M.S. Why pictures look right when viewed from the wrong place. Nat. Neurosci. 2005, 8, 1401.

- Amini, F.; Rufiange, S.; Hossain, Z.; Ventura, Q.; Irani, P.; McGuffin, M.J. The impact of interactivity on comprehending 2D and 3D visualizations of movement data. IEEE Trans. Vis. Comput. Graph. 2014, 21, 122–135.

- Ware, C.; Mitchell, P. Reevaluating stereo and motion cues for visualizing graphs in three dimensions. In Proceedings of the 2nd Symposium on Applied Perception in Graphics and Visualization, A Coruña, Spain, 26–28 August 2005; ACM: New York, NY, USA, 2005; pp. 51–58.

- Schulz, H.J.; Nocke, T.; Heitzler, M.; Schumann, H. A Design Space of Visualization Tasks. IEEE Trans. Vis. Comput. Graph. 2013, 19, 2366–2375.

- Theuns, J. Visualising Origin-Destination Data with Virtual Reality: Functional Prototypes and a Framework for Continued VR Research at the ITC Faculty. Bachelor’s Thesis, University of Twente, Enschede, The Netherlands, 2017.

- Guse, D.; Orefice, H.R.; Reimers, G.; Hohlfeld, O. TheFragebogen: A Web Browser-based Questionnaire Framework for Scientific Research. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), Berlin, Germany, 5–7 June 2019; pp. 1–3.

- Kurzhals, K.; Fisher, B.; Burch, M.; Weiskopf, D. Eye tracking evaluation of visual analytics. Inf. Vis. 2016, 15, 340–358.

- David, E.J.; Gutiérrez, J.; Coutrot, A.; Da Silva, M.P.; Callet, P.L. A dataset of head and eye movements for 360 videos. In Proceedings of the 9th ACM Multimedia Systems Conference, Amsterdam, The Netherlands, 12–15 June 2018; ACM: New York, NY, USA, 2018; pp. 432–437.

- David, E.J.; Lebranchu, P.; Da Silva, M.P.; Le Callet, P. Predicting artificial visual field losses: A gaze-based inference study. J. Vis. 2019, 19, 22.

- Salvucci, D.D.; Goldberg, J.H. Identifying fixations and saccades in eye-tracking protocols. In Proceedings of the 2000 Symposium on Eye Tracking Research & Applications, Palm Beach Gardens, FL, USA, 6–8 November 2000; ACM: New York, NY, USA, 2000; pp. 71–78.

- Bradley, R.A. 14 paired comparisons: Some basic procedures and examples. Handb. Stat. 1984, 4, 299–326.

- Kennedy, R.S.; Lane, N.E.; Berbaum, K.S.; Lilienthal, M.G. Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 1993, 3, 203–220.

- Sevinc, V.; Berkman, M.I. Psychometric evaluation of simulator sickness questionnaire and its variants as a measure of cybersickness in consumer virtual environments. Appl. Ergon. 2020, 82, 102958.

- Bimberg, P.; Weissker, T.; Kulik, A. On the usage of the simulator sickness questionnaire for virtual reality research. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 464–467.

- Yan, Y.; Chen, K.; Xie, Y.; Song, Y.; Liu, Y. The effects of weight on comfort of virtual reality devices. In International Conference on Applied Human Factors and Ergonomics; Springer: Berlin/Heidelberg, Germany, 2018; pp. 239–248.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Spur, M.; Tourre, V.; David, E.; Moreau, G.; Le Callet, P. Exploring Multiple and Coordinated Views for Multilayered Geospatial Data in Virtual Reality. Information 2020, 11, 425. https://doi.org/10.3390/info11090425

Spur M, Tourre V, David E, Moreau G, Le Callet P. Exploring Multiple and Coordinated Views for Multilayered Geospatial Data in Virtual Reality. Information. 2020; 11(9):425. https://doi.org/10.3390/info11090425

Chicago/Turabian Style: Spur, Maxim, Vincent Tourre, Erwan David, Guillaume Moreau, and Patrick Le Callet. 2020. "Exploring Multiple and Coordinated Views for Multilayered Geospatial Data in Virtual Reality" Information 11, no. 9: 425. https://doi.org/10.3390/info11090425

APA Style: Spur, M., Tourre, V., David, E., Moreau, G., & Le Callet, P. (2020). Exploring Multiple and Coordinated Views for Multilayered Geospatial Data in Virtual Reality. Information, 11(9), 425. https://doi.org/10.3390/info11090425