Learning a Convolutional Autoencoder for Nighttime Image Dehazing

Abstract

1. Introduction

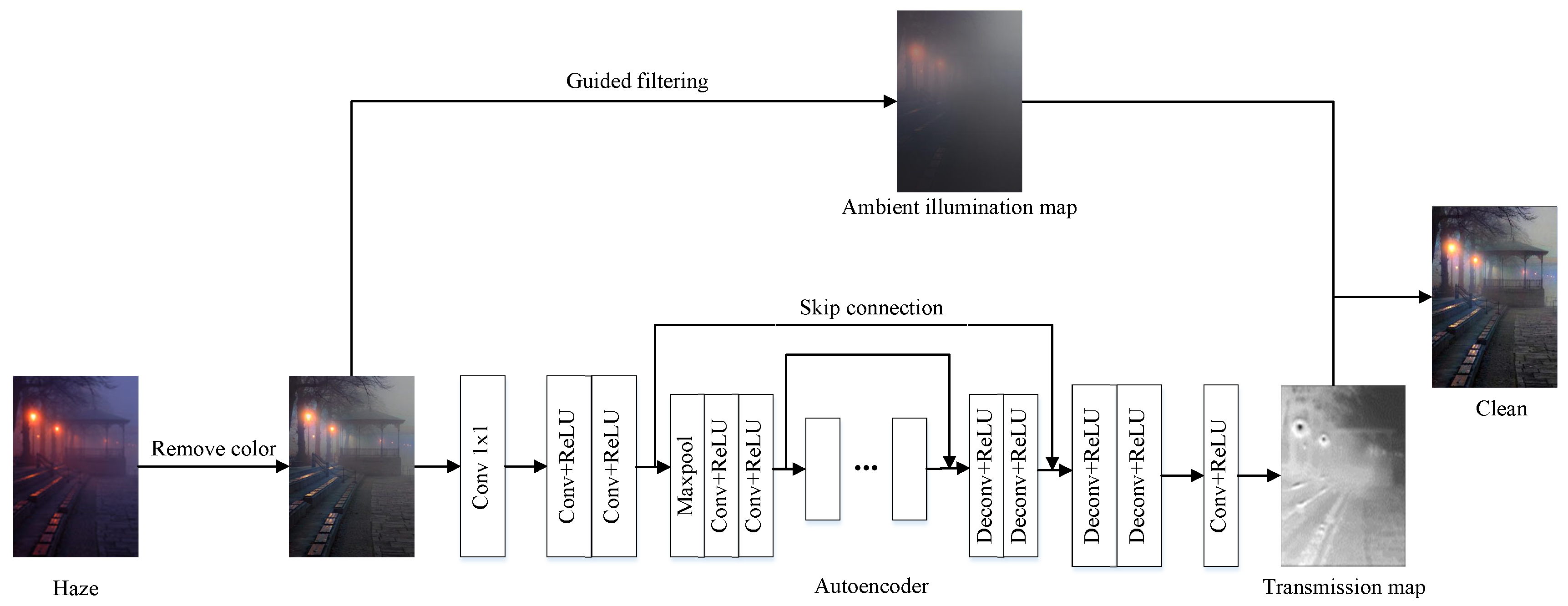

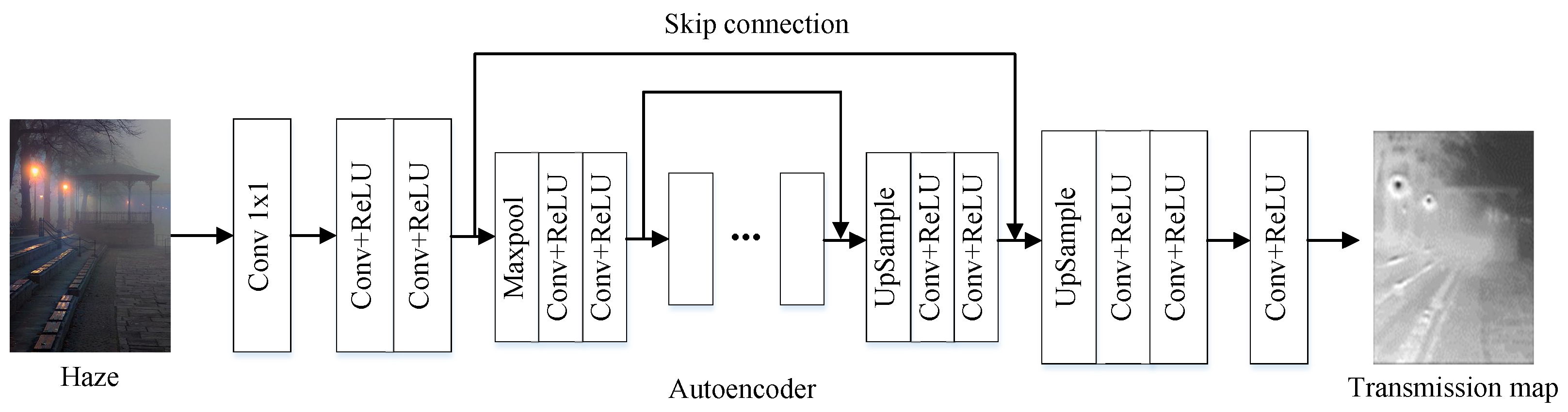

- We propose a novel method for estimating the transmission map of nighttime hazy images: a convolutional autoencoder that addresses the overestimation and underestimation of transmission found in traditional methods.

- Because the ambient illumination comes mainly from the low-frequency components of an image, we propose to obtain it by guided filtering. This method is more accurate than the local pixel-maximum method.

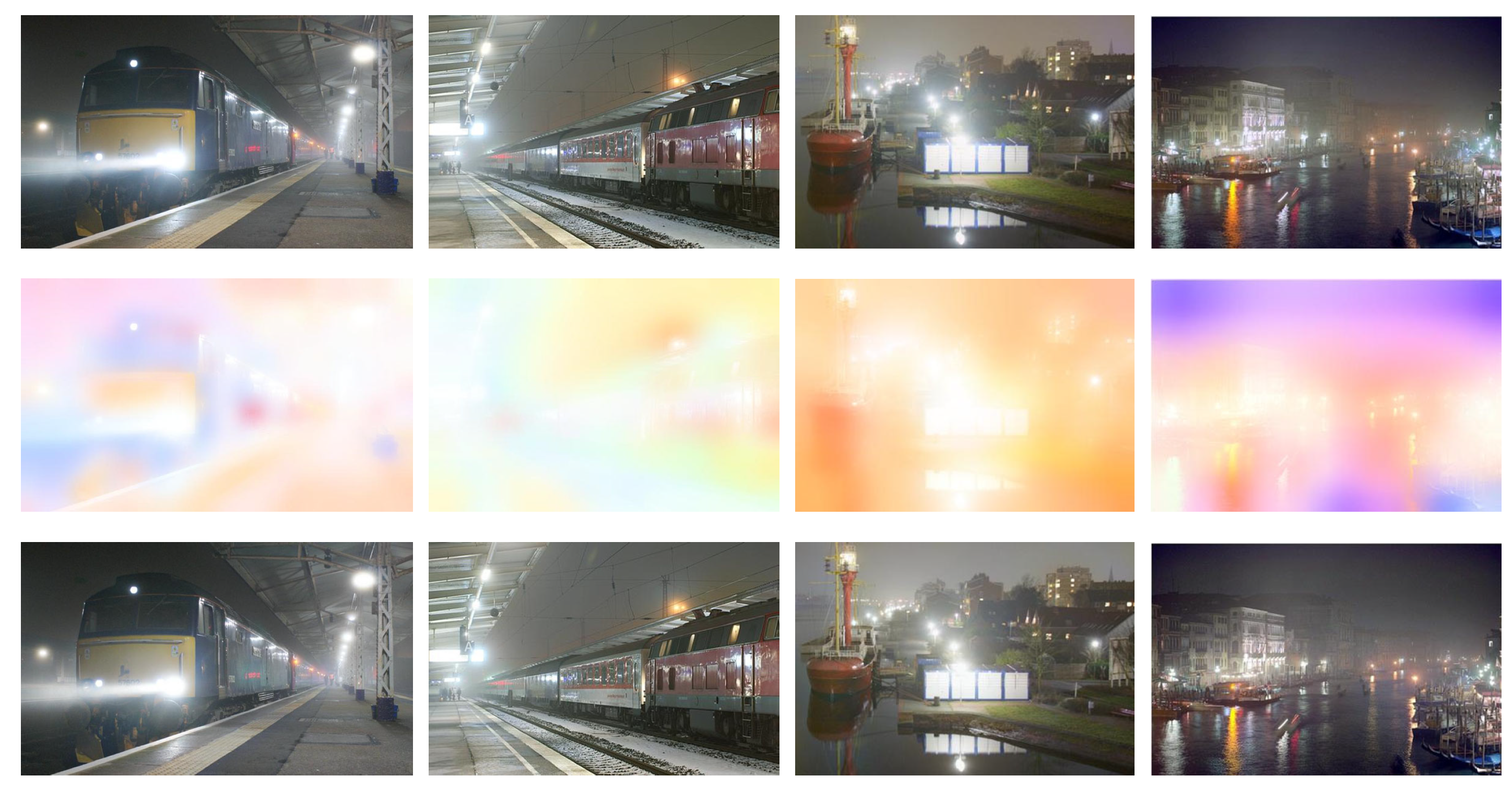

- To make the synthesized images close to real nighttime conditions, we propose a new method for synthesizing the nighttime haze training set.

2. Related Work

3. Our Method

3.1. Nighttime Haze Model
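As an illustration, the sketch below assumes the standard atmospheric scattering model of McCartney, I(x) = J(x)t(x) + A(x)(1 - t(x)), where I is the observed hazy image, J the clean scene radiance, t the transmission, and the single global atmospheric light is replaced by a spatially varying ambient illumination A(x), a common choice for night scenes. The exact model adopted in this paper may differ. Given estimates of t and A, the clean radiance follows by inverting the model. The function name `recover_radiance` and the lower bound `t_min = 0.1` are assumptions for illustration.

```python
import numpy as np


def recover_radiance(hazy, transmission, ambient, t_min=0.1):
    """Invert I = J * t + A * (1 - t) for the clean radiance J.

    Assumed model (not necessarily the paper's exact formulation).
    hazy:         H x W x 3 float array in [0, 1], the observed image I
    transmission: H x W float array, the transmission map t
    ambient:      H x W x 3 float array, spatially varying ambient light A
    t_min:        assumed lower bound on t so near-zero values do not
                  amplify noise when dividing
    """
    # Clamp t and add a channel axis so it broadcasts over RGB.
    t = np.clip(transmission, t_min, 1.0)[..., None]
    radiance = (hazy - ambient * (1.0 - t)) / t
    # Keep the result in the valid intensity range.
    return np.clip(radiance, 0.0, 1.0)
```

Clamping t from below is a common safeguard in dehazing: where t approaches zero, the division would otherwise amplify noise without bound.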

3.2. Color Correction

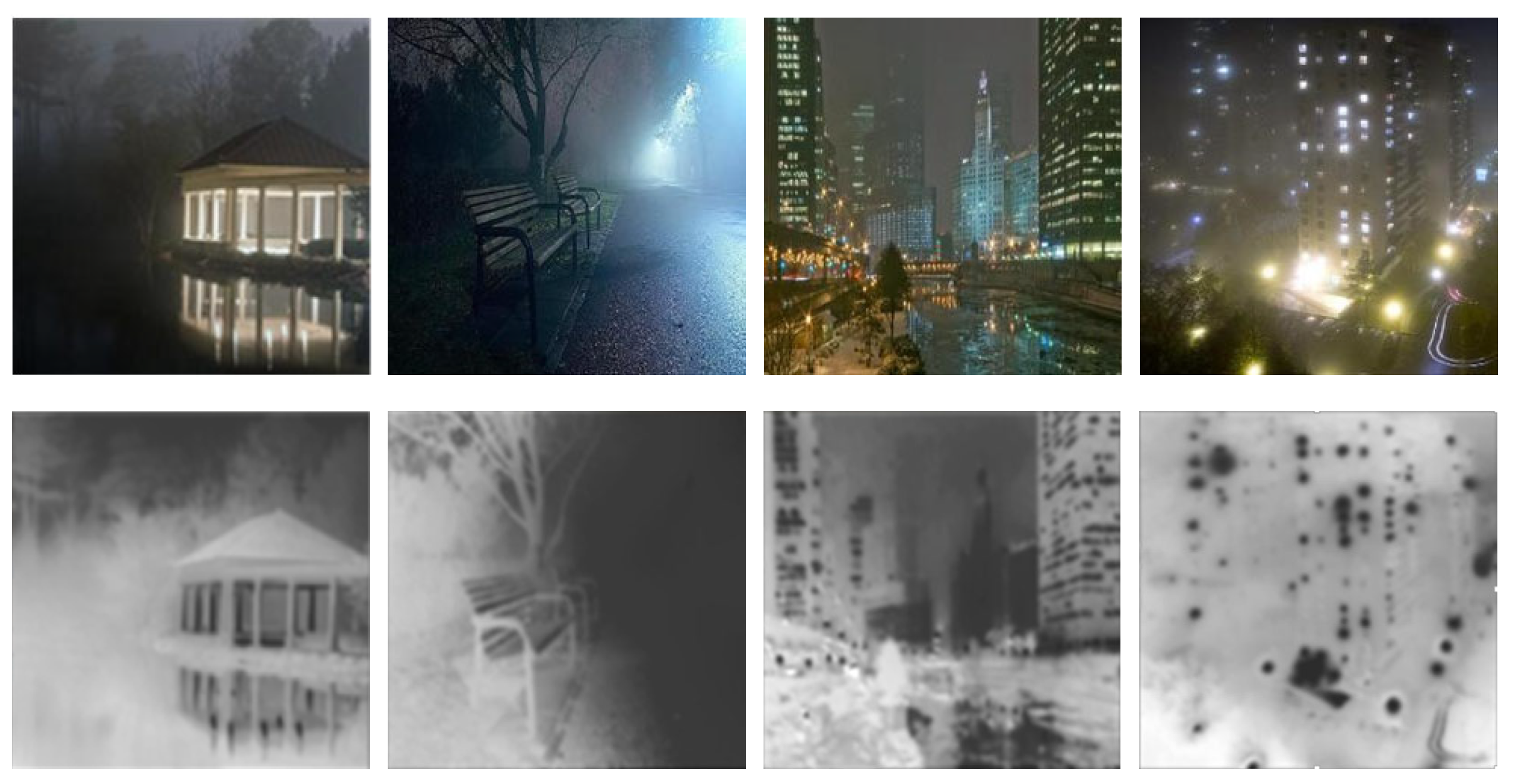

3.3. Transmission Estimation

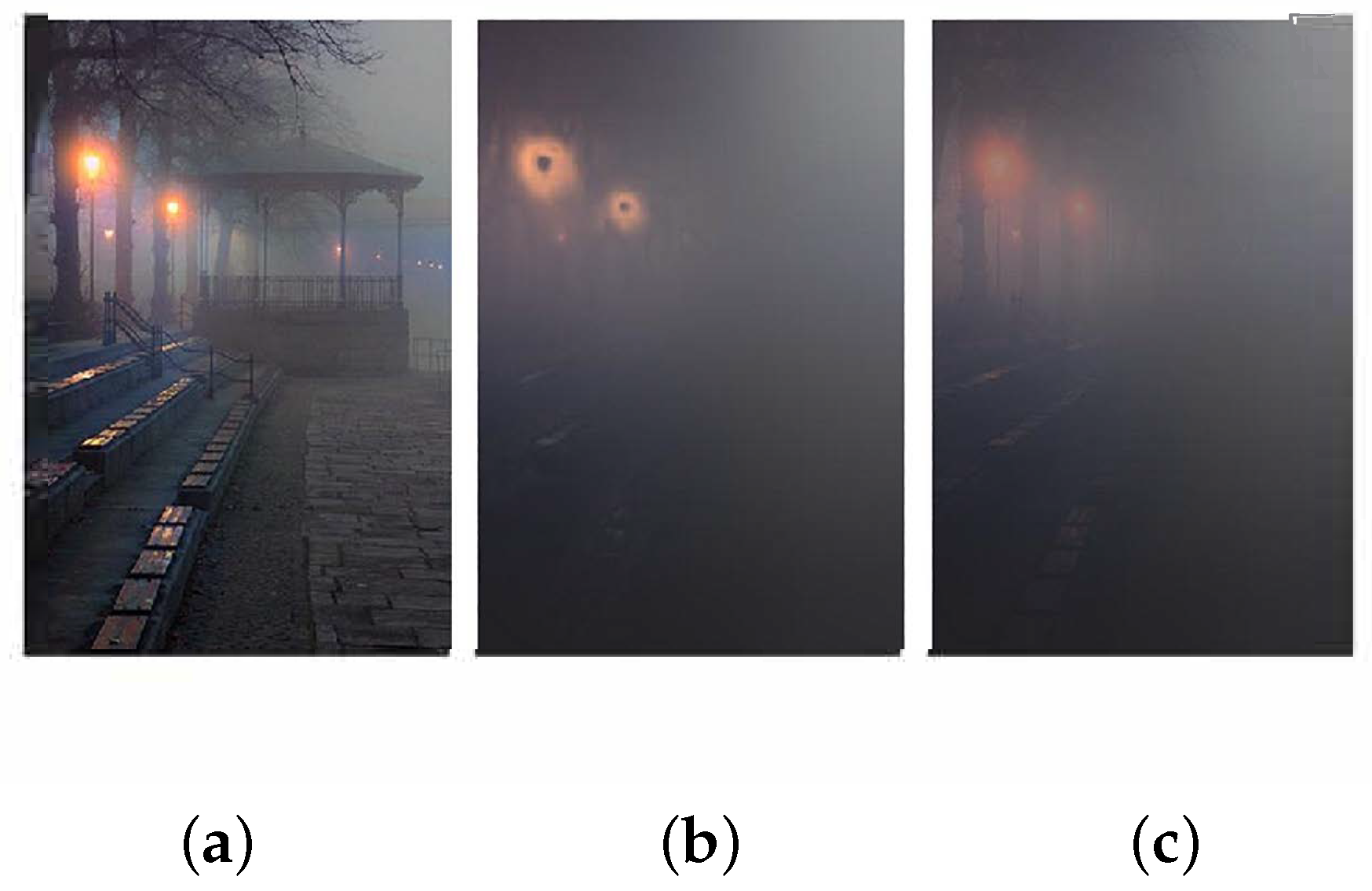

3.4. Ambient Illumination Estimation
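The introduction states that the ambient illumination lies mainly in the low-frequency components of the image and is obtained by guided filtering. Below is a minimal NumPy sketch of He et al.'s guided filter applied to this task. It assumes the guide is the channel average of the hazy image, that each color channel is filtered separately, and that the radius `r` and regularizer `eps` take the values shown. None of these are the paper's stated settings, and the helper names `box_mean`, `guided_filter`, and `estimate_ambient` are hypothetical.

```python
import numpy as np


def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window, truncated at the image borders."""
    h, w = img.shape
    # Integral image with a leading row and column of zeros.
    s = np.zeros((h + 1, w + 1))
    s[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    y0 = np.clip(np.arange(h) - r, 0, h)
    y1 = np.clip(np.arange(h) + r + 1, 0, h)
    x0 = np.clip(np.arange(w) - r, 0, w)
    x1 = np.clip(np.arange(w) + r + 1, 0, w)
    total = s[y1][:, x1] - s[y0][:, x1] - s[y1][:, x0] + s[y0][:, x0]
    count = (y1 - y0)[:, None] * (x1 - x0)[None, :]
    return total / count


def guided_filter(guide, src, r=15, eps=1e-3):
    """Gray-guide guided filter: local linear model q = a * guide + b."""
    mean_i = box_mean(guide, r)
    mean_p = box_mean(src, r)
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    var_i = box_mean(guide * guide, r) - mean_i * mean_i
    a = cov_ip / (var_i + eps)
    b = mean_p - a * mean_i
    # Average the per-window coefficients before applying them.
    return box_mean(a, r) * guide + box_mean(b, r)


def estimate_ambient(hazy, r=15, eps=1e-2):
    """Smooth each channel of an H x W x 3 image into an ambient estimate.

    Assumption: the guide is the channel average of the hazy image.
    """
    gray = hazy.mean(axis=2)
    channels = [guided_filter(gray, hazy[..., c], r, eps) for c in range(3)]
    return np.clip(np.stack(channels, axis=2), 0.0, 1.0)
```

A larger `r` and `eps` push the output toward a smoother, lower-frequency estimate. Because the window means come from integral images, the cost stays linear in the number of pixels regardless of the radius.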

4. Experiments

4.1. Data Synthesis

Algorithm 1: Algorithm for synthesizing nighttime training images

Input: clean image c and depth map d
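Algorithm 1 takes a clean image c and a depth map d. The following minimal NumPy sketch shows one way such a synthesis could be done. It is not the authors' algorithm. It assumes the transmission is derived from depth as t = exp(-beta * d), and it builds a hypothetical spatially varying ambient illumination from a few randomly placed Gaussian light sources to imitate artificial lighting at night. The function name `synthesize_night_haze`, the parameters `beta` and `n_lights`, and the light-source model are all illustrative assumptions.

```python
import numpy as np


def synthesize_night_haze(c, d, beta=1.0, n_lights=3, seed=None):
    """Return (hazy, transmission, ambient) for a clean image and depth map.

    c: H x W x 3 clean image in [0, 1]
    d: H x W depth map (assumed normalized, larger = farther)
    Hypothetical sketch: Gaussian "light sources" stand in for street lights.
    """
    rng = np.random.default_rng(seed)
    h, w = d.shape
    # Assumed depth-to-transmission relation: t = exp(-beta * d).
    t = np.exp(-beta * d)
    yy, xx = np.mgrid[0:h, 0:w]
    ambient = np.zeros((h, w, 3))
    for _ in range(n_lights):
        cy, cx = rng.uniform(0, h), rng.uniform(0, w)
        sigma = rng.uniform(0.1, 0.3) * max(h, w)
        color = rng.uniform(0.6, 1.0, size=3)  # random light color
        blob = np.exp(-((yy - cy) ** 2 + (xx - cx) ** 2) / (2 * sigma ** 2))
        ambient += blob[..., None] * color
    ambient = np.clip(ambient, 0.0, 1.0)
    # Composite with the assumed model I = J * t + A * (1 - t).
    hazy = c * t[..., None] + ambient * (1.0 - t[..., None])
    return np.clip(hazy, 0.0, 1.0), t, ambient
```

With d = 0 the output equals the clean image, and for very large depths it approaches the ambient illumination. These two limits are a quick sanity check on any synthesis of this form.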

4.2. Experimental Details

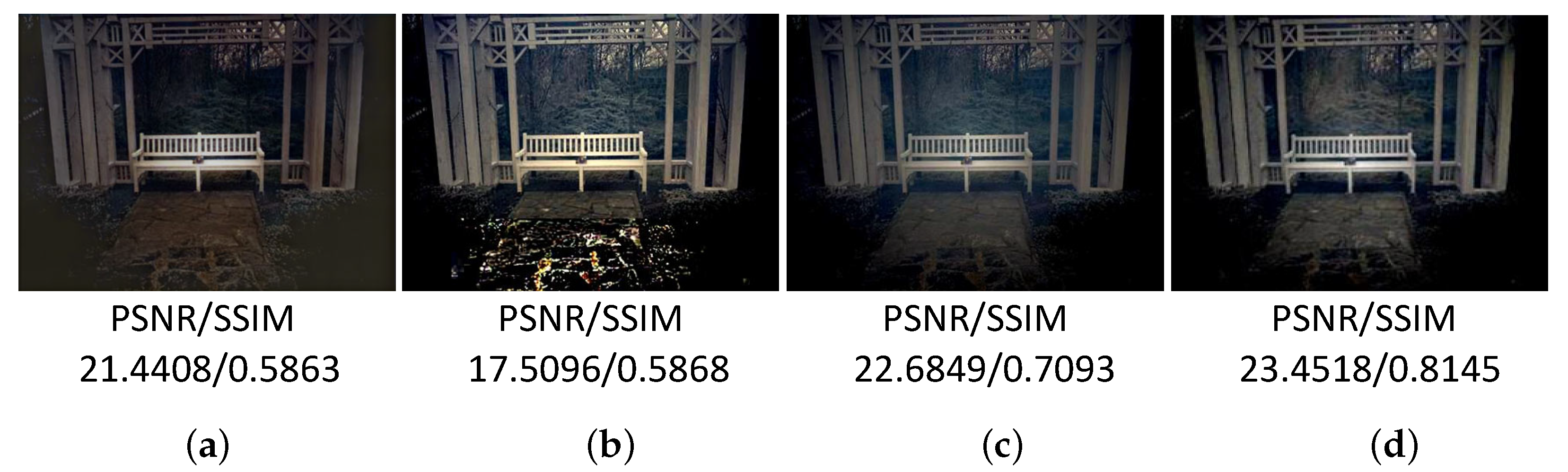

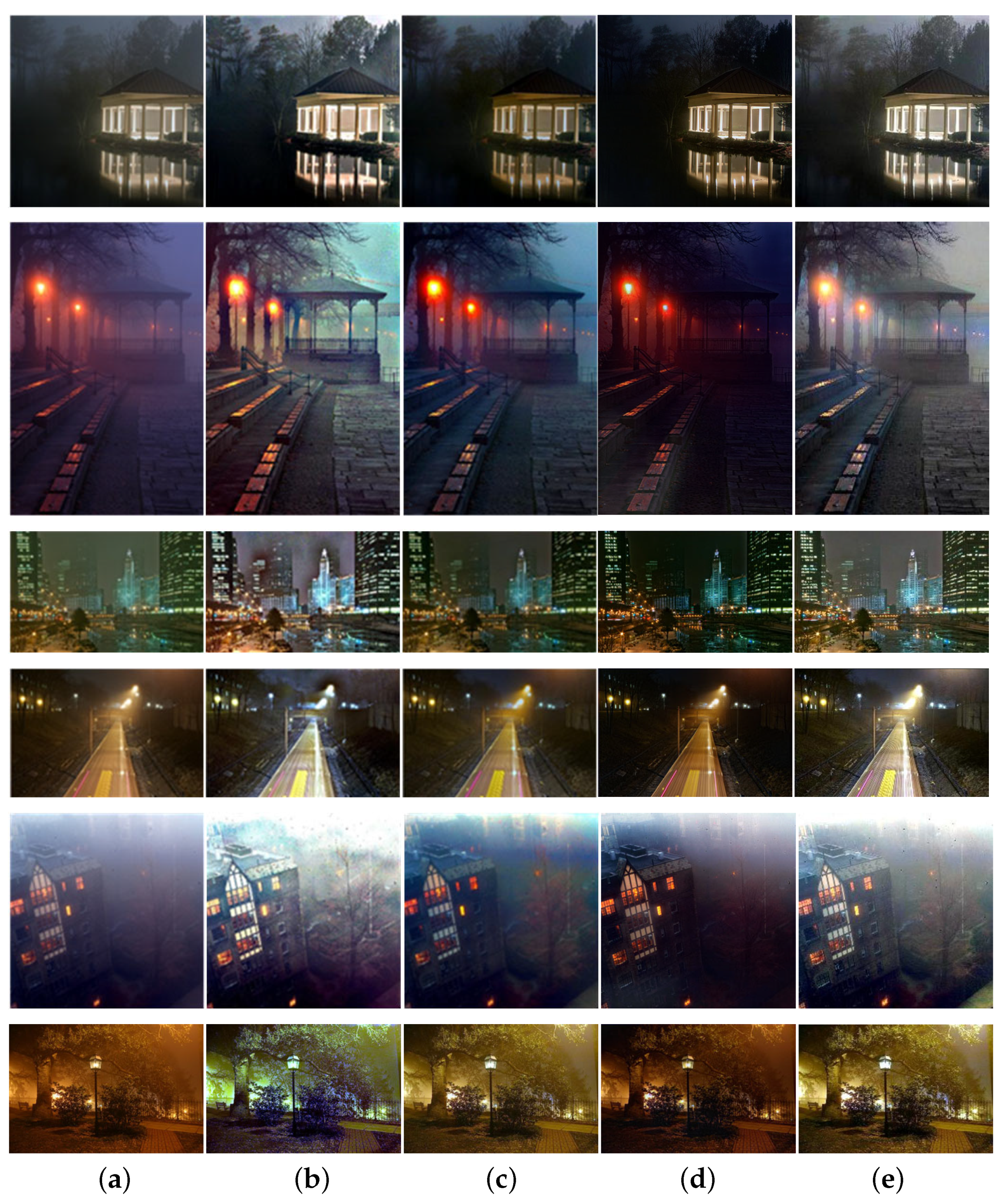

4.3. Comparison of Real Images

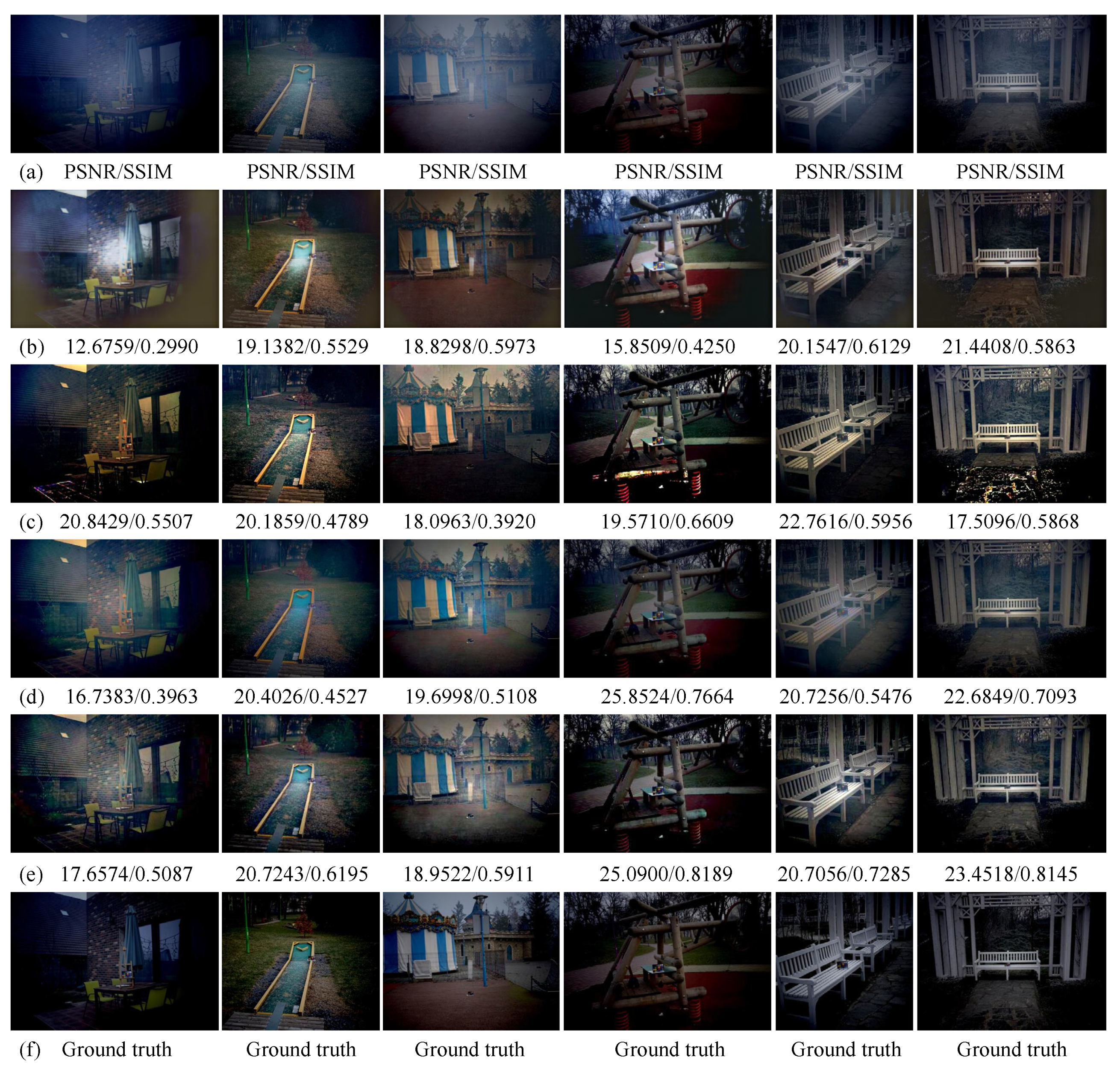

4.4. Comparison of Synthetic Images

5. Summary

Author Contributions

Funding

Conflicts of Interest


| Input Activation (C × H × W) | Kernel (C_out × k × k) | Stride | Padding | Max-Pooling (factor) |
|---|---|---|---|---|
| 3 × 256 × 256 | 64 × 3 × 3 | 1 | 1 | - |
| 64 × 256 × 256 | 64 × 3 × 3 | 1 | 1 | 2 |
| 64 × 128 × 128 | 128 × 3 × 3 | 1 | 1 | - |
| 128 × 128 × 128 | 128 × 3 × 3 | 1 | 1 | 2 |
| 128 × 64 × 64 | 256 × 3 × 3 | 1 | 1 | - |
| 256 × 64 × 64 | 256 × 3 × 3 | 1 | 1 | 2 |
| 256 × 32 × 32 | 512 × 3 × 3 | 1 | 1 | - |
| 512 × 32 × 32 | 512 × 3 × 3 | 1 | 1 | 2 |
| 512 × 16 × 16 | 512 × 3 × 3 | 1 | 1 | - |
| 512 × 16 × 16 | 512 × 3 × 3 | 1 | 1 | - |

| Input Activation (C × H × W) | Up-Sampling (factor) | Kernel (C_out × k × k) | Stride | Padding |
|---|---|---|---|---|
| 512 × 16 × 16 | 2 | 256 × 3 × 3 | 1 | 1 |
| 256 × 32 × 32 | - | 256 × 3 × 3 | 1 | 1 |
| 256 × 32 × 32 | 2 | 128 × 3 × 3 | 1 | 1 |
| 128 × 64 × 64 | - | 128 × 3 × 3 | 1 | 1 |
| 128 × 64 × 64 | 2 | 64 × 3 × 3 | 1 | 1 |
| 64 × 128 × 128 | - | 64 × 3 × 3 | 1 | 1 |
| 64 × 128 × 128 | 2 | 64 × 3 × 3 | 1 | 1 |
| 64 × 256 × 256 | - | 64 × 3 × 3 | 1 | 1 |
| 64 × 256 × 256 | - | 1 × 1 × 1 | 1 | 0 |
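The two tables list the encoder and decoder layers of the transmission autoencoder. The plain-Python sketch below walks their rows as a shape check. It assumes that each convolution follows the standard output-size formula, that max-pooling divides height and width by the listed factor, and that up-sampling multiplies them by the listed factor before the convolution (the column order suggests this, and the resulting shapes are the same either way). It does not model activations, normalization, or skip connections, which the tables do not specify. The names `conv_out`, `ENCODER`, `DECODER`, and `trace_shapes` are hypothetical.

```python
def conv_out(size, kernel, stride, padding):
    """Standard convolution output-size formula for one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


# Encoder rows: (out_channels, kernel, stride, padding, max_pool_factor or None)
ENCODER = [
    (64, 3, 1, 1, None), (64, 3, 1, 1, 2),
    (128, 3, 1, 1, None), (128, 3, 1, 1, 2),
    (256, 3, 1, 1, None), (256, 3, 1, 1, 2),
    (512, 3, 1, 1, None), (512, 3, 1, 1, 2),
    (512, 3, 1, 1, None), (512, 3, 1, 1, None),
]

# Decoder rows: (up_sample_factor or None, out_channels, kernel, stride, padding)
DECODER = [
    (2, 256, 3, 1, 1), (None, 256, 3, 1, 1),
    (2, 128, 3, 1, 1), (None, 128, 3, 1, 1),
    (2, 64, 3, 1, 1), (None, 64, 3, 1, 1),
    (2, 64, 3, 1, 1), (None, 64, 3, 1, 1),
    (None, 1, 1, 1, 0),  # 1x1 convolution to a single-channel map
]


def trace_shapes(shape=(3, 256, 256)):
    """Return the (C, H, W) shape before the first layer and after every layer."""
    c, h, w = shape
    shapes = [shape]
    for out_c, k, s, p, pool in ENCODER:
        c, h, w = out_c, conv_out(h, k, s, p), conv_out(w, k, s, p)
        if pool:
            h, w = h // pool, w // pool
        shapes.append((c, h, w))
    for up, out_c, k, s, p in DECODER:
        if up:
            h, w = h * up, w * up
        c, h, w = out_c, conv_out(h, k, s, p), conv_out(w, k, s, p)
        shapes.append((c, h, w))
    return shapes
```

For example, `trace_shapes()[-1]` gives `(1, 256, 256)`: a single-channel transmission map at the input resolution, and every intermediate shape matches the "Input Activation" column of the tables.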

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Feng, M.; Yu, T.; Jing, M.; Yang, G. Learning a Convolutional Autoencoder for Nighttime Image Dehazing. Information 2020, 11, 424. https://doi.org/10.3390/info11090424

Feng M, Yu T, Jing M, Yang G. Learning a Convolutional Autoencoder for Nighttime Image Dehazing. Information. 2020; 11(9):424. https://doi.org/10.3390/info11090424

Chicago/Turabian Style: Feng, Mengyao, Teng Yu, Mingtao Jing, and Guowei Yang. 2020. "Learning a Convolutional Autoencoder for Nighttime Image Dehazing." Information 11, no. 9: 424. https://doi.org/10.3390/info11090424

APA Style: Feng, M., Yu, T., Jing, M., & Yang, G. (2020). Learning a Convolutional Autoencoder for Nighttime Image Dehazing. Information, 11(9), 424. https://doi.org/10.3390/info11090424