1. Introduction

In today’s digital era, social media platforms generate huge volumes of data with high velocity and variety (e.g., images, text, and video). Every day, approximately 900 million photos are uploaded on Facebook, 500 million tweets are posted on Twitter, and 0.4 million hours of video are uploaded on YouTube [1], producing potentially unbounded and ever-growing Big Data streams. Twitter, a popular microblogging platform, is used by millions of people worldwide to stay socially connected and to obtain information about world events [2,3], natural disasters [4], healthcare [5], etc. Twitter users act as distributed “social sensors” that report happenings around the globe [4]. The short messages used for communication on Twitter are known as tweets and can be up to 280 characters long. A user can follow other users, read their tweets, and repost them; reposted tweets are known as retweets. Because millions of Twitter users generate this deluge of data every day, tweet analytics is regarded as a fundamental Big Data stream problem. Automatically detecting the topics discussed in tweets is extremely useful in certain cases, for instance, detecting real-time events such as an earthquake [4], predicting the needs of people during natural disasters such as Hurricane Harvey in Houston [6], and detecting influenza epidemics [5].

Hashtags, user-defined hyperlinked words, act as a convention for adding context and metadata to tweets and are used extensively on Twitter [7]. They indicate the topic of a tweet and make it easier to search for tweets on a common subject. Hashtags cover an immense range of topics and user interests; for example, #health, #graduation, #presidentialelection, #angry, #Oscars2016, and #HarveyRescue convey a health state, a topic, a political campaign, an emotion, an event, and a disaster rescue, respectively.

Recent research in tweet analytics has studied how hashtags can be applied effectively to determine peak-time popularity [8,9], retrieve tweets [10,11,12], and extract tweet keywords [13]. While many hashtag applications are successful, tweet topic classification using hashtags remains challenging because tweets and hashtags constantly evolve. Moreover, tweets are very noisy, combining slang, abbreviations, emoticons, URLs, and ambiguous words, which makes extracting relevant information an arduous task. To make things worse, there is no standard for how hashtags are created or expressed: a user may express the same subject with different hashtags at different points in time (e.g., #omg, #ohmygod). Users also typically include hashtags on several topics in a single tweet, which makes it harder to identify the hashtags relevant to a specific topic. For example, the tweet “Enjoyed Friday with #movie, #hollywood, #food, #restaurant, #art, #sketch” shows that the user enjoyed Friday by watching a movie, going to a restaurant, and drawing a sketch. Because this tweet discusses multiple topics (entertainment, food, and art), it is hard to determine a single topic of discussion.
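To make the multi-topic problem concrete, the sketch below extracts the hashtags from the example tweet and maps each one to a topic. The regular expression and the `TOPIC_OF` lookup are assumptions made for illustration and are not part of the proposed framework. Even with a perfect lookup, this single tweet yields three candidate topics.

```python
import re

# A hashtag is '#' followed by word characters, so trailing commas are dropped.
HASHTAG_RE = re.compile(r"#(\w+)")

# Hypothetical hashtag-to-topic lookup, used only to illustrate the ambiguity.
TOPIC_OF = {
    "movie": "entertainment", "hollywood": "entertainment",
    "food": "food", "restaurant": "food",
    "art": "art", "sketch": "art",
}

def extract_hashtags(tweet: str) -> list[str]:
    """Return the tweet's hashtags, lower-cased, in order of appearance."""
    return [tag.lower() for tag in HASHTAG_RE.findall(tweet)]

tweet = "Enjoyed Friday with #movie, #hollywood, #food, #restaurant, #art, #sketch"
hashtags = extract_hashtags(tweet)
topics = {TOPIC_OF[h] for h in hashtags if h in TOPIC_OF}

print(hashtags)        # ['movie', 'hollywood', 'food', 'restaurant', 'art', 'sketch']
print(sorted(topics))  # ['art', 'entertainment', 'food']
```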

The majority of existing approaches [14,15,16,17,18,19,20,21,22,23] that use hashtags for tweet topic classification are lexicon-based: they extract hashtag concepts from external lexical resources to derive semantics. However, because hashtags evolve rapidly and are expressed casually, these approaches lack generality and scalability. Lexicon-based approaches are widely used for sentiment analysis [7,14,15,24,25,26,27,28] but not for general topic classes. Some lexicon-based approaches [20,21,22,23,29,30] that use existing knowledge bases [31,32,33] for general tweet topic classification suffer from missing hashtag words in those knowledge bases, owing to the highly variable nature of hashtags. They also have complex structures that make them hard to automate and unsuitable for real-time Twitter stream analytics.

Some tweet topic classification studies [34,35] have classified tweets by combining hashtags with other features instead of primarily improving the semantics of hashtags. Research in [34] used social network information, while [35] combined hashtags with a set of features from the user’s profile to classify tweets into topics.

Another major limitation of existing tweet topic classification approaches [34,35,36] is that they work in a centralized environment, whereas a real tweet Big Data stream requires an online, distributed approach to cope with the fast and dynamic arrival rate of tweets. There is therefore a need for tweet topic classification approaches that scale well and process data in real time. The research literature includes large-scale implementations for Twitter sentiment analysis [26,27] but not for tweet topic classification. Moreover, the topic classifier must be able to update its preprocessing and learning model incrementally so that it automatically adapts to new features and improves its accuracy over time.

Because hashtags vary so widely, batch learning alone is not sufficient for tweet topic classification: the fast evolution of topics and user interests quickly makes a batch classifier obsolete, which leads to incorrect predictions. Stream learning can resolve this issue by updating the model continuously as new data arrive. On its own, however, it is also of limited benefit, because it offers less supervision in feature selection and depends heavily on the arriving data for its predictions. Each mechanism has performance advantages in different situations. For example, batch learning can identify, up front, domain-specific hashtags that are representative of a given class, while stream learning can update the relevant hashtags in real time as new data arrive. In general, batch learning works well with a fixed set of features in a non-evolving environment, while stream learning is best suited to a varying number of features in an evolving environment. Used alone, each is therefore applicable only to specific problem settings and lacks generality: the batch classifier becomes obsolete as the data evolve, while the stream classifier may fail to improve its predictions when it starts from low-quality features. Combining the two resolves this issue, since the batch component can build an initial model with a good set of features and the online component can improve that model with new features in real time. This motivates our proposal of a framework that exploits both batch and online mechanisms for tweet topic classification using hashtags.
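This division of labor can be illustrated with any classifier that supports incremental updates. The sketch below uses a from-scratch multinomial Naive Bayes over hashtags purely as a stand-in, because this section does not name the classifier. `fit_batch` plays the role of the batch component, and `update` plays the role of the online component. The class and method names are hypothetical.

```python
import math
from collections import Counter, defaultdict

class IncrementalNB:
    """Multinomial Naive Bayes over hashtag tokens with Laplace smoothing.

    fit_batch() builds the initial model from labeled tweets (batch phase);
    update() folds in one more labeled tweet (online phase), so the hashtag
    vocabulary can keep growing as new hashtags appear in the stream.
    """

    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha
        self.class_counts = Counter()             # label -> number of tweets
        self.token_counts = defaultdict(Counter)  # label -> hashtag -> count
        self.vocab = set()

    def update(self, hashtags, label):
        self.class_counts[label] += 1
        self.token_counts[label].update(hashtags)
        self.vocab.update(hashtags)

    def fit_batch(self, labeled_tweets):
        for hashtags, label in labeled_tweets:
            self.update(hashtags, label)
        return self

    def predict(self, hashtags):
        total = sum(self.class_counts.values())
        v = len(self.vocab)
        best, best_score = None, -math.inf
        for label, n in self.class_counts.items():
            counts = self.token_counts[label]
            denom = sum(counts.values()) + self.alpha * v
            score = math.log(n / total)  # log prior
            for h in hashtags:           # log likelihood of each hashtag
                score += math.log((counts[h] + self.alpha) / denom)
            if score > best_score:
                best, best_score = label, score
        return best

# Batch phase: initial model from a small labeled set.
model = IncrementalNB().fit_batch([
    (["nfl", "football"], "sports/entertainment"),
    (["nba", "oscars"], "sports/entertainment"),
    (["health", "flu"], "others"),
    (["election", "vote"], "others"),
])
print(model.predict(["football"]))  # sports/entertainment

# Online phase: a new hashtag enters the vocabulary without retraining.
model.update(["rio2016", "football"], "sports/entertainment")
print(model.predict(["rio2016"]))   # sports/entertainment
```

Naive Bayes was chosen here only because its sufficient statistics are plain counts, so a batch fit and a sequence of single-tweet updates produce the same model.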

This research first exploits domain-specific knowledge to identify a set of strong hashtag predictors (i.e., Hybrid Hashtags) for tweet topic classification [37]. The proposed approach combines two types of hashtags: 1) those extracted from the input tweet data and 2) those derived from a knowledge base of topic (or class) concepts, i.e., a topic ontology. The second type is obtained with Hashtagify [38], a tool that generates “similar” hashtags for a given term (see [38] for details). The Hybrid Hashtags approach improves the accuracy of classifying topic classes using hashtags alone and helps resolve ambiguity. We evaluate its effectiveness in a batch environment with the batch version of the Hybrid Hashtag-based Tweet topic classification framework (HHTC-Batch). The results show that our approach classifies tweet topics effectively compared with other state-of-the-art approaches. Furthermore, the approach is general in the sense that it can be applied to any given class concept of any domain.

To cope with the speed and volume of Big Data streams, we developed an online version of the Hybrid Hashtags approach [39]. It efficiently updates the preprocessing and the learning model incrementally in real time. This online method automatically updates the Hybrid Hashtags as new tweet data arrive and supports distributed, scalable processing. We evaluate its effectiveness in a stream environment with the HHTC-Stream version of the framework. Because the method is deployed in a real-time stream processing framework, Apache Storm [40], we evaluate not only its real-time model updates but also its scalability, through throughput and speed experiments.

Because batch and online mechanisms have performance advantages in different situations, this paper proposes a comprehensive framework for tweet topic classification using Hybrid Hashtags, the Hybrid Hashtag-based Tweet topic classification (HHTC) framework. HHTC combines batch and online mechanisms in a real-time distributed framework (Apache Storm) [40]. It improves tweet topic classification by building an initial model with a good set of hashtag features selected through the Hybrid Hashtags approach. It also provides a real-time analytics solution by continuously updating those Hybrid Hashtags. Overall, the framework offers a comprehensive solution for hashtag-based tweet analytics. It first provides a simple and effective technique to extract relevant hashtags for any given class/topic, then applies that technique in an online, distributed manner to handle large-scale Twitter Big Data streams. Extensive experimental evaluations show the individual performance advantages of the batch and online mechanisms as well as their combined performance in the proposed framework.

The remainder of the paper is organized as follows: Section 2 discusses related work. In Section 3, we describe some of the preliminaries for the approach and the Apache Storm framework. Section 4 presents the proposed tweet topic classification framework with all three versions, i.e., batch, streaming, and a combination of both. Section 5 provides information about the dataset used and its characteristics. Section 6 presents an experimental evaluation and the results. Finally, Section 7 concludes the paper with a discussion and future work.

2. Related Work

The last decade has seen growing interest in tweet analytics using hashtags. Most existing approaches can be broadly categorized into lexicon-based approaches, sentiment and emotion analytics approaches, tweet retrieval approaches, and hashtag recommendation approaches.

There is a large body of work using lexicon-based approaches for sentiment analysis. Lexicon-based approaches are widely studied for conventional texts such as blogs, forums, and reviews [18,41]. However, very few studies analyze hashtags using a lexicon-based approach. Among those that do, some identify sentiment-bearing and non-sentiment-bearing hashtags using lexical resources (i.e., dictionaries of opinion terms) and use the identified hashtags as features for tweet sentiment classification. Simeon et al. [14,15] applied word segmentation algorithms and combined multiple lexical resources (e.g., AFINN [42], SentiStrength [19], and the Bing Liu Lexicon [43]) to identify sentiment- and non-sentiment-bearing hashtags. In their approach, hashtags are first divided into smaller semantic units using a word segmentation algorithm and then combined with different lexical resources to build classification models. Each model is then tested individually and in combination on real Twitter data to evaluate its effectiveness. The majority of works leverage lexical resources for the whole textual content of a tweet [16,17,18,19]. Ortega et al. [16] used WordNet [44] and SentiWordNet [45] to identify polarity and performed rule-based classification on Twitter data. Saif et al. [17] proposed a lexicon-based approach (i.e., SentiCircles) that uses co-occurrence patterns of words in different contexts to identify the sentiment orientation of words. In the current study, we also analyze hashtags using additional resources (i.e., a manually constructed knowledge base). Unlike the above work, however, we focus on identifying general topic-based hashtags and using them for tweet topic classification.

Other research has used external lexical sources such as Wikipedia [31], DBPedia [32], and Freebase [33] to enhance the semantic context of tweets for tweet topic classification [20,21,22,23,29,30]. Research in [20,22,23] used Wikipedia to enhance the contextual semantics of tweets. Genc et al. [20] used the semantic distance between a tweet’s textual content and Wikipedia pages to determine similar pages and categories. The authors of [22,23] used concepts derived from Wikipedia to identify the semantic relatedness of text. Cano et al. [21] used multiple knowledge sources (e.g., DBPedia, Freebase) to detect the topics in tweets. They enriched the contextual semantics of the entities present in tweets by drawing on these knowledge sources and derived semantic features from this information to improve Twitter topic classification. Like us, these studies used external knowledge bases, but they used them to enhance the concepts present in a tweet. We instead use concepts derived from a knowledge base to improve the quality of the hashtags used as features for tweet topic classification. Furthermore, our approach is top-down, as opposed to the existing bottom-up approaches.

Hashtags work well for sentiment and emotion classification [24,25,28]. Research in [25] uses hashtags and smileys as sentiment labels to classify diverse sentiment types. Hashtags and smileys are first grouped into five categories, i.e., strong sentiment, most likely sentiment, context-dependent sentiment, focused, and no sentiment, and then various features are used to classify tweets. Mohammad et al. [24] used emotion-word hashtags as labels to create a hashtag emotion corpus for six basic emotions and used it to extract a word–emotion association lexicon. In [28], the authors proposed a bootstrap approach to identify emotion hashtags automatically. Starting with a small number of seed hashtags, they collected tweets and labeled them with five prominent emotion classes. An emotion classifier was then built for every emotion class and applied to a large pool of unlabeled tweets. The hashtags extracted from these unlabeled tweets were scored and ranked, and the highest-ranked hashtags formed an emotion hashtag repository. A key limitation of these approaches is that they work well only for sentiments, whose semantics can be captured by a single hashtag (e.g., #sad, #happy). They are not suitable for identifying general topics (e.g., #health, #entertainment), whose semantics require a diverse set of hashtags.

Work on hashtag clustering includes metadata-based [46,47,48] and text-based contextual semantic clustering [49,50,51] approaches. The authors of [47] used WordNet and Wikipedia as dictionary metadata sources to identify the semantics of hashtags. They used WordNet concepts if the hashtag matched a WordNet entry and otherwise used Wikipedia to identify concept candidates. A similarity matrix was then formed between all pairs of hashtags to cluster them. A major drawback of their approach is that it focused on word-level rather than concept-level semantics, so the clustering results were not effective. To resolve this issue, [46] and [48] used sense-level semantics for clustering hashtags. Research in [49,50,51] used the hashtags present in tweet texts to derive contextual semantics. Work in [52] proposed a hybrid approach combining metadata- and text-based semantic clustering to improve the semantics of hashtags. Song et al. [36] proposed a clustering approach to classify hashtags into news categories, e.g., entertainment and sports, and then provided a ranking method to extract the top hashtags in each category. Belainine et al. [53] improved the semantics of hashtags by using knowledge from WordNet to disambiguate meanings in tweets and reduce synonymous terms. In the current study, we use the metadata provided by a knowledge base to derive concepts for a given topic, use the tool Hashtagify to identify hashtags for those concepts, and combine them with the tweet hashtags to derive contextual semantics. Hence, our work focuses on deriving relevant hashtags for a topic from the semantic concepts of an ontology rather than on extracting hashtag concepts to derive semantics.

Some research [54,55] uses ontologies to classify long documents and for Twitter sentiment analysis. In [54], an ontology is used to identify appropriate classes for the multilabel classification of economics documents into various categories. Work in [55] uses an ontology for a more elaborate sentiment analysis of Twitter posts that goes beyond determining the sentiment polarity of tweets. Neither of these approaches [54,55] uses an ontology to identify appropriate hashtags for tweet topic classification.

Because Twitter generates a massive volume of data, a significant amount of research has been undertaken on designing large-scale systems for sentiment analysis [26,27,56,57]. Research in [26,57] used all hashtags and emoticons as sentiment labels and exploited various pattern, word, and punctuation features to classify tweet sentiment in a distributed manner using the MapReduce [58] and Apache Spark [59] frameworks. Work in [27] proposed a large-scale distributed system for real-time Twitter sentiment analysis using MapReduce [58]. They used a lexicon-based approach that assigned sentiment polarity according to the sum of the sentiment scores of the words in a tweet. The main distinction of the current study is that we propose fully online and distributed preprocessing and learning approaches. Our processing is implemented on the Storm framework and is completely online, as opposed to MapReduce [58], which is a form of distributed batch processing. Although both Apache Storm and Apache Spark support data stream processing, Apache Storm is an online framework, whereas Apache Spark [59] processes data in micro-batches and is therefore not applicable to our work.

Research in tweet retrieval and hashtag recommendation [49,50,52,60,61,62,63,64,65,66] focuses on retrieving relevant tweets and recommending hashtags for tweets with missing hashtags. Research in [49,52,60,61] primarily focuses on retrieving semantically connected hashtags. Bellaachia et al. [60] exploit hashtags to enhance graph-based key phrase extraction from tweets. They extract topical tweets using latent Dirichlet allocation (LDA) [67] and then extract auxiliary hashtag tweets from the topical tweets by following the hashtag links. The hashtags from the topical and auxiliary hashtag tweets are then combined in a lexical graph, and a ranking algorithm is applied to rank the keywords for a specific topic. Muntean et al. [49] applied k-means to cluster a large set of hashtags in a distributed environment using MapReduce [58]. Recent work on hashtag recommendation [65] uses word embeddings to recommend hashtags for health-related keywords. In [66], the authors first find tweets similar to a given query tweet and then rank the hashtags in those tweets for recommendation.

Some recent studies identify topic-relevant hashtags [10] and predict peak-time popularity [8] for tweet hashtags. Research in [10] first used LDA [67] to determine topic distributions and then used a support vector machine (SVM) to divide the tweets according to their relevance to the topic. Finally, all topic distributions are associated with their class labels to identify relevant and irrelevant hashtags. This approach combines topic modelling with a supervised machine learning technique to retrieve relevant hashtags, whereas the current study uses a domain-specific knowledge base to retrieve relevant hashtags.

4. Hybrid Hashtag-Based Tweet Topic Classification Framework

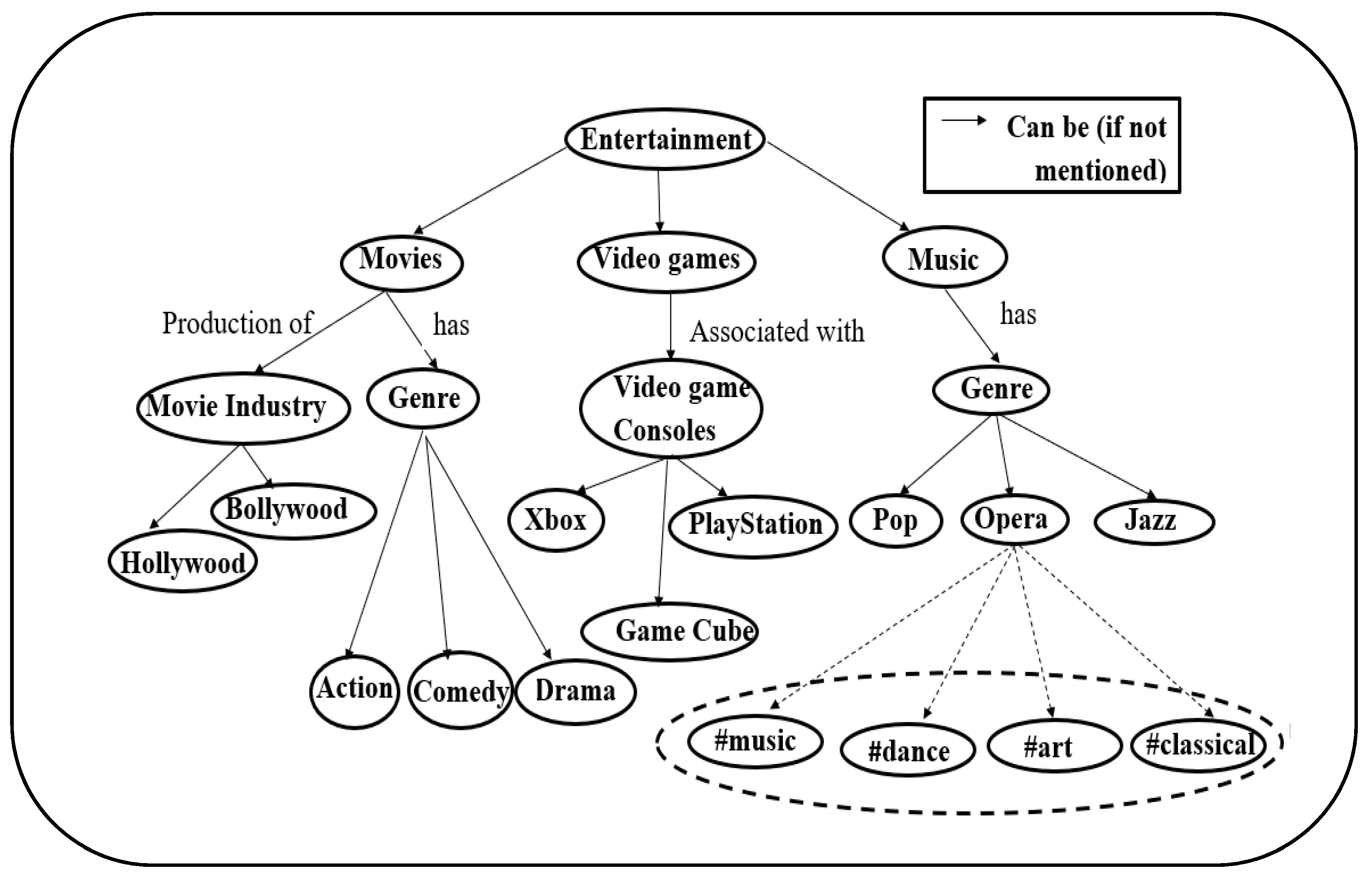

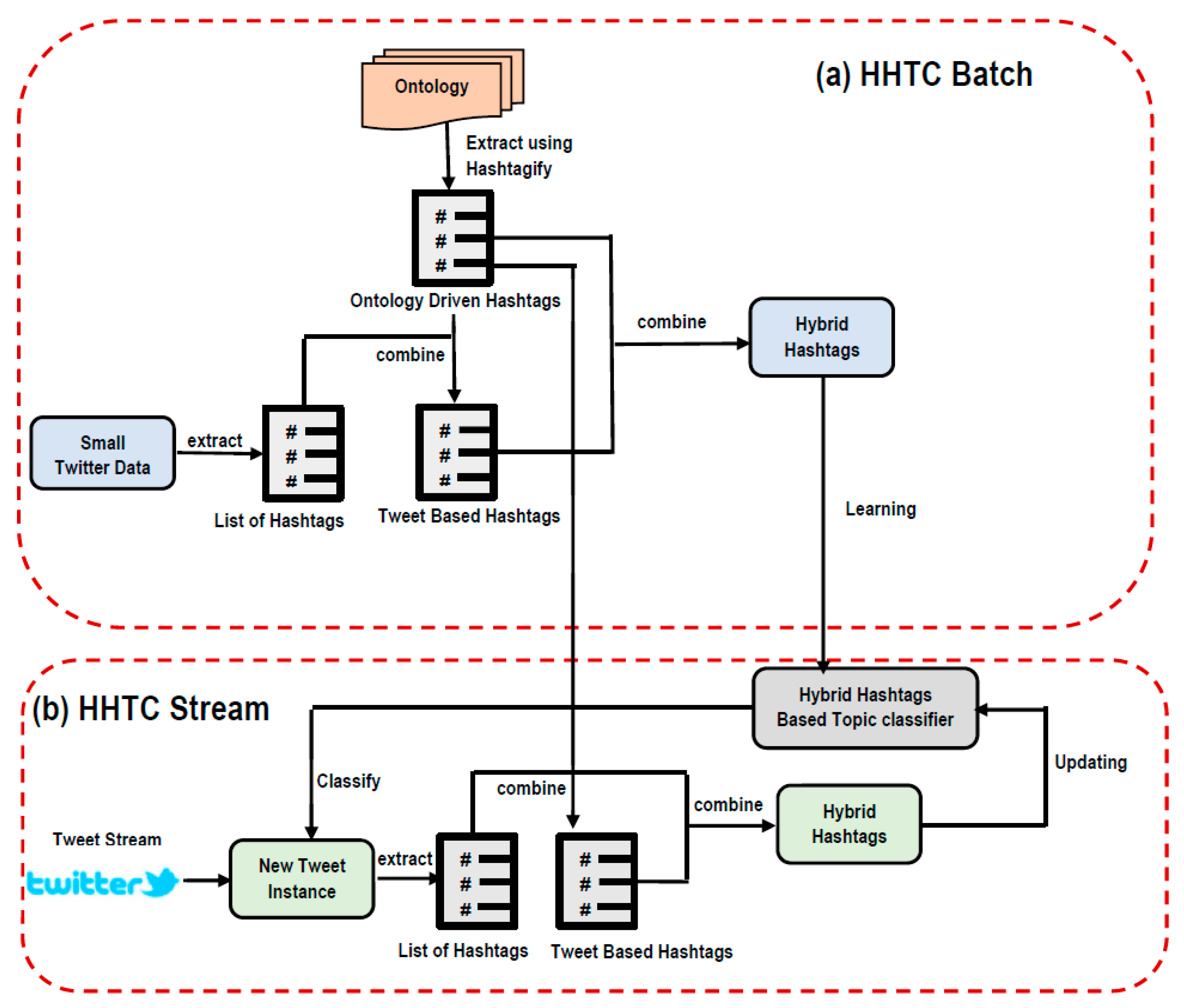

We start this section with a formal definition of the task of tweet topic classification using Hybrid Hashtags in our proposed framework. Consider a set of tweets $T_b = \{tw_1, tw_2, \ldots, tw_p\}$, where each tweet $tw_i \in T_b$ contains a set of hashtags $H = \{h_1, h_2, \ldots, h_n\}$ and $|H|$ (i.e., the number of hashtags) varies from tweet to tweet. For each tweet $tw_i$, we aim to classify its topic $y = \{y_1, y_2\}$, where $y_i \in \{\text{sports/entertainment}, \text{others}\}$, using Hybrid Hashtags derived by the following procedure. We first build an ontology graph $OG = \{C, E\}$, where the vertex set $C$ represents concepts and the edge set $E$ represents relationships (has, associated with, etc.) between concepts. The terminal-node concepts are fed into Hashtagify to produce a hashtag set $H' = \{h'_1, h'_2, \ldots, h'_m\}$, known as concept-based hashtags. These concept-based hashtags are sorted in decreasing order of correlation with the terminal-node concepts of $OG$, and the top-$k$ correlated hashtags are extracted as ontology-driven hashtags $H_o^i = \{h_{o1}, h_{o2}, \ldots, h_{ok}\}$, where $H_o^i \subset H'$. Next, correlating $H_o^i$ with $H$ yields the top-$k$ correlated hashtags, known as tweet-based hashtags, $H_t^i = \{h_{t1}, h_{t2}, \ldots, h_{tk}\}$.
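The two selection steps can be sketched as follows. Everything concrete here is an assumption. The ontology is a toy, and `CONCEPT_HASHTAGS` holds invented Hashtagify-style scores because Hashtagify is a web tool and is not called. When one hashtag is suggested for several concepts, it keeps its maximum score. Co-occurrence counts stand in for the "correlation" between tweet hashtags and the ontology-driven hashtags ($H_o$), since this section does not specify the measure.

```python
from collections import Counter

# Hypothetical ontology graph OG = {C, E}: concept -> child concepts.
ONTOLOGY = {
    "sports/entertainment": ["sports", "entertainment"],
    "sports": ["football", "basketball"],
    "entertainment": ["movie", "music"],
}

# Stand-in for Hashtagify output (invented values):
# terminal concept -> {similar hashtag: correlation with the concept}.
CONCEPT_HASHTAGS = {
    "football": {"nfl": 0.90, "soccer": 0.70, "fantasyfootball": 0.40},
    "basketball": {"nba": 0.95, "ncaa": 0.50},
    "movie": {"film": 0.80, "hollywood": 0.60},
    "music": {"concert": 0.65, "spotify": 0.30},
}

def terminal_concepts(graph):
    """Concepts that appear as children but have no children of their own."""
    children = {c for kids in graph.values() for c in kids}
    return sorted(c for c in children if c not in graph)

def ontology_driven_hashtags(graph, concept_hashtags, k):
    """H_o: the top-k concept-based hashtags (H') by correlation."""
    best = {}
    for concept in terminal_concepts(graph):
        for tag, corr in concept_hashtags.get(concept, {}).items():
            best[tag] = max(best.get(tag, 0.0), corr)
    return sorted(best, key=best.get, reverse=True)[:k]

def tweet_based_hashtags(tweets, h_o, k):
    """H_t: top-k tweet hashtags by co-occurrence with H_o (assumed measure)."""
    seeds = set(h_o)
    cooc = Counter()
    for hashtags in tweets:
        unique = list(dict.fromkeys(hashtags))
        if seeds.intersection(unique):
            cooc.update(h for h in unique if h not in seeds)
    return [tag for tag, _ in cooc.most_common(k)]

tweets = [["nfl", "cowboys"], ["nfl", "cowboys", "superbowl"],
          ["nba", "lakers", "cowboys"], ["film", "oscars"],
          ["nba", "lakers"], ["health", "flu"]]
h_o = ontology_driven_hashtags(ONTOLOGY, CONCEPT_HASHTAGS, k=3)
h_t = tweet_based_hashtags(tweets, h_o, k=2)
print(h_o)  # ['nba', 'nfl', 'film']
print(h_t)  # ['cowboys', 'lakers']
```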

The combination of $H_o^i$ and $H_t^i$ forms a set of Hybrid Hashtags $H_{hh}^i = \{h_{hh1}, h_{hh2}, \ldots, h_{hhm}\}$ associated with each tweet $tw_i \in T_b$. These Hybrid Hashtags are used as features to build the Hybrid Hashtag-based Tweet topic classifier $M_{T_b}$, which is used for further processing in our framework. This part is implemented in batch on the tweet set $T_b$.
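Once both sets exist, building the classifier's feature space amounts to a union followed by a per-tweet encoding. The sketch assumes a binary presence encoding over the Hybrid Hashtag vocabulary, because the section does not fix the encoding. The input lists are hypothetical.

```python
# Hypothetical outputs of the two selection steps.
ONTOLOGY_DRIVEN = ["nba", "nfl", "film"]    # H_o
TWEET_BASED = ["cowboys", "lakers", "nfl"]  # H_t (may overlap H_o)

def hybrid_hashtags(h_o, h_t):
    """H_hh: union of H_o and H_t without duplicates, order preserved."""
    return list(dict.fromkeys(h_o + h_t))

def to_features(tweet_hashtags, vocabulary):
    """Binary presence vector of a tweet's hashtags over the Hybrid Hashtags."""
    present = set(tweet_hashtags)
    return [1 if tag in present else 0 for tag in vocabulary]

vocab = hybrid_hashtags(ONTOLOGY_DRIVEN, TWEET_BASED)
print(vocab)                                               # ['nba', 'nfl', 'film', 'cowboys', 'lakers']
print(to_features(["nfl", "cowboys", "tailgate"], vocab))  # [0, 1, 0, 1, 0]
print(to_features(["health", "flu"], vocab))               # [0, 0, 0, 0, 0]
```

Hashtags outside the Hybrid Hashtag set (such as `tailgate` here) are simply ignored, which is what keeps the feature space small despite the very large number of distinct hashtags.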

For the online part of the framework, consider a stream of tweets $T_s = \{tw_1, tw_2, \ldots, tw_s\}$, where each tweet $tw_i \in T_s$ contains a set of hashtags $H = \{h_1, h_2, \ldots, h_n\}$ and $|H|$ varies from tweet to tweet. Instead of processing a whole batch $T_b$ at once, each tweet instance $tw_i \in T_s$ is processed individually for both Hybrid Hashtag construction and classifier building. For each tweet $tw_i$, $M_{T_b}$ first classifies its topic $y_i \in \{\text{sports/entertainment}, \text{others}\}$. The set of hashtags $H'_s = \{h'_1, h'_2, \ldots, h'_m\}$ contained in $tw_i$ is then extracted. The correlation of $H'_s$ with the existing ontology-driven hashtags $H_o^i$ is computed to produce the top-$k$ correlated tweet-based hashtags $H_s = \{h_{t1}, h_{t2}, \ldots, h_{tk}\}$ for $tw_i$. These tweet-based hashtags are combined with $H_o^i$ to produce the Hybrid Hashtags $H_{hh}^s = \{h_{hh1}, h_{hh2}, \ldots, h_{hhm}\}$. This process is repeated for every tweet in $T_s$. Each time a set of Hybrid Hashtags $H_{hh}^s$ is constructed for the current tweet $tw_{current}$, it is merged with the Hybrid Hashtags generated from all earlier tweets, i.e., $tw_1, tw_2, \ldots, tw_{current-1}$. In addition, the existing classifier model $M_{T_b}$ is updated incrementally with the new Hybrid Hashtags generated for each newly arriving tweet.
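A single-process sketch of this per-tweet loop follows. In the framework these steps run as Storm components; here they are plain Python. `HashtagVoteModel` is a deliberately simple, hypothetical stand-in for the batch-trained classifier, and co-occurrence again stands in for correlation. Updating the model with the predicted label (self-training) is also an assumption, because the section does not say which label drives the incremental update.

```python
from collections import Counter, defaultdict

class HashtagVoteModel:
    """Toy incremental classifier standing in for M_{T_b}: each known hashtag
    votes for the labels it has been seen with so far."""

    def __init__(self):
        self.counts = defaultdict(Counter)  # hashtag -> label -> count

    def predict(self, hashtags, default="others"):
        votes = Counter()
        for h in hashtags:
            votes.update(self.counts.get(h, {}))
        return votes.most_common(1)[0][0] if votes else default

    def update(self, hashtags, label):
        for h in hashtags:
            self.counts[h][label] += 1

def process_stream(stream, model, h_o, k):
    """Classify each tweet, then grow H_hh and update the model one tweet at a time."""
    seeds = set(h_o)
    hybrid = list(h_o)       # running Hybrid Hashtags, seeded with H_o
    hybrid_set = set(hybrid)
    cooc = Counter()         # running co-occurrence with H_o
    labels = []
    for hashtags in stream:
        unique = list(dict.fromkeys(hashtags))            # H'_s for this tweet
        y = model.predict([h for h in unique if h in hybrid_set])
        labels.append(y)
        if seeds.intersection(unique):
            cooc.update(h for h in unique if h not in seeds)
        for tag, _ in cooc.most_common(k):                # H_s: current top-k
            if tag not in hybrid_set:
                hybrid.append(tag)
                hybrid_set.add(tag)
        # Self-training assumption: update with the predicted label.
        model.update([h for h in unique if h in hybrid_set], y)
    return labels, hybrid

model = HashtagVoteModel()
model.update(["nfl", "nba"], "sports/entertainment")  # batch-phase knowledge
model.update(["film"], "sports/entertainment")
labels, hybrid = process_stream(
    [["nfl", "cowboys"], ["cowboys", "dallas"], ["flu", "health"]],
    model, h_o=["nfl", "nba", "film"], k=2)
print(labels)  # ['sports/entertainment', 'sports/entertainment', 'others']
print(hybrid)  # ['nfl', 'nba', 'film', 'cowboys']
```

The second tweet is classified correctly only because `cowboys` entered the Hybrid Hashtags while the first tweet was processed, which is the kind of adaptation the online part is meant to provide.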

Figure 3 depicts our proposed Hybrid Hashtag-based Tweet topic classification (HHTC) framework. The upper part shows HHTC-Batch, and the lower part shows the HHTC-Stream version of the framework. Both versions are explained in detail below.

5. Data Collection and Preprocessing

We used data collected through the Twitter search API [37] and the Twitter streaming API [70] to evaluate our Hybrid Hashtag approach in the proposed framework. The data collected through the search API were used for the batch part, while the streaming API data were used for the online part. Evaluating hashtag-level topic classification is challenging because no “gold standard” labeled dataset exists. Hence, we used a self-annotation procedure to label the dataset. We collected tweets during four consecutive days from 12–16 August 2019. The data collection process was as follows. We first created a list of hashtags strongly related to the given domain (i.e., sports/entertainment and others in our case). For example, for the sports domain we picked “NFL”, “NBA”, “football”, “Cowboys”, and “Rio2016”. We then searched the tweet pool for tweets containing these hashtags, which served as our seeds. This search retrieved not only the queried hashtags but also other hashtags that co-occurred with at least one of the seed hashtags. We considered two classes in our experiments, sports/entertainment and others. All tweets containing hashtags related to sports and entertainment were labeled sports/entertainment, and the remainder were labeled others.
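A minimal sketch of the seed-based self-annotation follows. It makes the simplifying assumption that a tweet is labeled sports/entertainment exactly when it contains a seed hashtag, matched case-insensitively. The sports seeds are the examples named in the text. The entertainment seeds and the sample tweets are hypothetical.

```python
import re

# Sports seeds are the examples named in the text; the entertainment seeds
# are hypothetical placeholders.
SPORTS_SEEDS = {"nfl", "nba", "football", "cowboys", "rio2016"}
ENTERTAINMENT_SEEDS = {"oscars", "hollywood"}
SEEDS = SPORTS_SEEDS | ENTERTAINMENT_SEEDS

def hashtags_of(text):
    """Lower-cased set of hashtags in a tweet."""
    return {t.lower() for t in re.findall(r"#(\w+)", text)}

def self_annotate(text):
    """Label a tweet by whether it contains any seed hashtag."""
    return "sports/entertainment" if hashtags_of(text) & SEEDS else "others"

def cooccurring_hashtags(tweets):
    """Non-seed hashtags that co-occur with at least one seed hashtag."""
    found = set()
    for text in tweets:
        tags = hashtags_of(text)
        if tags & SEEDS:
            found |= tags - SEEDS
    return found

pool = ["Big win for the #Cowboys #DallasNation",
        "Flu season tips #health",
        "Red carpet tonight #Oscars #fashion"]
print([self_annotate(t) for t in pool])
# ['sports/entertainment', 'others', 'sports/entertainment']
print(sorted(cooccurring_hashtags(pool)))  # ['dallasnation', 'fashion']
```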

We crawled a total of 495,980 tweets following the above process and merged them with the earlier dataset of 8000 tweets collected by the same process in [37] for our experiments. Many tweets were retrieved repeatedly because of heavy retweeting by users. Detailed raw data collection statistics are shown in Table 2. After removing repeated tweets, we obtained a final raw dataset of 289,455 unique tweets, of which 271,310 contained hashtags. Because we analyzed only tweets containing hashtags, we removed the other tweets from the dataset. The total number of hashtags in the resulting raw dataset was 1,815,363, covering the sports, entertainment, and others domains. As shown in Table 2, the total number of distinct hashtags was 36,049 and the average number of hashtags per tweet was 6.
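The kinds of statistics reported in Table 2 (unique tweets, tweets with hashtags, total and distinct hashtags, and the average number of hashtags per tweet) can be computed in a few lines. This sketch assumes that retweets carry a leading `RT @user:` marker and that duplicates are exact text matches after that marker is removed. The actual deduplication rule is not stated, and the sample input is invented.

```python
import re

RT_PREFIX = re.compile(r"^RT @\w+:\s*")  # assumed retweet marker
HASHTAG = re.compile(r"#(\w+)")

def dataset_stats(raw_tweets):
    # Strip the retweet marker, then keep the first occurrence of each text.
    unique = list(dict.fromkeys(RT_PREFIX.sub("", t).strip() for t in raw_tweets))
    tagged = [t for t in unique if HASHTAG.search(t)]  # tweets with hashtags
    tags = [h.lower() for t in tagged for h in HASHTAG.findall(t)]
    return {
        "unique_tweets": len(unique),
        "tweets_with_hashtags": len(tagged),
        "total_hashtags": len(tags),
        "distinct_hashtags": len(set(tags)),
        "avg_hashtags_per_tweet": len(tags) / len(tagged) if tagged else 0.0,
    }

raw = ["Go #NFL #Cowboys", "RT @fan: Go #NFL #Cowboys", "Nice day", "#NBA finals #nba"]
print(dataset_stats(raw))
# {'unique_tweets': 3, 'tweets_with_hashtags': 2, 'total_hashtags': 4,
#  'distinct_hashtags': 3, 'avg_hashtags_per_tweet': 2.0}
```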

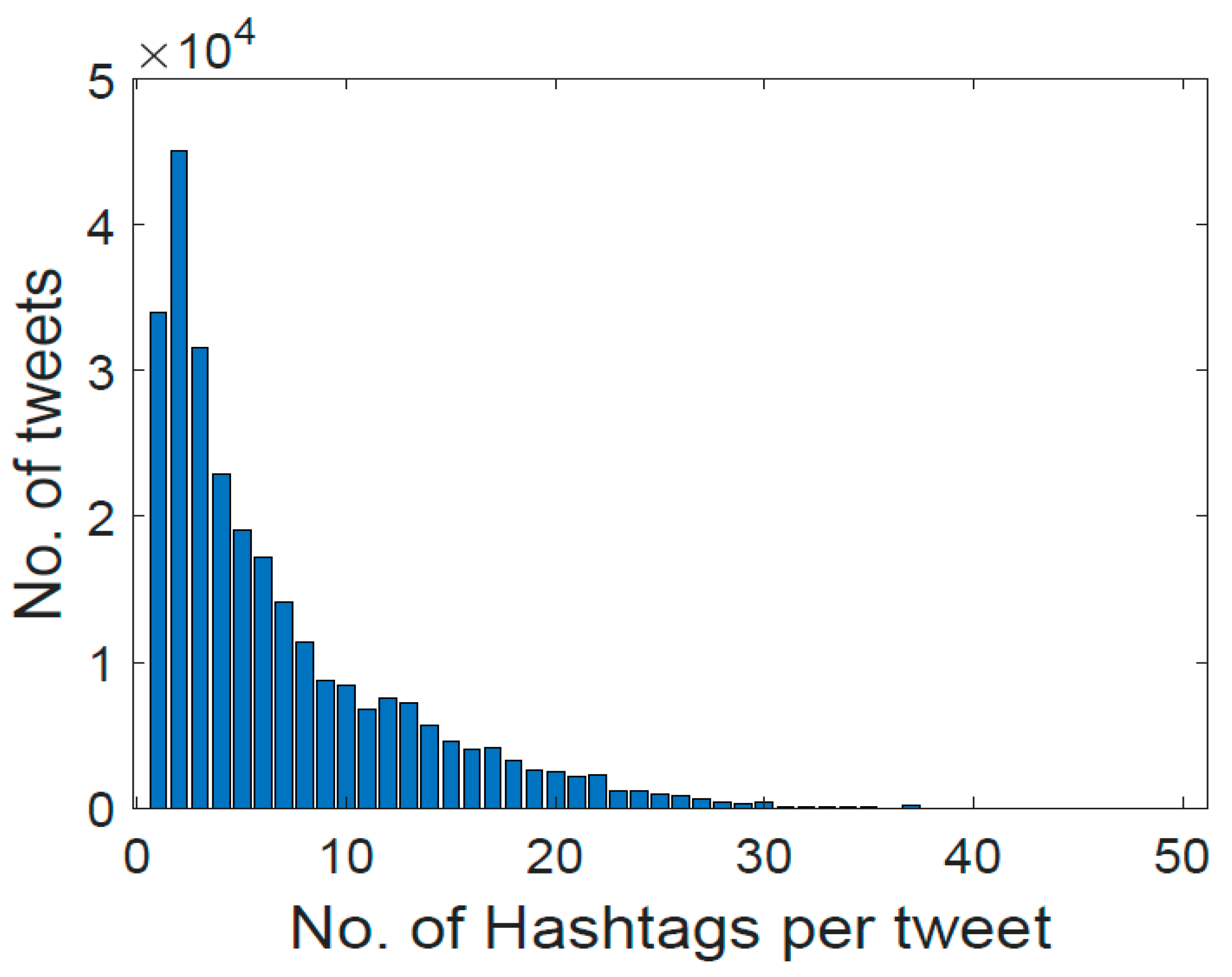

Figure 5 shows the distribution of the number of hashtags per tweet. A large number of tweets contain 1–5 hashtags, while few contain more than 30. As shown in Figure 5, only one tweet has 42 hashtags and two have 50.

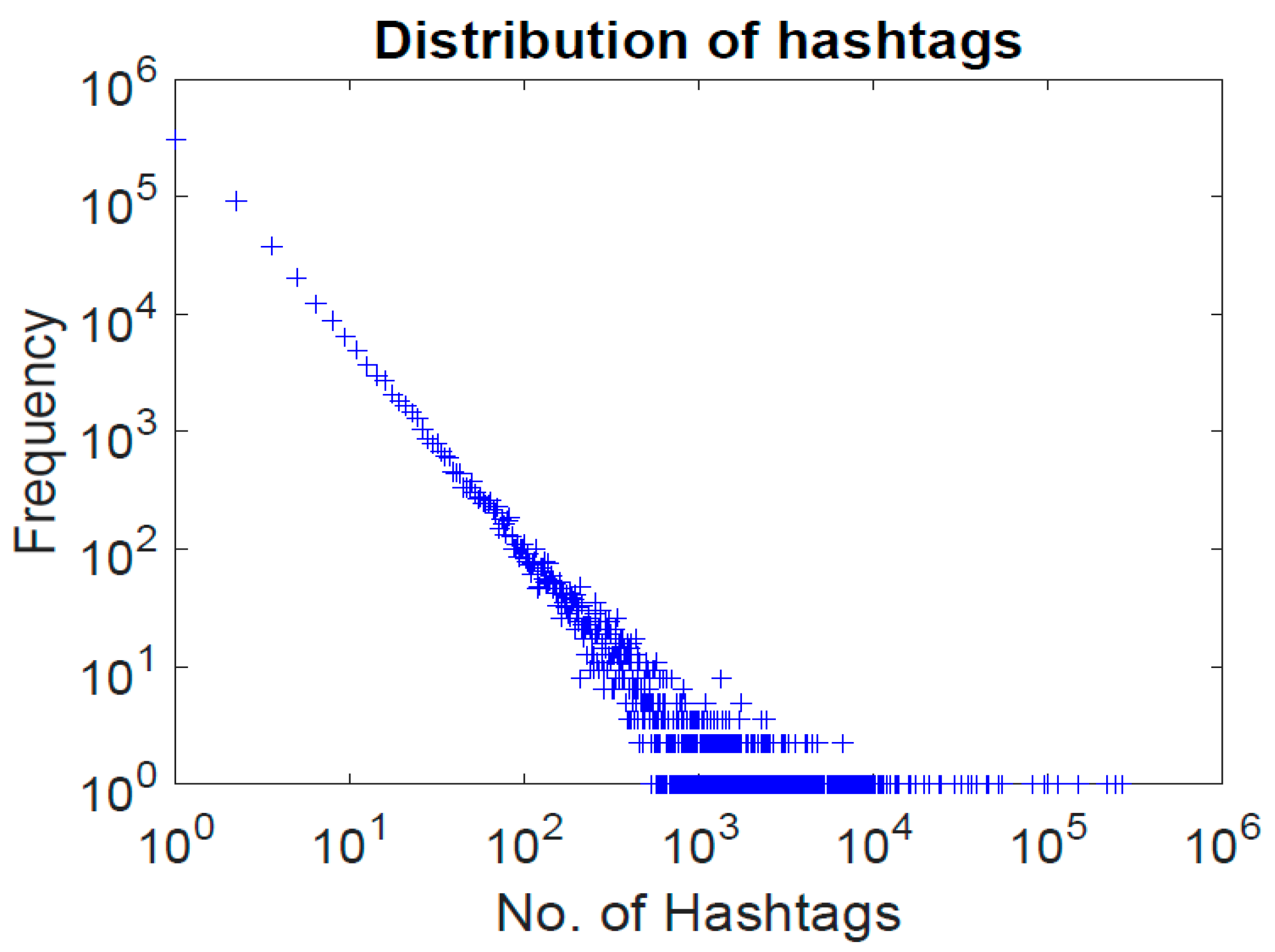

Figure 6 shows the hashtag distribution in our raw tweet collection, i.e., how many hashtags occur once, twice, or more often. The hashtag distribution follows a power law [71]: a small percentage of hashtags occur very frequently, while a large number of hashtags occur only 1–5 times, as shown in Figure 6. This reflects the diversity of hashtags generated daily by users and the rapidly evolving nature of tweets. It also shows that some popular hashtags are used repeatedly. Because so many hashtags occur infrequently, the vector representation of the dataset is very sparse, which makes it harder to derive context for tweet topic classification.
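Both observations can be quantified directly from the per-tweet hashtag lists. The first is the frequency-of-frequencies table underlying a plot like Figure 6. The second is the fraction of empty cells in a binary tweet-by-hashtag matrix. This is a sketch on toy data. It does not fit a power-law exponent, which would require a dedicated estimator.

```python
from collections import Counter

def frequency_of_frequencies(tweets):
    """Map k -> number of distinct hashtags occurring exactly k times."""
    occurrences = Counter(h for hashtags in tweets for h in hashtags)
    return dict(sorted(Counter(occurrences.values()).items()))

def sparsity(tweets):
    """Fraction of zero cells in the binary tweet-by-hashtag matrix."""
    vocab = {h for hashtags in tweets for h in hashtags}
    if not tweets or not vocab:
        return 1.0
    nonzero = sum(len(set(hashtags)) for hashtags in tweets)
    return 1 - nonzero / (len(tweets) * len(vocab))

tweets = [["nfl", "cowboys"], ["nfl", "nba"], ["nfl"], ["oscars"]]
print(frequency_of_frequencies(tweets))  # {1: 3, 3: 1}
print(sparsity(tweets))                  # 0.625
```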

Moreover, obtaining a consistent set of tweets for analytics after crawling is challenging and unavoidably labor-intensive because of the large number of retweets and spam tweets and the noisy nature of tweets. To process the data, we first cleaned the dataset by removing URLs, symbols, punctuation, abbreviations, and unnecessary whitespace. We also split some hashtags into smaller segments. Finally, we applied stemming to reduce words and hashtags to their stems.
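The cleaning steps can be sketched as a small pipeline. Several choices here are assumptions. The abbreviation list is hypothetical. Hashtags are split only at case and digit boundaries, so an all-lowercase hashtag such as #harveyrescue would need a dictionary-based word segmenter. Finally, `stem` is a crude suffix stripper standing in for a proper stemmer such as Porter's.

```python
import re

URL = re.compile(r"https?://\S+|www\.\S+")
ABBREVIATIONS = {"lol", "omg", "idk"}  # hypothetical removal list
SUFFIXES = ("ing", "ed", "es", "s")    # crude stand-in for a real stemmer

def split_hashtag(tag):
    """Split at case and digit boundaries: 'HarveyRescue' -> ['harvey', 'rescue']."""
    parts = re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", tag)
    if parts:
        return [p.lower() for p in parts]
    cleaned = re.sub(r"[^\w]", "", tag).lower()  # e.g. non-Latin hashtags
    return [cleaned] if cleaned else []

def stem(word):
    """Strip one common suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def preprocess(text):
    text = URL.sub(" ", text)                         # drop URLs
    tokens = []
    for token in text.split():                        # also collapses extra spaces
        if token.startswith("#"):
            tokens.extend(split_hashtag(token[1:]))
        else:
            word = re.sub(r"[^\w]", "", token).lower()  # drop symbols/punctuation
            if word:
                tokens.append(word)
    return [stem(t) for t in tokens if t not in ABBREVIATIONS]

print(preprocess("Watching the #Oscars2016 lol http://t.co/xyz!!"))
# ['watch', 'the', 'oscar', '2016']
```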

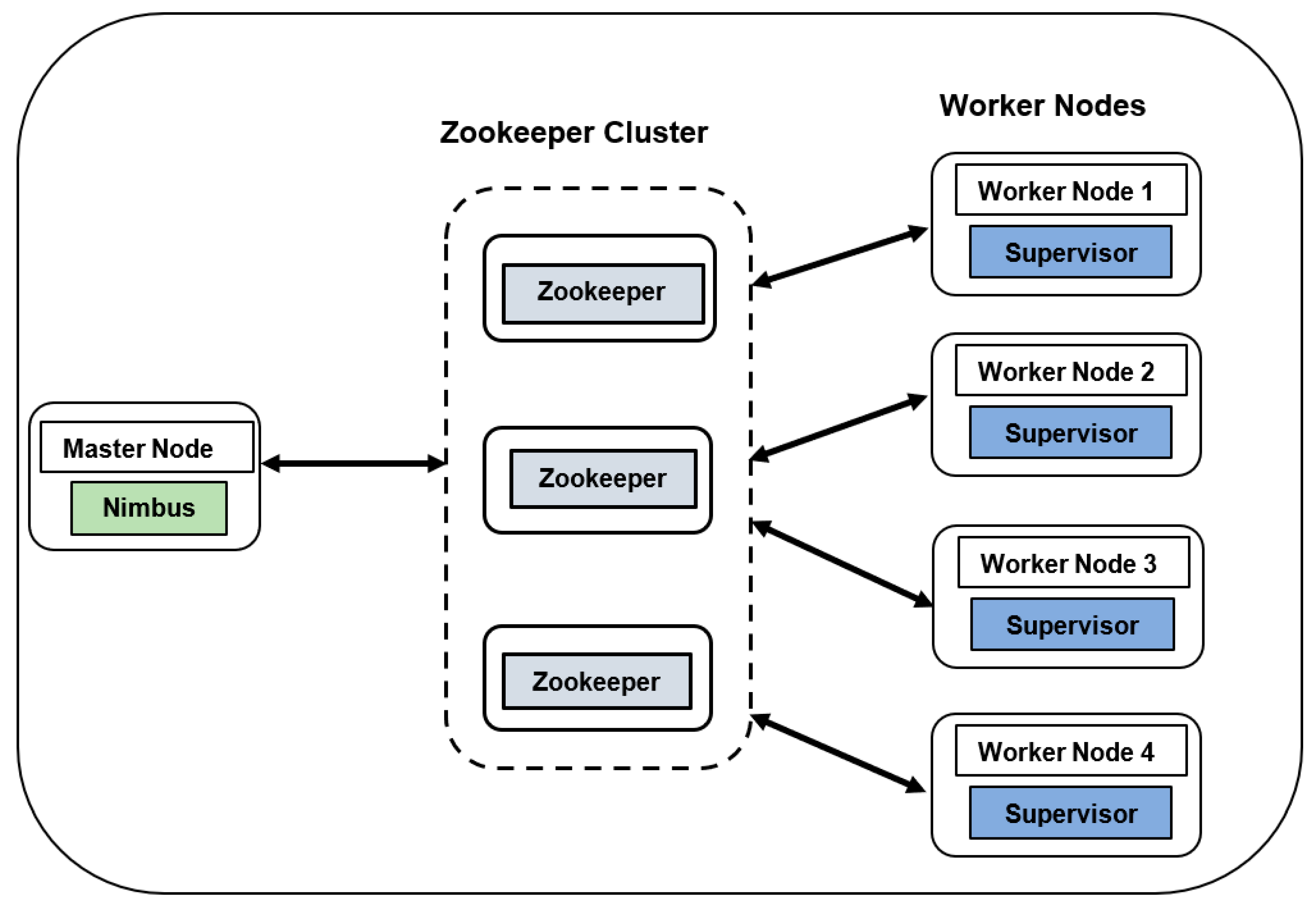

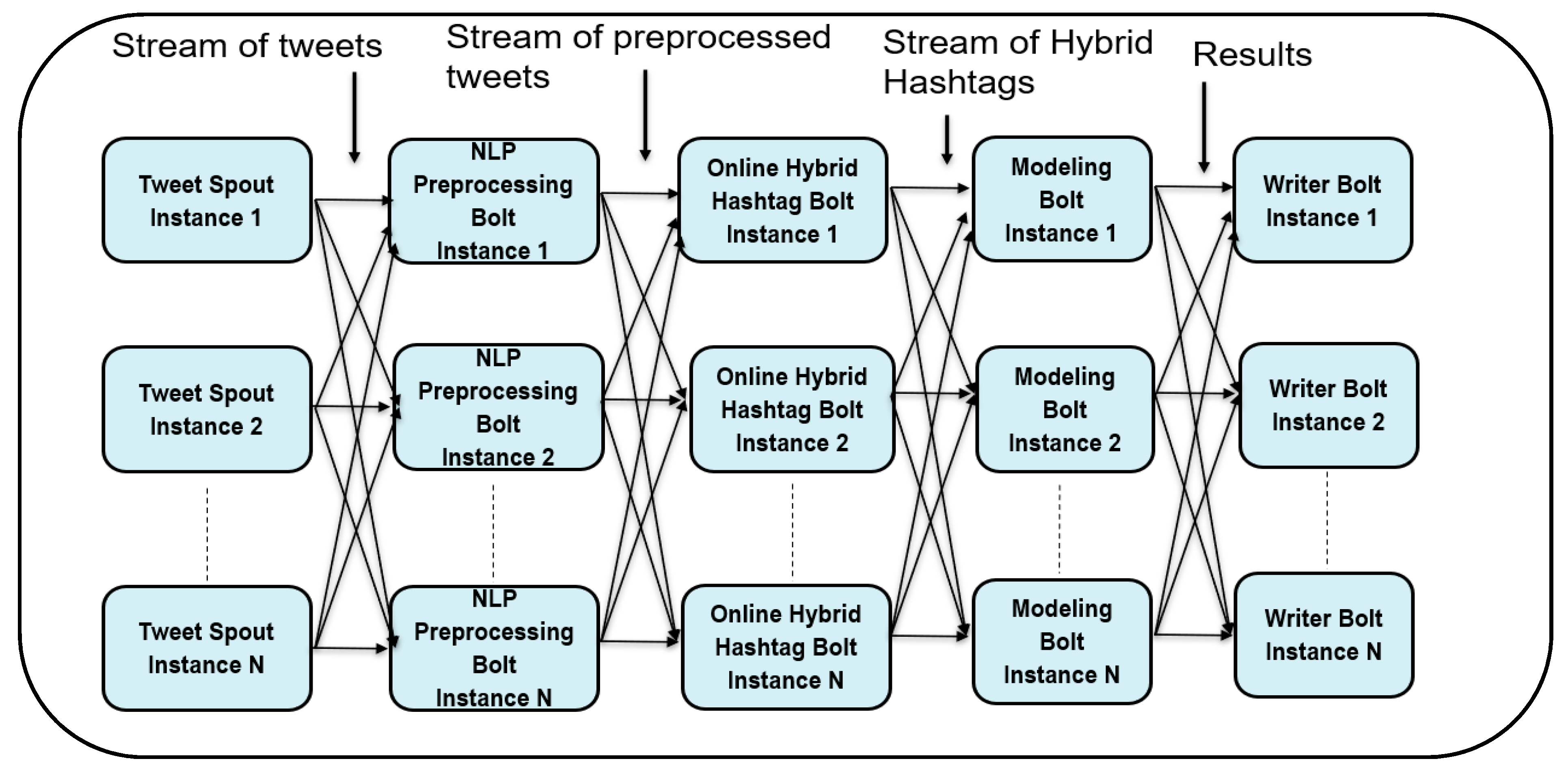

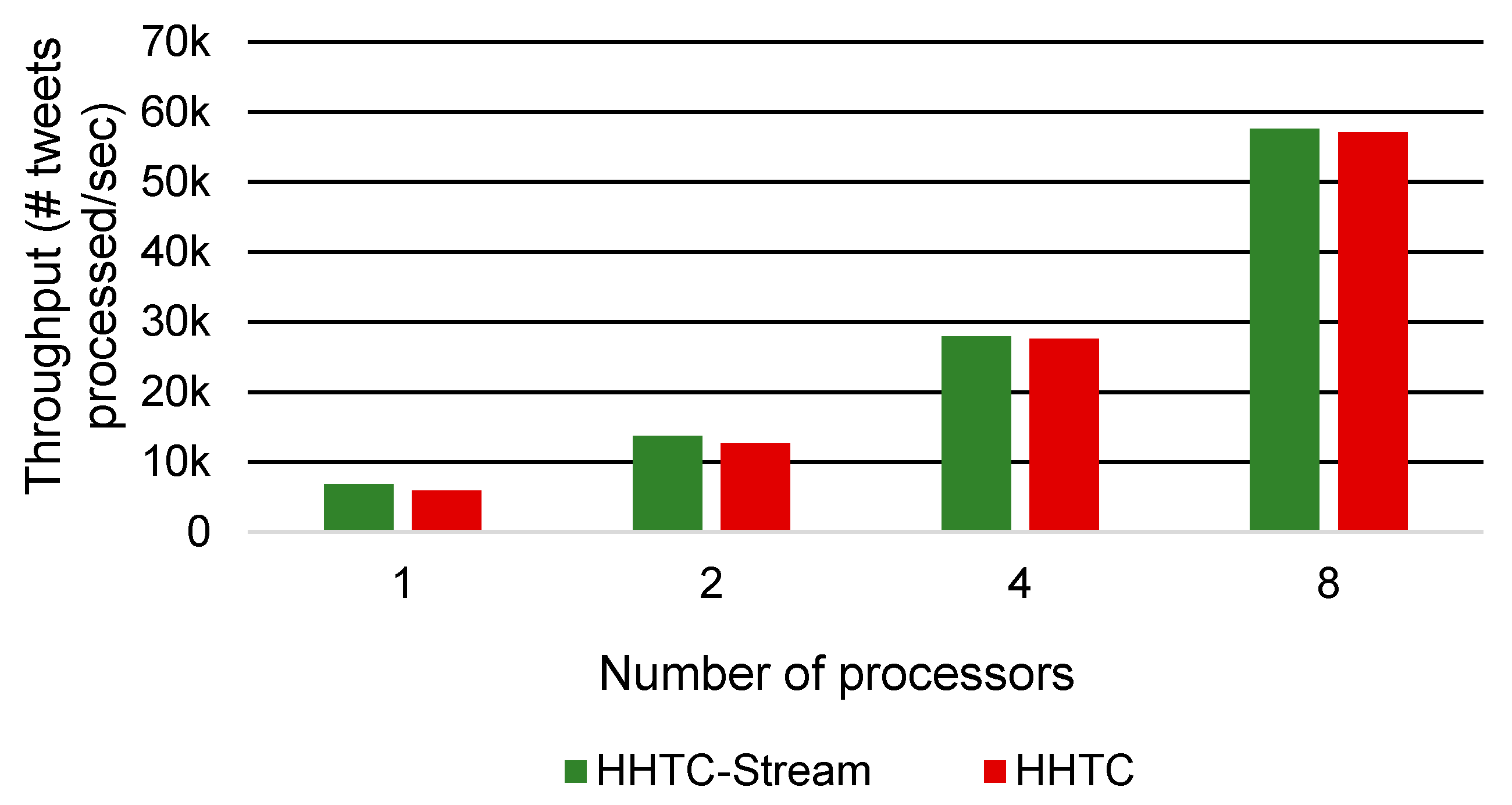

After preprocessing, a total of 155,382 tweets were retained for analysis. We performed experiments in the Storm framework using single and multiple processors to evaluate the effectiveness of our proposed approach in a Big Data stream environment. The Storm cluster consisted of a varying number of virtual machines (VMs, also referred to as processors), i.e., 1, 2, 4, and 8. The VMs were hosted on a system with an Intel Core i7-8550U CPU (2 GHz, 8 cores), 16 GB of RAM, and a 1 TB hard disk. Each VM was configured with 4 vCPUs and 4 GB of RAM and ran 64-bit Ubuntu 14.04.05 with JDK/JRE 1.8. We used Apache Storm 1.1.1 with ZooKeeper 3.4.9 [68].