Eye-Tracking Studies of Web Search Engines: A Systematic Literature Review

Abstract

1. Introduction

- To provide descriptive statistics and an overview of the uses of eye-tracking technology in search engine research;

- To analyze the reviewed papers and discuss procedural and methodological issues in using eye trackers to study search engines;

- To propose avenues for further research for those conducting and reporting eye-tracking studies.

2. The Concept of Eye-Tracking Studies for Search Engines

2.1. Eye-Movement Measures

2.2. Eye-Tracking Results Presentation

- Heat map: colored areas, graded from red to green, are overlaid on the examined image; the color indicates how long users' gaze was concentrated on each area (typically red for the longest and green for the shortest).

- Fixation map: fixations are shown as points marking where the line of sight was concentrated; the points are numbered in viewing order and connected by lines.

- Table: each row lists an element the eyes were concentrated on, together with the duration of the concentration and the order in which it was viewed.

- Charts: present fixation time and click rate for each result according to its position on the search engine results page.
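The heat-map representation can be sketched in a few lines. This is a minimal illustration, not the procedure of any particular eye-tracking software: it assumes fixations are already available as `(x, y, duration)` tuples in screen pixels, and the grid cell size and Gaussian spread `sigma` are arbitrary, hypothetical defaults. Each fixation's duration is spread over nearby cells, the grid is normalized to [0, 1], and intensities are mapped onto the green-to-red scale described above.

```python
import math
from typing import Iterable, List, Tuple

Fixation = Tuple[float, float, float]  # (x_px, y_px, duration_ms)


def heat_map(fixations: Iterable[Fixation], width: int, height: int,
             cell: int = 10, sigma: float = 20.0) -> List[List[float]]:
    """Return a rows x cols grid of attention intensities normalized to [0, 1].

    Each fixation adds its duration to every grid cell, weighted by a Gaussian
    of the distance between the fixation and the cell centre, so areas that
    receive longer (or more) fixations become "hotter".
    """
    cols = math.ceil(width / cell)
    rows = math.ceil(height / cell)
    grid = [[0.0] * cols for _ in range(rows)]
    for x, y, duration in fixations:
        for r in range(rows):
            cy = (r + 0.5) * cell  # y coordinate of the cell centre
            for c in range(cols):
                cx = (c + 0.5) * cell  # x coordinate of the cell centre
                d2 = (cx - x) ** 2 + (cy - y) ** 2
                grid[r][c] += duration * math.exp(-d2 / (2 * sigma ** 2))
    peak = max((v for row in grid for v in row), default=0.0)
    if peak > 0:
        grid = [[v / peak for v in row] for row in grid]
    return grid


def colour(intensity: float) -> Tuple[int, int, int]:
    """Map an intensity in [0, 1] to RGB: green (low) -> yellow -> red (high)."""
    i = min(max(intensity, 0.0), 1.0)
    if i <= 0.5:
        return (round(510 * i), 255, 0)
    return (255, round(510 * (1 - i)), 0)
```

For example, `heat_map([(45, 45, 300), (145, 45, 100)], width=200, height=100)` makes the cell under the 300 ms fixation the hottest, and `colour` renders it red. Weighting by duration follows the description above; weighting by fixation count would be an alternative.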

2.3. Eye-Tracking Participants

3. Literature Review

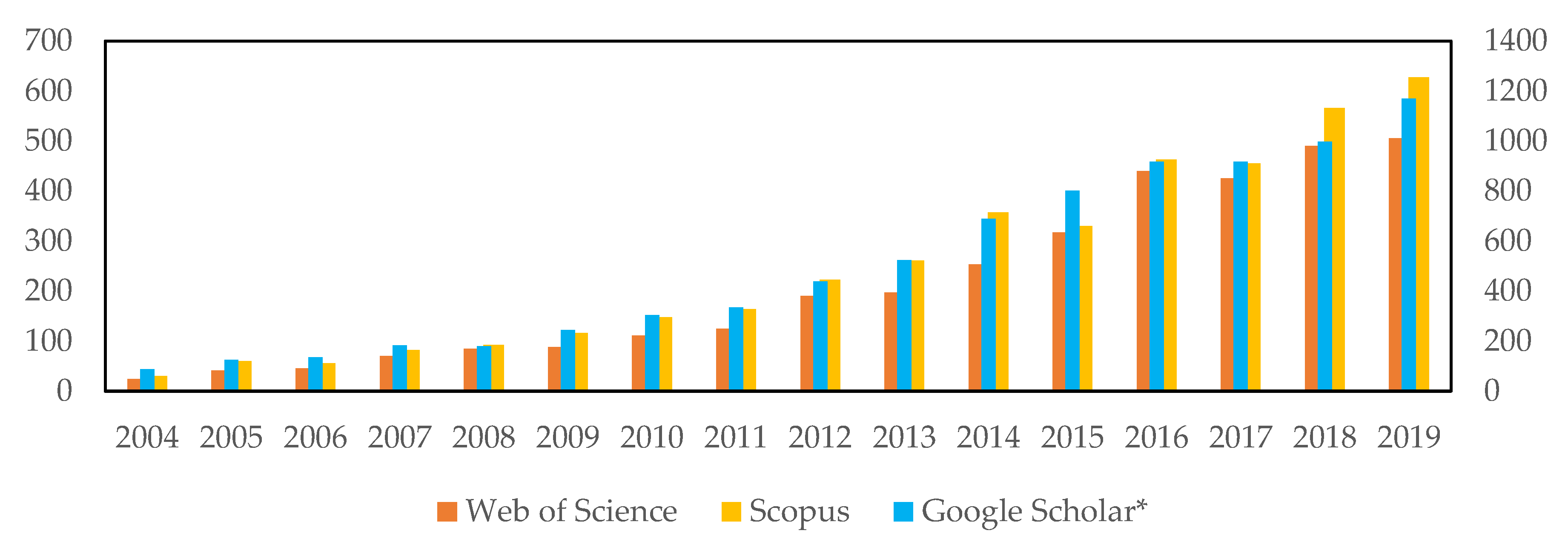

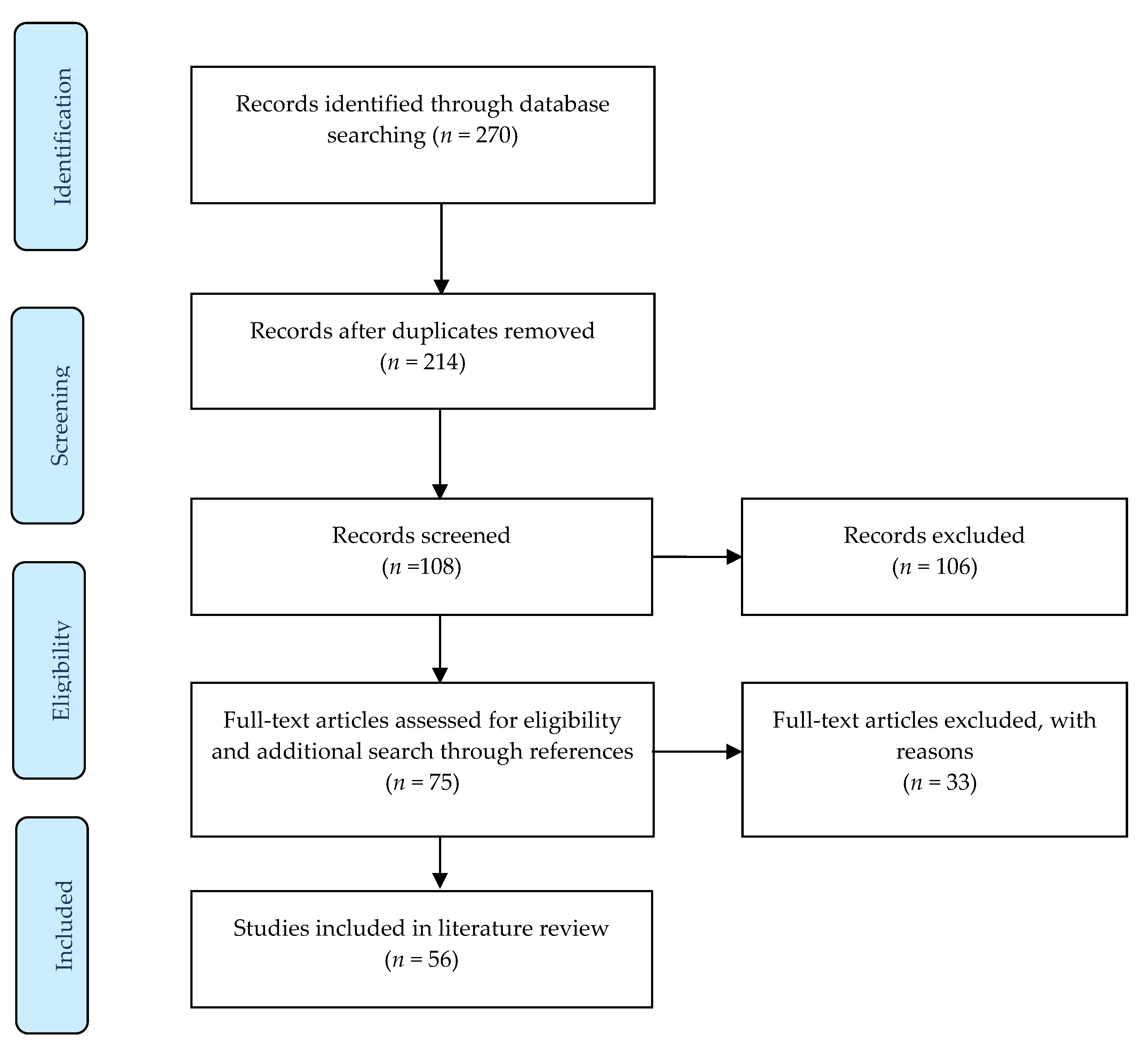

3.1. General Database Search

3.2. Focused Searches

3.3. Additional Searches Through References

- Papers on click-through rates and log analysis in which eye-tracking served only as a point of comparison;

- Papers on eye-tracking for search engines whose scientific rigor was poor and whose results were obvious;

- Papers in which eye-tracking was mentioned but the actual substance was not related to eye-tracking.

3.4. Analysis

3.5. Research Questions and Coding Procedure

- Which search engine(s) were tested?

- What type of apparatus was used for the study?

- What kind of participants took part in the studies? (e.g., age, gender, education)

- What type of interface was tested?

- Which types of results or parts of the results were tested?

- Which eye-movement measures were used?

- What kind of scenarios were prepared for participants?

- What types of tasks were performed using the search engines?

- Which language was used when queries were provided by participants?

- How were the results of the eye-tracking study presented?

- What research questions are addressed by each study?

- What key findings are presented in each study?

- Search engine. Each paper described an eye-tracking study on at least one search engine. Some studies presented research on two different search engines as a comparison study. Although some papers did not mention the chosen search engine, it was possible to obtain this information from the screenshots provided.

- Apparatus. Most papers had a detailed section on the device(s) used and basic settings such as screen size (in inches), screen resolution, web browser, and viewing distance from the screen.

- Participants. Each study described how many participants took part in tests and almost all of them described the basic characteristics of participant groups, e.g., age, gender, and education.

- Interface. Either desktop or mobile.

- Search engine results. Generally, the search results tested were either organic only or both organic and sponsored. Organic results are produced and ranked by the search engine's algorithm [26]. Sponsored search results come from advertising platforms that usually belong to the search engines themselves; on these platforms, advertisers create sponsored results and pay for them to be displayed and/or clicked. Search engines present sponsored results in a way that distinguishes them from organic results [27]. Some studies focused on other areas of search engine results pages, e.g., knowledge graphs.

- Eye-movement measures. The measures used were classified according to the temporal–spatial–count framework [13].

- Scenario. Search results can be displayed in the search engine's normal output format or modified by researchers and displayed in altered form. The most common modifications are swapping the first two results, to see whether participants notice the change, and reversing the order of all results.

- Tasks. Tasks are grouped into navigational, informational, or transactional. Navigational tasks involve finding a certain website. Informational tasks involve finding information on any website. Transactional tasks involve finding information about a product or price.

- Language. Participants always belong to one language group and use this language in the search engine.

- Presentation of results. Researchers can illustrate the results of eye-tracking studies in several ways, e.g., heat maps, fixation maps, gaze plots, charts, and tables.

- Research questions. A summary of the research interests and questions on user search behavior addressed with eye tracking, and of how effective the eye-tracking methodology was in providing insights into these specific questions.

- Key findings. A summary of the key findings presented in each study.
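The two most common "Modified" scenarios described in the Scenario category can be expressed as simple reorderings of the regular result list. The sketch below is illustrative only: it assumes the results page is available as an ordered list of result identifiers, and the function names and scenario labels (`regular`, `swapped`, `reversed`) are hypothetical rather than taken from any reviewed study.

```python
from typing import Callable, Dict, List, Sequence


def swap_top_two(results: Sequence[str]) -> List[str]:
    """Copy of the ranking with the first two results exchanged."""
    ranked = list(results)
    if len(ranked) >= 2:
        ranked[0], ranked[1] = ranked[1], ranked[0]
    return ranked


def reverse_ranking(results: Sequence[str]) -> List[str]:
    """Copy of the ranking in reverse order (lowest-ranked result shown first)."""
    return list(reversed(results))


# Hypothetical scenario labels; "regular" leaves the engine's output untouched.
SCENARIOS: Dict[str, Callable[[Sequence[str]], List[str]]] = {
    "regular": list,
    "swapped": swap_top_two,
    "reversed": reverse_ranking,
}


def build_condition(results: Sequence[str], scenario: str) -> List[str]:
    """Return the result order shown to a participant under the given scenario."""
    if scenario not in SCENARIOS:
        raise ValueError(f"unknown scenario: {scenario!r}")
    return SCENARIOS[scenario](results)
```

For example, `build_condition(["r1", "r2", "r3", "r4"], "swapped")` yields `["r2", "r1", "r3", "r4"]`. Because the original list is never modified, fixations recorded at a displayed position can still be mapped back to the engine's original rank.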

4. Results

4.1. Search Engine

4.2. Apparatus

4.3. Participants

4.4. Interface

4.5. Search Engine Results

4.6. Eye-Movement Measures

4.7. Scenario

4.8. Tasks

4.9. Language

4.10. Presentation of Results

4.11. Research Questions

4.12. Key Findings

5. Discussion

5.1. Methodological Limitations in the Reviewed Studies

5.2. Avenues for Future Research

5.3. Limitations of This Literature Review

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Study Reference | N | Search Engine | Participants | Device | Interface | Result Type | Eye-Movement Measures | Scenario | Tasks | Language | Presentation |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Granka et al. (2004) [28] | 26 |  | Students | ASL 504 | Desktop | Organic | Temporal, count | Regular | Informational | English | Table |
| Klöckner et al. (2004) [72] | 41 |  | Random | ASL 504 | Desktop | Organic | Temporal | Modified | Informational | German | n/a |
| Pan et al. (2004) [33] | 30 | Google, Yahoo | Students | ASL 504 | Desktop | Organic | Temporal | Regular | At will | English | Table |
| Aula et al. (2005) [73] | 28 |  | Students | ASL 504 | Desktop | Organic | Temporal | Regular | Informational, navigational | English | AOI |
| Joachims et al. (2005) [29] | 29 |  | Students | ASL 504 | Desktop | Organic | Temporal, spatial, count | Modified | Informational, navigational | English | Table |
| Radlinski and Joachims (2005) [35] | 36 |  | Students | ASL 504 | Desktop | Organic | Temporal | Regular | At will | English | Table |
| Rele and Duchowski (2005) [62] | 16 |  | Random | Tobii 1750 | Desktop | Organic | Temporal, spatial, count | Modified | Informational, navigational | English | Heat map |
| Lorigo et al. (2006) [31] | 23 |  | Students | ASL 504 | Desktop | Organic | Temporal, spatial, count | Regular | Informational, navigational | English | Gaze plot |
| Guan and Cutrell (2007) [37] | 18 | MSN | Random | Tobii x50 | Desktop | Organic | Temporal, spatial, count | Modified | Informational, navigational | English | Table |
| Joachims et al. (2007) [30] | 29 |  | Students | ASL 504 | Desktop | Organic | Temporal, spatial, count | Modified | Informational, navigational | English | Chart |
| Pan et al. (2007) [34] | 22 |  | Students | ASL 504 | Desktop | Organic | Temporal, spatial, count | Modified | Informational, navigational | English | Table |
| Cutrell and Guan (2007) [38] | 18 | MSN | Random | Tobii x50 | Desktop | Organic | Temporal, spatial, count | Modified | Informational, navigational | English | Heat map |
| Lorigo et al. (2008) [32] | 40 | Google, Yahoo | Students | ASL 504 | Desktop | Organic | Temporal, spatial, count | Modified | Informational, navigational | English | Chart |
| Egusa et al. (2008) [70] | 16 | Yahoo | Students | n/a | Desktop | Organic | Temporal, count | Regular | Informational | Japanese | Gaze plot |
| Xu et al. (2008) [7] | 5 |  | n/a | web camera/OpenGazer | Desktop | Organic | Spatial | Modified | Informational | English | Chart |
| Buscher et al. (2009) [36] | 32 | Live | Students | Tobii 1750 | Desktop | Organic | Temporal, count | Modified | Informational | German | Chart |
| Kammerer et al. (2009) [66] | 30 |  | Students | Tobii 1750 | Desktop | Organic | Temporal, count | Modified | Informational | German | Chart |
| Buscher et al. (2010) [41] | 38 | Bing | Random | Tobii x50 | Desktop | Paid | Temporal, count | Modified | Informational, navigational | English | Chart |
| Dumais et al. (2010) [11] | 38 | Bing | Random | Tobii x50 | Desktop | Organic, paid | Temporal, spatial, count | Modified | Informational, navigational | English | Heat map |
| Marcos and González-Caro (2010) [60] | 58 | Google, Yahoo | Random | Tobii T120 | Desktop | Organic, paid | Temporal, spatial, count | Regular | Informational, navigational, transactional, multimedia | Spanish | Heat map |
| Gerjets et al. (2011) [74] | 30 |  | Students | Tobii 1750 | Desktop | Organic | Temporal, count | Modified | Informational | German | Table |
| González-Caro and Marcos (2011) [61] | 58 | Google, Yahoo | Random | Tobii T120 | Desktop | Organic, paid | Temporal, spatial, count | Regular | Informational, navigational, transactional | Spanish | Heat map |
| Huang et al. (2011) [40] | 36 | Bing | Random | Tobii x50 | Desktop | Organic, paid | Temporal, spatial, count | Regular | Informational, navigational | English | Heat map |
| Balatsoukas and Ruthven (2012) [75] | 24 |  | Students | Tobii T60 | Desktop | Organic | Temporal, count | Regular | Informational | English | Table |
| Huang et al. (2012) [39] | 36 | Bing | Random | Tobii x50 | Desktop | Organic, paid | Temporal, count | Regular | Informational, navigational | English | Chart |
| Kammerer and Gerjets (2012) [64] | 58 |  | Students | Tobii 1750 | Desktop | Organic | Temporal | Modified | Informational | German | Chart |
| Kim et al. (2012) [49] | 32 |  | Students | Facelab 5 | Mobile | Organic | Temporal | Modified | Informational, navigational | English | AOI |
| Marcos et al. (2012) [76] | 57 |  | Students | Tobii 1750 | Desktop | Organic | Temporal, count | Regular | Informational | Spanish | Table |
| Nettleton and González-Caro (2012) [77] | 57 |  | Students | Tobii 1750 | Desktop | Organic | Temporal, spatial, count | Regular | Informational | Spanish | Heat map |
| Djamasbi et al. (2013a) [42] | 11 |  | Students | Tobii X120 | Desktop | Organic | Temporal, spatial, count | Regular | Informational | English | AOI |
| Djamasbi et al. (2013b) [43] | 16 |  | Students | Tobii X120 | Desktop/mobile | Paid | Temporal, spatial, count | Regular | Informational | English | Heat map |
| Djamasbi et al. (2013c) [44] | 34 |  | Students | Tobii X120 | Desktop/mobile | Paid | Temporal, spatial, count | Regular | Informational | English | Heat map |
| Hall-Phillips et al. (2013) [45] | 18 |  | Students | Tobii X120 | Desktop | Paid | Temporal, spatial, count | Regular | Informational | English | AOI |
| Bataineh and Al-Bataineh (2014) [55] | 25 |  | Students | Tobii T120 | Desktop | Organic | Temporal, spatial, count | Modified | Informational | English | Heat map |
| Dickerhoof and Smith (2014) [78] | 18 |  | Students | Tobii T60 | Desktop | Organic | Temporal, spatial, count | Regular | Informational | English | Table |
| Gossen et al. (2014) [53] | 31 | Google and Blinde-Kuh | Children, adults | Tobii T60 | Desktop | Organic | Temporal, spatial, count | Regular | Informational, navigational | German | Heat map |
| Hofmann et al. (2014) [67] | 25 | Bing | Random | Tobii TX-300 | Desktop | Organic | Temporal, spatial, count | Regular | Informational, navigational | English | Heat map |
| Jiang et al. (2014) [69] | 20 |  | Students | Tobii 1750 | Desktop | Organic | Temporal, count | Modified | Informational, transactional | English | Chart |
| Lagun et al. (2014) [57] | 24 |  | Random | Tobii X60 | Mobile | Knowledge graph | Temporal, spatial | Regular | Informational | English | Heat map |
| Y. Liu et al. (2014) [6] | 37 | Sogou | Students | Tobii X2-30 | Desktop | Organic | Temporal, count | Regular | Informational, navigational | Chinese | Chart |
| Z. Liu et al. (2014) [46] | 30 | Baidu | Students | Tobii X2-30 | Desktop | Paid | Temporal, count | Regular | Informational, navigational | Chinese | Chart |
| Lu and Jia (2014) [63] | 58 | Baidu | Students | Tobii T120 | Desktop | Image | Temporal, spatial, count | Regular | Informational | Chinese | Chart |
| Mao et al. (2014) [48] | 31 | Baidu | Students | Tobii X2-30 | Desktop | Organic | Temporal, count | Regular | Informational, navigational, transactional | Chinese | Table |
| Kim et al. (2015) [50] | 35 |  | Students | Facelab 5 | Desktop/mobile | Organic | Temporal, count | Modified | Informational, navigational | English | Table |
| Z. Liu et al. (2015) [47] | 32 | Sogou | Students | Tobii X2-30 | Desktop | Organic | Temporal, spatial, count | Modified | Informational | Chinese | Heat map |
| Bilal and Gwizdka (2016) [54] | 21 |  | Children | Tobii X2-60 | Desktop | Organic | Temporal, count | Modified | Informational | English | Table |
| Domachowski et al. (2016) [59] | 20 |  | Random | Tobii X2-60 | Mobile | Paid | Temporal, spatial, count | Modified | Informational, transactional | German | Heat map |
| Kim et al. (2016a) [56] | 18 |  | Students | Eye Tribe | Mobile | Organic | Temporal, spatial, count | Modified | Informational | English | AOI |
| Kim et al. (2016b) [51] | 24 |  | Students | Facelab 5 | Mobile | Organic | Temporal, spatial, count | Modified | Informational | English | Chart |
| Lagun et al. (2016) [58] | 24 |  | Random | SMI Glasses | Mobile | Paid | Temporal, spatial, count | Modified | Transactional | English | Heat map |
| Kim et al. (2017) [52] | 24 |  | Students | Facelab 5 | Mobile | Organic | Temporal, count | Modified | Informational, navigational | English | Chart |
| Papoutsaki et al. (2017) [8] | 36 | Bing and Google | Random | SearchGazer | Desktop | Organic, paid | Temporal, count | Modified | Informational, navigational | English | Heat map |
| Bhattacharya and Gwizdka (2018) [79] | 26 |  | Students | Tobii TX300 | Desktop | Organic | Temporal, count | Regular | Informational | English | Table |
| Hautala et al. (2018) [10] | 36 |  | Children | EyeLink 1000 | Desktop | Organic | Temporal, count | Modified | Informational | Finnish | Chart |
| Schultheiss et al. (2018) [65] | 25 |  | Students | Tobii T60 | Desktop | Organic | Temporal, spatial, count | Modified | Informational, navigational | German | Chart |
| Sachse (2019) [80] | 31 |  | Random | Pupil ET | Mobile | Organic | Count | Modified | Informational, navigational | German | Chart |
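Summary statistics of the sample sizes can be recomputed directly from column N of the table above. The sketch below transcribes the 56 values in table order and uses only Python's standard `statistics` module; the use of the sample (n − 1) standard deviation via `statistics.stdev` is an assumption, since the review's own choice of estimator is not stated here.

```python
import statistics

# Sample sizes (column N) of the 56 studies in Appendix A, in table order.
SAMPLE_SIZES = [
    26, 41, 30, 28, 29, 36, 16, 23, 18, 29,
    22, 18, 40, 16, 5, 32, 30, 38, 38, 58,
    30, 58, 36, 24, 36, 58, 32, 57, 57, 11,
    16, 34, 18, 25, 18, 31, 25, 20, 24, 37,
    30, 58, 31, 35, 32, 21, 20, 18, 24, 24,
    24, 36, 26, 36, 25, 31,
]


def describe(values):
    """Return mean, median, sample SD, minimum, and maximum of a list of numbers."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "sd": statistics.stdev(values),  # sample standard deviation (n - 1)
        "min": min(values),
        "max": max(values),
    }


if __name__ == "__main__":
    for name, value in describe(SAMPLE_SIZES).items():
        print(f"{name:>6}: {value:.2f}")
```

The same `describe` function can be applied to any other numeric column extracted from the coded studies.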

References

- Goldberg, J.H.; Wichansky, A.M. Eye tracking in usability evaluation: A practitioner’s guide. In The Mind’s Eye: Cognitive and Applied Aspects of Eye Movement Research; Hyönä, J., Radach, R., Deubelk, H., Eds.; Elsevier: New York, NY, USA, 2003; pp. 493–516. ISBN 9780444510204. [Google Scholar]

- Goldberg, J.H.; Stimson, M.J.; Lewenstein, M.; Scott, N.; Wichansky, A.M. Eye tracking in web search tasks. In Proceedings of the Symposium on Eye Tracking Research & Applications—ETRA ’02; ACM: New York, NY, USA, 2002; pp. 51–58. [Google Scholar]

- Duchowski, A.T. Eye Tracking Methodology; Springer: Champangne, IL, USA, 2017; ISBN 978-3-319-57881-1. [Google Scholar]

- Wedel, M.; Pieters, R. A review of eye-tracking research in marketing. In Review of Marketing Research; Malhotra, N.K., Ed.; Emerald Group Publishing Limited: Bingley, UK, 2008; pp. 123–147. [Google Scholar]

- Granka, L.; Feusner, M.; Lorigo, L. Eye monitoring in online search. In Passive Eye Monitoring: Signals and Communication Technologies; Hammoud, R., Ed.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 347–372. [Google Scholar]

- Liu, Y.; Wang, C.; Zhou, K.; Nie, J.; Zhang, M.; Ma, S. From skimming to reading. In Proceedings of the 23rd ACM International Conference on Information and Knowledge Management—CIKM ’14; ACM: New York, NY, USA, 2014; pp. 849–858. [Google Scholar]

- Xu, S.; Lau, F.C.M.M.; Jiang, H.; Lau, F.C.M.M. Personalized online document, image and video recommendation via commodity eye-tracking categories and subject descriptors. In Proceedings of the 2008 ACM Conference on Recommender Systems; ACM: New York, NY, USA, 2008; pp. 83–90. [Google Scholar]

- Papoutsaki, A.; Laskey, J.; Huang, J. SearchGazer: Webcam eye tracking for remote studies of web search. In Proceedings of the 2nd ACM SIGIR Conference on Information Interaction and Retrieval, CHIIR 2017; ACM: New York, NY, USA, 2017; pp. 17–26. [Google Scholar]

- Wade, N.J.; Tatler, B.W. Did Javal measure eye movements during reading. J. Eye Mov. Res. 2009, 2, 1–7. [Google Scholar] [CrossRef]

- Hautala, J.; Kiili, C.; Kammerer, Y.; Loberg, O.; Hokkanen, S.; Leppänen, P.H.T. Sixth graders’ evaluation strategies when reading Internet search results: An eye-tracking study. Behav. Inf. Technol. 2018, 37, 761–773. [Google Scholar] [CrossRef]

- Dumais, S.; Buscher, G.; Cutrell, E. Individual differences in gaze patterns for web search. In Proceedings of the Third Symposium on Information Interaction in Context—IIiX ’10; ACM: New York, NY, USA, 2010; pp. 185–194. [Google Scholar]

- Alemdag, E.; Cagiltay, K. A systematic review of eye tracking research on multimedia learning. Comput. Educ. 2018, 125, 413–428. [Google Scholar] [CrossRef]

- Lai, M.-L.; Tsai, M.-J.; Yang, F.-Y.; Hsu, C.-Y.; Liu, T.-C.; Lee, S.W.-Y.; Lee, M.-H.; Chiou, G.-L.; Liang, J.-C.; Tsai, C.-C. A review of using eye-tracking technology in exploring learning from 2000 to 2012. Educ. Res. Rev. 2013, 10, 90–115. [Google Scholar] [CrossRef]

- Leggette, H.; Rice, A.; Carraway, C.; Baker, M.; Conner, N. Applying eye-tracking research in education and communication to agricultural education and communication: A review of literature. J. Agric. Educ. 2018, 59, 79–108. [Google Scholar] [CrossRef]

- Rosch, J.L.; Vogel-Walcutt, J.J. A review of eye-tracking applications as tools for training. Cogn. Technol. Work 2013, 15, 313–327. [Google Scholar] [CrossRef]

- Yang, F.-Y.; Tsai, M.-J.; Chiou, G.-L.; Lee, S.W.-Y.; Chang, C.-C.; Chen, L.L. Instructional suggestions supporting science learning in digital environments. J. Educ. Technol. Soc. 2018, 21, 22–45. [Google Scholar]

- Meissner, M.; Oll, J. The Promise of Eye-Tracking Methodology in Organizational Research: A Taxonomy, Review, and Future Avenues. Organ. Res. Methods 2019, 22, 590–617. [Google Scholar] [CrossRef]

- Lund, H. Eye tracking in library and information science: A literature review. Libr. Hi Tech 2016, 34, 585–614. [Google Scholar] [CrossRef]

- Sharafi, Z.; Soh, Z.; Guéhéneuc, Y.-G. A systematic literature review on the usage of eye-tracking in software engineering. Inf. Softw. Technol. 2015, 67, 79–107. [Google Scholar] [CrossRef]

- Rayner, K. Eye Movements in Reading and Information Processing: 20 Years of Research. Psychol. Bull. 1998, 124, 372–422. [Google Scholar] [CrossRef] [PubMed]

- Liversedge, S.P.; Paterson, K.B.; Pickering, M.J. Eye Movements and Measures of Reading Time. Eye Guid. Read. Scene Percept. 1998, 55–75. [Google Scholar] [CrossRef]

- Liversedge, S.P.; Findlay, J.M. Saccadic eye movements and cognition. Trends Cogn. Sci. 2000, 4, 6–14. [Google Scholar] [CrossRef]

- Pernice, K.; Nielsen, J. How to Conduct and Evaluate Usability Studies Ssing Eyetracking; Nielsen Norman Group: Fremont, CA, USA, 2009. [Google Scholar]

- Moher, D. Preferred reporting Items for systematic reviews and meta-analyses: The PRISMA statement. Ann. Intern. Med. 2009, 151, 264–267. [Google Scholar] [CrossRef] [PubMed]

- Webster, J.; Watson, R.T. Analyzing the past to prepare for the future: Writing a literature review. MIS Q. 2002, 26, xiii. [Google Scholar]

- Lewandowski, D. The retrieval effectiveness of web search engines: Considering results descriptions. J. Doc. 2008, 64, 915–937. [Google Scholar] [CrossRef]

- Jansen, B.J.; Mullen, T. Sponsored search: An overview of the concept, history, and technology. Int. J. Electron. Bus. 2008, 6, 114–171. [Google Scholar] [CrossRef]

- Granka, L.A.; Joachims, T.; Gay, G. Eye-tracking analysis of user behavior in WWW search. In Proceedings of the 27th Annual International Conference on Research and Development in Information Retrieval—SIGIR ’04; ACM: New York, NY, USA, 2004; pp. 478–479. [Google Scholar]

- Joachims, T.; Granka, L.; Pan, B.; Hembrooke, H.; Gay, G. Accurately interpreting clickthrough data as implicit feedback. ACM SIGIR Forum. 2005, 51, 154–161. [Google Scholar]

- Joachims, T.; Granka, L.; Pan, B.; Hembrooke, H.; Radlinski, F.; Gay, G. Evaluating the accuracy of implicit feedback from clicks and query reformulations in Web search. ACM Trans. Inf. Syst. 2007, 25, 7. [Google Scholar] [CrossRef]

- Lorigo, L.; Pan, B.; Hembrooke, H.; Joachims, T.; Granka, L.; Gay, G. The influence of task and gender on search and evaluation behavior using Google. Inf. Process. Manag. 2006, 42, 1123–1131. [Google Scholar] [CrossRef]

- Lorigo, L.; Haridasan, M.; Brynjarsdóttir, H.; Xia, L.; Joachims, T.; Gay, G.; Granka, L.; Pellacini, F.; Pan, B. Eye tracking and online search: Lessons learned and challenges ahead. J. Am. Soc. Inf. Sci. Technol. 2008, 59, 1041–1052. [Google Scholar] [CrossRef]

- Pan, B.; Hembrooke, H.A.; Gay, G.K.; Granka, L.A.; Feusner, M.K.; Newman, J.K. The determinants of web page viewing behavior. In Proceedings of the Eye Tracking Research & Applications—ETRA’2004; ACM: New York, NY, USA, 2004; Volume 1, pp. 147–154. [Google Scholar]

- Pan, B.; Hembrooke, H.; Joachims, T.; Lorigo, L.; Gay, G.; Granka, L. In Google we trust: Users’ decisions on rank, position, and relevance. J. Comput. Commun. 2007, 12, 801–823. [Google Scholar] [CrossRef]

- Radlinski, F.; Joachims, T. Query chains: Learning to rank from implicit feedback. In Proceedings of the Eleventh ACM SIGKDD International Conference on Knowledge Discovery in Data Mining; ACM: New York, NY, USA, 2005; pp. 239–248. [Google Scholar]

- Buscher, G.; van Elst, L.; Dengel, A. Segment-level display time as implicit feedback. In Proceedings of the 32nd international ACM SIGIR Conference on Research and Development in Information Retrieval—SIGIR ’09; ACM: New York, NY, USA, 2009; pp. 67–74. [Google Scholar]

- Guan, Z.; Cutrell, E. An eye tracking study of the effect of target rank on web search. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems—CHI ’07; ACM: New York, NY, USA, 2007; pp. 417–420. [Google Scholar]

- Cutrell, E.; Guan, Z. What are you looking for? An eye-tracking study of information usage in web search. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems—CHI ’07; ACM: New York, NY, USA, 2007; pp. 407–416. [Google Scholar]

- Huang, J.; White, R.W.; Buscher, G. User see, user point. In Proceedings of the 2012 ACM Annual Conference on Human Factors in Computing Systems—CHI ’12; ACM: New York, NY, USA, 2012; pp. 1341–1350. [Google Scholar]

- Huang, J.; White, R.W.; Dumais, S. No clicks, no problem: Using cursor movements to understand and improve search. In Proceedings of the 2011 Annual Conference on Human Factors in Computing Systems—CHI ’11; ACM: New York, NY, USA, 2011; pp. 1225–1234. [Google Scholar]

- Buscher, G.; Dumais, S.; Cutrell, E. The good, the bad, and the random: An eye-tracking study of ad quality in web search. In Proceedings of 33rd International ACM SIGIR; ACM: New York, NY, USA, 2010; pp. 42–49. [Google Scholar]

- Djamasbi, S.; Hall-Phillips, A.; Yang, R. Search results pages and competition for attention theory: An exploratory eye-tracking study. In Human Interface and the Management of Information. Information and Interaction Design; Yamamoto, S., Ed.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 576–583. [Google Scholar]

- Djamasbi, S.; Hall-Phillips, A.; Yang, R. SERPs and ads on mobile devices: An eye tracking study for generation Y. In Universal Access in Human-Computer Interaction. User and Context Diversity; Stephanidis, C., Antona, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 259–268. [Google Scholar]

- Djamasbi, S.; Hall-Phillips, A.; Yang, R. An examination of ads and viewing behavior: An eye tracking study on desktop and mobile devices. In Proceedings of the AMCIS 2013, Chicago, IL, USA, 15–17 August 2013. [Google Scholar]

- Hall-Phillips, A.; Yang, R.; Djamasbi, S. Do ads matter? An exploration of web search behavior, visual hierarchy, and search engine results pages. In Proceedings of the 2013 46th Hawaii International Conference on System Sciences; IEEE: Piscatway, NJ, USA, 2013; pp. 1563–1568. [Google Scholar]

- Liu, Z.; Liu, Y.; Zhang, M.; Ma, S. How do sponsored search. In Information Retrieval Technology; Jaafar, A., Ali, N.M., Noah, S.A.M., Smeaton, A.F., Bruza, P., Bakar, Z.A., Jamil, N., Sembok, T.M.T., Eds.; Springer: Champagne, IL, USA, 2014; pp. 73–85. [Google Scholar]

- Liu, Z.; Liu, Y.; Zhou, K.; Zhang, M.; Ma, S. Influence of vertical result in web search examination. In Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval—SIGIR ’15; ACM: New York, NY, USA, 2015; pp. 193–202. [Google Scholar]

- Mao, J.; Liu, Y.; Zhang, M.; Ma, S. Estimating credibility of user clicks with mouse movement and eye-tracking information. In Natural Language Processing and Chinese Computing; Zong, C., Nie, J.-Y., Zhao, D., Feng, Y., Eds.; Springer: Berlin, Germany, 2014; pp. 263–274. [Google Scholar]

- Kim, J.; Thomas, P.; Sankaranarayana, R.; Gedeon, T. Comparing scanning behaviour in web search on small and large screens. In Proceedings of the Seventeenth Australasian Document Computing Symposium on—ADCS ’12; ACM: New York, NY, USA, 2012; pp. 25–30. [Google Scholar]

- Kim, J.; Thomas, P.; Sankaranarayana, R.; Gedeon, T.; Yoon, H.-J. Eye-tracking analysis of user behavior and performance in web search on large and small screens. J. Assoc. Inf. Sci. Technol. 2015, 66, 526–544. [Google Scholar] [CrossRef]

- Kim, J.; Thomas, P.; Sankaranarayana, R.; Gedeon, T.; Yoon, H.-J. Pagination versus scrolling in mobile web search. In Proceedings of the 25th ACM International on Conference on Information and Knowledge Management—CIKM ’16; ACM: New York, NY, USA, 2016; pp. 751–760. [Google Scholar]

- Kim, J.; Thomas, P.; Sankaranarayana, R.; Gedeon, T.; Yoon, H.-J. What snippet size is needed in mobile web search? In Proceedings of the 2017 Conference on Conference Human Information Interaction and Retrieval—CHIIR ’17; ACM: New York, NY, USA, 2017; pp. 97–106. [Google Scholar]

- Gossen, T.; Höbel, J.; Nürnberger, A. Usability and perception of young users and adults on targeted web search engines. In Proceedings of the 5th Information Interaction in Context Symposium on—IIiX ’14; ACM: New York, NY, USA, 2014; pp. 18–27. [Google Scholar]

- Bilal, D.; Gwizdka, J. Children’s eye-fixations on google search results. Assoc. Inf. Sci. Technol. 2016, 53, 1–6. [Google Scholar] [CrossRef]

- Bataineh, E.; Al-Bataineh, B. An analysis study on how female college students view the web search results using eye tracking methodology. In Proceedings of the International Conference on Human-Computer Interaction, Crete, Greece, 22–27 June 2014; p. 93. [Google Scholar]

- Kim, J.; Thomas, P.; Sankaranarayana, R.; Gedeon, T.; Yoon, H.-J. Understanding eye movements on mobile devices for better presentation of search results. J. Assoc. Inf. Sci. Technol. 2016, 67, 2607–2619. [Google Scholar] [CrossRef]

- Lagun, D.; Hsieh, C.-H.; Webster, D.; Navalpakkam, V. Towards better measurement of attention and satisfaction in mobile search. In Proceedings of the 37th International ACM SIGIR Conference on Research & Development in Information Retrieval—SIGIR ’14; ACM: New York, NY, USA, 2014; pp. 113–122. [Google Scholar]

- Lagun, D.; McMahon, D.; Navalpakkam, V. Understanding mobile searcher attention with rich ad formats. In Proceedings of the 25th ACM International on Conference on Information and Knowledge Management—CIKM ’16; ACM: New York, NY, USA, 2016; pp. 599–608. [Google Scholar]

- Domachowski, A.; Griesbaum, J.; Heuwing, B. Perception and effectiveness of search advertising on smartphones. Assoc. Inf. Sci. Technol. 2016, 53, 1–10. [Google Scholar] [CrossRef]

- Marcos, M.-C.; González-Caro, C. Comportamiento de los usuarios en la página de resultados de los buscadores. Un estudio basado en eye tracking. El Prof. Inf. 2010, 19, 348–358. [Google Scholar] [CrossRef][Green Version]

- González-Caro, C.; Marcos, M.-C. Different users and intents: An Eye-tracking analysis of web search. In Proceedings of the 4th ACM International Conference on Web Search and Data Mining, Hong Kong, China, 9–12 February 2011. [Google Scholar]

- Rele, R.S.; Duchowski, A.T. Using eyetracking to evaluate alternative search results interfaces. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2005, 49, 1459–1463. [Google Scholar] [CrossRef]

- Lu, W.; Jia, Y. An eye-tracking study of user behavior in web image search. In PRICAI 2014: Trends in Artificial Intelligence; Pham, D.-N., Park, S.-B., Eds.; Springer: Cham, Germany, 2014; pp. 170–182. [Google Scholar]

- Kammerer, Y.; Gerjets, P. Effects of search interface and Internet-specific epistemic beliefs on source evaluations during Web search for medical information: An eye-tracking study. Behav. Inf. Technol. 2012, 31, 83–97. [Google Scholar] [CrossRef]

- Schultheiss, S.; Sünkler, S.; Lewandowski, D. We still trust in Google, but less than 10 years ago: An eye-tracking study. Inf. Res. An Int. Electron. J. 2018, 23, 799. [Google Scholar]

- Kammerer, Y.; Wollny, E.; Gerjets, P.; Scheiter, K. How authority related epistemological beliefs and salience of source information influence the evaluation of web search results—An eye tracking study. In Proceedings of the 31st Annual Conference of the Cognitive Science Society (CogSci), Amsterdam, The Netherlands, 29 July–1 August 2009; pp. 2158–2163. [Google Scholar]

- Hofmann, K.; Mitra, B.; Radlinski, F.; Shokouhi, M. An eye-tracking study of user interactions with query auto completion. In Proceedings of the 23rd ACM International Conference on Conference on Information and Knowledge Management—CIKM ’14; ACM: New York, NY, USA, 2014; pp. 549–558. [Google Scholar]

- Broder, A. A taxonomy of web search. ACM SIGIR Forum. 2002, 36, 3–10. [Google Scholar] [CrossRef]

- Jiang, J.; He, D.; Allan, J. Searching, browsing, and clicking in a search session. In Proceedings of the 37th International ACM SIGIR Conference on Research & Development in Information Retrieval—SIGIR ’14; ACM: New York, NY, USA, 2014; pp. 607–616. [Google Scholar]

- Egusa, Y.; Takaku, M.; Terai, H.; Saito, H.; Kando, N.; Miwa, M. Visualization of user eye movements for search result pages. In Proceedings of the EVIA. 2008 (NTCIR-7 Pre-Meeting Workshop), Tokyo, Japan, 16–19 December 2008; pp. 42–46. [Google Scholar]

- Guy, I. Searching by talking: Analysis of voice queries on mobile web search. In Proceedings of the 39th International ACM SIGIR Conference on Research and Development in Information Retrieval—SIGIR ’16; ACM: New York, NY, USA, 2016; pp. 35–44. [Google Scholar]

- Klöckner, K.; Wirschum, N.; Jameson, A. Depth- and breadth-first processing of search result lists. In Proceedings of the Extended Abstracts of the 2004 Conference on Human Factors and Computing Systems—CHI ’04; ACM: New York, NY, USA, 2004; p. 1539. [Google Scholar]

- Aula, A.; Majaranta, P.; Räihä, K.-J. Eye-tracking reveals the personal styles for search result evaluation. Lect. Notes Comput. Sci. 2005, 3585, 1058–1061. [Google Scholar]

- Gerjets, P.; Kammerer, Y.; Werner, B. Measuring spontaneous and instructed evaluation processes during Web search: Integrating concurrent thinking-aloud protocols and eye-tracking data. Learn. Instr. 2011, 21, 220–231. [Google Scholar] [CrossRef]

- Balatsoukas, P.; Ruthven, I. An eye-tracking approach to the analysis of relevance judgments on the Web: The case of Google search engine. J. Am. Soc. Inf. Sci. Technol. 2012, 63, 1728–1746. [Google Scholar] [CrossRef]

- Marcos, M.-C.; Nettleton, D.; Sáez-Trumper, D. A user study of web search session behaviour using eye tracking data. In Proceedings of BCS-HCI 2012: People and Computers XXVI; ACM: New York, NY, USA, 2012; Volume XXVI, pp. 262–267.

- Nettleton, D.; González-Caro, C. Analysis of user behavior for web search success using eye tracker data. In Proceedings of the 2012 Eighth Latin American Web Congress; IEEE: Piscataway, NJ, USA, 2012; pp. 57–63.

- Dickerhoof, A.; Smith, C.L. Looking for query terms on search engine results pages. In Proceedings of the ASIST Annual Meeting, Seattle, WA, USA, 31 October–4 November 2014; Volume 51.

- Bhattacharya, N.; Gwizdka, J. Relating eye-tracking measures with changes in knowledge on search tasks. In Proceedings of the 2018 ACM Symposium on Eye Tracking Research & Applications (ETRA ’18); ACM: New York, NY, USA, 2018; pp. 1–5.

- Sachse, J. The influence of snippet length on user behavior in mobile web search. Aslib J. Inf. Manag. 2019, 71, 325–343.

- Cetindil, I.; Esmaelnezhad, J.; Li, C.; Newman, D. Analysis of instant search query logs. In Proceedings of the Fifteenth International Workshop on the Web and Databases (WebDB 2012), Scottsdale, AZ, USA, 20 May 2012; pp. 1–20.

- Mustafaraj, E.; Metaxas, P.T. From obscurity to prominence in minutes: Political speech and real-time search. In Proceedings of the WebSci10: Extending the Frontiers of Society On-Line, Raleigh, NC, USA, 26–27 April 2010.

- Bilal, D.; Huang, L.-M. Readability and word complexity of SERPs snippets and web pages on children’s search queries. Aslib J. Inf. Manag. 2019, 71, 241–259.

- Lewandowski, D.; Kammerer, Y. Factors influencing viewing behaviour on search engine results pages: A review of eye-tracking research. Behav. Inf. Technol. 2020, 1–31.

| | Mean | Median | SD | Min. | Max. |
|---|---|---|---|---|---|
| Participants | 30.19 | 30 | 12.21 | 5 | 58 |

| Study Reference | Research Questions | Topic |
|---|---|---|
| Granka et al. (2004) [28] | How do users interact with the list of ranked results of WWW search engines? Do they read the abstracts sequentially from top to bottom, or do they skip links? How many of the results do users evaluate before clicking on a link or reformulating the search? | BSB |
| Klöckner et al. (2004) [72] | In what order do users look at the entries in a search result list? | BSB |
| Pan et al. (2004) [33] | Are standard ocular metrics, such as mean fixation duration, gazing time, and saccade rate, as well as scan path differences, determined by individual differences, different types of web pages, the order in which a web page is viewed, or different tasks at hand? | BSB |
| Aula et al. (2005) [73] | What are the personal styles for search result evaluation? | BSB |
| Joachims et al. (2005) [29] | Do users scan the results from top to bottom? How many abstracts do they read before clicking? How does their behavior change if we artificially manipulate Google’s ranking? | BSB |
| Radlinski and Joachims (2005) [35] | How can query chains be used to extract useful information from a search engine log file? | RP |
| Rele and Duchowski (2005) [62] | Will a tabular interface, which spatially groups results into distinct element/category columns, increase efficiency and accuracy in scanning search results? | RP |
| Lorigo et al. (2006) [31] | How does the effort spent reading abstracts compare with selection behaviors, and how does the effort vary with user and task? Can we safely assume that when a user clicks on the nth abstract, they are making an informed decision based on the n-1 abstracts preceding it? | BSB |
| Guan and Cutrell (2007) [37] | How do users’ search behaviors vary when target results are displayed at various positions for informational and navigational tasks? | RP |
| Joachims et al. (2007) [30] | How do users behave on Google’s results page? Do users scan the results from top to bottom? How many abstracts do they read before clicking? How does their behavior change if we artificially manipulate Google’s ranking? | BSB |
| Pan et al. (2007) [34] | How relevant, according to human judgments, are the abstracts returned by Google (abstract relevance) and the pages associated with those abstracts (Web page relevance)? | BSB |
| Cutrell and Guan (2007) [38] | Do users read the descriptions? Are the URLs and other metadata used by anyone other than expert searchers? Does the context of the search or the type of task being supported matter? | BSB |
| Lorigo et al. (2008) [32] | How do users view the ranked results on a SERP? What is the relationship between the search result abstracts viewed and those clicked on, and do gender, search task, or search engine influence these behaviors? | BSB |
| Egusa et al. (2008) [70] | Which techniques can be used to visualize user behavior on search engine results pages? | RP |
| Xu et al. (2008) [7] | How can personalized online content recommendations be prepared according to the user’s previous reading, browsing, and video-watching behaviors? | RP |
| Buscher et al. (2009) [36] | What is the relationship between segment-level display time and segment-level feedback from an eye tracker in the context of information retrieval tasks? How do they compare to each other and to a pseudo-relevance feedback baseline on the segment level? How much can they improve the ranking of a large commercial Web search engine through re-ranking and query expansion? | BSB |
| Kammerer et al. (2009) [66] | How do authority-related epistemological beliefs and salience of source information influence the evaluation of web search results? | CSB |
| Buscher et al. (2010) [41] | How do users distribute their visual attention on different components on a SERP during web search tasks, especially on sponsored links? | AR |
| Dumais et al. (2010) [11] | How is the visual attention devoted to organic results influenced by page elements, in particular ads and related searches? | AR |
| Marcos and González-Caro (2010) [60] | What is the relationship between the users’ intention and their behavior when they browse the SERPs? | BSB |
| Gerjets et al. (2011) [74] | What type of spontaneous evaluation processes occur when users solve a complex search task related to a medical problem by using a standard search-engine design? | CSB |
| González-Caro and Marcos (2011) [61] | Is user browsing behavior on SERPs different for queries with informational, navigational, and transactional intent? | BSB |
| Huang et al. (2011) [40] | To what extent does gaze correlate with cursor behavior on SERPs and non-SERPs? What does cursor behavior reveal about search engine users’ result examination strategies, and how does this relate to search result clicks and prior eye-tracking research? Can we demonstrate useful applications of large-scale cursor data? | CCM |
| Balatsoukas and Ruthven (2012) [75] | What is the relationship between relevance criteria use and visual behavior in the context of predictive relevance judgments using the Google search engine? | CSB |
| Huang et al. (2012) [39] | When is the cursor position a good proxy for gaze position, and what is the effect of various factors, such as time, user, cursor behavior patterns, and search task, on gaze-cursor alignment? | CCM |
| Kammerer and Gerjets (2012) [64] | How do both the search interface (resource variable) and Internet-specific epistemic beliefs (individual variable) influence medical novices’ spontaneous source evaluations on SERPs during Web search for a complex, unknown medical problem? | CSB |
| Kim et al. (2012) [49] | What scanning behavior do users exhibit when they search for information on the web on small devices? | BSB |
| Marcos et al. (2012) [76] | What is the user behavior during a query session, that is, a sequence of user queries, results page views, and content page views, to find a specific piece of information? | MD |
| Nettleton and González-Caro (2012) [77] | How would learning to interpret user search behavior allow systems to adapt to different kinds of users, needs, and search settings? | BSB |
| Djamasbi et al. (2013a) [42] | Can competition for attention theory help predict users’ viewing behavior on SERPs? | BSB |
| Djamasbi et al. (2013b) [43] | Would the presence of ads affect the attention to search results on a mobile phone? Do users spend more time looking at advertisements than at entries? Can advertisements distract users’ attention from the search results? | AR |
| Djamasbi et al. (2013c) [44] | Does banner blindness occur on SERPs on computers and mobile phones? | AR |
| Hall-Phillips et al. (2013) [45] | Would the presence of ads affect the attention to the returned search results? Do users spend more time looking at ads than at entries? | AR |
| Bataineh and Al-Bataineh (2014) [55] | How do female college students view the web search results? | AG |
| Dickerhoof and Smith (2014) [78] | Do people fixate on their query terms on a search engine results page? | BSB |
| Gossen et al. (2014) [53] | Are children more successful with Blinde-Kuh (BK) than with Google? Do children prefer BK over Google? Do children and adults perceive web search interfaces differently? Do children and adults employ different search strategies? | AG |
| Hofmann et al. (2014) [67] | How many suggestions do users consider while formulating a query? Does position bias affect query selection, possibly resulting in suboptimal queries? How does the quality of query auto-completion affect user interactions? Can observed behavior be used to infer query auto-completion quality? | BSB |
| Jiang et al. (2014) [69] | How do users’ search behaviors, especially browsing and clicking behaviors, vary in complex tasks of different types? How do users’ search behaviors change over time in a search session? | CSB |
| Lagun et al. (2014) [57] | Could tracking the browser viewport (the visible portion of a web page) on mobile phones enable accurate measurement of user attention at scale and provide a good measure of search satisfaction in the absence of clicks? | CCM |
| Y. Liu et al. (2014) [6] | What is the true examination sequence of the user on SERPs? | BSB |
| Z. Liu et al. (2014) [46] | How do sponsored search results affect user behavior in web search? | AR |
| Lu and Jia (2014) [63] | How do users view the results returned by image search engines? Do they scan the results from left to right and top to bottom, i.e., following the Western reading habit? How many results do users view before clicking on one? | BSB |
| Mao et al. (2014) [48] | How can the credibility of user clicks on SERPs be estimated with mouse movement and eye-tracking information? | CCM |
| Kim et al. (2015) [50] | Is there any difference between user search behavior on large and small screens? | MD |
| Z. Liu et al. (2015) [47] | What is the influence of vertical results on search examination behaviors? | MD |
| Bilal and Gwizdka (2016) [54] | What online reading behavior patterns do children aged 11 to 13 demonstrate when reading Google SERPs? What eye fixation patterns and dwell times do these children exhibit when reading Google SERPs? | AG |
| Domachowski et al. (2016) [59] | How is attention distributed on the first screen of a mobile SERP, and which actions do users take? How effective are ads on mobile SERPs regarding the clicks generated as an indicator of relevance? Are users subjectively aware of ads on mobile SERPs, and what is their estimation of ads? | AR |
| Kim et al. (2016a) [56] | How does the diversification of screen sizes on hand-held devices affect how users search? | MD |
| Kim et al. (2016b) [51] | Is horizontal swiping for mobile web search better than vertical scrolling? | MD |
| Lagun et al. (2016) [58] | How should rich, answer-like results be evaluated, what is their effect on users’ gaze, and how do they impact search satisfaction for queries with commercial intent? | AR |
| Kim et al. (2017) [52] | What snippet size is needed in mobile web search? | MD |
| Papoutsaki et al. (2017) [8] | Can SearchGazer be useful for search behavior studies? | BSB |
| Bhattacharya and Gwizdka (2018) [79] | Are the changes in verbal knowledge, from before to after a search task, observable in eye-tracking measures? | BSB |
| Hautala et al. (2018) [10] | Are sixth-grade students able to utilize information provided by search result components (i.e., title, URL, and snippet) as reflected in the selection rates? What information sources do the students pay attention to, and which evaluation strategies do they use during their selection? Does the early positioning of correct search results on the search list decrease the need to inspect other search results? Are there differences between students in how they read and evaluate Internet search results? | AG |
| Schultheiss et al. (2018) [65] | Can the results by Pan et al. (2007) be replicated, despite temporal and geographical differences? | BSB |
| Sachse (2019) [80] | What snippet size should be used in mobile web search? | BSB |

| Study Reference | Key Findings |
|---|---|
| Granka et al. (2004) [28] | Reported the amount of time spent viewing the presented abstracts, the total number of abstracts viewed, and measures of how thoroughly searchers evaluated their result sets. |
| Klöckner et al. (2004) [72] | Defined depth-first and breadth-first processing of search result lists. |
| Pan et al. (2004) [33] | The gender of subjects, the viewing order of a web page, and the interaction of page order and site type affected online ocular behavior. |
| Aula et al. (2005) [73] | Based on evaluation styles, the users were divided into economic and exhaustive evaluators. Economic evaluators made their decision about the next action faster and based on less information than exhaustive evaluators. |
| Joachims et al. (2005) [29] | Users’ clicking decisions were influenced by the relevance of the results, but they were biased by the trust they had in the retrieval function and by the overall quality of the result set. |
| Radlinski and Joachims (2005) [35] | Presented a novel approach for using clickthrough data to learn ranked retrieval functions for web search results. |
| Rele and Duchowski (2005) [62] | The eye-movement analysis provided some insights into the importance of search-result abstract elements, such as the title, summary, and URL, while searching. |
| Lorigo et al. (2006) [31] | The query result abstracts were viewed in the order of their ranking in only about one fifth of the cases, and only an average of about three abstracts per result page were viewed at all. |
| Guan and Cutrell (2007) [37] | Users spent more time on tasks and were less successful in finding target results when targets were displayed at lower positions in the list. |
| Joachims et al. (2007) [30] | Relative preferences were accurate not only between results from an individual query, but across multiple sets of results within chains of query reformulations. |
| Pan et al. (2007) [34] | College student users had substantial trust in Google’s ability to rank results by their true relevance to the query. |
| Cutrell and Guan (2007) [38] | Adding information to the contextual snippet significantly improved performance for informational tasks but degraded performance for navigational tasks. |
| Lorigo et al. (2008) [32] | Found strong similarities between behaviors on Google and Yahoo! |
| Egusa et al. (2008) [70] | Proposed new techniques for visualizing user behavior on search engine results pages. |
| Xu et al. (2008) [7] | Proposed a personalized recommendation algorithm for online documents, images, and videos. |
| Buscher et al. (2009) [36] | Feedback based on display time on the segment level was much coarser than feedback from eye-tracking for search personalization. |
| Kammerer et al. (2009) [66] | Authority-related epistemological beliefs affected the evaluation of web search results presented by a search engine. |
| Buscher et al. (2010) [41] | The amount of visual attention that people devoted to ads depended on the ads’ quality, but not on the type of task. |
| Dumais et al. (2010) [11] | Provided insights into searchers’ interactions with the whole page, not just individual components. |
| Marcos and González-Caro (2010) [60] | A relationship existed between the users’ intention and their behavior when they browsed the results page. |
| Gerjets et al. (2011) [74] | Measured spontaneous and instructed evaluation processes during a web search. |
| González-Caro and Marcos (2011) [61] | Organic results were the main focus of attention for all intentions; except for transactional queries, the users did not spend much time exploring sponsored results. |
| Huang et al. (2011) [40] | The cursor position was closely related to eye gaze, especially on SERPs. |
| Balatsoukas and Ruthven (2012) [75] | Proposed a novel stepwise methodological framework for the analysis of relevance judgments and eye movements on the web. |
| Huang et al. (2012) [39] | Characterized gaze and cursor alignment in web search. |
| Kammerer and Gerjets (2012) [64] | Students using the tabular interface paid less visual attention to commercial search results and selected objective search results more often and commercial ones less often than students using the list interface. |
| Kim et al. (2012) [49] | On a small screen, users needed relatively more time to conduct a search than they did on a large screen, despite tending to look less far ahead beyond the link that they eventually selected. |
| Marcos et al. (2012) [76] | Proposed a model of user search behavior that consists of five possible navigation patterns. |
| Nettleton and González-Caro (2012) [77] | Successful users formulated fewer queries per session and visited a smaller number of documents than unsuccessful users. |
| Djamasbi et al. (2013a) [42] | Viewing behavior can potentially have an impact on effective search and thus on the user experience of SERPs. |
| Djamasbi et al. (2013b) [43] | The presence of advertisements and their location on the screen can have an impact on user experience and search. |
| Djamasbi et al. (2013c) [44] | Top ads may be more effective in attracting users’ attention on mobile phones than on desktop computers. |
| Hall-Phillips et al. (2013) [45] | Findings provided support for the competition for attention theory in that users were looking at advertisements and entries when evaluating SERPs. |
| Bataineh and Al-Bataineh (2014) [55] | Searching in a non-native language required more time for scanning and reading the results. |
| Dickerhoof and Smith (2014) [78] | Users fixated on some of the displayed query terms; however, they fixated on other words and parts of the page more frequently. |
| Gossen et al. (2014) [53] | Children used a breadth-first-like search strategy, examining the whole result list, while adults only examined the first top results and reformulated the query. |
| Hofmann et al. (2014) [67] | Users’ focus on the top query auto-completion suggestions was due to examination bias, not ranking quality. |
| Jiang et al. (2014) [69] | User behavior in the four types of tasks differed in various aspects, including search activeness, browsing style, clicking strategy, and query reformulation. |
| Lagun et al. (2014) [57] | Identified increased scrolling past the direct answer and increased time below the direct answer as clear, measurable signals of user dissatisfaction with direct answers. |
| Y. Liu et al. (2014) [6] | Proposed a two-stage examination model: from skimming to reading, and from reading to clicking. |
| Z. Liu et al. (2014) [46] | Different presentation styles among sponsored links might lead to different behavior biases, not only for the sponsored search results but also for the organic ones. |
| Lu and Jia (2014) [63] | Image search results at certain locations, e.g., the top-center area in a grid layout, were more attractive than others. |
| Mao et al. (2014) [48] | Credible user behaviors could be separated from non-credible ones with many interaction behavior features. |
| Kim et al. (2015) [50] | Users had more difficulty extracting information from search results pages on the smaller screens, although they exhibited less eye movement as a result of an infrequent use of the scroll function. |
| Z. Liu et al. (2015) [47] | Examined the influence of vertical results on web search examination. |
| Bilal and Gwizdka (2016) [54] | Grade level or age had a more significant effect on reading behaviors, fixations, first result rank, and interactions with SERPs than task type. |
| Domachowski et al. (2016) [59] | There was no ad blindness on mobile searches, but, as with desktop searches, users tended to avoid search advertising on smartphones. |
| Kim et al. (2016a) [56] | Behavior on three different small screen sizes (early smartphones, recent smartphones, and phablets) revealed no significant difference in the efficiency of carrying out tasks. |
| Kim et al. (2016b) [51] | Users of pagination were more likely to find relevant documents, especially those on different pages, spent more time attending to relevant results, and were faster to click while spending less time on the search result pages overall. |
| Lagun et al. (2016) [58] | Showing rich ad formats improved the search experience by drawing more attention to the information-rich ad and allowing users to interact to view more offers. |
| Kim et al. (2017) [52] | Users with long snippets on mobile devices exhibited longer search times with no better search accuracy for informational tasks. |
| Papoutsaki et al. (2017) [8] | Introduced SearchGazer, a web-based eye tracker for remote web search studies using common webcams already present in laptops and some desktop computers. |
| Bhattacharya and Gwizdka (2018) [79] | Users with higher change in knowledge differed significantly in terms of their total reading-sequence length, reading-sequence duration, and number of reading fixations, when compared to participants with lower knowledge change. |
| Hautala et al. (2018) [10] | Students generally made flexible use of both eliminative and confirmatory evaluation strategies when reading Internet search results. |
| Schultheiss et al. (2018) [65] | Although the viewing behavior was influenced more by the position than by the relevance of a snippet, the crucial factor for a result to be clicked was its relevance, not its position on the results page. |
| Sachse (2019) [80] | Short snippets provide too little information about the result. Long snippets of five lines lead to better performance than medium snippets for navigational queries, but worse performance for informational queries. |

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Strzelecki, A. Eye-Tracking Studies of Web Search Engines: A Systematic Literature Review. Information 2020, 11, 300. https://doi.org/10.3390/info11060300
