1. Introduction

Cloud computing has become an attractive paradigm for organisations because it enables “convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort [1]”. However, security concerns about outsourcing data and applications to the cloud have slowed cloud adoption. In fact, cloud customers are afraid of losing control over their data and applications and of being exposed to data loss, data compliance and privacy risks. Therefore, when it comes to selecting a cloud service provider (CSP), cloud customers evaluate CSPs first on security (82%) and data privacy (81%), and only then on cost (78%) [2]. This means that a cloud customer is more likely to engage with a CSP that shows the best capabilities to fully protect information assets in its cloud service offerings. To identify the “ideal” CSP, a customer first has to assess and compare the security posture of the CSPs offering similar services. Then, the customer has to select, among the candidate CSPs, the one that best meets his security requirements.

Selecting the most secure CSP is not straightforward. When the tenant outsources his services to a CSP, he also delegates to the CSP the implementation of security controls to protect those services. However, since the CSP’s main objective is to make a profit, it can be assumed that it does not want to invest more than necessary in security. Thus, there is a tension between tenant and CSP over the provision of security. In addition, unlike other provider attributes such as cost or performance, there are no measurable and precise metrics to quantify security [3]. The consequences are twofold. Not only is it hard for the tenant to assess the security of outsourced services, it is also hard for the CSP to demonstrate its security capabilities and thus to negotiate a contract. Even if a CSP puts a lot of effort into security, it will be hard for it to demonstrate this, since malicious CSPs will claim to do the same. This imbalance of knowledge is known as information asymmetry [4] and, together with the cost of cognition to identify a good provider and negotiate a contract [5], has been widely studied in economics.

Furthermore, gathering information on the security of a provider is not easy because there is no standard framework to assess which security controls a CSP supports. The usual strategy for the cloud customer is to ask the CSP to answer a set of questions from a proprietary questionnaire and then try to address the most relevant issues in the service level agreements. However, this makes the evaluation process inefficient and costly for both customers and CSPs.

In this context, the Cloud Security Alliance (CSA) has provided a solution for assessing the security posture of CSPs. The CSA published the Consensus Assessments Initiative Questionnaire (CAIQ), which consists of questions that providers should answer to document which security controls exist in their cloud offerings. Tenants could use the CSPs’ answers to the CAIQ to select the provider that best suits their security needs.

However, there are many CSPs offering the same service: Spamina Inc. lists around 850 CSPs worldwide. While it may be acceptable to manually assess and compare the security posture of a handful of providers, this task becomes infeasible when the number of providers grows to hundreds. As a consequence, many tenants do not have an elaborate process for selecting a secure CSP based on security requirement elicitation. Instead, CSPs are often chosen by chance, or the tenant simply sticks to big CSPs [6]. Therefore, there is a need for an approach that helps cloud customers compare and rank CSPs based on the level of security they offer.

Existing approaches to CSP ranking and selection either do not consider security as a relevant selection criterion or, if they do, do not provide a way to assess security in practice. To the best of our knowledge, no approach has used CAIQs to assess and compare the security capabilities of CSPs.

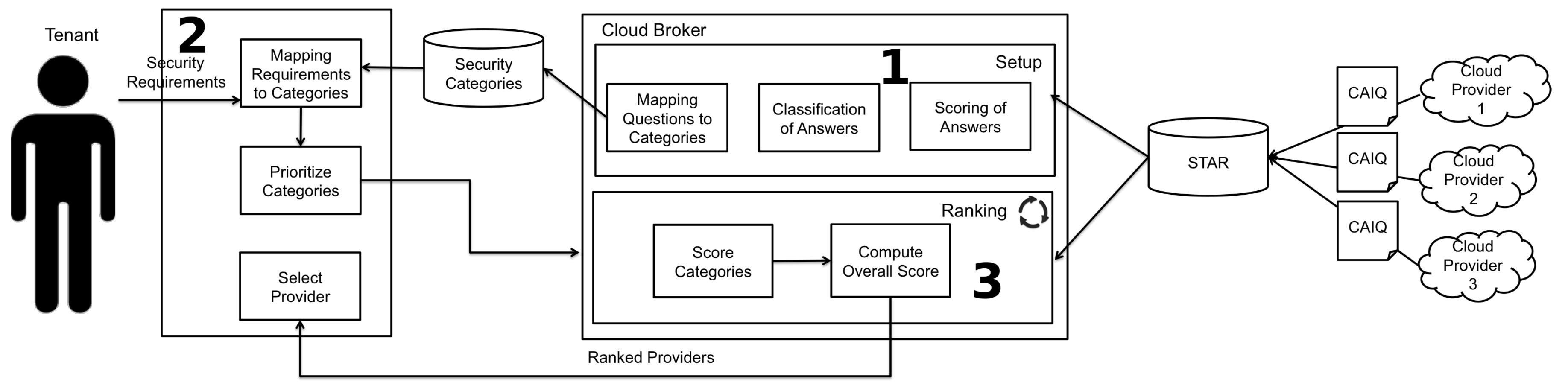

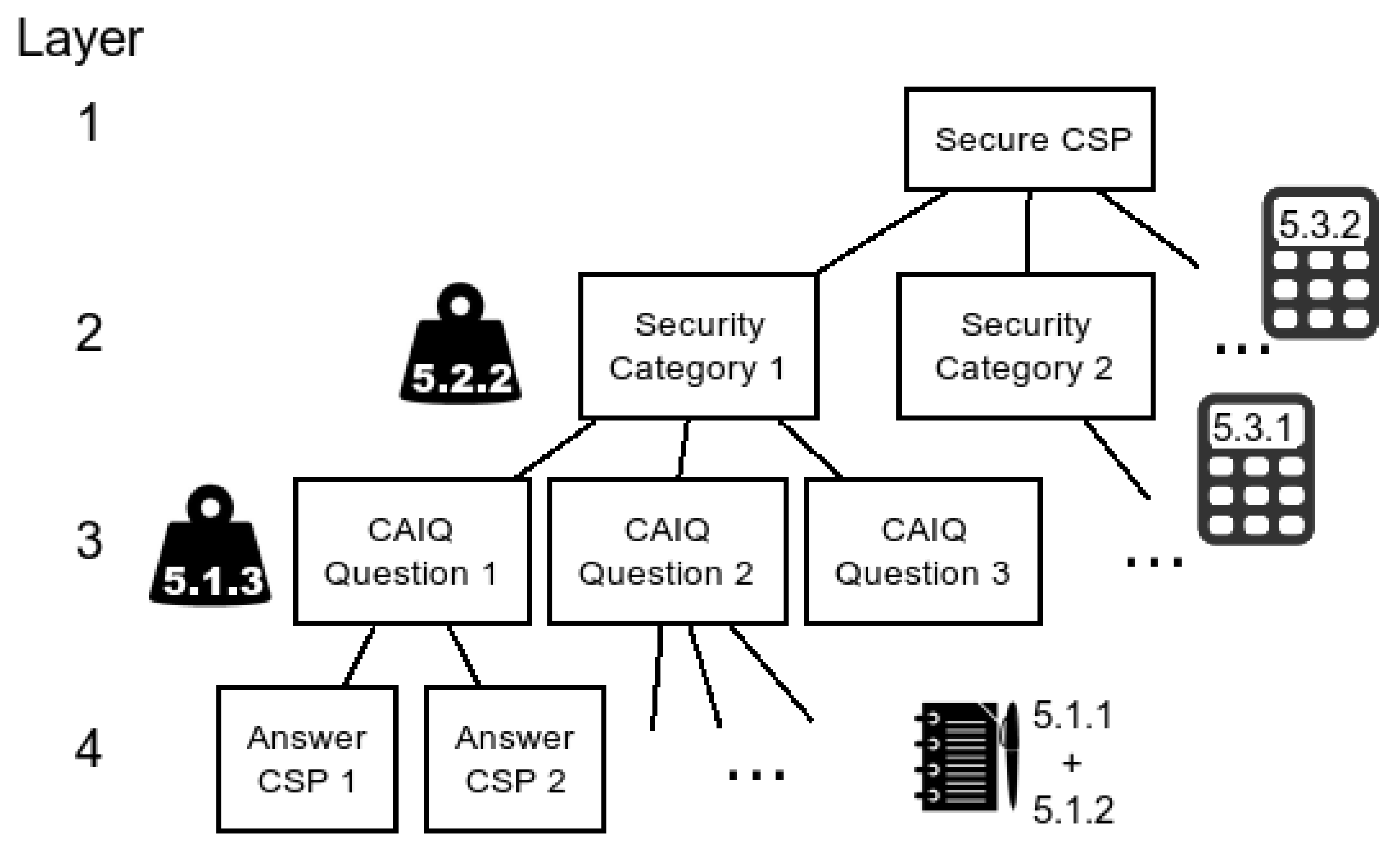

Hence, in this paper we investigate whether manually comparing and ranking CSPs based on CAIQ answers is feasible in practice. To this end, we conducted an empirical study, which showed that manually comparing CSPs based on the CAIQ is too time-consuming. To facilitate the use of the CAIQ for comparing and ranking CSPs, we propose an approach that automates the processing of CAIQ answers. The approach uses the CAIQ answers to assign a value to the different security capabilities of CSPs and then uses the Analytic Hierarchy Process (AHP) to compare and rank the providers based on those capabilities.
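A minimal sketch of the first step, assuming the answers have already been normalised to Y/N/NA: here the value of a security category is taken as the share of “yes” answers among the applicable questions mapped to it. This rule, the function name `category_scores` and the question IDs are illustrative assumptions, not necessarily the scoring our approach uses.

```python
from typing import Dict, List


def category_scores(answers: Dict[str, str],
                    mapping: Dict[str, List[str]]) -> Dict[str, float]:
    """Assign a value in [0, 1] to each security category for one provider.

    answers: question ID -> normalised answer ("Y", "N" or "NA")
    mapping: category name -> IDs of the questions mapped to that category
    Assumed rule: share of "Y" among the applicable (answered, non-NA) questions.
    """
    scores = {}
    for category, questions in mapping.items():
        applicable = [answers[q] for q in questions
                      if answers.get(q) in ("Y", "N")]
        # A category with no applicable answers gets 0.0 (an assumption).
        scores[category] = (applicable.count("Y") / len(applicable)
                            if applicable else 0.0)
    return scores
```

For instance, with the hypothetical answers Q1 = Y and Q2 = N mapped to “encryption”, that category scores 0.5; an NA answer is simply left out of the denominator.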

The contribution of this paper is threefold. First, we discuss the issues related to processing CAIQs for provider selection that could hinder the CAIQ’s adoption in practice. Second, we refine the security categories used to classify the questions in the CAIQ into a set of categories that can be directly mapped to low-level security requirements. Third, we propose an approach to comparing and ranking CSPs that assigns a weight to the security categories based on CAIQ answers.

To the best of our knowledge, our approach is the only one that provides an effective way to measure the level of security of a provider.

The rest of the paper is structured as follows. Section 2 presents related work and Section 3 discusses the issues related to processing CAIQs. Then, Section 4 presents the design and the results of the experiment and discusses the implications of our results for security-aware provider selection. Section 5 introduces our approach to comparing and ranking CSPs’ security. We evaluate it in Section 6, and Section 7 concludes the paper and outlines future work. In Appendix A we give an illustrative example of the application of our approach.

2. Related Work

The problem of service selection has been widely investigated in the context of both web services and cloud computing. Most works base the selection on Quality of Service (QoS) but adopt different techniques to compare and rank CSPs, such as genetic algorithms [7], ontology mapping [8,9], game theory [10] and multi-criteria decision making [11]. In contrast, only a few works consider security as a relevant criterion for the comparison and ranking of CSPs [12,13,14,15,16,17,18], but none of them provides a way to assess and measure the security of a CSP in practice.

Sundareswaran et al. [12] proposed an approach to select an optimal CSP based on different features including price, QoS, operating systems and security. To select the best CSP, they encode the properties of the providers and the requirements of the tenant as bit arrays. Then, to identify the candidate providers, they find the service providers whose property encodings are the k nearest neighbours of the encoding of the tenant’s requirements. However, Sundareswaran et al. do not describe how an overall score for security is computed, while in our approach the overall security level of a CSP is computed from the security controls that the provider declares it supports in the CAIQ.
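A minimal sketch of this kind of k-nearest-neighbour matching, assuming each bit records whether a provider offers (or the tenant requires) one feature, and assuming Hamming distance as the metric. The feature layout and provider names are hypothetical; Sundareswaran et al.’s exact encoding and distance may differ.

```python
from typing import List, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two bit-array encodings."""
    return bin(a ^ b).count("1")


def k_nearest_providers(requirements: int,
                        providers: List[Tuple[str, int]],
                        k: int) -> List[str]:
    """Names of the k providers whose encodings are closest to the requirements.

    Ties keep the input order, because Python's sort is stable.
    """
    ranked = sorted(providers, key=lambda p: hamming(requirements, p[1]))
    return [name for name, _ in ranked[:k]]


# Hypothetical layout: bit 0 = encryption at rest, bit 1 = two-factor login,
# bit 2 = EU data centre, bit 3 = Linux hosts.
tenant = 0b0111
candidates = [("C", 0b1000), ("B", 0b0101), ("A", 0b0111)]
```

Here `k_nearest_providers(tenant, candidates, 2)` returns `["A", "B"]`: A matches every bit, B differs in one, C in four. Note that such an encoding yields candidates, not an overall security score, which is the gap discussed above.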

More recently, Ghosh et al. [13] proposed SelCSP, a framework that supports cloud customers in selecting the provider that minimises the security risk of outsourcing their data and applications to the CSP. The approach consists of estimating the interaction risk the customer is exposed to if it decides to interact with a CSP. The interaction risk is computed from the trustworthiness the customer places in the provider and the competence of the CSP. The trustworthiness is computed from direct and indirect ratings, obtained through either direct interaction or other customers’ feedback. The competence of the CSP is estimated from the transparency of its SLAs. The CSP with the minimum interaction risk is the ideal one for the cloud customer. Similarly to us, to estimate competence Ghosh et al. identified a set of security categories and mapped those categories to low-level security controls supported by the CSPs. However, they do not mention how a value can be assigned to the security categories based on the security controls.

Mouratidis et al. [19] describe a framework to select a CSP based on security and privacy requirements. They provide a modelling language and a structured process, but give only a vague description of how structured security elicitation at the CSP works. Akinrolabu [20] develops a framework for supply-chain risk assessment which can also be used to assess the security of different CSPs. For each CSP, a score has to be determined for nine different dimensions. However, the work does not mention how a value can be assigned to each security dimension. Habib et al. [18] also propose an approach to compute a trustworthiness score for CSPs in terms of different attributes, for example, compliance, data governance and information security. Similarly to us, Habib et al. use the CAIQ as a source to assign values to the attributes from which the trustworthiness is computed. However, in their approach the attributes match the security domains in the CAIQ, and therefore a tenant has to specify its security requirements in terms of the CAIQ security domains. Our approach does not have this limitation: the tenant specifies his security requirements, which are then mapped to security categories that can in turn be mapped to specific security features offered by a CSP. Mahesh et al. [21] investigate audit practices, map risks to the technologies that mitigate them, and derive a list of efficient security solutions. However, their approach compares different security measures, not different CSPs. Bleikertz et al. [22] support cloud customers with security assessments. Their approach focuses on a systematic analysis of attacks and parties in cloud computing to provide a better understanding of attacks and to find new ones.

Other approaches [14,15,16] focus on identifying a hierarchy of relevant attributes to compare CSPs and then use multi-criteria decision-making techniques to rank them based on those attributes.

Costa et al. [14] proposed a multi-criteria decision model to evaluate cloud services based on the MACBETH method. The services are compared with respect to 19 criteria, including some aspects of security like data confidentiality, data loss and data integrity. However, the MACBETH approach does not support the automatic selection of the CSP, because it requires the tenant to give a neutral reference level and a good reference level for each evaluation criterion and to rate the attractiveness of each criterion. In our approach, by contrast, the input provided by the tenant is minimised: the tenant only specifies the security requirements and their importance, and our approach then automatically compares and ranks the candidate CSPs.

Garg et al. proposed a selection approach based on the Service Measurement Index (SMI) [23,24] developed by the Cloud Services Measurement Initiative Consortium (CSMIC) [25]. SMI aims to provide a standard method to measure cloud-based business services based on an organisation’s specific business and technology requirements. It is a hierarchical framework consisting of seven categories, which are refined into a set of measurable key performance indicators (KPIs). Each KPI gets a score and each layer of the hierarchy is assigned weights. The SMI is then calculated by multiplying the resulting scores by the assigned weights. Garg et al. extended the SMI approach to derive relative service importance values from KPIs and then used the Analytic Hierarchy Process (AHP) [26,27] to rank the services. Furthermore, they distinguished between essential attributes, for which KPI values are required, and non-essential attributes, and explained how to handle missing KPI values for non-essential attributes. Building upon this approach, Patiniotakis et al. [16] discuss an alternative classification based on the fuzzy AHP method [28,29] to handle fuzzy KPI values and requirements. To assess security and privacy, Patiniotakis et al. assume that a subset of the controls of the Cloud Controls Matrix is referenced as KPIs and that the tenant should ask the provider (or get its responses from the CSA STAR registry) and assign each answer a score and a weight.
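The weighted aggregation behind SMI can be sketched as a recursive weighted sum over the hierarchy. This is an illustration under assumptions: the weights of siblings sum to 1, KPI scores are already normalised to [0, 1], and the `Node` structure and KPI names are hypothetical rather than part of the CSMIC specification.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """A category or KPI in an SMI-style hierarchy (illustrative structure)."""
    name: str
    weight: float                      # weight relative to its siblings
    score: Optional[float] = None      # set only on leaf KPIs
    children: List["Node"] = field(default_factory=list)


def aggregate(node: Node) -> float:
    """Leaf: its KPI score. Inner node: weighted sum of its children's values."""
    if not node.children:
        if node.score is None:
            raise ValueError(f"KPI {node.name!r} has no score")
        return node.score
    return sum(child.weight * aggregate(child) for child in node.children)


# Two of the seven SMI categories with hypothetical KPIs and weights
root = Node("SMI", 1.0, children=[
    Node("Security and Privacy", 0.6, children=[
        Node("data confidentiality", 0.5, score=0.8),
        Node("data integrity", 0.5, score=0.6)]),
    Node("Performance", 0.4, children=[
        Node("availability", 1.0, score=0.9)]),
])
```

With these numbers, `aggregate(root)` evaluates to 0.6 × 0.7 + 0.4 × 0.9 = 0.78. The scores themselves still have to come from somewhere; for security, that is the step the approaches above leave to the tenant.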

Like the approaches to CSP selection proposed in References [15,16,17], our approach adopts a multi-criteria decision model based on AHP to rank the CSPs. However, there are significant differences. First, we refine the categories proposed to classify the questions in the CAIQ into sub-categories that represent well-defined security aspects, such as access control, encryption, identity management and malware protection, which have been defined by security experts. Second, a score and a weight are automatically assigned to these categories based on the answers that providers give to the corresponding questions in the CAIQ. This reduces the effort for the cloud customer, who can rely on the data published in CSA STAR rather than interviewing the providers to assess their security posture.
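For reference, the standard AHP computations can be sketched as follows. The priorities of a reciprocal pairwise comparison matrix are approximated by power iteration, Saaty’s consistency ratio is computed from the estimated principal eigenvalue, and providers are ranked by the weighted sum of their per-category priorities. This is a generic textbook sketch, not the exact procedure of Section 5; the function names are our own.

```python
from typing import Dict, List, Tuple

# Saaty's random consistency index, indexed by matrix size n = 1..10
RANDOM_INDEX = [0.0, 0.0, 0.58, 0.90, 1.12, 1.24, 1.32, 1.41, 1.45, 1.49]


def ahp_priorities(matrix: List[List[float]],
                   iterations: int = 100) -> Tuple[List[float], float]:
    """Priority vector and consistency ratio of a reciprocal pairwise
    comparison matrix (a_ij = importance of i over j, a_ji = 1 / a_ij).

    The priority vector is the principal eigenvector, approximated by power
    iteration and normalised to sum to 1. Supports n <= 10.
    """
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        v = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        w = [x / total for x in v]
    if n < 3:
        return w, 0.0  # 1x1 and 2x2 reciprocal matrices are always consistent
    # Estimate lambda_max as the mean of (A w)_i / w_i
    aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    consistency_index = (lambda_max - n) / (n - 1)
    return w, consistency_index / RANDOM_INDEX[n - 1]


def rank_providers(category_weights: Dict[str, float],
                   local_priorities: Dict[str, Dict[str, float]]) -> List[str]:
    """Rank providers by the weighted sum of their per-category priorities."""
    providers = next(iter(local_priorities.values())).keys()
    totals = {p: sum(category_weights[c] * local_priorities[c][p]
                     for c in category_weights)
              for p in providers}
    return sorted(totals, key=totals.get, reverse=True)
```

A consistency ratio below 0.1 is commonly treated as acceptable in AHP practice. In `rank_providers`, a provider that is strong in a heavily weighted category (here, hypothetically, “encryption” with weight 0.7) can outrank one that is strong only in a lightly weighted category.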

Table 1 provides an overview of the related work discussed above. The columns under “dimension” indicate whether an approach considers security and/or other dimensions, the column “data security” indicates whether the approach proposes a specific method to evaluate security, and the column “security categories” lists how many different categories are considered for security.

In summary, to the best of our knowledge, our approach is the first approach to CSP selection that provides an effective way to measure the security of a provider. It could be used as a building block for existing approaches to CSP selection that also consider other provider attributes such as cost and performance.

3. Standards and Methods

In the first subsection, we introduce the Cloud Security Alliance (CSA), the Cloud Controls Matrix (CCM) and the Consensus Assessments Initiative Questionnaire (CAIQ). In the second subsection, we discuss the issues related to using CAIQs to compare and rank CSPs’ security.

3.1. Cloud Security Alliance’s Consensus Assessments Initiative Questionnaire

The Cloud Security Alliance is a non-profit organisation that aims to promote best practices for providing security assurance within cloud computing [30]. To this end, the Cloud Security Alliance has provided the Cloud Controls Matrix [31] and the Consensus Assessments Initiative Questionnaire [32]. The CCM is designed to guide cloud vendors in improving and documenting the security of their services and to assist potential customers in assessing the security risks of a CSP.

Each control consists of a control specification which describes a best practice to improve the security of the offered service. The controls are mapped to other industry-accepted security standards, regulations, and controls frameworks, for example, ISO/IEC 27001/27002/27017/27018, NIST SP 800-53, PCI DSS, and ISACA COBIT.

Controls covered by the CCM are preventive (to avoid the occurrence of an incident), detective (to notice an incident) and corrective (to limit the damage caused by an incident). They range over legal controls (e.g., policies), physical controls (e.g., physical access controls), procedural controls (e.g., training of staff) and technical controls (e.g., use of encryption or firewalls).

For each control in the CCM, the CAIQ contains an associated question, which is generally a “yes or no” question asking whether the CSP has implemented the respective control.

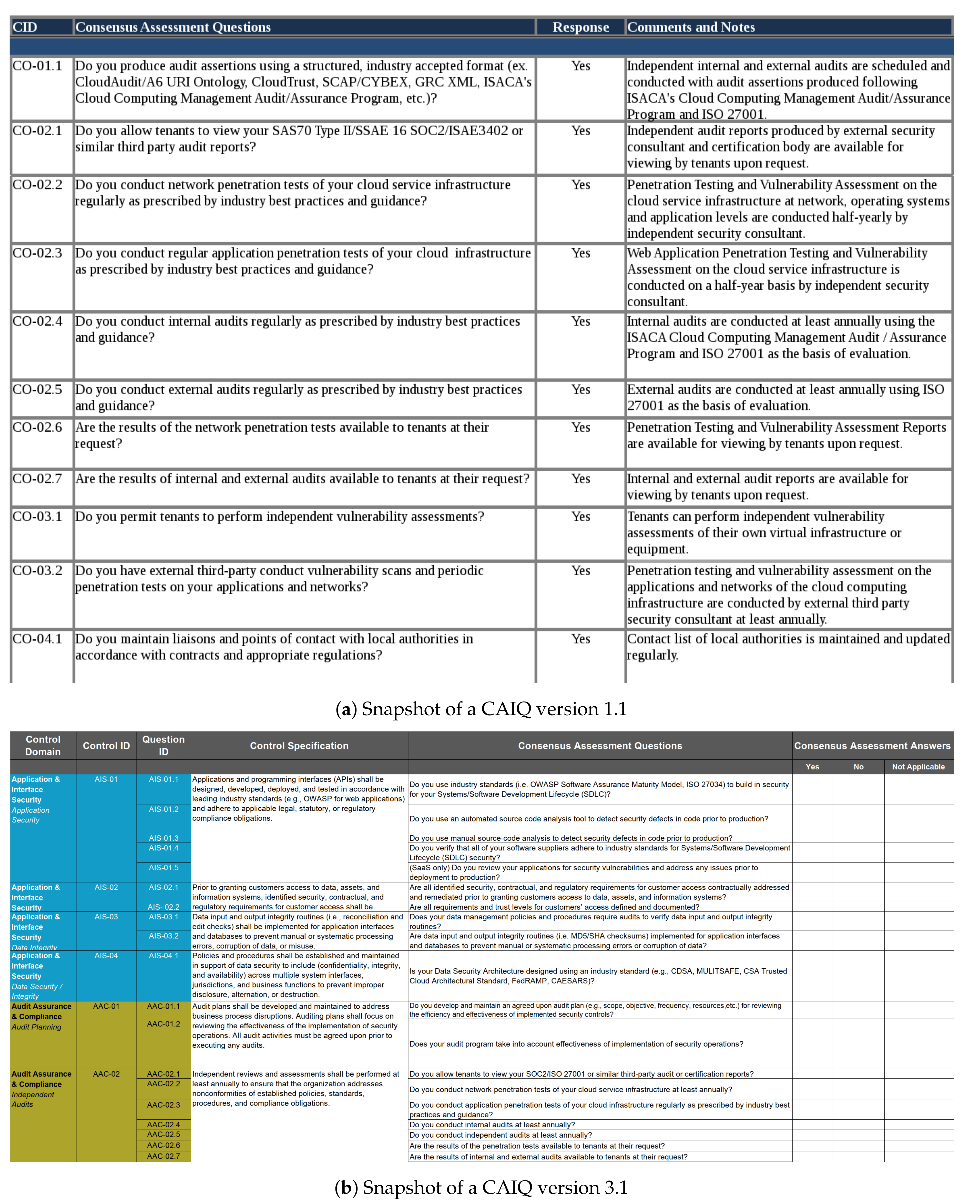

Figure 1 shows some examples of questions and answers. Tenants may use this information to assess the security of CSPs they are considering contracting with.

As of today, there are two relevant versions of the CAIQ: version 1.1 from December 2010 and version 3.0.1 from July 2014. CAIQ version 1.1 consists of 197 questions in 11 domains (see Table 2), while CAIQ version 3.0.1 consists of 295 questions grouped in 16 domains (see Table 3). In November 2019, version 3.1 of the CAIQ was published; according to its release, 49 new questions were added and 25 existing ones were revised. Furthermore, CAIQ-Lite is a smaller version consisting of 73 questions covering the same 16 control domains.

CAIQ version 3.0.1 contains a high-level mapping to CAIQ version 1.1, but no direct mapping of the questions. Therefore, we mapped the questions ourselves. To determine the differences, we computed the Levenshtein distance [33] between each question of version 3.0.1 and the questions of version 1.1. The Levenshtein distance is a string metric that measures the difference between two strings as the minimum number of single-character edits (insertions, deletions or substitutions) required to change one string into the other. The analysis shows that, of the 197 questions of CAIQ version 1.1, one question was a duplicate, 15 were removed, 12 were reformulated, 79 underwent editorial changes (mostly with a Levenshtein distance below 25), and 90 were taken over unchanged. Additionally, 114 new questions were introduced in CAIQ version 3.0.1.
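The distance computation and a first triage of matched question pairs can be sketched as below. The dynamic-programming recurrence is the standard one for the Levenshtein distance; the `closest_match` and `classify_change` helpers and the cut-off of 25 in the latter are assumptions for illustration, since borderline pairs (editorial change versus reformulation) still need a manual decision.

```python
from typing import List, Tuple


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions or
    substitutions that turn a into b (dynamic programming, two rows)."""
    if len(a) < len(b):
        a, b = b, a  # the distance is symmetric; keep the shorter row
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(previous[j] + 1,                 # delete ca
                               current[j - 1] + 1,              # insert cb
                               previous[j - 1] + (ca != cb)))   # substitute
        previous = current
    return previous[-1]


def closest_match(question: str, candidates: List[str]) -> Tuple[int, str]:
    """(distance, candidate) of the candidate question closest to `question`."""
    return min((levenshtein(question, c), c) for c in candidates)


def classify_change(distance: int, editorial_threshold: int = 25) -> str:
    """Rough, assumed triage of a matched pair by its edit distance."""
    if distance == 0:
        return "unchanged"
    if distance < editorial_threshold:
        return "editorial change"
    return "manual review"  # possibly reformulated, removed or new
```

For example, “Do you encrypt data?” and “Do you encrypt data at rest?” are 8 edits apart, which this triage would file as an editorial change.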

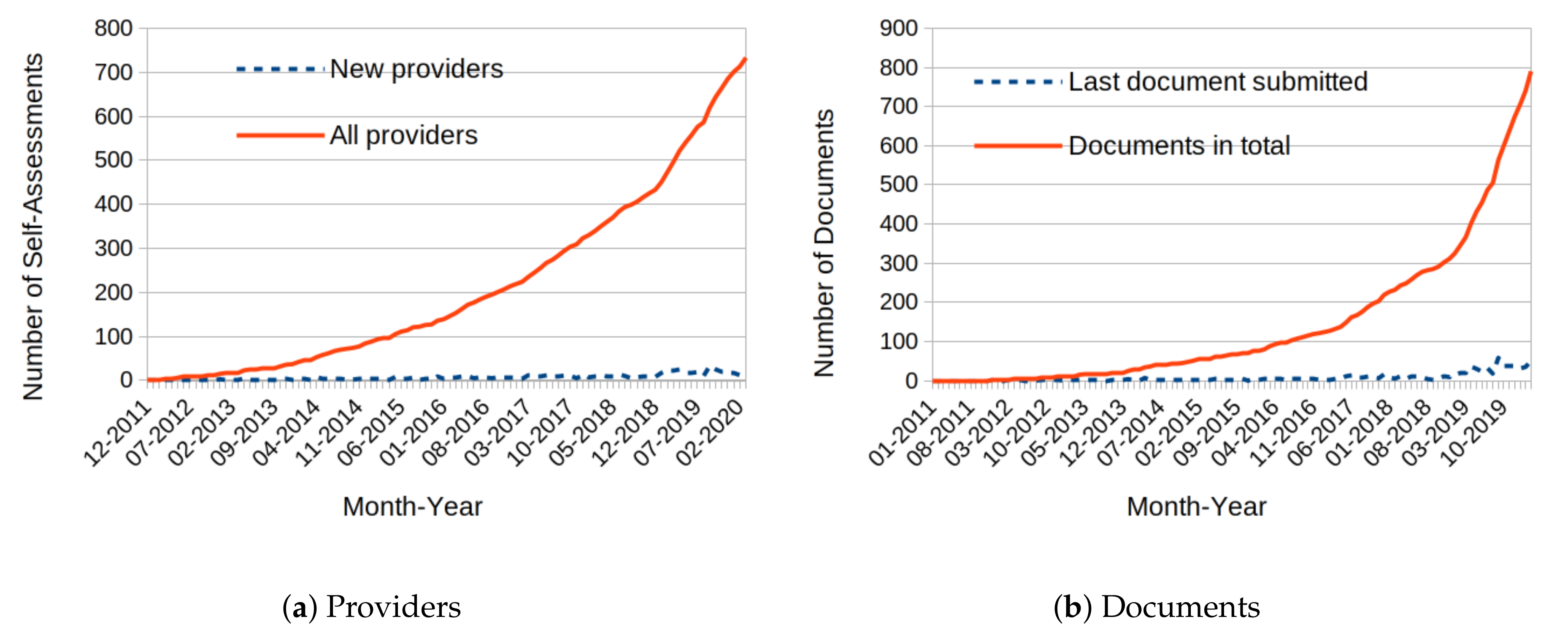

The CSA provides a registry, the Cloud Security Alliance Security, Trust and Assurance Registry (STAR), where the answers to the CAIQ of each participating provider are listed. As shown in Figure 2, STAR is continuously updated: since its start in 2011, more providers have contributed to it every year. At the beginning of October 2014, there were 85 documents in STAR: 65 answers to the CAIQ, 10 statements to the CCM, and 10 STAR certifications for which the companies did not publish corresponding self-assessments. In March 2020, there were 733 providers listed with 690 CAIQs (53 of version 1.* or 2, 515 of version 3.0.1, and 122 of version 3.1) and 106 certifications/attestations. Some companies list the self-assessment along with their certification; others do not provide their self-assessment once they have obtained a certification.

3.2. Processing the CAIQ

Each CAIQ is stored in a separate file with a unique URL. Thus, there is no way to retrieve all CAIQs in bulk, and no single file contains all the answers. Therefore, we had to download the CAIQs manually, with some tool support. After downloading, we extracted the answers to the questions and stored them in an SQL database. A small number of answers were not in English, and we disregarded them when evaluating the answers.
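One possible layout for such a database is sketched below with Python’s built-in `sqlite3` module. The table name, column names and provider names are assumptions for illustration, not the schema we actually used; storing one row per provider, version and question keeps the per-version counts a simple `GROUP BY` query.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS answer (
    provider    TEXT NOT NULL,
    version     TEXT NOT NULL,               -- e.g. '1.1' or '3.0.1'
    question_id TEXT NOT NULL,
    answer      TEXT CHECK (answer IN ('Y', 'N', 'NA') OR answer IS NULL),
    comment     TEXT,
    PRIMARY KEY (provider, version, question_id)
)
"""


def store_answers(conn, provider, version, rows):
    """Insert (question_id, answer, comment) rows for one provider's CAIQ."""
    conn.executemany(
        "INSERT OR REPLACE INTO answer VALUES (?, ?, ?, ?, ?)",
        [(provider, version, q, a, c) for q, a, c in rows])
    conn.commit()


def answer_counts(conn, version):
    """(provider, answer, count) triples for one CAIQ version."""
    return conn.execute(
        "SELECT provider, answer, COUNT(*) FROM answer "
        "WHERE version = ? GROUP BY provider, answer "
        "ORDER BY provider, answer",
        (version,)).fetchall()
```

A `NULL` answer leaves room for rows where no explicit Y/N/NA could be extracted, which, as discussed next, is not rare.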

One challenge was that the document format was not standardised. In October 2014, the 65 answers to the CAIQ came in various document formats (52 XLS, 7 PDF, 5 XLS+PDF, 1 DOC). In March 2020, the majority of documents were based on Microsoft Excel (615), but there were also other formats (41 PDF, 33 LibreOffice, 1 DOC). Besides the different versions, that is, version 1.1 and version 3.0.1, another issue was that many CSPs do not comply with the standard answer format proposed by the CSA. This makes it non-trivial to determine whether a CSP implements a given security control.

For CAIQ version 1.1, the CSA intended CSPs to use one column for yes/no/not applicable (Y/N/NA) answers and one column for additional, optional comments (C). But only a minority (17 providers) used it that way. The majority (44 providers) used only a single column, which mostly (22 providers), partly (11 providers) or not at all (11 providers) included an explicit Y/N/NA answer. For CAIQ version 3.0.1, the CSA introduced a new style: three columns in which the provider indicates whether yes, no or not applicable holds, followed by a column for optional comments. So far, this answer format seems to work better, since most providers answering CAIQ version 3.0.1 followed it. However, since some providers merged cells, added or deleted columns, or put their answers in other places, the answers to the CAIQ cannot be gathered automatically.
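For the three-column format of version 3.0.1, a cautious extraction rule is to accept a row only when exactly one of the Y, N and NA cells is marked and to flag everything else for manual inspection. The sketch below assumes that any non-empty cell counts as a mark (providers use “x”, “X”, “yes” and similar); the function name is hypothetical.

```python
from typing import Optional


def answer_from_columns(yes: Optional[str], no: Optional[str],
                        na: Optional[str]) -> Optional[str]:
    """Collapse the Y / N / NA columns of one CAIQ v3.0.1 row into one answer.

    Returns None when no column or more than one column is marked, so the
    row can be flagged for manual inspection instead of being guessed.
    """
    marked = [label for label, cell in (("Y", yes), ("N", no), ("NA", na))
              if cell is not None and str(cell).strip()]
    return marked[0] if len(marked) == 1 else None
```

Returning `None` rather than a default answer keeps rows with merged cells or shifted columns visible instead of silently miscounting them.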

To make it even harder for a customer to determine whether a CSP supports a given security control, the providers did not follow a uniform answering scheme. For example, to questions of the kind “Do you provide [some kind of documentation] to the tenant?”, some providers answered “Yes, upon request” while others answered “No, only on request”. Similarly, some questions asking whether controls are in place were answered by some providers with “Yes, starting from [date in the future]” while others answered “No, not yet”. These are basically the same answers, expressed differently. Similar issues were found for various other questions, too.
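One way to make such answers comparable is to map both phrasings to a shared label before scoring. The keyword rules below are hypothetical heuristics written for these examples only; in practice the list would have to be curated by hand, and answers that match no rule are returned as `None` for manual review.

```python
import re
from typing import Optional

# Hypothetical keyword rules, checked in this order
ON_REQUEST = re.compile(r"\b(?:up)?on request\b", re.IGNORECASE)
PLANNED = re.compile(r"\b(?:not yet|starting from|planned)\b", re.IGNORECASE)
LEADING = re.compile(r"^\s*(yes|no|n/?a|not applicable)\b", re.IGNORECASE)


def normalize_answer(text: str) -> Optional[str]:
    """Map a free-text CAIQ answer to Y, N, NA, ON_REQUEST or PLANNED."""
    if ON_REQUEST.search(text):
        return "ON_REQUEST"   # "Yes, upon request" and "No, only on request"
    if PLANNED.search(text):
        return "PLANNED"      # "Yes, starting from <date>" and "No, not yet"
    match = LEADING.match(text)
    if not match:
        return None           # no explicit answer: leave for manual review
    token = match.group(1).lower()
    if token == "yes":
        return "Y"
    if token == "no":
        return "N"
    return "NA"               # "NA", "N/A" or "not applicable"
```

Whether ON_REQUEST or PLANNED should then count as supported is a policy decision for the scoring step, not something the text of the answer settles.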

Additionally, some providers did not give a clear answer. For example, some providers claimed that they had to clarify some questions with a third party, or did not answer some questions at all. Some providers also make use of Amazon AWS (e.g., Acquia, Clari, Okta, Red Hat, Shibumi) but gave different answers when referring to controls implemented by Amazon as the IaaS provider, or gave no answer and simply referred to Amazon.

To give an impression of the CSPs’ answers that serve as input for comparison and ranking, we give a brief overview of the processed data.

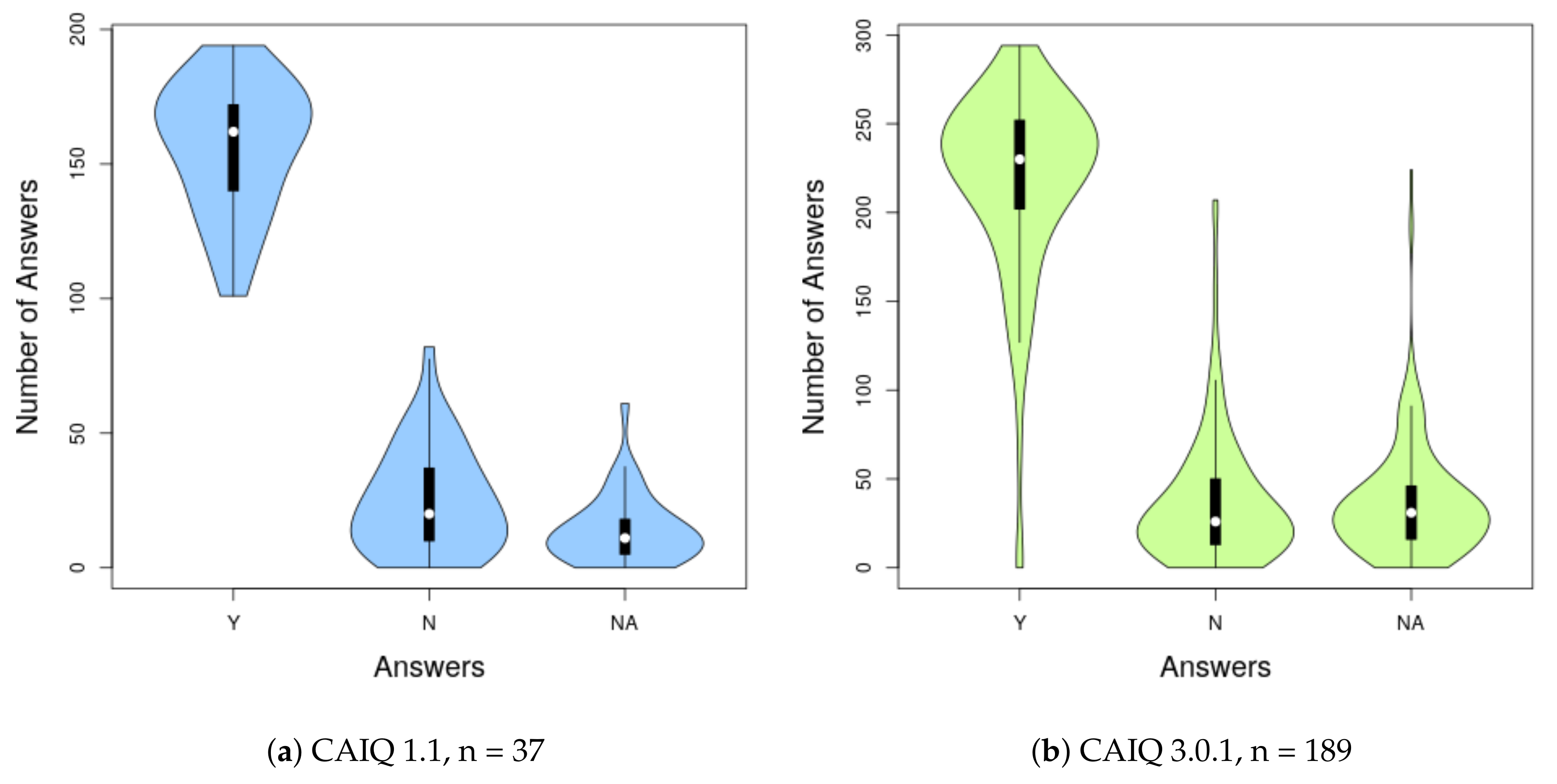

Figure 3 (cf. Section 5.4 for information on how we processed the data) shows the distribution of the CSPs’ answers to the CAIQ. Apart from the number of questions, there is no large difference between the distributions for the different versions of the questionnaire. The majority of controls seem to be in place, since “yes” is the most common answer. The deviation of all answer counts is also quite large, which is consistent with the observation that the answers are not evenly distributed. Regarding comments, on average every second answer had a comment. However, we noticed that comments are a double-edged sword: sometimes they help to clarify an answer because they provide its rationale, while at other times they make the answer unclear because they provide information that conflicts with the yes/no answer.
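One plausible way to compute statistics of this kind is sketched below: for each answer type, the mean and sample standard deviation across providers of how many questions each provider answered Y, N or NA, plus the share of answers carrying a comment. The input layout is a hypothetical assumption, and the figure may have been produced differently.

```python
from statistics import mean, stdev
from typing import Dict, List, Optional, Tuple


def answer_distribution(per_provider: Dict[str, List[str]]
                        ) -> Dict[str, Tuple[float, float]]:
    """(mean, sample standard deviation) across providers of the number of
    questions each provider answered with Y, N or NA."""
    summary = {}
    for label in ("Y", "N", "NA"):
        counts = [answers.count(label) for answers in per_provider.values()]
        spread = stdev(counts) if len(counts) > 1 else 0.0
        summary[label] = (mean(counts), spread)
    return summary


def comment_share(comments: List[Optional[str]]) -> float:
    """Fraction of answers that carry a non-empty comment."""
    if not comments:
        return 0.0
    return sum(1 for c in comments if c and c.strip()) / len(comments)
```

A share of about 0.5 from `comment_share` corresponds to the “every second answer” observation above.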

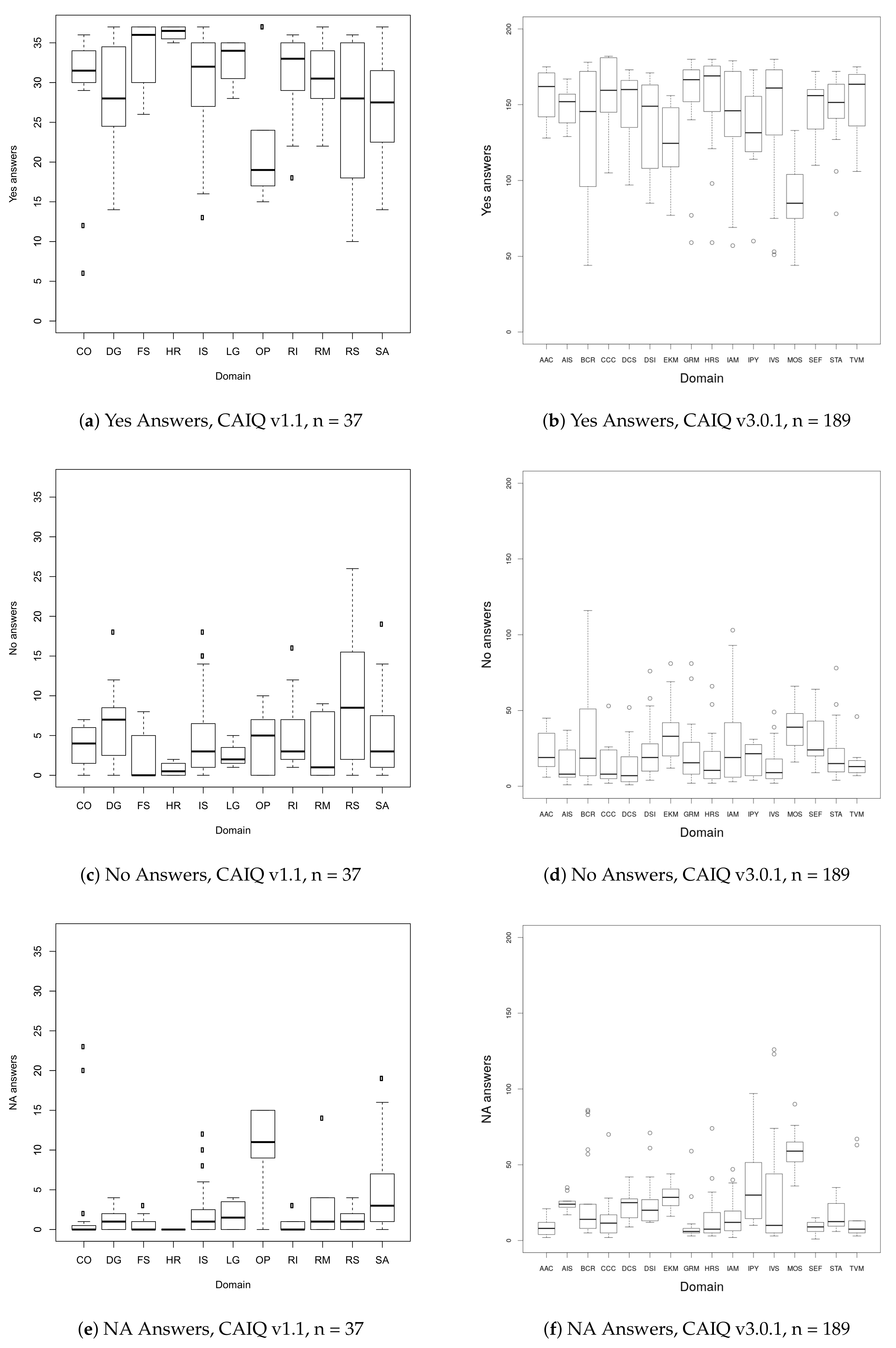

We also grouped questions by their domain (x-axis) and, for each question within a domain, determined the number of providers (y-axis) who answered yes, no or not applicable. The number of questions per domain can be seen in Table 2 and Table 3.

Figure 4 shows that for most domains, questions with mostly yes answers dominate (e.g., the domain “human resources” (HR) contains questions with 35 to 37 yes answers from a total of 37 providers; cf. Figure 4a). The domain “operation management” (OP) holds questions with a significantly lower count of yes answers due to questions with many NA answers (cf. Figure 4e), similarly to the domain “mobile security” (MOS) in version 3.0.1 (cf. Figure 4f). The domains “data governance” (DG), “information security” (IS), “resilience” (RS) and “security architecture” (SA) show a larger variance, meaning that they contain questions with mostly yes answers as well as questions with only a few yes answers.
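The aggregation behind this kind of per-domain view can be sketched as counting, for every question, how many providers answered yes, and grouping those counts by domain; the spread (maximum minus minimum) of a domain’s list then reflects the variance discussed above. The input dictionaries and question IDs are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def yes_counts_by_domain(answers: Dict[Tuple[str, str], str],
                         domain_of: Dict[str, str]) -> Dict[str, List[int]]:
    """For each domain, the number of providers answering Y to each question.

    answers: (provider, question_id) -> normalised answer
    domain_of: question_id -> domain code such as "HR" or "OP"
    """
    per_question: Dict[str, int] = defaultdict(int)
    for (_provider, question), answer in answers.items():
        per_question[question] += answer == "Y"
    by_domain: Dict[str, List[int]] = defaultdict(list)
    for question, domain in sorted(domain_of.items()):
        by_domain[domain].append(per_question.get(question, 0))
    return dict(by_domain)
```

Applied to a domain like HR in the data above, every entry would lie between 35 and 37.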

The above issues indicate that gathering information on the CSPs’ controls, and especially comparing and ranking the security of CSPs using their answers to the CAIQ, is not straightforward. For this reason, we conducted a controlled experiment to assess whether it is feasible in practice to select a CSP using the CAIQ. We also tested whether comments help to determine whether a CSP supports a security control.

6. Evaluation

In this section, we assess different aspects of our approach to cloud provider ranking based on CAIQs. First, we evaluate how easy it is for the tenant to map the security categories to the security requirements and to assign a score to the categories. Then, we evaluate the effectiveness of the approach with respect to the correctness of CSP selection. Last, we evaluate the performance of the approach.

Scoring of Security Categories. We wanted to evaluate how easy it is for a tenant to perform the only manual step required by our approach to CSP ranking: mapping their security requirements to security categories and assigning a score to the categories. Therefore, we asked the same participants of the study presented in Section 4 to perform the following task. The participants were requested to map the security requirements of Bank Gemini to a provided list of security categories, each accompanied by a definition. Then, the participants had to assign an absolute score from 1 (not important) to 9 (very important) denoting the importance of each security category for Bank Gemini. They had 30 min to complete the task and then 5 min to fill in a post-task questionnaire on the perceived ease of performing the task. The results of the analysis of the post-task questionnaire are summarized in Table 11. Participants believe that the definition of the security categories was clear and easy to understand, since the mean of the answers is around 2, which corresponds to the answer “Agree”. We tested the statistical significance of this result using the one-sample Wilcoxon signed-rank test with the null hypothesis μ = 3 and the significance level α = 0.05. The p-value is < 0.05, which means that the result is statistically significant. Similarly, the participants agree that it was easy to assign a weight to security categories, with statistical significance (the one-sample t-test returned p-value = 0.04069). However, they are not certain (the mean of the answers is 3) that assigning weights to security categories was easy for the specific Bank Gemini scenario. This result, though, is not statistically significant (the one-sample t-test returned p-value = 0.6733). Therefore, we can conclude that the scoring of security categories that a tenant has to perform in our approach does not require much effort.
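For readers who want to reproduce this kind of check, the sketch below implements a two-sided one-sample Wilcoxon signed-rank test against μ0 = 3 using only the Python standard library. Zero differences are dropped and tied ranks are averaged; the p-value is exact given the observed ranks, so it may differ slightly from statistical packages that use a normal approximation. The scores in the usage note are invented, not the study’s data.

```python
from collections import Counter
from typing import List, Tuple


def signed_rank_test(scores: List[float], mu0: float = 3.0) -> Tuple[float, float]:
    """Two-sided one-sample Wilcoxon signed-rank test of H0: centre == mu0.

    Returns (W+, p). Zero differences are dropped, tied |differences| get
    average ranks, and p is computed exactly by counting all sign assignments.
    """
    diffs = [s - mu0 for s in scores if s != mu0]
    n = len(diffs)
    if n == 0:
        raise ValueError("every score equals mu0")
    # Average ranks of |d| (1-based), doubled so that they stay integers
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks2 = [0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks2[order[k]] = i + j + 2  # twice the mean of ranks i+1 .. j+1
        i = j + 1
    w_plus2 = sum(r for r, d in zip(ranks2, diffs) if d > 0)
    # Null distribution: each rank is positive or negative with probability 1/2
    dist = Counter({0: 1})
    for r in ranks2:
        step = Counter()
        for w, count in dist.items():
            step[w] += count
            step[w + r] += count
        dist = step
    centre2 = sum(ranks2) / 2
    observed = abs(w_plus2 - centre2)
    extreme = sum(c for w, c in dist.items() if abs(w - centre2) >= observed)
    return w_plus2 / 2, extreme / 2 ** n
```

For ten hypothetical answers that all read 2 (“Agree”), `signed_rank_test([2] * 10)` gives W+ = 0 and p = 2/1024 ≈ 0.002, so H0 would be rejected at α = 0.05. In practice one would typically call `scipy.stats.wilcoxon` on the differences from 3 and `scipy.stats.ttest_1samp` for the t-tests.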

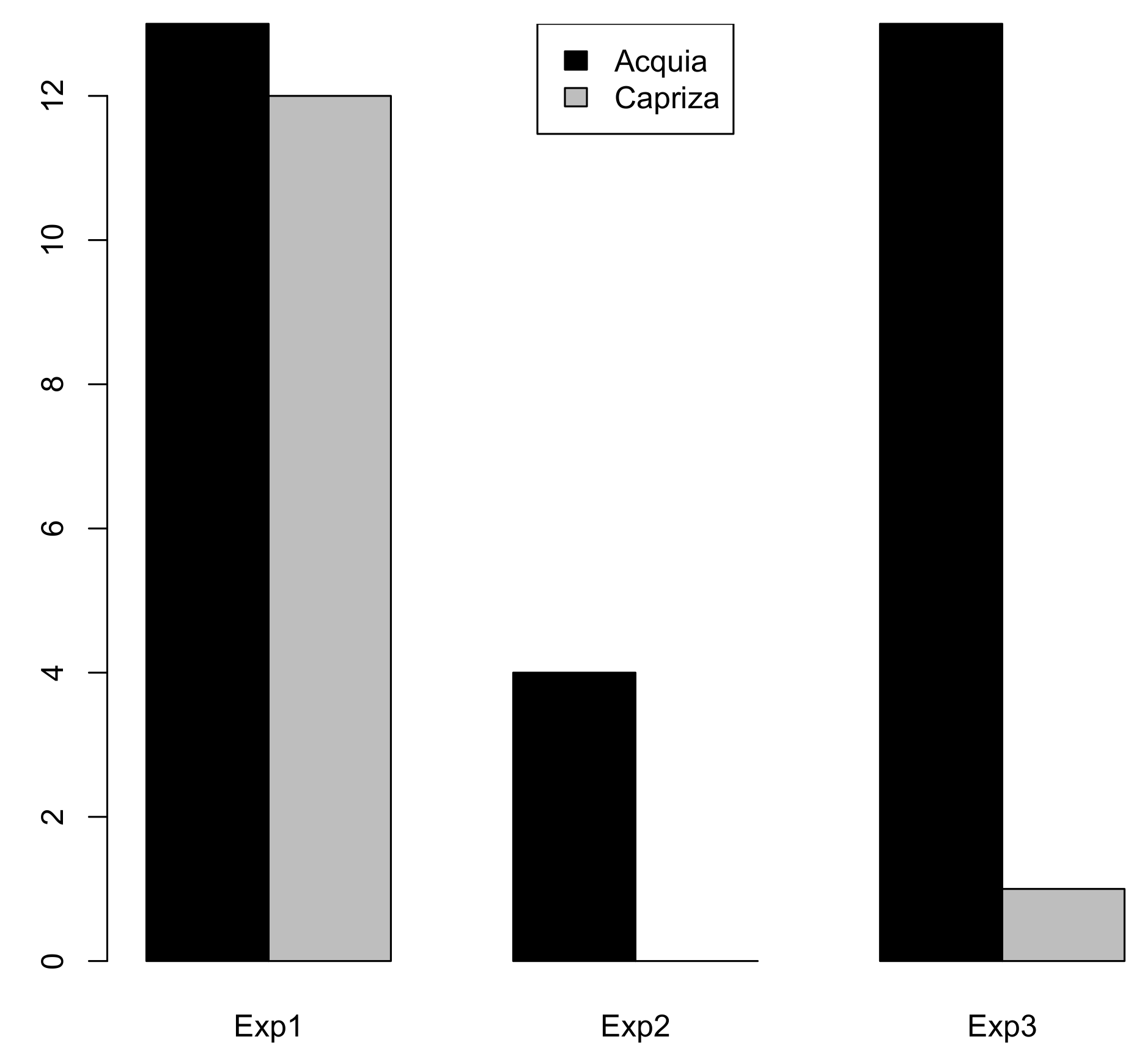

Effectiveness of the Approach. To evaluate the correctness of our approach, we determined whether the overall score our approach assigns to each CSP reflects the level of security the CSP provides, and thus whether our approach leads to the selection of the most secure CSP. For this purpose, we used the three scenarios from our experiment and additionally created a more complex test case based on the FIPS 200 standard [40]. This more sophisticated example uses the full CAIQ version 1.1 (197 questions) and 75 security categories. As we did for the results produced by the participants of our experiment, we compared the results produced by our approach for the three scenarios and the additional test case with the results produced by the three experts on the same scenarios. The results of our approach were consistent with those of the experts. Furthermore, the results of the 17 participants who compared two CSPs by answers and comments on 20 questions are also in accordance with the results of our approach.

Performance. We evaluated the performance of our approach with respect to the number of providers to be compared and the number of questions used from the CAIQ. For that purpose, we generated two test cases. The first test case is based on the banking scenario that we used to run the experiment with the students. It consists of 3 security requirements, 20 CAIQ questions and 5 security categories. The second test case is the one based on the FIPS 200 standard described above (15 security requirements, 197 questions, 75 security categories). We first compared only 2 providers, as in the experiment, and then compared all 37 providers in our data set for version 1.1. The tests were run on a laptop with an Intel(R) Core(TM) i7-4550U CPU.

Table 12 reports the execution time of our approach. It shows the execution time for ranking the providers (cf. Section 5.3) and the overall execution time, which also includes the time to load some libraries and to query the database for the setup information (cf. Section 5.1 and Section 5.2).

Our approach takes 35 min to compare and rank all 37 providers in our data set based on the full CAIQ version 1.1. This is quite fast compared to our estimate that the participants of our experiment would need 80 min to manually compare only two providers in an even simpler scenario. This means that our approach makes it feasible to compare CSPs based on CAIQ answers. Another result is that, as expected, the execution time increases with the number of CSPs to be compared, the number of questions and the number of security categories. The execution time could be further reduced if the ranking for each security category were run in parallel rather than sequentially.
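Because the per-category rankings do not depend on each other, they could be dispatched concurrently. The sketch below uses the standard `concurrent.futures` module; `rank_category` stands for a hypothetical function that ranks all providers for one security category, and is not part of our implementation.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def rank_all_categories(categories: List[str],
                        rank_category: Callable[[str], Dict[str, float]],
                        max_workers: int = 4) -> Dict[str, Dict[str, float]]:
    """Run the (hypothetical) per-category ranking step concurrently.

    rank_category(category) returns a priority per provider for that category.
    The result maps each category to its provider priorities, in input order.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(rank_category, categories))
    return dict(zip(categories, results))
```

Threads only help if `rank_category` spends its time waiting, for example on database queries; for CPU-bound pure-Python work, `ProcessPoolExecutor` offers the same `map` interface and runs the work in separate processes.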

Feasibility. The setup of this approach requires some effort, which needs to be expended only once. Therefore, doing the setup for a single comparison and ranking does not pay off for an individual tenant. However, if the comparison and ranking is offered as a service by a cloud broker, and thus used for multiple queries, the setup’s share of the effort decreases. Alternatively, a third party such as the Cloud Security Alliance could provide the needed database to tenants and enable them to do their own comparisons.

Limitations. Since security cannot be measured directly, our approach is based on the assumption that the implementation of the controls defined by the CCM is related to security. Should the CCM’s controls fail to cover some aspects, or not be related to the security of the CSPs, the results of our approach would be affected. Additionally, our approach relies on the assumption that the statements given in the CSPs’ self-assessments are correct. The results would be more valuable if all answers had been audited and certified by an independent trusted party, but unfortunately, as of today, this is only the case for a very limited number of CSPs.

Evolving CAIQ versions. While our approach is based on CAIQ version 1.1, it is straightforward to run it on versions 3.0.1 and 3.1 as well. However, with different versions in use, cross-version comparisons can only be done on the overlapping common questions. We provide a mapping between the 169 overlapping questions of versions 1.1 and 3.0.1 (cf. Section 3.1). If CAIQ version 1.1 is no longer used, or the corresponding providers are not of interest, the mappings of the questions to the security categories may be extended to make use of all 295 questions of CAIQ version 3.0.1.
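A cross-version comparison restricted to the overlapping questions can be sketched as re-keying version 3.0.1 answers by their version 1.1 question ID and then pairing answers only where both providers answered. The function names, the mapping format and the question IDs are assumptions for illustration.

```python
from typing import Dict, Tuple


def to_common_questions(answers_v301: Dict[str, str],
                        mapping_v301_to_v11: Dict[str, str]) -> Dict[str, str]:
    """Re-key CAIQ v3.0.1 answers by their v1.1 question ID.

    Only questions present in the mapping (the overlap between the versions)
    are kept; answers to questions that are new in v3.0.1 are dropped.
    """
    return {v11: answers_v301[v301]
            for v301, v11 in mapping_v301_to_v11.items()
            if v301 in answers_v301}


def common_answers(a: Dict[str, str],
                   b: Dict[str, str]) -> Dict[str, Tuple[str, str]]:
    """Pair two providers' answers on the questions both have answered."""
    return {q: (a[q], b[q]) for q in a.keys() & b.keys()}
```

With the 169-question overlap, a provider answering version 3.0.1 could thus be compared with one answering version 1.1 on at most those 169 questions.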

Author Contributions

Conceptualization, F.M., S.P., F.P., and J.J.; methodology, F.M., S.P., F.P.; software, S.P.; validation, S.P., F.P., J.J., and F.M.; investigation, S.P. and F.P.; resources, S.P., F.P., and F.M.; data curation, S.P. and F.P.; writing—original draft preparation, S.P. and F.P.; writing—review and editing, F.M. and J.J.; visualization, S.P.; supervision, F.M. and J.J.; funding acquisition, J.J. and F.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partly funded by the European Union within the projects Seconomics (grant number 285223), ClouDAT (grant number 300267102) and CyberSec4Europe (grant number 830929).

Acknowledgments

We thank Woohyun Shim for fruitful discussions on the economic background of this paper and Katsiaryna Labunets for her help in conducting the experiment.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. NIST Special Publication 800-53—Security and Privacy Controls for Federal Information Systems and Organizations. Available online: http://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-53r4.pdf (accessed on 31 March 2020).

2. KPMG. 2014 KPMG Cloud Survey Report. Available online: http://www.kpmginfo.com/EnablingBusinessInTheCloud/downloads/7397-CloudSurvey-Rev1-5-15.pdf#page=4 (accessed on 31 March 2020).

3. Böhme, R. Security Metrics and Security Investment Models. Advances in Information and Computer Security. In Proceedings of the 5th International Workshop on Security, IWSEC 2010, Kobe, Japan, 22–24 November 2010; Echizen, I., Kunihiro, N., Sasaki, R., Eds.; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2010; Volume 6434, pp. 10–24.

4. Akerlof, G.A. The Market for 'Lemons': Quality Uncertainty and the Market Mechanism. Q. J. Econ. 1970, 84, 488–500.

5. Tirole, J. Cognition and Incomplete Contracts. Am. Econ. Rev. 2009, 99, 265–294.

6. Pape, S.; Stankovic, J. An Insight into Decisive Factors in Cloud Provider Selection with a Focus on Security. Computer Security. In Proceedings of the ESORICS 2019 International Workshops, CyberICPS, SECPRE, SPOSE, ADIoT, Luxembourg, 26–27 September 2019; Katsikas, S., Cuppens, F., Cuppens, N., Lambrinoudakis, C., Kalloniatis, C., Mylopoulos, J., Antón, A., Gritzalis, S., Pallas, F., Pohle, J., et al., Eds.; Revised Selected Papers; Lecture Notes in Computer Science. Springer International Publishing: Cham, Switzerland, 2019; Volume 11980, pp. 287–306.

7. Anastasi, G.; Carlini, E.; Coppola, M.; Dazzi, P. QBROKAGE: A Genetic Approach for QoS Cloud Brokering. In Proceedings of the 2014 IEEE 7th International Conference on Cloud Computing (CLOUD), Anchorage, AK, USA, 27 June–2 July 2014; pp. 304–311.

8. Ngan, L.D.; Kanagasabai, R. OWL-S Based Semantic Cloud Service Broker. In Proceedings of the 2012 IEEE 19th International Conference on Web Services (ICWS), Honolulu, HI, USA, 24–29 June 2012; pp. 560–567.

9. Sim, K.M. Agent-Based Cloud Computing. IEEE Trans. Serv. Comput. 2012, 5, 564–577.

10. Wang, P.; Du, X. An Incentive Mechanism for Game-Based QoS-Aware Service Selection. In Service-Oriented Computing; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8274, pp. 491–498.

11. Karim, R.; Ding, C.; Miri, A. An End-to-End QoS Mapping Approach for Cloud Service Selection. In Proceedings of the 2013 IEEE Ninth World Congress on Services (SERVICES), Santa Clara, CA, USA, 28 June–3 July 2013; pp. 341–348.

12. Sundareswaran, S.; Squicciarini, A.; Lin, D. A Brokerage-Based Approach for Cloud Service Selection. In Proceedings of the 2012 IEEE 5th International Conference on Cloud Computing (CLOUD), Honolulu, HI, USA, 24–29 June 2012; pp. 558–565.

13. Ghosh, N.; Ghosh, S.; Das, S. SelCSP: A Framework to Facilitate Selection of Cloud Service Providers. IEEE Trans. Cloud Comput. 2014, 3, 66–79.

14. Costa, P.; Lourenço, J.; da Silva, M. Evaluating Cloud Services Using a Multiple Criteria Decision Analysis Approach. In Service-Oriented Computing; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8274, pp. 456–464.

15. Garg, S.; Versteeg, S.; Buyya, R. SMICloud: A Framework for Comparing and Ranking Cloud Services. In Proceedings of the 2011 Fourth IEEE International Conference on Utility and Cloud Computing (UCC), Melbourne, Australia, 5–8 December 2011; pp. 210–218.

16. Patiniotakis, I.; Rizou, S.; Verginadis, Y.; Mentzas, G. Managing Imprecise Criteria in Cloud Service Ranking with a Fuzzy Multi-criteria Decision Making Method. In Service-Oriented and Cloud Computing; Lau, K.K., Lamersdorf, W., Pimentel, E., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8135, pp. 34–48.

17. Wittern, E.; Kuhlenkamp, J.; Menzel, M. Cloud Service Selection Based on Variability Modeling. In Service-Oriented Computing; Liu, C., Ludwig, H., Toumani, F., Yu, Q., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7636, pp. 127–141.

18. Habib, S.M.; Ries, S.; Mühlhäuser, M.; Varikkattu, P. Towards a trust management system for cloud computing marketplaces: using CAIQ as a trust information source. Secur. Commun. Netw. 2014, 7, 2185–2200.

19. Mouratidis, H.; Islam, S.; Kalloniatis, C.; Gritzalis, S. A framework to support selection of cloud providers based on security and privacy requirements. J. Syst. Softw. 2013, 86, 2276–2293.

20. Akinrolabu, O.; New, S.; Martin, A. CSCCRA: A novel quantitative risk assessment model for cloud service providers. In Proceedings of the European, Mediterranean, and Middle Eastern Conference on Information Systems, Limassol, Cyprus, 4–5 October 2018; pp. 177–184.

21. Mahesh, A.; Suresh, N.; Gupta, M.; Sharman, R. Cloud risk resilience: Investigation of audit practices and technology advances-a technical report. In Cyber Warfare and Terrorism: Concepts, Methodologies, Tools, and Applications; IGI Global: Hershey, PA, USA, 2020; pp. 1518–1548.

22. Bleikertz, S.; Mastelic, T.; Pape, S.; Pieters, W.; Dimkov, T. Defining the Cloud Battlefield—Supporting Security Assessments by Cloud Customers. In Proceedings of the IEEE International Conference on Cloud Engineering (IC2E), San Francisco, CA, USA, 25–27 March 2013; pp. 78–87.

23. Siegel, J.; Perdue, J. Cloud Services Measures for Global Use: The Service Measurement Index (SMI). In Proceedings of the 2012 Annual SRII Global Conference (SRII), San Jose, CA, USA, 24–27 July 2012; pp. 411–415.

24. Cloud Services Measurement Initiative Consortium. Service Measurement Index Version 2.1; Technical Report; Carnegie Mellon University: Pittsburgh, PA, USA, 2014.

25. Cloud Services Measurement Initiative Consortium. Available online: https://www.iaop.org/Download/Download.aspx?ID=1779&AID=&SSID=&TKN=6a4b939cba11439e9d3a (accessed on 31 March 2020).

26. Saaty, T.L. Theory and Applications of the Analytic Network Process: Decision Making with Benefits, Opportunities, Costs, and Risks; RWS Publications: Pittsburgh, PA, USA, 2005.

27. Saaty, T.L. Decision making with the analytic hierarchy process. Int. J. Serv. Sci. 2008, 1, 83–98.

28. Buckley, J.J. Ranking alternatives using fuzzy numbers. Fuzzy Sets Syst. 1985, 15, 21–31.

29. Chang, D.Y. Applications of the extent analysis method on fuzzy AHP. Eur. J. Oper. Res. 1996, 95, 649–655.

30. Cloud Security Alliance. Available online: https://cloudsecurityalliance.org/ (accessed on 31 March 2020).

31. Cloud Security Alliance. Cloud Controls Matrix. v3.0.1. Available online: https://cloudsecurityalliance.org/research/cloud-controls-matrix/ (accessed on 31 March 2020).

32. Cloud Security Alliance. Consensus Assessments Initiative Questionnaire. v3.0.1. Available online: https://cloudsecurityalliance.org/artifacts/consensus-assessments-initiative-questionnaire-v3-1/ (accessed on 31 March 2020).

33. Levenshtein, V.I. Binary codes capable of correcting deletions, insertions and reversals. Sov. Phys. Dokl. 1966, 10, 707–710.

34. Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340.

35. Svahnberg, M.; Aurum, A.; Wohlin, C. Using Students As Subjects—An Empirical Evaluation. In Proceedings of the Second ACM-IEEE International Symposium on Empirical Software Engineering and Measurement; ACM: New York, NY, USA, 2008; pp. 288–290.

36. Höst, M.; Regnell, B.; Wohlin, C. Using Students As Subjects: A Comparative Study of Students and Professionals in Lead-Time Impact Assessment. Empir. Softw. Eng. 2000, 5, 201–214.

37. NIST Cloud Computing Security Working Group. NIST Cloud Computing Security Reference Architecture; Technical Report; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2013.

38. Deutsche Telekom. Cloud Broker: Neues Portal von T-Systems lichtet den Cloud-Nebel. Available online: https://www.telekom.com/de/medien/medieninformationen/detail/cloud-broker-neues-portal-von-t-systems-lichtet-den-cloud-nebel-347356 (accessed on 31 March 2020).

39. Schneier, B. Security and compliance. IEEE Secur. Priv. 2004, 2.

40. National Institute of Standards and Technology. Minimum Security Requirements for Federal Information and Information Systems (FIPS 200). Available online: http://csrc.nist.gov/publications/fips/fips200/FIPS-200-final-march.pdf (accessed on 31 March 2020).

41. Decision Deck. The XMCDA Standard. Available online: http://www.decision-deck.org/xmcda/ (accessed on 31 March 2020).

Figure 1.

Consensus Assessments Initiative Questionnaire (CAIQ) questionnaires.

Figure 2.

Submissions to Security, Trust and Assurance Registry (STAR).

Figure 3.

Distribution of Answers per Provider of the CAIQ as Violin-/Boxplot.

Figure 4.

Distribution of Answers per Question grouped by Domain of CAIQ v1.1 and v3.0.1.

Figure 5.

Actual Effectiveness—Cloud Provider Selected in the Experiments.

Figure 6.

Security-Aware Cloud Provider Selection Approach.

Figure 7.

Hierarchies of Analytic Hierarchy Process (AHP) based Approach.

Table 1.

Comparison of Different Cloud Service Provider (CSP) Comparison/Selection Approaches.

| Reference | Method | Other Dimensions | Security Dimension | Security Data | Security Categories |
|---|---|---|---|---|---|
| Anastasi et al. [7] | genetic algorithms | ✓ | ✗ | ✗ | ✗ |
| Ngan and Kanagasabai [8] | ontology mapping | ✓ | ✗ | ✗ | ✗ |
| Sim [9] | ontology mapping | ✓ | ✗ | ✗ | ✗ |
| Wang and Du [10] | game theory | ✓ | ✗ | ✗ | ✗ |
| Karim et al. [11] | MCDM ¹ | ✓ | ✗ | ✗ | ✗ |
| Sundareswaran et al. [12] | k-nearest neighbours | ✓ | ✓ | ✗ | ✗ |
| Ghosh et al. [13] | minimize interaction risk | ✓ | ✓ | ✗ | 12 |
| Costa et al. [14] | MCDM ¹ | ✓ | ✓ | ✗ | 3 |
| Garg et al. [15] | MCDM ¹ | ✓ | ✓ | ✗ | 7 |
| Patiniotakis et al. [16] | MCDM ¹ | ✓ | ✓ | ✗ | 1 |
| Wittern et al. [17] | MCDM ¹ | ✓ | ✓ | ✗ | unspec. |
| Habib et al. [18] | trust computation | ✓ | ✓ | (✓) ² | 11 |
| Mouratidis et al. [19] | based on Secure Tropos | ✗ | ✓ | ✗ | unspec. |
| Akinrolabu et al. [20] | risk assessment | ✗ | ✓ | ✗ | 9 |
| Our Approach | MCDM ¹ | ✗ | ✓ | ✓ | flexible |

¹ MCDM: multi-criteria decision making.

Table 2.

Cloud Controls Matrix (CCM)-Item and CAIQ-Question Numbers per Domain (version 1.1).

| ID | Domain | CCM-Items | CAIQ-Questions |
|---|---|---|---|
| CO | Compliance | 6 | 16 |
| DG | Data Governance | 8 | 16 |
| FS | Facility Security | 8 | 9 |
| HR | Human Resources | 3 | 4 |
| IS | Information Security | 34 | 75 |
| LG | Legal | 2 | 4 |
| OP | Operations Management | 4 | 9 |
| RI | Risk Management | 5 | 14 |
| RM | Release Management | 5 | 6 |
| RS | Resiliency | 8 | 12 |
| SA | Security Architecture | 15 | 32 |
| | Total | 98 | 197 |

Table 3.

Cloud Controls Matrix (CCM)-Item and CAIQ-Question Numbers per Domain (version 3.1).

| ID | Domain | CCM-Items | CAIQ-Questions |
|---|---|---|---|
| AIS | Application & Interface Security | 4 | 9 |
| AAC | Audit Assurance & Compliance | 3 | 13 |
| BCR | Business Continuity Management & Operational Resilience | 11 | 22 |
| CCC | Change Control & Configuration Management | 5 | 10 |
| DSI | Data Security & Information Lifecycle Management | 7 | 17 |
| DCS | Datacenter Security | 9 | 11 |
| EKM | Encryption & Key Management | 4 | 14 |
| GRM | Governance and Risk Management | 11 | 22 |
| HRS | Human Resources | 11 | 24 |
| IAM | Identity & Access Management | 13 | 40 |
| IVS | Infrastructure & Virtualization Security | 13 | 33 |
| IPY | Interoperability & Portability | 5 | 8 |
| MOS | Mobile Security | 20 | 29 |
| SEF | Security Incident Management, E-Discovery & Cloud Forensics | 5 | 13 |
| STA | Supply Chain Management, Transparency and Accountability | 9 | 20 |
| TVM | Threat and Vulnerability Management | 3 | 10 |
| | Total | 133 | 295 |

Table 4.

Overall Participants’ Demographic Statistics.

| Variable | Scale | Mean/Median | Distribution |
|---|---|---|---|
| Education Length | Years | 4.7 | 56.8% had less than 4 years; 36.4% had 4–7 years; 6.8% had more than 7 years |
| Work Experience | Years | 2.1 | 29.5% had no experience; 47.7% had 1–3 years; 18.2% had 4–7 years; 4.5% had more than 7 years |
| Level of Expertise in Security | 0 (novice) to 4 (expert) | 1 (median) | 20.5% novices; 40.9% beginners; 22.7% competent users; 13.6% proficient users; 2.3% experts |
| Level of Expertise in Privacy | 0 (novice) to 4 (expert) | 1 (median) | 22.7% novices; 38.6% beginners; 31.8% competent users; 6.8% proficient users |
| Level of Expertise in Online Banking | 0 (novice) to 4 (expert) | 1 (median) | 47.7% novices; 34.1% beginners; 15.9% competent users; 2.3% proficient users |

Table 5.

Questionnaire Analysis Results—Descriptive Statistics.

| Q | Type | Mean Exp1 | Mean Exp2 | Mean Exp3 | Mean All | p-Value |
|---|---|---|---|---|---|---|
| Q1 | PEOU | 3.0 | 3.7 | 2.9 | 3.0 | 0.3436 |
| Q2 | PEOU | 2.9 | 2.7 | 2.7 | 2.8 | 0.8262 |
| Q3 | PEOU | 2.4 | 2.2 | 2.4 | 2.4 | 0.9312 |
| Q4 | PU | 2.4 | 2.5 | 2.2 | 2.3 | 0.9187 |
| Q5 | PU | 3.1 | 3.2 | 3.0 | 3.1 | 0.8643 |
| PEOU | | 2.8 | 2.9 | 2.7 | 2.7 | 0.7617 |
| PU | | 2.7 | 3.0 | 2.6 | 2.7 | 0.9927 |

Table 6.

Possible Classes for Answers in CAIQ.

| Answer | Comment Class | Description |
|---|---|---|
| Yes | Conflicting | The comment conflicts with the answer. |
| Yes | Depending | The control depends on someone else. |
| Yes | Explanation | Further explanation of the answer is given. |
| Yes | Irrelevant | The comment is irrelevant to the answer. |
| Yes | Limitation | The answer 'yes' is limited or qualified by the comment. |
| Yes | No comment | No comment was given. |
| No | Conflicting | The comment conflicts with the answer. |
| No | Depending | The control depends on someone else. |
| No | Explanation | Further explanation of the answer is given. |
| No | Irrelevant | The comment is irrelevant to the answer. |
| No | No comment | No comment was given. |
| NA | Explanation | Further explanation of the answer is given. |
| NA | Irrelevant | The comment is irrelevant to the answer. |
| NA | No comment | No comment was given. |
| Empty | No comment | No answer was given at all. |
| Unclear | Irrelevant | Only a comment was given, and it was not possible to classify the answer as Y, N, or NA. |

Table 7.

Possible Scoring for Tenants Interested in Security or Compliance.

| Answer | Comment Class | Security | Compliance |
|---|---|---|---|
| Yes | Explanation | 9 | 9 |
| Yes | No comment | 8 | 9 |
| Yes | Depending | 8 | 9 |
| Yes | Irrelevant | 7 | 9 |
| Yes | Limitation | 6 | 7 |
| Yes | Conflicting | 5 | 5 |
| No | Explanation | 4 | 1 |
| No | Conflicting | 4 | 1 |
| No | No comment | 3 | 1 |
| No | Depending | 3 | 1 |
| No | Irrelevant | 2 | 1 |
| NA | Explanation | 3 | 3 |
| NA | No comment | 2 | 3 |
| NA | Irrelevant | 2 | 3 |
| Empty | No comment | 1 | 2 |
| Unclear | Irrelevant | 1 | 2 |
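
The scoring in Table 7 amounts to a lookup keyed on the answer and its comment class. The minimal Python sketch below transcribes the table's values; the function name `score` and its `profile` parameter are illustrative assumptions, and the sketch deliberately leaves out how per-question scores are aggregated into a ranking.

```python
from typing import Dict, Tuple

# (answer, comment class) -> (security score, compliance score), from Table 7.
SCORES: Dict[Tuple[str, str], Tuple[int, int]] = {
    ("Yes", "Explanation"): (9, 9),
    ("Yes", "No comment"): (8, 9),
    ("Yes", "Depending"): (8, 9),
    ("Yes", "Irrelevant"): (7, 9),
    ("Yes", "Limitation"): (6, 7),
    ("Yes", "Conflicting"): (5, 5),
    ("No", "Explanation"): (4, 1),
    ("No", "Conflicting"): (4, 1),
    ("No", "No comment"): (3, 1),
    ("No", "Depending"): (3, 1),
    ("No", "Irrelevant"): (2, 1),
    ("NA", "Explanation"): (3, 3),
    ("NA", "No comment"): (2, 3),
    ("NA", "Irrelevant"): (2, 3),
    ("Empty", "No comment"): (1, 2),
    ("Unclear", "Irrelevant"): (1, 2),
}


def score(answer: str, comment_class: str, profile: str = "security") -> int:
    """Look up the score of one CAIQ answer for a tenant profile.

    `profile` (hypothetical) is "security" or "compliance"; combinations
    that do not appear in Table 7 raise KeyError.
    """
    security, compliance = SCORES[(answer, comment_class)]
    if profile == "security":
        return security
    if profile == "compliance":
        return compliance
    raise ValueError(f"unknown profile: {profile!r}")


if __name__ == "__main__":
    print(score("Yes", "Limitation"))                # 6
    print(score("Yes", "Limitation", "compliance"))  # 7
    print(score("No", "Explanation", "compliance"))  # 1
```

Note how the compliance profile collapses every "No" answer to 1 regardless of the comment, whereas the security profile still distinguishes an explained "No" from an unexplained one.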

Table 8.

Weights for Comparing Importance of Categories and Questions.

| Weight | Explanation |
|---|---|
| 1 | Two categories (questions) are of equal importance to the overall security (respectively, to their category) |
| 3 | One category (question) is moderately favoured over the other |
| 5 | One category (question) is strongly favoured over the other |
| 7 | One category (question) is very strongly favoured over the other |
| 9 | One category (question) is favoured over the other to the highest possible degree |
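
Pairwise judgements on the 1–9 scale above are conventionally turned into weights with Saaty's principal-eigenvector method. The Python sketch below is a generic, crisp version of that method (power iteration plus Saaty's consistency ratio); it is offered as an illustration under that assumption and is not necessarily the exact computation used in our implementation. The function names and the example judgements are hypothetical.

```python
from typing import List

Matrix = List[List[float]]

# Saaty's random consistency indices for matrices of order 1..10.
RANDOM_INDEX = [0.0, 0.0, 0.58, 0.90, 1.12, 1.24, 1.32, 1.41, 1.45, 1.49]


def _mat_vec(a: Matrix, w: List[float]) -> List[float]:
    return [sum(a_ij * w_j for a_ij, w_j in zip(row, w)) for row in a]


def priority_vector(a: Matrix, max_iter: int = 1000, tol: float = 1e-12) -> List[float]:
    """Principal eigenvector of a positive reciprocal matrix via power
    iteration, normalised so that the weights sum to 1."""
    n = len(a)
    w = [1.0 / n] * n
    for _ in range(max_iter):
        nxt = _mat_vec(a, w)
        total = sum(nxt)
        nxt = [x / total for x in nxt]
        converged = max(abs(x - y) for x, y in zip(nxt, w)) < tol
        w = nxt
        if converged:
            break
    return w


def consistency_ratio(a: Matrix, w: List[float]) -> float:
    """Saaty's CR = CI / RI, with CI = (lambda_max - n) / (n - 1)."""
    n = len(a)
    if n <= 2:
        return 0.0  # 1x1 and 2x2 reciprocal matrices are always consistent
    if n > len(RANDOM_INDEX):
        raise ValueError("no random index tabulated for n > 10")
    aw = _mat_vec(a, w)
    lambda_max = sum(x / y for x, y in zip(aw, w)) / n
    return ((lambda_max - n) / (n - 1)) / RANDOM_INDEX[n - 1]


if __name__ == "__main__":
    # Hypothetical judgements for three categories on the 1-9 scale:
    # C1 vs C2 = 3 (moderate), C1 vs C3 = 5 (strong), C2 vs C3 = 3.
    a = [[1, 3, 5],
         [1 / 3, 1, 3],
         [1 / 5, 1 / 3, 1]]
    w = priority_vector(a)
    print([round(x, 3) for x in w], round(consistency_ratio(a, w), 3))
```

By Saaty's usual rule of thumb, a consistency ratio below 0.1 indicates judgements that are consistent enough to use; larger values suggest revisiting the pairwise weights.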

Table 9.

Scores for Comparing Quality of Answers to CAIQ.

| Score | Explanation |
|---|---|
| 1 | Two answers describe an equal implementation of the security control |
| 3 | One answer is moderately favoured over the other |
| 5 | One answer is strongly favoured over the other |
| 7 | One answer is very strongly favoured over the other |
| 9 | One answer is favoured over the other to the highest possible degree |

Table 10.

Comparison Table.

| Superior | Inferior | Comp. |
|---|---|---|
| CSP 1 | CSP 2 | x |
| CSP 3 | CSP 1 | y |
| CSP 3 | CSP 2 | z |
| ⋮ | ⋮ | ⋮ |
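
Each row of a comparison table like Table 10 can be read as fixing one entry of a reciprocal pairwise comparison matrix: the superior CSP's row receives the comparison value and the inferior CSP's row receives its reciprocal. The minimal Python sketch below assumes that reading; since x, y, and z are placeholders in the table, the values 3, 5, and 7 used here are invented for illustration, and `matrix_from_comparisons` is a hypothetical name.

```python
from itertools import combinations
from typing import List, Sequence, Tuple


def matrix_from_comparisons(items: Sequence[str],
                            rows: Sequence[Tuple[str, str, float]]) -> List[List[float]]:
    """Build a reciprocal pairwise comparison matrix from
    (superior, inferior, value) rows, with value on the 1-9 scale."""
    index = {name: k for k, name in enumerate(items)}
    n = len(items)
    m = [[1.0] * n for _ in range(n)]  # diagonal: every item equals itself
    seen = set()
    for superior, inferior, value in rows:
        i, j = index[superior], index[inferior]
        if i == j or value <= 0:
            raise ValueError(f"invalid comparison: {superior}, {inferior}, {value}")
        m[i][j] = float(value)
        m[j][i] = 1.0 / value  # reciprocal entry for the inferior item
        seen.add(frozenset((i, j)))
    # Every unordered pair must have been judged exactly once or more.
    missing = [(items[i], items[j]) for i, j in combinations(range(n), 2)
               if frozenset((i, j)) not in seen]
    if missing:
        raise ValueError(f"missing comparisons: {missing}")
    return m


if __name__ == "__main__":
    csps = ["CSP 1", "CSP 2", "CSP 3"]
    # x, y, z from the table replaced by invented example values.
    table = [("CSP 1", "CSP 2", 3), ("CSP 3", "CSP 1", 5), ("CSP 3", "CSP 2", 7)]
    for row in matrix_from_comparisons(csps, table):
        print([round(v, 3) for v in row])
```

Rejecting incomplete tables up front avoids silently treating an unjudged pair as equally good, which is what the default diagonal-style value of 1.0 would otherwise imply.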

Table 11.

Scoring of Categories Questionnaire—Descriptive Statistics.

| Type | ID | Questions | Mean | Median | sd | p-Value |
|---|---|---|---|---|---|---|
| PEOU | | In general, I found the definition of security categories clear and easy to understand | 2.29 | 2 | 0.93 | |
| PEOU | | I found the assignment of weights to security categories complex and difficult to follow | 3.4 | 4 | 1.4 | 0.04069 |
| PEOU | | For the specific case of the Home Banking Cloud-Based Service, it was easy to assign weights to security categories | 3.06 | 3 | 1.06 | 0.6733 |
| Overall PEOU | | | 2.91 | 3 | 1.13 | 0.3698 |

Table 12.

Performance Time of Our Approach as a Function of the Number of CSPs and the Number of Questions.

| No. of CSPs | No. of Questions | No. of Categories | Ranking Time | Total Time |
|---|---|---|---|---|
| 2 | 20 | 5 | ∼0.5 s | <1 s |
| 37 | 20 | 5 | 48 s | 50 s |
| 2 | 197 | 75 | 1 min 50 s | <2 min |
| 37 | 197 | 75 | 34 min | <35 min |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).