Usability Evaluation—Advances in Experimental Design in the Context of Automated Driving Human–Machine Interfaces

Abstract

1. Introduction

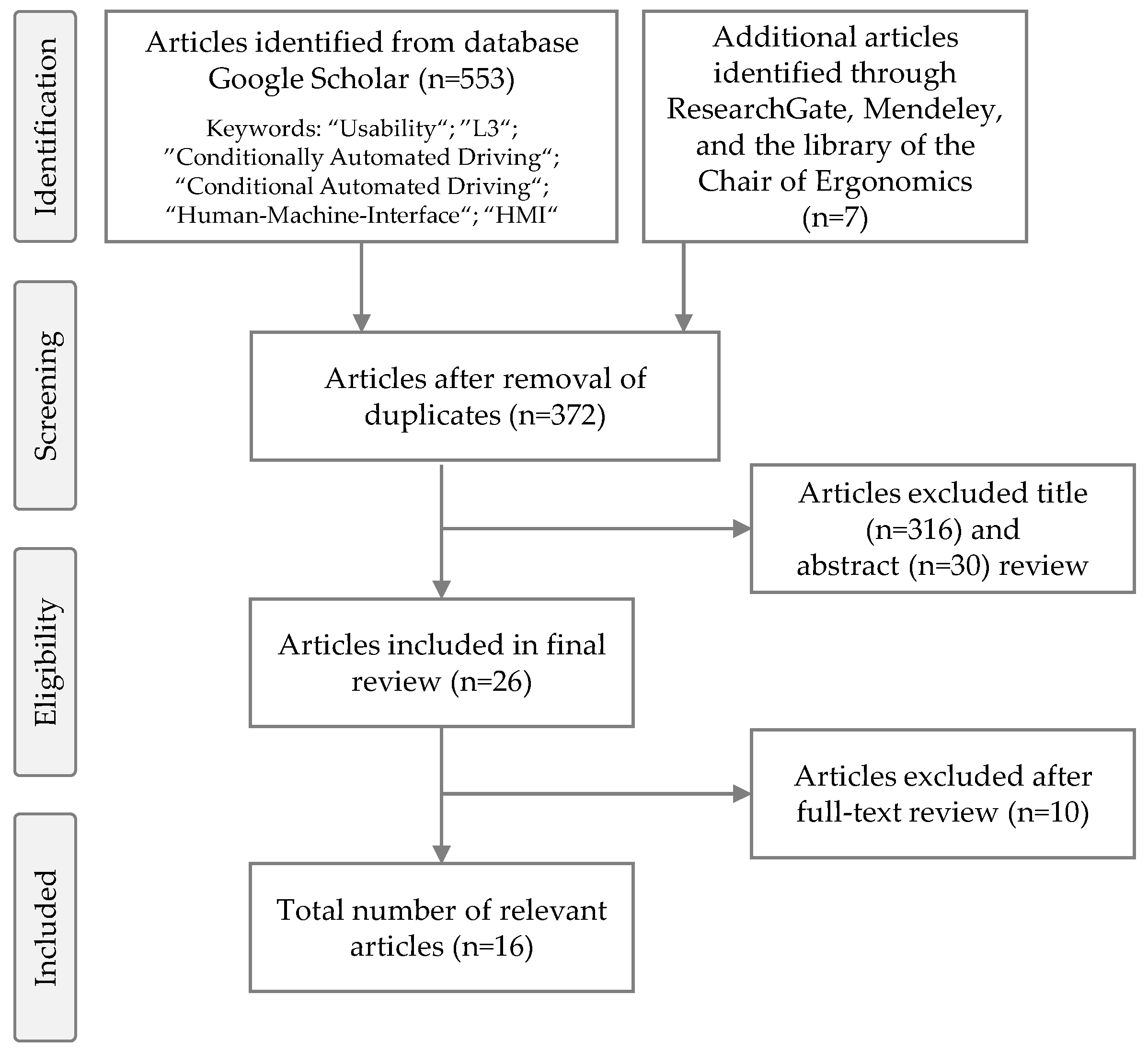

2. Paper Selection and Aggregation

2.1. Paper Selection

2.2. Aggregation

- Definition of Usability

- Testing Environment

- Sample Characteristics

- Test Cases

- Dependent Variables

- Conditions of Use

2.2.1. Definition of Usability

2.2.2. Testing Environment

2.2.3. Sample Characteristics

2.2.4. Test Cases

2.2.5. Dependent Variables

2.2.6. Conditions of Use

3. Discussions

4. Conclusions

5. Outlook

Author Contributions

Funding

Conflicts of Interest

References

1. SAE. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles; Society of Automotive Engineers: Warrendale, PA, USA, 2018; Volume 3016, pp. 1–16.

2. Lorenz, L.; Kerschbaum, P.; Hergeth, S.; Gold, C.; Radlmayr, J. Der Fahrer im Hochautomatisierten Fahrzeug. Vom Dual-Task zum Sequential-Task Paradigma. In Proceedings of the 7. Tagung Fahrerassistenz, München, Germany, 25–26 June 2015.

3. Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; The PRISMA Group. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLoS Med. 2009, 6.

4. Forster, Y.; Hergeth, S.; Naujoks, F.; Krems, J.; Keinath, A. User Education in Automated Driving: Owner’s Manual and Interactive Tutorial Support Mental Model Formation and Human-Automation Interaction. Information 2019, 10, 143.

5. Forster, Y.; Hergeth, S.; Naujoks, F.; Beggiato, M.; Krems, J.F.; Keinath, A. Learning and Development of mental models during interactions with driving automation: A simulator study. In Proceedings of the Tenth International Driving Symposium on Human Factors in Driver Assessment, Training and Vehicle Design, Santa Fe, NM, USA, 24–27 June 2019.

6. Forster, Y.; Hergeth, S.; Naujoks, F.; Beggiato, M.; Krems, J.F.; Keinath, A. Learning to use automation: Behavioral changes in interaction with automated driving systems. Transp. Res. Part F Traffic Psychol. Behav. 2019, 62, 599–614.

7. Forster, Y.; Hergeth, S.; Naujoks, F.; Krems, J.F.; Keinath, A. Self-report measures for the assessment of human–machine interfaces in automated driving. Cogn. Technol. Work 2019.

8. Guo, C.; Sentouh, C.; Popieul, J.-C.; Haué, J.-B.; Langlois, S.; Loeillet, J.-J.; Soualmi, B.; Nguyen That, T. Cooperation between driver and automated driving system: Implementation and evaluation. Transp. Res. Part F Traffic Psychol. Behav. 2019, 61, 314–325.

9. Kettwich, C.; Haus, R.; Temme, G.; Schieben, A. Validation of a HMI Concept Indicating the Status of the Traffic Light Signal in the Context of Automated Driving in Urban Environment. In Proceedings of the 2016 IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016.

10. Morgan, P.L.; Voinescu, A.; Alford, C.; Caleb-Solly, P. Exploring the Usability of a Connected Autonomous Vehicle Human Machine Interface Designed for Older Adults. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Orlando, FL, USA, 21–25 July 2018; pp. 591–603.

11. Naujoks, F.; Forster, Y.; Wiedemann, K.; Neukum, A. A Human-Machine Interface for Cooperative Highly Automated Driving. In Advances in Human Aspects of Transportation; Stanton, N.A., Landry, S., Di Bucchianico, G., Vallicelli, A., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 585–595. ISBN 978-3-319-41681-6.

12. Richardson, N.T.; Lehmer, C.; Lienkamp, M.; Michel, B. Conceptual Design and Evaluation of a Human Machine Interface for Highly Automated Truck Driving. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 2072–2077.

13. Forster, Y.; Hergeth, S.; Naujoks, F.; Krems, J.F. How Usability Can Save the Day—Methodological Considerations for Making Automated Driving a Success Story. In Proceedings of the 10th International ACM Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI ’18), Toronto, ON, Canada, 23–25 September 2018; pp. 278–290.

14. François, M.; Osiurak, F.; Fort, A.; Crave, P.; Navarro, J. Automotive HMI design and participatory user involvement: Review and perspectives. Ergonomics 2016, 60, 541–552.

15. Gold, C.; Naujoks, F.; Radlmayr, J.; Bellem, H.; Jarosch, O. Testing Scenarios for Human Factors Research in Level 3 Automated Vehicles. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Los Angeles, CA, USA, 17–21 July 2017; pp. 551–559.

16. Naujoks, F.; Hergeth, S.; Wiedemann, K.; Schömig, N.; Keinath, A. Use Cases for Assessing, Testing, and Validating the Human Machine Interface of Automated Driving Systems. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Philadelphia, PA, USA, 1–5 October 2018; Volume 62, pp. 1873–1877.

17. Naujoks, F.; Hergeth, S.; Wiedemann, K.; Schömig, N.; Forster, Y.; Keinath, A. Test procedure for evaluating the human-machine interface of vehicles with automated driving systems. Traffic Inj. Prev. 2019, 20, S146–S151.

18. Naujoks, F.; Wiedemann, K.; Schömig, N.; Hergeth, S.; Keinath, A. Towards guidelines and verification methods for automated vehicle HMIs. Transp. Res. Part F Traffic Psychol. Behav. 2019, 60, 121–136.

19. Pauzie, A.; Orfila, O. Methodologies to assess usability and safety of ADAS and automated vehicle. IFAC PapersOnLine 2016, 72–77.

20. Brooke, J. SUS: A ‘Quick and Dirty’ Usability Scale. In Usability Evaluation in Industry; Jordan, P.W., Thomas, B., Weerdmeester, B.A., McClelland, I.L., Eds.; Taylor & Francis: London, UK, 1996; pp. 189–194.

21. Lewis, J.R. Psychometric Evaluation of the PSSUQ Using Data from Five Years of Usability Studies. Int. J. Hum. Comput. Interact. 2002, 14, 463–488.

22. Van der Laan, J.D.; Heino, A.; De Waard, D. A Simple Procedure for the Assessment of Acceptance of Advanced Transport Telematics. Transp. Res. Part C Emerg. Technol. 1997, 5, 1–10.

23. ISO 9241-11:2018. Ergonomics of Human-System Interaction. Part 11: Usability: Definitions and Concepts; International Organization for Standardization: Geneva, Switzerland, 2018.

24. NHTSA. Automated Driving Systems 2.0: A Vision for Safety; NHTSA: Washington, DC, USA, 2017.

25. Nielsen, J. Usability Engineering; Elsevier: Amsterdam, The Netherlands, 1993; ISBN 0125184069.

26. Caird, J.K.; Horrey, W.J. Twelve Practical and Useful Questions About Driving Simulation. In Handbook of Driving Simulation for Engineering, Medicine, and Psychology; Fisher, D.L., Rizzo, M., Caird, J.K., Lee, J.D., Eds.; CRC Press: Boca Raton, FL, USA, 2011; ISBN 978-1-4200-6101-7.

27. Mullen, N.; Charlton, J.; Devlin, A.; Bédard, M. Simulator Validity: Behaviors Observed on the Simulator and on the Road. In Handbook of Driving Simulation for Engineering, Medicine, and Psychology; Fisher, D.L., Rizzo, M., Caird, J.K., Lee, J.D., Eds.; CRC Press: Boca Raton, FL, USA, 2011; ISBN 978-1-4200-6101-7.

28. Nielsen, J. Usability Inspection Methods. In Conference Companion on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 1994; pp. 413–414.

29. Tan, W.-S.; Liu, D.; Bishu, R. Web Evaluation: Heuristic Evaluation vs. User Testing. Int. J. Ind. Ergon. 2009, 39, 621–627.

30. NHTSA. Visual–Manual NHTSA Driver Distraction Guidelines for In-Vehicle Electronic Devices; NHTSA: Washington, DC, USA, 2014.

31. Henrich, J.; Heine, S.J.; Norenzayan, A. The weirdest people in the world? Behav. Brain Sci. 2010, 33, 61–83; Discussion 83–135.

32. Prümper, J.; Anft, M. ISONORM 9241/110 (Langfassung): Beurteilung von Software auf Grundlage der Internationalen Ergonomie-Norm DIN EN ISO 9241-110. Available online: http://people.f3.htw-berlin.de/Professoren/Pruemper/instrumente/ISONORM%209241-110-L.pdf (accessed on 2 November 2017).

33. Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425–478.

34. McKnight, D.H.; Carter, M.; Thatcher, J.B.; Clay, P.F. Trust in a specific Technology. ACM Trans. Manag. Inf. Syst. 2011, 2, 1–25.

35. Chien, S.-Y.; Semnani-Azad, Z.; Lewis, M.; Sycara, K. Towards the Development of An Inter-Cultural Scale to measure Trust in Automation. In Cross-Cultural Design; Rau, P.L.R., Ed.; Springer International Publishing: Cham, Switzerland, 2014; ISBN 978-3-319-07307-1.

36. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. Adv. Psychol. 1988, 52, 139–183.

37. Endsley, M.R. Measurement of Situation Awareness in Dynamic Systems. Hum. Factors 1995, 37, 65–84.

38. Beggiato, M.; Pereira, M.; Petzoldt, T.; Krems, J. Learning and Development of Trust, Acceptance and the Mental Model of ACC. A longitudinal on-road Study. Transp. Res. Part F Traffic Psychol. Behav. 2015, 35, 75–84.

39. Boren, T.; Ramey, J. Thinking Aloud: Reconciling Theory and Practice. IEEE Trans. Prof. Commun. 2000, 43, 261–278.

40. Nielsen, J.; Molich, R. Heuristic Evaluation of User Interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Seattle, WA, USA, 1–5 April 1990; pp. 249–256.

41. Jian, J.-Y.; Bisantz, A.M.; Drury, C.G.; Llinas, J. Foundations for an Empirically Determined Scale of Trust in Automated Systems; Air Force Research Laboratory: Buffalo, NY, USA, 2000.

42. Hassenzahl, M.; Burmester, M.; Koller, F. AttrakDiff: Ein Fragebogen zur Messung wahrgenommener hedonischer und pragmatischer Qualität. In Mensch & Computer 2003: Interaktion in Bewegung; Szwillus, G., Ziegler, J., Eds.; B. G. Teubner: Stuttgart, Germany, 2003; pp. 187–196.

43. Laugwitz, B.; Held, T.; Schrepp, M. Construction and Evaluation of a User Experience Questionnaire. HCI Usability Educ. Work 2008, 63–76.

44. Minge, M.; Thüring, M.; Wagner, I.; Kuhr, C.V. The meCUE Questionnaire. A Modular Tool for Measuring User Experience. In Advances in Ergonomics Modeling, Usability & Special Populations, Proceedings of the 7th Applied Human Factors and Ergonomics Society Conference, Orlando, FL, USA, 27–31 July 2016; Soares, M., Falcão, C., Ahram, T.Z., Eds.; Springer International Press: Cham, Switzerland, 2016; pp. 115–128.

45. Taylor, R.M. Situational Awareness Rating Technique (SART): The Development of a Tool for Aircrew Systems Design. In “Situation Awareness in Aerospace Operations”. In Situational Awareness; Salas, E., Dietz, A.S., Eds.; Routledge: New York, NY, USA, 2016; pp. 111–128. ISBN 9780754629733.

46. Jay, G.M.; Willis, S.L. Influence of Direct Computer Experience on Older Adults’ Attitudes toward Computers. J. Gerontol. Psychol. Sci. 1992, 47, 250–257.

47. Van den Beukel, A.P.; Van der Voort, M.C. Design Considerations on User-Interaction for Semi-Automated Driving. In Proceedings of the FISITA 2014 World Automotive Congress, Maastricht, The Netherlands, 2–6 June 2014; pp. 1–8.

48. Pauzié, A. A Method to assess the Driver Mental Workload: The Driving Activity Load Index (DALI). IET Intell. Transp. Syst. 2008, 2, 315–322.

49. ISO 15007-2. Road Vehicles—Measurement of Driver Visual Behaviour with Respect to Transport Information and Control Systems. Part 2: Equipment and Procedures; International Organization for Standardization: Geneva, Switzerland, 2013.

50. Parasuraman, R.; Riley, V. Humans and Automation: Use, Misuse, Disuse, Abuse. Hum. Factors 1997, 39, 230–253.

| Article | ISO Standard 9241 [23] | Nielsen [25] | NHTSA Minimum Requirements [24] | Operationalization Through Dependent Variables |
|---|---|---|---|---|
| Forster et al. (2019c) [6] | Effectiveness and efficiency | | | |
| Forster et al. (2019d) [7] | x | | | |
| Kettwich et al. (2016) [9] | Satisfaction and usefulness (VDL [22]), expectations, suggestions | | | |
| Morgan et al. (2018) [10] | x | | | |
| Naujoks et al. (2017) [11] | Comprehensibility, SUS [20] | | | |
| Richardson et al. (2018) [12] | Efficiency, effectiveness, and usefulness | | | |
| Forster et al. (2018) ¹ [13] | SUS [20]/PSSUQ [21] | | | |
| François et al. (2016) ¹ [14] | x | | | |
| Naujoks et al. (2018) ¹ [16] | Usability and safety | | | |
| Naujoks et al. (2019a) ¹ [17] | Effectiveness and efficiency | x | | |
| Naujoks et al. (2019b) ¹ [18] | 20-item guideline | | | |
| Pauzie and Orfila (2016) ¹ [19] | Acceptability, acceptance, trust, situation awareness, workload | | | |

| Article | Driving Simulator | Instrumented Car | Desktop Methods |
|---|---|---|---|
| Forster et al. (2019a) [4] | Fixed-base | | |
| Forster et al. (2019b) [5] | Moving-base | | |
| Forster et al. (2019c) [6] | Moving-base | | |
| Forster et al. (2019d) [7] | Fixed-base | | |
| Guo et al. (2019) [8] | x | | |
| Kettwich et al. (2016) [9] | Fixed-base | | |
| Morgan et al. (2018) [10] | Fixed-base | | |
| Naujoks et al. (2017) [11] | Low-fidelity | | |
| Richardson et al. (2018) [12] | Workshop | | |
| Naujoks et al. (2019a) ¹ [17] | x | | |
| Naujoks et al. (2019b) ¹ [18] | High-fidelity | x | |
| Pauzie and Orfila (2016) ¹ [19] | x | | |

| Article | Users | Experts |
|---|---|---|
| Forster et al. (2019a) [4] | n = 24; age 20–62; BMW employees | |
| Forster et al. (2019b) [5] | n = 52; age 20–62; BMW employees | |
| Forster et al. (2019c) [6] | n = 55; age 20–62; BMW employees | |
| Forster et al. (2019d) [7] | n = 57; age 25–60; BMW employees | |
| Guo et al. (2019) [8] | n = 22; age 24–61; Renault or IRT System X employees | |
| Kettwich et al. (2016) [9] | n = 12; age 23–49 | |
| Morgan et al. (2018) [10] | n = 31; age 47–88 | |
| Naujoks et al. (2017) [11] | n = 6; field of cognitive ergonomics | |
| Richardson et al. (2018) [12] | n₁ = 5, n₂ = 9; field of ergonomics, HMI, driver assistance systems; from university and industry | |
| Forster et al. (2018) ¹ [13] | x | x |
| François et al. (2016) ¹ [14] | x | |
| Naujoks et al. (2018) ¹ [16] | x | x |
| Naujoks et al. (2019a) ¹ [17] | n > 20; diverse age distribution [30]; potential users, comparable prior experience, not affiliated with tester’s company | |
| Naujoks et al. (2019b) ¹ [18] | x | n > 4 |

| Article | Upward Transitions ² | Downward Transitions ² | System Mode/Availability ² | Others |
|---|---|---|---|---|
| Forster et al. (2019a) [4] | L0 → L2, L0 → L3, L2 → L3 | L3 → L2 | | |
| Forster et al. (2019b) [5] | L0 → L2 (driver), L0 → L3 (driver), L2 → L3 (driver) | L3 → L2 (driver) | | |
| Forster et al. (2019c) [6] | L0 → L2, L0 → L3, L2 → L3 | L3 → L0, L3 → L2, L2 → L0 | | |
| Forster et al. (2019d) [7] | L0 → Lx (initial), L0 → Lx (re-activation), L0 → Lx (re-activation) | Lx → L0 (driver), Lx → L0 (system; TOR), Lx → L0 (driver; TOR) | Maneuver (lane change, speed adaptation) | |
| Guo et al. (2019) [8] | Highway entry section with different traffic conditions | | | |
| Kettwich et al. (2016) [9] | Environment (traffic light) | | | |
| Morgan et al. (2018) [10] | Operating a navigation system | | | |
| Naujoks et al. (2017) [11] | Lx → L0 | Maneuver and environment (splitting lanes, curvature, speed limit) | | |
| Richardson et al. (2018) [12] | L0 → Lx | Lx → L0 | x | |
| Gold et al. (2017) ¹ [15] | x | | | |
| Naujoks et al. (2018) ¹ [16] | 84 TC | 84 TC | 14 TC | |
| Naujoks et al. (2019a) ¹ [17] | L2 → L3 | L3 → L2 (driver), L3 → L2 (system), L3 → L1 (system), L3 → L0 (system) | L2 steady state, L3 steady state, L3 degraded, L3 unavailable | |
| Naujoks et al. (2019b) ¹ [18] | L0 → Lx | Lx → L0 | x | |

| Article | Observational Metrics (Visual Behavior, Interaction and NDRA Performance, etc.) | Usability Questionnaire | Other Constructs (Questionnaires) and Methods |
|---|---|---|---|
| Forster et al. (2019a) [4] | Experimenter rating | Mental model [38] | |
| Forster et al. (2019b) [5] | Visual behavior (no. of gaze switches) | Mental model [38] | |
| Forster et al. (2019c) [6] | SUS [20] | | |
| Forster et al. (2019d) [7] | SUS [20] | Acceptance (VDL [22], UTAUT [33]); trust (Trust in Automated Systems [41], UTA [35]); user experience (AttrakDiff [42], UEQ [43], meCUE [44]) | |
| Guo et al. (2019) [8] | Time and frequency of button use | Interview; Thinking Aloud Method [39] | |
| Kettwich et al. (2016) [9] | Acceptance (VDL [22]); interview; Thinking Aloud Method [39] | | |
| Morgan et al. (2018) [10] | SUS [20] | Workload (NASA-TLX [36]); Trust (ATS [41], GTS [34]); Situation Awareness (SART [45]); Technical Affiliation (ATCQ [46]) | |
| Naujoks et al. (2017) [11] | Take-over performance; no. of unnecessary system deactivations | SUS [20] | Interview; Expert Evaluation |
| Richardson et al. (2018) [12] | SUS [20], ISO 9241 [32] as cited by [12] | Desirable HMI Aspects [47]; Thinking Aloud Method [39]; Heuristic Evaluation [40] | |
| Forster et al. (2018) ¹ [13] | Visual Behavior; Reaction Times; Interaction and NDRA Performance; Expert Assessment | SUS [20], PSSUQ [21] | |
| Naujoks et al. (2019a) ¹ [17] | Heuristic Evaluation [40] | | |
| Naujoks et al. (2019b) ¹ [18] | Heuristic Evaluation [40] | | |
| Pauzie and Orfila (2016) ¹ [19] | Visual Behavior | Acceptance; Workload (DALI [48]); Trust; Situation Awareness (SAGAT [37], SART [45]); Interview | |
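
Several of the studies listed above report the System Usability Scale (SUS) [20] as their usability questionnaire. As a minimal sketch, the commonly used SUS scoring rule can be written as follows: each odd-numbered item contributes its rating minus 1, each even-numbered item contributes 5 minus its rating, and the sum is multiplied by 2.5 to yield a score between 0 and 100. The function name `sus_score` and the list-based input format are illustrative assumptions and are not taken from any of the cited studies.

```python
def sus_score(responses):
    """Return the SUS score (0-100) for one participant.

    `responses` is assumed to hold the ten item ratings in questionnaire
    order, each an integer from 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(rating not in (1, 2, 3, 4, 5) for rating in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for index, rating in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded: rating minus 1.
            total += rating - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded: 5 minus rating.
            total += 5 - rating
    # Scale the 0-40 raw sum to the 0-100 SUS range.
    return total * 2.5


# Hypothetical ratings for a single participant (not data from any study).
example = [4, 2, 5, 1, 4, 2, 4, 2, 5, 1]
print(sus_score(example))
```

Scores of individual participants would typically be averaged per HMI variant before any comparison is made.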

| Article | First Contact | Repeat Use |
|---|---|---|
| Forster et al. (2019a) [4] | Intuitive use, manual, and interactive tutorial | |
| Forster et al. (2019b) [5] | Intuitive use | x |
| Forster et al. (2019c) [6] | Intuitive use | x |
| Forster et al. (2019d) [7] | x | |
| Guo et al. (2019) [8] | Intuitive use | |
| Kettwich et al. (2016) [9] | x | |
| Morgan et al. (2018) [10] | x | x |
| Naujoks et al. (2017) [11] | x | |
| Richardson et al. (2018) [12] | x | x |
| Forster et al. (2018) ¹ [13] | x | x |
| François et al. (2016) ¹ [14] | x | x |
| Naujoks et al. (2018) ¹ [16] | Intuitive use | |
| Naujoks et al. (2019a) ¹ [17] | x | x |
| Naujoks et al. (2019b) ¹ [18] | x | |

| Study Characteristic | Best Practice Advice |
|---|---|
| Definition of Usability | General definition: “extent to which a system, product or service can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use” [23] (p. 2). Practical realization: the user understands that the ADS is “(1) functioning properly; (2) currently engaged in ADS mode; (3) currently “unavailable” for use; (4) experiencing a malfunction; and/or (5) requesting control transition from the ADS to the operator” [24] (p. 10). |
| Testing Environment | Driving simulator. |
| Sample Characteristics | Sample group: represents the potential user population (age, gender, prior experience, affiliation with technical devices, etc.). Sample size: determined by the statistical procedure. |
| Test Cases | Scenarios: (1) transitions between different automation modes and (2) availability of different automation modes. Criticality: non-critical situations. |
| Dependent Variables | General: combination of observational and subjective metrics. Observational metrics: (1) visual behavior according to [49] (e.g., percent on Area of Interest) and (2) the interaction performance with CAD HMI (e.g., operating errors or reaction time for a button press). Subjective metrics: (1) System Usability Scale [20], (2) short interviews after test trials and questionnaires, and (3) supplementary standardized questionnaires. |
| Conditions of Use | First contact between user and ADS. Instructions contain only general information on the ADS. |
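
The observational metric “percent on Area of Interest” named in the table above can be illustrated with a short sketch. It assumes that glance data are already available as labelled time intervals. The tuple layout `(aoi_label, start_s, end_s)`, the function name `percent_time_on_aoi`, and the AOI labels are hypothetical and do not reproduce the measurement procedure of [49]. The metric is taken here as the summed glance duration on one AOI divided by the total observation time.

```python
def percent_time_on_aoi(glances, aoi, total_duration_s):
    """Return the share of observation time (in percent) spent on one AOI.

    `glances` is assumed to be an iterable of (aoi_label, start_s, end_s)
    tuples with non-overlapping intervals given in seconds.
    """
    if total_duration_s <= 0:
        raise ValueError("total_duration_s must be positive")
    # Sum the duration of every glance whose label matches the AOI.
    time_on_aoi = sum(
        end_s - start_s
        for label, start_s, end_s in glances
        if label == aoi
    )
    return 100.0 * time_on_aoi / total_duration_s


# Hypothetical glance log for a 10 s test trial (not data from any study).
glance_log = [
    ("hmi", 0.0, 1.5),
    ("road", 1.5, 8.0),
    ("hmi", 8.0, 9.0),
    ("ndra", 9.0, 10.0),
]
print(percent_time_on_aoi(glance_log, "hmi", 10.0))
```

Keeping the AOI label as a parameter lets the same function report the share of time on the road, the HMI display, or a non-driving-related activity from a single glance log.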

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Albers, D.; Radlmayr, J.; Loew, A.; Hergeth, S.; Naujoks, F.; Keinath, A.; Bengler, K. Usability Evaluation—Advances in Experimental Design in the Context of Automated Driving Human–Machine Interfaces. Information 2020, 11, 240. https://doi.org/10.3390/info11050240
