On the Integration of Knowledge Graphs into Deep Learning Models for a More Comprehensible AI—Three Challenges for Future Research

Abstract

1. Introduction

2. Background: Learning Approach in Connectionist and Symbolic AI

3. Explanations for AI Experts: Technical Issues and Solutions

3.1. Technical Issues in a Connectionist Perspective

3.2. Explainable Systems for AI Experts

- Simulatability: checking, through a heuristic approach, whether a human can reach a mechanistic understanding of how the model functions and is consequently able to simulate its decision process. In this context, Friedler et al. [34] ran a user study involving a thousand participants and measured human performance on operations that mirror the definition of simulatability, using the runtime operation count as the evaluation metric.

- Decomposability: each component of the model, including every single input, parameter, and computation, has to be clearly interpretable. In a recent work, Assaf et al. [35] introduce a Convolutional Neural Network (CNN) to predict multivariate time series in the domain of renewable energy. The goal is to produce saliency maps [36] that provide two different types of explanation for the predictions: (i) which features are the most important in a specific time interval; (ii) in which time intervals the joint contribution of the features has the greatest impact.

- Algorithmic transparency: for techniques such as linear models, there is a margin of confidence that training will converge to a unique solution, so the model can be expected to behave predictably in an online setting. By contrast, deep learning models cannot guarantee that they will work in the same way on new problems. Datta et al. [37] designed a set of Quantitative Input Influence (QII) measures that capture the joint influence of the inputs on the outputs of an AI system, with the goal of producing transparency reports.
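To make the idea of input influence more concrete, the following minimal sketch estimates how much a set of inputs affects a classifier by intervening on those inputs and observing how often the prediction changes. It is only written in the spirit of QII [37] and is not the implementation of Datta et al.: the function names `influence` and `toy_model`, the synthetic data, and the chosen quantity of interest (the fraction of predictions that change under intervention) are all illustrative assumptions.

```python
import random


def influence(predict, rows, features, rng, n_repeats=20):
    """Fraction of predictions that change when `features` are jointly
    replaced by the values of a randomly chosen other row.

    Copying all selected features from the same donor row keeps the
    correlations inside the set while breaking its link to the other inputs.
    """
    original = [predict(row) for row in rows]
    changed = 0
    for _ in range(n_repeats):
        donors = rows[:]          # shallow copy, rows themselves are not modified
        rng.shuffle(donors)
        for row, donor, label in zip(rows, donors, original):
            intervened = list(row)
            for f in features:
                intervened[f] = donor[f]
            if predict(intervened) != label:
                changed += 1
    return changed / (n_repeats * len(rows))


def toy_model(row):
    # Hypothetical classifier: uses inputs 0 and 1 and ignores input 2.
    return int(row[0] + row[1] > 0)


rng = random.Random(0)
rows = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(500)]

single = [influence(toy_model, rows, [i], rng) for i in range(3)]
joint = influence(toy_model, rows, [0, 1], rng)
print("single-input influence:", single)
print("joint influence of inputs 0 and 1:", joint)
```

Because the toy classifier never reads input 2, its estimated influence is exactly zero, while inputs 0 and 1 receive a positive score. A report listing such scores per input, or per set of inputs, is one simple form a transparency report could take.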

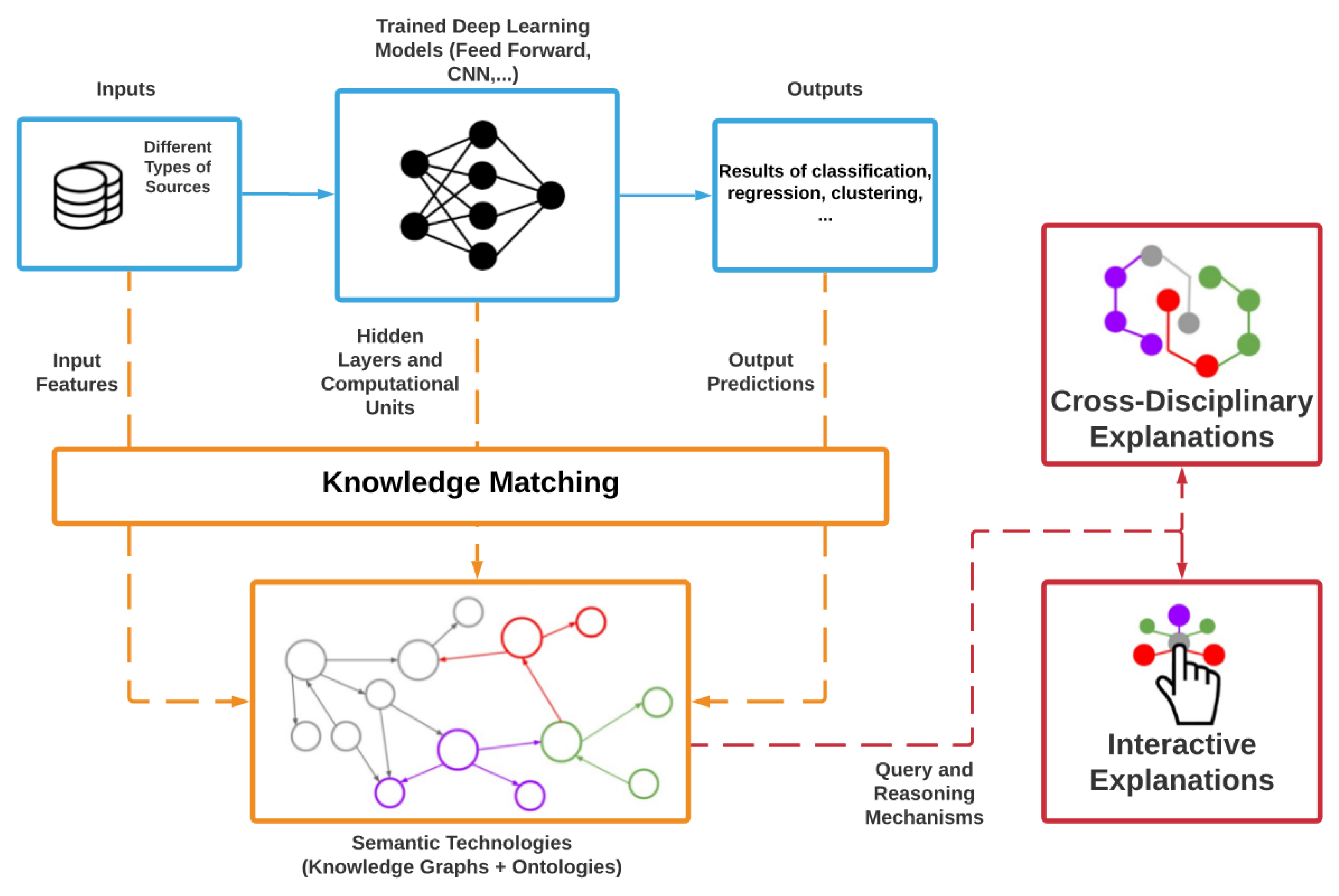
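The two explanation types described above for decomposability can be read directly off a saliency map laid out as features by time steps. The sketch below is a simplified illustration under stated assumptions: it uses plain occlusion (replacing one cell with a baseline value) rather than the method of [35], and the forecaster, the feature names, and the input values are hypothetical.

```python
def occlusion_saliency(model, series, baseline=0.0):
    """Saliency of each (feature, time step) cell, measured as the absolute
    change in the model output when that single cell is set to `baseline`."""
    reference = model(series)
    saliency = []
    for f, channel in enumerate(series):
        row = []
        for t in range(len(channel)):
            occluded = [list(c) for c in series]
            occluded[f][t] = baseline
            row.append(abs(reference - model(occluded)))
        saliency.append(row)
    return saliency


def toy_forecaster(series):
    # Hypothetical model: depends on feature 0 at the last two time steps
    # and on feature 1 at time step 2; feature 2 is ignored.
    return 2.0 * series[0][-1] + 1.0 * series[0][-2] + 0.5 * series[1][2]


feature_names = ["wind_speed", "irradiance", "temperature"]  # hypothetical
n_steps = 6
series = [[1.0] * n_steps for _ in feature_names]

saliency = occlusion_saliency(toy_forecaster, series)

# (i) Which features matter most within a given time interval?
start, end = 4, 6
feature_importance = [sum(row[start:end]) for row in saliency]

# (ii) At which time steps is the joint contribution of all features largest?
time_importance = [sum(row[t] for row in saliency) for t in range(n_steps)]
most_influential_step = max(range(n_steps), key=time_importance.__getitem__)

for name, score in zip(feature_names, feature_importance):
    print(f"{name}: importance in steps {start}..{end - 1} = {score}")
print("joint importance per time step:", time_importance)
print("most influential time step:", most_influential_step)
```

Summing the map along the time axis inside a window answers question (i), and summing it along the feature axis answers question (ii). The same aggregation applies to a saliency map obtained by any other attribution method.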

4. Explanations for Non-Insiders: Three Research Challenges with Symbolic Systems

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Lv, Y.; Duan, Y.; Kang, W.; Li, Z.; Wang, F.Y. Traffic flow prediction with big data: A deep learning approach. IEEE Trans. Intell. Transp. Syst. 2014, 16, 865–873.

2. Ding, X.; Zhang, Y.; Liu, T.; Duan, J. Deep learning for event-driven stock prediction. In Proceedings of the Twenty-Fourth International Joint Conference on Artificial intelligence, Buenos Aires, Argentina, 25–31 July 2015.

3. Mayr, A.; Klambauer, G.; Unterthiner, T.; Hochreiter, S. DeepTox: Toxicity prediction using deep learning. Front. Environ. Sci. 2016, 3, 80.

4. Doran, D.; Schulz, S.; Besold, T.R. What does explainable AI really mean? A new conceptualization of perspectives. arXiv 2017, arXiv:1710.00794.

5. Saradhi, V.V.; Palshikar, G.K. Employee churn prediction. Expert Syst. Appl. 2011, 38, 1999–2006.

6. Kang, H.W.; Kang, H.B. Prediction of crime occurrence from multi-modal data using deep learning. PLoS ONE 2017, 12, e0176244.

7. Al Hasan, M.; Chaoji, V.; Salem, S.; Zaki, M. Link prediction using supervised learning. In Proceedings of the SDM04: Workshop on Link Analysis, Counter-Terrorism and Security, Lake Buena Vista, FL, USA, 24 April 2004.

8. Lee, H.; Yune, S.; Mansouri, M.; Kim, M.; Tajmir, S.H.; Guerrier, C.E.; Ebert, S.A.; Pomerantz, S.R.; Romero, J.M.; Kamalian, S.; et al. An explainable deep-learning algorithm for the detection of acute intracranial haemorrhage from small datasets. Nat. Biomed. Eng. 2019, 3, 173.

9. Ehrlinger, L.; Wöß, W. Towards a Definition of Knowledge Graphs. SEMANTiCS (Posters, Demos, SuCCESS) 2016. Available online: https://2016.semantics.cc/posters-and-demos-madness (accessed on 21 February 2020).

10. Seeliger, A.; Pfaff, M.; Krcmar, H. Semantic Web Technologies for Explainable Machine Learning Models: A Literature Review. PROFILES 2019. Available online: http://ceur-ws.org/Vol-2465/ (accessed on 21 February 2020).

11. Chekol, M.W.; Pirrò, G.; Schoenfisch, J.; Stuckenschmidt, H. Marrying uncertainty and time in knowledge graphs. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

12. Munch, M.; Dibie, J.; Wuillemin, P.H.; Manfredotti, C. Interactive Causal Discovery in Knowledge Graphs. 2019. Available online: http://ceur-ws.org/Vol-2465/ (accessed on 21 February 2020).

13. Gruber, T.R. Toward principles for the design of ontologies used for knowledge sharing? Int. J. Hum.-Comput. Stud. 1995, 43, 907–928.

14. Dumontier, M.; Callahan, A.; Cruz-Toledo, J.; Ansell, P.; Emonet, V.; Belleau, F.; Droit, A. Bio2RDF release 3: A larger connected network of linked data for the life sciences. In Proceedings of the 2014 International Conference on Posters & Demonstrations Track, Riva del Garda, Italy, 21 October 2014; Volume 1272, pp. 401–404.

15. Banerjee, S. A Semantic Web Based Ontology in the Financial Domain. In Proceedings of World Academy of Science, Engineering and Technology; World Academy of Science, Engineering and Technology (WASET): Paris, France, 2013; Number 78; p. 1663.

16. Casanovas, P.; Palmirani, M.; Peroni, S.; van Engers, T.; Vitali, F. Semantic web for the legal domain: The next step. Semant. Web 2016, 7, 213–227.

17. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

18. Rumelhart, D.E. Parallel distributed processing: Explorations in the microstructure of cognition. Learn. Intern. Represent. Error Propag. 1986, 1, 318–362.

19. Hinton, G.E.; McClelland, J.L.; Rumelhart, D.E. Distributed Representations; Carnegie-Mellon University: Pittsburgh, PA, USA, 1984.

20. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

21. Koh, P.W.; Liang, P. Understanding black-box predictions via influence functions. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 7–9 August 2017; Volume 70, pp. 1885–1894.

22. Lecue, F. On the Role of Knowledge Graphs in Explainable AI. Semantic Web Journal (Forthcoming). 2019. Available online: http://www.semantic-web-journal.net/content/role-knowledge-graphs-explainable-ai (accessed on 21 February 2020).

23. Haugeland, J. Artificial Intelligence: The Very Idea; MIT Press: Cambridge, MA, USA, 1989.

24. Heath, T.; Bizer, C. Linked data: Evolving the web into a global data space. Synth. Lect. Semant. Web Theory Technol. 2011, 1, 1–136.

25. Hoehndorf, R.; Queralt-Rosinach, N. Data science and symbolic AI: Synergies, challenges and opportunities. Data Sci. 2017, 1, 27–38.

26. Adadi, A.; Berrada, M. Peeking inside the black-box: A survey on Explainable Artificial Intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

27. Miller, T.; Howe, P.; Sonenberg, L. Explainable AI: Beware of inmates running the asylum or: How I learnt to stop worrying and love the social and behavioural sciences. arXiv 2017, arXiv:1712.00547.

28. Mittelstadt, B.; Russell, C.; Wachter, S. Explaining explanations in AI. In Proceedings of the Conference on Fairness, Accountability, and Transparency, Atlanta, GA, USA, 29–31 January 2019; ACM: New York, NY, USA, 2019; pp. 279–288.

29. Hall, P.; Gill, N. An Introduction to Machine Learning Interpretability-Dataiku Version; O’Reilly Media, Incorporated: Sebastopol, CA, USA, 2018.

30. Johansson, U.; König, R.; Niklasson, L. The Truth is In There-Rule Extraction from Opaque Models Using Genetic Programming. In Proceedings of the International Florida Artificial Intelligence Research Society Conference, FLAIRS 2004, Miami Beach, FL, USA, 17–19 May 2004; pp. 658–663.

31. Sadowski, P.; Collado, J.; Whiteson, D.; Baldi, P. Deep learning, dark knowledge, and dark matter. In Proceedings of the NIPS 2014 Workshop on High-energy Physics and Machine Learning, Montreal, QC, Canada, 13 December 2015; pp. 81–87.

32. Breiman, L. Statistical modeling: The two cultures (with comments and a rejoinder by the author). Stat. Sci. 2001, 16, 199–231.

33. Lepri, B.; Oliver, N.; Letouzé, E.; Pentland, A.; Vinck, P. Fair, transparent, and accountable algorithmic decision-making processes. Philos. Technol. 2018, 31, 611–627.

34. Friedler, S.A.; Roy, C.D.; Scheidegger, C.; Slack, D. Assessing the local interpretability of machine learning models. arXiv 2019, arXiv:1902.03501.

35. Assaf, R.; Schumann, A. Explainable deep neural networks for multivariate time series predictions. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 6488–6490.

36. Adebayo, J.; Gilmer, J.; Muelly, M.; Goodfellow, I.; Hardt, M.; Kim, B. Sanity checks for saliency maps. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 9505–9515.

37. Datta, A.; Sen, S.; Zick, Y. Algorithmic transparency via quantitative input influence: Theory and experiments with learning systems. In Proceedings of the 2016 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2016; pp. 598–617.

38. Lipton, Z.C. The mythos of model interpretability. arXiv 2016, arXiv:1606.03490.

39. Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; ACM: New York, NY, USA, 2016; pp. 1135–1144.

40. Tamagnini, P.; Krause, J.; Dasgupta, A.; Bertini, E. Interpreting black-box classifiers using instance-level visual explanations. In Proceedings of the 2nd Workshop on Human-In-the-Loop Data Analytics, Chicago, IL, USA, 14 May 2017; ACM: New York, NY, USA, 2017; p. 6.

41. Adler, P.; Falk, C.; Friedler, S.A.; Rybeck, G.; Scheidegger, C.; Smith, B.; Venkatasubramanian, S. Auditing black-box models by obscuring features. arXiv 2016, arXiv:1602.07043.

42. Kim, B.; Rudin, C.; Shah, J.A. The bayesian case model: A generative approach for case-based reasoning and prototype classification. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 1952–1960.

43. Sarker, M.K.; Xie, N.; Doran, D.; Raymer, M.; Hitzler, P. Explaining trained neural networks with semantic web technologies: First steps. arXiv 2017, arXiv:1710.04324.

44. Angelov, P.; Soares, E. Towards Explainable Deep Neural Networks (xDNN). arXiv 2019, arXiv:1912.02523.

45. Selvaraju, R.R.; Chattopadhyay, P.; Elhoseiny, M.; Sharma, T.; Batra, D.; Parikh, D.; Lee, S. Choose Your Neuron: Incorporating Domain Knowledge through Neuron-Importance. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 526–541.

46. Batet, M.; Valls, A.; Gibert, K.; Sánchez, D. Semantic Clustering Using Multiple Ontologies. In Proceedings of the Catalan Conference on AI (CCIA), Tarragona, Spain, 20–22 October 2010; pp. 207–216.

47. Miller, G.A. WordNet: A lexical database for English. Commun. ACM 1995, 38, 39–41.

48. Geng, Y.; Chen, J.; Jimenez-Ruiz, E.; Chen, H. Human-centric transfer learning explanation via knowledge graph. arXiv 2019, arXiv:1901.08547.

49. Wilcke, X.; Bloem, P.; de Boer, V. The Knowledge Graph as the Default Data Model for Machine Learning; IOS Press: Amsterdam, The Netherlands, 2017.

50. Sopchoke, S.; Fukui, K.i.; Numao, M. Explainable cross-domain recommendations through relational learning. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

51. Wang, P.; Wu, Q.; Shen, C.; Hengel, A.V.; Dick, A. Explicit knowledge-based reasoning for visual question answering. arXiv 2015, arXiv:1511.02570.

52. Lehmann, J.; Isele, R.; Jakob, M.; Jentzsch, A.; Kontokostas, D.; Mendes, P.N.; Hellmann, S.; Morsey, M.; Van Kleef, P.; Auer, S.; et al. DBpedia–a large-scale, multilingual knowledge base extracted from Wikipedia. Semant. Web 2015, 6, 167–195.

53. Liao, L.; He, X.; Zhao, B.; Ngo, C.W.; Chua, T.S. Interpretable multimodal retrieval for fashion products. In Proceedings of the 2018 ACM Multimedia Conference on Multimedia Conference, Seoul, Korea, 22–26 October 2018; pp. 1571–1579.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Futia, G.; Vetrò, A. On the Integration of Knowledge Graphs into Deep Learning Models for a More Comprehensible AI—Three Challenges for Future Research. Information 2020, 11, 122. https://doi.org/10.3390/info11020122
