Bots as Active News Promoters: A Digital Analysis of COVID-19 Tweets

Abstract

1. Introduction

2. Literature Review

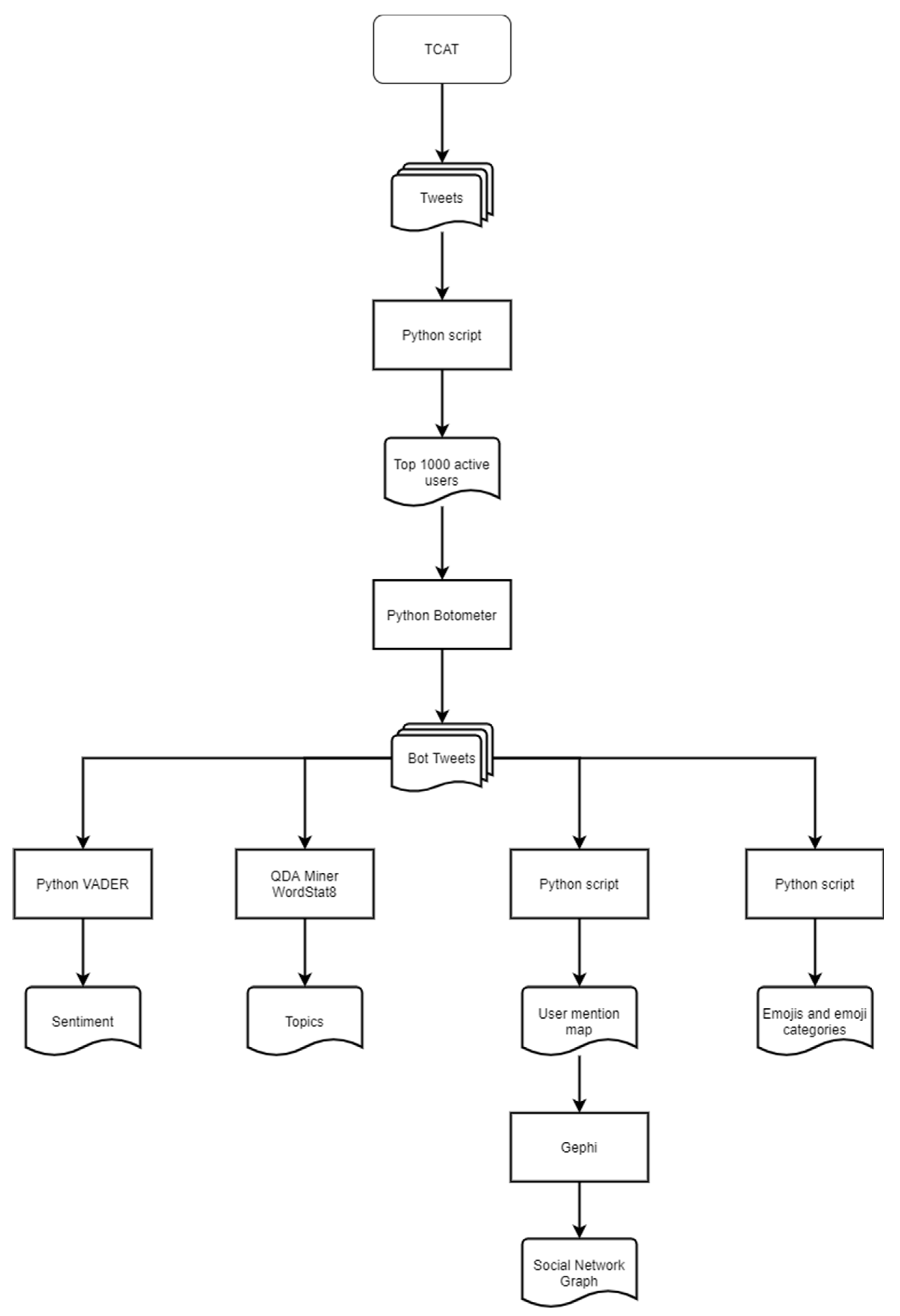

3. Methods

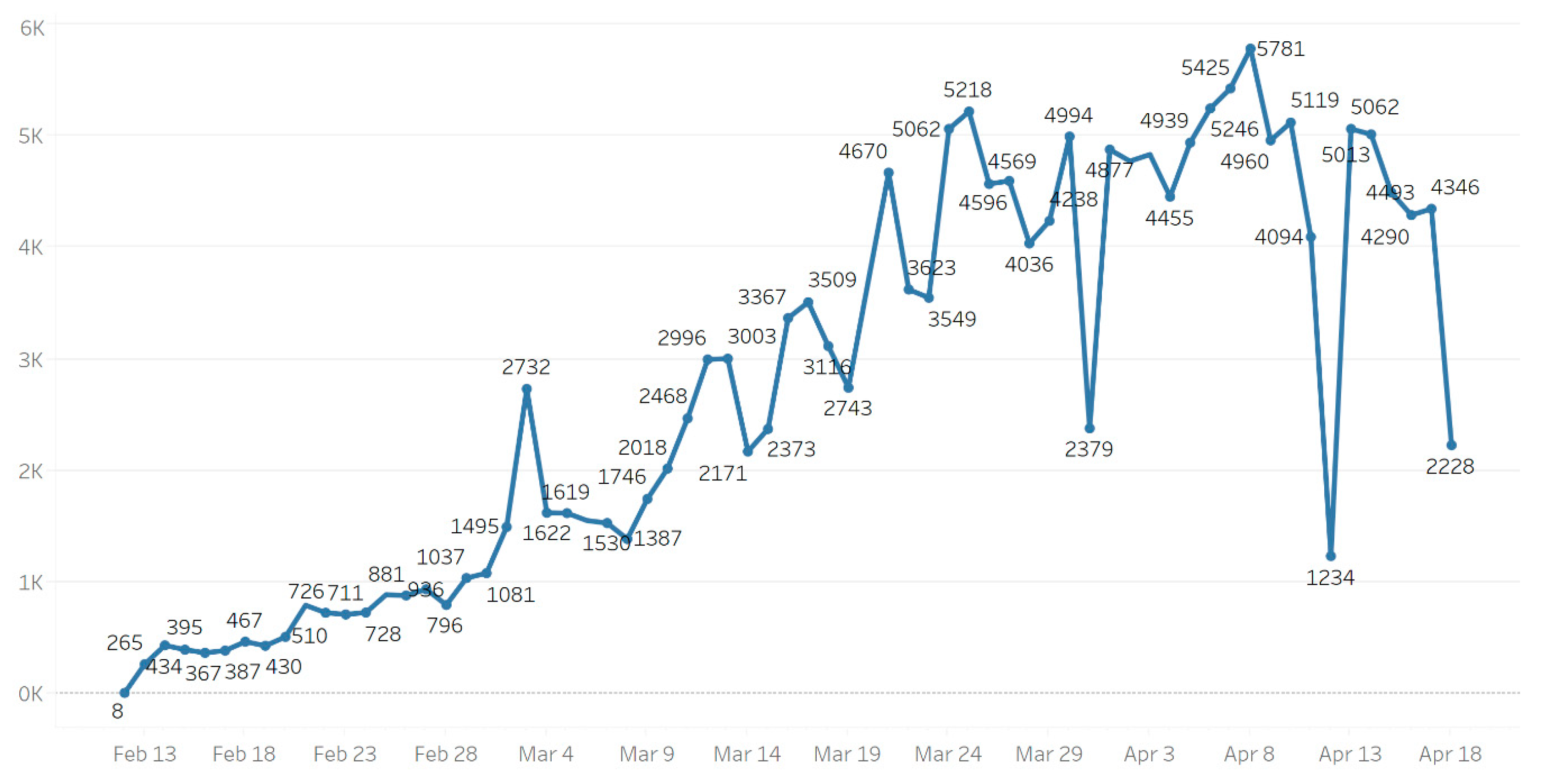

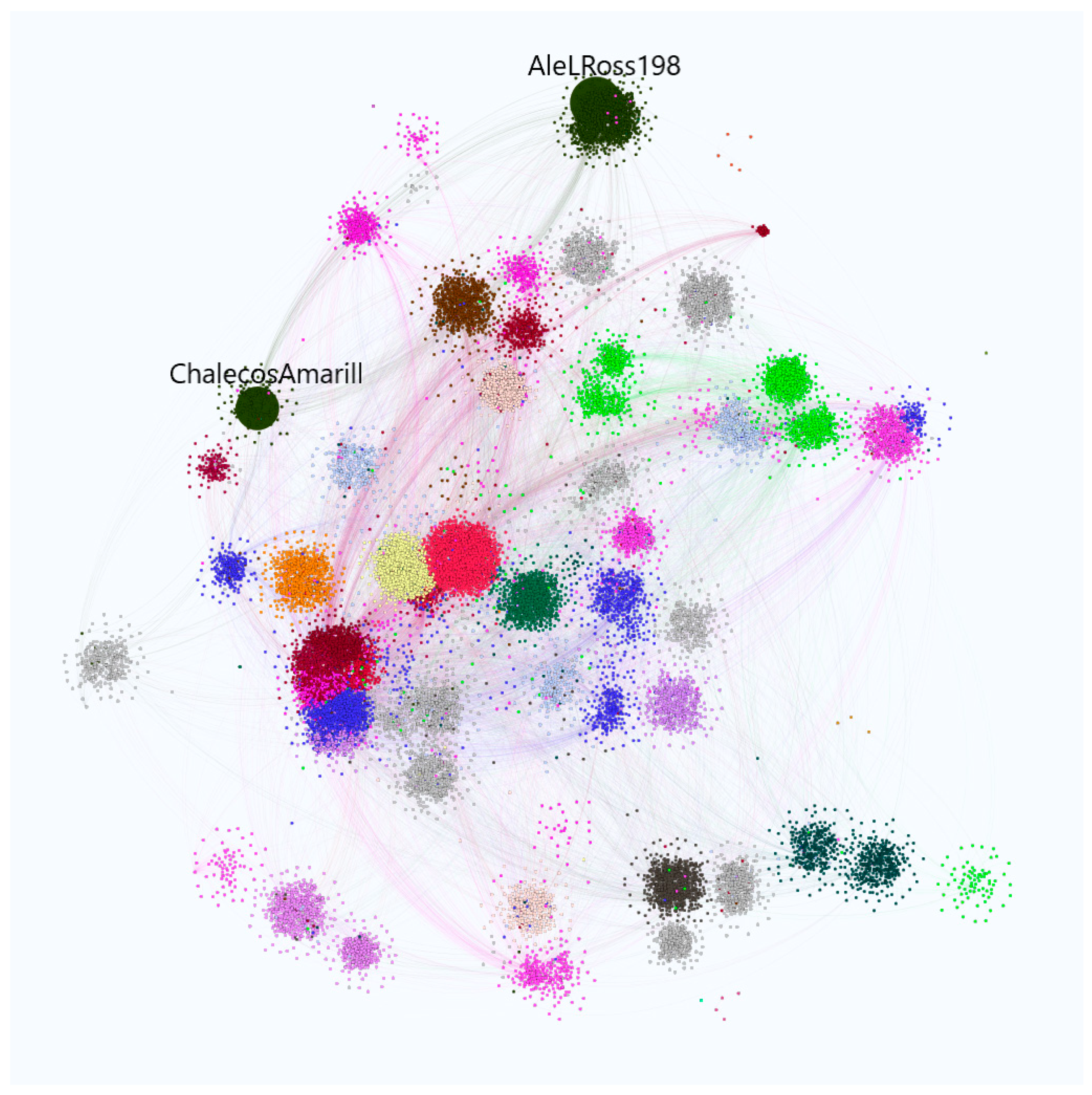

4. Results

(confirmed cases, n = 8529), (deaths, n = 4773), (recovered, n = 3685), and (virus, n = 7932), as well as other related emojis such as (virus, n = 4181), (face mask, n = 2076), (death, n = 3278), and (SOS, n = 1135). Emoji sequences offer further insight into the meaning these information items convey. For example, country flag emojis make up 17 of the 20 most recurrent sequences, reflecting ongoing news updates on the numbers of COVID-19 patients, deaths, and recoveries (see Table 2). We also find other emoji sequences such as (disseminating news on the virus, n = 678), (disseminating videos on the virus, n = 666), (n = 110), (please wash your hands with soap and water, n = 39), and (sick people around the world, n = 12). In terms of emoji categorization, symbols are the most frequent main category, followed by smileys and people; travel, places, and flags; objects; activities; food and drink; and animals and nature. In addition, the top 20 emoji subcategories include important cues such as warning, sick face, and negative face emojis (Table 3). Finally, we identified 3232 English-language tweets posted by Twitter users that referenced bots.
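The counts above rest on two operations: tallying individual emojis and tallying emoji sequences. The sketch below shows one way to do both with the Python standard library. It is not the authors' pipeline. It assumes that a "sequence" is a maximal run of adjacent emoji characters within a tweet, and it approximates emoji detection with a few hypothetical Unicode block ranges (`EMOJI_RANGES`) rather than the full Unicode emoji data.

```python
from collections import Counter

# Hypothetical, simplified emoji test: only a few common Unicode blocks are
# checked. A production pipeline should use the official Unicode emoji data.
EMOJI_RANGES = [
    (0x1F000, 0x1FAFF),  # pictographs, emoticons, regional indicators, etc.
    (0x2600, 0x27BF),    # miscellaneous symbols and dingbats
]
JOINERS = {0x200D, 0xFE0F}  # zero-width joiner, variation selector-16


def is_emoji_char(ch: str) -> bool:
    """True if ch falls in an assumed emoji range or is a joiner."""
    cp = ord(ch)
    return cp in JOINERS or any(lo <= cp <= hi for lo, hi in EMOJI_RANGES)


def emoji_runs(text: str) -> list:
    """Split text into maximal runs of adjacent emoji characters."""
    runs, current = [], []
    for ch in text:
        if is_emoji_char(ch):
            current.append(ch)
        elif current:
            runs.append("".join(current))
            current = []
    if current:
        runs.append("".join(current))
    # Drop runs made only of joiners (e.g. a stray variation selector).
    return [r for r in runs if any(ord(c) not in JOINERS for c in r)]


def count_emojis(tweets):
    """Return (single-emoji counts, emoji-sequence counts) over all tweets."""
    singles, sequences = Counter(), Counter()
    for tweet in tweets:
        for run in emoji_runs(tweet):
            sequences[run] += 1
            singles.update(ch for ch in run if ord(ch) not in JOINERS)
    return singles, sequences


tweets = ["Cases 🦠😷 today", "Wash hands 🧼🙏", "🦠 update 🦠😷"]
singles, sequences = count_emojis(tweets)
print(singles.most_common(2))    # [('🦠', 3), ('😷', 2)]
print(sequences.most_common(1))  # [('🦠😷', 2)]
```

Under this sketch a lone emoji also counts as a sequence of length one, and a flag such as 🇨🇳 is one sequence but two regional-indicator characters in the single-emoji tally, so flags would need separate handling if counted individually.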

5. Discussion

… as well as reminders to wear face masks or wash hands.

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


Table 1. Topics, keywords, and eigenvalues.

| No. | Topic | Keywords | Eigenvalue |
|---|---|---|---|
| 1. | Total cases | Totaling; Place; Worldwide; Confirmed; Case; Recoveries; Coronavirus; Death Confirmed Case; Stay; Country; Home; Update; Cases; Deaths; Active; Total; Recovered Total Deaths; Total Recovered; Active Cases; Total Cases; Stay at Home; Total Active Cases; Confirmed Cases; Total Number; Total Confirmed; Positive Cases | 8.09 |
| 2. | Covid news | Bajucovid; Coronaviruscovid; Indonesiabebascovid; Fightingcovid; Memes; Testing; Italia; India; News | 7.22 |
| 3. | Prepper bushcraft | Prepper; Bushcraft; Survival; Follow; Coronavirusoutbreak; Corona; Stayhomesavelives | 6.76 |
| 4. | Stay at home | CMSTU; Protectyourselfandyourfamily; JPTU; Prayformalaysia; Sayangimalaysiaku; Stayathome; Dudukrumah; Jabatanpenerangan Allahpeliharakanlahterengganu; Washyourhands; Staysafe; Stayhome; Stayathome | 6.42 |

Table 2. Top 20 most recurrent emoji sequences. Country flag emojis are shown as two-letter region codes.

| No. | Emoji Sequence | Count |
|---|---|---|
| 1. | CN | 4029 |
| 2. | US | 3803 |
| 3. | ES | 1950 |
| 4. | MY | 1726 |
| 5. | IT | 1572 |
| 6. | CU | 1487 |
| 7. | DE | 1102 |
| 8. | IR | 1099 |
| 9. |  | 913 |
| 10. | FR | 911 |
| 11. | CA | 904 |
| 12. | BR | 883 |
| 13. | GB | 734 |
| 14. |  | 678 |
| 15. | EC | 675 |
| 16. |  | 666 |
| 17. | KR | 598 |
| 18. | MX | 584 |
| 19. | AR | 517 |
| 20. | NO | 508 |
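Table 2 lists flag sequences by two-letter codes (CN, US, ES, and so on). This follows from how flag emojis are encoded: each flag is a pair of Unicode regional indicator symbols (U+1F1E6 to U+1F1FF), one per letter of the region code. The helpers below (`flag_to_code` and `code_to_flag`, both hypothetical names) are a minimal sketch of that mapping, assuming a candidate sequence has already been isolated from the tweet text.

```python
from typing import Optional

# Regional indicator symbols U+1F1E6..U+1F1FF stand for the letters A..Z;
# a flag emoji is a pair of them (e.g. C + N renders as the flag of China).
REGIONAL_A = 0x1F1E6
REGIONAL_Z = 0x1F1FF


def flag_to_code(seq: str) -> Optional[str]:
    """Return the two-letter code for a flag emoji, or None if seq is not one."""
    if len(seq) != 2:
        return None
    letters = []
    for ch in seq:
        cp = ord(ch)
        if not REGIONAL_A <= cp <= REGIONAL_Z:
            return None
        letters.append(chr(cp - REGIONAL_A + ord("A")))
    return "".join(letters)


def code_to_flag(code: str) -> str:
    """Build the flag emoji for a two-letter code such as 'CN'."""
    code = code.upper()
    if len(code) != 2 or not all("A" <= c <= "Z" for c in code):
        raise ValueError(f"expected two letters A-Z, got {code!r}")
    return "".join(chr(REGIONAL_A + ord(c) - ord("A")) for c in code)


for code in ["CN", "US", "ES"]:
    flag = code_to_flag(code)
    print(code, flag, flag_to_code(flag))
```

The functions only check the character ranges; whether a given letter pair is an assigned region that platforms actually render as a flag is not validated here.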

Table 3. Emoji main categories and top 20 emoji subcategories.

| No. | Main Category | Count | No. | Subcategory | Count |
|---|---|---|---|---|---|
| 1. | symbols | 46,048 | 1. | arrow | 20,145 |
| 2. | smileys and people | 22,972 | 2. | warning | 9991 |
| 3. | travel, places, and flags | 13,378 | 3. | geometric | 9238 |
| 4. | objects | 6633 | 4. | body | 8592 |
| 5. | activities | 339 | 5. | place map | 5332 |
| 6. | food and drink | 300 | 6. | sky weather | 4306 |
| 7. | animals and nature | 149 | 7. | other symbol | 3477 |
| | | | 8. | face fantasy | 3363 |
| | | | 9. | emotion | 3212 |
| | | | 10. | transport ground | 2492 |
| | | | 11. | sick face | 2244 |
| | | | 12. | face neutral | 1821 |
| | | | 13. | light and video | 1753 |
| | | | 14. | alphanum | 1638 |
| | | | 15. | book paper | 1352 |
| | | | 16. | av symbol | 1295 |
| | | | 17. | office | 1269 |
| | | | 18. | face negative | 1168 |
| | | | 19. | place building | 764 |
| | | | 20. | face positive | 717 |
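The ranking of main categories in Table 3 can be put in proportion by converting each count into a share. The sketch below uses the counts copied from Table 3; it assumes the sum of the seven listed main categories is the appropriate denominator, which the table itself does not state.

```python
# Main-category counts copied from Table 3.
MAIN_CATEGORY_COUNTS = {
    "symbols": 46048,
    "smileys and people": 22972,
    "travel, places, and flags": 13378,
    "objects": 6633,
    "activities": 339,
    "food and drink": 300,
    "animals and nature": 149,
}


def category_shares(counts):
    """Return the total and (name, count, percent) tuples sorted by count."""
    total = sum(counts.values())
    ranked = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)
    return total, [(name, n, 100 * n / total) for name, n in ranked]


total, shares = category_shares(MAIN_CATEGORY_COUNTS)
print(f"total: {total:,}")  # total: 89,819
for name, n, pct in shares:
    print(f"{name:<28}{n:>7,}{pct:>7.1f}%")
```

On this basis, symbols account for just over half (about 51.3%) of the 89,819 emoji occurrences across the seven main categories, while activities, food and drink, and animals and nature together make up less than 1%.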

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Al-Rawi, A.; Shukla, V. Bots as Active News Promoters: A Digital Analysis of COVID-19 Tweets. Information 2020, 11, 461. https://doi.org/10.3390/info11100461
