Information Usage Behavior and Importance: Korean Scientist and Engineer Users of a Personalized Recommendation Service

Abstract

1. Introduction

1.1. Background and Purpose of the Research

1.2. Objective of the Research

2. Theoretical Framework

2.1. Existing Literature

2.1.1. Information Behavior of Scientists and Engineers

2.1.2. The PRS and Relevant Studies

2.1.3. Satisfaction Rate of Information Service Users

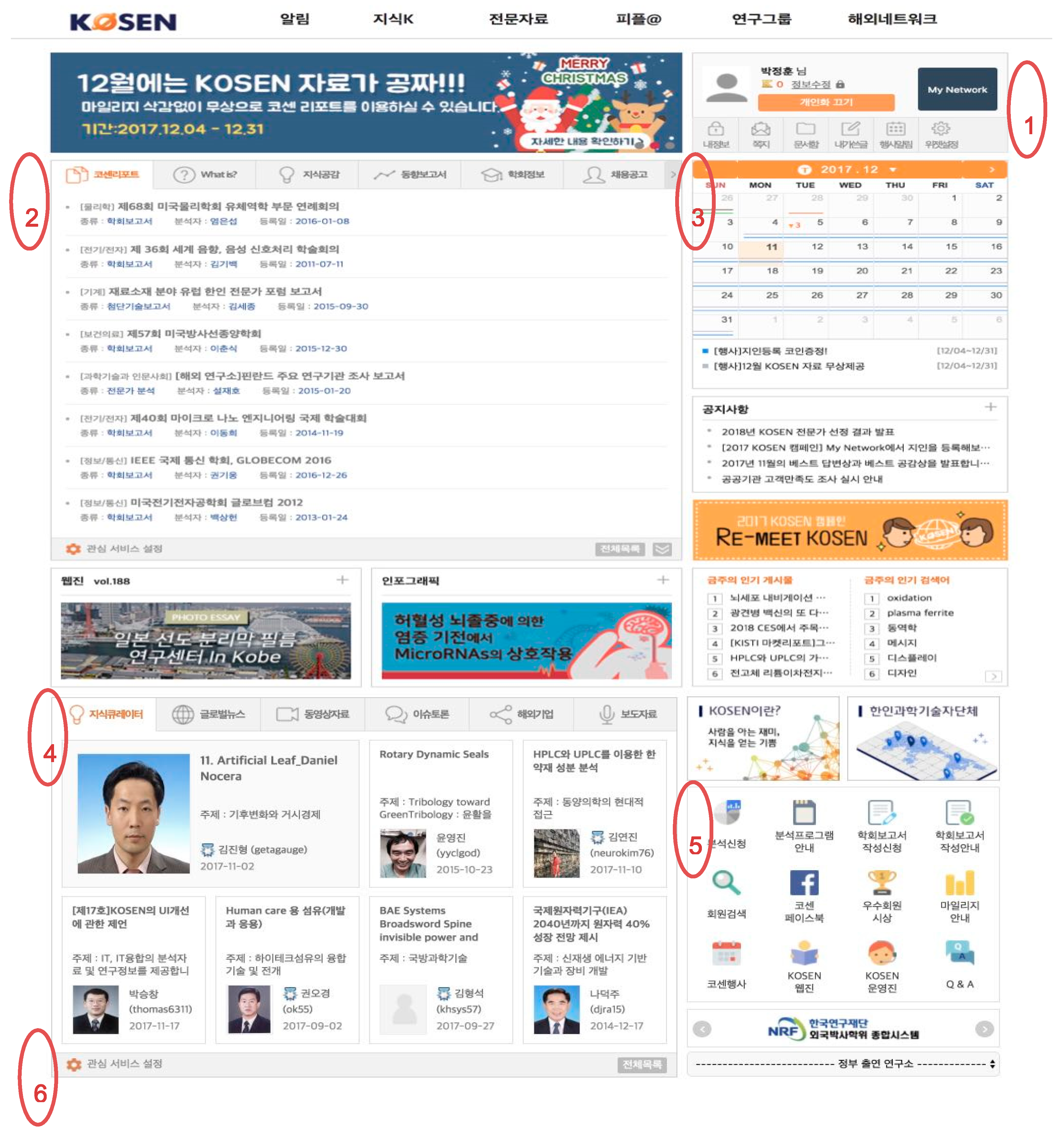

2.2. Overview of KOSEN and PRS

2.2.1. Overview of KOSEN

2.2.2. PRS of KOSEN

2.2.3. PRS Item Introduction

3. Research and Analysis of User’s Information Usage Behavior

3.1. Design of the Research Instrument

3.2. Research Subjects and Methodology

3.2.1. Factorial and Reliability Analysis of the Measurement Instrument

3.2.2. Basic Statistical Analysis

3.2.3. Analysis of PRS Usage Behavior According to Demographic Characteristics

3.2.4. Demographic Analysis of the Satisfaction Rate for System, Content, and Service Support

3.2.5. Analysis of the Importance in Determining Factors in the Three Quality Areas of the PRS and the Interactivity of its Components

3.2.6. Pearson’s Correlation Analysis for Examining the Correlation among the PRS Components

4. Implications

5. Conclusions

5.1. Proposals to Improve the PRS

5.2. Limitations of the Present Study and Future Research

Author Contributions

Funding

Conflicts of Interest

Appendix A—Survey

| Item | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| KOSEN’s PRS uses an easy-to-view design (color, icon, image, layout, etc.) | ① | ② | ③ | ④ | ⑤ |
| PRS information is displayed in a way that is easy to understand | ① | ② | ③ | ④ | ⑤ |
| Finding the information I’m looking for and related information (navigation) is easy | ① | ② | ③ | ④ | ⑤ |

| Item | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| The KOSEN site connection and recommendation speed are fast | ① | ② | ③ | ④ | ⑤ |
| I can download the information I’m looking for | ① | ② | ③ | ④ | ⑤ |

| Item | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| KOSEN PRS is available on PC and mobile | ① | ② | ③ | ④ | ⑤ |
| Dead links are checked, fixed, and maintained by site administration | ① | ② | ③ | ④ | ⑤ |
| PRS is available without using a separate device | ① | ② | ③ | ④ | ⑤ |

| Item | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| Information related to my field or me is provided | ① | ② | ③ | ④ | ⑤ |
| The information provided by the recommendation service is reliable | ① | ② | ③ | ④ | ⑤ |
| PRS presents up-to-date information | ① | ② | ③ | ④ | ⑤ |

| Item | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| The information provided by PRS is comprehensive | ① | ② | ③ | ④ | ⑤ |
| PRS provides information in various formats (PDF, MS Office, etc.) | ① | ② | ③ | ④ | ⑤ |

| Item | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| The information provided by the recommendation service is useful for my learning/research | ① | ② | ③ | ④ | ⑤ |
| I have used the information provided by the recommendation service in my thesis, assignment, experiment, etc. | ① | ② | ③ | ④ | ⑤ |

| Item | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| Support for using the recommendation service is provided (tips, FAQs, Q&A, etc.) | ① | ② | ③ | ④ | ⑤ |
| Staff are available to provide professional support for the recommendation service | ① | ② | ③ | ④ | ⑤ |

| Item | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| Instruction and instructional materials on the recommendation service are provided | ① | ② | ③ | ④ | ⑤ |
| Information on the recommendation service is provided via email or online inquiries | ① | ② | ③ | ④ | ⑤ |

| Item | Not Very Important | Not Important | Neutral | Important | Very Important |
|---|---|---|---|---|---|
| System (homepage) design, usability, accessibility | ① | ② | ③ | ④ | ⑤ |
| The suitability, completeness, and usefulness of the featured content | ① | ② | ③ | ④ | ⑤ |
| Service support (user support, interactivity) | ① | ② | ③ | ④ | ⑤ |

| Measured Areas | Measured Indicators (Category) | Measured Indicators (Items) | No. of Questions | Remarks |
|---|---|---|---|---|
| User characteristics | 1. Demographic characteristics | 1) Age 2) Sex 3) Educational attainment 4) Major 5) Occupation | 5 | Kang (2008), Nam (2010) |
| | 2. Usage behavior of personalized recommendation service | 1) Whether to use personalized recommendation service 2) Reason for non-use 3) Primary purpose of using it 4) Number of visits 5) Service usage time | 5 | Kang (2008), Nam (2010) |
| Personalized recommendation service quality | 1. System (website) | 1) Design 2) Ease of use 3) Accessibility | 3 | Kang (2008) |
| | 2. Content | 1) Adequacy 2) Sufficiency 3) Utility | 3 | Nam (2010), Lee (2017) |
| | 3. Service support | 1) User support 2) Interactivity | 2 | Kang (2008), Nam (2010) |
| Personalized recommendation service importance | 1. Importance for each personalized recommendation service area | | 1 | Nam (2010) |

| Area and Number of Items | Factor | Cronbach’s Alpha |
|---|---|---|
| System (3) | Ease of use | 0.750 |
| | Design | 0.863 |
| | Accessibility | 0.765 |
| Content (3) | Sufficiency | 0.794 |
| | Adequacy | 0.744 |
| | Usefulness | 1.000 |
| Service support (2) | User support | 0.861 |
| | Interactivity | 0.810 |
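
For readers who want to see how a reliability coefficient of this kind is obtained, the following is a minimal sketch of Cronbach's alpha in Python. The response data and the name `design_items` are hypothetical and purely illustrative; the study's raw item responses are not available here, so the sketch does not reproduce the values in the table.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for one factor.

    items: list of per-item score lists, one score per respondent,
    all lists of the same length.
    """
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Total score of each respondent across the items of the factor.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical 5-point responses from six respondents to a three-item factor.
design_items = [
    [4, 3, 5, 2, 4, 3],
    [4, 2, 5, 3, 4, 3],
    [5, 3, 4, 2, 4, 2],
]
alpha = cronbach_alpha(design_items)
print(f"alpha = {alpha:.3f}")
```

Under this formula, alpha approaches 1 as respondents answer the items of a factor more consistently, and equals exactly 1 when the items are perfectly correlated with equal variances.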

| Variable | N | Min | Max | Mean | Standard Deviation | Skewness | Kurtosis |
|---|---|---|---|---|---|---|---|
| Ease of use | 192 | 1 | 5 | 3.72 | 0.786 | −0.378 | 0.675 |
| Design | 192 | 1 | 5 | 3.58 | 0.828 | −0.252 | 0.155 |
| Accessibility | 192 | 1 | 5 | 3.54 | 0.734 | 0.157 | 0.319 |
| Sufficiency | 192 | 1 | 5 | 3.71 | 0.768 | −0.366 | 0.705 |
| Adequacy | 192 | 1 | 5 | 3.85 | 0.739 | −0.471 | 0.518 |
| Utility | 192 | 1 | 5 | 3.51 | 1.008 | −0.309 | −0.313 |
| User support | 192 | 1 | 5 | 3.41 | 0.818 | 0.028 | −0.190 |
| Interactivity | 192 | 1 | 5 | 3.60 | 0.766 | −0.196 | 0.244 |
| Importance | 192 | 1 | 5 | 3.92 | 0.712 | −0.203 | −0.627 |

| Variables | Items | Frequency | % |
|---|---|---|---|
| Age | 20s | 14 | 7.3 |
| | 30s | 60 | 31.3 |
| | 40s | 70 | 36.5 |
| | 50s | 34 | 17.7 |
| | 60s and over | 14 | 7.3 |
| Gender | Male | 168 | 87.5 |
| | Female | 24 | 12.5 |
| Highest level of education | Bachelor’s degree | 35 | 18.2 |
| | Master’s degree | 53 | 27.6 |
| | Doctoral degree | 104 | 54.2 |
| Major | Construction/Transportation | 5 | 2.6 |
| | Science and technology, Humanities, and social science | 10 | 5.2 |
| | Mechanical engineering | 15 | 7.8 |
| | Food, agriculture, forestry, and fisheries | 4 | 2.1 |
| | Brain science | 0 | 0.0 |
| | Physics | 4 | 2.1 |
| | Health care | 16 | 8.3 |
| | Life science | 43 | 22.4 |
| | Mathematics | 1 | 0.5 |
| | Energy/Resources | 4 | 2.1 |
| | Atomic energy | 0 | 0.0 |
| | Cognitive/Emotional science | 3 | 1.6 |
| | Material engineering | 18 | 9.4 |
| | Electrical/Electronic engineering | 12 | 6.3 |
| | Information/Communication | 15 | 7.8 |
| | Earth science | 1 | 0.5 |
| | Chemical engineering | 13 | 6.8 |
| | Chemistry | 12 | 6.3 |
| | Environmental engineering | 16 | 8.3 |
| Occupation | Researcher | 80 | 41.7 |
| | Student | 16 | 8.3 |
| | Professor | 19 | 9.9 |
| | Company employee | 59 | 30.7 |
| | Others | 18 | 9.4 |

| Variable | Item | Frequency | % |
|---|---|---|---|
| Whether the user uses KOSEN’s personalized recommendation service | Yes | 192 | 37.4 |
| | No | 321 | 62.6 |
| The reason for non-use of personalized recommendation service | Do not know how to use it | 258 | 80.6 |
| | Difficult to use | 8 | 2.5 |
| | Unnecessary | 35 | 10.9 |
| | Recommendation result is not adequate | 5 | 1.6 |
| | Others | 14 | 4.4 |
| The primary reason for using personalized recommendation service | Information accessibility | 98 | 51.0 |
| | Adequate information | 46 | 24.0 |
| | Useful information | 18 | 9.4 |
| | Variety of information provided | 28 | 14.6 |
| | Others | 2 | 1.0 |
| Number of visits to KOSEN service | 1–7 times a week | 123 | 64.1 |
| | 1–7 times a month | 50 | 26.0 |
| | 1–10 times a year | 19 | 9.9 |
| Service usage time | Less than 1 h | 158 | 82.3 |
| | 1–2 h | 31 | 16.1 |
| | 3 h or longer | 3 | 1.6 |

| Variable | Male Mean | Male SD | Female Mean | Female SD | t | p |
|---|---|---|---|---|---|---|
| Ease of use | 3.76 | 0.781 | 3.44 | 0.771 | 1.923 | 0.056 |
| Design | 3.61 | 0.851 | 3.33 | 0.602 | 1.555 | 0.122 |
| Accessibility | 3.58 | 0.755 | 3.29 | 0.509 | 1.813 | 0.071 |
| Sufficiency | 3.74 | 0.787 | 3.50 | 0.590 | 1.407 | 0.161 |
| Adequacy | 3.88 | 0.751 | 3.63 | 0.612 | 1.575 | 0.117 |
| Utility | 3.54 | 0.996 | 3.29 | 1.083 | 1.110 | 0.268 |
| User support | 3.45 | 0.832 | 3.15 | 0.667 | 1.693 | 0.092 |
| Interactivity | 3.62 | 0.780 | 3.46 | 0.658 | 0.944 | 0.346 |
| Importance | 3.95 | 0.697 | 3.75 | 0.800 | 1.267 | 0.207 |
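
The t values in this comparison can be approximated from the summary statistics alone. The sketch below assumes an equal-variance (pooled) independent-samples t-test and takes the group sizes (168 male, 24 female) from the demographic table; whether the authors used the pooled or the Welch variant is not stated, so this is an illustration rather than a reconstruction of their procedure. The helper name `pooled_t` is hypothetical.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t for two independent groups, assuming equal variances."""
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error


# "Design" row: male vs. female means and standard deviations.
t_design = pooled_t(3.61, 0.851, 168, 3.33, 0.602, 24)
print(f"t (Design) = {t_design:.3f}")
```

For the Design row this lands close to the reported 1.555. Other rows can differ slightly in the second decimal because the published means and standard deviations are rounded.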

| Variable | Items | Mean | Standard Deviation | F | p | Post Hoc Test |
|---|---|---|---|---|---|---|
| Ease of use | Researcher (a) | 3.59 | 0.856 | 2.966 | 0.021 * | d > b |
| | Student (b) | 3.38 | 0.866 | | | |
| | Professor (c) | 3.79 | 0.652 | | | |
| | Company employee (d) | 3.97 | 0.662 | | | |
| | Others (e) | 3.78 | 0.712 | | | |
| Design | Researcher (a) | 3.41 | 0.860 | 3.557 | 0.008 ** | c > b |
| | Student (b) | 3.16 | 0.908 | | | |
| | Professor (c) | 3.82 | 0.671 | | | |
| | Company employee (d) | 3.79 | 0.794 | | | |
| | Others (e) | 3.75 | 0.600 | | | |
| Accessibility | Researcher (a) | 3.44 | 0.694 | 4.245 | 0.003 ** | d > b |
| | Student (b) | 3.06 | 0.727 | | | |
| | Professor (c) | 3.58 | 0.534 | | | |
| | Company employee (d) | 3.81 | 0.799 | | | |
| | Others (e) | 3.53 | 0.606 | | | |
| Sufficiency | Researcher (a) | 3.50 | 0.868 | 5.045 | 0.001 ** | d > b |
| | Student (b) | 3.41 | 0.664 | | | |
| | Professor (c) | 3.71 | 0.384 | | | |
| | Company employee (d) | 4.02 | 0.707 | | | |
| | Others (e) | 3.86 | 0.479 | | | |
| Adequacy | Researcher (a) | 3.71 | 0.814 | 3.209 | 0.014 * | d > b |
| | Student (b) | 3.53 | 0.826 | | | |
| | Professor (c) | 3.87 | 0.574 | | | |
| | Company employee (d) | 4.08 | 0.651 | | | |
| | Others (e) | 3.94 | 0.511 | | | |
| Utility | Researcher (a) | 3.35 | 0.995 | 2.000 | 0.096 | n/a |
| | Student (b) | 3.50 | 1.033 | | | |
| | Professor (c) | 3.21 | 0.918 | | | |
| | Company employee (d) | 3.76 | 1.088 | | | |
| | Others (e) | 3.67 | 0.686 | | | |
| User support | Researcher (a) | 3.23 | 0.811 | 2.473 | 0.046 * | d > b |
| | Student (b) | 3.28 | 0.547 | | | |
| | Professor (c) | 3.55 | 0.911 | | | |
| | Company employee (d) | 3.64 | 0.824 | | | |
| | Others (e) | 3.44 | 0.784 | | | |
| Interactivity | Researcher (a) | 3.44 | 0.813 | 2.434 | 0.049 * | d > b |
| | Student (b) | 3.47 | 0.694 | | | |
| | Professor (c) | 3.63 | 0.742 | | | |
| | Company employee (d) | 3.83 | 0.717 | | | |
| | Others (e) | 3.61 | 0.654 | | | |
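
As a rough check on how the F values relate to the group means, a one-way ANOVA F statistic can be recomputed from group sizes, means, and standard deviations. The sketch below assumes the occupation group sizes reported in the demographic table (80, 16, 19, 59, 18); the function name `anova_f` is hypothetical, and small deviations from the published F are expected because the inputs are rounded.

```python
def anova_f(groups):
    """One-way ANOVA F statistic from (n, mean, sd) summaries per group."""
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * mean for n, mean, _ in groups) / total_n
    # Between-group and within-group sums of squares.
    ss_between = sum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups)
    ss_within = sum((n - 1) * sd**2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# "Ease of use" by occupation: (n, mean, sd) for groups a to e.
ease_of_use = [
    (80, 3.59, 0.856),  # Researcher (a)
    (16, 3.38, 0.866),  # Student (b)
    (19, 3.79, 0.652),  # Professor (c)
    (59, 3.97, 0.662),  # Company employee (d)
    (18, 3.78, 0.712),  # Others (e)
]
f_ease = anova_f(ease_of_use)
print(f"F (Ease of use) = {f_ease:.3f}")
```

The result is close to the reported 2.966, which suggests that the table's F values follow the standard between-group over within-group mean-square ratio with 4 and 187 degrees of freedom.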

Dependent variable: Importance

| Independent Variable | B | SE | Beta | t | p | VIF | DW | R² | F |
|---|---|---|---|---|---|---|---|---|---|
| (Constant) | 1.963 | 0.236 | 1 | 8.313 | 0.000 | | 2.232 | 0.314 | 28.725 ** (0.000) |
| Ease of use | 0.322 | 0.076 | 0.356 | 4.249 | 0.000 ** | 1.924 | | | |
| Design | 0.247 | 0.074 | 0.288 | 3.341 | 0.001 ** | 2.031 | | | |
| Accessibility | −0.036 | 0.079 | −0.037 | −0.451 | 0.653 | 1.837 | | | |

Dependent variable: Importance

| Independent Variable | B | SE | Beta | t | p | VIF | DW | R² | F |
|---|---|---|---|---|---|---|---|---|---|
| (Constant) | 1.855 | 0.249 | | 7.446 | 0.000 | | 2.296 | 0.276 | 23.886 ** (0.000) |
| Sufficiency | 0.137 | 0.080 | 0.148 | 1.714 | 0.088 | 1.936 | | | |
| Adequacy | 0.357 | 0.084 | 0.371 | 4.257 | 0.000 ** | 1.969 | | | |
| Utility | 0.053 | 0.051 | 0.075 | 1.036 | 0.302 | 1.360 | | | |

Dependent variable: Importance

| Independent Variable | B | SE | Beta | t | p | VIF | DW | R² | F |
|---|---|---|---|---|---|---|---|---|---|
| (Constant) | 2.322 | 0.221 | 1 | 10.498 | 0.000 | | 2.046 | 0.227 | 27.809 ** (0.000) |
| User support | 0.197 | 0.090 | 0.227 | 2.184 | 0.030 * | 2.636 | | | |
| Interactivity | 0.258 | 0.096 | 0.277 | 2.672 | 0.008 ** | 2.636 | | | |
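
With exactly two predictors, the variance inflation factor (VIF) depends only on the correlation between them, which explains why both service-support predictors share the same VIF. The sketch below applies the standard formula VIF = 1 / (1 − r²), taking r = 0.788 for user support and interactivity from the correlation table; the function name `vif_two_predictors` is hypothetical.

```python
def vif_two_predictors(r):
    """VIF shared by both predictors in a two-predictor regression.

    r: Pearson correlation between the two predictors.
    """
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    return 1.0 / (1.0 - r**2)


# Correlation between user support and interactivity.
vif_service_support = vif_two_predictors(0.788)
print(f"VIF = {vif_service_support:.3f}")
```

The value agrees with the reported 2.636 to within the rounding of the published correlation.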

| Variable | Ease of Use | Design | Accessibility | Sufficiency | Adequacy | Utility | User Support | Interactivity | Importance |
|---|---|---|---|---|---|---|---|---|---|
| Ease of use | 1 | | | | | | | | |
| Design | 0.647 ** | 1 | | | | | | | |
| Accessibility | 0.598 ** | 0.626 ** | 1 | | | | | | |
| Sufficiency | 0.615 ** | 0.648 ** | 0.588 ** | 1 | | | | | |
| Adequacy | 0.673 ** | 0.641 ** | 0.587 ** | 0.677 ** | 1 | | | | |
| Utility | 0.452 ** | 0.489 ** | 0.416 ** | 0.464 ** | 0.478 ** | 1 | | | |
| User support | 0.519 ** | 0.574 ** | 0.478 ** | 0.580 ** | 0.633 ** | 0.549 ** | 1 | | |
| Interactivity | 0.554 ** | 0.633 ** | 0.549 ** | 0.623 ** | 0.704 ** | 0.578 ** | 0.788 ** | 1 | |
| Importance | 0.520 ** | 0.495 ** | 0.356 ** | 0.434 ** | 0.507 ** | 0.321 ** | 0.445 ** | 0.456 ** | 1 |
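
To illustrate why even the smallest coefficient in this matrix is flagged as significant, a Pearson correlation can be converted to a t statistic with n − 2 degrees of freedom. The sketch below assumes n = 192 for every pair, as in the descriptive statistics table; the function name `correlation_t` is hypothetical.

```python
import math


def correlation_t(r, n):
    """t statistic for testing whether a Pearson correlation differs from zero."""
    if n < 3 or not -1 < r < 1:
        raise ValueError("need n >= 3 and -1 < r < 1")
    return r * math.sqrt(n - 2) / math.sqrt(1 - r**2)


# Weakest correlation in the matrix: Utility vs. Importance.
t_weakest = correlation_t(0.321, 192)
print(f"t = {t_weakest:.2f} with {192 - 2} degrees of freedom")
```

The resulting t is well above the two-tailed critical value of roughly 2.6 at the 0.01 level for 190 degrees of freedom, consistent with the double-asterisk markers on all coefficients.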

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Park, J.-H.; Park, S.E.; Choi, H.-S.; Hwang, Y.-Y. Information Usage Behavior and Importance: Korean Scientist and Engineer Users of a Personalized Recommendation Service. Information 2019, 10, 181. https://doi.org/10.3390/info10050181
