Abstract

To address the problem of objectively obtaining the threshold of a user’s cognitive load in a virtual reality interactive system, a method for quantifying user cognitive load based on an eye movement experiment is proposed. Eye movement data were collected during virtual reality interaction using an eye tracker. Taking the number of fixation points, the average fixation duration, the average saccade length, and the number of fixation points before the first mouse click as the independent variables, and the number of backward-looking times and the value of user cognitive load as the dependent variables, a cognitive load evaluation model was established based on a probabilistic neural network. The model was validated using eye movement data and subjective cognitive load data. The results show that the absolute error and the relative mean square error were 6.52%–16.01% and 6.64%–23.21%, respectively, which indicates that the model is feasible.

1. Introduction

Cognitive load is the ratio of task complexity to the cognitive ability that the user needs to complete the task; it reflects the limited capacity of working memory and attention [1]. Cognitive load has a strong impact on the user’s ability to execute tasks and is an important human factor directly related to system operation efficiency, job safety, and production efficiency in different fields [2]. In an in-vehicle information system (IVIS), the complex and indiscriminate provision of multiple large sets of data may overload the driver’s cognition, resulting in operational errors and traffic accidents [3]. Researchers have therefore been conducting quantitative research on cognitive load, mainly measuring changes in working memory capacity and in the selective attention mechanism in two stages [1,2,4,5]. Physiological signals (such as heart rate and respiratory rate), brain activity, blood pressure, skin electrical response, pupil diameter, blinking, and gaze are considered biomarkers for quantifying cognitive load [6,7]. An information structure has also been reported that can effectively quantify the cognitive load in Web browsing and Web shopping, minimize the user’s information browsing time, or define the optimal point in time to guide a purchase [8].

Differences in individual cognitive ability and in how the cognitive load is increased affect human cognitive control, which leads to different findings on the physiological changes that accompany cognitive load [9]. Eye movement technology can objectively measure the cognition of users [10]. One pupil-based measure is the index of cognitive activity (ICA), which has been used to assess the association between expected eye movements and immediate cognitive load [11,12]. The analysis of eye tracking data provides quantitative evidence of how changes in interface layout affect the user’s understanding and cognitive load [13]. Many researchers use eye movement behavior data [14,15,16] to obtain users’ behavior habits and differences in interest in order to judge their cognitive load. Among them, Asan et al. [17] studied the physiological indices associated with eye tracking technology and cognitive load. These studies have focused on the use of physiological methods to assess the cognitive load of users but have not yet resolved how to construct a quantitative relationship between physiological indicators and cognitive load.

In addition to analyzing the impact of users’ physiological indicators on cognitive load, some researchers have used machine learning to predict cognitive load quantitatively. The k-nearest neighbor (k-NN) algorithm has been used to calculate the cognitive load of the user based on, for example, changes in the blood oxygen content of the prefrontal cortex [18,19]. Other studies have shown that both artificial neural networks [20] and classifiers based on linear discriminant analysis [21] can monitor workload in real time from the EEG (electroencephalogram) power spectrum. In addition, artificial neural networks [22], ensemble methods [23], and similar approaches have been applied to psychophysiological indicator data to predict the cognitive load of users.

The main purpose of this paper was to obtain objective and accurate user cognitive load values in a virtual reality (VR) interactive system. An eye movement experiment was used, in which the number of fixation points, the average fixation duration, the average saccade length, the number of fixation points before the first mouse click, and the number of backward-looking times were obtained with an eye tracker and used as the evaluation indexes. A cognitive load evaluation model was then constructed based on a probabilistic neural network; the model quantifies the cognitive load and provides a theoretical basis for the design and development of subsequent virtual reality interactive systems.

2. Related Work

2.1. Multi-Channel Interactive Information Integration in the VR System

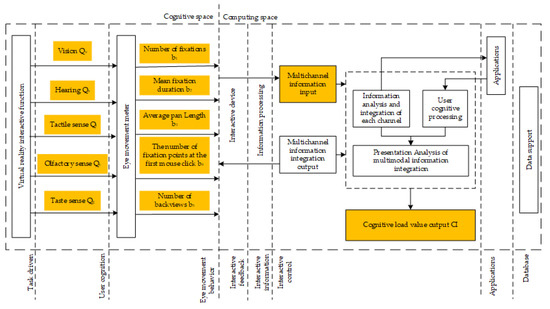

Because the cognitive load of users in a virtual reality interactive system is difficult to quantify, and in order to reduce the difficulty of interactive cognitive analysis, some researchers have constructed a multi-modal cognitive processing model that integrates touch, hearing, and vision [24]. To improve the naturalness and efficiency of interaction, other researchers have established a multi-modal conceptual model and a system model of human–computer interaction based on the elements of human–computer interaction in command and control [25]. By simulating the process of human brain cognition, this paper studies the interactive behavior of a virtual reality system from cognitive and computational perspectives and constructs an interactive information integration model of virtual reality whose final output is the user’s cognitive load value, so that the cognitive load can be quantified. As shown in Figure 1, in order to carry out the functions of the interactive system, users analyze the task through visual, auditory, and other cognitive channels, and eye tracking is used to collect the user’s eye movement behavior under single-channel, double-channel, and triple-channel conditions. The user’s cognitive load in the virtual reality system can then be quantified.

Figure 1. Multi-modal interactive information integration model in a virtual reality system.

2.2. Construction of Cognitive Load Quality Evaluation Model

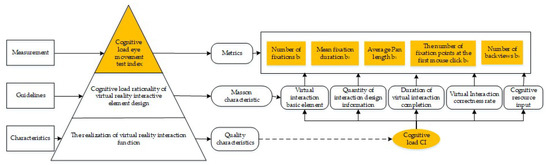

An evaluation model is generally composed of three layers: the first layer is the basic layer, that is, the quality characteristics to be evaluated; the second layer is the middle layer, which further explains the first layer, that is, the quality sub-characteristics; and the third layer is the measurement index. Based on the hierarchical partition theory of the quality evaluation model, this paper analyzes the attributes of a virtual reality interactive system, takes the size of the user’s cognitive load as the quality characteristic of the quality evaluation model, deduces the quality sub-characteristics, and finally establishes the cognitive load quality evaluation model of the virtual reality interactive system with the eye movement indices as the measurement indices, as shown in Figure 2.

Figure 2. Eye movement assessment model of cognitive load in a virtual reality system.

2.3. Physiological Index of Cognitive Load Based on an Eye Movement Experiment

An eye movement experiment is a method of obtaining cognitive load implicitly: the visual behavior recorded by the eye tracker reflects the cognitive awareness of users more intuitively than their operating behavior does. As a widely used cognitive load assessment method, eye movement technology relies mainly on the number of fixation points, the average fixation duration, the average saccade length, the number of fixation points at the first mouse click, the number of backward-looking times, and other experimental data [26] to evaluate the cognitive load of a virtual reality interactive system objectively and scientifically. Therefore, this paper chooses eye movement technology as the experimental approach for establishing the cognitive load evaluation model based on the probabilistic neural network.

1. Number of Fixations

The number of fixation points is proportional to the cognitive load of the virtual reality interactive system. The greater the number of fixation points, the larger the cognitive load, and vice versa [27,28]. Therefore, the number of fixation points is introduced as a physiological index to measure the cognitive load of users.

2. Mean Fixation Duration

The more information an element carries, the longer the user’s eyes stay fixed on it and the higher the cognitive load. To some extent, this evaluation index can reflect the cognitive load of users intuitively [28,29,30]. For this reason, the average fixation duration is used as a physiological index to evaluate the cognitive load of users.

3. Average Saccade Length

The saccade length is calculated from the coordinates of the fixation points as the straight-line (hypotenuse) distance between them. It is mainly used to analyze the scan path [31,32] and thus the size of the user’s cognitive load.

4. The Number of Fixation Points at the First Mouse Click

Before the first mouse click, the greater the number of the user’s fixation points, the higher the user’s recognition degree, and the smaller the user’s cognitive load [33,34]. This index is inversely proportional to the cognitive load.

5. Number of Backward-Looking Times

The number of backward-looking times reflects the cognitive obstacles encountered by the user [35]. The causes of backward-looking include (1) cognitive bias of the subjects and (2) a large contrast between the cognitive object and the subjects’ mental image symbols, in which case users need to look at the object repeatedly to establish and construct new mental image symbols.
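As an illustration of how the five indices described above could be derived from a fixation log, the following Python sketch computes them for a short, made-up sequence of fixations. The `Fixation` record, its field names, the area-of-interest (AOI) labels, and the rule that a backward look is a return to a previously visited AOI are assumptions made for this example; they are not taken from the eye tracker software or from the experiment.

```python
from dataclasses import dataclass
from math import hypot
from typing import Dict, List, Optional


@dataclass
class Fixation:
    x: float         # horizontal gaze coordinate (pixels)
    y: float         # vertical gaze coordinate (pixels)
    start: float     # fixation onset (seconds from task start)
    duration: float  # fixation duration (seconds)
    aoi: str         # hypothetical area-of-interest label


def eye_movement_indices(fixations: List[Fixation],
                         first_click_time: Optional[float] = None) -> Dict[str, float]:
    """Compute the five eye movement indices from an ordered fixation list."""
    n = len(fixations)
    if n == 0:
        raise ValueError("at least one fixation is required")

    mean_duration = sum(f.duration for f in fixations) / n

    # Saccade length: straight-line distance between consecutive fixations.
    saccades = [hypot(b.x - a.x, b.y - a.y)
                for a, b in zip(fixations, fixations[1:])]
    mean_saccade = sum(saccades) / len(saccades) if saccades else 0.0

    # Fixations that started before the first mouse click (all, if no click).
    if first_click_time is None:
        before_click = n
    else:
        before_click = sum(1 for f in fixations if f.start < first_click_time)

    # Assumed rule: a backward look is a return to an AOI that was left earlier.
    visited = set()
    look_backs = 0
    previous_aoi = None
    for f in fixations:
        if f.aoi != previous_aoi:
            if f.aoi in visited:
                look_backs += 1
            visited.add(f.aoi)
        previous_aoi = f.aoi

    return {
        "fixation_count": n,
        "mean_fixation_duration": mean_duration,
        "mean_saccade_length": mean_saccade,
        "fixations_before_first_click": before_click,
        "look_backs": look_backs,
    }


# Made-up fixation sequence, for illustration only.
sample = [
    Fixation(0.0, 0.0, 0.0, 0.2, "menu"),
    Fixation(3.0, 4.0, 0.3, 0.4, "map"),
    Fixation(3.0, 4.0, 0.8, 0.3, "map"),
    Fixation(0.0, 0.0, 1.2, 0.3, "menu"),
]
indices = eye_movement_indices(sample, first_click_time=1.0)
print(indices)
```

Other definitions of a backward look (for example, any saccade directed against the reading direction) would change only the last loop.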

3. Methods

3.1. Cognitive Load Evaluation Model Based on the Probabilistic Neural Network

Theorem 1.

The user’s cognitive domain is represented by , and the cognitive domain is composed of cognitive channels , expressed as:

where each represent a kind of cognitive channel, and the cognitive behavior set of users under the comprehensive effect of each cognitive channel is represented as . Then, the set of cognitive behaviors of the user is:

where is the index of the user’s cognitive behavior, .

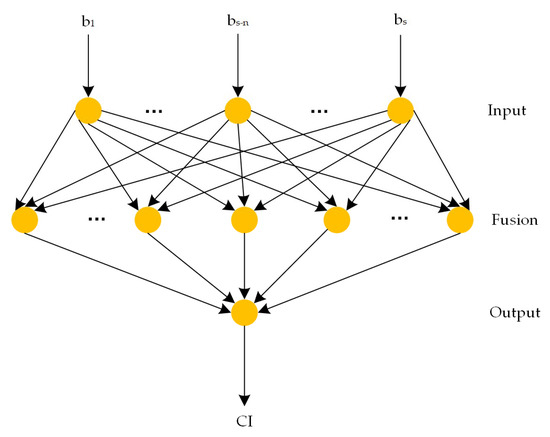

Taking the eye movement characteristic parameters in the virtual reality interactive system as the input layer and the cognitive load as the output layer, a cognitive load quantification model is constructed, as shown in Figure 3.

Figure 3. Probabilistic neural network model.

- Input layer: This refers to the eye movement data recorded over the entire virtual reality tunnel rescue mission under the single visual channel, the dual visual-auditory channel, the dual visual-tactile channel, and the triple visual-auditory-tactile channel. The data include the number of fixation points, the average fixation duration, the average saccade length, the number of fixation points at the first mouse click, the number of backward-looking times, etc.

- Fusion layer: This refers to feeding the acquired data into the cognitive load quantification model based on the probabilistic neural network for data fusion.

- Output layer: This refers to the final value output after data fusion, which is the quantified cognitive load of the tester under a given channel condition.

There are scheme values and eye movement indicators. The matrix of the eye movement indicator data of each scheme is as follows:

The eye movement index matrix is . Each column of the matrix represents eye movement indicator data, and each row represents a test value. As the units of each indicator data are different, it is difficult to directly compare the data, so it is necessary to normalize the data of each column, perform linear transformation of the original data, and map the result value to . If the cognitive load value increases with the increase of each set of indicator data, the transfer function is as follows:

Conversely, the conversion function is

where is the maximum value of the indicator data, is the minimum value of the indicator data, , and the improved matrix is:

When , is the dimensional column vector of . The goal of this paper is to find an estimation function, , such that the mean square error represented by:

is minimized. For a given set of column vectors , . According to the conditional expectation, the estimated function is:

where is the joint probability distribution function of . The estimate for is:

where is the smoothing parameter; is the dimension of , that is, kinds of eye movement index parameters are selected; and is the number of samples, that is, the number of schemes. Then:

where the physical meaning of is the distance from each input eye movement index to the sample point , which is the Euclidean distance. Here, . Substituting for in Equation (6), substituting in Equation (8), and exchanging the order of summation and integral number, this can be simplified to obtain:

The data is then normalized so that the cognitive load value is in the range of , and the normalized processing function is as follows:

where is the final output, the cognitive load value, of which and .
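A minimal sketch of the computation described above is given below. It assumes that the estimator takes the standard Gaussian-kernel weighted-average form that the derivation points to (conditional expectation, a Gaussian density estimate with a smoothing parameter, and the squared Euclidean distance to each sample), and that each indicator column is scaled by min-max normalization. The function names, the sample values, and the smoothing parameter are hypothetical.

```python
from math import exp
from typing import List, Sequence


def min_max_normalize(column: Sequence[float], increasing: bool = True) -> List[float]:
    """Map one indicator column to [0, 1].

    increasing=True  -> larger raw values give larger normalized values
    increasing=False -> the scale is reversed (for indices that fall as load rises)
    """
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]  # a constant column carries no information
    if increasing:
        return [(v - lo) / (hi - lo) for v in column]
    return [(hi - v) / (hi - lo) for v in column]


def kernel_estimate(x: Sequence[float],
                    samples_x: Sequence[Sequence[float]],
                    samples_y: Sequence[float],
                    sigma: float) -> float:
    """Gaussian-kernel weighted average of the sample outputs.

    Each sample is weighted by exp(-D^2 / (2 * sigma^2)), where D^2 is the
    squared Euclidean distance between the input x and that sample.
    """
    numerator = 0.0
    denominator = 0.0
    for xi, yi in zip(samples_x, samples_y):
        d2 = sum((a - b) ** 2 for a, b in zip(x, xi))
        weight = exp(-d2 / (2.0 * sigma ** 2))
        numerator += weight * yi
        denominator += weight
    if denominator == 0.0:
        raise ValueError("sigma is too small for this input; all weights underflowed")
    return numerator / denominator


# Made-up normalized samples (two indices each) and load values, for illustration.
train_x = [[0.0, 0.0], [1.0, 1.0]]
train_y = [2.0, 4.0]

normalized = min_max_normalize([2.0, 4.0, 6.0])
reversed_scale = min_max_normalize([2.0, 4.0, 6.0], increasing=False)
midpoint = kernel_estimate([0.5, 0.5], train_x, train_y, sigma=0.5)
near_first = kernel_estimate([0.0, 0.0], train_x, train_y, sigma=0.1)
print(normalized, reversed_scale, midpoint, near_first)
```

A small smoothing parameter makes the estimate follow the nearest sample closely, whereas a large one pulls every estimate toward the mean of the sample outputs, so in practice the value would be chosen by validation against held-out data.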

3.2. Evaluation Index

The experimental output error is defined as:

where denotes the number of cognitive channels, denotes the number of subjective scores for the cognitive load of the virtual reality interactive system under cognitive channels, and denotes the value calculated by the user cognitive load evaluation model under cognitive channels.

In this paper, the maximum absolute error and the relative mean square error are used to evaluate the evaluation effect of the model, and the calculation method is as follows:

where is the total number of channel classes.
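The two evaluation indices can be sketched as follows. The sketch assumes that the output error for each cognitive channel is the relative difference between the model value and the subjective score, that the maximum absolute error is the largest magnitude of these errors, and that the relative mean square error is their root mean square over the channel classes. These definitions, the function names, and the scores are assumptions for illustration only.

```python
from math import sqrt
from typing import List, Sequence


def relative_errors(subjective: Sequence[float],
                    predicted: Sequence[float]) -> List[float]:
    """Relative error per channel: (model value - subjective score) / subjective score."""
    if len(subjective) != len(predicted):
        raise ValueError("both sequences need one value per channel")
    return [(p - s) / s for s, p in zip(subjective, predicted)]


def max_absolute_error(errors: Sequence[float]) -> float:
    """Largest error magnitude over all channels."""
    return max(abs(e) for e in errors)


def relative_rmse(errors: Sequence[float]) -> float:
    """Root mean square of the relative errors over all channels."""
    return sqrt(sum(e * e for e in errors) / len(errors))


# Made-up scores for four channel classes, for illustration only.
subjective_scores = [4.0, 5.0, 8.0, 10.0]
model_values = [4.4, 4.5, 8.0, 9.0]

errors = relative_errors(subjective_scores, model_values)
worst = max_absolute_error(errors)
rmse = relative_rmse(errors)
print(f"max absolute error = {worst:.2%}, relative RMSE = {rmse:.2%}")
```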

4. Application Instance

4.1. Experimental Design

In the VR tunnel emergency rescue system, rescue information is obtained mainly by visual reading; the auditory channel provides tunnel rescue information such as the sound of wind and of dripping water in the tunnel; and the tactile channel is engaged by touching the handle to obtain the selected rescue information. This paper focuses on the virtual reality system. The tester wore the virtual reality equipment and the eye tracker and, using the visual, auditory, and tactile channels, selected vehicles, selected rescue teams, detected signs of life, opened life channels, provided rescue channels, and carried out other rescue operations. Because the VR tunnel emergency rescue system relies mainly on vision and the experiment cannot be completed without the visual channel, this paper only studied the cognitive load under the visual, visual-auditory, visual-tactile, and visual-auditory-tactile channels. The experiment was carried out in the Key Laboratory of Modern Manufacturing Technology of the Ministry of Education at Guizhou University, China, where the environment was kept quiet and the lighting stable in order to eliminate interfering factors. The study comprised tasks at four levels of cognitive load, from a single channel to three channels. Specifically, the four tasks were as follows:

- Visual channel: The sound equipment and handle of the VR tunnel emergency rescue system were switched off, and the tester obtained the rescue mission information only through the visual channel to complete the rescue mission.

- Visual-auditory: The handle of the VR tunnel emergency rescue system was switched off, and the tester obtained the rescue mission information through the visual and auditory channels to complete the rescue mission.

- Visual-tactile: The sound equipment of the VR tunnel emergency rescue system was switched off, and the tester obtained the rescue mission information through the visual and tactile channels to complete the rescue mission.

- Visual-auditory-tactile: The tester obtained the rescue information through visual reading, through the auditory channel (tunnel rescue information such as the sound of wind and of dripping water in the tunnel), and by touching the handle to obtain the selected rescue information, and then completed the rescue mission.

The testers were numbered randomly, and each tester had a preparation time of 1 min. The testers’ task schedule is shown in Table 1. The testers completed the tunnel emergency rescue task using the virtual reality equipment, and their eye movement data were acquired during the task using the strap-back eye tracker of Xintuo Inki Technology Company. The number of fixations, the mean fixation duration, the average saccade length, the number of fixation points at the first mouse click, and the number of backward-looking times were obtained. Subjective measurement and self-assessment are widely used as measures of cognitive load [9,36,37,38] and can detect small changes in cognitive load with relatively good sensitivity [39]. Therefore, in order to verify the usability of the cognitive load evaluation model based on the probabilistic neural network and to reduce the subjective measurement error of cognitive load, all the subjects were required to complete the cognitive load questionnaire immediately after completing the task.

Table 1. Tester distribution table.

4.2. Subject Selection

Twenty virtual reality game enthusiasts from Guizhou University, aged between 24 and 30, were selected as subjects. The subjects were in good health, had no bad habits (smoking, drinking, etc.), had no color weakness or color blindness, and had eyesight or corrected eyesight of 1.0. Before the experiment, it was confirmed that the participants had not consumed alcohol, coffee, or other stimulant drinks on the day of the experiment, and they voluntarily signed the informed consent form after familiarizing themselves with it.

4.3. Experimental Device

The experiment was conducted in the Key Laboratory of Modern Manufacturing Technology of Guizhou University using a Lenovo computer with a 29-inch LED screen. The tunnel emergency rescue mission was completed using an HTC VIVE virtual reality device, and the eye movement data were acquired using the EyeSo Ee60 telemetry eye tracker of Xintuo Inki Technology Company.

4.4. Experimental Variables

4.4.1. Independent Variable

As shown in Table 2, the cognitive channel was an independent variable, and the participants completed the emergency rescue task of the VR tunnel with different cognitive channels.

Table 2. Independent variable.

4.4.2. Dependent Variable

In order to verify the rationality of the cognitive load evaluation model and analyze the subjective scores of the cognitive load of different subjects, the cognitive load scores were , with for a low subjective load and for a high subjective load, as shown in Table 3. The result is a subjective evaluation of the cognitive load of the virtual reality interactive system. The participants’ questionnaire is shown in Table 4.

Table 3. Cognitive load rating.

Table 4. Subjective cognitive load questionnaire.

As the number of cognitive channels changed, so did the eye movement index data, as shown in Table 5.

Table 5. Dependent variable.

4.4.3. Control of Disturbance Variables

To prevent repeated exposure, and the resulting memory of the VR tunnel emergency rescue environment and task, from influencing the subjective cognitive load scores, each participant completed the experiment under only one cognitive channel condition (for example, the visual-only condition), as arranged in Table 1.
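A between-subjects arrangement of this kind can be sketched as a random, balanced assignment of testers to channel conditions. The sketch assumes equal group sizes (five testers per condition for twenty testers), which is not stated in the text; the function name and the seed are hypothetical.

```python
import random
from typing import Dict, List, Optional

CHANNELS = ["visual", "visual-auditory", "visual-tactile", "visual-auditory-tactile"]


def assign_testers(n_testers: int,
                   channels: List[str],
                   seed: Optional[int] = None) -> Dict[str, List[int]]:
    """Shuffle tester numbers and deal them out to the conditions in turn."""
    rng = random.Random(seed)
    tester_ids = list(range(1, n_testers + 1))
    rng.shuffle(tester_ids)
    groups: Dict[str, List[int]] = {channel: [] for channel in channels}
    for position, tester in enumerate(tester_ids):
        groups[channels[position % len(channels)]].append(tester)
    return groups


# Each tester appears in exactly one condition, so no task is seen twice.
assignment = assign_testers(20, CHANNELS, seed=0)
for channel, testers in assignment.items():
    print(channel, sorted(testers))
```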

4.5. Experimental Results

The cognitive load of the VR tunnel emergency rescue system under the different cognitive channel conditions was evaluated subjectively by the testers. The results are shown in Table 6.

Table 6. Subjective cognitive load.

Table 7 shows the normalized eye movement index data recorded during the VR tunnel emergency rescue under the different cognitive channels.

Table 7. Normalized eye movement index data.

5. Discussion

5.1. Correlation Analysis of Eye Movement Parameters and Cognitive Load of Users

A cognitive load estimate obtained from a single type of eye movement data is limited and one-sided and cannot accurately reflect users’ needs and interests. Therefore, it is necessary to integrate the data and establish a model of users’ cognitive load based on an eye movement experiment. It is also necessary to analyze the correlation between the eye movement data and the cognitive load.

In this paper, the Pearson correlation test was used to examine the relationship between the eye movement parameters and the cognitive load, so as to establish the theoretical premise of the cognitive load evaluation. The results of the correlation analysis are given in Table 8.

Table 8. Correlation between each eye movement characteristic parameter and cognitive load.

As can be seen from Table 8, every eye movement characteristic parameter was significantly correlated with the cognitive load of users, to varying degrees, which again demonstrates the close relationship between the eye movement indices and the cognitive load. The average saccade length was more highly correlated with the cognitive load than the other parameters.
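For reference, the Pearson correlation coefficient between one eye movement parameter and the cognitive load scores can be computed as in the following sketch. The data values are made up for illustration and are not the experimental data; the significance test is omitted.

```python
from math import sqrt
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation: covariance divided by the product of the spreads."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length with at least two values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0.0 or syy == 0.0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)


# Made-up values: one eye movement index and two hypothetical load score series.
saccade_length = [1.0, 2.0, 3.0, 4.0]
load_rising = [2.0, 4.0, 6.0, 8.0]
load_falling = [8.0, 6.0, 4.0, 2.0]

r_rising = pearson_r(saccade_length, load_rising)
r_falling = pearson_r(saccade_length, load_falling)
print(r_rising, r_falling)
```

A coefficient close to 1 or to -1 indicates a strong linear relationship, and its sign shows whether the index rises or falls with the cognitive load.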

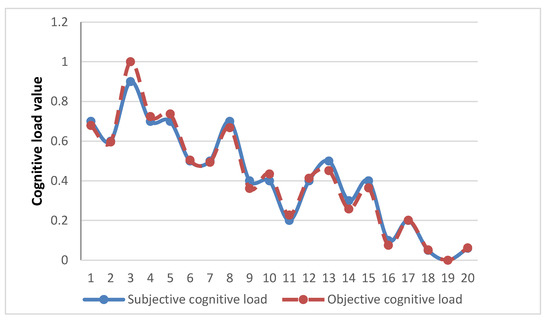

5.2. Model Output Analysis

A comparison of the cognitive load evaluated by the probabilistic neural network model with the actual cognitive load is shown in Figure 4. The degree of fit is high: the output of the cognitive load evaluation model is close to the actual result, which indicates that the model evaluates the cognitive load well.

Figure 4. Comparison of the cognitive load evaluated by the probabilistic neural network model with the actual cognitive load.

At the same time, in order to understand the accuracy of the model used, the maximum absolute error and relative mean square error were used to evaluate the model, and the evaluation results are shown in Table 9.

Table 9. Maximum absolute error and relative mean square error.

In general, the mean absolute error was and the mean relative mean square error was . At the same time, the cognitive load evaluation results for each cognitive channel show that the maximum absolute error was 16.01%, the minimum absolute error was 6.52%, the maximum relative mean square error was 23.21%, and the minimum relative mean square error was 6.64%. This shows that the cognitive load evaluation model based on the probabilistic neural network has high precision and good reliability and can accurately evaluate the cognitive load of users under different cognitive channels, which helps to improve the design efficiency of virtual reality interaction systems and the user experience.

6. Conclusions

In this paper, the eye movement behavior of testers in a virtual reality interactive environment was studied, and the cognitive load was calculated from eye movement indices so that it could be quantified. Eye movement data were recorded using an eye tracker, and the subjective cognitive load of the interactive system was investigated using a questionnaire. The conclusions are as follows.

Based on the eye movement experiment, the number of fixation points, the average fixation duration, the average saccade length, the number of fixation points at the first mouse click, the number of backward-looking times, and other eye movement data were extracted, and a model for quantifying the user’s cognitive load in the virtual reality interactive system was constructed by combining these data with a probabilistic neural network.

The results of the study show a significant correlation between each eye movement characteristic parameter and the cognitive load, which indicates that the eye movement indices can directly reflect the cognitive load during user interaction, thus providing a basis for the study of cognitive load quantification.

The results show that the absolute error between the user cognitive load given by the probabilistic neural network and the subjective cognitive load value of the tester was 6.52%–16.01%, and the relative mean square error was 6.64%–23.21%, indicating that the method has a high precision.

Author Contributions

Conceptualization, X.X. and J.L.; methodology, X.X.; validation, X.X., J.L., and N.D.; formal analysis, X.X.; investigation, X.X.; resources, X.X.; data curation, X.X; writing—original draft preparation, X.X.; writing—review and editing, X.X.; visualization, N.D.; supervision, J.L.; project administration, J.L.

Funding

This research was supported by the Natural Science Foundation of China (Nos. 51865004, 2014BAH05F01) and the Provincial Project Foundation of Guizhou, China (Nos. [2018]1049, [2016]7467).

Acknowledgments

The authors would like to convey their heartfelt gratefulness to the reviewers and the editor for the valuable suggestions and important comments which greatly helped them to improve the presentation of this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Mun, S.; Whang, M.; Park, S.; Park, M.C. Effects of mental workload on involuntary attention: A somatosensory ERP study. Neuropsychologia 2017, 106, 7–20.

2. Puma, S.; Matton, N.; Paubel, P.V.; Raufaste, É.; El-Yagoubi, R. Using theta and alpha band power to assess cognitive workload in multitasking environments. Int. J. Psychophysiol. 2018, 123, 111–120.

3. Yae, J.H.; Shin, J.G.; Woo, J.H.; Kim, S.H. A Review of Ergonomic Researches for Designing In-Vehicle Information Systems. J. Ergon. Soc. Korea 2017, 36, 499–523.

4. Mun, S.; Park, M.; Park, S.; Whang, M. SSVEP and ERP measurement of cognitive fatigue caused by stereoscopic 3D. Neurosci. Lett. 2012, 525, 89–94.

5. Mun, S.; Kim, E.; Park, M. Effect of mental fatigue caused by mobile 3D viewing on selective attention: An ERP study. Int. J. Psychophysiol. 2014, 94, 373–381.

6. Yu, C.; Wang, E.M.; Li, W.C.; Braithwaite, G. Pilots’ Visual Scan Patterns and Situation Awareness in Flight Operations. Aviat. Space Environ. Med. 2014, 85, 708–714.

7. Hogervorst, M.A.; Brouwer, A.; van Erp, J.B.E. Combining and comparing EEG, peripheral physiology and eye-related measures for the assessment of mental workload. Front. Neurosci. 2014, 8, 322.

8. Jimenez-Molina, A.; Retamal, C.; Lira, H. Using Psychophysiological Sensors to Assess Mental Workload during Web Browsing. Sensors 2018, 18, 458.

9. Sungchul, M. Overview of Understanding and Quantifying Cognitive Load. J. Ergon. Soc. Korea 2018, 37, 337–346.

10. Sargezeh, B.A.; Ayatollahi, A.; Daliri, M.R. Investigation of eye movement pattern parameters of individuals with different fluid intelligence. Exp. Brain Res. 2019, 237, 15–28.

11. Sekicki, M.; Staudte, M. Eye’ll Help You Out! How the Gaze Cue Reduces the Cognitive Load Required for Reference Processing. Cogn. Sci. 2018, 42, 2418–2458.

12. Demberg, V.; Sayeed, A. The Frequency of Rapid Pupil Dilations as a Measure of Linguistic Processing Difficulty. PLoS ONE 2016, 11, e01461941.

13. Majooni, A.; Masood, M.; Akhavan, A. An eye-tracking study on the effect of infographic structures on viewer’s comprehension and cognitive load. Inf. Vis. 2018, 17, 257–266.

14. Ooms, K.; Coltekin, A.; De Maeyer, P.; Dupont, L.; Fabrikant, S.; Incoul, A.; Kuhn, M.; Slabbinck, H.; Vansteenkiste, P.; Van der Haegen, L. Combining user logging with eyetracking for interactive and dynamic applications. Behav. Res. Methods 2015, 47, 977–993.

15. Hua, L.; Dong, W.; Chen, P.; Liu, H. Exploring differences of visual attention in pedestrian navigation when using 2D maps and 3D geo-browsers. Cartogr. Geogr. Inf. Sci. 2016, 44, 1–17.

16. Anagnostopoulos, V.; Havlena, M.; Kiefer, P.; Giannopoulos, I.; Schindler, K.; Raubal, M. Gaze-Informed location-based services. Int. J. Geogr. Inf. Sci. 2017, 31, 1770–1797.

17. Asan, O.; Yang, Y. Using Eye Trackers for Usability Evaluation of Health Information Technology: A Systematic Literature Review. JMIR Hum. Factors 2015, 2, e5.

18. Sassaroli, A.; Zheng, F.; Hirshfield, L.M.; Girouard, A.; Solovey, E.T.; Jacob, R.J.K.; Fantini, S. Discrimination of Mental Workload Levels in Human Subjects with Functional Near-infrared Spectroscopy. J. Innov. Opt. Health Sci. 2008, 1, 227–237.

19. Herff, C.; Heger, D.; Fortmann, O.; Hennrich, J.; Putze, F.; Schultz, T. Mental workload during n-back task-quantified in the prefrontal cortex using fNIRS. Front. Hum. Neurosci. 2014, 7, 935.

20. Wilson, G.F.; Russell, C.A. Real-time assessment of mental workload using psychophysiological measures and artificial neural networks. Hum. Factors 2003, 45, 635–643.

21. Mueller, K.; Tangermann, M.; Dornhege, G.; Krauledat, M.; Curio, G.; Blankertz, B. Machine learning for real-time single-trial EEG-analysis: From brain-computer interfacing to mental state monitoring. J. Neurosci. Methods 2008, 167, 82–90.

22. Noel, J.B.; Bauer, K.W., Jr.; Lanning, J.W. Improving pilot mental workload classification through feature exploitation and combination: A feasibility study. Comput. Oper. Res. 2005, 32, 2713–2730.

23. Oh, H.; Hatfield, B.D.; Jaquess, K.J.; Lo, L.-C.; Tan, Y.Y.; Prevost, M.C.; Mohler, J.M.; Postlethwaite, H.; Rietschel, J.C.; Miller, M.W.; et al. A Composite Cognitive Workload Assessment System in Pilots Under Various Task Demands Using Ensemble Learning. In Proceedings of the AC 2015: Foundations of Augmented Cognition, Los Angeles, CA, USA, 2–7 August 2015.

24. Lu, L.; Tian, F.; Dai, G.; Wang, H. A Study of the Multimodal Cognition and Interaction Based on Touch, Audition and Vision. J. Comput.-Aided Des. Comput. Graph. 2014, 26, 654–661.

25. Zhang, G.H.; Lao, S.Y.; Ling, Y.X.; Ye, T. Research on Multiple and Multimodal Interaction in C2. J. Natl. Univ. Def. Technol. 2010, 32, 153–159.

26. Wei, L.; Yufen, C. Cartography Eye Movements Study and the Experimental Parameters Analysis. Bull. Surv. Mapp. 2012, 10, 16–20.

27. Chen, X.; Xue, C.; Chen, M.; Tian, J.; Shao, J.; Zhang, J. Quality assessment model of digital interface based on eye-tracking experiments. J. Southeast Univ. (Nat. Sci. Ed.) 2017, 47, 38–42.

28. Smerecnik, C.M.R.; Mesters, I.; Kessels, L.T.E.; Ruiter, R.A.; De Vries, N.K.; De Vries, H. Understanding the Positive Effects of Graphical Risk Information on Comprehension: Measuring Attention Directed to Written, Tabular, and Graphical Risk Information. Risk Anal. 2010, 30, 1387–1398.

29. Henderson, J.M.; Choi, W. Neural Correlates of Fixation Duration during Real-world Scene Viewing: Evidence from Fixation-related (FIRE) fMRI. J. Cogn. Neurosci. 2014, 27, 1137–1145.

30. Lin, J.H.; Lin, S.S.J. Cognitive Load for Configuration Comprehension in Computer-Supported Geometry Problem Solving: An Eye Movement Perspective. Int. J. Sci. Math. Educ. 2014, 12, 605–627.

31. Wu, X.; Xue, C.; Gedeon, T.; Hu, H.; Li, J. Visual search on information features on digital task monitoring interface. J. Southeast Univ. (Nat. Sci. Ed.) 2018, 48, 807–814.

32. Allsop, J.; Gray, R.; Bulthoff, H.H.; Chuang, L. Effects of anxiety and cognitive load on instrument scanning behavior in a flight simulation. In Proceedings of the 2016 IEEE Second Workshop on Eye Tracking and Visualization (ETVIS), Baltimore, MD, USA, 23 October 2016.

33. Nayyar, A.; Dwivedi, U.; Ahuja, K.; Rajput, N. Opti Dwell: Intelligent Adjustment of Dwell Click Time. In Proceedings of the 22nd International Conference, Hong Kong, China, 8–9 December 2017.

34. Lutteroth, C.; Penkar, M.; Weber, G. Gaze vs. Mouse: A Fast and Accurate Gaze-Only Click Alternative. In Proceedings of the 28th Annual ACM Symposium, Charlotte, NC, USA, 8–11 November 2015.

35. Chengshun, W.; Yufen, C.; Shulei, Z. User interest analysis method for dot symbols of web map considering eye movement data. Geomat. Inf. Sci. Wuhan Univ. 2018, 43, 1429–1437.

36. Paas, F.G.; Van Merriënboer, J.J.; Adam, J.J. Measurement of cognitive load in instructional research. Percept. Mot. Skills 1994, 79, 419–430.

37. Meshkati, N.; Hancock, P.A.; Rahimi, M. Techniques in Mental Workload Assessment. In Evaluation of Human Work: A Practical Ergonomics Methodology; Taylor & Francis: Philadelphia, PA, USA, 1995.

38. Zarjam, P.; Epps, J.; Lovell, N.H. Beyond Subjective Self-Rating: EEG Signal Classification of Cognitive Workload. IEEE Trans. Auton. Ment. Dev. 2015, 7, 301–310.

39. Paas, F.; Tuovinen, J.E.; Tabbers, H. Cognitive Load Measurement as a Means to Advance Cognitive Load Theory. Educ. Psychol. 2003, 38, 63–71.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).