1. Introduction

In recent years, scientific workflows have been widely applied as a new paradigm for data analysis and scientific computation [1]. A scientific workflow can be regarded as an orchestration of the relationships between computational tasks and data transmissions [2]. However, the growing computational complexity and data size of scientific workflows increase the demand for a high-performance execution environment [3]. Deploying and running workflows in clouds is an effective solution because of the advantages of cloud environments, such as flexible service provision, high cost efficiency and guaranteed Quality of Service (QoS) [4].

Scientific workflows are usually composed of heterogeneous components, including components inherited from legacy applications and newly developed ones. Clouds offer a customized execution environment for these components through virtualization technology. In addition, workflows can be deployed and executed in clouds that provide a virtually infinite resource pool in a pay-as-you-go manner [5]. In this way, workflows can acquire and release cloud resources on demand to achieve a cost-effective operating mode. These advantages make clouds a preferred execution environment for scientific workflows.

However, running workflows in clouds also poses challenges, because failures occur more frequently due to the complexity and dynamics of both workflows and clouds [6]. Failures tend to interrupt continuous execution and significantly affect the performance of workflows, especially large-scale, long-running ones [7]. To provide customers with a seamless experience, it is critical to make scientific workflow scheduling fault-tolerant.

Because many scientific workflows are time-aware, their successful completion depends not only on correct computational results, but also on the time instants at which these results become available [8]. A deadline is set for a workflow, that is, a time by which the workflow must complete its processing and produce the computational result [9]. Without an effective fault-tolerant scheduling scheme, failures will prevent deadline-aware workflows from completing on time. In this situation, the QoS is severely affected, even though the results might still be obtained after the deadline. Therefore, fault-tolerant workflow scheduling is necessary because it enables workflows to complete successfully before the deadline.

In failure-prone environments, an effective workflow scheduling approach should not only be able to map tasks onto heterogeneous resources, but also deal with failures throughout workflow execution. In this paper, we propose a dynamic fault-tolerant workflow scheduling approach with hybrid spatial-temporal re-execution, called DFTWS. To improve the time efficiency of workflow scheduling, DFTWS separates the scheduling process into initial resource allocation and online workflow scheduling. Meanwhile, DFTWS dynamically applies spatial and temporal re-execution schemes according to the task property and the failure type to achieve a balance between reliability and cost.

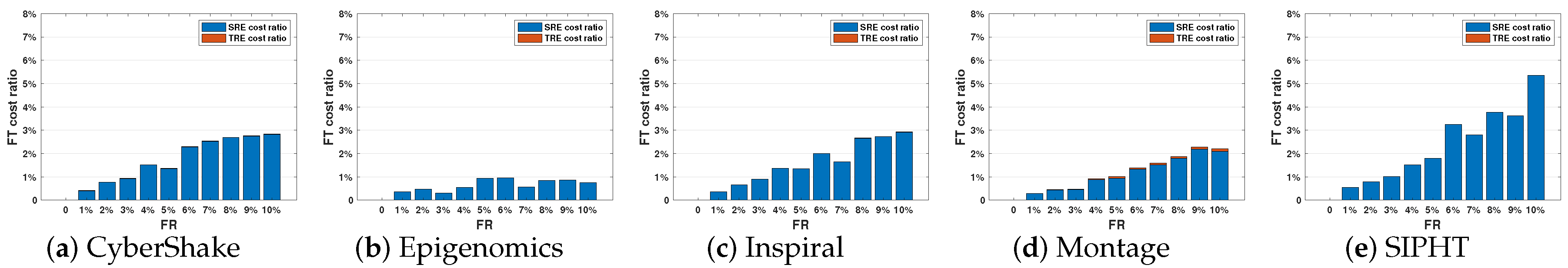

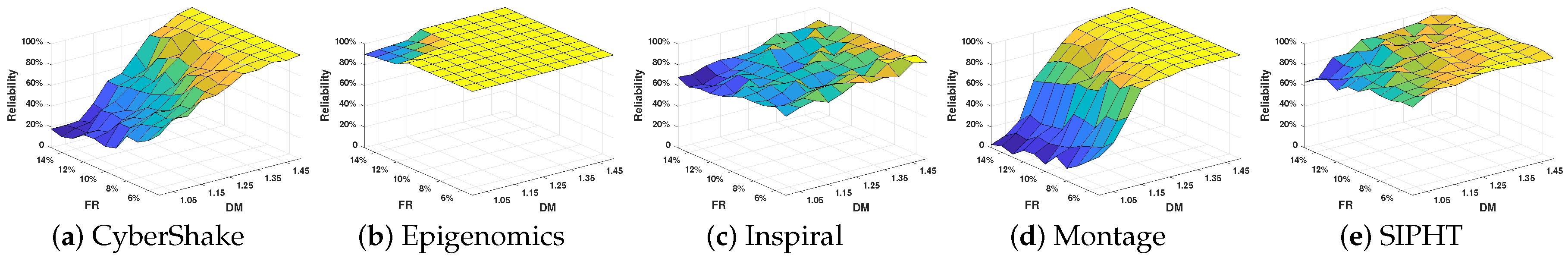

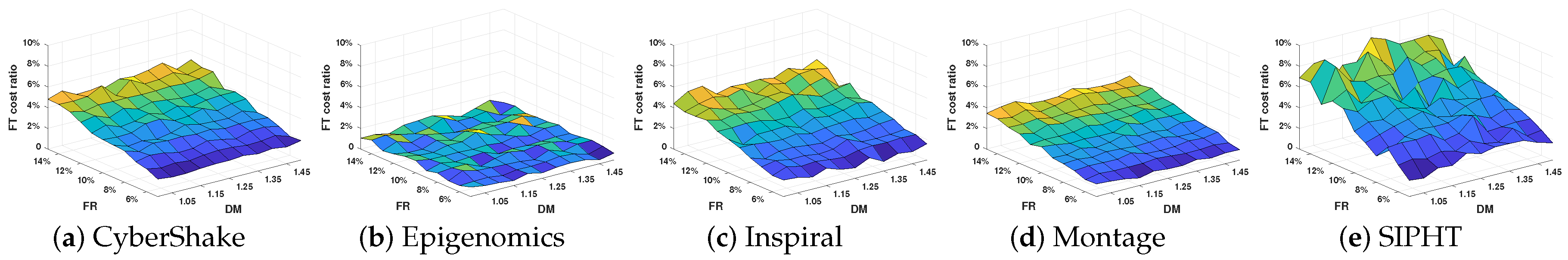

The main contributions of this paper are as follows. First, all static information of a workflow is abstracted and calculated in advance, including a set of time attributes and the critical path (CP). Second, a budget division method is designed to divide the total workflow budget into appropriate quotas for all tasks, which are used to rent suitable VM instances. Third, the DFTWS scheduling approach is applied throughout the real-time execution of workflows and dynamically employs the spatial re-execution (SRE) and temporal re-execution (TRE) schemes in different failure scenarios. The SRE scheme is adopted for critical tasks or tasks with permanent failures to maintain high reliability, while the TRE scheme is adopted for non-critical tasks with transient failures to improve cost efficiency.
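The selection rule stated above (SRE for critical tasks or permanent failures, TRE otherwise) can be illustrated with a minimal Python sketch. It is not the DFTWS implementation: the names `critical_path`, `choose_scheme`, `Failure` and `Scheme` are hypothetical, the critical path is taken to be the longest path through the task graph by estimated runtime, and data transfer times are ignored for simplicity.

```python
from enum import Enum


class Failure(Enum):
    TRANSIENT = "transient"
    PERMANENT = "permanent"


class Scheme(Enum):
    SRE = "spatial re-execution"
    TRE = "temporal re-execution"


def critical_path(runtime, successors):
    """Return the longest path of a task DAG as a list of task names.

    runtime: dict mapping task -> estimated execution time (assumed known)
    successors: dict mapping task -> list of child tasks (the DAG edges)
    """
    longest = {}  # task -> (length of longest path starting here, next task)

    def visit(task):
        if task not in longest:
            best_len, best_next = 0.0, None
            for child in successors.get(task, []):
                child_len = visit(child)
                if child_len > best_len:
                    best_len, best_next = child_len, child
            longest[task] = (runtime[task] + best_len, best_next)
        return longest[task][0]

    # The critical path starts at the task with the longest downstream path.
    current = max(runtime, key=visit)
    path = []
    while current is not None:
        path.append(current)
        current = longest[current][1]
    return path


def choose_scheme(task, failure, critical_tasks):
    """SRE for critical tasks or permanent failures, TRE otherwise."""
    if task in critical_tasks or failure is Failure.PERMANENT:
        return Scheme.SRE
    return Scheme.TRE


# Hypothetical four-task workflow: A -> {B, C} -> D.
runtime = {"A": 2, "B": 5, "C": 1, "D": 3}
successors = {"A": ["B", "C"], "B": ["D"], "C": ["D"]}
cp = critical_path(runtime, successors)
critical = set(cp)
```

In this toy workflow, task C lies off the critical path, so a transient failure of C would be handled by TRE, whereas any failure of B, or a permanent failure of C, would be handled by SRE.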

The remainder of the paper is organized as follows. Related work is reviewed in Section 2. Section 3 describes the preliminaries of fault-tolerant workflow scheduling, including the cloud system, the workflow model and the fault tolerance schemes. Section 4 introduces the proposed DFTWS algorithm. Section 5 presents experiments on the performance of DFTWS using real-world scientific workflows and analyzes the factors that influence its performance. The conclusions of the paper are given in Section 6.

2. Related Work

Scientific workflow scheduling is an important issue in cloud computing and has been widely studied in recent years [10]. Several surveys on workflow scheduling have been published. For instance, a taxonomy and survey of fault-tolerant workflow management systems in cloud and distributed computing environments is given in [7], which details the objects, problems, techniques and solutions involved in workflow scheduling. Masdari et al. conduct a comprehensive analysis of workflow scheduling schemes [11] and categorize them according to type and objective, including metaheuristic-based [12,13,14], heuristic [15,16,17,18,19] and hybrid metaheuristic and heuristic [20,21,22] scheduling. A total of 23 scheduling schemes are analyzed and classified based on the proposed classification criteria. These works provide an outline of the problems and solutions of workflow scheduling.

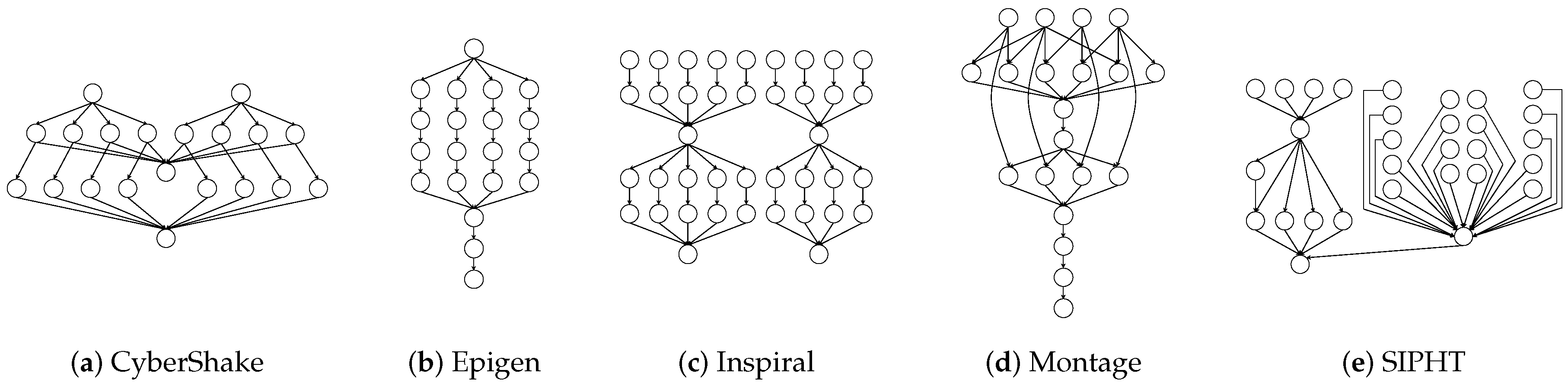

To support the development and use of scientific workflows, scientific workflow management systems have been proposed. The Kepler system addresses many of the core requirements and provides support for Web service-based workflows and Grid extensions [23]. Pegasus is an automatic execution, simulation and data analysis platform for workflows, which has been widely used by many researchers and organizations for more than a decade. Besides a reliable, scalable workflow simulation environment, Pegasus also offers a collection of real-world workflow models and a set of synthetic workflow generators [24]. The simulation platform and workflow dataset provide a convenient benchmark for researchers working on workflow scheduling.

According to the phase in which the scheduling scheme is created and executed, workflow scheduling can be divided into two types: static and dynamic [25]. Static workflow scheduling completes resource planning in the compile phase, which generally requires preliminary information about the workflow and the cloud resources. Fard et al. present a general framework and heuristic algorithm for multi-objective static scheduling of scientific workflows in heterogeneous computing environments [26]. Rodriguez et al. propose a static cost-minimization, deadline-constrained heuristic for scheduling a scientific workflow application in a cloud environment [9]. The static scheme requires task information in advance, including task sizes, service demands and estimated execution cost, which makes it easier for a scheduler to adopt. The dynamic scheme makes and executes online scheduling decisions according to the real-time performance of workflows. Prodan and Wieczorek present a dynamic constraint workflow scheduling algorithm for the bi-criteria scheduling problem, which uses a two-phase heuristic to find multiple optimal solutions [27]. However, it requires a longer running time. Shi et al. propose an elastic resource provisioning and task scheduling mechanism to execute scientific workflows submitted at unpredictable times in the cloud. It is designed to finish as many high-priority workflows as possible while considering budget and deadline, which leads to a high cost [28]. A dynamic scheme is more suitable for executing on-demand, variable workflows because it does not need as much information as a static one. However, a dynamic scheme is more complex because it has to monitor and update real-time system information [29].

Redundancy is one of the most commonly used fault-tolerant mechanisms and can be realized either in space or in time [30]. Spatial redundancy means providing additional, duplicated or replicated resources to execute the same task and thereby provide resilience. For example, parallel execution is a typical spatial redundancy scheme: a task runs on multiple resources simultaneously to guarantee a viable result, which incurs a high spatial cost [31]. By contrast, temporal redundancy relaxes the time constraint to provide more time for re-executing the failed task on the original resources [32]. Re-execution can be done either on another resource or on the same resource, that is, SRE and TRE, respectively.
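The difference between re-execution in space and in time can be sketched as a small retry loop. This is an illustrative Python sketch under stated assumptions, not code from the paper: the function `run_with_reexecution`, its parameters and the resource names are hypothetical, and a failure is modeled simply as a `RuntimeError` raised by the task.

```python
def run_with_reexecution(task, resources, scheme, max_attempts=3):
    """Run a task and re-execute it on failure.

    task: callable taking a resource name; raises RuntimeError on failure
    resources: ordered list of candidate resource names (assumed available)
    scheme: "TRE" retries on the original resource,
            "SRE" moves to the next resource after each failure
    Returns (result, list of resources tried in order).
    """
    tried = []
    index = 0
    for _ in range(max_attempts):
        resource = resources[index]
        tried.append(resource)
        try:
            return task(resource), tried
        except RuntimeError:
            if scheme == "SRE":
                # Spatial re-execution: switch to another resource.
                index = (index + 1) % len(resources)
            # Temporal re-execution: keep the same resource and try again.
    raise RuntimeError(f"task failed after {max_attempts} attempts")


def broken_on_vm1(resource):
    """Hypothetical task that always fails on vm1 (a permanent failure)."""
    if resource == "vm1":
        raise RuntimeError("permanent failure on vm1")
    return "ok"


result, tried = run_with_reexecution(broken_on_vm1, ["vm1", "vm2"], "SRE")
```

With a permanent failure on the original resource, only the spatial variant can succeed in this sketch; the temporal variant would exhaust its attempts on the same resource, which is why the choice of scheme depends on the failure type.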

To make full use of the advantages of dynamic fault tolerance, DFTWS separates the scheduling process into initial resource allocation and online fault-tolerant scheduling. The initial resource allocation scheme can be decided before a workflow is executed, based on the information about the workflow and the cloud resources, and fault handling strategies can be carried out during the execution process. Moreover, SRE and TRE are adopted as the fault-tolerant schemes with the aim of improving reliability and resource utilization simultaneously.

Author Contributions

Conceptualization, N.W. and D.Z.; Data curation, N.W.; Funding acquisition, D.Z.; Investigation, N.W. and D.Z.; Methodology, N.W. and Z.Z.; Software, N.W.; Supervision, D.Z.; Validation, N.W. and Z.Z.; Writing—original draft, N.W. and D.Z.; Writing—review and editing, N.W. and Z.Z.

Funding

This work is supported by the National High Technology Development 863 Program of China under Grant No. 2013AA01A215.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Donoho, D. 50 years of data science. J. Comput. Graph. Stat. 2017, 26, 745–766.

2. Yu, J.; Buyya, R.; Ramamohanarao, K. Workflow scheduling algorithms for grid computing. In Metaheuristics for Scheduling in Distributed Computing Environments; Springer: Berlin, Germany, 2008; pp. 173–214.

3. Zhu, X.; Wang, J.; Guo, H.; Zhu, D.; Yang, L.T.; Liu, L. Fault-tolerant scheduling for real-time scientific workflows with elastic resource provisioning in virtualized clouds. IEEE Trans. Parallel Distrib. Syst. 2016, 27, 3501–3517.

4. Rao, J.; Wei, Y.; Gong, J.; Xu, C.Z. QoS guarantees and service differentiation for dynamic cloud applications. IEEE Trans. Netw. Serv. Manag. 2013, 10, 43–55.

5. Armbrust, M.; Fox, A.; Griffith, R.; Joseph, A.D.; Katz, R.; Konwinski, A.; Gunho, L.; Patterson, D.; Rabkin, A.; Stoica, I.; et al. A view of cloud computing. Commun. ACM 2010, 53, 50–58.

6. Chen, H.; Wang, F.Z.; Helian, N. Entropy4Cloud: Using Entropy-Based Complexity to Optimize Cloud Service Resource Management. IEEE Trans. Emerg. Top. Comput. Intell. 2018, 2, 13–24.

7. Poola, D.; Salehi, M.A.; Ramamohanarao, K.; Buyya, R. A taxonomy and survey of fault-tolerant workflow management systems in cloud and distributed computing environments. In Software Architecture for Big Data and the Cloud; Elsevier: Amsterdam, The Netherlands, 2017; pp. 285–320.

8. Qin, X.; Jiang, H. A novel fault-tolerant scheduling algorithm for precedence constrained tasks in real-time heterogeneous systems. Parallel Comput. 2006, 32, 331–356.

9. Rodriguez, M.A.; Buyya, R. Deadline based resource provisioning and scheduling algorithm for scientific workflows on clouds. IEEE Trans. Cloud Comput. 2014, 2, 222–235.

10. Zheng, Q. Improving MapReduce fault tolerance in the cloud. In Proceedings of the 2010 IEEE International Symposium on Parallel & Distributed Processing, Workshops and Phd Forum (IPDPSW), Atlanta, GA, USA, 19–23 April 2010; pp. 1–6.

11. Masdari, M.; ValiKardan, S.; Shahi, Z.; Azar, S.I. Towards workflow scheduling in cloud computing: A comprehensive analysis. J. Netw. Comput. Appl. 2016, 66, 64–82.

12. Yaseen, S.G.; Al-Slamy, N. Ant colony optimization. IJCSNS 2008, 8, 351.

13. Verma, A.; Kaushal, S. Cost-time efficient scheduling plan for executing workflows in the cloud. J. Grid Comput. 2015, 13, 495–506.

14. Cao, J.; Chen, J.; Zhao, Q. An optimized scheduling algorithm on a cloud workflow using a discrete particle swarm. Cybern. Inf. Technol. 2014, 14, 25–39.

15. Singh, L.; Singh, S. A survey of workflow scheduling algorithms and research issues. Int. J. Comput. Appl. 2013, 74, 21–28.

16. Lin, C.; Lu, S. Scheduling scientific workflows elastically for cloud computing. In Proceedings of the 2011 IEEE 4th International Conference on Cloud Computing, Washington, DC, USA, 4–9 July 2011; pp. 746–747.

17. Wu, H.; Tang, Z.; Li, R. A priority constrained scheduling strategy of multiple workflows for cloud computing. In Proceedings of the 2012 14th IEEE International Conference on Advanced Communication Technology (ICACT), Washington, DC, USA, 5–10 July 2012; pp. 1086–1089.

18. Verma, A.; Kaushal, S. Deadline and budget distribution based cost-time optimization workflow scheduling algorithm for cloud. In Proceedings of the IJCA Proceedings on International Conference on Recent Advances And Future Trends in Information Technology (iRAFIT 2012), Patiala, India, 21–23 March 2012; iRAFIT (7). Volume 4, pp. 1–4.

19. Zhu, M.M.; Cao, F.; Wu, C.Q. High-throughput scientific workflow scheduling under deadline constraint in clouds. J. Commun. 2014, 9, 312–321.

20. Yassa, S.; Chelouah, R.; Kadima, H.; Granado, B. Multi-objective approach for energy-aware workflow scheduling in cloud computing environments. Sci. World J. 2013, 2013, 350934.

21. Delavar, A.G.; Aryan, Y. A goal-oriented workflow scheduling in heterogeneous distributed systems. Int. J. Comput. Appl. 2012, 52, 27–33.

22. Shengjun, X.; Jie, Z.; Xiaolong, X. An improved algorithm based on ACO for cloud service PDTs scheduling. Adv. Inf. Sci. Serv. Sci. 2012, 4.

23. Ludäscher, B.; Altintas, I.; Berkley, C.; Higgins, D.; Jaeger, E.; Jones, M.; Lee, E.A.; Tao, J.; Zhao, Y. Scientific workflow management and the Kepler system. Concurr. Comput. Pract. Exp. 2006, 18, 1039–1065.

24. Deelman, E.; Vahi, K.; Juve, G.; Rynge, M.; Callaghan, S.; Maechling, P.J.; Mayani, R.; Chen, W.; Da Silva, R.F.; Livny, M.; et al. Pegasus, a workflow management system for science automation. Future Gener. Comput. Syst. 2015, 46, 17–35.

25. Mandal, A.; Kennedy, K.; Koelbel, C.; Marin, G.; Mellor-Crummey, J.; Liu, B.; Johnsson, L. Scheduling strategies for mapping application workflows onto the grid. In Proceedings of the 14th IEEE International Symposium on High Performance Distributed Computing (HPDC-14), Research Triangle Park, NC, USA, 24–27 July 2005; pp. 125–134.

26. Fard, H.M.; Prodan, R.; Barrionuevo, J.J.D.; Fahringer, T. A multi-objective approach for workflow scheduling in heterogeneous environments. In Proceedings of the 2012 12th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (ccgrid 2012), Ottawa, ON, Canada, 13–16 May 2012; pp. 300–309.

27. Prodan, R.; Wieczorek, M. Bi-criteria scheduling of scientific grid workflows. IEEE Trans. Autom. Sci. Eng. 2010, 7, 364–376.

28. Shi, J.; Luo, J.; Dong, F.; Zhang, J. A budget and deadline aware scientific workflow resource provisioning and scheduling mechanism for cloud. In Proceedings of the 2014 IEEE 18th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Hsinchu, Taiwan, 21–23 May 2014; pp. 672–677.

29. Alkhanak, E.N.; Lee, S.P.; Rezaei, R.; Parizi, R.M. Cost optimization approaches for scientific workflow scheduling in cloud and grid computing: A review, classifications, and open issues. J. Syst. Softw. 2016, 113, 1–26.

30. Anghel, L.; Alexandrescu, D.; Nicolaidis, M. Evaluation of a soft error tolerance technique based on time and/or space redundancy. In Proceedings of the 13th Symposium on Integrated Circuits and Systems Design (Cat. No. PR00843), Manaus, Brazil, 18–24 September 2000; pp. 237–242.

31. Hwang, S.; Kesselman, C. Grid workflow: A flexible failure handling framework for the grid. In Proceedings of the 12th IEEE International Symposium on High Performance Distributed Computing, Seattle, WA, USA, 22–24 June 2003; pp. 126–137.

32. Gao, Y.; Gupta, S.K.; Wang, Y.; Pedram, M. An energy-aware fault tolerant scheduling framework for soft error resilient cloud computing systems. In Proceedings of the Conference on Design, Automation & Test in Europe. European Design and Automation Association, Dresden, Germany, 24–28 March 2014; p. 94.

33. Bala, A.; Chana, I. Intelligent failure prediction models for scientific workflows. Expert Syst. Appl. 2015, 42, 980–989.

34. Ghosh, S.; Melhem, R.; Mossé, D. Fault-tolerance through scheduling of aperiodic tasks in hard real-time multiprocessor systems. IEEE Trans. Parallel Distrib. Syst. 1997, 8, 272–284.

35. Manimaran, G.; Murthy, C.S.R. A fault-tolerant dynamic scheduling algorithm for multiprocessor real-time systems and its analysis. IEEE Trans. Parallel Distrib. Syst. 1998, 9, 1137–1152.

36. Sun, D.; Zhang, G.; Wu, C.; Li, K.; Zheng, W. Building a fault tolerant framework with deadline guarantee in big data stream computing environments. J. Comput. Syst. Sci. 2017, 89, 4–23.

37. Qiu, X.; Dai, Y.; Xiang, Y.; Xing, L. Correlation modeling and resource optimization for cloud service with fault recovery. IEEE Trans. Cloud Comput. 2017.

38. Benoit, A.; Hakem, M.; Robert, Y. Multi-criteria scheduling of precedence task graphs on heterogeneous platforms. Comput. J. 2010, 53, 772–785.

39. Xie, G.; Zeng, G.; Li, R.; Li, K. Quantitative fault-tolerance for reliable workflows on heterogeneous IaaS clouds. IEEE Trans. Cloud Comput. 2017.

40. Mei, J.; Li, K.; Zhou, X.; Li, K. Fault-tolerant dynamic rescheduling for heterogeneous computing systems. J. Grid Comput. 2015, 13, 507–525.

41. Chen, C.Y. Task scheduling for maximizing performance and reliability considering fault recovery in heterogeneous distributed systems. IEEE Trans. Parallel Distrib. Syst. 2016, 27, 521–532.

42. Chen, H.C.; Hu, Y.; Lee, P.P.; Tang, Y. NCCloud: A network-coding-based storage system in a cloud-of-clouds. IEEE Trans. Comput. 2014, 63, 31–44.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).