Haze Image Recognition Based on Brightness Optimization Feedback and Color Correction

Abstract

1. Introduction

2. Theories

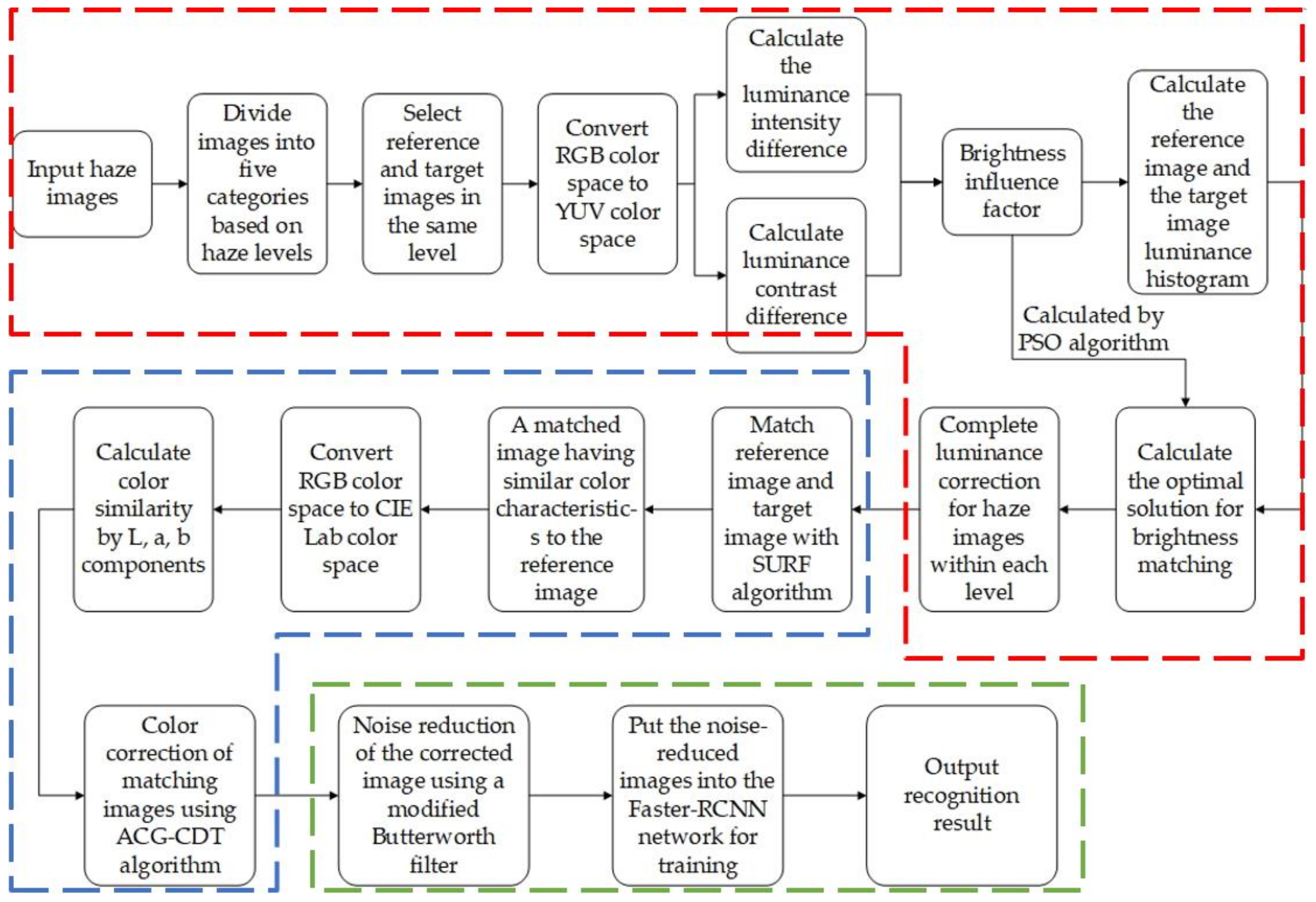

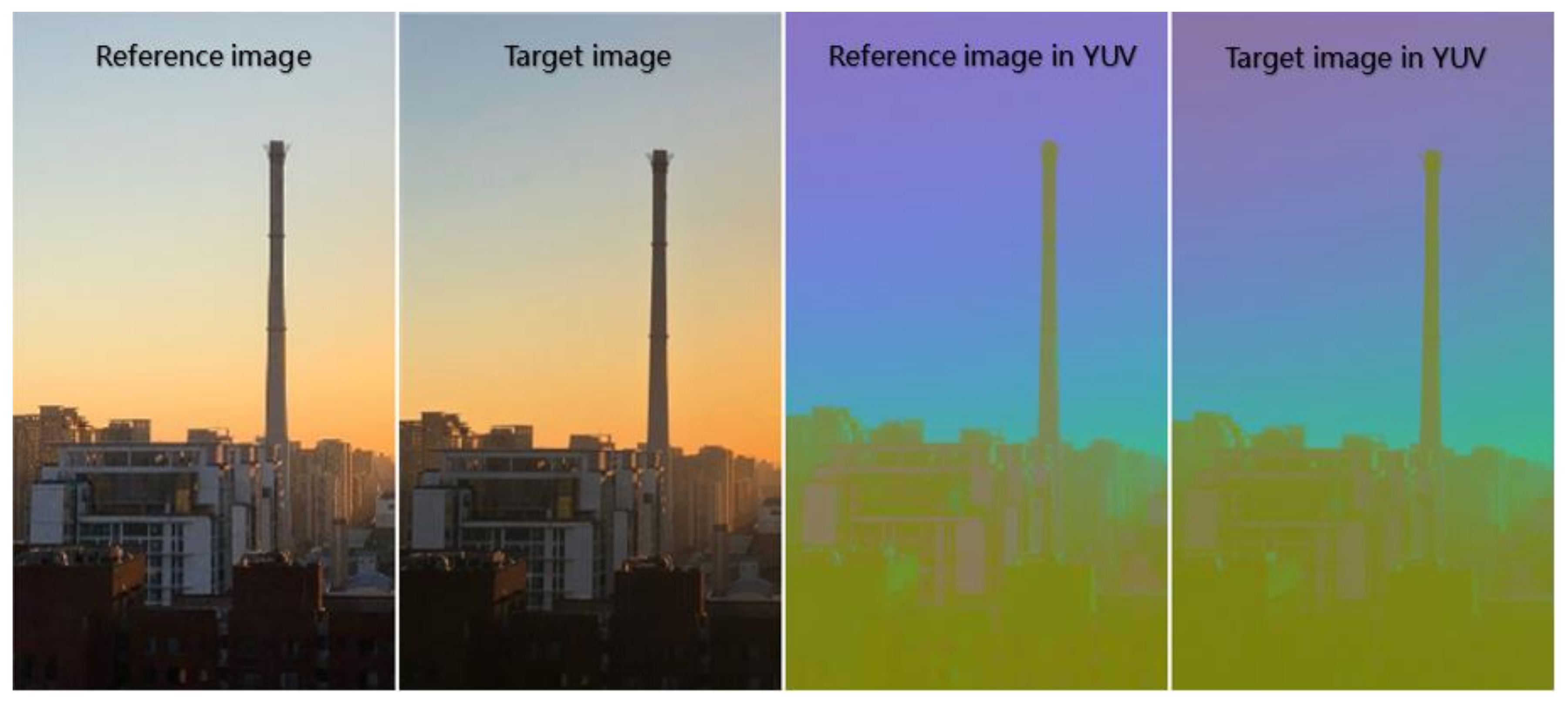

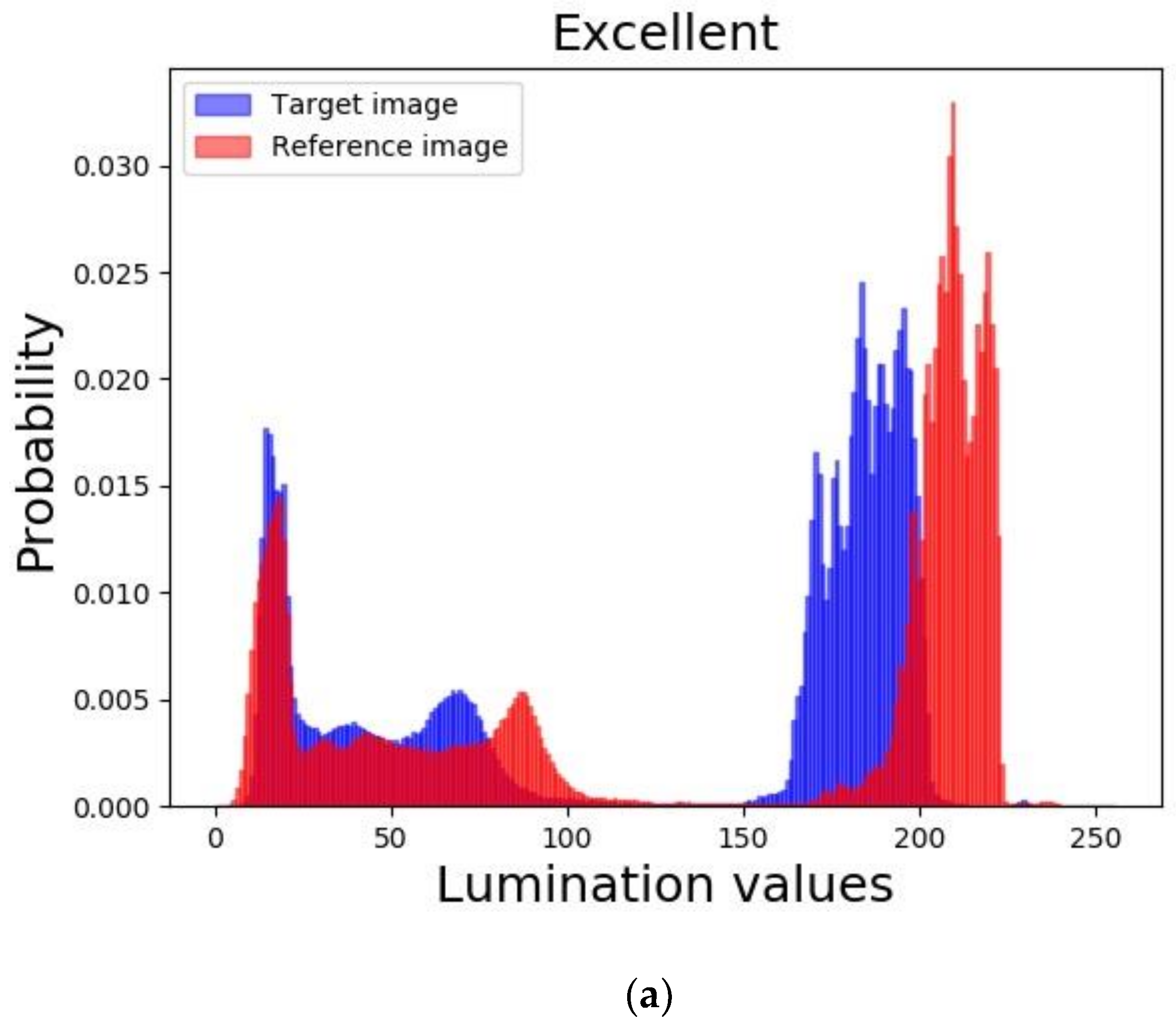

2.1. Brightness Correction Based on PSO Algorithm for Optimal Solution

2.1.1. Image Brightness Influence Factor

2.1.2. PSO Algorithm for Calculating the Optimal Solution of Histogram Matching

| Algorithm 1: Choosing the best α using PSO |
|---|
| Input: reference image and target image |
| Create N particles, each representing a candidate α; |
| for each particle do |
| Initialize the position of the particle and its corresponding velocity; |
| end for |
| for each iteration, up to the maximal iteration, do |
| for each particle do |
| Calculate the objective function using equation (12); |
| if the objective value is better than the particle's personal best then |
| update the particle's personal best; |
| end if |
| if the objective value is better than the global best then |
| update the global best; |
| end if |
| end for |
| for each particle do |
| update the velocity using equation (13); |
| update the position using equation (15); |
| end for |
| end for |
| Output: the optimal α |
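The loop structure of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: equations (12), (13), and (15) are not reproduced in this text, so `toy_objective` is a hypothetical stand-in for equation (12), the velocity and position updates follow the textbook PSO form, and the linear decay of the inertia weight, the zero initial velocity, the clamping of α to [0, 1], and the choice to maximize the objective are all assumptions. The default arguments mirror the values in the parameter table (15 particles, 10 iterations, learning factors of 2, inertia weight between 0.9 and 0.1).

```python
import random


def pso_best_alpha(objective, n_particles=15, max_iter=10, c1=2.0, c2=2.0,
                   w_max=0.9, w_min=0.1, seed=0):
    """Search alpha in [0, 1] for the largest objective(alpha) (assumed goal)."""
    rng = random.Random(seed)
    pos = [rng.random() for _ in range(n_particles)]  # initial alphas
    vel = [0.0] * n_particles                          # assumed zero start
    pbest = list(pos)
    pbest_val = [float("-inf")] * n_particles
    gbest, gbest_val = pos[0], float("-inf")

    for t in range(max_iter):
        # Evaluate every particle and refresh personal and global bests.
        for i in range(n_particles):
            val = objective(pos[i])
            if val > pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i], val
            if val > gbest_val:
                gbest, gbest_val = pos[i], val
        # Assumed linear decay of the inertia weight from w_max to w_min.
        w = w_max - (w_max - w_min) * t / max(max_iter - 1, 1)
        # Move every particle: velocity first, then position.
        for i in range(n_particles):
            r1, r2 = rng.random(), rng.random()
            vel[i] = (w * vel[i]
                      + c1 * r1 * (pbest[i] - pos[i])
                      + c2 * r2 * (gbest - pos[i]))
            pos[i] = min(1.0, max(0.0, pos[i] + vel[i]))  # keep alpha in [0, 1]
    return gbest, gbest_val


def toy_objective(alpha):
    """Hypothetical stand-in for equation (12); peaks at alpha = 0.3."""
    return -(alpha - 0.3) ** 2
```

Calling `pso_best_alpha(toy_objective)` returns the best α found and its objective value. In practice the objective would score how well the histogram-matched image agrees with the reference image for a given α.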

2.2. Color Correction Algorithm Based on Feature Matching

2.2.1. Color Similarity

2.2.2. Image Matching and Color Correction

- Construct a scale space for the reference image and for the registration image. The scale levels of the reference image form N feature sets and those of the registration image form M feature sets; arrange both groups of feature sets according to their scale.

- Keep the registration image feature sets unchanged and put the feature sets of the reference image in one-to-one correspondence with them. Count the matched pairs of corresponding feature points.

- Keep the registration image feature sets unchanged, arrange the feature sets of the reference image in reverse order, and recount the matched pairs of corresponding feature points.

- Compare the two counts of matched pairs and take the ordering with the larger count as the feature point matching relationship. This relationship is used as the image registration relationship to calculate the affine matrix and achieve registration.
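The choice between the two orderings can be sketched as below. This is a minimal sketch under stated assumptions: feature sets are modelled as plain Python sets of descriptor labels, `toy_match_count` is a hypothetical matcher that counts shared labels, and when N and M differ the pairing simply stops at the shorter list. Feature extraction and the affine matrix estimation are outside the sketch.

```python
def count_pairs(ref_sets, reg_sets, match_count):
    """Sum the matched pairs over feature sets paired one-to-one by position."""
    return sum(match_count(ref, reg) for ref, reg in zip(ref_sets, reg_sets))


def choose_matching_order(ref_sets, reg_sets, match_count):
    """Try the reference sets in forward and reverse order; keep the better one."""
    forward = count_pairs(ref_sets, reg_sets, match_count)
    reverse = count_pairs(ref_sets[::-1], reg_sets, match_count)
    if forward >= reverse:
        return "forward", forward
    return "reverse", reverse


def toy_match_count(ref_features, reg_features):
    """Hypothetical matcher: two features match when their labels are equal."""
    return len(set(ref_features) & set(reg_features))
```

For example, with `ref = [{1, 2}, {3, 4}, {5, 6}]` and `reg = [{5, 6}, {3}, {1, 2}]`, the forward pairing finds 1 match and the reverse pairing finds 5, so the reverse ordering would be used for registration.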

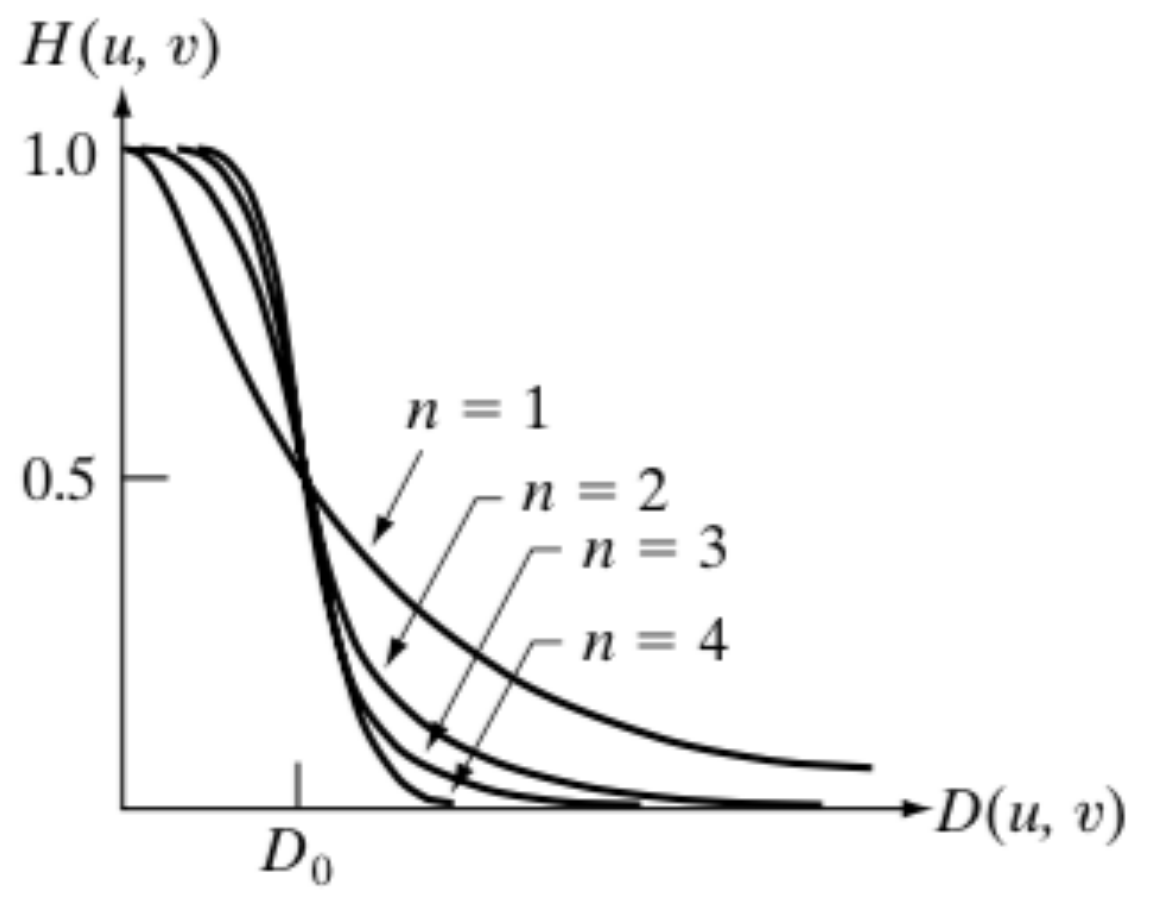

2.3. Improved Butterworth Filter

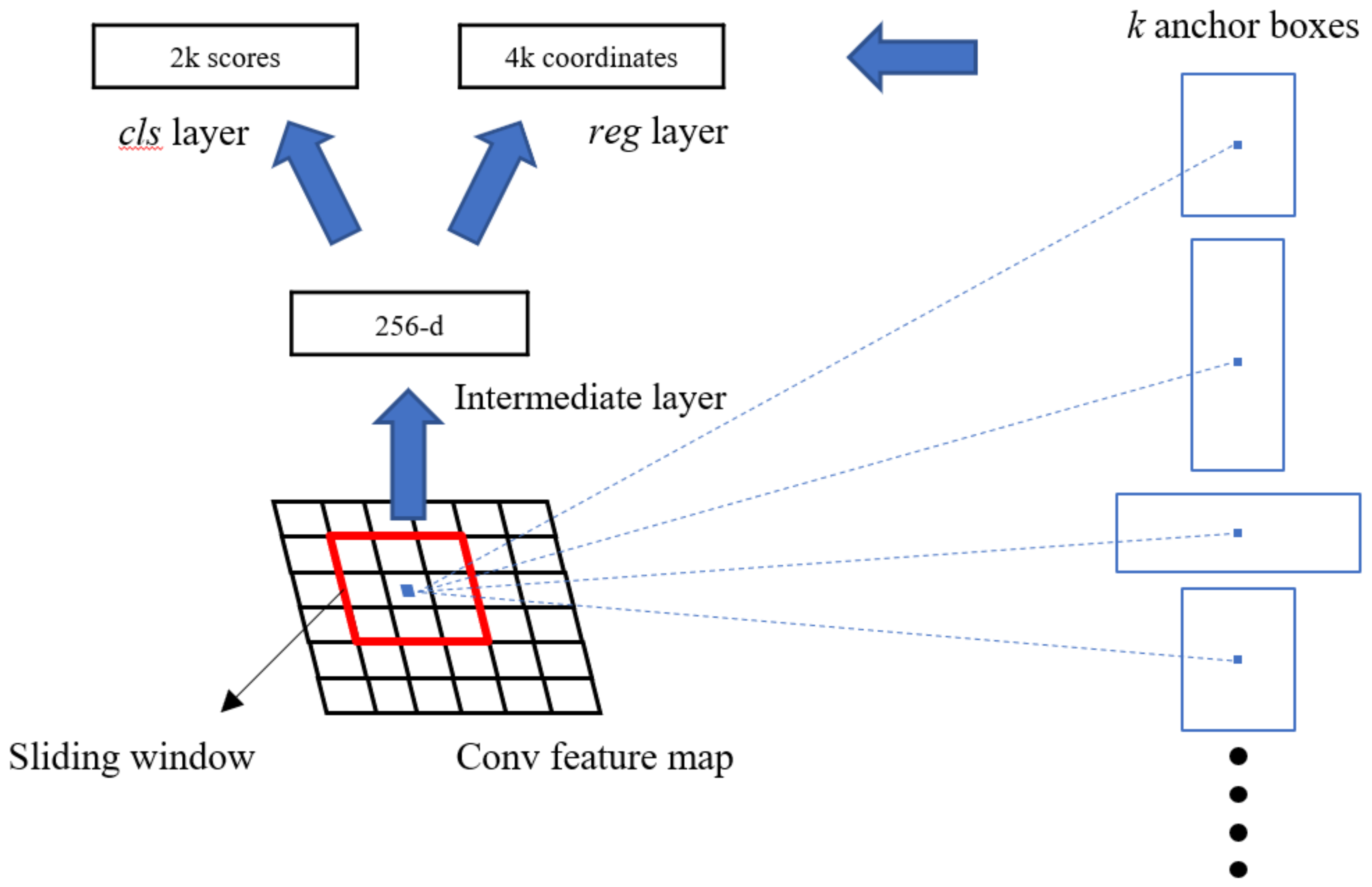

2.4. Haze Image Recognition Based on Faster R-CNN

- Basic feature extraction network;

- RPN (region proposal network);

- Fast R-CNN. The RPN and Fast R-CNN networks share parameters through alternating training.

3. Experiments and Comparisons

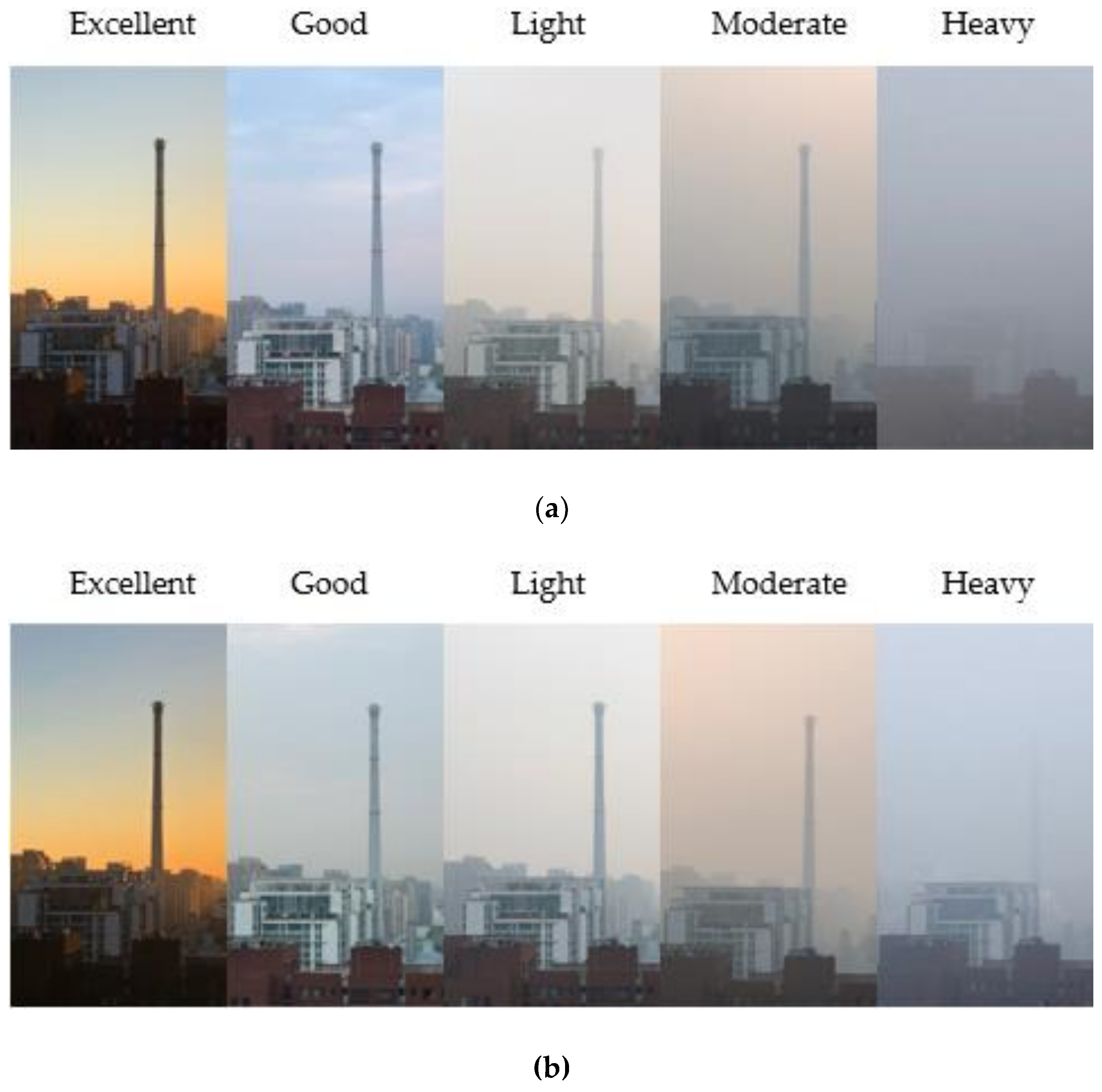

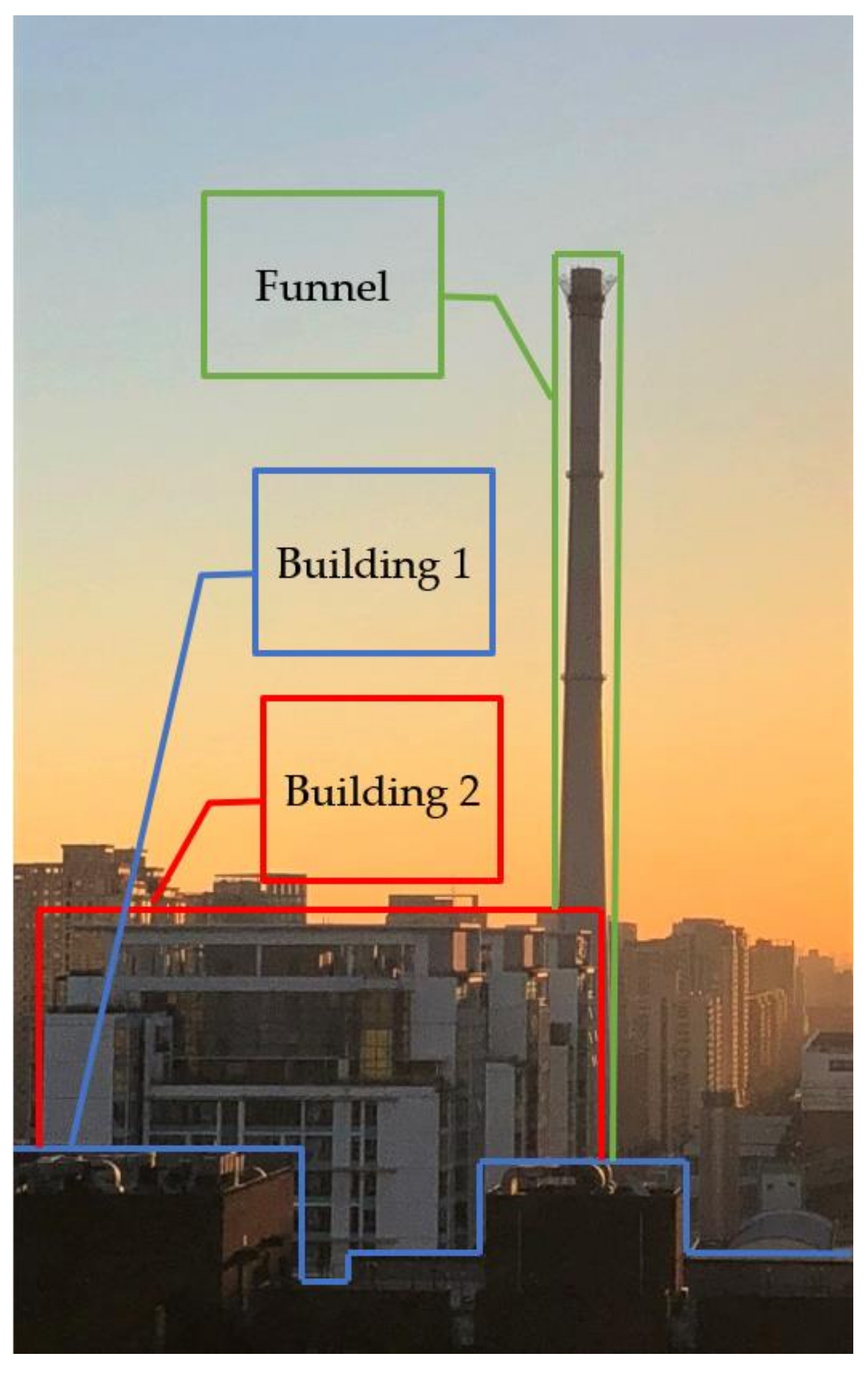

3.1. Image Data Description

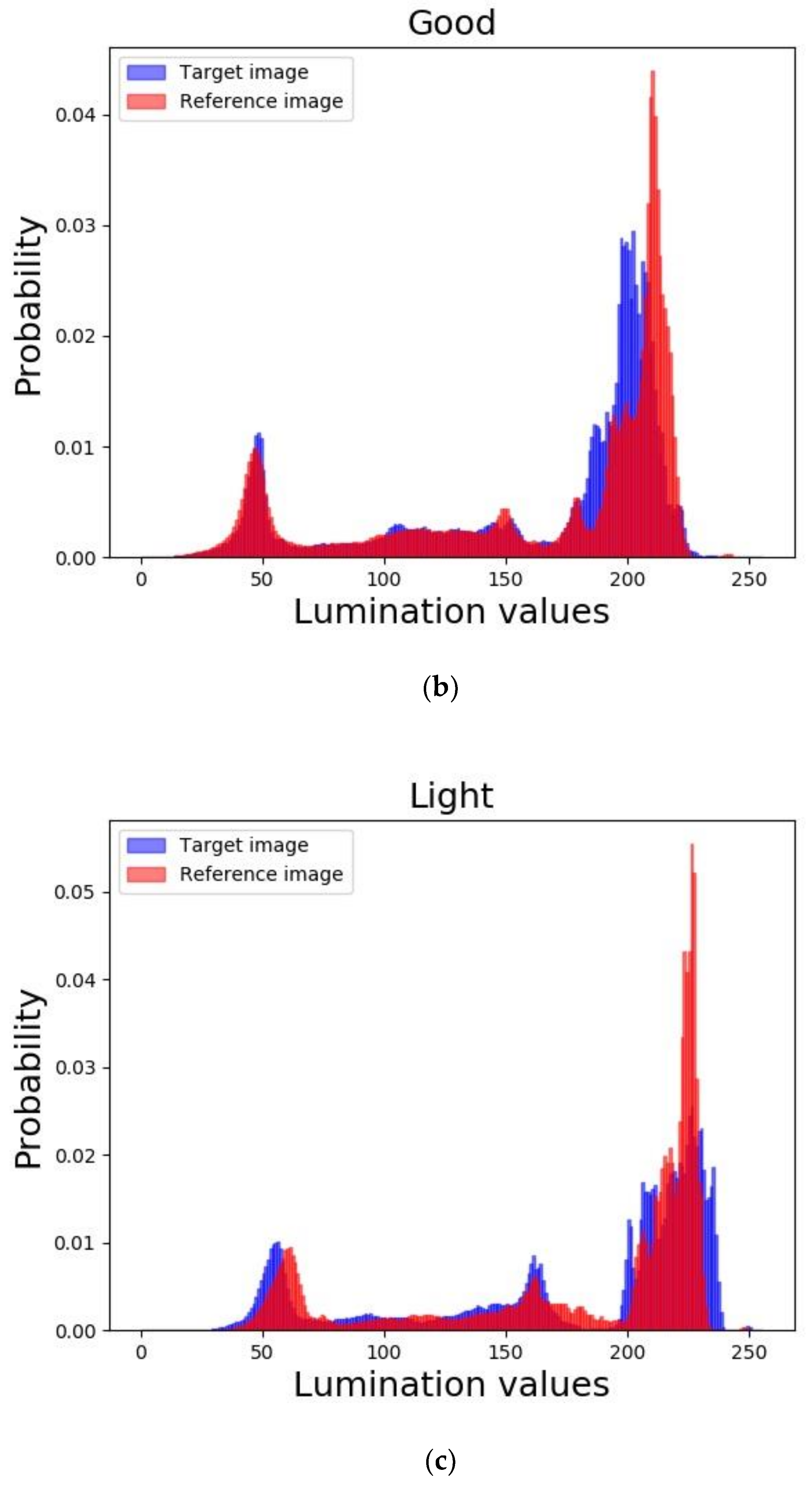

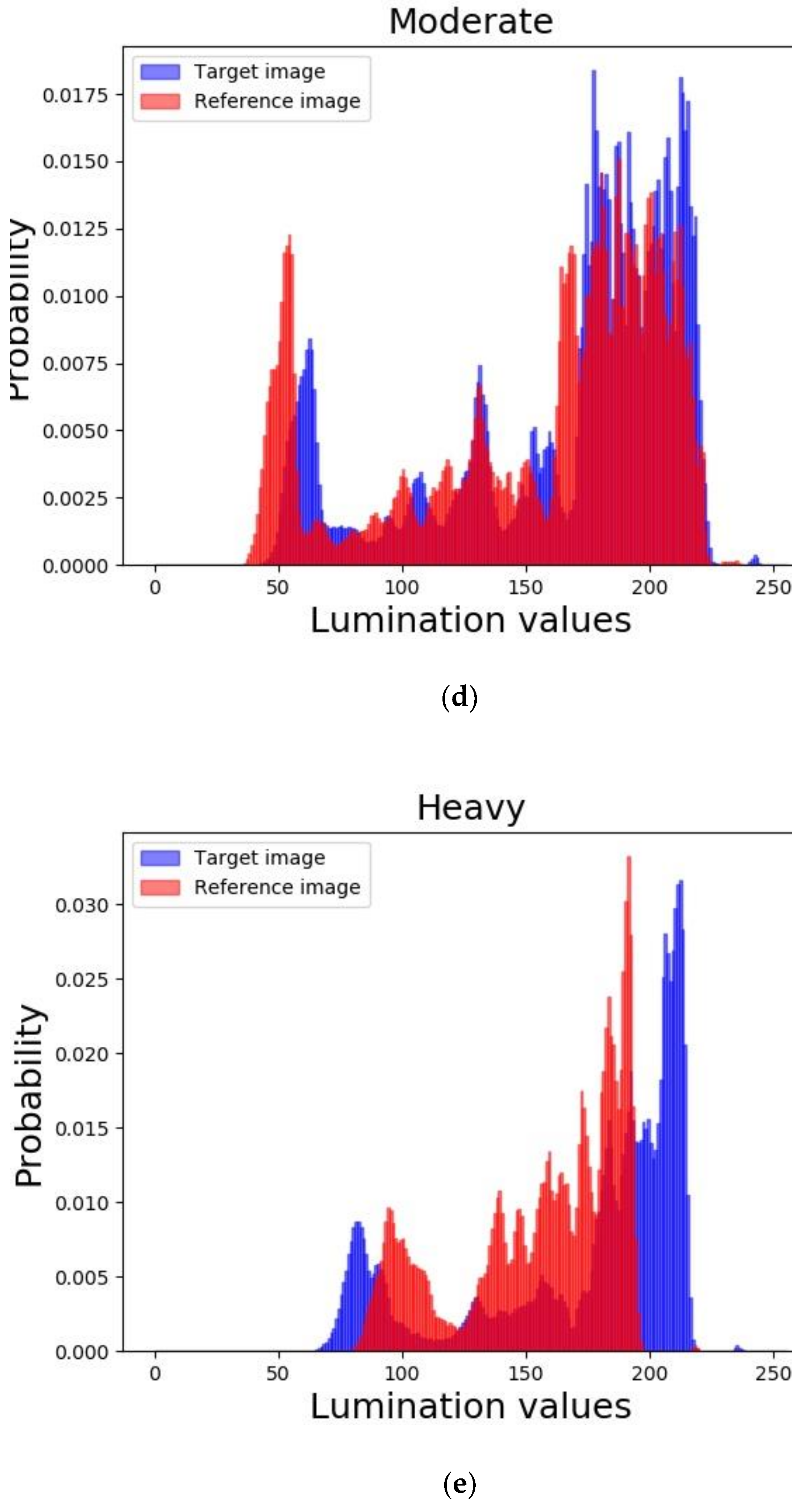

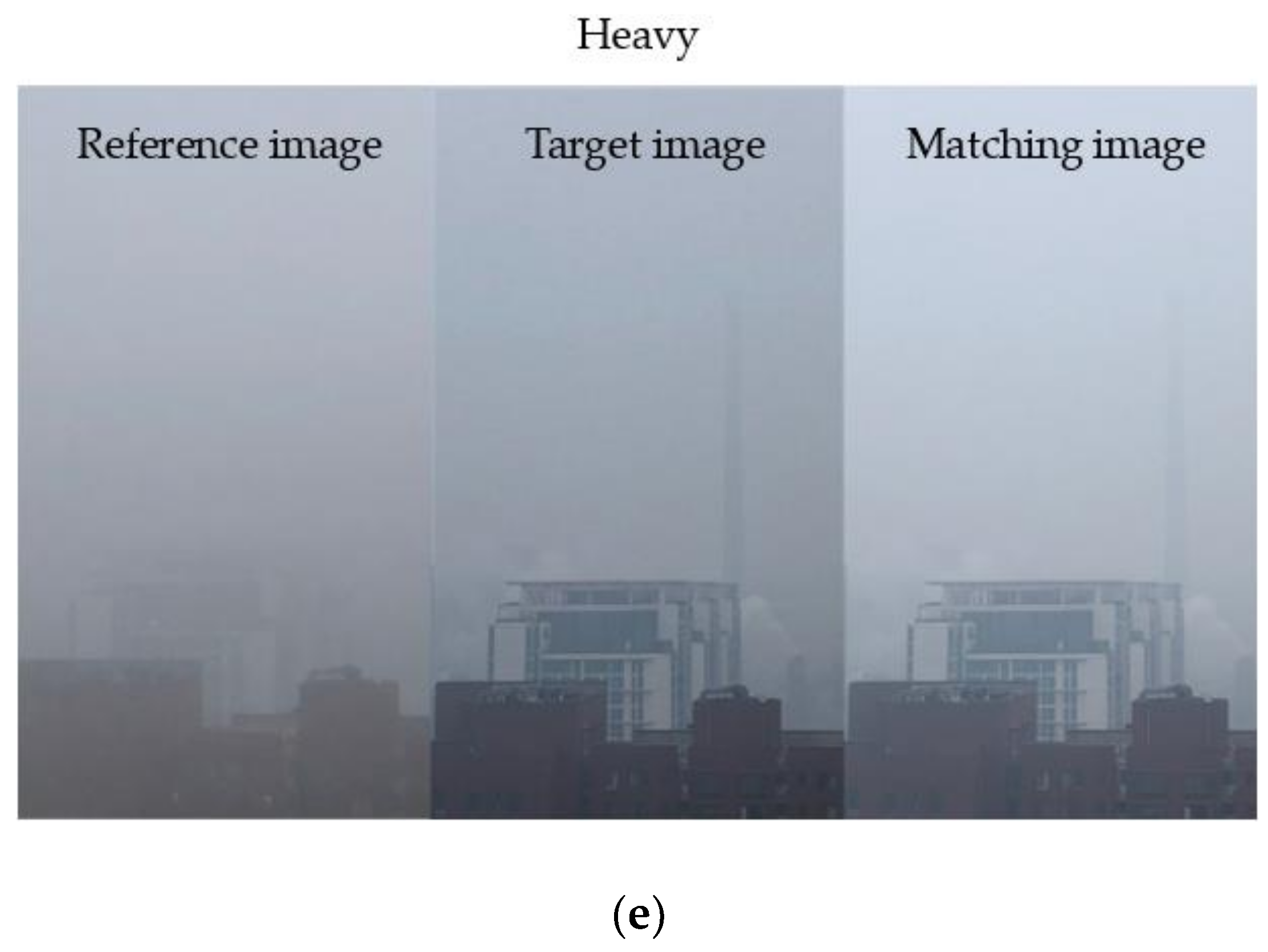

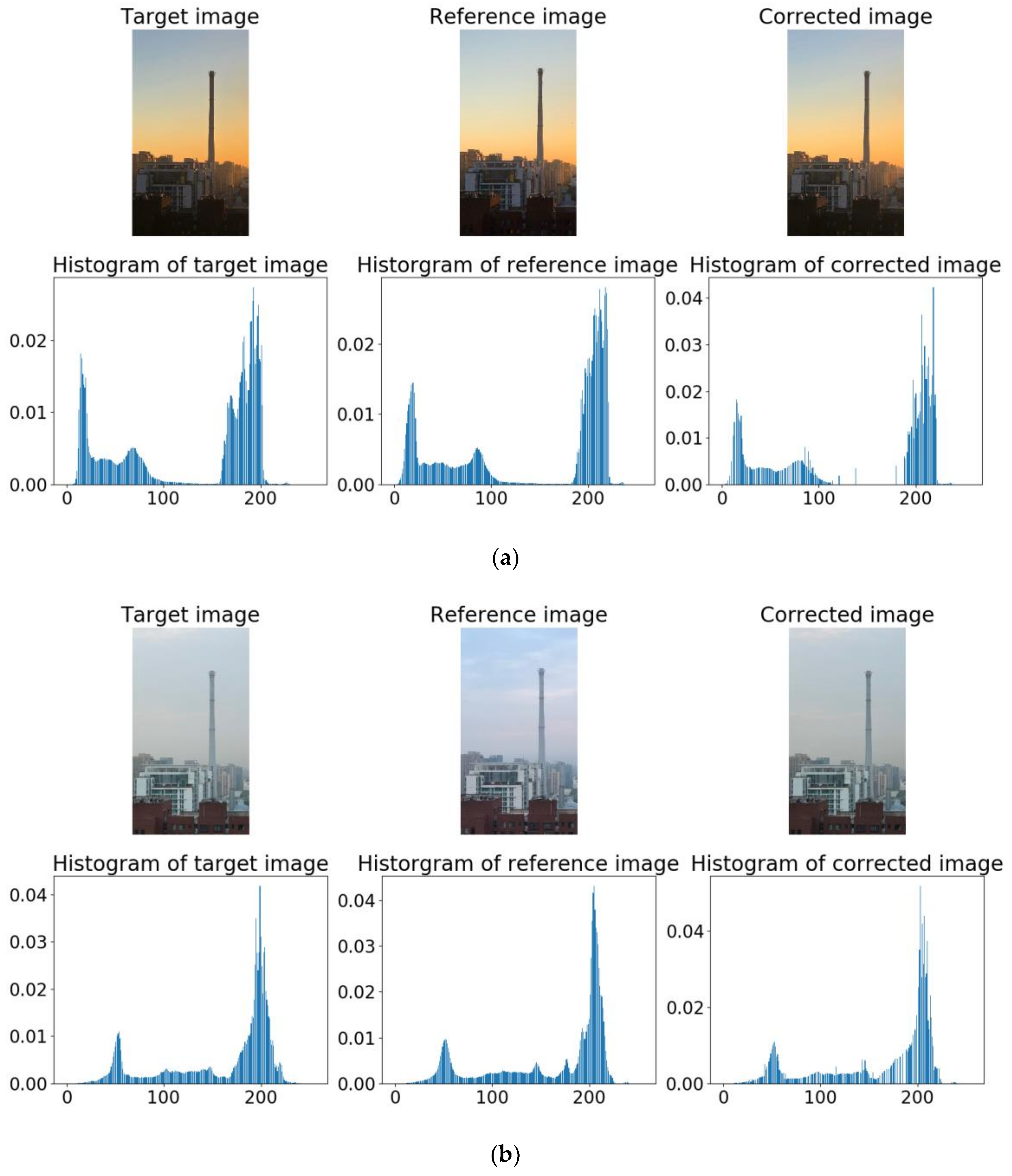

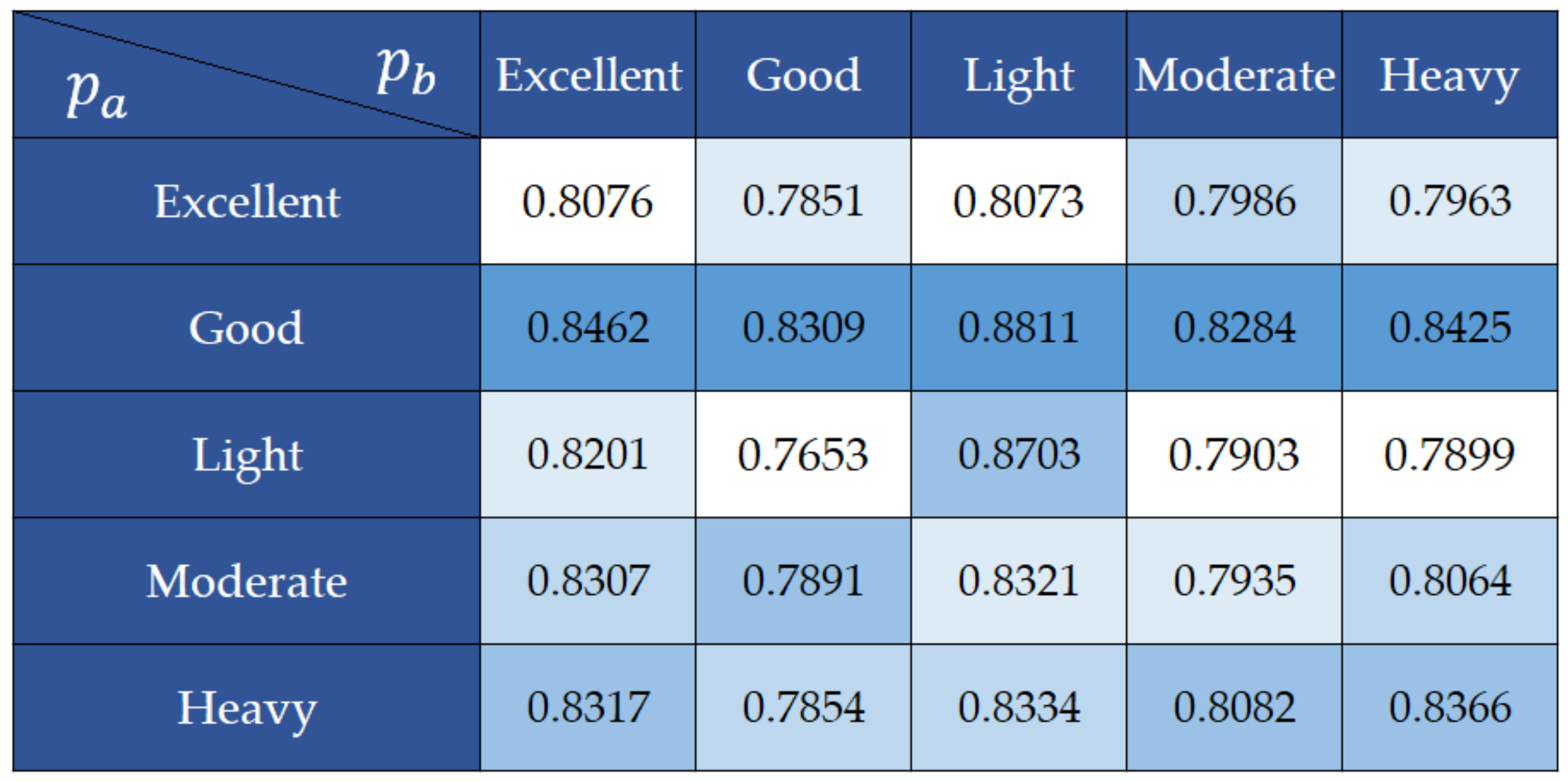

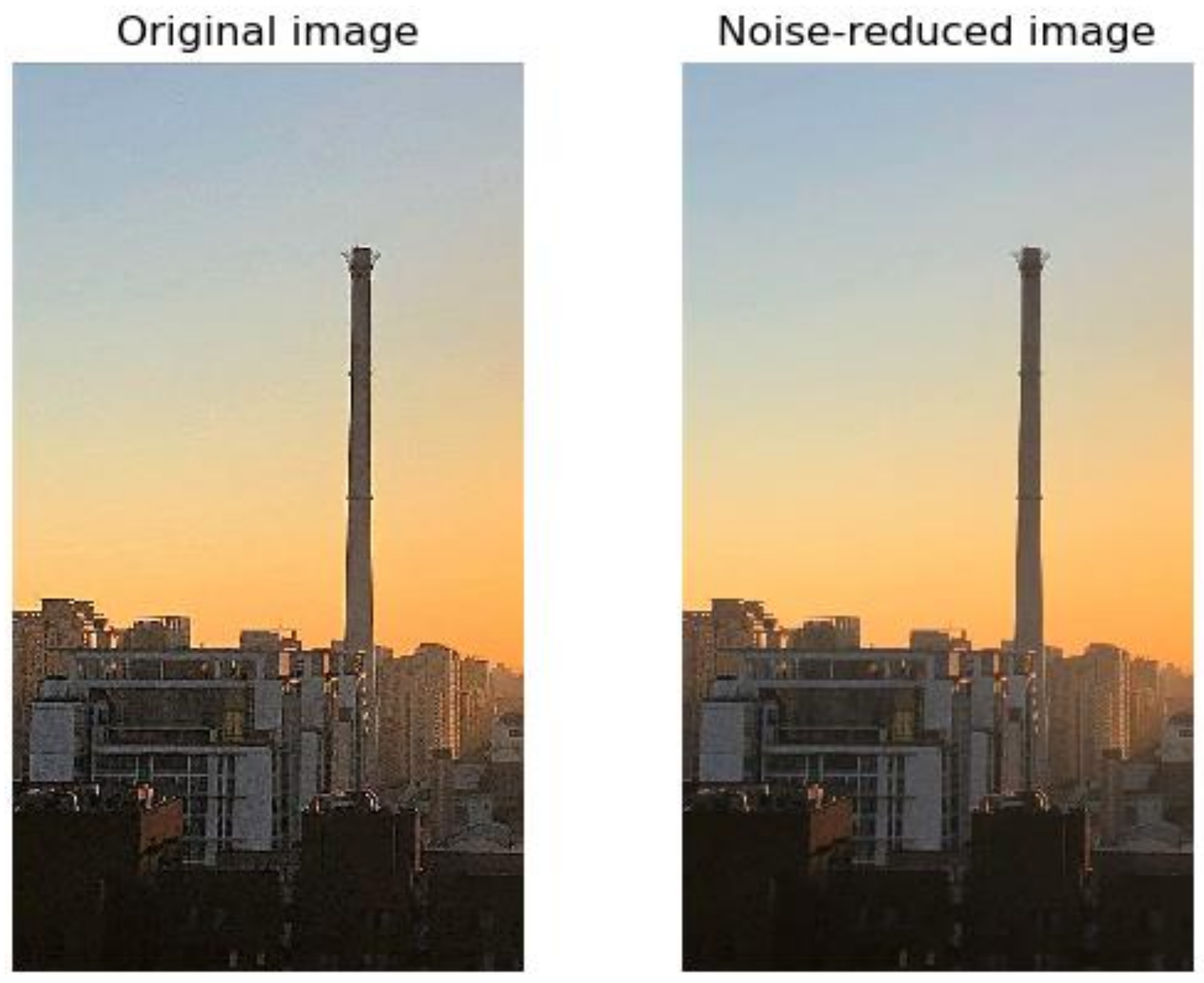

3.2. Brightness Correction Experiment

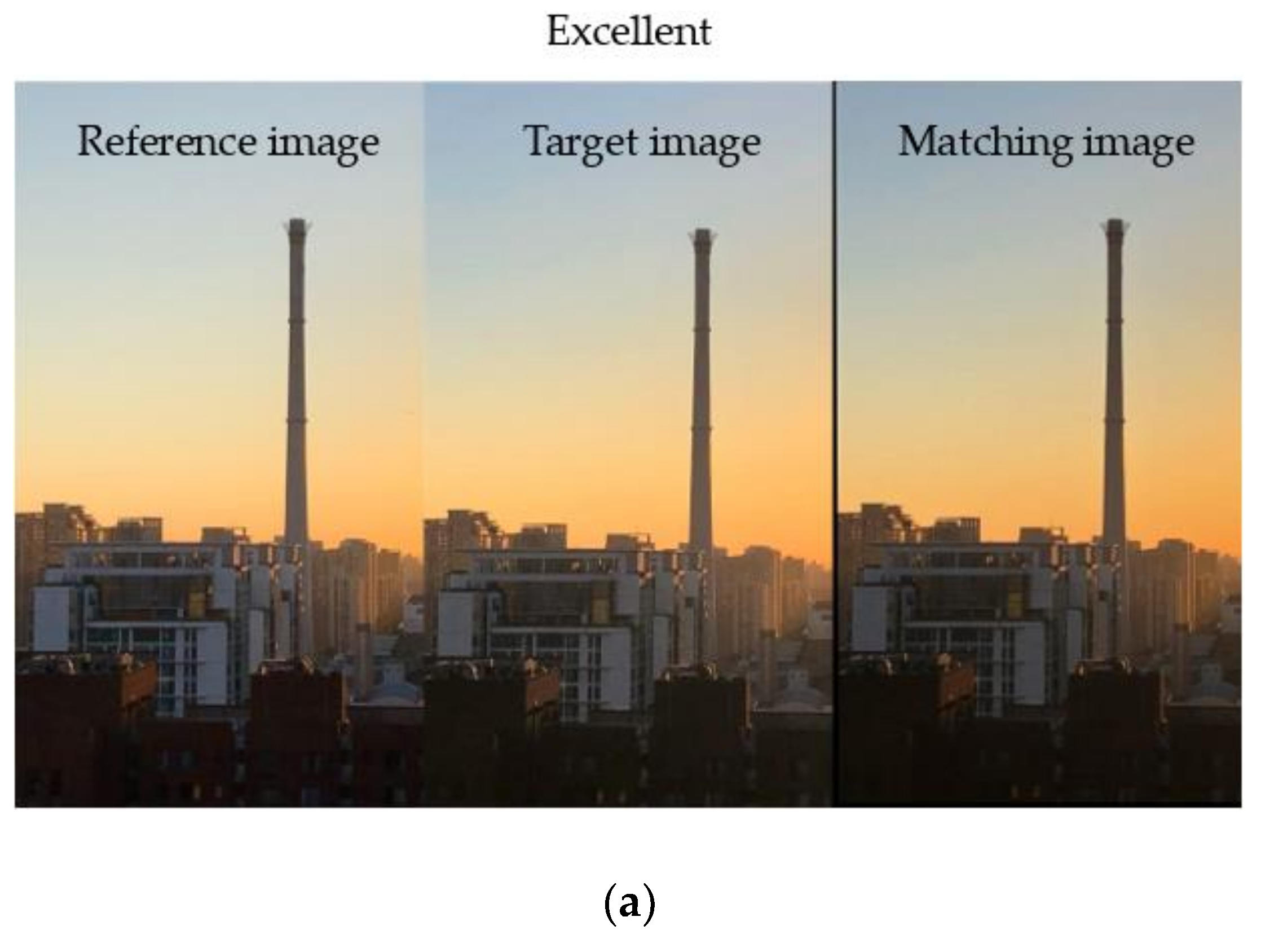

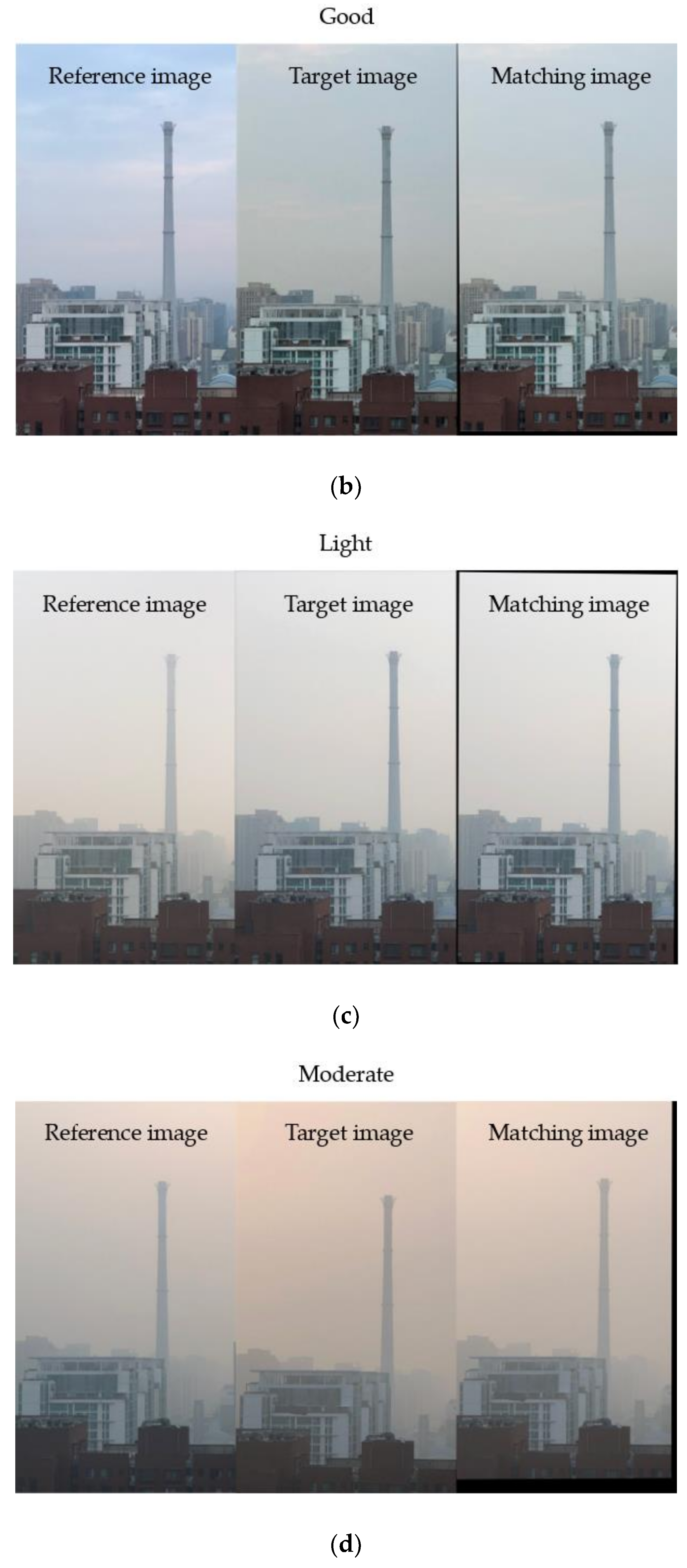

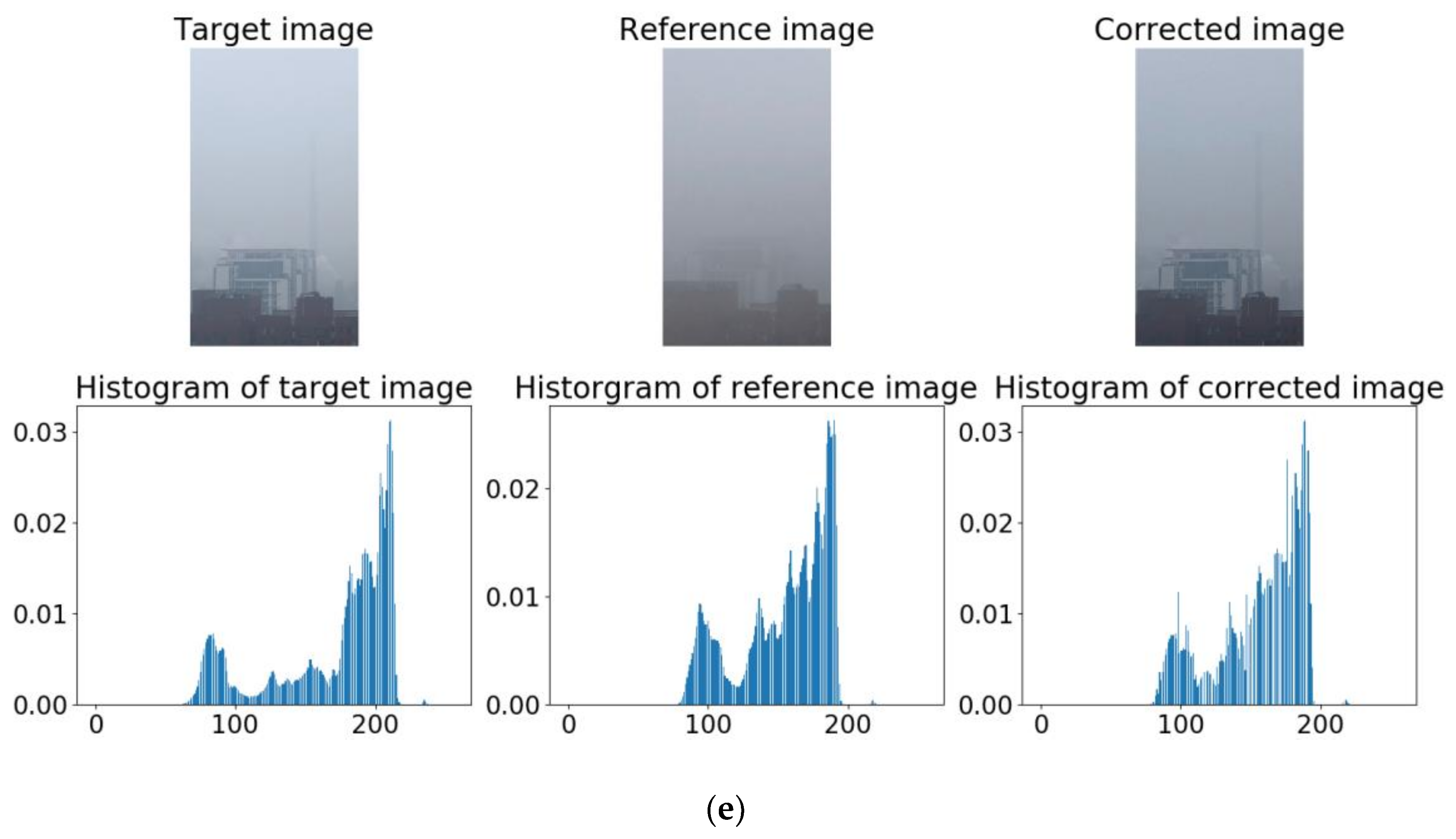

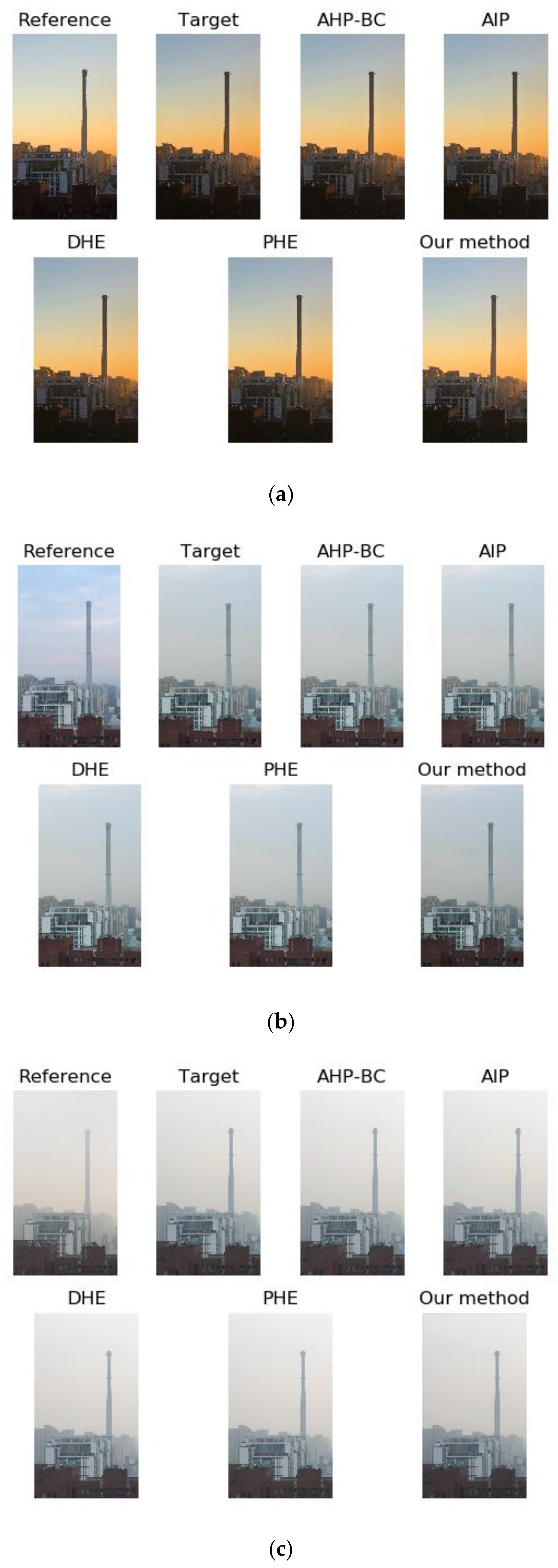

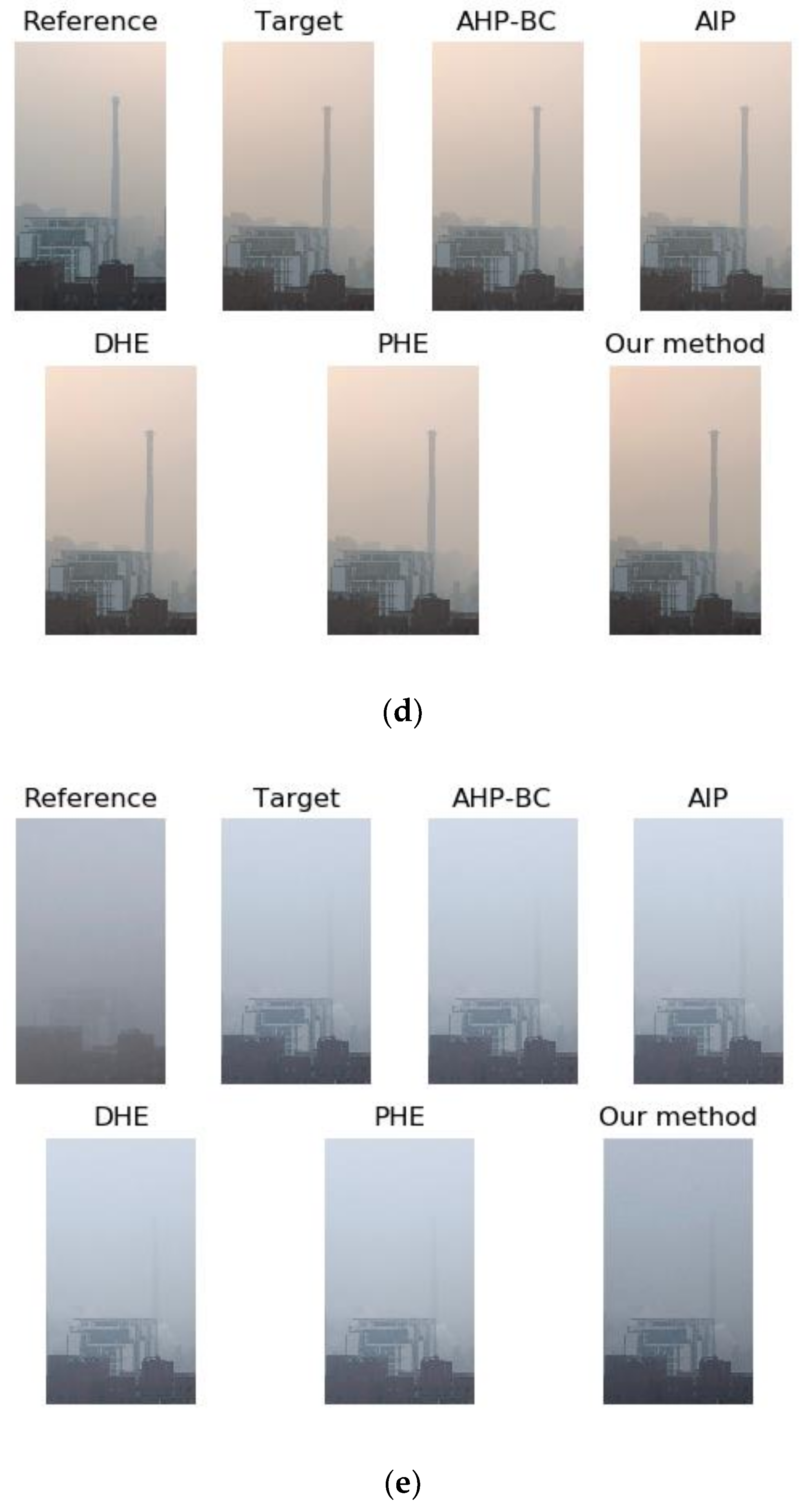

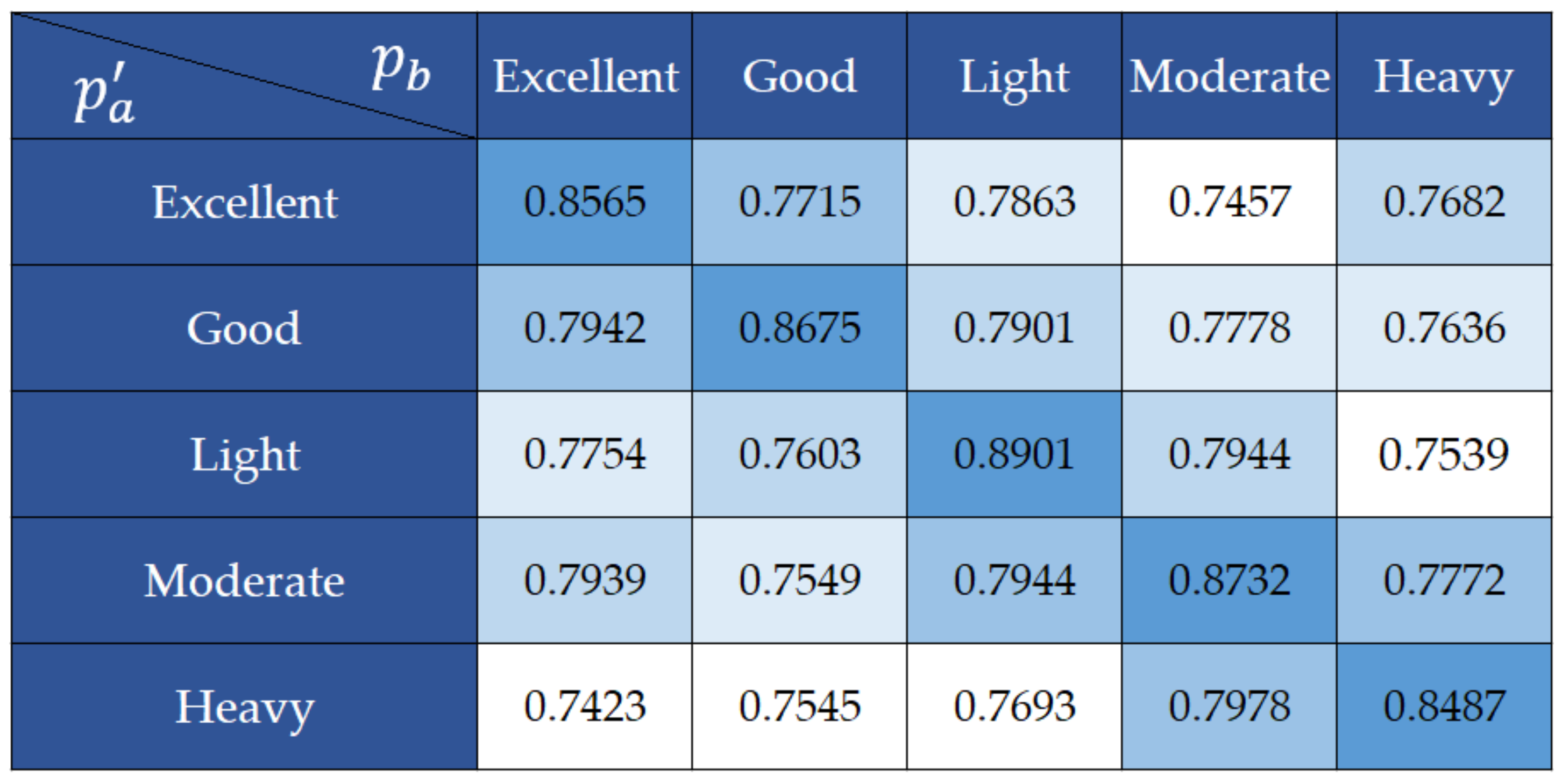

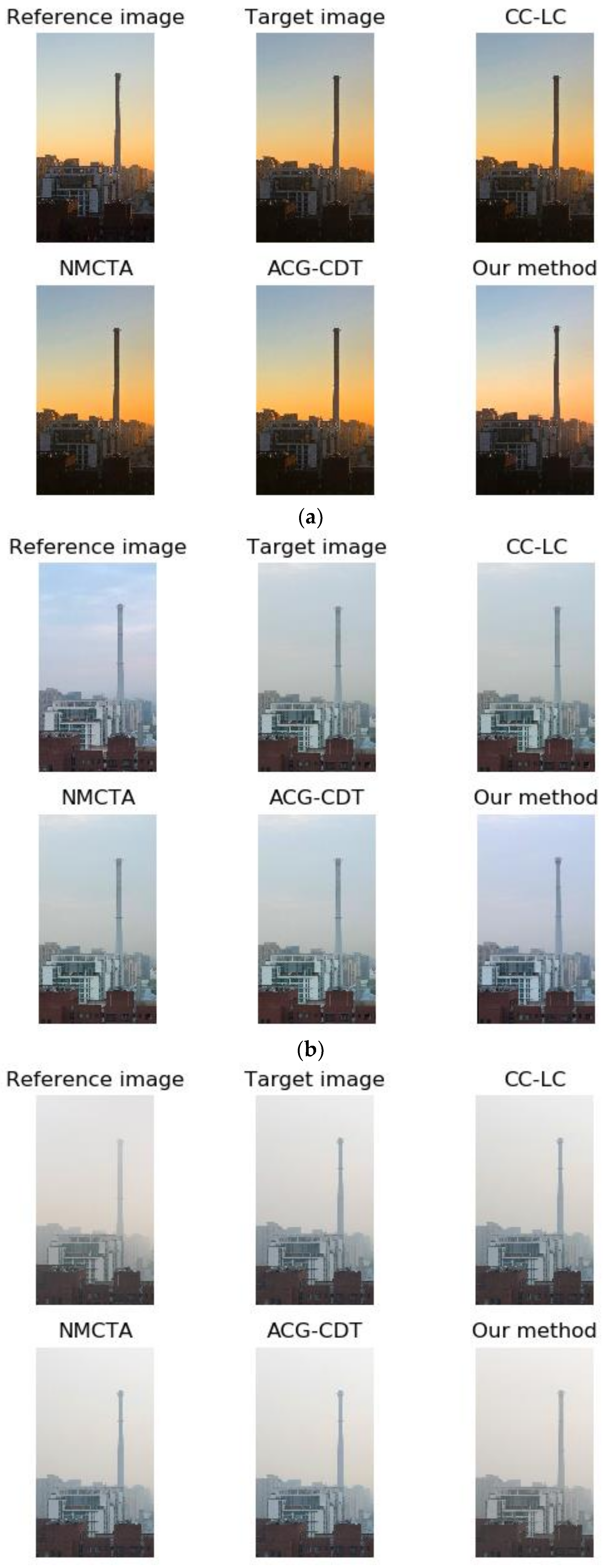

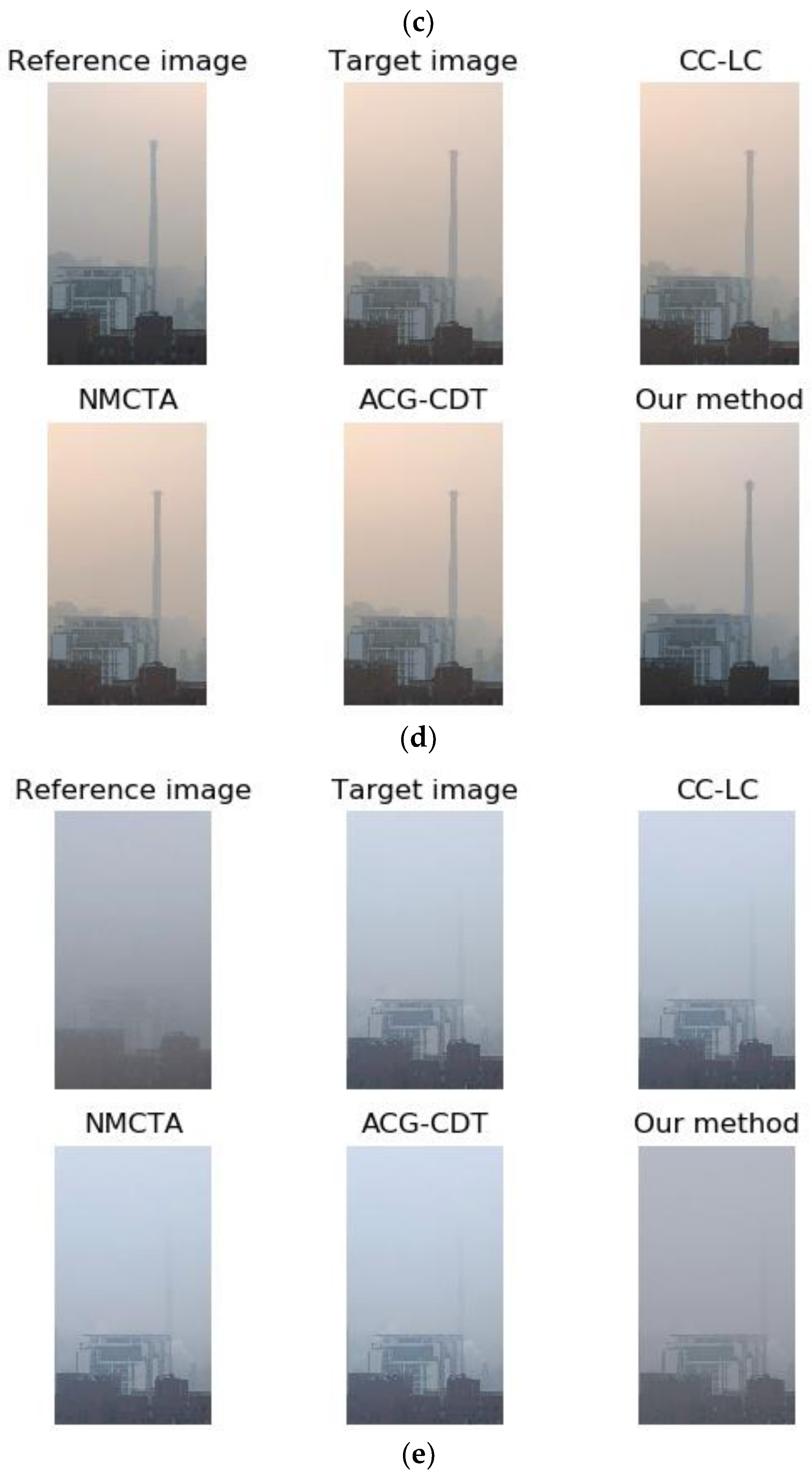

3.3. Color Correction Experiment

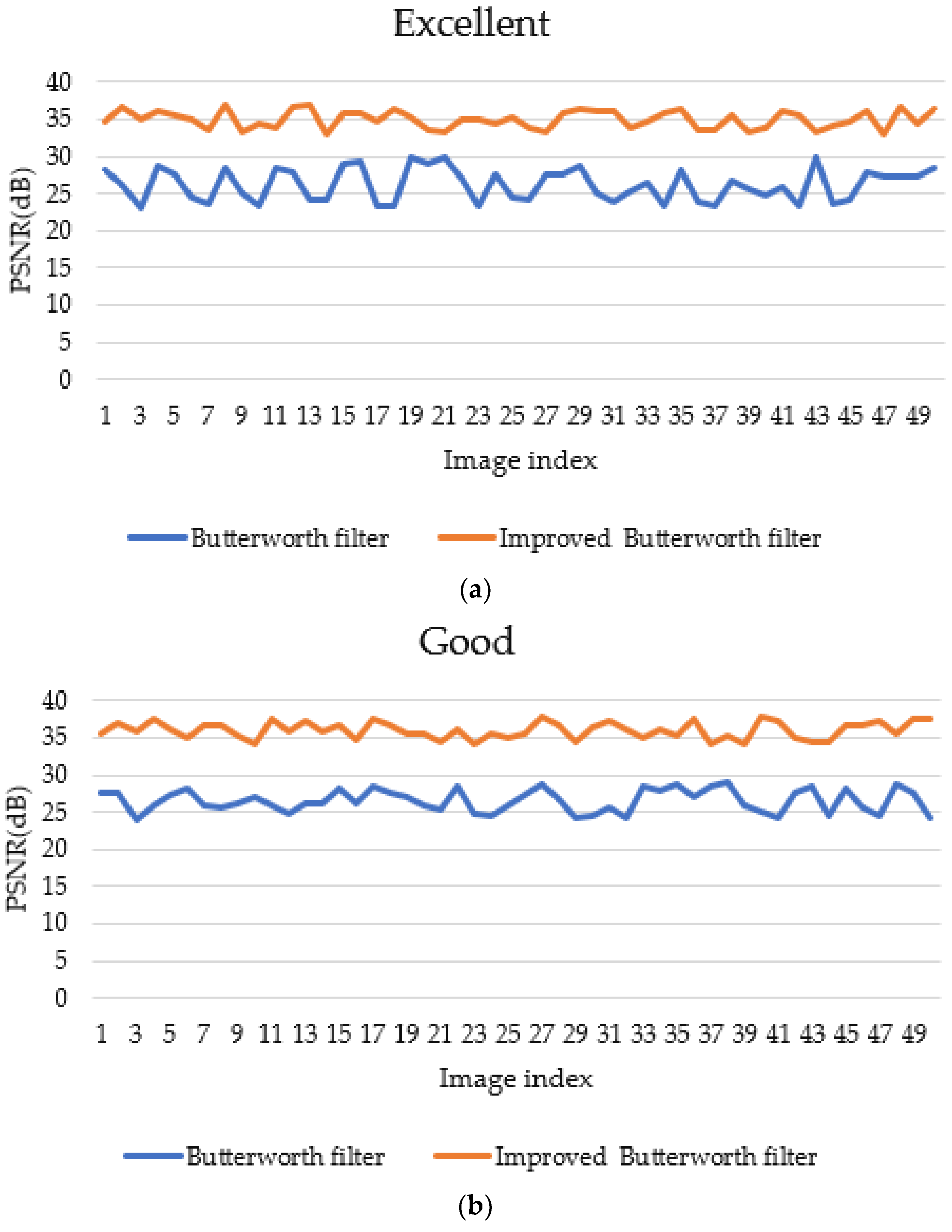

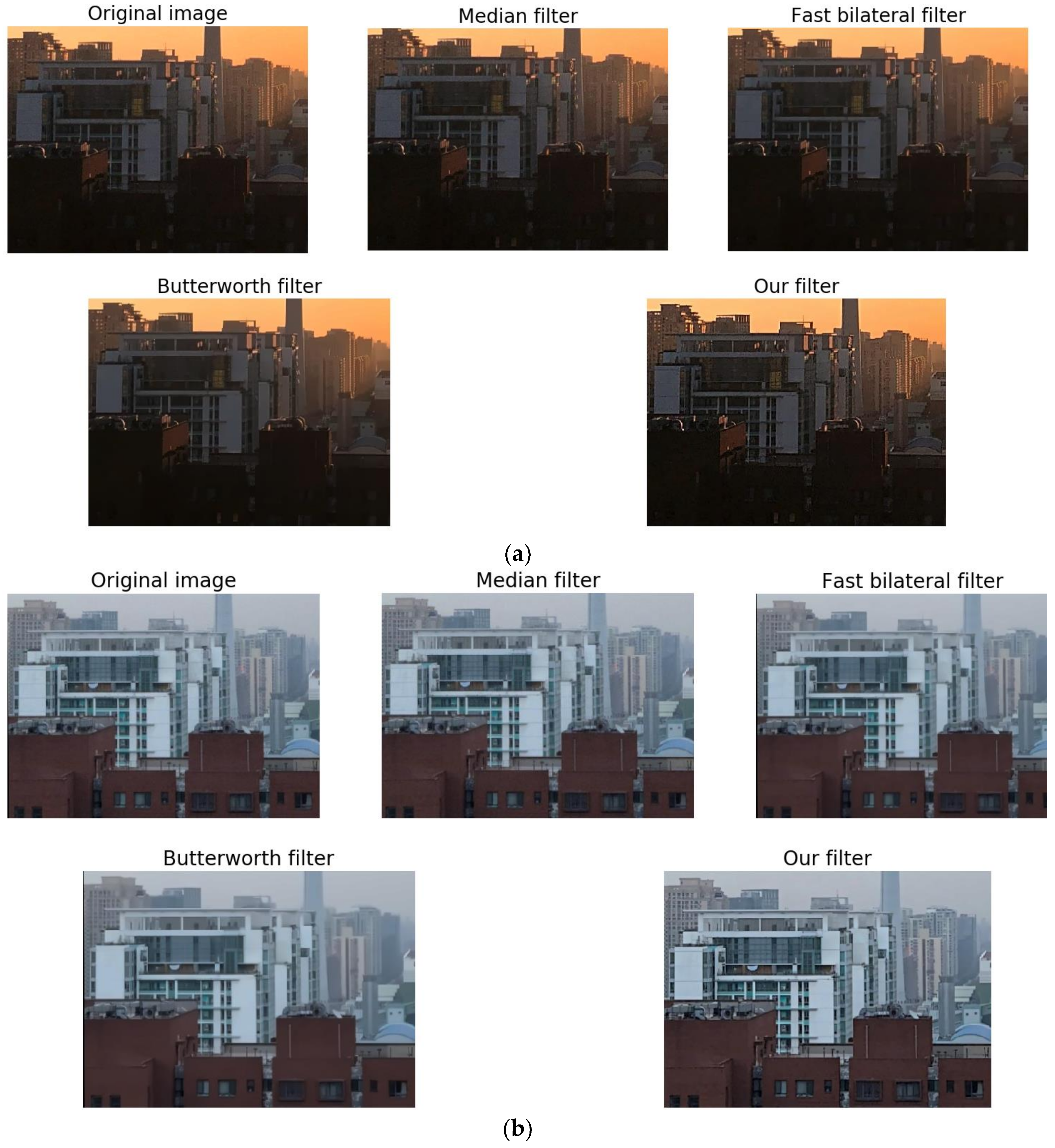

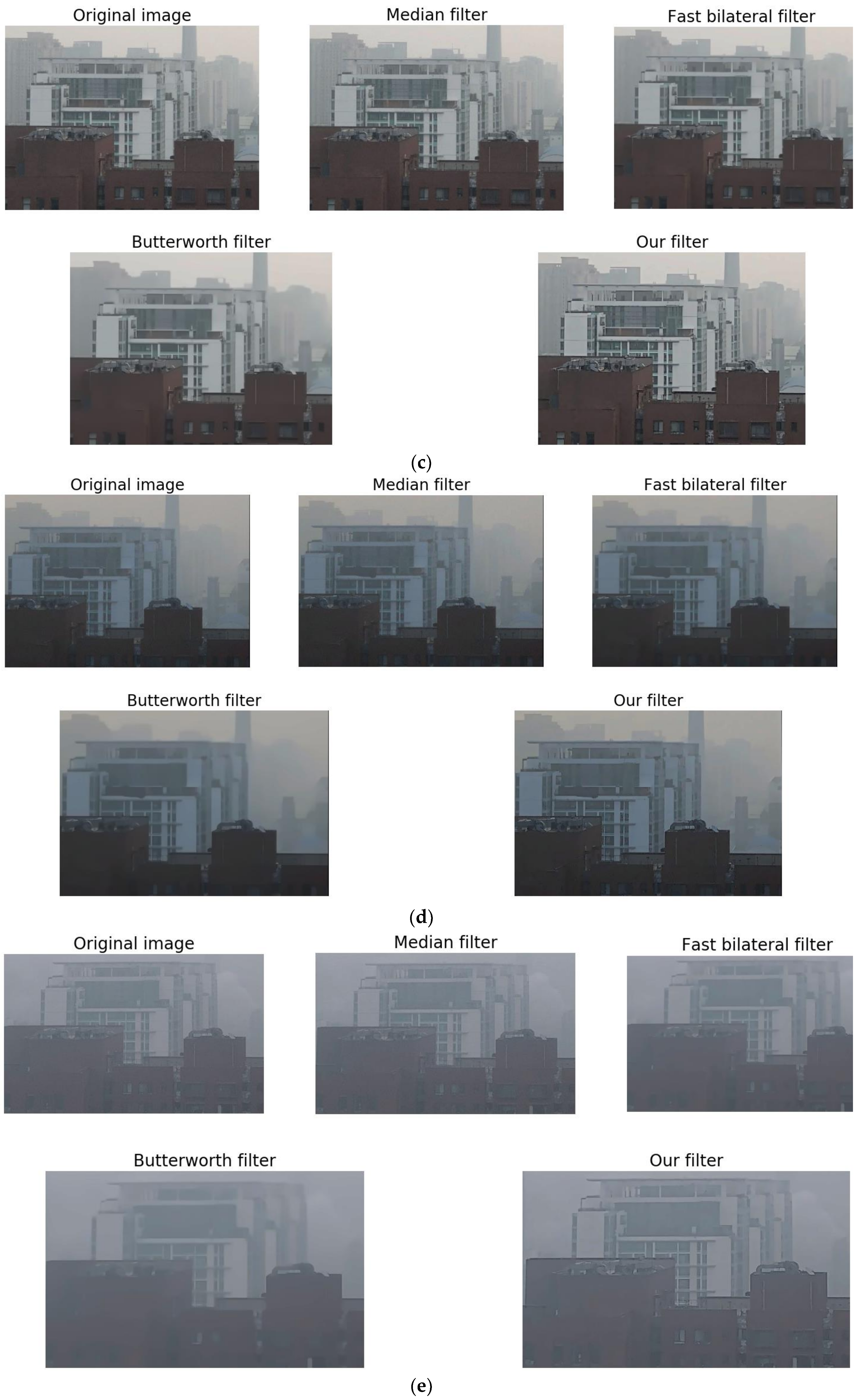

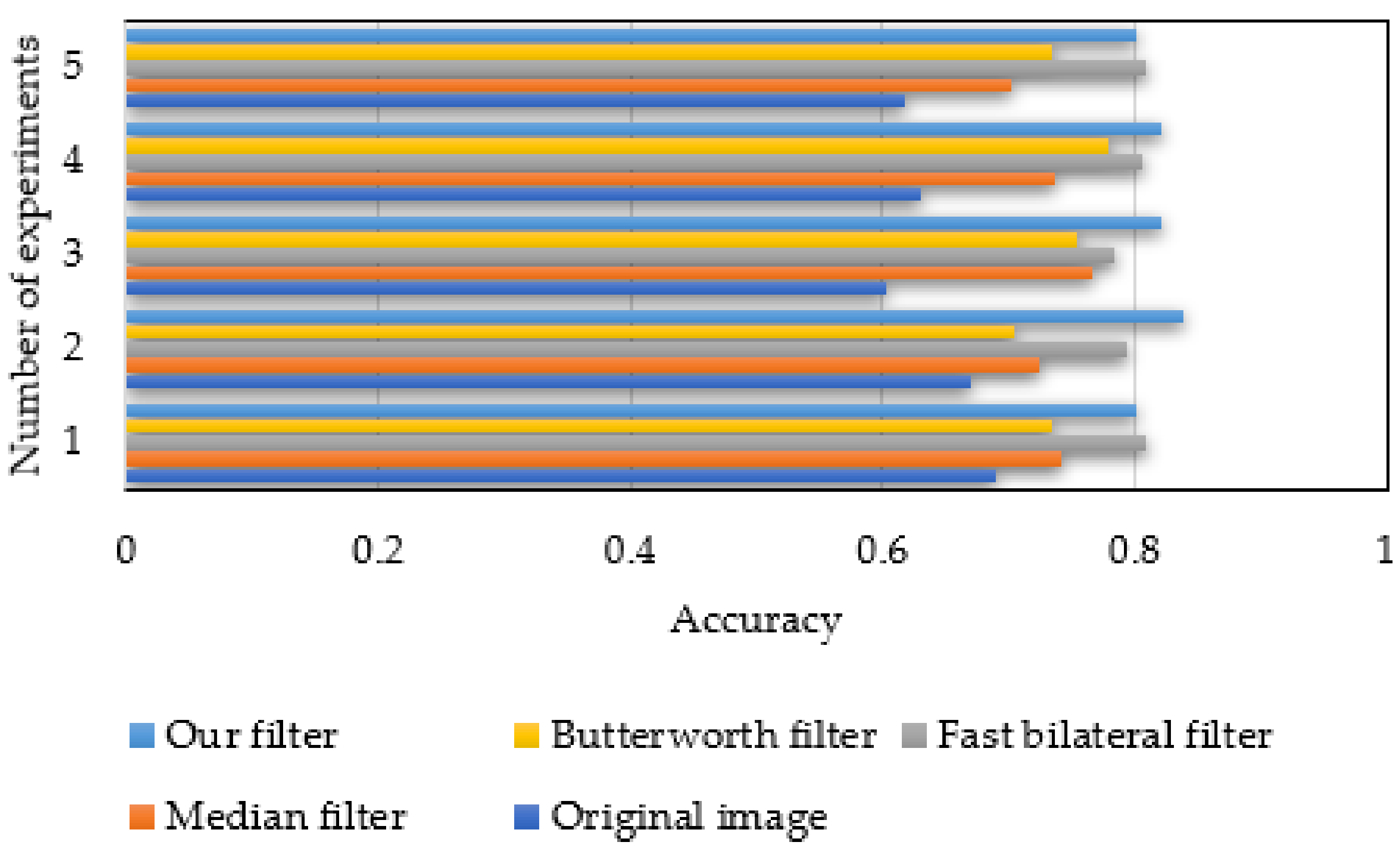

3.4. Filter Comparison Experiments

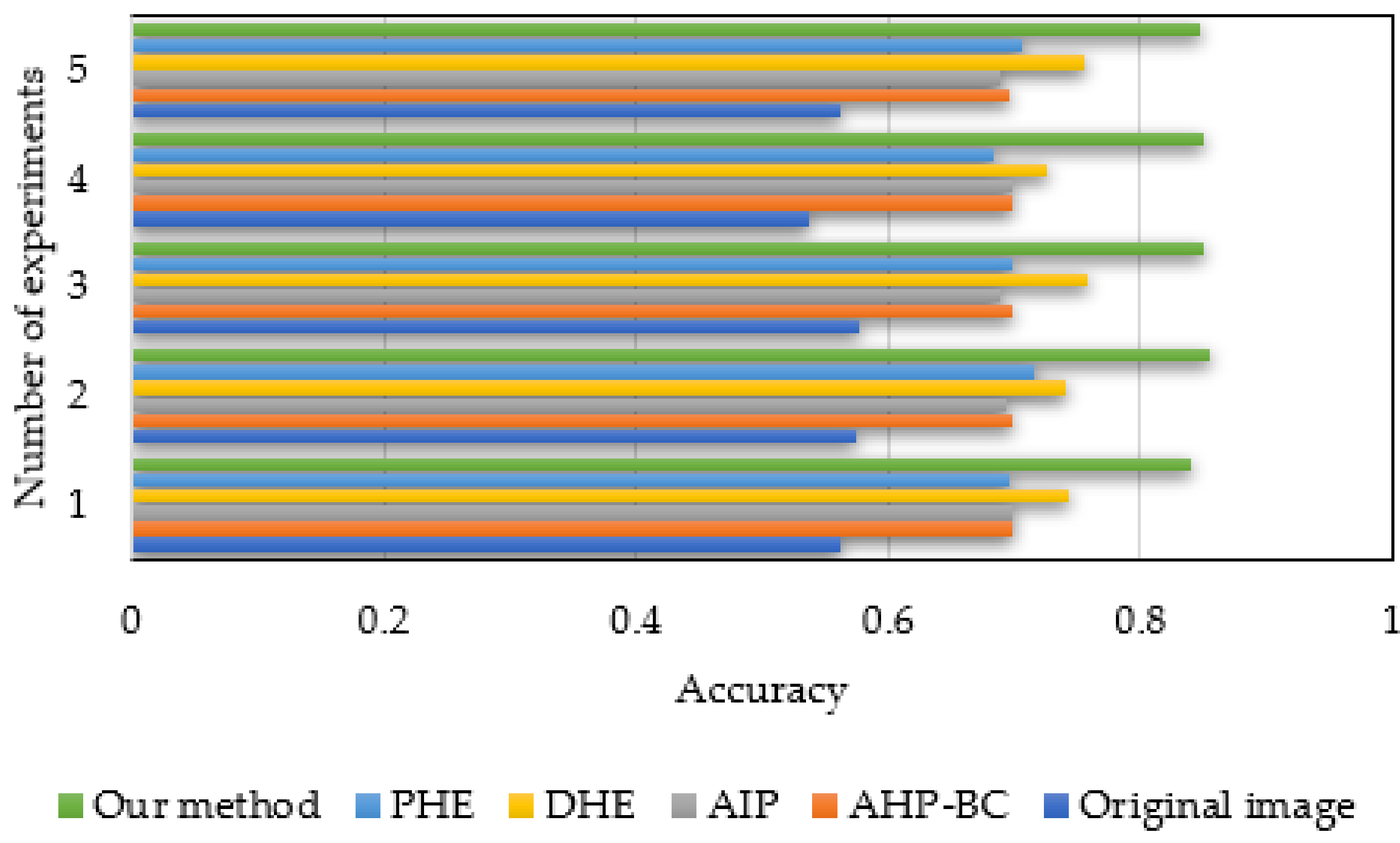

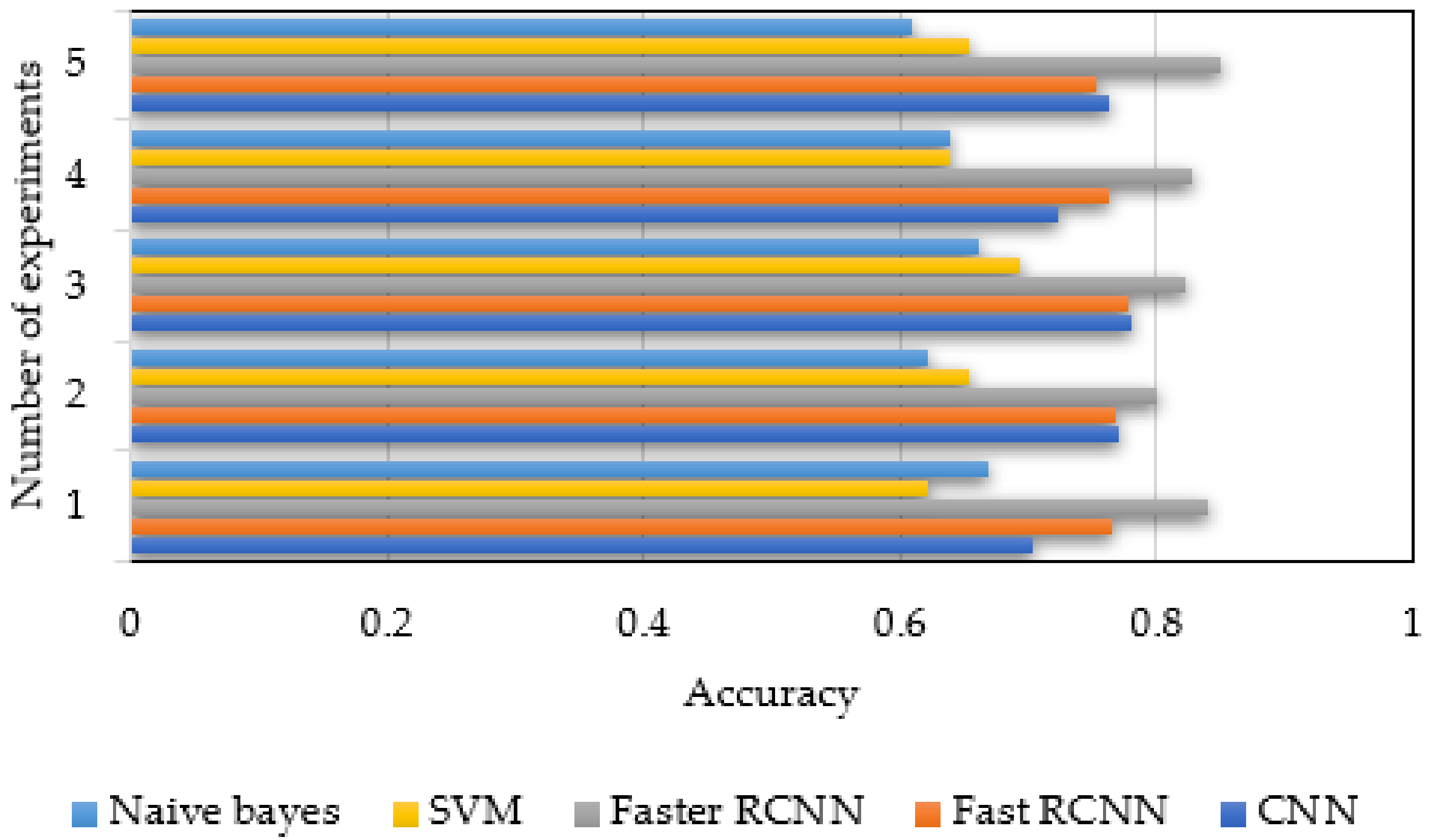

3.5. Comparison Experiments of Identification Methods

4. Conclusions and Discussion

Author Contributions

Funding

Conflicts of Interest


| No. | AQI | Level | Number |
|---|---|---|---|
| I | 0–50 | Excellent | 271 |
| II | 51–100 | Good | 674 |
| III | 101–150 | Light | 705 |
| IV | 151–200 | Moderate | 231 |
| V | >200 | Heavy | 219 |
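The AQI thresholds in the table above translate directly into a lookup. The sketch below is illustrative only: the function name and the `IMAGE_COUNTS` dictionary are hypothetical, and reading the "Number" column as the number of images per level is an assumption.

```python
# Upper AQI bound (inclusive) for levels I to IV; anything above 200 is level V.
LEVEL_BOUNDS = [
    (50, "I", "Excellent"),
    (100, "II", "Good"),
    (150, "III", "Light"),
    (200, "IV", "Moderate"),
]

# "Number" column of the table, assumed to be images per level.
IMAGE_COUNTS = {"Excellent": 271, "Good": 674, "Light": 705,
                "Moderate": 231, "Heavy": 219}


def aqi_level(aqi):
    """Return (level number, level name) for a non-negative AQI value."""
    if aqi < 0:
        raise ValueError("AQI must be non-negative")
    for upper, number, name in LEVEL_BOUNDS:
        if aqi <= upper:
            return number, name
    return "V", "Heavy"
```

Under that reading of the "Number" column, the five levels together contain `sum(IMAGE_COUNTS.values())` = 2100 images.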

| Particle index | Initial position of excellent | Initial position of good | Initial position of light | Initial position of moderate | Initial position of heavy |
|---|---|---|---|---|---|
| 1 | 0.5487 | 0.4172 | 0.5487 | 0.5492 | 0.5486 |
| 2 | 0.7148 | 0.7199 | 0.7148 | 0.7158 | 0.7143 |
| 3 | 0.6026 | 0.0011 | 0.6026 | 0.6033 | 0.6024 |
| 4 | 0.5448 | 0.3027 | 0.5448 | 0.5452 | 0.5447 |
| 5 | 0.4238 | 0.1475 | 0.4238 | 0.4244 | 0.4241 |
| 6 | 0.6456 | 0.0932 | 0.6456 | 0.6469 | 0.6453 |
| 7 | 0.4377 | 0.1869 | 0.4377 | 0.4383 | 0.4378 |
| 8 | 0.8911 | 0.3459 | 0.8912 | 0.8911 | 0.8902 |
| 9 | 0.9627 | 0.3973 | 0.9627 | 0.9628 | 0.9618 |
| 10 | 0.3837 | 0.5387 | 0.3837 | 0.3843 | 0.3839 |
| 11 | 0.7911 | 0.4194 | 0.7911 | 0.7913 | 0.7906 |
| 12 | 0.5288 | 0.6848 | 0.5288 | 0.5293 | 0.5288 |
| 13 | 0.5679 | 0.2056 | 0.5679 | 0.5683 | 0.5678 |
| 14 | 0.9247 | 0.8774 | 0.9247 | 0.9248 | 0.9239 |
| 15 | 0.0719 | 0.0283 | 0.0719 | 0.0728 | 0.0728 |

| Parameter | Meaning | Default Value |
|---|---|---|
| N | Particle number | 15 |
| | Maximal iteration | 10 |
| | Learning factor | 2 |
| | Learning factor | 2 |
| | Maximal inertia weight | 0.9 |
| | Minimal inertia weight | 0.1 |

| Level | Reference image and target image (%) | Reference image and corrected image (%) | Degree of improvement (%) |
|---|---|---|---|
| Excellent | 7.33 | 60.60 | 53.27 |
| Good | 72.34 | 83.57 | 11.23 |
| Light | 79.10 | 83.95 | 4.84 |
| Moderate | 79.19 | 84.28 | 5.09 |
| Heavy | 28.13 | 61.99 | 33.86 |
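The "Degree of improvement" column appears to be the difference, in percentage points, between the corrected-image column and the target-image column. The short check below recomputes it from the table values; the names `ROWS`, `improvement`, and `max_deviation` are hypothetical. Four rows agree exactly and the Light row differs by 0.01, which is consistent with the published figures having been rounded from unrounded values.

```python
# (target %, corrected %, stated improvement %) per level, copied from the table.
ROWS = {
    "Excellent": (7.33, 60.60, 53.27),
    "Good": (72.34, 83.57, 11.23),
    "Light": (79.10, 83.95, 4.84),
    "Moderate": (79.19, 84.28, 5.09),
    "Heavy": (28.13, 61.99, 33.86),
}


def improvement(before, after):
    """Percentage-point gain of the corrected image over the target image."""
    return round(after - before, 2)


def max_deviation(rows=ROWS):
    """Largest gap between the recomputed and the stated improvement."""
    return max(abs(improvement(before, after) - stated)
               for before, after, stated in rows.values())
```

The largest gains are for the Excellent and Heavy levels, which are also the levels where the uncorrected target image agrees least with the reference image.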

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hao, S.; Wang, P.; Hu, Y. Haze Image Recognition Based on Brightness Optimization Feedback and Color Correction. Information 2019, 10, 81. https://doi.org/10.3390/info10020081
