Abstract

We present the technologies and the theoretical background of an intelligent interconnected infrastructure for public security and safety. The innovation of the framework lies in the intelligent combination of devices and human information towards human and situational awareness, so as to provide a protection and security environment for citizens. The framework is currently being used to support visitors in public spaces and events by creating the appropriate infrastructure to address a set of urgent situations, such as health-related problems and missing children in overcrowded environments. It supports smart links between humans and entities on the basis of goals, and adapts device operation to comply with human objectives, profiles, and privacy. State-of-the-art technologies in the domain of IoT data collection and analytics are combined with localization techniques, ontologies, reasoning mechanisms, and data aggregation in order to acquire a better understanding of the ongoing situation and inform the necessary people and devices to act accordingly. Finally, we present the first results of people localization and the platform’s ontology and representation framework.

1. Introduction

The Internet of Things (IoT) is becoming increasingly popular, and its applications are proliferating rapidly, resulting in a new digital ecosystem. IoT platforms are essentially the linchpin of a holistic IoT solution because they enable data generated at endpoints to be collected and analyzed, spawning the growth of big data analytics and applications. The rapid increase in the number of network-enabled devices and sensors deployed in physical environments, enriched with information processing capabilities, has produced vast numbers of databases. As IoT is based on a wide range of heterogeneous technologies and devices, there is no uniform vocabulary for the representation and processing of data. This has led to a large number of incompatible IoT platforms. As a result, it is very difficult for data scientists to extract knowledge from the enormous amount of data produced every second by IoT applications.

IoT platforms have received a significant amount of attention due to the simplicity and efficiency they bring in creating business value, linking the IoT endpoints to applications and analytics. Based on generic middleware, Open APIs (Application Programming Interface) and tools, they provide standard-based, secure infrastructures, and interfaces to build IoT applications, manage connected devices and the data those endpoints generate, and streamline common features that would otherwise require considerable additional time, effort, and expense.

The emphasis in the current IoT landscape, however, is mainly placed on device-to-device interaction. Machines and sensors are already being combined and are passively gathering, transmitting, and sharing data from which we can derive useful insights. Humans have a rather passive role in this landscape, acting as data providers, e.g., through wearable sensors, or as high-end decision makers. Recently, however, there have been efforts to move to a more human-centered IoT paradigm, infusing IoT frameworks with human awareness. Examples include Alexa, Siri, and Cortana [1]. Our work is in line with this trend and focuses on the intelligent coordination of devices and people within a single framework in order to reach a common goal. For example, in the case of an incident, the aim is to understand the content of the situation, to prepare the devices that are relevant to the problem, and to inform and coordinate the responsible people (in our case, the personnel or the volunteers) so that they take the appropriate actions to prevent or mitigate the incident’s consequences. People are evolving into an integral part of the IoT ecosystem, interacting with processes, data, and things, and driving the evolution toward a ubiquitously connected world with immense possibilities. In this new realm, novel concepts and methods are needed to infuse and transform human awareness into situation awareness, to support smart links between humans and entities on the basis of goals, and to adapt device operation to comply with human objectives, profiles, and privacy.

The rapid increase in the number of network-enabled devices and sensors deployed in physical environments, enriched with information processing capabilities, has allowed the interconnection of people, processes, data, and devices, offering enormous potential across many sectors. The large societal and personal impact of pervasive, mobile, and interconnected entities on the web is already apparent in maritime [2], agriculture [3], smart factories [4], and cities [5]. For example, in smart cities, IoT technologies are used for purposes ranging from collecting and interrogating city-centre parking metrics to so-called “smart” street lighting that generates efficiencies. One of the most compelling use cases, however, is the use of the technology for safety. In this context, the challenge is to use humans and devices interchangeably to achieve operational goals and respond to emergency situations, such as natural disasters, vandalism, or missing people in overcrowded places. At the same time, pervasive technologies and eHealth systems seem to offer a promising solution for accessible and affordable self-management of health problems, in both living and working environments. Wearable devices and ambient sensors can be transformed into meaningful lifestyle and work-style monitoring tools, extracting personalized patterns and detecting problematic situations to foster healthy and safe living and working environments.

In this paper, we describe the key technologies that underpin the development of DESMOS, a novel framework for the intelligent interconnection of smart infrastructures, mobile and wearable devices and apps for the provision of a secure environment for citizens, especially for visitors and tourists. The platform aims to promote the collaboration between people and devices for protecting tourists, supporting timely reporting of incidents, adaptation of the interconnected environments in case of emergency, and the provision of assistance by empowering local authorities and volunteers. More specifically, the framework aims to support: (a) fast, timely, and accurate notifications in case of emergencies (e.g., medical incidents), sending at the same time all contextual information needed to help authorities coordinate and assist people, protecting the privacy of the monitored people, (b) anonymous reporting of incidents using crowdsourcing, with a special focus on incidents involving tourists, e.g., thefts, and (c) adaptability of services, devices and people to respond to incidents and protect citizens/tourists.

In order to realize the above-mentioned goals, the platform follows a systematic approach for interconnecting people, services, and devices using: (a) applications on mobile and wearable devices that will be used by volunteers, citizens, and local authorities, (b) smart spots able to listen for reports and requests for help and further propagate them in the local intelligent network, and (c) fusion and interpretation of heterogeneous events and information through semantic reasoning and decision-making. The contribution of this paper lies in the effective combination of two different research fields: (i) multi-sensor data and event analytics, developing intelligent machine learning and rule-based algorithms for context-aware data fusion for event recognition, device localization, etc., and (ii) semantic representation of information, reasoning, and interoperability, based on Semantic Web technologies. In this paper, we present the main framework of our research, together with the results of the localization and the DESMOS ontology.

The platform will be evaluated in pilot trials that will take place in the smart city of Trikala (Greece), which has a strong commitment towards enhancing the feeling of safety of people. More specifically, the pilots will take place (a) in the Christmas Theme Park that is reported to have more than one million visitors yearly, and (b) in the central square and sidewalk of the city.

The rest of the paper is organized as follows. In Section 2, we describe relevant work. In Section 3, the architecture of the proposed system is presented. Section 4 describes the primary components of the architecture. Section 5 describes the localization technique. The semantic representation and analysis can be found in Section 6. In Section 7, different use case scenarios are presented. Section 8 concludes the paper.

2. Related Work

IoT deployments are not based on a single technology but rather on the integration of multiple technologies. The research process of designing an interconnected infrastructure for smart cities comprises various components. The first category includes the sensors interacting with the physical world, the communication between gateways and server platforms, and the data protocols. The second category includes data representation and analytics. The third category comprises, wherever applicable, the data privacy techniques. Continuous advances in electronics have allowed many IoT devices, e.g., smartphones and smart watches, to be equipped with dozens of sensors. Through these sensors, the devices can measure different physical parameters and are deployed in different IoT applications. The sensors range from motion and position sensors to optical sensors, etc. Data generated by all these sensors have to be sent to a central system to be processed. Data communication is done through a network, which, according to its topology, can be either local (e.g., Local Area Network, LAN) or wide (e.g., Wide Area Network, WAN). Because of the high power consumption of conventional Wi-Fi, Low Power Wi-Fi is used instead in IoT devices. Other protocols that do not consume much energy and are used in LANs are Zigbee [6], Z-Wave [7], and Thread [8]. The downside of these protocols is their low transmission rate. A solution to this problem comes with Bluetooth Low Energy (BLE), which allows data rates of about 1.3 Mbps [9]. In our case, we will use BLE for the communication of the wearables with the smartphones and smartpoints, due to its low power consumption and low cost.

Ontologies and technologies of Semantic Web are being widely used for the representation of the data and the ontologies of the IoT. Ontologies provide the means for a structured description of an object or concept. They are used to semantically enhance resources, through facilitating the understanding of the meaning of the metadata that are associated with sensor objects [10]. The addition of semantics to sensor networks leads to the so-called Semantic Sensor Web (SSW). In 2012, W3C (World Wide Web Consortium) suggested an innovative ontology, the Semantic Sensor Network (SSN), as a human and machine-readable specification that covers networks of sensors and their deployment on top of sensors and observations [11]. The target of this project was to face the problems that arose from the heterogeneous data from different devices. However, there are limited ontologies that annotate the time–space correlation between the sensor data and the resources. In addition, SSN has only one class for all the sensors, and it is difficult to annotate the different parameters of each sensor. In order to overcome that, the authors in [12] deployed the Sensor, Observation, Sample, and Actuator (SOSA) ontology providing a formal but lightweight general-purpose specification for modelling the interaction between the entities involved in the acts of observation, actuation, and sampling.
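To make the SOSA modelling pattern more concrete, the following minimal sketch serializes a single RSSI reading as a `sosa:Observation` in Turtle, using only plain Python string formatting. The `sosa:` terms are taken from the W3C vocabulary; the `ex:` namespace, the identifiers, and the helper `rssi_observation_turtle` are hypothetical and are not part of the DESMOS ontology.

```python
from datetime import datetime, timezone

SOSA = "http://www.w3.org/ns/sosa/"
XSD = "http://www.w3.org/2001/XMLSchema#"
EX = "http://example.org/desmos-sketch/"  # hypothetical namespace, for illustration only


def rssi_observation_turtle(obs_id, sensor_id, bracelet_id, rssi_dbm, when):
    """Return a Turtle document describing one RSSI reading as a sosa:Observation."""
    lines = [
        f"@prefix sosa: <{SOSA}> .",
        f"@prefix xsd: <{XSD}> .",
        f"@prefix ex: <{EX}> .",
        "",
        f"ex:{obs_id} a sosa:Observation ;",
        f"    sosa:madeBySensor ex:{sensor_id} ;",            # the scanning anchor
        f"    sosa:hasFeatureOfInterest ex:{bracelet_id} ;",  # the observed bracelet
        "    sosa:observedProperty ex:rssi ;",
        f'    sosa:hasSimpleResult "{int(rssi_dbm)}"^^xsd:integer ;',
        f'    sosa:resultTime "{when.isoformat()}"^^xsd:dateTime .',
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    stamp = datetime(2020, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
    print(rssi_observation_turtle("obs1", "anchor1", "bracelet7", -67, stamp))
```

A production system would more likely build such triples with an RDF library and load them into a triple store; the string-based form is used here only to keep the sketch dependency-free.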

A few other research projects on the IoT semantic framework are: (i) the Open-IoT project relies on a blueprint cloud-based IoT architecture, which leverages the W3C SSN ontology for modeling sensors [13]. (ii) The IoT-Lite ontology [14] is an instantiation of the semantic sensor network (SSN) ontology to describe key IoT concepts allowing interoperability, discovery of sensory data in heterogeneous IoT platforms by lightweight semantics. This project was deployed to address the concern that semantic techniques increase the complexity and the processing time. (iii) The FIESTA-IoT [15] proposed a holistic and light-weight ontology that aimed to achieve semantic interoperability among various fragmented testbeds, reusing core concepts from various popular ontologies and taxonomies (SSN, IoT-Lite).

Event [16] is a core ontology for event annotation. It is centered around the notion of event, seen as the way by which cognitive agents classify arbitrary time/space regions. Its agent class is derived from the FOAF (Friend of a Friend) ontology, a core ontology for social relationships [17]. The authors in [18] presented an ontology for situation awareness (SAW). These ontologies annotate events with general situations and can be expanded with supplementary ontologies. In [19], researchers developed a platform to assist citizens in reporting security threats together with their severity and location. The threats were classified using a general top-level ontology, with domain ontologies supporting the detailed specification of threats. The information about the threats was stored in a knowledge base of the system that allowed for lightweight reasoning with the gathered facts. In [20], an ontology was developed for dangerous road traffic events, with a view to people’s safety. The ontology stored the general categories of threats, whereas the database held the actual data about selected areas at particular times. In the TRILLION H2020 project (2016), a modular ontology was developed for public security. Through this ontology, citizens could report an event using mobile devices. In the framework of the proposed project, new ontologies have to be developed to cover concepts that are not incorporated in the above-mentioned ontologies. These ontologies must annotate every urgent event related to public security.

Tracking and localization of people is a task of increasing interest and of great relevance to the DESMOS framework. Human localization is quite complex, and its difficulty grows when the task is performed in indoor spaces, due to the presence of crowds and obstacles [21]. Fusion of multiple sensor data in object localization studies is usually performed by Kalman filtering. Its simplicity offers the advantage of speed, but the underlying distribution assumptions impose limitations [22]. In [21], a Kalman filter data fusion technique is proposed that combines sensors embedded in a wearable platform, namely an accelerometer, a gyroscope, and a magnetometer, for indoor localization and position tracking. Kalman-based fusion is also applied in [22] to combine audio and visual data from heterogeneous sensors for object tracking. A localization algorithm based on an extension of Kalman filtering is presented in [23], where data from vision sensors and smartphone-based acoustic ranging are fused for real-time dynamic position estimation and tracking. Applications that combine activity recognition with localization are also common. In [sensorfusionapproach], the authors used wearable sensors for activity recognition and environmental sensors for motion information, and combined the two sources to perform localization in indoor spaces. In [devicefreerf], the authors exploit RSSI (Received Signal Strength Indicator) information for localization and fall detection, using a Hidden Markov model as the detection algorithm. In [unobtrusive], the authors developed a device-free system with RFID tags for indoor human localization and activity recognition. They aim at reducing human involvement in the relevant tasks, so that the information obtained does not depend on a person remembering or being willing to carry a wearable sensor.

3. Overview of System Architecture

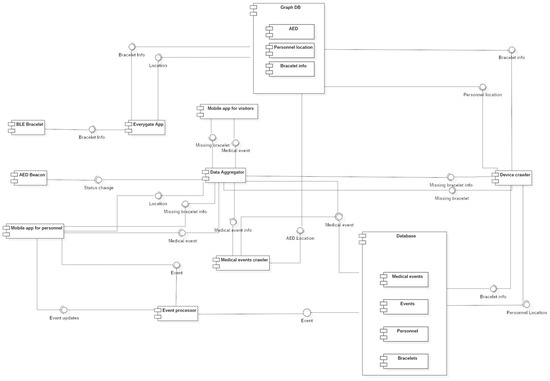

The proposed system architecture (as presented in Figure 1) consists of three basic layers: (i) the Hardware layer, which consists of the mobile devices, the sensors, and the actuators, (ii) the Middleware layer, which supports easy interaction with the edge components (e.g., sensors, actuators) and is responsible for managing the communication between the two edge layers of DESMOS, and (iii) the DESMOS platform (Data Aggregator, crawlers, and databases), a cloud-based layer that is used for storing and processing the collected data and for generating reports that will be sent back to the Middleware. All the aforementioned layers will be evaluated in the use case scenarios under real-life conditions.

Figure 1.

DESMOS system architecture.

In more detail, the modules of the Hardware layer mainly include mobile devices (e.g., smartphones and wearables), whose embedded sensors will generate data and feed them into the system. Actuators will also be used (e.g., light beacons, AEDs (Automated External Defibrillators)) as part of the system’s smart infrastructure, to improve the system’s response to certain critical events (e.g., cardiac problems of visitors). In DESMOS, two applications for mobile devices will be developed. The first one will be used by the visitors before entering certain areas of the city of Trikala, enabling them to enter, register, and use the smart capabilities provided by the DESMOS system when these are needed according to the three use case scenarios. The second mobile application will be used by the responsible personnel to gain access to detailed information regarding ongoing events that might need their action. Wearables and button-like sensors will also serve this layer, mainly through the data (e.g., the user’s location) that they will provide to the system. The personnel will use one more mobile application, called Everygate, which is used in telematics solutions [24]. In the DESMOS project, this application will scan the area, through mobile anchors equipped with BLE and Wi-Fi technology, for the specific BLE bracelets that will be worn by the children, and will send this information to the platform. Additionally, it will send to the platform the location of each member of the personnel, which is known because their devices have GPS or Wi-Fi availability. This allows the modules in the platform to find the exact location of a child wearing such a bracelet when needed. Finally, a number of stationary devices running the Everygate software will be deployed. The purpose of these devices is to cover indoor spaces and key points of the area, such as the entrance.

In the Middleware layer, a data aggregator module will be developed, responsible for handling all the heterogeneous edge devices (e.g., smartphones, wearables) and for managing the communication of data between the components of the two edge layers of DESMOS, achieving bi-directional communication. The design and development of the required APIs will facilitate the inter-section communication. Special care will be taken to ensure data integrity and privacy in this communication. The module in this layer will be hosted on a cloud platform, so that the edge devices (mobiles, sensors, and actuators) can easily communicate with the rest of the modules.

The DESMOS platform layer will mainly be a cloud implementation where the storing and processing of data will take place. Specifically, this involves a semantic repository (triple store), implemented with the GraphDB database, for storing sensor observations and user profiles, as well as ontology reasoning modules that implement a set of data fusion and aggregation services. Moreover, a relational database will be used to store information regarding medical, missing-child, and environmental events. All in all, the purpose of this layer is to semantically analyze the combined available information in order to derive higher-level events and adapt the environment, considering both contextual information and business policies. Example adaptations include the generation of appropriate alerts to inform local authorities and volunteers, as well as the preparation of certain devices, such as defibrillators, to respond to incidents and protect citizens/tourists. This will be achieved through the Device Crawler, which will run semantic and conventional queries against GraphDB and the relational database in order to send information and knowledge to the Data Aggregator. The specifics of each layer are discussed in the following sections.

4. Monitoring and Data Collection

4.1. Sensor Types

Two mobile applications will be developed for the project, aimed at two different user types: one for the personnel and one for the visitors. These applications will take advantage of three sensor types found in every smartphone: GPS (Global Positioning System), camera, and microphone. Through the GPS, the location of each member of the personnel will be acquired and sent to the cloud. If an event happens, the system will be able to determine which member of the personnel is closest to that event and notify him/her. Members of the personnel who are inside a building will be positioned in designated areas so that the whole building is covered, and will be located using the Wi-Fi and mobile networks. There will be Wi-Fi/BLE anchors (stationary smartphones) at the entrances and in other specific areas, covering all the buildings, in order to scan inside the building and notify the nearest member of the personnel if an emergency event occurs nearby. Moreover, if a citizen needs help, his/her location can be acquired using GPS or the Wi-Fi and mobile networks, and the personnel will be notified. Using the camera and the microphone of their mobile device, citizens, tourists, and members of the personnel will be able to send media files (e.g., photographs and videos) of the places where an event is happening or vandalism has occurred.
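The step of selecting the member of the personnel closest to an event can be sketched as a great-circle distance comparison over the last reported GPS fixes. This is only an illustrative sketch: the function names, the dictionary layout, and the use of the haversine formula are assumptions, not a description of the DESMOS implementation.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearest_personnel(event, personnel):
    """Return (personnel_id, distance_m) for the person closest to the event.

    event: (lat, lon); personnel: dict mapping an id to its last known (lat, lon).
    """
    if not personnel:
        raise ValueError("no personnel positions available")
    best = min(personnel, key=lambda pid: haversine_m(*event, *personnel[pid]))
    return best, haversine_m(*event, *personnel[best])


if __name__ == "__main__":
    # Hypothetical coordinates, for illustration only.
    positions = {"p1": (39.5551, 21.7681), "p2": (39.5600, 21.7700)}
    print(nearest_personnel((39.5550, 21.7680), positions))
```

At the scale of a city square or a theme park, a planar approximation would give practically the same ranking; the haversine form is used only because it works directly on latitude and longitude.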

Communication between the cloud platform and the mobile devices is achieved using Wi-Fi and cellular networks (e.g., LTE). Most European cities provide full Wi-Fi coverage in their centers, and so do a large number of festivals. Mobile devices are connected to the internet using the existing Wi-Fi infrastructure in the testing environment. The infrastructure covers the whole testing environment with Wi-Fi access points and is accessible to both visitors and personnel. However, if connectivity is lost, the devices can use the cellular networks to access the internet and exchange data with the platform through the middleware.

Children and other people requiring special care who visit the testing environment will wear a bracelet equipped with a BLE module, which broadcasts its address at regular time intervals. The address is received by the mobile and stationary devices running the Everygate application and, together with the received signal strength indication (RSSI), is sent to the platform. When the person wearing the bracelet goes out of range of the devices, the system notifies the personnel about the event. Furthermore, if a child moves far away from his/her guardian, the system is able to find the child using trilateration, which is needed because the wearables will not have GPS. Stationary mobile phones will be placed at the entrances of the buildings in order to detect whether a child has entered a building. In order to find the exact position of the child indoors, a number of stationary mobile phones will be placed in specific areas of the building to provide full signal coverage. Lastly, buttons equipped with BLE technology will be given to citizens who may need help. The citizen will press the button, and the device will broadcast its address. In the same way as with the bracelets, the address and the RSSI will be uploaded to the platform where, using trilateration, the button’s location will be calculated, and a notification will be sent to the closest member of the personnel. This BLE localization will be triggered when a child or a person requiring special care is lost in the testing environment.
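For illustration, trilateration from three anchors can be sketched as follows, assuming that the anchors lie in a common local 2D coordinate system (in meters) and that each RSSI value has already been converted to a distance estimate. The function name and the coordinate convention are assumptions made for this sketch; with noisy distances, a least-squares solution over more than three anchors would normally be preferred.

```python
def trilaterate(anchors, distances):
    """Estimate (x, y) from three anchor positions and the distances to them.

    anchors: [(x1, y1), (x2, y2), (x3, y3)] in meters; distances: [r1, r2, r3].
    Subtracting the circle equations pairwise yields a 2x2 linear system.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = distances
    a = 2 * (x2 - x1)
    b = 2 * (y2 - y1)
    c = r1**2 - r2**2 - x1**2 + x2**2 - y1**2 + y2**2
    d = 2 * (x3 - x2)
    e = 2 * (y3 - y2)
    f = r2**2 - r3**2 - x2**2 + x3**2 - y2**2 + y3**2
    det = a * e - b * d
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is not uniquely determined")
    return (c * e - b * f) / det, (a * f - c * d) / det


if __name__ == "__main__":
    # Hypothetical anchor layout; the true position used to build the distances is (3, 4).
    print(trilaterate([(0, 0), (10, 0), (0, 10)], [5.0, 65 ** 0.5, 45 ** 0.5]))
```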

4.2. Data Collection Infrastructure

A cloud infrastructure based on OpenStack [25] will be used to host the necessary services of the platform. Using the MQTT (Message Queuing Telemetry Transport) protocol, the cloud infrastructure receives data from the mobile devices. Each service that processes data saves data in the cloud and informs the other services about the availability of new data to trigger additional processing tasks. Depending on the type of data, communication is performed either using MQTT or REST (Representational state transfer) APIs. When real-time data propagation and analysis is needed, MQTT is used as the means to communicate with other modules. Otherwise, REST APIs are used for pushing data to the cloud and sending respective output to other modules.
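The choice between the two channels can be pictured with the following sketch, which serializes a reading to JSON and decides whether it should travel over MQTT or a REST API. The `Reading` fields, the topic layout `desmos/<kind>/<device_id>`, and the `/api/<kind>` path are hypothetical and serve only to illustrate the routing rule described above; no actual network call is made.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Reading:
    device_id: str
    kind: str        # e.g. "ble_scan" or "incident_report" (hypothetical labels)
    payload: dict
    real_time: bool  # True when the data must be propagated immediately


def route(reading):
    """Return (transport, destination, body) for a reading.

    Real-time data goes to an MQTT topic; everything else to a REST endpoint.
    """
    body = json.dumps(asdict(reading), sort_keys=True)
    if reading.real_time:
        return "mqtt", f"desmos/{reading.kind}/{reading.device_id}", body
    return "rest", f"/api/{reading.kind}", body


if __name__ == "__main__":
    scan = Reading("anchor1", "ble_scan", {"address": "AA:BB:CC:DD:EE:FF", "rssi": -70}, True)
    print(route(scan))
```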

4.3. Crowdsourcing Techniques

The project will use groups of people to gather data in two ways. In both, people will use an application on a mobile device that sends the data to the cloud infrastructure. In the first way, the users do not have to do anything other than install and set up the application, which runs in the background. It listens for addresses broadcast by BLE devices and sends them to the cloud infrastructure along with the BLE device’s RSSI and the mobile device’s location. In the second way, the users have to interact with the application to gather and send data. The users will be able to send information about an event to the cloud infrastructure using their mobile devices. The information will include the location of the event, a short description, and a media file, such as a photograph or a video. The event can be anything abnormal in the surrounding area, including a theft, vandalism, or a violent incident.

4.4. Data Protection and Privacy

Taking into consideration the ever-increasing concerns people have about their data, one of the project’s most important targets is to protect the privacy of the users’ data. The first step towards this is to protect the communications between the services and the mobile devices using authentication, authorization, and encryption techniques. In that way, only the intended services have access to the data they need. All data communicated through the REST APIs will be encrypted using the TLS (Transport Layer Security) protocol. Moreover, data communicated through MQTT will also be encrypted. Authorization will be used so that only specific members have access to other people’s data. Anonymization techniques will be used, such as the Privacy Protection Communication Gateway (PPCG) [26]. Furthermore, to be compliant with the European Union’s strict regulation on users’ data, the General Data Protection Regulation (GDPR) [27], which has applied since 2018, only the data necessary for the system’s functionality are gathered from the users. Finally, the data will be used only for purposes related to the project and will not be disclosed to third parties.

5. People Localization

The main motivation of our work in progress stems from the challenge of using Bluetooth Low Energy (BLE) technology for indoor and outdoor position estimation of children who wear a smart bracelet when entering the testing environment. BLE is a technology that is being widely adopted in embedded systems and electronics in general, because it consumes very little power and is easy to integrate. In this case, the RSSI is the only value available to the receiver that can be correlated with the distance. One of the most common practices in the literature is to convert the RSSI measurements to distance. However, this is difficult because radio signals are distorted by multipath effects, which can be caused by reflective surfaces and walls, and even by the presence of people. This leads to poor position estimation performance.
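A common way to perform the RSSI-to-distance conversion is the log-distance path-loss model, $\mathrm{RSSI}(d) = \mathrm{RSSI}(d_0) - 10\,n\,\log_{10}(d/d_0)$, inverted for $d$. The sketch below assumes this standard model rather than any model specific to this work; the default reference RSSI of -59 dBm at 1 m and the path-loss exponent of 2.0 are placeholder values that would have to be calibrated for each device and environment.

```python
def rssi_to_distance(rssi_dbm, rssi_at_ref_dbm=-59.0, path_loss_exponent=2.0, ref_distance_m=1.0):
    """Invert the log-distance path-loss model to estimate distance in meters.

    rssi_at_ref_dbm is the RSSI expected at ref_distance_m (placeholder default);
    path_loss_exponent is about 2 in free space and typically larger indoors.
    """
    if path_loss_exponent <= 0:
        raise ValueError("path_loss_exponent must be positive")
    exponent = (rssi_at_ref_dbm - rssi_dbm) / (10.0 * path_loss_exponent)
    return ref_distance_m * 10 ** exponent


if __name__ == "__main__":
    for rssi in (-59, -65, -71, -79):
        print(rssi, round(rssi_to_distance(rssi), 2))
```

Because the distance depends exponentially on the RSSI, a fluctuation of about 6 dB at an exponent of 2 roughly doubles or halves the estimate, which is one way to see why multipath distortion degrades position estimation so strongly.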

We started this study in order to estimate the true location of children in one of the most crowded festivals in Greece (Mills of the Elves). Children will wear a smart bracelet with BLE technology, which will be wirelessly connected to the parent’s phone. In our case, the child’s bracelet will be the RSSI transmitter, while the parent’s phone will be the RSSI receiver. When the child moves far away from the parent, a missing-child event will be triggered in the platform. After that, the device crawler will try to find the member of the personnel nearest to the child in order to acquire the child’s position. In order to study the relation between RSSI and distance, we needed datasets. However, we had great difficulty finding a suitable dataset for training our machine learning techniques.

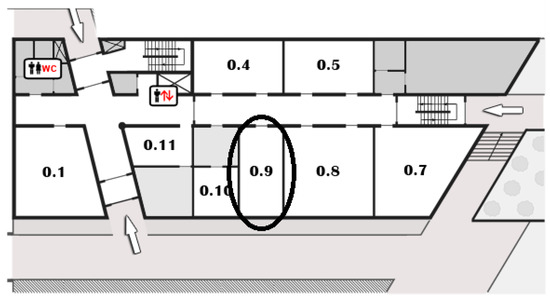

We used an Android smartphone to communicate through the BLE protocol with two different low-cost fitness wearables (the Xiaomi Mi Band 2 and Mi Band 3 activity trackers) in order to receive the RSSI values. Because the transmission power fluctuates over time, the RSSI values change even at the same location, resulting in an inaccurate distance-loss model. To address this problem, we used different noise filtering algorithms to smooth the signal fluctuation. We took the recordings in an office, in a hall, and outside the building, a top view of which is shown in Figure 2. The first indoor environment was a 10 × 4 m office. The office was selected due to its large number of wireless devices, which result in an environment with a lot of noise interference. The second place was a 20 × 2 m hall outside the office. This place was selected in order to correlate the distance with the RSSI values in a cleaner environment without a lot of interference. The last place was outdoors, on the grounds of our institution (CERTH–ITI). This place was ideal for outdoor measurements without the presence of wireless devices. It was also chosen because our target in the final project is to calculate the distance of a user wearing only a simple BLE bracelet in an outdoor environment.

Figure 2.

Map of the measurement locations. In the label 0.x, 0 denotes the ground floor and x is the number of the office.

In order to calculate the correlation between RSSI and distance, a set of BLE modules has to be fixed at known locations. For this purpose, we used two BLE smart sport bracelets: the Xiaomi Mi Band 2 [28] and the Xiaomi Mi Band 3 [29]. The RSSI values were sent in JavaScript Object Notation (JSON) format, together with other information, by an Android application on a smartphone that served as a fixed anchor station. The smartphone scans for the BLE bracelets and sends the JSON messages to an MQTT server. Through the MQTT server, the JSON messages are delivered to an MQTT client application written in Java, which stores them in a MongoDB database for analysis. All measurements were performed at the same height, so that they received similar interference from the surrounding environment. To keep the tests comparable across experiments, a similar transmit power and measurement interval had to be used on all components. The time interval was 5 s. In order to analyze the RSSI fluctuations, we gathered over 100 measured RSSI values for each position. We took measurements in three different environments: an office with many wireless devices, a hall, and an outdoor area. Figure 2 shows the scatter and the distribution plot for the RSSI measurements from the office for Band 2. For this dataset, we took measurements at 17 different indoor office positions to observe the difference between positions. All the measurements were carried out on the same day. The distance ranged from 0.2 to 6.0 m. At each position, between 100 and 250 recordings were taken. As we can see from the figure, the RSSI at each position fluctuates considerably over time. In addition, we can observe that, at distances between 0.2 and 1 m, the fluctuation of the RSSI values is larger, so it is very difficult to estimate the exact distance in this range.
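The fluctuation at each position can be quantified by grouping the received JSON messages by their known distance and summarising the spread. The following is a minimal sketch of that step; the field names `device`, `distance_m`, and `rssi` are assumptions, since the actual message schema is not given here, and the sample payloads are illustrative rather than measured data.

```python
import json
from collections import defaultdict
from statistics import mean, pstdev


def rssi_spread_by_distance(messages):
    """Group RSSI readings by known distance and summarise their spread."""
    grouped = defaultdict(list)
    for raw in messages:
        record = json.loads(raw)
        # "distance_m" and "rssi" are hypothetical field names.
        grouped[record["distance_m"]].append(record["rssi"])
    return {
        distance: {
            "n": len(values),
            "mean": mean(values),
            "std": pstdev(values),
            "range": max(values) - min(values),
        }
        for distance, values in sorted(grouped.items())
    }


# Illustrative payloads only, not values from the experiments.
sample = [
    '{"device": "band2", "distance_m": 0.5, "rssi": -58}',
    '{"device": "band2", "distance_m": 0.5, "rssi": -66}',
    '{"device": "band2", "distance_m": 2.0, "rssi": -71}',
    '{"device": "band2", "distance_m": 2.0, "rssi": -73}',
]
summary = rssi_spread_by_distance(sample)
```

A larger `std` or `range` at a given distance indicates a position where a single raw reading is a poor basis for distance estimation.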

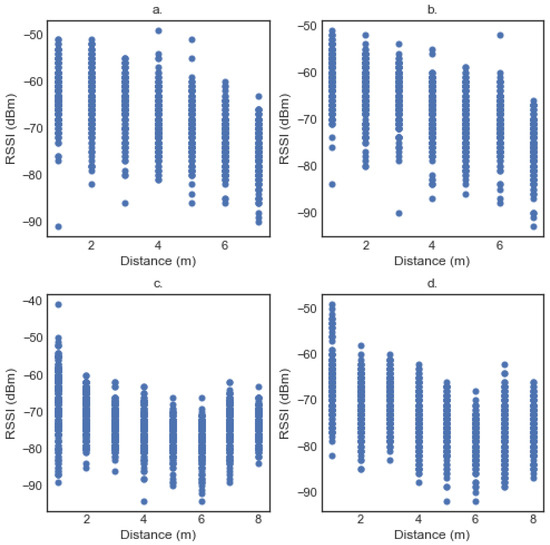

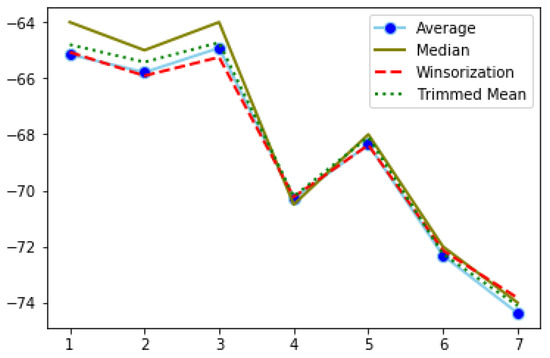

Figure 3a,b shows the scatter plots of the RSSI values for Band 2 and Band 3 in the hall environment. In this environment, we took measurements at seven different positions (1 to 7 m, in one-meter increments), as here we did not expect as much interference as in the office. Over 190 measurements were taken at each position. Here, we can see that there is little difference between the Band 2 and Band 3 devices. In addition, the RSSI decreases almost logarithmically as the distance increases.

Figure 3.

(a) Scatter plot of Band 2 in the hall. (b) Scatter plot of Band 3 in the hall. (c) Scatter plot of Band 2 outdoors. (d) Scatter plot of Band 3 outdoors.

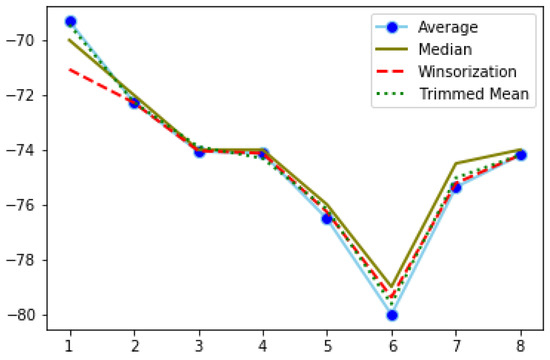

The scatter plots for Band 2 and Band 3 in the outdoor environment are shown in Figure 3c,d, respectively. The distance ranged from one to eight meters. At each position, we took over 150 measurements. From these figures, we can see that the two smart bracelets behave almost identically. In addition, the RSSI increases again after a few meters, which is due to the outdoor environment, where some trees and walls influence the wireless signal. From the data above, we can conclude that the raw RSSI values are not reliable enough for localization, as is also observed in most of the literature [30,31]. In the next paragraphs, we suggest some statistical filters to improve the stability of the RSSI values and some machine learning techniques to estimate the distance from the RSSI values.

A set of recordings covering the whole range of distances was selected from each experiment to be used for fitting the RSSI distance model. This stage of the analysis is usually referred to as calibration or fitting. More specifically, we collected 100 RSSI measurements at known distance points, ranging from 0.2 to 8 m, depending on the restrictions imposed by the space in which the experiment was conducted. To reduce the fluctuations of RSSI values that occur especially in indoor environments, the following preprocessing methods, also applied in [32], were evaluated:

- Averaging of the RSSI recordings at each distance point,

- Median value of the RSSI recordings at each distance point,

- The trimmed mean,

- Winsorization technique.

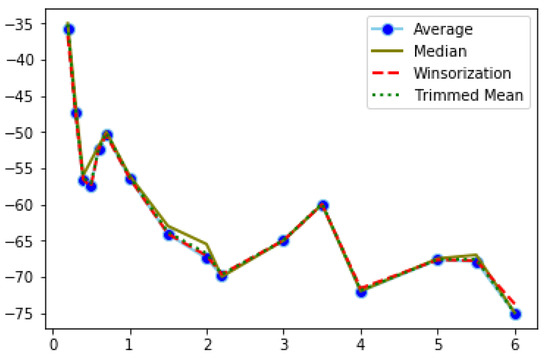

Average and median are well-known descriptive measures; however, the average is more sensitive to outliers. The trimmed mean is the average of the observations after excluding values outside a specific proportion of the data. Winsorization replaces the values exceeding a given lower and upper bound with the values of the respective bounds [32]. Kernel density estimation was also tested, but it did not perform as well as the other methods, so its results are not presented here. For the trimmed mean, the observations exceeding 90% of the data were excluded. The Winsorization technique used 5% as lower and upper bounds. Figure 4, Figure 5 and Figure 6 show the results of the filtered RSSI recordings for the calibration set, obtained from Band 2. The calibration set consists of 100 recordings at each distance point. The signal is expected to weaken as the distance increases; however, this is not always the case, as can be seen from the above-mentioned figures. For the Winsorization technique, the average of the filtered dataset was taken and is presented in the aforementioned figures.
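The four filters can be sketched in a few lines of Python. This is an illustrative implementation under stated assumptions, not the code used in the experiments: the trimmed mean is assumed to discard 5% of the samples from each tail (keeping the central 90%), Winsorization is assumed to clamp at the 5th and 95th percentiles, and the sample readings are invented for the example.

```python
from statistics import mean, median


def _percentile(sorted_vals, q):
    """Linear-interpolation percentile (q in [0, 1]) of an already sorted list."""
    pos = q * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (pos - lo)


def trimmed_mean(values, proportion=0.05):
    """Mean after discarding `proportion` of the samples from each tail."""
    s = sorted(values)
    k = int(len(s) * proportion)
    return mean(s[k:len(s) - k] if k else s)


def winsorize(values, lower=0.05, upper=0.95):
    """Clamp samples outside the given percentiles to those percentiles."""
    s = sorted(values)
    lo, hi = _percentile(s, lower), _percentile(s, upper)
    return [min(max(v, lo), hi) for v in values]


def summarise(values):
    """Apply the four candidate filters to the readings of one distance point."""
    return {
        "mean": mean(values),
        "median": median(values),
        "trimmed_mean": trimmed_mean(values),
        "winsorized_mean": mean(winsorize(values)),
    }


# Invented readings: nineteen stable samples and one outlier.
readings = [-70] * 19 + [-30]
filtered = summarise(readings)
```

In this toy case the single outlier pulls the plain average away from the stable level, while the median and trimmed mean ignore it and the Winsorized mean is only slightly affected.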

Figure 4.

Filtered RSSI recordings using Band 2 in the office.

Figure 5.

Filtered RSSI recordings using Band 2 in the hall.

Figure 6.

Filtered RSSI recordings using Band 2 in the outdoor space.

The fluctuations of RSSI values in the office (Figure 4) are more intense and are observed across all distance points. On the other hand, the filtered RSSI values from the hall (Figure 5), which is an indoor space without obstacles, and from outdoors (Figure 6) range approximately between −74 and −64, while, in the office, the interval is much wider. In both of the latter cases, we observe sharp changes at specific distance points.

The filtered recordings are used to fit the RSSI distance model. The RSSI recordings are assumed to relate to distances logarithmically [33]. The path loss model [34] is used to translate RSSI to distances:
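For orientation, the log-distance form in which the path loss model is commonly written is sketched below. The notation is an assumption made here rather than taken from the text: $A$ is the RSSI at a reference distance $d_0$ (often 1 m), $n$ is the environment-dependent path loss exponent, and $d$ is the distance between transmitter and receiver.

```latex
\mathrm{RSSI}(d) = A - 10\,n\,\log_{10}\!\left(\frac{d}{d_0}\right)
\qquad\Longleftrightarrow\qquad
d = d_0 \cdot 10^{\frac{A - \mathrm{RSSI}(d)}{10\,n}}
```

Under this form, RSSI is linear in $\log_{10} d$, which is why a log-linear regression is a natural candidate during calibration.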

In the current application, linear, log-linear, and polynomial regression models were tested to find the trend of the RSSI curve. In general, linear and polynomial regressions were found to be the best-fitting models, although, in indoor spaces with many obstacles, such as the office, the obtained R² was still quite low. The performance of a model during the fitting stage was assessed by the R² value and the values of the coefficients. Models with higher R² but extremely low coefficients were rejected. Table 1, Table 2, Table 3, Table 4, Table 5 and Table 6 report the best-performing methods for each filtering technique. Inside the office, both bands (Table 1 and Table 2) perform poorly, with R² values around 0.6. The best model is obtained when fitting the trimmed mean of the raw RSSI values with second-degree polynomial regression. The hall was an indoor space without obstacles; therefore, improved fitting of the RSSI values to distances was expected. In this experimental setup, Band 3 performed better. All filtering methods resulted in acceptable R² values, with averaging and Winsorization performing better for Bands 2 and 3, respectively. Surprisingly, in the experiments conducted outdoors, the two bands performed quite differently.
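As a rough illustration of the calibration step, the sketch below fits a linear and a log-linear model by ordinary least squares and reports R² for each. It assumes RSSI is modelled as a function of distance and then inverted for prediction, which may differ from the exact formulation behind the reported tables; polynomial regression is left out for brevity, and the data are synthetic.

```python
import math


def fit_line(x, y):
    """Ordinary least squares for y = a + b*x; returns (a, b, r_squared)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return a, b, 1.0 - ss_res / ss_tot


def fit_log_linear(distances, rssi):
    """Fit rssi = a + b * log10(distance)."""
    return fit_line([math.log10(d) for d in distances], rssi)


def predict_distance(rssi, a, b):
    """Invert the log-linear model to turn an RSSI value into a distance."""
    return 10 ** ((rssi - a) / b)


# Synthetic calibration points generated from rssi = -60 - 20*log10(d).
distances = [1.0, 2.0, 4.0, 8.0]
rssi = [-60 - 20 * math.log10(d) for d in distances]

a_lin, b_lin, r2_lin = fit_line(distances, rssi)
a_log, b_log, r2_log = fit_log_linear(distances, rssi)
estimate = predict_distance(-70.0, a_log, b_log)
```

On real recordings the filtered value of each distance point (mean, median, trimmed mean, or Winsorized mean) would take the place of the synthetic `rssi` list.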

Table 1.

RSSI to distance office band 2.

Table 2.

RSSI to distance office band 3.

Table 3.

RSSI to distance hall band 2.

Table 4.

RSSI to distance hall band 3.

Table 5.

RSSI to distance outdoor band 2.

Table 6.

RSSI to distance outdoor band 3.

The RSSI values of Bands 2 and 3 in the hall were fitted very well, owing to the absence of interfering signals. Outdoors, the RSSI values of Band 3 (Table 6) were very well fitted to the respective distances, while the regression models of the Band 2 recordings (Table 5) performed quite poorly, at a level that would be expected in an indoor space with obstacles. This is mainly because of the sharp increase of RSSI at 7 and 8 m (Figure 6). When these distances were removed from the sample, the applied models reached an R² value of 0.9.

After fitting, the regression models should be applied to new RSSI measurements in order to predict the distances. We are currently working on this task.

6. Semantic Representation and Analysis

6.1. DESMOS Ontology

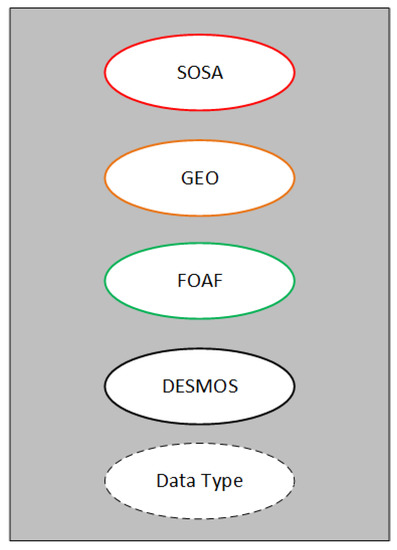

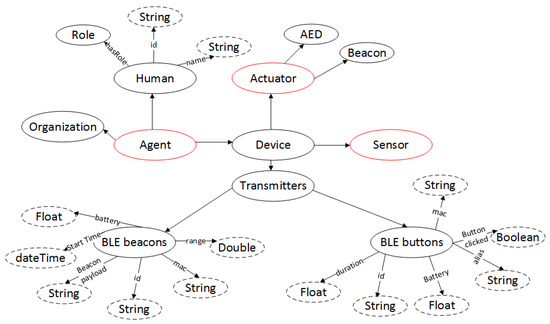

In order to enable the use of deductive reasoning over the collected IoT data to detect hazardous events, we designed the DESMOS ontology. It is an ontology that leverages concepts from SSN and SOSA [12], IoT-lite [14], Geo (i.e., WGS84) and FOAF [17], an ontology whose primary aim is to enhance human awareness using the Web. Figure 7 shows the basic ontologies on which DESMOS is based. Each primary ontology is illustrated with a different color. Data Type stands for any type of data (string, float, integer, etc.).

Figure 7.

Primary ontologies in DESMOS.

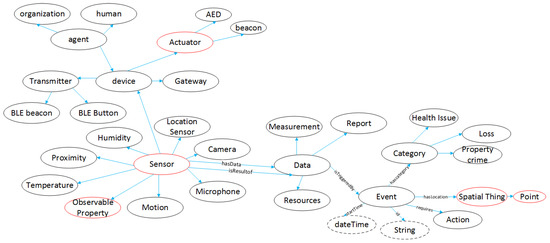

The entities of the DESMOS ontology, combined with the aforementioned ontologies, cover most of the concepts needed for event detection and for the selection and management of the risk response plans. Figure 8 illustrates an abstract representation of these ontologies and how they are linked with the DESMOS ontology. The main entities/concepts that we use for representing data in DESMOS are the following. SOSA Sensor represents the devices or even the agents (including humans when it comes to crowdsourcing) that make observations. SOSA Observation represents the data that are produced by the Sensor class. DESMOS: Event represents the events that are generated through the processing of data (i.e., observations). DESMOS: Action represents the actions that are required when a specific event from the use cases occurs. FOAF: Agent represents human beings or organizations, while DESMOS: Role denotes their role (e.g., public servants, volunteers, etc.). Geo: Point is used for representing the coordinates of a sensor or an event.

Figure 8.

Entities in the DESMOS ontology.

6.2. Entities Description

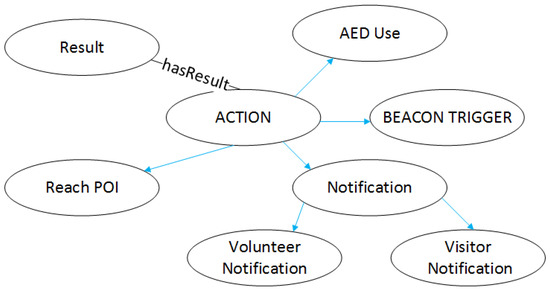

An Action entity is a process that aims to change the state of a process and produce a result. As we can see from Figure 9, an Action might be an AED use, a beacon trigger, a visitor or volunteer notification, or reaching a point of interest. All of these lead to a Result through the hasresult property.

Figure 9.

Action entity.

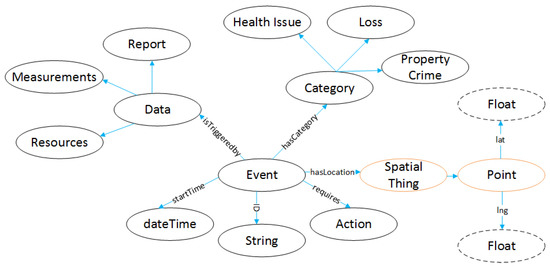

An Event entity is an event derived from the data of the different sensors deployed in the DESMOS use cases. Figure 10 shows how an Event is triggered (isTriggedBy) by the Data class, which consists of Measurements, Reports, and Resources. Each Event belongs to a specific category (hasCategory), depending on the use case scenarios covered by DESMOS. These are Health Issue, Property Crime, and Loss (for missing people or missing items). Each Event has a location (hasLocation) through the Spatial Thing class, which points to a Geo point. In order to identify each event, we add an ID of String class, and we also add a dateTime linked through the startTime property. Finally, every Event has an Action entity, in order to handle the critical situation.

Figure 10.

Event entity.
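To make the shape of the Event entity concrete, the sketch below mirrors its description with plain Python data classes. It is only an illustration of the structure, not part of the ontology itself: the class and attribute names are adapted from the properties mentioned in the text, and the sample values are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime
from enum import Enum
from typing import List


class Category(Enum):
    """Event categories named in the text (hasCategory)."""
    HEALTH_ISSUE = "Health Issue"
    PROPERTY_CRIME = "Property Crime"
    LOSS = "Loss"


@dataclass(frozen=True)
class GeoPoint:
    """Coordinates reached through the Spatial Thing class (hasLocation)."""
    lat: float
    long: float


@dataclass
class Action:
    """Response attached to an event, e.g. a volunteer notification."""
    name: str


@dataclass
class Event:
    id: str                      # string identifier
    category: Category           # hasCategory
    location: GeoPoint           # hasLocation
    start_time: datetime         # startTime
    triggered_by: List[str] = field(default_factory=list)  # ids of Data items
    actions: List[Action] = field(default_factory=list)


# Hypothetical instance for illustration.
event = Event(
    id="event-001",
    category=Category.LOSS,
    location=GeoPoint(lat=39.555, long=21.768),
    start_time=datetime(2019, 12, 1, 18, 30),
    triggered_by=["report-42"],
    actions=[Action("Volunteer notification")],
)
```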

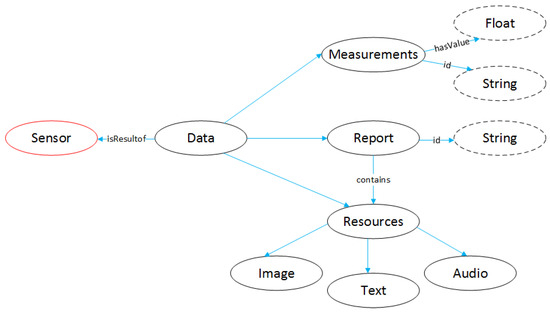

The Data entity refers to the data outputs of the different sensors. In Figure 11, we can see that Data isResultOf the Sensor entity (Figure 12). The Data class may contain Measurements, Reports, or Resources. A Measurement has a string-type ID and a hasValue property of float type. A Resource can be an Image, a Text, or an Audio. A Report has an id string and also contains a Resources class.

Figure 11.

Data Entity.

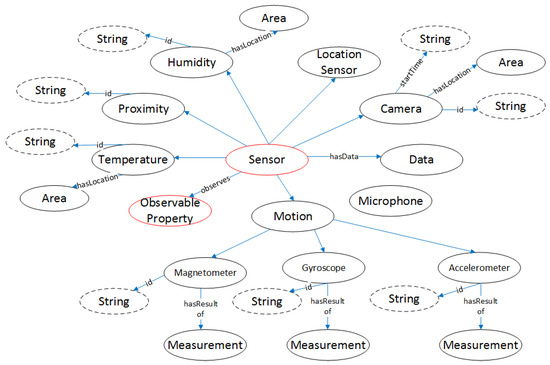

Figure 12.

Sensor entity.

The Sensor class covers all the devices that operate in the use case scenarios. Depending on the measured parameter, Sensor has different subclasses (Temperature, Humidity, Proximity, etc.). The RSSI measurements described in the previous sections will be saved in this entity. The information arrives as a JSON string and is transformed to this entity in order to be semantically queried. The RSSI data are saved as a float number. All the sensors produce data in the Data entity through the hasData property.
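The transformation from a JSON string to ontology instances can be pictured as a mapping from message fields to subject-predicate-object triples. The sketch below is a simplified illustration using plain tuples instead of an RDF library; the namespace URI and the JSON field names `sensor_id`, `measurement_id`, and `rssi` are placeholders, while hasData, isResultOf, and hasValue are the properties named in the text.

```python
import json

# Placeholder namespace; the real ontology IRI is not given in the text.
DESMOS = "http://example.org/desmos#"


def rssi_message_to_triples(raw):
    """Map one JSON RSSI message to (subject, predicate, object) triples."""
    msg = json.loads(raw)
    sensor = DESMOS + "sensor/" + msg["sensor_id"]
    measurement = DESMOS + "measurement/" + msg["measurement_id"]
    return [
        (sensor, "rdf:type", DESMOS + "Sensor"),
        (measurement, "rdf:type", DESMOS + "Measurement"),
        (sensor, DESMOS + "hasData", measurement),
        (measurement, DESMOS + "isResultOf", sensor),
        # RSSI is stored as a float, as described in the text.
        (measurement, DESMOS + "hasValue", float(msg["rssi"])),
    ]


# Hypothetical message for illustration.
triples = rssi_message_to_triples(
    '{"sensor_id": "band2", "measurement_id": "m-001", "rssi": -67}'
)
```

In a full implementation these triples would be written to an RDF store so that they can be queried and reasoned over together with the rest of the ontology.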

The Agent entity (Figure 13) refers to different people, groups of people, software, or devices. It consists of the Organization, Human, and Device classes. A Device can be an Actuator device (an AED, an LED light, a siren) or a Beacon. In addition, a Device can be a gateway or other transmitting device that transfers data from the devices to the DESMOS platform.

Figure 13.

Agent entity.

6.3. Interpretation Layer

The interpretation layer provides a reasoning framework over the ontology representation layer described earlier for achieving context awareness and recognizing critical situations. This is achieved through the combination of the OWL 2 reasoning paradigm and the execution of SPARQL rules [35] in terms of CONSTRUCT query patterns over Resource Description Framework (RDF) graphs.

Although SPARQL is mostly known as a query language for RDF, the CONSTRUCT query form makes it possible to define SPARQL rules that create new RDF data, combining existing RDF graphs into larger ones. Such rules are defined in the interpretation layer in terms of a CONSTRUCT and a WHERE clause: the former defines the graph patterns, i.e., the set of triple patterns that should be added to the underlying RDF graph when the graph patterns in the WHERE clause are matched successfully. As an example, we present the following rule, which triggers a notification to the volunteers that are near an incident reported as a missing child.

    CONSTRUCT {
      [] a :Notification ;
         :to ?v ;
         :location ?l .
      [] a :VolunteerNotification ;
         :to ?d .
    } WHERE {
      ?e a :Missing ;
         :location ?l .
      ?v a :Volunteer ;
         :near ?l .
      ?d a :VisitorNotification ;
    }
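The effect of such a rule can be illustrated without a SPARQL engine by matching the WHERE patterns against a small in-memory list of triples and emitting the new ones. The sketch below covers only the first part of the rule (a Notification for each Volunteer near the location of a Missing event); the resource names are hypothetical, and a real deployment would evaluate the CONSTRUCT query in an RDF store instead.

```python
def is_a(triples, subject, cls):
    """True if the graph states that `subject` has type `cls`."""
    return (subject, "a", cls) in triples


def apply_missing_child_rule(triples):
    """Return the triples the rule would add for volunteers near a Missing event."""
    # WHERE: ?e a :Missing ; :location ?l .
    locations = [
        o for s, p, o in triples
        if p == "location" and is_a(triples, s, "Missing")
    ]
    inferred = []
    for loc in locations:
        # WHERE: ?v a :Volunteer ; :near ?l .
        for s, p, o in triples:
            if p == "near" and o == loc and is_a(triples, s, "Volunteer"):
                node = f"_:n{len(inferred) // 3}"  # fresh blank node per match
                inferred += [
                    (node, "a", "Notification"),
                    (node, "to", s),
                    (node, "location", loc),
                ]
    return inferred


# Hypothetical graph for illustration.
graph = [
    ("event1", "a", "Missing"), ("event1", "location", "gateA"),
    ("vol1", "a", "Volunteer"), ("vol1", "near", "gateA"),
    ("vol2", "a", "Volunteer"), ("vol2", "near", "gateB"),
    ("visitor1", "near", "gateA"),
]
new_triples = apply_missing_child_rule(graph)
```

Only `vol1` is notified here: `vol2` is near a different location and `visitor1` is not typed as a Volunteer.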

7. Use Cases

All of the use case scenarios were the result of two focus groups with representatives from the theme park organizing team, the city’s Municipal Authorities, and the theme park security and health personnel. During these meetings, the participants were asked to report, analyze, and prioritize the most frequent security and protection issues occurring both in the theme park and in the city during the Christmas period. The use case scenarios that came out of the focus groups were then presented, further analyzed, and finalized during a project partners’ meeting. The final three scenarios to be implemented are the following:

- MylosKarpa and CityKarpa: “Certified KARPA users and Defibrillators, Chronic Health Problems such as Diabetes, Heart Diseases”. This use case scenario aims at the immediate and timely treatment of a health incident by the appointed health personnel of the theme park and the certified KARPA users, both in the area of the park and in the city center. A patient, or his/her escort, will be able to press the alert button in the mobile application for visitors. The patient’s location will be communicated to the platform, and the closest member of the personnel will be notified to go to that location. Furthermore, the beacon light of the closest Automated External Defibrillator (AED) will be switched on if needed.

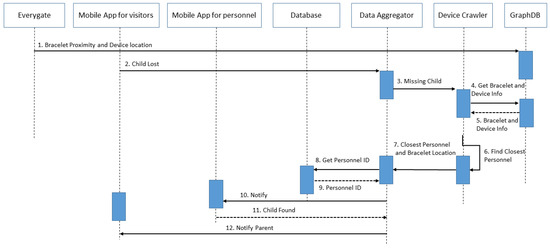

- MylosKidFinder: “Alarm and Locate children that have been lost in the Theme Park”. The second use case scenario concerns applications that will enable the staff and the parents to quickly identify children that have gone missing inside the Theme Park. Visitors to the Theme Park will be able to have their children wear a BLE bracelet. If a guardian loses sight of his/her child, he/she will be able to press a button in the mobile application for visitors, and the platform will try to locate the missing child. The closest personnel member will be notified to find the child. When the child is found, the guardian will be notified about where he/she can meet the child. In addition, when a child goes out of range of the personnel members and the stationary devices, the platform will calculate the child’s last known location and notify the member closest to that location in order to search for the child. The MylosKidFinder use case can be seen in Figure 14. In more detail, when a parent loses his/her child, the Mobile App for visitors will, at the push of the button, send a notification to the Data Aggregator. After that, the Data Aggregator will request a Data Crawler to find the information and position of the bracelet that the child is wearing. In parallel, the Device Crawler will find the personnel nearest to the child’s last position. All these data will be stored in the Database as a missing children event. After that, the Data Aggregator will notify the nearest personnel to find the child, and will notify the parents once their child has been found.

Figure 14. The Unified Modeling Language (UML) diagram of the MylosKidFinder use case.

- MylosSense and CitySense: “Record and Real-time reporting of natural disasters or vandalism of buildings, monuments, points of interest.” The third use case scenario is about real-time reporting of criminal acts, incidents of violence, theft, etc., for the timely handling of dangerous incidents and the recording of their frequency as a preventive criterion for the future, in both the theme park and the city center. Both visitors and personnel members will be able to report events through the mobile applications. The report will include a description, the event location, and a media file. Depending on the severity of the event, personnel members will go to the location to check and proceed accordingly.
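Several of the scenarios above rely on finding the personnel member closest to a reported or last known location. A minimal sketch of that selection is given below, assuming that positions are available as latitude/longitude pairs and using the haversine great-circle distance; the record layout and the coordinates are hypothetical and do not describe the platform's actual data model.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two WGS84 points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def closest_personnel(location, personnel):
    """Return the id of the personnel member nearest to `location` (lat, lon)."""
    lat, lon = location
    nearest = min(personnel, key=lambda p: haversine_m(lat, lon, p["lat"], p["lon"]))
    return nearest["id"]


# Hypothetical positions for illustration.
staff = [
    {"id": "staff-A", "lat": 39.5551, "lon": 21.7681},
    {"id": "staff-B", "lat": 39.5600, "lon": 21.7700},
]
last_known = (39.5550, 21.7680)
to_notify = closest_personnel(last_known, staff)
```

At the scale of a theme park a planar approximation would work equally well; the haversine form is used here only because it needs no map projection.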

All the above use case scenarios will be tested and evaluated in three different testing and evaluation scenarios in order to be adapted in real life conditions.

Description of the Testing Environment

The three use case scenarios will be implemented and evaluated in two different places in real life conditions:

- The Christmas Theme Park “Mill of the Elves”, which operates in the city of Trikala for 40 days each year and attracts a large number of visitors (over 1 million per year during its 40 days of operation). In this case, all use case scenarios will be tested.

- The city center of Trikala, where the protection and security challenges increase during the operating period of the Christmas Theme Park. In this place, the first and the third use case scenarios will be evaluated.

8. Conclusions and Future Work

In this paper, we presented the DESMOS platform, an intelligent interconnected infrastructure for the public security and safety domains. More specifically, we elaborated on the semantic web-enabled framework that overcomes the limitations of device and data heterogeneity. Apart from the ontologies that are used for capturing data and knowledge, we also elaborated on the framework for data collection and crowdsourcing techniques, and provided insights into the localization mechanisms we have developed. Issues around access to devices and the privacy of the information that can be shared were also discussed. In addition, we provided details on the deployment of the framework in a real-world use case that involves people visiting the largest theme park in Greece. The system is used to facilitate a direct and fast response by detecting urgent events and selecting the appropriate actions. The platform requirements were derived from the Theme Park personnel in order to address the public needs as fully as possible.

Author Contributions

Conceptualization, A.C. and C.C.; methodology, A.C.; software, D.N., D.G.K.; validation, G.M., P.K., and A.T.; formal analysis, G.M.; investigation, A.T., C.K.; resources, A.C.; data curation, A.T.; writing–original draft preparation, A.C.; writing–review and editing, G.M., S.V.; visualization, A.T., G.G.; supervision, G.M., S.V., I.K., and C.P.; project administration, G.M.; funding acquisition, F.A., I.K.

Funding

This research has been co-financed by the European Union and Greek national funds through the Operational Program Competitiveness, Entrepreneurship and Innovation, under the call RESEARCH-CREATE-INNOVATE (project code: T1EDK-03487).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hoy, M.B. Alexa, Siri, Cortana, and more: An introduction to voice assistants. Med. Ref. Serv. Q. 2018, 37, 81–88.

- Al-Zaidi, R.; Woods, J.; Al-Khalidi, M.; Alheeti, K.M.A.; McDonald-Maier, K. Next generation marine data networks in an IoT environment. In Proceedings of the 2017 Second International Conference on Fog and Mobile Edge Computing (FMEC), Valencia, Spain, 8–11 May 2017; pp. 50–52.

- Libelium. Available online: http://www.libelium.com/ (accessed on 21 August 2019).

- Weyer, S.; Meyer, T.; Ohmer, M.; Gorecky, D.; Zühlke, D. Future Modeling and Simulation of CPS-based Factories: An Example from the Automotive Industry. IFAC-PapersOnLine 2016, 49, 97–102.

- Latre, S.; Leroux, P.; Coenen, T.; Braem, B.; Ballon, P.; Demeester, P. City of things: An integrated and multi-technology testbed for IoT smart city experiments. In Proceedings of the 2016 IEEE International Smart Cities Conference (ISC2), Trento, Italy, 12–15 September 2016; pp. 1–8.

- Zigbee Alliance. Available online: http://www.zigbee.org/ (accessed on 6 September 2019).

- Z-wave 2019. Available online: http://www.z-wave.com/ (accessed on 6 September 2019).

- Thread Protocol. “What Is Thread”. Available online: https://threadgroup.org (accessed on 6 September 2019).

- Afaneh, M. Bluetooth 5 Speed: How to Achieve Maximum Throughput for Your BLE Application. 2017. Available online: https://www.novelbits.io/bluetooth-5-speed-maximum-throughput/ (accessed on 6 September 2019).

- Soldatos, J.; Kefalakis, N.; Hauswirth, M.; Serrano, M.; Calbimonte, J.-P.; Riahi, M.; Aberer, K. OpenIoT: Open source Internet-of-Things in the cloud. In Interoperability and Open-Source Solutions for the Internet of Things; Springer: Cham, Switzerland, 2015.

- Compton, M. The SSN ontology of the W3C semantic sensor network incubator group. J. Web Semant. 2012, 17, 25–32.

- Janowicz, K.; Haller, A.; Cox, S.; Le Phuoc, D.; Lefrançois, M. SOSA: A lightweight ontology for sensors, observations, samples, and actuators. J. Web Semant. 2018, 17, 1–10.

- Van der Schaaf, H.; Herzog, R. Mapping the OGC sensor things API onto the OpenIoT middleware. In International Workshop on Interoperability and Open-Source Solutions for the Internet of Things; Podnar Žarko, I., Pripužić, K., Serrano, M., Eds.; Springer: Berlin, Germany, 2014; pp. 62–70.

- Bermudez-Edo, M.; Elsaleh, T.; Barnaghi, P.; Taylor, K. IoT-Lite: A Lightweight Semantic Model for the Internet of Things. In Proceedings of the 2016 Intl IEEE Conferences on Ubiquitous Intelligence & Computing, Advanced and Trusted Computing, Scalable Computing and Communications, Cloud and Big Data Computing, Internet of People, and Smart World Congress, Toulouse, France, 18–21 July 2016.

- Agarwal, R.; Fernandez, D.G.; Elsaleh, T.; Gyrard, A.; Lanza, J.; Sanchez, L.; Georgantas, N.; Issarny, V. Unified IoT Ontology to Enable Interoperability and Federation of Testbeds. In Proceedings of the 3rd IEEE World Forum on Internet of Things, Reston, VA, USA, 12–14 December 2016; pp. 70–75.

- Raimond, Y. Event Ontology, Motools.sourceforge.net. 2019. Available online: http://motools.sourceforge.net/event/event.html (accessed on 6 September 2019).

- Brickley, D.; Miller, L. FOAF Vocabulary Specification, Motools. Available online: http://xmlns.com/foaf/spec/ (accessed on 6 September 2019).

- Matheus, C.; Kokar, M.; Baclawski, K. A core ontology for situation awareness. In Proceedings of the Sixth International Conference of Information Fusion, Cairns, Australia, 8–11 July 2003.

- Bobek, S.; Nalepa, G. Uncertain context data management in dynamic mobile environments. In Future Generation Computer Systems; Elsevier: Amsterdam, The Netherlands, 2017; pp. 110–124.

- Adrian, W.; Ciężkowski, P.; Kaczor, K.; Ligęza, A.; Nalepa, G. Web-Based Knowledge Acquisition and Management System Supporting Collaboration for Improving Safety in Urban Environment. In Multimedia Communications, Services and Security; Springer: Berlin/Heidelberg, Germany, 2012.

- Kirchmaier, U.; Hawe, S.; Diepold, K. Dynamical information fusion of heterogeneous sensors for 3D tracking using particle swarm optimization. Inf. Fusion 2011, 12, 275–283.

- Colombo, A.; Fontanelli, D.; Macii, D.; Palopoli, L. Flexible indoor localization and tracking based on a wearable platform and sensor data fusion. IEEE Trans. Instrum. Meas. 2014, 63, 864–876.

- Jiang, C.; Fahad, M.; Guo, Y.; Chen, Y. Robot-assisted smartphone localization for human indoor tracking. Robot. Auton. Syst. 2018, 106, 82–94.

- How it Works - Everygate. Available online: https://www.m2massociates.com/how-it-works/ (accessed on 6 September 2019).

- Build the future of Open Infrastructure. Available online: https://www.openstack.org/ (accessed on 25 August 2019).

- Chatzigeorgiou, C.; Toumanidis, L.; Kogias, D.; Patrikakis, C.; Jacksch, E. A communication gateway architecture for ensuring privacy and confidentiality in incident reporting. In Proceedings of the 15th International Conference on Software Engineering Research, Management and Applications, London, UK, 7–9 June 2017; Volume 106, pp. 407–411.

- General Data Protection Regulation. Available online: https://eugdpr.org/ (accessed on 20 August 2019).

- Mi Band 2. Specifications. 2019. Available online: https://www.mi.com/global/miband2/ (accessed on 5 May 2019).

- Mi Band 3. Specifications. 2019. Available online: https://www.mi.com/global/mi-band-3/ (accessed on 5 May 2019).

- Röbesaat, J.; Zhang, P.; Abdelaal, M.; Theel, O. An improved BLE indoor localization with Kalman-based fusion: An experimental study. Sensors 2017, 17, 951.

- Li, G.; Geng, E.; Ye, Z.; Xu, Y.; Lin, J.; Pang, Y. Indoor positioning algorithm based on the improved RSSI distance model. Sensors 2018, 18, 2820.

- Maratea, A.; Gaglione, S.; Angrisano, A.; Salvi, G.; Nunziata, A. Non parametric and robust statistics for indoor distance estimation through BLE. In Proceedings of the 2018 IEEE International Conference on Environmental Engineering (EE), Milan, Italy, 12–14 March 2018; pp. 1–6.

- Davidson, P.; Piché, R. A survey of selected indoor positioning methods for smartphones. IEEE Commun. Surv. Tutor. 2017, 19, 1347–1370.

- Sadowski, S.; Spachos, P. Rssi-based indoor localization with the internet of things. IEEE Access 2018, 6, 30149–30161.

- Knublauch, H. SPIN - SPARQL Inferencing Notation. Available online: http://spinrdf.org/ (accessed on 25 August 2019).

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).