Computational Stylometrics and the Pauline Corpus: Limits in Authorship Attribution

Abstract

1. Introduction

- The historical Paul authored one or more of these letters.

- Stylometry can contribute to resolving Pauline authorship disputes.

2. Broad Objections to the Validity of Pauline Computational Stylometric Assumptions

2.1. Assumption: The Historical Paul Authored One or More of These Letters

2.2. Assumption: Stylometry Can Contribute to Resolving Pauline Authorship Disputes

3. Basic Study Design

3.1. Core Files

3.2. Data

3.3. Design

3.4. Note on Stylo Tool

4. Computational Methodology

Natural Language Processing Pipeline

1. Vocabulary Richness

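```python
import math
from collections import Counter
from typing import Dict, List


def calculate_vocabulary_richness(self, tokens: List[str]) -> Dict[str, float]:
    if not tokens:
        # Helper defined elsewhere in the analyzer class (not shown)
        return self._empty_vocab_features()

    N = len(tokens)                   # number of tokens
    V = len(set(tokens))              # number of distinct types
    freq_dist = Counter(tokens)
    V1 = sum(1 for freq in freq_dist.values() if freq == 1)  # hapax legomena
    V2 = sum(1 for freq in freq_dist.values() if freq == 2)  # dis legomena

    # Yule's K = 10^4 * (M2 - M1) / M1^2, with M1 = N and M2 = sum of squared type frequencies
    M1 = N
    M2 = sum(freq ** 2 for freq in freq_dist.values())
    yules_k = 10000 * (M2 - M1) / (M1 ** 2) if N > 0 and M1 > 0 else 0

    # Simpson's D: probability that two tokens drawn without replacement share a type
    simpsons_d = sum((freq * (freq - 1)) / (N * (N - 1)) for freq in freq_dist.values()) if N > 1 else 0

    # Herdan's C = log V / log N; needs N > 1 so that log N is non-zero
    herdan_c = math.log(V) / math.log(N) if N > 1 and V > 0 else 0
    # Guiraud's R = V / sqrt(N)
    guiraud_r = V / math.sqrt(N) if N > 0 else 0

    # Shannon entropy (bits) of the type distribution
    probs = [freq / N for freq in freq_dist.values()]
    entropy = -sum(p * math.log2(p) for p in probs if p > 0)

    return {
        'type_token_ratio': V / N if N > 0 else 0,
        'hapax_legomena_ratio': V1 / N if N > 0 else 0,
        'dis_legomena_ratio': V2 / N if N > 0 else 0,
        'yules_k': yules_k,
        'simpsons_d': simpsons_d,
        'herdan_c': herdan_c,
        'guiraud_r': guiraud_r,
        'vocab_size': V,
        'entropy': entropy,
    }
```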
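As a worked check on the formulas above, the standalone sketch below computes several of the same measures for a four-token list. The function name `vocab_richness` is hypothetical and not part of the author's code, and it assumes at least two tokens. For `['a', 'b', 'a', 'c']`, N = 4 and V = 3, the type frequencies are 2, 1 and 1, so M2 = 4 + 1 + 1 = 6 and Yule's K = 10000 × (6 - 4) / 4² = 1250; the type probabilities 0.5, 0.25 and 0.25 give an entropy of 1.5 bits.

```python
import math
from collections import Counter
from typing import Dict, List


def vocab_richness(tokens: List[str]) -> Dict[str, float]:
    """Hypothetical standalone version of a subset of the measures (assumes len(tokens) >= 2)."""
    n = len(tokens)                      # N: number of tokens
    freqs = Counter(tokens)
    v = len(freqs)                       # V: number of types
    m2 = sum(f * f for f in freqs.values())
    return {
        "type_token_ratio": v / n,
        "hapax_legomena_ratio": sum(1 for f in freqs.values() if f == 1) / n,
        "yules_k": 10000 * (m2 - n) / n ** 2,
        "simpsons_d": sum(f * (f - 1) for f in freqs.values()) / (n * (n - 1)),
        "herdan_c": math.log(v) / math.log(n),
        "guiraud_r": v / math.sqrt(n),
        "entropy": -sum((f / n) * math.log2(f / n) for f in freqs.values()),
    }


features = vocab_richness(["a", "b", "a", "c"])
print(features["yules_k"])   # 1250.0
print(features["entropy"])   # 1.5
```

Because Yule's K and Simpson's D depend on repeated types rather than on N alone, they are usually treated as less length-sensitive than the raw type-token ratio.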

2. Sentence Complexity Analysis

```python
from typing import Dict, List

import numpy as np


def calculate_sentence_complexity(self, tokenized_sentences: List[List[str]]) -> Dict[str, float]:
    if not tokenized_sentences:
        return self._empty_complexity_features()  # helper defined elsewhere in the class
    # Sentence lengths in tokens, skipping empty sentences
    lengths = [len(sent) for sent in tokenized_sentences if sent]
    if not lengths:
        return self._empty_complexity_features()

    mean_length = np.mean(lengths)
    # Coefficient of variation (σ/μ), using the population standard deviation
    complexity_score = np.std(lengths) / mean_length if mean_length > 0 else 0
    short_ratio = sum(1 for length in lengths if length < 10) / len(lengths)  # fewer than 10 tokens
    long_ratio = sum(1 for length in lengths if length > 20) / len(lengths)   # more than 20 tokens

    return {
        'mean_length': float(mean_length),
        'std_length': float(np.std(lengths)),
        'median_length': float(np.median(lengths)),
        'length_variance': float(np.var(lengths)),
        'num_sentences': len(lengths),
        'complexity_score': float(complexity_score),  # coefficient of variation (σ/μ)
        'short_sentence_ratio': short_ratio,
        'long_sentence_ratio': long_ratio,
    }
```
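A small worked example of the same statistics, written with the standard-library `statistics` module instead of NumPy (the helper name `sentence_length_profile` is hypothetical and it assumes at least one non-empty sentence). Sentences of 5, 15 and 25 tokens have mean 15 and population standard deviation √(200/3) ≈ 8.165, so the coefficient of variation is about 0.544; one sentence falls under 10 tokens and one over 20.

```python
import statistics
from typing import Dict, List


def sentence_length_profile(sentences: List[List[str]]) -> Dict[str, float]:
    """Hypothetical helper mirroring the length statistics above (population SD)."""
    lengths = [len(s) for s in sentences if s]
    mean = statistics.fmean(lengths)
    std = statistics.pstdev(lengths)  # population SD, like np.std's default ddof=0
    return {
        "mean_length": mean,
        "complexity_score": std / mean,  # coefficient of variation
        "short_sentence_ratio": sum(n < 10 for n in lengths) / len(lengths),
        "long_sentence_ratio": sum(n > 20 for n in lengths) / len(lengths),
    }


profile = sentence_length_profile([["w"] * 5, ["w"] * 15, ["w"] * 25])
print(profile["mean_length"])  # 15.0
```

Dividing by the mean makes the dispersion measure scale-free, so a letter with generally longer sentences is not scored as more "complex" merely for that reason.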

3. Greek Function Word Analysis

```python
from collections import Counter
from typing import Dict, List


def calculate_function_word_features(self, tokens: List[str]) -> Dict[str, float]:
    if not tokens:
        # Return every key (including the total) so feature vectors stay aligned
        features = {f'{category}_ratio': 0.0 for category in self.function_words}
        features['total_function_word_ratio'] = 0.0
        return features

    total_tokens = len(tokens)
    token_counts = Counter(tokens)
    features = {}
    for category, words in self.function_words.items():
        # Exact string match: accent variants (e.g. grave vs. acute) count as different tokens
        count = sum(token_counts.get(word, 0) for word in words)
        features[f'{category}_ratio'] = count / total_tokens

    # Sum of the per-category ratios, computed before the total key is added
    features['total_function_word_ratio'] = sum(features.values())
    return features
```

```python
# Function-word inventory assigned on the analyzer instance (e.g. in its constructor)
self.function_words = {
    'articles': ['ὁ', 'ἡ', 'τό', 'οἱ', 'αἱ', 'τά', 'τοῦ', 'τῆς', 'τῶν', 'τῷ', 'τῇ', 'τοῖς', 'ταῖς', 'τόν', 'τήν'],
    'particles': ['δέ', 'γάρ', 'οὖν', 'μέν', 'δή', 'τε', 'γε', 'μήν', 'τοι', 'ἄρα'],
    'conjunctions': ['καί', 'ἀλλά', 'ἤ', 'οὐδέ', 'μηδέ', 'εἰ', 'ἐάν', 'ὅτι', 'ἵνα', 'ὡς'],
    'prepositions': ['ἐν', 'εἰς', 'ἐκ', 'ἀπό', 'πρός', 'διά', 'ἐπί', 'κατά', 'μετά', 'περί', 'ὑπό', 'παρά'],
    'pronouns': ['αὐτός', 'οὗτος', 'ἐκεῖνος', 'τις', 'τί', 'ὅς', 'ἥ', 'ὅ', 'ἐγώ', 'σύ'],
}
```
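One practical caveat with exact-match lookup: polytonic Greek writes an oxytone word with a grave accent when another word follows directly (καὶ, δὲ, γὰρ), whereas the lists above use acute forms (καί, δέ, γάρ). This section does not say whether the author's tokenizer normalizes this. Assuming it does not, a minimal sketch of one normalization approach using only Python's `unicodedata` module follows; `normalize_accents` and `function_word_counts` are hypothetical helper names. NFC composition also unifies the two Unicode encodings of acute-accented vowels (tonos and oxia).

```python
import unicodedata
from collections import Counter
from typing import Dict, Iterable, List

GRAVE = "\u0300"  # combining grave accent
ACUTE = "\u0301"  # combining acute accent


def normalize_accents(token: str) -> str:
    """Map grave to acute and return NFC, so 'καὶ' and 'καί' compare equal."""
    decomposed = unicodedata.normalize("NFD", token)
    return unicodedata.normalize("NFC", decomposed.replace(GRAVE, ACUTE))


def function_word_counts(tokens: Iterable[str], function_words: Dict[str, List[str]]) -> Dict[str, int]:
    """Hypothetical helper: per-category counts after normalizing tokens and list entries."""
    counts = Counter(normalize_accents(t) for t in tokens)
    return {
        category: sum(counts[normalize_accents(w)] for w in words)
        for category, words in function_words.items()
    }


sample = ["καὶ", "ὁ", "λόγος", "δὲ", "καί"]
print(function_word_counts(sample, {"conjunctions": ["καί"], "particles": ["δέ"]}))
# {'conjunctions': 2, 'particles': 1}
```

Without the normalization step, the same sample would count only one conjunction and no particles.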

4. Morphological Complexity

```python
from typing import Dict


def calculate_morphological_features(self, nlp_features: Dict) -> Dict[str, float]:
    features = {
        'morphological_diversity': 0,
        'tense_variation': 0,  # initialized but not computed in this excerpt
        'lemma_token_ratio': 0,
    }

    # Share of distinct (non-empty) morphological analyses among all analyzed words
    morph_features = nlp_features.get('morphological_features')
    if morph_features:
        unique_patterns = len(set(str(f) for f in morph_features if f))
        total_words = len(morph_features)
        features['morphological_diversity'] = unique_patterns / total_words if total_words > 0 else 0

    # Lemma-level analogue of the type-token ratio
    lemmas = nlp_features.get('lemmas')
    if lemmas:
        features['lemma_token_ratio'] = len(set(lemmas)) / len(lemmas)

    return features
```
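To illustrate the two ratios, here is a standalone sketch on hand-made input. The input shape is an assumption: the source does not show what the NLP stage emits, so morphological analyses are represented as hypothetical Universal Dependencies-style feature strings and lemmas as plain strings, and `morph_ratios` is a hypothetical name. As in the method above, words with an empty analysis are excluded from the numerator but still counted in the diversity denominator.

```python
from typing import Dict, List, Optional


def morph_ratios(morph: List[Optional[str]], lemmas: List[str]) -> Dict[str, float]:
    """Hypothetical standalone version of the two ratios computed above."""
    unique_patterns = len({str(m) for m in morph if m})        # empty analyses skipped here...
    diversity = unique_patterns / len(morph) if morph else 0.0  # ...but counted in the denominator
    lemma_ratio = len(set(lemmas)) / len(lemmas) if lemmas else 0.0
    return {"morphological_diversity": diversity, "lemma_token_ratio": lemma_ratio}


# Four words: two share an analysis, one has no analysis (None)
morph = ["Case=Nom|Number=Sing", "Case=Nom|Number=Sing", "Case=Gen|Number=Sing", None]
lemmas = ["λόγος", "λόγος", "θεός", "καί"]
print(morph_ratios(morph, lemmas))
# {'morphological_diversity': 0.5, 'lemma_token_ratio': 0.75}
```

Because inflected forms collapse to one lemma, the lemma ratio is not inflated by case or tense variation in the way a surface-token type-token ratio is.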

5. N-gram Feature Analysis

```python
from collections import Counter
from typing import Dict, List, Tuple


def extract_ngrams(self, tokens: List[str], n: int) -> List[Tuple[str, ...]]:
    # Contiguous token windows of length n
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def calculate_ngram_frequency(self, tokens: List[str], n: int = 2) -> Dict[Tuple[str, ...], float]:
    ngrams = self.extract_ngrams(tokens, n)
    if not ngrams:
        return {}
    ngram_counts = Counter(ngrams)
    total_ngrams = len(ngrams)
    # Relative frequencies of the 50 most common n-grams
    return {ngram: count / total_ngrams for ngram, count in ngram_counts.most_common(50)}


def get_tfidf_features(self, text: str) -> Dict[str, float]:
    # Requires the vectorizers created in __init__ to have been trained by fit()
    if not getattr(self, 'is_fitted', False):
        raise ValueError("TF-IDF vectorizers must be fitted first")
    features = {}

    # Character n-gram TF-IDF weights (non-zero entries only)
    char_tfidf = self.tfidf.transform([text])
    char_feature_names = self.tfidf.get_feature_names_out()
    for i, score in enumerate(char_tfidf.toarray()[0]):
        if score > 0:
            features[f'char_tfidf_{char_feature_names[i]}'] = score

    # Word-level TF-IDF weights (non-zero entries only)
    word_tfidf = self.word_tfidf.transform([text])
    word_feature_names = self.word_tfidf.get_feature_names_out()
    for i, score in enumerate(word_tfidf.toarray()[0]):
        if score > 0:
            features[f'word_tfidf_{word_feature_names[i]}'] = score

    return features
```
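A worked example of the n-gram step, as a standalone sketch (the function name `ngram_relative_frequencies` is hypothetical). The five tokens ἐν χριστῷ ἰησοῦ ἐν χριστῷ yield four bigrams, of which (ἐν, χριστῷ) occurs twice, giving a relative frequency of 2/4 = 0.5.

```python
from collections import Counter
from typing import Dict, List, Tuple


def ngram_relative_frequencies(tokens: List[str], n: int = 2, top: int = 50) -> Dict[Tuple[str, ...], float]:
    """Hypothetical standalone version of extract_ngrams + calculate_ngram_frequency."""
    ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    if not ngrams:
        return {}
    counts = Counter(ngrams)
    # Denominator is the total number of n-grams, not only the retained top ones
    return {g: c / len(ngrams) for g, c in counts.most_common(top)}


freqs = ngram_relative_frequencies(["ἐν", "χριστῷ", "ἰησοῦ", "ἐν", "χριστῷ"])
print(freqs[("ἐν", "χριστῷ")])  # 0.5
```

Because the retained top-50 n-grams differ from letter to letter, the resulting dictionaries have different keys and must be mapped onto a shared vocabulary before they can be stacked into one feature matrix.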

6. TF-IDF Analysis

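```python
from typing import List

from sklearn.feature_extraction.text import TfidfVectorizer


def __init__(self):
    # Character n-grams of length 3-5; analyzer='char' lets n-grams span word boundaries
    self.tfidf = TfidfVectorizer(
        analyzer='char',
        ngram_range=(3, 5),
        max_features=1000,
    )
    # Word unigrams found in at least 2 documents and at most 80% of documents.
    # The default token_pattern keeps tokens of two or more word characters,
    # so one-letter words such as ὁ or ἡ are not word features.
    self.word_tfidf = TfidfVectorizer(
        analyzer='word',
        max_features=500,
        min_df=2,
        max_df=0.8,
    )
    self.is_fitted = False


def fit(self, texts: List[str]):
    # Learn vocabularies and IDF weights from the full corpus
    self.tfidf.fit(texts)
    self.word_tfidf.fit(texts)
    self.is_fitted = True
```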
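Both vectorizers use scikit-learn's default weighting, which (as we understand the scikit-learn documentation) is raw term count × smoothed IDF, idf(t) = ln((1 + n) / (1 + df(t))) + 1, followed by L2 normalization of each document vector. The pure-Python sketch below reproduces that weighting so individual numbers can be inspected. The function names are hypothetical, `char_ngrams` only approximates `analyzer='char'` (lowercasing and collapsing whitespace runs before sliding the window), and the `min_df`, `max_df` and `max_features` vocabulary pruning is omitted.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def char_ngrams(text: str, min_n: int = 3, max_n: int = 5) -> List[str]:
    """Approximation of analyzer='char': lowercase, collapse whitespace runs, slide windows."""
    text = re.sub(r"\s\s+", " ", text.lower())
    return [text[i:i + n] for n in range(min_n, max_n + 1) for i in range(len(text) - n + 1)]


def tfidf(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Count x smoothed IDF, then L2-normalize each document (hypothetical helper)."""
    n_docs = len(docs)
    df = Counter(term for doc in docs for term in set(doc))  # document frequency
    idf = {t: math.log((1 + n_docs) / (1 + d)) + 1 for t, d in df.items()}
    weighted = []
    for doc in docs:
        raw = {t: c * idf[t] for t, c in Counter(doc).items()}
        norm = math.sqrt(sum(w * w for w in raw.values())) or 1.0
        weighted.append({t: w / norm for t, w in raw.items()})
    return weighted


w = tfidf([["ἐν", "χριστῷ"], ["ἐν", "κυρίῳ"]])
# "ἐν" occurs in both documents, so it gets the minimum IDF (1.0) and a lower weight
print(w[0]["ἐν"] < w[0]["χριστῷ"])  # True
```

The same weighting applies unchanged to the character n-gram lists produced by `char_ngrams`; only the unit being counted differs.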

5. Machine Learning and Clustering Methodology

5.1. Manuscript Comparison

```python
from typing import Any, Dict, List

import numpy as np
from sklearn.decomposition import PCA
from sklearn.feature_selection import VarianceThreshold
from sklearn.preprocessing import RobustScaler


class SimilarityCalculator:
    def __init__(self, feature_selection_k: int = 100, pca_components: float = 0.95):
        self.scaler = RobustScaler()  # median + IQR scaling
        self.variance_filter = VarianceThreshold(threshold=0.01)  # remove near-constant features
        # A float n_components keeps enough components to explain 95% of the variance
        self.pca = PCA(n_components=pca_components, svd_solver='auto')
        self.global_tfidf_vocab = set()
        self.global_ngram_vocab = set()

    def build_global_vocabulary(self, all_features: List[Dict[str, Any]]):
        print("global vocabulary for consistent features")
        # Work on a set copy so repeated calls do not fail once the vocab is a sorted list
        vocab = set(self.global_tfidf_vocab)
        for features in all_features:
            if 'tfidf' in features:
                # Keep each manuscript's 50 highest-weighted TF-IDF features
                top_tfidf = sorted(features['tfidf'].items(), key=lambda x: x[1], reverse=True)[:50]
                vocab.update(key for key, _ in top_tfidf)
        # A sorted list gives a stable feature order across manuscripts
        self.global_tfidf_vocab = sorted(vocab)
        print(f"Global TF-IDF vocabulary size: {len(self.global_tfidf_vocab)}")

    def fit_transform_features(self, feature_matrices: List[np.ndarray], manuscript_names: List[str]) -> np.ndarray:
        X = np.vstack(feature_matrices)
        # Replace NaN and +/-inf with 0 before filtering and scaling
        X = np.nan_to_num(X, nan=0.0, posinf=0.0, neginf=0.0)
        print(f"Original feature matrix shape: {X.shape}")
        X_filtered = self.variance_filter.fit_transform(X)
        print(f"After variance filtering: {X_filtered.shape}")
        X_scaled = self.scaler.fit_transform(X_filtered)
        X_pca = self.pca.fit_transform(X_scaled)
        print(f"After PCA: {X_pca.shape}")
        print(f"Explained variance ratio: {self.pca.explained_variance_ratio_.sum():.3f}")
        return X_pca
```

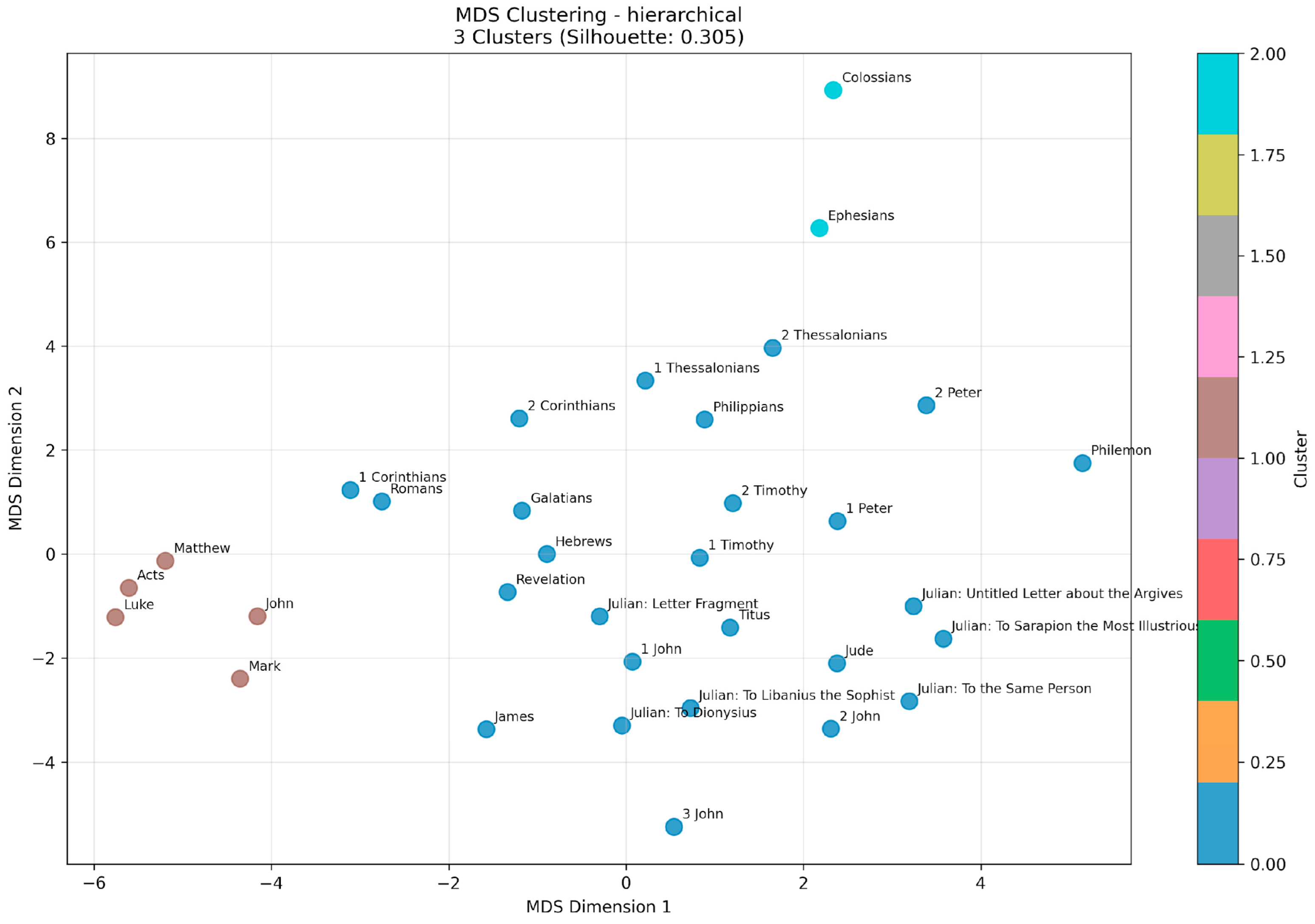
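The `RobustScaler` step centres each feature on its median and divides by its interquartile range (scikit-learn's default `quantile_range=(25.0, 75.0)`), so a single letter with an extreme value on one feature does not dominate that feature's scaling as it would under mean and standard-deviation standardization. A pure-Python sketch of the per-column transform follows; the helper names are hypothetical, percentiles use linear interpolation (NumPy's default method), and a zero IQR falls back to a scale of 1, which is how we understand scikit-learn to handle constant columns.

```python
from typing import List


def percentile(sorted_vals: List[float], q: float) -> float:
    """Linear-interpolation percentile of an already sorted list, q in [0, 100]."""
    pos = (len(sorted_vals) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (pos - lo)


def robust_scale(column: List[float]) -> List[float]:
    """(x - median) / IQR for one feature column; a zero IQR falls back to 1."""
    s = sorted(column)
    median = percentile(s, 50)
    iqr = percentile(s, 75) - percentile(s, 25)
    scale = iqr if iqr != 0 else 1.0
    return [(x - median) / scale for x in column]


print(robust_scale([1, 2, 3, 4, 100]))  # [-1.0, -0.5, 0.0, 0.5, 48.5]
```

The outlier (100) stays far from the rest, but the four ordinary values keep their spacing; under z-score scaling the outlier would inflate the standard deviation and compress them together.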

5.2. Clustering Algorithm Testing

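```python
from typing import Dict

import pandas as pd


def find_optimal_clustering(self) -> Dict:
    # all_results (per-run records with a 'silhouette' field) is assembled earlier
    # in this method; that code is elided in the source
    results_df = pd.DataFrame(all_results)
    # idxmax returns an index label, so select the row by label with .loc
    best_idx = results_df['silhouette'].idxmax()
    best_result = results_df.loc[best_idx]
    ...
```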

```python
from typing import Dict, Tuple

import numpy as np
from sklearn.cluster import AgglomerativeClustering, KMeans
from sklearn.metrics import silhouette_score


def perform_clustering(self, n_clusters_range: Tuple[int, int] = (2, 33)) -> Dict:
    X = self.similarity_calculator.fit_transform_features(
        self.feature_matrices, self.manuscript_names
    )
    min_clusters, max_clusters = n_clusters_range
    # silhouette_score requires 2 <= number of clusters <= n_samples - 1
    max_clusters = min(max_clusters, X.shape[0] - 1)

    clustering_results = {}
    np.random.seed(42)
    for n_clusters in range(min_clusters, max_clusters + 1):
        cluster_results = {}

        kmeans = KMeans(n_clusters=n_clusters, random_state=42, n_init=10)
        kmeans_labels = kmeans.fit_predict(X)
        cluster_results['kmeans'] = {
            'labels': kmeans_labels,
            'silhouette': silhouette_score(X, kmeans_labels),
        }

        # Ward linkage merges the pair of clusters whose union least increases within-cluster variance
        hierarchical = AgglomerativeClustering(n_clusters=n_clusters, linkage='ward')
        hierarchical_labels = hierarchical.fit_predict(X)
        cluster_results['hierarchical'] = {
            'labels': hierarchical_labels,
            'silhouette': silhouette_score(X, hierarchical_labels),
        }

        clustering_results[n_clusters] = cluster_results
    return clustering_results
```
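Model selection in `find_optimal_clustering` keeps the run with the highest silhouette score. For each point, s(i) = (b(i) - a(i)) / max(a(i), b(i)), where a(i) is the mean distance to the other members of its own cluster and b(i) is the smallest mean distance to the members of any other cluster; the score is the mean of s(i), and points in singleton clusters get s(i) = 0, as in scikit-learn. The pure-Python sketch below works on one-dimensional toy data with absolute difference as the distance; the function name `silhouette` is hypothetical and, like `silhouette_score`, it assumes at least two distinct labels.

```python
from statistics import fmean
from typing import List, Sequence


def silhouette(points: Sequence[float], labels: Sequence[int]) -> float:
    """Mean silhouette coefficient using absolute difference as the distance."""
    scores: List[float] = []
    for i, (x, own) in enumerate(zip(points, labels)):
        # a(i): mean distance to the other members of the same cluster
        same = [abs(x - y) for j, (y, lab) in enumerate(zip(points, labels)) if lab == own and j != i]
        if not same:
            scores.append(0.0)  # singleton cluster
            continue
        a = fmean(same)
        # b(i): smallest mean distance to the members of any other cluster
        b = min(
            fmean([abs(x - y) for y, lab in zip(points, labels) if lab == other])
            for other in set(labels)
            if other != own
        )
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return fmean(scores)


pts = [0.0, 1.0, 10.0, 11.0]
print(round(silhouette(pts, [0, 0, 1, 1]), 4))  # well separated: 0.8997
print(round(silhouette(pts, [0, 1, 0, 1]), 4))  # mixed clusters: -0.45
```

Because s(i) compares within-cluster to between-cluster distances on a fixed scale from -1 to 1, it can be compared across the different `n_clusters` values in the loop above, which is what allows it to serve as the selection criterion.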

5.3. Mathematical Foundations of Hierarchical Clustering

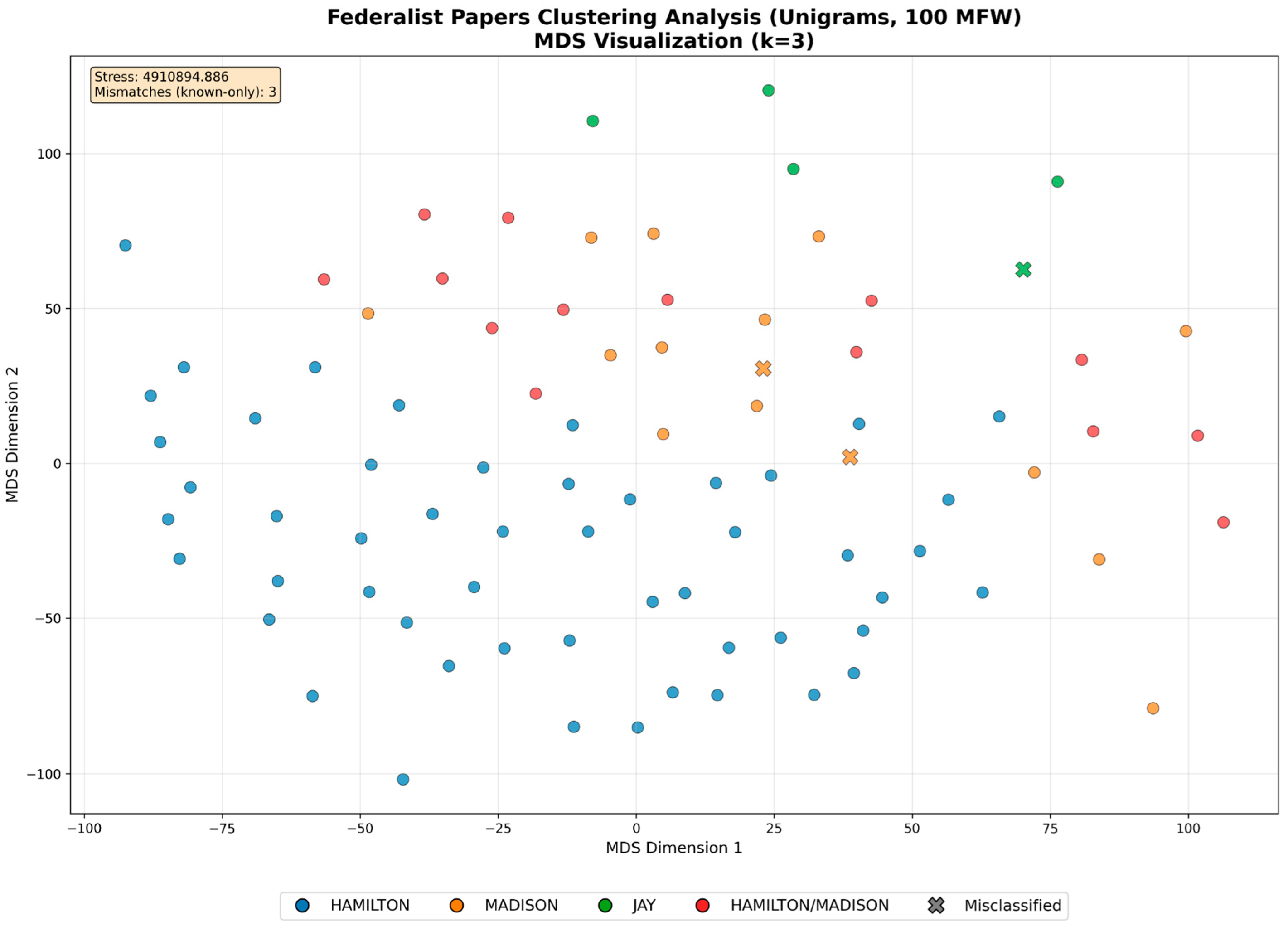

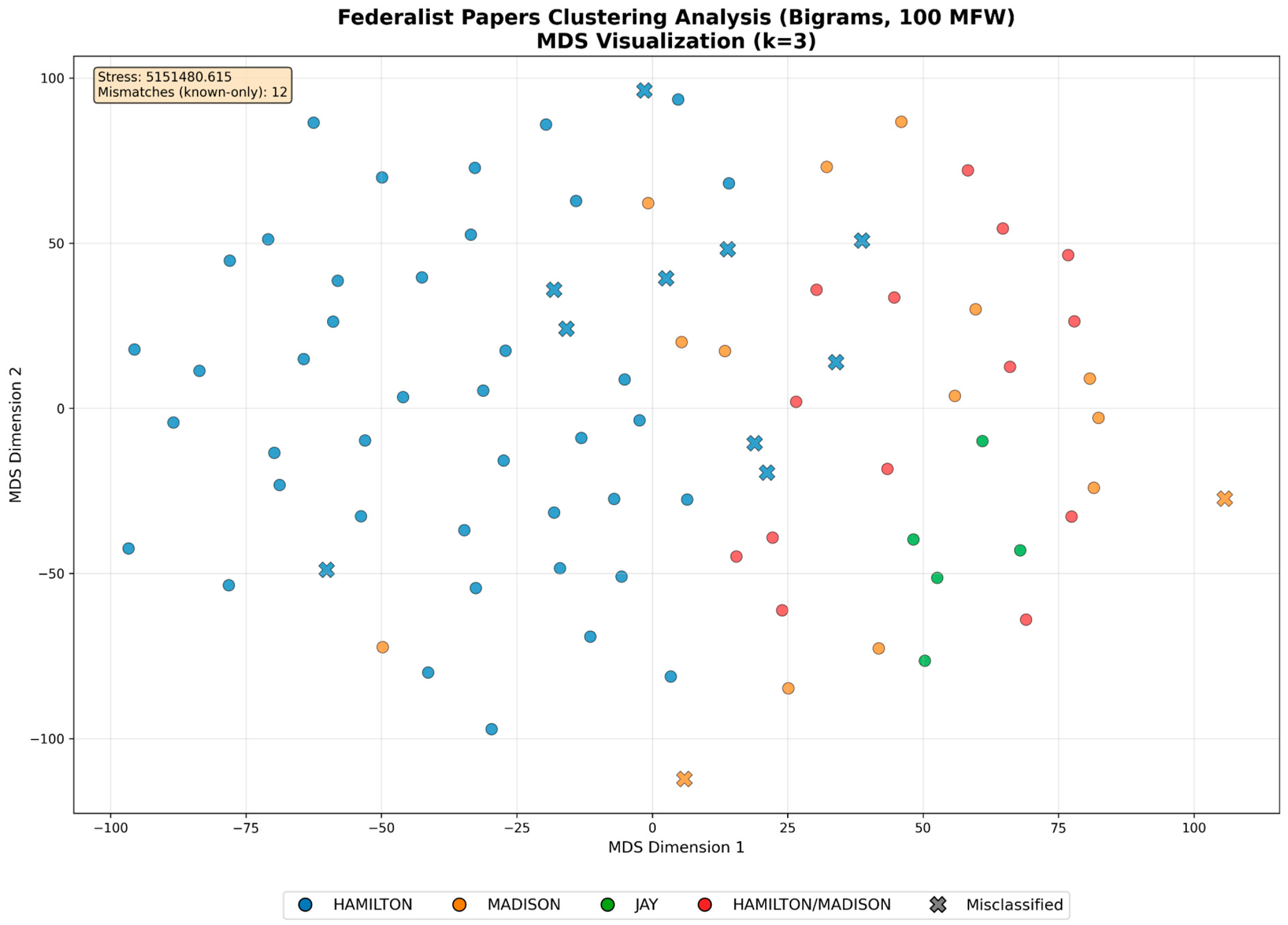

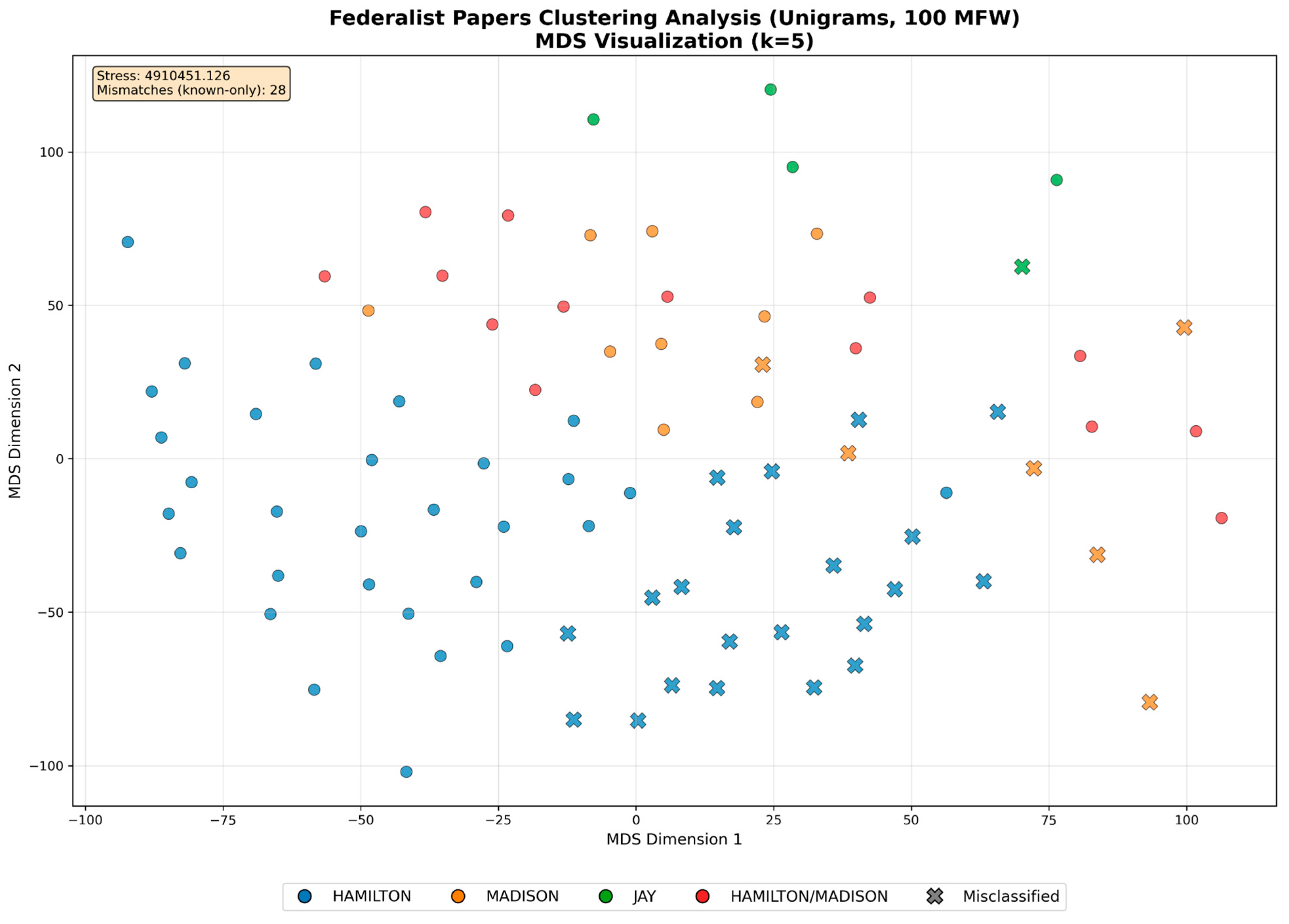

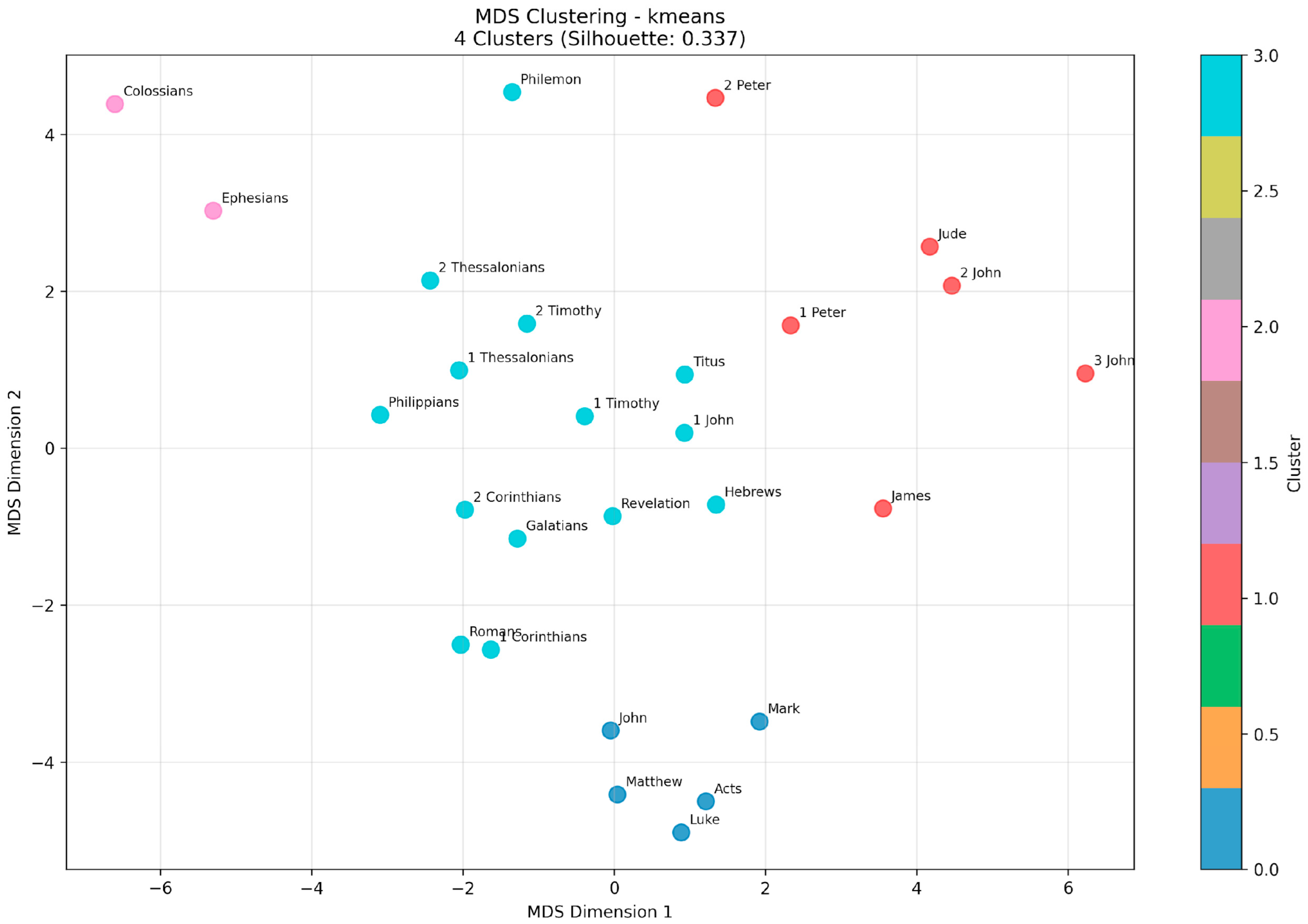

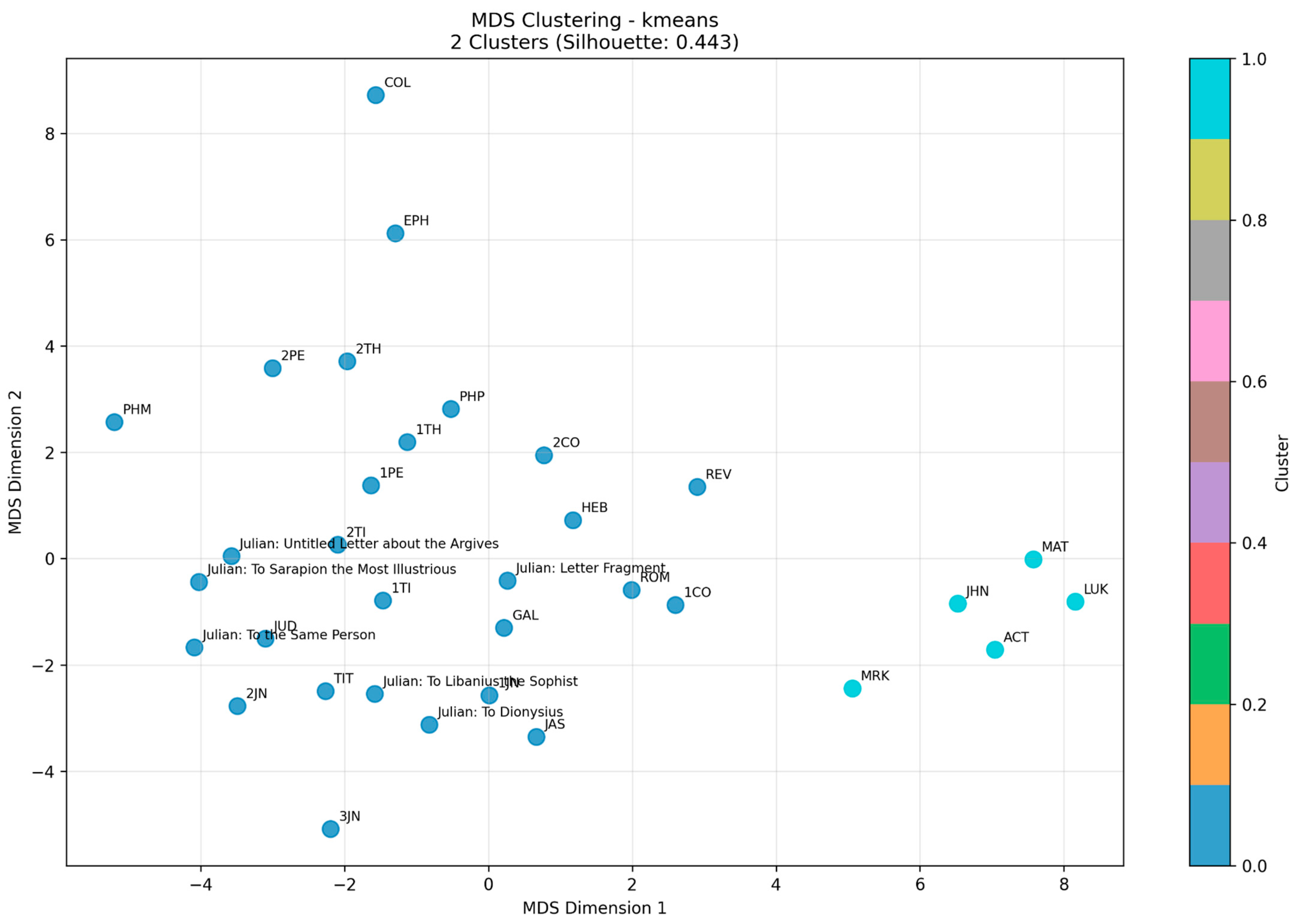
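As a textual complement to the `linkage='ward'` option used in `perform_clustering` (not a reconstruction of the figures above): Ward's method merges, at each step, the pair of clusters A and B whose union gives the smallest increase in the total within-cluster sum of squared Euclidean distances, Δ(A, B) = |A|·|B| / (|A| + |B|) · ‖μ_A − μ_B‖², where μ denotes a cluster centroid. The pure-Python sketch below, with hypothetical helper names, checks that identity on three toy points; the pair with the smallest cost, (0, 0) and (0, 1), would be merged first.

```python
from typing import Sequence, Tuple

Point = Tuple[float, ...]


def centroid(cluster: Sequence[Point]) -> Point:
    """Coordinate-wise mean of the points in a cluster."""
    return tuple(sum(coord) / len(cluster) for coord in zip(*cluster))


def sse(cluster: Sequence[Point]) -> float:
    """Within-cluster sum of squared Euclidean distances to the centroid."""
    c = centroid(cluster)
    return sum(sum((x - m) ** 2 for x, m in zip(p, c)) for p in cluster)


def ward_cost(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Increase in total SSE caused by merging clusters a and b."""
    ca, cb = centroid(a), centroid(b)
    dist2 = sum((x - y) ** 2 for x, y in zip(ca, cb))
    return len(a) * len(b) / (len(a) + len(b)) * dist2


a, b, c = [(0.0, 0.0)], [(0.0, 1.0)], [(5.0, 5.0)]
print(ward_cost(a, b))               # 0.5
print(ward_cost(b, c))               # 20.5
print(sse(a + b) - sse(a) - sse(b))  # 0.5, the same increase computed directly
```

Because the criterion is defined through squared Euclidean distances to centroids, scikit-learn only accepts Euclidean distances with Ward linkage.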

6. Results and Analysis

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rosa, A. Computational Stylometrics and the Pauline Corpus: Limits in Authorship Attribution. Religions 2025, 16, 1264. https://doi.org/10.3390/rel16101264
