Underwater Image Enhancement and Mosaicking System Based on A-KAZE Feature Matching

Abstract

1. Introduction

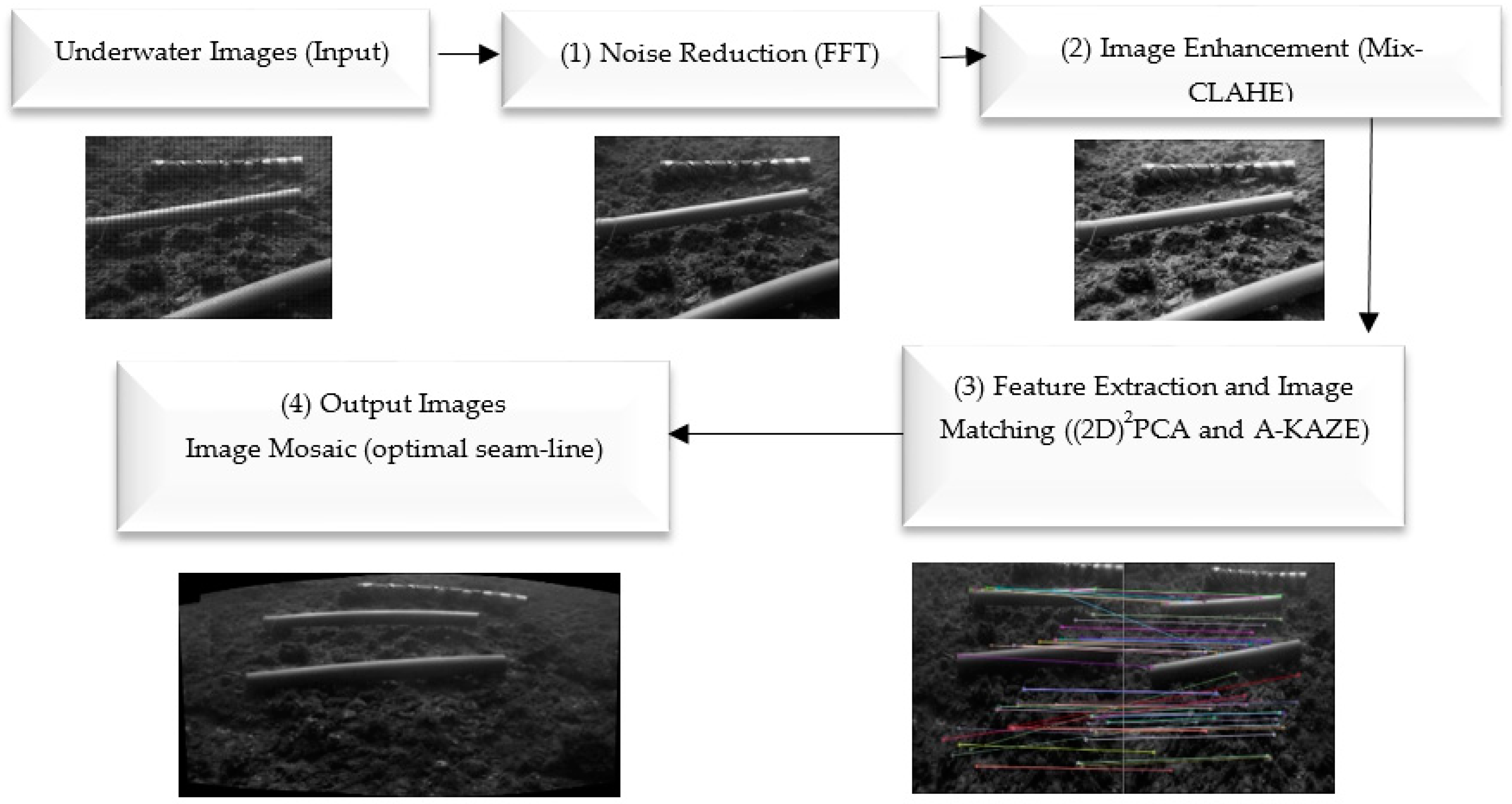

2. Materials and Methods

- Underwater noise is removed from the images using the Fast Fourier Transform (FFT) technique.

- The contrast and intensity of the images are increased using the Mixture Contrast Limited Adaptive Histogram Equalization (Mix-CLAHE) technique.

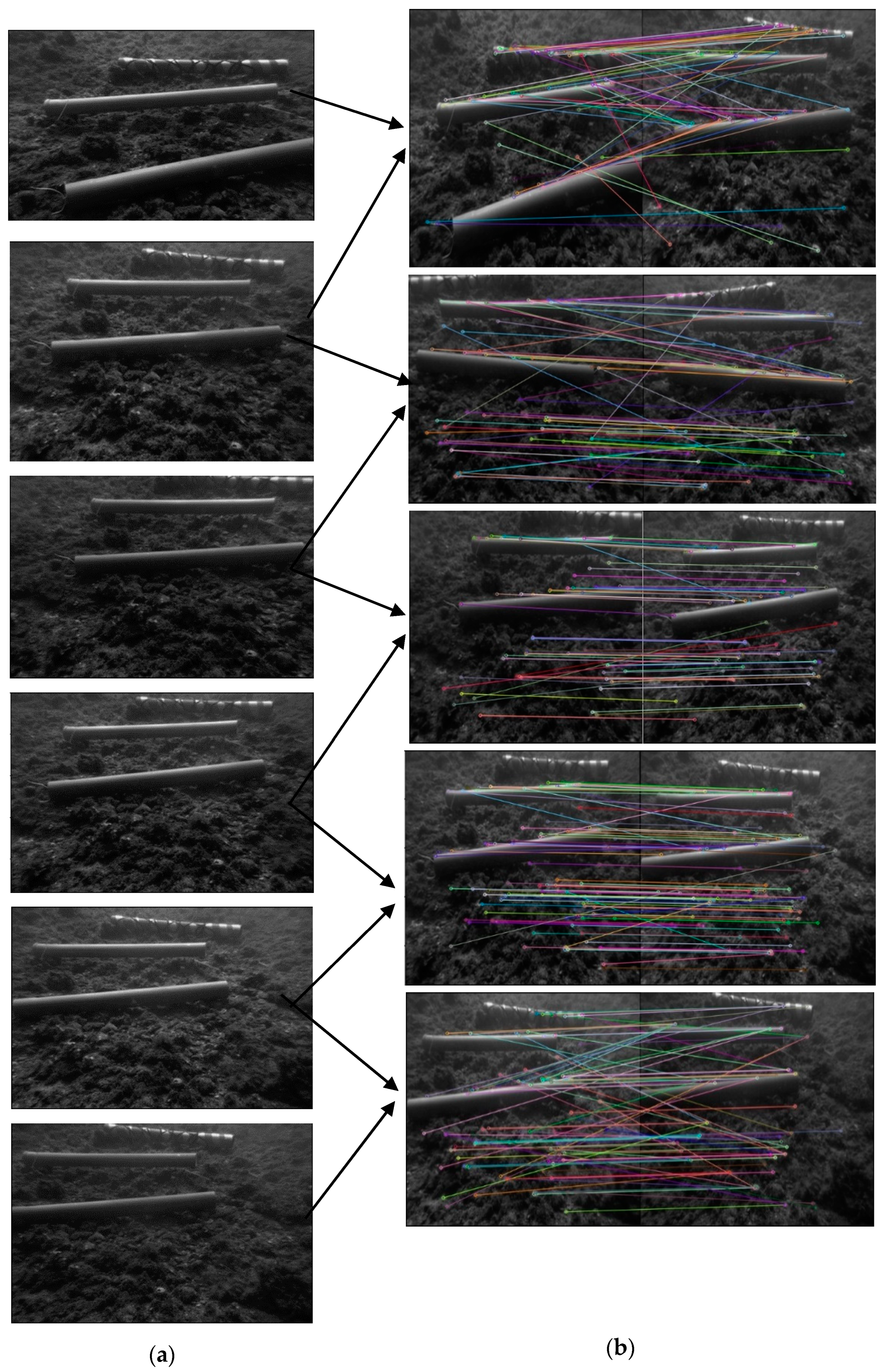

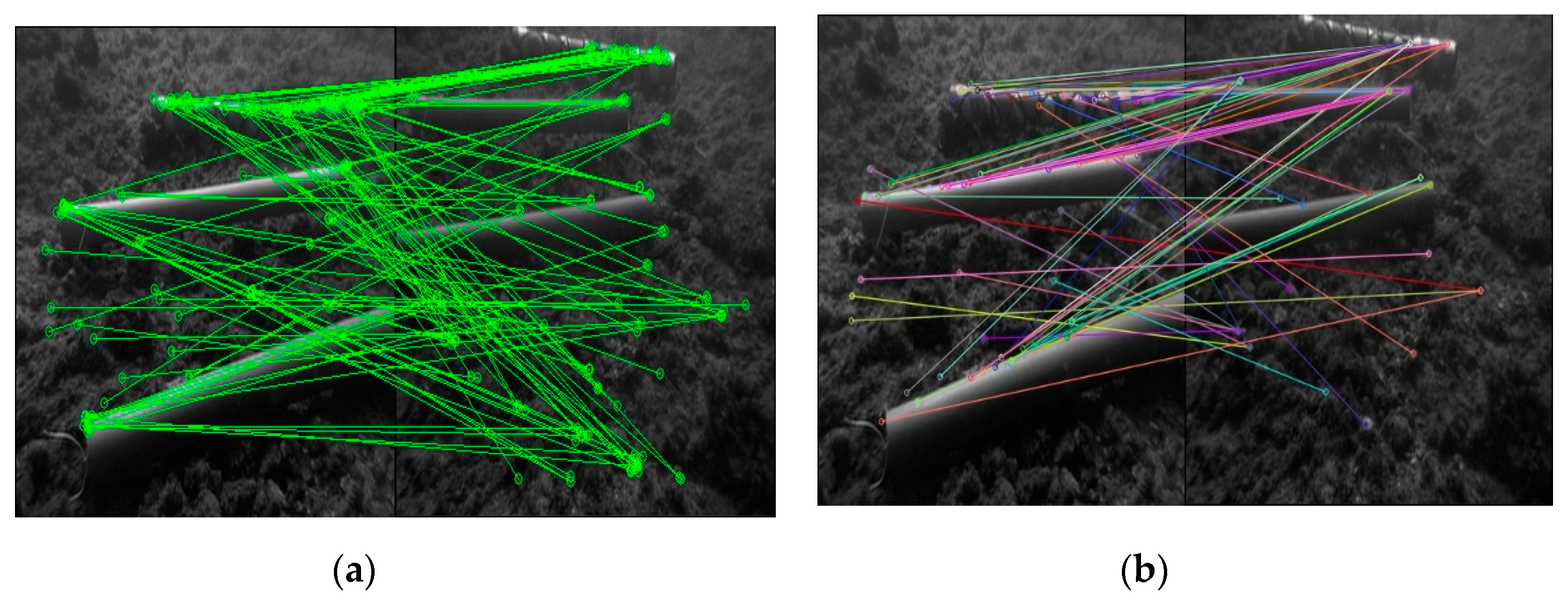

- Important feature extraction and image matching are performed by the (2D)²PCA and A-KAZE techniques.

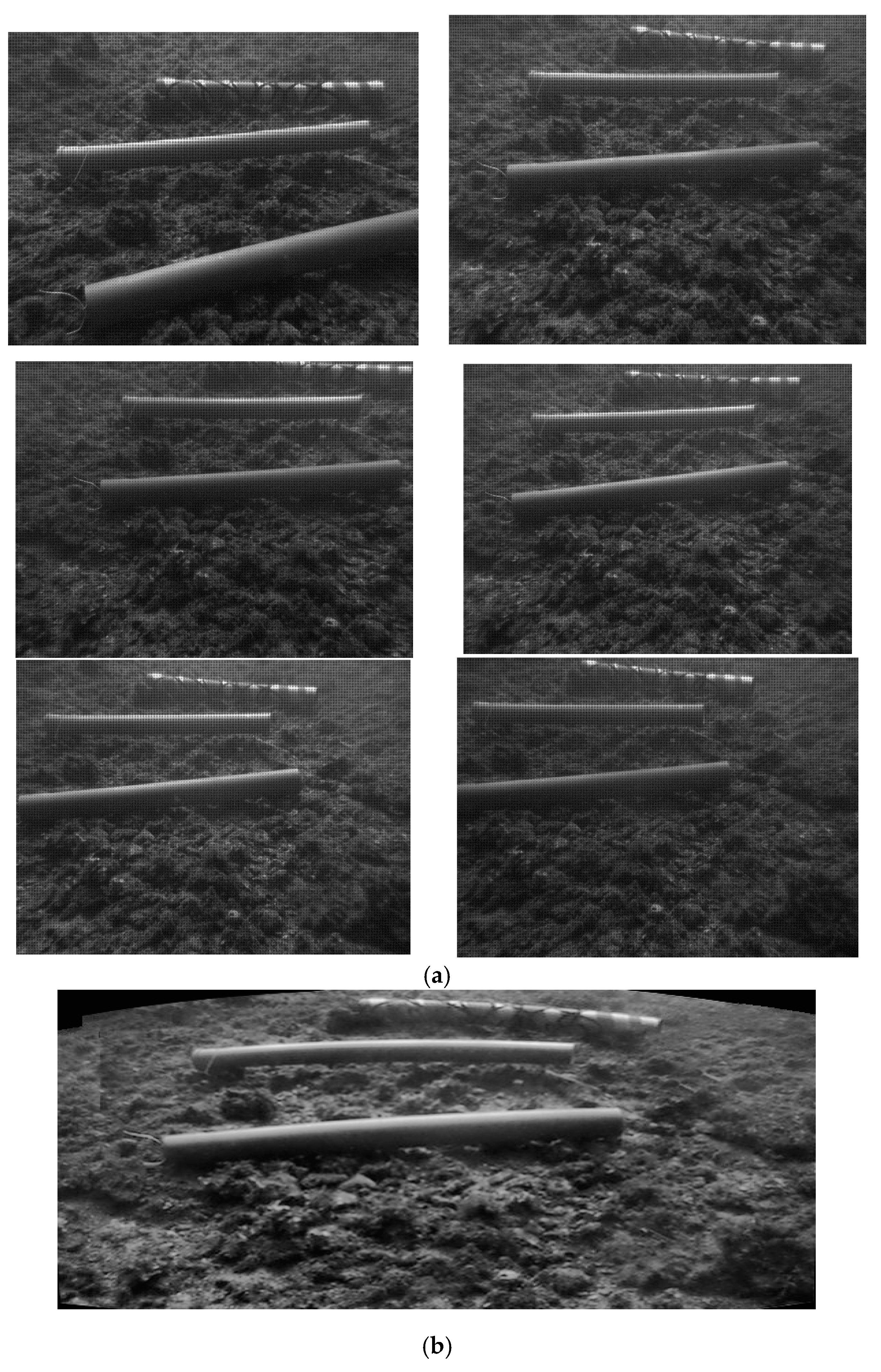

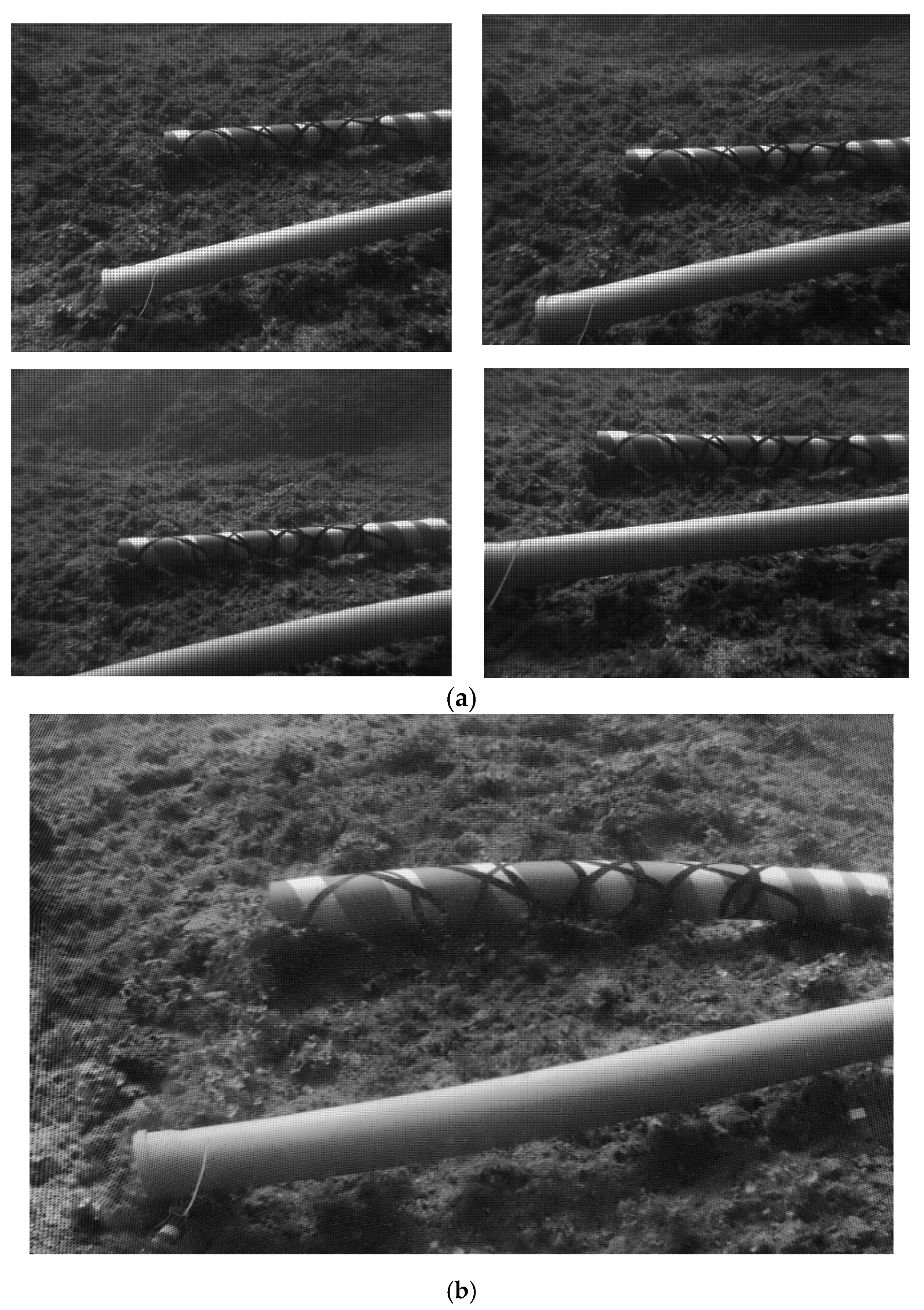

- Image stitching is carried out by the optimal seam-line method.

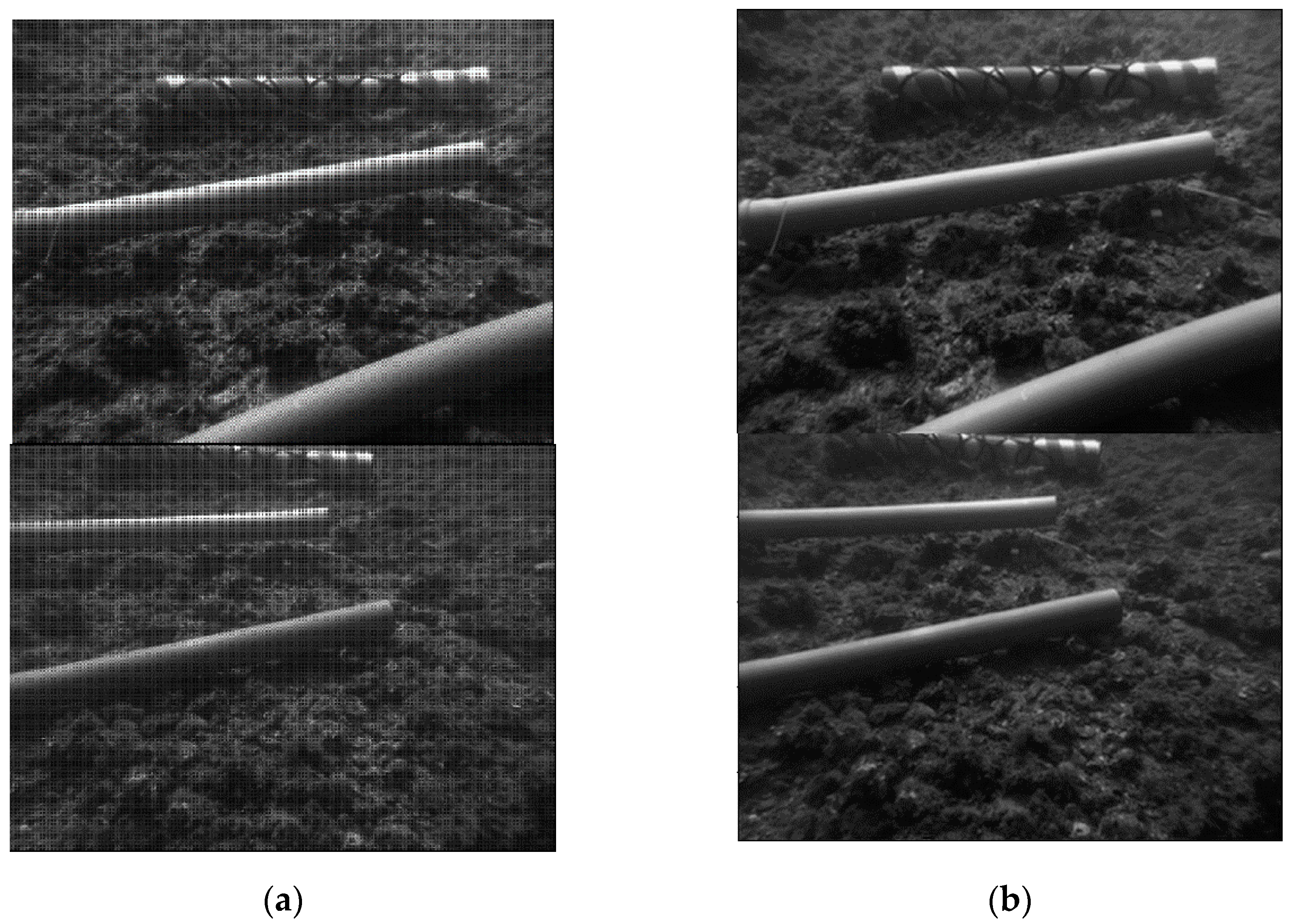

2.1. Noise Reduction

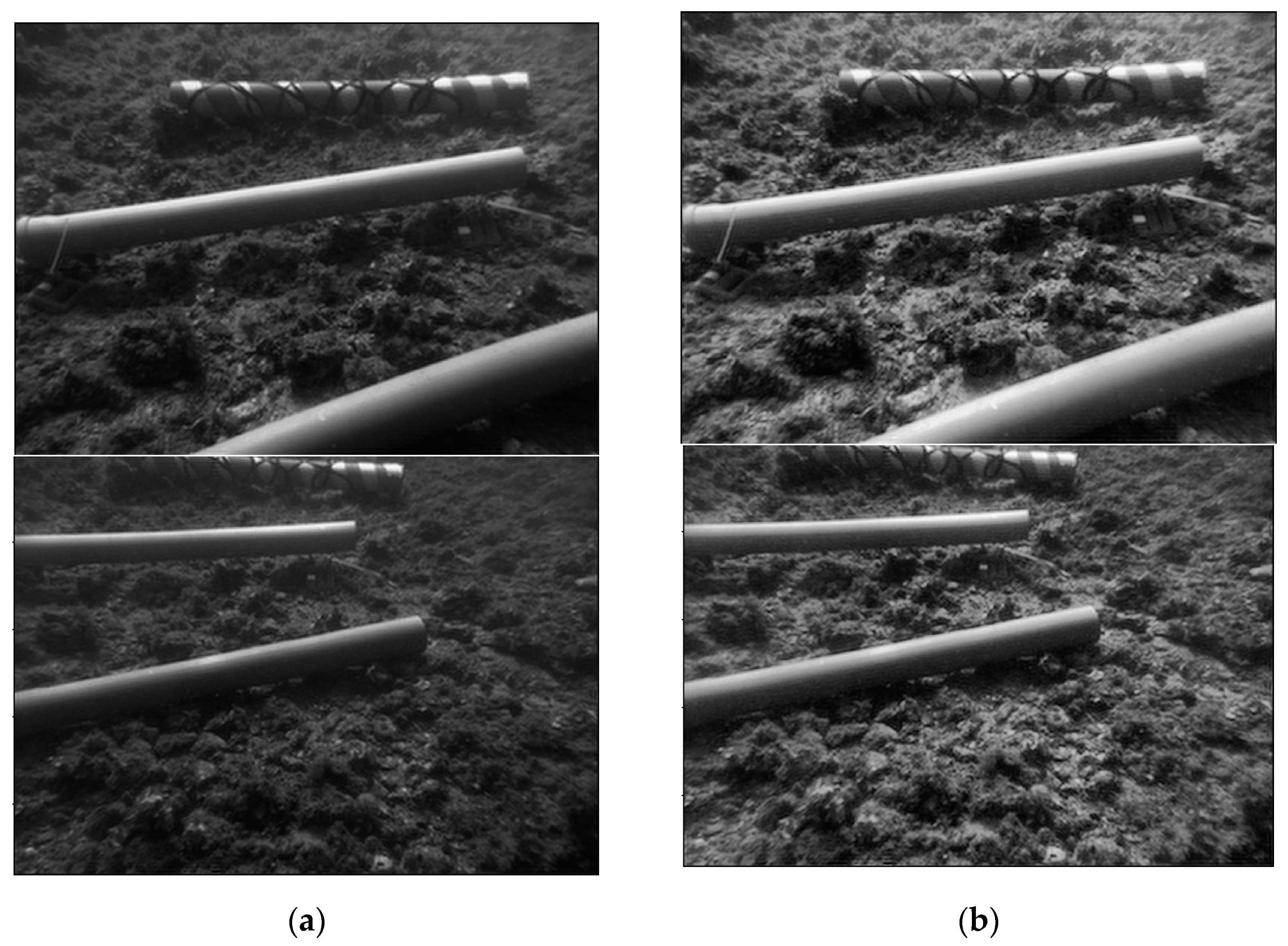

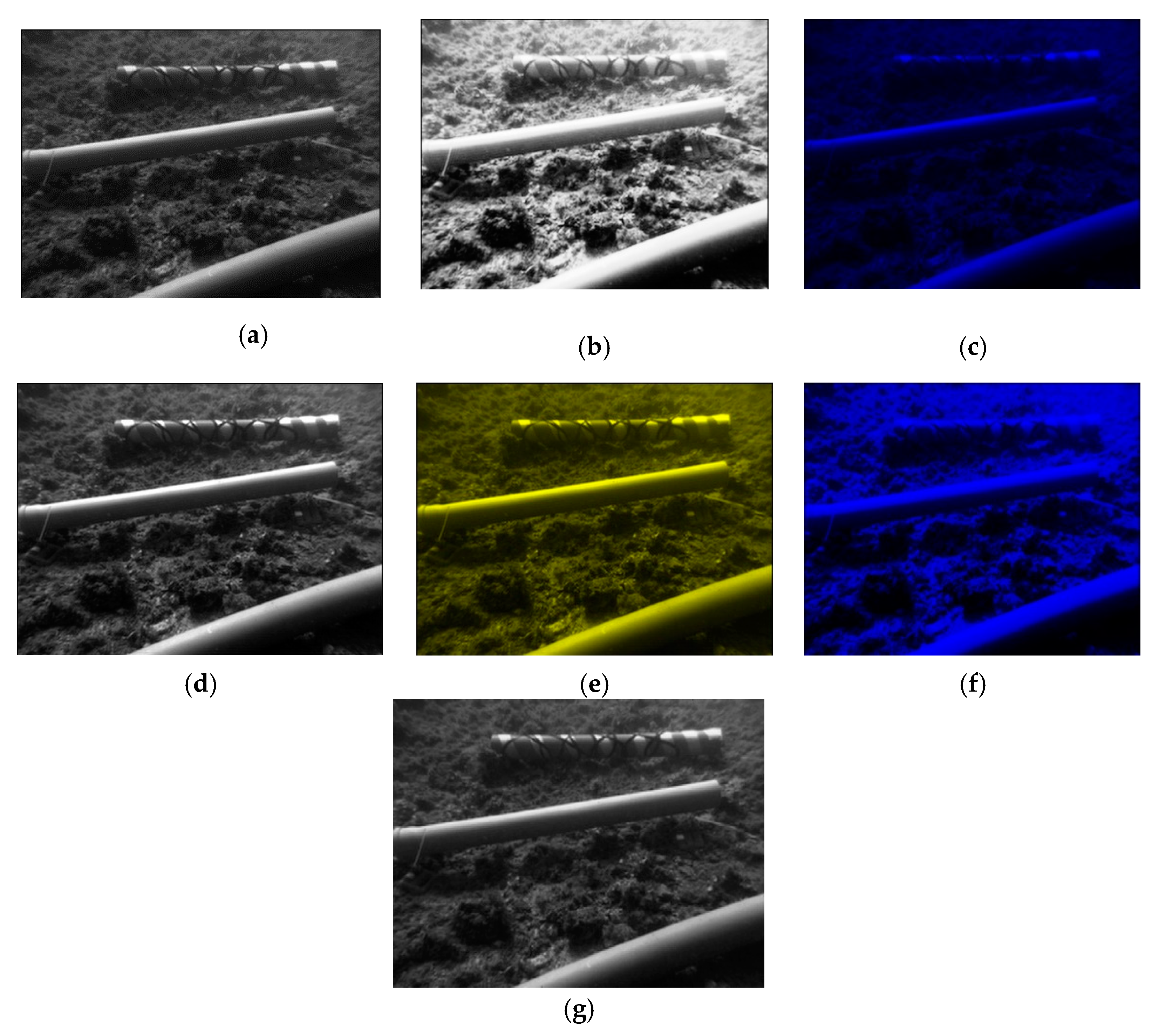
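The FFT-based noise removal step listed in Section 2 can be illustrated with a minimal sketch. The source does not give the filter design, so the Gaussian low-pass mask, the function name `fft_lowpass`, and the `cutoff` parameter below are all assumptions made for illustration, not the authors' implementation.

```python
import numpy as np


def fft_lowpass(image, cutoff=0.25):
    """Suppress high-frequency noise in a 2-D grayscale image.

    `cutoff` is a hypothetical parameter: the standard deviation of a
    Gaussian mask, expressed as a fraction of the Nyquist frequency.
    """
    img = np.asarray(image, dtype=np.float64)
    rows, cols = img.shape

    # Forward transform, with the zero frequency shifted to the centre.
    spectrum = np.fft.fftshift(np.fft.fft2(img))

    # Normalised frequency coordinates in [-1, 1) along each axis.
    fy = np.fft.fftshift(np.fft.fftfreq(rows)) * 2.0
    fx = np.fft.fftshift(np.fft.fftfreq(cols)) * 2.0
    radius = np.sqrt(fy[:, None] ** 2 + fx[None, :] ** 2)

    # Gaussian mask: a smooth roll-off avoids the ringing of a hard cut.
    mask = np.exp(-(radius ** 2) / (2.0 * cutoff ** 2))

    # Back to the spatial domain; the imaginary part is rounding error.
    filtered = np.fft.ifft2(np.fft.ifftshift(spectrum * mask))
    return np.real(filtered)
```

For a colour image, the same function could be applied to each channel separately. A smaller `cutoff` removes more noise but also blurs fine structure, so the value would need tuning per dataset.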

2.2. Image Enhancement

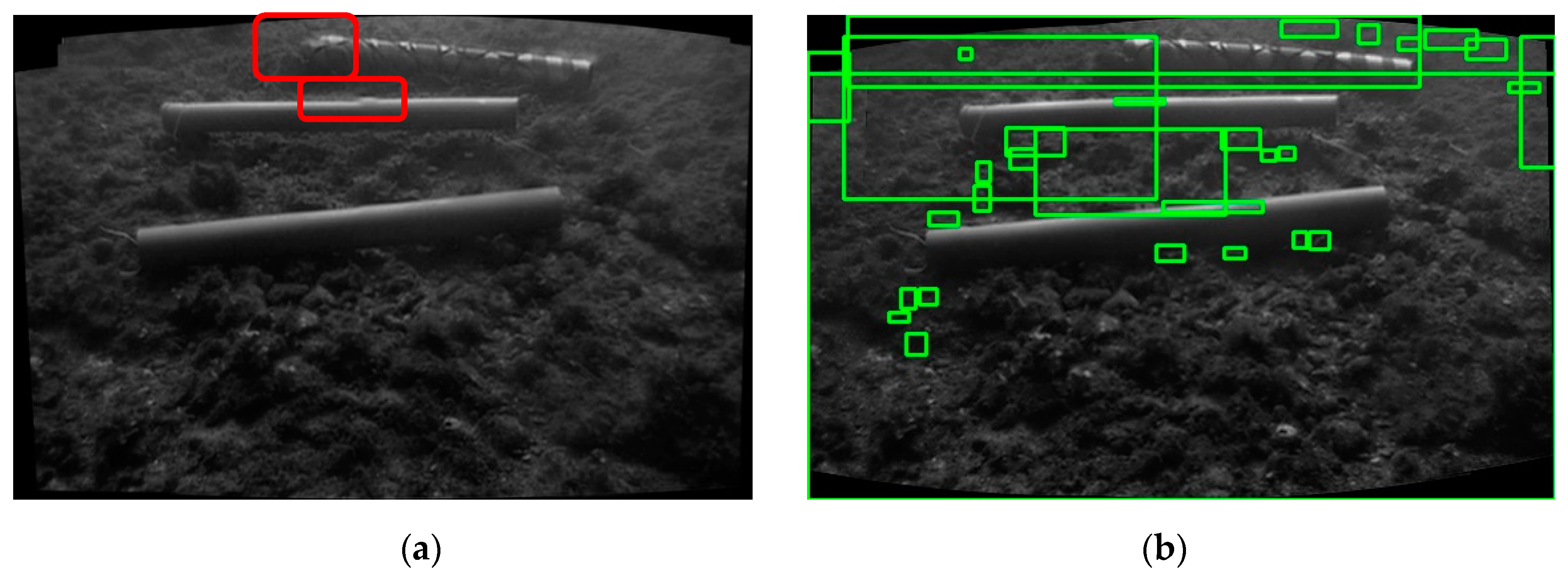

2.3. Image Matching

2.4. Image Mosaicking
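The optimal seam-line stitching step listed in Section 2 can be sketched with a common formulation: a dynamic-programming search for the top-to-bottom path of least colour difference through the overlap of two registered images. The source does not state its cost function or search strategy, so the squared-difference cost and the function names `find_seam` and `blend_along_seam` are assumptions.

```python
import numpy as np


def find_seam(overlap_a, overlap_b):
    """For each row, return the column where the seam crosses the overlap."""
    a = np.asarray(overlap_a, dtype=np.float64)
    b = np.asarray(overlap_b, dtype=np.float64)
    cost = (a - b) ** 2            # assumed cost: squared pixel difference
    if cost.ndim == 3:
        cost = cost.sum(axis=2)    # sum over colour channels
    rows, cols = cost.shape

    # Accumulate the cheapest path cost from the top row downwards;
    # each step may move to the same column or one column left/right.
    acc = cost.copy()
    for r in range(1, rows):
        prev = acc[r - 1]
        left = np.concatenate(([np.inf], prev[:-1]))
        right = np.concatenate((prev[1:], [np.inf]))
        acc[r] += np.minimum(np.minimum(left, prev), right)

    # Backtrack from the cheapest cell in the bottom row.
    seam = np.empty(rows, dtype=np.int64)
    seam[-1] = int(np.argmin(acc[-1]))
    for r in range(rows - 2, -1, -1):
        c = seam[r + 1]
        lo, hi = max(c - 1, 0), min(c + 2, cols)
        seam[r] = lo + int(np.argmin(acc[r, lo:hi]))
    return seam


def blend_along_seam(overlap_a, overlap_b, seam):
    """Take pixels left of the seam from A, the rest from B."""
    cols = overlap_a.shape[1]
    take_a = np.arange(cols)[None, :] < seam[:, None]
    if overlap_a.ndim == 3:
        take_a = take_a[:, :, None]
    return np.where(take_a, overlap_a, overlap_b)
```

Because the seam follows pixels where the two images already agree, the join tends to be less visible than a fixed vertical cut.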

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Image pair | RMSE: SURF | RMSE: SIFT and RANSAC | RMSE: Proposed Method | Number of Feature Points: SURF | Number of Feature Points: SIFT and RANSAC | Number of Feature Points: Proposed Method | CMR (%): SURF | CMR (%): SIFT and RANSAC | CMR (%): Proposed Method |
|---|---|---|---|---|---|---|---|---|---|
| Image Pair 1 | 0.1701 | 0.1532 | 0.1483 | 2483 | 2281 | 1823 | 89 | 91 | 94 |
| Image Pair 2 | 0.1616 | 0.1239 | 0.1146 | 2849 | 2342 | 1792 | 82 | 93 | 96 |
| Image Pair 3 | 0.1304 | 0.1255 | 0.1246 | 2294 | 2329 | 2158 | 75 | 87 | 91 |
| Image Pair 4 | 0.1595 | 0.1177 | 0.1144 | 2410 | 2789 | 2332 | 94 | 92 | 90 |
| Image Pair 5 | 0.1681 | 0.1200 | 0.1075 | 2983 | 2692 | 1982 | 87 | 92 | 93 |
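The RMSE and CMR columns above can be read against a minimal sketch of how such matching metrics are commonly computed. The exact definitions used in the article are not reproduced in this excerpt, so the sketch assumes that CMR is the percentage of matches whose reprojection error under the estimated homography falls within a tolerance, and that RMSE is taken over those correct matches. The name `matching_metrics` and the `tol` parameter are hypothetical.

```python
import numpy as np


def matching_metrics(src_pts, dst_pts, H, tol=3.0):
    """Return (RMSE, CMR in percent) for matched point pairs.

    src_pts, dst_pts: arrays of shape (N, 2); H: 3x3 homography that
    maps source points onto destination points.
    """
    src = np.asarray(src_pts, dtype=np.float64)
    dst = np.asarray(dst_pts, dtype=np.float64)
    H = np.asarray(H, dtype=np.float64)

    # Project source points using homogeneous coordinates.
    homog = np.hstack([src, np.ones((len(src), 1))]) @ H.T
    projected = homog[:, :2] / homog[:, 2:3]

    # Reprojection error of every match.
    residuals = np.linalg.norm(projected - dst, axis=1)
    correct = residuals <= tol

    # Assumed definitions: CMR = correct / all; RMSE over correct matches.
    cmr = 100.0 * correct.mean()
    if correct.any():
        rmse = float(np.sqrt(np.mean(residuals[correct] ** 2)))
    else:
        rmse = float("nan")
    return rmse, cmr
```

Under these definitions, a method can report fewer feature points yet a higher CMR, which is the pattern the proposed method shows for most image pairs in the table.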

| Data | Mosaicking Error: SURF | Mosaicking Error: SIFT and RANSAC | Mosaicking Error: Proposed Method |
|---|---|---|---|
| Data 1 | 0.9563 | 0.8958 | 0.8061 |
| Data 2 | 1.6721 | 1.2785 | 1.0930 |
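To make the mosaicking errors above easier to compare, the following short sketch computes the relative error reduction of the proposed method against each baseline. The values are copied from the table; the helper name `relative_reduction` is introduced here for illustration only.

```python
# Mosaicking errors copied from the table above.
errors = {
    "Data 1": {"SURF": 0.9563, "SIFT and RANSAC": 0.8958, "Proposed Method": 0.8061},
    "Data 2": {"SURF": 1.6721, "SIFT and RANSAC": 1.2785, "Proposed Method": 1.0930},
}


def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` is lower than `baseline`."""
    return 100.0 * (baseline - proposed) / baseline


reductions = {
    (data, method): relative_reduction(row[method], row["Proposed Method"])
    for data, row in errors.items()
    for method in ("SURF", "SIFT and RANSAC")
}

for (data, method), value in reductions.items():
    print(f"{data}: {value:.1f}% lower error than {method}")
```

On these figures the proposed method reduces the error by roughly 16% (versus SURF) and 10% (versus SIFT and RANSAC) on Data 1, and by roughly 35% and 15% on Data 2.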

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Abaspur Kazerouni, I.; Dooly, G.; Toal, D. Underwater Image Enhancement and Mosaicking System Based on A-KAZE Feature Matching. J. Mar. Sci. Eng. 2020, 8, 449. https://doi.org/10.3390/jmse8060449
