Assessment of a Smartphone-Based Camera System for Coastal Image Segmentation and Sargassum monitoring

Abstract

1. Introduction

1.1. Image Classification—Coastal Area

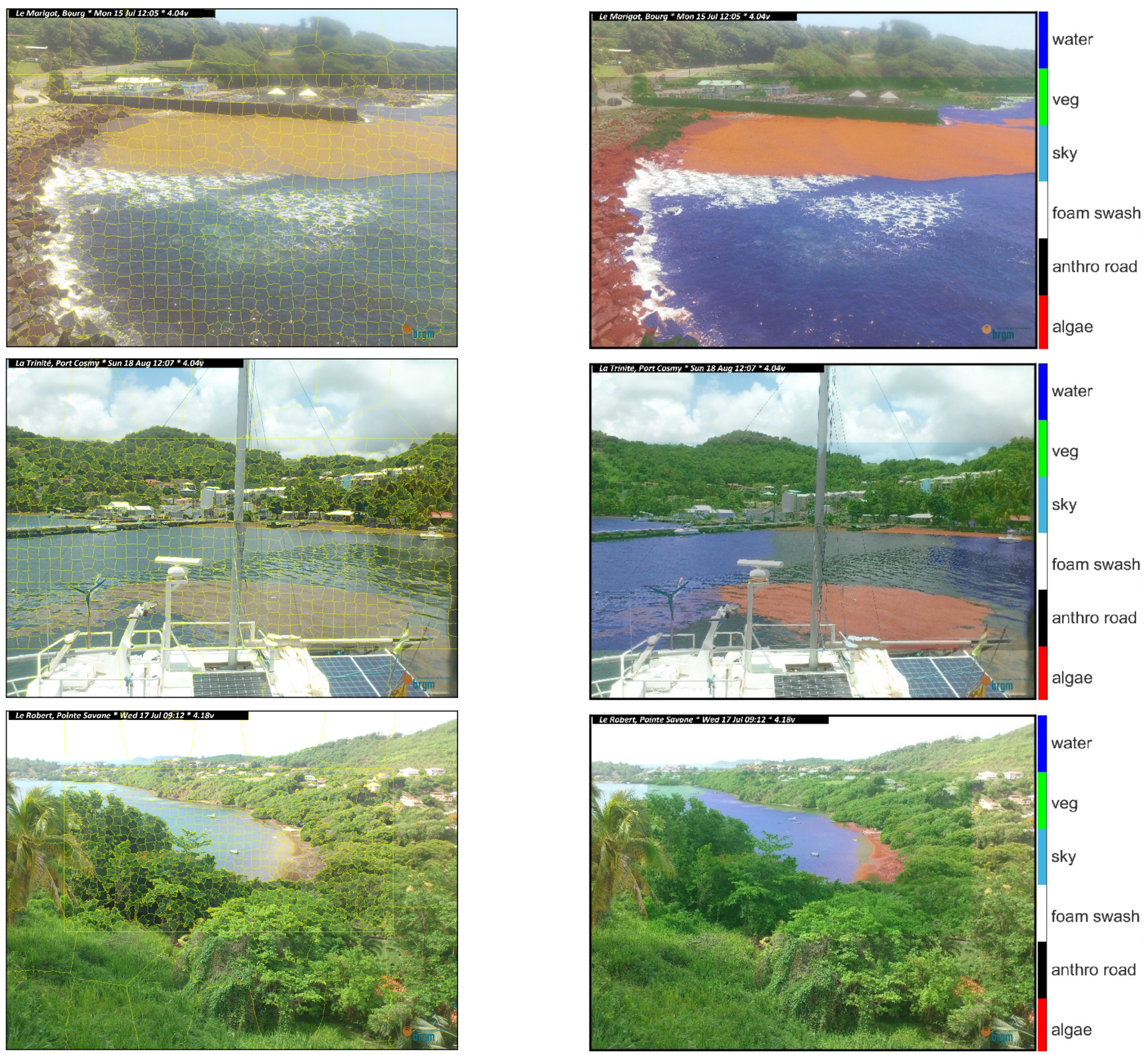

1.2. Sargassum Monitoring by Imagery

1.3. Outline and Scope

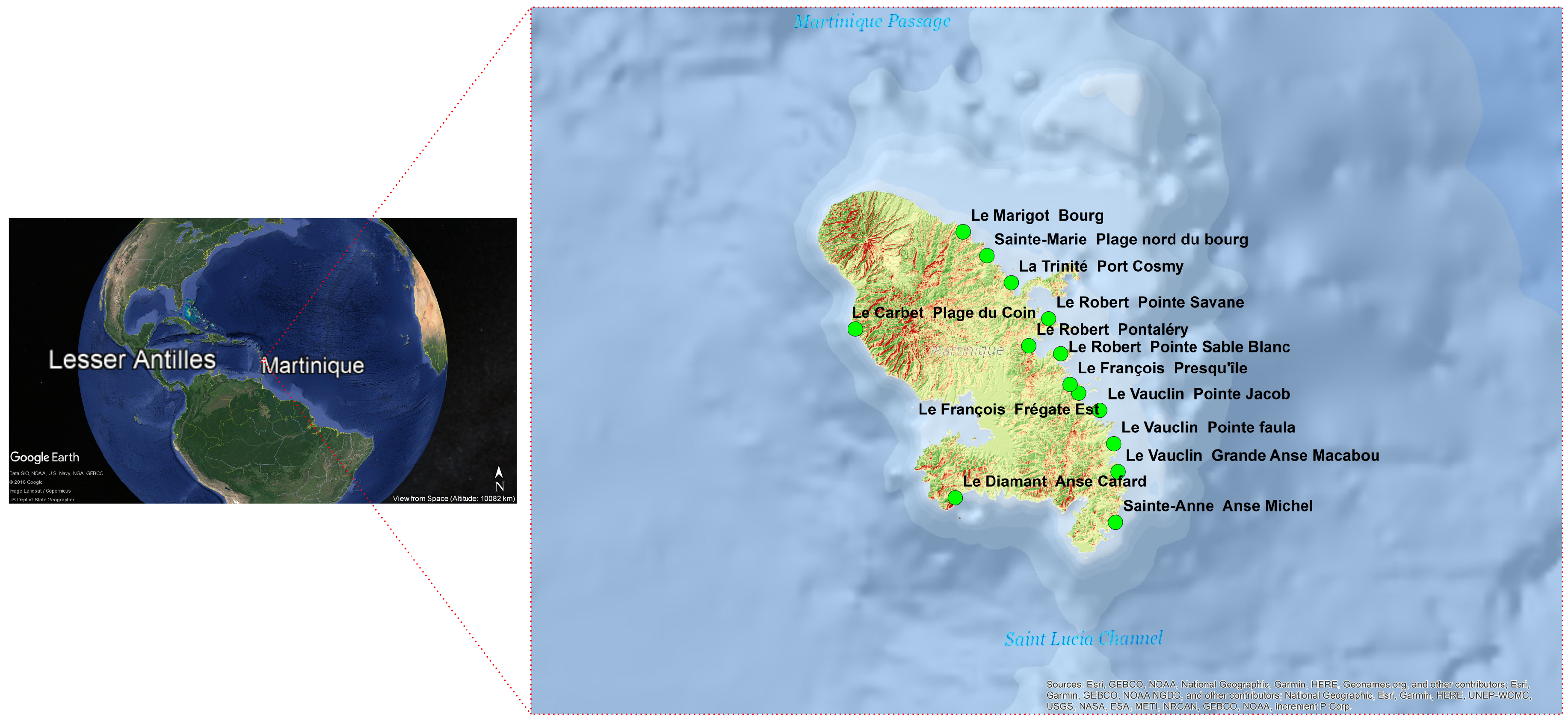

2. Study Site and Materials

3. Methods for Data Processing

3.1. Sticky-Edge Adhesive Superpixels

3.2. MobileNet CNN and Mechanisms of Deep Transfer Learning

3.3. Refining via Conditional Random Field

3.4. Workflow of STICKY-CNN-CRF Classification

4. Results

4.1. Experimental Results

4.2. Parameter Sensitivity Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Sample Availability: Samples and code for the article are available from the authors.


|  | Beach |  |  | Harbour |  |  |
|---|---|---|---|---|---|---|
| Classes | - | Training Superpixels | Testing Superpixels | - | Training Superpixels | Testing Superpixels |
| Algae | 0.88 | 11,727 | 10,128 | 0.85 | 14,739 | 13,201 |
| Anthro-road | 0.85 | 339 | 521 | 0.83 | 2319 | 1563 |
| Foam-swash | 0.88 | 11,814 | 16,230 | 0.93 | 13,026 | 230,217 |
| Sand | 0.91 | 6864 | 4260 | - | - | - |
| Sky | 0.84 | 1482 | 852 | 0.96 | 1838 | 1803 |
| Vegetation | 0.89 | 53,676 | 59,426 | 0.87 | 58,321 | 62,032 |
| Water | 0.95 | 32,679 | 25,362 | 0.93 | 26,449 | 15,260 |

|  | Beach |  |  | Harbour |  |  |
|---|---|---|---|---|---|---|
| Classes | - | - |  |  |  |  |
| Algae | 0.95 | 0.85 | 0.90 | 0.82 | 0.81 | 0.80 |
| Anthro-road | 0.95 | 0.65 | 0.77 | 0.72 | 0.80 | 0.75 |
| Foam-swash | 0.97 | 0.83 | 0.89 | 0.78 | 0.84 | 0.81 |
| Sand | 0.80 | 0.89 | 0.84 | - | - | - |
| Sky | 0.97 | 0.96 | 0.97 | 0.99 | 0.86 | 0.93 |
| Vegetation | 0.72 | 0.94 | 0.82 | 0.81 | 0.70 | 0.75 |
| Water | 0.78 | 0.93 | 0.85 | 0.81 | 0.88 | 0.85 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Valentini, N.; Balouin, Y. Assessment of a Smartphone-Based Camera System for Coastal Image Segmentation and Sargassum monitoring. J. Mar. Sci. Eng. 2020, 8, 23. https://doi.org/10.3390/jmse8010023
