1. Introduction

Maritime transport is a vital artery of the global economy, carrying over 80% of global trade by volume and more than 70% by value [1]. As traffic volume increases, control and management systems become necessary to avoid accidents and ensure safe navigation. The safe and efficient management of maritime traffic is particularly challenging in harbour approaches and port areas, where dense and heterogeneous vessel traffic operates within limited manoeuvring space. In Mediterranean ports, vessels equipped with the Automatic Identification System (AIS) often share the same waters with numerous smaller craft, such as recreational boats, fishing vessels, and local ferries, that are not required to carry AIS under the International Convention for the Safety of Life at Sea (SOLAS) [2]. Today, maritime traffic surveillance relies on systems such as AIS and marine radar, which form the backbone of Vessel Traffic Services (VTS) worldwide [3]. These systems support the safety and efficiency of maritime traffic. AIS is based on a transponder that transmits the identity, type, position, and course of the ship, as well as other safety-related information, in real time. According to SOLAS, AIS is mandatory navigational equipment for all ships of 300 gross tonnage and above engaged on international voyages and for all passenger ships regardless of their size.

AIS and radar are essential to VTS, but they are limited in scope. While these systems are highly effective for tracking larger commercial ships, they face well-documented limitations in coastal and harbour environments: AIS coverage is incomplete for smaller craft, and radar performance degrades with sea clutter, multipath effects, and small radar cross-sections [4]. Several studies have highlighted detection blind spots in VTS monitoring, noting that small craft often operate unpredictably outside standard traffic lanes, increasing the complexity of collision avoidance [5]. VTS is a coastal service established to provide information and navigational assistance, coordinate vessel movements, and monitor and manage vessel traffic in its area of responsibility (the VTS area). However, on a typical summer day during the tourist season in, e.g., the port of Split, AIS detected 35 arrivals/departures, whereas a total of 169 arrivals/departures of various vessels were actually recorded [6]. This large difference shows the need for a system that can record the entire vessel traffic.

Smaller vessels, non-commercial vessels and vessels engaged exclusively in inland navigation are not required to have an AIS installed under SOLAS regulations. The inherent limitations of radar technology may affect the ability to detect small vessels. Furthermore, not every vessel is required to report to the relevant VTS service when entering or leaving the VTS area. It can be concluded that there is a gap in the monitoring of maritime traffic, in ports and their surroundings, which traditional systems fail to address, especially in the detection and tracking of smaller vessels without an AIS installed. This is particularly significant in Mediterranean passenger ports (such as ports on the Adriatic coast) where maritime traffic can vary from smaller vessels of all types, such as sailboats, training boats and speedboats, to passenger ferries and large cruise ships.

Traditional collision risk assessment methods rely primarily on AIS-derived kinematic data and, in some cases, radar plots [3]. However, these methods cannot adequately address mixed traffic scenarios involving both AIS and non-AIS vessels.

Recent research has explored the use of computer vision and machine learning to supplement or replace conventional systems [7,8,9]. Object detection frameworks such as YOLOv5–YOLOv9 have demonstrated strong performance in maritime contexts, outperforming traditional background subtraction in dynamic environments [10], while tracking algorithms like DeepSORT have been adapted to handle multiple moving vessels [11].

Collision risk assessment is important in traffic control centres and will also be needed in future autonomous vehicle traffic [12]. The project goals are therefore in line with current and future needs. The goal of the project is to develop and implement a video system together with an augmented reality application whose overlay warns of assessed collision risks.

Our goal is to develop an application that will help in assessment of collision risk. The developed augmented reality application will be based on stationary cameras placed inside and around the port, as input signals. The choice of camera position depends on the requirements of the port and port authority, and it is expected that the position will be around critical points in both ports and marinas, where the potential risk of collision is most likely. The proposed system will be particularly significant during the tourist season when there is significantly increased traffic and vessels without AIS. Vessels without AIS often sail unpredictably outside standard maritime routes and can, by sudden course changes, mislead navigators of vessels with an installed AIS, which increases the risk of collision and damage.

In this study, we propose and validate a shore-based video monitoring framework capable of detecting, tracking, and estimating the speed of both AIS and non-AIS vessels. The system fuses visual tracking with an Artificial Neural Network (ANN) to estimate vessel speeds from monocular camera footage. This information is visualised in an Augmented Reality (AR) interface, providing VTS operators with an integrated, real-time collision risk assessment.

The output parameters after the application development will be data on the assessment of the risk of collision in the AR interface. AR is increasingly present in various areas of human activity due to the integration of information with the user interface and the increasingly present Internet of Things (IoT). It should be noted that, unlike virtual reality, AR has the advantage, because it allows the user to perceive the real world, and digital information is added to the interface so that the user can use it more effectively.

The paper is organised as follows. Section 2 presents related works and the contributions of the paper. Section 3 describes the materials and methods. Section 4 presents the results obtained, and Section 5 discusses them.

2. Related Works and Contributions of the Paper

Research on maritime traffic monitoring and collision risk assessment has traditionally relied on AIS and radar data. AIS provides accurate vessel identification, position, course, and speed information, enabling efficient VTS operations [3]. Radar complements AIS by detecting targets without transponders, but its performance degrades in cluttered coastal environments and with small radar cross-section targets [4].

Hybrid AIS–radar fusion frameworks have been proposed [4], but these approaches still depend on radar’s ability to resolve targets in high-clutter situations and do not fully cover non-AIS small craft. Some studies have explored high-frequency surface wave radar and LiDAR for detecting non-AIS vessels [5], though such systems can be expensive and challenging to deploy in smaller ports. The emergence of computer vision and deep learning has opened new possibilities for maritime surveillance. Object detection models such as YOLOv5–YOLOv9 have demonstrated strong vessel detection performance from both static and moving platforms [7,8]. Li et al. [7] showed that YOLO-based small-ship detection frameworks significantly outperform traditional methods in recall and precision. DeepSORT has been successfully adapted for maritime object tracking [11], though challenges remain in handling occlusions and ID switching when vessels overlap or are visually similar. Estimating vessel speed and course from monocular video is an active research area. Jacob et al. [11] employed monocular vision and GPS calibration for accurate speed estimation, while Ma et al. [9] proposed a real-time monocular approach achieving high accuracy without expensive calibration setups.

Augmented Reality (AR) has been studied mainly for on-board navigational assistance [13], with overlays highlighting collision threats and navigational aids. Shore-based AR applications for VTS operators remain underexplored, particularly for mixed AIS and non-AIS traffic scenarios. Our work addresses this gap by combining YOLOv9 + DeepSORT detection/tracking, ANN-based speed estimation, AIS fusion, and COLREGs-based decision logic in an AR-enabled monitoring tool for congested port environments.

Artificial intelligence (AI), in part or in full, is increasingly used in many applications, including the maritime sector, although it should be a tool for performing tasks rather than an end in itself. It is being applied not only in research prototypes but also in operational port environments. For example, Busan Port has implemented an AI-driven governance model to enhance operational resilience, predictive maintenance, and cargo flow optimisation [

14]. Similarly, Indonesian ports are exploring AI for berth allocation, traffic prediction, and smart gate systems, while also addressing challenges such as data integration and workforce training [

15]. Beyond port operations, AI is also applied in domain-specific monitoring tasks; for instance, Kusuma et al. [

16] developed an IoT-based, real-time tidal monitoring system deployed at Kenjeran Beach, Surabaya, demonstrating the role of AI-enabled sensors in supporting safe navigation and environmental monitoring. These examples highlight that AI is not merely a theoretical concept but an emerging operational tool in diverse maritime contexts. Artificial intelligence (AI) is increasingly integrated into maritime traffic monitoring, often in combination with remote sensing (RS) technologies [

17,

18]. For instance, Nikolic et al. [

19] demonstrated the application of high-frequency surface wave radars (HFSWRs) as primary sensors for offshore safety risk assessment, showing their capability to detect non-AIS vessels beyond the radar horizon. However, the infrastructure and maintenance requirements of HFSWRs limit their applicability in smaller port environments. In a different branch of RS, Leder et al. [

20] reviewed optical methods for detecting floating marine debris, highlighting that advanced image processing techniques can operate under highly variable sea conditions. While debris detection and vessel detection share certain computational challenges, vessel detection requires more robust real-time tracking and classification.

Augmented reality (AR), mixed reality (MR), and virtual reality (VR) have emerged as cutting-edge tools in navigational safety. Vujović et al. [

21] presented a video surveillance system integrated with AR overlays for maritime safety, but their work was primarily focused on proof-of-concept visualisation, without speed estimation or decision support. Zhang et al. [

22] applied AR in waterway traffic management using sparse spatiotemporal data, which limits its real-time applicability in high-density traffic scenarios. Ujkani et al. [

23] tested MR-based remote pilotage, proving its potential for navigation assistance, although the study was limited to controlled environments. In an experimental setup, van den Oever et al. [

24] showed that AR can improve collaboration in ship navigation, while Houweling et al. [

25] quantified reductions in “head-down” time, directly linking AR use to increased situational awareness. In terms of collision risk assessment, Xu et al. [

26] visualised collision risk using sequential radar images, providing valuable situational data but excluding non-AIS vessels not visible on radar. Brozović et al. [

27] developed an avoidance route calculation method that could serve as a pre-processing module for AR-based decision support, but did not integrate vessel detection or tracking. Distance estimation is a prerequisite for speed estimation. Petković et al. [

28] proposed an instance segmentation-based method for maritime traffic surveillance, which could be adapted for our system but was not used here due to processing complexity. In multimodal approaches, Pandya et al. [

29] combined monocular cameras with mmWave radars for velocity estimation, achieving high accuracy but at the cost of additional hardware. For pure monocular vision, Lian et al. [

30] and Zhong et al. [

31] explored methods applicable to road traffic and PTZ camera setups, respectively; both offer useful principles but require adaptation for maritime contexts. Finally, in the broader field of collision avoidance, Templin et al. [

32] applied AR/VR to inland and coastal navigation safety, focusing on on-board systems. Unlike these works, our approach targets shore-based authorities and integrates YOLOv9-based detection, DeepSORT tracking, ANN-based speed estimation, and COLREGs-compliant decision logic into a unified AR interface for mixed AIS and non-AIS traffic in congested ports. In the domain of remote pilotage, Grundmann et al. [

33] provided a comprehensive technology overview, identifying the sensor, communication, and control requirements for safe shore-based vessel navigation. While their framework outlines the necessary infrastructure, it does not address integration with AI-based object detection or AR-based situational displays. Bandara et al. [

34] developed an AR lighting system to support navigation in compromised visibility, which offers a promising complement to collision risk assessment systems but is limited to on-board deployment. Expanding the evidence base, van den Oever et al. [

35] presented a systematic review of AR applications for maritime collaboration, identifying a lack of empirical studies in real port operations—an area directly addressed by our shore-based, mixed-AIS traffic monitoring approach.

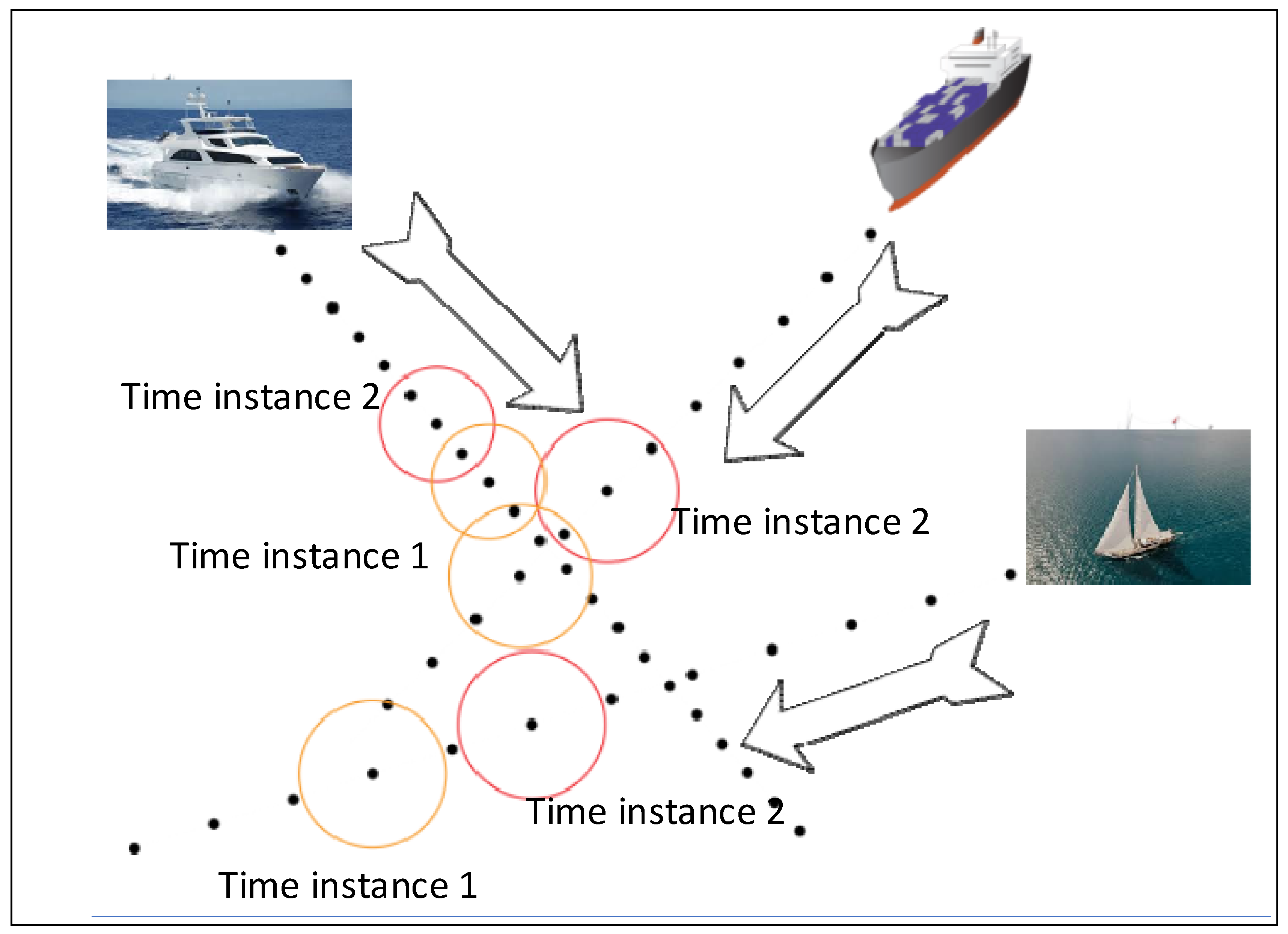

Figure 1 illustrates the idea behind the proposed method.

Figure 1 shows black dots emanating from three vessels. These dots are the predicted ship positions on a fixed time grid, e.g., every 3 s. The time points $t_i$ of the predicted positions fulfil the following condition:

$$t_i \bmod t_s = 0 \qquad (1)$$

where the left-hand side of Equation (1) is the remainder of the division of $t_i$ by $t_s$, and $t_s$ is the time between the points.

At each current time point τ on the selected grid, the ship positions for all observed ships are predicted for all time grid points from the future time interval (τ, τ + max_prediction_time].

For all these positions and for all possible ship pairs, it is calculated whether the circles drawn around the positions intersect. If they do (orange-coloured circles in Figure 1), there is a risk of collision at this point. The figure also shows two time instances in different colours (orange and red). The application can be developed for any number of time instances.
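The grid-based check described above can be sketched as follows. This is a minimal illustration, not the implemented system: it assumes constant-velocity motion in a flat metric coordinate frame, and the names `predict_positions`, `collision_risks`, the safety radii, and the example vessels are all hypothetical.

```python
import math
from itertools import combinations


def predict_positions(pos, vel, t_s, max_prediction_time):
    """Positions at t_s, 2*t_s, ... within (0, max_prediction_time].

    Assumes constant velocity (an illustrative simplification).
    pos is (x, y) in metres, vel is (vx, vy) in metres per second.
    """
    steps = int(max_prediction_time // t_s)
    return [(k * t_s, pos[0] + vel[0] * k * t_s, pos[1] + vel[1] * k * t_s)
            for k in range(1, steps + 1)]


def circles_intersect(p, q, r_p, r_q):
    """Two circles intersect when the centre distance is at most r_p + r_q."""
    return math.hypot(p[0] - q[0], p[1] - q[1]) <= r_p + r_q


def collision_risks(ships, t_s=3.0, max_prediction_time=30.0):
    """ships maps a name to (pos, vel, radius); returns (t, name_a, name_b) tuples."""
    tracks = {name: predict_positions(pos, vel, t_s, max_prediction_time)
              for name, (pos, vel, _radius) in ships.items()}
    risks = []
    for a, b in combinations(sorted(ships), 2):
        # Both tracks share the same time grid, so they can be compared pointwise.
        for (t, ax, ay), (_t, bx, by) in zip(tracks[a], tracks[b]):
            if circles_intersect((ax, ay), (bx, by), ships[a][2], ships[b][2]):
                risks.append((t, a, b))
    return risks


# Hypothetical example: two vessels on reciprocal courses.
ships = {
    "ferry": ((0.0, 0.0), (5.0, 0.0), 20.0),
    "boat": ((100.0, 0.0), (-5.0, 0.0), 10.0),
}
print(collision_risks(ships))
```

Each returned tuple names the grid time (relative to the current time point τ) and the pair of vessels whose circles intersect, which corresponds to one highlighted circle pair in the overlay.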

The contribution of this work is a model for estimating the speed of vessels from a stationary camera that views them from the side rather than, e.g., from a bird’s-eye view. In addition, a framework for AR assistance in visualising collision risk is proposed, intended for port authorities rather than for the ship’s bridge. The method for estimating vessel speed with a side-mounted monocular camera has been validated as a basis for detecting and preventing potential collisions.

3. Materials and Methods

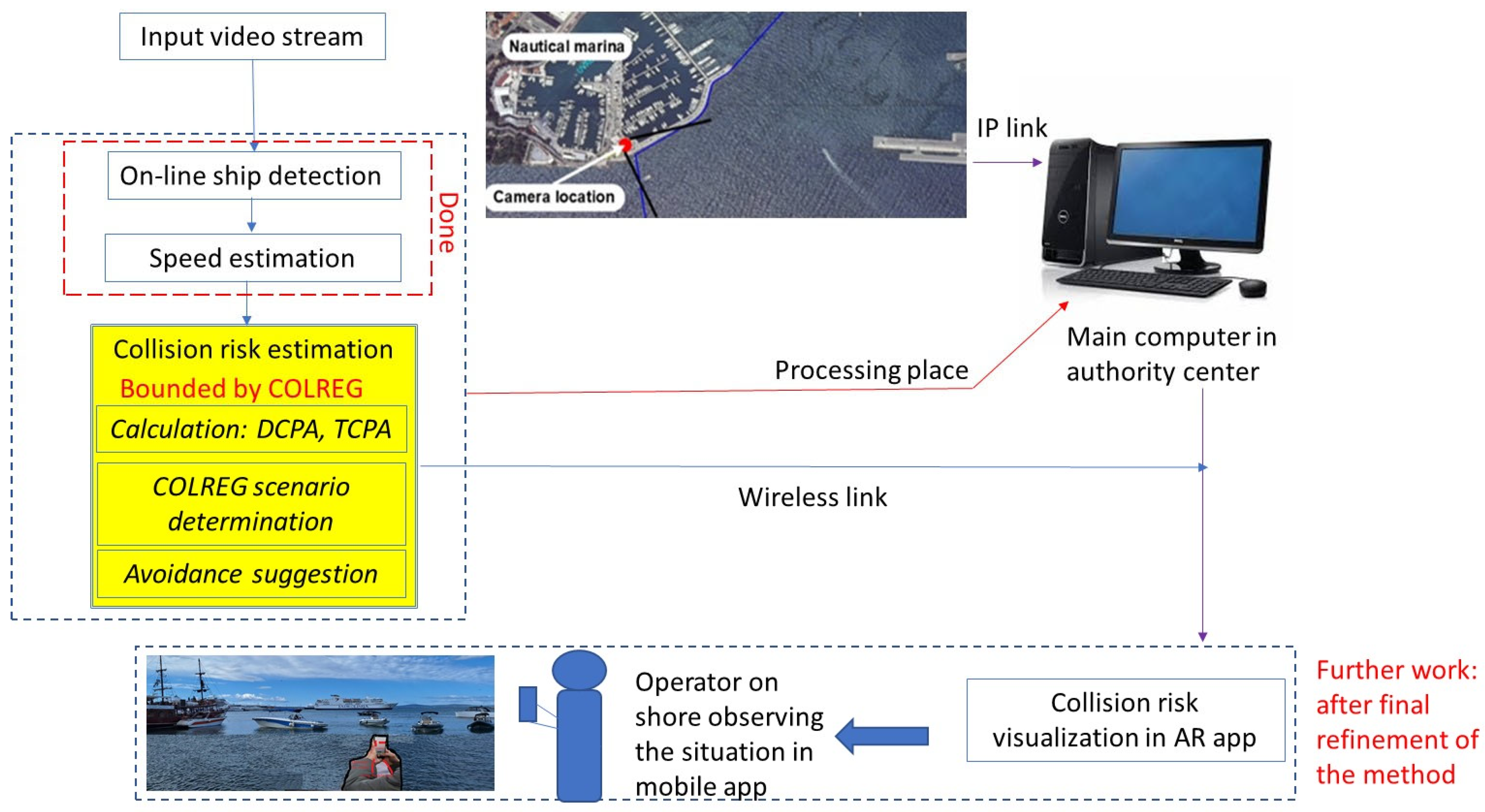

The proposed overall framework is shown in Figure 2, which indicates what has already been done and what remains to be done.

As can be seen, some aspects are predetermined by the rules of the Convention on the International Regulations for Preventing Collisions at Sea (COLREG) [37], while others need further research. Most of the mathematical work and programming has been completed; only the visualisation is still missing.

Figure 2 shows the detailed system architecture of the proposed collision risk assessment framework. Each block represents a functional module. The On-line ship detection block applies YOLOv9 to identify vessels in video frames and combines it with the DeepSORT algorithm and Kalman filtering to maintain vessel identity. The Speed Estimation block uses an Artificial Neural Network (ANN) that takes pixel displacement, frame rate, and camera calibration parameters as inputs to estimate vessel speed. The COLREGs Module classifies encounters (head-on, crossing, overtaking), determines stand-on and give-way vessels, and adjusts the risk probability accordingly. The Collision risk estimation block combines kinematic predictions based on the closest point of approach (CPA), the distance at CPA (DCPA), and the time to CPA (TCPA) with COLREG compliance checks to determine the final collision risk levels, which are then presented in real time via the Augmented Reality (AR) interface for use by the operator. This block consists of sub-blocks for calculating CPA/DCPA/TCPA, determining the scenario, and generating the avoidance suggestion.
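For readers unfamiliar with the CPA quantities, the standard constant-velocity calculation of DCPA and TCPA for one vessel pair can be sketched as below. The paper does not give its own formulas, so this is the textbook relative-motion form under assumed units (metres, metres per second, seconds); the function name `cpa` is hypothetical.

```python
import math


def cpa(pos_a, vel_a, pos_b, vel_b):
    """Return (dcpa, tcpa) for two vessels assumed to keep constant velocity.

    Positions are (x, y) in metres, velocities (vx, vy) in metres per second.
    A closest approach that lies in the past is clamped to the present (tcpa = 0).
    """
    rx, ry = pos_b[0] - pos_a[0], pos_b[1] - pos_a[1]  # relative position
    vx, vy = vel_b[0] - vel_a[0], vel_b[1] - vel_a[1]  # relative velocity
    v2 = vx * vx + vy * vy
    if v2 == 0.0:
        # No relative motion: the separation never changes.
        return math.hypot(rx, ry), 0.0
    tcpa = max(-(rx * vx + ry * vy) / v2, 0.0)
    dcpa = math.hypot(rx + vx * tcpa, ry + vy * tcpa)
    return dcpa, tcpa


# Hypothetical example: near head-on encounter with a 10 m lateral offset.
dcpa, tcpa = cpa((0.0, 0.0), (5.0, 0.0), (100.0, 10.0), (-5.0, 0.0))
print(dcpa, tcpa)
```

A small DCPA combined with a short TCPA indicates a pair that deserves attention; the thresholds that turn these values into risk levels are not specified in the text and are left out of the sketch.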

3.1. Description of the Harbour Situation

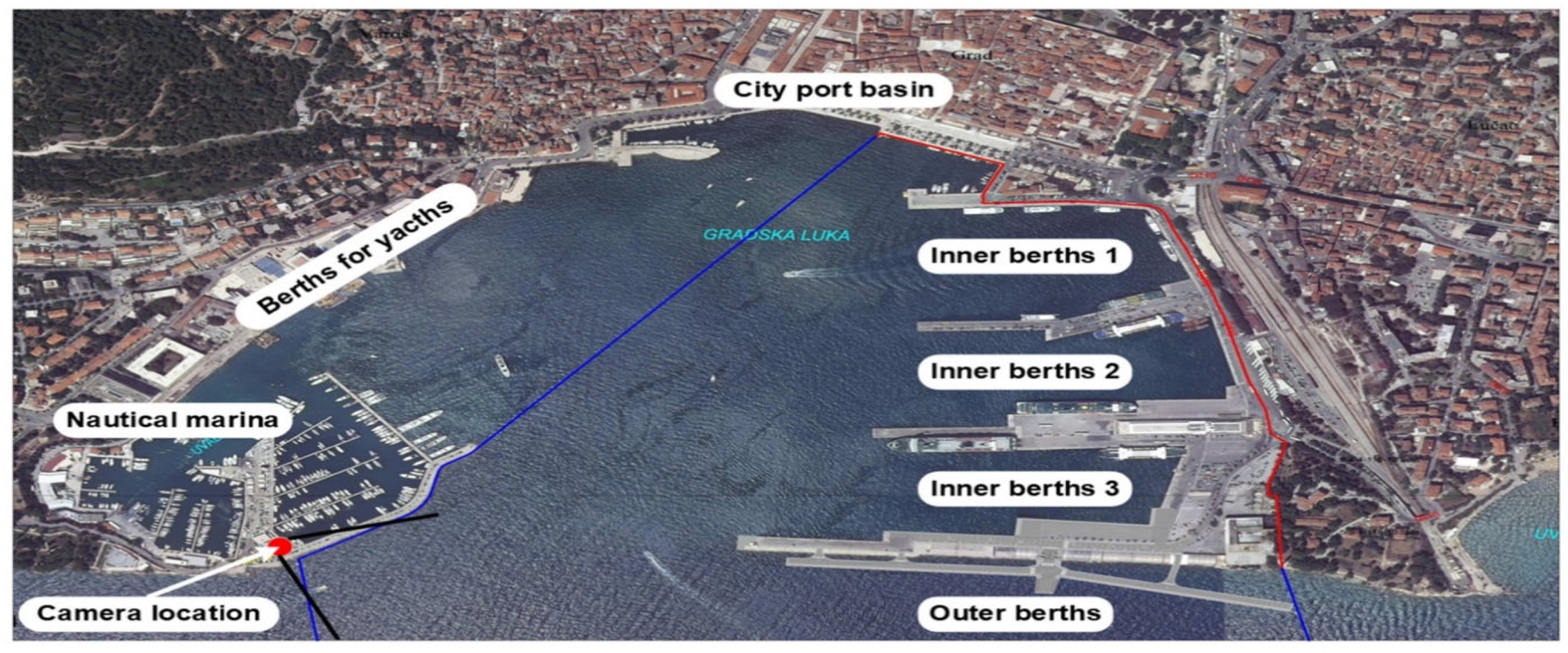

The harbour of Split was chosen as a case study because it is easily accessible for field measurements and is important as a Mediterranean passenger port with high traffic volumes.

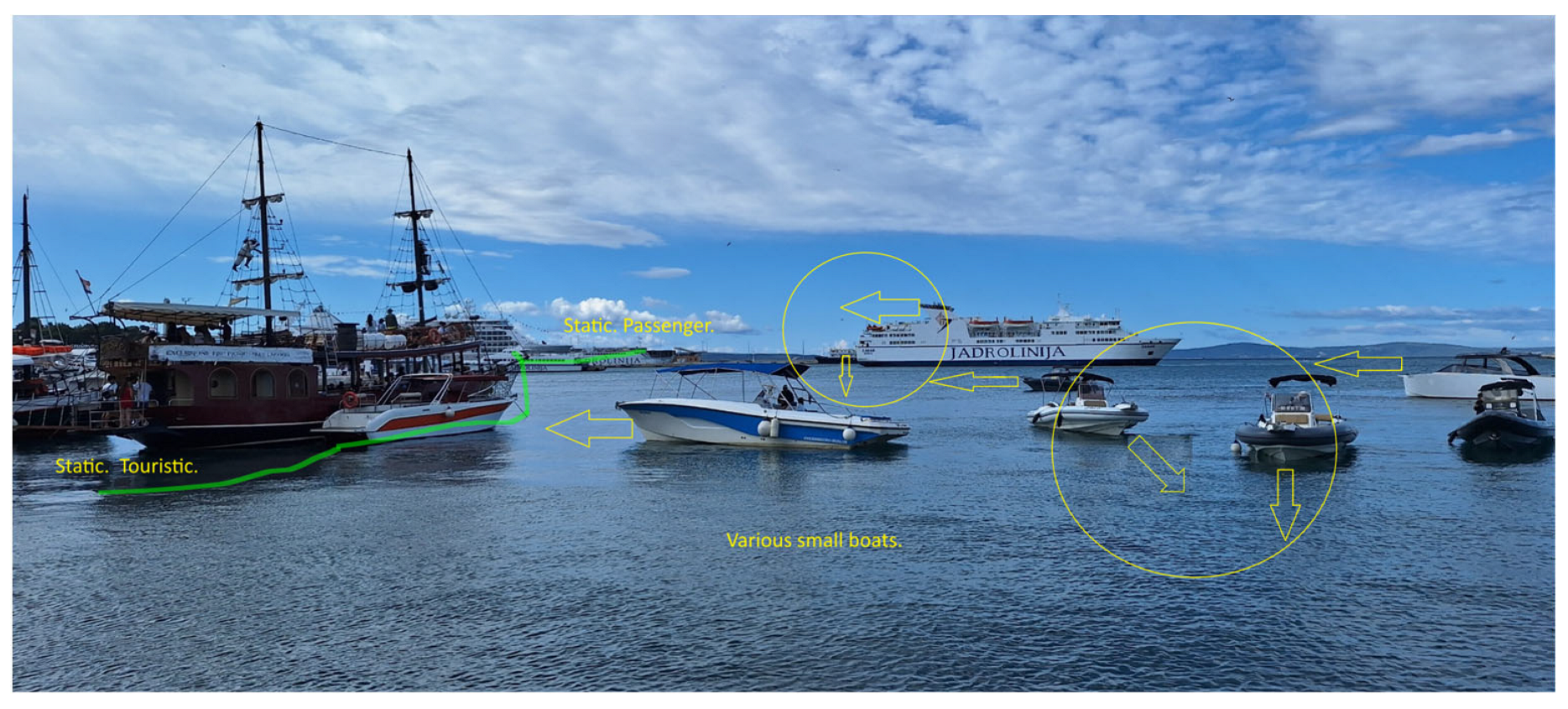

Figure 3 shows an example of harbour traffic in the port of Split. There are static and moving ships and boats of different sizes and types. Any assistance system should recognise which vessels are participating in the traffic and which are not moving. A human operator can also recognise that some boats are not close to the ferry, but this is difficult for an automated system without distance measurement or stereo vision. The developed system should then correctly determine the speeds of all moving vessels and predict possible collisions. Such a system could be used wherever there is a high volume of traffic, e.g., in large marinas and straits, not just harbours. The idea is that the traffic operator uses a smartphone with AR capabilities, on which the application displays the collision risk, and can then send instructions to the ships to avoid such events.

The video footage was recorded at the entrance to Split harbour with a laterally mounted camera positioned to capture vessel traffic under different operating conditions. Data were captured at different times of the day and under different weather conditions, including sunny, cloudy, and windy conditions. This approach ensured that the dataset reflected a realistic range of environmental and lighting variations typical of a busy port environment, increasing the robustness and generalisability of subsequent analyses. In Figure 3, arrows show the direction of movement of every non-static vessel in the camera’s field of view. Circles mark a possible risk of collision where the lines of movement of two or more vessels cross. The green line represents the border between static and moving vessels.

Figure 4 shows the plan of the harbour of Split for ships of all kinds (except military and car transport). The blue line represents the border of the sea area covered by the field of view of the camera, which is mounted at the position marked with a red circle. The red line follows the edge of the coast as captured by the side-mounted static camera. The city of Split is the economic and cultural centre of the Croatian region of Dalmatia and an important transport hub between the mainland and the islands. According to the Split Port Authority, 5,856,335 passengers and 989,842 vehicles were transported in 2023 (latest available data on the website [38]). There is also cruise passenger traffic with almost 400,000 passengers. This makes the port of Split the largest passenger port in Croatia and the third largest passenger port in the Mediterranean in terms of the number of passengers and vehicles transported.

Due to the nature of Split harbour, maritime traffic consists mainly of passenger ships of various sizes, and no cargo ships arrive (the northern harbour is intended for cargo and navy vessels). Inner berths 1 (see Figure 4) are used by high-speed craft operating on various routes between the mainland and the islands. The same berths are also used by small passenger ships (e.g., excursion boats) for tourist trips. Inner berths 2 and 3 are intended for domestic and international ferries, while the outer berths are for large passenger ships (e.g., cruise ships) or larger ferries [6].

Apart from passengers and day trippers, the city’s harbour basin is also used by locals. They mainly use it for small private and fishing boats and, especially in the summer season, also with speedboats of all sizes for tourist transfers and for boat taxis. In the western part of the harbour there is a marina that houses sailing clubs with a number of small sailing boats. It also has berths for private and chartered sailing boats and yachts, and generates much of the traffic in the harbour basin. Next to the marina, towards the municipal harbour basin, there is an area reserved for larger yachts, sailing boats, catamarans, and excursion boats. This harbour configuration ensures the diversity of maritime traffic, as the harbour can accommodate many different types of vessels [

6].

3.2. Description of the Equipment

The ANN was trained on a computer equipped with an Intel® Core™ i7-13700F processor (Intel Products, Ho Chi Minh, Vietnam) and an NVIDIA GeForce RTX 4070 graphics card.

The camera used in the experiments is the Dahua DH-TPC-PT8620A-T surveillance camera [39]. Its position is marked in Figure 4. It is a hybrid PTZ thermal network camera with a 640 × 512 VOx uncooled thermal sensor and a 1/1.9″ 2-megapixel Sony CMOS sensor with progressive scan and 30× optical zoom. It also supports temperature measurement, fire detection, and fire alarms. The maximum panning speed is 160°/s, with 360° endless panning. The operating conditions are −40 °C to +70 °C and less than 95% RH. The video signal is obtained over an IP connection. Although thermal imaging is available, only the visual spectrum was used, as most of the traffic occurs in daylight during the tourist season (summer).

For validation, we used the Braun Rangefinder 1000 WH laser measurement device (produced in Eutingen, Germany), with the characteristics given in Table 1. The measurements with this device were performed manually.

3.3. Implementation Details

We used the YOLOv9-s [40] model pre-trained on the COCO dataset, followed by fine-tuning on a custom dataset of 13,000 annotated images of ships in harbour and coastal environments. Fine-tuning was performed for 100 epochs with a batch size of 16, a learning rate of 0.001, and a stochastic gradient descent (SGD) optimiser with a momentum of 0.937. The detection confidence threshold was set to 0.7, and the non-maximum suppression (NMS) IoU threshold was set to 0.5. Data augmentation included random scaling, horizontal flipping, and brightness adjustments to improve robustness in different weather and lighting conditions.
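To make the roles of the two thresholds concrete, the following sketch applies a confidence threshold of 0.7 and a greedy NMS with an IoU threshold of 0.5 to a list of boxes. It is a generic, class-agnostic illustration in plain Python, not the post-processing code of the YOLOv9 implementation; the function names and example boxes are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets, conf_thr=0.7, iou_thr=0.5):
    """Keep confident detections, then suppress overlapping lower-score boxes.

    dets is a list of (x1, y1, x2, y2, score) tuples.
    """
    candidates = sorted((d for d in dets if d[4] >= conf_thr),
                        key=lambda d: d[4], reverse=True)
    kept = []
    for det in candidates:
        # A box survives only if it does not overlap a kept box too strongly.
        if all(iou(det[:4], k[:4]) <= iou_thr for k in kept):
            kept.append(det)
    return kept


# Hypothetical detections: two overlapping boxes, one separate, one low-confidence.
dets = [
    (0, 0, 10, 10, 0.90),
    (1, 1, 11, 11, 0.80),
    (50, 50, 60, 60, 0.75),
    (0, 0, 10, 10, 0.60),
]
print(filter_detections(dets))
```

A higher confidence threshold trades missed small vessels for fewer false alarms, while the IoU threshold controls how close two vessels may appear in the image before one detection suppresses the other.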

Tracking was implemented using the DeepSORT algorithm [41] with a cosine distance metric for appearance embedding matching. The maximum cosine distance was set to 0.2, the maximum age of unmatched tracks to 30 frames, and the minimum number of detections before track confirmation to 3 frames. The Kalman filter motion prediction was configured with a constant velocity model to maintain track identity during short-term occlusions.
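The effect of the confirmation and age parameters can be illustrated with a simplified track life cycle. The sketch below follows the commonly described DeepSORT behaviour (a new track is tentative, is confirmed after 3 matched frames, and is deleted after more than 30 consecutive unmatched frames); it omits the Kalman filter and appearance matching, and the class and constant names are hypothetical.

```python
from dataclasses import dataclass

N_INIT = 3    # matched frames needed before a track is confirmed
MAX_AGE = 30  # consecutive unmatched frames tolerated for a confirmed track


@dataclass
class Track:
    track_id: int
    hits: int = 1            # the detection that created the track counts as a hit
    misses: int = 0          # consecutive frames without a matching detection
    state: str = "tentative"

    def update(self, matched: bool) -> str:
        """Advance the track by one frame and return its new state."""
        if self.state == "deleted":
            return self.state
        if matched:
            self.hits += 1
            self.misses = 0
            if self.state == "tentative" and self.hits >= N_INIT:
                self.state = "confirmed"
        else:
            self.misses += 1
            # Tentative tracks are dropped on the first miss.
            if self.state == "tentative" or self.misses > MAX_AGE:
                self.state = "deleted"
        return self.state


track = Track(track_id=1)
print(track.update(True), track.update(True))  # second call confirms the track
```

With these settings a vessel hidden behind a ferry keeps its identity for up to 30 frames, which is what allows speed estimates to continue across short occlusions.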

3.4. System Reliability and Fail-Safe Measures

The system includes several fail-safe mechanisms to maintain operational integrity in the event of hardware or data interruptions:

Signal loss (video feed): If no update is received for a vessel within a 2 s period, the vessel is flagged as “unconfirmed” and excluded from the active collision risk calculation.

Camera failure: The operators receive an automatic alert message indicating the failed camera.

Data processing error: The system logs the event and restarts the affected module.
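The signal-loss rule in the list above can be sketched as a simple staleness check. This is an assumed illustration of the 2 s rule only; the function name, the dictionary of last-update timestamps, and the treatment of exactly 2 s as still valid are hypothetical choices.

```python
STALE_AFTER_S = 2.0  # update timeout taken from the signal-loss rule


def split_by_freshness(last_update, now, stale_after=STALE_AFTER_S):
    """Split vessel ids into (active, unconfirmed) by time since last update.

    last_update maps a vessel id to the timestamp (seconds) of its latest update.
    Vessels without an update for more than stale_after seconds are unconfirmed
    and should be left out of the active collision risk calculation.
    """
    active, unconfirmed = [], []
    for vessel_id, timestamp in sorted(last_update.items()):
        if now - timestamp > stale_after:
            unconfirmed.append(vessel_id)
        else:
            active.append(vessel_id)
    return active, unconfirmed


# Hypothetical timestamps in seconds.
print(split_by_freshness({"A": 10.0, "B": 7.5}, now=10.5))
```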

4. Results

The camera installed in the harbour streams video online, and the computer in the faculty laboratory uses the ANN to estimate vessel speed in real time. To check the quality of the estimate, a research team was sent to the harbour to take measurements with a Braun Rangefinder 1000 WH laser device. The same time stamps were used for the video and the laser measurements. The measured speed (shown as a column in Table 2) was determined with the laser device, while the estimated speed (Table 2) was calculated on the computer from the input video signal.

To analyse the results, we calculate the mean absolute error (MAE), which is expressed as follows:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|v_{e,i} - v_{m,i}\right|$$

where $v_e$ is the estimated speed, $v_m$ is the measured speed, $n$ is the number of measurements (estimates), and the index $i$ runs over the measurements. For the presented results, the MAE is equal to 1.975 km/h.

We also used the Mean Square Error (MSE) and the Root Mean Squared Error (RMSE), which are defined as follows:

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(v_{e,i} - v_{m,i}\right)^{2}$$

and RMSE is

$$\mathrm{RMSE} = \sqrt{\mathrm{MSE}}$$

Using the collected dataset, we evaluated the estimation accuracy for all five vessel categories. The MSE for the entire dataset was 4.73916 (km/h)², which corresponds to an RMSE of 2.1769 km/h. The errors varied considerably for the individual vessel categories. In the Large Passenger Ship category, for example, the MSE was 1.2066 (km/h)², the RMSE was 1.098 km/h, and the MAE was 1.066 km/h. In comparison, the Motorboat category had a higher MAE of 2.90 km/h, while the Sailing Boat category had an MAE of 1.76 km/h.
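The three error measures can be computed from paired estimated and measured speeds as in the following sketch. The function name `speed_errors` is hypothetical and the example values are made up for illustration; they are not taken from Table 2.

```python
import math


def speed_errors(estimated, measured):
    """Return (MAE, MSE, RMSE) for paired speed values in km/h."""
    if len(estimated) != len(measured) or not estimated:
        raise ValueError("need two non-empty sequences of equal length")
    diffs = [e - m for e, m in zip(estimated, measured)]
    n = len(diffs)
    mae = sum(abs(d) for d in diffs) / n
    mse = sum(d * d for d in diffs) / n
    return mae, mse, math.sqrt(mse)


# Made-up example values (km/h), not measurements from this study.
mae, mse, rmse = speed_errors([10.0, 12.0, 20.0], [11.0, 10.0, 23.0])
print(f"MAE={mae:.3f} km/h, MSE={mse:.3f} (km/h)^2, RMSE={rmse:.3f} km/h")
```

Because the RMSE squares each difference before averaging, it is never smaller than the MAE and grows faster when a few estimates are far off, which is why both are reported.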

Figure 5 illustrates the relationship between vessel categories and their MAE values. The Large Passenger Ship category, which contains the largest number of samples, also has the lowest MAE, suggesting that higher sample counts may contribute to improved accuracy. However, the current vessel length categories partly overlap—for instance, Sailing Boat is defined as vessels longer than 6 m, while Motorboat is defined as vessels between 7 m and 12 m. This overlap makes it impossible to directly correlate MAE with vessel size. Future work could refine these definitions into non-overlapping categories, potentially enabling stronger statistical correlations. Additionally, more granular classification could reduce errors in vessel type identification, which in turn would improve speed estimation accuracy.

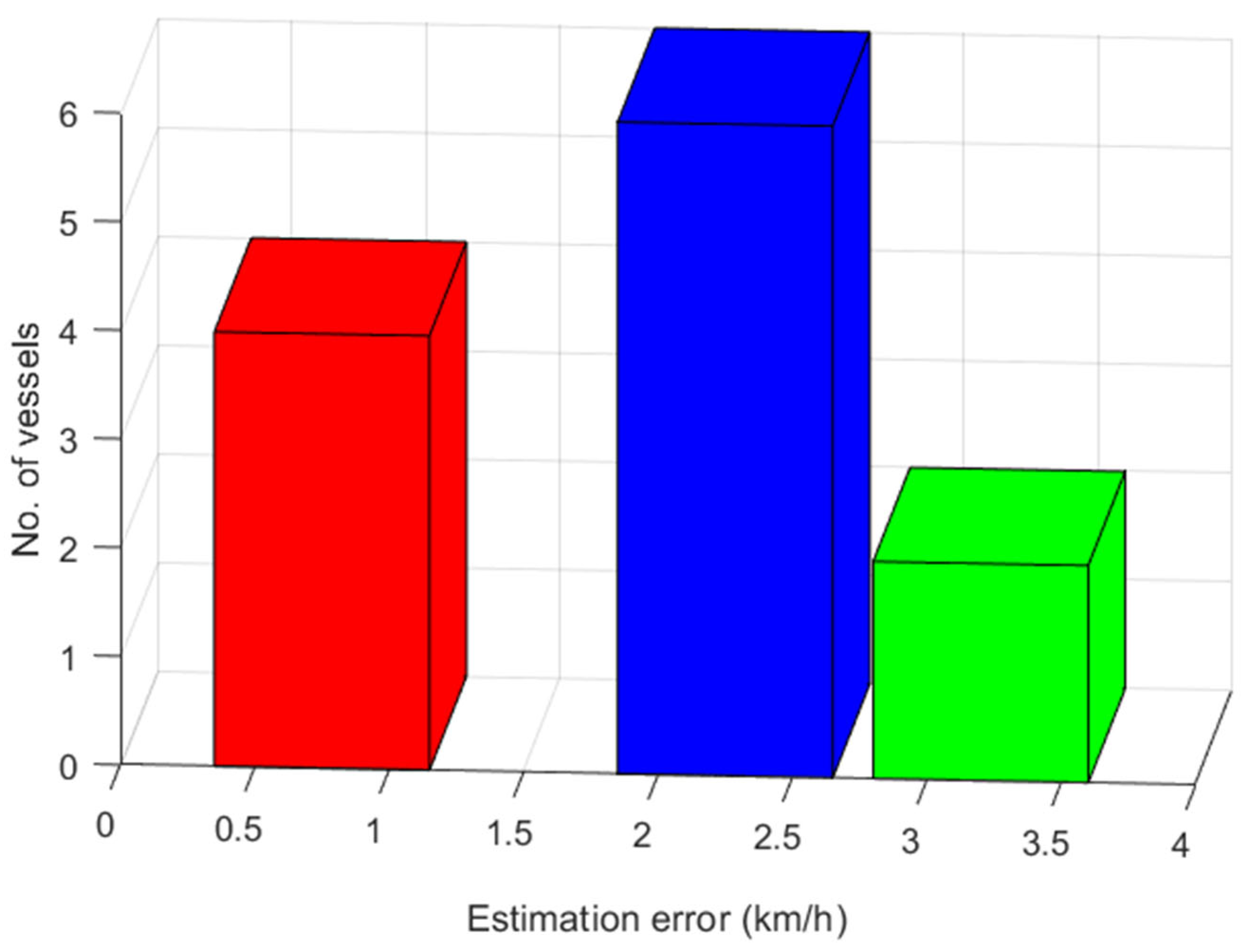

To further analyse the error distribution, we have divided the errors into three classes:

The first class is in the range of 0–1.5 km/h errors;

The second class includes errors between 1.5 and 3 km/h;

The third class is more than 3 km/h.
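The assignment of absolute errors to these three classes can be sketched as follows. The text does not say which class the boundary values belong to, so the sketch assumes that an error of exactly 1.5 km/h falls into the second class and an error of exactly 3 km/h also falls into the second class (the third class being strictly more than 3 km/h); the function name and sample errors are hypothetical.

```python
from collections import Counter


def error_class(abs_error_kmh):
    """Map an absolute speed error in km/h to error class 1, 2, or 3."""
    if abs_error_kmh < 0:
        raise ValueError("absolute error cannot be negative")
    if abs_error_kmh < 1.5:
        return 1
    if abs_error_kmh <= 3.0:
        return 2
    return 3


# Made-up absolute errors (km/h) for illustration.
sample_errors = [0.4, 1.5, 2.9, 3.0, 3.2]
print(Counter(error_class(e) for e in sample_errors))
```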

Figure 6 shows the distribution of the estimation error by error class, formed according to the error value. Red represents the “Large passenger ship” class, blue the “Sailing boat” class, and green the “Motorboat” class.

To put the results in context, we compared them with the errors reported in similar references. The comparison is shown in Table 3.

Table 3 shows that the errors of the proposed method are within the range of results obtained by other teams. However, the other teams did not analyse the maritime environment, but cars on land. It is expected that the results would be similar if their ANNs had been trained on a maritime dataset, but this is not certain.

During data collection, weather conditions varied between clear skies, overcast conditions, light rain, and strong glare from the sun. Detection accuracy remained above 95% in clear and overcast skies but dropped to 88% in strong glare and 84% in light rain. Tracking stability was slightly reduced in rain due to partial loss of visibility. These results suggest that while the system is robust under most conditions, unfavourable weather conditions can moderately affect performance, which will be addressed in future iterations by improving image pre-processing and re-training the model on datasets with unfavourable weather conditions.

In conclusion, the results can be summarised as follows. For all vessels analysed, the proposed system achieved an MAE of 1.98 km/h, an MSE of 4.74 (km/h)², and an RMSE of 2.18 km/h for speed estimation. The highest relative error (21.98%) occurred for a sailing boat under variable wind conditions, and the lowest (2.83%) for a motor vessel under calm conditions.

5. Discussions

The integration of AR and ANN into speed estimation enables improved situational awareness and real-time risk assessment. However, a critical aspect of classifying vessels and estimating their kinematic parameters is to match the granularity of the classification with the distribution of the available dataset. In particular, the use of fewer vessel classes can lead to overgeneralization, where vessels with different physical and operational characteristics are grouped together. This can lead to widely varying class averages and reduce the accuracy of subsequent estimates. Conversely, the introduction of a finer classification with numerous categories can increase the risk of misclassification, especially if the number of training examples per category is no longer sufficient, leading to additional errors in speed and trajectory estimation.

To mitigate the risk of collision in harbours with mixed AIS and non-AIS traffic, video signal processing has proven to be an effective approach, especially in daylight conditions. The majority of non-AIS traffic consists of tourist, local, and leisure vessels, whose presence is significantly lower during night hours, making the detection of non-AIS vessels primarily a daytime issue.

To overcome these challenges, we propose the use of an asymmetric dataset that reflects the real distribution of vessel types and traffic profiles. This approach aims to ensure robust ANN training and improved generalisation in operational environments with inherently unbalanced traffic patterns. In our research, this was done using the Split Port Ship Classification Dataset (SPSCD).

The results presented cannot be regarded as definitive proof of the method, and more extensive measurements will be carried out in further research. Once the final results of the planned research are available, the developed AR application should be fully implemented and tested. The next step is therefore to develop an application (and not just a framework for testing) with an augmented reality interface that displays a collision risk assessment to the user. The application will be developed for a mobile platform as well as for PCs. The data from the video system will be transferred to the server via a mobile communication interface using an internet connection. The AR application and interface will be developed in Unity, and the application will be programmed in the high-level programming language C#.

We also expect comments from readers on how to best visualise collisions in an AR environment. Further work should include more advanced estimations to find the best solution to minimise errors. One idea is to use three-point estimates or spline curves of trajectory speed or even more points to constantly update the speed estimate and correct collision risks online.

The aim of this study was to evaluate the effectiveness of combining AR and ANN to reduce the risk of vessel collisions in harbours with mixed AIS and non-AIS traffic. The results show that the proposed system can reliably estimate the speeds of different categories of vessels—including recreational craft, motorboats, large passenger ships, sailing boats and speed crafts—based on sensor data and ANN-based analysis.

The absolute and relative errors between estimated and directly measured vessel speeds were mostly within acceptable ranges for practical maritime applications. Most of the vessel speed measurements showed absolute errors of less than 3 km/h, and the relative errors were mostly below 20%. Notably, the system achieved a relative error of only 2.83% for certain vessel cases, demonstrating robust performance even under variable maritime conditions.
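For readers who wish to reproduce this kind of evaluation, the error measures discussed here can be computed as in the following minimal sketch. The function name `speed_errors` and the input values are hypothetical and are not taken from the measurements in this study. The relative error is expressed as a percentage of the directly measured speed.

```python
import math


def speed_errors(estimated, measured):
    """Per-vessel and aggregate errors between estimated and measured speeds.

    Both lists must use the same unit (e.g., km/h) and the same vessel order.
    """
    if len(estimated) != len(measured) or not estimated:
        raise ValueError("inputs must be non-empty and of equal length")
    abs_err = [abs(e - m) for e, m in zip(estimated, measured)]
    # Relative error in percent of the directly measured speed.
    rel_err = [100.0 * a / m for a, m in zip(abs_err, measured)]
    mae = sum(abs_err) / len(abs_err)
    rmse = math.sqrt(sum(a * a for a in abs_err) / len(abs_err))
    return {"abs": abs_err, "rel": rel_err, "mae": mae, "rmse": rmse}


# Hypothetical example values in km/h.
result = speed_errors(estimated=[10.0, 20.0], measured=[12.0, 19.0])
```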

A comparative analysis with the relevant literature on vehicle speed estimation, which was primarily performed in terrestrial settings, shows that the results obtained in this maritime study are competitive. For example, previous methods using monocular cameras to estimate vehicle speed have RMSE values between 0.13 and 2.3 m/s and relative errors between 10.38% and 44.66%. The proposed maritime method yielded an RMSE of 0.605 m/s and a relative error within the interval of 2.83% to 21.97%, indicating not only the validity of the method but also potentially better performance for certain categories of vessels.

From a harbour safety perspective, the observed MAE of ~2 km/h and RMSE of ~2.2 km/h are operationally acceptable. According to feedback from VTS operators and IMO guidelines, collision avoidance decisions in narrow waters are usually based on speed ranges (e.g., <5, 5–10, >10 knots) and not on sub-knot precision. It is therefore unlikely that the current error margins will affect the collision risk classification or recommended manoeuvres, although further improvements are possible by refining the camera calibration and incorporating additional environmental compensation into the ANN speed estimation process.
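The relation between the error margin and the speed ranges can be made concrete with a short sketch. It uses the exact definition 1 knot = 1.852 km/h. The band edges follow the example ranges quoted above, while the function names and the decision to place exactly 10 knots in the middle band are assumptions made for illustration only. Note that the RMSE of 0.605 m/s corresponds to 0.605 × 3.6 ≈ 2.18 km/h, or about 1.18 knots.

```python
KMH_PER_KNOT = 1.852  # exact, since 1 nautical mile = 1852 m


def kmh_to_knots(speed_kmh):
    return speed_kmh / KMH_PER_KNOT


def speed_band(speed_knots):
    """Map a speed to one of the example ranges (<5, 5-10, >10 knots)."""
    if speed_knots < 5:
        return "<5"
    if speed_knots <= 10:  # assumption: exactly 10 knots stays in the middle band
        return "5-10"
    return ">10"


def band_is_stable(speed_kmh, error_kmh):
    """True if the band does not change anywhere within +/- error_kmh."""
    low = speed_band(kmh_to_knots(max(speed_kmh - error_kmh, 0.0)))
    high = speed_band(kmh_to_knots(speed_kmh + error_kmh))
    return low == high


rmse_kmh = 0.605 * 3.6                # RMSE converted from m/s to km/h
rmse_knots = kmh_to_knots(rmse_kmh)   # roughly 1.18 knots

mid_band = band_is_stable(13.0, 2.2)  # about 7 knots, well inside the 5-10 band
near_edge = band_is_stable(10.0, 2.2) # about 5.4 knots, close to the 5-knot edge
```

A check of this kind could be used to flag estimates that lie close to a band edge, which are the cases in which the error margin is most likely to matter.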

It should be noted that previous work focuses predominantly on the road vehicle context and does not address the specific challenges of the maritime environment, such as sea surface motion, vessel manoeuvrability and occlusion by other objects, which are likely to introduce additional complexity and variability. This underlines the importance of adapting and re-training ANN models on maritime operational data to further improve reliability and minimise risk.

From the perspective of port safety, speed estimation errors in the range of 2–3 km/h are considered operationally acceptable, especially for the assessment of collision risk in harbour approaches. According to the recommendations of the International Maritime Organization (IMO) and feedback from local VTS operators, decisions on reaction times and collision avoidance manoeuvres in narrow port waters are generally based on speed categories (e.g., <5, 5–10, >10 knots) and not on precise values with an accuracy of 1 km/h. In this context, the observed margins of error do not significantly change the results of the risk classification. Nevertheless, further refinement of the speed estimation model, especially under challenging environmental conditions, is planned to improve accuracy and consistency.

The current study did not experimentally investigate whether the system’s AR-based collision warnings improve decision quality or reduce operator reaction time in real-world scenarios. This remains a priority for future research, with trials planned using active VTS operators and simulated high-traffic scenarios. Testing was limited to the port of Split, which is representative of busy passenger ports in the Mediterranean but does not reflect the variability in geography, navigational conditions and vessel types found in other ports. Further validation is planned in ports with other characteristics, such as narrow estuaries, industrial harbours and high-altitude areas. Although no formal cost–benefit analysis has been carried out, preliminary estimates suggest that implementing the proposed AR + ANN system in a medium-sized port would cost €10,000 to €15,000, including hardware (cameras, servers, networks) and software implementation, plus approximately €1000 per year for maintenance and updates.

Despite the encouraging results presented in this study, several limitations must be acknowledged, and these provide guidance for future research. Firstly, the sample of measured vessel types was relatively limited, emphasising the need for further experimentation with a wider and more diverse selection of vessels. As this work represents an initial validation of the system, the dataset will be expanded in future studies to cover a wider range of vessel types, operational scenarios, and seasonal variations. Secondly, vessel categories in the current dataset partially overlap in terms of physical dimensions (e.g., motorboats and sailing boats between 8 and 12 m), which can introduce classification ambiguity and affect speed estimation accuracy. Future work will adopt a more granular classification scheme, including propulsion type and hull form. Thirdly, the variability of the environment, including factors such as weather conditions, sea state, and the presence of dynamic obstructions, may affect the accuracy of the system and should therefore be systematically considered in future investigations. The current approach could also benefit from closer integration with additional onboard and landside sensor systems, such as radar and LIDAR, to improve redundancy and overall reliability, especially in congested harbours. Future research should therefore prioritise the expansion of vessel and environmental datasets for the training and validation of models. It will also be important to compare the proposed AR- and ANN-based methodology with alternative machine learning techniques and with conventional radar or optical systems under real operational conditions. Finally, an analysis of the user experience and ergonomic factors associated with the use of AR support tools by port operators and navigators is recommended, to facilitate effective adoption and optimise workflows.