Towards Autonomous Coordination of Two I-AUVs in Submarine Pipeline Assembly

Abstract

1. Introduction

2. Methodology

2.1. Perception

2.1.1. Neural Network Approach

2.1.2. ArUco Markers

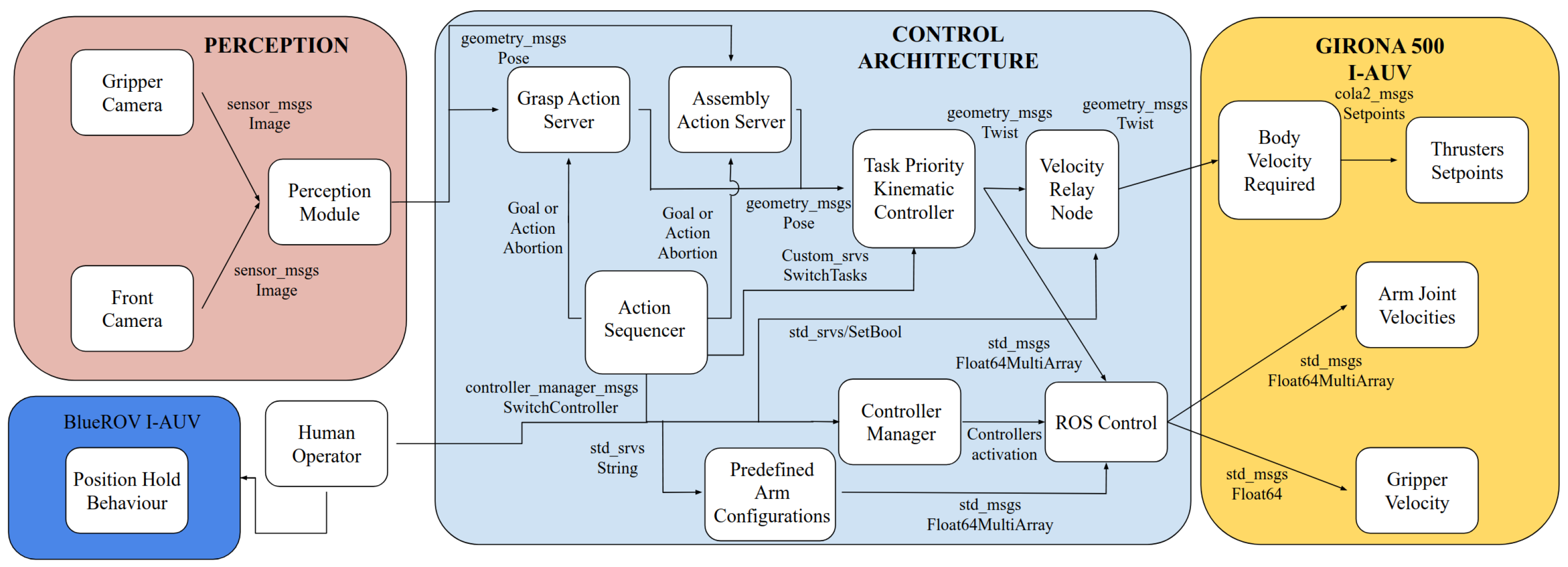

2.2. System Architecture

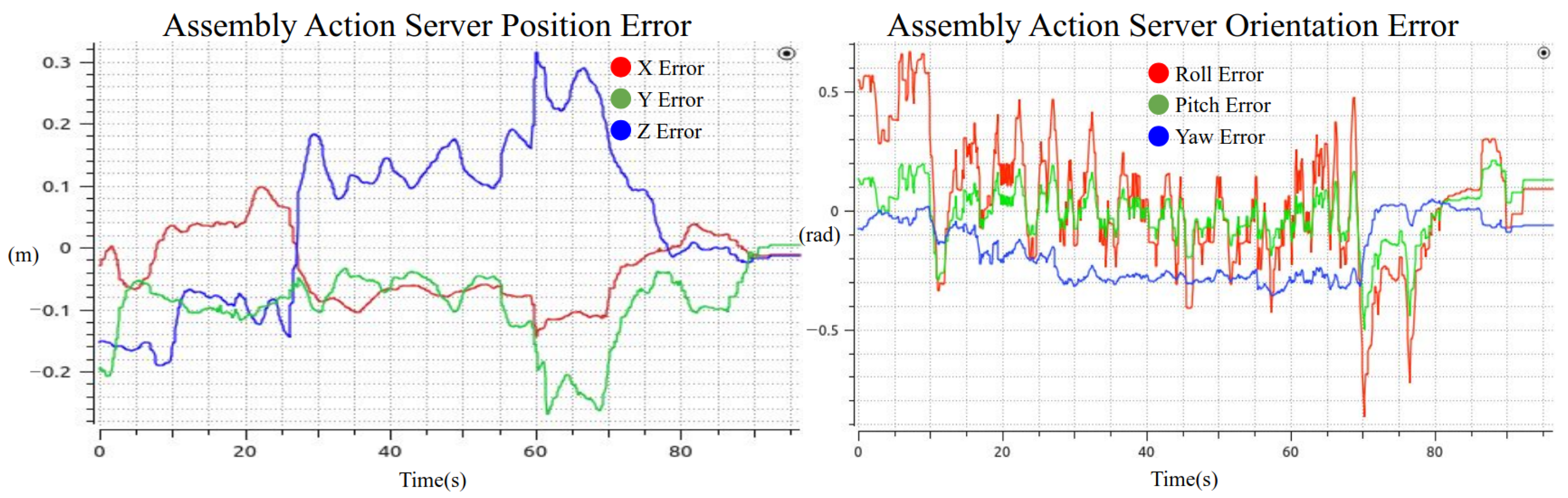

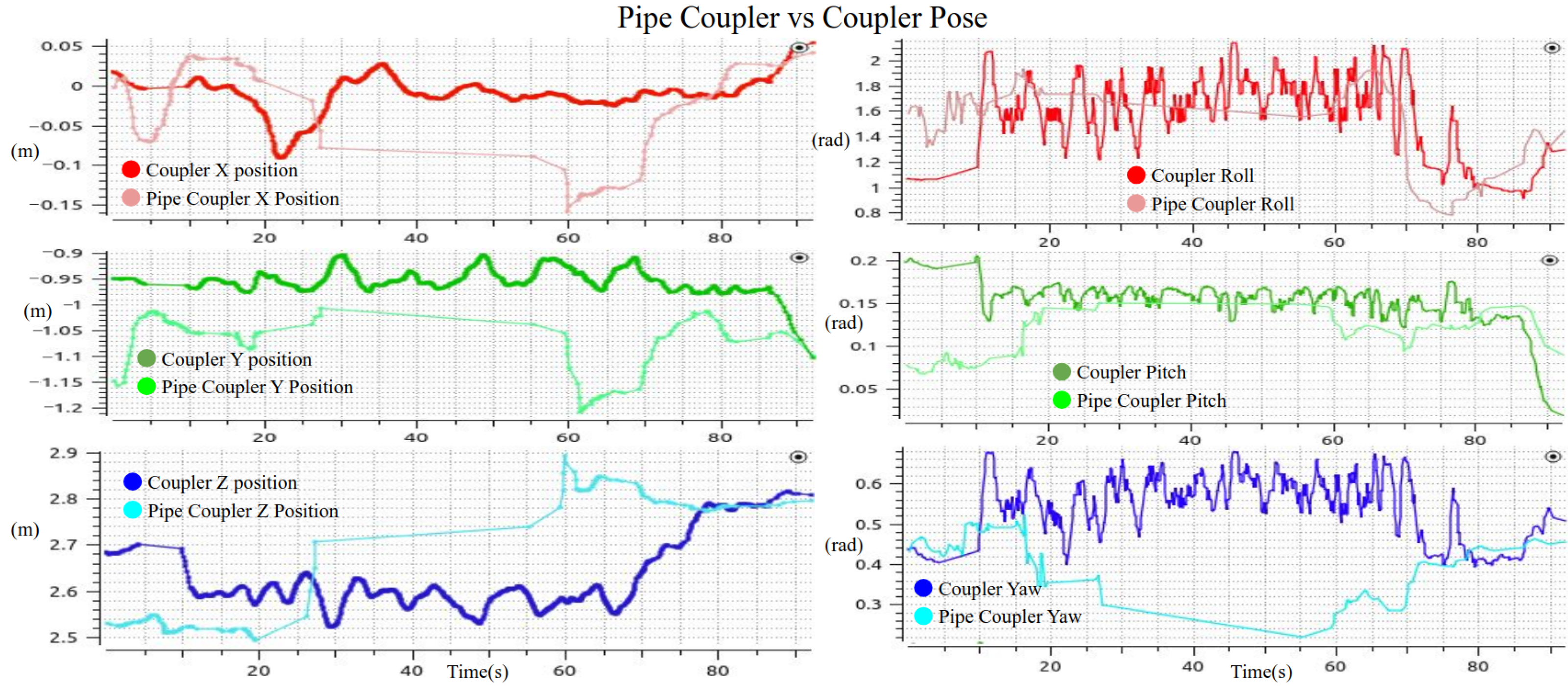

- Action servers: These modules are ROS nodes that execute tasks and provide feedback and results. For example, the Grasp Action Server reads the object pose and sends an approach pose offset from the object. The node waits for the end-effector to reach the approach pose and then sends a new grasp pose closer to the object. This cycle repeats until the end-effector reaches the final grasp pose (i.e., its pose is within a defined threshold relative to the object pose). The action is cancelled automatically if the error between the end-effector and the object exceeds the pre-established threshold, and the human operator can also cancel the autonomous behavior at any time. Additionally, the action node publishes a feedback message containing the current position and orientation error.

  In the case of the Assembly Action Server, the poses of the coupling part of the pipe and of the left coupling part of the coupler were used to compute the misalignment error. A proportional controller was implemented to reduce this error and allow the system to perform the assembly. The output of the controller was a feed-forward velocity command sent to the kinematic controller; this velocity represents the motion the end-effector must follow to bring the misalignment error to zero.

  To improve the robustness of the autonomous behavior, an additional mechanism was implemented. If the coupler was already grasped but the frontal camera could no longer detect it (typically because the manipulator arm obstructed the camera's field of view), the system could still estimate the pose of the left coupling part of the coupler. This was achieved by using the last known transformation between the end-effector and the left coupling part of the coupler. By combining this transformation with the transform from the World NED frame to the end-effector frame, the system was able to estimate the coupler's global pose even without visual input.

- Low-level controllers: This module includes the predefined arm configurations, the task-priority kinematic controller, and the drivers required to send velocity commands to the I-AUV module. The predefined arm configurations node uses the joint trajectory controller of the ROS control framework to move the arm to a predefined configuration. The task-priority controller is based on the approach presented in [10], with small modifications to adapt it to the specific requirements of this application. These adaptations depend on the active action server: for the grasping server, the input is a target pose, whereas for the assembly server, the input consists of linear and angular velocity commands. To handle both cases within a unified framework, two control tasks were implemented: one with a zero-valued proportional gain vector (used for feed-forward velocity input) and another with a unit gain vector. Depending on the active action server, one of these tasks is enabled while the other is deactivated, which allows switching between pose-based and velocity-based control. Additionally, a velocity relay node and a controller manager work alongside the ROS control node to manage the flow of velocity commands to the I-AUV. This architecture ensures that the human operator can interrupt the autonomous control at any time and switch to teleoperation if a potential collision is detected or if the safety of the system is compromised.

- High-level controllers: These controllers function as a sequencer capable of triggering any action server by sending a goal or cancelling an ongoing action. Additionally, they can switch the active task in the task-priority algorithm or send a string-based request specifying a predefined arm configuration. These configurations include positions such as fold, unfold, look down, start assembly, or home position. In parallel to the sequencer, the human operator continuously monitors the intervention. If any failure occurs or the safety of the mission is compromised, the operator can intervene and take manual control of the system.
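The assembly logic described in the list above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the gain `kp`, and the numeric poses are assumptions chosen for illustration, and only the linear part of the misalignment is handled (the angular part is omitted for brevity). It shows the fallback pose estimate obtained by composing the World NED to end-effector transform with the last known end-effector to coupler transform, a proportional law on the misalignment, and a single task law whose gain is set to zero (feed-forward velocity input) or one (pose input).

```python
import numpy as np


def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def rot_z(yaw):
    """Rotation about the z axis by `yaw` radians."""
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def estimate_coupler_pose(T_world_ee, T_ee_coupler_last):
    """Fallback when the camera loses the coupler: compose the current
    world->end-effector transform with the last known end-effector->coupler one."""
    return T_world_ee @ T_ee_coupler_last


def misalignment_velocity(T_world_pipe, T_world_coupler, kp=0.5):
    """Proportional law on the position misalignment (kp is an assumed gain).
    Returns a linear velocity expressed in the world frame."""
    error = T_world_pipe[:3, 3] - T_world_coupler[:3, 3]
    return kp * error


def task_velocity(gain, pose_error, feed_forward):
    """Generic task reference: gain * error + feed-forward.
    gain = 0 gives pure velocity input; gain = 1 with zero feed-forward gives pose input."""
    return gain * np.asarray(pose_error) + np.asarray(feed_forward)


# Illustrative numbers only (hypothetical poses, metres and radians).
T_world_ee = make_transform(rot_z(np.pi / 2), [1.0, 2.0, 3.0])
T_ee_coupler_last = make_transform(np.eye(3), [0.1, 0.0, 0.0])
T_world_pipe = make_transform(np.eye(3), [1.0, 2.5, 3.0])

T_world_coupler = estimate_coupler_pose(T_world_ee, T_ee_coupler_last)
v_ff = misalignment_velocity(T_world_pipe, T_world_coupler)

# Assembly server active: zero-gain task, the controller follows the feed-forward velocity.
v_assembly = task_velocity(0.0, np.zeros(3), v_ff)
print(T_world_coupler[:3, 3], v_assembly)
```

Keeping one task law and changing only the gain mirrors the idea of enabling one task and disabling the other, although in the described system these are two separate tasks inside the task-priority controller.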

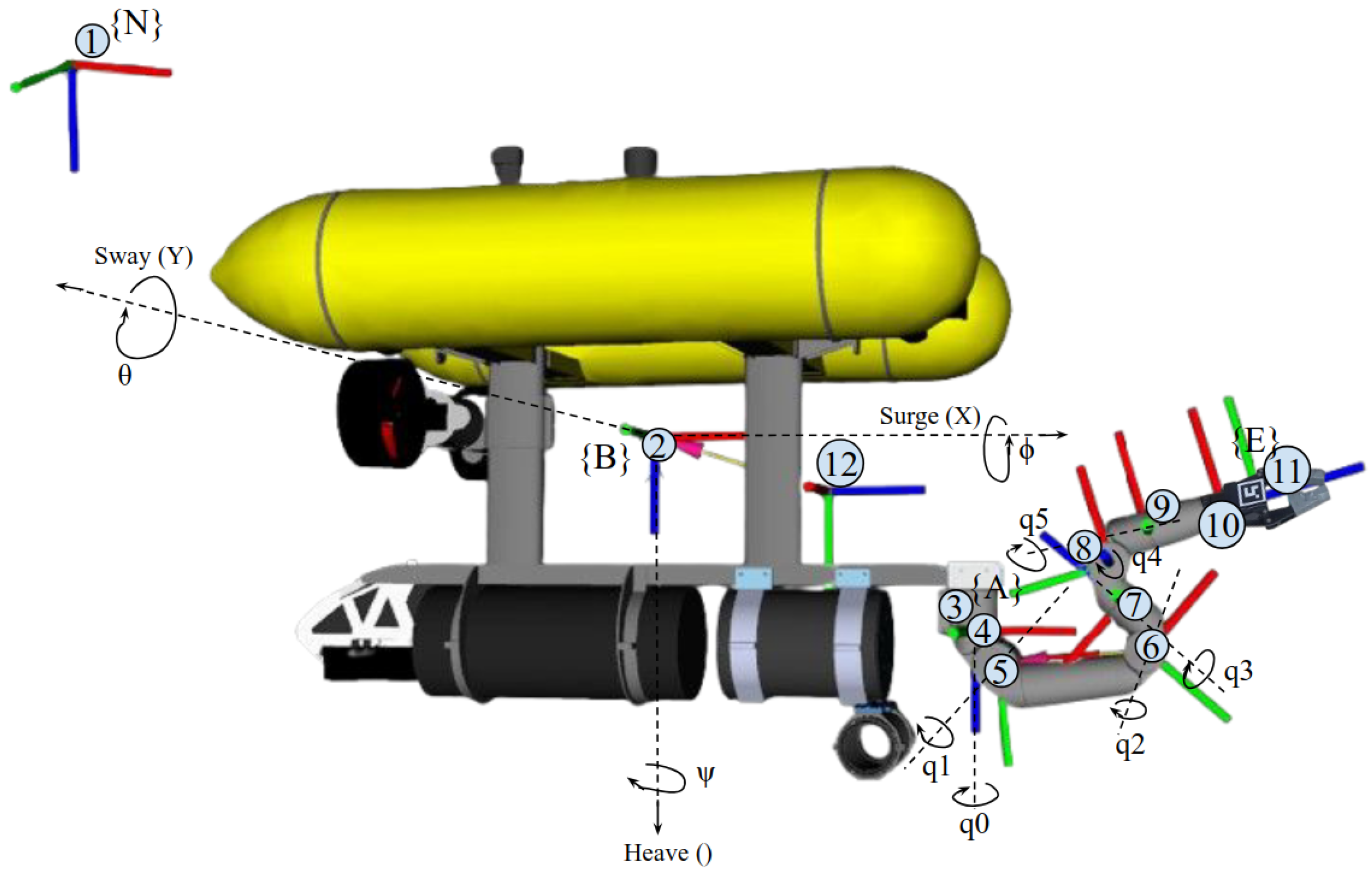

2.3. I-AUV Kinematics

2.3.1. Reference Frames

2.3.2. Definitions

2.3.3. Kinematic of Position

2.3.4. Kinematic of Velocity

2.3.5. Controllers

3. Experimental Setup

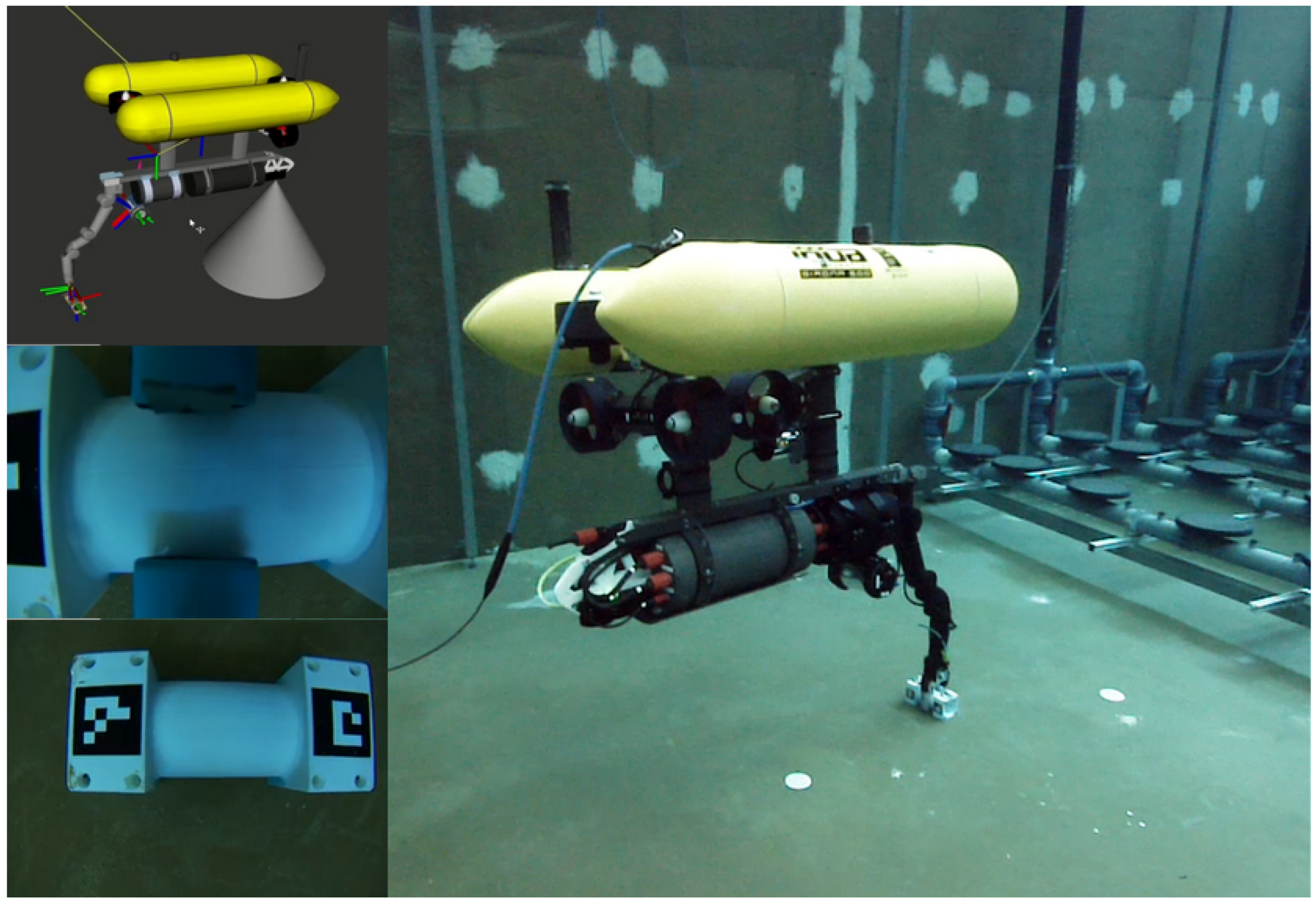

3.1. Mechatronic Integration

3.1.1. Girona 500 I-AUV

3.1.2. BlueROV I-AUV

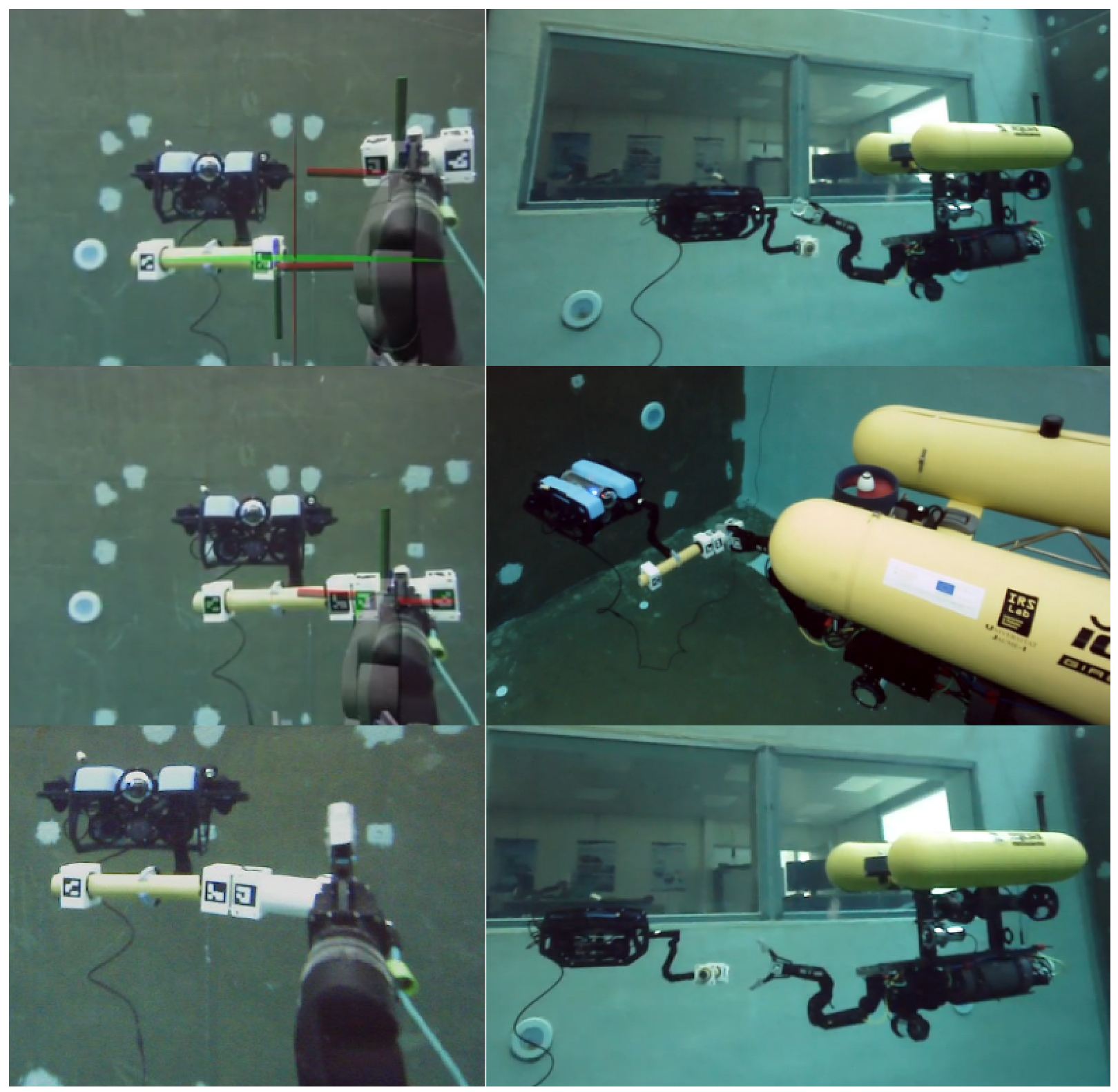

3.2. Grasp and Assembly Experiment

- Unfold the arm.

- Move the arm to the look down predefined position.

- Trigger the grasp action server.

- Move the arm to the start assembly predefined position.

- The operator moves the BlueROV I-AUV close to the Girona 500 I-AUV.

- Trigger the assembly action server and switch tasks to the end-effector configuration.

- Switch task from end-effector configuration to AUV base configuration.

- Send a pose to the AUV configuration to move away from the BlueROV I-AUV.
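The steps above form a fixed sequence that a high-level sequencer could execute one at a time, stopping on the first failure or when the operator takes over. The following is a minimal sketch of that idea, not the authors' code: the `Step` class, `run_sequence`, and the `stub` callable are hypothetical stand-ins for sending action goals, arm configuration requests, and task switches.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    name: str                # label taken from the experiment list
    run: Callable[[], bool]  # hypothetical callable; returns True on success


def run_sequence(steps: List[Step],
                 operator_abort: Callable[[], bool] = lambda: False) -> List[str]:
    """Run steps in order. Stop at the first failed step or as soon as the
    operator aborts, and return the names of the steps that completed."""
    completed = []
    for step in steps:
        if operator_abort() or not step.run():
            break
        completed.append(step.name)
    return completed


def stub() -> bool:
    """Placeholder for a real action goal, arm configuration request, or task switch."""
    return True


EXPERIMENT = [
    Step("unfold arm", stub),
    Step("arm to look down", stub),
    Step("trigger grasp action server", stub),
    Step("arm to start assembly", stub),
    Step("operator moves BlueROV close to Girona 500", stub),  # manual step
    Step("trigger assembly action server (end-effector task)", stub),
    Step("switch task to AUV base configuration", stub),
    Step("send AUV pose to move away from BlueROV", stub),
]

print(run_sequence(EXPERIMENT))
```

Returning the list of completed steps makes it easy for the operator to see where the sequence stopped before switching to teleoperation.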

4. Results

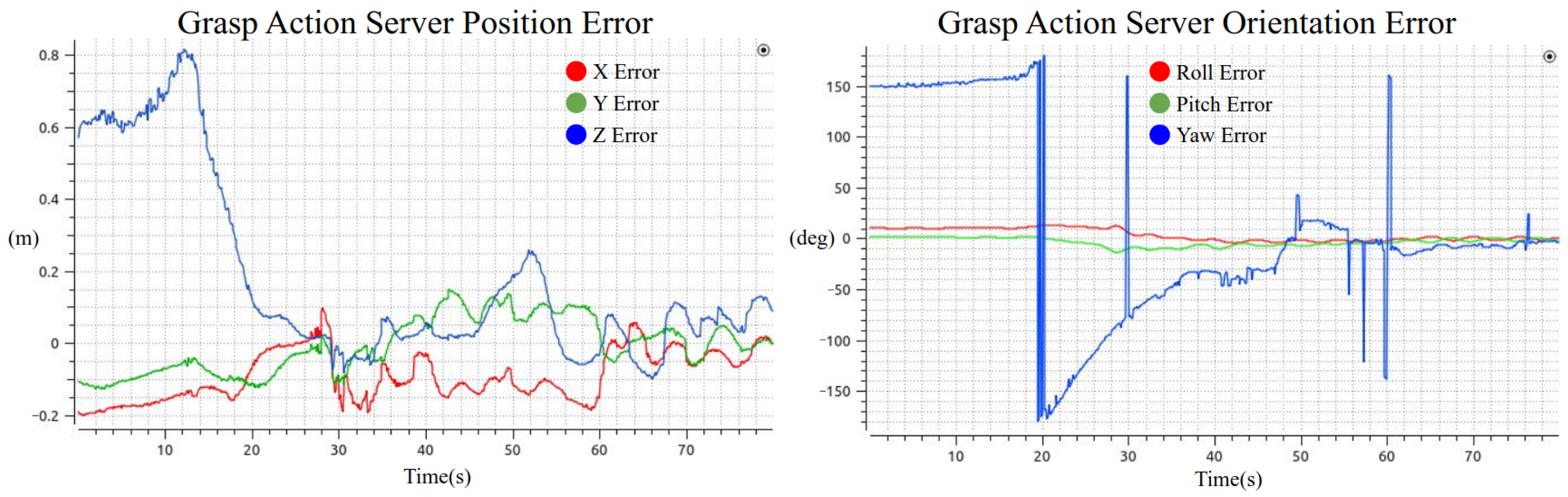

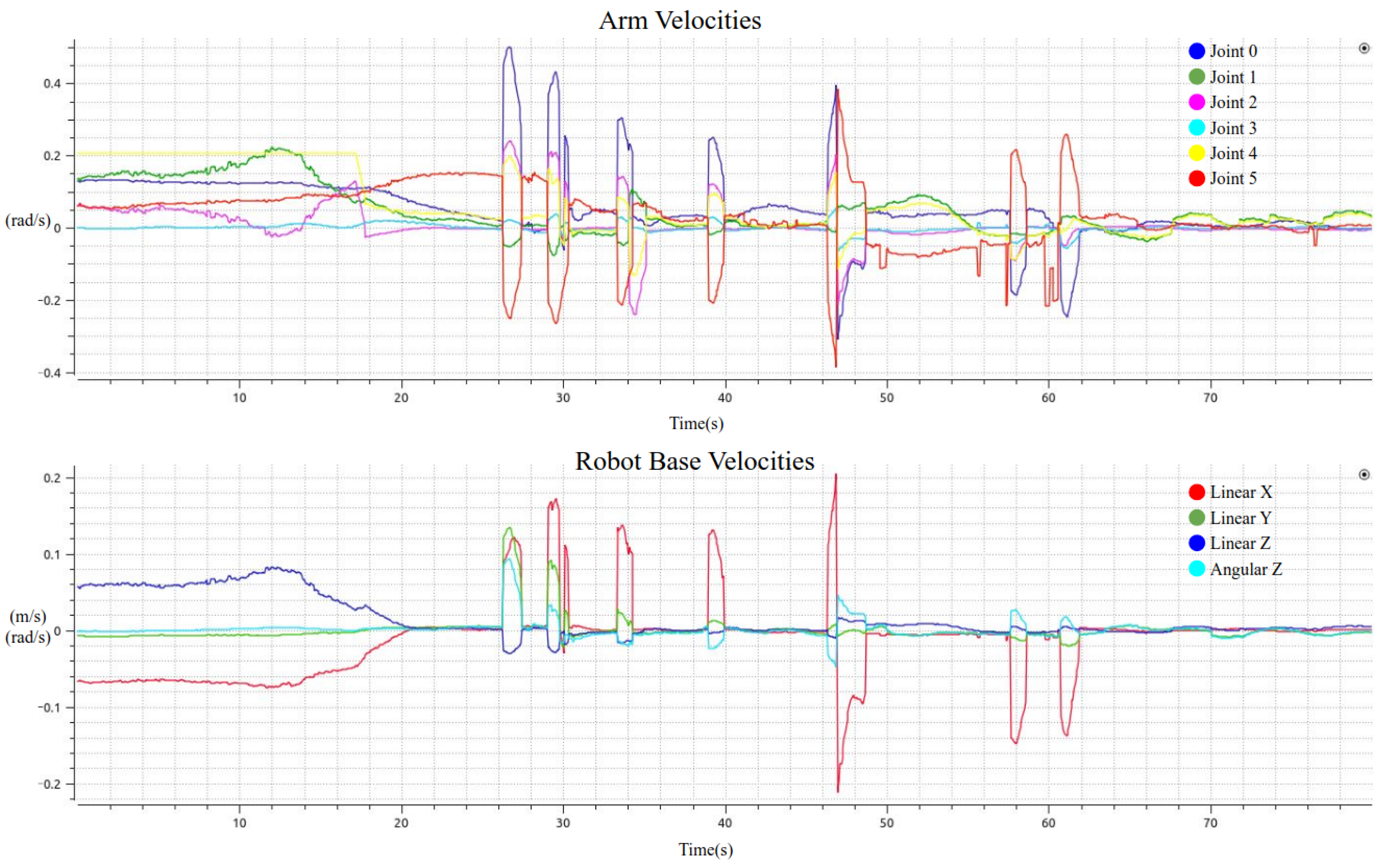

4.1. Coupler Grasping

4.2. Pipe Assembly

4.3. Action Server Error Metrics

5. Conclusions

6. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AUV | Autonomous Underwater Vehicle |
| CIRTESU | Center for Robotics and Underwater Technologies Research |
| DVL | Doppler Velocity Log |
| GCS | Ground Control Station |
| HRI | Human–Robot Interface |
| I-AUV | Autonomous Underwater Vehicle for Intervention |
| UAV | Unmanned Aerial Vehicles |
| UGV | Unmanned Ground Vehicles |
| LARS | Launching and Recovering System |
| NED | North East Down |
| ROV | Remote Operated Vehicle |
| SLAM | Simultaneous Localization and Mapping |
| USBL | Ultra Short Baseline |
| UVMS | Underwater Vehicles Manipulator Systems |
| VLC | Visual Light Communications |

References

- Yoon, S.; Qiao, C. Cooperative search and survey using autonomous underwater vehicles (AUVs). IEEE Trans. Parallel Distrib. Syst. 2010, 22, 364–379.

- Petillot, Y.R.; Antonelli, G.; Casalino, G.; Ferreira, F. Underwater robots: From remotely operated vehicles to intervention-autonomous underwater vehicles. IEEE Robot. Autom. Mag. 2019, 26, 94–101.

- Evans, J.; Redmond, P.; Plakas, C.; Hamilton, K.; Lane, D. Autonomous docking for Intervention-AUVs using sonar and video-based real-time 3D pose estimation [Conference presentation]. In Proceedings of the Oceans 2003 MTS/IEEE Conference, San Diego, CA, USA, 22–26 September 2003.

- Marani, G.; Choi, S.K.; Yuh, J. Underwater autonomous manipulation for intervention missions AUVs. Ocean Eng. 2009, 36, 15–23.

- Prats, M.; Pomerleau, F.; Palomeras, N.; Ribas, D.; Garcia, R.; Sanz, P.J. Multipurpose autonomous underwater intervention: A systems integration perspective [Conference presentation]. In Proceedings of the 20th Mediterranean Conference on Control and Automation (MED), Barcelona, Spain, 3–6 July 2012.

- Prats, M.; Ribas, D.; Palomeras, N.; Garcia, R.; Carreras, M.; Sanz, P.J. Reconfigurable AUV for intervention missions: A case study on underwater object recovery. Intell. Serv. Robot. 2012, 5, 19–31.

- Carrera, A.; Palomeras, N.; Hurtós, N.; Kormushev, P.; Carreras, M. Cognitive system for autonomous underwater intervention. Pattern Recognit. Lett. 2015, 67, 91–99.

- Palomeras, N.; Peñalver, A.; Massot-Campos, M.; Negre, P.L.; Fernández, J.J.; Ridao, P.; Sanz, P.J.; Oliver-Codina, G. I-AUV docking and panel intervention at sea. Sensors 2016, 16, 1673.

- López-Barajas, S.; Sanz, P.J.; Marín-Prades, R.; Echagüe, J.; Realpe, S. Network congestion control algorithm for image transmission—HRI and visual light communications of an autonomous underwater vehicle for intervention. Future Internet 2025, 17, 10.

- Pi, R.; Palomeras, N.; Carreras, M.; Sanz, P.J.; Oliver-Codina, G.; Ridao, P. OPTIHROV: Optically linked hybrid autonomous/remotely operated vehicle, beyond teleoperation in a new generation of underwater intervention vehicles. In Proceedings of the OCEANS 2023-Limerick, Limerick, Ireland, 5–8 June 2023.

- Burgard, W.; Moors, M.; Fox, D.; Simmons, R.; Thrun, S. Collaborative multi-robot exploration [Conference paper]. In Proceedings of the 2000 IEEE International Conference on Robotics and Automation (ICRA), San Francisco, CA, USA, 24–28 April 2000.

- Reid, R.; Cann, A.; Meiklejohn, C.; Poli, L.; Boeing, A.; Braunl, T. Cooperative multi-robot navigation, exploration, mapping and object detection with ROS [Conference paper]. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, QLD, Australia, 23–26 June 2013.

- Hayajneh, M.; Al Mahasneh, A. Guidance, Navigation and Control System for Multi-Robot Network in Monitoring and Inspection Operations. Drones 2022, 6, 332.

- Hinostroza, M.A.; Lekkas, A.M.; Transeth, A.; Luteberget, B.; de Jonge, C.; Sagatun, S.I. Autonomous Inspection and Maintenance Operations employing Multi-Robots [Conference paper]. In Proceedings of the 20th IEEE/ASME International Conference on Mechatronic and Embedded Systems and Applications (MESA), Genova, Italy, 2–4 September 2024.

- Feng, Z.; Hu, G.; Sun, Y.; Soon, J. An overview of collaborative robotic manipulation in multi-robot systems. Annu. Rev. Control 2020, 49, 113–127.

- Jimenez-Cano, A.E.; Martin, J.; Heredia, G.; Ollero, A.; Cano, R. Control of an aerial robot with multi-link arm for assembly tasks [Conference paper]. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013; pp. 4916–4921.

- Du, Z.; Li, W.; Shi, G. Multi-USV collaborative obstacle avoidance based on improved velocity obstacle method. ASCE-ASME J. Risk Uncertain. Eng. Syst. Part A Civ. Eng. 2024, 10, 04023049.

- Zhang, J.; Ren, J.; Cui, Y.; Fu, D.; Cong, J. Multi-USV task planning method based on improved deep reinforcement learning. IEEE Internet Things J. 2024, 11, 18549–18567.

- Novák, F.; Báča, T.; Saska, M. Collaborative Object Manipulation on the Water Surface by a UAV-USV Team Using Tethers [Conference paper]. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14–18 October 2024.

- López-Barajas, S.; Sanz, P.J.; Marín-Prades, R.; Gómez-Espinosa, A.; González-García, J.; Echagüe, J. Inspection operations and hole detection in fish net cages through a hybrid underwater intervention system using deep learning techniques. J. Mar. Sci. Eng. 2024, 12, 80.

- Sarda, E.I.; Dhanak, M.R. A USV-Based Automated Launch and Recovery System for AUVs. IEEE J. Ocean. Eng. 2017, 42, 37–55.

- González-García, J.; Gómez-Espinosa, A.; Cuan-Urquizo, E.; García-Valdovinos, L.G.; Salgado-Jiménez, T.; Cabello, J.A.E. Autonomous Underwater Vehicles: Localization, navigation, and communication for collaborative missions. Appl. Sci. 2020, 10, 1256.

- Casalino, G.; Caccia, M.; Caselli, S.; Melchiorri, C.; Antonelli, G.; Caiti, A.; Indiveri, G.; Cannata, G.; Simetti, E.; Torelli, S.; et al. Underwater intervention robotics: An outline of the Italian national project MARIS. Mar. Technol. Soc. J. 2016, 50, 98–107.

- IRS Lab. TWINBOT Project (2018–2021). 2021. Available online: https://blogs.uji.es/irs/projects/twinbot-2018-2021/ (accessed on 6 June 2025).

- Simetti, E.; Casalino, G. Manipulation and transportation with cooperative underwater vehicle-manipulator systems. IEEE J. Ocean. Eng. 2017, 42, 782–799.

- Heshmati-alamdari, S.; Karras, G.C.; Kyriakopoulos, K.J. A distributed predictive control approach for cooperative manipulation of multiple underwater vehicle manipulator systems [Conference paper]. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019.

- Conti, R.; Meli, E.; Ridolfi, A.; Allotta, B. An innovative decentralized strategy for I-AUVs cooperative manipulation tasks. Robot. Auton. Syst. 2015, 72, 261–276.

- Heshmati-Alamdari, S.; Karras, G.C.; Kyriakopoulos, K.J. A predictive control approach for cooperative transportation by multiple underwater vehicle manipulator systems. IEEE Trans. Control Syst. Technol. 2022, 30, 917–930.

- Pi, R.; Cieślak, P.; Ridao, P.; Sanz, P.J. TWINBOT: Autonomous underwater cooperative transportation. IEEE Access 2021, 9, 37668–37684.

- Ultralytics. YOLOv8 Models Documentation. 2025. Available online: https://docs.ultralytics.com/es/models/yolov8/#performance-metrics (accessed on 22 June 2025).

- Sanz, P.J.; Requena, A.; Inesta, J.M.; Del Pobil, A.P. Grasping the not-so-obvious: Vision-based object handling for industrial applications. IEEE Robot. Autom. Mag. 2005, 12, 44–52.

- Veiga Almagro, C.; Lunghi, G.; Di Castro, M.; Centelles Beltran, D.; Marín Prades, R.; Masi, A.; Sanz, P.J. Cooperative and multimodal capabilities enhancement in the CERNTAURO human–robot interface for hazardous and underwater scenarios. Appl. Sci. 2020, 10, 6144.

- Open Source Computer Vision. 2022. Available online: https://docs.opencv.org/4.x/d5/dae/tutorial_aruco_detection.html (accessed on 3 June 2025).

- Fictionlab. Opencv ArUco. 2022. Available online: https://github.com/fictionlab/ros_aruco_opencv.git (accessed on 3 June 2025).

- Reach Robotics. Inverse Kinematics and Cartesian Control. 2022. Available online: https://reachrobotics.com/blog/inverse-kinematics-and-cartesian-control/ (accessed on 16 June 2025).

- Antonelli, G. Underwater Robots (Springer Tracts in Advanced Robotics); Springer: Cham, Switzerland, 2013; Volume 96.

- Lopez-Barajas, S.; Sanz, P.J.; Marin, R.; Solis, A.; Echagüe, J.; Castañeda, H. Seguimiento de trayectoria de un AUV para la inspección de jaulas de red utilizando control por modos deslizantes. Jorn. Automática 2024, 45.

- IQUA Robotics. Girona 500 AUV. 2025. Available online: https://iquarobotics.com/girona-500-auv (accessed on 10 June 2025).

- Reach Robotics. Reach Bravo 7—7-Function Robotic Manipulator. 2025. Available online: https://quote.reachrobotics.com/product/bravo-manipulators/bravo-7/ (accessed on 10 June 2025).

- Blue Robotics. BlueROV2 Heavy Configuration Retrofit Kit. 2025. Available online: https://bluerobotics.com/store/rov/bluerov2-upgrade-kits/brov2-heavy-retrofit/ (accessed on 10 June 2025).

- Reach Robotics. Reach Alpha—5-Function Robotic Manipulator. 2025. Available online: https://reachrobotics.com/products/manipulators/reach-alpha/ (accessed on 10 June 2025).

- Blue Robotics. Bar30 High-Resolution Depth/Pressure Sensor. 2025. Available online: https://bluerobotics.com/store/sensors-cameras/sensors/bar30-sensor-r1/ (accessed on 10 June 2025).

- Water Linked. DVL A50 Doppler Velocity Log. 2025. Available online: https://waterlinked.com/shop/dvl-a50-1248?attr=234,236,238 (accessed on 10 June 2025).

- QGroundControl. QGroundControl—Ground Control Station. 2025. Available online: https://qgroundcontrol.com/ (accessed on 10 June 2025).

- Logitech. F310 Gamepad. 2025. Available online: https://www.logitechg.com/es-es/products/gamepads/f310-gamepad.html (accessed on 10 June 2025).

- Ubuntu. Ubuntu 20.04 LTS (Focal Fossa)—Release Downloads. 2025. Available online: https://releases.ubuntu.com/focal/ (accessed on 10 June 2025).

- ROS. ROS Noetic Ninjemys. 2025. Available online: https://wiki.ros.org/noetic (accessed on 10 June 2025).

- ROS. RViz-ROS Visualization Tool. 2025. Available online: https://wiki.ros.org/rviz (accessed on 10 June 2025).

| # | Frame Name | Description |
|---|---|---|
| 1 | NED | North-East-Down reference frame |
| 2 | AUV_base_link | Main body frame of the AUV |
| 3 | Arm_base_link | Base frame of the manipulator mounted on the AUV |
| 4 | Arm_joint_0 | Frame at joint 0 of the manipulator (base rotation) |
| 5 | Arm_joint_1 | Frame at joint 1 (shoulder pitch) |
| 6 | Arm_joint_2 | Frame at joint 2 (elbow pitch) |
| 7 | Arm_joint_3 | Frame at joint 3 (elbow roll) |
| 8 | Arm_joint_4 | Frame at joint 4 (wrist pitch) |
| 9 | Arm_joint_5 | Frame at joint 5 (wrist roll) |
| 10 | Arm_joint_6 | Frame at joint 6 (push rod) |
| 11 | End_effector_camera | Camera mounted at the end effector |
| 12 | Front_camera | Front-facing camera on the AUV |

| Vehicle: Name | Vehicle: DoF | Vehicle: Pos. | Vehicle: Vel. | Arm: Name | Arm: DoF | Arm: Pos. | Arm: Vel. |
|---|---|---|---|---|---|---|---|
| Surge | X trans. | x | u | Joint 0 | Revolute | | |
| Sway | Y trans. | y | v | Joint 1 | Revolute | | |
| Heave | Z trans. | z | w | Joint 2 | Revolute | | |
| Roll | X rot. | | p | Joint 3 | Revolute | | |
| Pitch | Y rot. | | q | Joint 4 | Revolute | | |
| Yaw | Z rot. | | r | Joint 5 | Revolute | | |
| | | | | Joint 6 | Prismatic | | |

| Component | Grasping Task: Mean | Grasping Task: Std Dev | Grasping Task: RMSE | Assembly Task: Mean | Assembly Task: Std Dev | Assembly Task: RMSE |
|---|---|---|---|---|---|---|
| X (m) | −0.0663 | 0.0633 | 0.0917 | −0.0323 | 0.0558 | 0.0645 |
| Y (m) | 0.0305 | 0.0505 | 0.0590 | −0.0123 | 0.0603 | 0.0616 |
| Z (m) | 0.0464 | 0.0912 | 0.1023 | 0.0427 | 0.1285 | 0.1354 |
| Euclidean error (m) | 0.1324 | 0.0695 | 0.1495 | 0.1418 | 0.0785 | 0.1621 |
| Roll (deg) | −1.0893 | 1.5314 | 1.8793 | 2.4124 | 14.8564 | 15.0579 |
| Pitch (deg) | −3.0496 | 2.3348 | 3.8407 | −0.3495 | 6.7287 | 6.7390 |
| Yaw (deg) | −3.1143 | 25.7328 | 25.9206 | −9.4132 | 7.1583 | 11.8251 |
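The three statistics reported per component are related: for a population standard deviation, RMSE² = mean² + std². The sketch below checks the grasping-task columns of the error table against this identity. It assumes the standard deviation was computed as a population value, which the table itself does not state; the function name `rmse_from_mean_std` is only illustrative.

```python
import math


def rmse_from_mean_std(mean: float, std: float) -> float:
    """RMSE implied by a mean error and a population standard deviation."""
    return math.sqrt(mean ** 2 + std ** 2)


# (mean, std dev, reported RMSE) for the grasping task, copied from the table.
GRASPING = {
    "X (m)": (-0.0663, 0.0633, 0.0917),
    "Y (m)": (0.0305, 0.0505, 0.0590),
    "Z (m)": (0.0464, 0.0912, 0.1023),
    "Euclidean error (m)": (0.1324, 0.0695, 0.1495),
    "Roll (deg)": (-1.0893, 1.5314, 1.8793),
    "Pitch (deg)": (-3.0496, 2.3348, 3.8407),
    "Yaw (deg)": (-3.1143, 25.7328, 25.9206),
}

# Absolute difference between the implied and the reported RMSE per component.
deviations = {
    name: abs(rmse_from_mean_std(mean, std) - reported)
    for name, (mean, std, reported) in GRASPING.items()
}

for name, dev in deviations.items():
    print(f"{name}: |implied - reported| = {dev:.5f}")
```

A check like this also shows which part of an RMSE comes from bias and which from spread: for grasping yaw, the mean is about −3° while the standard deviation is about 26°, so the RMSE is dominated by variability rather than by a constant offset.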

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

López-Barajas, S.; Solis, A.; Marín-Prades, R.; Sanz, P.J. Towards Autonomous Coordination of Two I-AUVs in Submarine Pipeline Assembly. J. Mar. Sci. Eng. 2025, 13, 1490. https://doi.org/10.3390/jmse13081490