Abstract

This paper presents a cloud-based architecture for the acquisition, transmission, and processing of acoustic data from hydrophone arrays, designed to enable the detection and monitoring of both surface and underwater vehicles. The proposed system offers a modular and scalable cloud infrastructure that supports real-time and distributed processing of hydrophone data collected in diverse aquatic environments. Acoustic signals captured by heterogeneous hydrophones—featuring varying sensitivity and bandwidth—are streamed to the cloud, where several machine learning algorithms can be deployed to extract distinguishing acoustic signatures from vessel engines and propellers in interaction with water. The architecture leverages cloud-based services for data ingestion, processing, and storage, facilitating robust vehicle detection and localization through propagation modeling and multi-array geometric configurations. Experimental validation demonstrates the system’s effectiveness in handling high-volume acoustic data streams while maintaining low-latency processing. The proposed approach highlights the potential of cloud technologies to deliver scalable, resilient, and adaptive acoustic sensing platforms for applications in maritime traffic monitoring, harbor security, and environmental surveillance.

1. Introduction

Underwater acoustic sensing has undergone significant technological evolution over the past decade, transitioning from traditional standalone systems to sophisticated, interconnected networks capable of real-time data processing and analysis. The proliferation of autonomous underwater vehicles (AUVs), remotely operated vehicles (ROVs), and surface vessels in maritime environments has created an urgent need for advanced detection and monitoring systems that can operate across diverse aquatic conditions [1]. Modern hydrophone arrays provide critical capabilities for environmental monitoring, maritime security, vessel traffic management, and marine ecosystem protection [2].

The fundamental principles of underwater acoustic propagation remain consistent with established acoustic theory, yet recent advances in sensor technology, signal processing algorithms, and computational infrastructure have dramatically expanded the scope and effectiveness of hydrophone-based systems [3]. Contemporary hydrophone arrays leverage heterogeneous sensor configurations, combining devices with varying sensitivity profiles, frequency responses, and deployment geometries to optimize detection capabilities across different operational scenarios [4]. This heterogeneous approach enables system designers to balance trade-offs between spatial coverage, temporal resolution, and computational complexity while maintaining robust performance in challenging acoustic environments characterized by multipath propagation, ambient noise, and varying oceanographic conditions [5].

Moreover, recent research has demonstrated the effectiveness of distributed hydrophone networks in capturing complex acoustic signatures from multiple vessel types simultaneously [6]. Advanced beamforming techniques applied to large-aperture coherent hydrophone arrays have shown remarkable improvements in signal-to-noise ratios and directional resolution [7]. These developments have been complemented by innovations in autonomous deployment strategies, enabling long-term monitoring in remote oceanic regions where traditional cabled systems are impractical [8].

However, real-time processing of hydrophone array data presents significant computational and algorithmic challenges [9]. The primary bottleneck is the need to perform beamforming, signal detection, classification, and localization simultaneously on high-bandwidth acoustic data streams [10]. Real-time vessel detection and tracking applications typically demand processing delays of less than a few seconds from signal acquisition to decision output [11]. Meeting these requirements while maintaining high detection sensitivity and low false alarm rates requires careful optimization of algorithm implementations and efficient utilization of processing resources [12]. The maritime environment presents additional challenges related to acoustic propagation variability, ambient noise fluctuations, and interference from multiple simultaneous sources [13]. Adaptive processing techniques that dynamically adjust detection thresholds, beamforming weights, and classification parameters based on real-time environmental conditions have become essential for maintaining consistent performance across varying operational scenarios [14].

Machine learning approaches have shown promising results in this context [15]. The application of machine learning techniques to underwater acoustic target recognition has emerged as one of the most rapidly advancing areas in maritime sensing technology [16,17]. Deep learning approaches, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have demonstrated superior performance compared to traditional signal processing methods for vessel classification tasks [18,19]. Recent surveys indicate that machine learning-based underwater acoustic target recognition methods achieve classification accuracies exceeding 90% for common vessel types under optimal conditions [20].

To fully harness the potential of advanced signal processing and machine learning methods for underwater acoustic sensing, it is imperative to consider the underlying computational infrastructure. Traditional edge-based processing approaches often face inherent limitations in scalability, data storage, and real-time analytics capabilities when dealing with the massive data streams generated by dense hydrophone arrays. Consequently, emerging solutions have increasingly shifted toward leveraging distributed computing paradigms that can accommodate the intensive computational demands and dynamic operational scenarios typical of maritime environments [21]. This transition enables not only more efficient data handling and processing but also greater flexibility in deploying sophisticated algorithms across geographically dispersed sensor networks, setting the stage for the integration of cloud computing technologies in underwater acoustic sensing.

The integration of cloud computing technologies into underwater acoustic sensing represents a paradigm shift from edge-based processing to distributed, scalable computational architectures. Cloud-native approaches address fundamental limitations of traditional hydrophone systems, including limited on-site processing capacity, data storage constraints, and the inability to perform complex real-time analytics across multiple deployment sites [22,23]. Modern cloud platforms provide the computational elasticity required to handle the massive data volumes generated by multi-array hydrophone networks, which can produce terabytes of acoustic data daily depending on sampling rates and array configurations [24]. These systems leverage containerized microservices architectures that enable modular deployment of specialized processing algorithms, from basic signal conditioning and noise reduction to advanced machine learning inference pipelines [25]. Moreover, distributed processing architectures have proven particularly valuable for time-difference-of-arrival (TDOA) calculations and multi-array triangulation algorithms, where synchronized processing across geographically dispersed sensor nodes is essential for accurate localization [26]. Finally, cloud-based implementations can dynamically allocate computational resources based on real-time detection events, scaling processing capacity during periods of high vessel traffic while maintaining cost-effective operation during quiet periods [27].

The development of scalable distributed architectures for hydrophone data processing also requires careful consideration of data flow management, computational load balancing, and fault tolerance mechanisms [28]. Modern distributed systems must accommodate variable data rates from different array configurations while providing consistent quality of service guarantees for critical detection and alert functions [29]. Microservices architectures have emerged as a preferred approach for achieving this scalability, enabling independent scaling of different processing components based on computational demand and system priorities. Edge computing integration has become increasingly important for reducing bandwidth requirements between remote hydrophone deployments and centralized processing centers [30]. Hybrid architectures that perform initial signal conditioning and event detection at the sensor level while reserving complex analytics for cloud processing have demonstrated an effective balance between processing latency and computational efficiency [31]. These approaches also provide enhanced system resilience by maintaining basic functionality even during communication disruptions between the edge devices and the central processing facilities [32]. However, load balancing across distributed processing nodes requires sophisticated orchestration systems capable of managing heterogeneous computational resources and varying processing requirements [33].

In this context, this paper presents a comprehensive cloud-based architecture designed specifically for the acquisition, processing, and analysis of hydrophone array data to enable the detection and monitoring of both surface and underwater vehicles. The proposed system addresses key limitations of existing approaches by providing a scalable, distributed architecture that integrates edge-based real-time processing with cloud-based advanced analytics. It supports both autonomous operation in bandwidth-constrained maritime environments and high-throughput processing of diverse acoustic signatures through adaptive deployment modes, robust fault tolerance mechanisms, and multimodal data fusion capabilities.

The primary contributions of this research include (1) a modular cloud architecture that supports real-time streaming and processing of multi-array hydrophone data with sub-second latency requirements, (2) integration of advanced machine learning algorithms for vehicle classification and acoustic signature analysis optimized for cloud deployment, and (3) demonstration of scalable processing capabilities handling high-volume acoustic data streams from heterogeneous sensor configurations. The architectural design emphasizes modularity, fault tolerance, and computational efficiency while maintaining the flexibility required to adapt to evolving sensing requirements and technological advances.

The remainder of this paper is organized as follows: Section 2 details the multipurpose continuum-based architecture, including the edge layer, broker and communication layer, and cloud backend layer, along with the hardware configuration and acquisition system design. Section 3 presents the results from the field deployment at the Port of Valencia, including dataset generation and structure analysis demonstrating the system’s operational performance under real maritime conditions. Section 4 concludes the paper with a discussion of key findings and future research directions.

2. Materials and Methods

2.1. Overview of the Multipurpose Continuum-Based Architecture

While many of the technologies employed in this architecture—such as microservices, containerization via Docker, messaging brokers like Kafka/Redpanda, or CNNs for vessel classification—are well established in the literature, the original contribution of this work lies in the practical integration, functional validation, and real-world deployment of these components within a unified system tailored specifically to operational maritime environments.

The architecture distinguishes itself through (i) a hybrid edge-cloud design, enabling adaptive processing based on network constraints, (ii) an Automatic Identification System (AIS)-triggered detection strategy that captures only acoustically relevant events, optimizing bandwidth and storage usage, (iii) an asynchronous processing pipeline, decoupling acquisition, detection, and visualization through containerized microservices orchestrated via Kubernetes, and (iv) a field validation at the Port of Valencia, generating a temporally aligned multimodal dataset that combines hydrophone signals with AIS vessel metadata.
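The AIS-triggered strategy in item (ii) can be pictured as a geographic gate: a recording is kept only when at least one AIS-reported vessel lies within a chosen radius of the hydrophone. The following is a minimal sketch under that assumption. The function names, the 2 km default radius, and the coordinates in the usage note are illustrative and are not taken from the deployed system.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def should_record(hydrophone_pos, ais_positions, radius_m=2000.0):
    """Return True if any AIS position is within radius_m of the hydrophone.

    hydrophone_pos: (lat, lon) tuple; ais_positions: iterable of (lat, lon).
    The radius is a hypothetical tuning parameter.
    """
    h_lat, h_lon = hydrophone_pos
    return any(
        haversine_m(h_lat, h_lon, lat, lon) <= radius_m
        for lat, lon in ais_positions
    )
```

For example, `should_record((39.45, -0.32), [(39.451, -0.32)])` evaluates to `True` because the vessel is roughly 110 m away, while a vessel at `(39.55, -0.32)` (about 11 km away) would not trigger a recording.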

Compared to previous works, such as Wang et al. [6], which examines distributed detection in multi-array systems but within simulated environments, or Tesei et al. [21], which focuses on passive vessel localization without scalable cloud backends, our approach delivers a complete end-to-end system designed for modularity and operational resilience. Similarly, Ortiz et al. [20] propose a microservice-based architecture for smart port IoT, yet do not address acoustic sensing nor challenges related to maritime edge connectivity.

This combination of established technologies into a deployable, validated platform adapted to the constraints of real port environments represents a novel and valuable step toward scalable, resilient, and adaptive underwater acoustic surveillance systems. Therefore, the system presented in this work is designed around a multipurpose, distributed cloud-continuum architecture that integrates edge-based hydrophone data acquisition with scalable cloud-based analytics. This approach allows flexible deployment in a wide range of maritime scenarios—from controlled laboratory pools to open harbor environments—while supporting real-time processing and adaptive resource allocation.

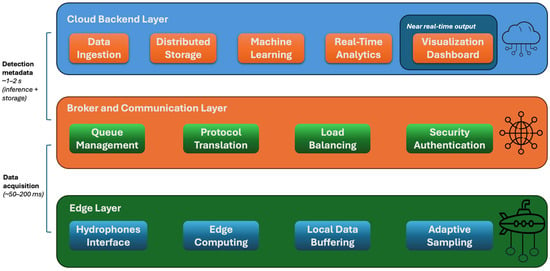

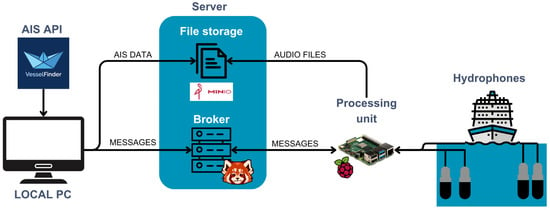

The system is organized into a three-layer architecture (see Figure 1), designed to support scalable, real-time acoustic sensing across heterogeneous environments:

Figure 1. Cloud-based architecture.

- Edge Layer—responsible for acoustic signal acquisition from hydrophones, with optional local preprocessing or lightweight machine learning inference for low-latency scenarios.

- Broker and Communication Layer—handles message routing, metadata management, and decoupled orchestration of data flows between edge and cloud components.

- Cloud Backend Layer—delivers scalable storage, centralized processing, advanced machine learning inference, and interactive visualization services through a modular microservices architecture.

This modular architecture supports both real-time streaming and asynchronous batch processing of acoustic data, allowing it to adapt to heterogeneous deployment conditions. The design emphasizes comprehensive containerization strategies where all system components are packaged as Docker containers, enabling consistent deployment across diverse environments and facilitating horizontal scaling through Kubernetes orchestration. Each microservice implements health checks, graceful shutdown procedures, and resource limit enforcement to ensure operational reliability.

The architecture prioritizes standardized interfaces through RESTful APIs and message schemas that ensure loose coupling between components, enabling independent development, testing, and deployment cycles. The system adheres to OpenAPI specifications for service documentation and implements versioning strategies to maintain backward compatibility across different system iterations. Moreover, robust fault tolerance mechanisms form a critical foundation of the design, incorporating circuit breaker patterns, retry policies, and bulkhead isolation to prevent cascade failures and ensure system resilience. The platform delivers resource elasticity through automatic scaling policies based on CPU utilization, memory consumption, and queue depth metrics, ensuring optimal resource allocation while maintaining cost efficiency. The system implements predictive scaling algorithms that anticipate traffic patterns based on historical data and environmental factors, enabling proactive resource management. Finally, comprehensive data governance capabilities ensure adherence to data protection requirements through detailed data lineage tracking, audit logging, and compliance frameworks. The system implements robust data retention policies, anonymization procedures, and export capabilities specifically designed for regulatory reporting and compliance verification.
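To make the scaling policy above concrete, the sketch below applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric), taking the largest proposal across metrics). Whether the described platform uses this exact rule is an assumption. The metric names, targets, and replica bounds are hypothetical, and the tolerance band and stabilization window of the real autoscaler are omitted.

```python
import math


def desired_replicas(current_replicas, metrics, min_replicas=1, max_replicas=10):
    """Compute a replica count from several metrics.

    metrics maps a metric name to a (current_value, target_value) pair,
    e.g. {"cpu_percent": (90, 60), "queue_depth": (500, 100)}.
    Each metric proposes ceil(current_replicas * current / target);
    the largest proposal wins and is clamped to [min_replicas, max_replicas].
    """
    proposals = [
        math.ceil(current_replicas * current / target)
        for current, target in metrics.values()
    ]
    wanted = max(proposals) if proposals else current_replicas
    return max(min_replicas, min(max_replicas, wanted))
```

With two replicas, CPU at 90% against a 60% target, and a queue depth of 500 against a target of 100, the queue metric dominates and the function requests the upper bound of 10 replicas.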

2.1.1. Edge Layer

The edge layer serves as the primary data acquisition interface, where acoustic signals are captured from hydrophones and optionally preprocessed or analyzed locally. This layer encompasses the following:

- Hydrophone Interface Management: Direct integration with heterogeneous hydrophone arrays featuring varying sensitivity ranges, bandwidth capabilities, and sampling rates. The system accommodates both omnidirectional and directional sensors through standardized analog-to-digital conversion interfaces.

- Edge Computing Nodes: Embedded processing units deployed near hydrophone installations, capable of performing preliminary signal conditioning, noise reduction, and feature extraction. These nodes utilize processors with dedicated signal processing capabilities, enabling local decision-making for data filtering and transmission optimization.

- Local Data Buffering: Temporary storage mechanisms that ensure data continuity during network interruptions, implementing circular buffer strategies with configurable retention policies based on available storage capacity and criticality levels.

- Adaptive Sampling Control: Dynamic adjustment of sampling parameters based on custom conditions and detected acoustic activity, optimizing bandwidth utilization while maintaining detection sensitivity.
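The local data buffering item above can be illustrated with a byte-bounded circular buffer that discards the oldest segments once capacity is reached. This is a minimal sketch, not the deployed implementation. The class name, the byte-based capacity, and the oldest-first eviction policy are assumptions.

```python
from collections import deque


class SegmentBuffer:
    """Byte-bounded FIFO buffer for audio segments awaiting upload."""

    def __init__(self, max_bytes):
        self.max_bytes = max_bytes
        self.used_bytes = 0
        self._segments = deque()  # entries are (segment_id, payload) pairs

    def push(self, segment_id, payload):
        """Store a segment, evicting the oldest ones if capacity would be exceeded."""
        if len(payload) > self.max_bytes:
            raise ValueError("segment larger than buffer capacity")
        while self.used_bytes + len(payload) > self.max_bytes:
            _, old_payload = self._segments.popleft()
            self.used_bytes -= len(old_payload)
        self._segments.append((segment_id, payload))
        self.used_bytes += len(payload)

    def drain(self):
        """Yield buffered segments oldest-first, e.g. once the uplink is restored."""
        while self._segments:
            segment_id, payload = self._segments.popleft()
            self.used_bytes -= len(payload)
            yield segment_id, payload
```

A criticality-aware retention policy, as mentioned in the text, could replace the oldest-first eviction with a priority-based one; that variant is not shown here.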

2.1.2. Broker and Communication Layer

This intermediate layer is responsible for routing messages, managing metadata, and orchestrating the flow of data between components. Key functionalities include the following:

- Message Queue Management: Implementation of publish-subscribe patterns using Apache Kafka or RabbitMQ, ensuring reliable message delivery with configurable quality-of-service levels. The system supports both persistent and transient messaging modes to accommodate varying latency requirements.

- Protocol Translation: Seamless conversion between edge-native protocols (such as UDP/TCP raw streams) and cloud-compatible formats (JSON, Apache Avro, or Protocol Buffers), enabling interoperability across diverse hardware platforms.

- Load Balancing and Routing: Intelligent distribution of data streams across multiple processing endpoints based on current system load, geographic proximity, and processing requirements. The routing algorithm considers factors such as network latency, computational availability, and data locality.

- Security and Authentication: End-to-end encryption of data streams, coupled with certificate-based authentication and role-based access control (RBAC) mechanisms to ensure data integrity and authorized access.

2.1.3. Cloud Backend Layer

The cloud backend layer provides comprehensive storage, processing, machine learning inference, and visualization services through the following microservices architecture:

- Data Ingestion Services: The system incorporates scalable ingestion pipelines designed to handle high-throughput acoustic data streams through dynamic partitioning and parallel processing. Real-time streaming frameworks such as Apache Flink (v1.17) or Apache Spark (v3.5.0) Structured Streaming are used to support continuous dataflow with native support for back-pressure, fault tolerance, and low-latency event handling.

- Distributed Storage Architecture: Multi-tier storage strategy utilizing hot, warm, and cold storage tiers optimized for different access patterns. Raw acoustic data (in our case, 3 s .wav files of continuous audio input) is stored in object storage systems with automatic lifecycle management, while processed features and metadata are maintained in time-series databases for rapid query response.

- Machine Learning Pipeline: Containerized ML workflows implementing various algorithms for acoustic signature detection, specifically spectrogram-based vessel classification, temporal pattern recognition, feature-based detection, or anomaly detection.

- Real-time Analytics Engine: The system supports continuous stream analytics for immediate anomaly detection and alert generation. Using sliding-window computation and Complex Event Processing (CEP), the engine detects recurring patterns, sudden spikes, or behavioral changes in real time. Detection thresholds are dynamically tunable, and adaptive learning mechanisms allow the system to recalibrate models in response to environmental drift.

- Visualization and Dashboard Services: Web-based interfaces providing real-time monitoring capabilities, historical trend analysis, and interactive acoustic signature exploration. The system implements responsive design principles and supports multiple concurrent users with role-based view customization.
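The sliding-window analytics described for the real-time analytics engine can be sketched as a detector that flags a value when it exceeds the mean of a recent window by more than `k` standard deviations. This is one simple instance of the idea rather than the engine actually used. The class name, the window length, and the choice to exclude flagged values from the baseline are assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class SlidingWindowDetector:
    """Flag values that exceed mean + k * std of the most recent window."""

    def __init__(self, window=20, k=3.0):
        self.history = deque(maxlen=window)
        self.k = k  # tunable threshold multiplier

    def update(self, value):
        """Return True if value is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.history) == self.history.maxlen:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            # Floor sigma so a perfectly flat baseline still yields a finite threshold.
            is_anomaly = value > mu + self.k * max(sigma, 1e-9)
        if not is_anomaly:
            # Only non-anomalous values update the baseline, so a loud event
            # does not raise the threshold for the samples that follow it.
            self.history.append(value)
        return is_anomaly
```

Because the baseline is recomputed from the window on every call, the threshold follows slow changes in ambient level, which is one way to approximate the dynamically tunable thresholds mentioned above.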

2.2. Hardware Configuration

At the hardware level, the system is built around low-power edge nodes based on Raspberry Pi 4B units, which are responsible for local data acquisition and communication with the cloud infrastructure. Each Raspberry Pi is connected to a Zoom AMS-44 audio interface, enabling multi-channel high-fidelity recording with phantom power support for the hydrophones. Two types of hydrophones are used (see Table 1 for details):

Table 1. Hydrophone specifications.

The ASF-1 and AS-1 hydrophones provide complementary capabilities within the heterogeneous sensor network. The ASF-1 excels in controlled environments requiring high-precision spectral analysis and weak signal detection, while the AS-1 offers robust performance and cost-effectiveness for widespread deployment in challenging field conditions. Both hydrophone types connect to the architecture through the same analog-to-digital conversion interface, the AMS-44. The system’s adaptive sampling control accommodates their different sensitivity characteristics and optimal operating ranges, enabling dynamic resource allocation based on environmental conditions and detection requirements. The dual-sensor approach allows the system to maintain detection capability across diverse maritime scenarios, from precision monitoring in controlled harbor environments (ASF-1) to broad-area surveillance in open water conditions (AS-1), ensuring comprehensive coverage while optimizing cost-effectiveness and operational resilience.

2.3. Software Stack and Data Flow

The software architecture is organized around a message-driven pipeline, ensuring asynchronous communication and decoupling between acquisition, processing, and storage components. The core software stack includes:

- Redpanda (v23.3.2, Kafka-compatible broker): responsible for receiving, buffering, and routing JSON-formatted messages that encapsulate critical data such as the locations of newly stored audio files, detection event notifications, and associated metadata describing recording conditions or environmental parameters. Its high-throughput, low-latency characteristics ensure that messages can be reliably transported across the distributed system, supporting timely data processing and responsive system behavior even under heavy acquisition loads.

- MinIO (RELEASE.2023-12-02T10-51-33Z, S3-compatible object storage): optimized for handling large volumes of unstructured data. MinIO serves as the repository for all recorded .wav audio files, offering efficient, scalable storage with built-in redundancy and high availability. This allows long-term preservation of the original acoustic signals, which can later be retrieved for reprocessing, validation, or advanced analysis tasks. Its compatibility with standard S3 APIs facilitates seamless integration with other cloud-based services and tools within the overall system architecture.

- MongoDB (v6.0.6): a NoSQL database used to maintain structured metadata and the results of acoustic detection processes. MongoDB’s document-oriented design enables flexible representation of diverse metadata schemas, including details such as hydrophone deployment configurations, environmental context, and processing outcomes. Fast query performance allows the system to support real-time dashboards and data exploration interfaces, providing researchers and operators with immediate access to critical information such as detection timestamps, estimated source characteristics, and system status indicators. This structured storage layer plays a central role in enabling the end-to-end traceability and analysis of acquired acoustic data.

2.4. Cloud-Edge Deployment Modes

The architecture is explicitly designed to support hybrid deployment through two modes: Edge Mode and Cloud Mode.

In Edge Mode, lightweight machine learning models are executed directly on the acquisition hardware, such as Raspberry Pi units integrated within the hydrophone platforms. This configuration enables real-time or near-real-time detection capabilities, which are particularly valuable in situations where low latency is critical or where network connectivity is limited or unreliable, restricting the bandwidth available for transmitting raw acoustic data to the cloud. By performing initial detection and data reduction at the source, the system can prioritize the transfer of relevant event information rather than entire audio recordings, thus optimizing resource usage and response times in bandwidth-constrained maritime environments.

In contrast, Cloud Mode leverages the computational resources of backend servers to execute more complex and resource-intensive processing tasks. These may include the application of deep learning architectures such as CNNs, ensemble methods, or other advanced algorithms capable of performing detailed classification, source separation, and multi-target detection. The availability of scalable compute power in the cloud allows for the training and execution of high-capacity models that can exploit large datasets and complex feature representations, improving the accuracy and robustness of detection and classification processes.

This dual-mode operational design provides significant adaptability, enabling the system to dynamically adjust its processing strategy according to the available infrastructure and the specific operational constraints of each mission. Whether operating autonomously in remote offshore locations or as part of an interconnected coastal monitoring network with high-speed data links, the architecture ensures that optimal detection and classification performance can be maintained without compromising efficiency or responsiveness.
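One way to picture the choice between the two modes is to compare the bitrate of uncompressed audio with the available uplink. The sketch below is illustrative only. The default sampling rate, bit depth, channel count, and headroom factor are assumptions, since the acquisition parameters are not specified in this section, and the function names are hypothetical.

```python
def raw_audio_bitrate_bps(sample_rate_hz, bit_depth, channels):
    """Bitrate of uncompressed PCM audio in bits per second."""
    return sample_rate_hz * bit_depth * channels


def choose_mode(uplink_bps, sample_rate_hz=48_000, bit_depth=16, channels=4, headroom=0.5):
    """Pick "cloud" when raw audio fits in a fraction of the uplink, else "edge".

    headroom is the share of the uplink the audio stream may occupy,
    leaving the remainder for metadata, retries, and other traffic.
    """
    needed = raw_audio_bitrate_bps(sample_rate_hz, bit_depth, channels)
    return "cloud" if needed <= uplink_bps * headroom else "edge"
```

Under these assumed parameters, four 16-bit channels at 48 kHz require 48,000 × 16 × 4 = 3,072,000 bit/s, so a 10 Mbit/s link selects Cloud Mode while a 1 Mbit/s link falls back to Edge Mode.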

2.5. Acoustic Detection Pipeline

A central component of the proposed system is the acoustic detection pipeline, which enables the identification and classification of marine vessels through the analysis of underwater acoustic signals. This pipeline integrates edge-based acquisition with cloud-based processing to deliver scalable, low-latency detection capabilities suitable for a wide range of maritime environments. It is designed to operate under varying connectivity conditions and to support both real-time inference and deferred batch processing. The following section details the end-to-end workflow, from signal acquisition by hydrophones to the visualization of detection results, highlighting the interaction between hardware, messaging infrastructure, storage services, and machine learning modules.

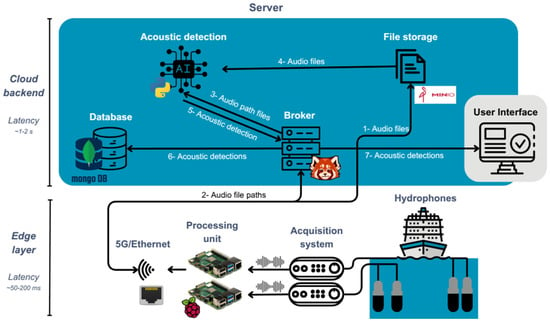

The acoustic detection pipeline is designed to enable the seamless acquisition, transmission, processing, and visualization of underwater acoustic data within a distributed and modular architecture. As shown in Figure 2, the system begins with the deployment of hydrophones in a marine environment, where they capture acoustic signals produced by both surface and submerged vessels. These analog signals are digitized by an acquisition system and relayed to a local processing unit (Raspberry Pi), which is responsible for packaging the data and ensuring communication with the server infrastructure via 5G or Ethernet connections. Once a segment of audio is recorded, the processing unit uploads the resulting .wav file to a MinIO-based cloud storage service (1). In parallel, a metadata message is dispatched to a Redpanda broker instance (2). This message includes information about the path to the audio file, the hydrophone identifier, and the acquisition timestamp. The broker plays a central role in decoupling acquisition from processing, enabling asynchronous communication and high system resilience.

Figure 2. Overview of the distributed architecture.
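The metadata message dispatched to the broker in step (2) carries the audio file path, the hydrophone identifier, and the acquisition timestamp. A minimal sketch of how such a message could be serialized is given below. The field names and the ISO 8601 UTC timestamp format are assumptions, not the schema used by the system.

```python
import json
from datetime import datetime, timezone


def build_metadata_message(object_path, hydrophone_id, acquired_at):
    """Serialize the acquisition metadata for one audio segment as JSON.

    object_path: location of the .wav file in object storage (hypothetical layout).
    hydrophone_id: identifier of the recording hydrophone.
    acquired_at: timezone-aware datetime of the acquisition.
    """
    if acquired_at.tzinfo is None:
        raise ValueError("acquired_at must be timezone-aware")
    return json.dumps({
        "audio_path": object_path,
        "hydrophone_id": hydrophone_id,
        "timestamp": acquired_at.astimezone(timezone.utc).isoformat(),
    })
```

The resulting string can be published with any Kafka-compatible client; because the message only references the file rather than embedding it, the broker traffic stays small regardless of the audio segment size.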

Then, the cloud-based acoustic detection module continuously listens to the broker and retrieves new audio file paths as they are published (3). Upon receiving a notification, it downloads the corresponding audio file from the storage layer (4) and initiates a sequence of signal processing and machine learning operations. Once downloaded, each audio segment undergoes a series of preprocessing steps aimed at extracting meaningful acoustic features that can reveal the presence and characteristics of different sources, such as vessel engines or propellers. These steps may include Short-Time Fourier Transform (STFT) calculations, Log-Mel spectrogram generation, and rule-based peak detection. After signal processing, CNNs or other algorithms may be applied to identify characteristic acoustic signatures associated with different types of vessels.
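The STFT and Log-Mel steps named above can be sketched with the standard library alone. The code below is a didactic sketch: it uses a naive DFT, a Hann window, and triangular filters on the common 2595·log10(1 + f/700) mel scale. The frame length, hop size, and number of mel bands are assumptions chosen to keep the example small, and a production pipeline would normally rely on an optimized FFT library instead.

```python
import cmath
import math


def stft_power(signal, frame_len=64, hop=32):
    """Power spectrogram: one list of frame_len // 2 + 1 bins per frame."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len) for n in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        x = [signal[start + n] * window[n] for n in range(frame_len)]
        spectrum = []
        for k in range(frame_len // 2 + 1):  # naive DFT, adequate for a demo
            acc = sum(x[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n in range(frame_len))
            spectrum.append(abs(acc) ** 2)
        frames.append(spectrum)
    return frames


def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels, frame_len, sample_rate):
    """Triangular filters with centres evenly spaced on the mel scale."""
    top = hz_to_mel(sample_rate / 2)
    hz_points = [mel_to_hz(i * top / (n_mels + 1)) for i in range(n_mels + 2)]
    bin_freqs = [k * sample_rate / frame_len for k in range(frame_len // 2 + 1)]
    bank = []
    for i in range(1, n_mels + 1):
        lo, mid, hi = hz_points[i - 1], hz_points[i], hz_points[i + 1]
        row = []
        for f in bin_freqs:
            if lo < f <= mid:
                row.append((f - lo) / (mid - lo))   # rising edge
            elif mid < f < hi:
                row.append((hi - f) / (hi - mid))   # falling edge
            else:
                row.append(0.0)
        bank.append(row)
    return bank


def log_mel(signal, sample_rate, n_mels=8, frame_len=64, hop=32):
    """Log-Mel spectrogram in dB: one list of n_mels values per frame."""
    bank = mel_filterbank(n_mels, frame_len, sample_rate)
    return [
        [10 * math.log10(sum(w * p for w, p in zip(row, spectrum)) + 1e-10)
         for row in bank]
        for spectrum in stft_power(signal, frame_len, hop)
    ]
```

Applied to a pure tone, the band whose triangular filter covers the tone frequency carries the most energy, which is the property a rule-based peak detector or a CNN operating on the spectrogram would exploit.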

To implement vessel classification, we adopted a ResNet18 convolutional neural network architecture trained on the publicly available Ocean Networks Canada (ONC) dataset, formally known as VTUAD (Vessel Type Underwater Acoustic Data) [34]. This dataset was chosen due to its strong alignment with the nature of our use case: it provides underwater acoustic recordings annotated with real-time AIS (Automatic Identification System) data, allowing for supervised training with vessel-type labels. VTUAD is structured into five classes—cargo, tanker, tug, passenger ships, and background noise—and contains a total of 108,588 labeled one-second audio clips. These were extracted from continuous hydrophone recordings and are designed to capture discrete acoustic events such as engine cycles or propeller blade signatures. A key strength of VTUAD lies in its stratified structure based on vessel distance from the hydrophone (2–3 km, 3–4 km, and 4–6 km), which enriches the dataset with spatial variation and facilitates model generalization across operating conditions. In addition, each sample is paired with environmental metadata such as temperature, conductivity, salinity, pressure, and sound speed, recorded by CTD sensors, enabling future multi-modal analysis.

Before training, all audio samples were converted into Mel spectrograms, a widely used time-frequency representation that balances resolution and robustness and has demonstrated high performance in underwater acoustic target recognition tasks [20,35]. We merged all three distance-based subsets into a unified dataset, preserving the original train-validation-test splits to ensure consistency. The final distribution includes 94,300 samples for training, 9517 for validation, and 4771 for testing, with a relatively balanced class representation. The model was trained under a supervised learning setup using an 80/20 split for training and validation, and it achieved a validation accuracy of 0.95. A detailed analysis of the validation results shows strong overall performance, with precision, recall, and F1-scores of 0.91, 0.95, and 0.93, respectively. The background, cargo, and tug classes achieved F1-scores above 0.94, while the passengership and tanker classes, though slightly less represented in the dataset, also demonstrated high recall (0.96 and 0.98, respectively). The overall false positive rate (FPR) across all classes was approximately 5.2%, with the highest FPR observed in the passengership class at around 14%. Most false positives were associated with the passengership and tug categories, whereas false negatives were more frequent in the cargo and background classes. These results confirm the model’s capacity to generalize across diverse vessel types and support the suitability of VTUAD for robust deep learning applications in maritime acoustic classification.
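For reference, the per-class precision, recall, F1-score, and false positive rate reported above are all derived from a confusion matrix in a one-vs-rest fashion. The sketch below shows the standard definitions. The function name is hypothetical, and the numbers in the usage note are toy values, not the paper's validation results.

```python
def per_class_metrics(confusion, labels):
    """Compute one-vs-rest metrics from a square confusion matrix.

    confusion[i][j] is the number of samples of true class i predicted as class j.
    Returns a dict mapping each label to precision, recall, f1, and fpr.
    """
    total = sum(sum(row) for row in confusion)
    results = {}
    for i, label in enumerate(labels):
        tp = confusion[i][i]
        fn = sum(confusion[i]) - tp                     # true class i, predicted otherwise
        fp = sum(row[i] for row in confusion) - tp      # predicted i, true class otherwise
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        fpr = fp / (fp + tn) if fp + tn else 0.0
        results[label] = {"precision": precision, "recall": recall, "f1": f1, "fpr": fpr}
    return results
```

With the toy matrix `[[8, 2], [1, 9]]` and labels `["a", "b"]`, class `a` has a recall of 0.8 and an FPR of 0.1, while class `b` has a recall of 0.9 and an FPR of 0.2.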

Once the analysis of a segment is completed, the detection module generates a standardized JSON-formatted message (see Table 2) that encapsulates the results in a structured and interoperable manner. This message includes essential sensor metadata such as timestamps, channel identifiers, and sensor type descriptors, ensuring traceability and contextualization of the detection event (see Table 3). The core detection outcome—comprising the presence or absence of a target, along with a confidence score—is also reported, providing downstream processing modules or operators with quantitative measures of detection reliability. When classification models are applied, additional fields specifying the estimated vessel type or class are included, enriching the semantic content of the detection report. Furthermore, if geolocation algorithms are executed—leveraging data from synchronized hydrophone arrays—the message can also convey the estimated spatial position of the detected source.

Table 2.

Example of the standardized JSON-formatted message.

Table 3.

Categories included in the standardized JSON-formatted message that encapsulates the detection.
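As a rough illustration of how a detection message of this kind might be assembled, the sketch below builds a JSON string with sensor metadata, the detection outcome, and optional classification and position fields. All field names and the function `build_detection_message` are hypothetical and are not the schema of Table 2 or Table 3; the only grounded choice is the ISO 8601 timestamp format [36].

```python
import json
from datetime import datetime, timezone
from typing import Optional, Tuple


def build_detection_message(
    sensor_id: str,
    channel: int,
    sensor_type: str,
    detected: bool,
    confidence: float,
    vessel_class: Optional[str] = None,
    position: Optional[Tuple[float, float]] = None,
    timestamp: Optional[datetime] = None,
) -> str:
    """Serialize one detection result; field names are illustrative assumptions."""
    ts = timestamp or datetime.now(timezone.utc)
    message = {
        "timestamp": ts.isoformat(),  # ISO 8601 string
        "sensor": {"id": sensor_id, "channel": channel, "type": sensor_type},
        "detection": {"target_present": detected, "confidence": round(confidence, 3)},
    }
    # Optional blocks are only added when the corresponding stage was executed.
    if vessel_class is not None:
        message["classification"] = {"vessel_class": vessel_class}
    if position is not None:
        message["position"] = {"lat": position[0], "lon": position[1]}
    return json.dumps(message)


example = build_detection_message(
    "hyd-01", 1, "AS-1", True, 0.93,
    vessel_class="tug",
    timestamp=datetime(2025, 5, 16, 12, 0, 0, tzinfo=timezone.utc),
)
```

Keeping the classification and position blocks optional mirrors the description above, where those fields appear only when the corresponding models or geolocation algorithms have been run.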

This message is returned to the broker (5) and stored in a MongoDB database (6), which serves as the structured repository for all detection metadata. The database schema supports real-time queries and efficient retrieval of acoustic event records, including sensor characteristics, environmental context, and estimated source properties. Finally, the detection results are made accessible to users through a web-based control panel (7), which dynamically visualizes incoming data and provides interfaces for temporal filtering, system monitoring, and acoustic event exploration.

This architecture supports high-frequency data acquisition and real-time inference while maintaining robustness against network variability and component failure. The modular design—allowing asynchronous message passing and cloud-native services—ensures adaptability to a broad range of maritime operational scenarios, from centralized coastal deployments to autonomous offshore nodes.

3. Results

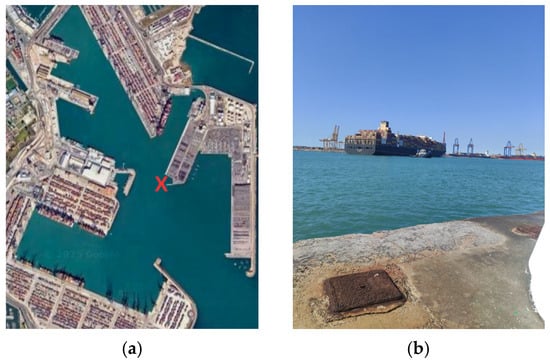

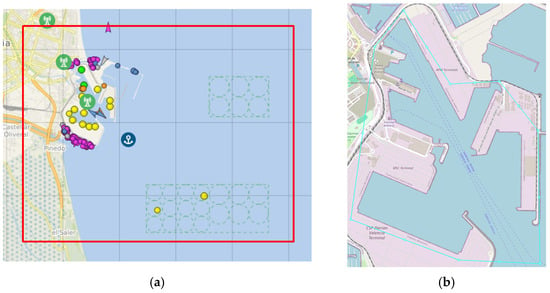

The cloud-continuum acoustic sensing system was deployed in a semi-structured operational scenario at the Port of Valencia (see Figure 3) to evaluate its functional performance, data handling capabilities, and multimodal fusion effectiveness. This deployment served as a realistic validation test for the proposed architecture under conditions of variable noise, vessel density, and network quality. The primary goal was to demonstrate the system’s ability to autonomously acquire, transmit, and process high-resolution underwater acoustic data while correlating detections with AIS-based vessel information in near real time. The following results reflect the end-to-end operation of the platform—from edge-based hydrophone data acquisition to centralized ML-driven classification, metadata fusion, and visualization—offering a comprehensive evaluation of its scalability, modularity, and detection accuracy in real maritime environments. Special emphasis is placed on the quality and structure of the dataset generated, which integrates raw audio, detection metadata, and AIS-enriched annotations for further research and model training.

Figure 3.

Area of the use case for the Valencia Port tests. (a) Area where the acoustic kit was installed, marked with a red “X”, and (b) container ship entering the port in front of the installation point.

3.1. Field Deployment in Valencia Port

The semi-controlled deployment at the Port of Valencia included a multiple-hydrophone acquisition unit located near active dock areas (red “X” in Figure 3a). This location offers a direct line of sight to the main navigation channel used by large container vessels (see Figure 3b) and provides a favorable acoustic vantage point for capturing high-quality underwater noise signatures from both incoming and outgoing maritime traffic. Its proximity to high-density shipping lanes yields a diverse dataset of vessel types and operational conditions, and the site allows a secure installation; both are essential for robust detection and classification tasks.

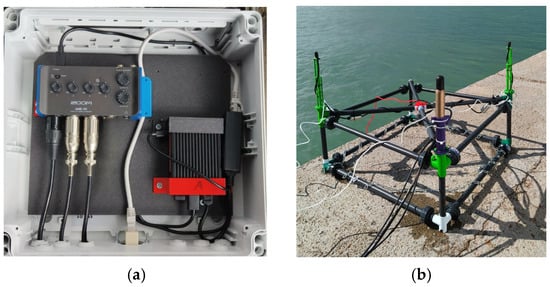

This edge node consisted of a Raspberry Pi 4B connected to one ASF-1 and two AS-1 hydrophones through a Zoom AMS-44 interface (see Figure 4a). The hydrophones were mounted on a submerged structure, placed on a concrete surface 2 m below sea level, to ensure robust underwater coupling (see Figure 4b). The node was connected via Ethernet, enabling live streaming with a fallback mechanism to prevent upload delays during network degradation.

Figure 4.

Final hardware used in Valencia Port tests. (a) Hydrophone connection to the amplifier and (b) hydrophone structure for robust coupling.

3.2. Dataset Generation via Acoustic-AIS Integration in the Platform

To facilitate the creation of a multimodal dataset for the characterization and identification of maritime traffic, a custom data acquisition platform was developed. This system integrates underwater acoustic monitoring with AIS data to provide a rich, timestamped dataset suitable for advanced signal processing and vessel classification tasks. The platform is composed of four interconnected components (see Figure 5): (1) AIS data retrieval via an external PC, (2) a centralized server managing data flow and storage, (3) a distributed acquisition unit with underwater hydrophones, and (4) a processing unit handling audio preprocessing and communication with the server.

Figure 5.

Use case architecture and pipeline.

The process begins with the integration of AIS data. Real-time vessel traffic information for the monitored area, which includes Valencia Port and its surroundings (see Figure 6a), is retrieved from a local PC using the VesselFinder API. The collected messages contain timestamped vessel positions, speeds, headings, and identifiers such as MMSI and vessel type. These AIS messages are then forwarded to the centralized server, where they are stored in the MinIO service. To optimize the dataset records, the AIS data is filtered so that audio files are captured only when there is activity in the port premises. First, vessels whose coordinates fall outside the commercial port premises (see Figure 6b) are filtered out. Second, because moored vessels in this area continuously send AIS data, a speed threshold of 1 knot is applied. If a vessel is located inside the port coordinates and its speed is higher than 1 knot, it is considered to be performing an operation that produces acoustic activity worth recording, and a message notifying this is sent to the Redpanda broker.

Figure 6.

AIS API area monitored. (a) Inside the red square, the original area receiving information from the AIS API. This includes the area of the port of Valencia, where most vessels can be seen as colored dots according to their category, and the surroundings where vessels wait for their turn to enter the port, and (b) the commercial port area, showing the main infrastructure area in purple and water in blue, surrounded by a blue polygon that delimits the area used to filter activity of interest.
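The two-stage AIS filter (position inside the commercial port polygon, speed above 1 knot) can be sketched as follows. This is a minimal illustration under stated assumptions: the ray-casting test is one common way to implement a point-in-polygon check and is not confirmed as the method used in the platform, and `PORT_POLYGON` is a hypothetical rectangle rather than the polygon of Figure 6b.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (longitude, latitude) in decimal degrees

SPEED_THRESHOLD_KN = 1.0  # speed threshold stated in the text

# Hypothetical rectangle used only for illustration.
PORT_POLYGON: List[Point] = [
    (-0.34, 39.42),
    (-0.29, 39.42),
    (-0.29, 39.46),
    (-0.34, 39.46),
]


def point_in_polygon(pt: Point, polygon: List[Point]) -> bool:
    """Ray-casting test: count polygon edges crossed by a ray going east from pt."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through pt can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def is_active_in_port(lon: float, lat: float, speed_kn: float,
                      polygon: List[Point] = PORT_POLYGON) -> bool:
    """True when a vessel is moving faster than the threshold inside the polygon."""
    return speed_kn > SPEED_THRESHOLD_KN and point_in_polygon((lon, lat), polygon)


moving_inside = is_active_in_port(-0.31, 39.44, 5.2)
moored_inside = is_active_in_port(-0.31, 39.44, 0.3)
moving_outside = is_active_in_port(-0.20, 39.44, 8.0)
```

Checking the speed first keeps the cheaper comparison ahead of the geometric test, which may matter when most incoming entries belong to moored vessels.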

On the other side, the processing unit (Raspberry Pi) is subscribed to the same broker topic. Once the Redpanda broker receives the notification of vessel activity within the defined port area, it triggers a recording session by communicating with the corresponding underwater acoustic acquisition unit. The 5-minute window refers to the monitoring period during which vessel activity is evaluated using AIS data: if no further AIS message indicating vessel movement is received during this window, the recording session is automatically stopped. This conditional activation minimizes unnecessary data capture and reduces energy and storage consumption, making the system particularly suitable for autonomous or remote deployments in port environments. The approach covers both active vessel passages and background acoustic conditions when the port is quiet. Each AIS entry is timestamped and later matched to the closest corresponding 3 s audio segment. Although the current analysis uses a single label per segment, the system supports assigning multiple labels per file to account for overlapping vessel activities, allowing further refinement in future analysis.
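The start and stop behavior of a recording session can be sketched as a small state holder driven by activity notifications and a periodic check. The class `RecordingSession` and its method names are hypothetical; only the 5-minute inactivity window comes from the text, and timestamps are plain seconds for simplicity.

```python
from dataclasses import dataclass
from typing import Optional

INACTIVITY_WINDOW_S = 5 * 60  # 5-minute window stated in the text


@dataclass
class RecordingSession:
    """Tracks whether recording should be active, given AIS activity notifications."""
    last_activity: Optional[float] = None
    recording: bool = False

    def on_activity(self, now: float) -> None:
        # A new activity notification starts recording or extends the session.
        self.last_activity = now
        self.recording = True

    def tick(self, now: float) -> bool:
        # Called periodically; stops recording once the window has elapsed.
        if self.recording and now - self.last_activity >= INACTIVITY_WINDOW_S:
            self.recording = False
        return self.recording


session = RecordingSession()
idle_before = session.tick(0.0)       # no activity yet
session.on_activity(10.0)
active_early = session.tick(200.0)    # 190 s since last activity
session.on_activity(250.0)            # new notification extends the session
active_extended = session.tick(500.0) # 250 s since last activity
stopped = session.tick(550.0)         # 300 s since last activity
```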

Each unit is designed to operate autonomously and capture underwater sound at a sampling frequency of 32 kHz and 24-bit resolution, ensuring the fidelity required for detailed acoustic analysis. Recordings are segmented into 3 s intervals and stored in uncompressed PCM (.wav) format, which balances high temporal resolution and broad frequency coverage with reasonable data sizes of approximately 1.5 MB per audio file. This configuration enables the reliable capture of both tonal features, such as propeller blade rates, and broadband noise components typically associated with vessel propulsion and maneuvering.
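The size of an uncompressed PCM segment follows directly from the sampling rate, duration, channel count, and bytes stored per sample. The sketch below works through that arithmetic for a 3 s segment at 32 kHz. The channel counts and the 4-byte container are assumptions made for illustration, since the text does not state how many channels each file holds or how the 24-bit samples are packed; the WAV header is ignored.

```python
def wav_payload_bytes(sample_rate_hz: int, duration_s: int,
                      channels: int, bytes_per_sample: int) -> int:
    """Size of the raw PCM payload (header excluded)."""
    return sample_rate_hz * duration_s * channels * bytes_per_sample


# 24-bit samples packed in 3 bytes, single channel.
packed_mono = wav_payload_bytes(32_000, 3, channels=1, bytes_per_sample=3)

# Three hydrophone channels, 3-byte samples (assumed layout).
packed_three_channels = wav_payload_bytes(32_000, 3, channels=3, bytes_per_sample=3)

# Four channels with samples padded to 4 bytes (assumed layout).
padded_four_channels = wav_payload_bytes(32_000, 3, channels=4, bytes_per_sample=4)
```

Under these assumptions the payload ranges from 288,000 bytes for a single packed channel to 1,536,000 bytes for four padded channels, so the reported figure of about 1.5 MB is plausible for a multi-channel file.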

The acquisition units are equipped with a local processing device, usually a compact single-board computer such as a Raspberry Pi, responsible for managing the recording sessions. Upon receiving the activation signal from the broker, the processing unit initiates the capture, buffers the audio locally, and performs file structuring and timestamping. It then transmits the audio files to the centralized server via Ethernet connectivity. Simultaneously, it maintains communication with the Redpanda broker to report that a new audio file has been uploaded and to monitor port activity from AIS data. This modular setup allows each unit to operate independently while remaining tightly integrated with the central data management architecture.

To ensure consistency and enable more advanced analytical operations, such as source localization or beamforming, all hydrophone units are temporally synchronized. This synchronization is achieved internally within the data acquisition system. All hydrophones are connected to the same multi-channel recording device, which captures signals from different hydrophones as separate channels in a single audio file. This configuration ensures that their recordings share a common time base, preserving relative timing across channels with high precision. Additionally, identical cable lengths were used for all hydrophones to minimize discrepancies in signal transmission time from the sensor to the acquisition unit. Accurate timestamping across distributed sensors allows the system to reconstruct coherent acoustic scenes, even from spatially separated points. This temporal alignment is essential not only for characterizing vessel acoustic signatures with high precision but also for enabling future functionalities such as multi-node tracking, direction-of-arrival estimation, and adaptive noise modeling. Together, this workflow provides a robust and extensible framework for generating a high-resolution, context-aware dataset that supports both detection and classification tasks in real-world maritime environments.
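A shared time base across channels is what makes inter-channel delay estimation possible, which in turn underlies direction-of-arrival estimation. As a hedged illustration, the sketch below estimates the delay between two channels by brute-force cross-correlation on a toy signal. The function `best_lag` and the synthetic signals are assumptions; this is not the localization method of the platform, and a real implementation would typically use an FFT-based correlation on much longer windows.

```python
from typing import Sequence

SAMPLE_RATE_HZ = 32_000  # sampling frequency stated in the text


def best_lag(ref: Sequence[float], other: Sequence[float], max_lag: int) -> int:
    """Lag (in samples) of `other` relative to `ref` that maximizes the cross-correlation."""
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, value in enumerate(ref):
            j = i + lag
            if 0 <= j < len(other):
                score += value * other[j]
        if score > best_score:
            best, best_score = lag, score
    return best


# Toy two-sample pulse, arriving 4 samples later on the second channel.
ref = [0.0] * 64
ref[10], ref[11] = 1.0, -0.5
other = [0.0] * 64
other[14], other[15] = 1.0, -0.5

lag = best_lag(ref, other, max_lag=8)
delay_s = lag / SAMPLE_RATE_HZ
```

At 32 kHz one sample corresponds to 31.25 µs, which gives a sense of why sample-accurate channel alignment matters for this kind of estimate.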

This end-to-end workflow—from AIS ingestion and message brokering to acoustic capture and synchronized transmission—forms the basis of the robust and extensible data acquisition platform. It supports the continuous creation of a multimodal dataset that links acoustic events to identifiable vessel movements, enabling comprehensive analyses of maritime activity in busy port environments.

3.3. Dataset Summary and Analysis

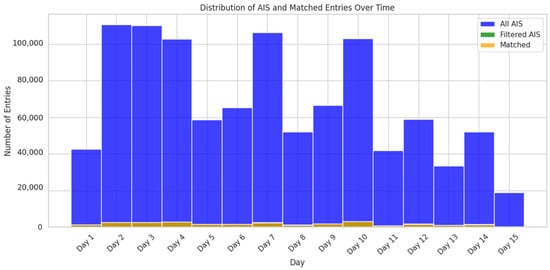

Over a 15-day deployment at the Port of Valencia, from 16 May 2025 until the end of the month, the cloud-continuum acoustic monitoring system generated a comprehensive multimodal dataset that combines underwater audio recordings with vessel metadata derived from Automatic Identification System (AIS) signals. This section presents a detailed overview of the dataset and analyzes its composition, statistical characteristics, and operational insights.

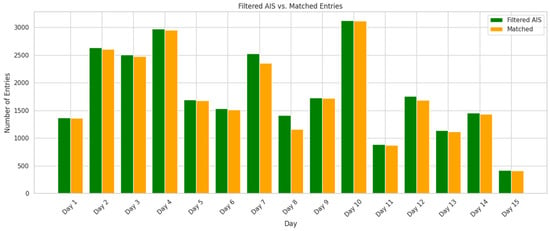

The platform initially recorded 1,374,324 raw AIS entries over the campaign period and 273,185 raw audio records, which comprised more than 410 GB of data. After applying geographic and behavioral filters to isolate active vessels within the port area (specifically, those inside the designated commercial zone and moving at speeds exceeding 1 knot), only 35,034 entries were retained, as most of the data received belonged to moored vessels in the surrounding leisure port. This filtering reduced noise from static or irrelevant transmissions, focusing the analysis on operationally significant vessel passages. Out of the filtered AIS entries, 26,467 were successfully matched with acoustic events. This yields a match rate of approximately 75.5% (26,467 of 35,034), suggesting strong synchronization between AIS-derived activity and the acoustic detection pipeline, while also highlighting the robustness of the system in aligning asynchronous, multimodal inputs.
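Matching each filtered AIS entry to its closest audio segment can be sketched with a binary search over sorted segment start times. The function `nearest_segment`, the tolerance value, and the toy timestamps are assumptions made for illustration; the text only states that each AIS entry is matched to the closest corresponding 3 s segment.

```python
from bisect import bisect_left
from typing import List, Optional

SEGMENT_S = 3.0  # segment length stated in the text


def nearest_segment(segment_starts: List[float], t: float,
                    tolerance_s: float = SEGMENT_S) -> Optional[int]:
    """Index of the segment whose start time is closest to t, or None if too far.

    `segment_starts` must be sorted in ascending order; `tolerance_s` is an assumed cutoff.
    """
    if not segment_starts:
        return None
    pos = bisect_left(segment_starts, t)
    candidates = [i for i in (pos - 1, pos) if 0 <= i < len(segment_starts)]
    best = min(candidates, key=lambda i: abs(segment_starts[i] - t))
    return best if abs(segment_starts[best] - t) <= tolerance_s else None


def match_rate(matched: int, filtered: int) -> float:
    """Fraction of filtered AIS entries that found an audio segment."""
    return matched / filtered


starts = [0.0, 3.0, 6.0, 9.0]   # toy segment start times, in seconds
ais_times = [1.0, 6.4, 30.0]    # toy AIS timestamps, in seconds
matches = [nearest_segment(starts, t) for t in ais_times]
rate = match_rate(26_467, 35_034)  # counts reported in the text
```

Entries that fall outside the tolerance are left unmatched, which is one plausible reason a share of filtered AIS entries has no corresponding audio.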

A summary of these recordings is displayed in Figure 7, which shows the number of AIS entries, filtered entries, and matched detections across the 15-day window. Peak recording activity occurred around days 3–4, day 7, and day 10, each showing over 100,000 AIS entries and 2500–3000 matched acoustic detections (zoomed in Figure 8). Conversely, days 12–15 show a sharp drop in both AIS and matched entries, potentially indicating reduced vessel activity due to port schedules, weather, or operational constraints. This variability illustrates the system’s ability to follow maritime traffic patterns, maintaining high throughput during busy periods and conserving resources when activity subsides.

Figure 7.

Distribution of AIS-filtered and matched audio entries over the 15 days of recording.

Figure 8.

Distribution of filtered and matched audio entries over the 15 days of recording.

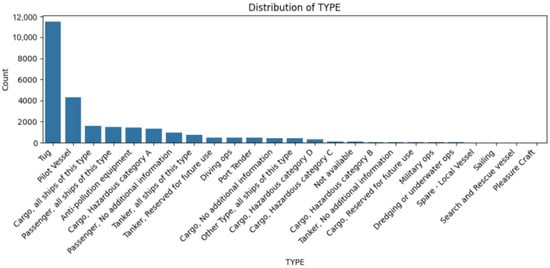

Regarding the vessel type distribution of the final dataset (see Figure 9), i.e., audio files matched with filtered AIS entries, the dataset is clearly dominated by tugboats, which account for nearly 12,000 entries, more than double the next most frequent category. Pilot vessels follow with over 4000 detections, reflecting the operational focus of the port, where support and maneuvering vessels are highly active and acoustically prominent. Additional vessel types, such as cargo ships, passenger vessels, and anti-pollution equipment, also contribute significantly to the dataset. Their presence ensures a diverse range of acoustic signatures, from high-frequency tonal patterns to low-frequency propulsion noise. A long tail of less frequent categories (such as military ops, sailing, search and rescue, and pleasure craft) enhances the dataset’s heterogeneity. This diversity is particularly valuable for training generalized machine learning models capable of distinguishing between vessel classes in real-world, noisy maritime environments. The dataset’s operational skew toward port-specific and support vessels makes it especially representative of busy, service-intensive port scenarios, which suits smart harbor applications and traffic classification systems.

Figure 9.

Distribution of the types of vessels in matched audio entries.

From the total collected acoustic data, 26,467 high-fidelity audio segments were successfully matched with AIS-verified vessel movements. Each segment is 3 s long and recorded at 32 kHz/24-bit resolution. This yields ~22 h of matched audio, representing a focused, high-quality subset of acoustically confirmed maritime events. This is an average of ~1765 matched segments per day over the 15-day period, reflecting the system’s effectiveness in isolating relevant sound events from background noise. Importantly, these matched recordings were obtained using an event-driven acquisition strategy. Rather than recording continuously, the system was triggered by real-time AIS filtering (based on vessel position and motion), ensuring that only contextually relevant acoustic data was captured.
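The duration and daily average quoted above follow from the segment count, the segment length, and the campaign length. A short worked check, using only figures from the text:

```python
MATCHED_SEGMENTS = 26_467   # matched audio segments reported in the text
SEGMENT_S = 3               # seconds per segment
DEPLOYMENT_DAYS = 15        # campaign length

total_seconds = MATCHED_SEGMENTS * SEGMENT_S
total_hours = total_seconds / 3600
segments_per_day = MATCHED_SEGMENTS / DEPLOYMENT_DAYS
```

This gives 79,401 s of matched audio, i.e., roughly 22 h, and an average of roughly 1765 segments per day.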

This approach not only minimizes storage and processing demands but also enhances the dataset’s signal-to-noise ratio, making it well suited for supervised training, vessel signature modeling, and classification system validation. However, when applying the ResNet model trained on the ONC dataset to the recordings collected at the Port of Valencia, a significant drop in classification performance was observed. While the ONC corpus provides clean and relatively homogeneous samples, the real port environment introduces domain-specific acoustic variability, including background noise from dockside operations, multipath reflections, and a broader mix of vessel types. These factors hinder model generalization and highlight a domain shift between public datasets and field conditions. As such, improving model robustness through port-specific dataset augmentation, domain adaptation techniques, and resilient training strategies constitutes a key priority for future research. Additionally, the alignment of timestamped acoustic events with AIS attributes (e.g., MMSI, speed, heading) facilitates future extensions in trajectory prediction, beamforming-based localization, and context-aware anomaly detection.

3.4. System Performance Evaluation: Scalability and Resilience

The field deployment at the Port of Valencia enabled an initial evaluation of the system’s scalability and resilience under real maritime operating conditions. Over the 15-day period, the platform processed more than 273,000 audio segments and over 50,000 AIS entries, with peak daily loads of up to 3000 matched detections.

The cloud backend, orchestrated via Kubernetes, adapted to varying traffic by dynamically scaling the number of processing containers from a baseline of 3 up to 6 replicas during peak load conditions. This elastic scaling maintained a stable end-to-end latency below 2 s for approximately 90% of detection events, based on broker-to-database timing logs. Message routing through Redpanda remained responsive, with low queuing delays even during high-frequency acquisition windows.
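The scaling behavior and the latency figure can be illustrated with two small helpers. The first applies the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, clamped to the 3 to 6 replica range mentioned above; whether the deployment actually used this autoscaler, and which metric drove it, is not stated in the text and is assumed here. The second computes the share of events below a latency threshold from a list of timings; the sample values are invented.

```python
import math
from typing import Sequence

MIN_REPLICAS = 3  # baseline stated in the text
MAX_REPLICAS = 6  # peak stated in the text


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float) -> int:
    """HPA-style rule: ceil(current * currentMetric / targetMetric), then clamp."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(MIN_REPLICAS, min(MAX_REPLICAS, raw))


def fraction_below(latencies_s: Sequence[float], threshold_s: float = 2.0) -> float:
    """Share of events whose end-to-end latency is under the threshold."""
    return sum(1 for x in latencies_s if x < threshold_s) / len(latencies_s)


# Hypothetical metric values (e.g., average utilization in percent against a 60% target).
scale_up = desired_replicas(3, current_metric=90, target_metric=60)
capped = desired_replicas(3, current_metric=200, target_metric=60)
floor = desired_replicas(6, current_metric=10, target_metric=60)

# Invented broker-to-database timings, in seconds.
share_under_2s = fraction_below([0.5, 1.2, 1.9, 2.5, 0.8])
```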

In terms of resilience, the edge layer effectively handled network interruptions through local buffering and automatic retransmission mechanisms. During three documented connectivity interruptions, each lasting between 30 s and 2 min, all data and detection messages were successfully recovered and transmitted without loss. These recovery events confirm the system’s robustness in bandwidth-constrained and operationally dynamic port environments.
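Local buffering with automatic retransmission is often implemented as a store-and-forward queue: items stay queued until the uplink confirms delivery, and they are sent in their original order once connectivity returns. The sketch below is a minimal in-memory version under that assumption; the class `StoreAndForward` and the `fake_send` callback are hypothetical, and a real edge node would presumably persist the queue to disk.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Queue items locally and deliver them in order once the link accepts them."""

    def __init__(self, send: Callable[[bytes], bool]) -> None:
        self._send = send                 # returns True when delivery succeeded
        self._pending: Deque[bytes] = deque()

    def submit(self, item: bytes) -> None:
        self._pending.append(item)
        self.flush()

    def flush(self) -> int:
        """Try to deliver queued items; stop at the first failure. Returns items sent."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break                     # link still down: keep the item queued
            self._pending.popleft()
            sent += 1
        return sent

    @property
    def backlog(self) -> int:
        return len(self._pending)


# Simulated link that can be toggled up and down.
delivered: List[bytes] = []
link = {"up": False}


def fake_send(item: bytes) -> bool:
    if link["up"]:
        delivered.append(item)
    return link["up"]


buffer = StoreAndForward(fake_send)
buffer.submit(b"segment-1")
buffer.submit(b"segment-2")          # link down: both items remain queued
backlog_during_outage = buffer.backlog
link["up"] = True
buffer.flush()                       # link restored: items are delivered in order
```

An item is removed from the queue only after the send callback reports success, which is what prevents loss during an interruption.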

3.5. Comparative Discussion with Alternative Architectures

To contextualize the advantages of the proposed system, it is useful to compare it conceptually with conventional architectural models commonly used in underwater acoustic monitoring. Compared to traditional edge-only systems, which perform all signal processing locally, our hybrid approach provides greater flexibility and scalability. Edge-only designs are typically constrained by limited processing power and storage, making it difficult to apply complex machine learning algorithms or manage large datasets over time. In contrast, the SMAUG architecture enables lightweight processing at the edge for low-latency responses while delegating high-complexity analytics and long-term storage to the cloud.

Conversely, centralized server-based systems can offer powerful processing capabilities but are less resilient in low-connectivity environments and may suffer from high transmission latency or data loss. Our architecture mitigates these issues by employing local buffering, event-driven acquisition, and adaptive deployment modes that ensure robust operation even with intermittent network access. Overall, the system achieves a balanced trade-off between autonomy, scalability, and responsiveness, adapting to the operational constraints of real maritime environments more effectively.

4. Conclusions

This paper has presented a comprehensive cloud-based architecture for underwater acoustic sensing that successfully addresses the computational and scalability needs for hydrophone systems. The proposed multipurpose continuum-based architecture demonstrates significant advances in real-time vessel detection and monitoring through the integration of edge computing, distributed cloud processing, and advanced machine learning techniques.

The system’s modular design, comprising edge data acquisition nodes, broker and communication layers, and scalable cloud backend services, has proven effective in handling high-volume acoustic data streams while maintaining low processing latencies (below 2 s for approximately 90% of detection events). The deployment of heterogeneous hydrophone configurations (ASF-1 MKII and AS-1) within the architecture provides a balance between detection sensitivity and cost-effectiveness, enabling coverage across diverse maritime scenarios from controlled harbor environments to open water conditions.

The successful field deployment at the Port of Valencia validates the practical applicability of the proposed architecture. Over the 2-week campaign, the system autonomously processed more than 273,000 high-fidelity acoustic segments and 53,000 AIS records, demonstrating robust operational performance under real-world maritime conditions. The event-driven data acquisition strategy, triggered by AIS vessel activity detection, proved highly effective in optimizing resource utilization while maintaining comprehensive monitoring capabilities. This approach resulted in a structured, multimodal dataset that integrates raw acoustic waveforms, detection metadata, and AIS-derived annotations, providing valuable resources for future machine learning model development and validation.

The integration of containerized microservices architecture with message-driven pipelines ensures system resilience and adaptability to varying operational requirements. The dual-mode deployment capability—supporting both edge-based real-time processing and cloud-based complex analytics—provides exceptional flexibility for diverse maritime monitoring scenarios. The system’s ability to dynamically scale computational resources based on detection events while maintaining cost-effective operation during low-activity periods represents a significant advancement over traditional fixed-capacity systems.

The correlation of acoustic detections with AIS data streams demonstrates the effectiveness of multimodal fusion approaches in enhancing detection accuracy and providing contextual awareness in busy maritime environments. This integration capability positions the system as a valuable tool for various applications, including environmental monitoring, maritime security, vessel traffic management, and marine ecosystem protection.

Future work will focus on expanding the machine learning pipeline to incorporate more sophisticated deep learning architectures, including transformer-based models for temporal pattern recognition and federated learning approaches for distributed model training across multiple deployment sites. Additionally, the integration of advanced geospatial processing capabilities for multi-array triangulation and the development of predictive analytics for vessel behavior modeling represent promising directions for system enhancement.

Author Contributions

Conceptualization, A.G.; methodology, F.P.C., A.F.G. and Á.G.; software, F.P.C., A.F.G. and V.R.B.; validation, F.P.C. and A.F.G.; formal analysis, A.B.-H.; investigation, F.P.C., A.F.G., A.B.-H. and A.G.; resources, Á.G.; data curation, A.F.G.; writing – original draft preparation, A.G., A.F.G., F.P.C. and Á.G.; writing – review and editing, A.G., A.F.G., F.P.C. and Á.G.; visualization, F.P.C. and A.F.G.; supervision, F.P.C. and A.B.-H.; project administration, Á.G.; funding acquisition, Á.G. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been partially supported by the European Commission within the context of the project SMAUG, funded under EU Horizon Europe Grant Agreement 101121129. This work has also been supported by Grant PID2023-146540OB-C42 funded by MCIN/AEI/10.13039/501100011033.

Data Availability Statement

Data available on request due to security restrictions.

Acknowledgments

The authors acknowledge the support of Fundación Valencia Port for their help with permits, installations, and logistics for the trials in Valencia Port.

Conflicts of Interest

Authors Francisco Pérez Carrasco and Alberto García were employed by the company FAV Innovation and Technologies. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Notes

1. https://ambient.de/en/products/asf-1-mkii-hydrophone-mit-48v-phantomspeisung (accessed on 27 July 2025)

2. https://www.aquarianaudio.com/as-1-hydrophone.html (accessed on 27 July 2025)

References

- Climent, S.; Sanchez, A.; Capella, J.V.; Meratnia, N.; Serrano, J.J. Underwater Acoustic Wireless Sensor Networks: Advances and Future Trends in Physical, MAC and Routing Layers. Sensors 2014, 14, 795–833. [Google Scholar] [CrossRef] [PubMed]

- Hildebrand, J.; Wiggins, S.; Baumann-Pickering, S.; Frasier, K.; Roch, M.A. The past, present and future of underwater passive acoustic monitoring. J. Acoust. Soc. Am. 2024, 155 (Suppl. S3), A96. [Google Scholar] [CrossRef]

- Sun, Z.; Wang, Y.; Liu, W. End-to-end underwater acoustic transmission loss prediction with adaptive multi-scale dilated network. J. Acoust. Soc. Am. 2025, 157, 382–395. [Google Scholar] [CrossRef]

- Wu, S.; Huang, J.; Gu, H.; Pang, Y.; Liu, W.; Hong, M.; Chen, W.; Qiu, J.; Zhu, J.; Zhang, T.; et al. Ultra-fine cable fiber optic hydrophone array for acoustic detection. In Proceedings of the Volume 12792, Eighteenth National Conference on Laser Technology and Optoelectronics, 127920K, Shanghai, China, 16 October 2023. [Google Scholar] [CrossRef]

- Jiang, W.; Tong, F. Exploiting dynamic sparsity for time reversal underwater acoustic communication under rapidly time varying channels. Appl. Acoust. 2021, 172, 107648. [Google Scholar] [CrossRef]

- Wang, L.; Yang, Y.; Liu, X.; Chen, P. Distributed signal detection in underwater multi-array systems with partial spatial coherence. In Proceedings of the OCEANS 2017, Aberdeen, UK, 19–22 June 2017; pp. 1–4. [Google Scholar] [CrossRef]

- Zhu, C.; Seri, S.G.; Mohebbi-Kalkhoran, H.; Ratilal, P. Long-Range Automatic Detection, Acoustic Signature Characterization and Bearing-Time Estimation of Multiple Ships with Coherent Hydrophone Array. Remote. Sens. 2020, 12, 3731. [Google Scholar] [CrossRef]

- Kong, D.F.N.; Shen, C.; Tian, C.; Zhang, K. A New Low-Cost Acoustic Beamforming Architecture for Real-Time Marine Sensing: Evaluation and Design. J. Mar. Sci. Eng. 2021, 9, 868. [Google Scholar] [CrossRef]

- Ferguson, E.L.; Williams, S.B.; Jin, C.T. Improved Multipath Time Delay Estimation Using Cepstrum Subtraction. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 551–555. [Google Scholar] [CrossRef]

- Zhou, M.; Zhang, H.; Lv, T.; Gao, Y.; Duan, Y. Spatial diversity processing mechanism based on the distributed underwater acoustic communication system. PLoS ONE 2024, 19, e0296117. [Google Scholar] [CrossRef]

- Zhu, C.; Wang, D.; Tesei, A.; Ratilal, P. Comparing passive localization methods for ocean vehicles from their underwater sound received on a coherent hydrophone array. J. Acoust. Soc. Am. 2018, 144 (Suppl. S3), 1910. [Google Scholar] [CrossRef]

- Zha, Z.; Yan, X.; Ping, X.; Wang, S.; Wang, D. Deblurring of Beamformed Images in the Ocean Acoustic Waveguide Using Deep Learning-Based Deconvolution. Remote. Sens. 2024, 16, 2411. [Google Scholar] [CrossRef]

- Asolkar, P.; Das, A.; Gajre, S.; Joshi, Y. Tropical littoral ambient noise probability density function model based on sea surface temperature. J. Acoust. Soc. Am. 2016, 140, EL452–EL457. [Google Scholar] [CrossRef]

- Middleton, D. Adaptive Processing of Underwater Acoustic Signals in Non-Gaussian Noise Environments: I. Detection in the Space-Time Threshold Regimes. In Adaptive Methods in Underwater Acoustics; NATO ASI Series; Urban, H.G., Ed.; Springer: Dordrecht, Germany, 1985; Volume 151. [Google Scholar] [CrossRef]

- Luo, X.; Chen, L.; Zhou, H.; Cao, H. A Survey of Underwater Acoustic Target Recognition Methods Based on Machine Learning. J. Mar. Sci. Eng. 2023, 11, 384. [Google Scholar] [CrossRef]

- Karst, J.; McGurrin, R.; Gavin, K.; Luttrell, J.; Rippy, W.; Coniglione, R.; McKenna, J.; Riedel, R. Enhancing Maritime Domain Awareness Through AI-Enabled Acoustic Buoys for Real-Time Detection and Tracking of Fast-Moving Vessels. Sensors 2025, 25, 1930. [Google Scholar] [CrossRef]

- Samonte, M.J.C.; Laurenio, E.N.B.; Lazaro, J.R.M. Enhancing Port and Maritime Cybersecurity Through AI—Enabled Threat Detection and Response. In Proceedings of the 2024 8th International Conference on Smart Grid and Smart Cities (ICSGSC), Shanghai, China, 25–27 October 2024; pp. 412–420. [Google Scholar] [CrossRef]

- Marin, J.M.; Gunawan, W.H.; Rjeb, A.; Ashry, I.; Sun, B.; Ariff, T.; Kang, C.H.; Ng, T.K.; Park, S.; Duarte, C.M.; et al. Marine Soundscape Monitoring Enabled by AI-Aided Integrated Distributed Sensing and Communications. Adv. Devices Instrum. 2025, 6, 89. [Google Scholar] [CrossRef]

- Khan, M.M.H.; Liu, Z.; Prabhu, R.; Zheng, H.; Javied, A.; Bouma, H.; Yitzhaky, Y.; Kuijf, H.J. Ship detection and identification for maritime security and safety based on IMO numbers using deep learning. In Proceedings of the Artificial Intelligence for Security and Defence Applications II, SPIE, Edinburgh, UK, 16–19 September 2024. [Google Scholar] [CrossRef]

- Doan, V.-S.; Huynh-The, T.; Kim, D.-S. Underwater Acoustic Target Classification Based on Dense Convolutional Neural Network. IEEE Geosci. Remote. Sens. Lett. 2022, 19, 1500905. [Google Scholar] [CrossRef]

- Wang, J.; Ma, L.; Chen, W. Design of underwater acoustic sensor communication systems based on software-defined networks in big data. Int. J. Distrib. Sens. Netw. 2017, 13, 1550147717719672. [Google Scholar] [CrossRef]

- Santos, R.; Orozco, J.; Micheletto, M.; Ochoa, S.F.; Meseguer, R.; Millan, P.; Molina, C. Real-Time Communication Support for Underwater Acoustic Sensor Networks. Sensors 2017, 17, 1629. [Google Scholar] [CrossRef] [PubMed]

- Beng, K.T.; Vishnu, H.; Ho, A.; Choong, N.Y.; Yusong, W.; Hexeberg, S.; Chitre, M.; Tun, K. Cloud-Enabled Passive Acoustic Monitoring Array for Real-Time Detection of Marine Mammals. In Proceedings of the OCEANS 2024—Halifax, Halifax, NS, Canada, 23–26 September 2024; pp. 1–7. [Google Scholar] [CrossRef]

- Hayes, J.P.; Kolar, H.R.; Akhriev, A.; Barry, M.G.; Purcell, M.E.; McKeown, E.P. A Real-Time Stream Storage and Analysis Platform for Underwater Acoustic Monitoring; IBM Journal of Research and Development: Armonk, NY, USA, 2013; Volume 57, pp. 15:1–15:10. [Google Scholar] [CrossRef]

- Ortiz, G.; Boubeta-Puig, J.; Criado, J.; Corral-Plaza, D.; Garcia-De-Prado, A.; Medina-Bulo, I.; Iribarne, L. A microservice architecture for real-time IoT data processing: A reusable Web of things approach for smart ports. Comput. Stand. Interfaces 2022, 81, 103604. [Google Scholar] [CrossRef]

- Tesei, A.; Meyer, F.; Been, R. Tracking of multiple surface vessels based on passive acoustic underwater arrays. J. Acoust. Soc. Am. 2020, 147, EL87–EL92. [Google Scholar] [CrossRef]

- Ahmad, Z.; Acarer, T.; Kim, W. Optimization of Maritime Communication Workflow Execution with a Task-Oriented Scheduling Framework in Cloud Computing. J. Mar. Sci. Eng. 2023, 11, 2133. [Google Scholar] [CrossRef]

- Vihman, L.; Kruusmaa, M.; Raik, J. Systematic Review of Fault Tolerant Techniques in Underwater Sensor Networks. Sensors 2021, 21, 3264.

- Elbamby, M.S.; Perfecto, C.; Liu, C.-F.; Park, J.; Samarakoon, S.; Chen, X.; Bennis, M. Wireless Edge Computing with Latency and Reliability Guarantees. Proc. IEEE 2019, 107, 1717–1737.

- Satyanarayanan, M. The Emergence of Edge Computing. Computer 2017, 50, 30–39.

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646.

- Javed, A.; Robert, J.; Heljanko, K.; Främling, K. IoTEF: A Federated Edge-Cloud Architecture for Fault-Tolerant IoT Applications. J. Grid Comput. 2020, 18, 57–80.

- Laha, J.; Pattnaik, S.; Chaudhury, K.S. Dynamic Load Balancing in Cloud Computing: A Review and a Novel Approach. EAI Endorsed Trans. Internet Things 2024, 10.

- Domingos, L.C.; Santos, P.E.; Skelton, P.S.; Brinkworth, R.S.; Sammut, K. An Investigation of Preprocessing Filters and Deep Learning Methods for Vessel Type Classification with Underwater Acoustic Data. IEEE Access 2022, 10, 117582–117596.

- Wang, Y.; Zhang, H.; Huang, W. Fast ship radiated noise recognition using three-dimensional mel-spectrograms with an additive attention based transformer. Front. Mar. Sci. 2023, 10, 1280708.

- ISO 8601; Date and Time Format. ISO: Geneva, Switzerland, 2017.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).