1. Introduction

Ocean waves exert significant forces on offshore structures, potentially inducing large motions in floating systems that affect both operational efficiency and fatigue life. Predicting wave propagation in space and time is the focus of many studies, using a variety of both physics-based and, more recently, data-driven models. Away from shore, irregular ocean waves have typically been represented by linear superposition, leading to a spectral representation in the frequency domain with related spectral and statistical parameters (e.g., significant wave height, peak spectral period, etc.). This approach helps determine, or forecast, extreme sea-state parameters for various analyses and design purposes. However, a spectral representation lacks phase, i.e., individual wave information, which becomes extremely important for many applications such as optimizing the motion control strategies of a floating structure. For example, Li et al. [1] concluded that wave energy converters have increased efficiency when their control mechanism assimilates phase-resolved wave predictions. In the context of floating wind systems, phase-resolved predictions are essential for the development of active control strategies and digital twin frameworks. Alkarem et al. [2] reconstructed and forecast the motion of a floater supporting a wind turbine, under the assumptions that upstream wave information is available and that wave prediction–reconstruction models can reliably reconstruct the wave field near the floating platform. Albertson et al. [3] validated three wave reconstruction–prediction models: a linear wave theory (LWT)-based model with a wave dispersion corrected for nonlinearity and a second-order wave model with nonlinear dispersive properties initialized by a linear prediction, against fully nonlinear potential flow simulations. The models appeared to provide reasonable short-term predictions at the float that can later be used for real-time control of float motions using a moving mass or ballast. Beyond renewable energy applications, seaborne additive manufacturing (SAM) [4] and underwater 3D printing [5] are other examples where short-term phase-resolved predictions are crucial to ensure accurate operations at sea.

Physics-based predictions of ocean wave fields require using a physically relevant model and “inverting” it to fit observations (i.e., by minimizing the mean square error between simulated and measured elevations), e.g., [3,6]. The resulting fitted state is called the nowcast. Once a nowcast of the ocean surface is obtained from measurements, the fitted model can be used to forecast the surface elevation expected at the location and future time of interest [7]. Desmars et al. [8] validated this algorithm, both numerically and experimentally, using non-uniformly distributed wave gauges that represent spatial sampling by an optical sensor (e.g., LIDAR). They confirmed that the prediction accuracy converged as the amount of input data used in the inversion process increased. Naaijen and Huijsmans [9] compared wave elevations predicted by their model against measured elevations at various distances downstream for different wave conditions and showed that the prediction horizon can be extended beyond the theoretical limits. For models based on LWT, the predictable space–time zone can be determined from the group velocities of the fastest and slowest components of the reconstructed wave field [10]. Qi et al. [11] investigated the variation of the theoretical phase-resolved predictable zone in space–time for multi-directional irregular wave fields and considered optimal deployment to maximize the space–time volume of the predictable zone.

For severe sea-states, more advanced wave models have been developed that account for wave nonlinearity (to some order), which affects both wave shape and phase speed, e.g., [8,12,13,14]. For instance, Grilli et al. [6] and Nouguier et al. [13] developed nonlinear free surface reconstruction algorithms, using an efficient Lagrangian-based choppy model [12] and its improved hybrid variant [14], and validated them for 1D and 2D irregular surface waves using simulated LIDAR data to create relevant data sets. More recently, Kim et al. [15] conducted experiments and validated these models using 2D data, concluding that accurate wave forecasting in multidirectional seas, compared to that in unidirectional seas, requires accounting for both the directional and frequency components of the wave field.

While physics-based models can achieve near real-time reconstruction and prediction as long as nonlinear effects are weak or negligible [3,15,16,17], the reconstruction problem (i.e., determining the state of the wave field, wave components, nonlinear couplings, etc.) and the time it takes to invert, integrate equations, and generate predictions are major obstacles to using such models in severe sea-states [18], unless powerful computational hardware is installed on the marine structure. As an alternative approach, advanced machine learning tools, when properly trained on relevant data, have promising potential to accelerate these processes and even enhance prediction accuracy [19]. For instance, the model proposed by Zhang et al. [20], which is based on a variational Bayesian machine learning approach, outperformed conventional models by reducing the prediction error by up to 55% and expanding the predictable zone by up to 74%. Jörges et al. [21] used long short-term memory (LSTM), a type of recurrent neural network (RNN), to predict nearshore significant wave heights. Mohaghegh et al. [18] investigated a machine learning technique that produces accurate wave predictions more than two orders of magnitude faster than numerically solving the governing equations. Kagemoto [22] developed an LSTM model to predict experimental and numerical irregular wave trains and extended it to forecast the motion response of a floating body, concluding that the model produces reasonably accurate predictions in spite of the nonlinear effects present in the data. Duan et al. [23] constructed a neural-network-based wave prediction model (ANN-WP), used experimental data to train, validate, and compare it with linear wave prediction (LWP) algorithms, and showed that ANN-WP outperforms LWP.

Zhang et al. [20] demonstrated that uncertainty quantification (UQ) can be used in conjunction with phase-resolved real-time wave prediction to (1) expand the predictable zone, which is otherwise quite conservative when using LWT-based models, and (2) inform the control algorithm of the level of confidence for performing a control action, based on an uncertainty score for a given predicted value. Silva and Maki [24] applied a similar uncertainty quantification, using the Monte Carlo dropout approach proposed by Gal and Ghahramani [25], to perform system identification of 6-DOF ship motions in waves under various upstream wave probe setups. Law et al. [26] used a higher-order spectral numerical wave tank (HOS-NWT) method developed by Bonnefoy et al. [27] and Ducrozet et al. [28] to generate datasets for training their artificial neural network at two wave steepness values, a limiting and a mean steepness. Harris [29] developed a data-driven model that runs faster than real time. The chosen machine learning approach was the time-series dense encoder (TiDE), as it has shown a good balance between model complexity, stability, and computational time. Li et al. [30] developed a three-dimensional, phase-resolved ocean wave forecast based on a wave tank experiment, using various machine learning methods including recurrent and convolutional neural networks.

Even though prior research demonstrated that machine learning (ML) models can generate accurate and rapid forecasts of phase-resolved ocean wave fields, primarily through numerical simulations, e.g., [18,22,23,24,26], and, to a lesser extent, through experimental validation, e.g., [15,29,30], limited attention has been paid to their resilience and generalizability under realistic offshore sensing conditions. Additionally, the number and placement of upstream wave probes in these studies are fixed and highly controlled, e.g., [23,26,31]. In contrast, real-world deployments, particularly in deep water, often involve dynamic or uncertain probe locations due to drifting buoys. To the authors’ knowledge, the research by Qi et al. [11] is the only study in which an analytical LWT-based predictable zone was derived for moving measurement probes, with the corresponding reconstruction method validated in a subsequent publication [32]. However, no uncertainty assessment was conducted on the predictable zone induced by moving probes. Upstream data could also become unavailable due to shadowing effects, or to occlusion in optical measurements caused by low visibility or splashing. These variations can significantly alter the spatial distribution of the data available for wave reconstruction, and the issue is further exacerbated when optical sensors such as LIDARs are employed. Data loss may also occur due to equipment malfunction, shadowing effects, or the inability to detect the free surface location reliably. Although these challenges are common in practical applications, and despite the increasing use of ML models, the impact of data sparsity and phase misalignment on forecast quality remains underexplored, particularly for experimental datasets.

To address these gaps, the present work evaluates the performance and resilience of ML-based wave field reconstruction methods under realistic, variable data acquisition scenarios. In the context of this paper, a model’s resilience is defined as its ability to maintain acceptable performance despite uncertain or incomplete input. Specifically, we selected two ML models: LSTM and TiDE. The LSTM model is extensively used for the reconstruction and prediction of time series in related fields, such as the work of Jörges et al. [21], but has also been reported to have higher prediction errors than other advanced models [30]. The TiDE model, as validated by Harris [29], can outperform other traditional ML models while remaining computationally inexpensive. In addition, the predictable zone can be quite dynamic, as demonstrated by Naaijen and Huijsmans [9], Qi et al. [11], and Zhang et al. [20]. We introduce a -trimming algorithm that adjusts the forecast horizon based on uncertainty quantification derived from historical forecasts. We then provide sensitivity analyses of the ML models to data masking and temporal phase shifts, using both higher-order spectral numerical wave tank simulations and wave basin experiments. The comparison summarized in Table 1 highlights how this study addresses several key gaps in the current literature and how it compares to other existing research.

The remainder of this paper is organized as follows. Section 2 outlines the experimental and numerical setup, introduces the forecasting models, and describes the implementation of data masking and the -trimming algorithm. Section 3 presents the results from the numerical wave tank study, including a performance comparison of baseline and -trimmed variants under masking effects. Section 3 also includes the experimental investigation, focusing on data sparsity during the experiment and phase misalignment. Section 4 discusses the findings, their limitations, and their implications for offshore deployment. Section 5 concludes with the study outcomes and future work.

2. Methodology

This paper presents results and a sensitivity analysis of wave reconstruction–prediction models applied to both experimentally and numerically generated data. We built ML models for this problem, trained them, and then tested them under scenarios simulating various measurement conditions.

2.1. Experimental and Numerical Setup

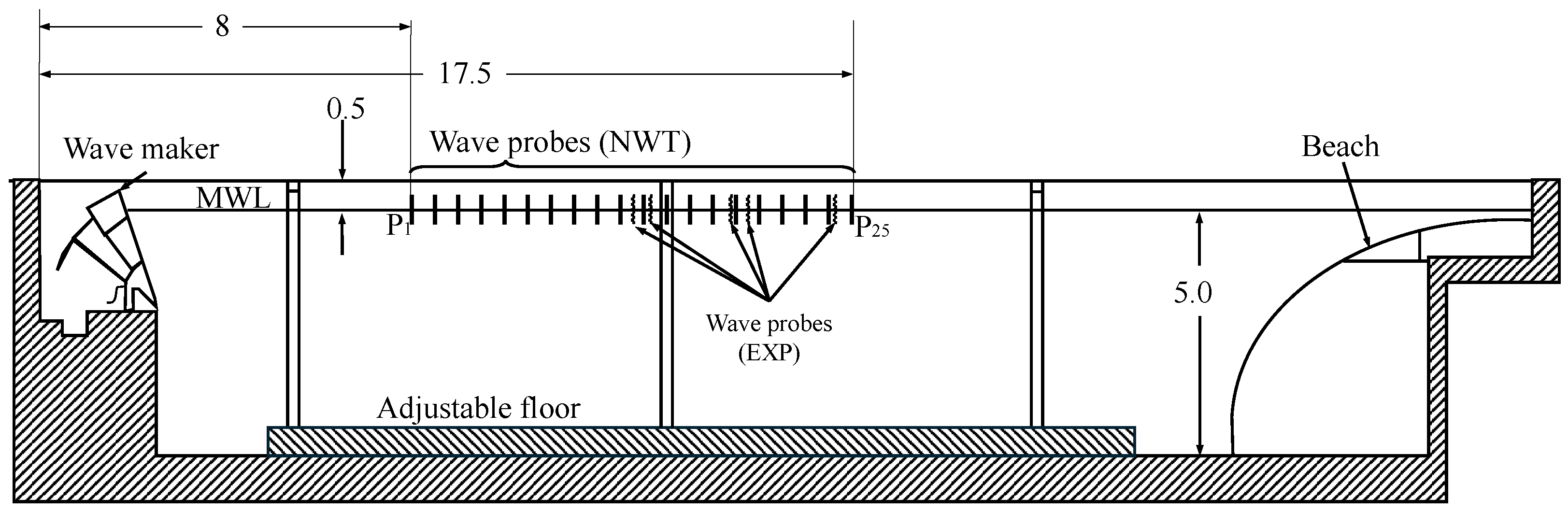

Accurate short-term wave forecasting is crucial for the active control of floating structures, with applications ranging from improving energy efficiency in wave energy converters to reducing wave-induced fatigue in floating wind turbine platforms. This requires reliable upstream wave information. The experimental campaign presented here was designed to emulate this requirement. Five wave probes were deployed: two closely spaced probes upstream, closest to the wave maker; two downstream; and one furthest downstream, at the location of the floating wind turbine to be tested in later campaigns, as reported in Fowler [33] and Alkarem et al. [2]. The experiment took place at the Harold Alfond wind-wave (W²) basin at the Advanced Structures and Composites Center, University of Maine. A 1:70 Froude scaling was applied. The basin layout and probe distribution used for analysis are illustrated in Figure 1.

To complement the experimental study, the basin was numerically replicated using the higher-order spectral numerical wave tank (HOS-NWT) model developed by Bonnefoy et al. [27] and Ducrozet et al. [28], hereafter referred to as NWT. The simulated tank is 30 m long, 10 m wide, and 5 m deep. A wave-absorbing beach is positioned at 85% of the tank length and configured with an absorption strength of 90%. In addition to the experimental probe configuration, virtual wave probes were added in the numerical wave tank to enhance spatial resolution, resulting in a total of 25 probes distributed primarily at 0.5 m intervals.

2.2. Wave Forecasting Model Development

Two machine-learning-based models were investigated in this study. These models were trained using available data from either physical experiments (EXP) or the numerical wave tank (NWT) simulations. During testing, past data from all sensors were provided as input. The internal mapping learned during training is designed to correlate free surface elevations recorded by upstream probes with their subsequent propagation toward downstream locations. As a result, the models can forecast future wave elevations downstream based on historical probe measurements.

The first model is a recurrent neural network (RNN) of the multivariate long short-term memory (LSTM) type. LSTMs are well regarded for their ability to retain both short- and long-term temporal dependencies, using a forget gate to regulate the flow of past information, which makes them effective for time-series forecasting [34]. The second model is the time-series dense encoder (TiDE), a state-of-the-art deep learning architecture proposed by Das et al. [35] that is designed to be more efficient than, and potentially outperform, more complex models. For time-series manipulation and model implementation, we used the open-source Python library Darts, developed by Unit8 SA [36].

Both models were trained using consistent hyperparameter settings: 1000 epochs, a batch size of 32, a hidden layer size of 100, a dropout rate of 0.1, and an optimizer learning rate of 0.001. A probabilistic forecasting framework was adopted through the Laplace likelihood, which enables the models to produce a distribution of likely outcomes from which uncertainty bounds are generated for each prediction. Performing uncertainty quantification (UQ) is crucial in phase-resolved ocean wave forecasting because it allows the systematic evaluation of the confidence associated with each prediction. This is particularly useful when control systems act on phase-resolved predictions, since confidence information helps reduce operational risks and fatigue loading on these systems.
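The Laplace likelihood can be illustrated with a minimal, library-independent sketch. It is not Darts' internal implementation, and the function names `laplace_nll`, `laplace_quantile`, and `interval_width_95` are hypothetical. It shows the negative log-likelihood that such a model would minimize for a predicted location μ and scale b, and how the 2.5th and 97.5th percentiles, which bound a 95% uncertainty interval, follow from those two parameters.

```python
import math

import numpy as np


def laplace_nll(y, mu, b):
    """Mean negative log-likelihood of observations y under Laplace(mu, b)."""
    y, mu, b = (np.asarray(a, dtype=float) for a in (y, mu, b))
    return float(np.mean(np.log(2.0 * b) + np.abs(y - mu) / b))


def laplace_quantile(p, mu, b):
    """Inverse CDF of the Laplace distribution for 0 < p < 1."""
    if p <= 0.5:
        return mu + b * math.log(2.0 * p)
    return mu - b * math.log(2.0 - 2.0 * p)


def interval_width_95(b):
    """Width of the central 95% interval; equals 2 * b * ln(20), about 5.99 b."""
    return laplace_quantile(0.975, 0.0, b) - laplace_quantile(0.025, 0.0, b)


# Hypothetical prediction: elevation 0.02 m with Laplace scale b = 0.005 m
lo = laplace_quantile(0.025, 0.02, 0.005)
hi = laplace_quantile(0.975, 0.02, 0.005)
```

Because the interval width depends only on b, a widening predicted scale translates directly into a larger uncertainty bound for that time step.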

In both models, the same input–output structure was employed: a historical sequence of length m is used to forecast a future horizon of n steps. A ratio of $m/n = 2$ is utilized. This implies that, for a one-second prediction horizon, the model observes two seconds of past data to predict one second ahead. The value of n was initially set to 120. With a temporal resolution of $\Delta t$, this corresponds to a forecasting horizon of $120\,\Delta t$. This selection was informed by the range of group velocities, $c_g$, associated with the sea-state under consideration, given that all numerical probes are active. We use the group velocity because the energy content of the wave field propagates downstream at the group velocity, given in LWT by

$$c_g = \frac{\partial \omega}{\partial k} = \frac{\omega}{2k}\left(1 + \frac{2kh}{\sinh(2kh)}\right),$$

where $\omega$ and $k$ denote the angular wave frequency and wavenumber, respectively, and $h$ is the water depth. In sufficiently deep water (i.e., $kh \gg 1$), the group velocity asymptotically approaches half the wave phase velocity, $c_g \to c_p/2 = \omega/(2k)$.
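Evaluating the group velocity above requires the wavenumber of each frequency component, which follows from the linear dispersion relation $\omega^2 = gk\tanh(kh)$. The sketch below, with hypothetical helper names and linear theory only, solves that relation with Newton's method and evaluates $c_g$. Dividing a probe separation by the fastest and slowest $c_g$ of a sea-state gives the range of energy travel times that motivates the choice of n. The 1 s period and 10 m distance in the example are illustrative values, not parameters from this study.

```python
import math

G = 9.81  # gravitational acceleration [m/s^2]


def wavenumber(omega, h, tol=1e-12, max_iter=50):
    """Solve the linear dispersion relation omega^2 = g k tanh(k h) for k (Newton)."""
    # omega^2/g (deep water) and omega/sqrt(g h) (shallow water) both bound the
    # true root from below, so their maximum is a safe starting guess.
    k = max(omega**2 / G, omega / math.sqrt(G * h))
    for _ in range(max_iter):
        th = math.tanh(k * h)
        f = G * k * th - omega**2
        df = G * th + G * k * h * (1.0 - th**2)  # d/dk of g k tanh(k h)
        step = f / df
        k -= step
        if abs(step) < tol * k:
            break
    return k


def group_velocity(omega, h):
    """Linear-theory group velocity c_g = (omega / 2k) (1 + 2kh / sinh(2kh))."""
    k = wavenumber(omega, h)
    kh = k * h
    cp = omega / k  # phase velocity
    if kh > 20.0:  # 2kh / sinh(2kh) < 1e-15, i.e. the deep-water limit c_p / 2
        return 0.5 * cp
    return 0.5 * cp * (1.0 + 2.0 * kh / math.sinh(2.0 * kh))


# Hypothetical component: 1 s period in a 5 m deep tank
omega = 2.0 * math.pi / 1.0
cg = group_velocity(omega, 5.0)
travel_time = 10.0 / cg  # time for this component's energy to cover 10 m
```

The deep-water branch avoids evaluating `sinh` for very large arguments, where the correction term is already negligible.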

Since the multivariate forecasting problem is complex to define, we present here a univariate counterpart for simplicity. We create a forecast of the surface elevation target, $y$, given a known dynamic history of itself and of other covariates, $x$, which can be written as

$$\{y_{t+1}, \dots, y_{t+n}\} = f\left(y_{t-m+1}, \dots, y_t;\; x_{t-m+1}, \dots, x_t\right).$$

In the TiDE model, the upstream probes were treated as covariates: they help build the prediction, but their signals are not included in the forecast. In contrast, the LSTM treated all probes as target variables. Because no information is available upstream of the most upstream probes, causality implies that the forecasts at those probes will be erroneous, and this error can propagate downstream.
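To make the $m/n = 2$ input–output structure concrete, the following sketch slices synchronized probe records into training pairs. It uses plain NumPy rather than Darts' own dataset classes, and the function name `make_windows` and the synthetic records are hypothetical. Following the TiDE setup described above, upstream probes enter only as past inputs (covariates), whereas downstream probes are both inputs and forecast targets; for the LSTM configuration, all probes would be passed as targets instead.

```python
import numpy as np


def make_windows(targets, covariates, n, ratio=2):
    """Slice synchronized probe records into (past input, future target) pairs.

    targets:    (T, M) elevations at probes to be forecast (downstream)
    covariates: (T, P) elevations at probes used only as inputs (upstream)
    n:          forecast horizon in samples; history length m = ratio * n
    Returns X with shape (N, m, M + P) and Y with shape (N, n, M).
    """
    m = ratio * n
    T = targets.shape[0]
    X, Y = [], []
    for t in range(m, T - n + 1):
        past = np.concatenate([targets[t - m:t], covariates[t - m:t]], axis=1)
        X.append(past)
        Y.append(targets[t:t + n])
    return np.stack(X), np.stack(Y)


rng = np.random.default_rng(0)
targets = rng.standard_normal((1000, 3))     # synthetic stand-in, 3 downstream probes
covariates = rng.standard_normal((1000, 2))  # synthetic stand-in, 2 upstream probes
X, Y = make_windows(targets, covariates, n=120)
```

With n = 120, each sample carries 240 past steps of all five probes and 120 future steps of the three targets.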

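To quantify the error between the predicted variable, $y$, and the observed signal, $y^{\mathrm{obs}}$, we calculated the mean absolute error (MAE) as

$$\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\left|y_i - y_i^{\mathrm{obs}}\right|,$$

where $N$ is the number of evaluated samples.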

Two sea-states were investigated in this research, a moderate one (SS1) and a severe one (SS2), both described by a JONSWAP spectrum with three parameters: the significant wave height, $H_s$, the peak wave period, $T_p$, and the peak enhancement factor, $\gamma$. These parameters are detailed in Table 2.
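Since both sea-states are specified by JONSWAP parameters, the following sketch shows one common way to evaluate the spectrum from $H_s$, $T_p$, and $\gamma$ and to synthesize a linear, random-phase surface elevation record from it. The peak-width values (0.07/0.09), the normalization of the spectrum to match $H_s$ instead of using a Phillips constant, and the example parameter values are assumptions for illustration. They are not the values in Table 2, and the wave generation in HOS-NWT may differ.

```python
import numpy as np

G = 9.81  # gravitational acceleration [m/s^2]


def jonswap(omega, hs, tp, gamma):
    """JONSWAP spectrum S(omega) [m^2 s/rad], rescaled so that 4 sqrt(m0) = hs."""
    wp = 2.0 * np.pi / tp  # peak angular frequency
    sigma = np.where(omega <= wp, 0.07, 0.09)  # standard peak-width parameters
    r = np.exp(-((omega - wp) ** 2) / (2.0 * sigma**2 * wp**2))
    shape = G**2 * omega**-5.0 * np.exp(-1.25 * (wp / omega) ** 4) * gamma**r
    m0 = np.sum(0.5 * (shape[1:] + shape[:-1]) * np.diff(omega))  # trapezoid rule
    return shape * (hs / 4.0) ** 2 / m0


def linear_surface(t, omega, spectrum, seed=0):
    """Random-phase linear superposition of spectral components at a fixed point."""
    rng = np.random.default_rng(seed)
    amp = np.sqrt(2.0 * spectrum * np.gradient(omega))  # a_i = sqrt(2 S_i d_omega)
    phase = rng.uniform(0.0, 2.0 * np.pi, omega.size)
    return np.sum(amp[:, None] * np.cos(np.outer(omega, t) + phase[:, None]), axis=0)


omega = np.linspace(0.5, 15.0, 600)  # angular-frequency grid [rad/s]
S = jonswap(omega, hs=0.1, tp=1.5, gamma=3.3)  # hypothetical model-scale values
t = np.arange(0.0, 60.0, 0.05)
eta = linear_surface(t, omega, S)
```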

2.3. Data Masking

We simulated two scenarios concerning the input data fed into the models. The first involves a temporal Monte Carlo dropout (or masking) of input signals from upstream buoys, representing either short- or long-term data loss. Short-term masking affects multiple sensors and occurs frequently in both space and time, but only for short durations. This setup evaluates challenges such as intermittent signal acquisition (caused, for example, by low-visibility conditions affecting LIDAR) or shadowing effects. In contrast, long-term masking of a limited number of sensors tests the model’s resilience in maintaining predictive accuracy under sensor malfunction, power loss, or prolonged shadowing effects.

This methodology addresses a key challenge in data-driven modeling: handling highly irregular spatiotemporal input, such as LIDAR data, and converting it into a structured format suitable for models requiring uniform input.

Figure 2 illustrates the masking strategy adopted to simulate missing or corrupted input data via a Monte Carlo-style dropout mechanism. To implement it, time-series signals are first segmented into consecutive windows of a fixed size. Each window is then assigned a random number drawn from a uniform distribution between 0 and 1. If the random number exceeds a predefined probability threshold, the corresponding window is masked by multiplying the signal by zero, effectively simulating data loss. Adjusting this threshold controls the frequency of dropout events throughout the signal. The figure presents two representative examples using different window sizes: one with short windows (short-term masking) and one with longer windows. Shorter windows produce more frequent dropout patterns, mimicking high-frequency signal interruptions, whereas longer windows introduce more persistent, low-frequency masking, representing prolonged data outages. This dual-scale masking approach allows us to assess the model’s resilience to both transient and extended periods of data unavailability.
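The masking procedure described above can be sketched as follows. The function names, the independence of draws across probes, and the example window sizes and threshold are assumptions for illustration; the window sizes and thresholds used in this study are not reproduced here.

```python
import numpy as np


def window_mask(signal, window, threshold, rng):
    """Monte Carlo window masking of a 1D signal (simulated data loss).

    The signal is split into consecutive windows of `window` samples. Each
    window receives a U(0, 1) draw and is multiplied by zero if the draw
    exceeds `threshold`; otherwise it is kept unchanged.
    """
    signal = np.asarray(signal, dtype=float)
    n_windows = -(-signal.size // window)  # ceiling division
    keep = (rng.uniform(0.0, 1.0, n_windows) <= threshold).astype(float)
    mask = np.repeat(keep, window)[: signal.size]
    return signal * mask, mask


def mask_probes(data, window, threshold, seed=0):
    """Mask each probe (column) of a (T, P) array with independent draws."""
    rng = np.random.default_rng(seed)
    out = np.empty(data.shape, dtype=float)
    for p in range(data.shape[1]):
        out[:, p], _ = window_mask(data[:, p], window, threshold, rng)
    return out


upstream = np.random.default_rng(1).standard_normal((2000, 4))  # synthetic records
short_term = mask_probes(upstream, window=10, threshold=0.8)   # brief, frequent gaps
long_term = mask_probes(upstream, window=400, threshold=0.8)   # prolonged outages
```

Masking by multiplication keeps the input array at a fixed shape, which is what models requiring uniform input expect.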

The second scenario introduces a phase shift to signals from upstream buoys. This simulates real-time data collection from floating wave sensors, which may drift and change position due to ocean currents and wave forces, resulting in positive or negative phase shifts in the recorded signals.

Figure 3 shows an example of this effect. We assess model resilience to such phase discrepancies and quantify the corresponding degradation in predictive performance.
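A constant time shift of a fixed probe record, used here to approximate buoy drift, can be sketched with linear interpolation. The helper name `time_shift` and the sign convention (a positive shift delays the record, e.g., for a probe displaced downstream) are assumptions. Like the approximation described in the text, the sketch ignores dispersion and the motion of the buoy itself.

```python
import numpy as np


def time_shift(signal, shift_s, dt):
    """Delay (shift_s > 0) or advance (shift_s < 0) a uniformly sampled signal.

    Values are linearly interpolated; samples that would come from outside
    the record are set to zero.
    """
    signal = np.asarray(signal, dtype=float)
    t = np.arange(signal.size) * dt
    return np.interp(t - shift_s, t, signal, left=0.0, right=0.0)


dt = 0.05
t = np.arange(400) * dt
probe = np.sin(2 * np.pi * 0.5 * t)    # synthetic upstream record
lagged = time_shift(probe, 0.1, dt)    # waves arrive 0.1 s later
leading = time_shift(probe, -0.1, dt)  # waves arrive 0.1 s earlier
```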

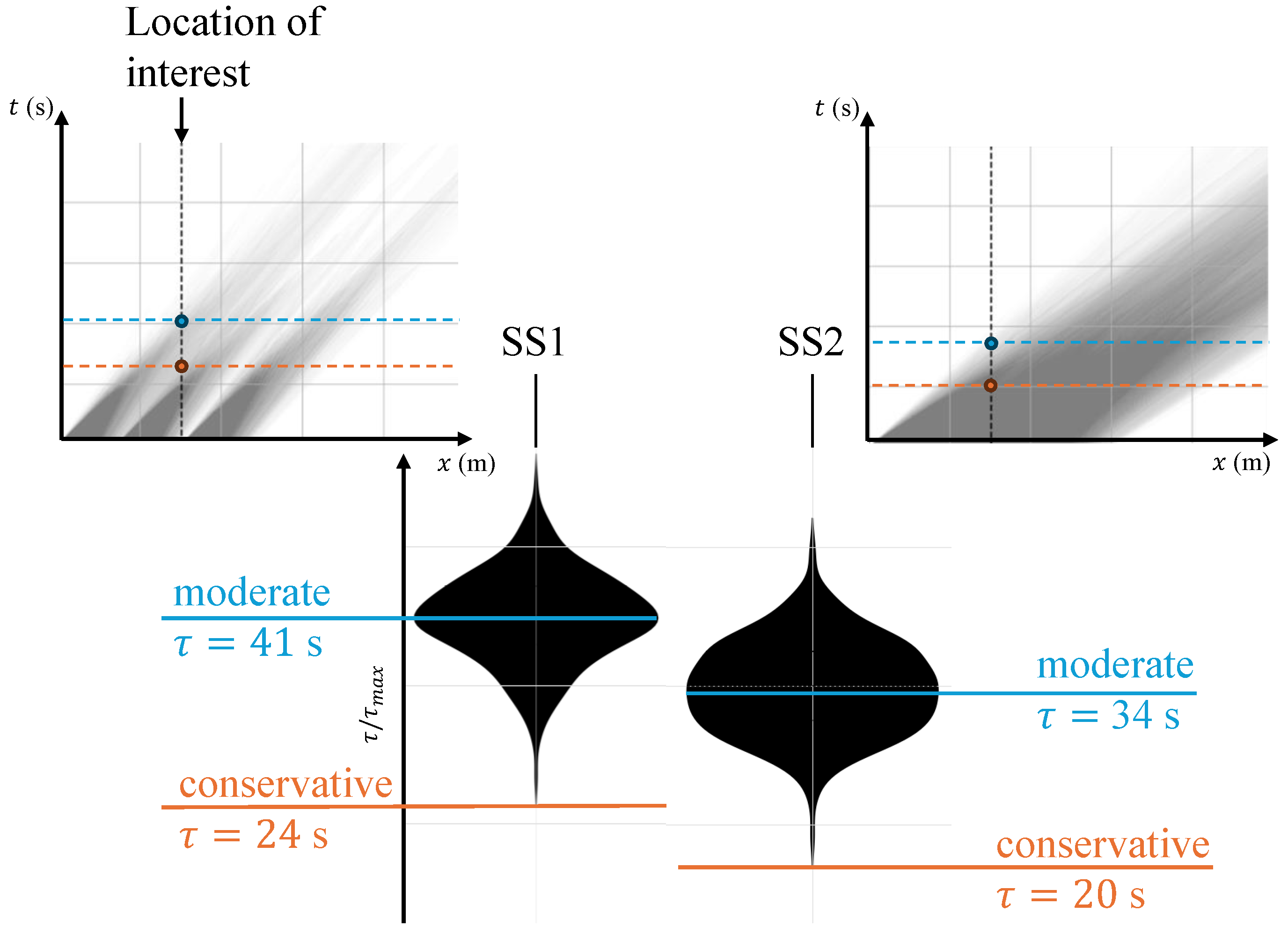

2.4. -Trimming Algorithm

Zhang et al. [20] used uncertainty-based parameterization to define a predictable zone for wave forecasting. In this study, we extended this approach to multivariate signal processing, incorporating uncertainty levels derived from historical forecasts.

First, we computed the uncertainty level for each target signal, $y_j$, as follows:

$$U_j = y_j^{97.5} - y_j^{2.5},$$

where $y_j^{97.5}$ and $y_j^{2.5}$ represent the 97.5th and 2.5th percentiles of the predicted distribution for $y_j$. We then defined a minimum uncertainty threshold, $U_{\mathrm{th}}$, below which the forecast $y_j$ was considered reliable, i.e., when $U_j < U_{\mathrm{th}}$. The threshold is determined from the number of output signals, $M$, and the minimum and maximum observed uncertainties, $U_j^{\min}$ and $U_j^{\max}$, of each signal $j$.
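The uncertainty level $U_j$ can be computed from samples of the probabilistic forecast. This sketch assumes the forecast is available as an array of Monte Carlo samples; the array layout and the name `uncertainty_level` are assumptions, not Darts' API. For a Laplace predictive distribution with scale b, the same width could also be obtained in closed form as $2b\ln 20$.

```python
import numpy as np


def uncertainty_level(samples):
    """95% interval width per horizon step and target signal.

    samples: array of shape (S, n, M) holding S samples drawn from the
    probabilistic forecast over n horizon steps for M target signals.
    Returns an (n, M) array of 97.5th minus 2.5th percentiles.
    """
    q_hi, q_lo = np.percentile(samples, [97.5, 2.5], axis=0)
    return q_hi - q_lo


# Synthetic forecast samples whose Laplace scale grows with lead time
rng = np.random.default_rng(0)
scale = np.linspace(0.002, 0.02, 120)[:, None] * np.ones((1, 3))
samples = rng.laplace(loc=0.0, scale=scale, size=(500, 120, 3))
U = uncertainty_level(samples)  # shape (120, 3)
```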

Models that adapt their prediction horizon based on this threshold are referred to as -trimmed models; for these, the horizon was computed as the time during which the uncertainty stays below the threshold. For each historical forecast, this horizon was computed by:

(1) smoothing the uncertainty signal using a 1D convolution with a Gaussian kernel;

(2) finding the time steps during which the smoothed uncertainty stays below the threshold; and

(3) summing the number of valid time steps and multiplying by the time resolution to obtain the horizon.
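The three steps above can be sketched for a single historical forecast. The Gaussian width `sigma_steps`, the ±3σ kernel truncation, and the edge padding are assumptions; edge padding prevents zero padding from artificially lowering the smoothed uncertainty at the ends of the horizon. The threshold is passed in as an input rather than recomputed here.

```python
import numpy as np


def gaussian_kernel(sigma_steps):
    """Normalized Gaussian kernel truncated at +/- 3 sigma (in time steps)."""
    radius = max(1, int(np.ceil(3.0 * sigma_steps)))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma_steps) ** 2)
    return kernel / kernel.sum()


def valid_horizon(u, u_th, dt, sigma_steps=2.0):
    """Total time (s) during which the smoothed uncertainty stays below u_th.

    u: 1D uncertainty level over the horizon of one historical forecast.
    """
    kernel = gaussian_kernel(sigma_steps)
    r = kernel.size // 2
    padded = np.pad(np.asarray(u, dtype=float), r, mode="edge")
    smoothed = np.convolve(padded, kernel, mode="valid")  # same length as u
    return np.count_nonzero(smoothed < u_th) * dt  # valid steps times resolution
```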

Two types of -trimming algorithm were defined:

Moderate-type: the horizon was selected at the peak of the distribution of valid horizons (or, in the multivariate case, the average of all peaks).

Conservative-type: the smallest horizon that occurred in the distribution was used, beyond which the uncertainty threshold can be violated.
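Given the valid horizons collected from historical forecasts, the two selection rules can be sketched as below. Estimating the peak with a histogram (rather than the kernel density underlying a violin plot) and taking the minimum across all signals for the conservative type in the multivariate case are assumptions of this sketch.

```python
import numpy as np


def peak_horizon(horizons, bins=30):
    """Horizon at the mode of the empirical distribution (histogram peak)."""
    counts, edges = np.histogram(np.asarray(horizons, dtype=float), bins=bins)
    i = int(np.argmax(counts))
    return 0.5 * (edges[i] + edges[i + 1])


def select_horizon(horizons_per_signal, kind="moderate"):
    """Trimmed horizon from the historical valid horizons of each output signal.

    moderate:     average of the per-signal distribution peaks
    conservative: smallest horizon observed in any signal's distribution
    """
    if kind == "moderate":
        return float(np.mean([peak_horizon(h) for h in horizons_per_signal]))
    if kind == "conservative":
        return float(min(np.min(h) for h in horizons_per_signal))
    raise ValueError(f"unknown kind: {kind!r}")
```

The conservative rule trades horizon length for a guarantee that no historical forecast exceeded the uncertainty threshold within the chosen horizon.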

Figure 4a shows the resulting distribution of valid horizons (via violin plots) for the TiDE model applied to numerical wave tank data, focusing on the most downstream probes. Figure 4b illustrates a single forecast stride contributing to this distribution, highlighting how the valid horizon increases downstream as the model benefits from accumulating upstream information. This trend is also reflected in the violin plots.

4. Discussion

The numerical investigation of wave forecasting errors using the various models demonstrated that when sufficient upstream data are available, the machine learning models are capable of accurately forecasting phase-resolved ocean waves, even in the presence of data loss. However, the role of uncertainty quantification is critical: without constraining prediction uncertainty, errors can exceed acceptable thresholds, potentially jeopardizing the reliability of downstream applications, such as wave-aware control systems.

The results showed that long-term data masking leads to significantly wider uncertainty bounds compared to short-term masking. This indicates that prolonged data loss introduces higher variability in model outputs. The use of a -trimming algorithm mitigates this effect, maintaining lower error levels by adjusting the prediction horizon based on previous forecast uncertainty levels.

Although the LSTM model exhibited lower average error values (Figure 5), it also suffered from causality-related issues. Specifically, erroneous predictions at the most upstream probes can propagate downstream if intermediate probes do not provide sufficient supplementary information. Moreover, LSTM models require longer training times due to the need to forecast multiple targets simultaneously. TiDE models, in contrast, provide a good balance between accuracy and computational cost, as was also pointed out by Harris [29].

TiDE models showed strong performance with densely spaced upstream probes. However, under experimental conditions with coarser spatial probe resolution and more energetic sea-states, the moderate-type model exhibited degraded accuracy. To address this, we introduced a conservative-type -trimming algorithm with shorter prediction horizons derived from uncertainty thresholds. This version demonstrated greater resilience to both data loss and phase shifts. For instance, MAE was reduced by 30% when the conservative variant was used during short- and long-term masking, as seen in Figure 8. Additionally, an MAE increase of about 0.5 m was observed for a deviation of ±3 s from the optimal phase alignment, whereas with the conservative approach the same error increase was reached only at ±4 s.

The phase shift analysis reveals that drifting upstream probes can degrade downstream forecast accuracy more severely than complete data masking. This insight suggests the potential value of dynamically deactivating unreliable sensors in real time, rather than feeding uncertain signals into the models. Moreover, for data acquisition systems with irregular spatial or temporal coverage, such as LIDAR, the model should be trained assuming a maximum upstream sensor grid. Irregularities can then be handled via dynamic masking that reflects real-time sensor availability based on acquired data locations.

Despite the promising results, several limitations of this study must be acknowledged. The models were trained and tested under fixed sea-state conditions (specific $H_s$ and $T_p$) and are not generalizable across varying sea states without retraining. In real-time applications, a classifier would be required to identify the prevailing sea state and activate the corresponding trained model. Additionally, the dynamic behavior of drifting buoys was not explicitly modeled. Instead, sensor drift was approximated by applying phase shifts to fixed probes. This simplification neglects transient changes in the wave signal due to dispersion, as well as the impact of buoy motion on measurement accuracy.

Building upon these findings, future work will explore the following directions. The -trimming algorithm can be further enhanced by including performance-guided limits instead of purely uncertainty-based ones. This involves dynamically adjusting the forecast horizon so that the error remains below a threshold determined from weighted historical forecast performance. The authors also intend to extend the methodology to real ocean field deployments, where wave conditions include short-crested, multi-directional, and wave–current interaction effects. This will allow validation in more complex and nonlinear environments. Furthermore, the wave forecasting model can be integrated with floating platform simulations to predict wave-induced loads and platform responses in real time, enabling more effective digital twin and control applications.

Overall, these results support the development of flexible, uncertainty-aware forecasting models that are resilient to realistic operational challenges such as missing data, probe drift, and coarse spatial resolution in offshore wave monitoring systems and control strategies.

5. Conclusions

This study evaluated the performance and resilience of ML models for phase-resolved ocean wave forecasting under incomplete and uncertain upstream data. Using both numerical wave tank simulations and experimental datasets, we tested two ML models, LSTM and TiDE, across scenarios involving data masking, phase shifts, and spatial sparsity of upstream probes. We demonstrated that the -trimming algorithm, which limits the prediction horizon based on uncertainty levels, effectively reduces wave forecasting errors by 46% for the TiDE model and 22% for the LSTM model with a masking probability threshold of .

While LSTM models showed lower average errors, they were more computationally demanding and prone to causality-related inaccuracies at upstream locations. The TiDE model, especially in its conservative -trimmed form, offered a more resilient alternative under experimental constraints, including probe drift and reduced sensor availability. For instance, a 30% reduction in forecast error was observed under short- and long-term masking.

These findings reinforce the importance of incorporating uncertainty quantification and phase-awareness into ML-based forecasting frameworks for offshore applications. In practice, many ocean measurement systems operate in dynamic and uncertain environments where upstream data availability is inconsistent. The -trimming method offers a practical mechanism to regulate forecast reliability in real time without requiring model retraining or structural modifications.

While this study demonstrates the viability of -trimmed forecasting under sparse and uncertain data conditions, several limitations remain. The models were trained for specific sea states and do not generalize across varying conditions without retraining or classification-based switching. Additionally, drifting probe effects were simplified as static phase shifts, omitting dynamic amplitude and dispersion-related changes that occur in real deployments. Future work will address these limitations by extending the method to real ocean field data with multidirectional and nonlinear wave effects and by integrating forecasting with floating structure response models. An adaptive, performance-guided version of -trimming will also be explored to maintain target error thresholds in real time.