The TSformer: A Non-Autoregressive Spatio-Temporal Transformers for 30-Day Ocean Eddy-Resolving Forecasting

Abstract

1. Introduction

2. Data

2.1. 3D Eddy-Resolving Ocean Physical Reanalysis Data

2.2. 2D Remote Sensing Auxiliary Dataset for Surface Forcing

2.3. Evaluation Dataset

2.4. Data Pre-Processing and Model Domain

3. Methods

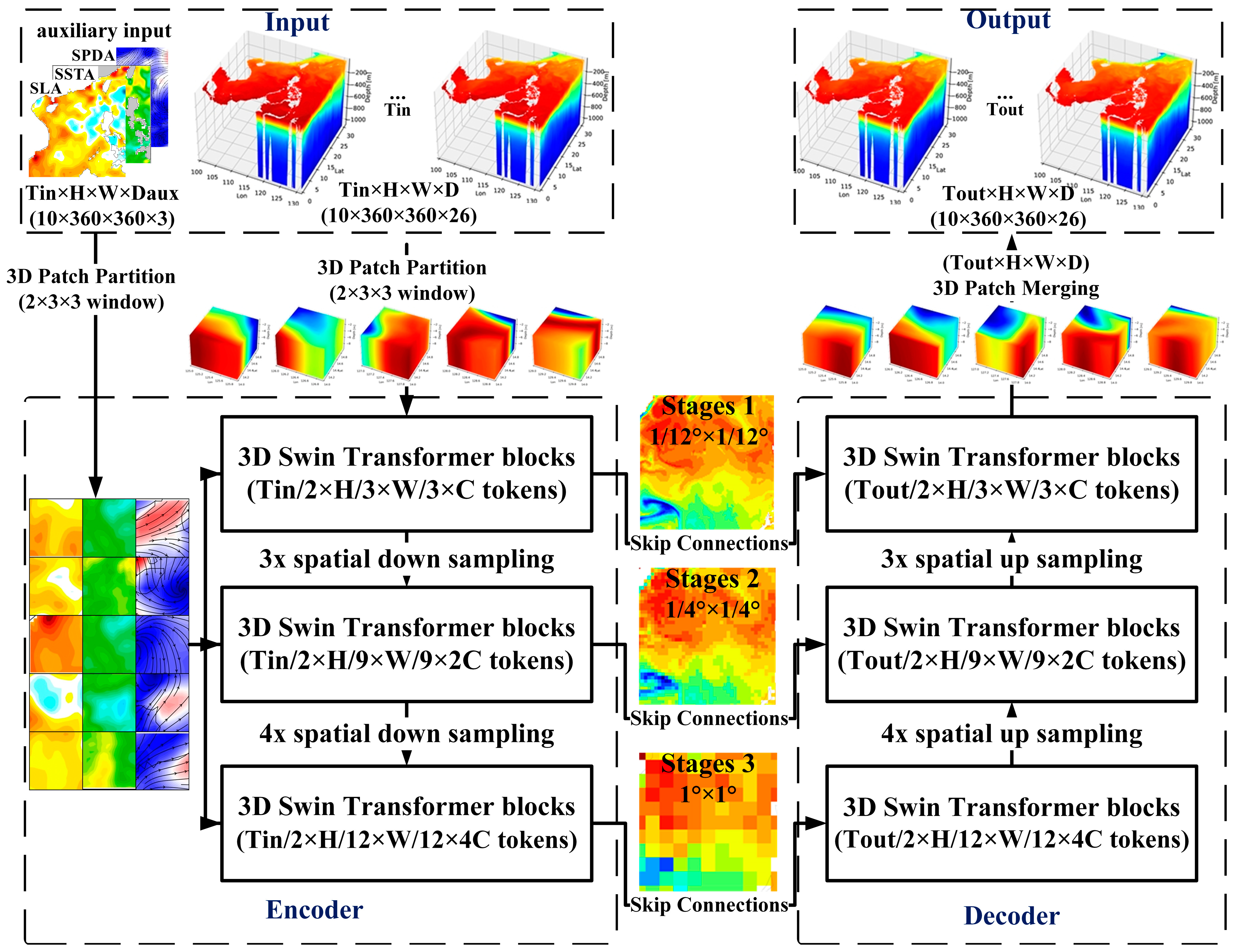

3.1. TSformer Model Architecture

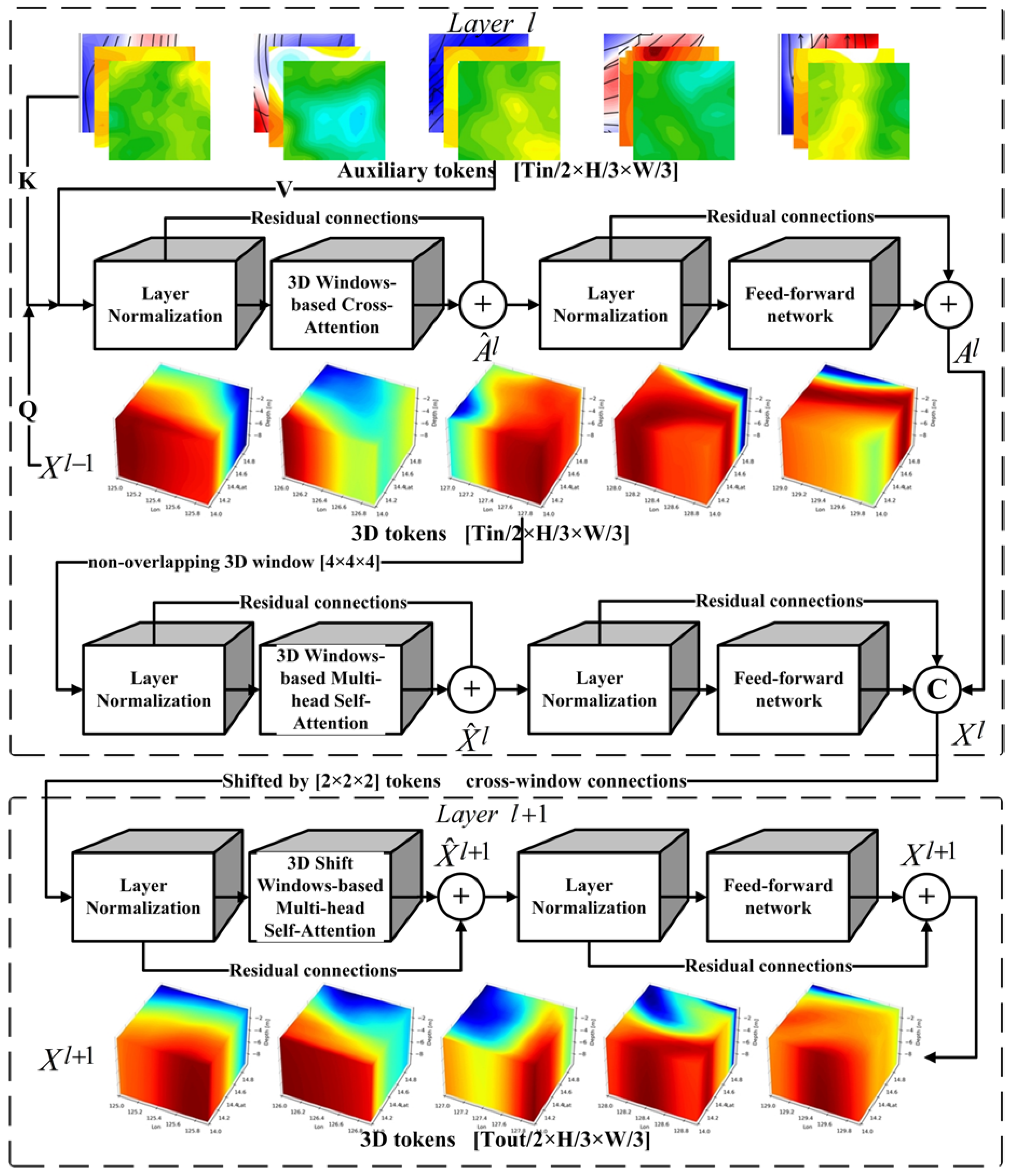

3.2. 3D Swin Transformer Block

3.3. Non-Autoregressive Methods

3.4. Train and Hyperparameters

4. Operational Forecast Results

4.1. Metrics

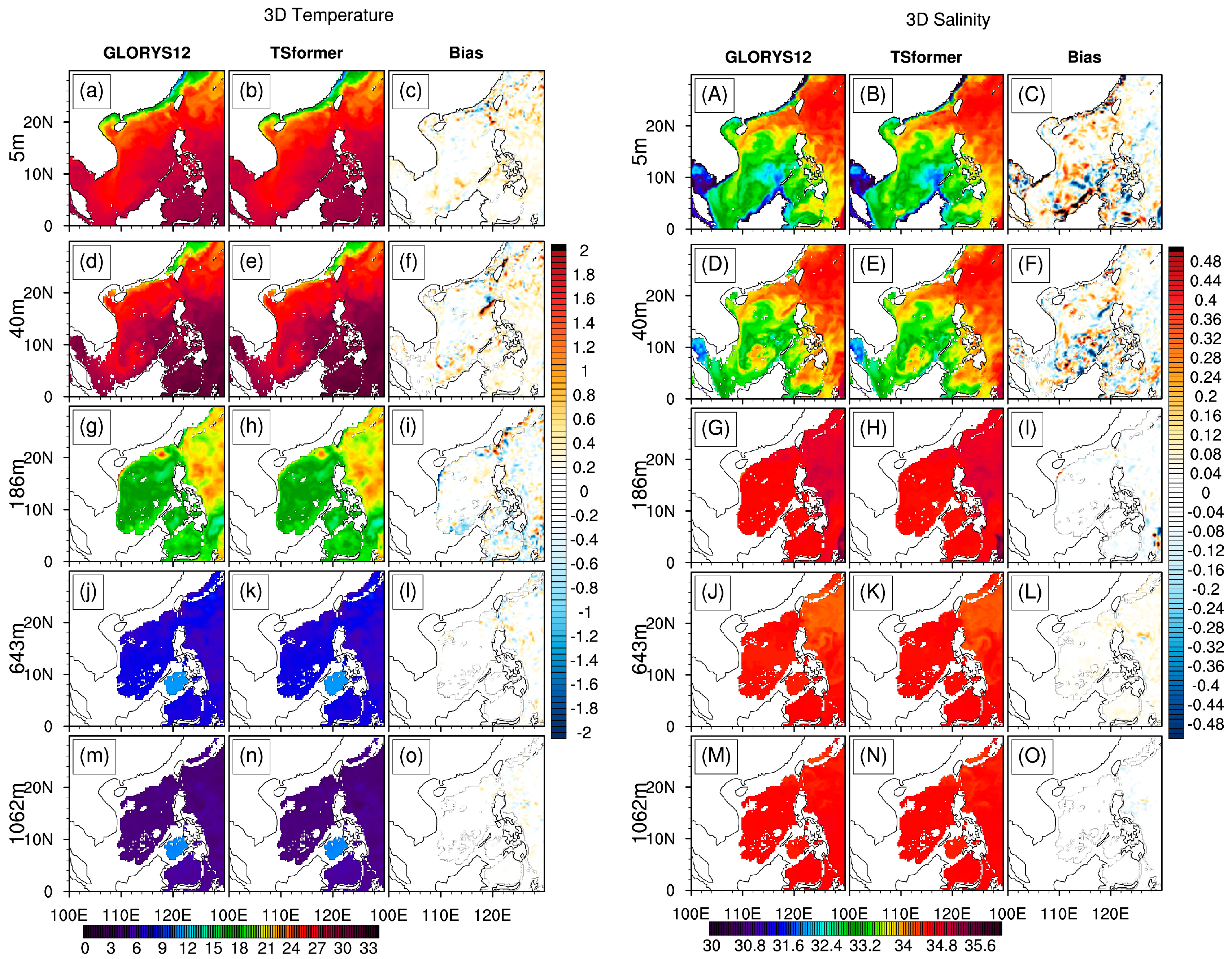

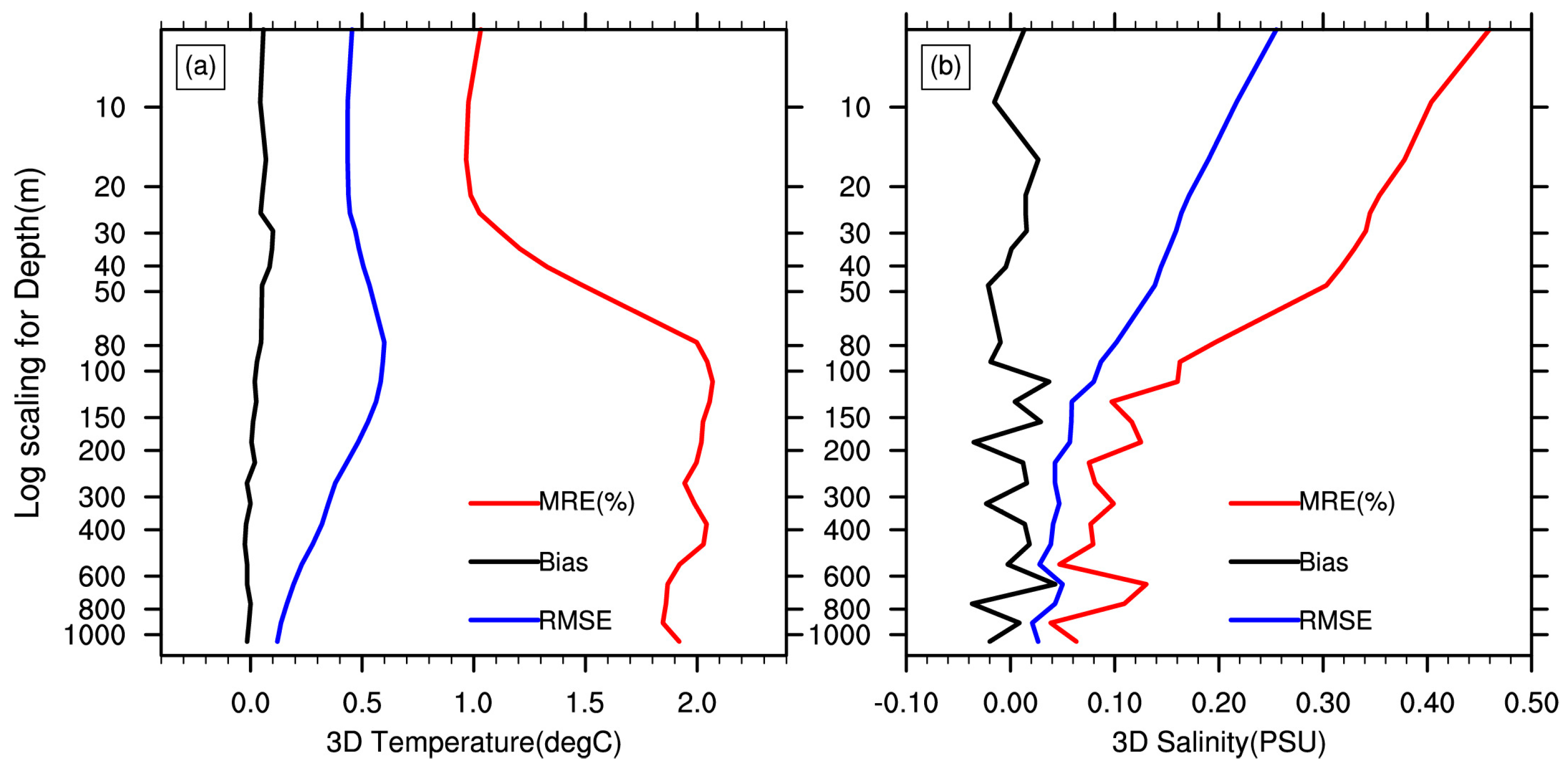

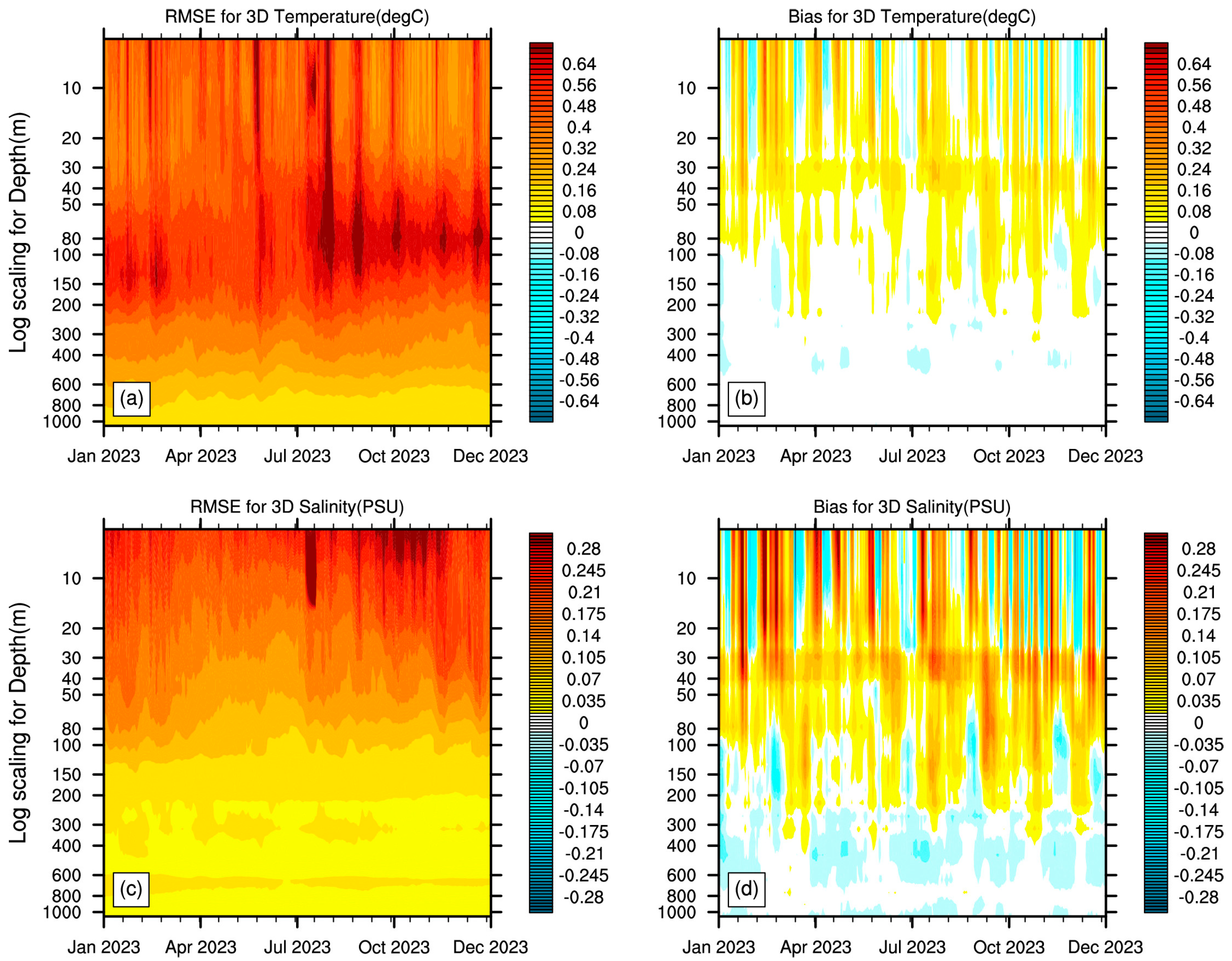

4.2. 3D TS Forecast Results Evaluation with GLORYS12V1

4.3. TS Vertical Profiles Evaluation with Argo

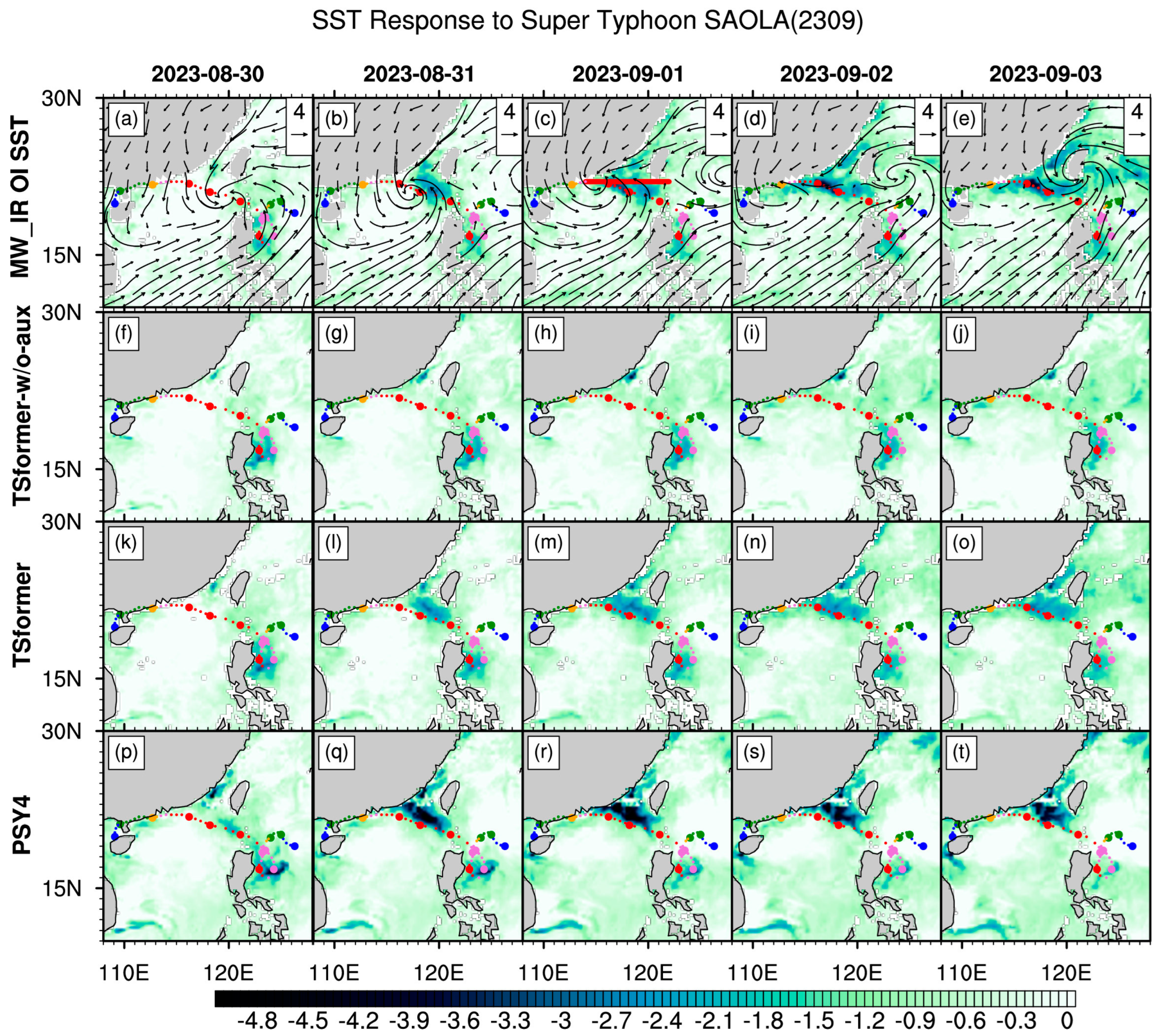

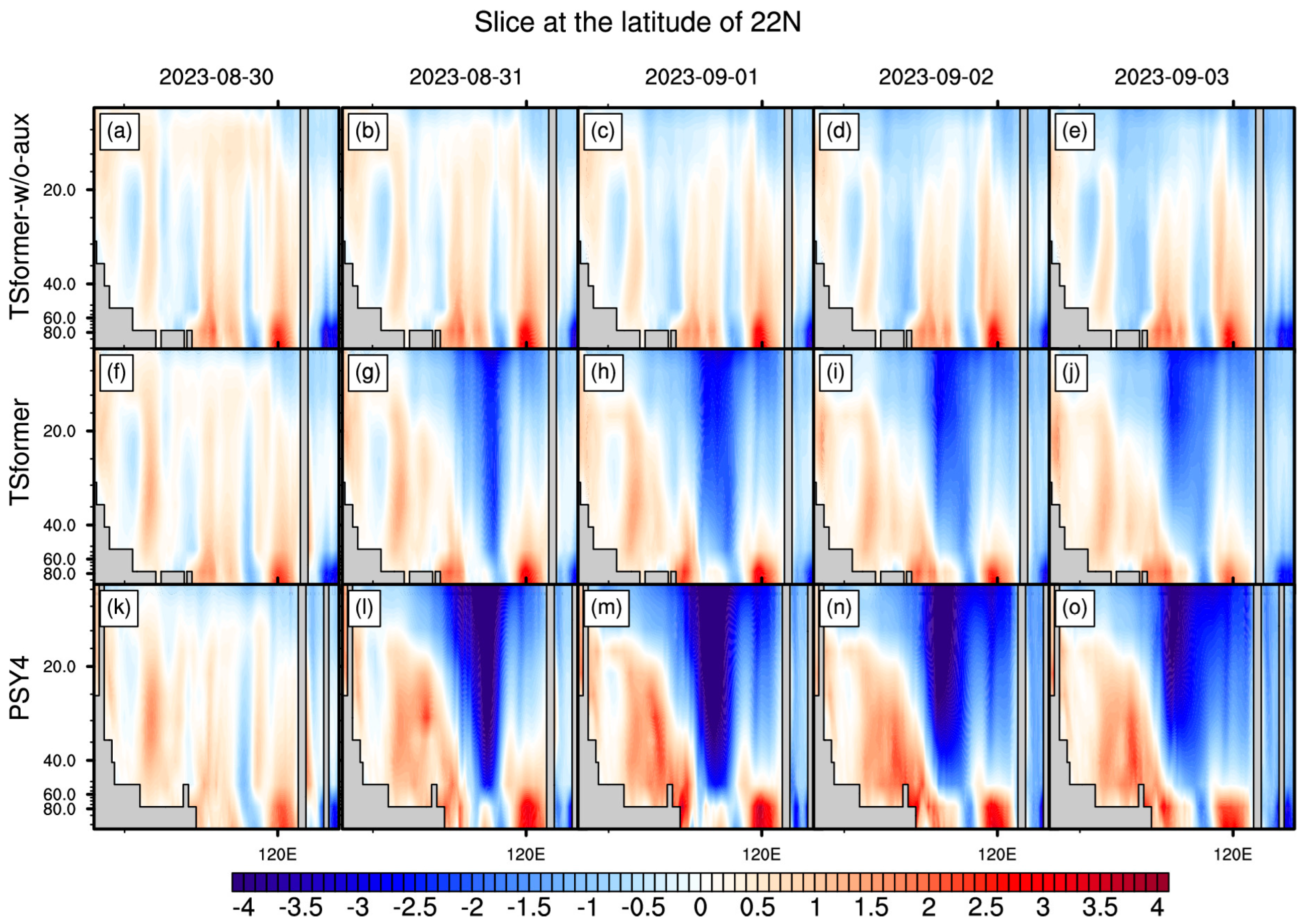

4.4. SST Cooling Evaluation with Satellite Observation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SST | Sea Surface Temperature |
| SLA | Sea-Level Anomaly |
| SPD | Wind Speed |
| PSY4 | Mercator Ocean Physical System |
| MW_IR OI SST | Optimally Interpolated daily SST products using microwave and infrared data at a 9 km resolution |
| SCS | South China Sea |
| 3D TS | 3D Temperature and Salinity |
| TSformer | A Non-autoregressive Spatio-temporal Transformers for 30-day Ocean Eddy-Resolving Forecasting |
| RNN | Recurrent Neural Networks |
| CNN | Convolutional Neural Networks |
| 3D-W-CA | 3D Window-based Cross-Attention |
| 3D-SW-MSA | 3D Shifted Window-based Multi-head Self-Attention |
| 3D-W-MSA | 3D Window-based Multi-head Self-Attention |
| FFN | Feed-Forward Network |
| LN | Layer Normalization |


| Hyperparameter | Value |
|---|---|
| Input Size | 10 × 360 × 360 × 26; 10 × 360 × 360 × 3 |
| Output Size | 10 × 360 × 360 × 26 |
| Loss Function | RMSE |
| Optimizer | AdamW |
| Learning Rate | 0.001 |
| β1 | 0.9 |
| β2 | 0.999 |
| Batch Size | 16 |
| Weight Decay | 0.00001 |
| Learning Rate Decay | Cosine |
| Max Training Epochs | 200 |
| Warm Up Percentage | 10% |
| Early Stop | True |
| Early Stop Patience | 10 |
| Parameters | 222 million |
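The schedule-related rows of the table (learning rate 0.001, cosine decay, 10% warm-up, 200 maximum epochs, early-stop patience of 10, RMSE loss) can be illustrated with a minimal, standard-library Python sketch. The table does not state the shape of the warm-up, the floor of the cosine decay, or whether the schedule is stepped per epoch or per iteration. The sketch therefore assumes linear warm-up, decay to zero, and per-epoch stepping. The function names `learning_rate`, `rmse`, and `stopping_epoch` are hypothetical and are not taken from the authors' code. The AdamW update itself (β1, β2, weight decay) is not reproduced here.

```python
import math

# Values copied from the hyperparameter table.
BASE_LR = 1e-3
MAX_EPOCHS = 200
WARMUP_FRACTION = 0.10
PATIENCE = 10


def learning_rate(epoch, base_lr=BASE_LR, max_epochs=MAX_EPOCHS,
                  warmup_fraction=WARMUP_FRACTION):
    """Learning rate for a 0-based epoch index.

    Assumption (not stated in the table): linear warm-up over the first
    10% of epochs, then cosine decay towards zero, stepped once per epoch.
    """
    warmup_epochs = int(round(max_epochs * warmup_fraction))
    if epoch < warmup_epochs:
        return base_lr * (epoch + 1) / warmup_epochs
    progress = (epoch - warmup_epochs) / max(1, max_epochs - warmup_epochs)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


def rmse(predicted, target):
    """Root-mean-square error between two equal-length sequences of floats."""
    if len(predicted) != len(target) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((p - t) ** 2 for p, t in zip(predicted, target))
    return math.sqrt(squared / len(predicted))


def stopping_epoch(val_losses, patience=PATIENCE):
    """Return the epoch index at which early stopping would trigger.

    Training stops once `patience` consecutive epochs fail to improve on
    the best validation loss seen so far. Returns None if that never happens.
    """
    best = math.inf
    stale = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return None


if __name__ == "__main__":
    # Print the assumed schedule at a few representative epochs.
    for epoch in (0, 19, 20, 110, 199):
        print(epoch, learning_rate(epoch))
```

Under these assumptions the rate rises linearly to 0.001 by the end of epoch 20 and then follows a half-cosine down to near zero at epoch 200. Early stopping can end training well before that point.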

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, G.; Hou, M.; Qin, M.; Wu, X.; Gao, Z.; Chao, G.; Zhang, X. The TSformer: A Non-Autoregressive Spatio-Temporal Transformers for 30-Day Ocean Eddy-Resolving Forecasting. J. Mar. Sci. Eng. 2025, 13, 966. https://doi.org/10.3390/jmse13050966
